Abstract

Despite a wealth of knowledge about the neural mechanisms behind emotional facial expression processing, little is known about how they relate to individual differences in social cognition abilities. We studied individual differences in the event-related potentials (ERPs) elicited by dynamic facial expressions. First, we assessed the latent structure of the ERPs, reflecting structural face processing in the N170, and the allocation of processing resources and reflexive attention to emotionally salient stimuli, in the early posterior negativity (EPN) and the late positive complex (LPC). Then we estimated brain–behavior relationships between the ERP factors and behavioral indicators of facial identity and emotion-processing abilities. Structural models revealed that the participants who formed faster structural representations of neutral faces (i.e., shorter N170 latencies) performed better at face perception (r = –.51) and memory (r = –.42). The N170 amplitude was not related to individual differences in face cognition or emotion processing. The latent EPN factor correlated with emotion perception (r = .47) and memory (r = .32), and also with face perception abilities (r = .41). Interestingly, the latent factor representing the difference in EPN amplitudes between the two neutral control conditions (chewing and blinking movements) also correlated with emotion perception (r = .51), highlighting the importance of tracking facial changes in the perception of emotional facial expressions. The LPC factor for negative expressions correlated with the memory for emotional facial expressions. The links revealed between the latency and strength of activations of brain systems and individual differences in processing socio-emotional information provide new insights into the brain mechanisms involved in social communication.

The ability to process the socially relevant information provided by faces, including emotional state, age, sex, and identity, is argued to be a fundamental component of social cognition (Herzmann, Danthiir, Wilhelm, Sommer, & Schacht, 2007) and may contribute to individual differences in emotional intelligence (e.g., Mayer, Roberts, & Barsade, 2008). Individuals may differ markedly in their emotional responses to stimuli in their environment. A spider, for example, may elicit great interest in entomologists, but panic in arachnophobes. Such differences are modulated by interactions between socio-cultural and biological variables. In the present study, we addressed the still poorly understood question of how the responsiveness of the brain to emotional facial stimuli—as measured by an electroencephalogram (EEG)—contributes to individual differences in the processing of social and emotional information.

Emotion specificity of brain responses to facial expressions of emotion

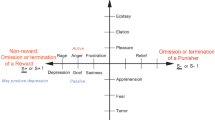

Some emotion theories postulate the existence of a discrete number of basic emotions, characterized by distinct biological and behavioral markers, including the display of unique and universally recognizable combinations of facial muscles (e.g., Ekman, 1999), and recognized by dedicated brain systems (e.g., Adolphs, 2002). In contrast to this view, others propose broader, dimensional concepts to define emotion, such as core affect, valence, and arousal (e.g., Barrett, 2011; Russell, 2003), with overlapping brain networks across basic emotions, dedicated to the processing of valence—for example, the orbitofrontal cortex—and arousal—for example, the amygdala (e.g., Wilson-Mendenhall, Barrett, & Barsalou, 2013).

Event-related potentials (ERPs) elicited by facial stimuli are considered neurocognitive markers of different stages in face processing (see Schweinberger, 2011, for an overview). The N170 component is often seen as a manifestation of the structural and holistic encoding of faces (e.g., Eimer, 2011). Differences in ERP amplitudes between emotional and neutral stimuli consistently appear as negative deflections over posterior electrodes around 200–350 ms—the early posterior negativity (EPN)—and in later time windows as increased positivities at centro-parietal sites—the late positive complex (LPC; see Schupp, Flaisch, Stockburger, & Junghöfer, 2006). The EPN reflects an increase in the amount of sensory processing resources in the extrastriate cortex, modulated by brain systems in which visual representations are evaluated in terms of their meaning, such as the amygdala and the prefrontal cortex (e.g., Pourtois, Schettino, & Vuilleumier, 2012). The LPC has been related to the concept of motivated attention and is considered to reflect the sustained processing of emotional stimuli due to their intrinsic motivational relevance (e.g., Schupp et al., 2006).

Studies comparing ERPs across different facial expressions of emotion can inform as to the nature and magnitude of emotion specificity. The processing of facial expression in the ERPs may show up, for example, as nonspecific differences in amplitude for emotional relative to neutral expressions, which has been observed for both the N170 (Luo, Feng, He, Wang, & Luo, 2010; Recio, Schacht, & Sommer, 2014) and the EPN (e.g., Recio, Schacht, & Sommer, 2014). Specificity may also appear between negative and positive emotions (e.g., the LPC in Recio, Schacht, & Sommer, 2014), or even for a specific emotion category relative to expressions of other emotions and neutral faces—for instance, the enhanced N170 to fear (Batty & Taylor, 2003; Williams, Palmer, Liddell, Song, & Gordon, 2006). The emotion specificity of the N170 and the further above-mentioned components might be related to task demands and the allocation of attention resources (e.g., Calvo & Beltrán, 2014).

Emotion specificity of behavioral responses

Recently, a few studies have investigated the emotion specificity of the ability to recognize emotional expressions by applying psychometric modeling to behavioral data. Modeling observable indicators as latent factors has made it possible to identify the underlying latent structure and to detect systematic individual differences. For example, using intensity ratings of morphed face stimuli with mixed emotional expressions, Suzuki, Hoshino, and Shigemasu (2006, 2010) have presented a higher-order solution for general emotion recognition traits, with separable sensitivities for happiness (path coefficient = .183) and negative expressions (.635). This finding suggests that individuals showing high sensitivity to a specific negative expression also show a similar sensitivity to other negative expressions, but not necessarily to happiness, and vice versa. However, other studies using audio–visual stimuli have reported no emotion, valence, or modality specificity, indicating a unidimensional structure for emotion recognition ability (Schlegel, Grandjean, & Scherer, 2012).

These studies employed one or two tasks to measure emotion recognition abilities. Using multiple indicators allows abstracting from particular task specificities and measurement error. A recent study applied structural equation modeling to the accuracy data obtained from more than ten different tasks, measuring the abilities of face perception, face memory, and the perception and memory of emotional facial expressions (Hildebrandt, Sommer, Schacht, & Wilhelm, 2015). The study showed little or no emotion specificity for expression perception and memory, indicating that individuals with good performance identifying a given emotion are likely to also perform well for other emotions.

Brain–behavior relationships in the perception of social and affective information

In line with the traditional differentiation between face perception and face memory, on the one hand, and the recognition of face identity and emotional expressions, on the other (Bruce & Young, 1986), psychometric studies have identified four abilities that account for individual differences in face processing: face perception, face memory, facial emotion perception, and facial emotion memory (e.g., Hildebrandt et al., 2015; Wilhelm et al., 2010).

Functional brain-imaging studies suggest that individual differences in emotional reactions and in the perception and recall of affective stimuli are in part related to differences in brain responses (e.g., Hamann & Canli, 2004). Activations of the amygdala and prefrontal cortex are related to individual differences in the processing and recognition of facial expressions of emotion (e.g., Leppänen & Nelson, 2009; Stevens & Hamann, 2012). For example, Cornwell and colleagues (2011) observed a link between individual differences in anxiety (both as a personality trait and as an emotional state) and the reactivity of the amygdala and prefrontal cortex to fearful faces. Activation in these areas and in the anterior frontal cortex was found to underlie individual differences in valence attributions to expressions of positive or negative surprise (Whalen et al., 2013).

Psychophysiological studies have identified links between electromyogram, heart rate, electrodermal, or EEG measures and individual differences in personality and emotional responses (e.g., Stemmler & Wacker, 2010). For instance, involuntary activation of the corrugator muscle measured while one is seeing dynamic facial expressions correlated with facial emotion perception ability (Künecke, Hildebrandt, Recio, Sommer, & Wilhelm, 2014) and with the experience of negative valence (Sato, Fujimura, Kochiyama, & Suzuki, 2013).

Using multiple tasks and multiple indicators, Herzmann, Kunina, Sommer, and Wilhelm (2009) reported a negative correlation between the speed of structural encoding of faces, reflected in the N170 latency, and face perception accuracy. The authors also reported a positive correlation between two memory-related ERP components, the early and late repetition effects (ERE and LRE, respectively), and individual differences in face perception accuracy and speed. The findings regarding the N170 latency and ERE were replicated and extended by Kaltwasser, Hildebrandt, Recio, Wilhelm, and Sommer (2014). They applied latent difference score modeling (LDS; McArdle, 2009) to parameterize individual differences in ERPs, defined as difference waves between primed and unprimed conditions (i.e., ERE, LRE—for details, see Kaltwasser et al., 2014). LDS helps to solve the problem of the limited variance and reliability of difference scores when two initial measures are highly correlated (e.g., Rogosa & Willett, 1983).

Emotion specificity is a longstanding topic of debate in the emotion literature, which has been addressed with different measurements and analytic approaches (see Hildebrandt et al., 2015, for an overview). However, the question of the emotion specificity of individual differences in ERPs in response to emotional faces has never been addressed at the level of latent variables. Psychometric modeling of multitask data can provide new insights into this longstanding question. To this end, we applied LDS models to data from ERP components reflecting the visual processing of faces and motivated attention to emotional expressions (the N170, EPN, and LPC), and investigated the neural underpinnings of individual differences in emotion perception as one aspect of social cognition. First, we investigated individual differences in these brain responses to six dynamic facial expressions of emotion and two neutral facial movements (chewing and blinking). The main question was whether emotion-specific individual differences in brain processes are involved in the perception of facial expressions; namely, do individuals who show strong brain activation to happy faces show similar reactions to other expressions? Second, assuming that individual differences in social abilities are influenced by the variance in brain activation, we estimated the relationship between these ERPs and indicators of performance accuracy in face cognition and emotion processing, as well as their temporal dynamics.

Indicators of behavioral and brain responses were estimated in different tasks in separate testing sessions to ensure experimental independence. Measurement models were specified to test four competing hypotheses. The first model represented the case in which ERP responses are distinct for each emotion, and hence that individual differences can be observed for each category. The second model showed distinct individual differences for valence categories (positive vs. negative); that is, individuals with enhanced ERPs to given negative expressions would also show strong reactions to other negative expressions, but not to positive expressions, and vice versa. The third model represented distinct individual differences in the brain responses to emotional relative to neutral faces, but was unspecific for emotion or valence categories. The fourth model assumed no emotion specificity at all.

We hypothesized that the N170 component would either show no emotion-specific individual differences (fourth model) or would just show differences for emotional expressions relative to neutral faces (third model), because prior results had shown rather inconsistent effects of emotional expressions on this component (see Eimer, 2011). For the EPN and LPC data we expected to confirm Model 1, 2, or 3, because both components reflect the processing of emotion (e.g., Schupp et al., 2006). A previous study had shown similar topographic distributions and neural sources for an EPN-like component, reflecting the processing of facial movement per se (i.e., nonemotional facial movements), and for the EPN itself, reflecting the processing of emotional facial expressions (Recio, Schacht, & Sommer, 2014). Hence, we also modeled an LDS variable representing the difference in amplitude between two neutral conditions.

Regarding brain–behavior relationships, we expected to replicate the negative correlation between the N170 latency and face identity perception (e.g., Kaltwasser et al., 2014). Moreover, we predicted that the N170 measures of amplitude and latency, reflecting structural encoding, would be related to face perception and memory abilities. In contrast, we expected the EPN and LPC amplitudes to be more strongly associated with emotion-related abilities than with face perception. The relationships involving differences in amplitude between neutral conditions and the face cognition factors were less clear, and these analyses were thus explorative.

ERPs represent cognitive processing occurring in a temporal sequence, and individual differences may depend on the interdependencies between different ERPs, and their temporal dynamics. For example, the onset of the processing of emotional expressions (EPN) might overlap in time with the N170 (e.g., Rellecke, Sommer, & Schacht, 2013). To estimate such dependencies, we calculated two autoregressive models with the N170, EPN, and LPC amplitudes.

Method

The study consisted of a behavioral and a psychophysiological part. In the behavioral part, we assessed individual differences in face cognition abilities in general, and the specific abilities of emotion perception and recognition, using a multivariate test battery consisting of several tasks for each latent variable (Hildebrandt et al., 2015; Wilhelm, Hildebrandt, Manske, Schacht, & Sommer, 2014). In the psychophysiological part, we recorded ERPs while a subsample, randomly selected from the psychometric sample, completed three different tasks: (1) learning and recognition of facial identity (Kaltwasser et al., 2014), (2) the classification of dynamic facial expressions of emotion (Künecke et al., 2014; Recio, Schacht & Sommer, 2014), and (3) the production of facial expressions (Recio, Shmuilovich, & Sommer, 2014). In the present study, we focused on the emotion specificities of the ERPs recorded during the emotion classification task, and on their relationship to the accuracy of face identity and facial emotion processing obtained in the psychometric part. Therefore, we considered 14 tasks measuring abilities relevant for our hypotheses (see the supplementary materials). The relationships of these abilities with speed indicators or with other abilities like expressivity were beyond the scope of the present report.

Participants

In general, we aimed to test similar numbers of women and men in our sample, from heterogeneous educational backgrounds. The psychometric study was completed by 269 young adults (52.4% women), mean age M = 25.9 years (SD = 5.9), with normal or corrected-to-normal visual acuity and diverse educational backgrounds (26.8% without high school degrees, including occupational education; 62.5% with high school degrees; 10.7% with academic degrees).

We randomly recruited participants for the psychometric sample by keeping sex and educational background constant to given values. A total of 110 participants agreed to take part in the psychophysiological part of the experiment. The demographic structure of the subsample was similar to that of the original sample (45.5% women; mean age M = 26.5 years, SD = 4.8; 25.4% without high school degrees, including occupational education; 47.3% with high school degrees; 27.3% with academic degrees). Although the proportion of persons with academic degrees increased, leading to a subsample in the EEG study that did not fully reflect the larger sample, the subsample of persons without high school degrees remained sufficiently large, which improved generalizability to a more heterogeneous population. Data from participants with error rates more than two SDs beyond the mean (more than 30% errors overall across trials) in the emotion classification task (n = 3), or with excessive artifacts in the EEG (n = 5), were excluded from all subsequent analyses. The final sample with complete ERP and behavioral data was n = 102 (46 women, 56 men), mean age M = 26.64 years (SD = 4.82).

All participants provided informed consent for the study and were paid for their contribution. A local ethics committee approved the experiments.

Stimuli, apparatus, and procedure

The supplementary materials provide a brief description of the 14 tasks used in the present report. During the psychophysiological part, participants completed an emotion classification task with dynamic facial expressions. We used dynamic expressions to achieve a more ecologically valid appearance; dynamic expressions have also been shown to have a greater impact on brain responses than static pictures (e.g., Arsalidou, Morris, & Taylor, 2011; Recio, Sommer, & Schacht, 2011).

Face stimuli were obtained from the Radboud Faces Database (Langner et al., 2010) and morphed with the computer software FantaMorph (Abrosoft, 2010). Using the morphing technique, we manipulated the intensity of the expressions by showing them in an intermediate state between emotional and neutral expressions. The intensity manipulation aimed to increase the task difficulty and thus avoid ceiling effects for expressions of high intensity (e.g., Suzuki et al., 2006). Videos of our dynamic emotional stimuli started with a neutral face and progressively increased in expression intensity over six frames, reaching maximal intensity 200 ms after onset. The frame with maximal intensity was repeated (static) for 400 ms—that is, until the end of the video (600 ms after onset). Neutral-expression videos also started with a neutral face, and showed either a chewing or a blinking movement within the first 200 ms after onset, returning to the neutral expression of the first frame until video offset (also 600 ms). The stimuli consisted of 38 models displaying six facial expressions (anger, disgust, fear, happiness, sadness, and surprise) at two intensity levels (moderate and full intensity), as well as two types of nonemotional movements (blinking, chewing). Each model was shown once with both intensity levels. Half of the models were shown a third time in the low-intensity condition, and the other half in the high-intensity condition.

The task consisted of 798 trials, 57 per condition, presented in fully randomized order, with short breaks after every 200 trials. All participants saw the same randomized sequence. The stimuli were presented as color video clips of 600-ms duration, at 30 frames per second over a dark gray background and with an oval mask of the same color covering the hair and shoulders, and were preceded by a fixation cross presented for 700 ms. After each video, a scale appeared on the screen depicting the names of the six emotion categories and “neutral” in German. Participants were to classify the facial expressions by clicking on the scale with the mouse. There was no time limitation for the response.
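As a quick consistency check, the trial and frame counts can be recomputed from the parameters stated above. This is only an illustrative sketch with variable names of our own choosing. It assumes that the six rising frames span 200 ms at 30 frames per second and that the third presentation of each model is split evenly across the two intensity levels, as the text describes.

```python
# Recompute the design counts from the parameters stated in the text.
# All names are illustrative; nothing here comes from the original experiment scripts.
N_MODELS = 38
EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]
INTENSITIES = ["moderate", "full"]
NEUTRAL_MOVEMENTS = ["blinking", "chewing"]
TRIALS_PER_CONDITION = 57
FRAME_RATE_HZ = 30
VIDEO_MS = 600
RISE_FRAMES = 6

# Conditions: every emotion at every intensity level, plus the two neutral movements.
n_conditions = len(EMOTIONS) * len(INTENSITIES) + len(NEUTRAL_MOVEMENTS)
n_trials = n_conditions * TRIALS_PER_CONDITION

# Per emotion: each model once at both intensity levels, plus one extra
# presentation per model (half of the models at each level).
presentations_per_emotion = N_MODELS * len(INTENSITIES) + N_MODELS
per_emotion_and_intensity = presentations_per_emotion // len(INTENSITIES)

# Video timing implied by the frame rate.
frames_per_video = VIDEO_MS * FRAME_RATE_HZ // 1000
rise_ms = RISE_FRAMES * 1000 / FRAME_RATE_HZ

print(n_conditions, n_trials, per_emotion_and_intensity, frames_per_video, rise_ms)
```

The counts agree with the reported figures: 14 conditions with 57 trials each give 798 trials, and 38 models shown 1.5 times per intensity level give 57 trials per emotion and intensity.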

Signal processing

Psychophysiological recordings were obtained from 42 electrodes referenced to the left mastoid with a bandpass filter of 0.032–70 Hz. The signal was filtered offline with a low-pass filter (30 Hz, 24 dB/oct) and transformed to the average reference. An electrooculogram was recorded from electrodes placed below and lateral to the eyes. Blinks and other eye movement artifacts were removed by means of independent-component analyses. The artifact-free signal was then segmented into 1.2-s epochs, starting 200 ms before stimulus onset (used as the prestimulus baseline). For the psychometric modeling, we needed at least four statistically independent indicators (measured values) representing individual differences in the ERPs for each of the seven latent ERP factors of the facial expressions. With this aim, ERPs were averaged separately for each condition and for odd and even trials. The N170 peak amplitudes and latencies were detected by a software algorithm as the maximal amplitude within the time window 155–210 ms at the P10 channel, where the N170 was overall most pronounced. Amplitudes of ERP components that showed no clear peak deflection, but were instead sustained over a longer period, were estimated as the mean amplitudes in the time window during which they were maximal. The time windows and electrode clusters were based on visual inspection of the ERP wave shapes and their topographies (see the supplementary material) and covered scalp regions similar to those reported in previous studies. For the EPN component, the mean amplitudes were averaged at 12 posterior electrodes (P7, P8, P9, P10, PO7, PO8, PO9, PO10, O1, O2, Oz, and Iz) in the time window 220–400 ms, and for the LPC at five centro-parietal electrodes (CP1, CP2, Pz, P3, and P4) in the 400- to 500-ms interval (hence, before stimulus offset), avoiding possible overlap with offset potentials. Only about 2.5% of the RTs fell within the LPC time window.

Four ERP values (odd, even × low, high intensity) for each of the six facial expressions served as indicators of the latent factors modeling the brain responses (see Fig. 1). Indicators from the blinking condition served as the baseline in all models, because they showed the lowest amplitude, allowing for estimations of the EPN effects for emotional expressions and also for the chewing condition. Trials with errors were not excluded, in order to obtain sufficient and similar numbers of trials in the ERPs used as indicators. The overall good performance, with 90% hits (SD = .06) across conditions and participants in the final sample (n = 102), argues against a strong impact of including error trials in the averages.
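The indicator extraction described in this section (odd/even split-half averages, a peak measure for the N170, and mean amplitudes for the EPN and LPC) can be sketched as follows. This is a minimal illustration, not the software actually used, which the text does not name. The function names are hypothetical, the "maximal amplitude" of the N170 is assumed to mean the most negative sample in the window, and the toy data use a 5-ms sampling step that is not taken from the article.

```python
from statistics import mean


def _average(trials):
    """Point-by-point average over a list of equally long waveforms."""
    return [mean(samples) for samples in zip(*trials)]


def split_half_averages(trials):
    """Average trials separately for odd and even trial numbers.

    Trial numbering starts at 1, so list index 0 belongs to the odd half.
    """
    return _average(trials[0::2]), _average(trials[1::2])


def negative_peak(wave, times_ms, start_ms=155, end_ms=210):
    """Return (amplitude, latency_ms) of the most negative sample in the window.

    Assumption: the N170 peak is the minimum, because the component is negative-going.
    """
    window = [(amp, t) for amp, t in zip(wave, times_ms) if start_ms <= t <= end_ms]
    return min(window)


def mean_amplitude(wave, times_ms, start_ms, end_ms):
    """Mean amplitude in a window, as used for sustained components (EPN, LPC)."""
    return mean(amp for amp, t in zip(wave, times_ms) if start_ms <= t <= end_ms)


# Toy data: 1.2-s epochs from -200 ms, with a sharp negative dip at 170 ms.
times_ms = list(range(-200, 1000, 5))


def toy_trial(offset):
    return [offset + (-5.0 if t == 170 else 0.0) for t in times_ms]


trials = [toy_trial(0.0), toy_trial(1.0), toy_trial(0.0), toy_trial(1.0)]
odd_wave, even_wave = split_half_averages(trials)
n170_amp, n170_lat = negative_peak(odd_wave, times_ms)
epn_even = mean_amplitude(even_wave, times_ms, 220, 400)
```

In the actual analysis, such values would be computed per participant, condition, and intensity level, and for the EPN and LPC they would additionally be averaged across the electrode clusters listed above.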

Fig. 1. First measurement model used for all ERP components. ERPs served as the indicators after being averaged separately for each facial expression and intensity level, and then being split into odd and even trials. Each emotional expression (E1–E6) was split across four indicators (i.e., moderate-intensity odd trials, moderate-intensity even trials, high-intensity odd trials, and high-intensity even trials). Neutral blinking (N1) always served as the baseline condition, with two indicators (odd, even). The indicators loaded on seven latent variables representing the processing of each facial expression: neutral (N), anger (AN), disgust (DI), fear (FE), sadness (SA), surprise (SU), and happiness (HA). The latent difference scores (LDSs) ΔAN_N, ΔDI_N, ΔFE_N, ΔSA_N, ΔSU_N, and ΔHA_N represent individual differences in the processing of emotional as compared with neutral expressions. The residual variances of these LDSs, in which baseline dependency is partialed out, are represented at the top by εΔAN_N, εΔDI_N, εΔFE_N, εΔSA_N, εΔSU_N, and εΔHA_N. In the first model, the correlations between the baseline-free LDS values for each expression are freely estimated. The second model, for N170 latencies, is very similar, but these correlations are fixed at zero. All facial stimuli were taken from the Radboud Faces Database (Langner et al., 2010).

Data analyses

Our indicators of performance accuracy derived from the psychometric measures included proportions of correct responses across the trials of a given task. For tasks with multiple emotions as response options (fEP1, fEP2; see the supplementary materials), in which confusions among the response categories would often occur, hit rates were corrected for response bias and recalculated as unbiased hit rates (Wagner, 1993). Measurement models of the behavioral indicators for all 269 participants are reported in Hildebrandt et al. (2015).
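For readers unfamiliar with the correction, the unbiased hit rate (Wagner, 1993) for a category is the squared number of correct responses divided by the product of the number of stimuli of that category and the number of times that response category was used. The sketch below illustrates the computation on a made-up confusion matrix. The function name and the example counts are ours, and returning zero for a response category that was never used is a convention we assume here.

```python
def unbiased_hit_rates(confusion):
    """Unbiased hit rate (Hu) per category.

    confusion[i][j] is the number of trials on which stimulus category i
    was classified as category j (square matrix, same category order).
    """
    n = len(confusion)
    row_totals = [sum(row) for row in confusion]  # stimuli shown per category
    col_totals = [sum(confusion[i][j] for i in range(n)) for j in range(n)]  # responses given
    rates = []
    for k in range(n):
        denominator = row_totals[k] * col_totals[k]
        # Assumed convention: Hu is 0 when the category was never shown or never chosen.
        rates.append(confusion[k][k] ** 2 / denominator if denominator else 0.0)
    return rates


# Made-up example with two categories and ten trials each.
example = [
    [8, 2],  # category A: 8 hits, 2 confused with B
    [4, 6],  # category B: 4 confused with A, 6 hits
]
hu = unbiased_hit_rates(example)
```

In this example the raw hit rate for category A is .80, but because response A was also chosen four times for category B stimuli, its unbiased hit rate drops to 64/120.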

In the present study, we applied LDS models in the measurement models for the neurocognitive indicators to parameterize the differences in brain responses (ERPs) between emotional (experimental conditions) versus neutral (baseline condition) facial expressions, in the time windows of the N170, EPN, and LPC components. We did not consider the P1 component in our analyses because the dynamic stimuli started with a neutral face (first frame, from onset to 33 ms), changing to a very low-expressive intensity (second frame, interval 33–66 ms). The P1 response (peaking 90 ms post-stimulus-onset) would necessarily reflect the processing of neutral faces in all conditions, and therefore was not suitable to investigate emotional specificity.

Measurement models

We entered all emotion conditions into a single model, treating each of them as a separate target experimental condition in one LDS model containing six latent variables representing individual differences during emotional face processing (AN, DI, FE, HA, SA, and SU in Fig. 1). These latent variables were completely determined by the neutral baseline condition (latent variable N in Fig. 1) and an LDS representing the difference in amplitude between each emotion (e.g., anger, AN) and the neutral condition (e.g., ΔAN_N in Fig. 1). To specify this assumption in a psychometric model, the regression weights of the brain responses elicited by the emotion condition on (a) the brain responses measured during the neutral condition and (b) the difference between the two (e.g., the EPN) were fixed at 1. That is, the brain response to an emotional expression was completely determined by the baseline (neutral) condition and the difference between the baseline and the experimental (emotion) conditions. Importantly, the residual of the latent variable representing ERPs to emotional faces was fixed at zero. The LDS variables ΔAN_N, ΔDI_N, ΔFE_N, ΔHA_N, ΔSA_N, and ΔSU_N in Fig. 1 represent the sizes of individual differences in ERP amplitudes or latencies between the emotional and neutral conditions, adjusted for measurement error.

Because we modeled only one neutral condition as the baseline (i.e., blinking), subtracted from all six emotion conditions, a baseline correction of the LDS variables was needed to prevent correlations between the LDS results being biased because they included the same baseline subtraction. That would clearly lead to statistical dependency between the LDS variables. In LDS models, the baseline condition is generally modeled as being correlated with the LDS variable (McArdle, 2009). However, regressing the LDS variable onto the baseline allows for controlling for this baseline dependency of the difference scores (see Fig. 1). The residualized LDS is consequently represented as being baseline-controlled, and will be referred to as baseline-free LDS throughout this article (εΔAN_N, εΔDI_N, εΔFE_N, εΔHA_N, εΔSA_N, and εΔSU_N in Fig. 1). Likewise, these latent factors represent individual differences in brain responses to the emotional versus neutral condition.
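The two steps of this specification can be written compactly for one emotion, here anger. The notation is ours and is meant only as a reading aid for Fig. 1: $\mathrm{AN}_i$, $\mathrm{N}_i$, and $\Delta\mathrm{AN\_N}_i$ denote the latent scores of person $i$, and the intercept $\alpha$ and slope $\beta$ are labels we introduce for the regression of the LDS on the baseline.

$$
\begin{aligned}
\mathrm{AN}_i &= 1 \cdot \mathrm{N}_i + 1 \cdot \Delta\mathrm{AN\_N}_i && \text{(residual of } \mathrm{AN} \text{ fixed at zero)} \\
\Delta\mathrm{AN\_N}_i &= \alpha + \beta \, \mathrm{N}_i + \varepsilon\Delta\mathrm{AN\_N}_i && \text{(baseline-free LDS as residual)}
\end{aligned}
$$

Because a regression residual is uncorrelated with its predictor, $\varepsilon\Delta\mathrm{AN\_N}$ carries no variance shared with $\mathrm{N}$, so correlations among the six residualized difference scores are not inflated by the common baseline.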

Models estimating brain–behavior relationships

The relationships between ERPs and face cognition abilities were estimated as the correlations in the LDS models. These correlations were calculated separately for each ERP component, modeled as the baseline-free LDS, representing the difference in amplitude or latency between (all) emotional and neutral expressions, and each face cognition ability, modeled as a behavioral latent factor.

As measures of model fit, we examined the χ² value, the root mean-square error of approximation (RMSEA), the standardized root-mean-square residual (SRMR), and the comparative fit index (CFI). We followed standards suggesting that for an acceptable fit, a model should show CFI values of .95 or higher, RMSEA values of .06 or less, and SRMR values of .08 or less (e.g., Bollen & Long, 1993). Differences in the fits of the nested models were inferentially tested by means of the χ² difference test. Significant values of these tests would indicate that introducing constraints on the parameters hampered the model fits in a statistically meaningful way. All models were computed with Mplus, version 7 (Muthén & Muthén, 2012).
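The fit criteria and the nested-model comparison can be illustrated with a short standard-library sketch. It is not the Mplus procedure itself. It assumes a plain χ² difference without any scaling correction, the function names are hypothetical, and the upper-tail probability is computed with a textbook series expansion of the incomplete gamma function, which is adequate for the moderate values that occur here.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variate (series expansion, moderate x only)."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = 1.0 / a
    total = term
    for n in range(1, 10_000):
        term *= z / (a + n)
        total += term
        if term < 1e-16 * total:
            break
    lower = total * math.exp(a * math.log(z) - z - math.lgamma(a))
    return max(0.0, 1.0 - lower)


def acceptable_fit(cfi, rmsea, srmr):
    """Cutoffs stated in the text: CFI >= .95, RMSEA <= .06, SRMR <= .08."""
    return cfi >= 0.95 and rmsea <= 0.06 and srmr <= 0.08


def chi2_difference_p(delta_chi2, delta_df):
    """p value for the loss of fit caused by the added constraints."""
    return chi2_sf(delta_chi2, delta_df)


# Example with a difference of 20.19 on 21 degrees of freedom, the comparison
# reported for the N170 latency models; the result is roughly .51.
p_value = chi2_difference_p(20.19, 21)
constraints_tenable = p_value >= 0.05
```

A nonsignificant difference, as in this example, indicates that the more restrictive model does not fit reliably worse and can be retained for reasons of parsimony.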

Because we had a clear hypothesis regarding the emotion specificities of different components and their relationships with the behavioral factors, we did not apply Bonferroni corrections, but exact p values will be provided.

We conducted a post-hoc Monte Carlo simulation for testing the robustness of the measurement models and our power to detect emotion specificity with Mplus. The simulation, based on the model depicted in Fig. 1, included observations from 102 persons and followed recommendations by Muthén and Muthén (2002). The results revealed satisfactory robustness and power.

Results

The mean amplitudes and latencies of the ERPs and their difference scores from the neutral baseline (blinking) are provided in Table 1. In this section, we focus on the two aims of the present study: the measurement models of the ERP components, followed by the brain–behavior relationships. For detailed results for the experimental effects observed for the different tasks used in the psychometric study, please see Wilhelm et al. (2014). The experimental effects and waveforms of all components in the psychophysiological studies are provided in Recio, Schacht, and Sommer (2014). The supplementary materials provide a short description of the tasks and a summary of the results.

Measurement models

By estimating a series of measurement models, we tested for the emotion and valence specificity of individual differences in the ERP amplitudes and latencies. Detailed results for all measurement models are provided in Tables 2, 3, and 4. Tables with factor loadings for all models are available online in the supplementary materials. The first measurement model, shown in Fig. 1, was equivalent for all ERP components. It examined whether the difference in brain responses (N170, EPN, LPC) between emotional and neutral expressions varied significantly across persons, by estimating the variances of the LDSs for each emotion separately. In this model, the correlations between the baseline-free LDSs for all emotion categories were freely estimated. This model assumes systematic individual differences for the processing of each emotion category as compared with the neutral condition. The same sequence of steps was used to construct the subsequent models for each ERP component. However, the model sequence was component-specific, since it was based on the results of the first and further models. For example, if the variance of the LDS of a given emotion was not significant in the first model, this nonsignificant variance was fixed at zero in the next model. Also, if all variances for each emotional expression considered separately were nonsignificant, they were all fixed at zero and collapsed into one global emotional LDS factor. See the detailed elaborations for each component below, and Tables 2, 3, and 4 for overviews of the successive models.

N170 latencies

In the first measurement model (fits provided in Table 2), none of the baseline-free LDSs showed significant variances, and the latent means of these factors did not statistically differ from zero. In other words, (a) we found no overall difference between the neutral and emotional conditions in the N170 latencies averaged across participants, and (b) individuals did not vary with respect to this difference. In the second measurement model, the variances of the baseline-free LDSs were fixed at zero, hence assuming no individual differences in brain responses for any emotional expression. Comparing the model fit with that for the first model shows whether emotion specificity generally held for the N170 latencies. The restriction of variances to zero did not impair the model fit significantly: Δχ² = 20.19, Δdf = 21, p = .51 (see Table 2).
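
The nested-model comparison reported above is a chi-square difference test: the increase in χ² caused by the restriction is referred to a χ² distribution with Δdf degrees of freedom. The sketch below shows that arithmetic using only the Python standard library. The function name `chi2_sf` is ours and does not come from the software used in the study, and the numerical routine is a textbook series and continued-fraction evaluation of the regularized incomplete gamma function.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variate with df degrees of freedom."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    log_prefactor = -z + a * math.log(z) - math.lgamma(a)
    if z < a + 1.0:
        # Series expansion of the lower regularized incomplete gamma function.
        term = 1.0 / a
        total = term
        denom = a
        for _ in range(1000):
            denom += 1.0
            term *= z / denom
            total += term
            if abs(term) < abs(total) * 1e-15:
                break
        return 1.0 - total * math.exp(log_prefactor)
    # Continued fraction (modified Lentz method) for the upper tail.
    tiny = 1e-300
    b = z + 1.0 - a
    c = 1.0 / tiny
    d = 1.0 / b
    h = d
    for i in range(1, 1000):
        an = -i * (i - a)
        b += 2.0
        d = an * d + b
        if abs(d) < tiny:
            d = tiny
        c = b + an / c
        if abs(c) < tiny:
            c = tiny
        d = 1.0 / d
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < 1e-14:
            break
    return h * math.exp(log_prefactor)


# Chi-square difference reported for the N170 latency models.
p = chi2_sf(20.19, 21)
print(round(p, 2))  # should be close to the reported p = .51
```

A large p value here means that fixing the variances at zero does not significantly worsen the fit, so the more parsimonious model is retained.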

The third model (Table 2 and left side of Fig. 2a) tested individual differences over all emotion categories compared with the neutral condition. Hence, the indicators related to all emotion categories (across intensity levels and odd and even trials) were aggregated into one simplified score that served as a latent variable representing the difference in N170 latencies between emotional and neutral expressions. The variance of the simplified, baseline-free LDS of N170 latencies in the third model was not significant. Fixing the variance of the baseline-free LDS at zero in the fourth model did not impair the model fit significantly, Δχ² = 0.54, Δdf = 1, p = .77.

Fig. 2. In the final measurement models for the N170 components (amplitude and latency), the indicators for emotional expressions (Emo) are aggregates, with estimated loadings to one simplified LDS (ΔEMO_N) common to all emotional expressions. The baseline-free LDS (εΔEMO_N) represents the amount of the individual change difference in amplitude between emotional expressions and the neutral condition (N). (a) For N170 latencies, the variance of this LDS εΔEMO_N was fixed at zero, and only the latent factor representing the processing of neutral expressions (N) was correlated with the behavioral factors (in the figure shown as face perception, FP, for example). (b) In contrast, for N170 amplitudes, only the LDS εΔEMO_N was correlated with the behavioral factors.

Summarizing, the results of the model series for N170 latencies did not indicate emotion-specific individual differences for (a) any emotional expressions in particular, or (b) overall emotional expressions as compared with neutral faces. Hence, in the final measurement model, only the variance of the latent factor representing the N170 latency to neutral faces was freely estimated (Table 2, right side of Fig. 2a).

N170 amplitudes

In contrast to the model for N170 latencies, the first measurement model estimated for the N170 amplitudes revealed significant variances of the baseline-free LDSs for anger, σ²(εΔAN_N) = .45, p < .05; fear, σ²(εΔFE_N) = .67, p < .05; and happiness, σ²(εΔHA_N) = .66, p < .05. When we included a higher-order factor in a second model, these variances were no longer significant (see the structure depicted in Fig. 3). This second model indicated a significant variance only for the higher-order N170 amplitude factor, σ²(εΔEMO_N) = .73, p < .05. In the third model, the variances of the first-order baseline-free difference score factors for all emotional expression categories (εΔAN_N, εΔDI_N, εΔFE_N, εΔHA_N, εΔSA_N, εΔSU_N) were fixed at zero, and only the variance of the higher-order factor, representing the shift in amplitude of all emotional relative to neutral expressions, was freely estimated. Fixing these variances to zero did not diminish the fit, Δχ² = 14.78, Δdf = 11 (see Table 3 and Fig. 3). This model still showed significant variance for the N170 higher-order factor, σ²(εΔEMO_N) = .40, p < .05.

In all, measurement models of N170 amplitudes revealed systematic individual differences regarding the shift in amplitude for emotional relative to neutral expressions. The final measurement model for N170 amplitudes (Table 3, Fig. 2b) used aggregated indicators across trials for processing different emotion categories as indicators, and one baseline-free LDS variable representing the processing of all emotional expressions relative to the neutral baseline.

EPN and LPC amplitudes

The sequences of models are equivalent for ERP amplitudes in the time frame of the EPN and LPC, because the results across subsequent models testing emotion specificity were similar. The first model (Fig. 1) revealed significant individual differences for the baseline-free LDS estimated for all facial expressions in both the EPN and LPC components (Table 4). The correlations between emotion-specific factors (εΔAN_N, εΔDI_N, εΔFE_N, εΔHA_N, εΔSA_N, εΔSU_N) were very high (see Supplementary Table 4).

Hence, introducing higher-order EPN and LPC factors, respectively, to account for these correlations was a plausible and parsimonious modeling step. After including the higher-order factor in the second model (see Fig. 3), only the variance of the first-order baseline-free LDS for happiness remained significant in the case of the EPN, σ²(εΔHA_N) = .28, p < .01, and those for happiness and surprise remained significant for the LPC, σ²(εΔHA_N) = .32, p < .01, and σ²(εΔSU_N) = .11, p < .05, respectively. Consequently, in the third model for the EPN and LPC, the residual variances of all expressions but happiness (EPN) or happiness and surprise (LPC) were fixed at zero (see Table 4, EPN and LPC sections). The comparison with the second model did not reveal significant fit impairments: EPN, Δχ² = 5.70, Δdf = 9, p = .77; and LPC, Δχ² = 8.21, Δdf = 7, p = .31. As a further statistical test, in a fourth model the residual variance for the first-order happiness LDS factor was also fixed at zero, as well as that for surprise (in the case of the LPC, given the relatively low residual variance, σ²(εΔSU_N) = .11, of the first-order surprise factor). However, as a consequence of these parameter restrictions, the model fits deteriorated relative to the third model: EPN, Δχ² = 24.56, Δdf = 2, p < .001, and LPC, Δχ² = 47.21, Δdf = 4, p < .001.

Summarizing, the measurement models revealed systematic and specific individual differences in EPN and LPC amplitudes for expressions of happiness, on the one hand, and for a higher-order factor including all negative expressions, on the other. The final measurement models used aggregates across all trials as indicators for expressions of anger, disgust, fear, sadness, and surprise, collapsed onto one baseline-free LDS factor for negative expressions, and a separate baseline-free LDS factor for happiness (see Fig. 4).

Fig. 4. In the final measurement models for the EPN and LPC components, indicators for the ERPs of facial expressions of anger, disgust, fear, sadness, and surprise were aggregated and simplified in one latent factor (Neg_Emo), which was compared with the latent factor for neutral (N). The indicators for happiness were modeled separately (HA). The two resulting baseline-free LDSs for negative and positive expressions are both correlated with the behavioral factors (shown in the figure as face perception, FP, for example).

Measurement model for the EPN contrasting the neutral conditions

In this model, the chewing condition was considered as the experimental condition and contrasted to blinking as the baseline (see Fig. 5). Each latent factor in the model (blinking, chewing) contained two indicators. The model showed a very good fit to the data: CFI = 1, RMSEA = .00, SRMR = .001, χ²(1) = 0.35, and revealed significant individual differences of the baseline-free LDS variable contrasting chewing with blinking, σ²(ΔN2_N1) = .69, p < .01.
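
The RMSEA of .00 follows directly from the fact that the model χ² is smaller than its degrees of freedom. A minimal sketch of the commonly used RMSEA formula is given below. The sample sizes are hypothetical placeholders, because the formula returns zero for this model at any sample size; note also that some programs use n rather than n − 1 in the denominator, so this is one convention and not necessarily the one used in the study.

```python
import math


def rmsea(chi2, df, n):
    """RMSEA from the model chi-square, its degrees of freedom, and sample size n."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Chi-square and df as reported for the blinking-versus-chewing model.
for n in (50, 100, 200):  # hypothetical sample sizes
    print(n, rmsea(0.35, 1, n))
```

Because χ² − df is negative here, the numerator is truncated at zero and the index equals zero whatever value is assumed for n.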

Fig. 5. Measurement model for the difference in amplitude between the two neutral conditions (blinking, chewing) in the EPN interval, using blinking as the baseline condition. The LDS representing the processing of nonemotional facial movement is correlated with the behavioral factors (shown in the figure as face perception, FP, for example).

Brain–behavior relationships

In structural models, ability factors were related to the final measurement models for the N170 latency and amplitude and the EPN and LPC amplitudes—that is, four single models correlating each measure of each component and one behavior factor at a time. Table 5 provides the results of all correlations and model fit indices obtained for the models estimating brain–behavior relationships. To visualize the data, Fig. 7 in the supplementary material shows scatterplots of the substantial correlations reported in Table 5.

The most parsimonious measurement model for the N170 latency did not include an LDS factor representing emotion specificity. Thus, only the latent factor representing the N170 latency in response to neutral faces was correlated to the ability factors in four structural models (Fig. 2a). All four models showed good fits to the data (Table 5, N170 latency section). The results showed negative correlations with face perception, r = –.51, p < .001, and face memory, r = –.42, p < .001. The correlations with facial emotion perception and memory were not significant, r = .19, p = .122, and r = .19, p = .148, respectively.

For the N170 amplitude, the most parsimonious measurement model included an LDS factor representing individual differences in the negative shift in N170 amplitude for emotional (aggregated across emotion categories) relative to neutral expressions. The four structural models estimating brain–behavior relationships (Table 5, N170 amplitude section) revealed the following correlations: face perception (r = .27, p = .162), face memory (r = –.13, p = .443), facial emotion perception (r = .26, p = .194), and facial emotion memory (r = .04, p = .824).

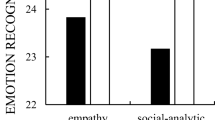

The final measurement models for the EPN and LPC were similar and included two valence-specific LDS factors, one representing the processing of happiness as compared to neutral expressions (Table 5, EPN/LPC Ha factors), and one for negative as compared to neutral expressions (Table 5, EPN/LPC Ne factors). The structural models estimating relationships with the behavioral factors showed acceptable fits. The correlation of the EPN baseline-free LDS for negative expressions with facial emotion perception was r = .32, p = .035. The EPN baseline-free LDS representing individual differences in the processing of happiness versus neutral expressions correlated with facial emotion perception, r = .45, p < .001, and with face perception in general, r = .41, p = .002. Other correlations involving the latent EPN factors were not significant. For the LPC (Table 5, LPC section), we observed only a moderate correlation between the LDS factor for negative expressions and emotion memory, r = .29, p = .029.

Finally, the four models estimating the relation of the measurement model contrasting the two different neutral conditions (blinking vs. chewing) in the EPN amplitudes with behavioral factors showed a significant correlation with facial emotion perception, r = .51, p < .001. All other correlations did not reach significance (Table 5, EPN neutral section).

Autoregressive models relating the N170, EPN and LPC components

These models estimated the temporal dynamics and linear dependency between the LDSs for the N170, EPN, and LPC amplitudes. Toward this end, later components were regressed onto earlier ones. That is, the LPC was regressed onto the EPN, and by means of an autoregressive path of the second-order factor, onto the N170 LDS representing the processing of emotional versus neutral expressions. Because the EPN and LPC turned out to be valence-specific, we calculated separate autoregressive models for negative and happy expressions, using the same LDS modeling as we described in the measurement models.

The first autoregressive model, representing the processing of negative as compared with neutral expressions, fitted the data well: CFI = .98, RMSEA = .07, SRMR = .02, χ²(180) = 276.23. The autoregressive paths were β(EPN_N170) = .73, p < .01; β(LPC_N170) = –.26, p = .45; and β(LPC_EPN) = .09, p = .79, indicating a strong linear dependency of the EPN determined by emotion effects in N170 amplitudes. The second autoregressive model, representing the processing of happiness relative to the neutral condition, also showed very good fit: CFI = .96, RMSEA = .09, SRMR = .04, χ²(126) = 249.39. The autoregressive paths were similar to those for negative emotions: β(EPN_N170) = .83, p < .01; β(LPC_N170) = .18, p = .66; and β(LPC_EPN) = –.27, p = .51 (see Footnote 1).
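
The regression structure of these autoregressive models (EPN regressed on N170; LPC regressed on both EPN and N170) can be illustrated with observed variables and ordinary least squares. The sketch below is only an analogue under assumptions: the study estimated the paths between latent difference scores, whereas this example simulates observed scores. The generating coefficients, sample size, seed, and function names are all hypothetical.

```python
import random


def mean(xs):
    return sum(xs) / len(xs)


def cov(xs, ys):
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def slope_one(x, y):
    """OLS slope of y on a single predictor x."""
    return cov(x, y) / cov(x, x)


def slopes_two(x1, x2, y):
    """OLS slopes of y on two predictors, solved from the 2x2 normal equations."""
    s11, s22, s12 = cov(x1, x1), cov(x2, x2), cov(x1, x2)
    s1y, s2y = cov(x1, y), cov(x2, y)
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


# Simulated (hypothetical) emotion effects for three successive components.
rng = random.Random(1)
n = 5000
n170 = [rng.gauss(0, 1) for _ in range(n)]
epn = [0.7 * a + rng.gauss(0, 0.7) for a in n170]
lpc = [-0.2 * a + 0.1 * b + rng.gauss(0, 1) for a, b in zip(n170, epn)]

# Later components regressed onto earlier ones.
b_epn_n170 = slope_one(n170, epn)
b_lpc_n170, b_lpc_epn = slopes_two(n170, epn, lpc)
print(round(b_epn_n170, 2), round(b_lpc_n170, 2), round(b_lpc_epn, 2))
```

Estimating the two LPC paths jointly matters because the EPN and N170 effects are themselves correlated, so each path reflects the contribution of one predictor with the other held constant.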

Discussion

In the present study, we investigated individual differences in brain responses to emotional face stimuli. Toward this end, ERPs—modeled as latent factors—were considered as neurocognitive correlates of different stages in the processing of facial identity and expression information, which arguably underlie individual differences in social cognition abilities. Two questions stand at the center of the present report: Do individuals who show strong brain activation to happy faces tend to show similar reactions to other facial expressions, like anger or sadness? And are ERPs to facial expressions of emotion related to individual differences in face cognition abilities?

Emotion specificity

The results of the N170 latencies were straightforward and confirmed our hypothesis. The measurement models showed no effect of emotion on the N170 latency, with respect either to the average across persons or to individual differences. Only the latent factor representing the speed of structural encoding of neutral stimuli showed systematic individual differences. This finding indicates that individuals differ in how fast they create structural and holistic representations of neutral faces, but any possible differences in the encoding of facial expressions of emotion are not statistically significant at this stage of processing. Thus, our data indicate that the speed of structural face encoding is an emotion-unspecific process, overlapping for neutral and emotional faces, at least as far as individual differences are concerned.

The measurement model that fitted the N170 amplitudes best included an LDS factor aggregated across all facial expressions, illustrative of a negative shift in amplitude for emotional as compared to neutral expressions. This latent factor revealed systematic individual differences, indicating that individuals differ in two aspects of the structural encoding process: (1) the amount of resources required to form structural representations of faces in general, and (2) modulation of the structural encoding of faces displaying emotional expressions, relative to neutral faces. This is in line with the view that the distinction between emotional and neutral expressions starts in the N170 time window, partially overlapping the process of structural encoding (e.g., Luo et al., 2010). Brain–behavior relationships and autoregressive models for the ERPs helped us to clarify the implications of this finding.

Emotion-specific individual differences in ERPs appeared during the EPN time interval and continued to be discernible for the LPC. The measurement models of the EPN and LPC amplitudes indicated a latent structure involving two dimensions, one representing the difference in amplitude between happy and neutral expressions, and another representing the difference between negative and neutral expressions. Both dimensions showed significant and distinct individual differences. A similar latent structure, with distinct contributions for the valence dimensions was reported for a behavioral measure of sensitivity to emotional expressions (Suzuki et al., 2010). Our results extend this finding to brain responses and indicate that individuals who allocate more processing resources to visual brain systems (EPN), and those who provide sustained processing (LPC) to a given negative expression due to its intrinsic relevance (e.g., threat), are likely to show similar enlargements for other negative expressions, but not necessarily of the same amount for expressions of happiness, and vice versa. Hence, the amount of processing resources devoted to process emotional stimuli and the selective attention triggered by their intrinsic relevance may engage distinct brain systems for the perception and inhibition of positive and negative emotional information.

The second aim of the present study was to examine whether this enhancement in the ERPs reflecting emotion processing was associated with individual differences in facial emotion perception and memory. This will be discussed after we address the neutral conditions.

Models comparing the ERPs to neutral facial movements—that is, blinking versus chewing—revealed systematic individual differences in the processing of facial movement per se during the time window of the EPN. Hence, beyond the experimental effects (Recio, Schacht, & Sommer, 2014), our results demonstrate that some individuals show particularly enhanced processing of nonemotional facial movement. These individuals might engage more reflexive attention in response to facial movement per se, and thus track facial changes unfolding over time more accurately than others.

Taken together, our estimation of individual differences in ERPs reflecting the processing of dynamic facial expressions argues in favor of theories that posit brain systems for processing of the broader emotional dimensions of valence and arousal (e.g., Russell, 2003), rather than distinct systems for each facial expression (e.g., Ekman, 1999).

Brain–behavior relationships

Notably, we replicated the negative correlation of N170 latencies with face perception and memory abilities that had been observed in two previous studies, indicating that a faster process of creating structural representations of faces facilitates accurate performance in recognizing and remembering identity from faces (Herzmann et al., 2009; Kaltwasser et al., 2014). Since this relationship appears to be consistent across different tasks that focus on face identity or emotion processing, different stimulus materials (neutral or emotional, dynamic or static), and different modeling approaches (confirmatory factor analysis, LDS), the N170 latency seems to be a robust psychophysiological predictor of an individual’s performance at identifying and remembering identity from faces.

Individual differences in the baseline-free LDS representing the shift in N170 amplitudes for emotional relative to neutral expressions were unrelated to differences in general face cognition or emotion-processing abilities. Autoregressive models capturing the temporal dynamics of ERPs across the N170, EPN, and LPC components, however, revealed a strong dependency between individual differences in the N170 and EPN, suggesting an overlap between the structural encoding of faces and emotion processing in these time windows.

Brain–behavior relations involving the EPN amplitude were separately calculated for expressions of negative and positive (happiness) emotions. The latent EPN factor representing the amplitude difference for happiness versus neutral expressions correlated with face perception and facial emotion perception. This finding is in line with our hypothesis, and indicates that individuals who devote more sensory resources to the processing of facial expressions of happiness tend to perform better in tasks that involve perceiving both the identity and the emotion revealed by faces. The latent EPN factor representing the effect for negative expressions showed a moderate but, after correction for multiple comparisons, nonsignificant correlation with facial emotion perception. The same effect for negative emotions might be observed in future studies with more power, thereby increasing the generality of this finding.

Interestingly, the latent factor representing the amplitude difference between two neutral expressions—which according to Recio, Schacht, and Sommer (2014) could represent a low-level pattern detector that engages reflexive attention to search for meaningful patterns in facial movement—showed a moderate to high correlation with facial emotion perception ability. This intriguing finding suggests that this ability relies to some extent on the reactivity of the visual cortex to facial movement per se. This result is not surprising, given the type of information necessary to categorize facial expressions. At the perceptual level, this information is precisely how changes in facial configuration unfold over time during a facial expression. Hence, individuals who allocate more processing resources to brain systems dedicated to the visual processing of any kinds of facial movement might also dedicate more reflexive attention to the reconfiguration of facial features during emotional expressions. Enhanced processing and greater attention put individuals at an advantage for perceiving and categorizing facial expressions.

Taken together, the results from the EPN indicate that facial emotion perception ability is related not only to the amount of sensory resources devoted to the processing of emotional information, but also to the quick detection of facial movements as meaningful patterns in the flow of incoming sensory information. This novel finding fits well with the notion that the brain systems involved in selective attention driven by salient perceptual features (e.g., motion) or motivationally relevant information (e.g., emotion) aim at the same targets in the visual brain systems (e.g., Pourtois et al., 2012).

The amount of sensory resources mobilized during the processing of dynamic expressions of happiness correlated with face perception abilities, as well, indicating that individual differences in this ability are related to activation in the brain systems engaged during the processing of facial expressions of happiness. This unexpected finding argues against the independence of perceptions of facial identity and emotional expressions and their underlying brain systems, as was proposed in early models of face processing (e.g., Bruce & Young, 1986). However, our results are in line with recent data indicating interdependence between the processing of facial identity and facial expressions (e.g., Haxby & Gobbini, 2011), and more particularly, with the “smiling effect,” a benefit for the recognition and encoding of smiling faces that has been observed in some studies (e.g., Lander & Butcher, 2015).

The latent LPC factor for negative expressions showed a moderate correlation with individual differences in memory for emotional facial expressions. This relation should be interpreted with care, since it would not be significant if a Bonferroni correction were applied. It suggests that participants who devote more sustained processing and motivated attention to facial expressions of negative emotions perform better in tasks involving remembering and recognizing emotions from faces. Negative expressions might be very effective at recruiting attention resources because they signal danger, and this gain in attention seems to benefit the representation of emotion in memory. Alternatively, the emotion-specific relationships observed for the LPC could relate to the lower probability of the positive (one expression), relative to the negative (five expressions), category. Happiness being the only positive expression could benefit processing, for example, if participants used polarity to solve the task, and then the motivated attention toward happy faces would occur at an earlier stage. This explanation does not seem to be plausible, because facial expressions of happiness showed lower LPC amplitudes than did the more frequent negative ones, a result opposite to typical oddball effects (e.g., Duncan-Johnson & Donchin, 1977). Future studies might control for this issue by increasing the number of positive expressions.
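
The caveat about the Bonferroni correction can be made explicit with a small worked example. The sketch assumes a family of four tests (one ERP factor related to each of the four ability factors) and a nominal α of .05. Both values are our assumptions for illustration, since the family size used for the correction is not stated here.

```python
def survives_bonferroni(p, n_tests, alpha=0.05):
    """True if p stays below the Bonferroni-adjusted threshold alpha / n_tests."""
    return p < alpha / n_tests


n_tests = 4  # assumed family size: four ability factors per ERP factor
threshold = 0.05 / n_tests
print(threshold)

# p values as reported in the brain-behavior results.
print(survives_bonferroni(0.029, n_tests))  # LPC (negative) with emotion memory
print(survives_bonferroni(0.035, n_tests))  # EPN (negative) with emotion perception
print(survives_bonferroni(0.002, n_tests))  # EPN (happiness) with face perception
```

Under these assumptions the adjusted threshold is .0125, so the two correlations involving negative expressions fall short of it, whereas the EPN happiness correlation with face perception remains below it.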

We used a condition showing moderate-intensity expressions in order to increase the task difficulty and facilitate individual differences. Still, it might be argued that the task in the EEG study was rather easy (performance was nearly at ceiling for some emotion categories). Furthermore, excluding those participants with the worst performance (n = 3) may have reduced individual differences in the ERPs. We do not see a limitation here, because we aimed to measure individual differences associated with the processing of the emotional content of stimuli, rather than the task difficulty per se. Future research could investigate task effects, controlling such aspects as task difficulty or the level of processing.

Main contributions of the present study, and future directions

Do individuals who show strong brain activation to a given expression tend to show similar reactions to other facial expressions? As expected, individual differences in the N170 component showed little emotion specificity; they mainly seemed related to overlap with the EPN effect. Emotion-specific individual differences in brain responses appeared for the EPN and LPC components, in the form of valence specificity; that is, individuals with a large EPN (or LPC) for a given negative expression tended to show large effects for other negative expressions, as well, but not necessarily for happiness, and vice versa. This finding suggests distinct brain systems for the processing of valence categories.

Are the ERPs to facial expressions related to individual differences in face cognition abilities? Our results confirm that the speed of structural encoding, measured in the N170 latency, is negatively correlated with face perception and face memory abilities (Herzmann et al., 2009; Kaltwasser et al., 2014), highlighting the utility of the N170 latency as a neurocognitive correlate of face perception. The latent EPN factor for happiness expressions correlated with face perception and facial emotion perception. Thus, individual differences in these abilities to some extent may be related to the amount of sensory resources devoted to the processing of facial expressions of happiness. A similar brain–behavior relationship was observed for the LPC to negative expressions and individual differences in memory for emotional facial expressions.

The brain–behavior relationships reported here might reflect a particular time course in the processing of valence, first positive and neutral, then negative expressions. This time course could relate to the lower probability of the positive and neutral categories in the design we employed in the EEG experiment. We believe, however, that these findings fit well with the advantage for happy faces observed in many studies, which has been considered to rely on an earlier encoding of expressive intensity (e.g., Calvo & Beltrán, 2013). The EPN results presented here further indicate that enhanced early encoding of happiness expressions underlies individual differences in recognizing emotion from faces.

The roles of perceptual and affective processes in the recognition of facial expressions have often been debated in the emotion literature. A recent integrative review concluded that expression recognition relies to a greater extent on perceptual processing of morphological features in the face than on affective processes (Calvo & Nummenmaa, 2016). An intriguing finding in the present study is that individual differences in the ability to recognize emotion from faces are related to the amounts of sensory resources available in two brain systems: those dedicated to the processing of affective information—namely, the encoding of valence categories—and also those dedicated to perceptual processing per se—namely, the encoding of (nonemotional) changes in facial features unfolding over time.

An important consideration when evaluating behavioral factors is the distinction between accuracy and speed in performance (e.g., Carroll, 1993). Hildebrandt, Schacht, Sommer, and Wilhelm (2012) showed that speed in facial emotion perception is highly correlated with the speed of face perception, but is still separable from the speed of perceiving nonfacial stimuli. Here we focused on accuracy indicators. In future research, it will be interesting to estimate the relationships of the ERPs reflecting face and emotion processing with different indicators of performance speed.

Notes

One reviewer was concerned whether the psychometric performance variables and the performance in emotion recognition during the EEG recording were correlated, since both are indicators of the same latent variable, facial emotion perception. To answer this question, we estimated one-factor measurement models separately for each emotion category, including the three performance indicators for facial emotion perception measured in the psychometric session and, additionally, two performance indicators during the EEG session. The latter were calculated as unbiased hit rates for each facial expression in the high- and moderate-intensity conditions. These measurement models showed that the factor loadings of the performance indicators from the EEG session were somewhat lower than those from the psychometric session; still, they were consistent with the other indicators of the latent variable emotion perception. The main reason for the difference in factor loadings between the two sessions was that the emotion recognition performance data in the EEG session task were close to ceiling.

One reviewer had doubts about whether we could replicate the observed brain–behavior relationships using ERP and performance data from the EEG session only. We calculated Pearson correlations between the ERPs, separated for each facial expression and each intensity condition, as brain measures, and unbiased hit rates, also separated by condition, as behavioral measures. The correlations tended to replicate the direction of the relationships observed in the data from the psychometric session—for example, a negative correlation between the N170 latency and face perception—but were somewhat lower. However, the limited variance in the performance data from the EEG session—close to ceiling for several conditions—complicates the interpretation of these correlations. Our multitask approach should be seen as a strength of our study, because, in contrast to single-task approaches, neither task specificities nor statistical artifacts can account for the observed relationships.

References

Abrosoft. (2010). FantaMorph (version 5.0) [Computer software]. Retrieved from www.fantamorph.com/index.html

Adolphs, R. (2002). Recognizing emotion from facial expressions: Psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews, 1, 21–61.

Arsalidou, M., Morris, D., & Taylor, M. J. (2011). Converging for the advantage of dynamic facial expressions. Brain Topography, 24, 149–163.

Barrett, L. F. (2011). Constructing emotion. Psychological Topics, 3, 359–380.

Batty, M., & Taylor, M. J. (2003). Early processing of the six basic facial emotional expressions. Cognitive Brain Research, 17, 613–620.

Bollen, K. A., & Long, J. S. (Eds.). (1993). Testing structural equation models. Newbury Park, CA: Sage.

Bruce, V., & Young, A. W. (1986). Understanding face recognition. British Journal of Psychology, 77, 305–327.

Calvo, M. G., & Beltrán, D. (2013). Recognition advantage of happy faces: Tracing the neurocognitive processes. Neuropsychologia, 51, 2051–2060.

Calvo, M. G., & Beltrán, D. (2014). Brain lateralization of holistic versus analytic processing of emotional facial expressions. NeuroImage, 92, 237–247.

Calvo, M. G., & Nummenmaa, L. (2016). Perceptual and affective mechanisms in facial expression recognition: An integrative review. Cognition and Emotion, 30, 1081–1106. doi:10.1080/02699931.2015.1049124

Carroll, J. B. (1993). Human cognitive abilities: A survey of factor-analytic studies. Cambridge, UK: Cambridge University Press.

Cornwell, B. R., Alvarez, R. P., Lissek, S., Kaplan, R., Ernst, M., & Grillon, C. (2011). Anxiety overrides the blocking effects of high perceptual load on amygdala reactivity to threat-related distractors. Neuropsychologia, 49, 1363–1368.

Duncan-Johnson, C. C., & Donchin, E. (1977). On quantifying surprise: The variation of event-related potentials with subjective probability. Psychophysiology, 14, 456–467.

Eimer, M. (2011). The face-sensitive N170 component of the event-related brain potential. In A. J. Calder, G. Rhodes, M. H. Johnson, & J. V. Haxby (Eds.), The Oxford handbook of face perception (pp. 329–344). Oxford, UK: Oxford University Press.

Ekman, P. (1999). Basic emotions. In T. Dalgleish & T. Power (Eds.), Handbook of cognition and emotion (pp. 45–60). New York, NY: Wiley.

Hamann, S., & Canli, T. (2004). Individual differences in emotion processing. Current Opinion in Neurobiology, 14, 233–238.

Haxby, J. V., & Gobbini, M. I. (2011). Distributed neural systems for face perception. In A. J. Calder, G. Rhodes, M. H. Johnson, & J. V. Haxby (Eds.), The Oxford handbook of face perception (pp. 93–110). Oxford, UK: Oxford University Press.

Herzmann, G., Danthiir, V., Wilhelm, O., Sommer, W., & Schacht, A. (2007). Face memory: A cognitive and psychophysiological approach to the assessment of antecedents of emotional intelligence. In G. Matthews, M. Zeidner, & R. D. Roberts (Eds.), Emotional intelligence: Knowns and unknowns (pp. 199–229). Oxford, UK: Oxford University Press.

Herzmann, G., Kunina, O., Sommer, W., & Wilhelm, O. (2009). Individual differences in face cognition: Brain–behavior relationships. Journal of Cognitive Neuroscience, 22, 571–589.

Hildebrandt, A., Schacht, A., Sommer, W., & Wilhelm, O. (2012). Measuring the speed of recognising facially expressed emotions. Cognition and Emotion, 26, 650–666.

Hildebrandt, A., Sommer, W., Schacht, A., & Wilhelm, O. (2015). Perceiving and remembering emotional facial expressions—A basic facet of emotional intelligence. Intelligence, 50, 52–67.

Kaltwasser, L., Hildebrandt, A., Recio, G., Wilhelm, O., & Sommer, W. (2014). Neurocognitive mechanisms of individual differences in face cognition: A replication and extension. Cognitive, Affective, & Behavioral Neuroscience, 14, 861–878. doi:10.3758/s13415-013-0234-y

Künecke, J., Hildebrandt, A., Recio, G., Sommer, W., & Wilhelm, O. (2014). Facial EMG responses to emotional expressions are related to emotion perception ability. PLoS ONE, 9, e84053. doi:10.1371/journal.pone.0084053

Lander, K., & Butcher, N. (2015). Independence of face identity and expression processing: Exploring the role of motion. Frontiers in Psychology, 6, 255. doi:10.3389/fpsyg.2015.00255

Langner, O., Dotsch, R., Bijlstra, G., Wigboldus, D. H. J., Hawk, S. T., & van Knippenberg, A. (2010). Presentation and validation of the Radboud Faces Database. Cognition and Emotion, 24, 1377–1388.

Leppänen, J. M., & Nelson, C. A. (2009). Tuning the developing brain to social signals of emotions. Nature Reviews, 10, 37–47.

Luo, W., Feng, W., He, W., Wang, N. Y., & Luo, Y. J. (2010). Three stages of facial expression processing: ERP study with rapid serial visual presentation. NeuroImage, 49, 1857–1867.

Mayer, J. D., Roberts, R. D., & Barsade, S. G. (2008). Human abilities: Emotional intelligence. Annual Review of Psychology, 59, 507–536. doi:10.1146/annurev.psych.59.103006.093646

McArdle, J. J. (2009). Latent variable modeling of differences and changes with longitudinal data. Annual Review of Psychology, 60, 577–605.

Muthén, L. K., & Muthén, B. O. (2002). How to use a Monte Carlo study to decide on sample size and determine power. Structural Equation Modeling, 9, 599–620. doi:10.1207/S15328007SEM0904_8

Muthén, L. K., & Muthén, B. (2012). Mplus: The comprehensive modelling program for applied researchers: User’s guide (Version 6). Los Angeles, CA: Muthén & Muthén.

Pourtois, G., Schettino, A., & Vuilleumier, P. (2012). Brain mechanisms for emotional influences on perception and attention: What is magic and what is not. Biological Psychology, 92, 492–512.

Recio, G., Schacht, A., & Sommer, W. (2014). Recognizing dynamic facial expressions of emotion: Specificity and intensity effects in event-related brain potentials. Biological Psychology, 96, 111–125.

Recio, G., Shmuilovich, O., & Sommer, W. (2014). Should I smile or should I frown? An ERP study on the voluntary control of emotion-related facial expressions. Psychophysiology, 51, 789–799.

Recio, G., Sommer, W., & Schacht, A. (2011). Psychophysiological correlates of perceiving and evaluating static and dynamic facial emotional expressions. Brain Research, 1376, 66–75.

Rellecke, J., Sommer, W., & Schacht, A. (2013). Emotion effects on the N170: A question of reference? Brain Topography, 26, 62–71.

Rogosa, D. R., & Willett, J. B. (1983). Demonstrating the reliability of the difference score in the measurement of change. Journal of Educational Measurement, 20, 335–343.

Russell, J. A. (2003). Core affect and the psychological construction of emotion. Psychological Review, 110, 145–172. doi:10.1037/0033-295X.110.1.145

Sato, W., Fujimura, T., Kochiyama, T., & Suzuki, N. (2013). Relationships among facial mimicry, emotional experience, and emotion recognition. PLoS ONE, 8, e57889. doi:10.1371/journal.pone.0057889

Schlegel, K., Grandjean, D., & Scherer, K. R. (2012). Emotion recognition: Unidimensional ability or a set of modality- and emotion-specific skills? Personality and Individual Differences, 53, 16–21.

Schupp, H. T., Flaisch, T., Stockburger, J., & Junghöfer, M. (2006). Emotion and attention: Event-related brain potential studies. In G. E. S. Anders, M. Junghöfer, J. Kissler, & D. Wildgruber (Eds.), Understanding emotions (pp. 31–51). Amsterdam, The Netherlands: Elsevier.

Schweinberger, S. R. (2011). Neurophysiological correlates of face recognition. In A. J. Calder, G. Rhodes, M. H. Johnson, & J. V. Haxby (Eds.), The Oxford handbook of face perception (pp. 345–366). Oxford, UK: Oxford University Press.

Stemmler, G., & Wacker, J. (2010). Personality, emotion, and individual differences in physiological responses. Biological Psychology, 84, 541–551.

Stevens, J. S., & Hamann, S. (2012). Sex differences in brain activation to emotional stimuli: A meta-analysis of neuroimaging studies. Neuropsychologia, 50, 1578–1593.

Suzuki, A., Hoshino, T., & Shigemasu, K. (2006). Measuring individual differences in sensitivities to basic emotions in faces. Cognition, 99, 327–353. doi:10.1016/j.cognition.2005.04.003

Suzuki, A., Hoshino, T., & Shigemasu, K. (2010). Happiness is unique: A latent structure of emotion recognition traits revealed by statistical model comparison. Personality and Individual Differences, 48, 196–201.

Wagner, H. (1993). On measuring performance in category judgement studies for nonverbal behaviour. Journal of Nonverbal Behaviour, 17, 3–28.

Whalen, P. J., Raila, H., Bennett, R., Mattek, A., Brown, A., Taylor, J., … Palmer, A. (2013). Neuroscience and facial expressions of emotion: The role of amygdala–prefrontal interactions. Emotion Review, 5, 78–83. doi:10.1177/1754073912457231

Wilhelm, O., Herzmann, G., Kunina, O., Danthiir, V., Schacht, A., & Sommer, W. (2010). Individual differences in face cognition. Journal of Personality and Social Psychology, 99, 530–548.

Wilhelm, O., Hildebrandt, A., Manske, K., Schacht, A., & Sommer, W. (2014). Test battery for measuring the perception and recognition of facial expressions of emotion. Frontiers in Psychology, 5, 404. doi:10.3389/fpsyg.2014.00404

Williams, L. M., Palmer, D., Liddell, B. J., Song, L., & Gordon, E. (2006). The “when” and “where” of perceiving signals of threat versus non-threat. NeuroImage, 31, 458–467.

Wilson-Mendenhall, C., Barrett, L. F., & Barsalou, L. W. (2013). Situating emotional experience. Frontiers in Human Neuroscience, 7(764), 1–16. doi:10.3389/fnhum.2013.00764

Electronic supplementary material

Fig. 6

ERPs and topographic maps (polarity symbols overlapped) for the EPN (top) and LPC (bottom), separated for the six facial expressions of emotion under study: anger (AN), disgust (DI), fear (FE), happiness (HA), sadness (SA), and surprise (SU).

Fig. 7

Correlation plots based on factor scores estimated for each latent variable in the models reported in Table 5. Note that factor scores do not completely retain the model structure; thus, the latent-level correlations estimated in the model do not always match the correlations between factor scores. Nevertheless, these plots allow some of the results presented in Table 5 to be visualized. In the Discussion, we interpret only the correlations from the latent variable model. Left panel (N170 latency): top plot, r = −.51 in the model and r = −.61 for the factor scores depicted in the figure; bottom plot, r = −.41 in the model and r = −.41 for the factor scores. Right panel (EPN amplitude): top plot, r = .44 in the model and r = .53 for the factor scores; bottom plot, r = .32 in the model and r = .38 for the factor scores.

Recio, G., Wilhelm, O., Sommer, W. et al. Are event-related potentials to dynamic facial expressions of emotion related to individual differences in the accuracy of processing facial expressions and identity? Cogn Affect Behav Neurosci 17, 364–380 (2017). https://doi.org/10.3758/s13415-016-0484-6
