Abstract

Approximately 40 years of research on modality dominance has shown that humans are inclined to focus on visual information when presented with compounded visual and auditory stimuli. The current paper reports a series of experiments showing evidence of both auditory and visual dominance effects. Using a behavioral oddball task, we found auditory dominance when examining response times to auditory and visual oddballs (Experiment 1): simultaneously presenting pictures and sounds slowed down responses to visual but not auditory oddballs. However, when requiring participants to make separate responses for auditory, visual, and bimodal oddballs, auditory dominance was eliminated with a reversal to visual dominance (Experiment 2). Experiment 3 replicated auditory dominance and showed that increased task demands and asking participants to analyze cross-modal stimuli conjunctively (as opposed to disjunctively) cannot account for the reversal to visual dominance. Mechanisms underlying sensory dominance and factors that may modulate sensory dominance are discussed.

Existence of competing modality dominances

Many important tasks, such as learning a language and driving a car, require processing and responding to multisensory information (e.g., young word learners often associate spoken words with visually/haptically presented objects). How this information is sorted and ultimately perceived can depend on a number of factors, including, but not limited to, its physical origin, temporal relationship with other stimuli, salience, and which sensory modalities are activated. Assuming that attentional reservoirs are not depleted, information arriving at multiple sensory modalities is often integrated efficiently and easily, as is the case with speech perception (Alsius, Navarra, Campbell, & Soto-Faraco, 2005; Massaro, 1998), even when the two sensory sources provide incongruent messages (McGurk & MacDonald, 1976). However, in other situations, multisensory information can compete for resources, with one sensory modality dominating the other. Given that it is largely unknown which factors lead to a particular sensory modality being dominant in one situation, or being dominated in another, the primary goal of the current study is to better understand possible mechanisms underlying modality dominance effects.

Theoretical developments

While many studies have examined modality dominance effects, mechanisms underlying modality dominance are debated and the pattern of results appears to change across development. According to the modality appropriateness hypothesis, certain properties of stimuli are better processed by a specific sense, leading to a particular modality dominating. For example, it has been purported that the visual modality is superior to the auditory modality at processing spatial information (Howard & Templeton, 1966; O’Connor & Hermelin, 1972; Shimojo & Shams, 2001), whereas the reverse has been theorized for temporal information (Shams, Kamitani, & Shimojo, 2002; Stein, London, Wilkinson, & Price, 1996). Accordingly, which type of sensory dominance (visual or auditory) emerges may be dependent on the conditions under which the stimuli are presented. However, examples of visual dominance are more prevalent in the adult literature, even on temporal tasks that typically favor the auditory modality (Sinnett, Spence, & Soto-Faraco, 2007; see Spence, 2009, for a review).

According to a different account, visual dominance has been explained as an attentional bias toward the visual modality to compensate for the poor alerting qualities of the visual system (Posner, Nissen, & Klein, 1976). Thus, when participants are asked to quickly respond to auditory and visual information, the visual modality often dominates processing and/or responding because participants are trying to compensate for low alerting properties of visual input. However, such an explanation cannot solely account for visual dominance as there is little support showing that visual dominance can be reversed by asking adults to direct their attention to the auditory modality (Koppen & Spence, 2007; Sinnett et al., 2007).

More recent theoretical developments focus directly on the competitive nature of cross-modal processing. For example, extending the biased/integrated competition hypothesis (Desimone & Duncan, 1995; Duncan, 1996), Spence, Parise, and Chen (2012) have argued that brain systems dedicated to the different sensory systems also may compete, with connections between sensory modalities being inhibitory in nature. Given that approximately half of the brain is dedicated to visual processing (Sereno et al., 1995), visual stimuli and the visual system should be more likely to inhibit processing in other sensory systems.

While the sensory system competition account may explain why the visual modality is more likely to dominate other sensory modalities, a different account has been put forward to explain auditory dominance in young children (see Robinson & Sloutsky, 2010a, for a review). The underlying idea is that auditory stimuli are often dynamic and transient in nature, whereas visual stimuli are frequently presented for longer durations and might be less alerting (Posner et al., 1976). Therefore, it might be beneficial to delegate greater attentional resources to auditory stimuli, at least early in the stream of information processing, in order to process the information before it disappears. Assuming that the auditory and visual modalities share the same pool of attentional resources (for example, Eimer & Van Velzen, 2002) and compete for these resources, attentional resources automatically deployed to the auditory modality should come with a cost: attenuated or delayed visual processing. Whereas this account has gained some support early in development, there is little to no support for this account in adults. One goal of the current study was to explore whether some of the assumptions underlying auditory dominance extend to an adult population.

Empirical support for visual dominance

Recent support for visual dominance primarily comes from research examining the Colavita visual dominance effect (Koppen, Alsius, & Spence, 2008; Ngo, Sinnett, Soto-Faraco, & Spence, 2010; Ngo, Cadieux, Sinnett, Soto-Faraco, & Spence, 2011; Sinnett, Soto-Faraco, & Spence, 2008; Sinnett et al., 2007; see Spence, 2009 for a review). In the original demonstration, Colavita (1974) presented participants with unimodal visual or auditory stimuli (a flash or a beep, respectively), with the instruction to respond as quickly as possible to either by pressing a designated response key (see also Colavita, Tomko, & Weisberg, 1976; Colavita & Weisberg, 1979; Egeth & Sager, 1977). The key analysis involved the error rate made on bimodal trials, where the instruction was to respond by pressing both unimodal (visual and auditory) response keys. In these trials, a large number of errors were made, almost exclusively by pressing only the visual unimodal response button. This seminal prepotency finding has since been referred to as the Colavita visual dominance effect (Spence, 2009 and Spence et al., 2012 for reviews). Furthermore, the effect emerged despite response latency to unimodal events often favoring audition over vision, although later research has shown that this is not a requisite effect (Sinnett et al., 2007).

The robustness of the Colavita (1974) visual dominance effect has been demonstrated in a number of recent findings. For instance, visual dominance effects have been observed in investigations designed to control for some potential methodological shortcomings of the original work (Sinnett et al., 2007), to extend the findings to more realistic stimuli and settings (Sinnett et al., 2007; 2008), and to modulate the effect to determine if auditory dominance can be observed (Ngo et al., 2010; 2011). In all situations, visual dominance effects have been observed, with the exception of Ngo et al. (2011) and Robinson and Sloutsky (2013), which are described further below.

Both recent and original demonstrations of the Colavita visual dominance effect (Colavita, 1974) have used error rates to bimodal stimuli as the main measure of visual dominance. That is, when presented with compound auditory and visual stimuli, participants erroneously respond only to the visual event more frequently than they incorrectly respond to only the auditory event (note, a correct answer would be a response indicating the presence of both visual and auditory events). For instance, Sinnett et al. (2007) used this measure in six different experiments designed to explore whether visual dominance findings are due to attentional or sensory-based factors. The findings supported the notion that attention could modulate the effect, and in fact eliminated visual dominance when attention was biased towards the auditory modality (i.e., an equal number of incorrect visual only or auditory only responses were made to bimodal events; see Experiment 5, but note auditory dominance was not observed).

Response demands of the task can modulate whether facilitation or inhibition is observed for multisensory stimuli (Sinnett et al., 2008). For example, participants in Sinnett et al. (2008) were presented with a typical Colavita visual dominance task, requiring responses to specific visual, auditory, or audiovisual targets. When responding with only one response button, a redundant target effect (RTE) was observed, manifested by faster responses to bimodal items compared with either unimodal item. However, when required to use three buttons (i.e., more similar to the Colavita visual dominance task), the reaction time advantage for bimodal events disappeared, and error rates to bimodal events suggested visual dominance. Importantly, a second experiment demonstrated that multisensory facilitation and inhibition could be elicited using exactly the same task and stimuli. To observe this, the sensory modality to which participants were instructed to respond was manipulated. When responding only to visual stimuli, response latencies were faster for bimodal stimuli compared with unimodal visual responses, whereas when responding to auditory stimuli only, responses were inhibited (i.e., slowed down) for bimodal items when compared with unimodal auditory responses. Demonstrating that multisensory inhibition or facilitation is dependent on response task demands shows that these effects can be modulated (but not reversed) by attentional manipulations and response demands.

Preliminary evidence for auditory dominance

While most of the research points to robust visual dominance in adults, several recent studies have been able to reverse the effect to auditory dominance. Ngo et al. (2011) presented participants with a stream of sounds and pictures that were simultaneously presented, and required them to respond to repetitions of auditory, visual, or bimodal items. When responding to bimodal targets, participants made more auditory based errors (i.e., auditory dominance). Most importantly, to elicit auditory dominance a masking item was included between trials (note, without an interleaving mask visual dominance was still observed using this paradigm; Ngo et al., 2010). Thus, a reversal from visual to auditory dominance was only possible with substantial modifications to the original paradigm (Colavita, 1974; Sinnett et al., 2007), once again indicating the robustness of visual dominance. Ngo et al. (2011) suggested that the presence of a mask could differentially affect the retention of visual and auditory information, thereby possibly leading to different sensory dominances.

Partial support for differential retention comes from a recent statistical learning study conducted by Robinson and Sloutsky (2013). As in Ngo et al. (2011), participants in Robinson and Sloutsky’s study had to detect repeating auditory, visual, or bimodal stimuli. However, unbeknownst to the participants, the sequences presented during training conformed to a statistical pattern. After a short delay, participants at test had to determine if a sequence was presented during training (i.e., shared same statistical regularity as training sequence) or if it was new. Auditory dominance was found—increasing the task demands by randomizing one of the sequences attenuated visual but not auditory statistical learning. Specifically, randomizing the auditory sequence attenuated visual statistical learning, whereas randomizing the visual sequence had no negative effect on auditory statistical learning. However, it is important to note that the auditory modality appears to be superior at processing temporal information (Conway & Christiansen, 2005; Gori, Sandini, & Burr, 2012; Shams, Kamitani, & Shimojo, 2000). A more stringent test of auditory dominance would be to use a procedure that is equally learnable in both modalities. Moreover, learning of the auditory and visual sequences was tested after a short delay. While it is possible that cross-modal interference stemmed from the randomized auditory sequences disrupting encoding of the visual sequences, a more rigorous test would be to examine the effects of auditory input on visual processing while participants were viewing the visual images.

A study examining psychophysiological responses to changing auditory and visual information addresses both of these concerns and provides some support for auditory dominance in adults. Robinson, Ahmar, and Sloutsky (2010) measured visual and auditory oddball detection in a passive oddball task (i.e., no responses) while collecting electrophysiological responses in an event-related potential (ERP) design. Participants were presented with frequent (i.e., standards) and infrequent stimuli (i.e., oddballs) embedded in a stream of auditory and visual events. The main comparison involved unimodal baselines and the potential modulation of these baselines under multimodal presentation, as indicated by increased or decreased latency of the P300 component, a signature component of oddball detection. Compared with their respective unimodal baselines, bimodal presentation slowed down visual P300 and sped up auditory P300. Robinson et al. claimed that these findings demonstrate auditory dominance, as auditory information interfered with visual processing, while visual information had no negative effect on auditory processing. A possible explanation for why these results contrast with Sinnett et al.’s (2008) inhibition and facilitation findings is that the Robinson et al. study used a passive task, whereas the Sinnett et al. study required explicit responses.

The possibility that visual dominance might be modulated by task demands is further bolstered by infant studies demonstrating auditory dominance in passive tasks (Lewkowicz, 1988a, 1988b; Robinson & Sloutsky, 2004; 2010b; Sloutsky & Robinson, 2008; for review, Robinson & Sloutsky, 2010a). The general paradigm with infants involved a series of auditory, visual, or bimodal items repeatedly presented for an extended period of time. When novel items were then presented, infants looked longer at these new items, suggesting that they discriminated between old and new items. While infants ably discriminated old and new auditory and visual items when presented unimodally, they often failed to notice changes in visual input when paired with the old sound (i.e., the sound that had been presented during habituation/familiarization). This was not the case for auditory stimuli. Infants reliably increased looking when a novel sound was paired with an old picture. This was taken as evidence for auditory dominance as auditory input attenuated visual processing, whereas visual input had no negative effect on auditory processing. Furthermore, auditory dominance effects seem to persist into childhood with simple discrimination and Colavita tasks pointing to auditory dominance in children between 4 and 6 years of age and a decrease in auditory dominance or transition to visual dominance across development (Nava & Pavani, 2013; Sloutsky & Napolitano, 2003).

Current study

While many studies have examined visual dominance effects in adults, mechanisms underlying these effects are poorly understood (Spence, 2009; Spence et al., 2012). Furthermore, developmental studies often point to auditory dominance, with auditory dominance decreasing with age (Nava & Pavani, 2013; Robinson & Sloutsky, 2004; Sloutsky & Napolitano, 2003; Robinson & Sloutsky, 2010a). One goal of the current study was to test some of the assumptions underlying auditory dominance in adults to determine if there is any evidence that auditory input disrupts encoding and/or responding to visual input. A second goal of the study was to examine shifts in modality dominance while using similar tasks, which may shed light on the mechanisms underlying modality dominance effects.

To address these issues, in Experiment 1 we adapted the oddball paradigm used by Robinson et al. (2010) to a behavioral task involving explicit responses to determine whether auditory dominance is indeed observed when using an active task with response latency as a measure (i.e., comparing the potential slowdown or speedup of bimodal responses compared with their respective unimodal responses). If auditory dominance is observed, it would be analogous to the electrophysiological findings observed by Robinson et al. (2010), demonstrated by a slowdown in reaction time when responding to visual targets when auditory information is added compared with responding to visual items in isolation. Conversely, if it is the explicit nature of the task that leads to visual dominance, then requiring participants to respond actively with a key press should elicit visual dominance, in line with most research exploring the visual dominance effect (Colavita, 1974; Koppen et al., 2008; Sinnett et al., 2007; Spence, 2009), where a physical response has always been required. Experiments 2 and 3 employed the same stimuli and basic methodology but changed the task and response demands to examine which factors best account for modality dominance reversals, potentially shedding light on mechanisms underlying modality dominance effects.

Experiment 1

In Experiment 1, participants were presented with an oddball task and were required to actively respond to infrequent oddballs by pressing the same button to auditory, visual, and bimodal oddballs. The current experiment, while explicit, differs from traditional Colavita studies, because it only requires participants to utilize one key. Thus, error rates cannot be measured in Experiment 1, because an error can only be recorded when a participant erroneously presses the wrong key. Instead, reaction times will be the main measure and modality dominance will be inferred by comparing discrimination of auditory and visual oddballs in the bimodal condition to the respective unimodal baselines.

Method

Participants

Twenty-four undergraduate participants were recruited from The Ohio State University in exchange for course credit. The sample size is consistent with previous behavioral research examining cross-modal processing in children and adults (Sloutsky & Napolitano, 2003; Napolitano & Sloutsky, 2004). Participants were naïve to the experiment and had normal or corrected-to-normal hearing and vision, self-reported. Written, informed consent was obtained before participation in the experiment occurred.

Materials

Visual stimuli were novel shapes created in Microsoft Word and exported as 400 x 400 pixel jpeg images. Visual stimuli were monochromatic and centrally presented for 200 ms on a Dell 17” LCD monitor with a refresh rate of 60 Hz. Auditory stimuli were pure tones ranging from 200 Hz to 1000 Hz and were created in CoolEdit 2000. The auditory stimuli were 200 ms in duration, saved as 22 kHz wav files, and presented through Sony MDR-CD770 headphones at approximately 68 dB. The bimodal stimuli were created by simultaneously presenting the auditory and visual stimuli for 200 ms. The onset and offset of bimodal stimuli were correlated perfectly, and all unimodal and bimodal stimuli had an interstimulus interval of 1000 ms. Participants sat approximately 60 cm from the screen. Stimulus presentation and responses were recorded using a Dell Inspiron computer running Presentation software, and stimulus order was fully randomized within each block.

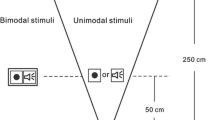

The stimulus pool consisted of five visual stimuli and five auditory stimuli, with the visual stimuli differing in shape and the auditory stimuli differing in pitch. For each participant, one visual stimulus and one auditory stimulus were chosen to be the standard and the remaining four stimuli were considered oddballs. The selection of the standard and oddballs was counterbalanced across participants. In the unimodal conditions, approximately 77 % of the stimuli were the standard and 23 % of the stimuli were oddballs. In the bimodal condition, auditory oddballs were constructed by pairing an auditory oddball with the visual standard, and visual oddballs were created by pairing a visual oddball with the auditory standard (see Fig. 1 and Table 1 for examples of stimuli). In the bimodal condition, we also presented “double oddballs” where both the auditory and visual components were oddballs (standards, oddballs, and double oddballs in the bimodal condition were presented approximately 75 %, 20 %, and 5 % of the time, respectively). Double oddballs were used in Experiment 2 to assess the types of errors people make when both modalities change. Consistent with Colavita visual dominance tasks (Colavita, 1974), these trials were presented less frequently than trials where only one modality changed.
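
The bimodal trial structure described above can be illustrated with a minimal sketch. It is not the authors' Presentation script. The stimulus labels, the function names, and the even split of the 20 % single-modality oddballs between auditory and visual oddballs are assumptions made only for illustration; the approximate 75 % / 20 % / 5 % proportions and the pairing rules come from the text.

```python
import random

# Hypothetical labels: one standard and four oddballs per modality.
AUD_STANDARD, VIS_STANDARD = "A_std", "V_std"
AUD_ODDBALLS = ["A1", "A2", "A3", "A4"]
VIS_ODDBALLS = ["V1", "V2", "V3", "V4"]


def make_bimodal_sequence(n_trials, seed=0):
    """Return a shuffled list of (auditory, visual) pairs for one bimodal block."""
    rng = random.Random(seed)
    n_double = round(n_trials * 0.05)   # both components change
    n_single = round(n_trials * 0.20)   # exactly one component changes
    n_aud = n_single // 2               # assumption: even auditory/visual split
    n_vis = n_single - n_aud
    n_standard = n_trials - n_single - n_double

    trials = [(AUD_STANDARD, VIS_STANDARD)] * n_standard
    # Auditory oddball: oddball sound paired with the visual standard.
    trials += [(rng.choice(AUD_ODDBALLS), VIS_STANDARD) for _ in range(n_aud)]
    # Visual oddball: oddball picture paired with the auditory standard.
    trials += [(AUD_STANDARD, rng.choice(VIS_ODDBALLS)) for _ in range(n_vis)]
    # Double oddball: both components differ from the standards.
    trials += [(rng.choice(AUD_ODDBALLS), rng.choice(VIS_ODDBALLS))
               for _ in range(n_double)]
    rng.shuffle(trials)                 # full randomization within the block
    return trials


def trial_type(trial):
    """Classify a trial as 'standard', 'auditory', 'visual', or 'double'."""
    aud_changed = trial[0] != AUD_STANDARD
    vis_changed = trial[1] != VIS_STANDARD
    if aud_changed and vis_changed:
        return "double"
    if aud_changed:
        return "auditory"
    if vis_changed:
        return "visual"
    return "standard"


def count_types(trials):
    """Tally how many trials of each type a sequence contains."""
    counts = {"standard": 0, "auditory": 0, "visual": 0, "double": 0}
    for trial in trials:
        counts[trial_type(trial)] += 1
    return counts
```

With a hypothetical block of 200 trials, `count_types(make_bimodal_sequence(200))` yields 150 standards, 20 auditory oddballs, 20 visual oddballs, and 10 double oddballs.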

Procedure

Three different oddball tasks were used (see Fig. 1 and Table 1 for an overview of each task). Approximately half of the participants were first presented with the two unimodal oddball tasks (auditory and visual), and then participated in the multimodal task. The remaining participants were presented with the multimodal task, followed by the two unimodal tasks. The order of unimodal tasks was counterbalanced across participants. Prior to each task, participants were instructed that one stimulus (i.e., the standard) would be frequently presented throughout the experiment. The computer then briefly presented the standard, and participants were instructed to not respond to this stimulus. They were then instructed that they had to press “1” on the keyboard when the picture changed (i.e., visual oddball), when the sound changed (i.e., auditory oddball), or when both the picture and sound changed (i.e., double oddball). Participants were not instructed which finger to use to make a response; however, most participants used their index finger. Participants in the bimodal task pressed the same button for all three bimodal oddball types, thus making it impossible to develop a modality-specific response bias. In the unimodal auditory and unimodal visual tasks, participants were only instructed to press “1” on the keyboard when the sound or picture was different from the standard.

Results and discussion

Preliminary analyses indicated that participants were at ceiling when detecting auditory, visual, and double oddballs (accuracy > 96 % in all conditions). Furthermore, the auditory and visual unimodal conditions did not differ in accuracy or response times, suggesting that they were comparable in discriminability. Primary analyses focused exclusively on response times to auditory and visual oddballs in the unimodal and bimodal conditions (see Fig. 2 for mean response times). Responses to double oddball trials were analyzed in a subsequent analysis.

Fig. 2. RTs to auditory and visual oddballs in Experiment 1, where the same response was required for auditory, visual, and bimodal oddballs. Error bars represent standard error of the mean.

A Modality (Auditory vs. Visual) x Presentation Mode (Unimodal vs. Bimodal) ANOVA with Modality and Presentation Mode as repeated measures revealed a main effect of Presentation Mode, F (1, 23) = 12.69, p = 0.002, with participants responding faster to unimodal oddballs (396 ms) than bimodal oddballs (415 ms). The Modality x Presentation Mode interaction also reached significance, F (1, 23) = 5.18, p = 0.032. Paired t tests indicate that response times to auditory oddballs did not differ when presented unimodally (394 ms) or bimodally (403 ms), t (23) = 1.21, p = 0.27, whereas responding to visual oddballs was slower in the bimodal condition (427 ms) than in the unimodal condition (398 ms), t(23) = 5.23, p < 0.001. Thus, consistent with auditory dominance research, the presence of auditory stimuli slowed down visual processing but the visual stimulus had no effect on auditory processing.

Lastly, mean response times to double oddballs (347 ms) were significantly faster than all of the other response times to auditory and visual oddballs in the unimodal and bimodal conditions, t > 5, p < 0.001 (Fig. 2). The finding that double oddballs also were not slower suggests that it is not the bimodal nature of the stimulus presentation slowing down response times; rather, the slowdown can be attributed to the competition between auditory and visual information.

Experiment 1 demonstrates auditory dominance in an adult population in which visual dominance has been most common (Colavita, 1974; Koppen et al., 2008; Sinnett et al., 2007; see also Spence, 2009 for a review); however, the paradigm nevertheless fails to be analogous to traditional examples of visual dominance. That is, visual dominance research has typically used a dependent variable that measures the proportion of unimodal response errors (either visual or auditory) to bimodal stimuli. Experiment 2 addressed this concern by having participants make three separate responses to auditory, visual, and double oddballs.

Experiment 2

Experiment 2 used the same oddball task as reported in Experiment 1; however, participants made three different responses to auditory, visual, and double oddballs. Note that the current experiment is more difficult than Experiment 1, because the participants not only have to detect and quickly report oddballs, but they also have to make a distinction between auditory, visual, and double oddballs. If visual dominance stems from a response bias or from increased competition under cognitive load, then it is possible that the current manipulation will result in a reversal to visual dominance. Based on visual dominance research (Colavita, 1974), it was expected that participants would make errors on infrequent bimodal oddball trials, with many of these errors resulting from participants pressing the visual button.

Method

Participants, materials and procedure

Eighteen new undergraduate participants were recruited from The Ohio State University. The experimental paradigm was identical to Experiment 1, except that participants were instructed to press one button when only the auditory stimulus changed, a second button when only the visual stimulus changed, and a third button when both auditory and visual components changed. Response buttons associated with the different oddball types were counterbalanced across participants. The buttons assigned were consistent across unimodal and multimodal blocks. For example, participants assigned to button 1 for visual, button 2 for auditory, and button 3 for bimodal only pressed button 1 in the unimodal visual condition and only pressed button 2 in the unimodal auditory condition. Participants were not instructed which fingers to use; however, most of the participants used their index finger to press button 1, their middle finger for button 2, and their ring finger for button 3.

Results and discussion

As in Experiment 1, mean response times for correct responses were analyzed using a Modality (Auditory vs. Visual) x Presentation Mode (Unimodal vs. Bimodal) ANOVA, with Modality and Presentation Mode serving as repeated measures. The analysis revealed an effect of Presentation Mode, F (1, 17) = 310.84, p < 0.001, with mean response times being slower in the bimodal condition (663 ms) than in the unimodal condition (398 ms). The main effect of Modality was marginally significant, F (1, 17) = 3.58, p = 0.076, with faster reaction times for auditory oddballs (522 ms) when compared with visual oddballs (540 ms). The Modality x Presentation Mode interaction was significant, F (1, 17) = 5.44, p = 0.032. However, the overall pattern differed from Experiment 1 (see Fig. 3 for means and standard errors). First, as can be seen in the figure, bimodal presentation slowed response times to both auditory oddballs (664 ms) and visual oddballs (662 ms) relative to unimodal baselines (379 ms vs. 418 ms, respectively), t (17) > 14.15, p < 0.001. Compared with the unimodal baselines, cross-modal presentation slowed down visual response times by 245 ms and slowed auditory response times by 285 ms. While unimodal auditory response times were faster than unimodal visual response times, t (17) = 3.04, p = 0.007, bimodal presentation attenuated this difference, with no difference between auditory and visual RTs in the bimodal condition, t (17) = 0.15, p = 0.882.

Fig. 3. RTs to auditory and visual oddballs in Experiment 2, where separate responses were required for auditory, visual, and double oddballs. Error bars represent standard error of the mean.

The overall error rate to double oddballs (i.e., the presentation of compound visual and auditory targets) was 29.5 % (170 total errors were made across all 18 participants). Of the 170 errors, there were 12 occasions where participants failed to make any response to double oddballs. The percentage of visual-based errors was calculated for each participant (number of visual errors/number of visual + auditory errors). On average, participants pressed only the visual button on 65 % of these error trials and only the auditory button on 35 % of them. A one-sample t test comparing the percentage of visual-based errors to chance (50 %) showed a significant bias in favor of the visual modality, t (17) = 2.56, p = 0.02. Thus, the examination of response times on auditory and visual oddball trials and errors made on double oddball trials both point to visual dominance.
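
The error analysis above can be sketched as follows, under stated assumptions. The per-participant error counts in the example are hypothetical, because the individual data are not reported here; only the formula (visual errors divided by visual plus auditory errors) and the comparison against 50 % come from the text. The function names are illustrative, and how participants with no modality-specific errors were handled is not stated, so the sketch simply raises an error in that case.

```python
import math
import statistics


def visual_error_share(visual_only, auditory_only):
    """Percentage of modality-specific errors that were visual-only presses."""
    total = visual_only + auditory_only
    if total == 0:
        # Assumption: the share is undefined when a participant made no such errors.
        raise ValueError("no visual-only or auditory-only errors to compare")
    return 100.0 * visual_only / total


def one_sample_t(values, mu):
    """Return (t statistic, degrees of freedom) for a one-sample t test against mu."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)          # sample SD with n - 1 in the denominator
    return (mean - mu) / (sd / math.sqrt(n)), n - 1


# Hypothetical (visual-only, auditory-only) error counts for six participants.
hypothetical_counts = [(6, 2), (5, 4), (3, 5), (7, 3), (4, 4), (8, 2)]
shares = [visual_error_share(v, a) for v, a in hypothetical_counts]
t_stat, df = one_sample_t(shares, 50.0)    # compare the mean share with chance
```

A positive `t_stat` indicates that visual-only errors outnumber auditory-only errors on average, which is the direction reported for Experiment 2.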

The critical finding of Experiment 2 was that when using multiple response keys visual dominance was observed, whereas when using only a single response key (Experiment 1), auditory dominance was observed. Thus, it appears that differing response strategies can have the effect of changing which type of sensory dominance is observed. However, there are several potential explanations that may account for this effect; therefore, the primary goal of Experiment 3 was to rule out potential explanations.

Experiment 3

What is driving the reversal from auditory dominance in Experiment 1 to visual dominance in Experiment 2? First, it is possible that participants in Experiment 2 bias their response in favor of visual input (Posner et al., 1976). Recall that in Experiment 2 participants had separate responses for auditory and visual oddballs, whereas in Experiment 1 it was impossible to develop a modality-specific response bias because auditory and visual discrimination was associated with the same response. It also is possible that visual dominance increases under cognitive load. In Experiment 2, participants not only had to detect and report changes in auditory and visual information, but they also had to indicate which modality changed at test or if both modalities changed at test. As can be seen by comparing response times to bimodal stimuli in Experiments 1 and 2, participants in Experiment 2 (663 ms) were significantly slower to indicate which modality changed compared to Experiment 1 (415 ms) where they only had to indicate that something changed, t (40) = 13.28, p < 0.001. It also is possible that Experiments 1 and 2 differ in another way, with changing response demands affecting how participants attended to the cross-modal stimuli. In Experiment 1, participants could quickly respond after detecting a change in either modality, whereas in Experiment 2 participants had to check both modalities to determine if they should report whether a specific modality changed or report that both modalities changed. Thus, it is possible that visual dominance and increased response times in Experiment 2 do not stem from increasing the response set or task demands, but instead arise from participants having to analyze cross-modal stimuli conjunctively rather than disjunctively.

Experiment 3 addressed this issue by making a small change to Experiment 1. As in Experiment 1, participants were instructed to make the same response if the auditory or visual modality changed. However, in contrast to Experiment 1, participants were instructed not to respond if both modalities changed. If separate responses for auditory and visual input (i.e., response bias) are driving the reversal to visual dominance in Experiment 2, then auditory dominance should be found in Experiment 3, because participants cannot develop a visual response bias if they are making the same response to both modalities. However, if increasing task demands or requiring participants to analyze cross-modal stimuli conjunctively is driving the reversal to visual dominance, then visual dominance also should be found in the current experiment, because participants have to take into account both modalities before responding.
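The response rules that distinguish the three experiments can be summarized in a short sketch. This is an illustration only, not the experimental software (the experiments were run in DirectRT): the function names and the response labels ("oddball", "auditory", "visual", "both") are hypothetical, and the assumption that standards require no response follows from the oddball design rather than from an explicit statement in this section.

```python
from typing import Optional


def expected_response(experiment: int, auditory_changed: bool,
                      visual_changed: bool) -> Optional[str]:
    """Return a label for the instructed response, or None to withhold.

    Labels are hypothetical stand-ins for the actual response keys.
    """
    if not (auditory_changed or visual_changed):
        return None  # standard trial: assumed to require no response
    both = auditory_changed and visual_changed
    if experiment == 1:
        return "oddball"  # one key for any change
    if experiment == 2:
        if both:
            return "both"  # third key for double oddballs
        return "auditory" if auditory_changed else "visual"
    if experiment == 3:
        return None if both else "oddball"  # withhold on double oddballs
    raise ValueError("experiment must be 1, 2, or 3")


def must_check_both(experiment: int) -> bool:
    """True if the response to an auditory change depends on the visual channel."""
    return (expected_response(experiment, True, False)
            != expected_response(experiment, True, True))


for exp in (1, 2, 3):
    print(exp, must_check_both(exp))
```

Under these rules, only Experiment 1 allows a response as soon as a change is detected in one modality. Experiments 2 and 3 both require the second modality to be checked, but only Experiment 2 assigns different responses to auditory and visual changes, which is the contrast that Experiment 3 exploits.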

Method

Participants, materials and procedure

Fifty-five new undergraduate participants were recruited from The Ohio State University at Newark. The experimental paradigm was identical to Experiment 1, except that participants were instructed to quickly press 1 on the keyboard for both auditory and visual oddballs. They also were explicitly told not to respond to double oddballs. Stimulus presentation and timing were controlled by Dell Optiplex 9010 computers using DirectRT v2014 software. Images were presented on Planar PXL2230MW monitors and sounds were presented via Kensington KMW33137 headphones.

Results and discussion

The task was difficult, and many participants responded to double oddballs even though they were instructed not to respond if both modalities changed. On average, participants responded to the double oddballs 41% of the time. Rather than exclude half of the sample, we first report analyses for the entire group and then report analyses for only those participants who were accurate on double oddball trials.

As in Experiment 1, mean response times for correct responses were analyzed using a Modality (Auditory vs. Visual) × Presentation Mode (Unimodal vs. Bimodal) ANOVA, with Modality and Presentation Mode serving as repeated measures. The analysis revealed an effect of Presentation Mode, F(1, 54) = 118.29, p < 0.001, with mean response times being slower in the bimodal condition (613 ms) than in the unimodal condition (496 ms). The Modality × Presentation Mode interaction also was significant, F(1, 54) = 7.11, p = 0.01 (Fig. 4). Responses to auditory oddballs (607 ms) and visual oddballs (619 ms) were both slower in the bimodal condition than in the unimodal conditions (504 ms and 489 ms, respectively). Compared with the respective baselines, cross-modal presentation slowed down visual processing by 130 ms, t(54) = 11.06, p < 0.001, and auditory processing by 103 ms, t(54) = 8.60, p < 0.001. Whereas auditory and visual response times did not differ in the unimodal conditions, t(54) = 1.50, p = 0.14, auditory response times were faster than visual response times in the bimodal condition, t(54) = 3.65, p < 0.001.
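The interference costs reported above follow directly from the four cell means. The arithmetic can be sketched as follows; the means are the values reported for the full sample, whereas the variable and function names are illustrative. Because the inputs are rounded means, the results are approximate.

```python
# Cell means (ms) reported for the full sample (N = 55) in Experiment 3.
means = {
    ("auditory", "unimodal"): 504,
    ("auditory", "bimodal"): 607,
    ("visual", "unimodal"): 489,
    ("visual", "bimodal"): 619,
}


def bimodal_cost(modality: str) -> int:
    """Slowdown (ms) from unimodal to bimodal presentation for one modality."""
    return means[(modality, "bimodal")] - means[(modality, "unimodal")]


auditory_cost = bimodal_cost("auditory")
visual_cost = bimodal_cost("visual")

# Difference of differences: the contrast tested by the
# Modality x Presentation Mode interaction.
interaction_contrast = visual_cost - auditory_cost

print(auditory_cost, visual_cost, interaction_contrast)
```

The visual cost exceeds the auditory cost, which is the pattern interpreted here as auditory dominance: pairing pictures with sounds slowed visual responses more than it slowed auditory responses.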

Fig. 4. Response times (RTs) to auditory and visual oddballs in Experiment 3, where the same response was required for auditory and visual oddballs. Note that participants were instructed not to respond to double oddballs. Error bars represent standard errors of the mean.

We also conducted a median split on double oddball accuracies and analyzed data only from participants who made fewer than 30% errors on double oddball trials (N = 27). Participants in this subgroup false alarmed on only 16% of double oddball trials. If the three-button task used in Experiment 2 forced participants to analyze cross-modal stimuli conjunctively, then auditory dominance effects should disappear or possibly even reverse when examining only those participants in the current experiment who followed the instructions and attended to the cross-modal stimuli conjunctively. Overall, the pattern closely replicated the whole-group analyses, with effects increasing rather than decreasing. As in the previous analysis, the ANOVA revealed an effect of Presentation Mode, F(1, 26) = 172.50, p < 0.001, with mean response times being slower in the bimodal condition (663 ms) than in the unimodal condition (512 ms). The Modality × Presentation Mode interaction also was significant, F(1, 26) = 14.34, p = 0.001. Responses to auditory oddballs (665 ms) and visual oddballs (672 ms) were both slower in the bimodal condition than in the unimodal conditions (531 ms and 494 ms, respectively). Compared with the respective baselines, cross-modal presentation slowed down visual processing by 178 ms, t(26) = 15.28, p < 0.001, and auditory processing by 134 ms, t(26) = 8.19, p < 0.001. Whereas visual response times were faster than auditory response times in the unimodal conditions, t(26) = 2.80, p = 0.01, the pattern reversed in the bimodal condition, with auditory response times being faster than visual response times, t(26) = 4.15, p < 0.001. These findings are consistent with auditory dominance and show that visual dominance in Experiment 2 does not stem from analyzing cross-modal stimuli conjunctively.
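The subgroup selection described above amounts to filtering participants by their false alarm rate on double oddball trials. A minimal sketch follows, assuming each participant's double oddball trials are coded as True (responded, i.e., a false alarm) or False (correctly withheld). The participant identifiers and trial data are invented for illustration and are not the study data; only the 30% cutoff comes from the text.

```python
from statistics import mean


def false_alarm_rate(responded: list) -> float:
    """Proportion of double oddball trials on which a response was made."""
    return sum(responded) / len(responded)


def select_accurate(double_oddball_trials: dict, max_rate: float = 0.30) -> dict:
    """Keep participants whose false alarm rate is below max_rate."""
    return {
        pid: trials
        for pid, trials in double_oddball_trials.items()
        if false_alarm_rate(trials) < max_rate
    }


# Hypothetical data: three participants, ten double oddball trials each.
toy_trials = {
    "p01": [True] * 1 + [False] * 9,   # 10% false alarms
    "p02": [True] * 5 + [False] * 5,   # 50% false alarms
    "p03": [True] * 2 + [False] * 8,   # 20% false alarms
}

accurate = select_accurate(toy_trials)
subgroup_rate = mean(false_alarm_rate(t) for t in accurate.values())
print(sorted(accurate), round(subgroup_rate, 2))
```

Selecting on accuracy in this way isolates participants who demonstrably took both modalities into account before responding, which is why the subgroup provides the stronger test of the conjunctive-analysis account.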

As in the previous experiment, participants in Experiment 3 also were significantly slower to respond to auditory and visual oddballs in the bimodal condition (613 ms) than participants in Experiment 1 (415 ms), t(77) = 10.45, p < 0.001. Assuming that increased response times in Experiment 3 at least partially reflect increased task demands, it is unlikely that the reversal to visual dominance in Experiment 2 stemmed from increased task demands. Otherwise, increasing the task demands in the current experiment also should have reversed modality dominance effects, with visual dominance being observed.
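The between-experiment comparison above is a test on two independent samples. The sketch below shows one standard way to compute such a statistic, the pooled-variance (Student) t-test. Whether this exact variant was used is an assumption, although the reported 77 degrees of freedom would be consistent with 79 participants across the two samples under it. The response times in the example are invented for illustration.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(sample_a: list, sample_b: list) -> tuple:
    """Student's t for two independent samples; returns (t, df)."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean(sample_a) - mean(sample_b)) / standard_error, df


# Hypothetical per-participant mean RTs (ms), not the study data.
toy_exp3 = [600, 620, 610, 630]
toy_exp1 = [410, 420, 400, 430]

t_value, df = pooled_t(toy_exp3, toy_exp1)
print(round(t_value, 2), df)
```

A positive t here indicates slower responses in the first sample, mirroring the direction of the reported difference between Experiment 3 and Experiment 1.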

General discussion

There are a number of important findings that warrant discussion. First, sensory dominance was observed in all of the experiments reported. However, the type of dominance (auditory or visual) differed depending on specific task conditions. Accordingly, it is possible to argue that we have shown evidence for the coexistence of competing dominance types within an adult population, where visual dominance has been most commonly observed (Colavita, 1974; Koppen et al., 2008; Sinnett et al., 2007; Spence, 2009). It appears that the main paradigm change that leads to different dominance types is the number of responses used, or at least the requirement to make different responses when reporting changes in auditory and visual information. That is, when using multiple response keys, visual dominance appears to be robustly demonstrated, as seen in Experiment 2 and in numerous recent examples (Koppen et al., 2008; Sinnett et al., 2007). However, when using only a single response key, such as in Experiments 1 and 3, or when no responses are made (Robinson et al., 2010), auditory dominance is observed.

Despite robust visual dominance effects in adults reported during the past 40 years, Experiment 1 provides novel evidence of auditory dominance using a task similar to that of Robinson et al. (2010). This demonstrates that Robinson et al.’s findings are not limited to passive tasks where no responses are made. Interestingly, the distinction between explicit and implicit tasks did not appear to change modality dominance type. That is, the task in Experiment 1 was explicit in that participants were told that they had to discriminate standards from oddballs and that they had to quickly respond when they encountered an oddball, but auditory dominance nevertheless persisted. It appears that simply changing a task that was originally implicit and passive to include a single explicit response was not enough to change dominance type.

Experiment 2 used a similar methodology but required participants to make three different responses on bimodal trials: press one button for an auditory oddball, a second button for a visual oddball, and a third button for a double oddball (both auditory and visual components were oddballs). Visual dominance was observed when examining response times and accuracies on double oddball trials. Dovetailing with most research that measures error rates to bimodal trials, participants erroneously pressed the visual key more often than the auditory key in bimodal trials, demonstrating visual dominance.

Mechanisms underlying modality dominance

Although we only increased the response set from one to three in Experiment 2, several factors could account for the reversal to visual dominance. First, increasing the response set increased the task demands, as reflected by increased reaction times in the second experiment. Accordingly, it is possible that the visual modality dominates when attentional resources are depleted. More specifically, along with detecting oddballs in the unimodal conditions, participants also had to detect changes in one or both modalities using separate keys. Thus, it is not surprising that response times in the bimodal conditions also increased. Second, requiring participants to make separate responses to auditory and visual oddballs made it possible to develop a visual response bias, whereas this was not possible in Experiment 1, because participants used a single response key to detect auditory and visual oddballs. Finally, in Experiment 1 participants could quickly respond after noticing a change in a single modality, whereas in Experiment 2 they also had to check the second modality to determine whether they should report that one modality changed or that both modalities changed. Any one of these possibilities, or a combination of them, could account for a shift from auditory to visual dominance.

Experiment 3 addressed this concern by requiring participants to make the same response to auditory and visual oddballs, while at the same time not responding to double oddballs where both modalities changed. If increased task demands (depleted resources) or analyzing bimodal stimuli conjunctively were driving the reversal to visual dominance, then visual dominance should have been found in Experiment 3. Although an increase in RT was present (i.e., responses to unimodal oddball trials were approximately 100 ms longer in Experiment 3), the data closely replicate Experiment 1 (auditory dominance), which suggests that requiring participants to make different responses to auditory and visual input is needed, at least in this set of experiments, to observe visual dominance. At the same time, response competition was not needed to elicit auditory dominance effects.

Although future research is needed, it is likely that auditory dominance and visual dominance stem from different underlying mechanisms. When the auditory and visual modalities provide conflicting information and participants have to make different responses to this conflicting information, it is possible that adults bias their responding in favor of visual input (Posner et al., 1976) and that this response bias masks or overshadows auditory dominance effects. However, when visual response biases are eliminated, such as in Experiments 1 and 3, auditory dominance effects appear to be more pronounced. The fact that these effects also occur on implicit tasks where no responses are made (Robinson et al., 2010) suggests that this interference might be happening early in the course of processing. In other words, the current findings, in conjunction with passive tasks examining auditory dominance, suggest that auditory stimuli might disrupt visual processing during stimulus encoding, whereas visual dominance effects happen later in processing, while participants are making a decision and/or responding to bimodal input.

In summary, the findings from this study demonstrate novel evidence of auditory dominance in adults. The findings also point to conditions that elicit visual dominance, with different responses to auditory and visual input playing a role in modulating sensory dominance. These findings have implications for tasks that require processing and responding to multisensory input and possibly suggest different mechanisms underlying auditory and visual dominance, with the former possibly disrupting encoding of visual input and the latter possibly interfering with auditory processing during the response and/or decision phase.

References

Alsius, A., Navarra, J., Campbell, R., & Soto-Faraco, S. (2005). Audiovisual integration of speech falters under high attention demands. Current Biology, 15, 839–843.

Colavita, F. B. (1974). Human sensory dominance. Perception & Psychophysics, 16, 409–412.

Colavita, F. B., Tomko, R., & Weisberg, D. (1976). Visual pre-potency and eye orientation. Bulletin of the Psychonomic Society, 8, 25–26.

Colavita, F. B., & Weisberg, D. (1979). A further investigation of visual dominance. Attention, Perception, & Psychophysics, 25, 345–347.

Conway, C. M., & Christiansen, M. H. (2005). Modality-constrained statistical learning of tactile, visual, and auditory sequences. Journal of Experimental Psychology: Learning, Memory, and Cognition, 31(1), 24–39.

Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18(1), 193–222.

Duncan, J. (1996). Cooperating brain systems in selective perception and action. In Attention and performance (pp. 549–578). Cambridge, MA: The MIT Press.

Egeth, H. E., & Sager, L. C. (1977). On the locus of visual dominance. Attention, Perception, & Psychophysics, 22, 77–86.

Eimer, M., & Van Velzen, J. (2002). Cross-modal links in spatial attention are mediated by supramodal control processes: Evidence from event-related brain potentials. Psychophysiology, 39, 437–449.

Gori, M., Sandini, G., & Burr, D. (2012). Development of visuo-auditory integration in space and time. Frontiers in Integrative Neuroscience, 6, 77. doi:10.3389/fnint.2012.00077

Howard, I. P., & Templeton, W. B. (1966). Human spatial orientation. New York, NY: Wiley.

Koppen, C., Alsius, A., & Spence, C. (2008). Semantic congruency and the Colavita visual dominance effect. Experimental Brain Research, 184, 533–546.

Koppen, C., & Spence, C. (2007). Seeing the light: Exploring the Colavita visual dominance effect. Experimental Brain Research, 180, 737–754.

Lewkowicz, D. J. (1988a). Sensory dominance in infants: 1. Six-month-old infants’ response to auditory-visual compounds. Developmental Psychology, 24, 155–171.

Lewkowicz, D. J. (1988b). Sensory dominance in infants: 2. Ten-month-old infants’ response to auditory-visual compounds. Developmental Psychology, 24, 172–182.

Massaro, D. W. (1998). Perceiving talking faces: From speech perception to a behavioral principle. Cambridge, MA: MIT Press.

McGurk, H., & MacDonald, J. (1976). Hearing lips and seeing voices. Nature, 264, 746–748.

Napolitano, A., & Sloutsky, V. M. (2004). Is a picture worth a thousand words? The flexible nature of modality dominance in young children. Child Development, 75(6), 1850–1870.

Nava, E., & Pavani, F. (2013). Changes in sensory dominance during childhood: Converging evidence from the Colavita effect and the sound-induced flash illusion. Child Development, 84(2), 604–616.

Ngo, M. K., Cadieux, M. L., Sinnett, S., Soto-Faraco, S., & Spence, C. (2011). Reversing the Colavita visual dominance effect. Experimental Brain Research, 214(4), 607–618.

Ngo, M. K., Sinnett, S., Soto-Faraco, S., & Spence, C. (2010). Repetition blindness and the Colavita effect. Neuroscience Letters, 480, 186–190.

O’Connor, N., & Hermelin, B. (1972). Seeing and hearing and space and time. Perception & Psychophysics, 11(1), 46–48.

Posner, M. I., Nissen, M. J., & Klein, R. M. (1976). Visual dominance: An information-processing account of its origins and significance. Psychological Review, 83, 157–171.

Robinson, C. W., Ahmar, N., & Sloutsky, V. M. (2010). Evidence for auditory dominance in a passive oddball task. In S. Ohlsson & R. Catrambone (Eds.), Proceedings of the 32nd Annual Conference of the Cognitive Science Society (pp. 2644–2649). Austin, TX: Cognitive Science Society.

Robinson, C. W., & Sloutsky, V. M. (2004). Auditory dominance and its change in the course of development. Child Development, 75, 1387–1401.

Robinson, C. W., & Sloutsky, V. M. (2010a). Development of cross-modal processing. Wiley Interdisciplinary Reviews: Cognitive Science, 1, 135–141.

Robinson, C. W., & Sloutsky, V. M. (2010b). Effects of multimodal presentation and stimulus familiarity on auditory and visual processing. Journal of Experimental Child Psychology, 107, 351–358.

Robinson, C. W., & Sloutsky, V. M. (2013). When audition dominates vision: Evidence from cross-modal statistical learning. Experimental Psychology, 60, 113–121.

Sereno, M. I., Dale, A. M., Reppas, J. B., Kwong, K. K., Belliveau, J. W., Brady, T. J., et al. (1995). Borders of multiple visual areas in humans revealed by functional magnetic resonance imaging. Science, 268(5212), 889–893.

Shams, L., Kamitani, Y., & Shimojo, S. (2000). Illusions: What you see is what you hear. Nature, 408, 788.

Shams, L., Kamitani, Y., & Shimojo, S. (2002). Visual illusion induced by sound. Cognitive Brain Research, 14, 147–152.

Shimojo, S., & Shams, L. (2001). Sensory modalities are not separate modalities: Plasticity and interactions. Current Opinion in Neurobiology, 11, 505–509.

Sinnett, S., Soto-Faraco, S., & Spence, C. (2008). The co-occurrence of multisensory competition and facilitation. Acta Psychologica, 128, 153–161.

Sinnett, S., Spence, C., & Soto-Faraco, S. (2007). Visual dominance and attention: Revisiting the Colavita effect. Perception & Psychophysics, 69, 673–686.

Sloutsky, V. M., & Napolitano, A. (2003). Is a picture worth a thousand words? Preference for auditory modality in young children. Child Development, 74(3), 822–833.

Sloutsky, V. M., & Robinson, C. W. (2008). The role of words and sounds in visual processing: From overshadowing to attentional tuning. Cognitive Science, 32, 354–377.

Spence, C. (2009). Explaining the Colavita visual dominance effect. Progress in Brain Research, 176, 245–258.

Spence, C., Parise, C., & Chen, Y. C. (2012). The Colavita visual dominance effect. In M. M. Murray & M. T. Wallace (Eds.), The neural bases of multisensory processes (pp. 529–556). Boca Raton, FL: CRC Press.

Stein, B. E., London, N., Wilkinson, L. K., & Price, D. D. (1996). Enhancement of perceived visual intensity by auditory stimuli: A psychophysical analysis. Journal of Cognitive Neuroscience, 8, 497–506.

Cite this article

Robinson, C.W., Chandra, M. & Sinnett, S. Existence of competing modality dominances. Atten Percept Psychophys 78, 1104–1114 (2016). https://doi.org/10.3758/s13414-016-1061-3

DOI: https://doi.org/10.3758/s13414-016-1061-3