Abstract

Actions produced in response to familiar objects are predominantly mediated by the visual structure of objects, and less so by their semantic associations. Choosing an action in response to an object tends to be faster than choosing the object’s name, which has led to the suggestion that there are direct links between the visual representations of objects and their associated actions. The relative contribution of semantics, however, is unclear when actions are produced in response to novel objects. To investigate the role of semantics when object–action associations are novel, we had participants learn to use and name novel objects and rehearse the object, action, and name associations over one week. Each object–action pair was associated with a label that was either semantically similar or semantically distinct. We found that semantic similarity affected action and name production only when the object–action associations were novel, suggesting that semantic information is recruited when actions are produced in response to novel objects. We also observed that the advantage for producing an action over producing a name was absent when the associations were novel, suggesting that practice is necessary for these direct links to develop.


The actions we produce when using objects can be driven by visual cues from the object or by knowledge about the object (Yoon, Heinke, & Humphreys, 2002). Yet, access to semantic information is not necessary for producing actions (Buxbaum, Schwartz, & Carew, 1997), suggesting that there are direct links between the visual representations of objects and actions (Yoon et al., 2002). However, these findings are based on familiar objects: Visual and semantic information may be weighted differently when the objects are novel.

Neurological patient data have shown that action deficits can occur despite intact access to semantic information (Negri, Rumiati, et al., 2007; Rumiati, Zanini, Vorano, & Shallice, 2001), and that actions can be correctly produced in the absence of access to semantic information (Goodale & Milner, 1992; Negri, Lunardelli, Gigli, & Rumiati, 2007; Negri, Rumiati, et al., 2007; Riddoch & Humphreys, 1987). For our purposes, “semantic information” refers exclusively to the lexical–semantic information associated with objects, as opposed to action semantics (e.g., the end locations of the movements associated with objects; see van Elk, van Schie, & Bekkering, 2014). Patient data have demonstrated that semantic information is neither sufficient nor necessary for producing actions in response to objects. Furthermore, in neurotypical individuals, action decisions are faster than name decisions in response to objects (Chainay & Humphreys, 2002), suggesting privileged links between objects and their associated actions.

The “naming and action model” (NAM) builds on the above data and proposes that action production and name production are influenced by converging information from the structural properties of objects or words and their associated semantic information (Yoon et al., 2002). According to the NAM, the visual structure of stimuli feeds into stored visual representations of objects and words. When these visual representations are activated, activation spreads to the representations of other structurally similar stimuli. While structural identity is being resolved, the semantic information associated with the presented stimulus is retrieved (the same semantic representation is accessed independently of stimulus type), and then the corresponding action or name is activated. The NAM’s semantic representation is quasi-modular, segregated, and based on “an interactive activation and competition network” (Yoon et al., 2002, p. 623). This “object representation → semantics → action output” sequence constitutes the indirect route between objects and actions. The model also includes a direct route from objects’ visual representations to action production that bypasses semantic information. According to the model, naming objects and producing actions in response to words require access to semantics, but producing actions in response to objects can be done without accessing semantics.
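The route structure just described can be sketched as a small directed graph, under stated assumptions. This is a toy illustration rather than the NAM's implementation: the node names are our own, and the direct word-to-name link is an assumption added by analogy with the object-to-action route (the paragraph above does not state it). A task is treated as "requiring semantics" when every path from the stimulus to the output passes through the semantic node.

```python
# Toy graph of the NAM's routes. Node names are hypothetical, not Yoon et al.'s (2002).
# ASSUMPTION: "word_structure -> name" is a direct link added only by analogy
# with the direct "object_structure -> action" route described in the text.
NAM_ROUTES = {
    "object_structure": ["semantics", "action"],  # indirect route + direct route
    "word_structure": ["semantics", "name"],
    "semantics": ["action", "name"],
    "action": [],
    "name": [],
}


def all_paths(graph, start, goal, path=None):
    """Return every acyclic path from start to goal."""
    path = (path or []) + [start]
    if start == goal:
        return [path]
    paths = []
    for nxt in graph[start]:
        if nxt not in path:
            paths.extend(all_paths(graph, nxt, goal, path))
    return paths


def requires_semantics(graph, stimulus, output):
    """True if at least one route exists and every route passes through semantics."""
    paths = all_paths(graph, stimulus, output)
    return bool(paths) and all("semantics" in p for p in paths)


for stim in ("object_structure", "word_structure"):
    for out in ("action", "name"):
        print(stim, "->", out, "requires semantics:",
              requires_semantics(NAM_ROUTES, stim, out))
```

With these links, only naming objects and gesturing to words are forced through semantics, which is the pattern the model predicts for well-practiced associations.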

The NAM effectively predicts how actions can be produced without semantic access when using familiar objects, but semantic information may play a larger role in action production when the object–action associations are novel. Paulus, van Elk, and Bekkering (2012) and van Elk, Paulus, Pfeiffer, van Schie, and Bekkering (2011) first trained participants to use novel objects that had to be moved toward the nose or ear, and then asked the participants to respond (e.g., “same”–“different”) to sequentially presented pictures of the learned objects. Stimuli depicting faulty end locations slowed performance, an observation interpreted as being due to the activation of incorrect semantic information. However, recall that this information referred to the end locations of movements: Therefore, the interference may reflect the activation of motor patterns that include end locations for actions that can be accessed via the direct route, and not necessarily via semantic knowledge.

In sum, familiar actions can clearly be produced independently of access to semantic information. It is less clear, however, whether semantic information can affect the production of newly acquired actions. The semantic interference reported by Paulus et al. (2012) and van Elk et al. (2011) is promising, but it could reflect the activation of end locations within action representations rather than semantic representations. Here, we aimed to demonstrate that, contrary to what is observed with familiar objects, semantic information affects action performance when object–action associations are novel, and that it ceases to affect performance once these associations are well practiced. In our study, participants first learned to associate novel objects with actions; each object–action pair was identified by a semantically similar or semantically distinct name. Participants were then asked to produce names and actions in response to words and pictures, and they practiced the associations for five days before repeating the name and action tasks. If semantic information is recruited, and if the activation of a representation spreads to similar representations (Yoon et al., 2002), then items associated with semantically similar labels should be confused more often than items associated with semantically distinct labels. We therefore hypothesized that on Day 1 semantic similarity would affect naming objects, gesturing to objects, and gesturing to words, but that on Day 5 it would affect only naming objects and gesturing to words. If actions are initially produced through an indirect (semantic) route, there should also be no advantage for gesturing over naming objects until Day 5.

Method

Participants

A group of 12 right-handed undergraduate students from Mount Allison University (nine women, three men; mean age = 20 years) received $80 in compensation for their participation.

Materials

We used eight gray PVC objects (81 mm long) that varied in curvature, thickness, and tapering. Each object was mounted on a pin that could be inserted in the arm of a manipulandum (see Fig. 1). The manipulandum allowed the object to be pulled out, slid to the left, or twisted to the left, and allowed participants to execute eight combinations of these action dimensions.

Each object–action pair was identified by a label; four of the labels referred to semantically similar concepts (bright, clever, witty, and smart) and four to semantically distinct concepts (honest, rare, soft, and easy). These labels did not differ in verbal frequency, t(6) = –1.935, n.s. Frequency information was retrieved from the MRC Psycholinguistic Database (http://websites.psychology.uwa.edu.au/school/MRCDatabase/uwa_mrc.htm; see Table 1).¹

During the experimental phase, we presented pictures of the objects on a computer screen so that the presentation format matched that of the word stimuli and was as free from experimenter error as possible. Stimuli were presented at a visual angle of 9.1°, and words were printed in 20-point capital Tahoma font. Stimuli were presented on a Dell OptiPlex 755 mini-tower computer using SuperLab 4.5 software (Pro edition). Actions were performed on an 81-mm black PVC cylinder (42-mm diameter).
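Visual angle links on-screen stimulus size to viewing distance through size = 2·d·tan(θ/2). The viewing distance is not reported here, so the sketch below takes it as a parameter; the 60-cm value in the usage line is a hypothetical example only.

```python
import math


def size_for_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Extent (cm) that subtends angle_deg at a viewing distance of distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


def visual_angle(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an extent of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# HYPOTHETICAL viewing distance: the article does not report one.
VIEWING_DISTANCE_CM = 60.0
stimulus_cm = size_for_visual_angle(9.1, VIEWING_DISTANCE_CM)
print(f"9.1 deg at {VIEWING_DISTANCE_CM:.0f} cm is about {stimulus_cm:.1f} cm on screen")
```

The two functions are inverses, so either can be used to check a display setup against the reported 9.1°.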

Crucially, the labels allowed us to create one group of objects for which the associations were semantically similar and one group for which they were semantically distinct. In this object set, some objects were visually similar and some were visually distinct; the same was true of the actions. We expected visually similar objects to be confused more often than visually distinct objects, and similar actions to be confused more often than distinct actions, as Desmarais, Dixon, and Roy (2007) and Desmarais, Pensa, Dixon, and Roy (2007) had observed with these stimuli. The specific object–action–name associations were counterbalanced across participants (see Table 2 for sample combinations), but to control for visual and action similarity, each group (semantically similar vs. distinct) comprised four equally similar objects and four equally similar actions.

Procedure

Participants were tested over five consecutive days (see Fig. 2).

Day 1

Training phase

Participants completed 20 blocks, each consisting of eight learning trials followed by eight test trials. They sat at arm’s length in front of the manipulandum with their eyes closed. The experimenter signaled the beginning of learning trials by saying “this is how you use this object.” Participants then opened their eyes and saw an object on the manipulandum, together with a 9 × 6 cm card bearing the object’s name. The experimenter said the name of the object and demonstrated its associated action on the object (approximately 1 s). Participants did not produce a response. The experimenter signaled the end of each learning trial by asking participants to close their eyes. This sequence continued until all eight objects had been presented once, in random order.

Test trials also began with participants’ eyes closed. The experimenter signaled the beginning of each test trial by asking participants to name the object mounted on the manipulandum. An 18 × 26 cm sheet displayed all eight labels in random order in case participants did not remember a particular label. Once participants had named the object, they grasped it and performed its action on the manipulandum. If unsure, they were instructed to make their best guess. The experimenter recorded the responses without providing feedback and signaled the end of each test trial by asking participants to close their eyes. Each object was presented once, in random order. Participants then completed eight more learning trials, followed by eight more test trials, and this alternation continued until all 20 blocks had been completed (approximately 70 min). By the end of the training phase, all participants could flawlessly identify each object and perform all actions.
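The block structure of the training phase can be sketched as a trial list. This is a minimal sketch assuming a fresh random order for every learning and test half-block; the function name and the (block, phase, item) tuple format are hypothetical, and the counterbalanced object and action paired with each label are not modelled.

```python
import random

# The eight labels used in the study. Which object and action each label was
# paired with was counterbalanced across participants and is not modelled here.
LABELS = ["bright", "clever", "witty", "smart", "honest", "rare", "soft", "easy"]


def training_schedule(items, n_blocks=20, seed=None):
    """Return (block, phase, item) tuples. In each block, every item is shown once
    in a fresh random order for learning, then once more in a fresh random order
    for testing."""
    rng = random.Random(seed)
    schedule = []
    for block in range(1, n_blocks + 1):
        for phase in ("learn", "test"):
            order = list(items)
            rng.shuffle(order)
            schedule.extend((block, phase, item) for item in order)
    return schedule


schedule = training_schedule(LABELS, seed=1)
print(len(schedule), schedule[:3])
```

Seeding a private `random.Random` instance keeps each participant's order reproducible without touching the global random state.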

Experimental phase

The experimental phase included naming the novel objects, naming words, gesturing in response to pictures of the novel objects, and gesturing in response to words. Before each task, participants were presented with 16 reminder trials identical to the learning trials described above. The order of the tasks was counterbalanced between participants, but individual participants received the same order on Days 1 and 5. The experimental phase took approximately 30 min.
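The article states that task order was counterbalanced but not how. One standard option for four tasks is a Williams (balanced) Latin square, in which each task appears once in each serial position and each task immediately follows every other task exactly once. The sketch below assumes that design purely for illustration; with 12 participants, the four orders would each be used three times, and each participant would repeat the same order on Day 5.

```python
TASKS = ["naming objects", "naming words", "gesturing to objects", "gesturing to words"]


def williams_square(n):
    """Balanced Latin square (Williams design) for an even number n of conditions.
    The first row is 0, 1, n-1, 2, n-2, ...; later rows add 1 modulo n."""
    if n % 2:
        raise ValueError("this simple construction is balanced only for even n")
    first, low, high = [0], 1, n - 1
    take_low = True
    while len(first) < n:
        if take_low:
            first.append(low)
            low += 1
        else:
            first.append(high)
            high -= 1
        take_low = not take_low
    return [[(c + r) % n for c in first] for r in range(n)]


def task_order(participant, tasks=TASKS):
    """Hypothetical assignment: participant k receives row k modulo the number of rows."""
    rows = williams_square(len(tasks))
    return [tasks[i] for i in rows[participant % len(rows)]]


for p in range(4):
    print(p, task_order(p))
```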

- Naming pictures of objects: Trials began with a 1,000-ms, centrally located fixation cross followed by the picture of one of the objects, which was displayed until participants had named the object. The word “answer” then replaced the picture, and the experimenter recorded participants’ answers. This sequence was repeated until each object had been presented ten times in random order (80 trials in total). Accuracy and reaction times (RTs), measured from stimulus onset to the participant’s vocal response, were collected.

- Naming words: This task was identical to naming pictures of objects, except that the stimuli were words instead of pictures. The task amounted to reading words, but we labeled it “naming words” to keep the terminology consistent across tasks.

- Gesturing to objects: Participants placed their right hand above a response pad located in front of them, with their index finger over a button. Trials began with a 1,000-ms, centrally presented fixation cross followed by the picture of one of the eight objects. Participants initiated their response by pressing the button, produced the action on the cylinder, and then pressed the button again. The stimulus disappeared at the first button press. After the second button press, the word “answer” appeared and the experimenter recorded the participant’s response. This sequence was repeated until each object had been presented ten times in random order (80 trials in total). Accuracy, RT (the interval between stimulus onset and the first button press), and movement time (the interval between the first and second button presses) were collected.

- Gesturing to words: This task was identical to gesturing to objects, except that the stimuli were words instead of pictures.

Days 2–4

Participants completed 20 blocks of eight learning trials and 16 test trials identical to those described for the training phase. Feedback was provided when errors occurred.

Day 5

Participants completed practice trials as described for Days 2–4 and an experimental phase as described above.

Results

RTs to correct trials were trimmed recursively at three standard deviations (Van Selst & Jolicœur, 1994) and entered into a 2 (day) × 2 (task) × 2 (stimulus type) × 2 (semantic similarity) analysis of variance (ANOVA). The analysis revealed a number of significant effects.² Importantly, the four-way interaction between all factors was marginally significant, F(1, 11) = 4.60, p = .055 (see Fig. 3). An analysis of the simple main effects revealed that semantic similarity affected performance in three tasks on Day 1. RTs were faster for stimuli associated with semantically distinct labels when gesturing to novel objects (mean RTs = 1,143 ms for semantically distinct items and 1,189 ms for semantically similar items), F(1, 11) = 6.99, p < .025, and when gesturing to words (mean RTs = 798 ms for semantically distinct items and 854 ms for semantically similar items), F(1, 11) = 10.18, p < .01. Surprisingly, naming objects was slower when objects were associated with semantically distinct labels (mean = 1,311 ms) than when they were associated with semantically similar labels (mean = 1,218 ms), F(1, 11) = 28.39, p < .01. On Day 5, semantic similarity did not affect any task.
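Recursive trimming can be sketched as repeatedly excluding RTs beyond a fixed number of standard deviations from the mean, recomputing both after each pass. This is a simplified fixed-criterion version, not Van Selst and Jolicœur's (1994) modified recursive procedure, which adjusts the criterion to sample size. That adjustment matters because, with the sample SD, no single value can lie more than (n − 1)/√n SDs from the mean, so a fixed 3-SD cut can never remove anything when n ≤ 10. The example RTs are hypothetical.

```python
import statistics


def recursive_trim(rts, criterion=3.0, min_n=3):
    """Repeatedly drop values more than `criterion` sample SDs from the mean,
    recomputing mean and SD after each pass, until a pass removes nothing."""
    kept = list(rts)
    while len(kept) >= min_n:
        mean = statistics.mean(kept)
        sd = statistics.stdev(kept)
        survivors = [rt for rt in kept if abs(rt - mean) <= criterion * sd]
        if len(survivors) == len(kept):
            break
        kept = survivors
    return kept


# HYPOTHETICAL correct-trial RTs (ms) for one participant in one cell.
example_rts = [480, 490, 500, 510, 520] * 4 + [3000]
trimmed = recursive_trim(example_rts)
print(len(example_rts), "->", len(trimmed))
```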

We directly tested our prediction that participants would produce actions faster than names in response to objects only on Day 5, using two planned directional paired-samples t tests on data collapsed across semantic similarity. On Day 1, gesturing (mean RT = 1,166 ms) and naming (mean RT = 1,265 ms) did not differ, t(11) = –0.794, n.s. On Day 5, however, RTs were faster for gesturing (mean RT = 624 ms) than for naming (mean RT = 969 ms), t(11) = –6.389, p < .001.
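A paired-samples t test reduces to a one-sample t on per-participant difference scores, with df = n − 1. The sketch below is a minimal standard-library version that returns only t and df. For the directional p value, one option is SciPy's `scipy.stats.t.cdf(t, df)` when the predicted sign is negative. The argument order (gesturing first) is our assumption about how the reported negative t values arose.

```python
import math
import statistics


def paired_t(condition_a, condition_b):
    """Paired-samples t for mean(a - b), returned as (t, df).
    A negative t means condition_a had smaller values (e.g., faster RTs)."""
    if len(condition_a) != len(condition_b):
        raise ValueError("need one score per participant in each condition")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / se, n - 1


# HYPOTHETICAL per-participant mean RTs (ms), collapsed over semantic similarity.
gesture_rts = [610.0, 655.0, 598.0, 640.0]
naming_rts = [930.0, 1010.0, 905.0, 990.0]
t, df = paired_t(gesture_rts, naming_rts)
print(f"t({df}) = {t:.3f}")
```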

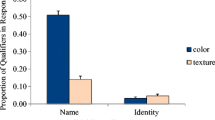

The proportions of errors were entered into a 2 (day) × 2 (task) × 2 (stimulus type) × 2 (semantic similarity) ANOVA. Only confusions between two items of the same category were included; errors across categories were excluded because they did not reflect within-category confusions. We observed a main effect of stimulus type: Participants produced fewer errors in response to words (mean = 1.8% errors) than in response to objects (mean = 4.1% errors), F(1, 11) = 8.52, p = .014. This main effect was qualified by a three-way interaction between day, task, and stimulus type, F(1, 11) = 4.79, p = .051. To keep these comparisons consistent with the previous analysis, we contrasted the mean proportions of errors for naming and gesturing to the stimuli on Days 1 and 5. Uncorrected t tests showed no reliable differences on Day 1, either between gesturing to (mean = 4.9%) and naming (mean = 3.0%) objects, t(11) = 0.751, n.s., or between gesturing to (mean = 3.1%) and naming (mean = 1.4%) words, t(11) = 1.449, n.s. On Day 5, there was a trend toward more errors for naming objects (mean = 5.6%) than for gesturing to objects (mean = 2.8%), t(11) = –1.685, p = .06 (one-tailed), and significantly more errors for gesturing to words (mean = 2.8%) than for naming words (no errors), t(11) = 2.345, p = .02 (one-tailed).
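The error measure counts only confusions within a semantic category. This is a minimal sketch assuming trials are coded as (target label, response label) pairs. The article does not say whether cross-category errors stay in the denominator, so dividing by all trials in the cell is our assumption. The labels and their category membership come from the Materials section.

```python
SIMILAR_LABELS = ("bright", "clever", "witty", "smart")
DISTINCT_LABELS = ("honest", "rare", "soft", "easy")
CATEGORY = {
    **{label: "similar" for label in SIMILAR_LABELS},
    **{label: "distinct" for label in DISTINCT_LABELS},
}


def within_category_error_rate(trials):
    """Proportion of trials whose response was a *different* item from the target's
    own category. `trials` holds (target_label, response_label) pairs; a missing
    response can be coded as None. ASSUMPTION: the denominator is all trials."""
    trials = list(trials)
    if not trials:
        raise ValueError("no trials in this cell")
    confusions = sum(
        1
        for target, response in trials
        if response != target and CATEGORY.get(response) == CATEGORY[target]
    )
    return confusions / len(trials)


# HYPOTHETICAL trials: one within-category confusion, one cross-category error.
cell = [("bright", "clever"), ("bright", "bright"), ("honest", "bright"), ("soft", "soft")]
print(within_category_error_rate(cell))
```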

We entered movement times into a 2 (day) × 2 (stimulus type) × 2 (semantic similarity) repeated measures ANOVA and observed a main effect of day, F(1, 11) = 59.14, p < .001: Actions were completed more slowly on Day 1 (mean = 2,113 ms) than on Day 5 (mean = 1,695 ms). This effect was qualified by a two-way interaction between day and semantic similarity, F(1, 11) = 5.25, p = .043. To be consistent with our RT analysis, we compared the effects of semantic similarity on Days 1 and 5. On Day 1, there was a weak, nonsignificant trend toward faster completion of actions when stimuli were associated with semantically distinct labels (mean = 2,066 ms) than when they were associated with semantically similar labels (mean = 2,160 ms), t(11) = 1.176, p = .12 (one-tailed). This difference disappeared on Day 5 (means = 1,690 ms for semantically distinct and 1,699 ms for semantically similar stimuli), t(11) = 0.189, n.s.
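Because every factor in these designs has two levels and is manipulated within participants, each ANOVA effect has one numerator df. Its F(1, n − 1) therefore equals the square of a one-sample t computed on per-participant contrast scores. The sketch below applies this to the day × semantic similarity interaction. It assumes cell means have already been averaged over stimulus type, and the dictionary layout is a hypothetical encoding.

```python
import math
import statistics


def day_by_similarity_contrast(cells):
    """Interaction contrast for one participant. `cells` maps (day, similarity) to a
    mean movement time, already averaged over stimulus type (hypothetical layout)."""
    day1_effect = cells[(1, "similar")] - cells[(1, "distinct")]
    day5_effect = cells[(5, "similar")] - cells[(5, "distinct")]
    return day1_effect - day5_effect


def f_from_contrasts(scores):
    """F(1, n - 1) for a one-df within-subjects effect: the squared one-sample t
    on the per-participant contrast scores. Returns (F, (df1, df2))."""
    n = len(scores)
    t = statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)


# HYPOTHETICAL cell means for three participants.
participants = [
    {(1, "similar"): 2160.0, (1, "distinct"): 2070.0, (5, "similar"): 1700.0, (5, "distinct"): 1690.0},
    {(1, "similar"): 2200.0, (1, "distinct"): 2150.0, (5, "similar"): 1650.0, (5, "distinct"): 1660.0},
    {(1, "similar"): 2100.0, (1, "distinct"): 2000.0, (5, "similar"): 1720.0, (5, "distinct"): 1700.0},
]
F, df = f_from_contrasts([day_by_similarity_contrast(c) for c in participants])
print(f"F{df} = {F:.2f}")
```

Working from contrast scores makes the link between the planned t tests and the ANOVA effects explicit: both use the same per-participant differences.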

Discussion

We showed that semantic similarity impacts most tasks when object–action associations are novel, but not after one week of training, and that the advantage for producing actions over names in response to objects is absent when the associations are novel, but present after one week of training.

On Day 1, semantic similarity affected naming objects and, more importantly, gesturing in response to objects and words. According to the NAM (Yoon et al., 2002), when a stimulus from the semantically similar category (e.g., “bright”) was presented, its structural representation was activated. While the structural representation was being resolved, activation fed forward to semantic representations, activating both its representation in semantic memory and the representations of its similar neighbors (“clever,” “smart,” and “witty”). The activation for all four concepts then fed forward to the action output, leading to longer RTs to determine an appropriate action. When a stimulus from the semantically distinct group, such as “easy,” was presented, its semantic representation received more activation because it had no close neighbors. When activity fed forward to the action representations, the target action received more activation, and action production was initiated faster. Semantic similarity therefore mediated the RTs for gesturing in response to both objects and words.
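The activation account above can be illustrated with a deliberately simplified accumulator. This is not the NAM's interactive activation and competition network. The single feed-forward pass, the stopping rule, and the parameter values (`spread`, `rate`, `margin`) are all illustrative assumptions. In this toy, a semantically distinct concept drives only its own action unit. A concept with similar neighbours also sends some activation to their action units, so the target action takes longer to pull ahead of its rivals.

```python
def steps_to_select(n_neighbours, spread=0.3, rate=0.1, margin=1.0, max_steps=10_000):
    """Toy accumulator: number of update steps until the target action's activation
    exceeds its strongest rival's by `margin` (None if it never does)."""
    # Semantic activation: the target concept gets 1.0; each similar neighbour
    # receives a fraction `spread` of that activation.
    semantic = [1.0] + [spread] * n_neighbours
    action = [0.0] * len(semantic)
    for step in range(1, max_steps + 1):
        # Each action unit accumulates input from its own semantic unit.
        action = [a + rate * s for a, s in zip(action, semantic)]
        strongest_rival = max(action[1:], default=0.0)
        if action[0] - strongest_rival >= margin:
            return step
    return None


print("distinct item (no neighbours):", steps_to_select(0))
print("similar item (three neighbours):", steps_to_select(3))
```

Because the stopping rule compares the target only with its strongest rival, the slowdown here depends on how much activation spreads rather than on the number of neighbours. A model with lateral inhibition among action units would typically make the number of active competitors matter as well.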

Unexpectedly, and contrary to past research, when participants named objects, this effect was reversed. Typically, naming RTs are faster for items from semantically distinct categories (Dickerson & Humphreys, 1999; Humphreys, Riddoch, & Quinlan, 1988; Lloyd-Jones & Humphreys, 1997a, 1997b). It is possible that grouping objects under one umbrella term such as “intelligent” helped participants resolve the objects’ identities faster for naming but not for action production. Because there were only eight objects, having four of these objects labeled as “intelligent” may have facilitated identification. Nonetheless, the conflicting result for naming objects supports the idea that semantic associations impacted performance.

Our results are consistent with planning and control action models (Glover, 2004): Semantic similarity generally impacted action planning (RT) but not action control (movement time). The observation of an impact of semantic information in action control in other studies may have arisen from the specific choice of verbs as stimuli. For example, Boulenger et al. (2006) asked participants to perform actions following presentations of both nouns and verbs, and reported that the presentation of verbs facilitated reaching movements (action control). This is not surprising and not inconsistent with our findings. Processing verbs is known to generate activity in motor areas (Pulvermüller, Shtyrov, & Ilmoniemi, 2005). It is therefore possible that the facilitation observed in action execution arose from this motor activity, which occurred in addition to semantic activation. Our stimuli were specifically selected not to elicit action-related semantic information—it is therefore not surprising that their impact on action execution was different from the presentation of verbs that can activate action-related semantics.

On Day 5, semantic similarity had no impact on gesturing in response to objects, suggesting that these actions were now produced via a direct route. However, contrary to the NAM’s prediction (Yoon et al., 2002), semantic similarity did not affect gesturing to words or naming objects either. It is possible that the small number of competitors in our set produced less competition from semantically similar concepts than occurs for real-world objects, which have numerous competitors, making the impact of semantic similarity more subtle in our study. The amount of practice may also have led to a floor effect that prevented us from detecting it.

As predicted, we observed no advantage for gesturing relative to naming objects on Day 1, whereas on Day 5 gesturing in response to objects was more effective (faster and marginally more accurate) than naming objects. The direct route described by the NAM (Yoon et al., 2002) may therefore require practice to develop. A change in the use of direct versus indirect routes can also be observed as people learn to imitate actions. Tessari, Bosanac, and Rumiati (2006) asked participants to learn to imitate meaningless (and objectless) gestures, and they observed that action imitation was initially done through a direct route that bypassed semantics. As actions became more familiar, imitation occurred through an indirect route that allows for actions themselves to be recognized—a process different from that of object use, in which an object (not an action) can be recognized before being used.

In conclusion, semantic similarity affects actions made in response to objects when object–action associations are novel. Models of action production such as the NAM may therefore be useful to explain the production of newly acquired actions, but they may require that various object cues (e.g., visual structure and semantic associations) be weighted differently.

Notes

1. We opted for abstract concepts because recent findings have suggested that the visual and action information associated with labels affects novel object–action associations (Desmarais, Hudson, & Richards, 2015).

2. Our analysis revealed a main effect of day, F(1, 11) = 61.88, p < .001, as well as a main effect of stimulus type, F(1, 11) = 72.28, p < .001. Participants generally responded faster on the fifth day of training (mean = 665 ms) than on the first day (mean = 939 ms), and they generally responded faster to words (mean = 598 ms) than to objects (mean = 1,006 ms). These main effects were qualified by three two-way interactions: between day and task, F(1, 11) = 16.35, p = .002; between day and stimulus type, F(1, 11) = 20.02, p = .001; and between task and stimulus type, F(1, 11) = 30.08, p < .001.

References

Boulenger, V., Roy, A. C., Paulignan, Y., Deprez, V., Jeannerod, M., & Nazir, T. A. (2006). Cross-talk between language processes and overt motor behavior in the first 200 ms of processing. Journal of Cognitive Neuroscience, 18, 1607–1615.

Buxbaum, L. J., Schwartz, M. F., & Carew, T. G. (1997). The role of semantic memory in object use. Cognitive Neuropsychology, 14, 219–254.

Chainay, H., & Humphreys, G. W. (2002). Privileged access to action for objects relative to words. Psychonomic Bulletin & Review, 9, 348–355.

Desmarais, G., Dixon, M. J., & Roy, E. A. (2007). A role for action knowledge in visual object identification. Memory & Cognition, 35, 1712–1723. doi:10.3758/BF03193504

Desmarais, G., Hudson, P., & Richards, E. D. (2015). Influences of visual and action information on object identification and action production. Consciousness and Cognition, 34, 124–139.

Desmarais, G., Pensa, M. C., Dixon, M. J., & Roy, E. A. (2007). The importance of object similarity in the production and identification of actions associated with objects. Journal of the International Neuropsychological Society, 13, 1021–1034.

Dickerson, J., & Humphreys, G. W. (1999). On the identification of misoriented objects: Effects of task and level of stimulus description. European Journal of Cognitive Psychology, 11, 145–166.

Glover, S. (2004). Separate visual representations in the planning and control of action. Behavioral and Brain Sciences, 27, 3–78.

Goodale, M. A., & Milner, A. D. (1992). Separate visual pathways for perception and action. Trends in Neurosciences, 15, 20–25. doi:10.1016/0166-2236(92)90344-8

Humphreys, G. W., Riddoch, M. J., & Quinlan, P. T. (1988). Cascade processes in picture identification. Cognitive Neuropsychology, 5, 67–103.

Lloyd-Jones, T. J., & Humphreys, G. W. (1997a). Categorizing chairs and naming pears: Category differences in object processing as a function of task and priming. Memory & Cognition, 25, 606–624. doi:10.3758/BF03211303

Lloyd-Jones, T. J., & Humphreys, G. W. (1997b). Perceptual differentiation as a source of category effects in object processing: Evidence from naming and object decision. Memory & Cognition, 25, 18–35. doi:10.3758/BF03197282

Negri, G. A., Lunardelli, A., Gigli, G. L., & Rumiati, R. I. (2007). Degraded semantic knowledge and accurate object use. Cortex, 2, 376–388.

Negri, G. A., Rumiati, R. I., Zadini, A., Ukmar, M., Mahon, B. Z., & Caramazza, A. (2007). What is the role of motor simulation in action and object recognition? Evidence from apraxia. Cognitive Neuropsychology, 24, 795–816. doi:10.1080/02643290701707412

Paulus, M., van Elk, M., & Bekkering, H. (2012). Acquiring functional object knowledge through motor imagery? Experimental Brain Research, 218, 181–188.

Pulvermüller, F., Shtyrov, Y., & Ilmoniemi, R. (2005). Brain signatures of meaning access in action word recognition. Journal of Cognitive Neuroscience, 17, 884–892. doi:10.1162/0898929054021111

Riddoch, M. J., & Humphreys, G. W. (1987). Visual object processing in a case of optic aphasia: A case of semantic agnosia. Cognitive Neuropsychology, 4, 131–185.

Rumiati, R. I., Zanini, S., Vorano, L., & Shallice, T. (2001). A form of ideational apraxia as a deficit of contention scheduling. Cognitive Neuropsychology, 18, 617–642.

Tessari, A., Bosanac, D., & Rumiati, R. I. (2006). Effect of learning on imitation of new actions: Implications for a memory model. Experimental Brain Research, 3, 507–513.

van Elk, M., Paulus, M., Pfeiffer, C., van Schie, H. T., & Bekkering, H. (2011). Learning to use novel objects: A training study on the acquisition of novel action representations. Consciousness and Cognition, 20, 1304–1314. doi:10.1016/j.concog.2011.03.014

van Elk, M., van Schie, H. T., & Bekkering, H. (2014). Action semantics: A unifying conceptual framework for the selective use of multimodal and modality-specific object knowledge. Physics of Life Reviews, 11, 220–250.

Van Selst, M., & Jolicœur, P. (1994). A solution to the effect of sample size on outlier elimination. Quarterly Journal of Experimental Psychology, 47A, 631–650. doi:10.1080/14640749408401131

Yoon, E. Y., Heinke, D., & Humphreys, G. W. (2002). Modelling direct perceptual constraints on action selection: The naming and action model (NAM). Visual Cognition, 9, 615–661.

Author note

This research was supported by a Natural Sciences and Engineering Research Council of Canada Discovery Grant (PIN-246231-07), awarded to the second author, as well as by a Marjorie-Young Bell faculty fellowship awarded to the third author.


Cite this article

Macdonald, S.N., Richards, E.D. & Desmarais, G. Impact of semantic similarity in novel associations: Direct and indirect routes to action. Atten Percept Psychophys 78, 37–43 (2016). https://doi.org/10.3758/s13414-015-1041-z
