Abstract

Background

Improving access to high-quality healthcare for individuals in correctional settings is critical to advancing health equity in the United States. Compared to the general population, criminal-legal involved individuals experience higher rates of chronic health conditions and poorer health outcomes. Implementation science frameworks and strategies offer useful tools to integrate health interventions into criminal-legal settings and to improve care. A review of implementation science in criminal-legal settings to date is necessary to advance future applications. This systematic review summarizes research that has harnessed implementation science to promote the uptake of effective health interventions in adult criminal-legal settings.

Methods

A systematic review of seven databases (Academic Search Premier, Cumulative Index to Nursing and Allied Health Literature, PsycINFO, Social Work Abstracts, ProQuest Criminal Justice Database, ProQuest Sociological Abstracts, MEDLINE/PubMed) was conducted. Eligible studies used an implementation science framework to assess implementation outcomes, determinants, and/or implementation strategies in adult criminal-legal settings. Qualitative synthesis was used to extract and summarize settings, study designs, sample characteristics, methods, and application of implementation science methods. Implementation strategies were further analyzed using the Pragmatic Implementation Reporting Tool.

Results

Twenty-four studies met inclusion criteria. Studies implemented interventions to address infectious diseases (n=9), substance use (n=6), mental health (n=5), co-occurring substance use and mental health (n=2), or other health conditions (n=2). Studies varied in their operationalization and description of guiding implementation frameworks/taxonomies. Sixteen studies reported implementation determinants and 12 studies measured implementation outcomes, with acceptability (n=5), feasibility (n=3), and reach (n=2) commonly assessed. Six studies tested implementation strategies. Systematic review results were used to generate recommendations for improving implementation success in criminal-legal contexts.

Conclusions

The focus on implementation determinants in correctional health studies reflects the need to tailor implementation efforts to complex organizational and inter-agency contexts. Future studies should investigate policy factors that influence implementation success; design and test implementation strategies tailored to determinants; and investigate a wider array of implementation outcomes relevant to criminal-legal settings, health interventions relevant to adult and juvenile populations, and health equity outcomes.

Trial registration

A study protocol (CRD42020114111) was registered with PROSPERO.

Methods

The research team conducted a systematic review in accordance with PRISMA guidelines [22]. A study protocol (CRD42020114111) was registered with PROSPERO prior to article searching and data extraction. The research team conducted an initial search in December 2019 and, because of COVID-related delays, a secondary search for new publications in September 2021.

Database sources and search strategy

The research team searched peer-reviewed literature registered in seven computerized article databases, including Academic Search Premier, Cumulative Index to Nursing and Allied Health Literature, PsycINFO, Social Work Abstracts, ProQuest Criminal Justice Database, ProQuest Sociological Abstracts, and MEDLINE/PubMed. The team also hand searched for relevant articles across four journals (i.e., the Journal of Offender Rehabilitation, Health and Justice, Implementation Science, and the Administration and Policy in Mental Health and Mental Health Services Research) to ensure that no articles were overlooked by database search criteria. Members of the research team met with a reference librarian to confirm search terms for three key concepts: implementation, corrections, and health intervention. A complete list of search terms is in Appendix 1.

Inclusion criteria

Articles were included in the systematic review if they (1) described an empirical study of implementation outcomes, determinants, and/or implementation strategies related to a health intervention; (2) cited an implementation science theory, model, framework, or taxonomy in their approach; (3) were conducted within a criminal-legal setting (i.e., jails, prisons, community supervision, and courts); (4) focused on interventions for adult populations; (5) were conducted within the U.S.; (6) were published in a peer-reviewed journal; (7) were published in English; and (8) were published between January 1, 1998, and August 31, 2021. Because the implementation science field is relatively young, and most public health intervention research in criminal-legal settings began to increase during the 2000s, we selected 1998 as the starting year to capture implementation research. Studies were excluded if they solely described efficacy or effectiveness results of health interventions or evidence-based practices (EBPs) (i.e., did not assess implementation outcomes); were study protocols, viewpoints (e.g., conceptual articles, perspectives), systematic reviews, dissertations, or conference abstracts; focused on youth or juvenile justice populations (whose needs and correctional environments differ from those of the adult population); or were published outside of the aforementioned date range. Studies conducted outside of the U.S. were also excluded given the significant differences in criminal-legal and health service systems across the global community.
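The eight inclusion criteria can be read as a conjunction of checks applied to each candidate article. The following is a minimal sketch under stated assumptions, not the procedure the research team used: the `Record` class, its field names, and the setting labels are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical record structure; field names are illustrative assumptions.
@dataclass
class Record:
    empirical_implementation_study: bool   # criterion 1
    cites_implementation_framework: bool   # criterion 2
    setting: str                           # criterion 3
    adult_population: bool                 # criterion 4
    country: str                           # criterion 5
    peer_reviewed: bool                    # criterion 6
    language: str                          # criterion 7
    published: date                        # criterion 8

# Settings and date window as stated in the inclusion criteria.
ELIGIBLE_SETTINGS = {"jail", "prison", "community supervision", "court"}
WINDOW_START = date(1998, 1, 1)
WINDOW_END = date(2021, 8, 31)

def is_eligible(record: Record) -> bool:
    """Return True only if the record satisfies all eight criteria."""
    return all([
        record.empirical_implementation_study,
        record.cites_implementation_framework,
        record.setting in ELIGIBLE_SETTINGS,
        record.adult_population,
        record.country == "US",
        record.peer_reviewed,
        record.language == "English",
        WINDOW_START <= record.published <= WINDOW_END,
    ])

example = Record(True, True, "jail", True, "US", True, "English", date(2015, 6, 1))
print(is_eligible(example))
```

In practice, eligibility judgments of this kind were made by human reviewers reading abstracts and full texts; the sketch only makes the logical structure of the criteria explicit.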

Study screening, data abstraction and synthesis

Search results were managed using Zotero and uploaded into Covidence [23] for de-duplication, screening, and full text review. All abstracts were reviewed by two members of the research team. Disagreements over study eligibility were discussed until a consensus decision was reached or adjudicated by a third reviewer, and reasons for exclusion were documented. All articles eligible for full text review were evaluated by two reviewers, with consensus discussions and a third reviewer resolving any disagreements on article eligibility for the systematic review.
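The dual-review rule described above (two independent reviewers, consensus discussion, then a third reviewer if needed) can be sketched as a small decision function. This is an illustrative assumption about how the rule could be encoded, not a description of Covidence or of the team's actual workflow; the function and parameter names are hypothetical.

```python
from typing import Optional

def resolve_screening(
    reviewer_a: bool,
    reviewer_b: bool,
    consensus: Optional[bool] = None,
    adjudicator: Optional[bool] = None,
) -> bool:
    """Return the include/exclude decision for one article.

    Agreement between the two reviewers is final. On disagreement, a
    consensus decision is used if one was reached; otherwise the third
    reviewer's vote decides.
    """
    if reviewer_a == reviewer_b:
        return reviewer_a
    if consensus is not None:
        return consensus
    if adjudicator is not None:
        return adjudicator
    raise ValueError("Disagreement is unresolved: no consensus or adjudication recorded")

# Reviewers disagree, no consensus is reached, and the third reviewer excludes.
print(resolve_screening(True, False, adjudicator=False))
```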

The team developed a standardized abstraction template to summarize study information including location, study aims, type of criminal-legal setting, description of the intervention being implemented, study design, sample characteristics, analytic approach, outcomes measured, implementation framework(s) applied, relevant framework domains named in the findings, contextual factors, and a description of implementation strategies used and their corresponding targets. All extracted data were reviewed by the research team to ensure accuracy. Specific implementation outcomes (e.g., acceptability, adoption) identified in the studies were extracted and coded using Proctor et al.’s [9] taxonomy of implementation outcomes. This taxonomy is widely used and offers a summary of outcome measurement language referenced in many implementation theories, models, and frameworks. Several studies in this review used Proctor et al.’s taxonomy to define their implementation outcomes of interest, and those specific outcomes were extracted for this review. When authors described implementation outcomes that were not framed using the Proctor taxonomy, two reviewers consensus coded the implementation outcome based on its description in the study and then categorized the outcome using the Proctor taxonomy.

Implementation determinants were extracted and categorized based on the five domains of the Consolidated Framework for Implementation Research (CFIR; [11]). The research team selected this framework as it is one of the most widely cited [13] and recognizable implementation frameworks and was developed by consolidating domains and definitions from multiple implementation frameworks. Additionally, many studies in this review used CFIR to code their implementation determinants. When authors did not use the CFIR domains in their analysis, two reviewers consensus coded the implementation determinant based on its description in the study and then categorized the determinant using the CFIR domains to promote standardized terminology across our synthesis.

We operationalized implementation strategies reported in all implementation trials using the Pragmatic Implementation Reporting Tool [24]. The research team selected this tool because it provides a standardized approach to specifying implementation strategy components used in clinical and implementation research. The Pragmatic Implementation Reporting Tool integrates implementation strategy reporting guidelines from Proctor et al.’s “Specify It” criteria and Presseau et al.’s [25] Action, Actor, Context, Target, Time framework. Implementation trials were defined as studies that evaluated the effectiveness of an implementation strategy on a specified implementation outcome (e.g., the effectiveness of a “facilitation” strategy on adoption of an EBP). Implementation trials that did not report implementation outcomes were not included in this step of the coding. Using the Pragmatic Implementation Reporting Tool [24] as the guide, one reviewer examined all relevant implementation trial manuscripts and identified relevant protocol papers to extract and document information relating to the strategy specification. A second co-author reviewed the data extraction to promote rigor.

Results

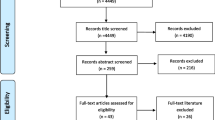

A total of 4382 articles were identified in the searched databases and 30 studies were identified through hand searching (Fig. 1). Of these, 230 articles met criteria for full text review. Most articles excluded at full text review did not frame the study as implementation science or cite implementation science relevant references (n = 124); the remainder were ineligible publication types (e.g., protocols, conference abstracts; n = 49), were not conducted in a criminal-legal setting (n = 18), or were conducted outside of the U.S. (n = 15). In total, 24 articles were included in the study sample (see Table 1).

Design, sample and setting characteristics of included studies

Table 2 summarizes the study design, sample, and setting-related characteristics of all studies included in this systematic review. In terms of study design, 42% (n = 10) of the articles identified were cross-sectional studies (i.e., data collected from a single measurement point), 38% (n = 9) were experimental (i.e., random assignment), and 21% (n = 5) were pre-experimental studies with pre- and post-test measures across a single group. Few (25%; n = 6) used a hybrid design, an increasingly common approach to addressing effectiveness and implementation aims within the same trial (see [50]). Approximately 42% (n = 10) exclusively employed quantitative methods, 33% (n = 8) used qualitative methods, and 25% (n = 6) used both qualitative and quantitative methods.

Studies included representation from multiple criminal-legal staff, community-based partners, clients, and researchers. Few studies included only criminal-legal staff (21%, n = 5), while other studies included criminal-legal staff and community-based partners (33%, n = 8), staff, community-based partners, and clients (8%, n = 2), or staff and research partners (8%, n = 2). One study included community-based staff and clients (4%), and six studies used administrative data (25%). Most studies (71%; n = 17) included at least one prison setting and half (n = 12) included at least one jail setting. Fewer than a third of studies (29%; n = 7) were conducted in a community corrections setting such as probation or parole. No identified studies focused on specialty courts (e.g., drug or mental health treatment courts).

Approximately 38% (n = 9) of studies investigated interventions to address infectious diseases, 25% (n = 6) addressed substance use, 21% (n = 5) focused on mental health, 8% (n = 2) addressed co-occurring mental health and substance use, and 8% (n = 2) addressed other health conditions. Nearly half of all studies (46%, n = 11) were funded by either phase 1 or phase 2 of NIDA’s CJ-DATS initiative.

Implementation focus and findings

Table 3 presents a summary of the implementation science approaches used in the design and/or analyses of the studies. Implementation science theories, models, frameworks, and taxonomies applied in these studies included the following: Proctor’s Implementation Research Model [51]; Consolidated Framework for Implementation Research [11]; Exploration, Preparation, Implementation, & Sustainment Framework [12]; TCU Program Change Model [52]; Promoting Action on Research Implementation in Health Services [53]; Rogers’ Organizational Diffusion of Innovations [54, 55]; Proctor’s Taxonomy of Implementation Outcomes [9]; Proctor’s Implementation Strategy Specification [15]; Practical, Robust, Implementation and Sustainability Model [56]; and Expert Recommendations for Implementing Change [57].

Of the 24 studies, 67% (n = 16) examined implementation determinants (e.g., barriers and facilitators). Examples of studies focused on implementation determinants include a pre-implementation assessment of factors that could impact intervention implementation (e.g., [26]), a formative evaluation of an intervention (e.g., [30]), a post-implementation assessment of provider perspectives on implementation of an intervention (e.g., [32, 43]), and a retrospective examination of factors impacting implementation and sustainment of an intervention (e.g., [49]). Of the 16 studies examining implementation determinants, factors impacting implementation were most commonly associated with the inner setting (i.e., the organization in which the intervention was implemented; 88%, n = 14), followed by the outer setting (63%, n = 10), implementation process (38%, n = 6), characteristics of individuals engaged in the implementation effort (31%, n = 5), and intervention characteristics (31%, n = 5).
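The domain percentages reported for the 16 determinant studies can be reproduced from the counts. The sketch below assumes round-half-up to the nearest whole percent; the article does not state its rounding rule, so this is an assumption, and the function name is hypothetical.

```python
def percent_half_up(count: int, total: int) -> int:
    """Whole-number percentage of count/total, rounding halves up.

    Integer arithmetic is used because Python's built-in round() rounds
    halves to the nearest even number (round(62.5) == 62).
    """
    if total <= 0:
        raise ValueError("total must be positive")
    return (200 * count + total) // (2 * total)

# Counts of determinant studies per CFIR domain, as reported in the text.
DETERMINANT_STUDIES = 16
domain_counts = {
    "inner setting": 14,
    "outer setting": 10,
    "implementation process": 6,
    "characteristics of individuals": 5,
    "intervention characteristics": 5,
}

for domain, n in domain_counts.items():
    print(f"{domain}: {percent_half_up(n, DETERMINANT_STUDIES)}% (n = {n})")
```

Because a single study can report determinants in more than one domain, the counts are not expected to sum to 16 and the percentages are not expected to sum to 100.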

In addition, 50% (n = 12) of studies measured implementation outcomes. Studies examining implementation outcomes primarily cited those included in the Proctor et al. [9] taxonomy. Of the 12 studies examining implementation outcomes, 42% (n = 5) measured acceptability, which is the perception that a service or practice is “agreeable, palatable, or satisfactory” ([9], p. 67). Further, 33% (n = 4) of studies measured feasibility, or the extent to which an intervention can be used within an organization. In addition, 17% (n = 2) of studies measured penetration or reach, which is the degree to which a practice was integrated within the service setting ([9], p. 70). Additional implementation outcomes measured were appropriateness (8%, n = 1), fidelity (8%, n = 1), cost (8%, n = 1), sustainability (8%, n = 1), and adoption (8%, n = 1).

Few studies explicitly tested implementation strategies (25%, n = 6). Tested implementation strategies included the following: conducting a series of rapid cycle processes [46], conducting a local needs assessment and strategic planning process [37, 45, 46], creating a local change team composed of cross-disciplinary and cross-agency staff [37, 45, 46], utilizing a training coach to support the improvement process [37, 45, 46], promoting network weaving through local councils [29, 47], and providing education and outreach (Friedman et al., 2015; [47, 48]). Three articles [37, 45, 46] examined the implementation strategies from the HIV Services and Treatment Implementation in Corrections (HIV-STIC) studies, which were part of the NIDA-funded CJ-DATS. Two studies [29, 47] examined the same organizational linkage strategy from the MAT Implementation in Community Correctional Environments (MATICCE) studies, also part of CJ-DATS. The level of detail provided across studies was sometimes incomplete, so determinations related to the implementation strategy were based on the best available information within the included articles and their related protocols.

Application of implementation science frameworks and taxonomies

Table 4 provides an overview of the implementation science methods used in each study. Although all studies cited a framework, application of the frameworks varied by study. Of the 24 studies included in this review, 42% (n = 10) used an implementation science framework or taxonomy to select or develop their data collection methods (e.g., [27, 33, 35]; Tables 1 and 4). For example, in some cases, the Proctor et al. [9] taxonomy was used to inform the researchers’ decisions about what implementation outcomes (e.g., acceptability, appropriateness) to select for a study and how to operationalize them (e.g., [45]). Additionally, implementation science frameworks were used for data analysis and coding for 38% (n = 9) of the studies (e.g., [30, 32, 43, 44, 49]). Most studies (67%; n = 16) applied frameworks in the introduction and discussion sections to frame the study and its results. In another 13% (n = 3) of the studies, an implementation science framework was identified or cited, but the authors did not specify how the framework was applied to their study or did not include sufficient detail to code the application of the implementation science framework.

Discussion

This systematic review documented the use of implementation science theory and approaches in studies aiming to implement health interventions in criminal-legal settings. Our review identified 24 articles for inclusion that spanned a 14-year period from 2007 to 2021. Thus, on average, fewer than two articles each year were published that employed implementation science methods in researching the uptake of health interventions in criminal-legal settings. That correctional health research has not kept pace with advancements in implementation science research and methodology is troubling given the complex health needs of the 1.8 million people incarcerated in the nation’s prisons and jails and the 3.7 million people under community supervision [58,59,60]. Mass incarceration and complex co-occurring health conditions both disproportionately impact communities of color and low-income communities [61]. In addition to reforming the criminal-legal system to reduce these inequities, researchers can also investigate effective implementation strategies to rapidly increase access to high-quality health services that meet the health needs of people currently incarcerated and under community supervision. In this way, greater testing of dissemination and implementation strategies in criminal-legal settings can help address health inequities stemming from societal injustices of mass incarceration and the impact of criminal-legal involvement on social and economic wellbeing [62, 63]. This review provides a summary of published correctional health intervention research that integrated implementation science approaches and provides a foundation to inform future studies seeking to employ implementation frameworks, strategies, and outcomes in their study designs.

Limitations

There are three notable limitations to consider when interpreting the results of this study. First, relevant studies may have been excluded within the parameters of the systematic search. Specifically, if a study was not framed as implementation science or did not clearly indicate the use of implementation science methods, it was excluded. For example, a study that examined barriers and facilitators of implementing a program but did not integrate implementation science within the study justification or methods would be excluded. This is also true for studies that may have been supported by funding from CJ-DATS but did not explicitly integrate implementation science methods. To include as many relevant studies as possible, we scanned articles for implementation science relevant citations that might indicate an implementation science approach. Second, the study used 1998 as a start date because of the initiation of the Veterans Affairs Quality Enhancement Research Initiative (VA QUERI). Although most of the studies included in our review were from the 2010s, it is possible that earlier relevant studies could have been excluded. Finally, this systematic review focused on identifying implementation approaches, strategies, and outcomes from efforts aimed at providing evidence-based care to the adult criminal-legal involved population. Thus, we are unable to draw any lessons or comparisons from efforts conducted in juvenile justice settings (e.g., JJ-TRIALS). Adult and juvenile correctional settings differ in their populations, structure, and programming. Implementation strategies that are effective in juvenile settings, where there is a stronger focus on healthcare and rehabilitation programming and where youths typically serve shorter sentences, are likely to differ from implementation strategies used in adult correctional settings.

Implications

Despite these limitations, this study also highlights a number of important future directions related to the implementation of EBPs in criminal-legal contexts.

Focus on determinants reflects complexity of the implementation environment

The fact that studies of implementation determinants make up the majority of this review, most of them focused on the inner setting (i.e., the organizational context), reflects the need to understand the factors impacting implementation within a complex implementation environment. Correctional health interventions, by nature, often involve an inter-organizational and multi-disciplinary context in which practitioners who may be trained in one type of service (e.g., healthcare) are operating within the context of another agency environment (e.g., corrections). For example, a mental health intervention operated by a private service provider but co-located within a county-based detention center is cross-sectoral (i.e., private and public sector, respectively) and is susceptible to a broad range of multi-level factors originating from both the mental health service system and the criminal-legal system. Influences from both of these systems can create an implementation environment that may require significant intervention adaptation to render the intervention fit or appropriate for the implementation environment. Consequently, the fact that much of the implementation science focus in correctional health research has been on contextual inquiry (i.e., understanding implementation determinants) is appropriate and expected. Given the relative nascency of leveraging implementation science within correctional health research, and the variation in types of health interventions and correctional environments (i.e., jail, prison, courts, community supervision), the focus on contextual inquiry and implementation determinants should continue.

Additionally, results show that much of the focus on implementation determinants has been on factors related to the inner setting, or the organization in which the intervention is being implemented. Although critically important, a singular focus on the organizational factors impacting intervention implementation does not account for significant external factors relevant in contextual inquiry. Notably, policy-level factors and those associated with inter-organizational relationships are significant determinants of whether and how an intervention is adopted into practice [64]. For example, recent opportunities to promote Medicaid enrollment prior to release from incarceration will impact costs and access to care in carceral settings and need to be considered in the implementation of new health interventions [65]. In addition, for interventions and implementation strategies focused on enhancing referral networks (e.g., [29, 47]) to increase service uptake, the presence, quality, and characteristics of inter-organizational relationships are critically important. Consequently, as researchers and practitioners continue to examine implementation determinants, a greater focus on domains beyond the inner setting is needed.

Increase the focus on implementation outcomes and strategies

Although contextual inquiry studies focused on implementation determinants are still needed, the field should also build on the knowledge produced by the two NIDA-funded initiatives, JCOIN and CJ-DATS. These initiatives accelerated the application of implementation science methods in correctional health interventions and focused on developing implementation strategies to enhance uptake of EBPs. Moving forward, researchers and practitioners can develop sequential study aims in which implementation determinants are studied in the first phase of research in a small pilot study and then addressed in subsequent stages, first through a pilot test of an implementation strategy and later through a larger study employing rigorous methods to test the efficacy of the implementation strategy. NIDA’s strategy of speeding up translation in correctional settings through federally funded consortium sites could be expanded to other institutes. Resulting studies from such initiatives would provide the field with invaluable information about the variation in the factors that impact implementation by fields of practice and health foci (e.g., substance use, mental health, infectious diseases) and the implementation strategies that enhance uptake of their respective EBPs. In addition to federal funding initiatives to speed up translation, researchers can consider using hybrid designs. Hybrid effectiveness-implementation designs challenge the typical sequencing of efficacy, effectiveness, and implementation research by promoting simultaneous examination of effectiveness and implementation aims [50, 66].

Standardize specification of implementation science methods

Assuming greater focus on the application of implementation science methods in correctional health research moving forward, it is imperative that researchers and practitioners standardize specification of methods in their reports and articles. Better specification will make it easier for other researchers and practitioners to understand, adapt, and apply these methods to their work and advance the research. Suggestions for better specification of implementation science methods in correctional health research articles include (1) clear justification of the use of implementation science methods, including citations; (2) clear description of the implementation focus (i.e., implementation determinants, outcomes, and strategies) as well as the correctional setting (i.e., prison, jail, court, community supervision) and health focus (e.g., mental health, substance use, infectious disease); (3) identification, justification, meaningful operationalization, and integration of selected implementation science frameworks throughout the study (e.g., include detailed descriptions of the chosen framework(s) and rationale; explain how the framework guided the study methods, such as instrument development, data collection, and data analysis; describe the degree to which the framework fit the study context; explain how the framework can aid in the interpretation of results and transferability); (4) clear definition of implementation outcomes that map onto an implementation framework; and (5) standardized specification of implementation strategies, preferably using a framework (e.g., [15, 24]). Standardizing these methods helps to align correctional health research with other health service fields and to better understand the role of the correctional context in implementation of EBPs.

Conclusion

Although application of implementation science methods in correctional health intervention research is limited, integration of these methods appears to be accelerating, likely fueled by federally funded implementation-focused research consortiums. Overall, the implementation research on correctional health interventions has largely focused on understanding the environment in which health interventions are implemented. Although focusing on these implementation determinants is necessary given the complex environment in which health interventions are implemented, the field should increase its focus on developing implementation strategies to address the known factors that impede successful implementation and should standardize the way that implementation science methods are specified in correctional health research.

Availability of data and materials

Data analyzed in this study may be made available from the corresponding author upon reasonable request.

Abbreviations

- CJ-DATS: Criminal Justice Drug Abuse Treatment Studies
- CFIR: Consolidated Framework for Implementation Research
- EBP: Evidence-based practice
- EPIS: Exploration, Preparation, Implementation, Sustainment
- JCOIN: Justice Community Opioid Innovation Network
- JJ-TRIALS: Juvenile Justice – Translational Research on Interventions for Adolescents in the Legal System
- MATICCE: MAT Implementation in Community Correctional Environments
- NIDA: National Institute on Drug Abuse
- VA QUERI: Veterans Affairs Quality Enhancement Research Initiative

References

Khan MR, Kapadia F, Geller A, Mazumdar M, Scheidell JD, Krause KD, Halkitis PN. Racial and ethnic disparities in “stop-and-frisk” experience among young sexual minority men in New York City. PLoS One. 2021;16(8):e0256201. https://doi.org/10.1371/journal.pone.0256201.

Pierson E, Simoiu C, Overgoor J, Corbett-Davies S, Jenson D, Shoemaker A, et al. A large-scale analysis of racial disparities in police stops across the United States. Nat Hum Behav. 2020;4(7):736–45. https://doi.org/10.1038/s41562-020-0858-1.

LeMasters K, Brinkley-Rubinstein L, Maner M, Peterson M, Nowotny K, Bailey Z. Carceral epidemiology: mass incarceration and structural racism during the COVID-19 pandemic. Lancet Public Health. 2022;7(3):e287–90. https://doi.org/10.1016/s2468-2667(22)00005-6.

Binswanger IA, Krueger PM, Steiner JF. Prevalence of chronic medical conditions among jail and prison inmates in the USA compared with the general population. J Epidemiol Community Health. 2009;63(11):912–9. https://doi.org/10.1136/jech.2009.090662.

Fazel S, Yoon IA, Hayes AJ. Substance use disorders in prisoners: an updated systematic review and meta-regression analysis in recently incarcerated men and women. Addiction. 2017;112(10):1725–39. https://doi.org/10.1111/add.13877.

Harzke AJ, Pruitt SL. Chronic medical conditions in criminal justice involved populations. J Health Hum Serv Adm. 2018;41(3):306–47.

Wildeman C, Wang EA. Mass incarceration, public health, and widening inequality in the USA. Lancet. 2017;389(10077):1464–74. https://doi.org/10.1016/s0140-6736(17)30259-3.

Wilper AP, Woolhandler S, Boyd JW, Lasser KE, McCormick D, Bor DH, Himmelstein DU. The health and health care of US prisoners: Results of a nationwide survey. Am J Public Health. 2009;99(4):666–72. https://doi.org/10.2105/ajph.2008.144279.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health Ment Health Serv Res. 2011;38:65–76. https://doi.org/10.1007/s10488-010-0319-7.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1). https://doi.org/10.1186/s13012-015-0242-0.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: A consolidated framework for advancing science. Implement Sci. 2009;4(1):1–15. https://doi.org/10.1186/1748-5908-4-50.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health Ment Health Serv Res. 2011;38:4–23. https://doi.org/10.1007/s10488-010-0327-7. 100 Stat. 3207, Alcohol and Drug Abuse Amendments of 1986. https://www.govinfo.gov/app/details/STATUTE-100/STATUTE-100-Pg3207.

Kirk MA, Kelley C, Yankey N, et al. A systematic review of the use of the Consolidated Framework for Implementation Research. Implement Sci. 2016;11:72. https://doi.org/10.1186/s13012-016-0437-z.

Moullin JC, Dickson KS, Stadnick NA, Rabin B, Aarons GA. Systematic review of the exploration, preparation, implementation, sustainment (EPIS) framework. Implement Sci. 2019;14(1):1–16. https://doi.org/10.1186/s13012-018-0842-6.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1):1–11. https://doi.org/10.1186/1748-5908-8-139.

Lewis CC, Klasnja P, Lyon AR, Powell BJ, Lengnick-Hall R, Buchanan G, et al. The mechanics of implementation strategies and measures: advancing the study of implementation mechanisms. Implement Sci Commun. 2022;3(1):1–11. https://doi.org/10.1186/s43058-022-00358-3.

Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136. https://doi.org/10.3389/fpubh.2018.00136.

Kerkhoff AD, Farrand E, Marquez C, Cattamanchi A, Handley MA. Addressing health disparities through implementation science—a need to integrate an equity lens from the outset. Implement Sci. 2022;17(1):1–4. https://doi.org/10.1186/s13012-022-01189-5.

National Institutes of Health. Grants & Funding. U.S. Department of Health and Human Services. 2002. Retrieved July 31, 2023, from https://grants.nih.gov/grants/guide/rfa-files/RFA-DA-02-011.html.

National Institutes of Health. Grants & Funding. U.S. Department of Health and Human Services. 2007. Retrieved July 31, 2023, from https://grants.nih.gov/grants/guide/rfa-files/RFA-DA-08-002.html#PartI.

Knight DK, Belenko S, Wiley T, Robertson AA, Arrigona N, Dennis M, et al. Juvenile Justice—Translational Research on Interventions for Adolescents in the Legal System (JJ-TRIALS): a cluster randomized trial targeting system-wide improvement in substance use services. Implement Sci. 2015;11(1):1–18.

Moher D, Liberati A, Tetzlaff J, Altman DG, The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9. https://doi.org/10.7326/0003-4819-151-4-200908180-00135.

Covidence systematic review software. Veritas Health Innovation. Melbourne, Australia; 2022. Available at www.covidence.org.

Rudd BN, Davis M, Beidas RS. Integrating implementation science in clinical research to maximize public health impact: A call for the reporting and alignment of implementation strategy use with implementation outcomes in clinical research. Implement Sci. 2020;15(1):1–11. https://doi.org/10.1186/s13012-020-01060-5.

Presseau J, McCleary N, Lorencatto F, Patey AM, Grimshaw JM, Francis JJ. Action, actor, context, target, time (AACTT): A framework for specifying behaviour. Implement Sci. 2019;14(1):102. https://doi.org/10.1186/s13012-019-0951-x.

Belenko S, Hiller M, Visher C, Copenhaver M, O’Connell D, Burdon W, et al. Policies and practices in the delivery of HIV services in correctional agencies and facilities: results from a multisite survey. J Correct Health Care. 2013;19(4):293–310. https://doi.org/10.1177/1078345813499313.

Emerson A, Allison M, Kelly PJ, Ramaswamy M. Barriers and facilitators of implementing a collaborative HPV vaccine program in an incarcerated population: a case study. Vaccine. 2020;38(11):2566–71. https://doi.org/10.1016/j.vaccine.2020.01.086.

Ferguson WJ, Johnston J, Clarke JG, Koutoujian PJ, Maurer K, Gallagher C, et al. Advancing the implementation and sustainment of medication assisted treatment for opioid use disorders in prisons and jails. Health Justice. 2019;7(1):1–8. https://doi.org/10.1186/s40352-019-0100-2.

Friedmann PD, Wilson D, Knudsen HK, Ducharme LJ, Welsh WN, Frisman L, et al. Effect of an organizational linkage intervention on staff perceptions of medication-assisted treatment and referral intentions in community corrections. J Subst Abus Treat. 2015;50:50–8. https://doi.org/10.1016/j.jsat.2014.10.001.

Hanna J, Kubiak S, Pasman E, Gaba A, Andre M, Smelson D, Pinals DA. Evaluating the implementation of a prisoner re-entry initiative for individuals with opioid use and mental health disorders: Application of the consolidated framework for implementation research in a cross-system initiative. J Subst Abus Treat. 2020;108:104–14. https://doi.org/10.1016/j.jsat.2019.06.012.

Johnson JE, Stout RL, Miller TR, Zlotnick C, Cerbo LA, Andrade JT, et al. Randomized cost-effectiveness trial of group interpersonal psychotherapy (IPT) for prisoners with major depression. J Consult Clin Psychol. 2019;87(4):392. https://doi.org/10.1037/ccp0000379.

Johnson JE, Hailemariam M, Zlotnick C, Richie F, Sinclair J, Chuong A, Stirman SW. Mixed methods analysis of implementation of Interpersonal Psychotherapy (IPT) for major depressive disorder in prisons in a Hybrid Type I randomized trial. Adm Policy Ment Health Ment Health Serv Res. 2020;47:410–26. https://doi.org/10.1007/s10488-019-00996-1.

Lehman WE, Greener JM, Rowan-Szal GA, Flynn PM. Organizational readiness for change in correctional and community substance abuse programs. J Offender Rehabil. 2012;51(1–2):96–114. https://doi.org/10.1080/10509674.2012.633022.

Mitchell SG, Willett J, Swan H, Monico LB, Yang Y, Patterson YO, et al. Defining success: insights from a random assignment, multisite study of implementing HIV prevention, testing, and linkage to care in US Jails and prisons. AIDS Educ Prev. 2015;27(5):432–45. https://doi.org/10.1521/aeap.2015.27.5.432.

Oser CB, Tindall MS, Leukefeld CG. HIV testing in correctional agencies and community treatment programs: The impact of internal organizational structure. J Subst Abus Treat. 2007;32(3):301–10. https://doi.org/10.1016/j.jsat.2006.12.016.

Pankow J, Willett J, Yang Y, Swan H, Dembo R, Burdon WM, et al. Evaluating Fidelity to a modified NIATx process improvement strategy for improving HIV Services in Correctional Facilities. J Behav Health Serv Res. 2018;45:187–203. https://doi.org/10.1007/s11414-017-9551-1.

Pearson FS, Shafer MS, Dembo R, del Mar Vega-Debién G, Pankow J, Duvall JL, et al. Efficacy of a process improvement intervention on delivery of HIV services to offenders: A multisite trial. Am J Public Health. 2014;104(12):2385–91. https://doi.org/10.2105/ajph.2014.302035.

Pinals DA, Gaba A, Shaffer PM, Andre MA, Smelson DA. Risk–need–responsivity and its application in behavioral health settings: A feasibility study of a treatment planning support tool. Behav Sci Law. 2021;39(1):44–64. https://doi.org/10.1002/bsl.2499.

Prendergast M, Welsh WN, Stein L, Lehman W, Melnick G, Warda U, et al. Influence of organizational characteristics on success in implementing process improvement goals in correctional treatment settings. J Behav Health Serv Res. 2017;44:625–46. https://doi.org/10.1007/s11414-016-9531-x.

Shelton D, Reagan L, Weiskopf C, Panosky D, Nicholson M, Diaz D. Baseline indicators and implementation strategies in a statewide correctional nurse competencies program: mid-year report. J Contin Educ Nurs. 2015;46(10):455–61. https://doi.org/10.3928/00220124-20150918-03.

Showalter D, Wenger LD, Lambdin BH, Wheeler E, Binswanger I, Kral AH. Bridging institutional logics: Implementing naloxone distribution for people exiting jail in three California counties. Soc Sci Med. 2021;285:114293. https://doi.org/10.1016/j.socscimed.2021.114293.

Swan H, Hiller ML, Albizu-Garcia CE, Pich M, Patterson Y, O’Connell DJ. Efficacy of a process improvement intervention on inmate awareness of HIV services: A multi-site trial. Health Justice. 2015;3(1):1–7. https://doi.org/10.1186/s40352-015-0023-5.

Van Deinse TB, Bunger A, Burgin S, Wilson AB, Cuddeback GS. Using the consolidated framework for implementation research to examine implementation determinants of specialty mental health probation. Health Justice. 2019;7:1–12. https://doi.org/10.1186/s40352-019-0098-5.

Van Deinse TB, Crable EL, Dunn C, Weis J, Cuddeback G. Probation officers’ and supervisors’ perspectives on critical resources for implementing specialty mental health probation. Adm Policy Ment Health Ment Health Serv Res. 2021;48:408–19. https://doi.org/10.1007/s10488-020-01081-8.

Visher CA, Hiller M, Belenko S, Pankow J, Dembo R, Frisman LK, et al. The effect of a local change team intervention on staff attitudes towards HIV service delivery in correctional settings: A randomized trial. AIDS Educ Prev. 2014;26(5):411–28. https://doi.org/10.1521/aeap.2014.26.5.411.

Visher CA, Yang Y, Mitchell SG, Patterson Y, Swan H, Pankow J. Understanding the sustainability of implementing HIV services in criminal justice settings. Health Justice. 2015;3(1):1–9. https://doi.org/10.1186/s40352-015-0018-2.

Welsh WN, Knudsen HK, Knight K, Ducharme L, Pankow J, Urbine T, et al. Effects of an organizational linkage intervention on inter-organizational service coordination between probation/parole agencies and community treatment providers. Adm Policy Ment Health Ment Health Serv Res. 2016;43:105–21. https://doi.org/10.1007/s10488-014-0623-8.

White Hughto JMW, Clark KA, Altice FL, Reisner SL, Kershaw TS, Pachankis JE. Improving correctional healthcare providers’ ability to care for transgender patients: Development and evaluation of a theory-driven cultural and clinical competence intervention. Soc Sci Med. 2017;195:159–69. https://doi.org/10.1016/j.socscimed.2017.10.004.

Zielinski MJ, Allison MK, Brinkley-Rubinstein L, Curran G, Zaller ND, Kirchner JAE. Making change happen in criminal justice settings: Leveraging implementation science to improve mental health care. Health Justice. 2020;8(1):1–10. https://doi.org/10.1186/s40352-020-00122-6.

Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: Combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217. https://doi.org/10.1097/mlr.0b013e3182408812.

Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health Ment Health Serv Res. 2009;36:24–34. https://doi.org/10.1007/s10488-008-0197-4.

Simpson DD, Flynn PM. Moving innovations into treatment: A stage-based approach to program change. J Subst Abus Treat. 2007;33(2):111–20. https://doi.org/10.1016/j.jsat.2006.12.023.

Rycroft-Malone J. The PARIHS framework—a framework for guiding the implementation of evidence-based practice. J Nurs Care Qual. 2004;19(4):297–304. https://doi.org/10.1097/00001786-200410000-00002.

Lundblad JP. A review and critique of Rogers’ diffusion of innovation theory as it applies to organizations. Organ Dev J. 2003;21(4):50.

Rogers EM. Diffusion of innovations. 4th ed. New York: The Free Press; 1995.

Feldstein AC, Glasgow RE. A Practical, Robust, Implementation and Sustainability Model (PRISM) for integrating research findings into practice. Jt Comm J Qual Patient Saf. 2008;34(4):228–43. https://doi.org/10.1016/s1553-7250(08)34030-6.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):1–14. https://doi.org/10.1186/s13012-015-0209-1.

Binswanger IA, Redmond N, Steiner JF, Hicks LS. Health disparities and the criminal justice system: an agenda for further research and action. J Urban Health. 2012;89:98–107. https://doi.org/10.1007/s11524-011-9614-1.

Bui J, Wendt M, Bakos A. Understanding and addressing health disparities and health needs of justice-involved populations. Public Health Rep. 2019;134(1_suppl):3S-7S. https://doi.org/10.1177/0033354918813089.

Carson EA, Kluckow R. Correctional populations in the United States, 2021 – Statistical tables. Bureau of Justice Statistics; 2023. NCJ 305542.

Sawyer W. Visualizing the racial disparities in mass incarceration. Prison Policy Initiative. 2020;27.

Hickson A, Purbey R, Dean L, et al. A consequence of mass incarceration: county-level association between jail incarceration rates and poor mental health days. Health Justice. 2022;10:31. https://doi.org/10.1186/s40352-022-00194-6.

Phelps MS, Osman IH, Robertson CE, et al. Beyond “pains” and “gains”: untangling the health consequences of probation. Health Justice. 2022;10:29. https://doi.org/10.1186/s40352-022-00193-7.

Crable EL, Lengnick-Hall R, Stadnick NA, Moullin JC, Aarons GA. Where is “policy” in dissemination and implementation science? Recommendations to advance theories, models, and frameworks: EPIS as a case example. Implement Sci. 2022;17(1):80. https://doi.org/10.1186/s13012-022-01256-x.

California Department of Health Care Services. California set to become first state in nation to expand Medicaid services for justice-involved individuals [Press release]. 2023. https://www.dhcs.ca.gov/formsandpubs/publications/oc/Documents/2023/23-02-Justice-Involved-Initiative-1-26-23.pdf.

Landes SJ, McBain SA, Curran GM. Reprint of: an introduction to effectiveness-implementation hybrid designs. Psychiatry Res. 2020;283:112630. https://doi.org/10.1016/j.psychres.2019.112630.

Acknowledgements

The authors gratefully acknowledge Sophia Durant, MSW, MPH, and Lia Kaz, MSW, for their contributions as research assistants in the early phases of this study. We also acknowledge support from the Lifespan/Brown University Criminal Justice Research Training Program.

Funding

Drs. Van Deinse, Zielinski, Holliday, and Crable are alumni of the Lifespan/Brown University Criminal Justice Research Training Program on Substance Use, HIV, and Co-morbidities (R25DA037190), which provided funding for manuscript publication. Manuscript preparation was also supported by K01MH129619 (Van Deinse), K23DA048162 (Zielinski), K01DA056838 (Crable), and K23MH129321 (Rudd), which provided individual salary support for the named co-authors. Dr. Rudd is a fellow, and Drs. Zielinski and Crable are alumni, of the Implementation Research Institute (IRI) at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (R25 MH080916).

Author information

Contributions

TBV initiated and conceptualized the study and conducted the systematic search. All authors (TBV, MJZ, SBH, BNR, ELC) conducted title and abstract review, full-text review, and data abstraction. All authors contributed significantly to manuscript development.

Ethics declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Van Deinse, T.B., Zielinski, M.J., Holliday, S.B. et al. The application of implementation science methods in correctional health intervention research: a systematic review. Implement Sci Commun 4, 149 (2023). https://doi.org/10.1186/s43058-023-00521-4
