Abstract

Background

Recent advances in computing speed and machine learning have profoundly changed artificial intelligence (AI). To evaluate the value of AI in the detection and diagnosis of BIRADS 4 and 5 breast lesions visible on digital mammography images, we compared it with a radiologist. The reference standard was tissue core biopsy and pathology. A total of 130 patients with 134 BIRADS 4 or 5 mammography lesions were included in the study, and all relevant digital mammography images were exported to an AI software system.

Objectives

The goal of this investigation was to determine how well artificial intelligence performs in digital mammography when compared to a radiologist in identifying and diagnosing BIRADS 4 and 5 breast lesions.

Methods

A total of 134 BIRADS 4 and 5 breast lesions in 130 female patients were identified on digital mammography in both the craniocaudal and mediolateral oblique planes. All mammograms were transferred to an AI software system for analysis, and the results were compared against the histopathological results, which served as the standard of reference for all lesions.

Results

Artificial intelligence was more accurate (90.30%) than the radiologist (82.84%) and showed a higher positive predictive value (94.5% versus 82.8%) for suspecting malignancy in BIRADS 4 and 5 lesions on digital mammography, while the radiologist achieved higher sensitivity (100%) than AI (93.7%) in detecting malignancy in these lesions.

Conclusions

The radiologist was more sensitive than AI in detecting malignancy in BIRADS 4 and 5 lesions, but AI had a higher positive predictive value. However, specificity and negative predictive value could not be assessed for the radiologist and hence could not be compared with the AI values, because the inclusion criteria of the study did not include BIRADS 1, 2 and 3, so lesions that appeared benign on digital mammography were not available to measure specificity and negative predictive value. All in all, based on the available data, AI was more accurate than the radiologist in suspecting malignancy on digital mammography. AI can work hand in hand with human experience to provide the best health-care service in screening and/or diagnosing patients with breast cancer.

Background

The first step in the diagnostic pathway to reduce breast cancer-related deaths is adequate access to breast cancer detection imaging [1]. Digital mammography is the most effective method for finding malignant breast lesions. As patients and health-care professionals become more aware of the limitations of digital mammography, particularly in dense breasts, additional modalities for breast cancer screening are becoming increasingly important [2].

Two-dimensional digital mammography images cause tissue superposition, which lowers specificity, especially in dense breasts, which make up around half of screened breasts and are the reason for one-third of missed malignancies. Normal tissues may mimic a worrisome lesion in these mammographic images [3].

Radiologists use the American College of Radiology Breast Imaging Reporting and Data System categories frequently in their visual analysis while interpreting mammograms. For breast parenchymal density evaluation, lesion detection, lesion characterization and risk prediction, AI may be helpful [4].

The main goal of this study was to assess the effectiveness of AI in comparison with a stand-alone radiologist in the detection and diagnosis of BIRADS 4 and 5 breast lesions that were visible on digital mammography images.

Methods

This study was carried out by the women's imaging unit of our department between March 2022 and December 2022. The data were collected retrospectively by reviewing the PACS system in our unit and selecting lesions assigned to BIRADS 4 and 5 on mammography, categorized according to the ACR BIRADS 5th edition; the selected mammograms were then exported to the AI software and analyzed.

A total of 130 patients with 134 breast lesions were included in this study (four patients had bilateral breast lesions). The patients ranged in age from 28 to 84 years (mean age, 48.89 ± 12 years).

Inclusion criteria: female patients with or without breast complaints (i.e., including screening patients) who underwent digital mammography that revealed a suspicious or malignant-looking breast lesion, i.e., one classified as BIRADS 4 or BIRADS 5. All lesions were histopathologically analyzed.

Exclusion criteria: females with normal digital mammography (BIRADS 1); females with BIRADS 2 (benign findings) or BIRADS 3 (probably benign) breast lesions; pregnant females, as digital mammography is relatively contraindicated in pregnancy; females with breast implants, to avoid unnecessary breast compression; and patients with inconclusive or lost histopathology reports.

All included patients presented either for screening or with breast complaints and underwent standard digital mammography. Ultrasound-guided or stereotactic biopsy was performed for all included lesions. In patients with bilateral breast lesions, a biopsy was taken from each lesion.

All relevant mammographic images were exported to the AI software system. Pathology was used as the reference standard in correlating the AI and digital mammography (DM) outcomes.

Image interpretation was carried out by an experienced radiologist with at least 8 years of experience in breast imaging and about 2 years of experience in AI-aided reading. The final diagnosis and clinical data were concealed from the radiologist.

Breast examinations were performed with a digital mammography system (Amulet Innovality, Fujifilm Global, Japan) supported by a five-megapixel "Bellus" workstation. For each breast, the two standard views in the mediolateral oblique and craniocaudal planes were acquired. An average digital mammography examination with two views of each breast delivers a total dose of roughly 0.4 millisieverts (mSv).

For the Fujifilm digital mammography system, AI images were generated from the digital mammography images by Lunit INSIGHT MMG (Korea, version 2019). This AI program produces a score (suspicion of malignancy) on a scale of 0–100, with a score of less than 10% labeled "Low" (no scoring percentage is registered). For an input digital mammography image (i.e., one of the four views), the AI system provides pixel-level abnormality scores as a heat map and an abnormality score, which is the maximum of the pixel-level abnormality scores. Based on this per-image analysis, the resulting diagnostic support software provides four-view heat maps and an abnormality score per breast (i.e., the maximum of the craniocaudal and mediolateral oblique abnormality scores) for each input mammogram to detect breast lesions. The abnormality scores range from 0 to 100. Sensitivity and specificity were estimated with a 10% cutoff (i.e., an abnormality score of 10% or more was considered positive; otherwise, negative).
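The per-breast scoring rule described above can be sketched as follows. This is only an illustration of the stated logic (maximum over the craniocaudal and mediolateral oblique views, with scores under 10 reported as "Low"); the function names `breast_score` and `display_label` and the example scores are hypothetical and are not part of the Lunit INSIGHT MMG software.

```python
def breast_score(cc_score: float, mlo_score: float) -> float:
    """Per-breast abnormality score: the higher of the two view-level scores (0-100)."""
    for s in (cc_score, mlo_score):
        if not 0 <= s <= 100:
            raise ValueError("abnormality scores are expected on a 0-100 scale")
    return max(cc_score, mlo_score)


def display_label(score: float, low_cutoff: float = 10.0) -> str:
    """Scores below the 10% cutoff are reported as "Low" with no percentage."""
    return "Low" if score < low_cutoff else f"{score:g}%"


# Hypothetical view-level scores for one breast (not study data).
score = breast_score(cc_score=72, mlo_score=64)
print(display_label(score))
print(display_label(breast_score(4, 7)))
```

Taking the maximum means that a lesion scored highly in only one view still drives the per-breast score.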

High-frequency linear probe (7–12 MHz) ultrasound equipment (LOGIQ S8-GE) was used for ultrasound-guided biopsies.

Statistical analysis: Data were coded and entered using the Statistical Package for the Social Sciences (SPSS) version 28 (IBM Corp., Armonk, NY, USA). Quantitative data were summarized using the mean, standard deviation, median, minimum and maximum; categorical data were summarized using frequency (count) and relative frequency (%). The non-parametric Mann–Whitney test was used to compare quantitative variables [5]. The Chi-square (χ2) test was used to compare categorical data; when the expected frequency was less than 5, the exact test was used instead [6]. Standard diagnostic indices, including sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV) and diagnostic efficacy, were calculated as described by Galen [7]. P-values less than 0.05 were considered statistically significant.
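For reference, the standard diagnostic indices named above are conventionally defined from the counts of true positives (TP), false positives (FP), true negatives (TN) and false negatives (FN) against the pathology reference. The notation below is an assumption introduced for illustration, and "diagnostic efficacy" is assumed here to mean overall accuracy:

```latex
\begin{align*}
\text{Sensitivity} &= \frac{TP}{TP + FN}, &
\text{Specificity} &= \frac{TN}{TN + FP}, \\
\text{PPV} &= \frac{TP}{TP + FP}, &
\text{NPV} &= \frac{TN}{TN + FN}, \\
\text{Accuracy} &= \frac{TP + TN}{TP + FP + TN + FN}.
\end{align*}
```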

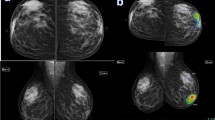

Mansour et al. [8] showed that 97% (n = 623/642) of the malignant-looking and suspicious lesions classified by radiologists as BIRADS 4 or 5 were confirmed to be malignant by pathology, with AI abnormality scores ranging from 59 to 100%. We therefore used 59% as the cutoff value for malignancy in our study (Figs. 1 and 2).

Fig. 1 A 61-year-old female patient presented with left bloody nipple discharge. a Digital mammography revealed an irregularly shaped dense lesion with indistinct margins at the outer central region of the left breast, associated with an adjacent tubular opacity extending to the nipple, and was given a BIRADS 4 score. b AI highlighted an area at the outer central region with a 60% risk of malignancy. Pathology was invasive mucoid carcinoma. Although the AI score of 60% is borderline, it is still considered malignant by the AI, so both the AI and the radiologist suspected a malignant lesion; therefore, AI was non-inferior to the radiologist

Fig. 2 A 43-year-old female patient presented with bilateral breast pain. a and b Digital mammography with magnification revealed grouped micro-calcifications at the outer central retro-areolar region of the left breast, which was given BIRADS 4a. c AI highlighted the same area with a 94% score, denoting high risk of malignancy. Pathology result was fibrocystic changes of the breast. AI and the radiologist both gave false-positive results

Results

The age of the included patients ranged from 28 to 84 years, with a mean of 48.9 ± 12 (standard deviation). The most important clinical data collected were obesity (48.4%), prolonged use of contraception (17.6%) and positive family history (12.3%).

Regarding the side of the lesion, 44.00% (n = 59) of lesions were right-sided breast lesions, and 56.00% (n = 75) were left-sided breast lesions.

The majority of patients had "ACR b" mammographic breast density (45.5%) and then “ACR c” (43.4%).

Pathologically proven benign lesions were 23 (17.2%) while pathologically proven malignant lesions were 111 (82.8%), Table 1.

Invasive ductal carcinoma (IDC), the most prevalent histopathological type, made up 63.96% (N = 71) of the malignant lesions included in this study, followed by invasive lobular carcinoma (ILC) at 18.02% (N = 22), ductal carcinoma in situ (DCIS) at 12.61% (N = 14) and other breast malignancies at 3.60% (N = 4) (Figs. 3, 4 and 5).

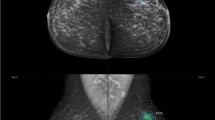

Fig. 3 A 59-year-old female presented for screening. a Digital mammography revealed focal asymmetry with distortion at the lower central region of the right breast, BIRADS 4. b AI highlighted an area with a 93% risk of malignancy at the lower central and upper central regions of the right breast. Pathology result was IDC. AI and the radiologist matched the pathology results

Fig. 4 A 34-year-old female patient presented with a left breast lump. a Digital mammography revealed an irregularly shaped dense mass lesion with spiculated margins at the lower outer quadrant of the left breast, with related parenchymal distortion and ipsilateral suspicious axillary lymph nodes, BIRADS 5 lesion. b AI highlighted the same area, as well as the suspicious axillary lymph node, with a 99% risk of malignancy. Pathology result was IDC. AI and the radiologist matched the pathology results

Fig. 5 A 69-year-old patient presented with a left breast lump. a Digital mammography showed an irregularly shaped dense mass lesion with spiculated margins, deeply seated at the lower outer quadrant of the left breast, BIRADS 5 lesion. b AI highlighted an area in the left breast with a 52% risk of malignancy, which is interpreted as low risk of malignancy on its scale. Pathology result was invasive lobular carcinoma. AI mismatched the pathology results

The BIRADS score was given to each breast according to ACR BIRADS lexicon. All cases had a high BIRADS score as 42 breast lesions (31.3%) had a BIRADS 5 score and 92 breast lesions (68.7%) had a BIRADS 4 score, Table 2.

The radiologist reading digital mammography demonstrated a sensitivity of 100% in detecting malignancy in BIRADS 4 and 5 lesions, with a PPV of 82.84% and an accuracy of 82.84%, while AI demonstrated a sensitivity of 93.69%, with a PPV of 94.55% and an accuracy of 90.3%. The specificity of AI was 73.91% and its NPV was 70.83%. Specificity and NPV could not be assessed for the radiologist, as no lesion was read as negative, and hence could not be compared with the AI values: the inclusion criteria of the study did not include BIRADS 1, 2 and 3, so lesions that appeared benign on digital mammography were not available to measure specificity and negative predictive value (Table 3).
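These indices can be reproduced from the lesion-level counts reported in this study for AI at the 59% cutoff (104 true positives, 6 false positives, 17 true negatives, 7 false negatives) and for the radiologist (111 true positives, 23 false positives, no negative readings). The sketch below is a minimal illustration; the function names `ratio` and `diagnostic_indices` are hypothetical, and returning `None` for an undefined ratio is a choice made here, not the authors' method.

```python
from typing import Optional


def ratio(numerator: int, denominator: int) -> Optional[float]:
    """Percentage, or None when the denominator is zero (index undefined)."""
    return None if denominator == 0 else 100.0 * numerator / denominator


def diagnostic_indices(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Standard diagnostic indices (in percent) from confusion-matrix counts."""
    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
    }


# Counts as reported in this study (lesion level, pathology as reference).
ai = diagnostic_indices(tp=104, fp=6, tn=17, fn=7)
radiologist = diagnostic_indices(tp=111, fp=23, tn=0, fn=0)

for name, result in (("AI", ai), ("Radiologist", radiologist)):
    shown = {k: (None if v is None else round(v, 2)) for k, v in result.items()}
    print(name, shown)
```

With no negative readings, the radiologist's NPV has a zero denominator and is undefined, and the specificity computes to zero by construction rather than reflecting reader performance.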

In our study, 23 cases were proved benign by pathology results, 8/23 were inflammatory cases (breast abscess and granulomatous mastitis), 8/23 were focal fibroadenosis/fibrocystic diseases or stromal fibrosis, 3/23 were fat necrosis, 3/23 were fibroadenoma with hyalinosis and 1/23 was sclerosing adenosis, Table 4.

Most of the benign lesions presented in digital mammography as asymmetry with distortion (n = 16/23) while the rest presented as mass with obscured or indistinct margins (n = 7/23) (Figs. 6 and 7).

Fig. 6 A 51-year-old female patient presented with a left breast lump. a Digital mammography revealed a rounded dense mass lesion with an obscured margin at the inner central region of the left breast, BIRADS 4. b AI gave a score of 11%, which denotes low risk of malignancy, and showed no color map because of the very low score. Pathology was a complicated benign cyst. The radiologist mismatched the pathology result

Fig. 7 A 41-year-old female patient presented with a right breast lump. a Digital mammography revealed focal asymmetry with distortion at the upper outer quadrant of the right breast, BIRADS 4. b AI highlighted the same area with a 54% score, denoting low risk of malignancy. Pathology was granulomatous mastitis with micro-abscesses. The radiologist mismatched the pathology results

Considering pathologically benign lesions, the AI matches the pathology results by giving low malignancy score (< 59) in 17/23 of total benign lesions and gives false-positive results in 6/23 of lesions.

Most of the mismatched benign cases (false positives) were inflammatory cases, representing 5/6 of the mismatched benign cases, while 1/6 was fibrocystic disease of the breast.

Artificial intelligence matches the pathology results in 7/8 of focal fibrocystic/fibroadenosis and 3/8 of inflammatory cases, 3/3 of fat necrosis cases and 3/3 of fibroadenomas.
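As an illustration of how the 59% cutoff is applied, the sketch below classifies the seven illustrative cases described in the figure legends of this article, using the AI scores and pathology results stated there. The names `CUTOFF`, `Case` and `classify` are hypothetical, and treating a score of exactly 59 as positive is an assumption based on the "< 59" rule for low scores.

```python
from typing import NamedTuple

CUTOFF = 59  # AI scores below this value are read as low risk


class Case(NamedTuple):
    label: str
    ai_score: int
    malignant: bool  # pathology result (reference standard)


def classify(case: Case, cutoff: int = CUTOFF) -> str:
    """Compare the thresholded AI score with the pathology result."""
    ai_positive = case.ai_score >= cutoff
    if ai_positive:
        return "true positive" if case.malignant else "false positive"
    return "false negative" if case.malignant else "true negative"


# Scores and pathology as given in the figure legends of this article.
cases = [
    Case("61 y, invasive mucoid carcinoma", 60, True),
    Case("43 y, fibrocystic changes", 94, False),
    Case("59 y, IDC", 93, True),
    Case("34 y, IDC", 99, True),
    Case("69 y, invasive lobular carcinoma", 52, True),
    Case("51 y, complicated benign cyst", 11, False),
    Case("41 y, granulomatous mastitis", 54, False),
]

for case in cases:
    print(f"{case.label}: score {case.ai_score} -> {classify(case)}")
```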

We also calculated our own AI cutoff value for malignant lesions, which was found to be 62%, with a sensitivity of 92.8% and a specificity of 82.6%.
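The text does not state how this cutoff was derived. One common criterion for choosing a cutoff is Youden's index (J = sensitivity + specificity - 1); the sketch below merely compares the two operating points reported in this study under that assumed criterion and does not reproduce the authors' calculation. The function name `youden_j` is hypothetical.

```python
def youden_j(sensitivity_pct: float, specificity_pct: float) -> float:
    """Youden's J statistic from sensitivity and specificity given in percent."""
    return sensitivity_pct / 100 + specificity_pct / 100 - 1


# Operating points reported in this study: cutoff -> (sensitivity %, specificity %)
reported = {
    59: (93.69, 73.91),
    62: (92.8, 82.6),
}

for cutoff, (sens, spec) in reported.items():
    print(f"cutoff {cutoff}%: J = {youden_j(sens, spec):.3f}")

# Cutoff with the larger J among the two reported operating points.
best = max(reported, key=lambda c: youden_j(*reported[c]))
print("higher J at cutoff:", best)
```

Under this assumed criterion, the small loss in sensitivity at 62% is outweighed by the gain in specificity.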

Discussion

Cancer is one of the leading causes of death worldwide. In 2008, malignant diseases were estimated to have killed 8 million people; by 2030, that figure is predicted to increase to 11 million. Breast cancer is the most common cancer in women and one of their main causes of death [9].

According to reports, employing screening techniques to find breast cancer early enhances prognosis and reduces the rates of death and morbidity. The most reliable and efficient screening tool for finding breast lesions at an early stage is digital mammography [10].

In a breast cancer screening program, AI-based screening could identify normal, moderate-risk and suspicious mammograms, which could lessen the workload of the radiologist [11].

This study compared the performance of the AI with that of the radiologist in detecting malignant breast lesions in cases with BIRADS 4 and BIRADS 5 breast lesions in digital mammography, finally referring to the histopathology results as the standard of reference and definite diagnosis.

Most of the studies in the literature showed the general performance of AI in digital mammography compared to other modalities. In our study, we focused on BIRADS 4 and 5 lesions in particular.

In our study, 134 lesions were included, of which 111 were proven malignant and 23 proven benign. The patients' ages ranged from 28 to 84 years (mean age 48.89 ± 11.99).

Regarding the pathological subtypes: IDC was seen in 63.96% of cases, ILC was 18.02%, DCIS was 12.61% while other breast malignancies were 3.60% in our study.

These results are in accordance with those of Raafat et al. [12], whose study of 2169 malignant breast lesions showed that IDC was seen in 76% of cases, ILC in 10.65% and DCIS in 3.9%, while other breast malignancies accounted for 9.4%.

In our study in BIRADS 4 and BIRADS 5 lesions, the radiologist sensitivity in detecting malignancy was 100.00% while the AI sensitivity in detecting malignancy was 93.69%. Radiologist was found to be more sensitive than AI in detecting malignancy in BIRADS 4 and 5 lesions; but AI had a higher positive predictive value (94.55%) than the radiologist positive predictive value (82.84%).

In the Danish Capital Region breast cancer screening program, Lauritzen et al. [11] studied 114 000 women, and their findings agreed with ours: the radiologist's screening sensitivity was 70.8% (791 of 1118; 95% CI: 68.0, 73.5), while the AI-aided screening sensitivity was 69.7% (779 of 1118; 95% CI: 66.9, 72.4).

Our results differed from those of a retrospective multireader multicase study by Rodríguez-Ruiz et al. [13], in which screening digital mammographic examinations from 240 women were read by qualified radiologists both with and without the help of AI. Sensitivity increased with AI aid (86% [86 of 100] vs. 83% [83 of 100]; P = 0.046), which supports the efficacy of combining the radiologist with AI as a form of double reading.

Another large study by Rodriguez-Ruiz et al. [14], with a total of 2652 examinations (653 malignant) interpreted by 101 radiologists, disagreed with our results, as AI showed greater sensitivity than 55 of 95 radiologists (57.9%).

Our results also did not agree with the study by Raafat et al. [12], conducted on 123 female patients, in which AI outperformed digital mammography in sensitivity for identifying cancerous breast lesions: AI had a sensitivity of 96.6% and a false-negative rate of 3.4%, compared with 87.3% and 12.7%, respectively, for digital mammography.

Lehman et al. [15] studied CAD (computer-aided detection) in 323 973 women from 2003 to 2009, with mammograms interpreted by 271 radiologists from 66 institutions in the Breast Cancer Surveillance Consortium; their results also differed from ours. The sensitivity of digital mammography was 85.3% (95% CI, 83.6%–86.9%) with CAD and 87.3% (95% CI, 84.5%–89.7%) without it.

In the study by Kim et al. [16], 320 mammograms were independently acquired from two institutions and evaluated by 14 radiologists as readers. Radiologists were less sensitive than AI in finding cancers presenting as a mass (46 [78%] vs. 53 [90%] of 59 cancers detected; p = 0.044) or as distortion or asymmetry (10 [50%] vs. 18 [90%] of 20 cancers detected; p = 0.023).

Upon correlation with the final diagnosis, the AI results were as follows: 104/134 (77.6%) lesions were true positive, 6/134 (4.5%) were false positive, 17/134 (12.7%) were true negative and 7/134 (5.2%) were false negative.

False-negative lesions on AI (n = 7) were invasive ductal carcinoma (n = 5) and invasive lobular carcinoma (n = 2), while false-positive lesions on AI (n = 6) were breast abscess (n = 3), granulomatous mastitis (n = 2) and fibrocystic disease (n = 1).

True-positive results were 111/134 (82.8%) by radiologist in digital mammography while false-positive results were 23/134 (17.2%).

All the cases included in our study were classified as BIRADS 4 or 5 by the radiologist on digital mammography; we did not include BIRADS 3, 2 or 1 cases. That is why 100% of the pathologically proven benign cases were false positives for the radiologist, and also why the radiologist had no true-negative or false-negative cases. This explains the radiologist's high sensitivity.
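Written out with the counts from this study (111 malignant and 23 benign lesions, all read as BIRADS 4 or 5), the radiologist's indices follow directly from the absence of negative readings (FN = TN = 0):

```latex
\begin{align*}
\text{Sensitivity} &= \frac{TP}{TP + FN} = \frac{111}{111 + 0} = 100\%, \\
\text{Specificity} &= \frac{TN}{TN + FP} = \frac{0}{0 + 23} = 0\%, \\
\text{PPV} &= \frac{TP}{TP + FP} = \frac{111}{111 + 23} \approx 82.84\%, \\
\text{NPV} &= \frac{TN}{TN + FN} = \frac{0}{0 + 0} \quad \text{(undefined)}.
\end{align*}
```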

However, this high false-positive rate for the radiologist can be attributed to two factors. First, the inclusion criteria selected cases that the radiologist considered highly suspicious (BIRADS 4 and 5). Second, to ensure a fair comparison between the AI and the radiologist, the radiologist in our study did not use ultrasound, previous imaging (if available) or the patient's clinical data or complaint (e.g., fever, inflammation, postoperative status, past medical or surgical history) when deciding on the BIRADS score for each mammogram. When the benign cases were reviewed again with clinical data and complementary ultrasound (US) assessment, as is done in clinical practice, almost all of the pathologically proven benign cases were given a BIRADS 3 classification rather than BIRADS 4 or 5.

Overall, AI misdiagnosed 13 of 134 cases compared with 23 of 134 for the radiologist. AI therefore demonstrated a higher accuracy (90.3% versus 82.84% for the radiologist reading digital mammography) and a higher specificity (73.91% versus 0% for the radiologist) in BIRADS 4 and BIRADS 5 lesions.

Our results matched those of Lotter et al. [17], whose study of breast cancer detection in digital mammography using an annotation-efficient deep learning approach showed that, at the average reader sensitivity, the model (AI) achieved an absolute increase in specificity of 24.0% (95% CI: 17.4–30.4%; P < 0.0001).

Another study done by Schaffter et al. [18] agreed with our results and showed that combining AI algorithms and radiologist assessments resulted in a higher area under the curve of 0.942 and achieved a significantly improved specificity (92.0%) at the same sensitivity and stated that AI algorithms combined with radiologist assessment in a single-reader screening environment improved overall accuracy.

These findings differed from the results of Marinovich et al. [19], who showed that the stand-alone accuracy of AI was lower than that of radiologists who interpreted the mammograms in practice and that AI had lower specificity than the radiologists (0.81 [95% CI: 0.81–0.81] versus 0.97 [95% CI: 0.97–0.97]).

We observed that the AI software almost always gives a different score for each view, in which case we considered the higher score. In most cases, though not all, the score was higher in the CC view. We attribute this to the breast being thicker in the CC view because of less compression than in the MLO view, which increases the summation of shadows and tissue overlap, so lesions appear denser and consequently receive a higher AI score.

Limitations

In this study, neither specificity nor negative predictive value could be assessed for the radiologist, and hence they could not be compared with the AI values. The inclusion criteria did not include BIRADS 1, 2 and 3, so lesions that appeared benign on digital mammography were not available to measure specificity and negative predictive value. We consider this a limitation of our study.

Most of the false-positive cases detected by the mammogram were benign inflammatory lesions, which draws our attention to the importance of clinical history; however, we could not reach a solid conclusion or comment on its effect on the sensitivity due to the small number of these cases included in our study.

The limited number of cases included in our study also did not allow us to find a proper correlation or explanation for the false-negative cases by AI in our study.

Conclusions

All in all, based on the available data in our study, AI was found to be more accurate (90.30%) than the radiologist (82.84%) in suspecting malignancy on digital mammography. This result is partly explained by the fact that the essential role of ultrasound and clinical history, which is a cornerstone of the radiologist's performance, was omitted in our study; when the radiologist was restricted to the same input as the computer, the computer performed better.

Availability of data and materials

The corresponding author is responsible for sending the user data and materials upon request.

Abbreviations

- AI: Artificial intelligence
- AUC: Area under curve
- BIRADS: Breast imaging reporting and data system
- CAD: Computer-aided detector
- CC: Craniocaudal
- DCIS: Ductal carcinoma in situ
- DL: Deep learning
- IDC: Invasive duct carcinoma
- ILC: Invasive lobular carcinoma
- MLO: Mediolateral oblique
- MMG: Digital mammography
- mSv: MilliSievert
- NPV: Negative predictive value
- PPV: Positive predictive value
- US: Ultrasound

References

Sood R, Rositch AF, Shakoor D, Ambinder E, Pool KL, Pollack E, Mollura DJ, Mullen LA, Harvey SC (2019) Ultrasound for breast cancer detection globally: a systematic review and meta-analysis. J Glob Oncol 5:1–17. https://doi.org/10.1200/JGO.19.00127

Geisel J, Raghu M, Hooley R (2018) The role of ultrasound in breast cancer screening: the case for and against ultrasound. Semin Ultrasound CT MR 39(1):25–34. https://doi.org/10.1053/j.sult.2017.09.006

Sechopoulos I, dos Reis CS (2022) Digital mammography equipment. In: Digital mammography: A Holistic Approach (pp. 199–216). Springer. https://doi.org/10.1007/978-3-031-10898-3_18

Yoon JH, Kim EK (2021) Deep learning-based artificial intelligence for digital mammography. Korean J Radiol 22(8):1225–1239. https://doi.org/10.3348/kjr.2020.1210

Chan YH (2003) Biostatistics 102: quantitative data–parametric & non-parametric tests. Singapore Med J 44(8):391–396

Chan YH (2003) Biostatistics 103: qualitative data - tests of independence. Singapore Med J 44(10):498–503

Galen RS (1980) Predictive value and efficiency of laboratory testing. Pediatr Clin North Am 27(4):861–869. https://doi.org/10.1016/s0031-3955(16)33930-x

Mansour S, Kamal R, Hashem L, AlKalaawy B (2021) Can artificial intelligence replace ultrasound as a complementary tool to mammogram for the diagnosis of the breast cancer? Br J Radiol 94(1128):20210820. https://doi.org/10.1259/bjr.20210820

Momenimovahed Z, Salehiniya H (2019) Epidemiological characteristics of and risk factors for breast cancer in the world. Breast cancer (Dove Medical Press) 11:151–164. https://doi.org/10.2147/BCTT.S176070

Al-Mousa DS, Alakhras M, Hossain SZ, Al-Sa’di AG, Al Hasan M, Al-Hayek Y, Brennan PC (2020) Knowledge, attitude and practice around breast cancer and digital mammography screening among Jordanian women. Breast Cancer (Dove Medical Press) 12:231–242. https://doi.org/10.2147/BCTT.S275445

Lauritzen AD, Rodríguez-Ruiz A, von Euler-Chelpin MC, Lynge E, Vejborg I, Nielsen M, Karssemeijer N, Lillholm M (2022) An artificial intelligence-based digital mammography screening protocol for breast cancer: outcome and radiologist workload. Radiology 304(1):41–49. https://doi.org/10.1148/radiol.210948

Raafat M, Mansour S, Kamal R et al (2022) Does artificial intelligence aid in the detection of different types of breast cancer? Egypt J Radiol Nucl Med 53:182. https://doi.org/10.1186/s43055-022-00868-z

Rodríguez-Ruiz A, Krupinski E, Mordang JJ, Schilling K, Heywang-Köbrunner SH, Sechopoulos I, Mann RM (2019) Detection of breast cancer with digital mammography: effect of an artificial intelligence support system. Radiology 290(2):305–314. https://doi.org/10.1148/radiol.2018181371

Rodriguez-Ruiz A, Lång K, Gubern-Merida A, Broeders M, Gennaro G, Clauser P, Helbich TH, Chevalier M, Tan T, Mertelmeier T, Wallis MG, Andersson I, Zackrisson S, Mann RM, Sechopoulos I (2019) Stand-alone artificial intelligence for breast cancer detection in digital mammography: comparison with 101 radiologists. J Natl Cancer Inst 111(9):916–922. https://doi.org/10.1093/jnci/djy222

Lehman CD, Wellman RD, Buist DS, Kerlikowske K, Tosteson AN, Miglioretti DL, Breast Cancer Surveillance Consortium (2015) Diagnostic accuracy of digital screening mammography with and without computer-aided detection. JAMA Intern Med 175(11):1828–1837. https://doi.org/10.1001/jamainternmed.2015.5231

Kim HE, Kim HH, Han BK, Kim KH, Han K, Nam H, Lee EH, Kim EK (2020) Changes in cancer detection and false-positive recall in digital mammography using artificial intelligence: a retrospective, multireader study. The Lancet Digital health 2(3):e138–e148. https://doi.org/10.1016/S2589-7500(20)30003-0

Marinovich ML, Wylie E, Lotter W, Lund H, Waddell A, Madeley C, Pereira G, Houssami N (2023) Artificial intelligence (AI) for breast cancer screening: breastscreen population-based cohort study of cancer detection. EBioMedicine 90:104498. https://doi.org/10.1016/j.ebiom.2023.104498

Schaffter T, Buist DSM, Lee CI, Nikulin Y, Ribli D, Guan Y, Lotter W, Jie Z, Du H, Wang S, Feng J, Feng M, Kim HE, Albiol F, Albiol A, Morrell S, Wojna Z, Ahsen ME, Asif U, Jimeno Yepes A, Jung H (2020) Evaluation of combined artificial intelligence and radiologist assessment to interpret screening mammograms. JAMA Netw Open 3(3):e200265. https://doi.org/10.1001/jamanetworkopen.2020.0265

Lotter W, Diab AR, Haslam B, Kim JG, Grisot G, Wu E, Gregory Sorensen A (2021) Robust breast cancer detection in digital mammography and digital breast tomosynthesis using an annotation-efficient deep learning approach. Nat Med 27(2):244–249. https://doi.org/10.1038/s41591-020-01174-9

Acknowledgements

We would like to acknowledge Prof. Dr. Sahar Mansour, women imaging unit, the radiology department, at Cairo University for her great help.

Funding

No source of funding.

Author information

Contributions

BE is the guarantor of the integrity of the entire study. HR and BE contributed to the study concepts and design. All authors contributed to the literature research. BE and SF contributed to the clinical studies. All authors contributed to the experimental studies/data analysis. BE and SF contributed to the statistical analysis. BE contributed to the manuscript preparation. BE and HR contributed to the manuscript editing. All authors have read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was approved by the ethical committee of the Radiology Department of Kasr-Al-Ainy Hospital, Cairo University, which is an academic governmental supported highly specialized multidisciplinary hospital. The included patients gave written informed consent.

Consent for publication

All patients included in this research were eligible and above 16 years of age. They gave written informed consent to publish the data contained within this study.

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Badawy, E., Shalaby, F.S., Saif-El-nasr, S.I. et al. The synergy between AI and radiologist in advancing digital mammography: comparative study between stand-alone radiologist and concurrent use of artificial intelligence in BIRADS 4 and 5 female patients. Egypt J Radiol Nucl Med 54, 191 (2023). https://doi.org/10.1186/s43055-023-01136-4

DOI: https://doi.org/10.1186/s43055-023-01136-4