Abstract

In this paper, the generalized Sylvester matrix equation AV + BW = EVF + C over reflexive matrices is considered. An iterative algorithm for obtaining reflexive solutions of this matrix equation is introduced. When the matrix equation is consistent over reflexive matrices, a reflexive solution can be obtained within a finite number of iteration steps for any initial reflexive matrices. Furthermore, the complexity and the convergence analysis of the proposed algorithm are given. The least Frobenius norm reflexive solutions can also be obtained when special initial reflexive matrices are chosen. Finally, numerical examples are given to illustrate the effectiveness of the proposed algorithm.

Introduction and preliminaries

Consider the generalized Sylvester matrix equation

\( AV+BW=EVF+C, \)

where \( A,E\in {R}^{m\times p} \), \( B\in {R}^{m\times q} \), \( F\in {R}^{n\times n} \), and \( C\in {R}^{m\times n} \) are given, while \( V\in {R}^{p\times n} \) and \( W\in {R}^{q\times n} \) are matrices to be determined. A real matrix \( P\in {R}^{n\times n} \) is called a generalized reflection matrix if \( {P}^T=P \) and \( {P}^2=I \). An n × n matrix A is said to be reflexive with respect to the generalized reflection matrix P if A = PAP; for more details see [1, 2]. The symbol A ⊗ B stands for the Kronecker product of matrices A and B. The vectorization of an m × n matrix A, denoted by vec(A), is the mn × 1 column vector obtained by stacking the columns of A on top of one another: \( vec(A)={\left({a}_1^T\kern0.5em {a}_2^T\kern0.5em \dots \kern0.5em {a}_n^T\right)}^T \), where \( {a}_j \) denotes the j-th column of A. We use tr(A) and \( {A}^T \) to denote the trace and the transpose of the matrix A, respectively. In addition, we define the inner product of two matrices A, B as \( \left\langle A,B\right\rangle = tr\left({B}^TA\right) \). Then the matrix norm of A induced by this inner product is the Frobenius norm, denoted by ‖A‖, where \( \left\langle A,A\right\rangle ={\left\Vert A\right\Vert}^2 \).
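The definitions above can be checked numerically on small matrices. The following is a minimal sketch in plain Python; the helper names (`matmul`, `is_reflexive`, and so on) and the 2 × 2 test matrices are illustrative assumptions and do not come from the paper.

```python
# Minimal sketch of the preliminaries; all names here are hypothetical helpers.

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def transpose(X):
    return [list(col) for col in zip(*X)]

def trace(X):
    return sum(X[i][i] for i in range(len(X)))

def inner(X, Y):
    """Inner product <X, Y> = tr(Y^T X); <X, X> is the squared Frobenius norm."""
    return trace(matmul(transpose(Y), X))

def vec(X):
    """Stack the columns of X on top of one another (returned as a flat list)."""
    return [x for col in zip(*X) for x in col]

def is_generalized_reflection(P):
    """Check P^T = P and P^2 = I."""
    n = len(P)
    identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    return transpose(P) == P and matmul(P, P) == identity

def is_reflexive(X, P):
    """Check X = P X P."""
    return matmul(matmul(P, X), P) == X

P = [[1, 0], [0, -1]]
X = [[2, 0], [0, 5]]   # diagonal, so P X P = X
Y = [[1, 2], [3, 4]]   # off-diagonal entries change sign under P Y P
```

With this particular P, the reflexive matrices are exactly the diagonal ones, which is why `X` passes the check and `Y` does not.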

Reflexive matrices with respect to a generalized reflection matrix \( P\in {R}^{n\times n} \) have many special properties and are widely used in engineering and scientific computations [2, 3]. Several authors have studied the reflexive solutions of different forms of linear matrix equations; see, for example, [4,5,6,7]. Ramadan et al. [8] considered explicit and iterative methods for solving the generalized Sylvester matrix equation. Dehghan and Hajarian [9] constructed an iterative algorithm to solve the generalized coupled Sylvester matrix equations (AY − ZB, CY − ZD) = (E, F) over reflexive matrices. Dehghan and Hajarian [10] also proposed three iterative algorithms for solving the linear matrix equation \( {A}_1{X}_1{B}_1+{A}_2{X}_2{B}_2=C \) over reflexive (anti-reflexive) matrices. Yin et al. [11] presented an iterative algorithm to solve the general coupled matrix equations \( \sum \limits_{j=1}^q{A}_{ij}{X}_j{B}_{ij}={M}_i\left(i=1,2,\cdots, p\right) \) and their optimal approximation problem over generalized reflexive matrices. Li [12] presented an iterative algorithm for obtaining the generalized (P, Q)-reflexive solution of a quaternion matrix equation \( \sum \limits_{l=1}^u{A}_lX{B}_l+\sum \limits_{s=1}^v{C}_s\tilde{X}{D}_s=F \). In [13], Dong and Wang presented necessary and sufficient conditions for the existence of the {P, Q, k + 1}-reflexive (anti-reflexive) solution to the system of matrix equations AX = C, XB = D. In [14], Nacevska found necessary and sufficient conditions for the generalized reflexive and anti-reflexive solution of a system of equations ax = b and xc = d in a ring with involution. Moreover, Hajarian [15] established the matrix form of the biconjugate residual (BCR) algorithm for computing the generalized reflexive (anti-reflexive) solutions of the generalized Sylvester matrix equation \( \sum \limits_{i=1}^s{A}_iX{B}_i+\sum \limits_{j=1}^t{C}_jY{D}_j=M \).
Liu [16] established conditions for the existence of, and representations for, the Hermitian reflexive, anti-reflexive, and non-negative definite reflexive solutions to the matrix equation AX = B with respect to a generalized reflection P by using the Moore-Penrose inverse. Dehghan and Shirilord [17] presented a generalized MHSS approach, based on the MHSS method, for solving large sparse Sylvester equations with non-Hermitian and complex symmetric positive definite/semi-definite matrices. Dehghan and Hajarian [18] proposed two algorithms for solving the generalized coupled Sylvester matrix equations over reflexive and anti-reflexive matrices. Dehghan and Hajarian [19] established two iterative algorithms for solving the system of generalized Sylvester matrix equations over the generalized bisymmetric and skew-symmetric matrices. Hajarian and Dehghan [20] established two gradient iterative methods, extending the Jacobi and Gauss–Seidel iterations, for solving the generalized Sylvester-conjugate matrix equation \( {A}_1X{B}_1+{A}_2X{B}_2+{C}_1Y{D}_1+{C}_2Y{D}_2=E \) over reflexive and Hermitian reflexive matrices. Dehghan and Hajarian [21] proposed two iterative algorithms for finding the Hermitian reflexive and skew–Hermitian solutions of the Sylvester matrix equation AX + XB = C. Hajarian [22] obtained an iterative algorithm for solving the coupled Sylvester-like matrix equations. El–Shazly [23] studied the perturbation estimates of the maximal solution for the matrix equation \( X+{A}^T\sqrt{X^{-1}}A=P \). Khader [24] presented a numerical method for solving the fractional Riccati differential equation (FRDE). Balaji [25] presented a Legendre wavelet operational matrix method for solving the nonlinear fractional order Riccati differential equation. The generalized Sylvester matrix equation has numerous applications in control theory, signal processing, filtering, model reduction, and decoupling techniques for ordinary and partial differential equations (see [26,27,28,29]).

In this paper, we investigate the reflexive solutions of the generalized Sylvester matrix equation AV + BW = EVF + C. The paper is organized as follows. First, in the “Iterative algorithm for solving AV + BW = EVF + C” section, an iterative algorithm for obtaining reflexive solutions of this problem is derived, and the complexity of the proposed algorithm is presented. In the “Convergence analysis for the proposed algorithm” section, the convergence analysis of the algorithm is given, and it is shown that the least Frobenius norm reflexive solutions can be obtained when special initial reflexive matrices are chosen. Finally, in the “Numerical examples” section, four numerical examples are presented to demonstrate the performance of the proposed algorithm.

Iterative algorithm for solving AV + BW = EVF + C

In this part, we consider the following problem:

Problem 2.1. For given matrices \( A,B,E,C\in {R}^{m\times n} \), \( F\in {R}^{n\times n} \), and two generalized reflection matrices P, S of size n, find the matrices \( V\in {R}_r^{n\times n}(P) \) and \( W\in {R}_r^{n\times n}(S) \) such that

\( AV+BW=EVF+C, \) (2.1)

where the subspace \( {R}_r^{n\times n}(P) \) is defined by \( {R}_r^{n\times n}(P)=\left\{Q\in {R}^{n\times n}:Q= PQP\right\} \), and P is a generalized reflection matrix, i.e., \( {P}^2=I \) and \( {P}^T=P \).
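As a hedged aside that is not stated in the source: because P is symmetric with \( {P}^2=I \), the map \( Q\mapsto PQP \) is a self-adjoint involution with respect to the inner product \( \left\langle A,B\right\rangle = tr\left({B}^TA\right) \), so the average \( \frac{1}{2}\left(Q+ PQP\right) \) is the orthogonal projection of an arbitrary \( Q\in {R}^{n\times n} \) onto \( {R}_r^{n\times n}(P) \). That the average is reflexive follows in one line:

\[
P\left[\tfrac{1}{2}\left(Q+PQP\right)\right]P=\tfrac{1}{2}\left(PQP+{P}^2Q{P}^2\right)=\tfrac{1}{2}\left(PQP+Q\right).
\]

This is the same pattern as the bracketed terms of the form \( \frac{1}{2}\left[{A}^TR+P{A}^TRP-\dots \right] \) that appear in the proof of Theorem 2.1.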

An iterative algorithm for solving the consistent Problem 2.1

In this subsection, an iterative algorithm is proposed for solving Problem 2.1, assuming that the problem is consistent.
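The listing of Algorithm 2.1 is not reproduced in this text, so the following plain-Python sketch reconstructs the iteration from the update formulas that appear in the proofs of Theorem 2.1 and Lemma 3.1 (the updates of \( {V}_{k+1} \), \( {P}_{k+1} \), and \( {R}_{k+1} \)). The update of \( {Q}_k \) is assumed by analogy with that of \( {P}_k \), and the stopping rule, the function names, and the small 2 × 2 test problem are assumptions made for illustration. It should be read as a sketch, not as the authors' exact listing.

```python
# Hedged reconstruction of the iteration; helper names are hypothetical.

def mm(X, Y):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def tp(X):
    """Transpose."""
    return [list(c) for c in zip(*X)]

def lin(a, X, b, Y):
    """Entrywise a*X + b*Y."""
    return [[a * x + b * y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def norm2(X):
    """Squared Frobenius norm."""
    return sum(x * x for row in X for x in row)

def directions(A, B, E, F, P, S, R):
    """(1/2)[G + P G P] with G = A^T R - E^T R F^T, and (1/2)[H + S H S] with H = B^T R."""
    G = lin(1.0, mm(tp(A), R), -1.0, mm(mm(tp(E), R), tp(F)))
    H = mm(tp(B), R)
    return (lin(0.5, G, 0.5, mm(mm(P, G), P)),
            lin(0.5, H, 0.5, mm(mm(S, H), S)))

def solve_reflexive(A, B, E, F, C, P, S, V, W, tol=1e-10, max_iter=500):
    # R_1 = C - (A V_1 + B W_1 - E V_1 F)
    AVBW = lin(1.0, mm(A, V), 1.0, mm(B, W))
    R = lin(1.0, C, -1.0, lin(1.0, AVBW, -1.0, mm(mm(E, V), F)))
    Pk, Qk = directions(A, B, E, F, P, S, R)
    for _ in range(max_iter):
        r2 = norm2(R)
        d2 = norm2(Pk) + norm2(Qk)
        if r2 ** 0.5 <= tol:
            break                      # converged (assumed stopping rule)
        if d2 == 0.0:
            break                      # no direction left although R != 0
        alpha = r2 / d2                # ||R_k||^2 / (||P_k||^2 + ||Q_k||^2)
        V = lin(1.0, V, alpha, Pk)
        W = lin(1.0, W, alpha, Qk)
        step = lin(1.0, lin(1.0, mm(A, Pk), -1.0, mm(mm(E, Pk), F)), 1.0, mm(B, Qk))
        R = lin(1.0, R, -alpha, step)  # R_{k+1} = R_k - alpha (A P_k - E P_k F + B Q_k)
        beta = norm2(R) / r2           # ||R_{k+1}||^2 / ||R_k||^2
        Gp, Gq = directions(A, B, E, F, P, S, R)
        Pk = lin(1.0, Gp, beta, Pk)
        Qk = lin(1.0, Gq, beta, Qk)
    return V, W, norm2(R) ** 0.5

# Illustrative data: C is built from V* = diag(1, 2) and W* = diag(3, -1).
A = [[2.0, 1.0], [0.0, 1.0]]
B = [[1.0, 0.0], [1.0, 3.0]]
E = [[1.0, 0.0], [0.0, 1.0]]
F = [[0.0, 1.0], [1.0, 0.0]]
P = S = [[1.0, 0.0], [0.0, -1.0]]
C = [[5.0, 1.0], [1.0, -1.0]]
Z = [[0.0, 0.0], [0.0, 0.0]]
V, W, res = solve_reflexive(A, B, E, F, C, P, S, Z, Z)
```

Structurally this is a conjugate-gradient iteration applied to the normal equations of the linear map \( \left(V,W\right)\mapsto AV+BW-EVF \) restricted to the reflexive subspaces. In the test problem the reflexive matrices are the diagonal ones, and the restricted system is nonsingular, so the iterates approach \( {V}^{\ast } \) and \( {W}^{\ast } \) themselves.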

In the next theorem, we prove that the iterates \( \left\{{V}_{k+1}\right\} \) and \( \left\{{W}_{k+1}\right\} \) generated for the matrix Eq. (2.1) are reflexive.

Theorem 2.1 The iterates \( \left\{{V}_{k+1}\right\} \) and \( \left\{{W}_{k+1}\right\} \) generated by Algorithm 2.1 for the matrix Eq. (2.1) are reflexive with respect to the generalized reflection matrices P and S, respectively.

Proof We prove the theorem by induction on k.

For k = 1,

Assume that \( P{V}_kP={V}_k \), i.e.,

Now, \( {\displaystyle \begin{array}{l}P{V}_{k+1}P=P{V}_kP+\frac{{\left\Vert {R}_k\right\Vert}^2}{{\left\Vert {P}_k\right\Vert}^2+{\left\Vert {Q}_k\right\Vert}^2}P{P}_kP\\ {}={V}_k+\frac{{\left\Vert {R}_k\right\Vert}^2}{{\left\Vert {P}_k\right\Vert}^2+{\left\Vert {Q}_k\right\Vert}^2}\left(\frac{1}{2}\left[P{A}^T{R}_kP+{P}^2{A}^T{R}_k{P}^2-P{E}^T{R}_k{F}^TP-{P}^2{E}^T{R}_k{F}^T{P}^2\right]+\frac{{\left\Vert {R}_k\right\Vert}^2}{{\left\Vert {R}_{k-1}\right\Vert}^2}P{P}_{k-1}P\right)\\ {}={V}_k+\frac{{\left\Vert {R}_k\right\Vert}^2}{{\left\Vert {P}_k\right\Vert}^2+{\left\Vert {Q}_k\right\Vert}^2}\left(\frac{1}{2}\left[P{A}^T{R}_kP+{A}^T{R}_k-P{E}^T{R}_k{F}^TP-{E}^T{R}_k{F}^T\right]+\frac{{\left\Vert {R}_k\right\Vert}^2}{{\left\Vert {R}_{k-1}\right\Vert}^2}{P}_{k-1}\right)={V}_{k+1}.\end{array}} \)

Similarly, we can prove that \( {W}_{k+1} \) is reflexive with respect to the generalized reflection matrix S.

The complexity of the proposed iterative algorithm

Algorithmic complexity concerns how fast or slow a particular algorithm runs. We define the complexity as a numerical function T(n), the running time as a function of the input size n, so that the complexity of an algorithm reflects the total time the program needs to run to completion. Time complexity is most commonly expressed asymptotically using big-O notation. A theoretical and very crude measure of efficiency is the number of floating point operations (flops) needed to implement the algorithm, where a “flop” is a single arithmetic operation: +, −, ×, or /. In this subsection, we count the flops of the proposed Algorithm 2.1 for the Sylvester matrix equation AV + BW = EVF + C.

The flop counts for step 3:

Computing the residual \( {R}_1 \) requires \( 4 mn\left(2n-1\right)+3 mn \) flops, computing the matrix \( {P}_1 \) requires \( 4{n}^2\left(2m-1\right)+4{n}^2\left(2n-1\right)+2 mn\left(2n-1\right)+4{n}^2 \) flops, and computing the matrix \( {Q}_1 \) requires \( 2{n}^2\left(2m-1\right)+{n}^2\left(2n-1\right)+ mn\left(2n-1\right)+2{n}^2 \) flops.
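The count for the residual can be reproduced with a short tally. The sketch below assumes that the residual is formed as \( {R}_1=C-A{V}_1-B{W}_1+E{V}_1F \) (the form used for the residuals in the numerical examples) and that a product of an r × s matrix with an s × c matrix costs rc(2s − 1) flops; the function names are hypothetical. The counts for \( {P}_1 \) and \( {Q}_1 \) depend on how intermediate products are reused and are not re-derived here.

```python
def matmul_flops(rows, inner, cols):
    """Flops for a (rows x inner) by (inner x cols) product:
    each entry needs `inner` multiplications and `inner - 1` additions."""
    return rows * cols * (2 * inner - 1)

def residual_flops(m, n):
    """Flops for R1 = C - A V1 - B W1 + E V1 F, with A, B, E, C of size
    m x n and V1, W1, F of size n x n."""
    products = (matmul_flops(m, n, n)      # A V1
                + matmul_flops(m, n, n)    # B W1
                + matmul_flops(m, n, n)    # E V1
                + matmul_flops(m, n, n))   # (E V1) F
    additions = 3 * m * n                  # three m x n additions/subtractions
    return products + additions
```

For every m and n this tally equals \( 4 mn\left(2n-1\right)+3 mn \), the figure quoted above for \( {R}_1 \).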

The flop counts for step 5:

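Computing \( {V}_{k+1} \) requires \( \left[6{n}^2+2 mn+5\right] \) flops, \( {W}_{k+1} \) requires \( \left[6{n}^2+2 mn+5\right] \) flops, \( {R}_{k+1} \) requires \( \left[4 mn\left(2n-1\right)+4{n}^2+6 mn+5\right] \) flops, \( {Q}_{k+1} \) requires \( \left[2{n}^2\left(2m-1\right)+{n}^2\left(2n-1\right)+ mn\left(2n-1\right)+4{n}^2+4 mn+3\right] \) flops, and \( {P}_{k+1} \) requires \( \left[4{n}^2\left(2m-1\right)+3{n}^2\left(2n-1\right)+2 mn\left(2n-1\right)+6{n}^2+4 mn+3\right] \) flops.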

Thus, the total count of Algorithm 2.1 is:

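where k is the number of iterations needed to find the reflexive solutions of Eq. (2.1). Since the leading terms of every count above are of order \( m{n}^2 \) or \( {n}^3 \), we can conclude that, for a fixed k and m of the same order as n, the total flop count of Algorithm 2.1 is \( O\left({n}^3\right) \).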

Convergence analysis for the proposed algorithm

In this section, we first present two lemmas that are important tools for the convergence analysis of Algorithm 2.1.

Lemma 3.1 Assume that the sequences \( \left\{{R}_i\right\} \), \( \left\{{P}_i\right\} \), and \( \left\{{Q}_i\right\} \) are obtained by Algorithm 2.1. If there exists an integer s > 1 such that \( {R}_i\ne \mathbf{0} \) for all i = 1, 2, …, s, then we have

Proof Since \( tr(Y)= tr\left({Y}^T\right) \) for an arbitrary matrix Y, we only need to prove that

We prove the conclusion (3.2) by induction through the following two steps.

Step 1: First, we show that

To prove (3.3), we also use induction.

For i = 1, noting that \( {P}_1=P{P}_1P \) and \( {Q}_1=S{Q}_1S \), from Algorithm 2.1 we can write \( tr\left({R}_2^T{R}_1\right)= tr\left({\left[{R}_1-\frac{{\left\Vert {R}_1\right\Vert}^2}{{\left\Vert {P}_1\right\Vert}^2+{\left\Vert {Q}_1\right\Vert}^2}\left(A{P}_1-E{P}_1F+B{Q}_1\right)\right]}^T{R}_1\right) \)

Similarly, we can write

Now, assume that (3.3) holds for 1 < i ≤ t − 1 < s. Noting that \( {P}_t=P{P}_tP \) and \( {Q}_t=S{Q}_tS \), we have for i = t

Also, we have

Therefore, the conclusion (3.3) holds for i = t. Hence, (3.3) holds by the principle of induction.

Step 2: In this step, we show for 1 ≤ i ≤ s − 1

for l = 1, 2, …, s. The case of l = 1 has been proven in step 1. Assume that (3.8) holds for l ≤ ν.

Now, we prove that \( tr\left({R}_{i+\nu +1}^T{R}_i\right)=0 \) and \( tr\left({P}_{i+\nu +1}^T{P}_i+{Q}_{i+\nu +1}^T{Q}_i\right)=0 \) through the following two substeps.

Substep 2.1: In this substep, we show that

By Algorithm 2.1 and the induction assumptions, we have

and \( tr\left({P}_{\nu +2}^T{P}_1+{Q}_{\nu +2}^T{Q}_1\right) \)

Substep 2.2: By Algorithm 2.1 and the induction assumptions, we can write

Also, we have

Repeating the above process (3.11) and (3.12), we can obtain, for certain α and β

\( tr\left({R}_{i+\nu +1}^T{R}_i\right)=\alpha tr\left({P}_{\nu +2}^T{P}_1+{Q}_{\nu +2}^T{Q}_1\right) \), and \( tr\left({P}_{i+\nu +1}^T{P}_i+{Q}_{i+\nu +1}^T{Q}_i\right)=\beta tr\left({P}_{\nu +2}^T{P}_1+{Q}_{\nu +2}^T{Q}_1\right) \).

Combining these two relations with (3.10) implies that (3.8) holds for l = ν + 1.

From steps 1 and 2, the conclusion (3.1) holds by the principle of induction.

Lemma 3.2 Let Problem 2.1 be consistent over reflexive matrices, and let \( {V}^{\ast } \) and \( {W}^{\ast } \) be arbitrary reflexive solutions of Problem 2.1. Then, for any initial reflexive matrices \( {V}_1 \) and \( {W}_1 \), we have

where the sequences \( \left\{{R}_i\right\} \), \( \left\{{P}_i\right\} \), \( \left\{{Q}_i\right\} \), \( \left\{{V}_i\right\} \), and \( \left\{{W}_i\right\} \) are generated by Algorithm 2.1.

Proof We prove the conclusion (3.13) by induction.

For i = 1, noting that \( {V}^{\ast }-{V}_1=P\left({V}^{\ast }-{V}_1\right)P \) and \( {W}^{\ast }-{W}_1=S\left({W}^{\ast }-{W}_1\right)S \), we have

Assume that the conclusion (3.13) holds for i = t. Now, for i = t + 1, we have

Hence, Lemma 3.2 holds for all i = 1, 2, … by the principle of induction.

Theorem 3.1 Assume that Problem 2.1 is consistent over reflexive matrices. Then, for any initial reflexive matrices \( {V}_1\in {R}_r^{n\times n}(P) \) and \( {W}_1\in {R}_r^{n\times n}(S) \), reflexive solutions of Problem 2.1 can be obtained by Algorithm 2.1 within a finite number of iteration steps in the absence of roundoff errors.

Proof Assume that \( {R}_i\ne \mathbf{0} \) for i = 1, 2, …, mn. From Lemma 3.2, we get \( {P}_i\ne \mathbf{0} \) or \( {Q}_i\ne \mathbf{0} \) for i = 1, 2, …, mn. Therefore, we can compute \( {R}_{mn+1} \), \( {V}_{mn+1} \), and \( {W}_{mn+1} \) by Algorithm 2.1. Also, from Lemma 3.1, we have

and

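Therefore, the set \( \left\{{R}_1,{R}_2,\dots, {R}_{mn}\right\} \) is an orthogonal basis of the matrix space \( {R}^{m\times n} \). Since \( {R}_{mn+1} \) is orthogonal to every element of this basis, it follows that \( {R}_{mn+1}=\mathbf{0} \), i.e., \( {V}_{mn+1} \) and \( {W}_{mn+1} \) are reflexive solutions of Problem 2.1. Hence, the proof is completed.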

To obtain the least Frobenius norm reflexive solution pair of Problem 2.1, we first present the following lemma.

Lemma 3.3 [4] Assume that the consistent system of linear equations Ax = b has a solution \( {x}^{\ast}\in R\left({A}^T\right) \). Then \( {x}^{\ast } \) is the unique least Frobenius norm solution of the system of linear equations.
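A small worked instance may help to see what Lemma 3.3 asserts; the data below are chosen purely for illustration and do not come from the paper. Take \( A=\left(1\kern0.5em 1\right) \) and b = 2. Every solution of Ax = b has the form

\[
x(t)={\left(1+t,\ 1-t\right)}^T,\qquad {\left\Vert x(t)\right\Vert}^2={\left(1+t\right)}^2+{\left(1-t\right)}^2=2+2{t}^2\ge 2 .
\]

The only solution lying in \( R\left({A}^T\right)=\left\{{\left(s,\ s\right)}^T:s\in R\right\} \) is \( {x}^{\ast }=x(0)={\left(1,\ 1\right)}^T \), and it is exactly the one of smallest norm, attained only at t = 0.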

Theorem 3.2 Suppose that Problem 2.1 is consistent over reflexive matrices. Let the initial iteration matrices be \( {V}_1={A}^TG+P{A}^T\tilde{G}P-{E}^TG{F}^T-P{E}^T\tilde{G}{F}^TP \) and \( {W}_1={B}^TG+S{B}^T\tilde{G}S \), where G and \( \tilde{G} \) are arbitrary, or in particular \( {V}_1=\mathbf{0} \) and \( {W}_1=\mathbf{0} \). Then the reflexive solutions \( {V}^{\ast } \) and \( {W}^{\ast } \) obtained by Algorithm 2.1 are the least Frobenius norm reflexive solutions of Eq. (2.1).

Proof The solvability of the matrix Eq. (2.1) over reflexive matrices is equivalent to the solvability of the system of equations

and the system of equations (3.18) is equivalent to

Now, assuming that G and \( \tilde{G} \) are arbitrary matrices, we can write

If we take \( {V}_1={A}^TG+P{A}^T\tilde{G}P-{E}^TG{F}^T-P{E}^T\tilde{G}{F}^TP \) and \( {W}_1={B}^TG+S{B}^T\tilde{G}S \), then all \( {V}_k \) and \( {W}_k \) generated by Algorithm 2.1 satisfy

Hence, by Lemma 3.3, with these initial iteration matrices (or, in particular, with \( {V}_1=\mathbf{0} \) and \( {W}_1=\mathbf{0} \)), the reflexive solutions \( {V}^{\ast } \) and \( {W}^{\ast } \) obtained by Algorithm 2.1 are the least Frobenius norm reflexive solutions of Eq. (2.1).
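The displayed systems of this proof are not reproduced in this text. As a hedged reminder of the standard tool involved, rather than a restatement of the authors' equations (3.18) and (3.19), the identity \( vec(AXB)=\left({B}^T\otimes A\right) vec(X) \) turns Eq. (2.1) and the reflexivity constraints into an ordinary linear system in vec(V) and vec(W), with \( {I}_n \) denoting the n × n identity matrix:

\[
\left({I}_n\otimes A-{F}^T\otimes E\right) vec(V)+\left({I}_n\otimes B\right) vec(W)= vec(C),
\]

\[
\left(P\otimes P\right) vec(V)= vec(V),\qquad \left(S\otimes S\right) vec(W)= vec(W).
\]

The second line uses \( {P}^T=P \) and \( {S}^T=S \). Lemma 3.3 is then applied to a linear system of this kind.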

Numerical examples

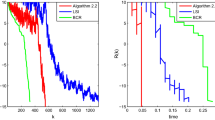

In this section, four numerical examples are presented to illustrate the performance and the effectiveness of the proposed algorithm. We implemented the algorithms in MATLAB (writing our own programs) and ran the programs on a PC Pentium IV.

Example 4.1

Consider the generalized Sylvester matrix equation AV + BW = EVF + C where

Choosing the initial matrices \( {V}_1={W}_1=\mathbf{0} \) and applying Algorithm 2.1, we get the reflexive solutions of the matrix Eq. (2.1) as follows:

where \( P=S=\left(\begin{array}{cccc}1& 0& 0& 0\\ {}0& 1& 0& 0\\ {}0& 0& -1& 0\\ {}0& 0& 0& -1\end{array}\right) \), with the corresponding residual

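\( \left\Vert {R}_{28}\right\Vert =\left\Vert C-\left(A{V}_{28}+B{W}_{28}-E{V}_{28}F\right)\right\Vert =6.8125\times {10}^{-10} \). Moreover, it can be verified that \( P{V}_{28}P={V}_{28} \) and \( S{W}_{28}S={W}_{28} \). Table 1 indicates the number of iterations k and the norm of the corresponding residual: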

Now let \( \hat{V}=\left(\begin{array}{rrrr}1& 1& 0& 0\\ {}-1& -1& 0& 0\\ {}0& 0& -2& 1\\ {}0& 0& 3& -1\end{array}\right),\kern1em \hat{W}=\left(\begin{array}{rrrr}1& -1& 0& 0\\ {}1& -1& 0& 0\\ {}0& 0& 1& 2\\ {}0& 0& -2& 1\end{array}\right) \).

By applying Algorithm 2.1 for the generalized Sylvester matrix equation \( A\overline{V}+B\overline{W}=E\overline{V}F+\overline{C}, \) and letting the initial pair \( {\overline{V}}_1={\overline{W}}_1=\mathbf{0} \), we can obtain the least Frobenius norm generalized solution \( {\overline{V}}^{\ast },{\overline{W}}^{\ast } \) of the generalized Sylvester matrix Eq. (2.1) as follows

\( {\overline{W}}^{\ast }={\overline{W}}_{29}=\left(\begin{array}{rrrr}1.0000& 2.0000& 0.0000& 0.0000\\ {}2.0000& 4.0000& 0.0000& 0.0000\\ {}0.0000& 0.0000& 3.0000& -0.0000\\ {}0.0000& 0.0000& 1.0000& 2.0000\end{array}\right) \), with the corresponding residual

Table 2 indicates the number of iterations k and norm of the corresponding residual with \( {\overline{V}}_1={\overline{W}}_1=\mathbf{0} \).
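The reflexivity of the matrices printed in this example can be confirmed directly. The sketch below, in plain Python with hypothetical helper names, checks \( \hat{V} \), \( \hat{W} \), and the printed \( {\overline{W}}_{29} \) (with entries rounded to four decimals as shown) against P = S = diag(1, 1, −1, −1).

```python
def matmul(X, Y):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def is_reflexive(X, P, tol=1e-12):
    """True when every entry of P X P - X is below tol in absolute value."""
    PXP = matmul(matmul(P, X), P)
    return all(abs(a - b) <= tol
               for ra, rb in zip(PXP, X) for a, b in zip(ra, rb))

P = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, -1, 0], [0, 0, 0, -1]]

V_hat = [[1, 1, 0, 0], [-1, -1, 0, 0], [0, 0, -2, 1], [0, 0, 3, -1]]
W_hat = [[1, -1, 0, 0], [1, -1, 0, 0], [0, 0, 1, 2], [0, 0, -2, 1]]
# Entries of W_bar_29 as printed in the text, rounded to four decimals.
W_bar_29 = [[1.0, 2.0, 0.0, 0.0], [2.0, 4.0, 0.0, 0.0],
            [0.0, 0.0, 3.0, 0.0], [0.0, 0.0, 1.0, 2.0]]

checks = [is_reflexive(M, P) for M in (V_hat, W_hat, W_bar_29)]
```

All three matrices are block diagonal with two 2 × 2 blocks, which is precisely the pattern preserved by this choice of P.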

Example 4.2

(Special case) Consider the generalized Sylvester matrix equation AV + BW = EVF + C where

Choosing the initial matrices \( {V}_1={W}_1=\mathbf{0} \) and applying Algorithm 2.1, we get the reflexive solutions of the matrix Eq. (2.1) after 7 iterations with \( \varepsilon ={10}^{-10} \) as follows:

where \( P=\left(\begin{array}{cccc}1& 0& 0& 0\\ {}0& 1& 0& 0\\ {}0& 0& -1& 0\\ {}0& 0& 0& -1\end{array}\right) \) and S = P.

It can be verified that \( P{V}_7P={V}_7 \) and \( S{W}_7S={W}_7 \). Moreover, the corresponding residual is \( \left\Vert {R}_7\right\Vert =\left\Vert C-A{V}_7+E{V}_7F-B{W}_7\right\Vert =8.1907\times {10}^{-10} \).

Example 4.3

Consider the generalized Sylvester matrix equation AV + BW = EVF + C where

Choosing the initial matrices \( {V}_1={W}_1=\mathbf{0} \) and applying Algorithm 2.1, we get the reflexive solutions of the matrix Eq. (2.1) after 108 iterations with \( \varepsilon ={10}^{-10} \) as follows:

where \( P=S=\left(\begin{array}{rrrrrr}1& 0& 0& 0& 0& 0\\ {}0& 1& 0& 0& 0& 0\\ {}0& 0& 1& 0& 0& 0\\ {}0& 0& 0& -1& 0& 0\\ {}0& 0& 0& 0& -1& 0\\ {}0& 0& 0& 0& 0& -1\end{array}\right) \), with the corresponding residual

\( \left\Vert {R}_{108}\right\Vert =\left\Vert C-\left(A{V}_{108}+B{W}_{108}-E{V}_{108}F\right)\right\Vert =3.0452\times {10}^{-10} \). Moreover, it can be verified that \( P{V}_{108}P={V}_{108} \) and \( S{W}_{108}S={W}_{108} \). Table 3 indicates the number of iterations k and the norm of the corresponding residual:

Example 4.4

Consider the generalized Sylvester matrix equation AV + BW = EVF + C where

Choosing the initial matrices \( {V}_1={W}_1=\mathbf{0} \) and applying Algorithm 2.1, we get the reflexive solutions of the matrix Eq. (2.1) after 96 iterations with \( \varepsilon ={10}^{-12} \) as follows:

where \( P=S=\left(\begin{array}{rrrrrr}1& 0& 0& 0& 0& 0\\ {}0& 1& 0& 0& 0& 0\\ {}0& 0& 1& 0& 0& 0\\ {}0& 0& 0& -1& 0& 0\\ {}0& 0& 0& 0& -1& 0\\ {}0& 0& 0& 0& 0& -1\end{array}\right) \), with the corresponding residual \( \left\Vert {R}_{96}\right\Vert =\left\Vert diag\left(C-\left(A{V}_{96}+B{W}_{96}-E{V}_{96}F\right)\right)\right\Vert =3.0152\times {10}^{-12} \).

Moreover, it can be verified that \( P{V}_{96}P={V}_{96} \) and \( S{W}_{96}S={W}_{96} \). Table 4 indicates the number of iterations k and the norm of the corresponding residual.

Conclusions

In this paper, an iterative method to solve the generalized Sylvester matrix equations over reflexive matrices is derived. With this iterative method, the solvability of the generalized Sylvester matrix equation can be determined automatically. Also, when this matrix equation is consistent, for any initial reflexive matrices, one can obtain reflexive solutions within finite iteration steps. In addition, both the complexity and the convergence analysis for our proposed algorithm are presented. Furthermore, we obtained the least Frobenius norm reflexive solutions when special initial reflexive matrices are chosen. Finally, four numerical examples were presented to support the theoretical results and illustrate the effectiveness of the proposed method.

Availability of data and materials

All data generated or analyzed during this study are included in this article.

References

Horn, R.A., Johnson, C.R.: Topics in matrix analysis. Cambridge University Press, England (1991)

Golub, G.H., Van Loan, C.F.: Matrix computations, 3rd edn. The Johns Hopkins University Press, Baltimore and London (1996)

Chen, H.C.: Generalized reflexive matrices: special properties and applications. SIAM J. Matrix Anal. Appl. 19, 140–153 (1998)

Peng, X.Y., Hu, X.Y., Zhang, L.: An iteration method for the symmetric solutions and the optimal approximation solution of the matrix equation AXB = C. Appl. Math. Comput. 160, 763–777 (2005)

Peng, X.Y., Hu, X.Y., Zhang, L.: The reflexive and anti-reflexive solutions of the matrix equation \( {A}^HXB=C \). Appl. Math. Comput. 186, 638–645 (2007)

Wang, Q.W., Zhang, F.: The reflexive re-nonnegative definite solution to a quaternion matrix equation. Electron. J. Linear Algebra. 17, 88–101 (2008)

Zhan, J.C., Zhou, S.Z., Hu, X.Y.: The (P, Q) generalized reflexive and anti-reflexive solutions of the matrix equation AX = B. Appl. Math. Comput. 209, 254–258 (2009)

Ramadan, M.A., Abdel Naby, M.A., Bayoumi, A.M.: On the explicit and iterative solutions of the matrix equation AV + BW = EVF + C. Math. Comput. Model. 50, 1400–1408 (2009)

Dehghan, M., Hajarian, M.: An iterative algorithm for the reflexive solutions of the generalized coupled Sylvester matrix equations and its optimal approximation. Appl. Math. Comput. 202, 571–588 (2008)

Dehghan, M., Hajarian, M.: Finite iterative algorithms for the reflexive and anti-reflexive solutions of the matrix equation \( {A}_1{X}_1{B}_1+{A}_2{X}_2{B}_2=C \). Math. Comput. Model. 49, 1937–1959 (2009)

Yin, F., Guo, K., Huang, G.X.: An iterative algorithm for the generalized reflexive solutions of the general coupled matrix equations. Journal of Inequalities and Applications. 2013, 280 (2013)

Li, N.: Iterative algorithm for the generalized (P, Q) - reflexive solution of a quaternion matrix equation with j-conjugate of the unknowns. Bulletin of the Iranian Mathematical Society. 41, 1–22 (2015)

Dong, C.Z., Wang, Q.W.: The {P, Q, k + 1}-reflexive solution to system of matrices AX = C, XB = D. Mathematical Problems in Engineering. 2015, 9 (2015)

Nacevska, B.: Generalized reflexive and anti-reflexive solution for a system of equations. Filomat. 30, 55–64 (2016)

Hajarian, M.: Convergence properties of BCR method for generalized Sylvester matrix equation over generalized reflexive and anti-reflexive matrices. Linear and Multilinear Algebra. 66(10), 1–16 (2018)

Liu, X.: Hermitian and non-negative definite reflexive and anti-reflexive solutions to AX = B. International Journal of Computer Mathematics. 95(8), 1666–1671 (2018)

Dehghan, M., Shirilord, A.: A generalized modified Hermitian and skew–Hermitian splitting (GMHSS) method for solving complex Sylvester matrix equation. Applied Mathematics and Computation. 348, 632–651 (2019)

Dehghan, M., Hajarian, M.: On the reflexive and anti–reflexive solutions of the generalized coupled Sylvester matrix equations. International Journal of Systems Science. 41(6), 607–625 (2010)

Dehghan, M., Hajarian, M.: On the generalized bisymmetric and skew–symmetric solutions of the system of generalized Sylvester matrix equations. Linear and Multilinear Algebra. 59(11), 1281–1309 (2011)

Hajarian, M., Dehghan, M.: The reflexive and Hermitian reflexive solutions of the generalized Sylvester–conjugate matrix equation. The Bulletin of the Belgian Mathematical Society. 20(4), 639–653 (2013)

Dehghan, M., Hajarian, M.: Two algorithms for finding the Hermitian reflexive and skew–Hermitian solutions of Sylvester matrix equations. Applied Mathematics Letters. 24(4), 444–449 (2011)

Hajarian, M.: Solving the coupled Sylvester like matrix equations via a new finite iterative algorithm. Engineering Computations. 34(5), 1446–1467 (2017)

El-Shazly, N.M.: On the perturbation estimates of the maximal solution for the matrix equation \( X+{A}^T\sqrt{X^{-1}}A=P \). Journal of the Egyptian Mathematical Society. 24, 644–649 (2016)

Khader, M.M.: Numerical treatment for solving fractional Riccati differential equation. Journal of the Egyptian Mathematical Society. 21, 32–37 (2013)

Balaji, S.: Legendre wavelet operational matrix method for solution of fractional order Riccati differential equation. Journal of the Egyptian Mathematical Society. 23, 263–270 (2015)

Moore, B.: Principal component analysis in linear systems: controllability, observability and model reduction. IEEE Transactions on Automatic Control. 26, 17–31 (1981)

Kenney, C.S., Laub, A.J.: Controllability and stability radii for Companion form systems. Mathematics Control Signals and Systems. 1, 239–256 (1988)

Lam, J., Yan, W., Hu, T.: Pole assignment with eigenvalue and stability robustness. International Journal of Control. 72, 1165–1174 (1999)

Avrachenkov, K.E., Lasserre, J.B.: Analytic perturbation of Sylvester matrix equation. IEEE Transactions on Automatic Control. 47, 1116–1119 (2002)

Acknowledgements

The authors are grateful to the referees for their valuable suggestions.

Funding

Not applicable.

Author information

Contributions

MAR proposed the main idea of this paper. MAR and NME-S prepared the manuscript and performed all the steps of the proofs in this research. BIS has made substantial contributions to conception and designed the numerical methods. All authors contributed equally and significantly in writing this paper. All authors read and approved the final manuscript.

Authors’ information

Mohamed A. Ramadan works in Egypt as Professor of Pure Mathematics (Numerical Analysis) at the Department of Mathematics and Computer Science, Faculty of Science, Menoufia University, Shebein El-Koom, Egypt. His areas of expertise and interest are: eigenvalue assignment problems, solution of matrix equations, focusing on investigating, theoretically and numerically, the positive definite solution for class of nonlinear matrix equations, introducing numerical techniques for solving different classes of partial, ordering and delay differential equations, as well as fractional differential equations using different types of spline functions. Naglaa M. El-Shazly works in Egypt as Assistant Professor of Pure Mathematics (Numerical Analysis) at the Department of Mathematics and Computer Science, Faculty of Science, Menoufia University, Shebein El-Koom, Egypt. Her areas of expertise and interest are: eigenvalue assignment problems, solution of matrix equations, theoretically and numerically, iterative positive definite solutions of different forms of nonlinear matrix equations. Basem I. Selim works in Egypt as Assistant Lecturer of Pure Mathematics at the Department of Mathematics and Computer Science, Faculty of Science, Menoufia University, Shebein El-Koom, Egypt. His research interests include Finite Element Analysis, Numerical Analysis, Differential Equations and Engineering, Applied and Computational Mathematics.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Ramadan, M.A., El–shazly, N.M. & Selim, B.I. Iterative algorithm for the reflexive solutions of the generalized Sylvester matrix equation. J Egypt Math Soc 27, 27 (2019). https://doi.org/10.1186/s42787-019-0030-0

DOI: https://doi.org/10.1186/s42787-019-0030-0

Keywords

- Generalized Sylvester matrix equation

- Iterative method

- Reflexive matrices

- Least Frobenius norm solution