Abstract

The main objective of this article is to estimate the unknown population parameters and the reliability function of the generalized Bilal model under type-II censored data. Both maximum likelihood and Bayesian estimates are considered. In the Bayesian framework, although we mainly discuss the squared error loss function, any other loss function can easily be considered. Gibbs sampling is used to draw Markov chain Monte Carlo (MCMC) samples, which, with the help of two different importance sampling techniques, are used to compute the Bayes estimates and to construct the corresponding credible intervals. A simulation study is carried out to examine the accuracy of the resulting Bayesian estimates and to compare them with the corresponding maximum likelihood estimates. An application to a real data set is presented for illustration.

Similar content being viewed by others

Introduction

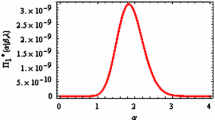

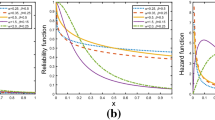

The generalized Bilal (GB) model coincides with the distribution of the median in a sample of size three from the Weibull distribution. It was first introduced by Abd-Elrahman [1], who showed that its failure rate function can be decreasing, increasing, or upside-down bathtub shaped. This flexibility makes the GB model useful in a variety of practical data analyses.
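The median-of-three characterization can be checked with a quick simulation. This is a sketch under our own assumptions: the Weibull parent is taken to have survival function \(e^{-\beta x^{\lambda}}\), so that the median of three draws has CDF \(3F_W^2 - 2F_W^3 = 1 - e^{-2\beta x^{\lambda}}(3 - 2e^{-\beta x^{\lambda}})\), and Python's `random.weibullvariate(alpha, beta)`, whose CDF is \(1 - e^{-(x/\alpha)^{\beta}}\), is mapped onto this parent through the scale \(\alpha = \beta^{-1/\lambda}\). The parameter values are arbitrary illustrations.

```python
import math
import random


def gb_cdf(x: float, beta: float, lam: float) -> float:
    """GB CDF: F(x) = 1 - exp(-2*beta*x**lam) * (3 - 2*exp(-beta*x**lam))."""
    z = beta * x ** lam
    return 1.0 - math.exp(-2.0 * z) * (3.0 - 2.0 * math.exp(-z))


def median_of_three_weibull(beta: float, lam: float, rng: random.Random) -> float:
    """Median of three i.i.d. Weibull draws with survival exp(-beta * x**lam)."""
    scale = beta ** (-1.0 / lam)  # weibullvariate's CDF is 1 - exp(-(x/scale)**shape)
    draws = sorted(rng.weibullvariate(scale, lam) for _ in range(3))
    return draws[1]


def empirical_vs_gb(beta=0.5, lam=1.5, x=1.0, reps=200_000, seed=1):
    """Return (empirical P(median <= x), GB CDF at x)."""
    rng = random.Random(seed)
    hits = sum(median_of_three_weibull(beta, lam, rng) <= x for _ in range(reps))
    return hits / reps, gb_cdf(x, beta, lam)


emp, theo = empirical_vs_gb()
print(f"empirical {emp:.4f} vs GB CDF {theo:.4f}")
```

The two printed values should agree to within Monte Carlo error, which is about 0.001 (one standard error) at 200,000 replications.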

Suppose that n items are put on a life-testing experiment and we observe only the first r failure times, say x1 < x2 < ⋯ < xr. Then, x = (x1, x2, ⋯, xr)′ is called a type-II censored sample. The remaining (n − r) items are censored and are only known to have lifetimes greater than xr. This article is based on a type-II censored sample drawn from the GB model. Type-II censoring has been discussed by many authors, among them Ahmad et al. [2], Raqab [3], Wu et al. [4], Chan et al. [5], ElShahat and Mahmoud [6], and Abd-Elrahman and Niazi [7].

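Like the Weibull distribution, the GB distribution has a cumulative distribution function (CDF) that can be written in either of the following two functional forms:

\[ F(x;\beta,\lambda) = 1 - e^{-2\beta x^{\lambda}}\left(3 - 2e^{-\beta x^{\lambda}}\right), \quad x>0,\ \beta>0,\ \lambda>0, \tag{1} \]

\[ F(x;\theta,\lambda) = 1 - e^{-2(x/\theta)^{\lambda}}\left(3 - 2e^{-(x/\theta)^{\lambda}}\right), \quad x>0,\ \theta>0,\ \lambda>0. \tag{2} \]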

It is well known that, for maximum likelihood (ML) inference, results obtained under one of these two forms carry over to the other through the reparametrization \(\theta = \beta^{-1/\lambda}\) together with the invariance property of ML estimators; see, e.g., Dekking et al. [8]. In this article, formula (1) is used as the CDF of the GB distribution. The corresponding probability density function (PDF) and reliability function are, respectively, given by

\[ f(x;\beta,\lambda) = 6\beta\lambda\, x^{\lambda-1} e^{-2\beta x^{\lambda}}\left(1 - e^{-\beta x^{\lambda}}\right), \quad x>0, \tag{3} \]

and

\[ s(t) = e^{-2\beta t^{\lambda}}\left(3 - 2e^{-\beta t^{\lambda}}\right), \quad t>0. \tag{4} \]

The qth quantile, xq, is an important quantity, especially for generating random variates using the inverse transformation method. In view of (1), following Abd-Elrahman [9], xq of the GB distribution is given by:

where

for \(a_{q}\,=\,\frac {1}{3}\, \arctan (\frac {2\sqrt {q(1-q)}}{2\,q-1})\).
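The building blocks above can be sketched in a few lines, assuming the CDF, PDF, and reliability function take the forms \(F = 1 - e^{-2z}(3 - 2e^{-z})\), \(f = 6\beta\lambda x^{\lambda-1}e^{-2z}(1 - e^{-z})\), and \(s = e^{-2z}(3 - 2e^{-z})\) with \(z = \beta x^{\lambda}\). For the quantile we solve \(3p^2 - 2p^3 = q\) for \(p = 1 - e^{-\beta x_q^{\lambda}}\) directly, which gives \(p = \tfrac12 + \sin\{\tfrac13\arcsin(2q-1)\}\); this trigonometric form is our own and is written differently from (5), though it is intended to pick out the same root in [0, 1]. The helper names are hypothetical.

```python
import math
import random


def gb_reliability(t: float, beta: float, lam: float) -> float:
    """s(t) = exp(-2z) * (3 - 2 exp(-z)), with z = beta * t**lam."""
    z = beta * t ** lam
    return math.exp(-2.0 * z) * (3.0 - 2.0 * math.exp(-z))


def gb_cdf(x: float, beta: float, lam: float) -> float:
    return 1.0 - gb_reliability(x, beta, lam)


def gb_pdf(x: float, beta: float, lam: float) -> float:
    """f(x) = 6 beta lam x**(lam-1) exp(-2z) (1 - exp(-z)), with z = beta * x**lam."""
    z = beta * x ** lam
    return 6.0 * beta * lam * x ** (lam - 1.0) * math.exp(-2.0 * z) * (-math.expm1(-z))


def gb_quantile(q: float, beta: float, lam: float) -> float:
    """x_q with F(x_q) = q, via the root p in [0, 1] of 3p^2 - 2p^3 = q."""
    p = 0.5 + math.sin(math.asin(2.0 * q - 1.0) / 3.0)
    p = max(p, 0.0)  # guard against a tiny negative value from rounding at q = 0
    return (-math.log1p(-p) / beta) ** (1.0 / lam)


def type2_censored_sample(n: int, r: int, beta: float, lam: float, rng: random.Random):
    """First r order statistics of n GB variates drawn by the inverse transform method."""
    return sorted(gb_quantile(rng.random(), beta, lam) for _ in range(n))[:r]


print(round(gb_reliability(0.9, 0.41417, 1.29926), 5))  # about 0.78002
```

With the censored-sample MLEs reported in the “Data analysis” section, `gb_reliability` evaluates to about 0.78002, in line with the reported MLE of s(0.9).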

The rest of this paper is organized as follows.

In the “Maximum likelihood estimation” section, the ML estimates of β and λ are obtained. Using the missing information principle, the variance-covariance matrix of the unknown population parameters is obtained and used to construct the asymptotic confidence intervals for β, λ, and the reliability function s(t). In the “Bayesian estimation” section, two different importance sampling techniques are introduced; each is used separately to compute the Bayes estimates of β, λ, and s(t) and to construct their corresponding credible intervals. In the “Simulation study” section, Monte Carlo simulations are carried out to compare the performances of the proposed estimators.

Finally, in the “Data analysis” section, the methods are illustrated on a real lifetime data set.

Maximum likelihood estimation

It follows from (1) and (3) that, based on a given type-II censored sample x drawn from the GB distribution, the likelihood function of the population parameters β and λ is given by:

where

When λ is known

In this case, for fixed λ, say λ = λ(0), let θ = 1/β and \(y_{i}=x_{i}^{\lambda ^{(0)}}\), i = 1, 2, ⋯, r. Then, y1, ⋯, yr is a type-II censored sample from the Bilal(θ) distribution. Abd-Elrahman and Niazi [7] established the existence and uniqueness of the maximum likelihood estimate (MLE) of the parameter θ, say \(\hat \theta _{M}\). The MLE of β is then given by \(\hat \beta _{M}\left (\lambda ^{(0)}\right)=1/\hat \theta _{M}\), so \(\hat \beta _{M}\left (\lambda ^{(0)}\right)\) also exists and is unique.

Now, we provide an iterative technique for finding \(\hat \beta _{M}\left (\lambda ^{(0)}\right)\) as follows. Let,

In view of (6) and (7), the likelihood equation of β is then given by:

For ν=0,1,2,⋯, we calculate \(\hat \beta _{M}({\lambda }^{(0)})\) by using the following formula:

iteratively until some level of accuracy is reached.
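For reference, the log-likelihood behind (6) can be written out directly from (3) and the survival function (4). The expansion below is our own, so its grouping may differ from the W1, W2j notation of (7) and (8); up to an additive constant, and with λ = λ(0) held fixed, the log-likelihood, the β-score whose root the iteration (8) targets, and its derivative read:

```latex
\begin{aligned}
\ell(\beta,\lambda) &= r\ln\beta + r\ln\lambda + (\lambda-1)\sum_{j=1}^{r}\ln x_j
  - 2\beta\Big[\sum_{j=1}^{r}x_j^{\lambda} + (n-r)\,x_r^{\lambda}\Big] \\
 &\quad + \sum_{j=1}^{r}\ln\big(1-e^{-\beta x_j^{\lambda}}\big)
  + (n-r)\ln\big(3-2e^{-\beta x_r^{\lambda}}\big), \\[4pt]
\frac{\partial\ell}{\partial\beta} &= \frac{r}{\beta}
  - 2\Big[\sum_{j=1}^{r}x_j^{\lambda} + (n-r)\,x_r^{\lambda}\Big]
  + \sum_{j=1}^{r}\frac{x_j^{\lambda}}{e^{\beta x_j^{\lambda}}-1}
  + \frac{2(n-r)\,x_r^{\lambda}\,e^{-\beta x_r^{\lambda}}}{3-2e^{-\beta x_r^{\lambda}}}, \\[4pt]
\frac{\partial^{2}\ell}{\partial\beta^{2}} &= -\frac{r}{\beta^{2}}
  - \sum_{j=1}^{r}\frac{x_j^{2\lambda}\,e^{\beta x_j^{\lambda}}}{\big(e^{\beta x_j^{\lambda}}-1\big)^{2}}
  - \frac{6(n-r)\,x_r^{2\lambda}\,e^{-\beta x_r^{\lambda}}}{\big(3-2e^{-\beta x_r^{\lambda}}\big)^{2}} < 0 .
\end{aligned}
```

Since the second derivative is negative for every β > 0, while the score tends to +∞ as β → 0⁺ and to the negative limit \(-2[\sum_j x_j^{\lambda} + (n-r)x_r^{\lambda}]\) as β → ∞, the β-score has exactly one root. A bracketing method such as bisection is therefore a safe fallback if the fixed-point iteration oscillates.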

Remark 1

Note that all of the functions W1 and W2j, j = 1, 2, ⋯, r, which appear in (8), require an initial value for β, say \(\hat \beta ^{(0)}\). This initial value can be obtained from the available type-II censored sample by treating it as if it were complete; see Ng et al. [10]. We use the moment estimator of β as the starting point of the iterations (8). That is, in view of (3), \(\hat \beta ^{(0)}\) is given by

When β is known

When β is assumed to be known, say β(0), it follows from (6) that the likelihood equation of λ is given by

where W1 and W2j, j = 1, 2, ⋯, r, are as given by (7) after replacing β and λ(0) by β(0) and λ, respectively. To establish the existence and uniqueness of the MLE of λ, the following theorem is needed.

Theorem 1

For a given fixed value β = β(0), the MLE of λ, \(\hat \lambda _{M}\left (\beta ^{(0)}\right)\), exists and is unique.

Proof

See Appendix. □

The MLE \(\hat \lambda _{M}\left (\beta ^{(0)}\right)\) can be iteratively obtained by using Newton’s method, i.e.,

for ν=0,1,2,⋯, where \({\mathcal {G}}_{1}(\cdot,\,\lambda |{\mathbf {x}})\) is as given by (10) and \({\mathcal {G}}_{2}(\cdot,\,\lambda |{\mathbf {x}})\) is the second derivative of lnL(·, λ|x) with respect to (w.r.t.) λ, which is given in the “Appendix” section.
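Differentiating the same log-likelihood expansion with respect to λ (our own algebra, so the grouping may differ from (10)) gives the score below, and a Newton step of the type described for (11) then reads as shown. The second derivative can be taken from the “Appendix” section or, as a simple alternative, approximated by a central difference of the score.

```latex
\begin{aligned}
\frac{\partial\ell}{\partial\lambda} &= \frac{r}{\lambda} + \sum_{j=1}^{r}\ln x_j
  - 2\beta\Big[\sum_{j=1}^{r}x_j^{\lambda}\ln x_j + (n-r)\,x_r^{\lambda}\ln x_r\Big] \\
 &\quad + \beta\sum_{j=1}^{r}\frac{x_j^{\lambda}\ln x_j}{e^{\beta x_j^{\lambda}}-1}
  + \frac{2(n-r)\,\beta\,x_r^{\lambda}\ln x_r\,e^{-\beta x_r^{\lambda}}}{3-2e^{-\beta x_r^{\lambda}}}, \\[4pt]
\lambda^{(\nu+1)} &= \lambda^{(\nu)} - \left.\frac{\partial\ell/\partial\lambda}{\partial^{2}\ell/\partial\lambda^{2}}\right|_{\beta=\beta^{(0)},\ \lambda=\lambda^{(\nu)}} .
\end{aligned}
```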

Remark 2

An initial value for λ, \(\hat \lambda ^{(0)}_{M}\), can be obtained as follows: (1) calculate the sample coefficient of variation (CV) from the type-II censored sample as if it were complete; (2) equate the sample CV to the population CV, which depends on λ only, to obtain an equation in λ; (3) take \(\hat \lambda ^{(0)}_{M}\) as the solution of this equation, which provides a good starting point for (11). This technique has been used by, e.g., Kundu and Howlader [11] and Abd-Elrahman [1].

Here, the population CV of the GB distribution is given by

When both β and λ are unknown

In this case, an initial value for λ, \(\hat \lambda ^{(0)}\), is first obtained as described in Remark 2 of the “When β is known” section. Once \(\hat \lambda ^{(0)}\) is obtained, an initial value for β, \(\hat \beta ^{(0)}\), can be calculated as the right-hand side of (9) after replacing λ(0) by \(\hat \lambda ^{(0)}\).
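The two starting values can be sketched as follows, under our own moment formulas: integrating \(k x^{k-1} s(x)\) with s as in (4) gives \(E(X^k) = \Gamma(1 + k/\lambda)\,\beta^{-k/\lambda}\,(3\cdot 2^{-k/\lambda} - 2\cdot 3^{-k/\lambda})\), so the population CV depends on λ alone and the mean equation can be solved for β in closed form. We assume these agree with (12) and (9), and we solve the CV equation by bisection on the assumption that the CV decreases in λ. The sample CV uses `statistics.stdev` (divisor r − 1), which reproduces the CV of 0.4206 quoted for the 20 observations in the “Data analysis” section.

```python
import math
import statistics


def gb_raw_moment(k: int, beta: float, lam: float) -> float:
    """E[X^k] = Gamma(1 + k/lam) beta^(-k/lam) (3*2^(-k/lam) - 2*3^(-k/lam))."""
    a = k / lam
    return math.gamma(1.0 + a) * beta ** (-a) * (3.0 * 2.0 ** (-a) - 2.0 * 3.0 ** (-a))


def gb_cv(lam: float) -> float:
    """Population CV; beta cancels, so beta = 1 is used."""
    m1, m2 = gb_raw_moment(1, 1.0, lam), gb_raw_moment(2, 1.0, lam)
    return math.sqrt(m2 / m1 ** 2 - 1.0)


def initial_lambda(sample_cv: float, lo: float = 0.05, hi: float = 50.0,
                   tol: float = 1e-10) -> float:
    """Bisection for gb_cv(lam) = sample_cv, assuming gb_cv is decreasing in lam."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if gb_cv(mid) > sample_cv:
            lo = mid  # population CV still too large: the root lies at larger lambda
        else:
            hi = mid
    return 0.5 * (lo + hi)


def initial_beta(sample_mean: float, lam: float) -> float:
    """Solve E[X] = sample_mean for beta at fixed lambda."""
    c = gb_raw_moment(1, 1.0, lam)  # E[X] when beta = 1
    return (c / sample_mean) ** lam


observed = [0.32, 0.47, 0.52, 0.59, 0.77, 0.81, 0.81, 0.9, 0.96, 1.18,
            1.20, 1.20, 1.31, 1.35, 1.43, 1.51, 1.62, 1.74, 1.87, 1.89]
mean = statistics.mean(observed)
cv = statistics.stdev(observed) / mean
lam0 = initial_lambda(cv)
beta0 = initial_beta(mean, lam0)
print(f"mean={mean:.4f} cv={cv:.4f} lambda0={lam0:.4f} beta0={beta0:.4f}")
```

These values only start the iterations, so they need not match the reported \(\hat\lambda^{(0)} = 1.7385\) and \(\hat\beta^{(0)} = 0.6147\) digit for digit.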

Based on the initial values \(\hat \beta ^{(0)}\) and \(\hat \lambda ^{(0)}\), an updated value for β, \(\hat \beta ^{(1)}\), can be obtained by using (8). Similarly, based on the pair (\(\hat \beta ^{(1)},\hat \lambda ^{(0)}\)), an updated value for λ, \(\hat \lambda ^{(1)}\), can be obtained by using (11), and so on. As a stopping rule, the iterations are terminated at some step s < 1000 once the level of accuracy \(\varepsilon \le 1.2\times 10^{-7}\) is reached, where ε is defined as

The limiting pair of estimates \(\left (\hat \beta ^{(s)}, \hat \lambda ^{(s)}\right)\) is taken as the maximizer of the likelihood function (6) with respect to the unknown population parameters β and λ. That is, \(\hat \beta _{M}\,=\,\hat \beta ^{(s)}\) and \(\hat \lambda _{M}\,=\,\hat \lambda ^{(s)}\).

Substituting the MLEs of β and λ into (4) then gives, by the invariance property, the MLE of the reliability function s(t) at t = t0.
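The alternating scheme can be sketched as follows. This is an illustration under stated assumptions, not the author’s code: the log-likelihood is our own expansion of (6) from (3) and (4); each one-dimensional likelihood equation is solved by bisection on its score (relying on concavity in β and on Theorem 1 for λ) instead of the fixed-point form (8) or Newton’s step (11); and ε is taken to be the larger of the two absolute relative changes between successive iterates, which is our assumption about the stopping rule.

```python
import math


def beta_score(beta, lam, xs, n):
    """d log L / d beta for the sorted type-II censored sample xs (r = len(xs))."""
    r = len(xs)
    y = [x ** lam for x in xs]
    g = math.exp(-beta * y[-1])
    return (r / beta - 2.0 * (sum(y) + (n - r) * y[-1])
            + sum(yj * math.exp(-beta * yj) / (-math.expm1(-beta * yj)) for yj in y)
            + 2.0 * (n - r) * y[-1] * g / (3.0 - 2.0 * g))


def lambda_score(lam, beta, xs, n):
    """d log L / d lambda for the same sample."""
    r = len(xs)
    lx = [math.log(x) for x in xs]
    y = [x ** lam for x in xs]
    g = math.exp(-beta * y[-1])
    return (r / lam + sum(lx)
            - 2.0 * beta * (sum(yj * l for yj, l in zip(y, lx)) + (n - r) * y[-1] * lx[-1])
            + beta * sum(yj * l * math.exp(-beta * yj) / (-math.expm1(-beta * yj))
                         for yj, l in zip(y, lx))
            + 2.0 * (n - r) * beta * y[-1] * lx[-1] * g / (3.0 - 2.0 * g))


def root_of_decreasing(f, lo=1e-8, hi=1.0, rtol=1e-13):
    """Bisection for a score that is positive near 0 and negative for large arguments."""
    while f(hi) > 0.0:
        lo, hi = hi, 2.0 * hi
    while hi - lo > rtol * hi:
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def gb_mle(xs, n, beta0, lam0, eps=1.2e-7, max_iter=1000):
    """Alternate beta- and lambda-updates until the relative change is at most eps."""
    beta, lam = beta0, lam0
    for _ in range(max_iter):
        beta_new = root_of_decreasing(lambda b: beta_score(b, lam, xs, n))
        lam_new = root_of_decreasing(lambda lm: lambda_score(lm, beta_new, xs, n))
        eps_now = max(abs(beta_new - beta) / beta_new, abs(lam_new - lam) / lam_new)
        beta, lam = beta_new, lam_new
        if eps_now <= eps:
            break
    return beta, lam


def gb_reliability(t, beta, lam):
    z = beta * t ** lam
    return math.exp(-2.0 * z) * (3.0 - 2.0 * math.exp(-z))


xs = [0.32, 0.47, 0.52, 0.59, 0.77, 0.81, 0.81, 0.9, 0.96, 1.18,
      1.20, 1.20, 1.31, 1.35, 1.43, 1.51, 1.62, 1.74, 1.87, 1.89]
beta_hat, lam_hat = gb_mle(xs, n=30, beta0=0.6147, lam0=1.7385)
print(beta_hat, lam_hat, gb_reliability(0.9, beta_hat, lam_hat))
```

On these 20 observations (n = 30, r = 20) the output should be close to the reported \(\hat\beta_M = 0.41417\), \(\hat\lambda_M = 1.29926\), and \(\widehat{s(0.9)}_M = 0.78002\) if our expansion of (6) matches the author’s; we have not checked this digit for digit.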

Fisher information matrix (FIM)

In this section, the Fisher information matrix (FIM) about the underlying population parameters under type-II censoring is obtained by using the missing information principle. Suppose that x = (x1, x2, …, xr)′ and Y = (Xr+1, Xr+2, …, Xn)′ denote the observed (censored) ordered data and the unobserved ordered data, respectively. The vector Y can be regarded as the missing data. Combining x and Y forms the complete data set W. It is easy to show that the information about the unknown parameters β and λ provided by W is given by:

with c1=1.92468,c2=0.05606,c3=1.79061, and c4=0.11211.
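One of these constants can be checked independently. Under our own derivation from (3), \(-\partial^2 \ln f/\partial\beta^2 = \beta^{-2}\{1 + Z^2 e^{Z}/(e^{Z}-1)^2\}\) with \(Z = \beta X^{\lambda}\), and Z has the Bilal density \(6e^{-2z}(1 - e^{-z})\). Assuming the (β, β) element of (13) is \(n c_1/\beta^2\) (our reading), this gives \(c_1 = 1 + 6\int_0^\infty z^2 e^{-2z}/(e^{z}-1)\,dz\), and expanding \(1/(e^z - 1)\) as a geometric series yields the closed form \(c_1 = 1 + 12\{\zeta(3) - 9/8\}\). The sketch evaluates the integral with composite Simpson’s rule, the tool suggested later for (17), and compares it with that closed form.

```python
import math

APERY = 1.2020569031595942  # zeta(3)


def simpson(f, a, b, m=6000):
    """Composite Simpson's rule on [a, b] with m (even) subintervals."""
    if m % 2:
        m += 1
    h = (b - a) / m
    total = f(a) + f(b)
    for i in range(1, m):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def integrand(z):
    """6 z^2 e^{-2z} / (e^z - 1), extended by continuity (value 0) at z = 0."""
    if z == 0.0:
        return 0.0
    return 6.0 * z * z * math.exp(-2.0 * z) / math.expm1(z)


c1_simpson = 1.0 + simpson(integrand, 0.0, 30.0)  # tail beyond z = 30 is negligible
c1_series = 1.0 + 12.0 * (APERY - 9.0 / 8.0)
print(f"{c1_simpson:.6f} {c1_series:.6f}")  # expected: about 1.924683 for both
```

Both values round to the quoted c1 = 1.92468.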

For s = r + 1,r + 2,…,n, the conditional distribution of each Xs∈Y given Xs > xr follows the truncated underlying distribution with left truncation at xr, see Ng et al. [10]. Therefore, in view of (1) and (3), the PDF of Xs∈Y given Xs > xr is given by

Hence, the expected ordered unobserved (missing) information matrix IY(β, λ), which is related to the vector Y, is then given by

To evaluate the expectations involved in (15), the following expressions are required.

1) Part 1

where

Denote \( I_{0} = {{{\lim }_{y\,\to \,0^{+}}}} I^{(0)}(y) = 0.32078\), \( I_{1} = {{{\lim }_{y\,\to \,0^{+}}}} I^{(1)}(y) = 0.00934\) and \( I_{2} ={{{\lim }_{y\,\to \,0^{+}}}} I^{(2)}(y) = 0.13177\). Then, (16) can be rewritten as

The integrals involved in (17) can be calculated by using a simple numerical integration tool, e.g., Simpson’s rule.

2) Part 2

where \(I_{3 }\,=\,{\lim }_{y\,\to \, 0^{+}} I^{(3)}(y)\,=\,-\frac {9}{4}\,+\,2\, \sum _{i=1}^{\infty }\,i^{-3}\,=\,0.154114\,\).
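The value of I3 is easy to confirm numerically. Since \(\sum_{i\ge1} i^{-3} = \zeta(3)\), \(I_3 = 2\zeta(3) - 9/4\), which also equals \(2\sum_{i\ge3} i^{-3}\) because the first two terms of the series contribute exactly 9/4 after doubling. As a side observation of ours, not a claim made in the text, \(1 + 6 I_3\) reproduces the constant c1 = 1.92468 listed after (13).

```python
import math


def i3_truncated(terms: int = 200_000) -> float:
    """I_3 = -9/4 + 2 * sum_{i=1}^{terms} i^{-3}; the dropped doubled tail is below 1/terms^2."""
    return -9.0 / 4.0 + 2.0 * math.fsum(i ** -3.0 for i in range(1, terms + 1))


def i3_tail_form(terms: int = 200_000) -> float:
    """Equivalent form 2 * sum_{i>=3} i^{-3}."""
    return 2.0 * math.fsum(i ** -3.0 for i in range(3, terms + 1))


i3 = i3_truncated()
print(f"I3 = {i3:.6f}, 1 + 6*I3 = {1 + 6 * i3:.5f}")
```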

Now, in view of (17) and (18), it is easy to show that the elements \(I_{ij}\), i, j = 1, 2, of \(I_{\mathbf{Y}|\mathbf{x}}(\beta,\lambda)\), after division by (n − r), are given by

where

and

Note that the elements \(I_{ij}\), i, j = 1, 2, constitute the Fisher information related to each Xs, s = r+1, r+2, ⋯, n, where Xs is distributed as in (14). Therefore, in view of (19)–(21), the elements of the FIM about the parameters β and λ related to the complete data set W can be obtained as \(n\, {\lim }_{y\,\to \, 0^{+}}\, I_{i\,j},\,i,\,j=1,2\), which gives the same result as (13).

Therefore, the FIM about the two unknown parameters β and λ contained in a given type-II censored sample (x1, x2, ⋯, xr)′ is given by

Asymptotic variances and covariance

Once Ix(β, λ) is calculated at \(\beta \,=\,\hat \beta _{M}\) and \(\lambda \,=\,\hat \lambda _{M}\), the asymptotic variance-covariance matrix of the MLEs of the two unknown parameters β and λ is given by

Once \({I^{-1}_{\mathbf {x}}\left (\hat \beta _{M},\,\hat \lambda _{M}\right)}\) is obtained, the asymptotic variance of the MLE of s(t0) can be calculated as the Cramér-Rao lower bound for the variance of any unbiased estimator of s(t0). That is,

Consequently, the asymptotic (1 − α)100% confidence intervals (ACIs) for β, λ, and s(t0) are given by

respectively, where \(Z_{\frac {\alpha }{2}}\) is the upper α/2 percentage point, i.e., the \((1\,-\,{\frac {\alpha }{2}})\) quantile, of the standard normal distribution.
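The interval formulas and the delta-method variance of the MLE of s(t0) can be sketched as below. The covariance matrix is passed in as an input rather than computed here; the gradient of \(s(t) = e^{-2z}(3 - 2e^{-z})\), \(z = \beta t^{\lambda}\), uses our own differentiation \(\partial s/\partial z = -6e^{-2z}(1 - e^{-z})\), and `statistics.NormalDist` supplies \(Z_{\alpha/2}\). The function names are hypothetical.

```python
import math
from statistics import NormalDist


def wald_interval(estimate: float, se: float, level: float = 0.99):
    """estimate -/+ Z_{alpha/2} * se, with Z_{alpha/2} the (1 - alpha/2) normal quantile."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    return estimate - z * se, estimate + z * se


def reliability_gradient(t: float, beta: float, lam: float):
    """(ds/dbeta, ds/dlambda) for s(t) = exp(-2z)(3 - 2exp(-z)), z = beta * t**lam."""
    tl = t ** lam
    z = beta * tl
    ds_dz = -6.0 * math.exp(-2.0 * z) * (1.0 - math.exp(-z))
    return ds_dz * tl, ds_dz * z * math.log(t)


def reliability_se(t: float, beta: float, lam: float, cov) -> float:
    """Delta-method s.e.; cov = [[var_beta, cov_beta_lambda], [cov_beta_lambda, var_lambda]]."""
    gb, gl = reliability_gradient(t, beta, lam)
    var = gb * gb * cov[0][0] + 2.0 * gb * gl * cov[0][1] + gl * gl * cov[1][1]
    return math.sqrt(var)


# Reported standard errors from the "Data analysis" section, 99% level:
print(wald_interval(1.29926, 0.24595))  # lambda
print(wald_interval(0.78002, 0.06340))  # s(0.9)
```

With the reported standard errors, the λ and s(0.9) intervals agree with the 99% ACIs in the “Data analysis” section to about four decimals.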

Bayesian estimation

It is assumed that β and λ have independent gamma priors with hyperparameters a1 > 0 and b1 > 0 for β, and a2 > 0 and b2 > 0 for λ. That is,

Moreover, Jeffreys’ non-informative priors can be obtained as a limiting special case of (24) by setting a1 = b1 = a2 = b2 = 0.

The hyperparameters can be chosen to suit the prior belief of the experimenter in terms of the location and variability of the prior distribution.

Combining (6) and (24), the joint posterior density function of β and λ is then given by

where T1 and T2 are as given in (6). The Bayes estimate of any function g(β, λ) under a squared error loss function (SEL) is given by

The integrals involved in (26) are usually not obtainable in closed form. Lindley’s approximation [12] may be used to compute such a ratio of integrals, but it cannot be used to construct credible intervals. Therefore, following Kundu and Howlader [11], we approximate (26) by using the Gibbs sampling procedure to draw MCMC samples, which can be used to compute the Bayes estimates and to construct the corresponding credible intervals as suggested by Chen and Shao [13]. We propose the following two importance sampling techniques.

First importance sampling technique (IS1)

The joint posterior density function (25) can be rewritten as

where \(\pi ^{\star }_{1}(\beta |\lambda,\,{\mathbf {x}})\) is a gamma density function given by

\(\pi ^{\star }_{2}(\lambda |{\mathbf {x}})\) is a proper density function given by

and

Now, since \(\pi ^{\star }_{1}(\beta |\lambda,\,{\mathbf {x}})\) is a gamma density, it is quite simple to generate from it. The density \(\pi ^{\star }_{2}(\lambda |{\mathbf {x}})\) is proper but not of a standard form, so λ is generated from it by the method developed by Devroye [14]. This method requires (29) to be a log-concave density function, which is established by the following theorem.

Theorem 2

The density \(\pi ^{\star }_{2}(\lambda |{\mathbf {x}})\), given by (29), is log-concave.

Proof. See the “Appendix” section.

Using Theorem 2, a simulation-based consistent estimate of g(β, λ) can be obtained by using the following algorithm.

Algorithm 1.

Step 1: Generate λ from \(\pi ^{\star }_{2}(\cdot |{\mathbf {x}})\), by using the method developed by Devroye [14].

Step 2: Generate β from \(\pi ^{\star }_{1}(\cdot |\lambda,\,{\mathbf {x}})\).

Step 3: Repeat Steps 1 and 2 to obtain (βi,λi), i=1, 2, ⋯, M.

Step 4: For i=1, 2, ⋯, M, calculate gi as g(βi, λi); and ωi as \(\frac {h_{3}(\beta _{i},\,\lambda _{i})}{\sum _{i=1}^{M}\, h_{3}(\beta _{i},\,\lambda _{i})},\) where h3(β, λ) is as given by (30).

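Step 5: Under a SEL function, an approximate Bayes estimate of g(β, λ) and its corresponding estimated variance can be obtained, respectively, as \(\hat g(\beta,\lambda) = \sum_{i=1}^{M}\omega_i\, g_i\) and \(\widehat{V}\{g(\beta,\lambda)\} = \sum_{i=1}^{M}\omega_i\,\{g_i - \hat g(\beta,\lambda)\}^2\).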
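A compact sketch of IS1 follows. It rests on our own factorization of (25), which we believe matches (27)–(30) but have not verified against them: \(\pi^{\star}_1(\beta|\lambda,\mathbf x)\) is taken as Gamma\((a_1 + r,\ b_1 + 2T(\lambda))\) with \(T(\lambda) = \sum_{j=1}^r x_j^{\lambda} + (n-r)x_r^{\lambda}\) (so \(b_1 + 2T = 2\xi(\lambda)\) in the notation of the “Appendix” section), \(\pi^{\star}_2(\lambda|\mathbf x) \propto \lambda^{a_2+r-1}e^{-b_2\lambda}\prod_j x_j^{\lambda-1}/\xi(\lambda)^{a_1+r}\), and \(h_3(\beta,\lambda) = \prod_j (1 - e^{-\beta x_j^{\lambda}})\,(3 - 2e^{-\beta x_r^{\lambda}})^{n-r}\). To keep it short, Step 1 replaces Devroye’s exact log-concave sampler [14] by an approximate inverse-CDF draw from π2* tabulated on a grid over (0, `lam_max`]; `lam_max` and `grid` are hypothetical tuning choices.

```python
import bisect
import math
import random


def log_pi2(lam, xs, n, a1, b1, a2, b2):
    """Unnormalised log pi2*(lambda | x) under our factorisation of (25)."""
    r = len(xs)
    xi = b1 / 2.0 + sum(x ** lam for x in xs) + (n - r) * xs[-1] ** lam
    return ((a2 + r - 1) * math.log(lam) - b2 * lam
            + (lam - 1.0) * sum(math.log(x) for x in xs) - (a1 + r) * math.log(xi))


def log_h3(beta, lam, xs, n):
    """log h3 = sum_j log(1 - e^{-beta x_j^lam}) + (n - r) log(3 - 2 e^{-beta x_r^lam})."""
    r = len(xs)
    return (sum(math.log(-math.expm1(-beta * x ** lam)) for x in xs)
            + (n - r) * math.log(3.0 - 2.0 * math.exp(-beta * xs[-1] ** lam)))


def is1_estimate(g, xs, n, M=15_000, a1=0.0, b1=0.0, a2=0.0, b2=0.0,
                 lam_max=8.0, grid=4000, seed=0):
    """Algorithm 1 with a grid-based draw in Step 1; returns (estimate, variance)."""
    rng = random.Random(seed)
    r = len(xs)
    lams = [lam_max * (k + 0.5) / grid for k in range(grid)]   # hypothetical grid
    logs = [log_pi2(lm, xs, n, a1, b1, a2, b2) for lm in lams]
    top = max(logs)
    cdf, acc = [], 0.0
    for v in logs:
        acc += math.exp(v - top)
        cdf.append(acc)
    values, logw = [], []
    for _ in range(M):                                           # Step 3: repeat
        lam = lams[bisect.bisect_left(cdf, rng.random() * acc)]  # Step 1 (approximate)
        rate = b1 + 2.0 * (sum(x ** lam for x in xs) + (n - r) * xs[-1] ** lam)
        beta = rng.gammavariate(a1 + r, 1.0 / rate)              # Step 2 (scale = 1/rate)
        values.append(g(beta, lam))                              # Step 4: g_i
        logw.append(log_h3(beta, lam, xs, n))                    # Step 4: log h3
    top = max(logw)
    w = [math.exp(v - top) for v in logw]
    total = sum(w)
    est = sum(wi * gi for wi, gi in zip(w, values)) / total     # Step 5
    var = sum(wi * (gi - est) ** 2 for wi, gi in zip(w, values)) / total
    return est, var


xs = [0.32, 0.47, 0.52, 0.59, 0.77, 0.81, 0.81, 0.9, 0.96, 1.18,
      1.20, 1.20, 1.31, 1.35, 1.43, 1.51, 1.62, 1.74, 1.87, 1.89]
beta_is1, beta_var_is1 = is1_estimate(lambda b, lm: b, xs, n=30, M=5000)
print(beta_is1, math.sqrt(beta_var_is1))
```

Passing a different `g`, for example the reliability function at t0 = 0.9, gives the corresponding IS1 estimate from the same construction.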

Second importance sampling technique (IS2)

In this technique, the joint posterior density function (25) is rewritten as

where \(\pi ^{\star }_{1}(\beta |\lambda,\,{\mathbf {x}})\) is as given by (28), while \(\pi ^{\star }_{3}(\lambda |{\mathbf {x}})\) is a gamma density function given by

This is a valid gamma density, since b2 > 0 and \(\frac {x_{r}}{x_{j}}>1,\, j=1,\,2,\,\cdots, r-1\). Therefore,

In this technique, since \(\pi ^{\star }_{1}(\beta |\lambda,\,{\mathbf {x}})\) and \(\pi ^{\star }_{3}(\lambda |{\mathbf {x}})\) are both gamma densities, it is quite simple to generate from them. Therefore, a simulation-based consistent estimate of g(β, λ) can be obtained using the following algorithm:

Algorithm 2.

Step 1: Generate λ⋆ from \(\pi ^{\star }_{3}(\cdot |{\mathbf {x}})\).

Step 2: Generate β⋆ from \(\pi ^{\star }_{1}(\cdot |\lambda ^{\star },\,{\mathbf {x}})\).

Step 3: Repeat Steps 1 and 2 to obtain \((\beta ^{\star }_{i},\lambda ^{\star }_{i})\), i=1, 2, ⋯, M.

Step 4: For i=1,2, ⋯, M, calculate \(g^{\star }_{i}\) as \({g(\beta ^{\star }_{i},\,\lambda ^{\star }_{i})}\); and \(\omega ^{\star }_{i}\) as \(\frac {h_{4}\left (\beta ^{\star }_{i},\,\lambda ^{\star }_{i}\right)}{\sum _{i=1}^{M} h_{4}\left (\beta ^{\star }_{i},\lambda ^{\star }_{i}\right)},\) where h4(β, λ) is as given by (34).

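Step 5: In this case, under a SEL function, the approximate Bayes estimate of g(β, λ) and its corresponding estimated variance can be obtained, respectively, as \(\hat g^{\star}(\beta,\lambda) = \sum_{i=1}^{M}\omega^{\star}_i\, g^{\star}_i\) and \(\widehat{V}^{\star}\{g(\beta,\lambda)\} = \sum_{i=1}^{M}\omega^{\star}_i\,\{g^{\star}_i - \hat g^{\star}(\beta,\lambda)\}^2\).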
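IS2 can be sketched in the same spirit. We assume \(\pi^{\star}_3(\lambda|\mathbf x)\) is Gamma\((a_2 + r,\ b_2 + \sum_{j=1}^{r-1}\ln(x_r/x_j))\), which is our reading of the gamma density above given the remark that b2 > 0 and \(x_r/x_j > 1\). Instead of coding h4 from (34), the weights are computed generically as the unnormalised log posterior minus the log proposal density, which is valid importance sampling whatever the exact arrangement of h4. The function returns the draws and normalised weights so they can be reused for credible intervals; all names are hypothetical.

```python
import math
import random


def gamma_logpdf(x, shape, rate):
    """Log density of Gamma(shape, rate) at x > 0."""
    return shape * math.log(rate) + (shape - 1.0) * math.log(x) - rate * x - math.lgamma(shape)


def log_posterior(beta, lam, xs, n, a1, b1, a2, b2):
    """Unnormalised log posterior: gamma priors times the type-II censored GB likelihood."""
    r = len(xs)
    y = [x ** lam for x in xs]
    return ((a1 + r - 1) * math.log(beta) - b1 * beta
            + (a2 + r - 1) * math.log(lam) - b2 * lam
            + (lam - 1.0) * sum(math.log(x) for x in xs)
            - 2.0 * beta * (sum(y) + (n - r) * y[-1])
            + sum(math.log(-math.expm1(-beta * yj)) for yj in y)
            + (n - r) * math.log(3.0 - 2.0 * math.exp(-beta * y[-1])))


def is2_draws(xs, n, M=15_000, a1=0.0, b1=0.0, a2=0.0, b2=0.0, seed=0):
    """Steps 1-4 of Algorithm 2: draws (beta_i, lambda_i) and normalised weights omega_i."""
    rng = random.Random(seed)
    r = len(xs)
    rate3 = b2 + sum(math.log(xs[-1] / x) for x in xs[:-1])  # assumed rate of pi3*
    betas, lams, logw = [], [], []
    for _ in range(M):
        lam = rng.gammavariate(a2 + r, 1.0 / rate3)                    # Step 1
        rate1 = b1 + 2.0 * (sum(x ** lam for x in xs) + (n - r) * xs[-1] ** lam)
        beta = rng.gammavariate(a1 + r, 1.0 / rate1)                   # Step 2
        log_q = gamma_logpdf(lam, a2 + r, rate3) + gamma_logpdf(beta, a1 + r, rate1)
        betas.append(beta)
        lams.append(lam)
        logw.append(log_posterior(beta, lam, xs, n, a1, b1, a2, b2) - log_q)
    top = max(logw)                                                    # Step 4
    w = [math.exp(v - top) for v in logw]
    total = sum(w)
    return betas, lams, [wi / total for wi in w]


def weighted_mean_var(values, weights):
    """Step 5: estimate sum(w_i g_i) and estimated variance sum(w_i (g_i - estimate)^2)."""
    est = sum(wi * gi for wi, gi in zip(weights, values))
    return est, sum(wi * (gi - est) ** 2 for wi, gi in zip(weights, values))


xs = [0.32, 0.47, 0.52, 0.59, 0.77, 0.81, 0.81, 0.9, 0.96, 1.18,
      1.20, 1.20, 1.31, 1.35, 1.43, 1.51, 1.62, 1.74, 1.87, 1.89]
betas, lams, w = is2_draws(xs, n=30)
beta_is2, beta_var = weighted_mean_var(betas, w)
lam_is2, lam_var = weighted_mean_var(lams, w)
ess = 1.0 / sum(wi * wi for wi in w)  # effective sample size of the weights
print(beta_is2, lam_is2, ess)
```

Because the β-proposal omits the factor collected in h3 (or h4), it can sit noticeably below the posterior for moderate r, so the printed effective sample size \(1/\sum\omega^{\star 2}_i\) is worth monitoring.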

By using the idea of Chen and Shao [13], based on (gi, ωi) (or \((g^{\star }_{i},\,\omega ^{\star }_{i})\)), i = 1, 2, ⋯, M, the (1 − α)100% highest posterior density (HPD) credible interval of g(β, λ) related to the IS1 (or IS2) technique can be easily obtained.
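A minimal weighted version of this construction, under our own simplifications: sort the sampled values, accumulate their normalised weights, scan every candidate lower endpoint, and keep the shortest interval whose accumulated weight reaches 1 − α. It applies equally to the IS1 pairs \((g_i, \omega_i)\) and the IS2 pairs \((g^{\star}_i, \omega^{\star}_i)\); the slack `1e-12` that absorbs rounding in the cumulative sums is an assumption of this sketch.

```python
import bisect
import math
import random


def weighted_hpd(values, weights, level=0.99):
    """Shortest interval [g_(j), g_(k)] carrying at least `level` of the normalised weight."""
    pairs = sorted(zip(values, weights))
    total = sum(w for _, w in pairs)
    vals, cum, acc = [], [], 0.0
    for v, w in pairs:
        acc += w / total
        vals.append(v)
        cum.append(acc)  # cum[i] = total weight of g_(0), ..., g_(i)
    best = None
    for j in range(len(vals)):
        below = cum[j - 1] if j > 0 else 0.0
        # first k >= j whose cumulative weight reaches below + level
        k = bisect.bisect_left(cum, below + level - 1e-12, lo=j)
        if k == len(vals):  # too little weight left above g_(j); larger j only worse
            break
        if best is None or vals[k] - vals[j] < best[1] - best[0]:
            best = (vals[j], vals[k])
    return best


# Demo: N(0, 2^2) draws importance-weighted towards N(0, 1).
rng = random.Random(3)
draws = [rng.gauss(0.0, 2.0) for _ in range(20_000)]
weights = [math.exp(-3.0 * d * d / 8.0) for d in draws]
print(weighted_hpd(draws, weights, level=0.95))  # should be close to (-1.96, 1.96)
```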

Simulation study

To compare the performance of the proposed Bayes estimators with that of the MLEs, we carry out a simulation study using different sample sizes (n), different effective sample sizes (r), and different priors (non-informative and informative). The non-informative prior, prior 1, has a1 = b1 = a2 = b2 = 0, and the informative prior, prior 2, has a1 = 2, b1 = 4, a2 = 3, and b2 = 4.

The IMSL [15] routines DRNUN and DRNGAM are used in the generation of the uniform and gamma random variates, respectively.

In computing the estimates, we first generate β and λ from the gamma(a1, b1) and gamma(a2, b2) distributions, respectively. The generated values are β0 = 0.5439 and λ0 = 0.7468, and the corresponding value of the reliability function at t0 = 0.9 is 0.8299. Second, we generate 5000 samples from the GB distribution with β = 0.5439 and λ = 0.7468. For the importance sampling techniques (IS1 and IS2), we set M = 15,000 when applying Algorithm 1 or 2. The average estimate of 𝜗⋆ and the associated mean squared error (MSE) are computed, respectively, as

\[ \bar{\vartheta}^{\star} = \frac{1}{5000}\sum_{k=1}^{5000}\vartheta^{\star}_{k} \quad\text{and}\quad \mathrm{MSE}(\vartheta^{\star}) = \frac{1}{5000}\sum_{k=1}^{5000}\left(\vartheta^{\star}_{k}-\vartheta\right)^{2}, \]

where \(\vartheta ^{\star }_{k}\) stands for an estimator (ML or Bayes) of β, λ, or s(0.9), at the kth iteration, and 𝜗 stands for β0 = 0.5439, λ0 = 0.7468, or s(0.9) = 0.8299.

The computational results are displayed in Tables 1, 2, and 3, where the first entry in each cell is the average estimate and the second entry, given in parentheses, is the corresponding MSE. Tables 1, 2, and 3 show that:

1) As expected, the MSEs of all estimates (ML or Bayes) decrease as n or r increases.

2) The Bayes estimators under prior 1 or prior 2 based on the IS2 technique generally perform better than the corresponding estimators based on the IS1 technique in terms of average bias and MSE.

3) In all cases, the MSEs of the MLEs are less than the corresponding Bayes estimators under prior 1 by using IS1 technique.

On the other hand, the performances in terms of average bias and the MSE of the Bayes estimators under prior 1 by using IS2 technique and the MLE are very similar.

4) For small and moderate sample or censoring sizes, the Bayes estimators under prior 2 by using IS2 technique clearly outperform the MLEs in terms of average bias and MSE.

5) For large sample or censoring sizes, the performances in terms of average bias and the MSE of the Bayes estimators under prior 2 with IS2 technique and the MLE are very similar.

Data analysis

This section illustrates the methods presented in the “Maximum likelihood estimation” and “Bayesian estimation” sections using a real data set. The data set is from Hinkley [16] and consists of thirty successive values of March precipitation (in inches) in Minneapolis/St. Paul:

0.32, 0.47, 0.52, 0.59, 0.77, 0.81, 0.81, 0.9, 0.96, 1.18, 1.20, 1.20, 1.31, 1.35, 1.43, 1.51, 1.62, 1.74, 1.87, 1.89, 1.95, 2.05, 2.10, 2.20, 2.48, 2.81, 3.0, 3.09, 3.37, 4.75.

These data were used by Barreto-Souza and Cribari-Neto [17] to fit the generalized exponential-Poisson (GEP) distribution, and by Abd-Elrahman [1, 9] to fit the Bilal and GB distributions. For the complete sample, the MLEs of β and λ are 0.4168 and 1.2486, respectively, obtained as described in the “Maximum likelihood estimation” section with r = n. The negative log-likelihood, the Kolmogorov-Smirnov (K-S) test statistic, and its p value at these MLEs are 38.1763, 0.0532, and 1.0, respectively. This p value indicates that the GB distribution fits the data very well. These results agree with those in Abd-Elrahman [1], where, under form (2), the MLEs of θ and λ are \(0.4168^{-1/1.2486} = 2.016\) and 1.2486, respectively.
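The reported fit can be re-checked in a few lines. The sketch assumes form (1) is \(F(x) = 1 - e^{-2\beta x^{\lambda}}(3 - 2e^{-\beta x^{\lambda}})\) and form (2) is the same expression with \(\beta x^{\lambda}\) replaced by \((x/\theta)^{\lambda}\), so that \(\theta = \beta^{-1/\lambda}\). It converts the complete-sample MLEs, confirms that both forms give the same CDF, and computes the Kolmogorov-Smirnov distance \(D_n = \sup_x |F_n(x) - F(x)|\) against the 30 observations.

```python
import math

MARCH_PRECIP = [0.32, 0.47, 0.52, 0.59, 0.77, 0.81, 0.81, 0.9, 0.96, 1.18,
                1.20, 1.20, 1.31, 1.35, 1.43, 1.51, 1.62, 1.74, 1.87, 1.89,
                1.95, 2.05, 2.10, 2.20, 2.48, 2.81, 3.0, 3.09, 3.37, 4.75]


def cdf_form1(x, beta, lam):
    z = beta * x ** lam
    return 1.0 - math.exp(-2.0 * z) * (3.0 - 2.0 * math.exp(-z))


def cdf_form2(x, theta, lam):
    z = (x / theta) ** lam
    return 1.0 - math.exp(-2.0 * z) * (3.0 - 2.0 * math.exp(-z))


def ks_distance(data, cdf):
    """D_n = max_i max(i/n - F(x_(i)), F(x_(i)) - (i-1)/n); ties are handled automatically."""
    xs = sorted(data)
    n = len(xs)
    return max(max((i + 1) / n - cdf(x), cdf(x) - i / n) for i, x in enumerate(xs))


beta_hat, lam_hat = 0.4168, 1.2486       # complete-sample MLEs reported above
theta_hat = beta_hat ** (-1.0 / lam_hat)  # about 2.016
D = ks_distance(MARCH_PRECIP, lambda x: cdf_form1(x, beta_hat, lam_hat))
print(f"theta = {theta_hat:.4f}, K-S D = {D:.4f}")
```

The distance comes out at about 0.053, in line with the reported K-S statistic of 0.0532.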

Suppose now that only the first 20 data points are observed (n = 30, r = 20). The sample mean and CV of these 20 observations are 1.1225 and 0.4206, respectively. Equating the right-hand side of (12) to 0.4206 and solving for λ gives the unique solution \(\hat\lambda^{(0)} = 1.7385\). Based on this value of λ, (9) gives \(\hat\beta^{(0)} = 0.6147\). The iterative scheme described in the “Maximum likelihood estimation” section starts with the initial values λ(0) = 1.7385 and β(0) = 0.6147. The estimates of β and λ converge to \(\hat \beta _{M}\,=\,0.41417\) and \(\hat \lambda _{M}\,=\, 1.29926\), with absolute relative errors below \(1.2\times 10^{-10}\). From these data, we have

Hence,

Therefore, the estimated variance-covariance matrix of \(\hat {\beta }_{M}\) and \(\,\hat \lambda _{M}\) is

Therefore, the standard errors of the MLEs of β and λ are 0.07576 and 0.24595, respectively.

The MLE of s(0.9) and its corresponding asymptotic standard error are 0.78002 and 0.06340, respectively. The 99 % ACIs for β, λ, and s(0.9) are (0.21897, 0.60938), (0.66575, 1.93278), and (0.61672, 0.94331), respectively.

On the other hand, the simulation study in the “Simulation study” section shows that the Bayes estimators based on the IS2 technique perform better than the corresponding estimators based on the IS1 technique in terms of average bias and MSE. Therefore, under the non-informative prior, we compute the Bayes estimates by generating an importance sample of size M = 15,000 with the corresponding importance weights according to Algorithm 2. The Bayes estimates of β, λ, and s(0.9), with their standard errors in parentheses, are \(\hat \beta _{IS2}= 0.39034 \, (0.04907)\), \(\hat \lambda _{IS2}= 1.34910\, (0.19207)\), and \(\widehat {s(0.9)}_{IS2}=0.79899\, (0.03866)\). The 99% credible intervals for β, λ, and s(0.9) are (0.24320, 0.43781), (0.85632, 1.92996), and (0.73657, 0.91060), respectively.

Concluding remarks

(1) In this article, the ML and Bayes estimation of the parameters as well as the reliability function of the GB distribution based on a given type-II censored sample are obtained.

(2) The existence and uniqueness theorem for the ML estimator of the population parameter λ, when β is assumed to be known, is established. An iterative procedure for finding the ML estimators of the two unknown population parameters is also provided. The elements of the FIM are obtained, and they have been used in turn for calculating the asymptotic confidence intervals of λ, β, and the reliability function.

(3) Two different importance sampling techniques have been proposed, which can be used for further Bayesian studies.

Appendix

Proof of Theorem 1

It follows from (10) that the second derivative of ln L(β, λ|x) with respect to λ is given by

where \(z\,=\,{\beta \,x^{\lambda }_{r}}\), \(f_1(z) = e^{z}\left[z + e^{z}(1 - e^{-z})(3 - 2e^{-z})\right]\), \(y_{j}\,=\, {\beta \,x^{\lambda }_{j}}\), j = 1, 2, ⋯, r, and \(f_{2}(y_{j}) = 2e^{2y_{j}} - 5e^{y_{j}} + 3 + y_{j}e^{y_{j}}\).

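Now, to prove that \({\mathcal {G}}_{2}(\beta,\,\lambda |{\mathbf {x}})<0\), it is sufficient to show that f1(z) > 0 and f2(yj) > 0. Clearly f1(z) > 0, since \(1 - e^{-z} > 0\) and \(3 - 2e^{-z} > 0\) for z > 0. On the other hand, by expanding the exponential functions involved in f2(yj) about yj = 0, f2(yj) can be rewritten as

\[ f_{2}(y_{j}) = \sum_{k=2}^{\infty}\frac{2^{k+1} + k - 5}{k!}\,y_{j}^{k} = \frac{5}{2}y_{j}^{2} + \frac{7}{3}y_{j}^{3} + \cdots > 0, \]

because the constant and linear terms cancel and every remaining coefficient is positive.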

Therefore, \(\frac {\partial ^{2}{\ln L(\beta,\,\lambda |{\mathbf {x}})}}{\partial {\lambda ^{2}}}<0\). This implies that the ML estimate, \(\hat \lambda _{M}\), for λ is unique.
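The positivity step can be checked numerically. Expanding \(2e^{2y}\), \(-5e^{y}\), and \(ye^{y}\) term by term (our own algebra) gives the power series \(f_2(y) = \sum_{k\ge2}(2^{k+1}+k-5)\,y^k/k!\), whose coefficients are positive for every k ≥ 2; the sketch compares this series with the closed form at a few points.

```python
import math


def f2(y: float) -> float:
    """f2(y) = 2e^{2y} - 5e^{y} + 3 + y e^{y} from the proof of Theorem 1."""
    return 2.0 * math.exp(2.0 * y) - 5.0 * math.exp(y) + 3.0 + y * math.exp(y)


def f2_series(y: float, terms: int = 80) -> float:
    """Power series sum_{k>=2} (2^{k+1} + k - 5) y^k / k!, truncated after `terms` terms."""
    return math.fsum((2 ** (k + 1) + k - 5) * y ** k / math.factorial(k)
                     for k in range(2, terms))


for y in (1e-3, 0.1, 1.0, 3.0):
    print(y, f2(y), f2_series(y))
```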

To ensure that \(\hat \lambda _{M}\) exists, following Balakrishnan and Kateri [18], we rewrite (10) as h1(λ) = h2(λ), where h1(λ) = r/λ and

where W1 and W2j, j=1, 2, ⋯, r, are as given in (10).

Note that,

where \(\eta _{1}(\beta)\,=\,{\frac {\beta }{3\,e^{\beta }-2}}\), \(\eta _{2}(\beta)\,=\,\frac {\beta }{e^{\beta }-1}\),

Furthermore, it follows from (A1), that

which implies that ℓ1 < ℓ2. Therefore, h2(λ) is an increasing function of λ, whereas h1(λ) is positive, strictly decreasing, and tends to +∞ as λ → 0⁺. This ensures that h1(λ) = h2(λ) holds exactly once, at some value λ = λ◇. Hence, the theorem is proved.

Proof of Theorem 2

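It follows from (29) that the second derivative of the natural logarithm of \(\pi ^{\star }_{2}(\lambda |{\mathbf {x}})\) with respect to λ is given by

\[ \frac{d^{2}\ln \pi^{\star}_{2}(\lambda|\mathbf{x})}{d\lambda^{2}} = -\frac{a_{2} + r - 1}{\lambda^{2}} - (a_{1} + r)\,\frac{\xi''(\lambda)\,\xi(\lambda) - \{\xi'(\lambda)\}^{2}}{\{\xi(\lambda)\}^{2}}, \]

where \(\xi (\lambda)\,=\,\frac {b_{1}}{2}+(n\,-\,r)\,{x^{\lambda }_{r}}+\sum _{j=1}^{r}{x^{\lambda }_{j}}\). To show that \(\frac{d^{2}\ln \pi^{\star}_{2}(\lambda|\mathbf{x})}{d\lambda^{2}}<0\), it is sufficient to show that \(\xi_1 = \xi''(\lambda)\,\xi(\lambda) - \{\xi'(\lambda)\}^{2} > 0\). This is true because, writing \(\xi(\lambda) = \frac{b_1}{2} + \sum_{j=1}^{r} w_j x_j^{\lambda}\) with \(w_j = 1\) for j < r and \(w_r = n - r + 1\),

\[ \xi_{1} = \frac{b_{1}}{2}\sum_{j=1}^{r} w_{j}\,x_{j}^{\lambda}(\ln x_{j})^{2} + \frac{1}{2}\sum_{i=1}^{r}\sum_{j=1}^{r} w_{i}w_{j}\,x_{i}^{\lambda}x_{j}^{\lambda}\,(\ln x_{i} - \ln x_{j})^{2} > 0 \]

whenever the observed xj are not all equal.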

Hence, the theorem is proved.

Abbreviations

- CDF: Cumulative distribution function
- CV: Coefficient of variation
- FIM: Fisher information matrix
- GB: Generalized Bilal
- GEP: Generalized exponential-Poisson distribution
- IMSL: International Mathematical and Statistical Library
- IS1: First importance sampling technique
- IS2: Second importance sampling technique
- K-S: Kolmogorov-Smirnov test statistic
- MCMC: Markov chain Monte Carlo
- ML: Maximum likelihood
- MLE: Maximum likelihood estimate
- MSE: Mean squared error
- PDF: Probability density function
- SEL: Squared error loss function

References

Abd-Elrahman, A. M.: A new two-parameter lifetime distribution with decreasing, increasing or upside-down bathtub shaped failure rate. Commun. Stat-Theor. M. 46(18), 8865–8880 (2016).

Ahmad, K. E., Moustafa, H. M., Abd-Elrahman, A. M.: Approximate Bayes estimation for mixtures of two Weibull distributions under type-2 censoring. J. Stat. Comput. Sim. 58, 269–285 (1997).

Raqab, M. Z.: Exact bounds for the mean of total time on test under type-II censoring samples. J. Stat. Plan. Infer. 134(2), 318–331 (2005).

Wu, J., Wu, C., Tsai, M.: Optimal parameter estimation of the two-parameter bathtub-shaped lifetime distribution based on a type II right censored sample. Appl. Math. Comput. 167(2), 807–819 (2005).

Chan, P. S., Ng, H. K. T., Balakrishnan, N., Zhou, Q.: Point and interval estimation for extreme-value regression model under type-II censoring. Comput. Stat. Data Anal. 52, 4040–4058 (2008).

ElShahat, M. A. T., Mahmoud, A. A. M.: A study on the mixture of exponentiated-Weibull distribution part II (the method of Bayesian estimation). Pak. J. Stat. Oper. Res. XII(4), 709–737 (2016).

Abd-Elrahman, A. M., Niazi, S. F.: Approximate Bayes estimators applied to the Bilal model. J. Egypt. Math. Soc. 25, 65–70 (2017). http://doi.org/10.1016/j.joems.2016.05.001.

Dekking, F. M., Kraaikamp, C., Lopuhaä, H. P., Meester, L. E.: A modern introduction to probability and statistics: understanding why and how. Springer-Verlag, London (2005). ISBN 1-85233-896-2.

Abd-Elrahman, A. M.: Utilizing ordered statistics in lifetime distributions production: a new lifetime distribution and applications. J. Probab. Stat. Sci. 11(2), 153–164 (2013).

Ng, H. K. T., Chan, P. S., Balakrishnan, N.: Estimation of parameters from progressively censored data using EM algorithm. Comput. Stat. Data Anal. 39, 371–386 (2002).

Kundu, D., Howlader, H.: Bayesian inference and prediction of the inverse Weibull distribution for type-II censored data. Comput. Stat. Data Anal. 54, 1547–1558 (2010).

Lindley, D. V.: Approximate Bayesian method. Trabajos de Estadistica. 31, 223–237 (1980).

Chen, M. -H., Shao, Q. -M.: Monte Carlo estimation of Bayesian credible and HPD intervals. J. Comput. Graph. Stat. 8(1), 69–92 (1999).

Devroye, L.: A simple algorithm for generating random variates with a log-concave density function. Comput. 33, 247–257 (1984).

IMSL: IMSL STAT/LIBRARY user’s manual. IMSL, Inc, Houston (1991). https://m.tau.ac.il/~vaxman/imsl/imsl1_77.pdf.

Hinkley, D.: On quick choice of power transformations. Appl. Stat. 26, 67–96 (1977).

Barreto-Souza, W., Cribari-Neto, F.: A generalization of the exponential-Poisson distribution. Stat. Probab. Lett. 79, 2493–2500 (2009).

Balakrishnan, N., Kateri, M.: On the maximum likelihood estimation of parameters of Weibull distribution based on complete and censored data. Stat. Probab. Lett. 78, 2971–2975 (2008).

Acknowledgements

The author would like to express his sincere thanks to the editors and the referees for their helpful comments, which improved the presentation of this article. This article was presented at the International Conference on Mathematics, Trends and Development (ICMTD17), The Egyptian Mathematical Society, 28–30 December 2017, Cairo, Egypt, with ID number STA-12.

Funding

The author declares that he received no funding.

Author information


Contributions

The author read and approved the final manuscript.


Ethics declarations

Competing interests

The author declares that he has no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Abd-Elrahman, A. Reliability estimation under type-II censored data from the generalized Bilal distribution. J Egypt Math Soc 27, 1 (2019). https://doi.org/10.1186/s42787-019-0001-5


Keywords

- Maximum likelihood estimation

- Fisher information matrix

- Bayesian estimation

- Gibbs sampling

- Importance sampling techniques