Abstract

Background

This study tests the effects of data collection mode on patient responses to multi-item measures, such as the Patient-Reported Outcomes Measurement Information System (PROMIS®), and to single-item measures, such as the Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE) and Numerical Rating Scale (NRS) measures.

Methods

Adult cancer patients were recruited from five cancer centers and administered measures of anxiety, depression, fatigue, sleep disturbance, pain intensity, pain interference, ability to participate in social roles and activities, global mental and physical health, and physical function. Patients were randomized to complete the measures on paper, by an interactive voice response (IVR) system, or on a tablet computer; 595, 596, and 589 patients, respectively, were included in the analysis. We evaluated differential item functioning (DIF) by method of data collection using the R package lordif. For constructs that showed no DIF, we concluded equivalence across modes if the equivalence margin, defined as ± 0.20 × pooled SD, completely contained the 95% confidence interval (CI) for the difference in mean scores. If the 95% CI fell entirely outside the equivalence margin, we concluded a systematic score difference between modes. If the 95% CI partly overlapped the equivalence margin, we concluded neither equivalence nor difference.

Results

For all constructs, no DIF of any kind was found across the three modes. Scores on paper and tablet were more comparable with each other than were scores from IVR with either of the other modes, but none of the 95% CIs fell completely outside the equivalence margins; for CIs not fully contained in the margins, we therefore established neither equivalence nor difference. Percentages of missing values were comparable for paper and tablet modes and higher for IVR (2.3% to 6.5%, depending on the measure) than for paper and tablet (0.7% to 3.3%, depending on the measure and mode), which was attributed to random technical difficulties experienced at some centers.

Conclusion

Across all mode comparisons, some measures had CIs not completely contained within the margin of small effect. The two visual modes agreed more closely than the visual-auditory pairs. Compared with paper and tablet, IVR may induce differences in scores that are unrelated to the constructs being measured. Survey users should consider using IVR only when paper and computer administration are not feasible.


Background

Capturing patients’ perspectives of quality of life (QOL) effectively and efficiently is critical to designing and evaluating interventions to ameliorate the impact of cancer and its treatments. Patient-reported outcomes (PROs) provide a unique method of collecting these perspectives directly from the patient, without interpretation by health care providers or others. One issue being addressed in the PRO literature is whether items remain related to the construct in identical ways for all individuals when an instrument originally developed and used in one mode is adapted for other modes of administration. A common mode is the paper and pencil self-administered questionnaire (PSAQ), in which the respondent marks responses on a paper questionnaire. A computerized self-administered questionnaire (CSAQ) is a method of data collection in which the respondent uses a computer (or mobile device) to complete a questionnaire. An interactive voice response (IVR) system is an automated telephone system that navigates the respondent through the questionnaire with recordings of the questions and response options. As an alternative to computer-based data collection that allows a computer to detect voice and/or keypad inputs via telephone, IVR offers potential advantages such as convenience, affordability, reliability, and clinical feasibility. Electronic administration of PROs has been recommended whenever possible in adult oncology [1], because it supports a comprehensive process for screening, a feedback system that makes scores available to patients and/or providers in a timely fashion, service provision, and data management [1,2,3].

Recent studies, systematic reviews, and meta-analyses evaluating the equivalence of paper- versus computer-based electronic administration of PRO measures have found evidence of equivalence between the two [4,5,6,7,8,9,10]. However, most of these authors indicated that their findings could not be generalized to all forms of electronic PRO administration and called for further randomized comparability trials testing how PROs vary across data collection modes.

Some studies have evaluated the equivalence of the visual formats associated with paper- and computer screen-based administration and aural formats such as IVR [11,12,13]. With 112 patients answering 28 Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE) items in three formats (i.e., paper, computer screen, and IVR administration), Bennett, Dueck, Mitchell et al. [11] showed moderate to high equivalence among modes using a randomized crossover design, in which each participant answered the same questionnaires in more than one mode. One limitation of their study is that the screen-based or IVR questionnaires incorporated conditional branching or skip patterns that the paper mode did not, which may induce mode-specific response styles or non-response. Lundy, Coons, Flood et al. [13] concluded mode equivalence among paper, handheld, tablet, IVR, and web administration of the EQ-5D-5L; each participant answered the questionnaire in three modes, and the order of modes varied among participants. However, participants may have recalled their responses to the same questions answered earlier in a different mode. Bjorner, Rose, Gandek, et al. [12] used a randomized crossover design in which 923 adults answered parallel Patient-Reported Outcomes Measurement Information System (PROMIS®) static forms for fatigue, depression, and physical function using IVR, paper, personal digital assistant, or personal computer. Using multigroup confirmatory factor analysis and item response theory (IRT), their analyses supported a lack of differential item functioning in the three PROMIS domains as well as a lack of clinically significant score differences across modes.

In the clinical realm, IVR has a host of advantages, such as ease of access, increased perceived anonymity and privacy, and greater researcher control than other modes [14]. The principal downside to the use of IVR in clinical settings is that researchers cannot assume that an instrument shown to have the intended dimensionality, reliability, responsiveness, or interpretability in a visual format has the same qualities when administered by IVR [14]. Weiler et al. [15] found that, while there were no differences in the number of symptoms recorded by IVR versus paper versions of allergic rhinitis response diaries, there were more missing data with IVR, and patients overwhelmingly preferred to enter their data via paper and pencil. In comparing three versions of the CAHPS survey (standard print, illustration-enhanced, and telephone IVR), Shea et al. [16] found that administration times were shorter for IVR among individuals with low literacy levels. However, administration times were longer for IVR than for the print versions among Spanish speakers with high literacy levels, while completion times were similar across modes for English speakers with high literacy levels.

One must be mindful of the possible effects of switching administration modes and plan to formally evaluate their effects on non-response (both scale-level and item-level) and measurement error. To our knowledge, no studies have investigated mode effects for numerical rating scales (NRS). The current study systematically tests the effect of three data collection methods (i.e., PSAQ; CSAQ, which in this study is a tablet; and IVR) on patient responses and potential measurement error associated with PROMIS, PRO-CTCAE, and NRS measures across a variety of domains, including global health, physical function, social function, anxiety, depression, fatigue, sleep disturbance, and pain. The study uses a randomized parallel-groups design, in which each patient answers the questionnaires in only one mode and therefore sees or hears each question only once. This design avoids possible memory effects. With regard to forced response versus allowing patients to skip items, many applications of electronic data capture do not allow missing responses, whereas in PSAQ respondents cannot be forced to respond to every item. To minimize features not intrinsic to the modes, we opted to forgo forced response in IVR and CSAQ and allowed item-level nonresponse in all modes. Allowing research participants to skip questions they do not wish to answer is also consistent with our IRB’s position on questionnaire-based research.

Methods

Sample

This study is part of a larger study whose primary aim was to assess the convergent validity of PROMIS, PRO-CTCAE, and NRS by comparing item responses for two groups based on ECOG performance status (PS; 0–1 vs. 2–4). A secondary aim was to assess the relationship between survival status and PRO scores. To achieve adequate power for all primary and secondary analyses, the sample size for the primary study was based on a superiority analysis of survival between high and low PRO score groups. In the current equivalence study comparing modes of administration, we claim equivalence when the confidence interval of the difference in outcomes between modes lies within a predetermined equivalence margin that represents a clinically acceptable range of difference. Assuming σ = 2, based on normative data on the overall QOL NRS that include cancer trial patients [17], an equivalence margin Δ = 0.45 (an effect size of 0.225, which may start to be considered non-negligible on a 0–10 scale), a two-sided type I error level of 5%, and a sample size of 1184, we obtain 94% statistical power. With Δ = 0.40 (an effect size of 0.20), we obtain 86% statistical power.
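The power figures above can be checked with a normal-approximation sketch of the confidence-interval approach, which is equivalent to two one-sided tests at 2.5% each. It assumes, since the text does not say, that the 1184 patients are split evenly into the two arms being compared (592 each), that the true difference between modes is zero, and that σ is known. Under these assumptions it gives approximately 0.94 for Δ = 0.45 and 0.86 for Δ = 0.40. The function name `equivalence_power` is illustrative and not taken from the study's analysis code.

```python
from statistics import NormalDist


def equivalence_power(delta, sigma, n_per_arm, ci_level=0.95, true_diff=0.0):
    """Approximate probability that the two-sided CI for a difference in means
    lies entirely inside (-delta, delta).

    Assumptions (illustrative only): equal arm sizes, known common SD sigma,
    normal approximation for the difference in sample means.
    """
    std_normal = NormalDist()
    z = std_normal.inv_cdf(0.5 + ci_level / 2)  # 1.96 for a 95% CI
    se = sigma * (2.0 / n_per_arm) ** 0.5        # SE of a difference of two means
    # The CI d_hat +/- z*se sits inside the margin exactly when
    # -delta + z*se < d_hat < delta - z*se.
    upper = (delta - z * se - true_diff) / se
    lower = (-delta + z * se - true_diff) / se
    # If the margin is narrower than the CI half-width, equivalence is impossible.
    return max(0.0, std_normal.cdf(upper) - std_normal.cdf(lower))


if __name__ == "__main__":
    n = 1184 // 2  # assumption: 1184 split evenly between the two compared arms
    for delta in (0.45, 0.40):
        power = equivalence_power(delta, sigma=2.0, n_per_arm=n)
        print(f"delta={delta}: effect size={delta / 2.0:.3f}, power={power:.3f}")
```

Setting `true_diff` to a nonzero value shows how quickly power falls if the modes differ slightly in truth, which the stated calculation does not address.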

There were five participating sites (Mayo Clinic, M.D. Anderson, Memorial Sloan Kettering, Northwestern University, and University of North Carolina). Patients with a diagnosis of cancer who were initiating active anti-cancer treatment within the next seven days, were currently receiving anti-cancer treatment, or had undergone surgery for cancer in the past 14 days were recruited in person by research study associates/data managers when they arrived at a participating institution for a cancer-related appointment. Patients were accrued from the main hospital sites and satellite clinics of each institution. Eligible patients were adults able to use and understand the informed consent and privacy protection documentation (written in English) and to interact with the data collection modes (i.e., read and answer questions on a computer screen, listen to questions and respond using an IVR telephone system, or fill out a paper questionnaire). Each eligible patient provided informed consent. Enrollment and the distribution of accrual across disease groups and institutions were facilitated by a recruitment coordinator.

Enrolled patients were randomized to PSAQ (n = 604), CSAQ (n = 603), or IVR (n = 602). Participants were asked to complete the questionnaires while at the clinic for their visit. A study coordinator handed the participant a folded paper questionnaire booklet (PSAQ arm) or an iPad tablet computer (CSAQ arm), or directed the patient to a landline telephone (i.e., hardwired to a telephone jack) with a keypad (IVR arm). Twenty-eight patients across the three arms did not respond at all, and one patient switched from IVR to PSAQ. Excluding these patients, we analyzed the remaining 595 patients in the PSAQ arm, 596 in the IVR arm, and 589 in the CSAQ arm.

Measures

We used PROMIS short forms and analogous NRS and PRO-CTCAE single-item rating scales. The PROMIS domains included in the study were emotional distress-anxiety, emotional distress-depression, fatigue, pain interference, pain intensity, physical function, satisfaction with social roles, sleep disturbance, global mental health, and global physical health. We administered nine version 1.0 short forms derived from PROMIS item banks: Anxiety 8a, Depression 8a, Fatigue 7a (with two items added from another fatigue short form), Sleep Disturbance 8a, Pain Intensity 3a, Pain Interference 8a, Ability to Participate in Social Roles and Activities 8a, Global Mental Health, Global Physical Health, and Physical Function 10a. PROMIS scores are expressed on the T-score scale; we compared average scores between modes on this scale and did not transform the T-scores to a 0–100 scale.
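As background for reading the T-score results, PROMIS T-scores are a linear transformation of the IRT trait estimate θ, scaled so that the PROMIS reference population has mean 50 and SD 10. The small sketch below, with illustrative function names, converts between the two and expresses a mean T-score or a between-mode T-score difference in reference-SD units. It assumes θ is on the metric where the reference population has mean 0 and SD 1.

```python
def t_score(theta):
    """PROMIS T-score from an IRT trait estimate theta (reference mean 0, SD 1)."""
    return 50.0 + 10.0 * theta


def theta_from_t(t):
    """Inverse of t_score: reference-SD distance from the reference mean."""
    return (t - 50.0) / 10.0


def t_difference_in_sd(diff_points):
    """Express a T-score difference (e.g. between two modes) in reference SDs."""
    return diff_points / 10.0


if __name__ == "__main__":
    print(theta_from_t(44.0))       # a mean T of 44 lies 0.6 SD below the reference mean
    print(t_difference_in_sd(2.0))  # a 2-point mode difference is 0.2 reference SD
```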

The National Cancer Institute (NCI) PRO-CTCAE is a pool of adverse symptom items for patient self-reporting in NCI-sponsored clinical trials. The CTCAE is an existing lexicon of clinician-reported adverse event items required for use in all NCI-sponsored trials. Patient versions of the CTCAE symptom items are intended to provide clinicians with more comprehensive information about the patient experience with treatment when trials are completed and reported. The PRO-CTCAE item bank consists of five “types” of items (present/not present, frequency, severity, interference with usual or daily activities, and amount of symptom). In this study, we included frequency, severity, and interference items. The response options were never, rarely, occasionally, frequently, and almost constantly for frequency; none, mild, moderate, severe, and very severe for severity; and not at all, a little bit, somewhat, quite a bit, and very much for interference.

We used NRS items for overall health-related QOL, for five major QOL domains (i.e., sleep, pain, anxiety, depression, and fatigue), and for each other domain for which a PROMIS measure exists (e.g., social and physical function). NRS and PRO-CTCAE items are scored on 0–10 and 1–5 integer rating scales, respectively. For comparing mean scores, NRS and PRO-CTCAE item scores were linearly transformed to 0–100 scales, with higher scores indicating more of the construct in question (e.g., more fatigue, better physical function). Respondents answered 62 PROMIS items, 16 PRO-CTCAE items, and 11 NRS items.
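The linear transformation to a 0–100 scale can be written as 100 × (x − min)/(max − min). The same factor shows how raw differences translate: one raw point is 10 points on the rescaled 0–10 NRS and 25 points on the rescaled 1–5 PRO-CTCAE scale. The sketch below assumes that item direction has already been handled so that higher raw scores mean more of the construct; the optional `reverse` flag is a hypothetical addition for items where that is not the case, and the text does not say whether any item required it. Function names are illustrative.

```python
def to_0_100(score, lo, hi, reverse=False):
    """Linearly map a raw item score on [lo, hi] to [0, 100].

    reverse=True (hypothetical option) flips direction for items whose raw
    high end means *less* of the construct.
    """
    if not lo <= score <= hi:
        raise ValueError(f"score {score} outside [{lo}, {hi}]")
    scaled = (score - lo) / (hi - lo) * 100.0
    return 100.0 - scaled if reverse else scaled


def diff_to_0_100(raw_diff, lo, hi):
    """Convert a raw mean difference to the 0-100 metric (direction preserved)."""
    return raw_diff * 100.0 / (hi - lo)


if __name__ == "__main__":
    print(to_0_100(5, 0, 10), to_0_100(3, 1, 5))  # NRS 5 and PRO-CTCAE 3 both map to the midpoint
    # 0.5-0.7 raw NRS points correspond to 5-7 points; 0.17-0.34 raw PRO-CTCAE
    # points correspond to 4.25-8.5 points on the 0-100 scale.
    print(diff_to_0_100(0.5, 0, 10), diff_to_0_100(0.34, 1, 5))
```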

Health literacy was measured by a single item, “How confident are you filling out medical forms by yourself?” Based on the screening threshold that Chew et al. [18] found to optimize both sensitivity and specificity, responses of “extremely” and “quite a bit” were coded as having adequate health literacy, and “somewhat,” “a little bit,” and “not at all” were coded as not having adequate health literacy.
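The health literacy coding rule amounts to a simple dichotomization of the five response options. A minimal sketch follows; returning `None` for a skipped item is an assumption made here because item nonresponse was allowed, and the function name is illustrative.

```python
ADEQUATE = {"extremely", "quite a bit"}
NOT_ADEQUATE = {"somewhat", "a little bit", "not at all"}


def adequate_health_literacy(response):
    """Code the single screening item: True = adequate, False = not adequate.

    Returns None for a skipped item (assumption: nonresponse is kept missing
    rather than imputed).
    """
    if response is None:
        return None
    answer = response.strip().lower()
    if answer in ADEQUATE:
        return True
    if answer in NOT_ADEQUATE:
        return False
    raise ValueError(f"unexpected response option: {response!r}")


if __name__ == "__main__":
    print([adequate_health_literacy(r) for r in ("Extremely", "Somewhat", None)])
```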

Measurement equivalence or lack of differential item functioning

A critical step before using instruments to compare scores from different modes of administration is determining whether items have the same meaning to members of different groups [19]. Psychometric concern for measurement equivalence arises whenever group comparisons on observable scores are the focus of research [20]. Once measurement equivalence between modes of administration has been established, quantitative cross-mode comparisons can be meaningfully conducted.

The R package lordif [21] was used to evaluate differential item functioning (DIF) in each of the PROMIS scales. Item-level data for all three types of measures (i.e., PROMIS, PRO-CTCAE, and NRS) were entered for each construct tested. The lordif package assesses DIF using a hybrid ordinal logistic regression/IRT framework. The main purpose of fitting an IRT model in lordif is to obtain IRT trait estimates that serve as the matching criterion. We tested whether each combined item set was unidimensional by conducting confirmatory factor analyses (CFA) that treated the items as ordinal and used the WLSMV estimator in the lavaan R package [22]. Model fit was evaluated with the Comparative Fit Index (CFI ≥ 0.95, very good fit) and the Standardized Root Mean Square Residual (SRMR ≤ 0.08) [23]. We also estimated the proportion of total variance attributable to a general factor (coefficient omega hierarchical, ωh) [24]; values of 0.70 or higher suggest that the item set is sufficiently unidimensional for most analytic procedures that assume unidimensionality [25]. A McFadden pseudo R² change criterion of ≥ 0.02 was used to flag items for DIF [26]. A pseudo R² change below 0.02 indicates a lack of evidence of differential interpretation of an item across modes.
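Two of the quantities above can be sketched directly. lordif compares nested ordinal logistic regression models for each item (trait only; trait plus mode; trait, mode, and their interaction), and the McFadden pseudo R² of a model is 1 − lnL(model)/lnL(intercept-only model). The first function below computes the R² changes between those models from log-likelihoods the user supplies and flags an item when a change reaches 0.02; it illustrates the criterion and is not lordif's code. The second function computes ωh from standardized loadings of an orthogonal bifactor model, one common way to estimate the general-factor share of total variance; the text does not state how ωh was estimated, so that part is an assumption. All example values are hypothetical.

```python
def mcfadden_r2(loglik_model, loglik_null):
    """McFadden pseudo R^2: 1 - lnL(model) / lnL(intercept-only model)."""
    return 1.0 - loglik_model / loglik_null


def dif_r2_changes(ll_null, ll_m1, ll_m2, ll_m3, cutoff=0.02):
    """Pseudo R^2 changes for one item across three nested ordinal models.

    m1: trait only; m2: trait + mode; m3: trait + mode + trait x mode.
    Returns the changes and whether any reaches the cutoff (for nested fits,
    the m1-vs-m3 change is the largest of the three).
    """
    r1, r2, r3 = (mcfadden_r2(ll, ll_null) for ll in (ll_m1, ll_m2, ll_m3))
    changes = {
        "uniform (m1 vs m2)": r2 - r1,
        "overall (m1 vs m3)": r3 - r1,
        "non-uniform (m2 vs m3)": r3 - r2,
    }
    return changes, any(change >= cutoff for change in changes.values())


def omega_hierarchical(general, specific):
    """Omega-h from standardized loadings of an orthogonal bifactor model.

    general:  list of general-factor loadings, one per item.
    specific: dict mapping group-factor name -> {item index: loading}.
    """
    common_general = sum(general) ** 2
    common_specific = sum(sum(f.values()) ** 2 for f in specific.values())
    # Residual variance of each standardized item is 1 minus its communality.
    residual = sum(
        1.0 - g ** 2 - sum(f.get(i, 0.0) ** 2 for f in specific.values())
        for i, g in enumerate(general)
    )
    return common_general / (common_general + common_specific + residual)


if __name__ == "__main__":
    # Hypothetical log-likelihoods for one item (not study data).
    changes, flagged = dif_r2_changes(-1000.0, -600.0, -598.0, -597.0)
    print(changes, "DIF" if flagged else "no DIF")
    # Hypothetical loadings: 4 items, two of which share a group factor.
    print(round(omega_hierarchical([0.6] * 4, {"s1": {0: 0.4, 1: 0.4}}), 3))
```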

Comparison of means by modes of administration

If no DIF is found, differences in scores between modes can be meaningfully interpreted. Because patients were randomized to modes, any observed differences in average scores can then be attributed to characteristics of the modes rather than to DIF. If DIF is found for a certain mode in a given domain, we would score patients using newly derived item parameters for that mode before conducting mean comparisons between modes.

We compared the percentages of missing values among modes using a χ² test of equality of proportions to ensure that missing values were not driving differences across modes. For constructs where lack of DIF was established, we concluded equivalence across modes if the margin of small effect size, defined as ± 0.20 × pooled SD, completely contained the 95% confidence interval for the difference in mean scores. One fifth of the pooled standard deviation represents a small difference, following the observation by Coons et al. [27] that a “small” effect size difference lies between 0.20 SD and 0.49 SD and that such values indicate a minimal difference worthy of attention. If the 95% CI fell completely outside the margin, we concluded a systematic score difference between modes. If the 95% CI partly overlapped the equivalence margin, we concluded neither equivalence nor difference.
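The three-way decision rule can be sketched as follows. The margin is 0.20 times the pooled SD of the two modes being compared, and each comparison is classified by where its 95% CI falls relative to ±margin. The text does not say how the CI was computed; the sketch assumes a normal-approximation CI for a difference in means based on the pooled SD, which with roughly 590 patients per arm is practically identical to a t-based interval. Function names and example inputs are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def pooled_sd(sd1, n1, sd2, n2):
    """Pooled standard deviation of two independent groups."""
    return sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))


def classify(ci_low, ci_high, margin):
    """Apply the three-way rule to a CI for a difference in means."""
    if -margin < ci_low and ci_high < margin:
        return "equivalent"    # CI completely inside +/- margin
    if ci_high < -margin or ci_low > margin:
        return "different"     # CI completely outside the margin
    return "inconclusive"      # CI partly overlaps the margin


def compare_modes(mean1, sd1, n1, mean2, sd2, n2, k=0.20, ci_level=0.95):
    """Mean difference, its CI, the small-effect margin, and the verdict."""
    sp = pooled_sd(sd1, n1, sd2, n2)
    margin = k * sp
    diff = mean1 - mean2
    z = NormalDist().inv_cdf(0.5 + ci_level / 2)
    half_width = z * sp * sqrt(1 / n1 + 1 / n2)
    ci = (diff - half_width, diff + half_width)
    return diff, ci, margin, classify(ci[0], ci[1], margin)


if __name__ == "__main__":
    # Hypothetical summary statistics on a 0-100 scale (not study data).
    print(compare_modes(40.0, 26.0, 590, 39.0, 26.0, 596))
```

For instance, a margin of ±5.19 on a 0–100 scale corresponds to a pooled SD of about 25.95 points; a difference whose point estimate exceeds 5.19 but whose CI still crosses it, like the CSAQ-IVR NRS fatigue difference of 5.69 reported in the Results, is classified as inconclusive by this rule.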

Results

Sample

Patient characteristics were similar across modes, consistent with successful randomization (Table 1). Distributions of age, proportions of men and women, proportion of non-white race, proportions in the four education categories, and proportions married, employed or on sick leave, and with adequate health literacy did not differ significantly among modes of administration. Among medical characteristics, cancer type, disease stage, ECOG performance score, types of cancer treatment in the past two weeks, and current treatment intention (i.e., curative or palliative) also did not differ between modes. The only statistically significant difference in patient characteristics was in the proportion of Hispanic patients: 4% in the PSAQ, 8% in the CSAQ, and 5% in the IVR arm.

Differential item functioning

For the item sets combining PROMIS, NRS, and PRO-CTCAE items, CFA fit based on CFI and SRMR was excellent for global physical health, global mental health, anxiety, depression, fatigue, sleep disturbance, pain intensity, pain interference, and ability to participate in social roles and activities. For physical function, CFI was 0.982 but SRMR was 0.126, above the 0.08 threshold. For all constructs, ωh values exceeded 0.70. For all constructs, no DIF of any kind was found across the three modes (Table 2 and “Appendix 1”). All items in all analyses had a McFadden pseudo R² change below the DIF criterion of 0.02. Because no item exhibited DIF among PSAQ, CSAQ, and IVR, there was no need to allow item parameters to differ by mode. Across modes, patients interpreted items in similar ways.

Comparisons of scores based on modes

Table 3 presents the summary scores for each domain and mode. The average PROMIS T-scores indicated that the study population was not demonstrably different from the general population in most constructs. The exception was physical function: our sample’s PROMIS physical function scores were 0.6 SD lower than those of the general population. The average difference scores between modes are presented along with the margins of small effect size in Table 4 for ease of comparison. Scores on PSAQ and CSAQ were the most similar: 29 of 37 CIs of the mean differences were completely contained within the margins of small effect size, in which case we inferred equivalence. Fewer results indicated equivalence between IVR and the other modes: 14 of 37 CIs for CSAQ-IVR and 9 CIs for PSAQ-IVR were within the margins of small effect size. None of the 95% CIs that were not within the margins were completely outside them, which is inconclusive in that it indicates neither equivalence nor difference. In some instances, the observed point estimate of the difference lay outside the equivalence margins, indicating a clearer lack of equivalence. For example, the difference between CSAQ and IVR on NRS fatigue, 5.69, was outside the equivalence margin of [−5.19, 5.19]. Further such differences occurred between IVR and PSAQ for NRS anxiety, NRS depression, NRS fatigue, the PRO-CTCAE anxiety items, the PRO-CTCAE item asking about the frequency of feeling sadness, and the PRO-CTCAE item asking about the severity of sleep difficulty. In general, those who responded by IVR reported higher function and lower symptoms than those using other modes, and this trend was most marked in the IVR-PSAQ comparison.

Although IVR tended to elicit slightly higher patient-reported function and lower symptoms, there were some inconsistencies. For example, IVR scores on PROMIS global mental health were higher (although the effect size was small), whereas on the NRS overall QOL and mental/emotional well-being items, those in the IVR arm reported lower scores than those in the CSAQ arm. We investigated whether this could be attributed to a primacy effect (choosing the first option) on NRS items in IVR, given the auditory nature of IVR (“Appendix 2”). Across all single-item measures, after Bonferroni correction, there was a statistically significant primacy effect for NRS overall QOL in the IVR mode. In addition, in two comparisons (NRS emotional well-being and NRS mental well-being), patients in the PSAQ and CSAQ arms were more likely than those in the IVR arm to choose 10, suggesting a recency effect (choosing the last option) for PSAQ and CSAQ.
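The test used in “Appendix 2” is not described in the text. One plausible way to check a primacy or recency effect is to compare, between two arms, the proportion of respondents choosing the first (or last) NRS option with a two-proportion z-test, and to judge each p-value against a Bonferroni-adjusted threshold α/m for m comparisons. The sketch below illustrates that approach only; it is an assumption, not the study's analysis, and the counts in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z(x1, n1, x2, n2):
    """Two-sided z-test of equal proportions with a pooled SE.

    x1, x2: respondents choosing the option of interest (e.g. the first
    option, 0, on an NRS item); n1, n2: respondents answering the item.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        return 0.0, 1.0  # no variation in either arm: nothing to test
    z = (p1 - p2) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def bonferroni(p_values, alpha=0.05):
    """Flag each p-value against alpha / m, where m is the number of tests."""
    threshold = alpha / len(p_values)
    return [p < threshold for p in p_values]


if __name__ == "__main__":
    # Hypothetical counts choosing "0" on one NRS item: IVR arm vs CSAQ arm.
    z, p = two_proportion_z(40, 580, 18, 575)
    print(round(z, 2), p, bonferroni([p, 0.03, 0.40]))
```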

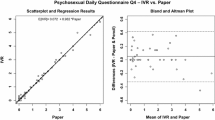

Percentages of missing values were comparable for PSAQ and CSAQ but slightly higher for IVR (Table 5). For multi-item scales (i.e., PROMIS short forms), we investigated whether some of the additional missing values for IVR could be due to respondent fatigue, with patients choosing not to respond to items at the end of each scale. However, as “Appendix 3” shows, the percentages of missing values within each multi-item measure tended to be stable from the beginning to the end of each scale. In addition, patients who did not respond to one scale tended consistently not to respond to other scales. Figure 1 shows that patients did not report more difficulty with IVR. All sites had more missingness with IVR than with other modes. Some of these sites reported random technical problems in which some data were not saved behind the scenes, without patients being aware of it.
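The χ² test of equality of proportions described in the Methods treats each measure's missing and answered counts in the three arms as a 2 × 3 table. With three modes the test has 2 degrees of freedom, so the p-value has the closed form exp(−χ²/2) and the sketch below needs only the standard library. The function name and counts are hypothetical; the study's per-measure percentages are reported in Table 5.

```python
from math import exp


def missing_chi_square(missing, totals):
    """Pearson chi-square test that the share of missing responses is equal
    across groups (a 2 x k table of missing vs answered counts).

    The p-value uses the closed-form survival function for 2 degrees of
    freedom, so this sketch only supports exactly three groups.
    """
    if len(missing) != 3 or len(totals) != 3:
        raise ValueError("this sketch handles exactly three modes (df = 2)")
    overall = sum(missing) / sum(totals)
    if overall in (0.0, 1.0):
        return 0.0, 1.0  # no missing (or nothing answered): trivially equal
    stat = 0.0
    for x, n in zip(missing, totals):
        for observed, expected in ((x, n * overall), (n - x, n * (1 - overall))):
            stat += (observed - expected) ** 2 / expected
    return stat, exp(-stat / 2)


if __name__ == "__main__":
    # Hypothetical missing counts for one item in the PSAQ, CSAQ, and IVR arms.
    stat, p = missing_chi_square([6, 5, 20], [595, 589, 596])
    print(round(stat, 2), p)
```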

Patient preferences for three modes

The proportion of patients reporting that they were very comfortable answering surveys was about 6–7% lower for IVR than for PSAQ and CSAQ (Fig. 2). Patients randomized to PSAQ (84%) and CSAQ (83%) were more likely to say that they were very willing to use PSAQ in the future (Fig. 3); patients randomized to IVR were 6–7% less likely to say so. Patients randomized to CSAQ were most likely to say that they were very willing to use a touchscreen computer or tablet in the future (18–22% more willing than those randomized to other modes) (Fig. 4). Patients randomized to CSAQ were also 8–9% more willing to use regular computers with a mouse and keyboard than those randomized to other modes (Fig. 5). Lastly, patients randomized to IVR were most likely to say that they were very willing to use IVR (62%, compared with 37% for PSAQ and 35% for CSAQ; Fig. 6). In general, use of a specific mode positively influenced future receptiveness to that mode.

Discussion

Patient demographic and medical characteristics mostly did not differ by mode, and the lordif analyses detected no DIF by mode. For some domains and measures, IVR scores reflected higher function and lower symptoms on average than PSAQ and CSAQ scores; when this pattern appeared, the differences were of small effect size (e.g., 1 to 2 points on the PROMIS T-score scale, 0.5 to 0.7 points on the 0–10 NRS, and 0.17 to 0.34 points on the 1–5 PRO-CTCAE scale). Although none of the confidence intervals for the differences fell completely outside the equivalence margins, CIs straddling the margins occurred most prominently for IVR versus other modes in symptom domains. The highest agreement between PSAQ and CSAQ and the lowest between PSAQ and IVR were also reported in a systematic review and meta-analysis of studies conducted between 2007 and 2013 [7]. Our randomized parallel-groups design removes the potential practice effect of crossover designs, in which recalling earlier answers could increase agreement between modes; this may explain some of the PSAQ-CSAQ comparisons that were not completely within the margin of small effect size.

Lower reported symptoms and higher reported social or physical function may suggest that IVR, which in our study used recordings of a pleasant female voice, induced social desirability bias, with participants over-reporting functional well-being and under-reporting symptoms as if speaking with a real person. If so, this is consistent with general survey research findings that auditory modes (e.g., IVR/phone) yield more positive responses than visual modes [28, 29]. However, social desirability bias was not supported for the NRS overall QOL and mental or emotional well-being items, because IVR scores on these items were lower on average than CSAQ scores. The finding that patients were less likely to choose the last option on these NRS items could partially explain this anomaly: patients may have been less likely to press two digits (i.e., one and zero) to report the highest response option of 10 than to press a single digit on the keypad.

Conclusions

Because PRO instruments may be administered in a variety of ways, it is critical for the valid use of scores to know whether participants would provide the same answers regardless of the mode of administration. Across all comparisons (PSAQ-CSAQ, CSAQ-IVR, and IVR-PSAQ) and across all three kinds of measures (PROMIS, NRS, and PRO-CTCAE), there were some mean differences whose CI upper or lower limit exceeded the margin of small effect. In the current study, the two visual modes (i.e., PSAQ vs CSAQ) agreed more closely than the visual-auditory pairs (i.e., PSAQ vs IVR or CSAQ vs IVR). Several point estimates of score differences lying outside the margin of equivalence suggest that, depending on the instrument and domain, IVR may induce some real differences in scores that are unrelated to the construct being measured, in comparison with PSAQ and CSAQ. A primacy effect was supported for IVR in the NRS overall QOL and PRO-CTCAE anxiety frequency items. The tendency not to choose the last option was supported for IVR in the NRS emotional and mental well-being items, which may be related to participants being less willing to enter 10 on the keypad than single-digit numbers. The next step would be to conduct cognitive interviews to understand these effects for NRS items administered by IVR. Although the percentages of missing data were small in general, there were more missing responses with IVR than with other modes. A limitation of the study is that we could not distinguish patients who simply did not call in or who broke off the assessment from those whose data were not saved due to technical problems. Further research should be conducted to understand what contributes to the higher rate of missing responses in IVR. In addition, because some sites experienced issues with IVR data storage, the technical aspects of IVR implementation should be checked whenever large-scale data collection through this mode is planned. In their meta-analysis, Muehlhausen et al. [7] noted that further research into standards for IVR may be needed to support equivalence between IVR and other platforms. Because the results were inconclusive rather than indicating a systematic difference, we may not yet need to consider adjusting for method of data collection when combining data collected via IVR with PSAQ or CSAQ for these PROs. However, because of the greater number of inconclusive results for IVR, survey users should consider using IVR only when paper and computer administration are not feasible.

Availability of data and materials

Data can be made available upon reasonable request to the principal investigator (J. Sloan). All requests will be reviewed.

Abbreviations

- PROMIS: Patient-Reported Outcomes Measurement Information System
- PRO-CTCAE: Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events
- NRS: Numerical rating scale
- IVR: Interactive voice response
- DIF: Differential item functioning
- PRO: Patient-reported outcomes
- PSAQ: Paper and pencil self-administered questionnaire
- CSAQ: Computerized self-administered questionnaire

References

Smith SK, Rowe K, Abernethy AP (2014) Use of an electronic patient-reported outcome measurement system to improve distress management in oncology. Palliat Support Care 12(1):69–73

Kroenke K et al (2021) Choosing and using patient-reported outcome measures in clinical practice. Arch Phys Med Rehabil

Porter I et al (2016) Framework and guidance for implementing patient-reported outcomes in clinical practice: evidence, challenges and opportunities. J Comp Eff Res 5(5):507–519

Broering JM et al (2014) Measurement equivalence using a mixed-mode approach to administer health-related quality of life instruments. Qual Life Res 23(2):495–508

Campbell N et al (2015) Equivalence of electronic and paper-based patient-reported outcome measures. Qual Life Res 24(8):1949–1961

Gwaltney CJ, Shields AL, Shiffman S (2008) Equivalence of electronic and paper-and-pencil administration of patient-reported outcome measures: a meta-analytic review. Value Health 11(2):322–333

Muehlhausen W et al (2015) Equivalence of electronic and paper administration of patient-reported outcome measures: a systematic review and meta-analysis of studies conducted between 2007 and 2013. Health Qual Life Outcomes 13:167

Mulhern B et al (2015) Comparing the measurement equivalence of EQ-5D-5L across different modes of administration. Health Qual Life Outcomes 13:191

Rasmussen SL et al (2016) High level of agreement between electronic and paper mode of administration of a thyroid-specific patient-reported outcome, ThyPRO. Eur Thyroid J 5(1):65–72

Rutherford C et al (2016) Mode of administration does not cause bias in patient-reported outcome results: a meta-analysis. Qual Life Res 25(3):559–574

Bennett AV et al (2016) Mode equivalence and acceptability of tablet computer-, interactive voice response system-, and paper-based administration of the U.S. National Cancer Institute’s Patient-Reported Outcomes version of the Common Terminology Criteria for Adverse Events (PRO-CTCAE). Health Qual Life Outcomes 14:24

Bjorner JB et al (2014) Difference in method of administration did not significantly impact item response: an IRT-based analysis from the Patient-Reported Outcomes Measurement Information System (PROMIS) initiative. Qual Life Res 23(1):217–227

Lundy JJ et al (2020) Agreement among paper and electronic modes of the EQ-5D-5L. Patient Patient Cent Outcomes Res 13(4):435–443

Abu-Hasaballah K, James A, Aseltine RH Jr (2007) Lessons and pitfalls of interactive voice response in medical research. Contemp Clin Trials 28(5):593–602

Weiler K et al (2004) Quality of patient-reported outcome data captured using paper and interactive voice response diaries in an allergic rhinitis study: is electronic data capture really better? Ann Allergy Asthma Immunol 92(3):335–339

Shea JA et al (2008) Adapting a patient satisfaction instrument for low literate and Spanish-speaking populations: comparison of three formats. Patient Educ Couns 73(1):132–140

Singh JA et al (2014) Normative data and clinically significant effect sizes for single-item numerical linear analogue self-assessment (LASA) scales. Health Qual Life Outcomes 12:187

Chew LD et al (2008) Validation of screening questions for limited health literacy in a large VA outpatient population. J Gen Intern Med 23(5):561–566

Edwards MC, Houts CR, Wirth RJ (2018) Measurement invariance, the lack thereof, and modeling change. Qual Life Res 27(7):1735–1743

Meredith W, Teresi JA (2006) An essay on measurement and factorial invariance. Med Care 44:S69–S77

Choi SW, Gibbons LE, Crane PK (2011) lordif: an R package for detecting differential item functioning using iterative hybrid ordinal logistic regression/item response theory and Monte Carlo simulations. J Stat Softw 39(8):1–30

Rosseel Y (2012) lavaan: an R package for structural equation modeling. J Stat Softw 48(2):1–36

Mueller RO, Hancock GR (2008) Best practices in structural equation modeling. In: Osborne J (ed) Best practices in quantitative methods. Sage Publications, Thousand Oaks, pp 488–508

McDonald RP (1999) Test theory: a unified treatment. Erlbaum, Mahwah

Reise SP, Scheines R, Widaman KF, Haviland MG (2013) Multidimensionality and structural coefficient bias in structural equation modeling: a bifactor perspective. Educ Psychol Meas 73:5–26

Condon DM et al (2020) Does recall period matter? Comparing PROMIS® physical function with no recall, 24-hr recall, and 7-day recall. Qual Life Res 29(3):745–753

Coons SJ et al (2009) Recommendations on evidence needed to support measurement equivalence between electronic and paper-based patient-reported outcome (PRO) measures: ISPOR ePRO Good Research Practices Task Force report. Value Health 12(4):419–429

Elliott MN et al (2013) A randomized experiment investigating the suitability of speech-enabled IVR and Web modes for publicly reported surveys of patients’ experience of hospital care. Med Care Res Rev 70(2):165–184

French KA, Falcon CN, Allen TD (2019) Experience sampling response modes: comparing voice and online surveys. J Bus Psychol 34:575–586

Acknowledgements

Not applicable.

Funding

This study was supported by National Cancer Institute Grants R01CA154537 (Sloan) and P30CA015083 (Diasio). Lee was additionally supported by the Robert D. and Patricia E. Kern Center for the Science of Health Care Delivery at Mayo Clinic.

Author information

Contributions

Conceptualization: All authors; Data analyses: Novotny, Lee; Original draft preparation: Lee, Beebe, Novotny; Review and editing: all authors; Funding acquisition and supervision: Sloan. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was reviewed by the institutional review board (IRB) of each participating site, and all patients provided consent to participate in the study.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, M.K., Beebe, T.J., Yost, K.J. et al. Score equivalence of paper-, tablet-, and interactive voice response system-based versions of PROMIS, PRO-CTCAE, and numerical rating scales among cancer patients. J Patient Rep Outcomes 5, 95 (2021). https://doi.org/10.1186/s41687-021-00368-0

DOI: https://doi.org/10.1186/s41687-021-00368-0