Abstract

Purpose

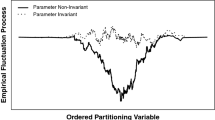

Measurement invariance issues should be considered during test construction. In this paper, we provide a conceptual overview of measurement invariance and describe how the concept is implemented in several different statistical approaches. Typical applications examine invariance across mode of administration (paper-and-pencil vs. computer-based), language/translation, age, time, and gender, to name just a few. To the extent that the relationships between items and constructs are stable (invariant), we can be more confident in score interpretations.
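In the factor-analytic tradition, one of the statistical approaches referred to above, invariance is usually tested as a sequence of nested models. Assuming a linear common factor model for item $j$, person $i$, and group $g$ (the notation here is ours, following the standard presentation rather than the article's own equations), the levels can be written as:

```latex
\begin{aligned}
&x_{ijg} = \tau_{jg} + \lambda_{jg}\,\eta_{ig} + \varepsilon_{ijg},
  \qquad \operatorname{Var}(\varepsilon_{ijg}) = \psi_{jg} \\
&\text{configural: same pattern of fixed and free } \lambda_{jg} \text{ in every group } g \\
&\text{metric (weak): } \lambda_{jg} = \lambda_j \\
&\text{scalar (strong): } \lambda_{jg} = \lambda_j,\; \tau_{jg} = \tau_j \\
&\text{strict: } \lambda_{jg} = \lambda_j,\; \tau_{jg} = \tau_j,\; \psi_{jg} = \psi_j
\end{aligned}
```

Only once loadings and intercepts are equal (scalar invariance) do group differences in observed item means reduce to differences in latent means, $E(x_{jg}) = \tau_j + \lambda_j \kappa_g$, where $\kappa_g$ is the latent mean in group $g$.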

Methods

A series of simulated examples is reported, highlighting different kinds of non-invariance, the impact non-invariance can have, and the effect of appropriately modeling a lack of invariance. One example focuses on the longitudinal context, where measurement invariance is critical to understanding trends over time. Software syntax is provided to help researchers apply these models to their own data.
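The kind of simulation described here can be illustrated with a minimal sketch, assuming a hypothetical five-item scale scored with a two-parameter logistic (2PL) model. The item parameters, sample sizes, and seed below are made up for illustration and are not the authors' simulation conditions. Two groups share the same latent distribution, but one item is harder for the focal group (uniform DIF):

```python
import math
import random
import statistics


def p_2pl(theta: float, a: float, b: float) -> float:
    """2PL probability of endorsing an item: 1 / (1 + exp(-a * (theta - b)))."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def simulate_sum_scores(n, latent_mean, items, rng):
    """Draw n latent scores from N(latent_mean, 1); return each person's sum score."""
    scores = []
    for _ in range(n):
        theta = rng.gauss(latent_mean, 1.0)
        # One Bernoulli draw per item; True counts as 1 when summed.
        scores.append(sum(rng.random() < p_2pl(theta, a, b) for a, b in items))
    return scores


# Hypothetical (a, b) parameters for a 5-item scale; not taken from the article.
reference_items = [(1.5, -1.0), (1.2, -0.5), (1.8, 0.0), (1.0, 0.5), (1.4, 1.0)]
# Identical items for the focal group except item 3, which is 1 unit harder (uniform DIF).
focal_items = list(reference_items)
focal_items[2] = (1.8, 1.0)

rng = random.Random(2018)
ref = simulate_sum_scores(5000, 0.0, reference_items, rng)  # latent mean 0
foc = simulate_sum_scores(5000, 0.0, focal_items, rng)      # latent mean 0 as well
print(f"reference mean sum score: {statistics.mean(ref):.2f}")
print(f"focal mean sum score:     {statistics.mean(foc):.2f}")
```

Both groups have identical latent means, yet under these made-up parameters the focal group's average sum score comes out lower by about a quarter of a point, purely because of item 3. A naive comparison of sum scores would read this as a real group difference.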

Results

The simulation studies demonstrate the negative impact that an erroneous assumption of invariance may have on scores and on the substantive conclusions drawn from naively analyzing those scores.
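For the longitudinal case, a deterministic sketch can show how assuming invariance distorts estimated change and how modeling the drift recovers it. This is a simplification swapped in for illustration, not the IRT calibration the article uses: it inverts the population test characteristic curve (expected sum score as a function of the latent mean) by bisection. The item parameters, the size of the drift, and the true change of 0.3 are all hypothetical:

```python
import math


def p_2pl(theta, a, b):
    """2PL probability of endorsing an item."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def expected_sum_score(latent_mean, items, n_points=201):
    """E[sum score] for theta ~ N(latent_mean, 1), using an evenly spaced grid over +/- 6 SD."""
    step = 12.0 / (n_points - 1)
    total = weight_sum = 0.0
    for k in range(n_points):
        z = -6.0 + k * step
        w = math.exp(-0.5 * z * z)  # unnormalized standard normal density
        total += w * sum(p_2pl(latent_mean + z, a, b) for a, b in items)
        weight_sum += w
    return total / weight_sum


def implied_latent_mean(target, items, lo=-4.0, hi=4.0, tol=1e-8):
    """Bisection for the latent mean whose expected sum score equals `target`.

    Valid because expected_sum_score increases monotonically in latent_mean when all a > 0.
    """
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if expected_sum_score(mid, items) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical item parameters (a, b); item 3 drifts upward by 1 unit at time 2.
time1_items = [(1.5, -1.0), (1.2, -0.5), (1.8, 0.0), (1.0, 0.5), (1.4, 1.0)]
time2_items = list(time1_items)
time2_items[2] = (1.8, 1.0)

true_change = 0.3  # latent mean moves from 0.0 (time 1) to 0.3 (time 2)
score_t1 = expected_sum_score(0.0, time1_items)
score_t2 = expected_sum_score(true_change, time2_items)

# The time-1 latent mean is 0 by construction, so the implied time-2 mean is the change.
naive = implied_latent_mean(score_t2, time1_items)    # assumes invariance over time
modeled = implied_latent_mean(score_t2, time2_items)  # uses the time-2 parameters
print(f"expected sum-score change:         {score_t2 - score_t1:+.3f}")
print(f"latent change assuming invariance: {naive:+.3f}")
print(f"latent change modeling the drift:  {modeled:+.3f}")
```

With these values, the estimate that assumes invariance captures only a small fraction of the true change, because the drifting item masks most of the improvement, while the estimate that uses the time-specific parameters recovers the true value of 0.3.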

Conclusions

Measurement invariance implies that the links between the items and the construct of interest are invariant over some domain, grouping, or classification. Examining a new or existing test for measurement invariance should be part of any test construction/implementation plan. In addition to reviewing implications of the simulation study results, we also provide a discussion of the limitations of current approaches and areas in need of additional research.
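In item response theory terms, this idea can be written as a condition on the conditional distribution of the responses. The following is a standard formulation with our own symbols, where $\mathbf{x}$ is the vector of item responses, $\theta$ the construct of interest, and $g$ the grouping or classification variable; a dichotomous 2PL item is shown as one concrete case:

```latex
\begin{aligned}
f(\mathbf{x} \mid \theta, g) &= f(\mathbf{x} \mid \theta) \quad \text{for every group } g, \\
P(x_j = 1 \mid \theta, g) &= \frac{1}{1 + \exp\!\left[-a_{jg}(\theta - b_{jg})\right]}, \\
\text{invariance of item } j &\iff a_{jg} = a_j \text{ and } b_{jg} = b_j \text{ for all } g.
\end{aligned}
```

Under this parameterization, a group difference in $b_{jg}$ alone is usually called uniform DIF, and a difference in $a_{jg}$ is called non-uniform DIF.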

Notes

This will allow us to avoid using awkward phrases such as “non-invariance” or “lack of invariance” from this point forward.

We focus on mean differences for simplicity; many DIF procedures can also incorporate differences in variances.

Ethics declarations

Conflict of interest

The first and third authors have a financial interest in the company that owns flexMIRT.

Ethical approval

This article does not contain any studies with human participants performed by the authors.

Electronic supplementary material

The electronic supplementary material is available with the online version of this article.

About this article

Cite this article

Edwards, M.C., Houts, C.R. & Wirth, R.J. Measurement invariance, the lack thereof, and modeling change. Qual Life Res 27, 1735–1743 (2018). https://doi.org/10.1007/s11136-017-1673-7
