Abstract

Neurological diseases are on the rise worldwide, leading to increased healthcare costs and diminished quality of life in patients. In recent years, Big Data has started to transform the fields of Neuroscience and Neurology. Scientists and clinicians are collaborating in global alliances, combining diverse datasets on a massive scale, and solving complex computational problems that demand increasingly powerful computational resources. This Big Data revolution is opening new avenues for developing innovative treatments for neurological diseases. Our paper surveys Big Data’s impact on neurological patient care, as exemplified through work in a comprehensive selection of areas, including Connectomics, Alzheimer’s Disease, Stroke, Depression, Parkinson’s Disease, Pain, and Addiction (e.g., Opioid Use Disorder). We present an overview of research and the methodologies utilizing Big Data in each area, as well as their current limitations and technical challenges. Despite this promise, the full potential of Big Data in these fields currently remains unrealized. We close with recommendations for future research aimed at optimizing the use of Big Data in Neuroscience and Neurology for improved patient outcomes.


Introduction

The field of Neuroscience was formalized in 1965 when the “Neuroscience Research Program” was established at the Massachusetts Institute of Technology with the objective of bringing together varied disciplines, including molecular biology, biophysics, and psychology, to study the complexity of brain and behavior [1]. The methods employed by the group were largely data-driven, founded on the integration of multiple unique datasets across numerous disciplines. As Neuroscience has advanced as a field, appreciation of the nervous system’s complexity has grown with the acquisition and analysis of larger and more complex datasets. Today, many Neuroscience subfields, such as Computational Neuroscience [2], Neuroelectrophysiology [3,4,5,6], and Connectomics [7], are implementing Big Data approaches to elucidate the structure and function of the brain. Modern Neuroscience technology allows for the acquisition of massive, heterogeneous datasets whose analysis requires new computational tools and resources for managing computationally intensive problems [7,8,9]. Studies have advanced from small labs using a single outcome measure to large teams using multifaceted data (e.g., combined imaging, behavioral, and genetics data) collected across multiple international sites via numerous technologies and analyzed with high-performance computational methods and Artificial Intelligence (AI) algorithms. These Big Data approaches are being used to characterize the intricate structure and function of healthy nervous systems, and to describe and treat neurological disorders.

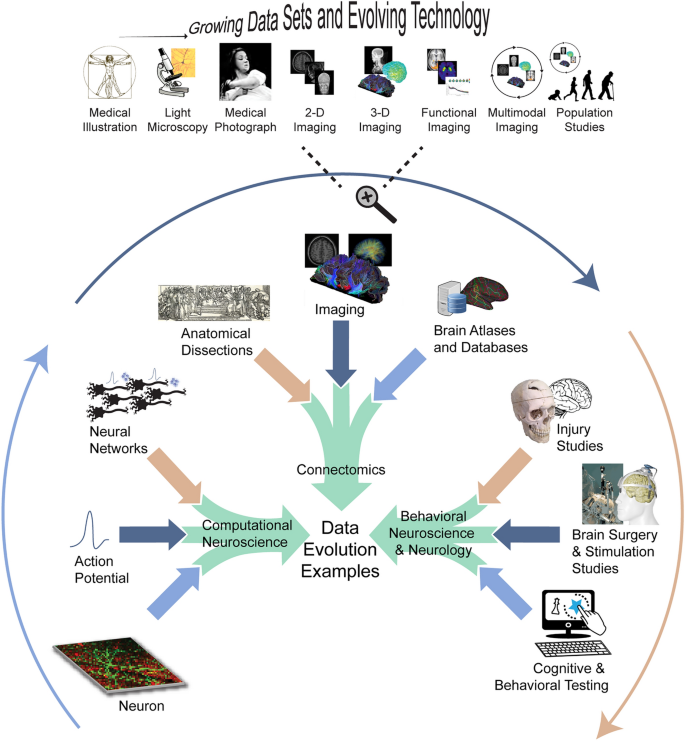

Jean-Martin Charcot (1825–1893), considered the father of Neurology, was a pioneer in using a scientific, data-driven approach to innovate neurological treatments [10]. For example, in the study of multiple sclerosis (MS), once considered a general "nervous disorder" [10], Charcot integrated multiple facets of anatomical and clinical data to delineate MS as a distinct disease. By connecting pathoanatomical data with behavioral and functional data, Charcot's work ultimately transformed our understanding and treatment of MS. Furthermore, Charcot’s use of medical photographs in his practice was an early instance of incorporating ‘imaging’ data in Neurology and Psychiatry [11]. Today, Neuroimaging, spurred on by new technologies, computational methods, and data types, is at the forefront of Big Data in Neurology [9, 12] (see Fig. 1). Current neurology initiatives commonly use large, highly heterogeneous datasets (e.g., neuroimaging, genetic testing, or clinical assessments from thousands to hundreds of thousands of patients [13,14,15,16,17,18]), acquire data with increasing velocity (e.g., using wearable sensors [6]), and adopt technologies from other Big Data fields (e.g., automated clinical note assessment [19], social media-based infoveillance applications [16, 20]). Just as Big Data has spurred on Neuroscience, the exponentially growing size, variety, and collection speed of datasets, combined with the need to investigate their correlations, is revolutionizing Neurology and patient care (see Fig. 1).

Fig. 1 Evolution of data types [21]. The evolution of data types in the development of Computational Neuroscience can be traced from Golgi and Ramón y Cajal’s structural data descriptions of the neuron in the nineteenth century [22]; to Hodgkin, Huxley, and Eccles’s biophysical data characterization of the “all-or-none” action potential during the early to mid-twentieth century [23]; to McCulloch and Pitts’ work using ‘the "all-or-none" character of nervous activity’ to model neural networks describing fundamentals of the nervous system [24]. Similarly, the evolution of Connectomics data [25] can be traced from Galen’s early dissection studies [26], to Wernicke’s and Broca’s postulations on structure and function [27], to imaging of the nervous system [28, 29], to brain atlases (e.g., Brodmann, Talairach) and databases [30, 31], and into the Big Data field that is today characterized by the Human Connectome Project [32] and massive whole-brain connectome models [7, 33]. Behavioral Neuroscience and Neurology can be traced from early brain injury studies [34] to stimulation and surgical studies [35, 36], to Big Data assessments of cognition and behavior [37]. All these fields are prime examples of the transformative impact of the Big Data revolution on Neuroscience and Neurology sub-fields.

This paper examines the evolving impact of Big Data in Neuroscience and Neurology, with a focus on treating neurological disorders. We critically evaluate available solutions and limitations, propose methods to overcome these limitations, and highlight potential innovations that will shape the fields' future.

Problem definition

According to the United States (US) National Institutes of Health (NIH), neurological disorders affect approximately 50 million people in the US each year, at a total annual cost of hundreds of billions of dollars [38]. Globally, neurological disorders are the leading cause of disability and the second leading cause of death [39]. These numbers are expected to grow as the global population ages. Given the tremendous personal and societal impact of diseases of the brain and nervous system, the need for new and innovative treatments is critical and growing.

Big Data holds great potential for advancing the understanding of neurological diseases and the development of new treatments. To understand how such advances can occur, and have been occurring, it is important to appreciate that this research is enabled not only by methods classically used in clinical Neurology research, such as clinical trials, but also by advances in Neuroscience research.

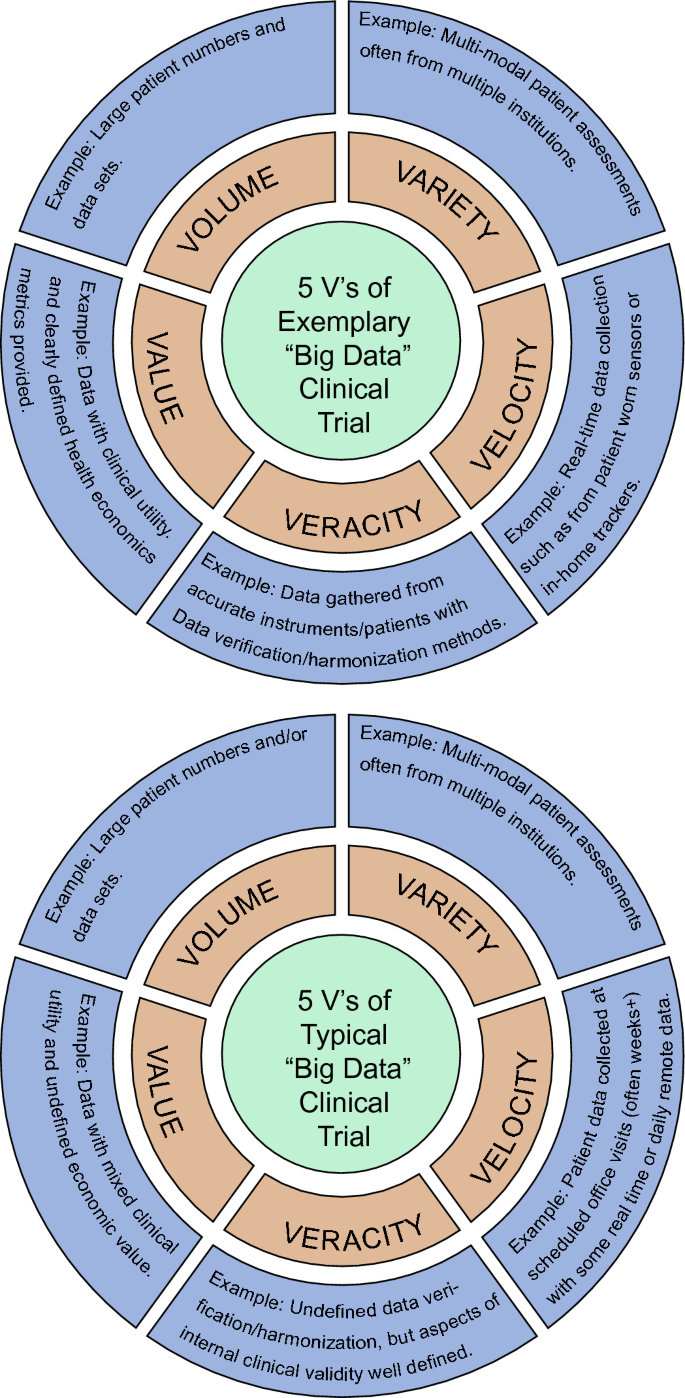

This paper aims to review how Big Data is currently used in, and is transforming, the fields of Neuroscience and Neurology to advance the treatment of neurological disorders. Our intent is not merely to survey the most prominent research in each area, but to give the reader a historical perspective on how key areas moved from an earlier Small Data phase to the current Big Data phase. For applications in Neurology, while numerous clinical areas are evolving with Big Data and are exemplified herein (e.g., Depression, Stroke, Alzheimer’s Disease (AD)), we highlight its impact on Parkinson’s Disease (PD), Substance Use Disorders (SUD), and Pain to provide a varied, yet manageable, review of the impact of Big Data on patient care. To balance brevity and completeness, we summarize much of the general information in tabular form and limit our narrative to exemplifying the Big Data trajectories of Neurology and Neuroscience. Additionally, in surveying this literature, we have identified a common limitation: the conventional definition of Big Data, as characterized by the 5 V’s (see Fig. 2), is often unevenly or insufficiently applied in Neurology and Neuroscience. The lack of standardization in how Big Data is defined and used across Neurology and Neuroscience studies, together with field-specific and study-specific differences in its application, limits the reach of Big Data for improving patient treatments. We examine the reasons for this mismatch and the areas where past studies have not reached their potential. Finally, we identify the limitations of current Big Data approaches and discuss possible solutions and opportunities for future research.

Fig. 2 The 5 V’s. While the 5 V’s of Big Data (“Volume, Variety, Velocity, Veracity, and Value”) are clearly found in certain fields (e.g., social media), there are many "Big Data" Neuroscience and Neurology projects in which some categories are unexplored or underexplored. Many self-described “Big Data” studies are limited to Volume and/or Variety. Furthermore, most “Big Data” clinical trials move at the variable pace of patient recruitment, which can pale in comparison to the Velocity of Big Data in the finance and social media spaces. “Big Data” acquisition and processing times are also only sporadically reported in these fields. Finally, there is no accepted definition of data Veracity as it pertains to healthcare (e.g., error, bias, incompleteness, inconsistency), and Veracity can be assessed on multiple levels (e.g., from data harmonization techniques to limitations in the experimental methods used in studies).
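The audit described in this caption can be made concrete with a small data structure. The sketch below is a hypothetical illustration: the `BigDataProfile` class and `undocumented_vs` function are our own names, not part of any cited resource. It records only whether a study documents each V and does not judge whether a given volume or velocity is large enough to count as "Big".

```python
from dataclasses import dataclass, fields
from typing import Optional

@dataclass
class BigDataProfile:
    """Hypothetical record of what a study reports for each of the 5 V's.

    Each field holds a short description, or None if the study does not
    document that dimension.
    """
    volume: Optional[str] = None    # e.g., number of participants or data size
    variety: Optional[str] = None   # e.g., data modalities combined
    velocity: Optional[str] = None  # e.g., acquisition or processing rate
    veracity: Optional[str] = None  # e.g., handling of error, bias, missingness
    value: Optional[str] = None     # e.g., clinical or scientific payoff

def undocumented_vs(profile: BigDataProfile) -> list:
    """Names of the V's the study leaves undocumented, in 5 V order."""
    return [f.name for f in fields(profile) if getattr(profile, f.name) is None]

# A hypothetical study that, like many self-described "Big Data" studies,
# documents only Volume and Variety.
study = BigDataProfile(volume="10,000 participants", variety="sMRI, rs-fMRI, genotypes")
print(undocumented_vs(study))  # ['velocity', 'veracity', 'value']
```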

Our paper differs from other Big Data review papers in Neuroscience and/or Neurology (e.g., [12], [40,41,42,43]) in that it specifically examines the crucial role of Big Data in transforming the clinical treatment of neurological disorders. We go beyond previous papers that have focused on specific subfields, such as network data (e.g., [44]), neuroimaging (e.g., [12]), stroke (e.g., [45]), or technical methodologies related to data processing (e.g., [46, 47]) and/or sharing (e.g., [48, 49]). Furthermore, our review spans a broad range of treatments, from traditional pharmacotherapy to neuromodulation and personalized therapy guided by Big Data methods. This approach allows for a comparison of the evolving impact of Big Data across Neurology sub-specialties, such as Pain versus PD. Additionally, we take a cross-disciplinary approach to analyze applications in both Neuroscience and Neurology, synthesizing and categorizing available resources to facilitate the exchange of insights between neuroscientists and neurologists. Finally, our study appraises how the Big Data definition is currently implemented within the fields of Neuroscience and Neurology. Overall, we differentiate ourselves in terms of scope, breadth, and interdisciplinary analysis.

Existing solutions

Big Data use in Neuroscience and Neurology has matured as a result of national and multi-national projects [40,41,42,43]. In the early to mid-2010s, several governments started national initiatives aimed at understanding brain function, such as the NIH BRAIN Initiative in the US [50], the Human Brain Project in Europe [51, 52], and the Brain Mapping by Integrated Neurotechnologies for Disease Studies (Brain/MINDS) project in Japan [53]. Although not without controversy [40, 51, 52], many initiatives soon became global and involved increasingly larger groups of scientists and institutions focused on collecting and analyzing voluminous data, including neuroimaging, genetic, biospecimen, and/or clinical assessments, to unlock the secrets of the nervous system (the reader is referred to Table 1 and Additional file 1: Table S1 for exemplary projects or reviews [40,41,42,43]). These projects spurred the creation of open-access databases and resource repositories (the reader is referred to Table 2 and Additional file 1: Table S2 for exemplary databases or reviews [41, 42]). The specific features of the collected datasets, such as large volume, high heterogeneity/variety, and inconsistencies across sites/missing data, necessitated the development of ad-hoc resources, procedures, and standards for data collection and processing. Moreover, these datasets created the need for hardware and software for data-intensive computing, such as supercomputers and machine learning techniques, which were not conventionally used in Neuroscience and Neurology [54,55,56,57,58]. Most significantly, the Big Data revolution is improving our understanding and treatment of neurological diseases (see Tables 3–6 and Additional file 1: Tables S3-S6).

National projects and big data foundations: Connectomes, neuroimaging, and genetics

The human brain contains ~100 billion neurons connected via ~10¹⁴ synapses, through which electrochemical signals are transmitted [59]. Neurons are organized into discrete regions or nuclei and connect in precise and specific ways to neurons in other regions; the aggregated connections between all neurons in an individual comprise their connectome. The term connectome, coined by Sporns et al., was designed to be analogous to the genome; like the genome, the connectome is a large and complex dataset characterized by tremendous interindividual variability [60]. Connectomes, at the level of the individual or as aggregate data from many individuals, have the potential to produce a better understanding of how brains are wired as well as to unravel the “basic network causes of brain diseases” for prevention and treatment [60,61,62,63]. Major investments in human connectome studies in health and disease came around 2009, when the NIH Blueprint for Neuroscience Research launched the Blueprint Grand Challenges to catalyze research. As part of this initiative, the Human Connectome Project (HCP) was launched to chart human brain connectivity, with two research consortia awarded approximately $40 M. The Wu-Minn-Ox consortium sought to map the brain connectivity (structural and functional) of 1200 healthy young adults and investigate the associations between behavior, lifestyle, and neuroimaging outcomes. The MGH-UCLA (Massachusetts General Hospital-University of California Los Angeles) consortium aimed to build a specialized magnetic resonance imager optimized for measuring connectome data. The Brain Activity Map (BAM) Project was later conceived during the 2011 London workshop “Opportunities at the Interface of Neuroscience and Nanoscience.” The BAM group proposed the initiation of a technology-building research program to investigate brain activity from every neuron within a neural circuit.
Recordings of neurons would be carried out on the timescales over which behavioral outputs or mental states occur [64, 65]. Following up on this idea, in 2013, the Obama administration launched the NIH BRAIN Initiative to “accelerate the development and application of new technologies that will enable researchers to produce dynamic pictures of the brain that show how individual brain cells and complex neural circuits interact at the speed of thought”. Other countries and consortia launched their own initiatives, such as the European Human Brain Project, the Japan Brain/MINDS project, the Alzheimer’s Disease Neuroimaging Initiative (ADNI), Enhancing Neuroimaging Genetics through Meta-analysis (ENIGMA), and the China Brain Project. These projects aimed to explore brain structure and function, with the goal of guiding the development of new treatments for neurological diseases. The scale of these endeavors, and the insights they generated into the nervous system, were made possible by the collection and analysis of Big Data (see Table 1). Below, we succinctly exemplify ways in which Big Data is transforming Neuroscience and Neurology through the HCP (and similar initiatives), ADNI, and ENIGMA projects.

Connectome

Ways in which Big Data is transforming Neuroscience and Neurology are exemplified by advances in elucidating the connectome (see, for example, Table 3 and Additional file 1: Table S3). Early studies in organisms such as the nematode C. elegans used electron microscopy (EM) to image all 302 neurons and 5000 connections of the animal [66], while analyses of animals with larger nervous systems collated neuroanatomical tracer studies to extract partial cerebral cortex connectivity matrices, e.g., in the cat [67] and macaque monkey [68, 69]. More recently, advances in imaging and automation techniques, including EM and two-photon (2P) fluorescence microscopy, have enabled the creation of more complete maps of the nervous system in zebrafish and Drosophila [7, 33, 70, 71]. Despite the diminutive size of these nervous systems, the amount of data is enormous. Scheffer and colleagues generated a connectome for a portion of the central brain of the fruit fly “encompassing 25,000 neurons and 20 million chemical synapses” [7]. This effort required “numerous machine-learning algorithms and over 50 person-years of proofreading effort over ≈2 calendar years”, processing > 20 TB of raw data into a 26 MB connectivity graph, “roughly a million fold reduction in data size” (note that a review of the specific computational techniques is outside this paper’s scope; see [7, 33, 58, 70, 71] for more examples). Thus, connectomes can be delineated in simple animal models; however, without automation and the capacity to acquire Big Data of this type, such a precise reconstruction could not be accomplished. Extending this detailed analysis to the human brain will be a far larger challenge, as evidenced by the stark contrast between the 25,000 neurons analyzed in the above work and the ~100 billion neurons and ~10¹⁴ synapses of the human brain.
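To make the data reduction described above concrete, the sketch below collapses a list of individual synapse detections into a weighted, directed connectivity graph (edge weight = number of synapses between a neuron pair) and reproduces the quoted size ratios. The synapse list and neuron IDs are hypothetical, decimal units (1 TB = 10¹² bytes) are assumed, and this is not the pipeline used by Scheffer and colleagues.

```python
from collections import Counter

# Hypothetical synapse detections: (presynaptic neuron ID, postsynaptic neuron ID).
# In a real pipeline these would come from segmented EM image volumes.
synapses = [
    ("n1", "n2"), ("n1", "n2"), ("n1", "n3"),
    ("n2", "n3"), ("n3", "n1"), ("n1", "n2"),
]

# Collapse individual synapses into a weighted, directed connectivity graph:
# each key is an edge, each value the number of synapses along it.
connectome = Counter(synapses)
print(connectome[("n1", "n2")])  # 3

# Order-of-magnitude data reduction quoted for the fly connectome (decimal units assumed).
raw_bytes = 20e12    # > 20 TB of raw data (lower bound)
graph_bytes = 26e6   # 26 MB connectivity graph
print(f"reduction ~{raw_bytes / graph_bytes:,.0f}x")

# Scale gap between that reconstruction and the human brain.
print(f"{100e9 / 25_000:,.0f}x more neurons in the human brain")
```

Using the 20 TB lower bound, the ratio is about 7.7 × 10⁵, consistent with the quoted "roughly a million fold" reduction, while the human brain has about four million times as many neurons as the reconstructed fly tissue.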

At present, the study of the human connectome has principally relied on clinical neuroimaging methods, including Diffusion Tensor Imaging (DTI) and Magnetic Resonance Imaging (MRI), to generate anatomical connectomes, and on neuroimaging techniques such as functional MRI (fMRI) to generate functional connectomes [9, 12]. For example, in what might be considered a “Small Data” step, van den Heuvel and Sporns demonstrated “rich-club” organization in the human brain (“tendency for high-degree nodes to be more densely connected among themselves than nodes of a lower degree, providing important information on the higher-level topology of the brain”) via DTI and simulation studies based on imaging from 21 subjects focused on 12 brain regions [72]. This type of work has quickly become “Big Data” science, as exemplified by Bethlehem et al.’s study “Brain charts for the human lifespan”, which was based on 123,984 aggregated MRI scans “across more than 100 primary studies, from 101,457 human participants between 115 days post-conception and 100 years of age” [13]. The study provides key evidence on MRI-derived neuroimaging phenotypes and their developmental trajectories. Human connectome studies are also characterized by highly heterogeneous datasets, owing to the use of multimodal imaging, and these data are often integrated with clinical and/or biospecimen datasets. For example, studies conducted under the HCP [32] have implemented structural MRI (sMRI), task fMRI (tfMRI), resting-state fMRI (rs-fMRI), and diffusion MRI (dMRI) imaging modalities, with subsets of participants also undergoing Magnetoencephalography (MEG) and Electroencephalography (EEG). These studies usually involve hundreds to thousands of subjects, as in the HCP Healthy Adult and Lifespan Studies [73].
While the above connectome studies have primarily focused on anatomical, functional, and behavioral questions, connectomes are used across the biological sciences (e.g., to study evolution by comparing mouse, non-human primate, and human connectomes [74]) and as an aid in assessing and treating neuropathologies (as elaborated further below).
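The rich-club organization mentioned above can be quantified with the standard unweighted rich-club coefficient, φ(k) = 2E>k / (N>k(N>k − 1)), where N>k is the number of nodes with degree greater than k and E>k is the number of edges among them. The sketch below computes it on a hypothetical toy graph. In practice, including brain rich-club studies, φ(k) is usually normalized against degree-preserving random networks; that step is omitted here.

```python
def node_degrees(edges):
    """Degree of every node in an undirected edge list (no duplicate edges)."""
    deg = {}
    for u, v in edges:
        deg[u] = deg.get(u, 0) + 1
        deg[v] = deg.get(v, 0) + 1
    return deg

def rich_club_coefficient(edges, k):
    """Unweighted rich-club coefficient phi(k).

    phi(k) = 2 * E_k / (N_k * (N_k - 1)), where N_k is the number of nodes
    with degree > k and E_k is the number of edges among those nodes.
    Returns NaN when fewer than two nodes exceed degree k.
    """
    deg = node_degrees(edges)
    rich = {n for n, d in deg.items() if d > k}
    n_k = len(rich)
    if n_k < 2:
        return float("nan")
    e_k = sum(1 for u, v in edges if u in rich and v in rich)
    return 2 * e_k / (n_k * (n_k - 1))

# Hypothetical toy network: four densely interconnected hubs (A-D),
# each also linked to two peripheral nodes.
hubs = ["A", "B", "C", "D"]
edges = [(a, b) for i, a in enumerate(hubs) for b in hubs[i + 1:]]
edges += [(h, f"{h}{j}") for h in hubs for j in (1, 2)]

print(rich_club_coefficient(edges, 0))  # all 12 nodes: 14 edges out of 66 possible pairs
print(rich_club_coefficient(edges, 1))  # only the hubs remain: 1.0 (fully connected)
```

Raising k strips away the low-degree periphery; a coefficient that rises toward 1 for the remaining hubs is the signature of a rich club.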

ADNI

In the same period that the NIH was launching its Blueprint for Neuroscience Research (2005), it also helped launch ADNI in collaboration with industry and non-profit organizations. The primary objectives of ADNI are to develop “biomarkers for early detection” and monitoring of AD; support “intervention, prevention, and treatment” through early diagnostics; and share data worldwide [75,76,77]. Its Informatics Core [78], established for data integration, analysis, and dissemination and hosted at the University of Southern California (https://adni.loni.usc.edu), highlights the Big Data underpinnings of ADNI. ADNI was originally designed to last 5 years with bi-annual data collection of cognition; brain structural and metabolic changes via Positron Emission Tomography (PET) and MRI; genetic data; “and biochemical changes in blood, cerebrospinal fluid (CSF), and urine in a cohort of 200 elderly control subjects, 400 Mild Cognitive Impairment patients, and 200 mild AD patients" [75, 76, 79]. The project is currently in its fourth iteration, ADNI4, with funding through 2027 [80, 81]. To date, ADNI has enrolled > 2000 participants who undergo continuing longitudinal assessments. The ADNI study has paved the way for the diagnosis of AD through biomarker tests such as amyloid PET scans and lumbar punctures for CSF, and has demonstrated that ~25% of people in their mid-70s have a very early stage of AD (“preclinical AD”), which would previously have gone undetected. These results have helped promote prevention and early treatment as the most effective approach to the disease.

ENIGMA

During the same period that major investments were beginning in connectome projects (2009), the ENIGMA Consortium was established [82, 83]. It was founded with the initial aim of combining neuroimaging and genetic data to determine genotype–phenotype brain relationships. As of 2022, the consortium included > 2000 scientists from 45 countries collaborating across more than 50 working groups [82]. These efforts helped spur many discoveries, including genome-wide variants associated with human brain imaging phenotypes (see, e.g., the large-scale study across 60+ centers with > 30,000 subjects that provided evidence of genetic influences on hippocampal volume [84, 85], a reduction in which is possibly a risk factor for developing AD). The group has also conducted large-scale MRI studies in multiple pathologies and identified imaging-based abnormalities or structural changes [82, 83] in numerous conditions, such as major depressive disorder (MDD) [86] and bipolar disorder [87]. Other genetics/imaging-based initiatives have made parallel advances, such as the genome-wide association studies of UK Biobank [88,89,90], Japan’s Brain/MINDS work [53], and the Brainstorm Consortium [91]. For example, the Brainstorm Consortium assessed “25 brain disorders from genome-wide association studies of 265,218 patients and 784,643 control participants and assessed their relationship to 17 phenotypes from 1,191,588 individuals.” Ultimately, Big Data-based genetic and imaging assessments have permeated the Neurology space, significantly impacting patient care through enhanced diagnostics and prognostics, as discussed further below.
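Multi-site consortia such as these must combine results from many centers. One textbook way to do so without pooling raw data is an inverse-variance-weighted fixed-effect meta-analysis of site-level estimates, sketched below. The per-site effects and standard errors are hypothetical, and this is a generic method rather than ENIGMA's specific pipeline, which may, for example, use random-effects models to account for between-site heterogeneity.

```python
import math

def fixed_effect_meta(effects, std_errors):
    """Inverse-variance-weighted fixed-effect meta-analysis.

    effects: per-site effect estimates (e.g., regression coefficients)
    std_errors: their standard errors
    Returns (pooled effect, pooled standard error, z statistic).
    """
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * b for w, b in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se, pooled / pooled_se

# Hypothetical site-level estimates of one variant's effect on hippocampal volume.
site_betas = [0.10, 0.05, 0.08]
site_ses = [0.05, 0.05, 0.10]  # the third site is smaller, so it gets less weight

beta, se, z = fixed_effect_meta(site_betas, site_ses)
print(f"pooled beta={beta:.3f}, se={se:.3f}, z={z:.2f}")  # pooled beta=0.076, se=0.033, z=2.27
```

Because each site's weight is the inverse of its squared standard error, adding sites shrinks the pooled standard error, which is why consortium-scale sample sizes can detect small genetic effects that no single site could.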

From discovery research to improved neurological disease treatment

The explosive growth of studies spurred on by these national projects, with data of increasing size, variety, and speed, combined with the development of new technologies and analytics, has provoked a paradigm shift in our understanding of brain changes through lifespan and disease [7, 92,93,94,95,96]. This shift has changed how treatments for neurological diseases are investigated and developed, profoundly impacting the field of Neurology. Over the past decade, this impact has occurred in multiple ways. First, Big Data has opened the opportunity to analyze combined large, incomplete, disorganized, and heterogeneous datasets [97], which may yield more impactful results than clean, curated, small datasets (with their potential external validity concerns and other limitations). Second, Big Data studies have improved our basic understanding (i.e., mechanisms of disease) of numerous neurological conditions. Third, Big Data has improved diagnosis (including phenotyping) and subsequently refined the determination of a presumptive prognosis. Fourth, Big Data has enhanced treatment monitoring, which further aids treatment outcome prediction. Fifth, Big Data studies have recently started to change clinical research methodology and design and thus directly impact the development of novel therapies. In the remainder of this section, we elaborate on these topics, followed by case studies in select areas of Neurology.

Opportunities and improved understanding

As introduced above, Big Data solutions have impacted our understanding of the fundamentals of brain science and disease, such as brain structure and function (e.g., HCP) and the genetic basis of disease (e.g., ENIGMA). Advances in connectome and genetics studies, along with improved analytics, have deepened our understanding of brain changes throughout the lifespan and supported hypotheses linking abnormal connectomes to many neurological diseases [13, 72, 92, 98]. Studies have consistently shown that the architecture and properties of functional brain networks (which can be quantified in many ways, e.g., with graph theoretical approaches [94]) correlate with individual cognitive performance and change dynamically through development, aging, and neurological disease states, including neurodegenerative diseases, autism, schizophrenia, and cancer (see, e.g., [92, 93, 95, 96]). Beyond genetics and connectomes, Big Data methods are widely used in brain research and in understanding disease, in domains such as brain electrophysiology [99], brain blood flow [100], brain material properties [101], perceptual processing [102, 103], and motor control [104].
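As a minimal illustration of the graph-theoretical approach mentioned above, the sketch below builds a binary functional network by correlating regional time series and thresholding the absolute Pearson correlation, then reports node degree, the simplest graph measure. The region names, signals, and the 0.8 threshold are hypothetical; real pipelines add preprocessing (e.g., motion correction, filtering) and typically compute richer measures such as efficiency or modularity.

```python
import math

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def functional_network(timeseries, threshold):
    """Binary undirected network: an edge wherever |r| >= threshold."""
    regions = list(timeseries)
    adj = {r: set() for r in regions}
    for i, a in enumerate(regions):
        for b in regions[i + 1:]:
            if abs(pearson(timeseries[a], timeseries[b])) >= threshold:
                adj[a].add(b)
                adj[b].add(a)
    return adj

# Hypothetical BOLD-like signals for four regions of interest.
ts = {
    "ROI_A": [1.0, 2.0, 3.0, 4.0, 5.0],
    "ROI_B": [2.0, 4.1, 6.0, 8.2, 9.9],   # tracks ROI_A closely
    "ROI_C": [5.0, 4.0, 3.0, 2.0, 1.0],   # anti-correlated with ROI_A
    "ROI_D": [1.0, 3.0, 1.0, 3.0, 1.0],   # unrelated pattern
}
net = functional_network(ts, threshold=0.8)
degree = {r: len(nbrs) for r, nbrs in net.items()}
print(degree)  # {'ROI_A': 2, 'ROI_B': 2, 'ROI_C': 2, 'ROI_D': 0}
```

Thresholding on |r| treats strong anticorrelations as edges; many studies instead keep only positive correlations or use weighted graphs, so this is one choice among several.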

Diagnostics/prognostics/monitoring

Big Data methods are also increasingly prevalent in diagnostics and prognostics. For example, the US Veterans Administration recently reported on the genetic basis of depression based on analysis of > 1.2 M individuals, identifying 178 genomic risk loci and confirming these findings in a large independent cohort (n > 1.3 M) [105]. Following the European Union (EU) neuGRID and neuGRID4You projects, Munir et al. used fuzzy logic methods to derive a single “Alzheimer’s Disease Identification Number” for tracking disease severity [106]. Eshaghi et al. identified MS subtypes via MRI data and unsupervised machine learning [107], and Mitelpunkt et al. used multimodal data from the ADNI registry to identify dementia subtypes [108]. Big Data methods have also been used to identify common clinical risk factors for disease, such as gender, age, and geographic location for stroke [109] (and/or its genetic risk factors [110]). Big Data approaches to predict response to treatment are also increasing in frequency. For example, in depression, therapy choice often involves identifying subtypes of patients based on co-occurring symptoms or clinical history, but these variables are often insufficient for Precision Medicine (i.e., predicting an individual patient’s response to a specific treatment) and at times even for differentiating patients from healthy controls [17, 111]. Noteworthy progress has been made in depression research, such as successful prediction of treatment response using connectome gradient dysfunction and gene expression [18], resting-state connectivity markers of Transcranial Magnetic Stimulation (TMS) response [17], and a sertraline-response EEG signature [111]. As another example, the Italian I-GRAINE registry is being developed as a source of clinical, biological, and epidemiologic Big Data on migraine, to be used to address therapeutic response rates and treatment efficiency [112].
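Subtyping studies like these group patients by similarity across many features without predefined labels. As a generic stand-in (the cited studies used their own, more elaborate methods), the sketch below implements plain k-means (Lloyd's algorithm) on hypothetical, standardized two-feature patient profiles; the feature meanings and the initial centroids are assumptions for illustration only.

```python
import math

def kmeans(points, centroids, max_iter=50):
    """Lloyd's k-means with caller-supplied initial centroids.

    Returns (labels, centroids) once the centroids stop moving
    (or after max_iter iterations).
    """
    centroids = [tuple(c) for c in centroids]
    for _ in range(max_iter):
        # Assignment step: each patient joins the nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        updated = []
        for c, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                updated.append(tuple(sum(col) / len(members) for col in zip(*members)))
            else:
                updated.append(old)  # leave an empty cluster where it was
        if updated == centroids:
            break
        centroids = updated
    return labels, centroids

# Hypothetical standardized features per patient, e.g. (lesion load, cognitive score).
patients = [(0.1, 0.2), (0.0, -0.1), (-0.2, 0.1), (2.0, 2.1), (2.2, 1.9), (1.9, 2.0)]
labels, centers = kmeans(patients, centroids=[patients[0], patients[3]])
print(labels)  # [0, 0, 0, 1, 1, 1]
```

k-means requires choosing the number of subtypes in advance and assumes roughly spherical clusters, which is one reason published subtyping work often relies on more specialized models.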

Additionally, Big Data approaches that combine high volumes of varied data at high velocities offer the potential for new "real-time" biomarkers [113]. For instance, data collected with wearable sensors are increasingly used in clinical studies to monitor patient behavior at home or in real-world settings. While the classic example is the use of EEG for epilepsy [114], numerous other embodiments can be found in the literature. For example, another developing approach uses smartphone data to evaluate daily changes in symptom severity and sensitivity to medication in PD patients [115]. This approach has led to the use of a memory test and a simple finger-tapping test to track the status of study participants [116]. Collectively, these examples highlight Big Data’s potential for facilitating participatory Precision Medicine (i.e., tailored to each patient) in trials and clinical practice (covered in more detail in Sect. “Proposed Solutions”).
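To illustrate how a smartphone task can yield a quantitative, repeatable measure, the sketch below summarizes one finger-tapping trial from tap timestamps as a tapping rate and the coefficient of variation (CV) of inter-tap intervals. These two features are illustrative assumptions, not the specific measures used in the cited studies, and the timestamps are hypothetical.

```python
import statistics

def tapping_features(tap_times_s):
    """Summarize one finger-tapping trial.

    tap_times_s: increasing timestamps (seconds) of successive taps;
    at least three taps are needed so that two intervals exist.
    Returns (taps per second, coefficient of variation of inter-tap intervals).
    """
    intervals = [b - a for a, b in zip(tap_times_s, tap_times_s[1:])]
    rate = len(intervals) / (tap_times_s[-1] - tap_times_s[0])
    cv = statistics.stdev(intervals) / statistics.mean(intervals)
    return rate, cv

# Two hypothetical trials with the same tapping rate but different rhythm regularity.
regular = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
irregular = [0.0, 0.15, 0.45, 0.55, 0.9, 1.0]

for name, trial in (("regular", regular), ("irregular", irregular)):
    rate, cv = tapping_features(trial)
    print(f"{name}: {rate:.1f} taps/s, CV={cv:.2f}")
```

Both trials run at 5 taps/s, but the irregular one has a CV of about 0.59 versus essentially 0 for the regular one; features like these, recorded daily, are the kind of high-velocity signal that could be tracked against medication timing.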

Evolving evaluation methods

The way in which new potential neurological therapies are being developed is also changing. Traditionally, Randomized Controlled Trials (RCTs) evaluate the safety and efficacy of potential new treatments. In an RCT, the treatment group is compared to a control or placebo group, in terms of outcome measures, at predefined observation points. While RCTs are the gold standard for developing new treatments, they have several limitations [117], which can include high cost, lengthy completion times, limited generalizability of results, and restricted observations (e.g., made only at a limited number of predefined protocol time points, such as baseline and end of treatment). As a result, clinical practice is currently constrained by the interpretations and limitations of RCTs and evidence-based medicine [118], which are largely responsible for physicians’ predominantly reactive mindset. A wealth of recent work on Big Data analysis points to a potential solution for predicting individual patient behavior and enabling proactive Precision Medicine management [119] by augmenting and extending RCT design [117]. Standardization and automation of procedures using Big Data make entering and extracting data easier and could reduce the effort and cost of running an RCT. Big Data can also be used to formulate hypotheses fueled by large, preliminary observational studies and/or to carry out virtual trials. For example, Peter et al. showed how Big Data could be used to move from basic scientific discovery to translation to patients in a non-linear fashion [120]. Given the potential pathophysiological connection between PD and inflammatory bowel disease (IBD), they evaluated the incidence of PD in IBD patients and investigated whether anti-tumor necrosis factor (anti-TNF) treatment for IBD affected the risk of developing PD. Rather than a traditional RCT, they ran a virtual repurposing trial using data from 170 million people in two large administrative claims databases.
The study observed a 28% higher incidence rate of PD in IBD patients than in unaffected matched controls. In IBD patients, anti-TNF treatment was associated with a 78% reduction in the rate of PD incidence relative to patients who did not receive the treatment [120, 121]. A similar approach was reported by Slade et al., who conducted experiments on rats to investigate the effects of Attention Deficit Hyperactivity Disorder (ADHD) medication (type and timing) on the “rats’ propensity to exhibit addiction-like behavior”, which led to the hypothesis that initiating ADHD medication in adolescence “may increase the risk for SUD in adulthood”. To test this hypothesis in humans, rather than running a traditional RCT, they used healthcare Big Data from a large claims database and, indeed, found that “temporal features of ADHD medication prescribing”, not subject demographics, predicted SUD development in adolescents on ADHD medication [122]. A hybrid approach was used in the study by Yu et al. [123], which examined the potential of vitamin K2 (VK2) to reduce the risk of PD, given its anti-inflammatory properties and the role of inflammation in PD pathogenesis. Initially, Yu et al. assessed 93 PD patients and 95 controls and found that the patients had lower serum VK2 levels than the healthy controls. To confirm the connection between PD and inflammation, the study then analyzed data from a large public database, which revealed that PD patients exhibit dysregulated inflammatory responses and coagulation cascades that correlate with decreased VK2 levels [123].
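Effect sizes like those reported above are typically expressed as incidence rate ratios (IRRs): a 28% higher incidence corresponds to an IRR of about 1.28, and a 78% reduction to an IRR of about 0.22. The sketch below computes a crude IRR with a Wald 95% confidence interval on the log scale from hypothetical event counts and person-years (not the data of the cited studies); real claims-database analyses also adjust for confounders, e.g., via matching or regression. Running it with the same rates at ten times the sample size shows how larger samples narrow the interval.

```python
import math

def incidence_rate_ratio(events_exp, pyears_exp, events_ref, pyears_ref, z=1.96):
    """Crude incidence rate ratio with a Wald CI computed on the log scale."""
    irr = (events_exp / pyears_exp) / (events_ref / pyears_ref)
    se_log = math.sqrt(1 / events_exp + 1 / events_ref)  # SE of log(IRR) for Poisson counts
    lower = math.exp(math.log(irr) - z * se_log)
    upper = math.exp(math.log(irr) + z * se_log)
    return irr, lower, upper

# Hypothetical cohorts with identical rates but a tenfold difference in size.
small = incidence_rate_ratio(128, 100_000, 100, 100_000)
large = incidence_rate_ratio(1_280, 1_000_000, 1_000, 1_000_000)

for label, (irr, lo, hi) in (("small", small), ("large", large)):
    print(f"{label}: IRR={irr:.2f} (95% CI {lo:.2f}-{hi:.2f}), "
          f"change in rate {100 * (irr - 1):+.0f}%")
```

Both cohorts give an IRR of 1.28 (a 28% higher rate), but only the larger cohort's interval excludes 1, which illustrates why very large administrative databases can support conclusions that a small study could not.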

Even though these pioneering studies demonstrate potential ways in which Big Data can be used to perform virtual trials, several challenges remain. The Big Data processing pipeline, from collection to analysis, still needs to be refined. Moreover, it is still undetermined how regulatory bodies will ultimately use this type of data. In the US, the Food and Drug Administration (FDA) has acknowledged the future potential of “Big Data” approaches, such as using data gathered from Electronic Health Records (EHRs), pharmacy dispensing, and payor records, to help evaluate the safety and efficacy of therapeutics [124]. Furthermore, the FDA has begun exploring and using High-Performance Computing (HPC) to tackle Big Data problems internally [125]. It has concluded that Big Data methodologies could broaden “the range of investigations that can be performed in silico” and potentially improve “confidence in devices and drug regulatory decisions using novel evidence obtained through efficient big data processing”. The FDA is also employing Big Data based on Real World Evidence (RWE), for example through its Sentinel Innovation Center, which will implement data science advances (e.g., machine learning, natural language processing) to expand the use of EHR data for medical product surveillance [126, 127]. Lastly, crowdsourcing of data acquisition and analysis remains to be explored and is outside the scope of this review [128].

Big Data case studies in neurology

To provide the reader with a sample of existing Big Data solutions for improving patient care (beyond those surveyed above), we focus on three disorders: PD, SUD, and Pain. While Big Data has positively impacted numerous other neuropathologies (e.g., [129,130,131,132]), we chose these three disorders because of their significant societal impact. They also represent different stages of maturity in the application of Big Data to Neurology. Finally, we illustrate Big Data’s foreseeable role in therapeutic technology through brain stimulation, which is used in the aforementioned disorders and is particularly suitable for Precision Medicine.

PD

After AD, PD is the second most prevalent neurodegenerative disorder [133,134,135]. About 10 million people live with PD worldwide, with ~ 1 million cases in the US. The loss of dopamine-producing neurons leads to symptoms such as tremor, rigidity, bradykinesia, and postural instability [136]. Traditional treatments include levodopa, physical therapy, and neuromodulation (including Deep Brain Stimulation (DBS) and Noninvasive Brain Stimulation (NIBS)) [36, 137, 138].

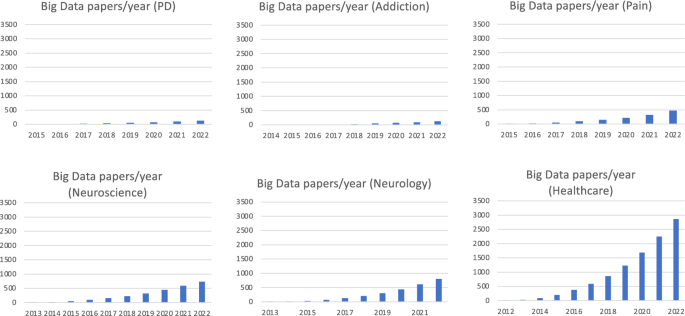

The increasing significance of Big Data in both PD research and patient care is reflected in the rising number of papers published over the past decade (Fig. 3). Several national initiatives have aimed to build public databases to facilitate research. For example, the Michael J. Fox Foundation’s Parkinson’s Progression Markers Initiative (PPMI) gathers data from about 50 sites in several countries, including the US, Israel, Australia, and European nations. Its objective is to identify potential biomarkers of disease progression [139, 140]. A major area of research involving Big Data analytics focuses on PD’s risk factors, particularly through genetic data analysis. The goal of this work is to improve our understanding of the causes of the disease and to develop preventive treatments. The meta-analysis of PD genome-wide association studies by Nalls et al. illustrates this approach. It examined “7,893,274 variants” among “13,708 cases and 95,282 controls”, and the findings revealed and confirmed “28 independent risk variants” for PD “across 24 loci” [141]. Patient phenotyping for treatment outcome prediction is another research area that uses Big Data analytics. Wong et al. review the use of structural and functional connectivity studies to enhance the efficacy of DBS treatment for PD and other neurological diseases [142]. An emerging area of patient assessment is wearable sensors and/or apps for potential real-time monitoring of symptoms and response to treatment [143]. A major project in this area is the iPrognosis mobile app, which was funded by the EU Research Programme Horizon 2020. The project aimed to accelerate PD diagnosis and to develop strategies to help improve and maintain the quality of life of PD patients by capturing data during user interaction with smart devices, including smartphones and smartwatches [144].
Similar to other diseases, PD analysis is also being conducted via social media (e.g., [16, 145]) and EHR [146, 147] analyses. See Table 4 and Additional file 1: Table S4 or review articles in [148,149,150,151,152,153,154] for further examples of Big Data research in PD.
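Genome-wide meta-analyses such as the one by Nalls et al. combine per-variant effect estimates from many cohorts. A minimal sketch of the standard inverse-variance-weighted fixed-effect combination for a single variant is shown below. It assumes that each cohort reports a log odds ratio (beta) and its standard error. The input values are hypothetical, the conventional genome-wide significance threshold of 5 × 10^-8 is used only for illustration, and the sketch is not presented as the exact pipeline of [141].

```python
import math

GENOME_WIDE_ALPHA = 5e-8  # conventional genome-wide significance threshold


def fixed_effect_meta(betas, ses):
    """Inverse-variance-weighted fixed-effect meta-analysis for one variant.

    betas: per-cohort effect estimates (e.g., log odds ratios).
    ses: their standard errors. Returns (pooled beta, pooled SE, two-sided p).
    """
    weights = [1.0 / se ** 2 for se in ses]
    total = sum(weights)
    beta = sum(w * b for w, b in zip(weights, betas)) / total
    se = math.sqrt(1.0 / total)
    z = beta / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p-value
    return beta, se, p


# Hypothetical per-cohort estimates for a single variant.
betas = [0.12, 0.10, 0.15]
ses = [0.030, 0.025, 0.040]
beta, se, p = fixed_effect_meta(betas, ses)
print(f"beta={beta:.3f}, SE={se:.3f}, p={p:.1e}, genome-wide: {p < GENOME_WIDE_ALPHA}")
```

Because the weights are inverse variances, larger and more precise cohorts dominate the pooled estimate. The stringent threshold reflects the very large number of variants tested.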

Cumulative number of papers on Big Data over time for different areas, as per PubMed. The panels illustrate when Big Data started to impact each area and allow a comparison across areas. The graphs were created simply by searching for the keywords “Big Data” AND “area”, with “area” being “Parkinson’s Disease”, “Addiction”, etc., rather than by using the multiple keywords that may describe each field, so actual numbers are likely underestimated.

SUD and Opioid Use Disorder (OUD)

The economic and social burden associated with SUDs is enormous. Opioids are the leading cause of overdose deaths from substance use, and death rates have increased drastically, with over 68,000 opioid-involved overdose deaths in the US in 2020 [155]. In 2017, the US economic costs of OUD and of fatal opioid overdoses were $471 billion and $550 billion, respectively [156]. Treatments focus on replacement (e.g., nicotine and opioid replacement) and abstinence and are often combined with self-help groups or psychotherapy [157, 158].

As with PD, the increasing impact of Big Data on SUD and OUD research and patient care is reflected in the growing number of papers published in PubMed over the past decade (Fig. 3). Several national initiatives have aimed to build public databases to facilitate SUD research. For example, the ENIGMA project, launched in 2009, includes a working group specifically focused on addiction. As of 2020, this working group had gathered genetic, epigenetic, and/or imaging data from thousands of SUD subjects across 33 sites [37]. As part of this research, Mackey et al. have been investigating the association between dependence and regional brain volumes, both substance-specific and general [159]. Similarly, studies using data sets from the UK Biobank and 23andMe (representing > 140,000 subjects) have used scores on the Alcohol Use Disorder Identification Test (AUDIT) to identify the genetic basis of alcohol consumption and alcohol use disorder [160]. Big Data is also being used to devise strategies for retaining patients on medication for OUD, as roughly 50% of persons discontinue OUD therapy within a year [158]. The Veterans Health Administration is spearheading such an initiative based on data (including clinical, insurance claim, imaging, and genetic data) from > 9 M veterans [158]. Social media is also emerging as a way to monitor substance abuse and related behaviors. For example, Cuomo et al. analyzed geo-localized Big Data collected in 2015 from 10 M tweets. They regressed these data against Indiana State Department of Health data on non-fatal opioid-related hospitalizations and new “HIV cases from the US Centers for Disease Control and Prevention” to examine the transition from “opioid prescription abuse to heroin injection and HIV transmission risk” [161]. Leveraging Big Data from online content is likely to aid public health practitioners in monitoring SUD.
Table 5 and Additional file 1: Table S5 summarize Big Data research in SUD and OUD.
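Retention on medication for OUD, such as the roughly 50% one-year discontinuation cited above, is a time-to-event quantity. Patients who are still on treatment at the end of follow-up are censored rather than counted as retained or lost. A minimal sketch estimates retention with the Kaplan-Meier product-limit estimator in plain Python. It assumes per-patient days on medication and a discontinuation flag, and all values are hypothetical.

```python
def kaplan_meier(durations, discontinued):
    """Kaplan-Meier estimate of the probability of remaining on medication.

    durations: days from treatment start to discontinuation or end of follow-up.
    discontinued: True if the patient stopped treatment (event), False if the
    patient was still on treatment when follow-up ended (censored).
    Returns a list of (day, retention) steps at each event time.
    """
    records = sorted(zip(durations, discontinued))
    at_risk = len(records)
    retention = 1.0
    curve = []
    i = 0
    while i < len(records):
        day = records[i][0]
        events = censored = 0
        # Group all patients whose follow-up ends on the same day.
        while i < len(records) and records[i][0] == day:
            if records[i][1]:
                events += 1
            else:
                censored += 1
            i += 1
        if events:
            retention *= 1.0 - events / at_risk
            curve.append((day, retention))
        # Both events and censored patients leave the risk set after this day.
        at_risk -= events + censored
    return curve


def retention_at(curve, day):
    """Step-function lookup of estimated retention on a given day."""
    value = 1.0
    for event_day, retention in curve:
        if event_day > day:
            break
        value = retention
    return value


# Hypothetical cohort of 8 patients: days on medication and whether they stopped.
days = [30, 90, 120, 200, 365, 365, 400, 500]
stopped = [True, True, False, True, True, False, False, True]
curve = kaplan_meier(days, stopped)
print(f"Estimated 1-year retention: {retention_at(curve, 365):.2f}")
```

Treating censored patients as dropouts would bias retention downward, and ignoring them would bias it upward. The product-limit estimator avoids both errors by removing censored patients from the risk set only after their last observed day.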

Pain

Chronic pain is a widespread condition that affects a significant portion of the global population: an estimated 20% of adults suffer from it, and 10% are newly diagnosed each year [162]. In the US, where it is highly prevalent, chronic pain affects over 50 million adults. The most common pain locations are the back, hip, knee, and foot [163]. Underlying causes include neural entrapment syndromes (e.g., Carpal Tunnel Syndrome (CTS)), peripheral neuropathy (such as from diabetes), and unknown causes (such as non-specific chronic Lower Back Pain (LBP)). Pain treatment remains challenging and includes physical therapy, pharmacological, and neuromodulation approaches [164]. As in other areas of Neurology, the Big Data revolution has been affecting pain research and management strategies. As reviewed by Zaslansky et al., multiple databases have been created to monitor pain. One example is the international acute pain registry PAIN OUT, established in 2009 with EU funds to improve the management of postoperative pain [165, 166]. Besides risk factors [167], such as those based on genetic data (e.g., see [168, 169]), pain studies using Big Data mainly focus on symptom management and improving therapy outcomes. Large-scale studies aimed at comparing different treatments [170, 171] or at identifying phenotypes in order to classify and diagnose patients (see, for example, [172]) are particularly common. Table 6 and Additional file 1: Table S6 summarize Big Data research in Pain, while Fig. 3 shows the increasing number of published papers in the field.
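Phenotype identification of the kind cited above is often framed as unsupervised clustering of patient features. As one generic possibility (not the method of [172]), the sketch below applies k-means with a deterministic farthest-point initialization to standardized pain features. The feature names and subgroup values are hypothetical and serve only as illustration.

```python
import numpy as np


def standardize(X):
    """Z-score each feature so that no single scale dominates the distances."""
    return (X - X.mean(axis=0)) / X.std(axis=0)


def kmeans(X, k, n_iter=100):
    """Plain k-means (Lloyd's algorithm) with farthest-point initialization.

    X: (n_patients, n_features) array. Returns (labels, centers).
    """
    # Farthest-point initialization: start at the first patient, then repeatedly
    # add the patient farthest from all centers chosen so far.
    centers = [X[0]]
    for _ in range(1, k):
        dist = np.min([np.linalg.norm(X - c, axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(dist)])
    centers = np.array(centers, dtype=float)

    for _ in range(n_iter):
        # Assign each patient to the nearest center.
        dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        # Move each center to the mean of its patients (keep it if empty).
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


# Hypothetical features per patient: pain intensity, pain interference,
# and a neuropathic-symptom score, drawn from two synthetic subgroups.
rng = np.random.default_rng(0)
features = np.vstack([
    rng.normal([3.0, 2.0, 1.0], 1.0, size=(40, 3)),
    rng.normal([7.0, 6.0, 5.0], 1.0, size=(40, 3)),
])
labels, _ = kmeans(standardize(features), k=2)
print(np.bincount(labels))
```

In practice, the number of clusters would itself need to be justified, for example with stability or external validation analyses.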

Example of Big Data impact on treatments and diagnostics: brain stimulation

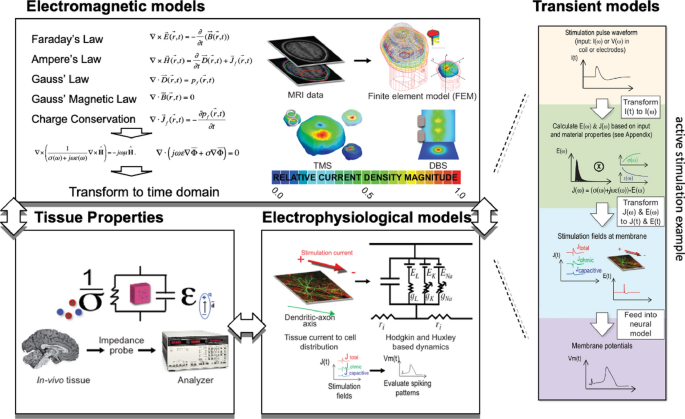

In the last twenty years, neurostimulation methods have seen a substantial rise in application for neurological disease treatment [36, 138, 173]. Among the most used approaches are invasive techniques like DBS [173,174,175,176], which use implanted devices to apply electrical currents directly to neural tissue and modulate neural activity. Noninvasive techniques, such as those applied transcranially, offer stimulation without the risks associated with surgical procedures (such as bleeding or infection) [36]. Both invasive and noninvasive approaches have been used to treat psychiatric and neurological disorders, including depression, PD, addiction, and pain. While High Performance Computing has been used in the field for some time (see Fig. 4), Big Data applications have only recently started to be explored in brain stimulation. For example, structural and functional connectome studies have yielded new insights into potential stimulation targets, in the quest to enhance stimulation effectiveness. DTI has been used to optimize the definition of targets for DBS and noninvasive stimulation technologies since the mid-2000s [177,178,179]. Big Data and advances in computational methods have since opened new avenues for DTI to further improve stimulation and enhance clinical results. For example, in 2017, Horn et al. used structural and functional connectivity data from open-source connectome databases to build a computational model that predicts outcomes following subthalamic nucleus modulation with DBS in PD. These databases included healthy-subject connectomes from the Brain Genomics Superstruct Project and the HCP, and PD connectomes from the PPMI. Big Data thereby allowed the identification of distinct patterns of functional and structural connectivity, each of which independently and accurately predicted DBS response.
Additionally, the findings held external validity as connectivity profiles obtained from one cohort were able to predict clinical outcomes in a separate DBS center’s independent cohort. This work also demonstrated the prospective use of Big Data in Precision Medicine by illustrating how connectivity profiles can be utilized to predict individual patient outcomes [180]. For a more comprehensive review of application of functional connectome studies to DBS, the reader is referred to [142], where Wong et al. discuss application of structural and functional connectivity to phenotyping of patients undergoing DBS treatment and prediction of DBS treatment response. Big Data is also expected to augment current efforts in the pursuit of genetic markers to optimize DBS in PD (e.g., [148, 181, 182]).
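The connectivity-based prediction described above can be sketched in simplified form. Assume each patient has a connectivity map seeded from their stimulation site and a clinical improvement score. The code below builds a voxel-wise correlation map (an "R-map") from all but one patient and scores the left-out patient by the spatial correlation between their map and that R-map. The data are synthetic, and the procedure is a reduced illustration of this family of methods rather than the exact pipeline of [180].

```python
import numpy as np


def r_map(conn, improvement):
    """Voxel-wise Pearson correlation between connectivity and clinical improvement.

    conn: (n_patients, n_voxels) connectivity of each patient's stimulation site.
    improvement: (n_patients,) clinical improvement scores.
    """
    c = conn - conn.mean(axis=0)
    y = improvement - improvement.mean()
    return (c.T @ y) / np.sqrt((c ** 2).sum(axis=0) * (y ** 2).sum())


def leave_one_out_similarity(conn, improvement):
    """For each patient, spatially correlate their connectivity map with an
    R-map built from all other patients (a patient is never in their own model)."""
    n = len(improvement)
    scores = np.empty(n)
    for i in range(n):
        keep = np.arange(n) != i
        model = r_map(conn[keep], improvement[keep])
        scores[i] = np.corrcoef(conn[i], model)[0, 1]
    return scores


# Synthetic data: a hidden "optimal" connectivity pattern drives improvement.
rng = np.random.default_rng(42)
n_patients, n_voxels = 40, 500
pattern = rng.normal(size=n_voxels)
loading = rng.uniform(-1, 1, size=n_patients)
conn = np.outer(loading, pattern) + rng.normal(size=(n_patients, n_voxels))
improvement = loading + rng.normal(scale=0.1, size=n_patients)

scores = leave_one_out_similarity(conn, improvement)
print("r(similarity, improvement) =", round(np.corrcoef(scores, improvement)[0, 1], 2))
```

The leave-one-out loop is what makes the check meaningful. Each prediction comes from a model that never saw that patient, which mirrors, on a small scale, the cross-cohort validation described above.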

High Performance Computing solutions for modeling brain stimulation dosing have been explored for well over a decade. The above figure is adapted from [183], where Sinusoidal Steady State Solutions of the electromagnetic fields during TMS and DBS were determined from MRI-derived Finite Element Models based on the frequency-specific electromagnetic properties of head and brain tissues. The sinusoidal steady state solutions were then transformed into the time domain to rebuild the transient solution for the stimulation dose in the targeted brain tissues. These solutions were then coupled with single-cell conductance-based models of human motor neurons to explore the electrophysiological response to stimulation. Today, high-resolution patient-specific models are being developed (see below) that implement more complex biophysical modeling (e.g., coupled electromechanical field models). These models are being explored as part of large heterogeneous data sets (e.g., clinical, imaging, and movement kinematic data) to optimize and tune therapy.
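The steady-state-to-transient step described in the caption above is a Fourier synthesis, which is valid for linear models. In the sketch below, a hypothetical first-order (RC) transfer function stands in for the frequency-specific finite element solution at a single point. The source waveform is decomposed into harmonics, and each harmonic is scaled by the steady-state response at its frequency. The inverse transform then rebuilds the time-domain dose. The pulse parameters are illustrative assumptions.

```python
import numpy as np


def transient_from_steady_state(waveform, dt, transfer):
    """Rebuild the time-domain response of a linear model from per-frequency
    sinusoidal steady-state solutions (Fourier synthesis).

    waveform: one period of the source current, sampled every dt seconds.
    transfer: callable mapping frequencies (Hz) to the complex steady-state
        response per unit source (a stand-in for a frequency-specific FEM solve).
    """
    spectrum = np.fft.rfft(waveform)                 # source harmonics
    freqs = np.fft.rfftfreq(len(waveform), d=dt)     # harmonic frequencies (Hz)
    response = spectrum * transfer(freqs)            # steady state, per harmonic
    return np.fft.irfft(response, n=len(waveform))   # back to the time domain


def first_order_tissue(tau):
    """Hypothetical lumped RC (first-order low-pass) transfer function."""
    return lambda f: 1.0 / (1.0 + 1j * 2.0 * np.pi * f * tau)


# Example: a 130 Hz train of 60 microsecond monophasic pulses, sampled at 1 MHz.
dt = 1e-6
n = int(round(1.0 / (130.0 * dt)))
pulse = np.zeros(n)
pulse[:60] = 1.0
field = transient_from_steady_state(pulse, dt, first_order_tissue(tau=20e-6))
print(f"peak normalized response: {field.max():.2f}")
```

Replacing `first_order_tissue` with per-frequency FEM results (e.g., interpolated over the harmonic frequencies) would keep the same structure. The coupling to conductance-based neuron models is not shown.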

Compared to DBS, studies on NIBS have been sparser. However, Big Data methodologies have facilitated the improvement and standardization of established TMS techniques (i.e., single and paired pulse), which are characterized by large inter-subject variability, by identifying factors that affect responses to this stimulation in a multicentric sample [184]. A similar paradigm was followed to characterize theta-burst stimulation [185]. Regarding disease, a large multisite neuroimaging study (n = 1188) showed that resting-state connectivity in limbic and frontostriatal networks can be used to classify neurophysiological subtypes of depression. Moreover, in a subset of patients (n = 154), individual connectivity evaluations predicted responsiveness to TMS therapy better than symptomatology alone [17].

Proposed solutions

As reviewed above, Big Data has been improving the care of patients with neurological diseases in multiple ways. It has elevated the value of diverse and often incomplete data sources, enhanced data sharing and multicentric studies, streamlined multidisciplinary collaboration, and improved the understanding of neurological disease (diagnosis, prognosis, optimizing current treatment, and helping develop novel therapies). Nevertheless, existing methodologies suffer from several limitations, which have prevented the full realization of Big Data’s potential in Neuroscience and Neurology. Below, we discuss the limitations of current approaches and propose possible solutions.

Full exploitation of available resources

Many purported “Big Data” studies in Neuroscience and Neurology do not fully implement the classic 3 V’s (i.e., “Volume, Variety, and Velocity”) or 5 V’s (i.e., “Volume, Variety, Velocity, Veracity and Value”), and/or they interpret the V’s in highly heterogeneous ways. For example, in “Big Data” Neuroscience and Neurology studies, Volume sometimes refers to multidimensional datasets from hundreds of thousands of patients and other times to unidimensional datasets from tens of patients. Value, a characteristic typically defined in financial terms in other Big Data fields, is not usually considered in Big Data studies in Neuroscience and Neurology. In this paper, across studies and databases, we adopted a measure of clinical or preclinical Value where financial information was not given (see Tables 2–6 and Additional file 1: Tables S2–S6). Data Veracity is not standardized in Neuroscience or Neurology. We therefore focused our analysis on both typical data Veracity measures and potential experimental sources of error in the data sets of the studies reviewed above. In terms of Variety, few clinical studies make use of large multimodal data sets, and even fewer are acquired and processed at a rapid Velocity. Data Velocity information is sparsely reported throughout the literature, but clear reporting would enable better understanding and refinement of methodologies across the research community.

While these limitations may be dismissed as merely semantic, we believe that these deficits often result in Big Data analytics being underexploited, which limits the potential impact of a study and possibly increases its cost. Thus, aligning studies in Neuroscience and Neurology with the V’s represents an opportunity to leverage the knowledge, technology, analytics, and principles established in fields that have been using Big Data more extensively. Doing so would improve Big Data studies in Neurology and Neuroscience. Identifying whether a study is suitable for Big Data approaches makes it easier to choose the best tools for the study. It also makes it easier to exploit the plethora of resources (databases, software, models, data management strategies) that are already available (some of which we have reviewed herein; see, for example, Tables 1–2 and Additional file 1: Tables S1, S2).

Tools for data harmonization

The overall lack of tools for data harmonization (particularly for multimodal datasets used in clinical research and care) is a significant issue in current Big Data studies. Creating methods for sharing data and open-access databases has been a priority of Big Data initiatives since their inception. Data sharing is required by many funding agencies and scientific journals, and publicly available repositories have been established. While these repositories have become more common and organized (see Sect. “Existing Solutions”), there has been less emphasis on developing tools for quality control, standardization of data acquisition, visualization, pre-processing, and analysis. With the proliferation of initiatives promoting data sharing and pooling of existing resources, the need for better tools in these areas is becoming increasingly urgent. The US Department of Health and Human Services has worked to establish standardized libraries of outcome measures in various areas, such as Depression [186, 187]. The NIH has spearheaded Clinical Trials Network (CTN)-recommended Common Data Elements (CDEs) for use in RCTs and EHRs [188]. Despite these efforts, more work is needed to ensure data harmonization not only across clinical endpoints but also across all data types that typically comprise Big Data in Neuroscience and Neurology. For example, in neuroimaging, quality control of acquired images is a long-standing problem. Traditionally, it is performed visually, but in Big Data sets the sheer volume makes this approach expensive and impractical. Thus, methods for automatic quality control are now in high demand [189]. Quality control issues are compounded in collaborative datasets, where variability may stem from multiple sources. In multisite studies, a typical source of variability is the use of different MRI scanners (e.g., from different manufacturers, with different field strengths, or subject to hardware drifts [190, 191]).
Variability can also arise from data pre-processing techniques and pipelines. For example, the pre-processing pipeline of MRI data involves a variety of steps (such as correcting field inhomogeneity and motion, segmentation, and registration). This pipeline continues to be refined through algorithm development, which ultimately affects the reproducibility/Veracity of study results. As an additional example, Chen et al. noted similar problems while working on data harmonization methods in genome-wide association studies: an “aggregation of controls from multiple sources is challenging due to batch effects, difficulty in identifying genotyping errors and the use of different genotyping platforms” [192].
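As a minimal sketch of automated quality control, assume that a foreground (head) mask has been precomputed for each scan. The code below computes a simple signal-to-noise ratio and flags scans whose value is an outlier relative to the rest of the dataset, using a median/MAD-based modified z-score. The SNR definition and the 3.5 cutoff are common conventions chosen here for illustration, and dedicated QC pipelines compute many more image-quality metrics.

```python
import numpy as np


def snr(volume, foreground_mask):
    """Simple SNR: mean foreground intensity over background standard deviation."""
    return volume[foreground_mask].mean() / volume[~foreground_mask].std()


def robust_outliers(values, threshold=3.5):
    """Flag values whose modified z-score (median/MAD based) exceeds threshold.

    The 0.6745 factor makes the MAD comparable to a standard deviation for
    normally distributed data; 3.5 is a commonly used cutoff.
    """
    values = np.asarray(values, dtype=float)
    median = np.median(values)
    mad = np.median(np.abs(values - median))
    if mad == 0:
        return np.zeros(len(values), dtype=bool)
    modified_z = 0.6745 * (values - median) / mad
    return np.abs(modified_z) > threshold


# Hypothetical per-scan SNR values from a multisite dataset.
scan_snr = [10.0, 11.0, 10.5, 9.8, 10.2, 3.0]
print(np.flatnonzero(robust_outliers(scan_snr)))  # -> [5], the scan to review
```

Median/MAD statistics are used instead of the mean and standard deviation because a few bad scans would otherwise inflate the spread and mask themselves.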

Some progress towards harmonization of data and analysis procedures [193] has been enabled by the availability of free software packages that incorporate widely accepted sets of best practices. Examples include Statistical Parametric Mapping (SPM), FreeSurfer, FMRIB Software Library (FSL), Analysis of Functional NeuroImages (AFNI), or their combination (such as Fusion of Neuroimaging Processing (FuNP) [194]). In addition, open-access pre-processed datasets have been made available (see Table 2 and Additional file 1: Table S2). For example, the Preprocessed Connectome Project has been systematically pre-processing the data from the International Neuroimaging Data-sharing Initiative and the 1000 Functional Connectomes Project [195, 196]. Similarly, GWAS Central (Genome-wide association study Central) “provides a centralized compilation of summary level findings from genetic association studies” [197]. As another example, the EU-funded neuGRID and neuGRID4You projects included a set of analysis tools and services for neuroimaging analysis [106]. Software such as ComBat can also help researchers harmonize data from various types of studies, regardless of whether they are analyzing newly collected data or retrospective data gathered under older standards. ComBat was initially created to eliminate batch effects in genomic data [198] and was subsequently adapted to handle DTI, cortical thickness measurements [199], and functional connectivity matrices [200]. For more detailed discussions of efforts to address data harmonization challenges in neuroimaging, the reader is directed to the review papers of Li et al. [12], Pinto et al. [201], and Jovicich et al. [202]. In clinical studies relying on data other than neuroimaging (and/or biospecimens), standardizing clinical assessments and outcome measures across multiple sites has also proven challenging.
For example, as shown by the ENIGMA study group, multi-center addiction studies face notable methodological challenges due to the heterogeneity of measurements for substance consumption in the context of genomic studies [203].
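ComBat models each feature as a biological signal plus additive (location) and multiplicative (scale) site effects, and it estimates those site effects with empirical Bayes. The sketch below keeps only the core location/scale idea. For each site and feature, the data are standardized and then mapped back onto the pooled mean and the pooled within-site standard deviation. The sketch deliberately omits covariate preservation and empirical Bayes shrinkage, so it illustrates the principle and is not a substitute for published ComBat implementations. The two-scanner data are hypothetical.

```python
import numpy as np


def location_scale_harmonize(Y, site):
    """Remove per-site additive (location) and multiplicative (scale) effects.

    Y: (n_subjects, n_features), e.g., regional cortical thickness.
    site: (n_subjects,) site/scanner labels; each site needs >= 2 subjects.
    Simplified relative to ComBat: no biological covariates are preserved and
    no empirical Bayes shrinkage of the site parameters is applied.
    """
    Y = np.asarray(Y, dtype=float)
    site = np.asarray(site)
    sites = np.unique(site)
    grand_mean = Y.mean(axis=0)

    # Pooled within-site standard deviation (site mean differences excluded).
    residuals = np.empty_like(Y)
    for s in sites:
        idx = site == s
        residuals[idx] = Y[idx] - Y[idx].mean(axis=0)
    pooled_sd = np.sqrt((residuals ** 2).sum(axis=0) / (len(Y) - len(sites)))

    harmonized = np.empty_like(Y)
    for s in sites:
        idx = site == s
        z = (Y[idx] - Y[idx].mean(axis=0)) / Y[idx].std(axis=0, ddof=1)
        harmonized[idx] = z * pooled_sd + grand_mean
    return harmonized


# Hypothetical two-scanner study: scanner B reads thicker and noisier.
rng = np.random.default_rng(0)
site = np.array(["A"] * 30 + ["B"] * 30)
thickness = np.vstack([
    rng.normal(2.5, 0.10, size=(30, 4)),
    rng.normal(2.7, 0.20, size=(30, 4)),
])
harmonized = location_scale_harmonize(thickness, site)
print(harmonized[site == "A"].mean(axis=0) - harmonized[site == "B"].mean(axis=0))
```

Because no biological covariates are modeled, this simplified version would also remove genuine group differences that happen to be confounded with site (e.g., if one site recruits older patients).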

Developing tools to harmonize datasets across different sources and data types (e.g., based on machine learning [191]) for Neurology-based clinical studies might allow researchers to exploit Big Data to its full potential. Tools for complex data visualization and interactive manipulation are also needed to allow researchers from different backgrounds to fully understand the significance of their data [204]. For studies in the design phase, researchers can identify whether tools for data harmonization are available or develop such tools early in the study. Either step will enhance the Veracity, and ultimately the impact, of the study while cutting costs.

New technologies for augmented study design and patient data collection

Traditional clinical studies are associated with several recognized limitations. However, a few recent Big Data studies have shown potential in mitigating some of these limitations.

First, traditional clinical studies, particularly RCTs, which serve as the standard in clinical trials, are often expensive and inefficient. Incorporating Big Data, particularly in the form of diverse data types or multicenter trials, can further amplify these issues and lead to steep increases in costs. Thus, there is a pressing need for tools that can optimize resources and contain expenses. Virtual trials are a promising but underutilized approach that could enhance study design and address cost-related challenges. To this end, health economics methods could be used to compare different scenarios, such as recruitment strategies or inclusion criteria, and to select the most effective one before initiating an actual clinical study. These methods can also assign quantitative values to data sets or methods [205]. For studies testing interventions, virtual experiments that use simulations can be performed. For example, in the area of brain stimulation, virtual DBS is being explored [206] to supplement existing study design. Similarly, for NIBS, our group and others are building biophysics-based models that can be used to personalize interventions [58].
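A virtual trial can be as simple as simulating many hypothetical trials under each candidate design before enrolling anyone. The sketch below assumes normally distributed outcomes and a two-sided z-test on the difference in means. For two hypothetical inclusion-criteria scenarios, it estimates power by Monte Carlo simulation and reports the smallest tried sample size that reaches 80% power, together with its recruitment cost. All effect sizes, standard deviations, and per-patient costs are invented for illustration. The sketch covers only the power/cost trade-off and is not a full health economics model.

```python
import numpy as np


def simulated_power(n_per_arm, effect, sd, n_sims=2000, seed=0):
    """Monte Carlo power of a two-arm trial analyzed with a two-sided z-test
    on the difference in means (normal approximation, alpha ~0.05)."""
    rng = np.random.default_rng(seed)
    treated = rng.normal(effect, sd, size=(n_sims, n_per_arm))
    control = rng.normal(0.0, sd, size=(n_sims, n_per_arm))
    diff = treated.mean(axis=1) - control.mean(axis=1)
    se = np.sqrt(treated.var(axis=1, ddof=1) / n_per_arm
                 + control.var(axis=1, ddof=1) / n_per_arm)
    return float(np.mean(np.abs(diff / se) > 1.96))


# Hypothetical design scenarios: strict inclusion criteria give a more
# homogeneous sample but costlier recruitment; broad criteria the reverse.
scenarios = {
    "strict": dict(effect=4.0, sd=8.0, cost_per_patient=12_000),
    "broad": dict(effect=3.5, sd=10.0, cost_per_patient=8_000),
}

for name, s in scenarios.items():
    for n in (60, 90, 120, 150):
        power = simulated_power(n, s["effect"], s["sd"])
        if power >= 0.8:
            cost = 2 * n * s["cost_per_patient"]
            print(f"{name}: n={n}/arm, power={power:.2f}, cost=${cost:,}")
            break
    else:
        print(f"{name}: 80% power not reached in the sizes tried")
```

The same loop structure could accommodate richer simulated outcomes (dropout, heterogeneous responders) without changing how the scenarios are compared.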

Second, traditional clinical studies, including RCTs, often suffer from limited data and limited generalizability of conclusions. Collected data are often too limited to fully account for highly multidimensional and heterogeneous neurological conditions. PD is an example: patients’ clinical presentation, progression, and response to different treatment strategies can vary significantly, even within a single day [153]. Other known limitations are limited external validity, due to discrepancies between the study design (patient inclusion criteria) and real-world clinical scenarios, and limited generalizability of findings to time points beyond those assessed during the study. Relaxing study criteria and increasing timepoints could provide more data, but often at the expense of increased patient burden and study cost. Mobile applications can potentially help overcome some of these limitations while offering other advantages. For example, by allowing relatively close monitoring of patients, mobile applications may help capture features of symptoms not easily observable during hospital visits. This richer dataset could be used to design algorithms for patient classification/phenotyping or medication tuning. However, data collected via mobile technology are often limited to questionnaires or to the types of data that can be collected with sensors embedded in mobile/wearable devices (typically accelerometers in motor disorder studies). Leveraging Big Data in this context would require technology that can monitor patients outside the time and space constraints of a traditional clinical study/RCT (e.g., at home or in other unstructured environments). Such technology should be inexpensive enough to be useful at scale while still providing reliable and clinically valuable data.
Other related approaches draw on additional nontraditional data sources, such as payer databases, EHRs, or disease- and treatment-specific social media content, to support conventional findings. For example, the FDA is poised to pursue Big Data approaches to continue assessing products throughout their life cycle to "fill knowledge gaps and inform FDA regulatory decision-making" [207].
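As an illustration of the accelerometer data mentioned above, the sketch below uses the FFT to estimate the dominant frequency of a signal and the share of its power that falls in a tremor band. It assumes a single-axis (or magnitude) acceleration signal sampled at a fixed rate. The 4-6 Hz default reflects the frequency range typically reported for parkinsonian rest tremor, and the recordings are synthetic.

```python
import numpy as np


def _power_spectrum(acc, fs):
    """One-sided power spectrum of a mean-removed signal, without the DC bin."""
    x = np.asarray(acc, dtype=float)
    x = x - x.mean()                      # remove gravity/DC offset
    power = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return freqs[1:], power[1:]


def dominant_frequency(acc, fs):
    """Frequency (Hz) with the most power in the accelerometer signal."""
    freqs, power = _power_spectrum(acc, fs)
    return float(freqs[np.argmax(power)])


def band_power_fraction(acc, fs, band=(4.0, 6.0)):
    """Share of non-DC power inside `band` (default: a 4-6 Hz rest-tremor band)."""
    freqs, power = _power_spectrum(acc, fs)
    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return float(power[in_band].sum() / power.sum())


# Hypothetical 10 s recordings at 100 Hz with added sensor noise.
fs = 100.0
t = np.arange(1000) / fs
rng = np.random.default_rng(0)
tremor = np.sin(2 * np.pi * 5.0 * t) + 0.2 * rng.normal(size=t.size)
voluntary = np.sin(2 * np.pi * 1.0 * t) + 0.2 * rng.normal(size=t.size)
print(dominant_frequency(tremor, fs), round(band_power_fraction(tremor, fs), 2))
print(dominant_frequency(voluntary, fs), round(band_power_fraction(voluntary, fs), 2))
```

Real wearable data would additionally need sensor calibration, windowing over time, and handling of voluntary movement artifacts before such features could feed a phenotyping or medication-tuning algorithm.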

Finally, clinical studies might be subject to bias when important clinical information is missing. This is particularly true for studies that rely on databases built for billing or claims purposes, some of which we have reviewed herein, because these studies use data that were not collected primarily for research (see Additional file 1: Tables S4–S6). A possible way to overcome this limitation is to couple payer data more directly with clinical data and correlate the results. This approach is still mostly theoretical: modern patient tracking systems like Epic are beginning to offer billing code data within the EHR, but these systems were not designed for population-based analysis. Ideally, information such as payer data can be used for exploratory purposes, and the results of the analysis can guide the design of more rigorous studies aimed at testing specific clinical hypotheses.

Tools for facilitating interdisciplinary research

As the use of Big Data continues to expand across various fields, there is a growing need for better tools that can facilitate collaborations among professionals with different backgrounds. A project that exemplifies this need is the American Heart Association (AHA) Precision Medicine Platform [208]. This platform aims to "realize precision cardiovascular and stroke medicine" by merging large, varying datasets and providing analytical tools and tutorials for clinicians and researchers. Despite the strong technological and community-based support of this platform, major challenges related to scalability, security, privacy, and ease of use have prevented it from being integrated into mainstream medicine, subsequently obstructing its full exploitation.

Creating tools to visualize and interactively manipulate multidimensional data (e.g., borrowing from fields such as virtual or augmented reality that already use these tools [209]) might help overcome this type of issue.

Future directions

We have identified current limitations in the application of Big Data to Neuroscience and Neurology and have proposed general solutions to overcome them. Addressing the limitations of Big Data, as currently defined and implemented, could have a major impact on the development of personalized therapies and Precision Medicine. In this field, the acceleration that Big Data could enable has not yet occurred [210]. Unlike a traditional one-size-fits-all approach, Precision Medicine seeks to optimize patient care based on individual patient characteristics, including genetic makeup, environmental factors, and lifestyle. This approach can help in preventing, diagnosing, or treating diseases. Precision oncology has been a driver of Precision Medicine for approximately two decades [211]. It has exploited the availability of big, multi-omics data to develop data-driven approaches that predict the risk of developing a disease, aid diagnosis, identify patient phenotypes, and identify new therapeutic targets. In Neurology, the availability of large neuroimaging, connectivity, and genetics datasets has opened the possibility of data-driven approaches in Precision Medicine. However, these approaches have not yet been fully integrated with clinical decision making and personalized care, and diagnosis and treatment are still often guided by clinical symptoms alone. Currently, there are no widely used platforms, systems, or projects that analytically combine personalized data, either to generate personalized treatment plans or to assist physicians with diagnostics. The AHA Precision Medicine Platform [208] aims to address this gap by providing a means to supplement treatment plans with personalized analytics. However, as discussed above (see SubSect. “Tools for facilitating interdisciplinary research” in Sect. “Proposed Solutions”), integrating this software into mainstream medicine has been challenging.

We have been developing an Integrated Motion Analysis Suite (IMAS) as a potential way to acquire large real-time multimodal data sets for use in personalized care in the movement disorder, pain, and rehabilitation spaces. IMAS combines motion capture technology, inertial sensors (gyroscopes/accelerometers), and force sensors to assess patient movement kinematics from multiple body joints as well as kinetics. The hardware system for capturing movement kinematic and kinetic data is underpinned by an AI-driven computational system. This system includes algorithms for data reduction, modeling, and prediction of clinical scales, prognostic potential for motor recovery (e.g., after an injury such as stroke), and response to treatment. Ultimately, the low-cost hardware package is coupled to computational packages to holistically aid clinicians in motor symptom assessments. The system is currently being investigated as part of a stroke study [212] and is supporting other studies in the movement disorder [213] and Chronic Pain [214, 215] spaces. As for the Big Data component, the system has been designed so that different data streams and systems can be networked and interconnected. As a result, data such as multiple patients’ kinematic/kinetic, imaging, EHR, payer database, and clinical data can be longitudinally assessed and analyzed to develop a continually improving model of patient disease progression. This approach also serves as a method to personalize and optimize therapy delivery and/or predict response to therapy (see below).
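The IMAS algorithms themselves are not detailed here, so the following is only a generic sketch of a "data reduction, modeling, and prediction of clinical scales" chain. Principal component analysis compresses many kinematic features into a few components, and ridge regression maps those components to a clinical score. The latent-factor data, the number of components, and the penalty are hypothetical choices.

```python
import numpy as np


def fit_pca_ridge(X, y, n_components=3, alpha=1.0):
    """Reduce kinematic features with PCA, then fit ridge regression to a score.

    X: (n_patients, n_features) kinematic/kinetic features; y: clinical score.
    Returns the parameters needed by predict_score().
    """
    mean = X.mean(axis=0)
    Xc = X - mean
    # Principal axes are the right singular vectors of the centered data.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    W = Vt[:n_components].T
    Z = Xc @ W
    y_mean = y.mean()
    beta = np.linalg.solve(Z.T @ Z + alpha * np.eye(n_components),
                           Z.T @ (y - y_mean))
    return mean, W, beta, y_mean


def predict_score(model, X):
    """Project new patients onto the stored components and apply the ridge fit."""
    mean, W, beta, y_mean = model
    return (X - mean) @ W @ beta + y_mean


# Synthetic data: 3 latent motor factors drive 40 kinematic features and a score.
rng = np.random.default_rng(0)
latent = rng.normal(size=(250, 3))
mixing = rng.normal(size=(3, 40))
X = latent @ mixing + 0.1 * rng.normal(size=(250, 40))
y = latent @ np.array([2.0, -1.0, 0.5]) + 10.0 + 0.1 * rng.normal(size=250)

model = fit_pca_ridge(X[:200], y[:200])
pred = predict_score(model, X[200:])
print("held-out r =", round(np.corrcoef(pred, y[200:])[0, 1], 3))
```

Fitting on one subset and evaluating on held-out patients, as done here, is the minimum needed before any such model could inform clinical assessments.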

Our group is also developing a new form of NIBS, electrosonic stimulation (ESStim™) [138], and testing it in multiple areas (e.g., diabetic neuropathic pain [215], LBP, CTS pain [214], PD [138], and OUD [216]). While the RCTs that are being conducted for the device are based on classic safety and efficacy endpoints, several of our studies are also focused on developing models of stimulation efficacy through combined imaging data, clinical data, kinematic data, and/or patient specific biophysical models of stimulation dose at the targeted brain sites to identify best responders to therapy (e.g., in PD, OUD, and Pain). These computational models are being developed with the goal of not only identifying the best responders but as a future means to personalize therapy based on the unique characteristics of the individual patients [58] and multimodal disease models. It is further planned that the IMAS system, with its Big Data backbone, will be integrated with the ESStim™ system to further aid in personalizing patient stimulation dose in certain indications (e.g., PD, CTS pain).

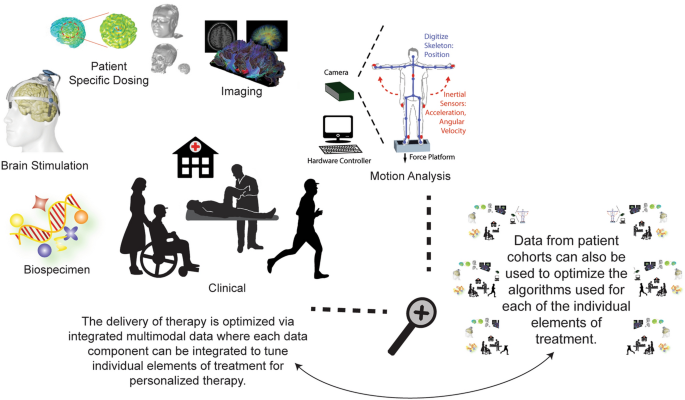

Finally, our group is working on developing a trial optimization tool based on health economics modeling (e.g., Cost Effective Analysis (CEA)) [205, 217]. The software we are generating allows for a virtual trial design and the predicting of the cost effectiveness of the trial. We anticipate that the software could also be implemented to quantify data set values in health economic terms or used to quantify non-traditional data for use in RCT design or assessment (e.g., for the OUD patient population CEA methodologies could be used to quantify the impact of stigma on the patient, caregiver, or society with traditional (e.g., biospecimen) and non-traditional data sets (e.g., EHR, social media)). Ultimately, we see all these systems being combined into a personalized treatment suite, based on a Big Data infrastructure, whereby the multimodal data sets (e.g., imaging, biophysical field-tissue interaction models, clinical, and biospecimen data) are coupled rapidly to personalize brain stimulation-based treatments in diverse and expansive patient cohorts (see Fig. 5).

Schematic of our suite under development for delivering personalized treatments based on a Big Data infrastructure, whereby multimodal data sets (e.g., imaging, biophysical field-tissue interaction models, clinical, biospecimen data) can be coupled to deliver personalized brain stimulation-based treatments in a diverse and expansive patient cohort. Each integrated step can be computationally intensive (e.g., see Fig. 4 for simplified dosing example for exemplary electromagnetic brain stimulation devices)
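Cost-effectiveness analysis typically summarizes a two-arm comparison with the incremental cost-effectiveness ratio, ICER = (C_new - C_ref) / (E_new - E_ref), where effects are often measured in quality-adjusted life years (QALYs), and with the incremental net monetary benefit at a willingness-to-pay threshold λ, INMB = λ·ΔE - ΔC. The sketch below shows only this standard arithmetic. It is not the trial optimization software described above, and the arm names, costs, QALYs, and threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    """Summary of one trial arm (all values used below are hypothetical)."""
    name: str
    mean_cost: float  # mean cost per patient, in any single currency
    mean_qaly: float  # mean quality-adjusted life years per patient


def icer(new: Arm, ref: Arm) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra QALY gained."""
    delta_qaly = new.mean_qaly - ref.mean_qaly
    if delta_qaly == 0:
        raise ValueError("ICER is undefined when both arms yield the same QALYs")
    return (new.mean_cost - ref.mean_cost) / delta_qaly


def incremental_nmb(new: Arm, ref: Arm, wtp_per_qaly: float) -> float:
    """Incremental net monetary benefit; a positive value favors the new arm."""
    return wtp_per_qaly * (new.mean_qaly - ref.mean_qaly) - (new.mean_cost - ref.mean_cost)


# Hypothetical virtual-trial summary, for illustration only.
standard_care = Arm("standard care", mean_cost=20_000.0, mean_qaly=1.50)
add_on = Arm("standard care + stimulation", mean_cost=30_000.0, mean_qaly=1.75)
threshold = 50_000.0  # hypothetical willingness to pay per QALY

ratio = icer(add_on, standard_care)
benefit = incremental_nmb(add_on, standard_care, threshold)
print(f"ICER: {ratio:,.0f} per QALY; incremental NMB: {benefit:,.0f}")
```

With these hypothetical inputs the ICER is 40,000 per QALY, below the assumed threshold of 50,000, so the incremental NMB is positive (2,500). When ΔE is positive, the two criteria agree: INMB > 0 exactly when ICER < λ.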

Elaboration

Section “Existing Solutions” reviewed the influence of Big Data on Neuroscience and Neurology, specifically in the context of advancing treatments for neurological diseases. Our analysis spans the last few decades and includes a diverse selection of cutting-edge projects in Neuroscience and Neurology that illustrate the continuing shift towards a Big Data-driven paradigm. It also reveals that certain areas of neurological treatment development have not fully embraced the potential of the Big Data revolution, as demonstrated through our comprehensive review of clinical literature in Sect. “Proposed Solutions”.

One sign of this gap is that studies considered “Big Data” studies in the Neuroscience and Neurology literature differ in how they define Big Data and in how they apply the 3 V’s or 5 V’s. Several definitions can be found in the literature from these fields. For example, van den Heuvel et al. noted that the term “Big Data” includes many data types, such as “observational study data, large datasets, technology-generated outcomes (e.g., from wearable sensors), passively collected data, and machine-learning generated algorithms” [153]; Muller-Wirtz and Volk stated that “Big Data can be defined as Extremely large datasets to be analyzed computationally to reveal patterns, trends, and associations, especially relating to human behavior and interactions” [166]; and Eckardt et al. referred to Big Data science as the “application of mathematical techniques to large data sets to infer probabilities for prediction and find novel patterns to enable data driven decisions” [218]. Other definitions also include the techniques required for data analysis. For example, van den Heuvel et al. stated that “these information assets (characterized by high Volume, Velocity, and Variety) require specific technology and analytical methods for its transformation into Value” [153]; and according to Banik and Bandyopadhyay, the term “Big Data encompassed massive data sets having large, more varied, and complex structure with the difficulties of storing, analyzing, and visualizing for further processes or results” [219]. Thus, what constitutes Big Data in Neuroscience and Neurology is neither established nor always aligned with the definition of Big Data outside of these fields.

In addition, in Neuroscience and Neurology some V’s are often incompletely considered or even dismissed. At present, data from Neuroscience “Big Data” studies are often just big and sometimes multimodal, while Neurology “Big Data” studies are often characterized by small multimodal datasets. Incorporating all the V’s into studies might spur innovation. The area of research focused on OUD treatments is a particularly salient example. Adding “Volume” to OUD studies by integrating OUD patient databases, as has been done for other diseases, could lead to better use of Big Data techniques and ultimately help researchers understand the underlying disease and develop new treatments (e.g., see the work of Slade et al. discussed above [122]). Similarly, adding “Velocity” to OUD studies by developing technology that increases dataflow (e.g., integrating clinical data collected during hospital visits with home monitoring signals collected with mobile apps) might enable Big Data techniques to uncover data patterns that could ultimately translate into new, personalized OUD treatments. Likewise, adding Variety to OUD studies could significantly expand the clinical toolbox of caregivers or researchers developing new technologies. For example, infoveillance of social media combined with machine learning algorithms, such as those developed for use during the COVID-19 pandemic [220], could be used to assess the stigma associated with potential treatment options for OUD patients and to quantify the effect of potential methods for lowering patient treatment hesitancy. As for data Veracity, additional veracity metrics could be derived from clinical data sets to further assess the internal and external validity of trial results. For example, in OUD, Big Data sets could be used to assess the validity of self-reported opioid use, such as data gathered from drug diaries, against other components of the data set (e.g., social media presence, sleep patterns, biospecimens). Finally, while we characterized Value herein as direct or indirect in terms of clinical utility, one could also assign economic value to Neuroscience and Neurology data sets through health economics methods. For example, in the OUD patient population, CEA or cost-benefit analysis methodologies could be used to quantify the value of the data in health economics terms and guide policymakers in designing studies or programs that aid OUD treatment.
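One way to make the Veracity check on self-reported opioid use concrete is to treat a biospecimen result (for example, a urine drug screen) as the reference and measure, visit by visit, how well diary entries agree with it. The sketch below computes sensitivity, specificity, and Cohen's kappa. The choice of these metrics, the treatment of the biospecimen as ground truth, the data layout, and all values are assumptions for illustration, not a method taken from the cited studies.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Agreement:
    sensitivity: float  # share of biospecimen-positive visits that were also self-reported
    specificity: float  # share of biospecimen-negative visits with no self-reported use
    kappa: float        # Cohen's kappa: agreement corrected for chance


def self_report_agreement(self_report: Sequence[bool], biospecimen: Sequence[bool]) -> Agreement:
    """Compare self-reported use with biospecimen results, visit by visit."""
    if not self_report or len(self_report) != len(biospecimen):
        raise ValueError("need two non-empty sequences of equal length")
    pairs = list(zip(self_report, biospecimen))
    tp = sum(1 for s, b in pairs if s and b)
    tn = sum(1 for s, b in pairs if not s and not b)
    fp = sum(1 for s, b in pairs if s and not b)
    fn = sum(1 for s, b in pairs if not s and b)
    n = len(pairs)
    nan = float("nan")
    sensitivity = tp / (tp + fn) if tp + fn else nan
    specificity = tn / (tn + fp) if tn + fp else nan
    observed = (tp + tn) / n
    # Chance agreement from the marginal "yes" and "no" rates of each source.
    expected = ((tp + fp) / n) * ((tp + fn) / n) + ((tn + fn) / n) * ((tn + fp) / n)
    kappa = (observed - expected) / (1 - expected) if expected < 1 else nan
    return Agreement(sensitivity, specificity, kappa)


# Hypothetical diary entries and urine drug screens for ten visits.
diary = [True, False, False, True, False, False, False, True, False, False]
urine = [True, True, False, True, False, True, False, True, False, False]
result = self_report_agreement(diary, urine)
print(result)
```

In this hypothetical example, two of the five biospecimen-positive visits are missing from the diary, giving a sensitivity of 0.6, a specificity of 1.0, and a kappa of about 0.6.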

The rapid growth of Big Data in Neuroscience and Neurology has also brought to the forefront ethical considerations that must be addressed [221, 222]. For example, a perennial concern is data security and how best to manage patient confidentiality [223]. In the US, current laws and regulations require that SUD treatment information be kept separate from a patient’s EHR, which can limit Big Data approaches for improving OUD treatment [158]. Weighing the costs and benefits of making this information more accessible poses ethical challenges, because acquiring such sensitive protected health information (PHI) carries risks. As of November 28, 2022, the US Department of Health and Human Services (HHS), through the Office for Civil Rights (OCR) and the Substance Abuse and Mental Health Services Administration (SAMHSA), has put forth proposed modifications to these rules and requested public comments on the issue [224]. Ultimately, as the use of Big Data in the treatment of neurological patients progresses, such challenges will need to be addressed in a manner that provides the most benefit to patients with minimal risk [225, 226].

Conclusion

This paper has provided a comprehensive analysis of how Big Data has influenced Neuroscience and Neurology, with an emphasis on the clinical treatment of a broad sample of neurological disorders. It has highlighted emerging trends, identified limitations of current approaches, and proposed possible methodologies to overcome these limitations. Such a comprehensive review can foster further innovation by enabling readers to identify unmet needs and fill them with a Mendeleyevization-based approach; to compare how different (but related) areas have been advancing and assess whether a solution from one area can be applied to another (Cross-disciplinarization); or to use Big Data to enhance traditional solutions to a problem (Implantation) [227]. This paper has also examined how the classic 3 V’s and 5 V’s definitions of Big Data are applied in Neuroscience and Neurology, an aspect that has been overlooked in previous literature. Reviewing the literature from this perspective highlighted the limitations of current Big Data studies, which, as a result, rarely take advantage of the AI methods typical of Big Data analytics. This gap matters for the treatment of neurological disorders, which are highly heterogeneous in both symptom presentation and etiology and would benefit significantly from these methods. At the same time, assessing the missing V’s of Big Data can provide a basis for improving study design. In light of our findings, we recommend that future research focus on the following areas:

- A) Augment and standardize the way the 5 V’s are currently defined and implemented, since not all “Big Data” studies are truly “Big Data” studies.
- B) Encourage collaborative, multi-center studies: especially in clinical research, adding Volume might help overcome the limitations of classical RCTs (e.g., type II error).
- C) Leverage new technologies for real-time data collection: for diseases characterized by time-varying symptom patterns, higher data Velocity, such as that achieved with home monitoring or wearables, might help personalize treatments and/or improve treatment effectiveness.
- D) Diversify the data types collected in the clinic and/or at home, as data Variety can help uncover patterns in patient subtypes or treatment responses.
- E) Enforce protocols for data harmonization to improve Veracity.
- F) Consider each V in terms of Value and identify ways to categorize and increase the Value derived from a study, since adding V’s might increase study costs (and not all data are preclinically or clinically meaningful).
- G) Funding agencies should encourage initiatives aimed at educating junior and established scientists on the methods, tools, and resources that Big Data challenges require.
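Recommendation B links added Volume to a lower risk of type II error. A textbook normal-approximation power calculation for a two-arm trial makes that link concrete: for a standardized effect size d, the per-arm sample size is n ≈ 2(z_{1-α/2} + z_{1-β})² / d², and the type II error is β = 1 - power. The sketch below uses Python's standard `statistics.NormalDist`; the effect size of 0.5 is hypothetical, and actual trial designs may rely on t-based or simulation-based calculations instead.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def power_two_arm(n_per_arm: int, effect_size: float, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample z-test.

    effect_size is the standardized difference d = (mean_1 - mean_2) / sd.
    The negligible probability of rejecting in the wrong direction is ignored.
    """
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = abs(effect_size) * math.sqrt(n_per_arm / 2)
    return STANDARD_NORMAL.cdf(shift - z_crit)


def n_per_arm_for_power(effect_size: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Smallest per-arm sample size reaching the target power (normal approximation)."""
    z_alpha = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    z_beta = STANDARD_NORMAL.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size) ** 2)


# Hypothetical moderate effect (d = 0.5): type II error shrinks as Volume grows.
for n in (20, 63, 150):
    print(n, "per arm -> type II error:", round(1 - power_two_arm(n, 0.5), 3))
print("per-arm n for 80% power:", n_per_arm_for_power(0.5))
```

Under these assumptions, roughly 63 patients per arm are needed for 80% power at d = 0.5; a trial with 20 per arm would miss a true effect of that size most of the time.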

When new methods, techniques, or technologies are developed, or simply attract the attention of researchers in a field, that field often changes trajectory. In Neuroscience and Neurology, the use of Big Data has been an evolving trend, as evident from our review of over 300 papers and 120 databases. We discussed how Big Data is altering the course of these fields by leveraging computational tools to develop innovative treatments for neurological diseases, a major global health concern. While our analysis has identified significant advancements in these fields, we also note that the use of Big Data remains fragmented. Nevertheless, we view this as an opportunity for progress in these rapidly developing fields, which can ultimately benefit patients through improved diagnosis and treatment options.

Availability of data and materials

Data sharing is not applicable to this survey article, as no primary research datasets were generated during the survey; all survey material is included in the manuscript and/or Additional file 1.

Change history

28 July 2023

The clean version of ESM has been updated.

Abbreviations

- AI: Artificial Intelligence
- MS: Multiple Sclerosis
- US: United States
- NIH: National Institutes of Health
- 5 V’s: Volume, Variety, Velocity, Veracity, and Value
- AD: Alzheimer’s Disease
- PD: Parkinson’s Disease
- SUD: Substance Use Disorder
- Brain/MINDS: Brain Mapping by Integrated Neurotechnologies for Disease Studies
- HCP: Human Connectome Project
- MGH: Massachusetts General Hospital
- UCLA: University of California Los Angeles
- BAM: Brain Activity Map Project
- ADNI: Alzheimer’s Disease Neuroimaging Initiative
- ENIGMA: Enhancing Neuroimaging Genetics through Meta-Analysis
- EM: Electron Microscopy
- 2P: Two-photon Fluorescence Microscopy
- MRI: Magnetic Resonance Imaging
- DTI: Diffusion Tensor Imaging
- fMRI: Functional Magnetic Resonance Imaging
- rs-MR: Resting State Magnetic Resonance Imaging
- tfMRI: Task Functional Magnetic Resonance Imaging
- dMRI: Diffusion Magnetic Resonance Imaging
- MEG: Magnetoencephalography
- EEG: Electroencephalography
- PET: Positron Emission Tomography
- CSF: Cerebrospinal Fluid
- MDD: Major Depressive Disorder
- TMS: Transcranial Magnetic Stimulation
- RCT: Randomized Controlled Trial
- IBD: Inflammatory Bowel Disease
- anti-TNF: Anti-Tumor Necrosis Factor
- ADHD: Attention Deficit Hyperactivity Disorder
- VK2: Vitamin K2
- FDA: Food and Drug Administration
- EHRs: Electronic Health Records
- HPC: High Performance Computing
- RWE: Real World Evidence
- DBS: Deep Brain Stimulation
- NIBS: Non-Invasive Brain Stimulation
- PPMI: Parkinson’s Progression Markers Initiative
- EU: European Union
- OUD: Opioid Use Disorder
- AUDIT: Alcohol Use Disorder Identification Test
- CTS: Carpal Tunnel Syndrome
- LBP: Lower Back Pain
- 3 V’s: Volume, Variety, and Velocity
- CTN: Clinical Trials Network
- CDE: