Abstract

Previous studies have mostly considered the competing risks to be independent even when the interpretation of the failure modes implies dependency. This paper studies the dependent competing risks model from Gompertz distribution under Type-I progressively hybrid censoring scheme. We derive the maximum likelihood estimations of the model parameters, and then the asymptotic likelihood theory and Bootstrap method are used to obtain the confidence intervals. The simulation results are provided to investigate the effects of different dependence structures on the estimations of parameters. Finally, one data set was used for illustrative purpose.

Similar content being viewed by others

Background

The competing risks model involves multiple failure modes when only the smallest failure time and the associated failure mode are observed. This model is widely studied in the medical, actuarial, biostatistics and so on, under the assumption of independent competing risks. It is common that a failure is associated with one of the several competing failure modes. Previous studies have mostly considered the competing failure modes to be independent even when the interpretation of the failure modes implies dependency. Such as, in the study of colon cancer, the failure causes were cancer recurrence or death, obviously, such failure causes were dependent [see Lin et al. (1999)]. The competing risks model assuming independence among competing failure modes has been widely studied [see, e.g., Crowder (2001)]. Kundu et al. (2004) analyzed the progressively censored competing risks data, Sarhan (2007) analyzed the competing risks models with generalized exponential distributions, Cramer and Schmiedt (2011) studied the progressively censored competing risks data with Lomax distribution, other related works see, Bunea and Mazzuchi (2006); Balakrishnan and Han (2008); Pareek et al. (2009); Xu and Tang (2011), and so on.

The competing risks model under the assumption of dependent competing failure modes has been considered in the early work by Elandt-Johnson (1976). Afterwards, a number of corresponding works have been devoted to the dependent competing risks model. Zheng and Klein (1995) considered the dependence structure between failure modes is represented by an assumed Archimedean copula. Other works see Escarela and Carriere (2003); Kaishev et al. (2007).

In this paper, we present a dependent competing risks model from Gompertz distribution under Type-I progressively hybrid censoring scheme (PHCS). The Gompertz distribution is one of classical mathematical models and was first introduced by Gompertz (1825), which is a commonly used growth model in actuarial and reliability and life testing, and plays an important role in modeling human mortality and fitting actuarial tables and tumor growth. This distribution has been widely used, see, Ali (2010); Ghitany et al. (2014).

The Type-I PHCS was first proposed by Kundu and Joarder (2006) [see also Childs et al. (2008)]. This censoring scheme has been widely used in reliability analysis, see, Chien et al. (2011); Cramer and Balakrishnan (2013). It can be defined as follows: suppose n identical units are put to life test with progressive censoring scheme \((r_{1} ,r_{2} , \ldots ,r_{m} ),\;1 \le m \le n\), the experiment is terminated at time \(\tau\), where \(\tau \in (0,\infty ),\;r_{i} (i = 1, \cdots ,m)\) and m are fixed in advance. At the time of the first failure \(t_{1} ,\;r_{1}\) of the remaining units are randomly removed, at the time of the second failure \(t_{2} ,\;r_{2}\) of the remaining units are randomly removed and so on. If the mth failure time \(t_{m}\) occurs before time \(\tau\), all the remaining units \(R_{m}^{*} = n - m - (r_{1} + \cdots + r_{m - 1} )\) are removed and the terminal time of the experiment is \(t_{m}\). On the other hand, if the mth failure time \(t_{m}\) does not occur before time \(\tau\) and only J failures occur before time \(\tau\), where \(0 \le J \le m\). Then all the remaining units \(R_{J}^{*} = n - J - (r_{1} + \cdots + r_{J} )\) are removed and the terminal time of the experiment is \(\tau\). We denote the two cases as

Case I \(t_{1} < t_{2} < \cdots < t_{m} ,\;\;\;{\text{if}}\;t_{m} < \tau\)

Case II \(t_{1} < t_{2} < \cdots < t_{J} < \tau < t_{J + 1} < \cdots < t_{m} ,\;\;\;{\text{if}}\;t_{m} > \tau\)

The rest of the paper is organized as follows. “Model description” section provides the model description, “Maximum likelihood estimations (MLEs)” section presents the maximum likelihood estimations of the model parameters. The confidence intervals are provided in “Confidence intervals” section. “Simulation and data analysis” section presents the simulation and data analysis. Conclusion appears in “Conclusion” section.

Model description

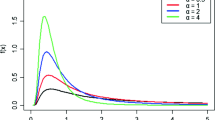

It is assumed that the Gompertz distribution with shape parameter λ and scale parameter θ has the following probability density function (PDF), cumulative distribution function (CDF) and survival function

respectively, where \(t > 0,\;\lambda > 0,\;\theta > 0\). We denote the Gompertz distribution by \(GP(\lambda ,\theta )\).

Suppose variables \(Y_{0} ,\;Y_{1} ,\;Y_{2}\) are independent and \(Y_{0}\) follows \((\sim )\;GP(\lambda ,\theta_{0} )\), \(Y_{1} \sim GP(\lambda ,\theta_{1} )\), \(Y_{2} \sim GP(\lambda ,\theta_{2} )\). Define \(T_{1} = \hbox{min} (Y_{0} ,Y_{1} )\), \(T_{2} = \hbox{min} (Y_{0} ,Y_{2} )\), then the distributions of \(T_{1} ,\;T_{2}\) are \(GP(\lambda ,\theta_{0} + \theta_{1} )\) and \(GP(\lambda ,\theta_{0} + \theta_{2} )\), respectively.

Theorem 1

The joint survival function of \((T_{1} ,\;T_{2} )\) is

Proof

Corollary 1

The joint PDF of \((T_{1} ,\;T_{2} )\) can be written as

Proof For the cases \(t_{1} > t_{2}\) and \(t_{1} < t_{2}\), \(f_{1} (t_{1} ,t_{2} ),\;\;f_{2} (t_{1} ,t_{2} )\) can be easily obtained by \(- \frac{{\partial^{2} S_{{T_{1} ,\;T_{2} }} (t_{1} ,t_{2} )}}{{\partial t_{1} \partial t_{2} }}\). For the case \(t_{1} = t_{2} = t\), by the full probability formula, we have the fact that

where

So from (4), we have

So we have \(f_{0} (t) = \frac{{\theta_{0} }}{{\theta_{0} + \theta_{1} + \theta_{2} }}f(t|\lambda ,\theta_{0} + \theta_{1} + \theta_{2} )\). □

Figure 1 presents the surface plot of \(f_{{T_{1} ,\;T_{2} }} (t_{1} ,t_{2} )\) for different values of \(\lambda ,\;\theta_{0} ,\;\theta_{1} ,\;\theta_{2}\), from Fig. 1, we can see that the \(f_{{T_{1} ,\;T_{2} }} (t_{1} ,t_{2} )\) is unimodal. Define \(X = \hbox{min} (T_{1} ,T_{2} )\), and the distribution of \(X\) is \(GP(\lambda ,\theta_{0} + \theta_{1} + \theta_{2} )\). \(\theta_{0} = 0\) indicates that \(T_{1} ,\;T_{2}\) are independent. Therefore, \(\theta_{0}\) can be regarded as the dependence structure between \(T_{1} ,\;T_{2}\).

The surface plot of the joint PDF of \(T_{1} ,\;T_{2}\) with different values of \(\theta_{0} ,\;\theta_{1} ,\;\theta_{2}\) when \(\lambda = 2\). a \(\theta_{0} = \theta_{1} = \theta_{2} = 1\), b \(\theta_{0} = 0.5,\theta_{1} = \theta_{2} = 0.8\), c \(\theta_{0} = 0,\theta_{1} = \theta_{2} = 0.4\), d \(\theta_{0} = \theta_{1} = \theta_{2} = 0.2\)

Competing risks model

Consider two competing failure modes with latent lifetimes \(T_{1} ,T_{2}\) in the experiment under Type-I PHCS, the failure of an individual is caused by any single one of the two failure modes, obviously, the actual lifetime span is \(X = \hbox{min} (T_{1} ,T_{2} )\). Let r denotes the number of failures that occur before time τ, τ* denotes the terminal time. Then, at time all the remaining \(R_{r}^{*} = n - r - \sum\nolimits_{l = 1}^{r} {r_{l} }\) units are removed and the experiment is terminated, where \(r = m\), \(\tau^{*} = t_{r}\), rm = 0 in Case I and r = J, \(\tau^{*} = \tau\) in Case II.

For the competing risks model under Type-I PHCS, \((x_{1} ,\alpha_{1} ),\;(x_{2} ,\alpha_{2} ),\; \ldots ,\;(x_{r} ,\alpha_{r} )\) are the observed failure data, where \(x_{1} ,x_{2} , \ldots ,x_{r}\) are order statistics, \(\alpha_{l}\) takes any integer in the set \(\{ 0,1,2\}\). For \(j = 0,1,2\), \(\delta_{j} (\alpha_{l} ) = \left\{ \begin{aligned} 1,\;if\;\alpha_{l} = j \hfill \\ 0,\;if\;\alpha_{l} \ne j \hfill \\ \end{aligned} \right.\). \(n_{0} = \sum\nolimits_{l = 1}^{r} {\delta_{0} (\alpha_{l} )}\) denotes the number of failures caused by the two competing failure modes, \(n_{j} = \sum\nolimits_{l = 1}^{r} {\delta_{j} (\alpha_{l} ),} \;j = 1,2\) denotes the number of failures caused by competing failure mode \(j\;(j = 1,2)\), where \(r = \sum\nolimits_{j = 0}^{2} {n_{j} }\).

Maximum likelihood estimations (MLEs)

The likelihood function for the two competing risks model under Type-I PHCS can be written as

where

So the likelihood function can be written as

By setting the first partial derivative of \(\log L\) about \(\theta_{0} ,\theta_{1} ,\theta_{2} ,\lambda\) to zero, we get

From (7), (8) and (9), the estimates of \(\theta_{j} ,j = 0,1,2\) are given by

Substituting \(\hat{\theta }_{j} (\lambda )\) into \(\log L\) and ignoring the constant, we obtain the profile log-likelihood function of λ as

Lemma 1

The profile log-likelihood function \(g(\lambda )\) is concave.

Proof Denote \(q(\lambda ) = \sum\nolimits_{l = 1}^{r} {(r_{l} + 1)e^{{\lambda x_{l} }} } + c_{1} e^{{\lambda \tau^{*} }}\), where \(c_{1} = n - r - \sum\nolimits_{l = 1}^{r} {r_{l} }\). Therefore, we get \(q^{\prime}(\lambda ) = \sum\nolimits_{l = 1}^{r} {(r_{l} + 1)x_{l} e^{{\lambda x_{l} }} } + c_{1} \tau^{*} e^{{\lambda \tau^{*} }} ,\) \(q^{''}(\lambda ) = \sum\nolimits_{l = 1}^{r} {(r_{l} + 1)x_{l}^{2} e^{{\lambda x_{l} }} } e^{\lambda x_{l}} +c_{1} \tau ^{{*}2} e^{\lambda \tau^{*}}\)

where \(a_{l} = (r_{l} + 1)^{1/2} x_{l} e^{{\lambda x_{l} /2}}\), \(b_{l} = (r_{l} + 1)^{1/2} e^{{\lambda x_{l} /2}}\).

\(q^{\prime\prime}(\lambda )q(\lambda ) - \left( {q'(\lambda )} \right)^{2} \ge 0\) by the Cauchy–Schwarz inequality, therefore \(q^{\prime\prime}(\lambda )q(\lambda ) \ge \left( {q'(\lambda )} \right)^{2}\), which implies that the second derivative of \(g(\lambda )\) is negative, so \(g(\lambda )\) is concave. □

From Lemma 1, we know that \(g(\lambda )\) is unimodal and it has a unique maximum. Since \(g(\lambda )\) is unimodal, most of the standard iterative procedure can be used to find the MLE. So we propose to use the following simple algorithm. Substituting \(\hat{\theta }_{j} (\lambda )\) into (10), the MLE \(\hat{\lambda }\) of \(\lambda\) satisfies the following equation,

where \(h(\lambda ) = 1/\left[ {\frac{{\sum\nolimits_{l = 1}^{r} {(r_{l} + 1)x_{l} e^{{\lambda x_{l} }} } \,+\, c_{1} \tau^{*} e^{{\lambda \tau^{*} }} }}{{\sum\nolimits_{l = 1}^{r} {(r_{l} + 1)(e^{{\lambda x_{l} }} - 1)} \,+\, c_{1} (e^{{\lambda \tau^{*} }} - 1)}} \,-\, \frac{{\sum\nolimits_{l = 1}^{r} {x_{l} } }}{{\sum\nolimits_{j = 0}^{2} {n_{j} } }}} \right].\)

Using the method of a simple iterative scheme proposed in the literature by Kundu (2007), we can solve the shape parameter \(\lambda\) from (13). Start with an initial guess of \(\lambda\), say \(\lambda^{(0)}\), then obtain \(\lambda^{(1)} = h(\lambda^{(0)} )\) and proceed in this way to obtain \(\lambda^{(n + 1)} = h(\lambda^{(n)} )\). Stop the iterative procedure when \(\left| {\lambda^{(n + 1)} - \lambda^{(n)} } \right| < \varepsilon\), some pre-assigned tolerance limit. Once we obtain \(\hat{\lambda }\), the MLEs of \(\theta_{j} ,j = 0,1,2\) can be obtained from (11) as \(\hat{\theta }_{j} ,j = 0,1,2\).

Confidence intervals

Observed fisher information

In this section, we will construct the asymptotic confidence intervals (ACIs) for the parameters \(\theta_{0} ,\theta_{1} ,\theta_{2} ,\lambda\) using the asymptotic likelihood theory. The observed Fisher information matrix is denoted by

\(I(\theta_{0} ,\theta_{1} ,\theta_{2} ,\lambda ) = \left[ {\begin{array}{*{20}c} {I_{11} } &\quad {I_{12} } &\quad {I_{13} } &\quad {I_{14} } \\ {I_{21} } &\quad {I_{22} } &\quad {I_{23} } &\quad {I_{24} } \\ {I_{31} } &\quad {I_{32} } &\quad {I_{33} } &\quad {I_{34} } \\ {I_{41} } &\quad {I_{42} } &\quad {I_{43} } &\quad {I_{44} } \\ \end{array} } \right]\),

where the elements of which are negative second partial derivatives of \(\log L\).

Denote \(V\) as the approximate asymptotic variance–covariance matrix of the MLEs of \(\theta_{0} ,\theta_{1} ,\theta_{2} ,\lambda\) and \(\hat{V}\) as the estimation of \(V\), we get

By the asymptotic distribution of MLEs, \((\hat{\theta } - \theta )/\sqrt {\hat{V}(\hat{\theta })}\) follows as approximately standard normal distribution. Therefore, the two-sided \(100(1 - \alpha )\,\%\) ACIs for \(\theta_{0} ,\theta_{1} ,\theta_{2} ,\lambda\) are given by

where \(z_{\alpha /2}\) is the \(\alpha /2\) quantile of a standard normal distribution.

Bootstrap sample

Step1. Given \(n,\;m,\;\tau\) and progressive censoring scheme \((r_{1} , \ldots ,r_{m} )\), compute the MLEs \(\hat{\theta }_{0} ,\hat{\theta }_{1} ,\hat{\theta }_{2} ,\hat{\lambda }\) based on the original Type-I progressively hybrid censored sample \((x_{1} , \ldots ,x_{m} )\).

Step2. Based on \(n,\;m,\;\tau ,\;(r_{1} , \ldots ,r_{m} )\), \(\hat{\theta }_{0} ,\hat{\theta }_{1} ,\hat{\theta }_{2} ,\hat{\lambda }\), generate a Type-I progressively hybrid censored sample \((x_{1}^{*} , \ldots ,x_{m}^{*} )\).

a1. Generate a random sample \(w_{1} , \ldots ,w_{m}\) from Uniform distribution \(U(0,1)\), where \(w_{1} , \ldots ,w_{m}\) are order statistics. Let \(v_{l} = w_{l}^{{1/(l + r_{m} + r_{m - 1} + \cdots + r_{m - l + 1} )}}\), \(U_{l} = 1 - v_{m} v_{m - 1} \cdots v_{m - l + 1} ,\;\;l = 1,2, \ldots ,m\) are order statistics followed Uniform distribution \(U(0,1)\).

a2. We obtain the failures \(r\) before time \(\tau\) and the terminal time \(\tau^{*}\).

If \(U_{m} \le 1 - \exp \{ - ((\hat{\theta }_{0} + \hat{\theta }_{j} )/\hat{\lambda })(e^{{\hat{\lambda }\tau }} - 1)\}\), \(r = m\), \(\tau^{*} = (1/\hat{\lambda })\ln [1 - (\hat{\lambda }/(\hat{\theta }_{0} + \hat{\theta }_{j} ))\ln (1 - U_{m} )]\);

If \(U_{m} > 1 - \exp \{ - ((\hat{\theta }_{0} + \hat{\theta }_{j} )/\hat{\lambda })(e^{{\hat{\lambda }\tau }} - 1)\}\), \(r = J\), \(\tau^{*} = \tau\), where J is obtained from the inequality

\(U_{J} < 1 - \exp \{ - ((\hat{\theta }_{0} + \hat{\theta }_{j} )/\hat{\lambda })(e^{{\hat{\lambda }\tau }} - 1)\} \le U_{J + 1}\), for \(1 \le l \le r\), we set \(x_{l}^{*} = (1/\hat{\lambda })\ln [1 - (\hat{\lambda }/(\hat{\theta }_{0} + \hat{\theta }_{j} ))\ln (1 - U_{l} )]\).

Step3. Based on \(n,\;m,\;r,\;\tau^{*} ,\;(r_{1} , \ldots ,r_{r} )\) and \((x_{1}^{*} , \ldots ,x_{r}^{*} )\), we obtain the MLEs \(\hat{\theta }_{0}^{*} ,\hat{\theta }_{1}^{*} ,\hat{\theta }_{2}^{*} ,\hat{\lambda }^{*}\).

Step4. Repeat steps 2–3 N times, we obtain N estimates \(\left\{ {\hat{\theta }_{j}^{*(i)} ,\hat{\lambda }^{*(i)} } \right\}\;\;(i = 1,2, \ldots ,N;j = 0,1,2)\). Arrange them in ascending order to obtain the bootstrap sample \(\left\{ {\hat{\theta }_{j}^{*(1)} ,\hat{\theta }_{j}^{*(2)} , \ldots ,\hat{\theta }_{j}^{*(N)} ;\;\;\hat{\lambda }^{*(1)} ,\hat{\lambda }^{*(2)} , \ldots ,\hat{\lambda }^{*(N)} } \right\},\;\;j = 0,1,2\).

The two-sided \(100(1 - \alpha )\%\) percentile bootstrap confidence intervals (Boot-P CIs) for parameters \(\theta_{0} ,\theta_{1} ,\theta_{2} ,\lambda\)

Simulation and data analysis

Simulation

In this section, we presented some simulation results to evaluate the performance of all the methods proposed in the previous sections for different sample size n, different effective sample size m and different dependence structure \(\theta_{0}\).

Consider two competing failure modes, the initial values for parameters \((\theta_{1} ,\;\theta_{2} ,\;\lambda )\) are \((1.2,\;1,\;0.6)\). Take the dependence structure \(\theta_{0} = 0,\;0.3,\;0.8,1.2,1.6\), where \(\theta_{0} = 0\) indicates that the two competing failure modes are independent Generate the Type-I PHC samples from the Gompertz distribution \(GP(\lambda ,\theta_{0} + \theta_{j} )\) for competing failure mode \(j(j = 1,2)\) according to the algorithm proposed by Balakrishnan and Sandhu (1995). Take the terminal time \(\tau = 1\), and n = 20, 30, 50, m = 4, 6, 8, 10, 15, the pre-fixed scheme \((r_{1} , r_{2} , \ldots , r_{m} )\) are

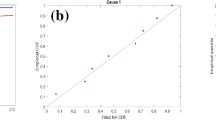

To compute the MLEs of \(\lambda\), we have used the iterative procedure described in “Maximum likelihood estimations (MLEs)” section and stopped the iterative procedure when the difference between two consecutive iterates is less than \(10^{ - 4}\). Before going to compute the MLEs, we plot the profile log-likelihood function of λ in Fig. 2. Figure 2 shows that the profile log-likelihood function of λ is unimodal, the MLE of λ is close to 0.6, so we start the iteration with the initial guess that \(\lambda^{(0)} = 0.6\).

Repeat 10,000 times for each given n, m, \(\theta_{0}\) and censoring scheme, the average mean squared errors (MSEs) and the average absolute relative bias (RABias) and the coverage percentage of the ACIs and Boot-P CIs are shown in Tables 1, 2 and 3.

From Tables 1, 2 and 3, the observations can be made. For fixed sampling scheme, sample size n and dependence structure \(\theta_{0}\), the MSEs and RABias decrease as the effective sample size m increase.

For fixed sampling scheme, sample size n and effective sample size m, as the dependence structure of competing failure modes become stronger, the MSEs and RABias get smaller, while the MSEs and RABias with \(\theta_{0} = 0\) are bigger, which shows that the performance of the MLEs depends on the strength of dependence. This also shows that the dependence structure is very important in the competing risks model.

For fixed sampling scheme, n, m and dependence structure \(\theta_{0}\), the ACIs are stable than the Boot-P CIs, they can maintain their coverage percentages at the pre-fixed normal level.

Data analysis

Using the procedures above, we generate the Type-I PHC samples when \((n,m,\tau ) = (30,10,1)\) with initial value for parameters \((\theta_{1} ,\;\theta_{2} ,\;\lambda )\) as \((1.2,\;1,\;0.6)\), and the dependence structure \(\theta_{0} = \;0.8\), the censoring scheme as \(r_{1} = r_{2} = \cdots = r_{m} = 2\). The simulated data is listed in Table 4. The MLEs and 95 % ACIs and Boot-P CIs are shown in Table 5. The trace plot of the MLE for parameter \(\lambda\) using the iterative procedure is shown in Fig. 3, which shows that the estimate of \(\lambda\) converges to a value after about 1000 iterations.

Conclusion

This paper proposed the dependent competing risks model from Gompertz distribution under Type-I PHCS. We obtained the MLEs and ACIs and Boot-P CIs for the parameters. Simulations showed that the ACIs are more stable than the Boot-P CIs and that the dependence structure is important in the competing risks model. For a given sample size, the performance of the MLEs declined with increasing dependence, which suggests that greater dependence will require a larger sample size to achieve a particular level of precision in estimation.

References

Ali A (2010) Bayes estimation of Gompertz distribution parameters and acceleration factor under partially accelerated life tests with Type-I censoring. J Stat Comput Simul 80(11):1253–1264

Balakrishnan N, Han D (2008) Exact inference for a simple step-stress model with competing risks for failure from exponential distribution under Type-II censoring. J Stat Plan Inference 138:4172–4186

Balakrishnan N, Sandhu RA (1995) A simple simulation algorithm for generating progressive Type-II censored samples. Am Statistician 49(2):229–230

Bunea C, Mazzuchi TA (2006) Competing failure modes in accelerated life testing. J Stat Plan Inference 136:1608–1620

Chien TL, Yen LH, Balakrishnan N (2011) Exact Bayesian variable sampling plans for the exponential distribution with progressive hybrid censoring. J Stat Comput Simul 81:873–882

Childs A, Chandrasekar B, Balakrishnan N (2008) Exact likelihood inference for an exponential parameter under progressive hybrid censoring schemes. In: Vonta F, Nikulin M, Limnios N, Huber-Carol C (eds) Statistical models and methods for biomedical and technical systems. Birkhauser, Boston, pp 323–334

Cramer E, Balakrishnan N (2013) On some exact distributional results based on Type-I progressively hybrid censored data from exponential distributions. Stat Methodol 10:128–150

Cramer E, Schmiedt AB (2011) Progressively Type-II censored competing risks data from Lomax distributions. Comput Stat Data Anal 55:1285–1303

Crowder MJ (2001) Classical competing risks. Chapman and Hall/CRC, Boca Raton (FL)

Elandt-Johnson RC (1976) Conditional failure time distributions under competing risk theory with dependent failure times and proportional hazard rates. Scand Actuar J 1:37–51

Escarela G, Carriere J (2003) Fitting competing risks with an assumed copula. Stat Methods Med Res 12(4):333–349

Ghitany ME, Alqallaf F, Balakrishnan N (2014) On the likelihood estimation of the parameters of Gompertz distribution based on complete and progressively Type-II censored samples. J Stat Comput Simul 84(8):1803–1812

Gompertz B (1825) On the nature of the function expressive of the law of human mortality and on the new mode of determining the value of life contingencies. Phil Trans Royal Soc A 115:513–583

Kaishev VK, Dimitrova DS, Haberman S (2007) Modelling the joint distribution of competing risks survival times using copula functions. Insurance Math Econ 41:339–361

Kundu D (2007) On hybrid censored Weibull distribution. J Stat Plan Inference 137(7):2127–2142

Kundu D, Joarder A (2006) Analysis of Type-II progressively hybrid censored data. Comput Stat Data Anal 50(10):2509–2528

Kundu D, Kannan N, Balakrishnan N (2004) Analysis of progressively censored competing risks data. In: Advances in survival analysis. Handbook of Statistics, Elsevier, Amsterda p 331–348

Lin DY, Sun W, Ying Z (1999) Nonparametric estimation of the gap time distribution for serial events with censored data. Biometrika 86:59–70

Pareek B, Kundu D, Kumar S (2009) On progressively censored competing risks data for Weibull distributions. Comput Stat Data Anal 53:4083–4094

Sarhan AM (2007) Analysis of incomplete, censored data in competing risks models with generalized exponential distributions. IEEE Trans Reliab 56(1):132–138

Xu A, Tang Y (2011) Objective Bayesian analysis of accelerated competing failure models under Type-I censoring. Comput Stat Data Anal 55:2830–2839

Zheng M, Klein JP (1995) Estimates of marginal survival for dependent competing risks based on an assumed copula. Biometrika 82(1):127–138

Authors’ contributions

The manuscript was written through contributions of all authors. All authors have given approval to the final version of the manuscript. These authors contributed equally. Both authors read and approved the final manuscript.

Acknowledgements

This work are supported by the National Natural Science Foundation of China (Grant Numbers 71171164, 71401134, 71571144), Natural Science Basic Research Program of Shaanxi Province (Grant Number 2015JM1003) and the Program of International Cooperation and Exchanges in Science and Technology Funded by Shaanxi Province (2016KW-033).

Competing interests

I, Yimin Shi, declare that I have read SpringerOpen’s guidance on competing interests and declare that none of the authors have any competing interests in the manuscript.

Author information

Authors and Affiliations

Corresponding authors

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Shi, Y., Wu, M. Statistical analysis of dependent competing risks model from Gompertz distribution under progressively hybrid censoring. SpringerPlus 5, 1745 (2016). https://doi.org/10.1186/s40064-016-3421-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s40064-016-3421-9