Abstract

Serverless computing has gained importance over the last decade as an exciting new field, owing to its large influence in reducing costs, decreasing latency, improving scalability, and eliminating server-side management, to name a few. However, to date there is a lack of in-depth surveys that would help developers and researchers better understand the significance of serverless computing in different contexts. Thus, it is essential to present the research evidence that has been published in this area. In this systematic survey, 275 research papers that examined serverless computing, drawn from well-known literature databases, were extensively reviewed to extract useful data. The obtained data were then analyzed to answer several research questions regarding state-of-the-art contributions of serverless computing, its concepts, its platforms, and its usage. We also discuss the challenges that serverless computing currently faces and how future research could enable its implementation and usage.

Introduction

Cloud computing emerged after the appearance of virtualization in software and hardware infrastructures; hence cloud providers increasingly adopted it to offer their services to customers [1, 2]. Customers can access these cloud services via the Internet. Software developers have been using cloud technologies in their software solutions owing to their benefits including scalability, availability, and flexibility [3].

In general, cloud computing is divided into three main categories based on the provision of services: software as a service (SaaS), platform as a service (PaaS), and infrastructure as a service (IaaS). In the SaaS category, cloud providers offer different types of software as services to the users. For example, Google provides many applications as a service (e.g., Gmail, Google Docs, Google Sheets, and Google Forms). In this type of cloud, the user is not responsible for the development, deployment, and management of the services. The user only uses them without worrying about their settings or configurations. Meanwhile, in PaaS, cloud companies provide services such as network access, storage, servers, and operating systems to be purchased by developers. The developers access these services to deploy, run, and manage their applications. In this kind of cloud, the developer is responsible for the deployment and management (settings and configurations) of their software to ensure that the application is running, but they do not control the services. Finally, in the IaaS category, the cloud consumers control and manage services such as network access, servers, operating systems, and storage.

Managing cloud services is not an easy task. The authors in [4] identified several challenges that a user faces when managing a cloud environment, such as availability, load balancing, auto-scaling, security, and monitoring. For example, the cloud user has to ensure the availability of the services so that a single machine failure does not affect the whole service. The user also has to consider distributing copies of the services geographically to protect them when disasters happen. Another challenge is load balancing: the cloud user has to ensure that requests to the services are balanced so that all resources are utilized efficiently.

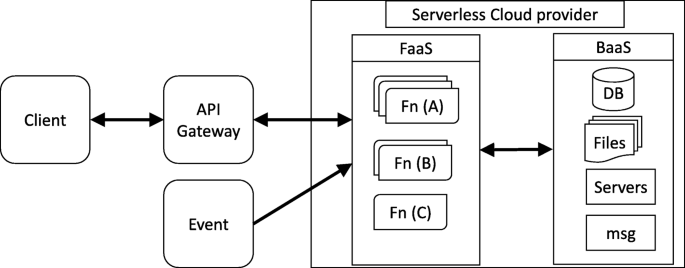

These challenges have led to the introduction of another cloud computing model, which is called serverless cloud computing [4]. Serverless cloud computing offers backend as a service (BaaS) and function as a service (FaaS), as shown in Fig. 1. BaaS includes services such as storage, messaging, and user management, while FaaS enables developers to deploy and execute their code on computing platforms. FaaS relies on the services provided by BaaS, such as databases, messaging, and user authentication. FaaS is considered the most dominant model of serverless, and it is also known as “event-driven functions” [5, 6].

The serverless cloud model was first introduced by Amazon with AWS Lambda in 2014, after which cloud companies like Google and Microsoft adopted it in 2016. Serverless cloud computing adds an abstraction layer to the existing cloud computing paradigms in that it abstracts away the server-side management from the developers [7]. The serverless model lets developers focus on the application logic rather than on server-side management and configuration. For example, developers deploy their applications to the serverless cloud as functions (see Fig. 1). The cloud provider then takes responsibility for managing, scaling, and providing different resources to ensure the smooth running of these functions [8, 9].
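To make the idea of deploying an application "as functions" concrete, the following is a minimal sketch of what such a function might look like. The name `handler`, the shape of the `event` dictionary, and the response format are illustrative assumptions and do not reproduce the interface of any specific platform surveyed here.

```python
import json
from typing import Any, Dict


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Hypothetical event-driven function that greets the caller named in the event."""
    # Everything the function needs arrives in the event payload;
    # nothing is remembered between invocations.
    name = event.get("name", "world")
    body = json.dumps({"message": f"Hello, {name}!"})
    return {"statusCode": 200, "body": body}


# Local stand-in for the platform invoking the function when an event occurs.
response = handler({"name": "serverless"})
```

In a real deployment, the provider (not the developer) would be responsible for receiving the event, starting a runtime, and calling the function.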

FaaS and the term “serverless” are often used interchangeably, as the FaaS platform automatically configures and maintains the execution context of functions and connects them to cloud services without requiring developers to provision servers [10, 11]. In this paper, we refer to FaaS when we use the term serverless computing.

Serverless cloud computing has many good characteristics [12, 13], one of which is scalability. Scaling can be vertical or horizontal; vertical scaling adds or removes cores from the running container, while horizontal scaling creates new containers or eliminates running ones without affecting the current resource allocations [14]. In serverless computing, applications automatically scale up and down on demand, and the developer does not have to be concerned with scaling issues. For example, when an application runs on a serverless cloud, it will scale up automatically when the number of requests to the application increases. Another characteristic of serverless computing is payment per resource usage: this paradigm of cloud computing charges developers based on actual resource usage. For example, a deployed application will not cost the developer anything while it is idle, and the serverless provider will only charge once the application starts using resources.
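The pay-per-usage idea can be illustrated with a small calculation. This is only a sketch under assumed billing terms: the function `invocation_cost`, its parameters, and the prices used below are hypothetical placeholders and are not the pricing of any real provider.

```python
def invocation_cost(invocations: int,
                    avg_duration_s: float,
                    memory_gb: float,
                    price_per_gb_second: float,
                    price_per_request: float) -> float:
    """Estimate a bill that depends only on actual usage (all prices are placeholders)."""
    # Compute charge: time the functions actually ran, weighted by allocated memory.
    compute = invocations * avg_duration_s * memory_gb * price_per_gb_second
    # Request charge: a flat fee per invocation.
    requests = invocations * price_per_request
    return compute + requests


# An idle application (zero invocations) incurs no charge under this model.
idle_bill = invocation_cost(0, 0.2, 0.5, 0.00002, 0.0000002)

# One million short invocations with the same placeholder prices.
busy_bill = invocation_cost(1_000_000, 0.2, 0.5, 0.00002, 0.0000002)
```

The point of the sketch is the structure of the formula: every term is multiplied by the number of invocations, so the bill drops to zero when nothing runs.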

However, any new technology will face numerous technical and operational issues and obstacles at the beginning. Since the recent introduction of serverless cloud computing, several drawbacks have been identified [7]. Serverless cloud computing lacks tools that help manage and monitor serverless applications. Moreover, it might raise security concerns. Further, serverless providers pose a vendor lock-in problem. Nevertheless, serverless cloud computing has gained positive attention in the industry, even though it has not been studied extensively in academic research [7].

Therefore, the aim of this research is to answer some crucial research questions related to serverless cloud computing and thereby help researchers as well as developers to better understand serverless cloud computing and contribute to its development.

The rest of this paper is structured as follows: “Related works” section presents the related works for this study. “Research methodology” section describes in detail the research methodology used to conduct this survey study. “Results” section presents the results and outcomes of the study. “Threats to validity” section presents the threats to validity of this study. Finally, the conclusions of the study are provided in “Conclusions” section.

Related works

The most relevant studies published on the topic are briefly presented here. The authors in [15] and [16] discussed some important background to the origin and evolution of serverless computing and the long road that serverless computing has taken over the years. The authors in [9] thoroughly discussed the true meaning of serverless architectures and how they are changing the way in which applications are built, deployed, and distributed.

Numerous studies focused on technical interpretations of serverless computing, while other more recent research suggested various benefits that it brings to developers. Nowadays, this type of computing is being used in several ways. In an empirical study, the authors in [17] aimed to investigate the development practices of serverless computing in the industry. They concluded that for developers, it remains a barrier to adopt the right mindset to best utilize the tools inherent to serverless architecture. More documentation and easier access to such resources would help developers to embrace the possibilities that serverless computing has to offer.

Within the IT industry, serverless computing has great potential to progressively extend its capabilities to a wider set of domains. Thus, the implementation of serverless computing is not restricted to the enhancement of infrastructure; it can be employed for many different purposes, e.g., serverless messaging, neural network training [18], video processing [19], and big data [20]. Undeniably, these contributions are valuable to the general public and researchers in the field, as it is of primary importance to comprehend how this technology works.

However, it is presently crucial to provide more than only theories and concepts: it is time to weigh the benefits and drawbacks of serverless computing and to analyze how far the field has progressed, to assess what remains to be done and improved. As an example, the authors in [21] discussed some possible new abstraction levels to reduce processing limitations. The authors in [22] discussed the results from an open-source framework to achieve on-premises serverless computing that can process big workloads with a scalable and sensible usage of resources. We can infer from these related publications that researchers everywhere are working to determine how to best exploit the potential that serverless computing frameworks could introduce to software development.

In [23], the authors described how serverless computing is becoming the next step in the evolution of cloud computing and its platforms. In our paper, we focus on the ongoing challenges, benefits, and drawbacks of using it.

The authors in [24] conducted a systematic exploration of research papers related to serverless computing. As they mentioned, their work is not a survey but a supporting source for future research papers. They proposed an open dataset of serverless computing papers, which includes 60 papers for the period 2016 to July 2018. They also analyzed the dataset in terms of bibliometrics, content, and technology, and produced statistics about each aspect. In contrast, our paper aims to conduct a systematic survey in which we try to find answers to several critical questions related to serverless computing. In addition, our study covers the period 2016–2020, and thus 275 papers have been considered.

The authors in [25] mainly focused on scheduling tasks in the cloud. They described various techniques for scheduling workflows to reduce the execution time, the cost, or both. Moreover, they proposed a hybrid method that combines FaaS and IaaS. Small tasks can be executed remotely using FaaS, which reduces the execution cost and, in turn, the number of virtual machines required. IaaS can then be dedicated to the long-running tasks.

The authors in [26] covered only 24 research papers from 2017–2019. In their paper, they considered both the white and grey literature. They identified 32 characteristics of serverless and the possible issues related to them; only eight of these were stated by both the white and grey literature, while the remaining ones come from the grey literature only. All the characteristics are explained and presented briefly. In our paper, 275 research papers from 2016–2020 have been covered and more research questions have been answered. Moreover, a well-defined systematic literature study process has been employed. Thus, the grey literature has been excluded from our paper, and our results are reproducible compared to theirs.

The authors in [27] mainly concentrated on difficulties and gaps in data-centric and distributed computing using FaaS. Additionally, they evaluated the severity of these challenges through three case studies from big data and distributed computing settings: model training, low-latency prediction serving using batching, and distributed computing. In contrast, our paper is a broad and comprehensive study on FaaS that covers 275 research papers taken from the white literature during 2016–2020.

The paper [28] presented only four use cases of FaaS: event-triggered computing, video broadcasting, Internet of Things (IoT) data processing, and shared delivery systems. Additionally, the paper compared only three platforms, namely Amazon Web Services (AWS) Lambda, Google Cloud Functions, and Microsoft Azure Functions. In contrast, our paper presents a comprehensive study of FaaS. We identified in detail eight use cases: chatbot, information retrieval, file processing, smart grid, security, networks, mobile, and IoT. Moreover, our paper compared ten FaaS platforms, namely AWS Lambda, Apache OpenWhisk, Microsoft Azure Functions, Google Cloud Functions, OpenLambda, IBM Cloud Functions, OpenFaaS, Knative, FunctionStage, Huawei Cloud, and Nuclio.

The authors in [29] covered only 15 papers from 2016–2018. They took both the white and grey literature into account. In contrast, our paper includes 275 research papers published in the period 2016–2020, all taken from the white literature only. Moreover, our paper has formulated and answered ten clear and well-defined research questions.

The authors in [30] focused on FaaS performance evaluation and its publication trends during 2016–2019. They identified the most commonly evaluated FaaS platforms. Additionally, they evaluated the performance features for benchmark types, micro-benchmarks, and common features across FaaS platforms. Moreover, they presented the most common platform configurations in FaaS, namely language runtimes, function triggers, and external services. In contrast, our paper presents a survey of the most important and state-of-the-art aspects of FaaS, and it covers comprehensive theoretical aspects of FaaS taken from the white literature during 2016–2020.

The authors in [11] conducted a systematic mapping study on serverless cloud computing. The main aim of their study is to concentrate on FaaS engineering. They raised two main concerns: (a) identifying published research that considers developing or modifying serverless platforms and tools, and (b) identifying the challenges and drivers related to these publications. In contrast, our study extends the challenges and issues related to serverless computing. Moreover, we provide more details about serverless computing platforms and the use of these platforms in the literature. Our study also provides a detailed comparison among the most widely used serverless platforms, and it addresses more aspects of serverless cloud computing, such as its application areas and future directions.

The authors in [7] provided useful observations about serverless computing, its architecture, and use cases. Also, they discussed the challenges and benefits of moving forward from monolithic applications and the differences between traditional cloud services and serverless computing. Our work has extended the details of their work regarding the benefits and drawbacks of using serverless computing. It has also included more use cases and workloads to deepen the findings of previous studies.

The authors in [4] presented a technical report on serverless computing. They covered the emergence of serverless and its limitations, including limited storage for fine-grained tasks, lack of coordination among functions, inadequate performance for standard communication patterns, and the performance of functions. They also compared AWS serverful with AWS serverless, and explained the challenges of architecture, networking, security, and abstractions in serverless computing. They identified five application areas: real-time video encoding, MapReduce, linear algebra, machine learning training, and databases. In contrast, our paper has covered 275 research papers from 2016–2020, forming a well-defined systematic literature study. We also identified 21 serverless challenges and issues, and we compared serverless with the traditional cloud computing paradigm. We identified more application areas, including chatbot, information retrieval, file processing, smart grid, security, networks, IoT, and edge computing.

The authors in [31] presented a white paper based on research papers published during 2015–2017. They outlined the definition of serverless alongside its advantages and disadvantages. They also classified serverless use cases into six categories, namely backends, web applications, chatbots, big data, IT automation, and Amazon Alexa. Moreover, they addressed a few research questions, including the need for the stateless feature in serverless, whether serverless could execute long-running tasks, programming models, serverless standards, and the importance of serverless for scientific research. In contrast, our paper is a comprehensive study on FaaS; we covered 275 research papers taken from the white literature during 2016–2020. In our paper, eight application areas have been identified, as mentioned earlier. We have identified and answered ten research questions that cover many aspects of the topic in more detail than the aforementioned study.

With this paper, we address ten important research questions about the topic, potentially making it a more complete guide to the development and use of serverless computing. Our work contributes to the analysis of the serverless paradigm in the context of similar applications and how they could better fit specific computing needs. Moreover, information about the current state of serverless platforms, tools, and frameworks has been updated for this survey. This is due to the importance of the topic and its potential to change how both industry and academia have managed the deployment of cloud applications until now. Updated information about the area could benefit future studies focused on the serverless computing paradigm, as it makes researchers aware of the latest resources and opportunities in the area.

Research methodology

Research questions

In this study, a number of research questions (RQs) have been identified and answered. Each RQ addresses a particular aspect of serverless computing as follows.

- RQ1. What is the number and distribution of studies published on serverless computing in the period (2016–2020)?
- RQ2. Which researchers, organizations, and countries are active in serverless computing research?
- RQ3. What are the differences between serverless computing and traditional cloud computing?
- RQ4. What are the benefits of using serverless computing?
- RQ5. What are the most used software platforms that enable serverless computing in the literature?
- RQ6. What are the application areas of serverless computing in the literature?
- RQ7. What are the challenges and issues of using serverless computing?
- RQ8. What tools are available for serverless computing? (serverless tools)
- RQ9. What are the available research approaches to analyze the migration of monolithic applications to serverless computing?
- RQ10. What are the potential future directions of research on serverless computing?

Search strategy

Literature sources

In this study, five standard online databases have been selected as sources that index the literature of software engineering and computer science. These sources are presented in Table 1.

Search string

To find the publications relevant to this study, the following extensive search string has been applied on the database sources of literature:

(serverless OR FaaS OR “function as a service” OR “function-as-a-service”) AND (computing OR paradigm OR architecture OR model OR application OR function OR service OR platform OR programming)

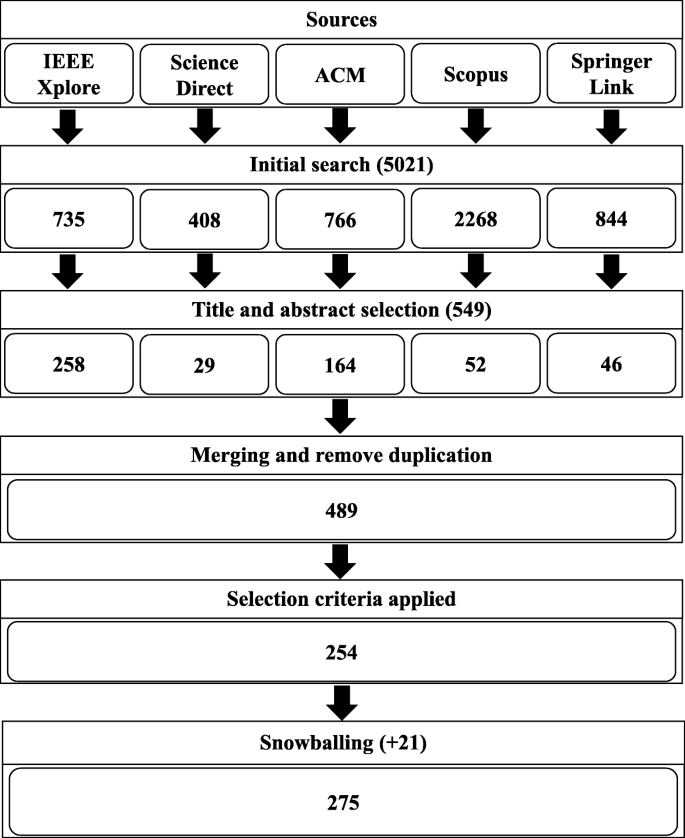

To obtain the best publication list, a generic search string was created that contains keywords related to serverless cloud computing. The string, restricted to the period 2016–2020, was applied to all libraries. Because the Springer Link library covers many fields, its search returned more results than the other libraries. This is because the keyword FaaS is used in many research areas for different purposes, for instance, fish as a service (FaaS) and FPGA as a service (FaaS). Therefore, we used the Computer Science subject filter with Springer Link, ScienceDirect, and Scopus to reduce the number of incorrect papers. Additionally, some inaccurate results were obtained due to partial similarity to FaaS, such as the Federal Aviation Administration (FAA). The results of the initial search are shown in Fig. 2; they comprised 5,021 papers in total.
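The boolean structure of the search string, and the reason it admits unrelated "FaaS" papers, can be sketched as follows. This is an illustrative approximation only: the helper names are hypothetical, and the whole-word matching used here is an assumption that does not reproduce how each literature database actually evaluates queries (for example, it would not return the FAA partial matches mentioned above).

```python
import re

# The two OR-groups of the search string, joined by AND.
TOPIC_TERMS = ["serverless", "faas", "function as a service", "function-as-a-service"]
CONTEXT_TERMS = ["computing", "paradigm", "architecture", "model", "application",
                 "function", "service", "platform", "programming"]


def _contains(term: str, text: str) -> bool:
    """Whole-word, case-insensitive match (an assumption about matching behavior)."""
    return re.search(r"\b" + re.escape(term) + r"\b", text) is not None


def matches_search_string(text: str) -> bool:
    """True if the text satisfies (topic term) AND (context term)."""
    lowered = text.lower()
    has_topic = any(_contains(t, lowered) for t in TOPIC_TERMS)
    has_context = any(_contains(t, lowered) for t in CONTEXT_TERMS)
    return has_topic and has_context


# A relevant title and an off-topic title that still matches because of "FaaS".
relevant = matches_search_string("Serverless computing: a survey")
false_positive = matches_search_string("FPGA as a Service (FaaS) platform design")
```

The second title illustrates why a subject filter and manual screening of titles and abstracts were still needed after the automated search.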

After obtaining the initial list of publications, some filters have been applied to reduce the number of incorrect results based on their relation to the serverless computing and FaaS topics. Most of the papers have been analyzed based on the title and abstract. However, when we were unable to make a decision based on the title and the abstract, we read the content of the paper to ascertain whether to include or exclude. As a result, the list of papers which are related to serverless computing has been decreased to 549 papers.

After filtering the papers based on the title and abstract, we merged all 549 papers that were relevant to serverless cloud computing into a single dataset. We then removed duplicated papers based on the combination of title, author names, publication year, and venue. Thus, the number of publications was reduced to 489 papers.
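Duplicate removal on the combination of title, author names, publication year, and venue can be sketched as below. The record layout (a dictionary with `title`, `authors`, `year`, and `venue` keys) and the normalization steps (lowercasing, whitespace collapsing, author sorting) are assumptions made for illustration; the source does not describe how the fields were compared.

```python
from typing import Dict, List, Tuple


def dedup_key(paper: Dict) -> Tuple:
    """Build a comparison key from title, authors, year, and venue."""
    # Normalize case and whitespace so trivial formatting differences do not matter.
    title = " ".join(paper["title"].lower().split())
    authors = tuple(sorted(a.strip().lower() for a in paper["authors"]))
    venue = " ".join(paper["venue"].lower().split())
    return (title, authors, paper["year"], venue)


def remove_duplicates(papers: List[Dict]) -> List[Dict]:
    """Keep the first occurrence of each key, preserving the original order."""
    seen = set()
    unique = []
    for paper in papers:
        key = dedup_key(paper)
        if key not in seen:
            seen.add(key)
            unique.append(paper)
    return unique


# Hypothetical records: the first two describe the same paper from two databases.
sample = [
    {"title": "A Serverless Study", "authors": ["A. Author", "B. Writer"],
     "year": 2019, "venue": "Example Conf"},
    {"title": "a serverless  study", "authors": ["B. Writer", "A. Author"],
     "year": 2019, "venue": "example conf"},
    {"title": "Another Paper", "authors": ["C. Person"],
     "year": 2020, "venue": "Example Journal"},
]
unique_sample = remove_duplicates(sample)
```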

Then, the publications were selected based on their content and on a carefully chosen set of inclusion/exclusion criteria (see the following section). This yielded 254 papers related to serverless cloud computing. In the next step, we applied backward snowballing to enlarge the set of papers relevant to serverless cloud computing, which added 21 more papers to our list. As a result, the total number of relevant papers became 275. The list of these papers and its metadata have been published on the Zenodo website as a dataset [32].
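The selection funnel reported in this section can be checked with a few lines of arithmetic. All counts below are taken from the text; only the variable names are introduced here for illustration.

```python
# Counts reported at each stage of the selection process.
initial_hits = 5021          # results of the initial database search
after_title_abstract = 549   # after title/abstract screening
after_deduplication = 489    # after removing duplicates
after_criteria = 254         # after applying inclusion/exclusion criteria
added_by_snowballing = 21    # added through backward snowballing

# Papers dropped at each stage.
screened_out = initial_hits - after_title_abstract
duplicates_removed = after_title_abstract - after_deduplication
excluded_by_criteria = after_deduplication - after_criteria

# Final size of the dataset.
final_total = after_criteria + added_by_snowballing

print(screened_out, duplicates_removed, excluded_by_criteria, final_total)
```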

Paper inclusion/exclusion criteria

To decide whether a publication is relevant to the scope of this research, a set of inclusion and exclusion criteria have been established and employed as follows:

Inclusion criteria:

- Publications in the field of software engineering and computer science.
- Publications published online from 2016–2020.
- Publications related directly to serverless computing.

Exclusion criteria:

- Publications not published in English.
- Publications without accessible full text.
- Publications not formally peer reviewed (e.g., gray literature).
- Publications not published electronically.
- Publications that are duplicates of other previous publications.
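The criteria above can be read as a single predicate: a publication is kept only if it meets every inclusion criterion and triggers no exclusion criterion. The sketch below encodes that reading; the `Publication` fields and the function name `is_included` are hypothetical, and in the study these judgments were made by the authors reading each paper rather than by code.

```python
from dataclasses import dataclass


@dataclass
class Publication:
    """Hypothetical record of the attributes the criteria refer to."""
    field: str
    year: int
    about_serverless: bool
    language: str
    full_text_accessible: bool
    peer_reviewed: bool
    published_electronically: bool
    is_duplicate: bool


ACCEPTED_FIELDS = {"software engineering", "computer science"}


def is_included(pub: Publication) -> bool:
    """Apply all inclusion criteria, then reject on any exclusion criterion."""
    meets_inclusion = (
        pub.field.lower() in ACCEPTED_FIELDS
        and 2016 <= pub.year <= 2020
        and pub.about_serverless
    )
    hits_exclusion = (
        pub.language.lower() != "english"
        or not pub.full_text_accessible
        or not pub.peer_reviewed
        or not pub.published_electronically
        or pub.is_duplicate
    )
    return meets_inclusion and not hits_exclusion


# A peer-reviewed 2019 paper passes; the same paper marked as grey literature does not.
accepted = Publication("computer science", 2019, True, "English", True, True, True, False)
grey = Publication("computer science", 2019, True, "English", True, False, True, False)
```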

Results

The selected publications were carefully read to answer the raised RQs. Here, a short title is used to represent each RQ. The following subsections present and discuss the results based on each RQ.

Distribution of publications (RQ1)

Publication frequency

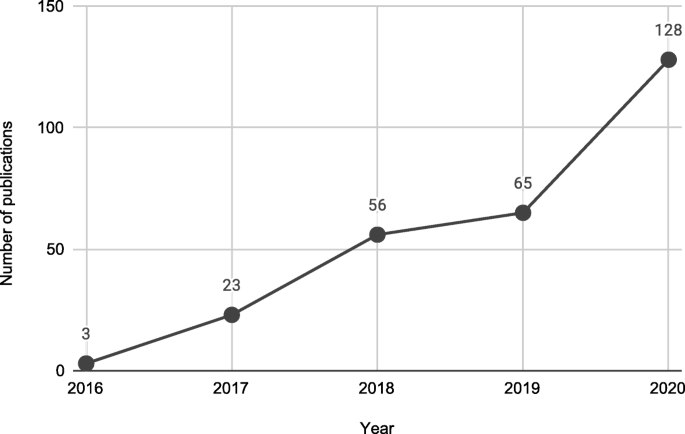

All the selected papers of this study were analyzed to determine their frequency and evolution. Figure 3 shows the results of this analysis. The results show that the average number of publications per year is approximately 55 papers.

Serverless computing has attracted significant engagement over the past two years. This growth has been driven by industry, academia, and developers for several reasons. The first important reason is the attractive opportunities that serverless offers cloud providers: its nature equips them with more convenient and efficient methods to manage and utilize idle computing resources. Another reason is that billing is based only on function execution time and resource allocation. Also, developers are not required to be aware of the underlying infrastructure and workflows. This encourages cloud providers and businesses to migrate to and support serverless in many directions. At the same time, researchers are paying more attention to serverless as it is becoming the future paradigm for cloud computing. Moreover, the current challenges and limits of serverless computing are drawing more academics to address the issues and enhance the currently available features. For the aforementioned reasons, developers and customers are encouraged to select serverless computing for developing applications and services.

Publication venue

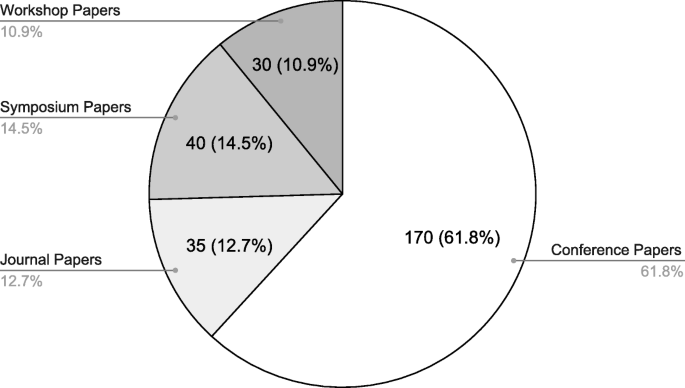

The distribution of the selected papers across publication venues is shown in Fig. 4. The percentages of publications in conference papers, workshop papers, symposium papers, and journal papers are approximately 62%, 11%, 14%, and 13%, respectively. Only about 13% of the studies have been published in journals, which indicates the immaturity of research in serverless computing [33, 34]. It is worth mentioning that some conference papers were published as book chapters; for such papers, the original venue, which is the conference, was considered.

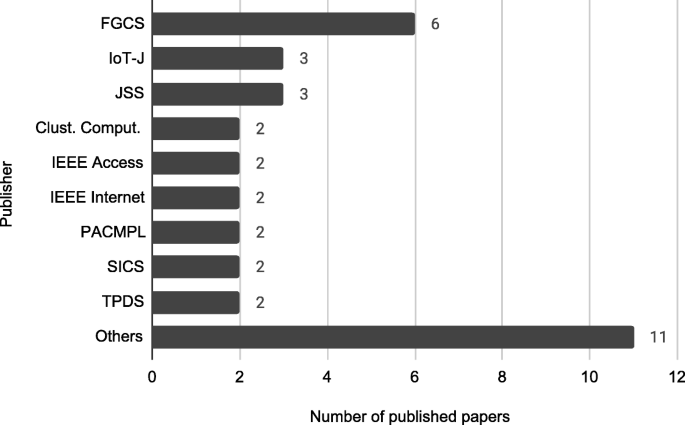

Following the analysis of the publications, the most productive journals, symposiums, conferences, and workshops related to serverless computing can be identified. Due to their long names, abbreviations are used in this paper. The active journals are shown in Fig. 5 and their full names can be found in Table 2. It can be observed from the figure that the top three journals are “FGCS”, “IoT”, and “JSS”. These three journals account for almost 34% of the published journal papers, while the others account for approximately 66%.

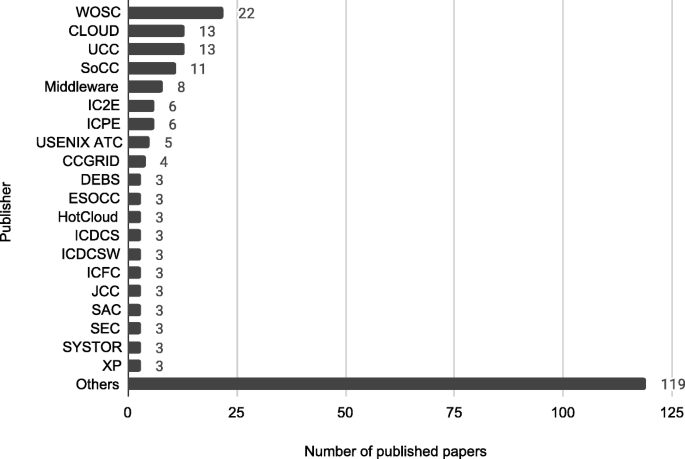

The active conferences are shown in Fig. 6 and their full names are presented in Table 3. “WOSC”, “Cloud”, “UCC”, “SoCC”, and “Middleware” are considered the most active conferences, holding approximately 28% of the published conference papers. Other annual conferences with three or more published papers account for approximately 23% of the conference papers. The majority (almost 49%) of the conference papers were published at individual conferences, which are denoted as “Others” in Fig. 6.

Active researchers (RQ2)

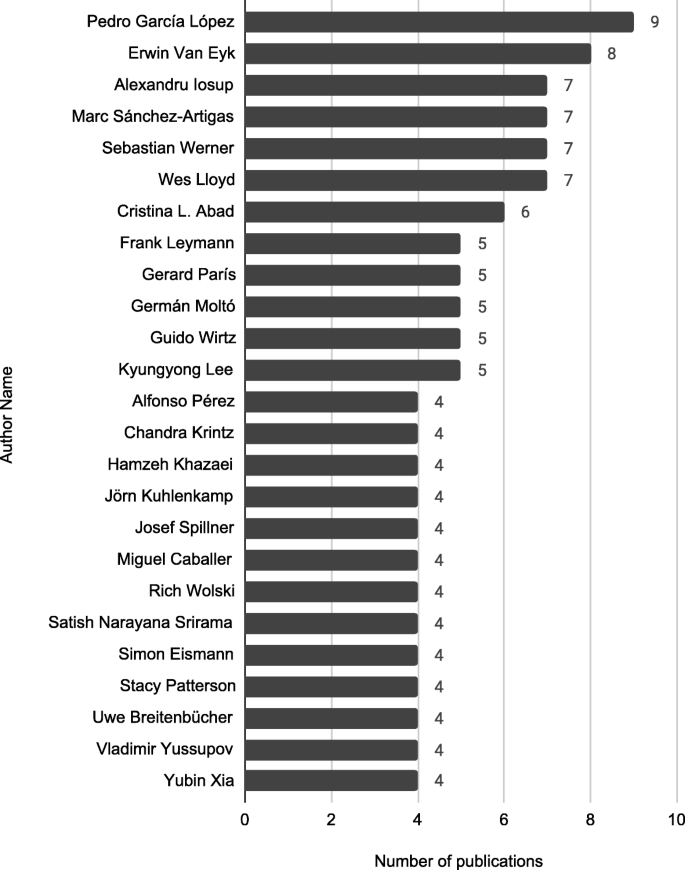

Serverless computing is an active research area thanks to the contributions of several scholars. Researchers are counted as active if they contributed to more than two research studies, as presented in Fig. 7. The figure shows that the top six active researchers are “Pedro García López”, “Erwin Van Eyk”, “Alexandru Iosup”, “Marc Sánchez-Artigas”, “Sebastian Werner”, and “Wes Lloyd”. Table 4 presents the active nations, research institutions, researchers, references to the published papers, and the total number of publications.

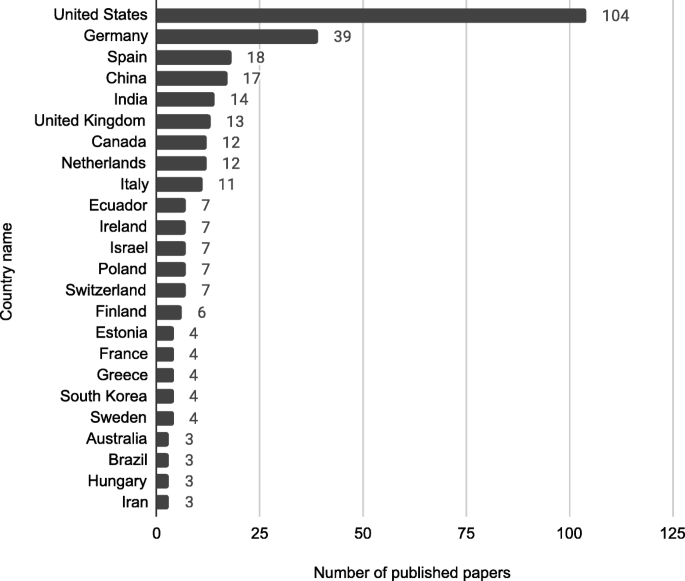

The active nations in the number of papers are obtained from the information presented in Table 4 by extracting the institutional affiliation of the authors and co-authors. An overview of the most active nations and the total number of publications is shown in Fig. 8. It is observable that the United States and Germany are the largest contributors to papers published on serverless computing with 104 and 39 published papers, respectively.

Serverless computing vs. traditional cloud computing (RQ3)

There are several differences between serverless and traditional cloud computing. In the traditional cloud architecture, the server acts as a monolithic system containing all business logic. Meanwhile, the serverless architecture is modeled as smaller, event-driven, and stateless ‘triggers’ (events) and ‘actions’ (functions) [175]. Each component handles different pieces of data and runs independently [176]. Spreading business logic into smaller functions increases development efficiency [77, 177] and also decreases the chance of a single point of failure [77]. In contrast, the component dependency within monolithic applications adversely affects the availability of other services.
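The trigger/action decomposition described above can be sketched with a small in-process dispatcher. This is a toy model under stated assumptions: the decorator `on`, the function `fire`, and the event name `file.uploaded` are hypothetical, and a real platform would route events across independently deployed functions rather than through a local dictionary.

```python
from typing import Callable, Dict, List

# Maps a trigger (event name) to the actions (functions) registered for it.
_actions: Dict[str, List[Callable[[dict], object]]] = {}


def on(trigger: str):
    """Register a function as an action for the given trigger."""
    def register(fn):
        _actions.setdefault(trigger, []).append(fn)
        return fn
    return register


def fire(trigger: str, event: dict) -> list:
    """Run every action bound to the trigger; each sees only the event data."""
    return [action(event) for action in _actions.get(trigger, [])]


@on("file.uploaded")
def make_thumbnail(event: dict) -> str:
    return f"thumbnail for {event['key']}"


@on("file.uploaded")
def index_metadata(event: dict) -> str:
    return f"indexed {event['key']}"


results = fire("file.uploaded", {"key": "cat.png"})
```

Each action is small, stateless, and independent of the other, which is the property the text contrasts with a monolithic server holding all business logic.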

In a serverless architecture, developers do not control listening on TCP sockets, managing load balancers, maintaining or configuring the server, or provisioning and allocating resources. Therefore, there is no need for system administrators; developers focus only on handling client requests and delivering valuable services [8].

Serverless computing also differs from monolithic computing as the functions have shorter life cycles.

The traditional monitoring and debugging tools that are used in monolithic applications are not included in the serverless architecture; developers are compelled to use built-in tools for debugging and monitoring. Computing power is no longer a concern for developers in the serverless paradigm, as it can scale horizontally almost indefinitely [178, 179]. Meanwhile, the client-server architecture usually requires dedicating two server instances: the primary instance and a second one in case the former fails. This leads to higher costs in the monolith paradigm. Serverless architecture could be more economical for unsteady load conditions, while the server-based architecture is more suitable for steady loads [152]. Because serverless applications scale up and down according to the requests, sessions are not kept in memory as they are in traditional systems [8]. Hence, it is difficult to keep track of state across requests.
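One common way to work around the lack of in-memory sessions is to keep session data in a service outside the function. The sketch below illustrates that idea only; `ExternalStore` is an in-memory stand-in for some managed key-value service, and both it and `count_visit` are hypothetical names, not an approach prescribed by the cited studies.

```python
from typing import Any, Dict


class ExternalStore:
    """In-memory stand-in for a key-value service that outlives any one invocation."""

    def __init__(self) -> None:
        self._data: Dict[str, Any] = {}

    def get(self, key: str, default: Any = None) -> Any:
        return self._data.get(key, default)

    def put(self, key: str, value: Any) -> None:
        self._data[key] = value


def count_visit(event: Dict[str, Any], store: ExternalStore) -> Dict[str, Any]:
    """Stateless function: reads and writes session state through the store."""
    session_id = event["session_id"]
    visits = store.get(session_id, 0) + 1
    store.put(session_id, visits)
    return {"session_id": session_id, "visits": visits}


store = ExternalStore()
first = count_visit({"session_id": "s1"}, store)
second = count_visit({"session_id": "s1"}, store)
```

Because the function itself holds nothing between calls, any instance started by the platform can serve the next request for the same session.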

FaaS boosts the security level as cloud providers continuously update their infrastructure with the latest security patches; this also removes the security burden on developers [17]. Directly accessing the backend resources in the traditional model is considered a critical security issue. Thus, any requests from the clients and internal functions in the serverless environment must go through a distributed request-level authorization mechanism that strengthens the security level [8]. Additionally, denial of service (DoS) attacks are controlled, as it is more difficult to attack distributed servers than a single server [175]. However, some security concerns remain due to the third-party API usage [9]. Besides, there is a lack of tools to identify vulnerabilities and access control risks. Table 5 summarizes the aforementioned differences.

Benefits of serverless computing (RQ4)

Serverless computing offers numerous benefits to its users, and Table 6 presents the papers that state these benefits. This section summarizes those benefits as follows:

Cost effective

Serverless applications are abstracted from server infrastructure, which results in services priced according to usage [180]. For example, an application runs only when a user makes a request to a service within it. Cloud vendors charge only for the resources used, and there is no cost while an application is idle.

Scalability

Serverless computing has reasonably solved the resource allocation problem [191]. Developers therefore do not have to concern themselves with application scalability, because the application scales automatically whenever user requests increase. If there are numerous requests to a function within the application, the serverless provider will start servers to handle these requests.

Server-side management

In serverless computing, developers do not need to concern themselves with the server side and its management. Serverless cloud providers take care of managing and maintaining the hardware and software required to deploy applications. In addition, they handle all administration operations for different kinds of resources such as central processing unit (CPU), memory, and storage, which lets developers focus on their applications.

Easy to deploy

Serverless applications are easy to deploy. For example, to deploy an application, developers only need to upload some functions and release a new product. The serverless platform will take care of deployment management and infrastructure-related concerns such as server provisioning and scaling.

Decrease latency

Serverless applications are not hosted on a specific server; the code can run from any server in any location. Therefore, cloud vendors can run the application on servers near the end user’s location. This reduces latency, because end user requests do not have to travel across the Internet to access the original server.

Serverless platforms in the literature (RQ5)

The software platforms are generally implemented to deal with resources from several clouds and ensure proper running of client applications. The heterogeneous nature of the cloud providers’ infrastructure (hardware and operating systems) led to the necessity to direct the developers’ focus to the functional part, rather than the underlying infrastructure [199].

With the emergence of the first serverless platform, AWS Lambda by Amazon in 2014 [8], cloud computing has evolved to a new generation referred to as serverless computing. However, serverless was not a brand-new paradigm; it emerged after the advancements in adopting virtual machines and container technologies [120]. By 2016, other competitors, namely Google, Microsoft, and IBM, followed the trend. Several commercial and open-source platforms offer serverless computing. The well-known commercial systems are AWS Lambda, Google Cloud Functions, and Azure Functions. Alternatively, there are several open-source platforms available, including IBM Cloud Functions and Apache OpenWhisk.

There are several criteria to help developers in selecting a serverless platform: cost, performance, supported programming languages and model, ease of deployment, ease of composing functions from different providers, security, and monitoring and debugging tools [184].

Table 7 presents the serverless platforms used in the considered papers of this study. It can be noted that “AWS Lambda”, “Apache OpenWhisk”, and “Azure Functions” are the most used platforms with 78, 23, and 11 published papers, respectively. However, it is worth mentioning that each platform has its own set of features and differs from others.

The application areas of serverless computing in the literature (RQ6)

Serverless computing can be utilized in a number of application areas as follows:

Chatbot

A chatbot application is developed using serverless computing, which enables interaction with users via voice or text commands. Within this application, six functionalities have been implemented, namely the Date, News, Jokes, Weather, Music Tutor, and Alarm Service. For example, a user can ask for the current date using a voice or text command. The request is routed to the required serverless action on OpenWhisk for further processing. The Date action is activated via the issued command and returns the current date to the user in the form of text or voice [44].
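The routing of a command to its action can be sketched as a simple dispatch table. This is only an illustration: the names `ACTIONS`, `route`, `date_action`, and `jokes_action` are hypothetical, and plain Python functions stand in for the OpenWhisk actions used in [44].

```python
from datetime import date

def date_action(_params):
    # Stand-in for the Date action: returns today's date as text.
    return date.today().isoformat()

def jokes_action(_params):
    # Stand-in for the Jokes action.
    return "A placeholder joke."

# Hypothetical routing table: command keyword -> serverless action.
ACTIONS = {"date": date_action, "jokes": jokes_action}

def route(command, params=None):
    """Route a user command to the matching action, as a platform trigger would."""
    action = ACTIONS.get(command.strip().lower())
    if action is None:
        return "Sorry, I do not support that command."
    return action(params or {})
```

Each entry in the table corresponds to one independently deployable function, so adding a new functionality would only require registering one more action.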

Another example is the ticketing chatbot service developed using serverless computing and natural language processing (NLP). The architecture of the system consists of three parts: (1) the Node.js Webhook, which works based on hypertext transfer protocol (HTTP) POST or GET requests; (2) Wit.AI, which is an NLP service; and (3) Ticket.com, which is a ticketing order API. For example, when a user books a flight ticket, a specific function on Webhook will be activated, which routes the user query to the Wit.AI service. Wit.AI will process the query and extract useful parameters from the request such as destination, date, and time, then send them back to Webhook. After the processed query is received from Wit.AI, another action will be triggered and will pass the processed query to the Ticket.com API to retrieve flight information such as the flight number, airline name, departure time, and ticket price from several airline companies. Finally, Webhook will provide flight information to the user [44, 179, 248].

Information retrieval

A search engine web-based application is developed based on serverless architecture. Search engine functionalities are implemented as Amazon lambda functions. The search engine executes all the details of retrieval processing after receiving the user query (e.g., tokenization, stop-word removal, term weighting, similarity calculation, and ranking). Then, it sends back the results to the user as documents stored in the DynamoDB database to be accessed using the web application interface [173].
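The retrieval steps listed above (tokenization, stop-word removal, term weighting, similarity calculation, and ranking) can be sketched in a few lines. The sketch assumes TF-IDF weighting with cosine similarity and a tiny stop-word list; the source does not state which weighting or similarity measure [173] uses, and the names `tokenize` and `rank` are illustrative.

```python
import math
import re
from collections import Counter

STOP_WORDS = {"the", "a", "an", "of", "and", "in", "to", "is"}

def tokenize(text):
    """Lowercase, split on non-letters, and drop stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]

def rank(query, documents):
    """Return document ids sorted by TF-IDF cosine similarity to the query."""
    tokens = {doc_id: tokenize(text) for doc_id, text in documents.items()}
    n = len(documents)
    # Document frequency and smoothed inverse document frequency per term.
    df = Counter(term for toks in tokens.values() for term in set(toks))
    idf = {term: math.log((1 + n) / (1 + count)) + 1 for term, count in df.items()}

    def vector(toks):
        tf = Counter(toks)
        return {t: tf[t] * idf.get(t, 0.0) for t in tf}

    def cosine(u, v):
        dot = sum(w * v.get(t, 0.0) for t, w in u.items())
        norm = (math.sqrt(sum(w * w for w in u.values()))
                * math.sqrt(sum(w * w for w in v.values())))
        return dot / norm if norm else 0.0

    q = vector(tokenize(query))
    scores = {doc_id: cosine(q, vector(toks)) for doc_id, toks in tokens.items()}
    return sorted(scores, key=scores.get, reverse=True)
```

In a serverless deployment, each of these stages could be packaged as its own function, with the document collection held in an external database rather than passed in as a dictionary.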

File processing

Serverless computing can be utilized in file processing applications [119, 249]. For instance, in [119] a model for highly parallel file processing applications based on serverless architecture is proposed. This model provides users with different ways to process their files.

The first method is by using the API gateway. In this method, users submit files using the HTTP request employing the API gateway to a lambda function to process the file (e.g., medical images and video files).

The second method is by uploading/reading files to the Amazon simple storage service (Amazon S3) bucket. This method provides the user with three different ways to execute a lambda function using S3 buckets: (a) by uploading a file to S3 buckets. When the file is uploaded, S3 creates an event to invoke a lambda function; (b) by copying a file from another bucket to the bucket linked with the lambda function. This will cause the trigger of an event from S3 to invoke a lambda function as in the previous manner; (c) by specifying a bucket where the files to be processed are stored. Then, for each file found, the lambda function is invoked in parallel using an S3 bucket.

The third method is by specifying the output file. By this method, the user can set a chain of lambda functions to be invoked by S3 buckets. In this case, the user defines the input/output buckets for each of the lambda functions. Thus, the output bucket can be used as an input to another lambda function [119]. Here, serverless functions can handle different types of data (stored in files) such as sensory, textual, and biological data [200]. Also, many preprocessing operations using NLP may be applied to data files before processing, such as stemming and noise removal [78].
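The chaining of functions through input and output buckets can be sketched as follows. The sketch is a simulation under stated assumptions: an in-memory dictionary stands in for Amazon S3, the bucket names and the helpers `put_object`, `s3_event`, and `make_handler` are hypothetical, and the event carries only the bucket name and object key fields that an S3 notification provides.

```python
# In-memory stand-in for S3: bucket name -> {object key: content}.
BUCKETS = {"input": {}, "stage": {}, "output": {}}
TRIGGERS = {}  # bucket name -> handler invoked when an object is created

def s3_event(bucket, key):
    """Minimal event carrying only the fields the handlers read."""
    return {"Records": [{"s3": {"bucket": {"name": bucket}, "object": {"key": key}}}]}

def put_object(bucket, key, content):
    """Store an object, then fire the handler linked to that bucket, if any."""
    BUCKETS[bucket][key] = content
    handler = TRIGGERS.get(bucket)
    if handler is not None:
        handler(s3_event(bucket, key), None)

def make_handler(output_bucket, transform):
    """Build a Lambda-style handler that writes its result to output_bucket."""
    def handler(event, context):
        for record in event["Records"]:
            bucket = record["s3"]["bucket"]["name"]
            key = record["s3"]["object"]["key"]
            put_object(output_bucket, key, transform(BUCKETS[bucket][key]))
    return handler

# Chain: input -> (strip whitespace) -> stage -> (uppercase) -> output.
TRIGGERS["input"] = make_handler("stage", str.strip)
TRIGGERS["stage"] = make_handler("output", str.upper)

put_object("input", "report.txt", "  hello  ")
```

Because the output bucket of the first function is the input bucket of the second, a single upload drives the whole chain; the last bucket has no trigger, so the chain terminates there.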

Smart grid

A MATLAB simulation scenario is created to illustrate the use of the smart grid with serverless cloud computing to control and manage electrical loads (devices). In this scenario, the Simulink tool is employed for simulation. A MATLAB program is developed to indicate the start and end of the simulated grid model via a batch file. The batch file is used to upload grid model data generated by the program to Amazon S3. Afterwards, a lambda function in the serverless side will be activated to process the uploaded data, and subsequently the result will be sent back to the batch file as a response. In return, the program will read continuously the response from the batch file and interpret its content to manage the electrical switch (loads) [201].

Also, an electrical overload warning system is implemented in the smart grid, based on serverless architecture. The system uses some Amazon web services, including S3, lambda functions, simple notification service (SNS), and CloudWatch. S3 is used as a storage service in the system. Lambda functions constitute a computing service that executes the code of the application. CloudWatch is a monitoring tool that monitors AWS resources and applications. The SNS is a notification service that sends and receives notifications.

The main sections of this warning system consist of data collection, data acquisition, data analysis, data mining, conclusion verification, and conclusion publishing. In this architecture, the AWS Lambda is used in data analysis and data mining. AWS CloudWatch is used for data conclusion verification. The SNS is used to generate alarms. For instance, the data is uploaded to S3, and subsequently, a lambda function is activated for data analysis and data mining. After the lambda function execution, its log data is stored in CloudWatch logs. CloudWatch is used for conclusion verification. CloudWatch defines an alarm size to a specific value, upon which it compares the value of log data with a predefined alarm size to check the current state. Then, the CloudWatch uses SNS for publishing conclusions. If the receiving data is greater than the alarm size, an alarm signal will be triggered and send an email via SNS [5].
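The verification and publishing steps reduce to a threshold comparison followed by a notification. The sketch below illustrates only that logic; `check_alarm` is a hypothetical name, and a plain list stands in for the notification service rather than an actual SNS or CloudWatch call.

```python
def check_alarm(value, alarm_size, publish):
    """Compare an analysed value with the alarm size and notify when it is exceeded."""
    if value > alarm_size:
        publish(f"Overload warning: {value} exceeds {alarm_size}")
        return "ALARM"
    return "OK"

sent = []  # stand-in for the notification service
states = [check_alarm(v, alarm_size=100.0, publish=sent.append) for v in (80.0, 120.5)]
```

Only the second reading exceeds the assumed alarm size of 100.0, so a single notification is recorded.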

Security

An automated threat detection system is introduced using serverless cloud computing and Kubernetes. Kubernetes is an open source system to automatically deploy and manage application containers [243, 250]. The system deals with threats (e.g., software vulnerabilities and insecure configurations) automatically based on user-defined policies. The system includes a vulnerability scanner (VS), which is a threat detection component. Whenever users deploy new application containers, the containers are registered with the VS, and a scanner agent is installed. When a threat is detected by the scanner, a notification is sent to the OpenWhisk component, which activates a serverless function that takes actions to reduce the threat. OpenWhisk will invoke a Kubernetes API extension and let the security enforcement operator (SEO) handle the operation [35].

Networks

Serverless cloud computing has been employed in different networking domains [175, 188, 251, 252]. In [188], a variety of networking fields that can utilize the advantages of serverless computing architecture, including software-defined networking (SDN), have been discussed. The SDN is a network architecture approach that enables the network to be manageable and adaptive. This architecture separates the network control plane from the forwarding functions (the data plane). This decoupling enables network switches to become simple forwarding devices, and the network control is implemented as a network application that is executed on a logically centralized controller. Serverless computing can be used in the SDN controllers. These controllers can be implemented as independent functions deployed on serverless platforms. For example, when a packet arrives at the SDN forwarding device, the device will parse the packet header and forward it to the SDN controller. The functions within the SDN controller will then be activated and will determine what action is to be taken with the packet. After that, the controller will send the information to the forwarding device. The action might be modifying the header, dropping the packet, etc.
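A controller function of this kind can be sketched as a stateless decision over a parsed header. The policy tables, the addresses, the function name `packet_in`, and the "flood" fallback for unknown destinations are all assumptions made for the example; they are not taken from [188].

```python
# Hypothetical policy tables consulted by the controller function.
BLOCKED_SOURCES = {"10.0.0.66"}
FORWARDING_TABLE = {"10.0.1.5": 2, "10.0.2.7": 3}

def packet_in(header):
    """Serverless controller function: decide what the switch should do with a packet."""
    if header["src"] in BLOCKED_SOURCES:
        return {"action": "drop"}
    port = FORWARDING_TABLE.get(header["dst"])
    if port is None:
        # Unknown destination: assumed fallback is to send on all ports.
        return {"action": "flood"}
    return {"action": "forward", "out_port": port}
```

The forwarding device would send the parsed header as the event payload and apply whatever action the function returns.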

IoT

Serverless computing has been utilized in many IoT applications, as shown in Table 8. For example, a camera can be installed to monitor a house, after which processing images captured by the camera can be performed by some serverless functions provided by the OpenWhisk platform. When a camera detects an interesting object such as a car or a human, the camera sends its pictures to the serverless platform for further processing. To extract features, a serverless function is called to perform feature extraction and then reports its status to the users [232].

Edge computing

Serverless cloud computing and edge computing have been used to build different kinds of applications, as presented in Table 8. For instance, the authors of [217] have implemented an autonomous mobile robot (AMR) system based on serverless computing and edge computing. The system consists of three main components: an AMR with NVIDIA Jetson TX2 module for edge computing, a serverless architecture based on AWS, and a cross-platform mobile application developed using React Native. The main idea of the system is to deliver a package to a user. For example, the user will interact with the mobile application to send a package. Once the delivery request has been received from the user, the AWS IoT can activate related lambda functions, such as position coordinate. Then, the AMR would start its mission, sending the package to the receiver’s location. Also, facial images were regularly retrieved by AWS lambda to identify the receiver’s face. Finally, the task is completed when the receiver’s identification is confirmed [217].

Serverless computing challenges and issues (RQ7)

Studying the literature reveals a number of challenges and issues posed by employing serverless computing. These challenges cover the functional and non-functional aspects of serverless computing as follows:

Cost and pricing model

Cost is a fundamental challenge; therefore, serverless computing providers should reduce the usage of resources to the minimum, while functioning in both execution and idle states. Further, the pricing model is another challenge in serverless computing compared to other cloud computing approaches. For example, CPU-bound functions are cheap, whereas input/output (I/O)-bound functions may be more expensive than on dedicated servers. Table 9 presents papers that investigate issues on cost and pricing models in serverless cloud computing.

Cold start

Serverless computing can scale to zero while there is no request for functions and services. Scaling to zero leads to a problem called cold start. A cold start occurs when serverless functions remain idle for some time, and the next time these functions are invoked, a longer start time is required. Methods and techniques to reduce the cold start problem are crucial; as a result, many papers have studied this problem, as shown in Table 9.
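The effect can be illustrated with a toy model in which an instance stays warm for a fixed idle period after its last call. The class name `FunctionPool`, the 600-second timeout, and the two latency values are hypothetical; real platforms use their own, generally undisclosed, policies.

```python
class FunctionPool:
    """Toy model: an instance stays warm for idle_timeout seconds after its last call."""

    def __init__(self, idle_timeout=600.0, cold_latency=1.5, warm_latency=0.05):
        self.idle_timeout = idle_timeout
        self.cold_latency = cold_latency
        self.warm_latency = warm_latency
        self.last_call = None  # None means the function is scaled to zero

    def invoke(self, now):
        """Return the start latency for a call arriving at time `now` (seconds)."""
        warm = self.last_call is not None and now - self.last_call <= self.idle_timeout
        self.last_call = now
        return self.warm_latency if warm else self.cold_latency

pool = FunctionPool()
latencies = [pool.invoke(t) for t in (0, 30, 700, 5000)]
```

In this model, only the call at 30 seconds finds a warm instance; the calls at 700 and 5000 seconds arrive after the idle timeout has elapsed and pay the cold start latency again.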

Resource limits

In serverless computing, resources are required to ensure that the platform can deal with load increasing. This includes CPU usage, memory, execution time, and bandwidth [94, 202, 210, 235, 280].

Security

Security is the most challenging issue in serverless cloud computing. One of the security issues is isolation, because functions are running on a shared platform by many users. Therefore, strong isolation is required. Another security issue is trust when it comes to process-sensitive data. The serverless applications work with many system components, which must function correctly to maintain security properties. Table 9 presents several papers associated with serverless security.

Scalability

Serverless computing must ensure function scalability and elasticity. For example, when many requests are issued to a serverless application, these requests should all be served and the used serverless cloud provider should provide the required resources to process all these requests and should scale up with the number of requests [210, 280, 281].

Long-running

Serverless computing runs functions within a limited and short execution time, whereas some tasks might require a long execution time. Long-running executions are not supported, since these functions are stateless, which means that if a function is paused it cannot be resumed [11, 202, 234, 280].

Programming & debugging

There is currently a lack of debugging tools. Further, monitoring tools are required, since developers need to monitor the application and observe how functions are working. More advanced integrated development environments (IDEs) are needed, so developers can perform refactoring functions, such as merging or splitting functions, and reverting functions to the previous version. Moreover, logs from serverless function invocations need to be sent to the developer and provide detailed stack traces. When an error occurs, a good method is required to report details on the occurrence to the developer. The equivalent of a stack trace for serverless computing is currently not available. Table 9 shows many papers that consider programming and debugging challenges and issues.

Vendor lock-in

The FaaS paradigm separates the code from the data, which leads the functions to depend strongly on the cloud provider’s ecosystem for storing, obtaining, and transferring data [282]. This issue makes the customers dependent on the serverless provider for products and services, and the customers cannot easily use different vendors in the future without substantial cost. Thus, customers have to wait on the serverless provider for additional services [9, 130, 202].

Performance

Serverless computing has many performance challenges and issues such as scheduling and service calling overhead. For instance, scheduling means that when a serverless function is activated in response to an event, this function should be mapped to a specific resource (e.g., container or VM) to be run. The chosen resource can have a significant effect on performance, depending on available resources, location of input data and code, load balancing, etc. Table 9 shows papers related to serverless performance.

Fault tolerance

It refers to a system that continues working and provides its services despite the failure in some components. It mostly occurs when some containers fail. To overcome this challenge, a basic retry mechanism is used [11, 210, 235].
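A basic retry mechanism can be sketched as a wrapper around the function call. The exponential backoff between attempts is an assumption added for the example, since the source only mentions a basic retry; `invoke_with_retry` and `flaky` are hypothetical names.

```python
import time

def invoke_with_retry(func, payload, max_attempts=3, base_delay=0.01):
    """Call func(payload); on an exception, retry with exponential backoff."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func(payload)
        except Exception:
            if attempt == max_attempts:
                raise  # give up after the last attempt
            time.sleep(base_delay * 2 ** (attempt - 1))

calls = {"count": 0}

def flaky(payload):
    """Fails twice, then succeeds, imitating a container that fails and is replaced."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("container failed")
    return payload * 2

result = invoke_with_retry(flaky, 21)
```

Retrying is only safe when the function is idempotent, because a failed attempt may already have produced side effects before the error was raised.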

Function composition

Serverless cloud vendors provide users the ability to deploy small stateless functions to the cloud to handle a specific task. However, some complex tasks require multiple functions to work with each other collaboratively to be performed. Therefore, more research needs to be done on how function composition can be used effectively and efficiently in serverless cloud computing [11, 38, 235].
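The simplest form of composition is a sequential chain in which each function receives the output of the previous one. The sketch below shows that pattern only; the helper `compose` and the three example functions are hypothetical, and real platforms typically provide their own orchestration services for this purpose.

```python
from functools import reduce

def compose(*functions):
    """Chain functions so each one receives the previous one's output."""
    return lambda event: reduce(lambda data, fn: fn(data), functions, event)

def parse(event):
    # Split the incoming text into words.
    return {"words": event["text"].split()}

def count(data):
    # Add the word count to the intermediate result.
    return {**data, "n": len(data["words"])}

def summarize(data):
    return f"{data['n']} words"

pipeline = compose(parse, count, summarize)
```

Calling `pipeline({"text": "scale to zero"})` passes the event through all three functions in order. Parallel or conditional compositions need more than this chain, which is part of why the topic remains open.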

Resource sharing

Functions in serverless cloud computing share resources to achieve inexpensive cloud computing. Sharing resources among functions and other serverless components is a challenging task. Therefore, good techniques are required to be investigated to achieve this goal [98, 210, 283].

Testing

A serverless application consists of many small functions. These functions work together to accomplish the application’s functionality. Therefore, integration testing for these functions is a crucial issue to make sure that the application works properly [9, 84, 284].

Naming and addressing system

Users deploy functions to serverless cloud computing to solve problems. These functions cannot listen to network communications. The existing serverless cloud computing frameworks do not support this feature. Instead, they use third party services such as Amazon S3 to communicate with other functions. Therefore, finding the internet protocol (IP) address of a function by other functions and services is a challenging issue in serverless cloud computing [98].

Legacy systems

Legacy systems refer to old technologies, techniques, hardware, and software systems that are still in use. It should be possible to reach these systems from serverless cloud computing. Also, these systems might be required to be transferred to cloud computing. Therefore, more work needs to be done on the migration process and how the functions can be extracted from legacy systems to be deployed as serverless cloud functions [84, 119, 120, 210, 280].

Managing hybrid cloud

In a hybrid cloud, a developer may deploy an application to different clouds. For example, if some functions of an application are on a specific serverless cloud vendor and others are hosted on other public clouds, then managing these functions and their interactions is a challenging issue [84, 210, 280].

Lack of quality of service (QoS) support

Existing serverless platforms and frameworks do not provide users the control over the QoS of serverless functions [235]. Cold starts, queuing, and orchestration are the main reasons affecting the QoS in serverless computing [8].

Architecture complexity

A serverless application may consist of several functions; the number of functions increases the complexity of the architecture. Managing these functions and services related to the application also leads to a complex architecture [9].

Interactions tracking

Stateful requests are usually used by real-life applications. It means deployed systems keep track of the state of users’ interactions and store them on the server-side for further uses. However, in stateless serverless functions, it is not obvious how these functions will handle and manage the states of each user [210, 280].
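One common way to track interactions with stateless functions, shown here only as an illustrative assumption and not as a method from the cited papers, is to keep the state in an external store keyed by user. In the sketch, a dictionary stands in for that store, and `add_to_cart` is a hypothetical handler.

```python
STATE_STORE = {}  # stand-in for an external key-value store

def add_to_cart(event, store=STATE_STORE):
    """Stateless handler: load the user's state, update it, and write it back."""
    cart = list(store.get(event["user_id"], []))
    cart.append(event["item"])
    store[event["user_id"]] = cart
    return {"user_id": event["user_id"], "items": len(cart)}
```

The handler itself keeps nothing between invocations, so any instance can serve any user; the cost is an extra round trip to the store on every request.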

Concurrency management

Concurrency means a function can handle any number of requests whenever a function is invoked. For example, if a request has been made to a serverless function, the function will process that request. However, if another request has been made to that function and the function is still processing the previous request, then the serverless should provide another instance of that function to serve the new request [210, 280].
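The behavior described above can be sketched with a toy dispatcher that assumes each instance serves one request at a time. The class name `Dispatcher` and its methods are hypothetical and do not correspond to any platform's API.

```python
class Dispatcher:
    """Toy dispatcher: each function instance handles one request at a time."""

    def __init__(self):
        self.busy = set()
        self.idle = []
        self.created = 0

    def start(self):
        """Assign an incoming request to an idle instance, creating one if needed."""
        if self.idle:
            instance = self.idle.pop()
        else:
            self.created += 1
            instance = self.created
        self.busy.add(instance)
        return instance

    def finish(self, instance):
        """Mark an instance as idle again once its request completes."""
        self.busy.remove(instance)
        self.idle.append(instance)
```

A second request that arrives while the first is still running forces a new instance to be created, whereas a request arriving after `finish` reuses the existing one.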

Support for heterogeneous hardware

Existing serverless platforms may not support some specialized hardware such as graphics processing unit (GPU) and field programmable gate arrays (FPGAs). This is a challenging issue for vendors to provide support for heterogeneous hardware [210, 280].

Tools available for serverless computing (RQ8)

Nowadays, various providers strive to facilitate the adjustable use and allocation of machine resources on the cloud [9]. Likewise, plenty of supportive tools and services are aiding developers to more efficiently manage and deploy applications using serverless computing. Serverless computing is auto-scalable, reliable, and easily accessible [203]; for these reasons, big cloud providers such as Amazon, Microsoft, Google, IBM have realized the importance of offering frameworks, IDEs, software development kits (SDKs), function development kits (FDK), migrating mechanisms, logs, and monitoring tools to enhance and simplify the development, testing, deployment, and monitoring of serverless applications [17]. For instance, Amazon offers Cloud9 IDE for local deploying and testing [205].

Apart from the cloud providers’ specific tools, plenty of third-party tools exist for the developers. With the concept of these tools, developers can build and deploy applications on multi-cloud providers. Developers are also able to control platforms and resources by programming. The advantages of this are linking the applications with auto-scaling controllers and including advanced self-mechanisms into the code to automatically configure, secure, optimize, and recover the cloud applications. The core advantage of this feature is the acceleration in applying changes to the application environment [272].

There are several tools available to model serverless applications, which are based on deployment models that are either imperative or declarative. The imperative model defines the execution steps to accomplish a specific deployment task, while the declarative model describes the structure of a desired application deployment. However, to fully benefit from employing a serverless architecture, cloud providers should address issues that have arisen with the use of a serverless paradigm. For instance, debugging tools are unable to track and identify the exact reason behind errors [44], as most of them are limited to what cloud providers offer [179]. Although many powerful tools have been mentioned in this study and can be used in serverless computing in real scenarios, there is still a great opportunity to develop further tools and services.
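The difference between the two deployment models can be sketched with a dictionary standing in for the cloud environment. The function names, the `memory_mb` field, and the `reconcile` helper are hypothetical and do not follow any particular tool's format.

```python
def deploy_imperative(cloud):
    """Imperative: spell out each deployment step in order."""
    cloud["functions"] = {}
    cloud["functions"]["resize"] = {"memory_mb": 256}
    cloud["functions"]["notify"] = {"memory_mb": 128}
    return cloud

# Declarative: describe the desired end state only.
DESIRED = {"functions": {"resize": {"memory_mb": 256}, "notify": {"memory_mb": 128}}}

def reconcile(cloud, desired):
    """Derive and apply the steps needed to make `cloud` match `desired`."""
    current = cloud.setdefault("functions", {})
    steps = []
    for name, spec in desired["functions"].items():
        if name not in current:
            steps.append(("create", name))
        elif current[name] != spec:
            steps.append(("update", name))
        current[name] = dict(spec)
    for name in sorted(set(current) - set(desired["functions"])):
        steps.append(("delete", name))
        del current[name]
    return steps
```

With the declarative form, the tool rather than the developer works out which steps are needed, so applying the same description twice results in no further changes.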

Migration of monolithic applications to serverless computing (RQ9)

The nature of most existing applications is monolithic. Monolithic applications have several drawbacks; they are characterized by continuous growth in complexity and size over time.

The bigger size of the monolithic applications leads to slower startup time. Moreover, novice developers face difficulties in digesting the traditional programming paradigm. Economically, monolithic systems take more effort to be developed and debugged. Furthermore, integrating the latest technological development into monolithic systems is a tough and expensive process. Generally, monolithic applications are designed to be tightly coupled – the entire application will be unable to run or compile if one component is missing or fails [128]. It is also difficult to scale the application when multiple components have limited resources.

Another drawback is that updating any component will require redeployment of the entire project. The migration process to serverless computing involves transferring the legacy application code to serverless functions. This process could be more efficient and functional in smaller applications [76].

The key challenge in migration is extracting serverless functions from the monolithic systems. There are several approaches to accomplish this task; one of them is lift and shift [205]. This technique transfers the whole infrastructure to the cloud; however, it also brings the problems already existing in the source to the destination. In [205], the authors proposed toLambda to automatically refactor, test, and deploy monolithic applications (Java) as microservices (AWS Lambda Node.js). Rebuilding the legacy application from scratch, in contrast, is recommended for applications that no longer depend on the existing cloud services [130].

However, not all applications are suitable for migration to serverless computing [76, 128]; therefore, the first important aspect to be considered before rebuilding the applications is whether it would save money [188]. For such cases, newly desired features could be implemented and added via serverless computing as an extension to the current systems [128].

The other approach is to refactor the entire legacy code into FaaS services. During the migration phase, it is crucial to address the coupling of the systems not only in the application logic but also in the databases, as more functions will call the same database. However, migrating the server-side while keeping the user interface could lead to problems. Moreover, the client cannot obtain integrated data by a single request. As the functions are decoupled into smaller entities, the server is unable to aggregate data from different entities. Thus, it is the client’s responsibility to call the necessary entities to achieve this task [76].

Future directions of research (RQ10)

As the evolution of serverless computing is relatively new, there are several research paths available to be focused on as follows:

Function startup

One of the major research opportunities is overcoming the cold start problem without affecting the primary feature of serverless, which is scaling to zero [160, 188]. The first call of a function requires initializing the required libraries, which causes a cold start. To bypass this, the computing resources are kept warm for a certain time, so that upcoming requests are handled faster. This could be achieved by enhancing scheduling policies and developing more accurate function performance measurements [86]. Serverless providers follow their own approaches to keep the functions in the warm pool. However, most of them are based on the number of requests within a certain time. Thus, if a function is not called frequently, it will suffer again from the cold start.

A few studies, such as [272], suggested a periodic event scheduler for Kotless (a serverless framework for Kotlin) that triggers a list of warm functions every few minutes. The authors of the study claimed that this reduces cold starts without incurring extra costs. In contrast, [233] argues that pre-warming methods use resources unnecessarily on idle containers. Researchers are still working to avoid cold starts by reducing function startup delays via optimizing compute resources [11].

Recycling and rebalancing minutes and hours of idle runtime is an expensive process for cloud providers. Therefore, reducing the cold start penalties will help cloud providers in the first place and hence customers. The authors in [202] proposed FaaStest, an autonomous approach based on machine learning to capture the function call behavior and then dynamically select the optimal ones. This technique could reduce the cold start by 90%. A strategy to predict function invocation times and warm the functions using a fine-grained regression method has also been proposed [285]. However, overcoming the issue of function startups is still considered a research direction that requires further investigation.

QoS

Keeping a guaranteed QoS level in the service level agreement (SLA), which describes the minimum service level offered by the service providers [166], is a major obstacle for cloud providers to offer optimal performance metrics [167, 207]. However, serverless frameworks should consider the objectives of both providers and users [242]; customers and developers have little or no QoS support over the functions [236]. In addition, the auto-scaling feature lacks QoS guarantees. This lack of QoS affects the performance of serverless applications. Increasing response time leads to decreasing the QoS level [207]. It also raises the cost of the service [236]. Therefore, achieving ideal resource allocation management is a complicated and challenging task, as several objectives should be fulfilled together [209]. Hence, it is essential to provide more efficient QoS management of functions through auto-scaling without degrading the fault-tolerance features or increasing the cost.

Pricing

Pricing is crucial for both customers and cloud providers. However, there is a shortage of pricing models, as there is an imbalance in needs between serverless providers, developers, and service end-users [236]. The pricing scheme for most cloud providers is based on the number of function requests and the execution time, that is, the quantity of consumed resources [123, 200]. Currently, FaaS is less expensive when functions are CPU-bound than when they are I/O-bound. Moreover, services that dynamically adjust resource consumption are unable to predict the optimal computing technology. It is crucial to implement solutions that offer cost-effective computing resources. FaaStest reduced the cost by 50% via learning the behavioral pattern of functions using machine learning [202]. Price estimation has a great impact on selecting the optimal provider. Therefore, there should be more research on developing tools to predict the pricing in advance.

Legacy systems

Since the serverless emergence, researchers are working on the open question of how to decompose legacy systems into FaaS without degrading performance [208]. Several works have been done on migrating to FaaS [76, 130, 286]. The currently available automated tools for migrating legacy code into FaaS are not fully practical due to the remaining manual work that needs to be done [17]. Therefore, finding optimal automatic migration solutions for existing legacy systems is an interesting research direction [130]. Moreover, research on tools for checking whether a legacy system will fit the serverless paradigm is a crucial line. Also, developing and enhancing automatic and semi-automatic analysis strategies based on artificial intelligence could be another future research field.

Debugging, testing, and benchmarking

The available tools for testing, debugging, and deployment are immature, which prevents some developers from entering the serverless environment. The shortage of tools in FaaS is a core problem, particularly the testing tools [17]. Moreover, most FaaS environments lack powerful local emulation platforms for testing. Therefore, developers mostly depend on the server-side, which is expensive. Developers need to be assured that adequate testing tools exist before diving into the serverless world. A challenging aspect in benchmarking is the lack of information due to the heterogeneity of the cloud provider data center: hardware, software, and configurations [287]. Additionally, benchmarking FaaS platforms should take advantage of analyzing the cloud services, which is hindered by limited accessible measurements and hidden modification of services over time [55]. Thus, it is essential to have transparent, fair, and standardized benchmarking tools available for developers.

Threats to validity

Several threats might affect the validity of literature mapping studies. In this paper, established guidelines were followed to mitigate the following threats to validity:

- Coverage of research questions: Not all up-to-date research aspects of serverless computing might be included in this study. To mitigate this threat, all the authors took part in brainstorming sessions to determine the most current research questions in the area.

- Coverage of related papers: Retrieving all the related studies on serverless computing cannot be guaranteed. In this study, various literature databases were employed; moreover, a search method based on different terms and synonyms was followed by all the authors to identify the related studies.

- Paper inclusion/exclusion criteria: Individual bias and interpretation could affect how the criteria are applied. Therefore, the agreement of all authors was required to include or exclude a paper.

- Accuracy of data extraction: Individual experience affects how data are extracted; therefore, online meetings were held after each author completed the data extraction process. During the meetings, the outcomes of each author were compared with the other findings to identify differences and reach a final consensus.

- Reproducibility of the study: Whether other researchers could obtain similar outcomes is another threat. To address it, the research methodology describes in detail the steps and actions conducted in this paper (as shown in “Research methodology” section).

Conclusions

The contributions of the work presented in this paper are threefold: (a) a methodical review of related literature on the topic of serverless computing, to address the lack of compiled information on the state of the art of the field; (b) a comparison of the platforms and tools used in serverless computing; (c) an extensive analysis of the differences, benefits, and issues related to serverless computing, to provide a more complete understanding of the topic. Given the fast evolution of and growing interest in the field, this survey focused on gathering the most prominent trends and outcomes of serverless computing, as described in recent research. This survey could significantly reduce ambiguity and lower the entry barrier for novice developers adapting to the serverless environment. Furthermore, the findings presented in this study could be of great value for future researchers interested in further investigating serverless computing. Finally, it is worth mentioning that the interest that both industry and academia invest in studying, developing, and implementing serverless tools in the forthcoming years could help maximize the potential that serverless computing could bring to the IT community.

Availability of data and materials

Not applicable.

References

Großmann M, Ioannidis C, Le DT (2019) Applicability of serverless computing in fog computing environments for iot scenarios In: Proceedings of the 12th IEEE/ACM International Conference on Utility and Cloud Computing Companion (UCC ’19 Companion), 29–34. Association for Computing Machinery, New York. https://doi.org/10.1145/3368235.3368834.

Boza EF, Abad CL, Villavicencio M, Quimba S, Plaza JA (2017) Reserved, on demand or serverless: Model-based simulations for cloud budget planning In: 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM), 1–6. https://doi.org/10.1109/ETCM.2017.8247460.

Villamizar M, Garcés O, Ochoa L, Castro H, Salamanca L, Verano M, Casallas R, Gil S, Valencia C, Zambrano A, Lang M (2017) Cost comparison of running web applications in the cloud using monolithic, microservice, and aws lambda architectures. SOCA 11(2):233–247. https://doi.org/10.1007/s11761-017-0208-y.

Jonas E, Schleier-Smith J, Sreekanti V, Tsai C-C, Khandelwal A, Pu Q, Shankar V, Carreira J, Krauth K, Yadwadkar N, Gonzalez JE, Popa RA, Stoica I, Patterson DA (2019) Cloud Programming Simplified: A Berkeley View on Serverless Computing. http://arxiv.org/abs/1902.03383. Accessed 6 Jan 2021.

Geng X, Ma Q, Pei Y, Xu Z, Zeng W, Zou J (2018) Research on early warning system of power network overloading under serverless architecture In: 2018 2nd IEEE Conference on Energy Internet and Energy System Integration (EI2), 1–6. https://doi.org/10.1109/EI2.2018.8582355.

Kulkarni SG, Liu G, Ramakrishnan KK, Wood T (2019) Living on the edge: Serverless computing and the cost of failure resiliency In: 2019 IEEE International Symposium on Local and Metropolitan Area Networks (LANMAN), 1–6. https://doi.org/10.1109/LANMAN.2019.8846970.

Baldini I, Castro P, Chang K, Cheng P, Fink S, Ishakian V, Mitchell N, Muthusamy V, Rabbah R, Slominski A, Suter P (2017) Serverless Computing: Current Trends and Open Problems In: Research Advances in Cloud Computing, 1–20. Springer, Singapore. https://doi.org/10.1007/978-981-10-5026-8_1.

Adzic G, Chatley R (2017) Serverless computing: Economic and architectural impact In: Proceedings of the 2017 11th Joint Meeting on Foundations of Software Engineering (ESEC/FSE 2017), 884–889. Association for Computing Machinery, New York. https://doi.org/10.1145/3106237.3117767.

Jambunathan B, Yoganathan K (2018) Architecture decision on using microservices or serverless functions with containers In: 2018 International Conference on Current Trends Towards Converging Technologies (ICCTCT), 1–7. https://doi.org/10.1109/ICCTCT.2018.8551035.

Wolski R, Krintz C, Bakir F, George G, Lin W-T (2019) Cspot: Portable, multi-scale functions-as-a-service for iot In: Proceedings of the 4th ACM/IEEE Symposium on Edge Computing (SEC ’19), 236–249. Association for Computing Machinery, New York. https://doi.org/10.1145/3318216.3363314.

Yussupov V, Breitenbücher U, Leymann F, Wurster M (2019) A systematic mapping study on engineering function-as-a-service platforms and tools In: Proceedings of the 12th IEEE/ACM International Conference on Utility and Cloud Computing (UCC ’19), 229–240. Association for Computing Machinery, New York. https://doi.org/10.1145/3344341.3368803.

Brenner S, Kapitza R (2019) Trust more, serverless In: Proceedings of the 12th ACM International Conference on Systems and Storage (SYSTOR ’19), 33–43. Association for Computing Machinery, New York. https://doi.org/10.1145/3319647.3325825.

Kuhlenkamp J, Werner S (2018) Benchmarking faas platforms: Call for community participation In: 2018 IEEE/ACM International Conference on Utility and Cloud Computing Companion (UCC Companion), 189–194. https://doi.org/10.1109/UCC-Companion.2018.00055.

Somma G, Ayimba C, Casari P, Romano SP, Mancuso V (2020) When less is more: Core-restricted container provisioning for serverless computing In: IEEE INFOCOM 2020 - IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), 1153–1159. https://doi.org/10.1109/INFOCOMWKSHPS50562.2020.9162876.

Sewak M, Singh S (2018) Winning in the era of serverless computing and function as a service In: 2018 3rd International Conference for Convergence in Technology (I2CT), 1–5. https://doi.org/10.1109/I2CT.2018.8529465.

van Eyk E, Toader L, Talluri S, Versluis L, Uță A, Iosup A (2018) Serverless is more: From paas to present cloud computing. IEEE Internet Comput 22(5):8–17. https://doi.org/10.1109/MIC.2018.053681358.

Leitner P, Wittern E, Spillner J, Hummer W (2019) A mixed-method empirical study of function-as-a-service software development in industrial practice. J Syst Softw 149:340–359. https://doi.org/10.1016/j.jss.2018.12.013.

Feng L, Kudva P, Da Silva D, Hu J (2018) Exploring serverless computing for neural network training In: 2018 IEEE 11th International Conference on Cloud Computing (CLOUD), 334–341. https://doi.org/10.1109/CLOUD.2018.00049.

Ao L, Izhikevich L, Voelker GM, Porter G (2018) Sprocket: A serverless video processing framework In: Proceedings of the ACM Symposium on Cloud Computing (SoCC ’18), 263–274. Association for Computing Machinery, New York. https://doi.org/10.1145/3267809.3267815.

Werner S, Kuhlenkamp J, Klems M, Müller J, Tai S (2018) Serverless big data processing using matrix multiplication as example In: 2018 IEEE International Conference on Big Data (Big Data), 358–365. https://doi.org/10.1109/BigData.2018.8622362.

Al-Ali Z, Goodarzy S, Hunter E, Ha S, Han R, Keller E, Rozner E (2018) Making serverless computing more serverless In: 2018 IEEE 11th International Conference on Cloud Computing (CLOUD), 456–459. https://doi.org/10.1109/CLOUD.2018.00064.

Pérez A, Risco S, Naranjo DM, Caballer M, Moltó G (2019) On-premises serverless computing for event-driven data processing applications In: 2019 IEEE 12th International Conference on Cloud Computing (CLOUD), 414–421. https://doi.org/10.1109/CLOUD.2019.00073.

Glikson A, Nastic S, Dustdar S (2017) Deviceless edge computing: Extending serverless computing to the edge of the network In: Proceedings of the 10th ACM International Systems and Storage Conference (SYSTOR ’17). Association for Computing Machinery, New York. https://doi.org/10.1145/3078468.3078497.

Al-Ameen M, Spillner J (2019) A systematic and open exploration of faas research In: Proceedings of the European Symposium on Serverless Computing and Applications (CEUR Workshop Proceedings; 2330), 30–35. CEUR-WS, Zurich. https://doi.org/10.21256/zhaw-3271.

Alqaryouti O, Siyam N (2018) Serverless computing and scheduling tasks on cloud: A review. Am Sci Res J Eng Technol Sci (ASRJETS) 40(1):235–247.

Taibi D, El Ioini N, Pahl C, Niederkofler J (2020) Patterns for Serverless Functions (Function-as-a-Service): A Multivocal Literature Review In: Proceedings of the 10th International Conference on Cloud Computing and Services Science - Volume 1: CLOSER, 181–192. https://doi.org/10.5220/0009578501810192.

Hellerstein JM, Faleiro J, Gonzalez JE, Schleier-Smith J, Sreekanti V, Tumanov A, Wu C (2018) Serverless Computing: One Step Forward, Two Steps Back. http://arxiv.org/abs/1812.03651. Accessed 4 Oct 2021.

Rajan AP (2020) A review on serverless architectures - function as a service (faas) in cloud computing. Telecommun Comput Electron Control 18(1):530–537. https://doi.org/10.12928/telkomnika.v18i1.12169.

Sadaqat M, Colomo-Palacios R, Knudsen LES (2018) Serverless Computing: A Multivocal Literature Review. NOKOBIT - Norsk Konferanse for Organisasjoners Bruk Av Informasjonsteknologi 26(1):1–13.

Scheuner J, Leitner P (2020) Function-as-a-service performance evaluation: A multivocal literature review. J Syst Softw 170:110708. https://doi.org/10.1016/j.jss.2020.110708.

Fox GC, Ishakian V, Muthusamy V, Slominski A (2017) Status of Serverless Computing and Function-as-a-Service (FaaS) in Industry and Research. arXiv e-prints:1708.08028. http://arxiv.org/abs/1708.08028. Accessed 6 Jan 2021.

Hassan HB, Barakat SA, Sarhan QI (2021) Serverless Literature Dataset. Zenodo. https://doi.org/10.5281/zenodo.4660553.

Pedreira O, García F, Brisaboa N, Piattini M (2015) Gamification in software engineering - a systematic mapping. Inf Softw Technol 57:157–168.

Lopez-Herrejon RE, Linsbauer L, Egyed A (2015) A systematic mapping study of search-based software engineering for software product lines. Inf Softw Technol 61:33–51.

Bila N, Dettori P, Kanso A, Watanabe Y, Youssef A (2017) Leveraging the serverless architecture for securing linux containers In: 2017 IEEE 37th International Conference on Distributed Computing Systems Workshops (ICDCSW), 401–404. https://doi.org/10.1109/ICDCSW.2017.66.

Chang KS, Fink SJ (2017) Visualizing serverless cloud application logs for program understanding In: 2017 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), 261–265. https://doi.org/10.1109/VLHCC.2017.8103476.

Ishakian V, Muthusamy V, Slominski A (2018) Serving deep learning models in a serverless platform In: 2018 IEEE International Conference on Cloud Engineering (IC2E), 257–262. https://doi.org/10.1109/IC2E.2018.00052.

Baldini I, Cheng P, Fink SJ, Mitchell N, Muthusamy V, Rabbah R, Suter P, Tardieu O (2017) The serverless trilemma: Function composition for serverless computing In: Proceedings of the 2017 ACM SIGPLAN International Symposium on New Ideas, New Paradigms, and Reflections on Programming and Software (Onward! 2017), 89–103. Association for Computing Machinery, New York. https://doi.org/10.1145/3133850.3133855.

Kanso A, Youssef A (2017) Serverless: Beyond the cloud In: Proceedings of the 2nd International Workshop on Serverless Computing (WoSC ’17), 6–10. Association for Computing Machinery, New York. https://doi.org/10.1145/3154847.3154854.

Koller R, Williams D (2017) Will serverless end the dominance of linux in the cloud? In: Proceedings of the 16th Workshop on Hot Topics in Operating Systems (HotOS ’17), 169–173. Association for Computing Machinery, New York. https://doi.org/10.1145/3102980.3103008.

Mukhi NK, Prabhu S, Slawson B (2017) Using a serverless framework for implementing a cognitive tutor: Experiences and issues In: Proceedings of the 2nd International Workshop on Serverless Computing (WoSC ’17), 11–15. Association for Computing Machinery, New York. https://doi.org/10.1145/3154847.3154852.

Nadgowda S, Bila N, Isci C (2017) The less server architecture for cloud functions In: Proceedings of the 2nd International Workshop on Serverless Computing (WoSC ’17), 22–27. Association for Computing Machinery, New York. https://doi.org/10.1145/3154847.3154850.

Klimovic A, Wang Y, Stuedi P, Trivedi A, Pfefferle J, Kozyrakis C (2018) Pocket: Elastic ephemeral storage for serverless analytics In: Proceedings of the 12th USENIX Conference on Operating Systems Design and Implementation (OSDI ’18), 427–444. USENIX Association, USA.

Yan M, Castro P, Cheng P, Ishakian V (2016) Building a chatbot with serverless computing In: Proceedings of the 1st International Workshop on Mashups of Things and APIs (MOTA ’16), 1–4. Association for Computing Machinery, New York. https://doi.org/10.1145/3007203.3007217.

Barcelona-Pons D, García-López P, Ruiz A, Gómez-Gómez A, París G, Sánchez-Artigas M (2019) Faas orchestration of parallel workloads In: Proceedings of the 5th International Workshop on Serverless Computing (WOSC ’19), 25–30. Association for Computing Machinery, New York. https://doi.org/10.1145/3366623.3368137.