Abstract

This paper focuses on the stochastically exponential synchronization problem for a class of neural networks with time-varying delays (TDs) and Markov jump parameters (MJPs). To derive a tighter bound on reciprocally convex quadratic terms, we provide an improved reciprocally convex combination inequality (RCCI), which includes some existing ones as particular cases. We construct an eligible stochastic Lyapunov–Krasovskii functional to capture more information about TDs, triggering signals, and MJPs. Based on a well-designed event-triggered control scheme, we derive several novel stability criteria for the underlying systems by employing the new RCCI and other analytical techniques. Finally, we present two numerical examples to show the validity of our methods.

1 Introduction

Neural networks (NNs) have attracted great attention in recent decades owing to their extensive applications in many fields, such as optimization and signal processing [1–5], pattern recognition [6–10], parallel computing [11–15], and so forth. In implementing such applications, time-varying delays (TDs) are inevitably encountered due to the inherent communication time among neurons, the finite switching speed of amplifiers [16–19], and other reasons. Furthermore, the structure and parameters of NNs are often subject to random abrupt variations caused by sudden changes in the external environment, information latching [20], and so on. Markov jump neural networks (MJNNs) with TDs, as a special kind of hybrid system, are well suited to describing those complicated dynamic characteristics of NNs [21–24]. Stability is a well-known important property of systems, but the existence of TDs and random abrupt variations often leads to chaos, oscillation, and even instability [25]. In addition, fast convergence of the networks is essential for real-time computation, and the exponential convergence rate is generally used to determine the speed of neural computations [26]. Thus it is of great theoretical and practical importance to study the exponential stability of MJNNs with TDs, and many fruitful results have been reported in the literature [27–32].

On the other hand, since the pioneering work [33], the problem of chaos synchronization for NNs has gained considerable attention in recent decades [34–36], and many effective control methods have been designed to achieve exponential synchronization of MJNNs with TDs, such as adaptive feedback control [37–40], sampled-data control [41–44], quantized output control [45–47], pinning control [48], impulsive control [49–56], and so on. However, in these control methods, the data packets are transmitted periodically, which implies that many “unnecessary” data are also sent frequently. As a result, network resources may not be utilized effectively and reasonably, which is undesirable in practice, especially when the network resources are limited. To reduce the “unnecessary” waste of network resources, the event-triggered control scheme (ETCS) was proposed [57] and developed [58–61]. [58] focused on the issue of exponential synchronization for chaotic delayed NNs by a hybrid ETCS. [59] studied the synchronization problems of coupled switched delayed NNs with communication delays via a discrete ETCS. By using an ETCS with discontinuous sign terms, [60] obtained global synchronization criteria for memristor NNs. [61] discussed the event-triggered synchronization problem for semi-Markov NNs with TDs by using a generalized free-weighting-matrix integral inequality. However, to the best of the author's knowledge, the issue of event-triggered exponential synchronization for MJNNs with TDs has not been fully investigated, and there remains much room for improvement.

It is worth mentioning that in the literature [30–32, 34–36, 39, 40, 43, 44, 47, 48, 58–61], reciprocally convex quadratic terms (RCQTs), that is, \({\mathcal{G}}_{n} (\alpha _{i} ) =\frac{1}{\alpha _{i}} \zeta _{i}^{T}(t) \Xi _{i} \zeta _{i}(t)\), \(\alpha _{i} \in (0,1)\), \(i =1, 2, 3, \ldots , n\), are often encountered when processing integral quadratic terms such as \(- \int _{t-\tau (t)}^{t} {\dot{\xi }^{T}(s) \Phi \dot{\xi }(s)\,ds}\) and \(- \int _{t-\tau }^{t-\tau (t)} {\dot{\xi }^{T}(s) \Phi \dot{\xi }(s)\,ds}\), and they play a crucial role in deriving less conservative stability criteria. The reciprocally convex combination inequality (RCCI), as a powerful tool to estimate the bound of RCQTs, has been widely applied to the stability analysis and control synthesis of various systems with TDs since it was introduced in [62]. The RCCI in [62] for the case \(n =2\) was then improved in [63–65]. However, as reported in [65], it is still challenging to derive an RCCI of the type in [63–65] if \({\mathcal{G}}_{n} (\alpha _{i} )\) includes three or more terms, that is, \(n\ge 3\). Motivated by the above discussion, in this paper we consider the event-triggered exponential synchronization problem for MJNNs with TDs. First, we provide a more general RCCI, which includes several existing ones as particular cases. Second, we construct an eligible stochastic Lyapunov–Krasovskii functional (LKF) to capture more information about TDs and MJPs. Third, based on this LKF and with the help of a well-designed ETCS, we derive two novel exponential synchronization criteria for the underlying systems by using the new RCCI and other analytical techniques. Finally, we verify the effectiveness of our methods by two numerical examples.

Notations: Let \(\mathbb{Z}_{+}\) denote the set of nonnegative integers, \(\mathbb{R}\) the set of real numbers, \(\mathbb{R}^{n}\) the n-dimensional real space equipped with the Euclidean norm \(\Vert \cdot \Vert \), \(\mathbb{R}^{m \times n}\) the set of all \({m \times n}\) real matrices, and \(\mathbb{S}_{+}^{n}\) and \(\mathbb{S}^{n}\) the sets of symmetric positive definite and symmetric matrices of \(\mathbb{R}^{n \times n}\), respectively. The symbol “∗” in a block matrix signifies the symmetric terms; \(col \{ \cdots \} \) and \(diag \{ \cdots \} \) denote a column vector and a diagonal matrix, respectively. For any matrix \(X \in \mathbb{R}^{n \times n}\), \(\mathbb{H} \{ X \} \) means \(X+X^{T} \), and \(\lambda _{\max }(X)\) and \(\lambda _{\min }(X)\) stand for the maximum and minimum eigenvalues of X, respectively. The zero and identity matrices of appropriate dimensions are denoted by 0 and I, respectively; \(\bar{e}_{i} = (0, \ldots , 0, \underbrace{I}_{i}, 0, \ldots , 0)\) (\({i} =1,\ldots ,n\)).

2 Problem statement and preliminaries

Consider the following master–slave MJNNs with TDs:

where \(x(t)=col\{x_{1}(t), \ldots , x_{n}(t)\} \in \mathbb{R} ^{n}\) and \(y(t)=col\{y_{1}(t), \ldots , y_{n}(t)\} \in \mathbb{R} ^{n}\) are the neuron state vectors of master system (1) and slave system (2), respectively, \(\phi (\theta )\) and \(\psi (\theta )\) are the initial values of systems (1) and (2), respectively, and \(g(\cdot ) = col \{g_{1}(\cdot ), \ldots , g_{n}(\cdot )\} \in \mathbb{R} ^{n}\) is the nonlinear neuron activation function satisfying

where \(\lambda _{l}^{-}\), \(\lambda _{l}^{+}\) are known scalars, which can be positive, negative, or zero; \(\mathcal{{U}}(t) \in {\mathbb{R}^{n}}\) is the control input of the slave system (2), \(\mathcal{B}_{\sigma (t)}\) is a positive diagonal matrix, \(\mathcal{A}_{\sigma (t)}\) and \(\mathcal{D}_{\sigma (t)}\) are the connection weight matrix and the time-varying delay connection weight matrix, respectively, and \(\{ \sigma (t), t \ge 0 \} \) is a continuous-time Markov process taking values in a finite set \(\mathcal{N} = \{ 1,2, \ldots , N \}\) and governed by

where \(\Delta \ge 0\), \(\lim_{\Delta \to 0} {{o ( \Delta )} / \Delta } = 0\), \({\pi _{ij}} \ge 0\) for \(i \ne j \in \mathcal{N}\) is the transition rate from mode i at time t to mode j at time \(t + \Delta \), and \({\pi _{ii}} = - \sum_{j = 1, j \ne i}^{{N}} {{\pi _{ij}}} \); \({\delta }(t)\) represents the time-varying delay and satisfies

where δ and μ are known constants. To simplify some notations, for each \(\sigma (t) = {i} \in {\mathcal{N}}\), we denote \(\mathcal{{B}}_{\sigma (t)} = \mathcal{{B}}_{i}\), \(\mathcal{{A}}_{\sigma (t)}= { \mathcal{{A}}}_{i}\), and \(\mathcal{{D}}_{\sigma (t)} = \mathcal{{D}}_{i}\). Let \(r(t) = y(t) - x(t)\) be the error state. The error dynamics can be described by

where \(f({r(\cdot )})= g({y(\cdot )})- g({x(\cdot )})\) and \(\varphi (\theta ) =\phi (\theta ) -\psi (\theta )\). From condition (3) we can readily obtain that the function \(f_{i}(\cdot )\) satisfies

To mitigate unnecessary waste of network resources, we next introduce a discrete ETCS. Assume that the system state is sampled periodically and that the sampling sequence is described by the set \(\Pi _{s}= \{0, h, 2h, \ldots , kh\}\) with \(k\in \mathbb{Z}_{+}\), where h is a constant sampling period, and that the event-triggered sequence is described by the set \(\Pi _{e} = \{0, b_{1} h, b_{2}h, \ldots , b_{k} h\} \subseteq \Pi _{s}\) with \(b_{k}\in \mathbb{Z}_{+}\). To decide whether the current sampled state is sent to the controller, we adopt the following event-triggered condition:

where \(l_{m} = {\min }\{{l} | e^{T}({b_{k}}h +{l}h) {\Omega }e({b_{k}}h +{l}h) \ge {\lambda } r^{T}({b_{k}}h ){\Omega } r({b_{k}}h )\}\) with \(l \in \mathbb{Z}_{+} \), \(\lambda \in [0, 1)\) is the threshold, \(\Omega \in \mathbb{S}_{+}^{n}\) is a weighting matrix to be determined, and \(e({b_{k}}h +{l}h) = r({b_{k}}h +lh) - r({b_{k}}h )\) is the error between the state at the current sampling instant and the state at the latest transmission instant. We define the following event-triggered state-feedback controller:

Similarly to [66], to describe the ETCS clearly, the triggering interval \({\mathbb{I}}_{k}\) can be decomposed as \(\bigcup_{l=0}^{l_{m}-1} [(b_{k} + l) h , (b_{k}+l+1)h )\). Define the function

It is easy to see that \(\eta (t)\) is a piecewise linear function and satisfies \(0 \le \eta (t) \le \eta \), \(\dot{\eta }(t)=1\). By combining (6), (9), and (10), for all \(t \in {\mathbb{I}}_{k}\), we have

We recall the following definition and lemmas, which play a key role in obtaining our main results.

Definition 2.1

([43])

System (2) is said to be stochastically exponentially synchronized in the mean square sense with system (1) if system (11) is stochastically exponentially stable in the mean square sense with convergence rate \(\alpha > 0\), that is, there exists \(M >0\) such that for all \(t \ge t_{0}\),

Lemma 2.1

([67])

For a matrix \(\Xi \in \mathbb{S}_{+}^{n}\), scalars \(a< b\), and a differentiable vector function \(\varpi (s): [a,b] \to \mathbb{R}^{n}\), we have the following inequality:

where \(\Upsilon =\int _{a}^{b} {\varpi (s)\,ds } - \frac{2}{{b - a}}\int _{a}^{b} {\int _{\theta }^{b} {\varpi (s)\,ds \,d\theta } }\).
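As a numerical sanity check, the sketch below assumes that Lemma 2.1 takes the Wirtinger-type form \(\int _{a}^{b} \varpi ^{T}(s) \Xi \varpi (s)\,ds \ge \frac{1}{b-a} ( \int _{a}^{b} \varpi (s)\,ds )^{T} \Xi ( \int _{a}^{b} \varpi (s)\,ds ) + \frac{3}{b-a} \Upsilon ^{T} \Xi \Upsilon \), which is consistent with the definition of Υ above but is an assumption rather than a quotation of [67]. The function names `trapezoid` and `wirtinger_gap` are illustrative only.

```python
import numpy as np

def trapezoid(y, x):
    """Composite trapezoidal rule along axis 0 (y may be 1-D or 2-D)."""
    dx = np.diff(x)
    mid = 0.5 * (y[1:] + y[:-1])
    return np.tensordot(dx, mid, axes=(0, 0))

def wirtinger_gap(varpi, Xi, a, b, num=4001):
    """Left side minus right side of the assumed bound; expected to be >= 0."""
    s = np.linspace(a, b, num)
    w = np.array([varpi(si) for si in s])            # samples, shape (num, n)
    lhs = trapezoid(np.einsum("ki,ij,kj->k", w, Xi, w), s)
    int_w = trapezoid(w, s)                          # integral of varpi over [a, b]
    # By Fubini, the double integral in Upsilon equals int_a^b (s - a) varpi(s) ds.
    dbl = trapezoid((s - a)[:, None] * w, s)
    upsilon = int_w - 2.0 / (b - a) * dbl
    rhs = (int_w @ Xi @ int_w + 3.0 * upsilon @ Xi @ upsilon) / (b - a)
    return float(lhs - rhs)
```

For a vector function that is affine in s the gap vanishes up to quadrature error, because such a function lies in the span of the first two Legendre polynomials on \([a,b]\); for other functions the gap is strictly positive.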

Lemma 2.2

([62])

For scalars \(\alpha _{1}, \alpha _{2} \in (0,1)\) satisfying \(\alpha _{1} + \alpha _{2} = 1\) and matrices \(R_{1}, R_{2} \in \mathbb{S}_{+}^{n}\) and \(Y \in \mathbb{R}^{n\times n}\), we have the following inequality:

Lemma 2.3

For given matrices \(\mathcal{W}_{i} \in \mathbb{S}_{+}^{n}\) and scalars \(\alpha _{i} \in (0,1)\) with \(\sum_{i=1}^{n}\alpha _{i} =1\), if there exist matrices \(\mathcal{Y}_{i} \in \mathbb{S}^{n}\) and \(\mathcal{Y}_{ij} \in \mathbb{R}^{n \times n}\) (\(i, j =1, 2, \ldots , n\), \(i < j\)) such that

then we have the following inequality for any vectors \(\zeta _{i}(t) \in {\mathbb{R}}^{n}\):

where \(\bar{\mathcal{W}}_{i}={\mathcal{W}}_{i} - 2{\mathcal{Y}}_{i}\), \(\bar{\mathcal{W}}_{j} ={\mathcal{W}}_{j} - 2{\mathcal{Y}}_{j}\), and \(\hat{\mathcal{W}}_{i} ={\mathcal{W}}_{i} +(1-{\alpha _{i}}){{ \mathcal{Y}}_{i}}\).

Proof

Let \({\mathcal{G}}_{n} (\alpha _{i} ) =\sum_{i=1}^{n} \frac{1}{\alpha _{i}}\zeta _{i}^{T}(t) {\mathcal{W}}_{i} \zeta _{i}(t)\). We can obtain

where \(\mathcal{G}_{w} =\frac{{\alpha }_{j}}{{\alpha }_{i}} {\zeta _{i}^{T}(t)} \mathcal{W}_{i} \zeta _{i}(t) + \frac{{\alpha }_{i}}{{\alpha }_{j}} { \zeta _{j}^{T}(t)} \mathcal{W}_{j} \zeta _{j}(t)\).

For \(\alpha _{i} \in (0,1)\), it follows from (15) that

By employing the Schur complement to (18) we have

Using the Schur complement again, from (19) it follows that

Pre- and postmultiplying inequality (20) by \(col \{\sqrt{{\alpha }_{j}/{\alpha }_{i}} \zeta _{i}(t), \sqrt{{\alpha }_{i}/{\alpha }_{j}} \zeta _{j}(t), - \sqrt{{\alpha }_{i}/{\alpha }_{j}} \zeta _{j}(t), - \sqrt{{\alpha }_{j}/{\alpha }_{i}} \zeta _{i}(t) \}\), we have

Combining (17)–(21), we can derive (16). The proof is completed. □

Remark 2.1

It is well known that the RCCI in [62] with \(n =2\) has been improved by the ones in [63–65], because the latter yield a much tighter bound on the RCQTs \({\mathcal{G}}_{2} (\alpha _{i} )\). However, as reported in [65], it is still challenging to derive an RCCI of the type in [63–65] if \({\mathcal{G}}_{n} (\alpha _{i} )\) includes three or more terms, that is, \(n\ge 3\). The RCCI in Lemma 2.3 degenerates into the one in [62] if \({\mathcal{Y}_{i}} =0\) and \(\mathcal{Y}_{ij} =X_{ij}\), so the RCCI in [62] is a particular case of Lemma 2.3. In other words, Lemma 2.3 improves the RCCI in [62] to some extent. Furthermore, when \(n =2\), Lemma 2.3 reduces to the RCCI in [63–65], so Lemma 2.3 extends the results in [63–65] to general n. Thus the open issue reported in [65] has been tackled. Moreover, comparing (16) with the RCCI in [62] shows that Lemma 2.3 is more flexible than the inequality in [62] owing to the free matrices \({\mathcal{Y}}_{i}\). Since Lemma 2.3 combines the merits of the inequalities in [62] and [63–65], it can estimate the bound of the RCQTs \({\mathcal{G}}_{n} (\alpha _{i} )\) more tightly than those in [62] and [63–65].
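The baseline case discussed in this remark can be checked numerically. The sketch below assumes that the RCCI of [62] for \(n=2\) has its standard form: if the block matrix with diagonal blocks \(R_{1}\), \(R_{2}\) and off-diagonal block Y is positive semidefinite, then \(\frac{1}{\alpha }\zeta _{1}^{T} R_{1} \zeta _{1} + \frac{1}{1-\alpha }\zeta _{2}^{T} R_{2} \zeta _{2}\) is bounded below by the quadratic form of that block matrix in \(col\{\zeta _{1}, \zeta _{2}\}\). The helper names are hypothetical, and the test data are random rather than taken from the paper.

```python
import numpy as np

def rcci_gap(R1, R2, Y, z1, z2, alpha):
    """RCQT value minus the assumed lower bound from [62]; expected to be >= 0."""
    rcqt = z1 @ R1 @ z1 / alpha + z2 @ R2 @ z2 / (1.0 - alpha)
    z = np.concatenate([z1, z2])
    block = np.block([[R1, Y], [Y.T, R2]])
    return float(rcqt - z @ block @ z)

def random_feasible_blocks(n, rng):
    """Draw (R1, R2, Y) from a random positive definite 2n x 2n matrix,
    so the block condition [[R1, Y], [Y^T, R2]] >= 0 holds by construction."""
    A = rng.standard_normal((2 * n, 2 * n))
    M = A @ A.T + 1e-3 * np.eye(2 * n)
    return M[:n, :n], M[n:, n:], M[:n, n:]
```

The gap equals the quadratic form of the same block matrix evaluated at \(col\{\sqrt{(1-\alpha )/\alpha }\,\zeta _{1}, -\sqrt{\alpha /(1-\alpha )}\,\zeta _{2}\}\), which is why it is nonnegative whenever the block condition holds. This mirrors the pre- and postmultiplication step in the proof of Lemma 2.3.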

3 Main result

Before describing the main results, for simplification, we define some vectors and matrices:

Theorem 3.1

For given positive scalars δ, μ, η, α, and ω, system (11) is stochastically exponentially stable in the mean square sense if there exist matrices \(P_{i}\), \(S_{i}\), \(Q_{1}\), \(Q_{2}\), \(Q_{3}\), \(Q_{4}\), \(R_{\nu }\in \mathbb{S}_{+}^{n}\), \(X_{\nu }\), \(Y_{\nu }\in \mathbb{S}^{2n}\) (\(\nu =1, 2\)), \(X_{12}\), \(Y_{12} \in \mathbb{R}^{2n \times 2n}\), \(J_{i}\), \(G_{i} \in \mathbb{R}^{n \times n}\) and diagonal matrices \(M_{1}\), \(M_{2} \in \mathbb{S}_{+}^{n}\) such that

where \(\Phi _{i} ={\Phi _{1 i}} +{\Phi _{2 i}} +{\Phi _{3 i}} +{\Phi _{4 i}} +{\Phi _{5 i}}\), and

In addition, the control gain is designed as \(\mathcal{K}_{i} =J_{i}^{-1} G_{i}\), \(i \in \mathcal{N}\).

Proof

Consider the following stochastic Lyapunov–Krasovskii functional (LKF):

where

Let \(\mathcal{L}\) be the weak infinitesimal operator acting on LKF (25):

Then along the solution of system (11), we have

For the last four integral quadratic terms, by utilizing Lemma 2.1, we obtain that

where \(\beta _{1} =\frac{\delta (t)}{\delta }\), \(\beta _{2} = \frac{\delta -\delta (t)}{\delta }\), \(\gamma _{1} =\frac{\eta (t)}{\eta }\), and \(\gamma _{2} =\frac{\eta -\eta (t)}{\eta }\).

Applying Lemma 2.3, from (32)–(33), together with (22)–(23), it follows that

In addition, when the current data need not be sent out, from the ETCS (8) we easily get that

Furthermore, from (11), for any matrices \(J_{i}, K_{i} \in \mathbb{R}^{n \times n}\), we have

According to (7), there exist diagonal matrices \(M_{1}, M_{2} \in \mathbb{S}_{+}^{n}\) such that

where \(\Lambda _{ 1 } = { diag } \{ \lambda _{ 1 } ^{ - } , \ldots , \lambda _{ n } ^{ - } \} \) and \(\Lambda _{ 2 } = { diag } \{ \lambda _{ 1 } ^{ + } , \ldots , \lambda _{ n } ^{ + } \} \).

Therefore, combining (29)–(40) and (24), we obtain

which implies that

From (25) we easily get that

where

where \(M = \frac{\Gamma _{ 1 } + \Gamma _{ 2 } + \Gamma _{ 3 }}{\lambda _{\min }(P_{i})}\).

Therefore, according to Definition 2.1, system (11) is stochastically exponentially stable in the mean square sense with convergence rate \(\alpha >0\). In addition, the control gain is designed as \(\mathcal{K}_{i} =J_{i}^{-1} G_{i}\), \(i \in \mathcal{N}\). This completes the proof. □

Remark 3.1

LMI (24) in Theorem 3.1 depends on the TDs \(\delta (t)\) and \(\eta (t)\), so it cannot be solved directly by the MATLAB LMI toolbox. Note that \(\Phi _{i}\) is an affine function of the variables \(\delta (t)\) and \(\eta (t)\). Hence condition (24) is satisfied for all \(\delta (t)\in [0, \delta ]\) and \(\eta (t)\in [0, \eta ]\) if it holds at the four vertices, that is, if \(\Phi _{i} |_{\delta (t)=0, \eta (t)=0} <0\), \(\Phi _{i} |_{\delta (t)=0, \eta (t)=\eta } <0\), \(\Phi _{i} |_{\delta (t)=\delta , \eta (t)=0} <0\), and \(\Phi _{i} |_{\delta (t)=\delta , \eta (t)=\eta }<0\). On the other hand, the constructed LKF \({V}(x(t), i)\) plays a key role in deriving the event-triggered exponential synchronization result. Specifically, the triggering signal state related to the Markov jump parameters is considered in \(V_{1}(r(t),i)\), and the information about the TDs, the neuron activation function, and the virtual delay η is taken into account in \(V_{2}(x(t),i)\) and \(V_{3}(x(t),i)\); this LKF is more general than those given in [39, 40, 43, 44, 47, 48, 58–61] and helps to obtain a less conservative stability criterion. Besides, in the proof of Theorem 3.1, we utilize a new RCCI to estimate the bound of the RCQTs, which gives a tighter bound than other RCCIs such as that in [62]. Meanwhile, the new RCCI contains more coupled information between \(\delta (t) \in [0, \delta ]\) and \(\eta (t) \in [0, \eta ]\), which is effective in reducing the conservatism.
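The vertex argument in this remark can be illustrated with a small numerical sketch. It assumes that, once the decision variables are fixed, the matrix can be written as \(\Phi _{0} + \delta (t) \Phi _{\delta } + \eta (t) \Phi _{\eta }\); the names `Phi0`, `Phi_d`, and `Phi_e` are hypothetical placeholders and do not correspond to the blocks \(\Phi _{1i}, \ldots , \Phi _{5i}\) of Theorem 3.1.

```python
import itertools
import numpy as np

def phi(Phi0, Phi_d, Phi_e, d, e):
    """Affine matrix function of the two delay values d and e."""
    return Phi0 + d * Phi_d + e * Phi_e

def negative_definite_on_box(Phi0, Phi_d, Phi_e, delta, eta):
    """Check Phi < 0 at the four vertices of [0, delta] x [0, eta].
    Because Phi is affine in (d, e), this implies Phi < 0 on the whole box."""
    for d, e in itertools.product((0.0, delta), (0.0, eta)):
        M = phi(Phi0, Phi_d, Phi_e, d, e)
        M = 0.5 * (M + M.T)                      # symmetrize against round-off
        if np.linalg.eigvalsh(M).max() >= 0.0:
            return False
    return True
```

The implication holds because every point of the box is a convex combination of the four vertices, and a convex combination of negative definite matrices is negative definite. In an LMI solver the same idea amounts to imposing the four vertex inequalities simultaneously.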

In what follows, as a particular case, when Markov jump parameters are not considered, system (11) will be reduced to the following equation:

Based on Theorem 3.1, we can readily derive the following criterion.

Theorem 3.2

For given positive scalars δ, μ, η, α, and ω, system (46) is exponentially stable in the mean square sense if there exist matrices P, S, \(Q_{1}\), \(Q_{2}\), \(Q_{3}\), \(Q_{4}\), \(R_{\nu }\in \mathbb{S}_{+}^{n}\), \(X_{\nu }\), \(Y_{\nu }\in \mathbb{S}^{2n}\) (\(\nu =1, 2\)), \(X_{12}\), \(Y_{12} \in \mathbb{R}^{2n \times 2n}\), J, \(G \in \mathbb{R}^{n \times n}\) and diagonal matrices \(M_{1}\), \(M_{2} \in \mathbb{S}_{+}^{n}\) such that

where \(\Phi ={\Phi _{1}} +{\Phi _{2}} +{\Phi _{3}} +{\Phi _{4}} +{\Phi _{5}}\), and

In addition, the control gain is designed as \(\mathcal{K} =J^{-1} G\).
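Once a feasible pair \((J, G)\) has been returned by the LMI solver, the gain can be recovered numerically. The following minimal sketch solves \(J \mathcal{K} = G\) rather than forming \(J^{-1}\) explicitly; the matrices `J_demo` and `G_demo` are made-up values for illustration and are not solutions of the LMIs in this paper.

```python
import numpy as np

def control_gain(J, G):
    """Return K with J K = G, i.e. K = J^{-1} G, without forming J^{-1}."""
    J = np.asarray(J, dtype=float)
    G = np.asarray(G, dtype=float)
    return np.linalg.solve(J, G)

# Hypothetical solver output, for illustration only.
J_demo = np.array([[2.0, 0.3], [-0.1, 1.5]])
G_demo = np.array([[-4.0, 0.6], [0.2, -3.0]])
K_demo = control_gain(J_demo, G_demo)
```

For the mode-dependent gains \(\mathcal{K}_{i} =J_{i}^{-1} G_{i}\) of Theorem 3.1, the same call would be applied once per mode.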

4 Numerical examples

In this section, we give two examples to demonstrate the effectiveness of our proposed methods.

Example 4.1

Consider system (11) with the following parameters:

In this example, the generator matrix is taken as . Under these parameters, by applying the LMI toolbox in MATLAB to solve LMIs (22)–(24) in Theorem 3.1, the weighting matrix in the ETCS and the control gain matrices are derived as , , and . Meanwhile, the feasible solution matrices in Theorem 3.1 can be obtained:

to list a few. Clearly, the effectiveness of our method is illustrated here.
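Simulating system (11) requires a sample path of the Markov process \(\sigma (t)\). A minimal sketch of how such a path could be generated from a generator matrix is given below; it uses the standard construction (exponential holding time with rate \(-\pi _{ii}\), then a jump to mode j with probability \(\pi _{ij} / (-\pi _{ii})\)). The function names are hypothetical, modes are indexed from 0 rather than 1, and any generator passed in is the caller's assumption.

```python
import numpy as np

def check_generator(Pi, tol=1e-12):
    """True if off-diagonal rates are nonnegative and every row sums to zero."""
    Pi = np.asarray(Pi, dtype=float)
    off = Pi - np.diag(np.diag(Pi))
    return bool(np.all(off >= 0.0) and np.allclose(Pi.sum(axis=1), 0.0, atol=tol))

def simulate_modes(Pi, mode0, t_end, rng):
    """Return jump times and the mode entered at each time on [0, t_end)."""
    Pi = np.asarray(Pi, dtype=float)
    times, modes = [0.0], [mode0]
    t, i = 0.0, mode0
    while True:
        rate = -Pi[i, i]
        if rate <= 0.0:                  # absorbing mode: no further jumps
            break
        t += rng.exponential(1.0 / rate) # holding time in mode i
        if t >= t_end:
            break
        probs = Pi[i].copy()
        probs[i] = 0.0
        probs /= rate                    # jump probabilities pi_ij / (-pi_ii)
        i = int(rng.choice(len(probs), p=probs))
        times.append(t)
        modes.append(i)
    return np.array(times), np.array(modes)
```

The mode active at time t is the entry of `modes` whose jump time is the largest one not exceeding t, so the returned arrays define a piecewise-constant signal that can drive the mode-dependent matrices in a simulation.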

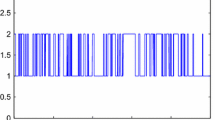

To illustrate the feasibility and validity of the obtained result, with the neuron activation function \(f_{i}(x) =0.5 (|x+1| -|x-1| )\) and the time-varying delay \(\delta (t) =0.2 +0.2\sin (t)\), we present Figs. 1–3, which show the state response of system (11) with parameters (50)–(51) without any control and under the well-designed ETCS (8), respectively. Clearly, ETCS (8) is effective. Furthermore, it is not hard to see from Fig. 2 that the frequency of control updates is reduced to a large extent, which means that more network resources are saved by using the ETCS.
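The activation function and delay used in this simulation determine the constants that enter the criteria. The sketch below estimates them numerically, under the assumption that the delay condition of Sect. 2 has the usual form \(0 \le \delta (t) \le \delta \), \(\dot{\delta }(t) \le \mu \) and that the sector bounds are the extreme slopes of the activation function; the function names are illustrative.

```python
import numpy as np

def activation(x):
    """Piecewise-linear activation f(x) = 0.5 (|x + 1| - |x - 1|)."""
    return 0.5 * (np.abs(x + 1.0) - np.abs(x - 1.0))

def delay(t):
    """Time-varying delay of Example 4.1."""
    return 0.2 + 0.2 * np.sin(t)

def delay_bounds(t_end=4.0 * np.pi, num=200001):
    """Numerical estimates of min delay, max delay, and max delay derivative."""
    t = np.linspace(0.0, t_end, num)
    d = delay(t)
    rate = np.gradient(d, t)             # finite-difference derivative
    return float(d.min()), float(d.max()), float(rate.max())

def slope_range(lo=-5.0, hi=5.0, num=2001):
    """Smallest and largest difference quotient of the activation on a grid."""
    x = np.linspace(lo, hi, num)
    slopes = np.diff(activation(x)) / np.diff(x)
    return float(slopes.min()), float(slopes.max())
```

Analytically, the delay ranges over \([0, 0.4]\) with derivative \(0.2\cos (t) \le 0.2\), and the activation has slope 1 on \((-1, 1)\) and slope 0 elsewhere, so the difference quotients lie in \([0, 1]\).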

Figure 1 Curve of \(x(t)\) without control for Example 4.1 under \(r(0) = [-0.2;0.3]\)

Figure 2 Release instants and intervals for Example 4.1 under \(r(0) = [-0.2;0.3]\)

Figure 3 Curve of \(x(t)\) with ETCS for Example 4.1 under \(r(0) = [-0.2;0.3]\)
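The release instants reported for this example follow from the triggering rule of Sect. 2: a sampled state is transmitted once \(e^{T} \Omega e \ge \lambda r^{T}(b_{k}h) \Omega r(b_{k}h)\). A minimal sketch of that rule applied to a stored sequence of sampled error states is given below; the function name and the idea of post-processing stored samples are assumptions made for illustration, not the simulation code behind the figures.

```python
import numpy as np

def release_indices(r_samples, Omega, lam):
    """Indices k of the sampling instants kh at which the state is transmitted."""
    r_samples = np.asarray(r_samples, dtype=float)
    releases = [0]                       # the sample at t = 0 is always sent
    last = r_samples[0]                  # latest transmitted state r(b_k h)
    for k in range(1, len(r_samples)):
        e = r_samples[k] - last          # e(b_k h + l h) = r(b_k h + l h) - r(b_k h)
        if e @ Omega @ e >= lam * (last @ Omega @ last):
            releases.append(k)           # threshold reached: transmit and reset
            last = r_samples[k]
    return np.array(releases)
```

Multiplying the returned indices by the sampling period h gives the release instants, and their successive differences give the release intervals. With \(\lambda = 0\) every sample is transmitted, which recovers periodic sampled-data control.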

Example 4.2

Consider system (46) with the following parameters:

Under these parameters, by applying the LMI toolbox in MATLAB to solve LMIs (47)–(49) in Theorem 3.2, the weighting matrix in the ETCS and the control gain matrix are derived as and , respectively. Meanwhile, the feasible solution matrices in Theorem 3.2 are obtained:

to list a few. Clearly, the effectiveness of our method provided in this paper is illustrated here.

To illustrate the feasibility and validity of the obtained result, with the neuron activation function \(f_{i}(x) =0.5 (|x+1| -|x-1| )\) and the time-varying delay \(\delta (t) =0.4 +0.2\cos (t)\), we present Figs. 4–6, which show the state response of system (46) with parameters (53)–(54) without any control and under the well-designed ETCS (8), respectively. Clearly, ETCS (8) is effective. Furthermore, it is not hard to see from Fig. 5 that the frequency of control updates is reduced to a large extent, which means that more network resources are saved by using the ETCS.

Figure 4 Curve of \(x(t)\) without control for Example 4.2 under \(r(0) = [-0.2;0.3]\)

Figure 5 Release instants and intervals for Example 4.2 under \(r(0) = [-0.2;0.3]\)

Figure 6 Curve of \(x(t)\) with ETCS for Example 4.2 under \(r(0) = [-0.2;0.3]\)

5 Conclusions

In this paper, we studied an event-triggered exponential synchronization problem for a class of Markov jump neural networks with time-varying delay. To obtain a tighter bound on reciprocally convex quadratic terms, we provided a general reciprocally convex combination inequality, which includes several existing ones as particular cases. Then we constructed a suitable Lyapunov–Krasovskii functional by fully considering the information about the time-varying delay, triggering signals, and Markov jump parameters. Based on a well-designed event-triggered control scheme, we presented two novel exponential synchronization criteria for the studied systems by employing the new reciprocally convex combination inequality and other analytical approaches. Finally, we gave two numerical examples to show the effectiveness of our results. We expect that the methods proposed in this paper can be used in the future to investigate other stability and control problems for various systems with mixed time-varying delays.

Availability of data and materials

Data sharing is not applicable to this paper, as no data sets were generated or analyzed during the current study.

References

Shi, K., Wang, J., Zhong, S., Tang, Y., Cheng, J.: Non-fragile memory filtering of T-S fuzzy delayed neural networks based on switched fuzzy sampled-data control. Fuzzy Sets Syst. 394, 40–64 (2020)

Shi, K., Wang, J., Zhong, S., Tang, Y., Cheng, J.: Hybrid-driven finite-time H∞ sampling synchronization control for coupling memory complex networks with stochastic cyber attacks. Neurocomputing 387, 241–254 (2020)

Li, P., Li, X., Cao, J.: Input-to-state stability of nonlinear switched systems via Lyapunov method involving indefinite derivative. Complexity 2018, 1–8 (2018)

Yang, X., Li, X.: Finite-time stability of linear non-autonomous systems with time-varying delays. Adv. Differ. Equ. 2018, 101 (2018)

Cochocki, A., Unbehauen, R.: Neural Networks for Optimization and Signal Processing, 1st edn. Wiley, New York (1993)

Shi, K., Wang, J., Tang, Y., Zhong, S.: Reliable asynchronous sampled-data filtering of T-S fuzzy uncertain delayed neural networks with stochastic switched topologies. Fuzzy Sets Syst. 381, 1–25 (2020)

Shi, K., Wang, J., Zhong, S., Zhang, X., Liu, Y., Cheng, J.: New reliable nonuniform sampling control for uncertain chaotic neural networks under Markov switching topologies. Appl. Math. Comput. 347, 169–193 (2019)

Li, X., Fu, X., Rakkiyappan, R.: Delay-dependent stability analysis for a class of dynamical systems with leakage delay and nonlinear perturbations. Appl. Math. Comput. 226, 10–19 (2014)

Li, X., Akca, H., Fu, X.: Uniform stability of impulsive infinite delay differential equations with applications to systems with integral impulsive conditions. Appl. Math. Comput. 219(14), 7329–7337 (2013)

Galicki, M., Witte, H., Dörschel, J., Eiselt, M., Griessbach, G.: Common optimization of adaptive preprocessing units and a neural network during the learning period, application in EEG pattern recognition. Neural Netw. 10(6), 1153–1163 (1997)

Shi, K., Tang, Y., Liu, X., Zhong, S.: Non-fragile sampled-data robust synchronization of uncertain delayed chaotic Lurie systems with randomly occurring controller gain fluctuation. ISA Trans. 66, 185–199 (2017)

Shi, K., Tang, Y., Liu, X., Zhong, S.: Secondary delay-partition approach on robust performance analysis for uncertain time-varying Lurie nonlinear control system. Optim. Control Appl. Methods 38(6), 1208–1226 (2017)

Liu, M., Li, S., Li, X., Jin, L., Yi, C., Huang, Z.: Intelligent controllers for multirobot competitive and dynamic tracking. Complexity 2018, 1–12 (2018)

Chen, J., Li, X., Wang, D.: Asymptotic stability and exponential stability of impulsive delayed Hopfield neural networks. Abstr. Appl. Anal. 2013, 205 (2013)

Chua, L., Yang, L.: Cellular neural networks: applications. IEEE Trans. Circuits Syst. 35(10), 1273–1290 (1988)

Shi, K., Wei, Y., Zhong, S., Wang, J.: Novel delay-dependent robust stability criteria for neutral-type time-varying uncertain Lurie nonlinear control system with mixed time delays. J. Nonlinear Sci. Appl. 10(4), 2196–2213 (2017)

Song, X., Yan, X., Li, X.: Survey of duality between linear quadratic regulation and linear estimation problems for discrete-time systems. Adv. Differ. Equ. 2019, 90 (2019)

Liu, J., Li, X.: Impulsive stabilization of high-order nonlinear retarded differential equations. Appl. Math. 58(3), 347–367 (2013)

Zhou, Q., Shi, P., Xu, S., Li, H.: Observer-based adaptive neural network control for nonlinear stochastic systems with time delay. IEEE Trans. Neural Netw. Learn. Syst. 24(1), 71–80 (2013)

Zhao, Z., Song, Q., He, S.: Passivity analysis of stochastic neural networks with time-varying delays and leakage delay. Neurocomputing 125, 22–27 (2014)

Cheng, J., Park, J.H., Cao, J., Qi, W.: Hidden Markov model-based nonfragile state estimation of switched neural network with probabilistic quantized outputs. IEEE Trans. Cybern. 50, 1900–1909 (2020)

Cheng, J., Zhan, Y.: Nonstationary \(l_{2}-l_{\infty }\) filtering for Markov switching repeated scalar nonlinear systems with randomly occurring nonlinearities. Appl. Math. Comput. 365, 124714 (2020)

Cheng, J., Park, J.H., Cao, J., Qi, W.: A hidden mode observation approach to finite-time SOFC of Markovian switching systems with quantization. Nonlinear Dyn. 100(1), 509–521 (2020)

Jun, C., Park, J.H., Zhao, X., Karimi, H., Cao, J.: Quantized nonstationary filtering of network-based Markov switching RSNSS: a multiple hierarchical structure strategy. IEEE Trans. Autom. Control (2019). https://doi.org/10.1109/TAC.2019.2958824

Wang, Z., Liu, Y., Yu, L., Liu, X.: Exponential stability of delayed recurrent neural networks with Markovian jumping parameters. Phys. Lett. A 356(4–5), 346–352 (2006)

Tao, L., Qi, L., Sun, C., Zhang, B.: Exponential stability of recurrent neural networks with time-varying discrete and distributed delays. Nonlinear Anal., Real World Appl. 10(4), 2581–2589 (2009)

Zhang, X., Li, X., Han, X.: Design of hybrid controller for synchronization control of Chen chaotic system. J. Nonlinear Sci. Appl. 10(6), 3320–3327 (2017)

Lv, X., Li, X., Cao, J., Duan, P.: Exponential synchronization of neural networks via feedback control in complex environment. Complexity 2018, 1–13 (2018)

Vinodkumar, A., Senthilkumar, T., Li, X.: Robust exponential stability results for uncertain infinite delay differential systems with random impulsive moments. Adv. Differ. Equ. 2018(1), 39 (2018)

Zhou, L.: Delay-dependent exponential stability of recurrent neural networks with Markovian jumping parameters and proportional delays. Neural Comput. Appl. 28(1), 765–773 (2017)

Liu, Y., Zhang, C., Kao, Y., Hou, C.: Exponential stability of neutral-type impulsive Markovian jump neural networks with general incomplete transition rates. Neural Process. Lett. 47(2), 325–345 (2018)

Zhang, X., Zhou, W., Sun, Y.: Exponential stability of neural networks with Markovian switching parameters and general noise. Int. J. Control. Autom. Syst. 17(4), 966–975 (2019)

Pecora, L., Carroll, T.: Synchronization in chaotic systems. Phys. Rev. Lett. 64(8), 821 (1990)

Zhang, L., Yang, X., Xu, C., Feng, J.: Exponential synchronization of complex-valued complex networks with time-varying delays and stochastic perturbations via time-delayed impulsive control. Appl. Math. Comput. 306, 22–30 (2017)

Zhang, R., Park, J.H., Zeng, D., Liu, Y., Zhong, S.: A new method for exponential synchronization of memristive recurrent neural networks. Inf. Sci. 466, 152–169 (2018)

Wan, P., Sun, D., Chen, D., Zhao, M., Zheng, L.: Exponential synchronization of inertial reaction–diffusion coupled neural networks with proportional delay via periodically intermittent control. Neurocomputing 356, 195–205 (2019)

Hu, J., Sui, G., Lv, X., Li, X.: Fixed-time control of delayed neural networks with impulsive perturbations. Nonlinear Anal., Model. Control 23(6), 904–920 (2018)

Xu, F., Dong, L., Wang, D., Li, X., Rakkiyappan, R.: Globally exponential stability of nonlinear impulsive switched systems. Math. Notes 97(5–6), 803–810 (2015)

Chen, H., Shi, P., Lim, C.-C.: Exponential synchronization for Markovian stochastic coupled neural networks of neutral-type via adaptive feedback control. IEEE Trans. Neural Netw. Learn. Syst. 28(7), 1618–1632 (2016)

Tong, D., Zhou, W., Zhou, X., Yang, J., Zhang, L., Xu, Y.: Exponential synchronization for stochastic neural networks with multi-delayed and Markovian switching via adaptive feedback control. Commun. Nonlinear Sci. Numer. Simul. 29(1–3), 359–371 (2015)

Li, X., Bohner, M.: An impulsive delay differential inequality and applications. Comput. Math. Appl. 64(6), 1875–1881 (2012)

Yang, D., Li, X., Shen, J., Zhou, Z.: State-dependent switching control of delayed switched systems with stable and unstable modes. Math. Methods Appl. Sci. 41, 6968–6983 (2018)

Rakkiyappan, R., Latha, V.P., Zhu, Q., Yao, Z.: Exponential synchronization of Markovian jumping chaotic neural networks with sampled-data and saturating actuators. Nonlinear Anal. Hybrid Syst. 24, 28–44 (2017)

Cheng, J., Park, J.H., Karimi, H.R., Shen, H.: A flexible terminal approach to sampled-data exponentially synchronization of Markovian neural networks with time-varying delayed signals. IEEE Trans. Cybern. 48(8), 2232–2244 (2017)

Yang, D., Li, X., Qiu, J.: Output tracking control of delayed switched systems via state-dependent switching and dynamic output feedback. Nonlinear Anal. Hybrid Syst. 32, 294–305 (2019)

Yang, X., Li, X., Xi, Q., Duan, P.: Review of stability and stabilization for impulsive delayed systems. Math. Biosci. Eng. 15(6), 1495–1515 (2018)

Wan, X., Yang, X., Tang, R., Cheng, Z., Fardoun, H.M., Alsaadi, F.E.: Exponential synchronization of semi-Markovian coupled neural networks with mixed delays via tracker information and quantized output controller. Neural Netw. 118, 321–331 (2019)

Dai, A., Zhou, W., Xu, Y., Xiao, C.: Adaptive exponential synchronization in mean square for Markovian jumping neutral-type coupled neural networks with time-varying delays by pinning control. Neurocomputing 173, 809–818 (2016)

Li, X., Yang, X., Huang, T.: Persistence of delayed cooperative models: impulsive control method. Appl. Math. Comput. 342, 130–146 (2019)

Li, X., Shen, J., Akca, H., Rakkiyappan, R.: LMI-based stability for singularly perturbed nonlinear impulsive differential systems with delays of small parameter. Appl. Math. Comput. 250, 798–804 (2015)

Li, X., Caraballo, T., Rakkiyappan, R., Han, X.: On the stability of impulsive functional differential equations with infinite delays. Math. Methods Appl. Sci. 38(14), 3130–3140 (2015)

Wang, X., Park, J.H., Zhong, S., Yang, H.: A switched operation approach to sampled-data control stabilization of fuzzy memristive neural networks with time-varying delay. IEEE Trans. Neural Netw. Learn. Syst. 31(3), 891–900 (2020)

Wang, X., Park, J.H., Yang, H., Zhang, X., Zhong, S.: Delay-dependent fuzzy sampled-data synchronization of T-S fuzzy complex networks with multiple couplings. IEEE Trans. Fuzzy Syst. 28(1), 178–189 (2020)

Wang, X., Park, J.H., Yang, H., Zhao, G., Zhong, S.: An improved fuzzy sampled-data control to stabilization of T-S fuzzy systems with state delays. IEEE Trans. Cybern. 50(7), 3125–3135 (2020)

Wang, X., She, K., Zhong, S., Yang, H.: Lag synchronization analysis of general complex networks with multiple time-varying delays via pinning control strategy. IEEE Trans. Syst. Man Cybern. Syst. 31(1), 43–53 (2020)

Yang, H., Shu, L., Wang, X., Zhong, S.: Synchronization of IT2 stochastic fuzzy complex dynamical networks with time-varying delay via fuzzy pinning control. J. Franklin Inst. 356(3), 1484–1501 (2019)

Åström, K., Bernhardsson, B.: Comparison of periodic and event based sampling for first-order stochastic systems. IFAC Proc. Vol. 32(2), 5006–5011 (1999)

Fei, Z., Guan, C., Gao, H.: Exponential synchronization of networked chaotic delayed neural network by a hybrid event trigger scheme. IEEE Trans. Neural Netw. Learn. Syst. 29(6), 2558–2567 (2017)

Wen, S., Zeng, Z., Chen, M.Z., Huang, T.: Synchronization of switched neural networks with communication delays via the event-triggered control. IEEE Trans. Neural Netw. Learn. Syst. 28(10), 2334–2343 (2017)

Guo, Z., Gong, S., Wen, S., Huang, T.: Event-based synchronization control for memristive neural networks with time-varying delay. IEEE Trans. Cybern. 49(9), 3268–3277 (2018)

Pradeep, C., Cao, Y., Murugesu, R., Rakkiyappan, R.: An event-triggered synchronization of semi-Markov jump neural networks with time-varying delays based on generalized free-weighting-matrix approach. Math. Comput. Simul. 155, 41–56 (2019)

Park, P., Ko, J., Jeong, C.: Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47(1), 235–238 (2011)

Zhang, X., Han, Q., Seuret, A., Gouaisbaut, F.: An improved reciprocally convex inequality and an augmented Lyapunov–Krasovskii functional for stability of linear systems with time-varying delay. Automatica 84, 221–226 (2017)

Zhang, C., He, Y., Jiang, L., Wu, M., Wang, Q.: An extended reciprocally convex matrix inequality for stability analysis of systems with time-varying delay. Automatica 85, 481–485 (2017)

Zhang, X., Han, Q., Wang, Z., Zhang, B.: Neuronal state estimation for neural networks with two additive time-varying delay components. IEEE Trans. Cybern. 47(10), 3184–3194 (2017)

Zhang, R., Zeng, D., Zhong, S., Yu, Y.: Event-triggered sampling control for stability and stabilization of memristive neural networks with communication delays. Appl. Math. Comput. 310, 57–74 (2017)

Park, P., Lee, W., Lee, S.: Auxiliary function-based integral inequalities for quadratic functions and their applications to time-delay systems. J. Franklin Inst. 352, 1378–1396 (2015)

Acknowledgements

The authors would like to thank the associate editor and anonymous reviewers for their detailed comments and suggestions.

Funding

This work was funded by the National Natural Science Foundation of China under Grant nos. 11671206 and 11601474 and by the Key Project of Natural Science Research of Anhui Higher Education Institutions of China (KJ2018A0700).

Contributions

All authors contributed equally to the writing of this paper. All authors conceived of the study, participated in its design and coordination, and read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, X., Zhang, H., Yang, J. et al. Stochastically exponential synchronization for Markov jump neural networks with time-varying delays via event-triggered control scheme. Adv Differ Equ 2021, 74 (2021). https://doi.org/10.1186/s13662-020-03109-7

DOI: https://doi.org/10.1186/s13662-020-03109-7