Abstract

The present article addresses the exponential stability of recurrent neural networks (RNNs) with distributed and discrete asynchronous time-varying delays. Some novel algebraic conditions are obtained which ensure that the model has a unique equilibrium point and that this point is globally exponentially asymptotically stable. The analysis also reveals how the equilibrium point differs between systems with and without distributed asynchronous delays. A numerical example and its Matlab simulations are given to illustrate the correctness of the results.

1 Introduction

In the last few decades, RNNs have been applied successfully in many areas, including associative memory, prediction and optimal control, and pattern recognition [1–8]. In practical implementations, time delays are inevitable in the transmission between neurons because of the finite propagation speed of signals and the finite switching speed of amplifiers [9–13]. In addition, because a network contains a large number of parallel pathways with different axon sizes and lengths, conduction velocities and propagation delays may be distributed along these pathways. In such cases, signal propagation is not instantaneous and cannot be modeled with discrete delays alone, so it is more suitable to include continuously distributed delays in the neural network model. Moreover, delays can sometimes produce desirable behavior, such as processing moving images when signals are transmitted between neurons, or exhibiting chaotic phenomena that can be applied to secure communication. It is therefore necessary to study the dynamical behavior of neural networks with mixed distributed and discrete delays. There is a considerable literature on mixed constant delays [14–19] and mixed time-varying delays [19–24].

Recently, Liu et al. [25] proposed asynchronous delays and investigated the exponential stability of complex-valued recurrent neural networks with discrete asynchronous delays. Afterwards, Li et al. [26] studied stability preservation in the discrete analogue of an impulsive Cohen–Grossberg neural network with discrete asynchronous delays. In practice, however, time delays are not only discrete asynchronous but may also be distributed asynchronous, or even mixed asynchronous. For example, a driver is subject to more than one kind of delay: the eyes, hands, and feet all respond to an operation with a delay. Since the delays differ from driver to driver, they need to be coordinated in the driver’s central nervous system. Therefore, the stability analysis of neural networks with distributed and discrete asynchronous delays is a challenge worth addressing.

Inspired by this challenge, we investigate the exponential stability of RNNs with mixed asynchronous time-varying delays. The main contribution is a set of novel sufficient conditions under which the equilibrium point of the system is unique and globally exponentially asymptotically stable. The rest of this article is organized as follows. In Sect. 2, the RNN model is presented together with some reasonable assumptions. The main results are stated and proved in Sect. 3. Corollaries and comparisons with the existing literature are given in Sect. 4. Section 5 gives a numerical example with simulations to illustrate the effectiveness of the main results. Section 6 concludes the paper.

2 Model description

In the present article, we investigate a class of RNNs of n (\(n \geq 2\)) interconnected neurons as follows:

where \(x_{i}(t)\) is the state variable of the ith neuron at time t; \(a_{i}\) is a positive constant; \(f_{j}(\cdot )\) is the activation function of the jth neuron, which is a globally Lipschitz continuous and differentiable nonlinear function such that

\(b_{ij}\), \(c_{ij}\), and \(d_{ij}\) are the connection weights associated with the neurons without delays, with discrete delays, and with distributed delays, respectively; \(\tau _{ij}(t)\) is the discrete asynchronous time-varying transmission delay along the axon from unit j to unit i at time t, such that

\(h_{ij}(t)\) is the distributed asynchronous time-varying transmission delay along the axon from unit j to unit i at time t, and satisfies

\(u_{i}\) is a constant, and represents the external input of the ith neuron.

For model (1), its initial conditions are assumed to be

where \(\varphi _{i}(s)\) is a real-valued continuous function, and

Remark 1

The delays \(\tau _{ij}(t)\) and \(h_{ij}(t)\) above differ between different pairs of nodes at time t, which means that the time-varying delays in system (1) are asynchronous. Therefore, model (1) is more general than the models in Refs. [22, 26].

Assume that \(x^{*}\) is an equilibrium point of model (1), and \(x_{i}^{*}\) is its ith component. Then Eq. (1) becomes

By Ref. [20], we can define the global exponential asymptotic stability of \(x^{*}\).

Definition 1

The equilibrium point \(x^{*}\) in model (1) is said to have global exponential asymptotic stability, if there are \(M \geq 1\) and \(\gamma > 0\) such that each solution of Eq. (1) satisfies

where \(\varphi _{i}(s)\) is the initial continuous function, and Ω is a set of real numbers.
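As a rough numerical illustration of Definition 1, the sketch below checks sampled deviations from an equilibrium against an exponential envelope. It assumes the usual form of such a bound, namely that the deviation at time t is at most M times the largest initial deviation times \(e^{-\gamma t}\); this form, the function name `satisfies_exponential_bound`, and the synthetic data are assumptions made for illustration and are not taken from the article.

```python
import math

def satisfies_exponential_bound(times, errors, phi_sup, M, gamma):
    """Check errors[k] <= M * phi_sup * exp(-gamma * times[k]) for every sample.

    The envelope form is an assumed, standard reading of Definition 1.
    """
    if M < 1 or gamma <= 0:
        raise ValueError("Definition 1 requires M >= 1 and gamma > 0")
    return all(e <= M * phi_sup * math.exp(-gamma * t)
               for t, e in zip(times, errors))

# Synthetic deviations that decay like 0.8 * exp(-0.5 t); largest initial deviation 1.0.
times = [0.1 * k for k in range(100)]
errors = [0.8 * math.exp(-0.5 * t) for t in times]

# The data fit an envelope with rate 0.5 but not one with the faster rate 1.0.
ok = satisfies_exponential_bound(times, errors, phi_sup=1.0, M=1.0, gamma=0.5)
too_fast = satisfies_exponential_bound(times, errors, phi_sup=1.0, M=1.0, gamma=1.0)
```

A check of this kind can only refute a candidate pair (M, γ) on sampled data; it does not prove stability, which is what the theorems in the next section address.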

3 Main results and proofs

In this section, we show that the neural network (1) has a unique equilibrium point \(x^{*}\), and that this point has global exponential asymptotic stability.

Theorem 1

Suppose that (2), (3), and (5) hold. If for each i, \(i\in \{1,2,\ldots ,n \}\), one has

then the equilibrium point \(x^{*}\) exists and is unique in system (1).

Proof

Since \(a_{i} > 0\), (8) can be rewritten as

Let

Then we know that \(x_{i}^{*}\) is a fixed point of the mapping \(g_{i}(x)\) from Eq. (11). Hence the equilibrium point of Eq. (1) can be determined by the fixed points of functions \(g_{1}(x), g_{2}(x), \ldots \) , and \(g_{n}(x)\) within a specific range. Let \(x(t)\) be the vector \((x_{1}(t), x_{2}(t),\ldots ,x_{n}(t))^{T} \), and Φ be a hypercube set defined by

By the hypotheses (3) and (5), we can get

Let \(g(x)\) be the vector function \((g_{1}(x),g_{2}(x),\ldots ,g_{n}(x))^{T}\). From the continuity of \(f_{i}\), we know that \(g(x)\) is a continuous mapping from set Φ to Φ. By Brouwer’s fixed point theorem, there is at least one \(x^{*}\in \varPhi \) such that

It follows that there is at least one equilibrium point in Eq. (1).

Next, we will show the uniqueness of the equilibrium point in Eq. (1).

Let \(y^{*}=(y_{1}^{*}, y_{2}^{*},\ldots ,y_{n}^{*})^{T}\) be also an equilibrium point of model (1). From (2), (3), and (8), we can obtain

Summing over all the neurons that satisfy the inequality (14), we get

It follows that

According to the condition (10), we can get

implying that there exists a unique equilibrium in model (1). □

Theorem 2

Suppose that (2)–(5) and (10) hold, and that there exist \(\beta \geq 1\) and \(q > 0\) such that

If the equilibrium point \(x^{*}\) and each solution of Eq. (1) with the initial conditions (6) satisfy

then \(x^{*}\) has global exponential asymptotic stability.

Proof

By Theorem 1, model (1) has a unique equilibrium point under the assumptions (2), (3), (5), and (10), and we denote it by \(x^{*}\). Then from Eq. (1), we have

Assume that

Then the derivative along (19) is

Since

we substitute (21) into (20), and get

Consider a Lyapunov function \(V(t)=V(y_{1}, y_{2},\ldots ,y_{n})(t)\) defined by

Taking the derivative of \(V(t)\) along (19), we get

Let \(F_{i}(q_{i})\) be an auxiliary continuous function related to index i, defined by

where \(q_{i}\) is a positive real number, and i is a positive integer not greater than n. In view of the hypothesis (10), one has

From the continuity of \(F_{i}\), there exists \(q_{i}^{*}\in (0,+\infty )\) such that

Without loss of generality, let \(q=\max_{1\leq i\leq n}\{q_{1}^{*},q_{2}^{*},\ldots ,q_{n}^{*} \}\). Then

Therefore, by (24) and (27), one can see that the derivative of \(V(t)\) is negative for \(t\in [0, + \infty )\). Based on the definition of \(V(t)\) and the assumption (4), we obtain

where

Combining (17), (28), and (29), one can derive the inequality (18), and thus the equilibrium point \(x^{*}\) of Eq. (1) has the global exponential asymptotic stability on account of Definition 1. □

Remark 2

The constant \(\beta \geq 1\), which plays a significant role in the index of convergence of model (1), relies on the distributive delay \(h_{j}\) and delay \(\tau _{j}\) for \(j=1,2,\ldots ,n\). If either the discrete delay \(\tau _{j}\) or the distribution delay \(h_{j}\) of (17) is sufficiently large, namely, the discrete asynchronous delays \(\tau _{ij}(t)\) of (4) and distributed asynchronous delays \(h_{ij}(t)\) of (5) are sufficiently large, then β will be large enough, and thus the convergence time towards the equilibrium point will be longer. Therefore, the convergence time of model (1) can be shortened only if the two delays are reduced appropriately in the process of operation coordination.

4 Corollaries and comparisons

Theorem 1 and Theorem 2 yield the following corollaries. We also compare the conclusions of this paper with the existing literature.

When \(h_{ij}(t)=0\) for \(i, j\in \{1,2,\ldots ,n\}\), Eq. (1) changes into the following neural networks:

and its initial conditions are

where i is a positive integer not bigger than n.

Corollary 1

Assume that (2) and (3) are true. If for each i, \(i\in \{1,2,\ldots ,n \}\), one has

then the equilibrium point \(x^{*}\) exists and is unique in the system (30).

Corollary 2

Suppose that (2)–(4) and (32) hold. If there exist two constants \(\beta \geq 1\) and \(q > 0\) such that

and the equilibrium point \(x^{*}\) and each solution of Eq. (30) with the initial conditions (31) satisfy

then \(x^{*}\) has global exponential asymptotic stability.

Remark 3

By Ref. [26], the equilibrium point of model (30) with discrete asynchronous time-varying delays is the same as that of the model without delays. When \(h_{ij}(t)\neq 0\), by contrast, Theorem 1 shows that the equilibrium point of model (1) is affected by \(h_{ij}(t)\), \(t>0\).
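The observation in Remark 3 can be illustrated numerically with a small fixed-point iteration. The sketch below assumes the standard additive model form, in which a constant state x* turns a discrete-delay term into \(c_{ij}f_{j}(x_{j}^{*})\) and a distributed-delay integral over a window of constant length \(h_{ij}\) into \(d_{ij}h_{ij}f_{j}(x_{j}^{*})\). All numerical values (`A`, `B`, `C`, `D`, `U`, `H`) are hypothetical and are not the parameters of any model in this article; the constant window lengths are a simplifying assumption.

```python
import math

# HYPOTHETICAL parameters for illustration only (not taken from the article).
A = [4.0, 4.0]                    # decay rates a_i > 0
B = [[0.2, -0.1], [0.1, 0.2]]     # weights without delay
C = [[0.1, 0.1], [-0.1, 0.1]]     # weights on discretely delayed terms
D = [[0.2, 0.1], [0.1, 0.2]]      # weights on distributed-delay terms
U = [0.5, -0.3]                   # external inputs
H = [[1.5, 3.0], [1.5, 3.0]]      # assumed constant distributed-delay windows

def combine(*mats):
    """Entrywise sum of 2x2 weight matrices."""
    return [[sum(m[i][j] for m in mats) for j in range(2)] for i in range(2)]

def g(x, w):
    """Map g_i(x) = (sum_j w_ij * tanh(x_j) + u_i) / a_i."""
    return [(sum(w[i][j] * math.tanh(x[j]) for j in range(2)) + U[i]) / A[i]
            for i in range(2)]

def equilibrium(w, iters=200):
    """Fixed-point iteration x <- g(x); it contracts here because the weights are small relative to a_i."""
    x = [0.0, 0.0]
    for _ in range(iters):
        x = g(x, w)
    return x

DH = [[D[i][j] * H[i][j] for j in range(2)] for i in range(2)]

# A discrete delay does not enter the equilibrium equation: only B + C matters.
x_discrete_only = equilibrium(combine(B, C))
# A distributed delay contributes d_ij * h_ij, so the fixed point moves.
x_with_distributed = equilibrium(combine(B, C, DH))

shift = max(abs(p - q) for p, q in zip(x_discrete_only, x_with_distributed))
```

Under these assumptions the discrete delays drop out of the equilibrium equation entirely, while the distributed delays change the effective weights and hence the fixed point, which is the qualitative difference the remark describes.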

When \(\tau _{ij}(t)=0\) for \(i, j\in \{1,2,\ldots ,n\}\), Eq. (1) turns into the following neural networks:

and its initial conditions are

where i is a natural number, belonging to the set \(\{1,2,\ldots ,n\}\).

Corollary 3

Suppose that (2), (3), and (5) hold. If for each i, \(i\in \{1,2,\ldots ,n \}\), we have

then the equilibrium point \(x^{*}\) exists and is unique in the system (35).

Corollary 4

Suppose that (2), (3), (5), and (36) hold. If we have \(\beta \geq 1\) and \(q > 0\) such that

and the equilibrium point \(x^{*}\) and each solution of Eq. (35) with the initial conditions (36) satisfy

then \(x^{*}\) has global exponential asymptotic stability.

When \(\tau _{ij}(t)=\tau (t)\) and \(h_{ij}(t)= h(t)\) for \(i, j\in \{1,2,\ldots ,n\}\), let \(\sup_{t\geq 0}\tau (t)\leq \tau \), and \(\sup_{t\geq 0}h(t)\leq h\). Hence Eq. (1) changes into

and its initial conditions are

where \(\varphi _{i}(s)\) is a real-valued continuous function, and

Corollary 5

Suppose that (2), (3), and (5) hold. If for each i, \(i\in \{1,2,\ldots ,n \}\), we have

then the equilibrium point \(x^{*}\) exists and is unique in the system (40).

Corollary 6

Suppose that (2)–(5) and (43) hold, and we have \(\beta \geq 1\) and \(q > 0\) such that

If the equilibrium point \(x^{*}\) and each solution of Eq. (40) with the initial conditions (41) satisfy

then \(x^{*}\) has global exponential asymptotic stability.

Remark 4

The system (40) is an RNN with distributed and discrete delays of the kind studied in Ref. [22]. In this paper, we show that the equilibrium point \(x^{*}\) of Eq. (40) has global exponential asymptotic stability via the Lyapunov function method, which is different from the method used in Ref. [22].

5 Simulation example

Example

We consider the following two-dimensional neural network model:

where \(f_{i}(x_{i}(t))=\tanh (x_{i}(t))\), \(\tau _{11}(t)=\tau _{21}(t)=0.49+0.49\sin (0.02t)\), \(\tau _{12}(t)=\tau _{22}(t)=0.48+0.48\cos (0.03t)\), \(h_{11}(t)=h_{21}(t)=1.5+e^{-t}\), and \(h_{12}(t)=h_{22}(t)=3+e^{-t}\). The initial conditions are \(x_{1}(s)=\ln (s+3.9)\), \(x_{2}(s)=0.4e^{s}-0.7\), \(s\in [-4,0]\).

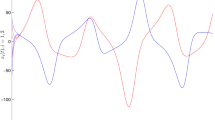

After comparison and a simple calculation, we see that Eq. (46) represents a two-dimensional RNN with discrete and distributed asynchronous time-varying delays, and that it satisfies all the assumptions of Theorem 1 and Theorem 2. In Fig. 1, we illustrate the state trajectories of \(x_{1}\) and \(x_{2}\) of model (46), which show that model (46) has a unique and exponentially asymptotically stable equilibrium point.

Figure 1: State trajectories of model (46)

In Fig. 2, we illustrate the state trajectories of \(x_{1}\) and \(x_{2}\) of model (46) under three other initial conditions: \(x_{1}(s)=0.4\), \(x_{2}(s)=0.7\); \(x_{1}(s)=-0.5+e^{s}\), \(x_{2}(s)=0.4e^{s}\); and \(x_{1}(s)=-0.9\), \(x_{2}(s)=-0.3\). They demonstrate that the exponential convergence of model (46) is global. Therefore, Figs. 1 and 2 together show the effectiveness of the results in this paper.

Figure 2: State trajectories of model (46) under three other initial conditions
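A simulation of this kind can be sketched with a forward Euler scheme. The sketch below is not the authors' Matlab code: it assumes the standard additive model form (decay term, undelayed term, discretely delayed term, and an integral of \(f_{j}(x_{j})\) over the window \([t-h_{ij}(t), t]\)), and the weights `A`, `B`, `C`, `D`, `U` are hypothetical placeholders, since the coefficients of model (46) are not reproduced here. Only the delay functions and the two constant initial conditions are taken from the example.

```python
import math

# Delays from the example; column j selects the delay (tau_1j = tau_2j, h_1j = h_2j).
def tau(j, t):
    return 0.49 + 0.49 * math.sin(0.02 * t) if j == 0 else 0.48 + 0.48 * math.cos(0.03 * t)

def h(j, t):
    return (1.5 if j == 0 else 3.0) + math.exp(-t)

# HYPOTHETICAL weights and inputs (not the coefficients of model (46)).
A = [4.0, 4.0]
B = [[0.2, -0.1], [0.1, 0.2]]
C = [[0.1, 0.1], [-0.1, 0.1]]
D = [[0.05, 0.05], [0.05, -0.05]]
U = [0.5, -0.3]

def simulate(phi, t_end=30.0, dt=0.01, horizon=4.0):
    """Forward Euler on the assumed model; phi[i](s) is the history on [-horizon, 0]."""
    n, m = 2, int(round(horizon / dt))
    # xs[k] is the state at time (k - m) * dt, so xs[m] is x(0).
    xs = [[phi[i]((k - m) * dt) for i in range(n)] for k in range(m + 1)]
    # cum[k][j] = dt * sum_{l < k} tanh(xs[l][j]): prefix sums for the integrals.
    cum = [[0.0] * n]
    for k in range(1, m + 1):
        cum.append([cum[-1][j] + dt * math.tanh(xs[k - 1][j]) for j in range(n)])
    for _ in range(int(round(t_end / dt))):
        k = len(xs) - 1
        t = (k - m) * dt
        x = xs[k]
        new = []
        for i in range(n):
            rhs = -A[i] * x[i] + U[i]
            for j in range(n):
                kd = max(0, k - int(round(tau(j, t) / dt)))  # index of t - tau_ij(t)
                kh = max(0, k - int(round(h(j, t) / dt)))    # index of t - h_ij(t)
                rhs += B[i][j] * math.tanh(x[j])
                rhs += C[i][j] * math.tanh(xs[kd][j])
                rhs += D[i][j] * (cum[k][j] - cum[kh][j])    # left Riemann sum of the integral
            new.append(x[i] + dt * rhs)
        cum.append([cum[k][j] + dt * math.tanh(x[j]) for j in range(n)])
        xs.append(new)
    return xs[-1]

# Two of the constant initial conditions listed in the text.
end_a = simulate([lambda s: 0.4, lambda s: 0.7])
end_b = simulate([lambda s: -0.9, lambda s: -0.3])
gap = max(abs(p - q) for p, q in zip(end_a, end_b))
```

With these placeholder weights the decay rates dominate the connection weights, so the two trajectories end up at essentially the same point, which mirrors the qualitative behavior reported for Fig. 2. The prefix sums keep the cost of each distributed-delay integral constant per step instead of proportional to the window length.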

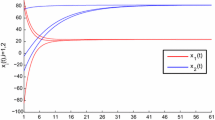

When the distributed delay is removed, model (46) becomes a two-dimensional RNN with discrete asynchronous time-varying delays. Figure 3 illustrates its state trajectories, which are marked as x1-discrete and x2-discrete, respectively. Fig. 3 also shows the state trajectories of \(x_{1}\) and \(x_{2}\) of model (46) without delays. From Fig. 3, we see that Eq. (46) without distributed delay and Eq. (46) without delay converge toward the same equilibrium point.

Figure 3: State trajectories of model (46) without distributed delay, or without delay

When the discrete delay is removed, model (46) becomes a two-dimensional RNN with distributed asynchronous time-varying delays. Figure 4 illustrates its state trajectories, which are marked as x1-distributed and x2-distributed, respectively. Fig. 4 also shows the state trajectories of \(x_{1}\) and \(x_{2}\) of model (46) without delays. From Fig. 4, we see that the trajectories of Eq. (46) with distributed asynchronous time-varying delays and of Eq. (46) without delay are different, which implies that the dynamical behavior of model (46) is affected by the distributed asynchronous time-varying delay.

Figure 4: State trajectories of model (46) without discrete delay, or without delay

In Fig. 5, we illustrate the state trajectories of model (46) with delays (i): \(\tau _{11}(t)=\tau _{21}(t)=0.49+0.49\sin (0.02t)\), \(\tau _{12}(t)=\tau _{22}(t)=0.48+0.48\cos (0.03t)\), \(h_{11}(t)=h_{21}(t)=1.5+e^{-t}\), \(h_{12}(t)=h_{22}(t)=3+e^{-t}\), and of model (46) with delays (ii): \(\tau _{11}(t)=\tau _{21}(t)=0.11+0.11\sin (0.02t)\), \(\tau _{12}(t)=\tau _{22}(t)=0.09+0.09\cos (0.03t)\), \(h_{11}(t)=h_{21}(t)=0.1+e^{-t}\), \(h_{12}(t)=h_{22}(t)=0.2+e^{-t}\), which are marked as x1-big and x2-big, and x1-small and x2-small, respectively. The maximum of the delays in (i) is larger than that in (ii). From Fig. 5, we see that the network with the larger delay upper bound takes longer to converge than the network with the smaller delay upper bound.

Figure 5: State trajectories of model (46) with different asynchronous time-varying delays
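The comparison between the two delay sets can be checked directly from the formulas given above. The following sketch evaluates each delay function on a time grid for \(t \geq 0\) and takes the largest value as an estimate of its upper bound; the helper name `sup_on_grid`, the grid length, and the step size are illustrative choices, not part of the article.

```python
import math

def sup_on_grid(fn, t_end=400.0, dt=0.01):
    """Largest sampled value of fn on [0, t_end]; the grid covers a full period of each sinusoid."""
    return max(fn(k * dt) for k in range(int(t_end / dt) + 1))

# Delay set (i), as listed in the text.
set_i = {
    "tau_11 = tau_21": lambda t: 0.49 + 0.49 * math.sin(0.02 * t),
    "tau_12 = tau_22": lambda t: 0.48 + 0.48 * math.cos(0.03 * t),
    "h_11 = h_21": lambda t: 1.5 + math.exp(-t),
    "h_12 = h_22": lambda t: 3.0 + math.exp(-t),
}

# Delay set (ii), as listed in the text.
set_ii = {
    "tau_11 = tau_21": lambda t: 0.11 + 0.11 * math.sin(0.02 * t),
    "tau_12 = tau_22": lambda t: 0.09 + 0.09 * math.cos(0.03 * t),
    "h_11 = h_21": lambda t: 0.1 + math.exp(-t),
    "h_12 = h_22": lambda t: 0.2 + math.exp(-t),
}

bounds_i = {name: sup_on_grid(fn) for name, fn in set_i.items()}
bounds_ii = {name: sup_on_grid(fn) for name, fn in set_ii.items()}

max_i = max(bounds_i.values())    # attained by h_12 at t = 0
max_ii = max(bounds_ii.values())  # attained by h_12 at t = 0
```

For set (i) the largest delay is \(h_{12}(0)=4\), which matches the length of the initial interval \([-4,0]\) used in the example; for set (ii) it is \(h_{12}(0)=1.2\).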

6 Conclusion

In the present paper, we discussed RNNs with mixed asynchronous time-varying delays. By the Lyapunov function method, some algebraic conditions were given under which the model has a unique and globally exponentially asymptotically stable equilibrium point. We also showed that the equilibrium point of a neural network with distributed asynchronous time-varying delays differs from that of the network without distributed delays. Finally, a numerical example and its simulations were given to demonstrate the effectiveness of our results. Future work could address the discrete-time analogue of the networks considered here, as well as their dynamical characteristics under impulses.

References

1. Watta, P.B., Wang, K., Hassoun, M.H.: Recurrent neural nets as dynamical Boolean systems with application to associative memory. IEEE Trans. Neural Netw. 8(6), 1268–1280 (1997)

2. Bao, G., Zeng, Z.: Analysis and design of associative memories based on recurrent neural network with discontinuous activation functions. Neurocomputing 77, 101–107 (2012)

3. Lee, T., Ching, P.C., Chan, L.W.: Isolated word recognition using modular recurrent neural networks. Pattern Recognit. 31(6), 751–760 (1998)

4. Juang, C.F., Chiou, C.T., Lai, C.L.: Hierarchical singleton-type recurrent neural fuzzy networks for noisy speech recognition. IEEE Trans. Neural Netw. 18(3), 833–843 (2007)

5. Cao, S., Cao, J.: Forecast of solar irradiance using recurrent neural networks combined with wavelet analysis. Appl. Therm. Eng. 25(2–3), 161–172 (2005)

6. Cao, Q., Ewing, B.T., Thompson, M.A.: Forecasting wind speed with recurrent neural networks. Eur. J. Oper. Res. 221(1), 148–154 (2012)

7. Xiong, Z., Zhang, J.: A batch-to-batch iterative optimal control strategy based on recurrent neural network models. J. Process Control 15(1), 11–21 (2005)

8. Tian, Y., Zhang, J., Morris, J.: Optimal control of a batch emulsion copolymerisation reactor based on recurrent neural network models. Chem. Eng. Process. Process Intensif. 41(6), 531–538 (2002)

9. Yang, X., Li, X., Xi, Q., Duan, P.: Review of stability and stabilization for impulsive delayed systems. Math. Biosci. Eng. 15(6), 1495–1515 (2018)

10. Song, Q., Yu, Q., Zhao, Z., Liu, Y., Alsaadi, F.E.: Boundedness and global robust stability analysis of delayed complex-valued neural networks with interval parameter uncertainties. Neural Netw. 103, 55–62 (2018)

11. Yang, D., Li, X., Qiu, J.: Output tracking control of delayed switched systems via state-dependent switching and dynamic output feedback. Nonlinear Anal. Hybrid Syst. 32, 294–305 (2019)

12. Song, Q., Chen, X.: Multistability analysis of quaternion-valued neural networks with time delays. IEEE Trans. Neural Netw. Learn. Syst. 29(11), 5430–5440 (2018)

13. Li, X., Yang, X., Huang, T.: Persistence of delayed cooperative models: impulsive control method. Appl. Math. Comput. 342, 130–146 (2019)

14. Park, J.H.: On global stability criterion for neural networks with discrete and distributed delays. Chaos Solitons Fractals 30, 897–902 (2006)

15. Liu, Y.R., Wang, Z.D., Liu, X.H.: Design of exponential state estimators for neural networks with mixed time delays. Phys. Lett. A 364, 401–412 (2007)

16. Zhang, H., Huang, Y., Wang, B., Wang, Z.: Design and analysis of associative memories based on external inputs of delayed recurrent neural networks. Neurocomputing 136, 337–344 (2014)

17. Wang, Z.D., Liu, Y.R., Liu, X.H.: On global asymptotic stability of neural networks with discrete and distributed delays. Phys. Lett. A 345, 299–308 (2005)

18. Feng, Y., Yang, X., Song, Q., Cao, J.: Synchronization of memristive neural networks with mixed delays via quantized intermittent control. Appl. Math. Comput. 339, 874–887 (2018)

19. Feng, Y., Xiong, X., Tang, R., Yang, X.: Exponential synchronization of inertial neural networks with mixed delays via quantized pinning control. Neurocomputing 310, 165–171 (2018)

20. Wang, Z.D., Shu, H.S., Liu, Y.R., Ho, D.W.C., Liu, X.H.: Robust stability analysis of generalized neural networks with discrete and distributed time delays. Chaos Solitons Fractals 30, 886–896 (2006)

21. Zeng, Z.G., Huang, T.W., Zheng, W.X.: Multistability of recurrent neural networks with time-varying delays and the piecewise linear activation function. IEEE Trans. Neural Netw. 21(8), 1371–1377 (2010)

22. Cao, J., Song, Q., Li, T., Luo, Q., Suna, C.Y., Zhang, B.Y.: Exponential stability of recurrent neural networks with time-varying discrete and distributed delays. Nonlinear Anal. 10, 2581–2589 (2009)

23. Yang, F., Zhang, C., Lien, D.C.H., Chung, L.Y.: Global asymptotic stability for cellular neural networks with discrete and distributed time-varying delays. Chaos Solitons Fractals 34, 1213–1219 (2007)

24. Song, Q., Yu, Q., Zhao, Z., Liu, Y., Alsaadi, F.E.: Dynamics of complex-valued neural networks with variable coefficients and proportional delays. Neurocomputing 275, 2762–2768 (2018)

25. Liu, X.W., Chen, T.P.: Global exponential stability for complex-valued recurrent neural networks with asynchronous time delays. IEEE Trans. Neural Netw. Learn. Syst. 27(3), 593–606 (2016)

26. Li, L., Li, C.: Discrete analogue for a class of impulsive Cohen–Grossberg neural networks with asynchronous time-varying delays. Neural Process. Lett. (2018). https://doi.org/10.1007/s11063-018-9819-3

Acknowledgements

The authors would like to thank the referees for their valuable comments and suggestions.

Availability of data and materials

Not applicable.

Funding

No funding.

Contributions

The authors contributed equally to the writing of this paper. The authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jia, S., Chen, Y. Global exponential asymptotic stability of RNNs with mixed asynchronous time-varying delays. Adv Differ Equ 2020, 200 (2020). https://doi.org/10.1186/s13662-020-02648-3
