Abstract

This paper is concerned with the estimation problem for incomplete information stochastic systems from discrete observations. The suboptimal estimation of the state is obtained by constructing the extended Kalman filtering equation. The approximate likelihood function is given by using a Riemann sum and an Itô sum to approximate the integrals in the continuous-time likelihood function. The consistency of the maximum likelihood estimator and the asymptotic normality of the error of estimation are proved by applying the martingale moment inequality, Hölder’s inequality, the Chebyshev inequality, the Burkholder–Davis–Gundy inequality and the uniform ergodic theorem. An example is provided to verify the effectiveness of the estimation methods.

1 Introduction

Stochastic differential equations are widely used in application areas such as engineering, information science and medical science [4, 5]. More recently, they have been applied to describe the dynamics of financial assets, asset portfolios and the term structure of interest rates, as in the Black–Scholes option pricing model [6], the Vasicek and Cox–Ingersoll–Ross pricing formulas [8, 9, 27], the Chan–Karolyi–Longstaff–Sanders model [10], the Constantinides model [11], and the Aït-Sahalia model [1]. Some parameters in these pricing formulas describe the dynamics of the related assets, but these parameters are unknown in practice. In the past few decades, several authors have studied the parameter estimation problem for economic models. For example, Yu and Phillips [33] used a Gaussian approach to estimate the parameters of continuous-time short-term interest rate models, while Overbeck and Rydén [21], Rossi [24] and Wei et al. [29] investigated the parameter estimation problem for the Cox–Ingersoll–Ross model by applying the maximum likelihood method, the least-squares method and the Gaussian method, respectively. Moreover, several methods have been used to estimate parameters in general nonlinear stochastic differential equations from continuous-time observations, for instance Bayes estimation [16, 22], maximum likelihood estimation [3, 30, 31] and M-estimation [25, 32]. However, it is impossible to observe a process continuously in time. Therefore, parametric inference based on sampled data is important in practice; examples include least-squares estimation [17, 18], approximate transition densities [2, 7] and adaptive maximum-likelihood-type estimation [26].

A variety of stochastic systems are described by stochastic differential equations [23], and sometimes the stochastic systems have incomplete information. Many authors studied the state estimation problem for incomplete information stochastic systems by using Kalman filtering or extended Kalman filtering [13, 15, 19, 28]. Furthermore, sometimes the parameters and states of a stochastic system are unknown at the same time. Therefore, the parameter estimation and state estimation need to be solved simultaneously. In recent years, some authors investigated the parameter estimation problem for incomplete information linear stochastic systems. For example, Deck and Theting [12] used Kalman filtering and the Bayes method to study the linear homogeneous stochastic systems. Kan et al. [14] discussed the linear nonhomogeneous stochastic systems based on the methods used in [12]. Mbalawata et al. [20] applied Kalman filtering and a maximum likelihood estimation to investigate the parameter and state estimation for linear stochastic systems.

As is well known, parameter estimation for incomplete information linear stochastic systems has been studied by some authors [12, 14, 20]. However, the asymptotic properties of the parameter estimator are not discussed in [20], and in [12, 14] only the drift parameter is considered. In this paper, the parameter estimation problem for incomplete information nonlinear stochastic systems is investigated from discrete observations. Firstly, the suboptimal estimation of the state is obtained by constructing the extended Kalman filtering equation. Secondly, the approximate likelihood function is given by using a Riemann sum and an Itô sum to approximate the integrals in the continuous-time likelihood function. In the approximate likelihood function, both the drift and the diffusion parameters are unknown. Finally, the consistency of the maximum likelihood estimator and the asymptotic normality of the error of estimation are proved by applying the martingale moment inequality, Hölder’s inequality, the Chebyshev inequality, the Burkholder–Davis–Gundy inequality and the uniform ergodic theorem.

This paper is organized as follows. In Sect. 2, the state estimation is derived and the approximate likelihood function is given. In Sect. 3, some important lemmas are proved, and the consistency of the estimator and the asymptotic normality of the error of estimation are discussed. In Sect. 4, an example is provided. The conclusion is given in Sect. 5.

2 Problem formulation and preliminaries

In this paper, the estimation problem for an incomplete information stochastic system is investigated. The stochastic system is described as follows:

where \(\theta \in \varTheta =\overline{\varTheta }_{0}\) (the closure of \(\varTheta _{0}\)) with \(\varTheta _{0}\) being an open bounded convex subset of \(\mathbb{R}\) is an unknown parameter, \((W_{t},t\geq 0)\) and \((V_{t},t\geq 0)\) are independent Wiener processes, \(X_{t}\) is ergodic, \(u_{\theta }\) is the invariant measure, \(\{Y_{t}\}\) is observable, while \(\{X_{t}\}\) is unobservable.

From now on the work is under the assumptions below.

Assumption 1

\(|f(x,\theta )-f(y,\theta )|\leq K(\theta )|x-y|\), \(|f(x,\theta )| \leq K_{1}(\theta )(1+|x|)\), \(\sup \{K(\theta ),K_{1}(\theta )\}<\infty \), \(\theta \in \varTheta \), \(x,y \in {\mathbb{R}}\).

Assumption 2

\(|h(x)-h(y)|\leq |x-y|\).

Assumption 3

\({\mathbb{E}}|X_{0}|^{p}<\infty \) for each \(p>0\).

Assumption 4

\({\mathbb{E}}[f(X_{0},\theta )(f(X_{0},\theta _{0})-\frac{1}{2}f(X_{0}, \theta ))]\) has the unique maximal value at \(\theta =\theta _{0}\), where \(\theta _{0}\) is the true parameter.

The likelihood function cannot be given directly because \(\{X_{t}\}\) is unobservable. Therefore, we must first solve the estimation problem for \(\{X_{t}\}\).

The state estimator is designed as follows:

According to (1) and (2), we have

From the Itô lemma and (3), it can be checked that

Taking the expectation from both sides of (4), from Assumption 2, we obtain

Therefore, when \(K_{t}={\mathbb{E}}(X_{t}-\widehat{X_{t}})^{2}\), (5) has the minimum value

From Assumption 1, one has

Since \(f(X_{t},\theta )\) is nonlinear, the optimal state estimation of \(X_{t}\) cannot be obtained, so the suboptimal state estimation is considered instead.

Consider

Let

one has

It is easy to check that

Then we obtain

Therefore, it is obvious that

Let

where \((V^{*}_{t},t\geq 0)\) is assumed to be a standard Wiener process defined on a complete probability space \((\varOmega , \mathscr{F}, \{ {\mathscr{F}}_{t}\}_{t\geq 0}, \mathrm{P})\).

Hence

It is assumed that the system (14) reaches the steady state, which means that

In summary, the suboptimal state estimation of \(X_{t}\) is (15).
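For discrete observations \(Y_{t_{0}},\ldots ,Y_{t_{n}}\) with step \(\Delta \), a state estimate of this kind can be computed recursively. The following is a minimal sketch, assuming a generic gain-form update with a constant steady-state gain; the function names, the constant `gain` and the coefficient choices are hypothetical and are not taken from the paper's equation (15).

```python
import math
from typing import Callable, List, Sequence


def filter_path(
    y: Sequence[float],
    dt: float,
    theta: float,
    gain: float,
    f: Callable[[float, float], float],
    h: Callable[[float], float],
    x0_hat: float = 0.0,
) -> List[float]:
    """Euler-discretised gain-form filter (an assumed, generic update rule).

    x_hat[i] = x_hat[i-1] + f(x_hat[i-1], theta) * dt
               + gain * (y[i] - y[i-1] - h(x_hat[i-1]) * dt)
    """
    x_hat = [x0_hat]
    for i in range(1, len(y)):
        prev = x_hat[-1]
        # Innovation: observed increment minus the increment predicted from x_hat.
        innovation = (y[i] - y[i - 1]) - h(prev) * dt
        x_hat.append(prev + f(prev, theta) * dt + gain * innovation)
    return x_hat


# Hypothetical coefficients, chosen only for illustration.
def hyperbolic_drift(x: float, theta: float) -> float:
    return theta * x / math.sqrt(1.0 + x * x)


def identity_observation(x: float) -> float:
    return x
```

With a zero innovation the update reduces to a plain Euler step of the drift, which is a quick sanity check on any implementation of this form.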

The likelihood function obeys the expression

Then the approximate likelihood function can be written as

Remark 1

The statement that system (14) reaches the steady state means that the solution of the Riccati equation satisfies \(\frac{d\gamma _{t}}{dt}=0\). Hence, we obtain \(\gamma _{t}=\gamma (\theta )\).
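The steady-state condition can be illustrated numerically. The sketch below assumes a scalar linear model \(dX_{t}=aX_{t}\,dt+dW_{t}\), \(dY_{t}=cX_{t}\,dt+dV_{t}\) with unit noise intensities, for which the Kalman–Bucy Riccati equation is \(\frac{d\gamma _{t}}{dt}=2a\gamma _{t}+1-c^{2}\gamma _{t}^{2}\). This linear model and the names `a`, `c` are assumptions made for illustration only; the system studied here is nonlinear.

```python
import math


def riccati_rhs(gamma: float, a: float, c: float) -> float:
    """Right-hand side of the assumed scalar Riccati equation
    d(gamma)/dt = 2*a*gamma + 1 - (c*gamma)**2 (unit noise intensities)."""
    return 2.0 * a * gamma + 1.0 - (c * gamma) ** 2


def steady_state_gamma(a: float, c: float) -> float:
    """Positive root of 2*a*gamma + 1 - c**2 * gamma**2 = 0."""
    return (a + math.sqrt(a * a + c * c)) / (c * c)


def integrate_riccati(
    gamma0: float, a: float, c: float, dt: float = 1e-3, t_end: float = 20.0
) -> float:
    """Explicit Euler integration of the Riccati equation up to time t_end."""
    gamma = gamma0
    for _ in range(int(round(t_end / dt))):
        gamma += dt * riccati_rhs(gamma, a, c)
    return gamma
```

For \(a=-1\), \(c=1\) the closed-form steady state is \(\sqrt{2}-1\), and the integrated solution settles at the same value, so the time-varying gain can be replaced by a constant once the transient has died out.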

Remark 2

In (15), both the drift term and the diffusion term contain a parameter. Thus, it is difficult to discuss the asymptotic properties of the estimator. This problem is solved in the next section.
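The two discretisations used to build the approximate likelihood function, a Riemann sum for the time integral and an Itô sum for the stochastic integral, can be sketched as follows. The helper names `riemann_sum` and `ito_sum` are hypothetical, and the Wiener path in the demonstration is simulated only to exercise the code.

```python
import random
from typing import List, Sequence


def riemann_sum(integrand: Sequence[float], dt: float) -> float:
    """Left-endpoint Riemann sum: sum_i g(t_{i-1}) * dt."""
    return sum(g * dt for g in integrand[:-1])


def ito_sum(integrand: Sequence[float], path: Sequence[float]) -> float:
    """Ito sum: sum_i g(t_{i-1}) * (Z_{t_i} - Z_{t_{i-1}})."""
    return sum(
        integrand[i - 1] * (path[i] - path[i - 1]) for i in range(1, len(path))
    )


# Demonstration on a simulated Wiener path (seeded for reproducibility).
rng = random.Random(0)
dt = 0.01
w: List[float] = [0.0]
for _ in range(1000):
    w.append(w[-1] + rng.gauss(0.0, dt ** 0.5))

ito = ito_sum(w, w)  # approximates the Ito integral of W dW
quad_var = sum((w[i] - w[i - 1]) ** 2 for i in range(1, len(w)))
```

The integrand is evaluated at the left endpoint \(t_{i-1}\) in both sums. For the Itô sum this choice matters: with it, the discrete identity \(\sum W_{t_{i-1}}(W_{t_{i}}-W_{t_{i-1}})=\frac{1}{2}(W_{t_{n}}^{2}-\sum (W_{t_{i}}-W_{t_{i-1}})^{2})\) holds exactly when \(W_{t_{0}}=0\), so `ito` equals `0.5 * (w[-1] ** 2 - quad_var)` up to rounding.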

3 Main results and proofs

The following lemmas are useful to derive our results.

Lemma 1

Assume that \(\{\widehat{X_{t}}\}\) is a solution of the stochastic differential equation (14) and that Assumptions 1–4 hold. Then, for any integer \(n\geq 1\) and \(0\leq s\leq t\),

Proof

Suppose \(\theta _{0}\) is the true parameter value; by applying Hölder’s inequality, it follows that

Since

from Assumption 3 together with the stationarity of the process, one has

From the Burkholder–Davis–Gundy inequality, it can be checked that

where \(C_{p}\) is a positive constant depending only on p.
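For reference, the Burkholder–Davis–Gundy inequality for an Itô integral with an adapted, square-integrable integrand \(g\) is usually stated in the following standard form, for \(p>0\) and a Wiener process \(W\). The symbols \(g\) and \(W\) are generic here and do not denote specific quantities of this proof:

\[
{\mathbb{E}} \biggl[ \sup_{s\leq u\leq t} \biggl\vert \int _{s}^{u} g_{r}\,dW_{r} \biggr\vert ^{p} \biggr] \leq C_{p}\, {\mathbb{E}} \biggl[ \biggl( \int _{s}^{t} g_{r}^{2}\,dr \biggr) ^{\frac{p}{2}} \biggr] .
\]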

Then we have

From the above analysis, it follows that

The proof is complete. □

Lemma 2

Under Assumptions 1 and 3, when \(\Delta \rightarrow 0\), one has

Proof

By applying Hölder’s inequality, it can be checked that

From Lemma 1 together with Assumptions 1 and 3, we have

and \({\mathbb{E}}[\frac{f(\widehat{X_{t_{i-1}}},\theta )}{\gamma ^{2}( \theta )}]^{2}\) is bounded.

From the above analysis, it follows that

as \(\Delta \rightarrow 0\).

The proof is complete. □

Lemma 3

Under Assumptions 1 and 3, we have

as \(\Delta \rightarrow 0\).

Proof

From the Hölder inequality and Assumption 1 together with the stationarity of the process, one has

where \(\sup \{K_{2}(\theta )\}<\infty \).

According to the above analysis together with Lemma 1, it follows that

as \(\Delta \rightarrow 0\).

The proof is complete. □

Remark 3

By employing the Hölder inequality, the Burkholder–Davis–Gundy inequality and the stationarity of the process, the above lemmas have been proved. These lemmas play a key role in the proof of the following main results.

In the following theorem, the consistency in probability of the maximum likelihood estimator is proved by applying a martingale moment inequality, the Chebyshev inequality, the uniform ergodic theorem and the results of Lemmas 1–3.

Theorem 1

When \(\Delta \rightarrow 0\), \(n\rightarrow \infty \) and \(n\Delta \rightarrow \infty \),

Proof

According to the expression of the approximate likelihood function and Eq. (1), it follows that

Then we have

From the martingale moment inequality, it can be checked that

where C and \(C_{1}\) are constants.

By applying the Chebyshev inequality, it can be found that

when \(\Delta \rightarrow 0\), \(n\rightarrow \infty \) and \(n\Delta \rightarrow \infty \).

From Lemmas 2–3 together with the uniform ergodic theorem (see e.g. [22]), one has

when \(\Delta \rightarrow 0\), \(n\rightarrow \infty \) and \(n\Delta \rightarrow \infty \).

Hence, it leads to the relation

when \(\Delta \rightarrow 0\), \(n\rightarrow \infty \) and \(n\Delta \rightarrow \infty \).

From Assumption 4, it is easy to check that

when \(\Delta \rightarrow 0\), \(n\rightarrow \infty \) and \(n\Delta \rightarrow \infty \).

The proof is complete. □
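The consistency statement can be illustrated by simulation in a simplified setting. The sketch below assumes that the state is observed directly, that the diffusion coefficient equals one, and that the drift has the form \(f(x,\theta )=\theta g(x)\) with \(g(x)=x/\sqrt{1+x^{2}}\); these are simplifications made for illustration and do not reproduce the partially observed setting of Theorem 1. Under these assumptions the approximate log-likelihood is \(\theta S_{1}-\frac{1}{2}\theta ^{2}S_{2}\), where \(S_{1}\) is an Itô sum and \(S_{2}\) a Riemann sum, and its maximiser is \(S_{1}/S_{2}\).

```python
import math
import random
from typing import List


def g(x: float) -> float:
    """Assumed drift shape: f(x, theta) = theta * g(x)."""
    return x / math.sqrt(1.0 + x * x)


def simulate_path(theta0: float, dt: float, n: int, seed: int = 0) -> List[float]:
    """Euler-Maruyama scheme for dX = theta0 * g(X) dt + dW (unit diffusion assumed)."""
    rng = random.Random(seed)
    x = [0.0]
    for _ in range(n):
        x.append(x[-1] + theta0 * g(x[-1]) * dt + rng.gauss(0.0, math.sqrt(dt)))
    return x


def approximate_mle(x: List[float], dt: float) -> float:
    """Maximiser of theta * S1 - 0.5 * theta**2 * S2, which is S1 / S2."""
    # S1: Ito sum of g(X) against the increments of X.
    s1 = sum(g(x[i - 1]) * (x[i] - x[i - 1]) for i in range(1, len(x)))
    # S2: left-endpoint Riemann sum of g(X)**2.
    s2 = sum(g(x[i - 1]) ** 2 * dt for i in range(1, len(x)))
    return s1 / s2
```

A call such as `approximate_mle(simulate_path(-2.0, 0.01, 100000, seed=1), 0.01)` returns an estimate of the true value \(\theta _{0}=-2\); increasing \(n\Delta \) tightens the estimate, in line with the condition \(n\Delta \rightarrow \infty \). A negative \(\theta _{0}\) is used so that the simulated process is mean-reverting.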

In the following theorem, the asymptotic normality of the error of estimation is proved by employing the martingale moment inequality, the Chebyshev inequality, the uniform ergodic theorem and the dominated convergence theorem.

Theorem 2

When \(\Delta \rightarrow 0\), \(n^{\frac{1}{2}}\Delta \rightarrow 0\) and \(n\Delta \rightarrow \infty \) as \(n\rightarrow \infty \),

Proof

Expanding \(\ell '_{n}(\theta _{0})\) about \(\widehat{\theta _{0}}\), it follows that

where θ̃ is between \(\widehat{\theta _{0}}\) and \(\theta _{0}\).

In view of Theorem 1, \(\ell '_{n}(\widehat{\theta _{0}})=0\); then

Since

we have

From the same method used in Theorem 1, it is easy to check that

and

By applying the results of Lemmas 2–3 and the uniform ergodic theorem, it follows that

and

Therefore, we have

According to the expression of \(\ell ''_{n}(\theta )\) and by employing the martingale moment inequality, the Chebyshev inequality, the uniform ergodic theorem and the dominated convergence theorem, it follows that

Hence, it can be found that

Since

it follows that

As

it is easy to check that \({\mathbb{E}}[f'(\widehat{X_{t_{i-1}}},\theta _{0})]^{2}\) is bounded.

From Lemma 1 and Assumption 1, we have

Then it follows that

when \(\Delta \rightarrow 0\), \(n^{\frac{1}{2}}\Delta \rightarrow 0\) and \(n\Delta \rightarrow \infty \) as \(n\rightarrow \infty \).

By applying the Chebyshev inequality, it can be found that

By applying the same methods, we have

It is obvious that

Hence, we have

when \(\Delta \rightarrow 0\), \(n^{\frac{1}{2}}\Delta \rightarrow 0\) and \(n\Delta \rightarrow \infty \) as \(n\rightarrow \infty \).

From the above analysis, it can be checked that

when \(\Delta \rightarrow 0\), \(n^{\frac{1}{2}}\Delta \rightarrow 0\) and \(n\Delta \rightarrow \infty \) as \(n\rightarrow \infty \).

The proof is complete. □
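The three sampling conditions of Theorem 2 are compatible. As a worked example, under the assumed choice \(\Delta =n^{-\alpha }\) with \(\frac{1}{2}<\alpha <1\) (a hypothetical sampling scheme used only for illustration),

\[
\Delta =n^{-\alpha }\rightarrow 0,\qquad n^{\frac{1}{2}}\Delta =n^{\frac{1}{2}-\alpha }\rightarrow 0,\qquad n\Delta =n^{1-\alpha }\rightarrow \infty \quad \text{as } n\rightarrow \infty .
\]

For instance, \(\alpha =\frac{3}{4}\) and \(n=10^{4}\) give \(\Delta =10^{-3}\), \(n^{\frac{1}{2}}\Delta =0.1\) and \(n\Delta =10\). The condition \(n^{\frac{1}{2}}\Delta \rightarrow 0\) fails for \(\alpha \leq \frac{1}{2}\), and \(n\Delta \rightarrow \infty \) fails for \(\alpha \geq 1\).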

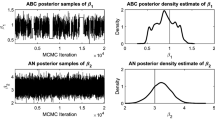

4 Example

Consider the incomplete information nonlinear stochastic system described as follows:

where θ is an unknown parameter, \((W_{t},t\geq 0)\) and \((V_{t},t\geq 0)\) are independent Wiener processes, \(u_{\theta }\) is the invariant measure, \(\{Y_{t}\}\) is observable, and \(\{X_{t}\}\) is unobservable. The equation for \(X_{t}\) is called a hyperbolic diffusion equation.

Firstly, it is easy to verify that \(X_{t}\) is an ergodic diffusion process.

Then, since

\({\mathbb{E}}[\theta \frac{X_{0}}{\sqrt{1+X_{0}^{2}}}(\theta _{0}\frac{X _{0}}{\sqrt{1+X_{0}^{2}}}-\frac{1}{2}\theta \frac{X_{0}}{\sqrt{1+X _{0}^{2}}})]\) attains the unique maximum at \(\theta =\theta _{0}\), it is easy to check that the coefficients satisfy Assumptions 1–4.
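The uniqueness of the maximum in Assumption 4 can be checked directly for this drift. Writing \(g(x)=\frac{x}{\sqrt{1+x^{2}}}\) (a shorthand introduced here) and assuming \({\mathbb{E}}[g(X_{0})^{2}]>0\), which holds whenever \(X_{0}\) is not almost surely zero, one has

\[
{\mathbb{E}} \biggl[ \theta g(X_{0}) \biggl( \theta _{0} g(X_{0})-\frac{1}{2}\theta g(X_{0}) \biggr) \biggr] = \biggl( \theta \theta _{0}-\frac{1}{2}\theta ^{2} \biggr) {\mathbb{E}} \bigl[ g(X_{0})^{2} \bigr] .
\]

The right-hand side is a strictly concave quadratic in θ whose derivative \((\theta _{0}-\theta ){\mathbb{E}}[g(X_{0})^{2}]\) vanishes only at \(\theta =\theta _{0}\), where the maximal value is \(\frac{1}{2}\theta _{0}^{2}{\mathbb{E}}[g(X_{0})^{2}]\).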

Therefore, it is easy to check that

when \(\Delta \rightarrow 0\), \(n\rightarrow \infty \) and \(n\Delta \rightarrow \infty \).

5 Conclusion

The aim of this paper is to estimate the parameter of an incomplete information stochastic system from discrete observations. The suboptimal estimation of the state has been obtained by constructing the extended Kalman filtering equation, and the approximate likelihood function has been given. The consistency of the maximum likelihood estimator and the asymptotic normality of the error of estimation have been proved by applying the martingale moment inequality, Hölder’s inequality, the Chebyshev inequality, the Burkholder–Davis–Gundy inequality and the uniform ergodic theorem. Further research topics will include parameter estimation for incomplete information stochastic systems driven by a Lévy process.

References

[1] Aït-Sahalia, Y.: Testing continuous-time models of the spot interest rate. Rev. Financ. Stud. 9, 385–426 (1995)

[2] Aït-Sahalia, Y.: Maximum likelihood estimation of discretely sampled diffusions: a closed-form approximation approach. Econometrica 70, 223–262 (2002)

[3] Barczy, M., Pap, G.: Asymptotic behavior of maximum likelihood estimator for time inhomogeneous diffusion processes. J. Stat. Plan. Inference 140, 1576–1593 (2010)

[4] Øksendal, B.: Stochastic Differential Equations: An Introduction with Applications. Springer, Berlin (2003)

[5] Bishwal, J.P.N.: Parameter Estimation in Stochastic Differential Equations. Springer, London (2008)

[6] Black, F., Scholes, M.: The pricing of options and corporate liabilities. J. Polit. Econ. 81, 637–654 (1973)

[7] Chang, J.Y., Chen, S.X.: On the approximate maximum likelihood estimation for diffusion processes. Ann. Stat. 39, 2820–2851 (2011)

[8] Cox, J., Ingersoll, J., Ross, S.: An intertemporal general equilibrium model of asset prices. Econometrica 53, 363–384 (1985)

[9] Cox, J., Ingersoll, J., Ross, S.: A theory of the term structure of interest rates. Econometrica 53, 385–408 (1985)

[10] Chan, K.C.: An empirical comparison of alternative models of the short-term interest rate. J. Finance 47, 1209–1227 (1992)

[11] Constantinides, G.M.: A theory of the nominal term structure of interest rates. Rev. Financ. Stud. 5, 531–552 (1992)

[12] Deck, T., Theting, T.G.: Robust parameter estimation for stochastic differential equations. Acta Appl. Math. 84, 279–314 (2004)

[13] Dong, H.: On \(H_{\infty }\) estimation of randomly occurring faults for a class of nonlinear time varying systems with fading channels. IEEE Trans. Autom. Control 61, 479–484 (2016)

[14] Kan, X., Shu, H.S., Che, Y.: Asymptotic parameter estimation for a class of linear stochastic systems using Kalman–Bucy filtering. Math. Probl. Eng. 2012, Article ID 342705 (2012). https://doi.org/10.1155/2012/342705

[15] Kannan, R.: Orientation estimation based on LKF using differential state. IEEE Sens. J. 15, 6156–6163 (2015)

[16] Kutoyants, Y.A.: Statistical Inference for Ergodic Diffusion Processes. Springer, London (2004)

[17] Long, H.: Parameter estimation for a class of stochastic differential equations driven by small stable noises from discrete observations. Acta Math. Sci. 30, 645–663 (2010)

[18] Long, H., Shimizu, Y., Sun, W.: Least squares estimators for discretely observed stochastic processes driven by small Lévy noises. J. Multivar. Anal. 116, 422–439 (2013)

[19] Lu, X., Xie, L., Zhang, H., et al.: Robust Kalman filtering for discrete-time systems with measurement delay. IEEE Trans. Circuits Syst. II, Express Briefs 54, 522–526 (2007)

[20] Mbalawata, I.S., Särkkä, S., Haario, H.: Parameter estimation in stochastic differential equations with Markov chain Monte Carlo and non-linear Kalman filtering. Comput. Appl. Stat. 28, 1195–1223 (2013)

[21] Overbeck, L., Rydén, T.: Estimation in Cox–Ingersoll–Ross model. Econom. Theory 13, 430–461 (1997)

[22] Prakasa Rao, B.L.S.: Statistical Inference for Diffusion Type Processes. Arnold, London (1999)

[23] Protter, P.E.: Stochastic Integration and Differential Equations: Stochastic Modelling and Applied Probability, 2nd edn. Applications of Mathematics (New York), vol. 21. Springer, Berlin (2004)

[24] Rossi, G.D.: Maximum likelihood estimation of the Cox–Ingersoll–Ross model using particle filters. Comput. Econ. 36, 1–16 (2010)

[25] Shimizu, Y.: Estimation of parameters for discretely observed diffusion processes with a variety of rates for information. Ann. Inst. Stat. Math. 64, 545–575 (2012)

[26] Uchida, M., Yoshida, N.: Adaptive estimation of an ergodic diffusion process based on sampled data. Stoch. Process. Appl. 122, 2885–2924 (2012)

[27] Vasicek, O.: An equilibrium characterization of the term structure. J. Financ. Econ. 5, 177–188 (1977)

[28] Wang, Z., Lam, J., Liu, X.H.: Filtering for a class of nonlinear discrete-time stochastic systems with state delays. J. Comput. Appl. Math. 201, 153–163 (2007)

[29] Wei, C., Shu, H.S., Liu, Y.R.: Gaussian estimation for discretely observed Cox–Ingersoll–Ross model. Int. J. Gen. Syst. 45, 561–574 (2016)

[30] Wei, C., Shu, H.S.: Maximum likelihood estimation for the drift parameter in diffusion processes. Stoch. Int. J. Probab. Stoch. Process. 88, 699–710 (2016)

[31] Wen, J.H., Wang, X.J., Mao, S.H., et al.: Maximum likelihood estimation of McKean–Vlasov stochastic differential equation and its application. Appl. Math. Comput. 274, 237–246 (2015)

[32] Yoshida, N.: Asymptotic behavior of M-estimator and related random field for diffusion process. Ann. Inst. Stat. Math. 42, 221–251 (1990)

[33] Yu, J., Phillips, P.C.B.: A Gaussian approach for continuous time models of the short-term interest rate. Econom. J. 4, 210–224 (2001)

Acknowledgements

Not applicable.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61403248.

Contributions

The author read and approved the final manuscript.

Ethics declarations

Competing interests

The author declares that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Wei, C. Estimation for incomplete information stochastic systems from discrete observations. Adv Differ Equ 2019, 227 (2019). https://doi.org/10.1186/s13662-019-2169-2

DOI: https://doi.org/10.1186/s13662-019-2169-2