Abstract

This paper studies the convergence of iterative learning control with initial state error for a class of fractional systems. Using the Laplace transform and the Mittag-Leffler function, the concept of a mild solution is introduced. Sufficient conditions for the convergence of open and closed-loop P-type iterative learning control are obtained. Some examples are given to illustrate the main results.

1 Introduction

In this paper, we analyze the convergence of iterative learning control with initial state error for the following fractional system:

where \({}^{\mathrm{c}}\mathrm{D}^{\alpha}_{t}\) denotes the Caputo fractional derivative of order α, \(0<\alpha<1\), \(A, B, C\in R^{n\times n}\), and \(u(t)\) is the control vector.

Iterative learning control (ILC) was first proposed by Uchiyama in 1978 (in Japanese), but only a few people noticed it at the time. In 1984, Arimoto et al. developed the ILC idea, studied effective algorithms, and established iterative learning control theory, after which more and more people paid attention to it.

Fractional calculus and fractional difference equations have attracted many authors in recent years, and a number of outstanding works have been published [1–12], because these equations describe many phenomena in engineering, physics, science, and controllability. Work on fractional order systems in iterative learning control first appeared in 2001. The field has since received extensive attention, and great progress was made over the following 15 years [13–19]; many fractional nonlinear systems have also been investigated [20–23]. To our knowledge, however, the problem considered here has not been studied very extensively. Iterative learning control theory usually assumes that the initial state of each run lies on the desired trajectory. In actual operation, however, the initial state of each iteration often deviates from the desired trajectory. We therefore consider system (1) and study the convergence of the learning law.

Motivated by the works mentioned above, we organize the rest of this paper as follows. In Section 2, we present some definitions and preliminaries that are used in the later sections. In Sections 3 and 4, we give convergence results for P-type ILC for some fractional systems. In Section 5, simulation examples are given to illustrate the main results.

In this paper, the norm for the n-dimensional vector \(w=(w_{1},w_{2},\ldots ,w_{n})\) is defined as \(\|w\|=\max_{1 \leq i \leq n }|w_{i}|\), and the λ-norm is defined as \(\|x\|_{\lambda}=\sup_{ t\in[0,T]}\{ e^{-\lambda t}|x(t)|\}\), \(\lambda>0\).
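The two norms can be evaluated numerically as follows. This is only a minimal sketch for a scalar signal sampled on a time grid; the function names `vector_norm` and `lambda_norm`, the grid, and the test signal \(x(t)=e^{t}\) are illustrative assumptions, and a sampled maximum only approximates the supremum from below.

```python
import numpy as np

def vector_norm(w):
    """Max norm ||w|| = max_i |w_i| of a finite-dimensional vector."""
    return float(np.max(np.abs(np.asarray(w, dtype=float))))

def lambda_norm(x, t, lam):
    """Sampled lambda-norm: max over the grid of e^{-lam * t} * |x(t)|.

    x and t are 1-D arrays of equal length (scalar signal, assumed here).
    """
    x = np.asarray(x, dtype=float)
    t = np.asarray(t, dtype=float)
    return float(np.max(np.exp(-lam * t) * np.abs(x)))

# Illustrative data (hypothetical): x(t) = e^t on [0, 1.8] with lambda = 2,
# so e^{-2t} |x(t)| = e^{-t}, whose largest value is attained at t = 0.
t = np.linspace(0.0, 1.8, 181)
x = np.exp(t)
print(vector_norm([1.0, -3.0, 2.0]))
print(lambda_norm(x, t, lam=2.0))
```

The exponential weight \(e^{-\lambda t}\) discounts late times, which is why a signal that grows like \(e^{t}\) still has a small λ-norm once \(\lambda\) is large enough.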

2 Some preliminaries for some fractional system

In this section, we give some definitions and preliminaries that are used throughout the paper. For more information, see [1–4].

Definition 2.1

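The integral

\[ I^{\alpha}_{t} f(t)=\frac{1}{\Gamma(\alpha)} \int_{0}^{t}(t-s)^{\alpha-1}f(s)\,ds,\quad \alpha>0, \]

is called the Riemann-Liouville fractional integral of order α, where Γ is the gamma function.

For a function \(f(t)\) given on the interval \([0,\infty)\), the expression

\[ {}^{\mathrm{L}}\mathrm{D}^{\alpha}_{t} f(t)=\frac{1}{\Gamma(n-\alpha)} \biggl(\frac{d}{dt} \biggr)^{n} \int_{0}^{t}(t-s)^{n-\alpha-1}f(s)\,ds, \]

where \(n=[\alpha]+1\) and \([\alpha]\) denotes the integer part of the number α, is called the Riemann-Liouville fractional derivative of order \(\alpha>0\).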

Definition 2.2

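Caputo’s derivative of order α for a function \(f:[0,\infty)\rightarrow R \) can be written as

\[ {}^{\mathrm{c}}\mathrm{D}^{\alpha}_{t} f(t)=\frac{1}{\Gamma(n-\alpha)} \int_{0}^{t}(t-s)^{n-\alpha-1}f^{(n)}(s)\,ds,\quad n=[\alpha]+1, \]

where \([\alpha]\) denotes the integer part of the real number α.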
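As a quick sanity check of the definition, consider a small worked example (the choices \(f(t)=t\) and \(\alpha=\frac{1}{2}\) are illustrative assumptions, not taken from the results of this paper). Here \(n=1\) and \(f'(s)=1\), so the standard Caputo formula gives

\[ {}^{\mathrm{c}}\mathrm{D}^{1/2}_{t}\, t=\frac{1}{\Gamma(\frac{1}{2})} \int_{0}^{t}(t-s)^{-\frac{1}{2}}\,ds =\frac{1}{\sqrt{\pi}}\cdot2\sqrt{t}=2\sqrt{\frac{t}{\pi}}. \]

This agrees with the power rule \({}^{\mathrm{c}}\mathrm{D}^{\alpha}_{t} t^{p}=\frac{\Gamma(p+1)}{\Gamma(p-\alpha+1)}t^{p-\alpha}\) for \(p=1\), since \(\Gamma(2)/\Gamma(\frac{3}{2})=2/\sqrt{\pi}\).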

Definition 2.3

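The two-parameter function of the Mittag-Leffler type is defined by

\[ E_{\alpha,\beta}(z)=\sum_{k=0}^{\infty}\frac{z^{k}}{\Gamma(\alpha k+\beta)},\quad \alpha>0, \beta>0. \]

If \(\beta=1\), we get the Mittag-Leffler function of one parameter,

\[ E_{\alpha}(z)=\sum_{k=0}^{\infty}\frac{z^{k}}{\Gamma(\alpha k+1)}. \]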
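For numerical experiments, the Mittag-Leffler series \(E_{\alpha,\beta}(z)=\sum_{k\ge0} z^{k}/\Gamma(\alpha k+\beta)\) can be summed directly when \(|z|\) is moderate. The sketch below is one simple way to do this, not the method used in this paper; the function name `mittag_leffler`, the truncation length, and the restriction to scalar arguments with \(\alpha>0\), \(\beta>0\) are assumptions made for illustration.

```python
import math

def mittag_leffler(z, alpha, beta=1.0, terms=100):
    """Truncated series sum_{k < terms} z^k / Gamma(alpha*k + beta).

    Assumes a real scalar z of moderate size, alpha > 0 and beta > 0.
    """
    total = 0.0
    for k in range(terms):
        g = alpha * k + beta
        if g > 170.0:
            # math.gamma overflows shortly beyond this point; for moderate |z|
            # the remaining terms are negligible, so the sum stops here.
            break
        total += z**k / math.gamma(g)
    return total

# Two classical special cases serve as checks:
#   E_{1,1}(z)   = exp(z)
#   E_{1/2,1}(z) = exp(z^2) * erfc(-z)
print(mittag_leffler(1.0, 1.0), math.e)
print(mittag_leffler(1.0, 0.5), math.exp(1.0) * math.erfc(-1.0))
```

Direct summation loses accuracy for large \(|z|\), especially for negative arguments where terms cancel; dedicated algorithms are preferable in that regime.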

Now, according to [24–29], we shall give the following lemma.

Lemma 2.4

The general solution of equation (1) is given by

Lemma 2.5

By Definition 2.4 in [30], the operators \(S_{\alpha,1}(t)\), \(S_{\alpha,\alpha}(t)\), \(S_{\alpha,\alpha-1}(t)\) are exponentially bounded. With the constants \(C_{0}=\frac{1}{\alpha}\), \(C_{1}=\frac{1}{\alpha}\|A\|^{\frac{1-\alpha}{\alpha}}\), \(C_{2}=\frac {1}{\alpha}\|A\|^{\frac{2-\alpha}{\alpha}}\), \(e_{\alpha}(t)=e^{\|A\| ^{\frac{1}{\alpha}}t}\), and \(M=e_{\alpha}(b)\), we have

3 Open and closed-loop case

In this section, we consider the following fractional system, where \(k=0,1,2,3,\ldots\) is the iteration index:

For equation (4), we apply the following open and closed-loop P-type ILC algorithm, \(t\in[0,b]\):

where \(L_{1}\), \(L_{2}\) are parameters to be determined, \(e_{k}(t)=y_{d}(t)-y_{k}(t)\) is the tracking error, and \(y_{d}(t)\) is a given function. The initial state of each iteration is

We make the following assumptions:

- (H1): \(1-\lambda^{-1}C_{1}M\|C\|\|L_{2}B\|>0\),

- (H2): \(\frac{\|I-C S_{\alpha,1}(A,t)B L_{1}\|+\lambda^{-1}C_{1}M\| C\|\|L_{1}B\|}{1-\lambda^{-1}C_{1}M\|C\|\|L_{2}B\|}<1\).

Theorem 3.1

Assume that the open and closed-loop P-type ILC algorithm (5) is used and that (H1) and (H2) hold. Let \(y_{k}(\cdot)\) be the output of equation (4). If the initial state of each iteration satisfies (6), then \(\lim_{k\to\infty}\| e_{k}\|_{\lambda}=0\), \(t\in J\).

Proof

According to (2), (5), and (6), we know

so the \((k+1)\)th iterative error is

take the norm of (7),

take the λ-norm of (8),

if \(1-\lambda^{-1}C_{1}M\|C\|\|L_{2}B\|>0\),

By (H2), \(\frac{\|I-C S_{\alpha,1}(A,t)B L_{1}\|+\lambda^{-1}C_{1}M\|C\|\|L_{1}B\|}{1-\lambda^{-1}C_{1}M\|C\|\|L_{2}B\|}<1\), so (10) is a contraction mapping, and it follows from the contraction mapping principle that \(\lim_{k\to\infty}\| e_{k}\|_{\lambda}=0\), \(t\in J\). This completes the proof. □

Theorem 3.1 implies that the tracking error \(e_{k}(t)\) depends on C and \(x_{k}(t)\). It is also observed from (9) that the boundedness of the parameters C, B, \(L_{1}\), \(L_{2}\) implies the boundedness of \(\|e_{k}\|_{\lambda}\), so Theorem 3.1 indirectly indicates that the output error also depends on \(\frac{\|I-C S_{\alpha,1}(A,t)B L_{1}\|+\lambda^{-1}C_{1}M\|C\|\|L_{1}B\|}{1-\lambda ^{-1}C_{1}M\|C\|\|L_{2}B\|}\). Based on this result, we can carry the discussion further.

Corollary 3.2

Suppose that all the conditions of Theorem 3.1 hold, so that \(\lim_{k\to\infty}\| e_{k}\|_{\lambda}=0\). Then

Proof

From Theorem 3.1, the important condition is \(\frac{\|I-C S_{\alpha,1}(A,t)B L_{1}\|+\lambda^{-1}C_{1}M\|C\|\|L_{1}B\|}{1-\lambda ^{-1}C_{1}M\|C\|\|L_{2}B\|}<1\), which implies that

we can get

□

4 P-type ILC for some fractional system with random disturbance

In this section, we consider the following fractional system, where \(k=0,1,2,3,\ldots\) is the iteration index:

where \(\omega_{k}(t)\), \(\nu_{k}(t)\) are random disturbances.

We first impose the following assumptions on the data of the problem:

- (H3): \(\|\omega_{k} \|_{\lambda}\leq\varepsilon_{1}\), \(\|\nu_{k} \| _{\lambda}\leq\varepsilon_{2}\) for some positive constants \(\varepsilon _{1}\), \(\varepsilon_{2}\),

- (H4): \(\rho_{1}=\|I+CS_{\alpha,1}(A,t)L_{2} B \|-\lambda^{-1}C_{1}\| C\|\|L_{2}B\|M>0\), \(\rho_{2}=\|I-CS_{\alpha,1}(A,t)L_{1} B \|+\lambda^{-1}C_{1}\|C\|\|L_{1}B\|M\).

For equation (11), we choose the following open and closed-loop P-type ILC algorithm, \(t\in[0,b]\):

where \(L_{1}\), \(L_{2}\) are parameters to be determined, \(e_{k}(t)=y_{d}(t)-y_{k}(t)\) is the tracking error, and \(y_{d}(t)\) is a given function.

Assume that the initial state of each iteration is given by (13), with the same parameters \(L_{1}\), \(L_{2}\). We have

Theorem 4.1

Assume that hypotheses (H3) and (H4) are satisfied, and let \(y_{k}(\cdot)\) be the output of equation (11). If \(\varepsilon_{1}\rightarrow0\), \(\varepsilon_{2}\rightarrow0\), and \(\rho _{1}>\rho_{2}\), then the open and closed-loop P-type ILC (12) guarantees that \(\lim_{k\to\infty}\| e_{k}\|_{\lambda}=0\), \(t\in J\).

Proof

According to (2) and assumptions (H3), (H4), we know

the \((k+1)\)th iterative error is

Taking the norm of (14), it is easy to obtain

once more using the λ-norm, we have

Invoking (H3) and (H4) and setting \(\varepsilon=\lambda^{-1}C_{1}\varepsilon_{1}M\| C\|+\varepsilon_{2}\), we obtain

which implies that

If \(\varepsilon_{1}\rightarrow0\) and \(\varepsilon_{2}\rightarrow0\), then \(\varepsilon\rightarrow0\), and thus \(\lim_{k\to\infty}\| e_{k}\|_{\lambda}=0\), \(t\in J\). This completes the proof. □

Theorem 4.1 rests on two conditions. On the one hand, the random disturbances affect system (11), and \(\varepsilon _{1}\rightarrow0\), \(\varepsilon_{2}\rightarrow0\) mean that this effect is very small. On the other hand, the condition \(\rho_{1}>\rho_{2}\) is required; the following corollary elaborates on it.
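The roles of \(\rho_{1}>\rho_{2}\) and of the disturbance level can be illustrated numerically. The sketch below assumes that the λ-norm estimate in the proof has the form \(\rho_{1}\|e_{k+1}\|_{\lambda}\leq\rho_{2}\|e_{k}\|_{\lambda}+\varepsilon\) and iterates the corresponding upper bound \(a_{k+1}=(\rho_{2}a_{k}+\varepsilon)/\rho_{1}\); this recursion form, the function name `error_bound_sequence`, and the starting value are assumptions, while \(\rho_{1}=1.65\), \(\rho_{2}=0.65\) are the values quoted in Section 5.2.

```python
def error_bound_sequence(a0, rho1, rho2, eps, iterations):
    """Iterate the upper-bound recursion a_{k+1} = (rho2 * a_k + eps) / rho1."""
    bounds = [a0]
    for _ in range(iterations):
        bounds.append((rho2 * bounds[-1] + eps) / rho1)
    return bounds

# Values of rho1, rho2 from Section 5.2; a0 and eps are illustrative.
rho1, rho2, eps = 1.65, 0.65, 1e-10
bounds = error_bound_sequence(a0=1.0, rho1=rho1, rho2=rho2, eps=eps, iterations=200)

# When rho1 > rho2 the recursion is a contraction with ratio rho2 / rho1 < 1,
# and its fixed point is eps / (rho1 - rho2).
fixed_point = eps / (rho1 - rho2)
print(bounds[:5])
print(bounds[-1], fixed_point)
```

Under this assumed recursion the bound contracts geometrically toward \(\varepsilon/(\rho_{1}-\rho_{2})\), which vanishes as \(\varepsilon\rightarrow0\); if \(\rho_{1}\leq\rho_{2}\) the bound no longer contracts.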

Corollary 4.2

Suppose that all the conditions of Theorem 4.1 hold, so that \(\lim_{k\to\infty}\| e_{k}(t)\|_{\lambda}=0\). Then t satisfies

Proof

According to (H4), \(\|I+CS_{\alpha,1}(A,t)L_{2} B \|-\lambda ^{-1}C_{1}\|C\|\|L_{2}B\|M>0\), then

From Theorem 4.1, we know that \(\varepsilon_{1}\rightarrow0\) and \(\varepsilon_{2}\rightarrow0\), and the condition is

which yields \(\frac{\ln|\frac{C_{1}M}{\lambda C_{0}}|}{\|A\|^{\frac {1}{\alpha}}}< t \). Finally, we obtain the estimate

□

5 Simulations

In this section, we will give two simulation examples to demonstrate the validity of the algorithms.

5.1 P-type ILC with initial state error

with the iterative learning control and initial state error

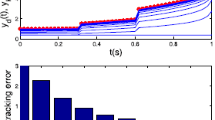

We set the initial control \(u_{0}(\cdot)=0\), \(y_{d}(t)=3t^{2}(1-5t)\), \(t\in(0,1.8)\), and take \(\alpha=0.5\), \(A=1\), \(B=0.2\), \(C=0.5\), \(\lambda =2\), \(L_{1}=L_{2}=0.5\). Then \(C_{0}=2\), \(C_{1}=2\), \(\lambda-\|A\|^{\frac {1}{\alpha}}=1>0\), \(M\approx6>0\), \(1-\lambda^{-1}C_{1}M\|C\|\|L_{2}B\| \approx0.7>0\), and \(\frac{\|I-C S_{\alpha,1}(A,t)B L_{1}\|+\lambda ^{-1}C_{1}M\|C\|\|L_{1}B\|}{1-\lambda^{-1}C_{1}M\|C\|\|L_{2}B\|}\approx \frac{0.5}{0.7}<1\), so all conditions of Theorem 3.1 are satisfied.
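The constants and the quantity in (H1) can be recomputed from the formulas of Lemma 2.5. The short script below is a sketch under the assumption that the interval endpoint is \(b=1.8\) (read off from \(t\in(0,1.8)\)); the variable names are illustrative. It does not evaluate the term \(\|I-C S_{\alpha,1}(A,t)B L_{1}\|\), which depends on t.

```python
import math

# Parameters of Section 5.1; b = 1.8 is an assumption taken from t in (0, 1.8).
alpha, lam, b = 0.5, 2.0, 1.8
A, B, C = 1.0, 0.2, 0.5
L1 = L2 = 0.5

norm_A = abs(A)
C0 = 1.0 / alpha                                  # C_0 = 1/alpha
C1 = norm_A ** ((1.0 - alpha) / alpha) / alpha    # C_1 = ||A||^{(1-alpha)/alpha} / alpha
M = math.exp(norm_A ** (1.0 / alpha) * b)         # M = e_alpha(b) = exp(||A||^{1/alpha} b)

# Left-hand side of (H1): 1 - lambda^{-1} C_1 M ||C|| ||L_2 B||
h1 = 1.0 - C1 * M * abs(C) * abs(L2 * B) / lam

print(C0, C1)
print(M)    # close to the quoted M ~ 6
print(h1)   # close to the quoted value ~ 0.7
```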

The simulation results are shown in Figure 1 and Figure 2. For the open and closed-loop P-type ILC system (17), the output tracks the desired trajectory gradually as the number of iterations increases. We do not use a single iteration rate to obtain the result, because in the later iterations the output of the system may jump around the desired trajectory. We therefore adopt a correction method: when \(e(k)>0\), \(u(k)=u(k)-0.5\times e(k)\), and when \(e(k)<0\), \(u(k)=u(k)+0.5\times e(k) \), where k is the iteration number. With this correction, the result approaches the desired trajectory stably and quickly. From Figure 2, the tracking error tends to zero at the 15th iteration, so the iterative learning control is feasible and efficient.

5.2 P-type ILC with random disturbance

Consider the following P-type ILC system:

with the iterative learning control and initial state error

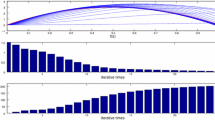

We set the initial control \(u_{0}(\cdot)=0\), \(y_{d}(t)=5t^{2}-t\), \(t\in (0,1.8)\), and take \(\alpha=0.5\), \(A=1\), \(B=0.2\), \(C=1\), \(\lambda=2\), \(L_{1}=1\), \(L_{2}=0.5\). Then \(C_{0}=2\), \(C_{1}=2\), \(\rho_{1}\approx1.65\), \(\rho _{2}\approx0.65\), and with the small disturbance bounds \(\varepsilon_{1}=10^{-15}\), \(\varepsilon _{2}=10^{-10}\), all conditions of Theorem 4.1 are satisfied. We again use a correction method: when \(e(k)>0\), \(u(k)=u(k)-m\times e(k)\), and when \(e(k)<0\), \(u(k)=u(k)+m\times e(k) \), where k is the iteration number and m is a parameter. We set \(m=0.5, 0.7, 1\); the output of the system is shown in Figure 3, Figure 4, and Figure 5, where the symbol ∗∗∗ denotes the desired trajectory and the solid line denotes the output of the system. The tracking error is shown in Figure 6, Figure 7, and Figure 8, which plot the tracking error against the number of iterations.
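Read literally, the two branches of the correction rule both subtract \(m|e(k)|\) from the control. The sketch below makes this explicit for a single control sample; the function name `corrected_control` and the treatment of \(e(k)=0\) (leaving the control unchanged) are assumptions, since the text gives no rule for that case.

```python
def corrected_control(u, e, m):
    """Apply the sign-dependent correction to one control sample u."""
    if e > 0:
        return u - m * e
    if e < 0:
        return u + m * e
    # e == 0: no rule is stated; leaving u unchanged is an assumption.
    return u

# Both branches coincide with u - m * |e| for each of the three gains used.
for m in (0.5, 0.7, 1.0):
    for e in (-0.2, 0.0, 0.3):
        print(m, e, corrected_control(1.0, e, m), 1.0 - m * abs(e))
```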

From Figures 3-8 and Table 1, we find that the tracking error tends to zero within 7 iterations, so the output of the system tracks the desired trajectory almost perfectly. Comparing the three cases, when \(m=1\) only 2 iterations are needed and the tracking error is 0.0001, so this case gives the best tracking performance over the iteration domain.

References

[1] Miller, KS, Ross, B: An Introduction to the Fractional Calculus and Differential Equations. Wiley, New York (1993)

[2] Kilbas, AA, Srivastava, HM, Trujillo, JJ: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

[3] Lakshmikantham, V, Leela, S, Devi, JV: Theory of Fractional Dynamic Systems. Cambridge Academic Publishers, Cambridge (2009)

[4] Diethelm, K: The Analysis of Fractional Differential Equations. Springer, Berlin (2010)

[5] Wang, JR, Zhou, Y, Medved, M: On the solvability and optimal controls of fractional integrodifferential evolution systems with infinite delay. J. Optim. Theory Appl. 152, 31-50 (2012)

[6] Zhang, L, Ahmad, B, Wang, G: Explicit iterations and extremal solutions for fractional differential equations with nonlinear integral boundary conditions. Appl. Math. Comput. 268, 388-392 (2015)

[7] Zhang, X: On the concept of general solution for impulsive differential equations of fractional-order \(q\in(1, 2)\). Appl. Math. Comput. 268, 103-120 (2015)

[8] Ge, F-D, Zhou, H-C, Ko, C-H: Approximate controllability of semilinear evolution equations of fractional order with nonlocal and impulsive conditions via an approximating technique. Appl. Math. Comput. 275, 107-120 (2016)

[9] Arthi, G, Park, JH, Jung, HY: Existence and exponential stability for neutral stochastic integrodifferential equations with impulses driven by a fractional Brownian motion. Commun. Nonlinear Sci. Numer. Simul. 32, 145-157 (2016)

[10] Hernández, E, O’Regan, D, Balachandran, K: On recent developments in the theory of abstract differential equations with fractional derivatives. Nonlinear Anal. 73, 3462-3471 (2010)

[11] Zayed, EME, Amer, YA, Shohib, RMA: The fractional complex transformation for nonlinear fractional partial differential equations in the mathematical physics. J. Assoc. Arab Univ. Basic Appl. Sci. 19, 59-69 (2016)

[12] Zhang, X, Zhang, B, Repovš, D: Existence and symmetry of solutions for critical fractional Schrödinger equations with bounded potentials. Nonlinear Anal., Real World Appl. 142, 48-68 (2016)

[13] Bien, Z, Xu, JX: Iterative Learning Control Analysis: Design, Integration and Applications. Springer, New York (1998)

[14] Chen, YQ, Wen, C: Iterative Learning Control: Convergence, Robustness and Applications. Springer, Berlin (1999)

[15] Norrlof, M: Iterative Learning Control: Analysis, Design, and Experiments. Linkoping Studies in Science and Technology, Dissertations, Sweden (2000)

[16] Xu, JX, Tan, Y: Linear and Nonlinear Iterative Learning Control. Springer, Berlin (2003)

[17] Wang, Y, Gao, F, Doyle III, FJ: Survey on iterative learning control, repetitive control, and run-to-run control. J. Process Control 19, 1589-1600 (2009)

[18] de Wijdeven, JV, Donkers, T, Bosgra, O: Iterative learning control for uncertain systems: robust monotonic convergence analysis. Automatica 45, 2383-2391 (2009)

[19] Xu, JX: A survey on iterative learning control for nonlinear systems. Int. J. Control 84, 1275-1294 (2011)

[20] Li, Y, Chen, YQ, Ahn, HS: Fractional-order iterative learning control for fractional-order systems. Asian J. Control 13, 54-63 (2011)

[21] Lan, Y-H: Iterative learning control with initial state learning for fractional order nonlinear systems. Comput. Math. Appl. 64, 3210-3216 (2012)

[22] Lin, M-T, Yen, C-L, Tsai, M-S, Yau, H-T: Application of robust iterative learning algorithm in motion control system. Mechatronics 23, 530-540 (2013)

[23] Yan, L, Wei, J: Fractional order nonlinear systems with delay in iterative learning control. Appl. Math. Comput. 257, 546-552 (2015)

[24] Liu, S, Wang, JR, Wei, W: Analysis of iterative learning control for a class of fractional differential equations. J. Appl. Math. Comput. 53, 17-31 (2017)

[25] Liu, S, Debbouche, A, Wang, J: On the iterative learning control for stochastic impulsive differential equations with randomly varying trial lengths. J. Comput. Appl. Math. 312, 47-57 (2017)

[26] Zhou, Y, Jiao, F: Existence of mild solutions for fractional neutral evolution equations. Comput. Math. Appl. 59, 1063-1077 (2010)

[27] Mophou, GM, N’Guérékata, GM: Existence of mild solutions of some semilinear neutral fractional functional evolution equations with infinite delay. Appl. Math. Comput. 216, 61-69 (2010)

[28] Wei, J: The controllability of fractional control systems with control delay. Comput. Math. Appl. 64, 3153-3159 (2012)

[29] Yan, L, Wei, J: Fractional order nonlinear systems with delay in iterative learning control. Appl. Math. Comput. 257, 546-552 (2015)

[30] Bazhlekova, E: Fractional evolution equations in Banach spaces. PhD thesis, Eindhoven University of Technology, Holland (2001)

Acknowledgements

This work was financially supported by the Zunyi Normal College Doctoral Scientific Research Fund BS[2014]19, BS[2015]09, Guizhou Province Mutual Fund LH[2015]7002, Guizhou Province Department of Education Fund KY [2015]391, [2016]046, Guizhou Province Department of Education teaching reform project [2015]337, Guizhou Province Science and technology fund (qian ke he ji chu) [2016]1160, [2016]1161, Zunyi Science and technology talents [2016]15.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

The authors contributed equally to this work. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Liu, X., Li, Y. The convergence analysis of P-type iterative learning control with initial state error for some fractional system. J Inequal Appl 2017, 29 (2017). https://doi.org/10.1186/s13660-017-1302-6

DOI: https://doi.org/10.1186/s13660-017-1302-6