Abstract

Neural field equations are used to describe the spatio-temporal evolution of the activity in a network of synaptically coupled populations of neurons in the continuum limit. Their heuristic derivation involves two approximation steps. Under the assumption that each population in the network is large, the activity is described in terms of a population average. The discrete network is then approximated by a continuum. In this article we make the two approximation steps explicit. Extending a model by Bressloff and Newby, we describe the evolution of the activity in a discrete network of finite populations by a Markov chain. In order to determine finite-size effects—deviations from the mean-field limit due to the finite size of the populations in the network—we analyze the fluctuations of this Markov chain and set up an approximating system of diffusion processes. We show that a well-posed stochastic neural field equation with a noise term accounting for finite-size effects on traveling wave solutions is obtained as the strong continuum limit.


1 Introduction

The analysis of networks of neurons of growing size quickly becomes involved from a computational as well as from an analytic perspective when one tracks the spiking activity of every neuron in the network. It can therefore be useful to zoom out from the microscopic perspective and identify a population activity as an average over a certain group of neurons. In the heuristic derivation of such population models it is usually assumed that each of the populations in the network is infinite, such that, in the spirit of the law of large numbers, the description of the activity in each population reduces to a description of the mean. By considering a spatially extended network and letting the density of populations go to infinity, neural field equations are obtained as the continuum limit of these models. Here we consider the voltage-based neural field equation, which is a nonlocal evolution equation of the form

\[ \partial_{t}u(x,t) = -u(x,t) + \int_{-\infty}^{\infty} w(x-y)\,F\bigl(u(y,t)\bigr)\,dy, \tag{1} \]

where \(u(x,t)\) describes the average membrane potential in the population at x at time t, \(w:\mathbb {R}\rightarrow[0,\infty)\) is a kernel describing the strengths of the synaptic connections between the populations, and the gain function \(F:\mathbb {R}\rightarrow[0, 1]\) relates the potential to the activity in the population.

Neural field equations were first introduced by Amari [1] and Wilson and Cowan [2, 3] and have since been used extensively to study the spatio-temporal dynamics of the activity in coupled populations of neurons. While they are of a relatively simple form, they exhibit a variety of interesting spatio-temporal patterns. For an overview see for example [4–8]. In this article we will concentrate on traveling wave solutions, modeling the propagation of activity, which were proven to exist in [9].

The communication of neurons is subject to noise. It is therefore crucial to study stochastic versions of (1). While several sources of noise have been identified on the single neuron level, it is not clear how noise translates to the level of populations. Since neural field equations are derived as mean-field limits, the usual effects of noise should have averaged out on this level. However, the actual finite size of the populations leads to deviations from the mean-field behavior, suggesting finite-size effects as an intrinsic source of noise.

The (heuristic) derivation of neural field equations involves two approximation steps. First, the local dynamics in each population is reduced to a description of the mean activity. Second, the discrete network is approximated by a continuum. In this article we make these two approximation steps explicit. In order to describe deviations from the mean-field behavior for finite population sizes, we set up a Markov chain to describe the evolution of the activity in the finite network, extending a model by Bressloff and Newby [10]. The transition rates are chosen in such a way that we obtain the voltage-based neural network equation in the infinite-population limit. We analyze the fluctuations of the Markov chain in order to determine a stochastic correction term describing finite-size effects. In the case of fluctuations around traveling wave solutions, we set up an approximating system of diffusion processes and prove that a well-posed stochastic neural field equation is obtained in the continuum limit.

In order to derive corrections to the neural field equation accounting for finite-size effects, in [11], Bressloff (following Buice and Cowan [12]) sets up a continuous-time Markov chain describing the evolution of the activity in a finite network of populations of finite size N. The rates are chosen such that in the limit as \(N \rightarrow\infty\) one obtains the usual activity-based network equation. He then carries out a van Kampen system size expansion of the associated master equation in the small parameter \(1/N\) to derive deterministic corrections of the neural field equation in the form of coupled differential equations for the moments. To first order, the finite-size effects can be characterized as Gaussian fluctuations around the mean-field limit.

The model is considered from a mathematically rigorous perspective by Riedler and Buckwar in [13]. They make use of limit theorems for Hilbert-space valued piecewise deterministic Markov processes recently obtained in [14] as an extension of Kurtz’s convergence theorems for jump Markov processes to the infinite-dimensional setting. They derive a law of large numbers and a central limit theorem for the Markov chain, realizing the double limit (number of neurons per population to infinity and continuum limit) at the same time. They formally set up a stochastic neural field equation, but the question of well-posedness is left open.

In [10], Bressloff and Newby extend the original approach of [11] by including synaptic dynamics and consider a Markov chain modeling the activity coupled to a piecewise deterministic process describing the synaptic current (see also Sect. 6.4 in [8] for a summary). In two different regimes, the model covers the case of Gaussian-like fluctuations around the mean-field limit as derived in [11], as well as a situation in which the activity has Poisson statistics as considered in [15].

Here we consider the question of how finite-size effects can be included in the voltage-based neural field equation. We take up the approach of describing the dynamics of the activity in a finite-size network by a continuous-time Markov chain and motivate a choice of jump rates that will lead to the voltage-based network equation in the infinite-population limit. We derive a law of large numbers and a central limit theorem for the Markov chain. Instead of realizing the double limit as in [13], we split up the limiting procedure, which in particular allows us to insert further approximation steps. We follow the original approach by Kurtz to determine the limit of the fluctuations of the Markov chain. By linearizing the noise term around the traveling wave solution, we obtain an approximating system of diffusion processes. After introducing correlations between populations lying close together (cf. Sect. 5.1), we obtain a well-posed \(L^{2}(\mathbb {R})\)-valued stochastic evolution equation, with a noise term approximating finite-size effects on traveling waves, which we prove to be the strong continuum limit of the associated network. Further results concerning (stochastic) stability of traveling waves and a corresponding multiscale analysis for the stochastic neural field equations derived in this paper are contained in [16, 17]; see also the PhD thesis [18].

The article is structured as follows. We recall how population models can be derived heuristically in Sect. 2 and summarize the work on the description of finite-size effects that can be found in the literature so far. In Sect. 3 we introduce our Markov chain model for determining finite-size effects in the voltage-based neural field equation and prove a law of large numbers and a central limit theorem for our choice of jump rates. We use it to set up a diffusion approximation with a noise term accounting for finite-size effects on traveling wave solutions in Sect. 4. Finally, in Sect. 5, we prove that a well-posed stochastic neural field equation is obtained in the continuum limit.

1.1 Assumptions on the Parameters

As usual, we take the gain function \(F:\mathbb {R}\rightarrow[0,1]\) to be a sigmoid function, for example \(F(x) = \frac{1}{1+ e^{-\gamma(x-\kappa)}}\) for some \(\gamma> 0\), \(0<\kappa<1\). In particular we assume that

(i) \(F \geq0\), \(\lim_{x \downarrow-\infty}F(x)=0\), \(\lim_{x\uparrow \infty}F(x)=1\),

(ii) \(F(x)-x\) has exactly three zeros \(0 < a_{1} < a < a_{2}<1\),

(iii) \(F \in\mathcal{C}^{3}\) and \(F'\), \(F''\) and \(F'''\) are bounded,

(iv) \(F' > 0\), \(F'(a_{1}) < 1\), \(F'(a_{2}) < 1\), \(F'(a)>1\).
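As a quick sanity check of assumptions (ii) and (iv), the following Python sketch locates the zeros of \(F(x)-x\) for the logistic gain above (grid scan plus bisection) and evaluates \(F'\) at them. The parameter values \(\gamma=10\), \(\kappa=0.5\) are an illustrative assumption, and the helper names `make_gain` and `fixed_points` are hypothetical.

```python
import math

def make_gain(gamma, kappa):
    """Logistic gain F(x) = 1 / (1 + exp(-gamma (x - kappa))) and its derivative F'."""
    def F(x):
        return 1.0 / (1.0 + math.exp(-gamma * (x - kappa)))

    def dF(x):
        y = F(x)
        return gamma * y * (1.0 - y)

    return F, dF

def fixed_points(F, lo=0.0, hi=1.0, n_grid=10_000, tol=1e-12):
    """Zeros of g(x) = F(x) - x on [lo, hi]: scan a grid for sign changes, then bisect."""
    g = lambda x: F(x) - x
    xs = [lo + (hi - lo) * i / n_grid for i in range(n_grid + 1)]
    roots = []
    for left, right in zip(xs[:-1], xs[1:]):
        if g(left) == 0.0:                 # a grid point hits a zero exactly
            roots.append(left)
        elif g(left) * g(right) < 0.0:     # sign change: bisect on [left, right]
            a, b = left, right
            while b - a > tol:
                mid = 0.5 * (a + b)
                if g(a) * g(mid) <= 0.0:
                    b = mid
                else:
                    a = mid
            roots.append(0.5 * (a + b))
    return roots

F, dF = make_gain(gamma=10.0, kappa=0.5)
a1, a, a2 = fixed_points(F)
print(f"a1={a1:.5f}, a={a:.5f}, a2={a2:.5f}")
print("F'(a1) < 1:", dF(a1) < 1, " F'(a) > 1:", dF(a) > 1, " F'(a2) < 1:", dF(a2) < 1)
```

For these parameters \(a=\frac{1}{2}\) and, by the symmetry \(F(1-x)=1-F(x)\), \(a_{1}+a_{2}=1\). For \(\gamma\le 4\) one has \(F'\le\gamma/4\le1\), so \(F(x)-x\) is decreasing and (ii) fails.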

Our assumptions on the synaptic kernel w are the following:

(i) \(w \in\mathcal{C}^{1}\),

(ii) \(w(x,y)=w(|x-y|)\geq0\) is nonnegative and homogeneous,

(iii) \(\int_{-\infty}^{\infty} w(x) \,dx = 1\), \(w_{x} \in L^{1}\).
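To make assumptions (i) to (iii) concrete: the standard Gaussian density \(w(x)=(2\pi)^{-1/2}e^{-x^{2}/2}\) is one kernel satisfying all three (it is \(\mathcal{C}^{1}\), even, has unit mass, and \(\|w_{x}\|_{L^{1}} = 2w(0)\)). It is only an example; the text does not prescribe a particular kernel. The Python sketch below checks the two integrals with a trapezoidal rule.

```python
import math

def w(x):
    """Hypothetical example kernel: standard Gaussian density (C^1, even, unit mass)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def w_x(x):
    """Derivative of the Gaussian kernel, w'(x) = -x w(x)."""
    return -x * w(x)

def trapezoid(f, a, b, n=100_000):
    """Composite trapezoidal rule for the integral of f over [a, b]."""
    h = (b - a) / n
    return h * (0.5 * (f(a) + f(b)) + sum(f(a + i * h) for i in range(1, n)))

mass = trapezoid(w, -12.0, 12.0)                            # assumption (iii): should be 1
l1_norm_wx = trapezoid(lambda x: abs(w_x(x)), -12.0, 12.0)  # ||w_x||_{L^1}
print(mass, l1_norm_wx, 2.0 * w(0.0))                       # ||w_x||_1 = 2 w(0) here
```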

Assumption (iv) on F implies that \(a_{1}\) and \(a_{2}\) are stable fixed points of (1), while a is an unstable fixed point. It has been shown in [9] that under these assumptions there exists a monotone traveling wave solution to (1) connecting the stable fixed points, unique up to translation (and, in [19], that traveling wave solutions are necessarily monotone). That is, there exist a wave profile \(\hat{u}:\mathbb {R}\rightarrow [0,1]\), unique up to translation, and a unique wave speed \(c \in \mathbb {R}\) such that \(u^{\mathrm{TW}}(t,x) = u^{\mathrm{TW}} _{t}(x) := \hat{u}(x-ct)\) is a solution to (1), i.e.

\[ -c\,\hat{u}_{x}(x) = -\hat{u}(x) + \int_{-\infty}^{\infty} w(x-y)\,F\bigl(\hat{u}(y)\bigr)\,dy, \quad x\in\mathbb{R}, \]

and

\[ \lim_{x\rightarrow-\infty}\hat{u}(x) = a_{1}, \qquad \lim_{x\rightarrow\infty}\hat{u}(x) = a_{2}. \]

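As also pointed out in [9], we can without loss of generality assume that \(c\geq0\). Note that \(\hat{u}_{x} \in L^{2}(\mathbb {R})\): since \(\hat{u}\) is increasing and connects \(a_{1}\) to \(a_{2}\),

\[ \|\hat{u}_{x}\|^{2} \le \|\hat{u}_{x}\|_{\infty}\int_{-\infty}^{\infty}\hat{u}_{x}(x)\,dx = \|\hat{u}_{x}\|_{\infty}\,(a_{2}-a_{1}), \]

and \(\hat{u}_{x}\) is bounded. Indeed, writing \(w\ast F(\hat{u})(x) := \int_{-\infty}^{\infty} w(x-y)F(\hat{u}(y))\,dy\), in the case \(c>0\) equation (1) gives \(c\,\hat{u}_{x} = \hat{u} - w\ast F(\hat{u})\), and since both \(\hat{u}\) and \(w\ast F(\hat{u})\) take values in \([0,1]\),

\[ \|\hat{u}_{x}\|_{\infty} \le \frac{1}{c}, \]

and in the case \(c=0\), where \(\hat{u} = w\ast F(\hat{u})\),

\[ \|\hat{u}_{x}\|_{\infty} = \|w_{x}\ast F(\hat{u})\|_{\infty} \le \|w_{x}\|_{L^{1}}. \]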
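The traveling front can be observed numerically. The Python sketch below integrates a lattice version of (1) with spacing \(1/m\) by explicit Euler steps, starting from an increasing front. Everything specific here is an assumption made for illustration: the Gaussian kernel, the logistic gain with \(\gamma=10\), \(\kappa=0.5\), the exact cell masses used as weights, and the treatment of the region outside the truncated domain, which is fed by the outermost values (a constant extension). For this symmetric gain (\(F(1-x)=1-F(x)\)) and a symmetric initial front, the estimated speed should be close to zero; other values of κ can be tried.

```python
import math
import numpy as np

GAMMA, KAPPA = 10.0, 0.5          # illustrative logistic gain parameters (assumption)
_erf = np.vectorize(math.erf)

def F(u):
    """Logistic gain F(u) = 1 / (1 + exp(-GAMMA (u - KAPPA)))."""
    return 1.0 / (1.0 + np.exp(-GAMMA * (u - KAPPA)))

def Phi(z):
    """CDF of the (hypothetical) standard Gaussian kernel w."""
    return 0.5 * (1.0 + _erf(z / math.sqrt(2.0)))

def front_position(x, u, level=0.5):
    """Point where the increasing profile u crosses `level` (linear interpolation)."""
    i = int(np.searchsorted(u, level))
    return x[i - 1] + (level - u[i - 1]) / (u[i] - u[i - 1]) * (x[1] - x[0])

def simulate_field(L=15.0, m=10, T=20.0, dt=0.01):
    """Explicit Euler for du_k/dt = -u_k + sum_l W_kl F(u_l), a lattice version of (1).

    W_kl is the exact mass of w(x_k - .) on the cell of width 1/m around x_l; the mass
    outside the grid is fed by F(u_0) and F(u_{P-1}) (constant extension, an assumption)."""
    h = 1.0 / m
    x = np.arange(-int(L * m), int(L * m)) * h
    d = x[:, None] - x[None, :]
    W = Phi(d + h / 2) - Phi(d - h / 2)
    w_left = 1.0 - Phi(x - x[0] + h / 2)     # mass of y < x_0 - h/2
    w_right = Phi(x - x[-1] - h / 2)         # mass of y > x_{P-1} + h/2
    u = 0.5 + 0.49 * np.tanh(2.0 * x)        # increasing initial front centred at 0
    n_steps = int(round(T / dt))
    positions = {}
    for n in range(1, n_steps + 1):
        Fu = F(u)
        u = u + dt * (-u + W @ Fu + w_left * Fu[0] + w_right * Fu[-1])
        if n in (n_steps // 2, n_steps):
            positions[n * dt] = front_position(x, u)
    return x, u, positions

x, u_T, positions = simulate_field()
(t1, p1), (t2, p2) = sorted(positions.items())
speed = (p2 - p1) / (t2 - t1)
print(f"front position {p2:+.4f}, estimated speed {speed:+.2e}")
```

Because the weights are nonnegative and translation invariant, each Euler step with \(dt<1\) in this scheme maps increasing profiles to increasing profiles, in line with the monotonicity of the wave profile.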

2 Finite-Size Effects in Population Models

2.1 Population Models

In population models, or firing rate models, instead of tracking the spiking activity of every neuron in the network, neurons are grouped together and the activity is identified as a population average. We start by giving a heuristic derivation of population models, distinguishing as usual between an activity-based and a voltage-based regime.

We consider a population of N neurons. We say that a neuron is ‘active’ if it is in the process of firing an action potential, such that its membrane potential is larger than some threshold value κ. If Δ is the width of an action potential, then a neuron is active at time t if it fired a spike in the time interval \((t-\Delta,t]\). We define the population activity at a given time t as the proportion of active neurons,

\[ a^{N}(t) = \frac{1}{N}\,\#\{\text{neurons active at time } t\}. \]

We assume that all neurons in the population are identical and receive the same input. If the neurons fire independently of each other, then, for a constant input current I, the law of large numbers gives

\[ \lim_{N\rightarrow\infty} a^{N}(t) = F(I), \]

where \(F(I)\) is the probability that a neuron receiving constant stimulation I is active. In the infinite-population limit, the population activity is thus related to the input current via the function F, called the gain function. Sometimes one also defines F as a function of the potential u, assuming that the potential is proportional to the current as in Ohm’s law. F is typically a nonlinear function. It is usually modeled as a sigmoid, for example

\[ F(I) = \frac{1}{1+e^{-\gamma(I-\kappa)}} \]

for some \(\gamma>0\) and some threshold \(\kappa>0\), imitating the threshold-like nature of spiking activity.

Sometimes a firing rate is considered instead of a probability. For a time window \(\delta>0\) we define the population firing rate \(\lambda^{\delta,N}\) as

\[ \lambda^{\delta,N}(t) = \frac{1}{\delta N}\,\#\{\text{spikes in the population during } (t-\delta,t]\}. \tag{2} \]

If \(\delta=\Delta\), then \(\Delta\lambda^{\delta,N}(t)=a^{N}(t)\). At constant potential u, \(\lim_{\delta\rightarrow0} \lim_{N\rightarrow\infty} \lambda^{\delta ,N}(t) = \lambda(u)\), where \(\lambda(u)\) is the single neuron firing rate. Note that \(\lambda\leq\frac{1}{\Delta}\). The firing rate is related to the probability \(F(u)\) via

\[ \Delta\lambda(u) = F(u). \]

If the stimulus varies in time, then the activity may track this stimulus with some delay, such that

\[ a(t+\tau_{a}) = F\bigl(I(t)\bigr) \]

for some time constant \(\tau_{a}\). Taylor expansion of the left-hand side gives an approximate description of the (infinite-population) activity in terms of the differential equation

\[ \tau_{a}\frac{da}{dt}(t) = -a(t) + F\bigl(I(t)\bigr), \]

which we refer to as the rate equation.

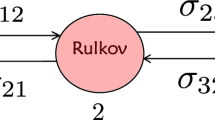

We now consider a network of P populations, each consisting of N neurons. We assume that each presynaptic spike in population j at time s causes a postsynaptic potential

in population i at time t. Here the \((w_{ij})\) are weights characterizing the strength of the synaptic connections between populations i and j, and \(\tau_{m}\) is the membrane time constant, describing how fast the membrane potential relaxes back to its resting value.

Under the assumption that all inputs add up linearly, the potential in population i at time t is given as

or, in differential form,

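In the infinite-population limit we therefore obtain the coupled system

\[ \tau_{m}\frac{du_{i}}{dt} = -u_{i} + \sum_{j=1}^{P} w_{ij}a_{j}, \qquad \tau_{a}\frac{da_{i}}{dt} = -a_{i} + F(u_{i}), \qquad 1\le i\le P. \tag{5} \]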

The behavior of (5) depends on the two time constants, \(\tau_{m}\) and \(\tau_{a}\). Two different regimes can be identified in which the model can be reduced to just one of the two variables, u or a.

2.1.1 Case 1: \(\tau_{m} \gg\tau_{a} \rightarrow0\)

Setting \(\tau_{a} = 0\) yields \(a_{i} (t) = F(u_{i} (t))\) and thus (5) reduces to

\[ \tau_{m}\frac{du_{i}}{dt} = -u_{i} + \sum_{j=1}^{P} w_{ij}F(u_{j}), \]

which we will call the voltage-based neural network equation.
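The reduction in Case 1 can be checked numerically: for small \(\tau_{a}\), the potentials produced by the coupled system (5) should stay close to those of the voltage-based network equation when both start from the same potentials and \(a_{i}(0)=F(u_{i}(0))\). The Python sketch below uses explicit Euler steps; the three-population weight matrix, the logistic gain with \(\gamma=4\), and the initial data are hypothetical choices.

```python
import math

def F(u, gamma=4.0, kappa=0.5):
    """Logistic gain (illustrative parameters)."""
    return 1.0 / (1.0 + math.exp(-gamma * (u - kappa)))

W = [[0.5, 0.3, 0.2],
     [0.3, 0.4, 0.3],
     [0.2, 0.3, 0.5]]  # hypothetical weights w_ij, rows summing to 1

def max_deviation(u0, tau_a, tau_m=1.0, T=3.0, dt=1e-3):
    """Integrate the coupled system (5) and the voltage-based equation side by side
    with explicit Euler steps; return the maximum over time and populations of |u_i - v_i|."""
    P = len(u0)
    u, a, v = list(u0), [F(x) for x in u0], list(u0)   # start (5) with a = F(u)
    worst = 0.0
    for _ in range(int(round(T / dt))):
        du = [(-u[i] + sum(W[i][j] * a[j] for j in range(P))) / tau_m for i in range(P)]
        da = [(-a[i] + F(u[i])) / tau_a for i in range(P)]
        dv = [(-v[i] + sum(W[i][j] * F(v[j]) for j in range(P))) / tau_m for i in range(P)]
        u = [u[i] + dt * du[i] for i in range(P)]
        a = [a[i] + dt * da[i] for i in range(P)]
        v = [v[i] + dt * dv[i] for i in range(P)]
        worst = max(worst, max(abs(u[i] - v[i]) for i in range(P)))
    return worst

u0 = [0.0, 0.3, 1.0]
for tau_a in (0.1, 0.01):
    print(f"tau_a = {tau_a}: max |u - v| = {max_deviation(u0, tau_a):.4f}")
```

The deviation shrinks as \(\tau_{a}\) decreases, which is the sense in which the voltage-based equation is the \(\tau_{a}\to0\) limit of (5).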

2.1.2 Case 2: \(\tau_{a} \gg\tau_{m} \rightarrow0\)

Setting \(\tau_{m} = 0\) yields \(u_{i} (t) = \sum_{j=1}^{P} w_{ij} a_{j} (t)\) and thus (5) reduces in this case to

\[ \tau_{a}\frac{da_{i}}{dt} = -a_{i} + F\Bigl(\sum_{j=1}^{P} w_{ij}a_{j}\Bigr), \]

which we will call the activity-based neural network equation.

Throughout this paper we will be interested in the former regime of the voltage-based neural network equation, thereby assuming \(\tau_{a}\) small. Our aim is to derive stochastic differential equations in a first step for the infinite-population limit \(N\to\infty \) and subsequently for the continuum limit \(P\to\infty\), describing the asymptotic fluctuations.

2.2 Finite-Size Effects

The rate equation discussed in the previous subsection depends crucially on how activity is measured. Starting from the definition (2) for a given \(\delta> 0\), the value depends on the relation between δ and the width Δ of an action potential. In [10], a model for the evolution of the activity in a network of finite populations is set up, based on the definition

\[ a^{\delta,N}_{j}(t) = \frac{1}{\delta N}\,\#\{\text{spikes in population } j \text{ during } (t-\delta,t]\} \]

of the activity in population j. Here, δ is a time window of variable size. If δ is chosen as the width of an action potential Δ, one obtains the original notion of the activity, \(\Delta a^{\Delta, N} = a^{N}\). Note that the number of spikes in the time interval \((t-\delta,t]\) is limited by \(n_{\mathrm{max}}:= N \vee [\frac{\delta N}{\Delta} ] \).

The dynamics of \(a^{\delta, N}\) is modeled as a Markov chain with state space \(\{ 0, \frac{1}{\delta N}, \ldots , \frac{n_{\mathrm{max}}}{\delta N} \}^{P}\) and jump rates

Here \(e_{i}\) denotes the ith unit vector, \(\lambda(u)\) is the firing rate at potential u, related to the probability \(F(u)\) via \(\Delta \lambda(u) = F(u)\), and \(u^{N}\) evolves according to (4)

The idea for these transition rates is that the activation rate should be proportional to \(\lambda(u)\), while the inactivation rate should be proportional to the activity itself.

The following two regimes are then considered in [10].

2.2.1 Case 1: \(\delta=1\), \(\tau_{a} \gg\tau_{m} \rightarrow0\)

In the first regime, the size of the time window δ is fixed, say \(\delta=1\). If \(\tau_{a} \gg\tau_{m} \rightarrow0\), then as in Sect. 2.1, \(u^{N}_{i}(t) = \sum_{j=1}^{P} w_{ij} a_{j}^{\delta, N}(t)\). The description of the Markov chain can thus be closed in the variables \(a^{\delta, N}_{i}\), leading to the model already considered in [11]. In the limit \(N\rightarrow \infty\) one obtains the activity-based network equation

By formally approximating to order \(\frac{1}{N}\) in the associated master equation, they derive a stochastic correction to (9), leading to the diffusion approximation

for independent Brownian motions \(B_{j}\).

In [13], Riedler and Buckwar rigorously derive a law of large numbers and a central limit theorem for the sequence of Markov chains as N tends to infinity. Note that the nature of the jump rates is such that the process has to be ‘forced’ to stay in its natural domain \([0,\frac{n_{\mathrm{max}}}{N}]\) by setting the jump rate to 0 at the boundary. As they point out, this discontinuous behavior is difficult to deal with mathematically. They therefore have to slightly modify the model and allow the activity to be larger than \(\frac{n_{\mathrm{max}}}{N}\). They embed the Markov chain into \(L^{2}(D)\) for a bounded domain \(D\subset \mathbb {R}^{d}\) and derive the LLN in \(L^{2}(D)\) and the CLT in the Sobolev space \(H^{-\alpha}(D)\) for some \(\alpha>d\).

2.2.2 Case 2: \(\delta=\frac{1}{N}\), \(\tau_{m} \gg\tau_{a}\)

In the second regime, the size of the time window δ goes to 0 as N goes to infinity such that \(\delta N=1\). In this case, \(a^{\delta,N}_{j}(t)\) is simply the number of spikes in population j during \((t-\delta,t]\).

The corresponding Markov chain has state space \(\{ 0, 1, 2, \ldots, n_{\mathrm{max}}\}^{P}\). Since \(\tau_{a} \ll\tau_{m}\), changes in \(u_{i} (t)\) are slow in comparison to changes of the activity, and for fixed voltage u, the Markov chain in fact has a stationary distribution that is approximately Poisson with rate \(\lambda(u)\) (see e.g. [10]). This corresponds to the regime considered in [15].

Note that we cannot expect to have a deterministic limit for the activity as \(N\to\infty\) in this case, so we do not have a deterministic limit for the voltage. We will therefore consider a third regime with \(\delta> 0\) fixed in this paper and study its asymptotic behavior in the large population limit \(N\to\infty\).

2.2.3 Case 3: \(\delta=\Delta\), \(\tau_{m} \gg\tau_{a} \rightarrow 0\)

We can expect in this case to have a law of large numbers, i.e. a deterministic limit for the activity. Since \(a_{i} (t) \approx F(u_{i} (t))\) we also obtain a law of large numbers for the voltage. In order to derive an appropriate diffusion approximation, we will have to reconsider the transition rates of the Markov chain (8).

Going back to our original definition of the activity, let us fix the time window δ to be the length of an action potential Δ. We assume that the potential evolves slowly, \(\tau_{m} \gg0\). Speeding up time, we define

Then

For some large n,

The potentials \(\tilde{u}_{i}^{N}\) therefore only depend on the time-averaged activities given for \({\frac{k}{n}\leq t < \frac {k+1}{n}}\) as

We have

Since the relaxation time \(\tau_{a}\) approaches zero, the time-averaged activity \(\tilde{a}_{i}^{N}(t)\) approximates \(F(\tilde{u}^{N}_{i} (t))\) and thus

with equality in the limit \(N\to\infty\). Differentiating (10) w.r.t. t therefore yields

If \(N <\infty\), then the finite size of the populations causes deviations from (11). In order to determine these finite-size effects, in the next section we will set up a Markov chain \(X^{P,N}\) to describe the evolution of the time-averaged activity \(\tilde{a}^{N}\).

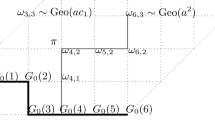

3 A Markov Chain Model for the Activity

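We will model the time-averaged activity by a Markov chain \(X^{P,N}\) on the state space \(E^{P,N} = \{0, \frac{1}{N}, \frac{2}{N}, \ldots, 1\} ^{P}\). To this end consider the following jump rates:

\[ \begin{aligned} q^{P,N}\bigl(x, x+\tfrac{1}{N}e_{i}\bigr) &= N\,F'\bigl(F^{-1}(x_{i})\bigr)\Bigl(\sum_{j=1}^{P} w_{ij}x_{j} - F^{-1}(x_{i})\Bigr)_{+}, \\ q^{P,N}\bigl(x, x-\tfrac{1}{N}e_{i}\bigr) &= N\,F'\bigl(F^{-1}(x_{i})\bigr)\Bigl(\sum_{j=1}^{P} w_{ij}x_{j} - F^{-1}(x_{i})\Bigr)_{-}, \end{aligned} \tag{12} \]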

for \(x\in E^{P,N}\) with \(x_{i}\in\{\frac{1}{N}, \ldots, 1-\frac{1}{N}\}\). Here, we used the notation \(x_{+} := x\vee0\), \(x_{-} := (-x) \vee0\), and \(e_{i}\) denotes the ith unit vector.

Note that, for \(N\in\mathbb{N}\) large, the interior \((E^{P,N})^{\circ}:= \{\frac{1}{N}, \ldots, 1-\frac{1}{N}\}^{P}\) is invariant; hence the Markov chain, when started in \((E^{P,N})^{\circ}\), stays away from the boundary. Indeed, for large \(N\in\mathbb{N}\) and \(x\in(E^{P,N})^{\circ}\) with \(x_{i} = 1-\frac{1}{N}\) (resp. \(x_{i} = \frac{1}{N}\)) we have

\[ q\bigl(x, x+\tfrac{1}{N} e_{i}\bigr) = 0 \qquad \bigl(\text{resp. } q\bigl(x, x-\tfrac{1}{N} e_{i}\bigr) = 0\bigr), \]

because \(F^{-1} (1-\frac{1}{N}) > 1-\frac{1}{N} \ge\sum_{j=1}^{P} w_{ij} x_{j}\) (resp. \(F^{-1} (\frac{1}{N}) < \frac{1}{N} \le\sum_{j=1}^{P} w_{ij} x_{j}\)) for large N, by the limiting behavior \(\lim_{x \uparrow1} F^{-1}(x) = \infty\) (resp. \(\lim_{x \downarrow0} F^{-1}(x) = - \infty\)).

The idea behind the choice of our rates is the following: the time-averaged activity tends to jump up (down) if the potential in the population, which is approximately given by \(F^{-1}(x_{i})\), is lower (higher) than the input from the other populations, which is given by \(\sum_{j=1}^{P} w_{ij} x_{j}\). The probability that the activity jumps down (up) when the potential is lower (higher) than the input is assumed to be negligible. The jump rates are proportional to the difference between the two quantities, scaled by the factor \(F'(F^{-1}(x_{i}))\). They are therefore higher in the sensitive regime where \(F'\gg1\), that is, where small changes in the potential have large effects on the activity. If \(a^{N}_{i} = F(u^{N}_{i})\) in all populations i, then the system is in balance.
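The following Python sketch encodes our reading of these rates, namely \(q(x,x+\frac{1}{N}e_{i}) = N\,F'(F^{-1}(x_{i}))\,(\sum_{j} w_{ij}x_{j}-F^{-1}(x_{i}))_{+}\) and \(q(x,x-\frac{1}{N}e_{i}) = N\,F'(F^{-1}(x_{i}))\,(\sum_{j} w_{ij}x_{j}-F^{-1}(x_{i}))_{-}\). The factor N, which lets jumps of size \(\frac{1}{N}\) produce a drift of order one, is an assumption of this sketch. For a logistic gain, \(F'(F^{-1}(x)) = \gamma x(1-x)\). The gain parameters, the weights and the name `jump_rates` are hypothetical. The calls check the boundary behavior and the balance condition discussed above.

```python
import math

GAMMA, KAPPA = 6.0, 0.5   # illustrative logistic gain (assumption)

def F_inv(x):
    """Inverse of F(u) = 1 / (1 + exp(-GAMMA (u - KAPPA))), defined for 0 < x < 1."""
    return KAPPA + math.log(x / (1.0 - x)) / GAMMA

def jump_rates(x, w, N):
    """Rates of x -> x + e_k/N (up) and x -> x - e_k/N (down) for each population k,
    in the assumed form N F'(F^{-1}(x_k)) (sum_l w_kl x_l - F^{-1}(x_k))_{+/-}."""
    up, down = [], []
    for k, xk in enumerate(x):
        gap = sum(w[k][l] * x[l] for l in range(len(x))) - F_inv(xk)  # input minus potential
        scale = N * GAMMA * xk * (1.0 - xk)                              # N F'(F^{-1}(x_k))
        up.append(scale * max(gap, 0.0))
        down.append(scale * max(-gap, 0.0))
    return up, down

N = 1000
w = [[0.6, 0.4], [0.4, 0.6]]                # hypothetical weights, rows summing to 1
print(jump_rates([1 - 1 / N, 0.5], w, N))   # up-rate of population 0 vanishes at the top
print(jump_rates([1 / N, 0.5], w, N))       # down-rate of population 0 vanishes at the bottom
print(jump_rates([0.5, 0.5], w, N))         # balance: potential equals input, no jumps
```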

We will see in Proposition 1 below that the Markov chain converges to the solution of (11) for increasing population size \(N\uparrow\infty\).

In [11] a different choice of jump rates was suggested in analogy to (8):

This choice also leads to (11) in the limit. With these rates, however, the jump rates are high wherever the activity is high. Since, as explained above, one should think of the Markov chain as governing a slowly varying time-averaged activity, the rates (12) seem the more natural choice.

The generator \(Q^{P,N}\) of \(X^{P,N}\) is given for bounded measurable \(f:E^{P,N} \rightarrow \mathbb {R}\) by

\[ Q^{P,N}f(x) = \sum_{y\in E^{P,N}} q^{P,N}(x,y)\bigl(f(y)-f(x)\bigr), \qquad x\in E^{P,N}. \]

Let \(N_{0}\) be such that for \(N\ge N_{0}\) the interior \((E^{P,N})^{\circ}\) is an invariant set for \(X^{P,N}\). The jump rates out of the cube \([\frac{1}{N_{0}}, 1-\frac {1}{N_{0}} ]^{P}\) are then 0.

Proposition 1

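Let \(X^{P}\) be the (deterministic) Feller process on \([1/N_{0}, 1-1/N_{0}]^{P}\) with generator

\[ L^{P}f(x) = \sum_{k=1}^{P} F'\bigl(F^{-1}(x_{k})\bigr)\Bigl(\sum_{l=1}^{P} w_{kl}x_{l} - F^{-1}(x_{k})\Bigr)\partial_{k}f(x). \]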

If \(X^{P,N}(0) \xrightarrow[N\rightarrow\infty]{d} X^{P}(0) \), then \(X^{P,N} \xrightarrow[N \rightarrow\infty]{d} X^{P}\) on the space of càdlàg functions \(D([0, \infty),[1/N_{0},1-1/N_{0}]^{P})\) with the Skorohod topology (where \(\xrightarrow{d}\) denotes convergence in distribution).

Proof

By a standard theorem on the convergence of Feller processes (cf. [20], Thm. 19.25) it is enough to prove that for \({f \in \mathcal{C}^{\infty}([1/N_{0},1-1/N_{0}]^{P})}\) there exist bounded measurable \(f_{N}\) such that \({\|f_{N} - f\|_{\infty} \xrightarrow {N\rightarrow\infty} 0}\) and \(\|Q^{P,N}f_{N} - L^{P}f\|_{\infty} \xrightarrow{N \rightarrow\infty} 0\).

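Let thus \({f \in\mathcal{C}^{\infty}([1/N_{0},1-1/N_{0}]^{P})}\) and set \({f_{N}(x) = f (\frac{[x_{1}N]}{N}, \ldots,\frac{[x_{P}N]}{N} )}\). Then \(\|f_{N}-f\|_{\infty}\rightarrow0\) by uniform continuity of f, and, since the jump rates divided by N are bounded on \([1/N_{0},1-1/N_{0}]^{P}\), a second-order Taylor expansion of f shows that

\[ Q^{P,N}f_{N}(x) = L^{P}f(x) + \mathcal{O}\bigl(\tfrac{1}{N}\bigr) \]

uniformly in x. □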
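Proposition 1 can be illustrated by simulating the chain exactly with the Gillespie algorithm and comparing \(X^{P,N}(T)\) with an Euler approximation of the limiting ODE \(\dot{x} = b(x)\), where \(b_{k}(x) = F'(F^{-1}(x_{k}))(\sum_{l} w_{kl}x_{l}-F^{-1}(x_{k}))\) is the drift of the generator. The sketch assumes jump rates \(N\,b_{k}(x)_{\pm}\) for \(x\mapsto x\pm\frac{1}{N}e_{k}\) (our reading of the rates of Sect. 3), a logistic gain with \(\gamma=6\), \(\kappa=0.5\), and a hypothetical two-population weight matrix; all names are illustrative.

```python
import math
import random

GAMMA, KAPPA = 6.0, 0.5          # illustrative logistic gain (assumption)
W = [[0.6, 0.4], [0.4, 0.6]]     # hypothetical weights, rows summing to 1

def drift(x):
    """b_k(x) = F'(F^{-1}(x_k)) (sum_l w_kl x_l - F^{-1}(x_k)) for the logistic gain."""
    out = []
    for k, xk in enumerate(x):
        potential = KAPPA + math.log(xk / (1.0 - xk)) / GAMMA      # F^{-1}(x_k)
        drive = sum(W[k][l] * x[l] for l in range(len(x)))
        out.append(GAMMA * xk * (1.0 - xk) * (drive - potential))
    return out

def gillespie(x0, N, T, rng):
    """Exact simulation of the chain with jumps +-1/N at rates N b_k^{+/-}; returns X(T)."""
    x, t, P = list(x0), 0.0, len(x0)
    while True:
        b = drift(x)
        rates = [N * max(bk, 0.0) for bk in b] + [N * max(-bk, 0.0) for bk in b]
        total = sum(rates)
        if total == 0.0:
            return x
        t += rng.expovariate(total)          # waiting time until the next jump
        if t > T:
            return x
        r = rng.random() * total             # choose the jump proportionally to its rate
        for idx, rate in enumerate(rates):
            if r < rate:
                break
            r -= rate
        else:  # guard against round-off: take the last event with positive rate
            idx = max(i for i, rate in enumerate(rates) if rate > 0.0)
        x[idx % P] += (1.0 if idx < P else -1.0) / N

def euler(x0, T, dt=1e-3):
    """Explicit Euler scheme for the limiting ODE dx/dt = b(x)."""
    x = list(x0)
    for _ in range(int(round(T / dt))):
        x = [xk + dt * bk for xk, bk in zip(x, drift(x))]
    return x

x0, T = [0.2, 0.35], 3.0
limit = euler(x0, T)
for N in (200, 5000):
    X_T = gillespie(x0, N, T, random.Random(1))
    dev = max(abs(p - q) for p, q in zip(X_T, limit))
    print(f"N={N}: X(T)={X_T}, max deviation from the ODE: {dev:.4f}")
```

The deviation should decrease as N grows, consistent with the \(1/\sqrt{N}\) scaling of the fluctuations studied in Sect. 4.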

4 Diffusion Approximation

We are now going to approximate \(X^{P,N}\) by a diffusion process. To this end, we follow the standard approach due to Kurtz and derive a central limit theorem for the fluctuations of \(X^{P,N}\). This will give us a candidate for a stochastic correction term to (11).

4.1 A Central Limit Theorem

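The general theory of continuous-time Markov chains implies the following semimartingale decomposition:

\[ X^{P,N}_{k}(t) = X^{P,N}_{k}(0) + \int_{0}^{t} Q^{P,N}\pi_{k}\bigl(X^{P,N}(s)\bigr)\,ds + M^{P,N}_{k}(t), \]

where \(\pi_{k}: (0,1)^{P} \rightarrow(0,1)\), \(x \mapsto x_{k}\), is the projection onto the kth coordinate, \(Q^{P,N} \pi_{k} (X^{P,N} (s)) = \sum_{y\in E^{P,N}} q^{P,N} ( X^{P,N} (s), y ) y_{k}\) is the (signed) kernel \(Q^{P,N}\) applied to the function \(\pi_{k}\) evaluated at the state \(X^{P,N} (s)\), and \(M^{P,N}_{k}\) is a zero mean square-integrable martingale (with right-continuous sample paths having left limits in \(t> 0\)). Moreover,

\[ \bigl(M^{P,N}_{k}(t)\bigr)^{2} - \int_{0}^{t}\sum_{y\in E^{P,N}} q^{P,N}\bigl(X^{P,N}(s),y\bigr)\bigl(y_{k}-X^{P,N}_{k}(s)\bigr)^{2}\,ds \]

is a martingale too (see [21]). The general theory of stochastic processes states that for any square-integrable right-continuous martingale \(M_{t}\), \(t\ge0\), having left limits in \(t > 0\), there exists a unique increasing previsible process \(\langle M\rangle_{t}\) starting at 0, such that \(M_{t}^{2} - \langle M\rangle_{t}\), \(t\ge0\), is a martingale too. We can therefore conclude that

\[ \langle M^{P,N}_{k}\rangle_{t} = \int_{0}^{t}\sum_{y\in E^{P,N}} q^{P,N}\bigl(X^{P,N}(s),y\bigr)\bigl(y_{k}-X^{P,N}_{k}(s)\bigr)^{2}\,ds. \]

The bracket process will be crucial to determine the limit of the fluctuations, as seen in the next proposition.

Proposition 2

Under the assumptions of Proposition 1, with a deterministic initial value \(X^{P}(0)\),

\[ \sqrt{N}M^{P,N} \xrightarrow[N\rightarrow\infty]{d} \biggl(\int_{0}^{\cdot}\sqrt{F'\bigl(F^{-1}(X^{P}_{k}(s))\bigr)\Bigl|\sum_{l=1}^{P}w_{kl}X^{P}_{l}(s)-F^{-1}\bigl(X^{P}_{k}(s)\bigr)\Bigr|}\,dB_{k}(s)\biggr)_{1\le k\le P} \]

on \(\mathcal{D}([0,\infty), \mathbb {R}^{P})\), where B is a P-dimensional standard Brownian motion, and \(X^{P}\) is the Feller process from Proposition 1.

Proof

Using that, for \(x\in(E^{P,N})^{\circ}\),

\[ N\sum_{y\in E^{P,N}} q^{P,N}(x,y)\,(y_{k}-x_{k})^{2} = F'\bigl(F^{-1}(x_{k})\bigr)\Bigl|\sum_{l=1}^{P}w_{kl}x_{l}-F^{-1}(x_{k})\Bigr|, \]

we obtain \(\langle\sqrt{N}M^{P,N}_{k}\rangle_{t} = \int_{0}^{t}F'(F^{-1}(X^{P,N}_{k}(s)))\,|\sum_{l}w_{kl}X^{P,N}_{l}(s)-F^{-1}(X^{P,N}_{k}(s))|\,ds\). Thus, by Proposition 1 and since the limit is deterministic,

\[ \langle\sqrt{N}M^{P,N}_{k}\rangle_{t} \xrightarrow[N\rightarrow\infty]{} \int_{0}^{t}F'\bigl(F^{-1}(X^{P}_{k}(s))\bigr)\Bigl|\sum_{l=1}^{P}w_{kl}X^{P}_{l}(s)-F^{-1}\bigl(X^{P}_{k}(s)\bigr)\Bigr|\,ds \]

in probability. For \(k \neq l\),

\[ \langle\sqrt{N}M^{P,N}_{k},\sqrt{N}M^{P,N}_{l}\rangle_{t} = N\int_{0}^{t}\sum_{y\in E^{P,N}}q^{P,N}\bigl(X^{P,N}(s),y\bigr)\bigl(y_{k}-X^{P,N}_{k}(s)\bigr)\bigl(y_{l}-X^{P,N}_{l}(s)\bigr)\,ds = 0, \]

since for y with \(q^{P,N}(X^{P,N}(s),y) > 0\) at least one of \(X_{k}^{P,N}(s) - y_{k}\) and \(X_{l}^{P,N}(s) - y_{l}\) is always 0. Now the jumps of \(\sqrt{N}M^{P,N}\) are the jumps of \(\sqrt{N}X^{P,N}\) and hence of size \(1/\sqrt{N}\), so that

\[ \mathbb{E}\Bigl[\sup_{t\le T}\bigl|\sqrt{N}M^{P,N}(t)-\sqrt{N}M^{P,N}(t-)\bigr|^{2}\Bigr] \le \frac{1}{N} \xrightarrow[N\rightarrow\infty]{} 0 \quad\text{for all } T>0, \]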

and the statement follows by the martingale central limit theorem; see for example Theorem 1.4, Chap. 7 in [22]. □

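This suggests approximating \(X^{P,N}\) by the system of coupled diffusion processes

\[ da^{P,N}_{k}(t) = F'\bigl(F^{-1}(a^{P,N}_{k})\bigr)\Bigl(\sum_{l=1}^{P}w_{kl}a^{P,N}_{l}-F^{-1}(a^{P,N}_{k})\Bigr)dt + \frac{1}{\sqrt{N}}\sqrt{F'\bigl(F^{-1}(a^{P,N}_{k})\bigr)\Bigl|\sum_{l=1}^{P}w_{kl}a^{P,N}_{l}-F^{-1}(a^{P,N}_{k})\Bigr|}\,dB_{k}(t), \]

\(1 \leq k \leq P\).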
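A standard way to simulate such a system is the Euler–Maruyama scheme. The Python sketch below assumes the diffusion approximation takes the form \(da_{k} = b_{k}(a)\,dt + N^{-1/2}\sqrt{F'(F^{-1}(a_{k}))\,|\sum_{l} w_{kl}a_{l} - F^{-1}(a_{k})|}\,dB_{k}\) with independent Brownian motions \(B_{k}\), drift b as in the law of large numbers and diffusion coefficient as in Proposition 2. The gain, weights, clipping constant and function names are hypothetical; clipping the iterates to a small neighborhood inside (0, 1) is a numerical safeguard of the sketch, not part of the model.

```python
import math
import random

GAMMA, KAPPA = 6.0, 0.5          # illustrative logistic gain (assumption)
W = [[0.6, 0.4], [0.4, 0.6]]     # hypothetical weights, rows summing to 1
CLIP = 1e-9                      # iterates are kept in [CLIP, 1 - CLIP], where F^{-1} is defined

def coefficients(a):
    """Drift F'(F^{-1}(a_k)) g_k and diffusion sqrt(F'(F^{-1}(a_k)) |g_k|),
    where g_k = sum_l w_kl a_l - F^{-1}(a_k) and F is the logistic gain."""
    out = []
    for k, ak in enumerate(a):
        g = sum(W[k][l] * a[l] for l in range(len(a))) - (KAPPA + math.log(ak / (1.0 - ak)) / GAMMA)
        slope = GAMMA * ak * (1.0 - ak)                 # F'(F^{-1}(a_k))
        out.append((slope * g, math.sqrt(slope * abs(g))))
    return out

def euler_maruyama(a0, N, T, dt, rng):
    """Euler-Maruyama for da_k = b_k dt + N^{-1/2} s_k dB_k with independent B_k;
    N = math.inf switches the noise off and gives the Euler scheme of the limiting ODE."""
    a = list(a0)
    noise_scale = math.sqrt(dt / N)
    for _ in range(int(round(T / dt))):
        a = [min(max(ak + b * dt + s * noise_scale * rng.gauss(0.0, 1.0), CLIP), 1.0 - CLIP)
             for ak, (b, s) in zip(a, coefficients(a))]
    return a

a0, T, dt = [0.2, 0.35], 3.0, 1e-3
deterministic = euler_maruyama(a0, math.inf, T, dt, random.Random(0))
noisy = euler_maruyama(a0, 5000, T, dt, random.Random(0))
print(deterministic, noisy)
```

Passing `N = math.inf` reproduces the deterministic limit, which gives a direct comparison. Note that the diffusion coefficient vanishes wherever the drift vanishes; this is where the lack of Lipschitz continuity of the square root discussed below becomes relevant.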

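Using Itô’s formula, we formally obtain an approximation for \(u^{P,N}_{k} := F^{-1}(a_{k}^{P,N})\): since \((F^{-1})'(a) = 1/F'(F^{-1}(a))\) and \((F^{-1})''(a) = -F''(F^{-1}(a))/F'(F^{-1}(a))^{3}\),

\[ du^{P,N}_{k} = \Bigl(-u^{P,N}_{k} + \sum_{l=1}^{P} w_{kl}F(u^{P,N}_{l}) - \frac{F''(u^{P,N}_{k})}{2N\,F'(u^{P,N}_{k})^{2}}\Bigl|\sum_{l=1}^{P} w_{kl}F(u^{P,N}_{l}) - u^{P,N}_{k}\Bigr|\Bigr)dt + \frac{1}{\sqrt{N}}\sqrt{\frac{\bigl|\sum_{l=1}^{P} w_{kl}F(u^{P,N}_{l}) - u^{P,N}_{k}\bigr|}{F'(u^{P,N}_{k})}}\,dB_{k}(t). \tag{13} \]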

Since the square root function is not Lipschitz continuous near 0, we cannot apply standard existence theorems to obtain a solution to (13) with the full multiplicative noise term. Instead we will linearize around a deterministic solution to the neural field equation and approximate to a certain order of \(\frac{1}{\sqrt{N}}\).

4.2 Fluctuations around the Traveling Wave

Let ū be a solution to the neural field equation (1). To determine the finite-size effects on ū, we consider a spatially extended network, that is, we look at populations distributed over an interval \([-L,L] \subset \mathbb {R}\) and use the stochastic integral derived in Proposition 2 to describe the local fluctuations on this interval.

Let \(m \in\mathbb{N}\) be the density of populations on \([-L,L]\) and consider \(P=2mL\) populations located at \(\frac{k}{m}\), \(k \in\{-mL, -mL+1, \ldots, mL-1\}\). We choose the weights \(w_{kl}\) as a discretization of the integral kernel \(w:\mathbb {R}\rightarrow[0,\infty)\),

Since we think of the network as describing only a section of the actual domain \(\mathbb {R}\), we add to each population an input \(F(\bar {u}_{t}(-L))\) and \(F(\bar{u}_{t}(L))\), respectively, at the boundaries with corresponding weights

Fix a population size \(N \in \mathbb {N}\). Set \(\bar{u}_{k}(t) = \bar {u}(\frac{k}{m}, t)\) and for \(u\in \mathbb {R}^{P}\),

We write

and assume that \(v_{k}\) is of order \(1/\sqrt{N}\). Linearizing (13) around \((\bar{u}_{k})\) we obtain the approximation

to order \(1/\sqrt{N}\).

Note that \(\hat{b}^{m}_{k}(t,\bar{u}) \approx\partial_{t} \bar{u}_{k}(t) = 0\) for a stationary solution ū, with equality if ū is constant. The finite-size effects are hence of smaller order. Since the square root function is not differentiable at 0 we cannot expand further.

However, the situation is different if we linearize around a moving pattern. We consider the traveling wave solution \(u^{\mathrm{TW}}_{t}(x) = \hat {u}(x-ct)\) to (1) and now assume that \(c>0\) (for \(c=0\) the wave is a stationary solution, covered by the discussion above). Then, with \(\bar{u} = u^{\mathrm{TW}}\), \(\hat{b}^{m}_{k}(t,\bar{u}) \approx\partial_{t} \bar {u}_{k}(t) = - c\, \partial_{x} u^{\mathrm{TW}}_{t}(\frac{k}{m}) <0\). This monotonicity property allows us to approximate to order \(1/N\) in (13). Indeed, note that since û and F are increasing,

So for L large enough, \(-\hat{b}^{m}_{k}(t, u^{\mathrm{TW}}_{t})>0\) and we have, using Taylor’s formula and (16),

As a possible diffusion approximation in the case of traveling wave solutions we therefore obtain the system of stochastic differential equations

for which there exists a unique solution as we will see in the next section.
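The difference between the stationary and the traveling case comes down to the behavior of the square root: around a strictly positive value β, \(\sqrt{\beta+h}=\sqrt{\beta}+\frac{h}{2\sqrt{\beta}}+\mathcal{O}(h^{2})\), whereas at \(\beta=0\) the map \(h\mapsto\sqrt{|h|}\) admits no linearization and deviates from its value at 0 by \(\sqrt{|h|}\). The small Python check below (the values of β and h are arbitrary) compares how the error of the first-order expansion decreases when h is divided by 10.

```python
import math

def linearization_error(beta, h):
    """Error of the first-order Taylor expansion of sqrt(|beta + h|) around h = 0.
    For beta > 0 the expansion is sqrt(beta) + h / (2 sqrt(beta)); at beta = 0 the
    square root is not differentiable, so we compare with the value sqrt(0) = 0."""
    exact = math.sqrt(abs(beta + h))
    if beta > 0:
        approx = math.sqrt(beta) + h / (2.0 * math.sqrt(beta))
    else:
        approx = 0.0
    return abs(exact - approx)

for beta in (0.1, 0.0):
    e1, e2 = linearization_error(beta, 1e-2), linearization_error(beta, 1e-3)
    print(f"beta={beta}: error ratio for h -> h/10 is {e1 / e2:.1f}")
```

The error drops by a factor of about 100 for \(\beta>0\) (second order) but only by \(\sqrt{10}\approx3.2\) for \(\beta=0\).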

5 The Continuum Limit

In this section we take the continuum limit of the network of diffusions (18), that is, we let the size of the domain and the density of populations go to infinity in order to obtain a stochastic neural field equation with a noise term describing the fluctuations around the deterministic traveling wave solution due to finite-size effects.

We thus have to deal with functions that ‘look almost like the wave’ and choose to work in the space \(\mathcal{S}:= \{ u:\mathbb {R}\rightarrow \mathbb {R}: u - \hat {u}\in L^{2} \}\). Since \(\|u_{1}-u_{2}\| < \infty\) for \(u_{1}, u_{2} \in\mathcal{S}\), the \(L^{2}\)-distance \(d(u_{1},u_{2}) := \|u_{1}-u_{2}\|\) defines a metric, and hence a topology, on \(\mathcal{S}\).

5.1 A Word on Correlations

Recall the definition of the Markov chain introduced in Sect. 3. Note that as long as we allow only single jumps in the evolution, meaning that there will not be any jumps in the activity in two populations at the same time, the martingales associated with any two populations will be uncorrelated, yielding independent driving Brownian motions in the diffusion limit (cf. Proposition 2).

This only makes sense for populations that are clearly distinguishable. In order to determine the fluctuations around traveling wave solutions, we consider spatially extended networks of populations. The population located at \(x \in \mathbb {R}\) is to be understood as the ensemble of all neurons in the ε-neighborhood \((x-\varepsilon , x+\varepsilon )\) of x for some \(\varepsilon > 0\). If we consider two populations located at \(x,y \in \mathbb {R}\) with \(|x-y| < 2\varepsilon \), then they will overlap. Consequently, simultaneous jumps will occur, leading to correlations between the driving Brownian motions.

Thus the Markov chain model (and the associated diffusion approximation) is only appropriate as long as the distance between the individual populations is large enough. When taking the continuum limit, we therefore adapt the model by introducing correlations between the driving Brownian motions of populations lying close together.

5.2 The Stochastic Neural Field Equation

We start by defining the limiting object. For \(u \in\mathcal{S}\) and \(t \in[0,T]\) set

Let \(\mathcal{W}^{Q}\) be a (cylindrical) Q-Wiener process on \(L^{2}\) with covariance operator Q, whose square root is given as \(\sqrt{Q}h(x) = \int_{-\infty}^{\infty} q(x,y) h(y) \,dy\) for some symmetric kernel \(q(x,y)\) with \(q(x,\cdot) \in L^{2} \cap L^{1}\) for all \(x \in \mathbb {R}\) and \(\sup_{x \in \mathbb {R}} (\|q(x,\cdot)\| + \|q(x,\cdot)\|_{1}) < \infty\). (Details on the theory of Q-Wiener processes can be found in [23, 24].) We assume that the dispersion coefficient is given as the multiplication operator associated with \(\sigma: [0,T] \times\mathcal{S} \rightarrow L^{2}(\mathbb {R})\), which we also denote by σ, where σ is Lipschitz continuous with respect to the second variable uniformly in \(t\leq T\), that is, we assume that there exists \(L_{\sigma}>0\) such that, for all \(u_{1}, u_{2} \in\mathcal {S}\) and \(t \in[0,T]\),

\[ \|\sigma(t,u_{1})-\sigma(t,u_{2})\| \le L_{\sigma}\,\|u_{1}-u_{2}\|. \]

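The correlations are described by the kernel q. For f, g in \(L^{2}(\mathbb {R})\),

\[ \mathbb{E}\bigl[\langle\mathcal{W}^{Q}_{t},f\rangle\langle\mathcal{W}^{Q}_{s},g\rangle\bigr] = (t\wedge s)\,\langle Qf,g\rangle = (t\wedge s)\int_{-\infty}^{\infty}\!\int_{-\infty}^{\infty} f(x)\,q\ast q(x,y)\,g(y)\,dy\,dx, \]

so formally,

\[ \mathbb{E}\bigl[\mathcal{W}^{Q}_{t}(x)\,\mathcal{W}^{Q}_{s}(y)\bigr] = (t\wedge s)\,q\ast q(x,y), \]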

where we denote by \(q\ast q(x,y) \) the integral \(\int q(x,z) q(z,y) \,dz\). We could for example take

for some small \(\varepsilon > 0\) (cf. Sect. 5.1).

The definition of the stochastic integral \(\int_{0}^{t} \sigma(s, u_{s})\, d\mathcal{W}^{Q}_{s}(x)\) w.r.t. the Q-Wiener process \(\mathcal{W}^{Q}\) requires in particular that the operator \(\sigma(t,v) \circ Q^{\frac{1}{2}}\) is Hilbert–Schmidt. In the following, let \(L_{2}^{0}\) be the space of all linear operators L on \(L^{2}\) for which \(L\circ Q^{\frac{1}{2}}\) is Hilbert–Schmidt and denote by \(\|L\|_{L_{2}^{0}}\) the Hilbert–Schmidt norm of \(L\circ Q^{\frac{1}{2}}\).

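With the above assumptions on Q and σ it then follows that \(\sigma(t,v) \in L_{2}^{0}\) since, by Parseval’s identity, for an orthonormal basis \((e_{k})\) of \(L^{2}(\mathbb{R})\),

\[ \|\sigma(t,v)\|_{L_{2}^{0}}^{2} = \sum_{k}\bigl\|\sigma(t,v)\sqrt{Q}e_{k}\bigr\|^{2} = \int_{-\infty}^{\infty}\sigma(t,v)(x)^{2}\,\|q(x,\cdot)\|^{2}\,dx \le \sup_{x\in\mathbb{R}}\|q(x,\cdot)\|^{2}\,\|\sigma(t,v)\|^{2} < \infty. \]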

Note that, for uncorrelated noise (i.e. \(Q=E\), the identity), this is not the case, since a nonzero multiplication operator on \(L^{2}(\mathbb{R})\) is never Hilbert–Schmidt. Therefore, in [13] Riedler and Buckwar derive the central limit theorem in the Sobolev space \(H^{-\alpha}\). Splitting up the limiting procedures, \(N \rightarrow\infty\) and continuum limit, allows us to incorporate correlations and finally to work in the more natural function space \(L^{2}\).
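The contrast between correlated and uncorrelated noise is visible after discretization. On a uniform grid of width h, the squared Hilbert–Schmidt norm of \(\sigma\circ\sqrt{Q}\) is \(\sum_{i} h\,\sigma(x_{i})^{2}\sum_{j} h\,q(x_{i},x_{j})^{2}\), which approximates \(\int\sigma(x)^{2}\|q(x,\cdot)\|^{2}\,dx\), whereas for Q the identity it equals \(\sum_{i}\sigma(x_{i})^{2}\approx\|\sigma\|^{2}/h\). The Python sketch below assumes, for illustration only, the box kernel \(q(x,y)=\frac{1}{2\varepsilon}\mathbb{1}_{\{|x-y|<\varepsilon\}}\) and \(\sigma(x)=e^{-x^{2}}\); neither choice is taken from the text.

```python
import numpy as np

def hs_norm_sq(sigma, x, eps=None):
    """Squared Hilbert-Schmidt norm of (multiplication by sigma) o sqrt(Q) on a uniform grid.

    eps=None: white noise, Q = identity, giving sum_i sigma_i^2 ~ ||sigma||^2 / h.
    eps > 0:  sqrt(Q) with the hypothetical box kernel q(x, y) = 1{|x - y| < eps} / (2 eps),
              giving sum_i h sigma_i^2 * sum_j h q(x_i, x_j)^2 ~ ||sigma||^2 / (2 eps)."""
    h = x[1] - x[0]
    s2 = sigma(x) ** 2
    if eps is None:
        return float(np.sum(s2))
    # number of grid points x_j with |x_i - x_j| < eps
    counts = np.searchsorted(x, x + eps, side="left") - np.searchsorted(x, x - eps, side="right")
    row = h * counts / (2.0 * eps) ** 2          # approximates ||q(x_i, .)||^2 = 1 / (2 eps)
    return float(np.sum(h * s2 * row))

sigma = lambda x: np.exp(-x ** 2)   # hypothetical dispersion coefficient, ||sigma||^2 = sqrt(pi/2)
eps = 0.1055
for h in (1e-2, 1e-3):
    grid = np.arange(-5.0, 5.0, h)
    print(h, hs_norm_sq(sigma, grid), hs_norm_sq(sigma, grid, eps))
```

Refining the grid by a factor 10 multiplies the white-noise value by about 10, while the correlated value stays close to \(\|\sigma\|^{2}/(2\varepsilon)\).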

Proposition 3

For any initial condition \(u^{0} \in\mathcal{S}\), the stochastic evolution equation

has a unique strong \(\mathcal{S}\)-valued solution u, which has a continuous modification. For any \(p \geq2\),

For a proof see for example Prop. 6.5.1 in [18].

5.3 Embedding of the Diffusion Processes

As a next step we embed the systems of coupled diffusion processes (18) into \(L^{2}(\mathbb {R})\). Let \(m \in\mathbb{N}\) be the population density and \(L^{m} \in\mathbb {N}\) the half-length of the domain \([-L^{m}, L^{m})\), with \(L^{m} \uparrow\infty\) as \(m \rightarrow\infty\). For \(k \in\{-mL^{m}, -mL^{m}+1, \ldots, mL^{m}-1\}\) set \(I^{m}_{k} = [\frac{k}{m}, \frac{k+1}{m})\) and \(J^{m}_{k} = (\frac{k}{m}-\frac{1}{4m}, \frac {k}{m}+\frac{1}{4m})\), and let

be the average of \(\mathcal{W}^{Q}_{t}\) on the interval \(J^{m}_{k}\). Then the \(W^{m}_{k}\) are one-dimensional Brownian motions with covariances

Note that the Brownian motions are independent as long as \(m < \frac{1}{4\varepsilon }\).
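The independence condition can be read off from the geometry of the intervals. Assume, as an illustration, that \(q(x,y)\) vanishes for \(|x-y| > \varepsilon\), so that \(q\ast q(x,y)\) vanishes for \(|x-y| > 2\varepsilon\). The covariance of \(W^{m}_{k}\) and \(W^{m}_{l}\), \(k \neq l\), only involves \(q\ast q(x,y)\) for \(x \in J^{m}_{k}\), \(y \in J^{m}_{l}\), and neighbouring intervals are separated by \(\frac{1}{m} - \frac{1}{2m} = \frac{1}{2m}\). The sketch below (function names hypothetical) computes this gap; the condition "gap \(> 2\varepsilon\)" is exactly \(m < \frac{1}{4\varepsilon}\).

```python
import numpy as np

def j_intervals(m, L):
    """Endpoints of J_k^m = (k/m - 1/(4m), k/m + 1/(4m)), k = -m*L, ..., m*L - 1."""
    k = np.arange(-m * L, m * L)
    centres = k / m
    return centres - 1.0 / (4 * m), centres + 1.0 / (4 * m)

def min_gap(m, L):
    """Smallest distance between two distinct intervals J_k^m, J_l^m.

    Neighbouring intervals are the closest ones; the gap equals 1/(2m)."""
    left, right = j_intervals(m, L)
    return np.min(left[1:] - right[:-1])

def averages_uncorrelated(m, L, eps):
    """True if q*q, assumed to vanish for |x - y| > 2*eps, cannot couple distinct J's.

    gap > 2*eps is equivalent to m < 1/(4*eps)."""
    return min_gap(m, L) > 2 * eps

for m in (2, 4, 6, 8):
    print(m, min_gap(m, 3), averages_uncorrelated(m, 3, 0.05))
```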

For \(m \in\mathbb{N}\) let \(\hat{\sigma}^{m}:[0,T] \times \mathbb {R}^{P} \rightarrow \mathbb {R}^{P}\) and assume that there exists \(L_{\hat{\sigma}^{m}} > 0\) such that, for any \(t \in[0,T]\) and \(u_{1}, u_{2} \in \mathbb {R}^{P}\),

Consider the system of coupled stochastic differential equations

with weights as in (14) and (15).

We identify \(u = (u_{k})_{-mL^{m} \leq k \leq mL^{m}-1} \in\mathbb{R}^{P}\) with its piecewise constant interpolation as an element of \(L^{2}\) via the embedding

For \(u \in\mathcal{C}(\mathbb{R})\) set

Then \(u^{m}_{t} := \iota^{m}((u^{m}_{k}(t))_{k})\) satisfies

where \(b^{m}:[0,T]\times L^{2}(\mathbb {R}) \rightarrow L^{2}(\mathbb {R})\) and \(\varPhi^{m}:L^{2}(\mathbb {R}) \rightarrow L^{2}(\mathbb {R})\) are given as

and where \(\sigma^{m}:[0,T] \times L^{2}(\mathbb {R}) \rightarrow L^{2}(\mathbb {R})\) is such that, for \(u\in \mathbb {R}^{P}\), \(\sigma^{m}(t,\iota^{m}(u))= \hat{\sigma}^{m}_{k}(t,u)\) on \(I^{m}_{k}\). We assume joint continuity and Lipschitz continuity in the second variable uniformly in m and \(t\leq T\), that is, there exists \(L_{\sigma}>0\) such that for \(u_{1},u_{2} \in L^{2}(\mathbb {R})\) and \(t\leq T\),

Proposition 4

For any initial condition \(u^{0} \in L^{2}(\mathbb {R})\) there exists a unique strong \(L^{2}\)-valued solution \(u^{m}\) to (22), which admits a continuous modification. For any \(p\geq2\),

Proof

Again we check that the drift and diffusion coefficients are Lipschitz continuous. Note that

Therefore, for \(u_{1}, u_{2} \in L^{2}(\mathbb {R})\),

and for an orthonormal basis \((e_{k})\) of \(L^{2}(\mathbb {R})\) we obtain, using Parseval’s identity,

□
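The Lipschitz estimates for the embedded system use how \(\iota^{m}\) transports norms. Since \(\iota^{m}(u)\) equals \(u_{k}\) on an interval of length \(\frac{1}{m}\), one has \(\|\iota^{m}(u)\|^{2} = \frac{1}{m}\sum_{k} u_{k}^{2}\); the same factor \(\frac{1}{m}\) appears on both sides of a Lipschitz estimate for \(\sigma^{m}(t,\iota^{m}(u)) = \hat{\sigma}^{m}_{k}(t,u)\) on \(I^{m}_{k}\), so a Euclidean Lipschitz bound on \(\hat{\sigma}^{m}\) carries over with the same constant. The sketch below assumes that the step function is set to zero outside \([-L^{m}, L^{m})\); `embed` and `embedded_norm_sq` are hypothetical helper names.

```python
import numpy as np

def embed(u, m, L):
    """iota^m: map (u_k), k = -m*L, ..., m*L - 1, to the step function equal to
    u_k on I_k^m = [k/m, (k+1)/m); assumed to be 0 outside [-L, L)."""
    u = np.asarray(u, dtype=float)
    if u.shape != (2 * m * L,):
        raise ValueError("expected 2*m*L coefficients")

    def step(x):
        x = np.atleast_1d(np.asarray(x, dtype=float))
        k = np.floor(m * x).astype(int)          # index k of the cell I_k^m containing x
        inside = (k >= -m * L) & (k <= m * L - 1)
        out = np.zeros_like(x)
        out[inside] = u[k[inside] + m * L]       # shift k to a 0-based array index
        return out

    return step

def embedded_norm_sq(u, m):
    """||iota^m(u)||^2 in L^2(R): every cell I_k^m has length 1/m."""
    return np.sum(np.asarray(u, dtype=float) ** 2) / m

rng = np.random.default_rng(0)
m, L = 4, 2
u1, u2 = rng.normal(size=2 * m * L), rng.normal(size=2 * m * L)
# iota^m is linear, so ||iota^m(u1) - iota^m(u2)|| = |u1 - u2| / sqrt(m)
print(np.sqrt(embedded_norm_sq(u1 - u2, m)), np.linalg.norm(u1 - u2) / np.sqrt(m))
```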

5.4 Convergence

We are now able to state the main convergence result. We will need the following assumption on the kernel w.

Assumption 5

There exists \(C_{w}>0\) such that, for \(x\geq0\),

This assumption is satisfied by classical choices of w such as \({w(x) = \frac{1}{2\sigma}e^{-\frac{|x|}{\sigma}}}\) or \({w(x) = \frac {1}{\sqrt{2\pi\sigma^{2}}} e^{-\frac{x^{2}}{2\sigma^{2}}}}\), where σ > 0 here denotes the spatial scale of the kernel w rather than the dispersion coefficient.

Theorem 6

Fix \(T>0\). Let u and \(u^{m}\) be the solutions to (21) and (22), respectively. Assume that

(i)

(ii) for any \(u: [0,T] \rightarrow\mathcal{S}\) with \(\sup_{t\leq T} \| u_{t} - \hat {u}\| < \infty\),

Then, for any initial conditions \({u_{0}^{m}\in L^{2}(\mathbb {R})}\), \({u_{0} \in\mathcal{S}}\) such that

and for all \(p\geq2\),

We postpone the proof to the Appendix.

Remark 7

Let \(\varepsilon > 0\). The kernel satisfies assumption (i) of the theorem. Indeed, note that, for x, z with \(|x-z| \leq\frac{1}{m}\), . Therefore we obtain, for all k and for any \(x \in I^{m}_{k}\),

Theorem 6 can now be applied to the asymptotic expansion of the fluctuations around the traveling wave derived in Sect. 4.2. To ensure that the diffusion coefficients are in \(L^{2}(\mathbb {R})\), we cut off the noise outside the compact set \(\{ \partial_{x} u^{\mathrm{TW}}_{t} \geq\delta\}\), \(\delta>0\). Note that the neglected region moves with the wave, so that we always retain the fluctuations in the relevant regime away from the fixed points.
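The cut-off can be illustrated with a small numerical sketch. It assumes a hypothetical monotone front \(u^{\mathrm{TW}}_{t}(x) = U(x - ct)\) with logistic profile \(U(\xi) = 1/(1+e^{-\xi})\), which is not the traveling wave of the paper. Since \(U' = U(1-U)\) is unimodal with maximum \(\frac14\), for \(\delta < \frac14\) the retained set is the interval \([ct-a, ct+a]\) with \(a = \log\frac{1+s}{1-s}\), \(s = \sqrt{1-4\delta}\); it has fixed length and is transported with speed c. The names `front` and `noise_window` are illustrative.

```python
import numpy as np

def front(x, t, c):
    """Hypothetical monotone front u^TW_t(x) = U(x - c t) with logistic profile U."""
    return 1.0 / (1.0 + np.exp(-(x - c * t)))

def noise_window(x, t, c, delta):
    """Grid approximation of the retained set {x : d/dx u^TW_t(x) >= delta}.

    The slope is unimodal, so the set is an interval; returns its endpoints,
    or None if the slope never reaches delta."""
    slope = np.gradient(front(x, t, c), x)   # finite-difference x-derivative
    keep = slope >= delta
    if not keep.any():
        return None
    return x[keep].min(), x[keep].max()

x = np.linspace(-20.0, 20.0, 40001)
c, delta = 0.5, 0.1
s = np.sqrt(1.0 - 4.0 * delta)
a = np.log((1.0 + s) / (1.0 - s))            # exact half-width of the window
for t in (0.0, 4.0, 8.0):
    left, right = noise_window(x, t, c, delta)
    print(f"t={t:3.1f}  window=[{left:+.3f}, {right:+.3f}]  "
          f"exact=[{c * t - a:+.3f}, {c * t + a:+.3f}]")
```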

Theorem 8

Assume that the wave speed is strictly positive, \(c>0\). Fix \(\delta>0\). The diffusion coefficients as derived in Sect. 4.2,

where

are jointly continuous and Lipschitz continuous in the second variable with Lipschitz constant uniform in m and \(t\leq T\), and satisfy condition (ii) of Theorem 6.

For a proof see Thm. 6.5.5 in [18].

6 Summary and Conclusions

In this paper we have investigated the derivation of neural field equations from detailed neuron models. Following a common approach in the literature, we started from a phenomenological Markov chain model that yields the neural field equation in the infinite-population limit and allows one to obtain stochastic or deterministic corrections for networks of finite populations. As a novelty with respect to the existing literature, we considered a new choice of jump rates, given in the activity-based setting as

and showed that it leads to qualitatively different results in dynamical states with high (resp. low) activity in comparison with the rates

considered in the literature so far (see for example [11, 13]).

We believe that the rates (25) provide a better description of finite-size effects in neural fields, for the following two main reasons.

1. The neural field equations evolve on a different time scale than single-neuron activity. In the activity-based setting they describe a coarse-grained, time-averaged population activity, and in the voltage-based setting they are derived under the assumption that the activity in each population is slowly varying, so that it can be assumed to be related to the population potential via \(a = F (u)\). The rates (25) reflect this property better than the rates (26), in particular in regimes with high (resp. low) activity.

2. Fluctuations due to finite-size effects should be strong where \(F^{\prime}\gg1\), that is, where small changes in the synaptic input lead to comparatively large changes in the population activity. They should become small, however, where \(F^{\prime}\ll1\), in particular around the stable fixed points of the system. This is captured by the jump rates (25), whereas for the rates (26) fluctuations are high wherever the activity is high.
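The contrast drawn in point 2 can be illustrated with a hypothetical sigmoidal gain \(F(u) = 1/(1+e^{-\beta(u-\theta)})\), where β and θ are illustrative parameters not taken from the text. As rough proxies for the two noise profiles, the sketch compares the gain \(F'(u)\), which by the argument above should govern finite-size fluctuations under (25), with the activity \(F(u)\) itself, which per the text governs them under (26); neither function is the actual jump rate.

```python
import numpy as np

def F(u, beta=1.0, theta=0.0):
    """Hypothetical sigmoidal gain function F(u) = 1 / (1 + exp(-beta (u - theta)))."""
    return 1.0 / (1.0 + np.exp(-beta * (u - theta)))

def F_prime(u, beta=1.0, theta=0.0):
    """Derivative beta * F * (1 - F), maximal (= beta/4) at the threshold u = theta."""
    f = F(u, beta, theta)
    return beta * f * (1.0 - f)

for u in (-6.0, 0.0, 6.0):   # low activity, threshold, high activity
    print(f"u={u:+.0f}  activity F={F(u):.4f}  gain F'={F_prime(u):.4f}")
```

At high activity (u well above θ) F is close to 1 while F′ is close to 0, which is the regime where the two proxies, and hence the two choices of rates, differ most.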

The corrections to the neural field equation derived in this setting are of a qualitatively different form than the ones previously considered. As a consequence, one should reconsider effects of intrinsic noise due to finite-size effects as studied for example in [25]. In particular the lowest order correction to the moment equations, or the additive part of the stochastic correction, vanishes when linearizing around a stationary solution. The noise is therefore of smaller order than previously assumed.

This is not the case for stable moving patterns like traveling fronts or pulses, suggesting that the movement itself is a main source of noise. We have for the first time rigorously derived a well-posed stochastic continuum neural field equation with an additive noise term that can be used to study the finite-size effects on these kinds of solutions. It is particularly suitable for the analysis of traveling wave solutions, since the monotonicity of the solution allows one to consider a multiplicative noise term as derived in Sect. 4.2.

References

[1] Amari S. Dynamics of pattern formation in lateral-inhibition type neural fields. Biol Cybern. 1977;27:77–87.

[2] Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys J. 1972;12:1–24.

[3] Wilson HR, Cowan JD. A mathematical theory of the functional dynamics of cortical and thalamic nervous tissue. Kybernetik. 1973;13:55–80.

[4] Ermentrout GB, Terman DH. Mathematical foundations of neuroscience. New York: Springer; 2010.

[5] Coombes S, beim Graben P, Potthast R, Wright J. Neural fields—theory and applications. Berlin: Springer; 2014.

[6] Ermentrout GB. Neural networks as spatio-temporal pattern-forming systems. Rep Prog Phys. 1998;61:353–430.

[7] Bressloff PC. Spatiotemporal dynamics of continuum neural fields. J Phys A. 2011;45:033001.

[8] Bressloff PC. Waves in neural media. New York: Springer; 2014.

[9] Ermentrout GB, McLeod JB. Existence and uniqueness of travelling waves for a neural network. Proc R Soc Edinb A. 1993;123:461–78.

[10] Bressloff PC, Newby JM. Metastability in a stochastic neural network modeled as a velocity jump Markov process. SIAM J Appl Dyn Syst. 2013;12(3):1394–435.

[11] Bressloff PC. Stochastic neural field theory and the system-size expansion. SIAM J Appl Math. 2009;70(5):1488–521.

[12] Buice MA, Cowan JD. Field-theoretic approach to fluctuation effects in neural networks. Phys Rev E. 2007;75:051919.

[13] Riedler MG, Buckwar E. Laws of large numbers and Langevin approximations for stochastic neural field equations. J Math Neurosci. 2013;3:1.

[14] Riedler MG, Thieullen M, Wainrib G. Limit theorems for infinite-dimensional piecewise deterministic Markov processes. Applications to stochastic excitable membrane models. Electron J Probab. 2012;17:55.

[15] Buice MA, Cowan JD, Chow CC. Systematic fluctuation expansion for neural network activity equations. Neural Comput. 2010;22(2):377–426.

[16] Lang E. Multiscale analysis of traveling waves in stochastic neural fields. SIAM J Appl Dyn Syst. 2016;15(3):1581–614.

[17] Lang E, Stannat W. \(L^{2}\)-Stability of traveling wave solutions to nonlocal evolution equations. J Differ Equ. 2016;261(8):4275–97.

[18] Lang E. Traveling waves in stochastic neural fields [PhD thesis]. Technische Universität Berlin; 2016. doi:10.14279/depositonce-5019.

[19] Chen F. Travelling waves for a neural network. Electron J Differ Equ. 2003;2003:13.

[20] Kallenberg O. Foundations of modern probability. 2nd ed. New York: Springer; 2002.

[21] Stroock DW. An introduction to Markov processes. Berlin: Springer; 2014.

[22] Ethier SN, Kurtz TG. Markov processes: characterization and convergence. New York: Wiley; 1986.

[23] Prévôt C, Röckner M. A concise course on stochastic partial differential equations. Berlin: Springer; 2007.

[24] Da Prato G, Zabczyk J. Stochastic equations in infinite dimensions. 2nd ed. Cambridge: Cambridge University Press; 2014.

[25] Bressloff PC. Metastable states and quasicycles in a stochastic Wilson–Cowan model of neuronal population dynamics. Phys Rev E. 2010;82:051903.

Acknowledgements

The work of E. Lang was supported by the DFG RTG 1845 and partially supported by the BMBF, FKZ01GQ1001B. The work of W. Stannat was supported by the BMBF, FKZ01GQ1001B.

Additional information

Competing Interests

The authors declare that they have no competing interests.

Authors’ Contributions

EL performed the analysis and wrote a first version of the manuscript. WS contributed mathematical discussions and rewrote versions of the manuscript. All authors read and approved the final manuscript.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: Proof of Theorem 6

Set \(v_{t} = u_{t} - u^{\mathrm{TW}}_{t}\) and \(v_{t}^{m} = u^{m}_{t} - \pi^{m}(u^{\mathrm{TW}}_{t})\). Note that

For the proof of the theorem it therefore suffices to show that

since this will imply that

By Itô’s formula,

In order to finally apply Gronwall’s lemma, we estimate the terms one by one.
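For reference, the estimates below are eventually combined through the standard integral form of Gronwall's lemma, recalled here as a sketch. Identifying \(\varphi\) with a quantity such as \(E(\sup_{s\leq t}\|v^{m}_{s}-v_{s}\|^{p})\) is our reading of the later steps (which integrate, maximize over \(t\leq T\), and take expectations), not a formula stated at this point of the text.

```latex
% Integral form of Gronwall's lemma (standard statement).
% Let \varphi:[0,T] \to [0,\infty) be bounded and measurable, let
% \alpha:[0,T] \to [0,\infty) be nondecreasing, and let K \geq 0. If
\[
  \varphi(t) \leq \alpha(t) + K \int_{0}^{t} \varphi(s) \,ds
  \quad \text{for all } t \in [0,T],
\]
% then
\[
  \varphi(t) \leq \alpha(t)\, e^{K t}
  \quad \text{for all } t \in [0,T].
\]
```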

A.1 The Drift

We start by regrouping the terms in a suitable way. We have

Using the Cauchy–Schwarz inequality we get

With (27) it follows that

Another application of the Cauchy–Schwarz inequality yields

Using integration by parts, (23), and assumption (24), we obtain

Analogously,

Moreover, we observe that

and

Finally we consider

We have

and, as in (27),

The last summand satisfies, using (23) and (27),

A.2 The Itô Correction

We have

Let \((e_{k})\) be an orthonormal basis of \(L^{2}(\mathbb {R})\). Note that by Parseval’s identity

Thus,

Consequently,

and

Using Parseval’s identity again we get

A.3 Application of Gronwall’s Lemma

We use \(K\), \(K_{1}\), \(K_{2}\), \(\tilde{K}\), etc. to denote suitable constants that may differ from step to step. Summarizing the previous steps and using Young’s inequality we arrive at

where

and

is a martingale with quadratic variation process

Applying Itô’s formula to the real-valued stochastic process \(\| v^{m}_{t}-v_{t}\|^{2}\) we obtain for \(p\geq2\)

Estimating the last term as above and using Young’s inequality we obtain

Integrating, maximizing over \(t\leq T\), and taking expectations we get

We estimate the last term using the Burkholder–Davis–Gundy inequality, (28), and Young’s inequality:

Bringing the first summand to the left-hand side of (29), we obtain

We estimate the last term as before and obtain

Altogether we arrive at

An application of Gronwall’s lemma yields

The sequence of continuous functions \(f^{m}: [0,T] \rightarrow \mathbb {R}\)

is decreasing and converges pointwise to 0 since all the integrands are in \(L^{2}(\mathbb {R})\). By Dini’s theorem the convergence is uniform. This together with the facts that \({\|\sigma(t,v_{t})\|_{2}^{2} \leq K(1+\|v_{t}\| ^{2})}\) and \({E ( \sup_{t\leq T} \|v_{t}\|^{2} ) < \infty}\) by Proposition 3, assumptions (i) and (ii), and dominated convergence implies that

and hence

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Lang, E., Stannat, W. Finite-Size Effects on Traveling Wave Solutions to Neural Field Equations. J. Math. Neurosc. 7, 5 (2017). https://doi.org/10.1186/s13408-017-0048-2
