Abstract

Background

Whether information from clinical trial registries (CTRs) and published randomised controlled trials (RCTs) differs remains unknown. Knowing more about discrepancies should alert those who rely on RCTs for medical decision-making to possible dissemination or reporting bias. To help in critically appraising research relevant for clinical practice, we sought possible discrepancies between what CTRs record and what paediatric RCTs actually publish. For this purpose, after identifying six reporting domains including funding, design, and outcomes, we collected data from 20 consecutive RCTs published in a widely read peer-reviewed paediatric journal and cross-checked reported features with those in the corresponding CTRs.

Methods

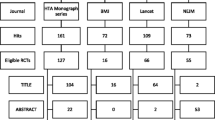

We collected data for 20 unselected, consecutive paediatric RCTs published in a widely read peer-reviewed journal from July to November 2013. To assess discrepancies, two reviewers identified and scored six reporting domains: funding and conflict of interests; sample size; inclusion and exclusion criteria or crossover; primary and secondary outcomes; early study completion; and main outcome reporting. After applying the Critical Appraisal Skills Programme (CASP) checklist, five reviewer pairs cross-checked CTRs and matching RCTs, then mapped and coded the reporting domains and scored combined discrepancy as low, medium or high.

Results

The 20 RCTs were registered in five different CTRs. Even though the 20 RCTs fulfilled the CASP general criteria for assessing internal validity, 19 trials had medium or high combined discrepancy scores between what the RCTs reported and what the five matched CTRs stated. All 20 RCTs selectively reported or failed to report main outcomes, 9 had discrepancies in declaring sponsorship, 8 had discrepancies in the sample size, 9 failed to respect inclusion or exclusion criteria, 11 downgraded or modified the primary outcome or upgraded secondary outcomes, and 13 completed early without justification. For seven trials, the CTRs failed to index automatically the URL address or the RCT reference; for 12, the CTRs recorded RCT details but the authors failed to report the results.

Conclusions

Major discrepancies between what CTRs record and paediatric RCTs publish raise concern about what clinical trials conclude. Our findings should make clinicians, who rely on RCT results for medical decision-making, aware of dissemination or reporting bias. Trialists need to bring CTR data and reported protocols into line with published data.

Background

An emerging problem that has rarely been investigated concerns the human factors that undermine clinical randomised controlled trials (RCTs) at various stages [1]. In the 1920s and 1930s two scientists in different research fields [2, 3] helped enormously to reappraise statistical theory and methodology in designing trials, thus clarifying how bias influences research. Previous papers have already compared protocols and registered data for clinical outcomes in published RCTs in various clinical settings [4–7], and three studies have investigated discrepancies in selectively reported outcomes [8, 9]. Even though concealment and blinding tools can control human factors in RCT designs [10] and the International Committee of Medical Journal Editors (ICMJE) [11] and Consolidated Standards of Reporting Trials (CONSORT) [12] receive wide consensus, a recent survey among journal editors endorsing the ICMJE and CONSORT established policies disclosed that only 27 % of the 33 respondents cross-checked the data reported in the submitted manuscript against the data registered prospectively in clinical trial registries (CTRs) [13]. In recent years the Food and Drug Administration (FDA) expanded the regulatory requirements for conducting clinical trials and the truthfulness of the data submitted, and now requires authors to annually update CTRs, thus providing clear informative results [14]. Whether trial design, conduction and outcome data from the various CTRs and published RCTs differ or are incompletely reported remains unknown [4, 15, 16]. One systematic review assessed primary outcome discrepancies [17]. No studies have analysed trial reporting domains more widely, and none have addressed paediatric trials. 
Nor, apart from the Critical Appraisal Skills Programme (CASP) checklist [18], do paediatricians and clinical researchers have tools for assessing discrepancies and risk of bias by comparing what clinical researchers record in the registered study hypothesis and protocol with what they then publish in RCTs [19–23]. Knowing more about trial discrepancies should alert paediatricians, clinical researchers, peer-reviewers, editors, and policymakers to possible dissemination or reporting bias undermining paediatric trials whose results provide the best information for medical care [24].

To help in critically appraising research relevant for clinical practice we sought possible discrepancies between what CTRs record and paediatric RCTs actually publish. For this purpose, after identifying six reporting domains, including funding, design, and outcomes, we collected data from a sample of 20 unselected consecutive RCTs published in a widely read peer-reviewed paediatric journal and cross-checked reported features with those in the corresponding CTRs.

Methods

In a study conducted from November 2012 to January 2016, to seek possible discrepancies between what CTRs record and paediatric RCTs actually publish, two reviewers, an experienced clinical paediatrician and an experienced researcher (PR and RD), identified six major reporting domains: five based on their long experience in critically appraising well-conducted clinical trials (reported funding and conflict of interest incompletely declared; discrepant or unclear sample size; inclusion and exclusion criteria not being respected or selective crossover; primary outcome downgraded and secondary outcomes upgraded and reported as primary outcomes in the publication; early study completion unjustified), and one domain (main outcome selectively reported or unreported) based on the Cochrane risk of bias tool [23, 24]. Over the first year they developed, assessed, and graded CTR-RCT discrepancy scores. Over the ensuing years five investigator pairs, supervised by two tutors (PR and RD), and attending the annual course held at Bambino Gesù Children’s Hospital, and subsequent weekly meetings on critically appraising scientific publications (G.A.L.I.L.E.O.), carefully read the 20 consecutive RCTs published monthly from July to November 2013 in the journal Pediatrics [25–44]. They then searched the CTR web link for each corresponding CTR, assessed details, and ‘history of changes’ after the initial registration, and critically appraised each published RCT with the CASP checklist. 
The five investigator pairs then independently mapped and coded inconsistencies for each reporting domain in the 20 trials, and repeatedly searched online (last access 20 January 2016) the corresponding CTR websites: the United States National Institutes of Health (NCT) (https://clinicaltrials.gov), the International Standard Randomised Controlled Trial Number (ISRCTN) (currently BioMed Central Open Access publishers) (http://www.isrctn.com/), the Nederlands Trials Register (NTR) (http://www.trialregister.nl/trialreg/index.asp), the Australian and New Zealand Clinical Trial Registry (ACTRN) (http://www.anzctr.org.au) and the Clinical Trial Registry-India (CTRI) (http://ctri.nic.in/Clinicaltrials/login.php). Meanwhile, PR and RD supervised the five investigator pairs in scoring the six individual reporting domains and combined scores. PR and RD then consulted the external tutor (FP), and conflicting interpretations were resolved by consensus. PR and RD with the five investigator pairs then reassessed inconsistencies between CTRs and RCTs for each trial over 2 months and, after several attempts, reached 100 % final agreement on grading discrepancy scores from 1 to 3 points, according to the reporting domain importance, and on grading combined discrepancy scores as low, medium and high (Table 1, Additional file 1). Higher discrepancy scores suggested risk of bias.
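The grading step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the per-domain point values below are hypothetical (the actual weights are given in Table 1 and Additional file 1, which are not reproduced here) and were chosen so that they range from 1 to 3 and sum to 14, the top of the high band. The medium (5–9) and high (10–14) bands are those reported in the Results; treating anything below 5 as low is an inference from those bands.

```python
from typing import Dict

# HYPOTHETICAL weights (1 to 3 points per domain). The real values are in
# Table 1 / Additional file 1 of the paper and may differ from these.
DOMAIN_POINTS: Dict[str, int] = {
    "funding_and_conflict_of_interest": 2,
    "sample_size": 2,
    "inclusion_exclusion_or_crossover": 2,
    "primary_secondary_outcomes": 3,
    "early_completion": 2,
    "main_outcome_reporting": 3,
}
MAX_SCORE = sum(DOMAIN_POINTS.values())  # 14 with the assumed weights


def combined_score(flags: Dict[str, bool]) -> int:
    """Sum the points of every domain flagged as discrepant for one trial."""
    unknown = set(flags) - set(DOMAIN_POINTS)
    if unknown:
        raise ValueError(f"unknown reporting domains: {sorted(unknown)}")
    return sum(
        points
        for domain, points in DOMAIN_POINTS.items()
        if flags.get(domain, False)
    )


def grade(score: int) -> str:
    """Map a combined score to low, medium (5-9) or high (10-14)."""
    if not 0 <= score <= MAX_SCORE:
        raise ValueError(f"score must be between 0 and {MAX_SCORE}")
    if score >= 10:
        return "high"
    if score >= 5:
        return "medium"
    return "low"  # assumption: everything below the medium band is low


# Example: a trial flagged for outcome switching, early completion and
# selective main outcome reporting.
example = {
    "primary_secondary_outcomes": True,
    "early_completion": True,
    "main_outcome_reporting": True,
}
example_grade = grade(combined_score(example))
```

With the assumed weights, the example trial scores 3 + 2 + 3 = 8 points and falls in the medium band; different weights would shift the result.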

Results

When we compared what the 20 paediatric RCTs published in the journal Pediatrics reported and what the collected five matching cross-checked CTRs recorded, 9 trials had medium (5–9) and 10 high (10–14) combined discrepancy scores (Table 1, Additional file 1). The five investigator pairs who critically appraised the trials with the CASP checklist found that all 20 published RCTs fulfilled the general criteria for assessing trial internal validity. Of the 20 RCTs, 11 were registered in the NCT [25, 26, 30, 32, 34–39, 44], 4 in the ISRCTN [27, 29, 31, 43], 2 in the ACTRN [33, 42], 1 in the NTR [28], 1 was registered both in the NTR and in the ACTRN [40], and 1 in the CTRI [41]. Assessment for the reporting domains disclosed that 9 trials had discrepancies in declaring sponsorship and conflict of interests [25, 33–37, 40, 41, 44], 8 trials had a discrepant or unclear sample size [26, 31–33, 40–43], 9 trials failed to respect inclusion or exclusion criteria [27, 28, 30, 34, 38–41, 44], 11 trials downgraded or modified primary outcome measures or upgraded secondary outcomes [30, 33, 35–39, 41–44], 13 trials completed early [29, 31, 32, 34–40, 42–44], and all 20 paediatric clinical trials selectively misreported outcomes or failed to report main outcomes, thus tending to overstress the positive results (Table 1, Additional file 1). A single-centre trial was retrospectively registered in the ACTRN [42], and one multicentre trial was registered prospectively in the NTR and retrospectively in the ACTRN [40]. For this multicentre trial, although the NTR reported that the trial had stopped, the ACTRN stated ‘still recruiting’, and neither CTR was updated. Two papers failed to respect the intention-to-treat analysis [29, 40]. 
Two trials were completed early during a planned interim analysis by an external Data Safety Monitoring Committee (DSMC): the first was stopped for efficacy results (more harm than good in the intervention group), and the target sample remained unreached, but the ISRCTN failed to report the cause [31], and the second, a multicentre trial, owing to futility in the results [40], underreported or misreported outcomes in the two registries (NTR and ACTRN). Published RCT abstracts and results both contained inconsistencies in reporting the reasons for stopping the trials [31, 40]. In another three CTRs and corresponding RCTs, the five investigator pairs detected a discrepancy between the primary outcome and efficacy results reported (more harm than good in the intervention group) [29, 35, 41]. Three published RCTs underreported insignificant results [28, 30, 33], one upgrading the secondary outcome [30], and one downgrading the primary outcome [33]. Two trials, one registered in the ACTRN and one in the CTRI, downgraded primary outcome in the published RCT [33, 41], two upgraded secondary outcomes, both registered in the NCT [30, 39], and another eight modified primary outcome or primary outcome measures, six registered in the NCT, one in ISRCTN, and one in ACTRN [35–39, 42–44]. For one clinical trial, the NCT automatically reported the previous RCT paper reference that included the NCT primary outcome, but neglected to report the RCT reference that included secondary outcomes that had been upgraded and yielded insignificant results [30]. For another clinical trial, the authors reported their previous published papers in the NCT record, but neglected to report the second published RCT giving a partially modified primary outcome in the updated NCT data, recorded after RCT publication [37]. 
Of the 20 clinical trials, seven CTRs (three registered in the NCT, two in the NTR, and two in the ACTRN) failed to index automatically the RCT reference or Uniform Resource Locator (URL) address, and all these trials had discrepancies in outcome data or yielded insignificant results [28, 33, 34, 37, 38, 40, 42]. Twelve CTRs reported the RCT references or URL addresses, but the authors failed to summarise the main results [25–27, 29–32, 35, 36, 39, 43, 44]. For only one clinical trial did the authors report the main results in the CTRI but neglect to report increased side effects in the intervention group [41] (Table 1, Additional file 1).
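The per-domain counts reported above can be re-derived from the reference numbers cited for each domain. The following Python sketch does only that bookkeeping: the reference numbers are copied from the text, whereas the helper `expand` and the dictionary keys are illustrative names, not part of the study.

```python
from collections import Counter


def expand(*parts):
    """Expand reference numbers and inclusive (start, end) ranges into a set."""
    refs = set()
    for part in parts:
        if isinstance(part, tuple):
            start, end = part
            refs.update(range(start, end + 1))
        else:
            refs.add(part)
    return refs


# The 20 appraised RCTs are references [25-44].
ALL_TRIALS = expand((25, 44))

# Reference numbers cited in the Results for each reporting domain.
DOMAINS = {
    "sponsorship or conflict of interests": expand(25, (33, 37), 40, 41, 44),
    "sample size": expand(26, (31, 33), (40, 43)),
    "inclusion or exclusion criteria": expand(27, 28, 30, 34, (38, 41), 44),
    "primary or secondary outcomes": expand(30, 33, (35, 39), (41, 44)),
    "early completion": expand(29, 31, 32, (34, 40), (42, 44)),
    "main outcome reporting": ALL_TRIALS,  # all 20 trials
}

# Number of trials flagged in each domain: 9, 8, 9, 11, 13 and 20.
domain_counts = {name: len(refs) for name, refs in DOMAINS.items()}

# Number of domains in which each trial (by reference number) is flagged.
flags_per_trial = Counter(ref for refs in DOMAINS.values() for ref in refs)

for name, count in domain_counts.items():
    print(f"{name}: {count}/20 trials")
```

The same structure shows how discrepancies cluster: the multicentre trial [40], for instance, is flagged in five of the six domains. Note that this tally counts domains, not the weighted discrepancy points used for the combined scores.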

Discussion

By comparing the six reporting domains, mapping, coding and cross-checking 20 published RCTs with the matched five CTRs, our study, applied to clinical paediatric trials published in a widely read peer-reviewed journal, suggests that many trials have discrepancies in reporting domains. The medium or high combined discrepancy scores we found, when we repeatedly searched each database online until January 2016, underline major, widely ranging discrepancies between what CTRs record and what published paediatric RCTs then report. The discrepancies we identified in declaring funding and conflict of interests in nine trials, in the number of eligible and enrolled participants in eight trials, and in respecting inclusion and exclusion criteria in nine trials emphasise the generally imperfect reporting. These major discrepancies, especially those involving changes in the original study hypotheses, trial designs, study conduct and outcome reporting, raise concern about the trustworthiness of scientific trials, as previous papers have underlined [5–9, 13, 15, 16].

Surprisingly, 11 of the 20 published RCTs modified or downgraded primary outcomes or upgraded secondary outcomes (reported secondary outcomes as primary outcomes in the publication), misreporting or tending to overstress positive results. This discrepancy underlines concerns about trial credibility that the CASP checklist overlooks. By assessing and scoring CTR-RCT discrepancies in six clinical trial reporting domains, our study therefore expands current knowledge, thus emphasising the need for international clinical trial regulators to make publicly available CTR-RCT discrepancies in published RCT findings, as recently underlined by the WHO Statement on public disclosure of clinical trial results (http://www.who.int/ictrp/results/reporting/en/; last accessed 20 January 2016). For example, one trial (number 5 in Table 1) [29] addresses cognitive improvement as the primary outcome and reports a statistically significant difference between the intervention and control groups, but leaves unaddressed clinically important patient-centred outcomes, such as deaths and cerebral palsy. Even though deaths increased and cerebral palsy doubled in the intervention group, in their conclusions the investigators paradoxically report that ‘nonsignificant trends in the data suggested a small adverse effect’. In another trial (number 7 in Table 1) [31] an external DSMC decided to stop the RCT early owing to hyperthermia in the infants enrolled in the intervention group. Neither the highlights section in the RCT nor the conclusions report this result. Although the published RCT reports when the trial stopped, our findings disclose an important clinically relevant feature, namely more harm than good for the primary outcome in the intervention group.

Another unexpected discrepancy in a multicentre trial (number 16 in Table 1) [40] was that the authors inappropriately and unclearly reported that an external DSMC stopped the trial for futility. The numerous CTRs updated only by dataset supervisors (URL or RCT reference cited in 12/20 trials) and failing to report results (7/20 trials) underline discrepancies involving incompletely and selectively reported clinical outcome results [5, 45–47]. This finding, along with underappreciated core patient-centred outcomes, raises ethical concerns and suggests dissemination or reporting bias [45–49]. Even though the journal Pediatrics complies with ICMJE requirements [11], authors are required to submit a completed flowchart and checklist for the CONSORT statement [12] before publication (http://www.aappublications.org/content/pediatrics-author-guidelines#acceptance_criteria; last accessed 20 January 2016), and all 20 published RCTs give the trial registration number on the first page, our findings underline that still today few authors follow these rules [13, 14, 17].

An unexpected finding concerned prospective trial registration [11]. Although our study design did not require us to check clinical trial registration timing, we detected two trials (numbers 16 and 18 in Table 1) [40, 42] that had been registered retrospectively and failed to comply with ICMJE registration requirements. One of these two, a multicentre RCT (number 16 in Table 1) [40], was registered prospectively in the NTR and retrospectively in the ACTRN, and although the NTR reported that the trial had stopped for futility, the ACTRN stated ‘still recruiting’. Because a retrospectively registered trial could be hard to identify, regulators need to find new ways to encourage researchers to update information in a timely manner [50–52].

Most important, the major discrepancies our study highlighted in paediatric clinical trials give new clinically important information that researchers synthesising evidence from published RCTs in scientific literature reviews could fail to identify without cross-checking CTRs. Hence, they could provide less reliable scientific evidence, and misdirect future research priorities [49–53]. Our findings could also alert medical journals to the need to introduce rules that require investigators to submit the original Institutional Review Board-approved protocol (and its subsequent amended versions), and to explain later changes, thus helping reviewers and editors in deciding whether to publish the trial and what the final manuscript should state.

Limitations

We acknowledge that our study has several limitations. Because we applied our study method to only a few unselected consecutive paediatric RCTs published in a major paediatric journal, rather than including other authoritative journals with higher impact factors, our findings require further validation. Even though our scoring for reporting domains needs further refinement, our findings should make it easier for physicians to use research results from clinical trials. Because most authors neglected to update CTRs, we were unable to seek discrepancies in what authors recorded in CTRs and reported in published RCTs by cross-checking clinical outcome results. Similarly, because neither the five CTRs nor the 20 matched RCTs gave the necessary information, we were unable to assess data for patient nonresponse and refusal. Although we analysed a small RCT sample, we found no differences in combined CTR-RCT discrepancy scores among the various CTRs. A final limitation is that we failed to assess interobserver reliability for the different assessor times for individual items and combined CTR-RCT discrepancy scores.

Conclusions

Our study identifies major discrepancies between what CTRs record and paediatric RCTs publish. Our findings should make clinicians, who rely on RCT results for medical decision-making, aware of dissemination or reporting bias. Trialists need to bring CTR data and reported protocols into line with published data. Clinical researchers and reviewers could search for CTR-RCT discrepancies to cross-check inconsistencies in core clinical trial reporting domains. Medical journals need to introduce rules that require investigators to submit the original Institutional Review Board-approved protocol (and its subsequent amended versions), and to explain any discrepancies. Assessing discrepancies would, with little effort, provide greater transparency, avoid wasting research resources, and encourage those who prepare medical recommendations and guidelines to think more critically. Future studies need to clarify whether the trial discrepancies we report warrant scepticism regarding study validity, or call for trialists to be more diligent about updating CTR data.

Abbreviations

- ACTRN: The Australian and New Zealand Clinical Trial Registry

- CASP: Critical Appraisal Skills Programme

- CONSORT: Consolidated Standards of Reporting Trials

- CTRI: The Clinical Trial Registry-India

- CTRs: Clinical trial registries

- ICMJE: International Committee of Medical Journal Editors

- ISRCTN: International Standard Randomised Controlled Trial Number Registry

- NCT: United States National Institute of Health Clinical Trial Registry

- NTR: The Nederlands Trials Register

- RCTs: Randomised controlled trials

- URL: Uniform Resource Locator

References

King M, Nazareth I, Lampe F, Bower P, Chandler M, Morou M, et al. Impact of participant and physician intervention preferences on randomized trials: a systematic review. JAMA. 2005;293:1089–99.

Fisher RA. The design of experiments. Edinburgh: Oliver and Boyd; 1935.

Hill AB. Principles of medical statistics. London: The Lancet; 1937.

Killeen S, Sourallous P, Hunter IA, Hartley JE, Grady HL. Registration rates, adequacy of registration, and a comparison of registered and published primary outcomes in randomized controlled trials published in surgery journals. Ann Surg. 2014;259:193–6.

Chan AW, Hróbjartsson A, Haahr MT, Gøtzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004;291:2457–65.

Mathieu S, Boutron I, Moher D, Altman DG, Ravaud P. Comparison of registered and published primary outcomes in randomized controlled trials. JAMA. 2009;302:977–84.

Hannink G, Gooszen HG, Rovers MM. Comparison of registered and published primary outcomes in randomized clinical trials of surgical interventions. Ann Surg. 2013;257:818–23.

Hartung DM, Zarin DA, Guise JM, McDonagh M, Paynter R, Helfand M. Reporting discrepancies between the ClinicalTrials.gov results database and peer-reviewed publications. Ann Intern Med. 2014;160:477–83.

Su CX, Han M, Ren J, Li WY, Yue SJ, Hao YF, Liu JP. Empirical evidence for outcome reporting bias in randomized clinical trials of acupuncture: comparison of registered records and subsequent publications. Trials. 2015;16:28.

Schulz KF, Grimes DA. Blinding in randomised trials: hiding who got what. Lancet. 2002;359:696–700.

De Angelis C, Drazen JM, Frizelle FA, Haug C, Hoey J, Horton R, et al. Clinical trial registration: a statement from the International Committee of Medical Journal Editors. N Engl J Med. 2004;351:1250–1.

Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomised trials. Lancet. 2001;357:1191–4.

Hooft L, Korevaar DA, Molenaar N, Bossuyt PMM, Scholten RJPM. Endorsement of ICMJE’s clinical trial registration policy: a survey among journal editors. Neth J Med. 2014;72(7):349–55.

FDA. Regulations relating to Good Clinical Practice and Clinical Trials. Disqualification of a Clinical Investigator. 30 April 2012. http://www.fda.gov/ScienceResearch/SpecialTopics/RunningClinicalTrials/ucm155713.htm. Accessed 20 Jan 2016.

Ioannidis JPA, Greenland S, Hlatky MA, Khoury MJ, Macleod MR, Moher D, et al. Increasing value and reducing waste in research design, conduct and analysis. Lancet. 2014;383:166–75.

Furukawa TA, Watanabe N, Omori IM, Montori VM, Guyatt GH. Association between unreported outcomes and effect size estimates in Cochrane meta-analyses. JAMA. 2007;297:468–70.

Jones CW, Keil LG, Holland WC, Caughey MC, Platts-Mills TF. Comparison of registered and published outcomes in randomized controlled trials: a systematic review. BMC Med. 2015;13:282.

Critical Appraisal Skills Programme (CASP). Randomised controlled trials checklist (31.05.13). http://www.bettervaluehealthcare.net/wp-content/uploads/2015/07/CASP-Randomised-Controlled-Trial-Checklist_2015.pdf. Accessed 20 Jan 2016.

Medicinal Products for human use. Clinical trials. Regulation EU No 536/2014. http://ec.europa.eu/health/human-use/clinical-trials/index_en.htm. Accessed 20 Jan 2016.

ClinicalTrials.gov. How to submit your results. (Last page reviewed in December 2014). https://www.clinicaltrials.gov/ct2/manage-recs/how-report#ViewingYourRecord. Accessed 20 Jan 2016.

Schroter S, Glasziou P, Heneghan C. Quality of descriptions of treatments: a review of published randomised controlled trials. BMJ Open. 2012;2:e001978.

Armijo-Olivo S, Fuentes J, Ospina M, Saltaji H, Hartling L. Inconsistency in the items included in tools used in general health research and physical therapy to evaluate the methodological quality of randomized controlled trials: a descriptive analysis. BMC Med Res Methodol. 2013;13:116.

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, et al.; Cochrane Bias Methods Group; Cochrane Statistical Methods Group. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343:d5928.

Rosati P, Porzsolt F. A practical educational tool for teaching child-care hospital professionals attending evidence-based practice courses for continuing medical education to appraise internal validity in systematic reviews. J Eval Clin Pract. 2013;19(4):648–52.

Carroll AE, Bauer NS, Dugan TM, Anand V, Saha C, Downs SM. Use of a computerized decision aid for attention-deficit/hyperactivity disorder (ADHD) diagnosis: a randomized controlled trial. Pediatrics. 2013;132:e623–9.

Davoli AM, Broccoli S, Bonvicini L, Fabbri A, Ferrari E, D’Angelo S, et al. Pediatrician-led motivational interviewing to treat overweight children: an RCT. Pediatrics. 2013;132:e1236–45.

McCarthy LK, Twomey AR, Molloy EJ, Murphy JF, O’Donnell CP. A randomized trial of nasal prong or face mask for respiratory support for preterm newborns. Pediatrics. 2013;132:e389–95.

van der Veek SM, Derkx BH, Benninga MA, Boer F, de Haan E. Cognitive behavior therapy for pediatric functional abdominal pain: a randomized controlled trial. Pediatrics. 2013;132:e1163–72.

Field DJ, Firmin R, Azzopardi DV, Cowan F, Juszczak E, Brocklehurst P, NEST Study Group. Neonatal extra-corporeal membrane oxygenation (ECMO) study of temperature (NEST): a randomized controlled trial. Pediatrics. 2013;132:e1247–56.

Aluisio AR, Maroof Z, Chandramohan D, Bruce J, Mughal MZ, Bhutta Z, et al. Vitamin D3 supplementation and childhood diarrhea: a randomized controlled trial. Pediatrics. 2013;132:e832–40.

McCarthy LK, Molloy EJ, Twomey AR, Murphy JF, O’Donnell CP. A randomized trial of exothermic mattresses for preterm newborns in polyethylene bags. Pediatrics. 2013;132:e135–41.

Shaw RJ, St John N, Lilo EA, Jo B, Benitz W, Stevenson DK, et al. Prevention of traumatic stress in mothers with preterm infants: a randomized controlled trial. Pediatrics. 2013;132:e886–94.

Daniels LA, Mallan KM, Nicholson JM, Battistutta D, Magarey A. Outcomes of an early feeding practices intervention to prevent childhood obesity. Pediatrics. 2013;132:e109–18.

Kurowski BG, Wade SL, Kirkwood MW, Brown TM, Stancin T, Taylor HG. Online problem-solving therapy for executive dysfunction after child traumatic brain injury. Pediatrics. 2013;132:e158–66.

Durrmeyer X, Hummler H, Sanchez-Luna M, Carnielli VP, Field D, Greenough A, et al. Two-year outcomes of a randomized controlled trial of inhaled nitric oxide in premature infants. Pediatrics. 2013;132:e695–703.

Leadford AE, Warren JB, Manasyan A, Chomba E, Salas AA, Schelonka R, et al. Plastic bags for prevention of hypothermia in preterm and low birth weight infants. Pediatrics. 2013;132:e128–34.

Ohls RK, Christensen RD, Kamath-Rayne BD, Rosenberg A, Wiedmeier SE, Roohi M, et al. A randomized, masked, placebo-controlled study of darbepoetin alfa in preterm infants. Pediatrics. 2013;132:e119–27.

Alansari K, Sakran M, Davidson BL, Ibrahim K, Alrefai M, Zakaria I. Oral dexamethasone for bronchiolitis: a randomized trial. Pediatrics. 2013;132:e810–6.

Dilli D, Aydin B, Zenciroğlu A, Özyazici E, Beken S, Okumuş N. Treatment outcomes of infants with cyanotic congenital heart disease treated with synbiotics. Pediatrics. 2013;132:e932–8.

Kamlin CO, Schilleman K, Dawson JA, Lopriore E, Donath SM, Schmölzer GM, et al. Mask versus nasal tube for stabilization of preterm infants at birth: a randomized controlled trial. Pediatrics. 2013;132:e381–8.

Malik A, Taneja DK, Devasenapathy N, Rajeshwari K. Short-course prophylactic zinc supplementation for diarrhea morbidity in infants of 6 to 11 months. Pediatrics. 2013;132:e46–52.

McIntosh CG, Tonkin SL, Gunn AJ. Randomized controlled trial of a car safety seat insert to reduce hypoxia in term infants. Pediatrics. 2013;132:326–31.

Wake M, Tobin S, Levickis P, Gold L, Ukoumunne OC, Zens N, et al. Randomized trial of a population-based, home-delivered intervention for preschool language delay. Pediatrics. 2013;132:e895–904.

Belsches TC, Tilly AE, Miller TR, Kambeyanda RH, Leadford A, Manasyan A, et al. Randomized trial of plastic bags to prevent term neonatal hypothermia in a resource-poor setting. Pediatrics. 2013;132:e656–61.

Song F, Parekh S, Hooper L, Loke YK, Ryder J, Sutton AJ, et al. Dissemination and publication of research findings: an updated review of related biases. Health Technol Assess. 2010;14(8):iii,ix-xi,1-193.

Scherer RW, Sieving PC, Ervin AM, Dickersin K. Can we depend on investigators to identify and register randomized controlled trials? PLoS One. 2012;7:e44183.

Smith V, Clarke M, Williamson P, Gargon E. Survey of new 2007 and 2011 Cochrane reviews found 37 % of prespecified outcomes not reported. J Clin Epidemiol. 2015;68(3):237–45.

Boers M, Kirwan JR, Wells G, Beaton D, Gossec L, d’Agostino MA, et al. Developing core outcome measurement sets for clinical trials: OMERACT filter 2.0. J Clin Epidemiol. 2014;67:745–53.

Chalmers I, Altman DG. How can medical journals help prevent poor medical research? Some opportunities presented by electronic publishing. Lancet. 1999;353:490–3.

Tugwell P, Petticrew M, Kristjansson E, Welch V, Ueffing E, Waters E, et al. Assessing equity in systematic reviews: realising the recommendations of the Commission on Social Determinants of Health. BMJ. 2010;341:c4739.

Dechartres A, Charles P, Hopewell S, Ravaud P, Altman DG. Reviews assessing the quality or the reporting of randomized controlled trials are increasing over time but raised questions about how quality is assessed. J Clin Epidemiol. 2011;64:136–44.

Elliott JH, Turner T, Clavisi O, Thomas J, Higgins JP, Mavergames C, et al. Living systematic reviews: an emerging opportunity to narrow the evidence-practice gap. PLoS Med. 2014;11:e1001603.

Tugwell P, Knottnerus JA. Is the ‘Evidence-Pyramid’ now dead? J Clin Epidemiol. 2015;68:1247–50.

Authors’ contributions

FP conceived the original study and revised the manuscript. PR and RD designed the study, assessed and scored the CTR-RCT discrepancy, drafted the manuscript and revised the final version. GR, GT, RI, FG, EF, MZ, CC, VB and RF, working in pairs, searched the studies, extracted the data and revised the manuscript. CC and VB helped with the design of the tables. RF revised the manuscript; PR and RD made substantial revisions to the manuscript. All authors have read and approved the final submitted version.

Paper presented in part as a Poster at the 4th Meeting of the Core Outcome Measures in Effectiveness Trials (COMET) Initiative, 19–20 November 2014, Rome, Italy. Abstract published in Trials 2015;16 Suppl 1:P34. http://www.trialsjournal.com/content/16/S1/P34. Accessed 20 Jan 2016.

The paper was presented as a short communication at Evidence Live, 22 June 2016, Oxford, UK.

Competing interests

This research received no specific grant from any funding agency or institution. The authors declare that they have no financial or nonfinancial competing interests.

Additional file

Additional file 1:

Inconsistencies in the 20 trials published in the journal Pediatrics from July to November 2013, and discrepancy scores assessed by cross-checking what CTRs and their matching RCTs reported. Description of data: an operative table describing in detail the results of the 20 unselected consecutive RCTs, published in the journal Pediatrics from July to November 2013, mapped, coded, and cross-checked in six reporting domains to assess and report inconsistencies on what the authors recorded in CTRs and what they published in RCTs, as rated by predefined CTR-RCT discrepancy scores. (DOCX 56 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Rosati, P., Porzsolt, F., Ricciotti, G. et al. Major discrepancies between what clinical trial registries record and paediatric randomised controlled trials publish. Trials 17, 430 (2016). https://doi.org/10.1186/s13063-016-1551-6

DOI: https://doi.org/10.1186/s13063-016-1551-6