Abstract

Background

Abdominal computed tomography (CT) is a crucial imaging modality that produces cross-sectional images of the abdomen and is particularly important in abdominal trauma, a common form of traumatic injury. However, interpreting CT images is challenging, especially in emergency settings. We therefore developed a novel deep learning-based detection method for the initial screening of abdominal internal organ injuries.

Methods

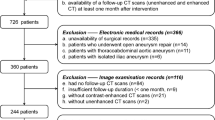

We used a dataset provided by the Kaggle competition, comprising 3,147 patients, of whom 855 (27.16%) were diagnosed with abdominal trauma. After image pre-processing, we employed a 2D semantic segmentation model to segment the images and constructed a 2.5D classification model to assess the probability of injury for each organ. We then evaluated the algorithm’s performance using five-fold cross-validation.

Results

The model performed best in detecting renal injury on abdominal CT scans, with an accuracy of 0.932 (positive predictive value (PPV) of 0.888, negative predictive value (NPV) of 0.943, sensitivity of 0.887, and specificity of 0.944). The accuracy for liver injury detection was 0.873 (PPV of 0.789, NPV of 0.895, sensitivity of 0.789, and specificity of 0.895), while for spleen injury it was 0.771 (PPV of 0.630, NPV of 0.814, sensitivity of 0.626, and specificity of 0.816).

Conclusions

The deep learning model demonstrated the capability to identify multiple organ injuries simultaneously on CT scans and holds potential for application in preliminary screening and adjunctive diagnosis of trauma cases beyond abdominal injuries.

Introduction

Abdominal trauma is a serious health issue that affects the survival of the injured, especially those under 45 years of age [1]. It is a common occurrence in both peaceful and hostile environments, and it mainly involves the liver, spleen, and kidney. Among all types of bodily injuries, those to the abdomen comprise 0.4% to 1.8% [2, 3]. Despite improvements in trauma management, abdominal trauma still poses a significant threat to the survival of the injured, with reported mortality varying globally from 1% to 20% [4,5,6]. Abdominal trauma involves a variety of injuries to the abdominal organs, which have different structures and functions. The main causes of death from abdominal trauma are massive bleeding and severe infection in the abdominal cavity. To reduce the mortality of abdominal trauma, it is essential to quickly and accurately assess the presence and extent of internal organ injury, the type and location of organ injury, and the appropriate treatment [7]. The diagnosis of abdominal trauma is often challenging, as it may not be evident from physical examination, patient symptoms, or laboratory tests. The most widely adopted trauma evaluation system is the American Association for Surgery of Trauma-organ injury scale (AAST-OIS), which was first introduced in 1989 and last updated in 2018. It categorizes injuries from mild to severe into grades I to V, based on the alterations in the anatomical structure of the injured organ. This scale is considered the gold standard for trauma classification [8].

CT is indispensable for assessing suspected abdominal injuries in clinical practice, as it provides a comprehensive view of the abdomen, with a unique role in evaluating abdominal parenchymal organs, hollow organs, mesentery, omentum, and vascular injuries caused by trauma, especially when severe or multi-system injuries are suspected [9]. Based on AAST-OIS criteria, the accurate display and interpretation of CT signs are crucial for the clinical diagnosis and classification for trauma patients [10, 11]. Therefore, the standardization of CT scan images for the precise judgment of radiologists on injury signs, and the consistency of grading interpretation are essential in providing better information for therapeutic strategies.

However, interpreting CT scans in abdominal trauma is typically a complex and time-consuming process. In clinical practice, several factors contribute to the likelihood of errors in abdominal trauma CT diagnosis. These include imaging backlog, understaffing, visual fatigue, and overnight shifts, especially when the caseload exceeds the daily workload [12, 13]. Moreover, the complexity of the abdomen, with its multitude of organs, poses challenges for comprehensive diagnosis, especially in cases involving multiple injuries or active bleeding. Furthermore, anatomical variations and incorrect patient positioning can also lead to misdiagnosis [14]. Even when a second radiologist reviews the diagnostic results, errors and missed diagnoses remain a challenge in clinical practice.

To overcome this challenge, artificial intelligence (AI) technology could facilitate the prompt diagnosis of such injuries and enhance the treatment and care of patients in emergency settings. Therefore, the medical community is increasingly interested in applying AI and machine learning (ML) to assist clinicians. By deploying AI models as virtual diagnostic assistants that serve as secondary image readers, the accuracy and dependability of radiological image interpretation can be significantly enhanced. This gives radiologists greater confidence in their diagnostic assessments. The ability of AI to identify images rapidly can expedite the diagnostic process and improve clinical efficiency [15].

In this study, we developed a novel deep learning-based method to detect severe abdominal organ damage, locate the affected organs, such as the liver, spleen, kidneys, and intestines, and identify any active intra-abdominal bleeding. The purpose of this study is to use AI technology to assist in the rapid diagnosis of abdominal trauma. Clinicians can combine the high-quality image results provided by our deep learning model with the patient’s medical history, symptoms, and signs for a comprehensive and accurate analysis. This approach facilitates faster initial screening and triage, helping to quickly identify genuine abdominal trauma patients.

Methods and materials

Data acquisitions and labelling

The dataset was acquired from the RSNA Abdominal Trauma Detection AI Challenge (2023) [16], a competition database established by the Radiological Society of North America (RSNA) for machine learning model development. It contains more than 4,000 CT scans of patients with various types of abdominal injuries, collected from 23 sites in 14 countries across six continents.

For the present study, we selected data from 3,147 patients and labelled them in six categories: three parenchymatous organs (liver, spleen, kidney), one hollow organ (intestine), extravasation, and any injury. Each category was classified as healthy or injured, as shown in Table 1.

The required images were converted from Digital Imaging and Communications in Medicine (DICOM) format into Portable Network Graphics (PNG) format for quantitative simplification and visual optimization. The screened images were then cropped around the abdominal organs along the Z-stack for three-dimensional model establishment.
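The source does not state how CT intensities were mapped to 8-bit PNG values. One common approach is an intensity window over Hounsfield units, sketched below in plain Python. The function name `window_to_uint8` and the window center and width are illustrative assumptions, not the authors' settings.

```python
def window_to_uint8(hu_slice, center=50.0, width=400.0):
    """Map a 2D slice of Hounsfield units to 0-255 using an intensity window.

    center and width are assumed, illustrative values (a soft-tissue-like
    window); the paper does not report the window that was used.
    """
    low = center - width / 2.0
    high = center + width / 2.0
    out = []
    for row in hu_slice:
        out_row = []
        for hu in row:
            clipped = min(max(hu, low), high)        # clamp to the window
            scaled = (clipped - low) / (high - low)  # rescale to [0, 1]
            out_row.append(int(round(scaled * 255)))
        out.append(out_row)
    return out


# Tiny synthetic slice: air, window floor, mid-window, window ceiling, bone.
example = [[-1000.0, -150.0], [50.0, 250.0], [3000.0, 0.0]]
pixels = window_to_uint8(example)
```

Values below the window collapse to 0 and values above it to 255, so the 8-bit range is spent on the tissue contrast of interest.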

Image segmentation of abdominal organs

To rapidly segment the liver, spleen, kidney, and intestine in CT images, we applied a 2D semantic segmentation model, U-Net. With this model we delineated and extracted the organs from each CT image of every patient. Based on these results, a dedicated semantic segmentation database for CT images was created, in which the images were annotated pixel by pixel. A U-Net 2D semantic segmentation model built on the EfficientNetB0 architecture was then trained on this data set together with relevant clinical knowledge, and five-fold cross-validation was used to evaluate the model’s performance.
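As a rough illustration of five-fold cross-validation, the sketch below splits patient identifiers into five folds so that each patient appears in exactly one validation fold. Splitting at the patient level, the shuffling seed, and the helper name `patient_kfold` are assumptions; the source does not describe how the folds were built.

```python
import random


def patient_kfold(patient_ids, k=5, seed=0):
    """Yield (train_ids, val_ids) pairs for k-fold cross-validation.

    Folds are formed over unique patient IDs (an assumption) so that all
    images from one patient stay on the same side of the split.
    """
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)
    folds = [ids[i::k] for i in range(k)]  # k nearly equal folds
    for i in range(k):
        val = folds[i]
        train = [p for j, fold in enumerate(folds) if j != i for p in fold]
        yield train, val


# 3,147 is the cohort size reported in the text; the IDs are placeholders.
splits = list(patient_kfold(range(3147), k=5))
```

Each model is trained on four folds and validated on the fifth, and the five validation results are then pooled or averaged.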

Rapid diagnosis and screening of abdominal organ trauma

We constructed a 2.5D classification model to enhance the analysis of spatial relationships among abdominal organs. The first step was to identify the start and end points within each image series where these organs were visible. We selected 32 images from every series, ensuring each organ was represented in at least four images. Each image had 512 × 512 pixels in the RGB color model. These images were then processed through the EfficientNetB1 model, which converted them into 1280-dimensional vectors. These vectors served as inputs to a bidirectional Long Short-Term Memory (LSTM) network, which extracted features along the spatial dimension. Through the LSTM network, the EfficientNetB1 feature vector was reduced from 1280 to 512 dimensions. Subsequently, we employed a Neck structure before passing the data to multi-label classification to compute the average injury probability for each organ, as shown in Fig. 1.
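The slice-selection step can be sketched as evenly spaced sampling between the first and last slice in which the organs are visible. Even spacing and the name `sample_slice_indices` are assumptions; the source only states that 32 images were selected per series, and the rule that each organ appears in at least four images is not enforced here.

```python
def sample_slice_indices(start, end, n=32):
    """Pick n evenly spaced slice indices in the inclusive range [start, end].

    If the range holds fewer than n slices, some indices repeat.
    """
    if end < start:
        raise ValueError("end must be >= start")
    if n == 1:
        return [start]
    step = (end - start) / (n - 1)
    return [start + int(round(i * step)) for i in range(n)]


# Hypothetical series in which the organs span slices 40 to 350.
indices = sample_slice_indices(40, 350, n=32)
```

Under the dimensions given in the text, the 32 selected slices would yield a 32 × 1280 feature sequence from the image encoder, which the recurrent layer then maps to 512 features per slice.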

To evaluate how well the model fit the dataset, we introduced a loss function based on weighted cross-entropy, calculated for each individual organ.
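A minimal sketch of a weighted binary cross-entropy for a single organ is given below. The weight values and the normalization by the sum of weights are assumptions, since the source does not report the weights or the exact form of the loss.

```python
import math


def weighted_bce(y_true, p_pred, pos_weight=1.0, neg_weight=1.0, eps=1e-7):
    """Weighted binary cross-entropy for one organ.

    y_true holds 0/1 labels and p_pred holds predicted injury probabilities.
    pos_weight and neg_weight are assumed, illustrative class weights.
    """
    total = 0.0
    weight_sum = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        w = pos_weight if y == 1 else neg_weight
        total += -w * (y * math.log(p) + (1 - y) * math.log(1.0 - p))
        weight_sum += w
    return total / weight_sum


# A missed injury costs more when positives are up-weighted.
plain = weighted_bce([1, 0], [0.1, 0.1])
weighted = weighted_bce([1, 0], [0.1, 0.1], pos_weight=4.0)
```

Up-weighting the injured class is one way to keep a rare positive label from being dominated by the many healthy cases during training.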

Statistical analysis

We performed statistical analysis with the Python libraries NumPy, Pandas, and TensorFlow. We presented the model classification results in terms of accuracy, sensitivity, and specificity using a confusion matrix. We also assessed the model performance using the receiver operating characteristic (ROC) curve and the area under the ROC curve (AUROC). We calculated the confidence intervals of these metrics using the bootstrapping method. In addition, we used the Grad-CAM visualization algorithm to evaluate the model’s capabilities.
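The bootstrapping step can be illustrated with a percentile bootstrap over cases, shown here for accuracy. The percentile method, the number of resamples, and the toy labels are assumptions; the source says only that bootstrapping was used.

```python
import random


def accuracy(y_true, y_pred):
    """Fraction of cases where the predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true, y_pred, metric, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for a metric.

    Cases are resampled with replacement n_boot times and the metric is
    recomputed on each resample.
    """
    rng = random.Random(seed)
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(metric([y_true[i] for i in idx],
                            [y_pred[i] for i in idx]))
    stats.sort()
    lower = stats[int((alpha / 2) * n_boot)]
    upper = stats[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Synthetic labels for illustration only: 20 injured and 80 healthy cases.
y_true = [1] * 20 + [0] * 80
y_pred = [1] * 15 + [0] * 5 + [0] * 75 + [1] * 5
point = accuracy(y_true, y_pred)
low, high = bootstrap_ci(y_true, y_pred, accuracy)
```

The same helper accepts any metric function with the signature `metric(y_true, y_pred)`, so sensitivity or specificity can be bootstrapped the same way.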

Hardware and software

We developed and tested the model on a workstation running Ubuntu 18.04 with two Intel® Xeon® Gold 6258R CPUs operating at 2.70 GHz, 768 GB of RAM, and eight NVIDIA Tesla V100 (16 GB) GPUs. The full pipeline was implemented with TensorFlow v2.14.0 and Python v3.9.18. For image preprocessing, we used Python packages such as Pydicom and OpenCV.

Results

Patients

Images from 3,147 patients were collected in the database, of whom 855 (27.16%) had abdominal trauma. The patients with abdominal trauma were divided into the following categories: 321 patients (37.54%) had liver damage, 354 patients (41.40%) had spleen damage, 182 patients (21.29%) had kidney damage, 64 patients (7.49%) had intestinal damage, and 200 patients (23.39%) had abdominal extravasation.

The 2D semantic segmentation model performed well in image segmentation

The model performed well in identifying the location and category of each organ: the location accuracy was about 85% and the category accuracy was higher than 90%, suggesting that the model can effectively accomplish organ localization and categorization. Organ localization using the 2D semantic segmentation model is illustrated in Fig. 2, where the left image is the original CT scan and the right image is the segmented image. The validation results of the 2D semantic segmentation model across the five cross-validation folds are shown in Table 2 and Fig. 2.

The 2.5D classification approach performed well in diagnosing abdominal organ injuries

The 2.5D classification model assessed the injury probability for five targets: liver, spleen, kidney, intestine, and extravasation. Additionally, an “any injury” category was included, representing the maximum probability across the five targets. Six indexes were employed to evaluate model classification: area under the curve (AUC), accuracy, PPV, NPV, sensitivity, and specificity. Notably, specificity indicates the accuracy of identifying healthy organs when the sensitivity for detecting diseased organs is set at 90% in the 2.5D model. To evaluate the model’s capabilities, we applied the Grad-CAM visualization algorithm to the test set. The resulting visual heat maps demonstrated the model’s ability to detect organ damage. Figure 3 illustrates examples of the visualized results focusing on the liver, spleen, and kidney.
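For reference, the threshold-based indexes above follow directly from the confusion matrix, and the “any injury” score is the maximum of the per-target probabilities. The sketch below uses made-up labels and probabilities for illustration; the function names are hypothetical and AUC is left out.

```python
def binary_metrics(y_true, y_pred):
    """Compute confusion-matrix metrics for binary labels (1 = injured)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num, den):
        # Return NaN instead of raising when a denominator is zero.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


def any_injury_probability(target_probs):
    """Any-injury score: the maximum probability across all targets."""
    return max(target_probs.values())


# Illustrative values only, not results from the study.
m = binary_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0])
any_p = any_injury_probability(
    {"liver": 0.12, "spleen": 0.71, "kidney": 0.05,
     "intestine": 0.02, "extravasation": 0.30}
)
```

In this toy case there are 2 true positives, 1 false negative, 1 false positive, and 4 true negatives, so sensitivity and PPV are both 2/3 while specificity and NPV are both 0.8.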

Liver diagnosis

The 2.5D classification model achieved high accuracy and sensitivity in diagnosing liver injury on CT scans. The specific results are shown in Table 3; Fig. 4A. The AUC and ACC were 0.817 (0.763–0.868) and 0.873 (0.848–0.898), respectively. The PPV and NPV were 0.789 (0.77–0.809) and 0.895 (0.885–0.904), respectively. The sensitivity and specificity were 0.789 (0.77–0.809) and 0.895 (0.885–0.904), respectively. These results show that the model can effectively detect liver injury and facilitate clinical decision-making.

Spleen diagnosis

The 2.5D classification model showed moderate accuracy and sensitivity in detecting spleen injury on CT scans. The specific results are shown in Table 3; Fig. 4B. The AUC and ACC were 0.848 (0.795–0.895) and 0.771 (0.74–0.803), respectively. The PPV and NPV were 0.63 (0.61–0.65) and 0.814 (0.804–0.823), respectively. The sensitivity and specificity were 0.626 (0.606–0.645) and 0.816 (0.807–0.826), respectively. These results indicate that the model can reliably exclude spleen injury and save medical resources. However, the model has low PPV and sensitivity, and its performance in identifying positive cases can be improved.

Renal diagnosis

The 2.5D classification model achieved high performance in detecting kidney injury on CT scans. The specific results are shown in Table 3; Fig. 4C. The AUC and ACC were 0.882 (0.823–0.929) and 0.932 (0.911–0.951), respectively. The PPV and NPV were 0.888 (0.87–0.904) and 0.943 (0.935–0.952), respectively. The sensitivity and specificity were 0.887 (0.87–0.904) and 0.944 (0.935–0.952), respectively. These results indicate that the model can effectively diagnose kidney injury and support clinical decision-making.

Intestinal diagnosis

The 2.5D classification model showed high NPV and specificity in excluding intestinal injury on CT scans. The specific results are shown in Table 3; Fig. 4D. The AUC and ACC were 0.83 (0.699–0.942) and 0.978 (0.965–0.989), respectively. The PPV and NPV were 0.056 (0.027–0.091) and 0.98 (0.969–0.99), respectively. The sensitivity and specificity were 0.149 (0.104–0.203) and 0.943 (0.939–0.947), respectively. These results suggest that the model can reliably rule out intestinal injury, but has low PPV and sensitivity in detecting positive cases.

Extravasation diagnosis

The 2.5D classification model showed high NPV and specificity in excluding extravasation on CT scans. The specific results are shown in Table 3; Fig. 4E. The AUC and ACC were 0.757 (0.67–0.833) and 0.935 (0.916–0.952), respectively. The PPV and NPV were 0.114 (0.082–0.149) and 0.941 (0.925–0.958), respectively. The sensitivity and specificity were 0.247 (0.209–0.29) and 0.863 (0.855–0.87), respectively. These results suggest that the model can reliably rule out extravasation, but has low PPV and sensitivity in detecting positive cases. The low AUC and high ACC indicate that the extravasation data set was imbalanced, with a large proportion of negative cases, leading the model to misclassify some positive cases as negative.
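The effect of class imbalance on accuracy can be shown with simple arithmetic on the cohort counts reported in the Patients section (3,147 patients, 200 with extravasation). The sketch computes the accuracy of a hypothetical baseline that labels every case as negative; it is an illustration, not part of the authors' evaluation, which was carried out under cross-validation.

```python
def all_negative_baseline(n_total, n_positive):
    """Accuracy and sensitivity of a classifier that always predicts 'healthy'."""
    n_negative = n_total - n_positive
    accuracy = n_negative / n_total  # every negative is counted as correct
    sensitivity = 0.0                # no positive case is ever flagged
    return accuracy, sensitivity


# Counts taken from the text: 200 extravasation cases among 3,147 patients.
extrav_acc, extrav_sens = all_negative_baseline(3147, 200)
```

Because roughly 94% of patients have no extravasation, a trivial all-negative rule already reaches an accuracy close to the reported ACC while detecting nothing, which is why sensitivity, PPV, and AUC are more informative for rare findings.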

Any injury diagnosis

The 2.5D classification model showed high NPV and specificity in excluding any injury on CT scans. The specific results are shown in Table 3; Fig. 4F. The AUC and ACC were 0.843 (0.803–0.88) and 0.795 (0.762–0.827), respectively. The PPV and NPV were 0.438 (0.387–0.488) and 0.852 (0.825–0.878), respectively. The sensitivity and specificity were 0.653 (0.611–0.696) and 0.705 (0.685–0.724), respectively. These results suggest that the model can reliably rule out any injury, but has low PPV and sensitivity in detecting positive cases. We hypothesized that the low PPV and sensitivity were related to the low performance of the model in diagnosing intestinal and extravasation injuries.

Discussion

In this study, we developed an algorithm that can detect five types of abdominal injury: liver, spleen, kidney, and intestinal injury, and extravasation. Our results demonstrate that the algorithm can accurately diagnose parenchymal organ injuries. The algorithm localizes the abdominal organs and then detects the injuries in each organ simultaneously, which can assist clinicians in efficient screening and triage, facilitate the treatment of trauma patients, and avoid wasting medical resources.

Furthermore, the algorithm performed best in identifying kidney injury on abdominal CT scans, with an ACC of 0.932 (PPV: 0.888; NPV: 0.943; Sensitivity: 0.887; Specificity: 0.944). It also showed good performance in diagnosing liver and spleen injuries, with an ACC of 0.873 (PPV: 0.789; NPV: 0.895; Sensitivity: 0.789; Specificity: 0.895) and 0.771 (PPV: 0.63; NPV: 0.814; Sensitivity: 0.626; Specificity: 0.816), respectively.

In clinical practice, radiologists’ diagnostic focus and efficiency can be affected by various factors, including fatigue and time pressure. Studies have shown that the error rates of radiologists in abdominal CT diagnosis fluctuate throughout the day and week. Specifically, during the workweek, error rates are highest later in the morning and at the end of the workday, with Mondays showing higher rates than other days [17]. Additionally, there are notable variations in expertise and experience among radiologists of different ages and qualifications. Studies have found that less experienced radiologists may have error rates as high as 32% in diagnosing abdominal solid organ CT images under busy conditions [18,19,20]. In contrast, diagnostic models based on deep learning are stable and fast, are unaffected by subjective or objective factors, and can operate continuously, 24 hours a day. During our study, we uploaded the CT images of 3,147 patients for model learning, which took about 5 hours until diagnosis was complete. For an individual patient, the analysis takes seconds to minutes, depending on the characteristics and quality of each CT image.

Previous studies have applied deep learning algorithms to diagnose specific abdominal injuries, such as kidney segmentation [21], splenic laceration [22], liver laceration [23, 24], and abdominal hemorrhage [25]. However, none of these studies have attempted to detect multiple organ injuries in trauma patients. Therefore, we developed a deep learning algorithm that can detect injuries in five different abdominal organs at once. We used a 2D semantic segmentation model to extract the organs from the CT images, and then a 2.5D classification model to predict the injury probability of each organ. This approach improved the speed and accuracy of the algorithm.

Our algorithm requires large amounts of accurately labeled data to achieve high performance, and its design aims to facilitate the labeling process for clinicians and to enhance the results of automatic detection. The CT examinations in this study included both conventional and contrast-enhanced scans. Before the data were fed to the model, we normalized them to reduce the effect of contrast agent use. We used a 2.5D classification model instead of a 3D model because it is better suited to small data sets: it has fewer parameters and is less prone to overfitting while preserving model performance. An LSTM was also included so that the model could analyze the input series of CT images and capture the spatial relationships among them.

To improve the diversity and quality of the data set and address the data imbalance issue, we applied various data augmentation techniques that mimic scenarios affecting CT image quality and help the model cope with real-world variation. These included basic geometric transformations, such as horizontal and vertical flipping, which help the model recognize the same anatomical structures in different orientations caused by scanning angles.

Geometric transformations, blurring, and random Gaussian noise were applied for data augmentation. These techniques enrich the training data set, reduce overfitting, and enable the model to generalize to new images instead of being limited to the images it has already seen.
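Two of the augmentations mentioned above, flipping and additive Gaussian noise, can be sketched on a small grayscale image stored as nested lists. The flip probability, the noise standard deviation, and the function names are assumptions, and blurring is omitted for brevity.

```python
import random


def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]


def add_gaussian_noise(img, sigma, rng):
    """Add zero-mean Gaussian noise and clamp pixel values to [0, 255]."""
    return [[min(255.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in img]


def augment(img, rng, p_flip=0.5, sigma=5.0):
    """Randomly flip the image, then add noise (assumed parameters)."""
    if rng.random() < p_flip:
        img = hflip(img)
    if rng.random() < p_flip:
        img = vflip(img)
    return add_gaussian_noise(img, sigma, rng)


rng = random.Random(0)
image = [[0.0, 64.0, 128.0], [192.0, 255.0, 32.0]]
augmented = augment(image, rng)
```

Each training epoch can draw a fresh random variant of every image, so the model rarely sees exactly the same input twice.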

Overall, deep learning has been widely applied to clinical data analysis, especially in image processing [26]. It has advanced the field of medical imaging by enabling the identification, classification, and quantification of patterns in various modalities [27], including the quantitative assessment of blunt liver trauma in children [28], and can provide clinicians with accurate and fast diagnostic assistance [29]. Our deep learning model provides high-quality image analysis results that help clinicians perform quick screening and triage and identify patients with abdominal trauma, improving medical efficiency and saving medical resources during natural disasters or mass accidents. Moreover, the model has the potential to be applied to the CT diagnosis of other diseases.

Conclusions

This deep learning model can be used to identify multiple organ injuries simultaneously on CT, and may be further applied to the preliminary screening and auxiliary diagnosis of other trauma scenarios. While our model has shown promising results, it still has limitations. One of the main areas for improvement is the need for a larger dataset of CT images for more robust algorithm training. Additionally, we aim to explore methods for optimizing the algorithm to enhance the predictive ability of the model. Moving forward, our focus will be on continually expanding the dataset with more CT images to further enhance the accuracy and speed of the model.

Data availability

No datasets were generated or analysed during the current study.

Abbreviations

- CT: Computed tomography
- PPV: Positive predictive value
- NPV: Negative predictive value
- AAST-OIS: American Association for Surgery of Trauma-organ injury scale
- AI: Artificial intelligence
- ML: Machine learning
- RSNA: Radiological Society of North America
- DICOM: Digital Imaging and Communications in Medicine
- PNG: Portable Network Graphics
- LSTM: Long short-term memory
- ROC: Receiver operating characteristic
- AUROC: Area under the ROC curve

References

Arenaza Choperena G, Cuetos Fernández J, Gómez Usabiaga V, Ugarte Nuño A, Rodriguez Calvete P, Collado Jiménez J. Abdominal trauma. Radiologia (Engl Ed). 2023;65(Suppl 1):S32–41.

El-Menyar A, Abdelrahman H, Al-Hassani A, Peralta R, AbdelAziz H, Latifi R, et al. Single versus multiple solid organ injuries following blunt abdominal trauma. World J Surg. 2017;41(11):2689–96.

Ball SK, Croley GG. Blunt abdominal trauma. A review of 637 patients. J Miss State Med Assoc. 1996;37(2):465–8.

Raza M, Abbas Y, Devi V, Prasad KV, Rizk KN, Nair PP. Non operative management of abdominal trauma - a 10 years review. World J Emerg Surg WJES. 2013;8:14.

Pekkari P, Bylund PO, Lindgren H, Oman M. Abdominal injuries in a low trauma volume hospital–a descriptive study from northern Sweden. Scand J Trauma Resusc Emerg Med. 2014;22:48.

Gönültaş F, Kutlutürk K, Gok AFK, Barut B, Sahin TT, Yilmaz S. Analysis of risk factors of mortality in abdominal trauma. Ulus Travma Acil Cerrahi Derg. 2020;26(1):43–9.

Chen X, Wang J, Zhao J, Qin X, Liu Y, Zhang Y, et al. Surgery[M]. Beijing: People’s Medical Publishing House; 2018.

Gad MA, Saber A, Farrag S, Shams ME, Ellabban GM. Incidence, patterns, and factors predicting mortality of abdominal injuries in trauma patients. N Am J Med Sci. 2012;4(3):129–34.

Soto JA, Anderson SW. Multidetector CT of blunt abdominal trauma. Radiology. 2012;265(3):678–93.

Dixe de Oliveira Santo I, Sailer A, Solomon N, Borse R, Cavallo J, Teitelbaum J, et al. Grading abdominal trauma: changes in and implications of the revised 2018 AAST-OIS for the spleen, liver, and Kidney. Radiographics. 2023;43(9):e230040.

Chien LC, Vakil M, Nguyen J, Chahine A, Archer-Arroyo K, Hanna TN, et al. The American Association for the surgery of Trauma Organ Injury Scale 2018 update for computed tomography-based grading of renal trauma: a primer for the emergency radiologist. Emerg Radiol. 2020;27(1):63–73.

Degnan AJ, Ghobadi EH, Hardy P, Krupinski E, Scali EP, Stratchko L, Ulano A, Walker E, Wasnik AP, Auffermann WF. Perceptual and interpretive error in diagnostic radiology-causes and potential solutions. Acad Radiol. 2019;26(6):833–45.

Renfrew DL, Franken EA Jr, Berbaum KS, Weigelt FH, Abu-Yousef MM. Error in radiology: classification and lessons in 182 cases presented at a problem case conference. Radiology. 1992;183(1):145–50.

Pinto A, Brunese L. Spectrum of diagnostic errors in radiology. World J Radiol. 2010;2(10):377–83.

Fazal MI, Patel ME, Tye J, Gupta Y. The past, present and future role of artificial intelligence in imaging. Eur J Radiol. 2018;105:246–50.

Colak E, Lin H-M, Ball R, Davis M, Flanders A, Jalal S, et al. RSNA 2023 Abdominal Trauma Detection. Kaggle; 2023. https://kaggle.com/competitions/rsna-2023-abdominal-trauma-detection.

Kliewer MA, Mao L, Brinkman MR, Bruce RJ, Hinshaw JL. Diurnal variation of major error rates in the interpretation of abdominal/pelvic CT studies. Abdom Radiol (NY). 2021;46(4):1746–51.

Wildman-Tobriner B, Allen BC, Maxfield CM. Common resident errors when interpreting computed tomography of the Abdomen and Pelvis: a review of types, pitfalls, and strategies for improvement. Curr Probl Diagn Radiol. 2019;48(1):4–9.

Brady AP. Error and discrepancy in radiology: inevitable or avoidable? Insights Imaging. 2017;8(1):171–82.

Abujudeh HH, Boland GW, Kaewlai R, Rabiner P, Halpern EF, Gazelle GS, Thrall JH. Abdominal and pelvic computed tomography (CT) interpretation: discrepancy rates among experienced radiologists. Eur Radiol. 2010;20(8):1952–7.

Farzaneh N, Reza Soroushmehr SM, Patel H, Wood A, Gryak J, Fessell D, et al. Automated kidney segmentation for traumatic injured patients through ensemble learning and active contour modeling. Annu Int Conf IEEE Eng Med Biol Soc. 2018;2018:3418–21.

Cheng CT, Lin HS, Hsu CP, Chen HW, Huang JF, Fu CY, et al. The three-dimensional weakly supervised deep learning algorithm for traumatic splenic injury detection and sequential localization: an experimental study. Int J Surg. 2023;109(5):1115–24.

Dreizin D, Chen T, Liang Y, Zhou Y, Paes F, Wang Y, et al. Added value of deep learning-based liver parenchymal CT volumetry for predicting major arterial injury after blunt hepatic trauma: a decision tree analysis. Abdom Radiol (NY). 2021;46(6):2556–66.

Farzaneh N, Stein EB, Soroushmehr R, Gryak J, Najarian K. A deep learning framework for automated detection and quantitative assessment of liver trauma. BMC Med Imaging. 2022;22(1):39.

Dreizin D, Zhou Y, Fu S, et al. A Multiscale Deep Learning Method for Quantitative Visualization of Traumatic Hemoperitoneum at CT: Assessment of Feasibility and comparison with subjective categorical estimation. Radiol Artif Intell. 2020;2(6):e190220.

Miotto R, Wang F, Wang S, Jiang X, Dudley JT. Deep learning for healthcare: review, opportunities and challenges. Brief Bioinform. 2018;19(6):1236–46.

Shen D, Wu G, Suk HI. Deep learning in Medical Image Analysis. Annu Rev Biomed Eng. 2017;19:221–48.

Huang S, Zhou Z, Qian X, Li D, Guo W, Dai Y. Automated quantitative assessment of pediatric blunt hepatic trauma by deep learning-based CT volumetry. Eur J Med Res. 2022;27(1):305.

Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25(1):44–56.

Acknowledgements

We appreciate the great help from the Medical Research Center, Academy of Chinese Medical Sciences, Zhejiang Chinese Medical University.

Funding

This work was supported by grants from the Natural Science Foundation of China 82374102.

Author information

Contributions

XR SHEN, XB DOU, L CHEN, YX ZHOU and XY SHI contributed to the study’s conception. XR SHEN, L CHEN, XB DOU, YX ZHOU, XY SHI, SY ZHANG, SW DING and LL NI contributed to the acquisition and coding of the data. XR SHEN, XB DOU, L CHEN, YX ZHOU, SW DING and XY SHI designed the study and analyzed the data. XR SHEN and L CHEN drafted the manuscript. XR SHEN, L CHEN and XB DOU critically read the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable for a systematic review.

Consent for publication

Not applicable for a systematic review.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Shen, X., Zhou, Y., Shi, X. et al. The application of deep learning in abdominal trauma diagnosis by CT imaging. World J Emerg Surg 19, 17 (2024). https://doi.org/10.1186/s13017-024-00546-7

DOI: https://doi.org/10.1186/s13017-024-00546-7