Abstract

Background

The implementation of clinical practice guidelines (CPGs) is a cyclical process in which the evaluation stage can facilitate continuous improvement. Implementation science has utilized theoretical approaches, such as models and frameworks, to understand and address this process. This article aims to provide a comprehensive overview of the models and frameworks used to assess the implementation of CPGs.

Methods

A systematic review was conducted following the Cochrane methodology, with adaptations to the "selection process" due to the unique nature of this review. The findings were reported following PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) reporting guidelines. Electronic databases were searched from their inception until May 15, 2023. A predetermined strategy and manual searches were conducted to identify relevant documents from health institutions worldwide. Eligible studies presented models and frameworks for assessing the implementation of CPGs. Information on the characteristics of the documents, the context in which the models were used (specific objectives, level of use, type of health service, target group), and the characteristics of each model or framework (name, domain evaluated, and model limitations) were extracted. The domains of the models were analyzed according to the key constructs: strategies, context, outcomes, fidelity, adaptation, sustainability, process, and intervention. A subgroup analysis was performed grouping models and frameworks according to their levels of use (clinical, organizational, and policy) and type of health service (community, ambulatorial, hospital, institutional). The JBI’s critical appraisal tools were utilized by two independent researchers to assess the trustworthiness, relevance, and results of the included studies.

Results

Database searches yielded 14,395 studies, of which 80 full texts were reviewed. Eight studies were included in the data analysis and four methodological guidelines were additionally included from the manual search. The risk of bias in the studies was considered non-critical for the results of this systematic review. A total of ten models/frameworks for assessing the implementation of CPGs were found. The level of use was mainly policy, the most common type of health service was institutional, and the major target group was professionals directly involved in clinical practice. The evaluated domains differed between the models and there were also differences in their conceptualization. All the models addressed the domain "Context", especially at the micro level (8/12), followed by the multilevel (7/12). The domains "Outcome" (9/12), "Intervention" (8/12), "Strategies" (7/12), and "Process" (5/12) were frequently addressed, while "Sustainability" was found only in one study, and "Fidelity/Adaptation" was not observed.

Conclusions

The use of models and frameworks for assessing the implementation of CPGs is still incipient. This systematic review may help stakeholders choose or adapt the most appropriate model or framework to assess CPGs implementation based on their specific health context.

Trial registration

PROSPERO (International Prospective Register of Systematic Reviews) registration number: CRD42022335884. Registered on June 7, 2022.

Background

Substantial investments have been made in clinical research and development in recent decades, increasing the medical knowledge base and the availability of health technologies [1]. The use of clinical practice guidelines (CPGs) has increased worldwide to guide best health practices and to maximize healthcare investments. A CPG can be defined as "any formal statements systematically developed to assist practitioner and patient decisions about appropriate health care for specific clinical circumstances" [2] and has the potential to improve patient care by promoting interventions of proven benefit and discouraging ineffective interventions. Furthermore, CPGs can promote efficiency in resource allocation and provide support for managers and health professionals in decision-making [3, 4].

However, having a quality CPG does not guarantee that the expected health benefits will be obtained. In fact, putting these instruments to use remains a challenge for most health services across distinct levels of government. In addition to being developed with high methodological rigor, recommendations need to be made available to their users (the diffusion and dissemination stages) and then used in clinical practice (implemented), which usually requires behavioral changes and appropriate resources and infrastructure. All these stages involve an iterative and complex process called implementation, which is defined as the process of putting new practices within a setting into use [5, 6].

Implementation is a cyclical process, and evaluation is one of its key stages, allowing continuous improvement of CPG development and implementation strategies. It consists of verifying whether clinical practice is being performed as recommended (process evaluation or formative evaluation) and whether the expected results and impact are being reached (summative evaluation) [7,8,9]. Although the importance of the implementation evaluation stage has been recognized, research on how these guidelines are implemented is scarce [10]. This paper focuses on the process of assessing CPG implementation.

To understand and improve this complex process, implementation science provides a systematic set of principles and methods to integrate research findings and other evidence-based practices into routine practice and improve the quality and effectiveness of health services and care [11]. The field of implementation science uses theoretical approaches that have varying degrees of specificity based on the current state of knowledge and are structured based on theories, models, and frameworks [5, 12, 13]. A "model" is defined as "a simplified depiction of a more complex world with relatively precise assumptions about cause and effect", and a "framework" is defined as "a broad set of constructs that organize concepts and data descriptively without specifying causal relationships" [9]. Although these concepts are distinct, the two terms are used interchangeably in this paper, as both typically function as checklists of factors relevant to various aspects of implementation.

There are a variety of theoretical approaches available in implementation science [5, 14], which can make choosing the most appropriate one challenging [5]. Some models and frameworks have been categorized as "evaluation models" because they provide a structure for evaluating implementation endeavors [15], even though theoretical approaches from other categories can also be applied for evaluation purposes because they specify concepts and constructs that may be operationalized and measured [13]. Two frameworks that can specify implementation aspects that should be evaluated as part of intervention studies are RE-AIM (Reach, Effectiveness, Adoption, Implementation, Maintenance) [16] and PRECEDE-PROCEED (Predisposing, Reinforcing and Enabling Constructs in Educational Diagnosis and Evaluation-Policy, Regulatory, and Organizational Constructs in Educational and Environmental Development) [17]. Although the number of theoretical approaches has grown in recent years, the use of models and frameworks to evaluate the implementation of guidelines still seems to be a challenge.

This article aims to provide a comprehensive map of the models and frameworks applied to assess the implementation of CPGs. It also aims to inform debate and choices on models and frameworks for the research and evaluation of CPG implementation processes, and thus to facilitate the continued development of the field of implementation and contribute to healthcare policy and practice.

Methods

A systematic review was conducted following the Cochrane methodology [18], with adaptations to the "selection process" due to the unique nature of this review (details can be found in the respective section). The review protocol was registered in PROSPERO (registration number: CRD42022335884) on June 7, 2022. This report adhered to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [19] and a completed checklist is provided in Additional File 1.

Eligibility criteria

The SDMO approach (Types of Studies, Types of Data, Types of Methods, Outcomes) [20] was utilized in this systematic review, outlined as follows:

Types of studies

- All types of studies were considered for inclusion, as the assessment of CPG implementation can benefit from a diverse range of study designs, including randomized clinical trials/experimental studies, scale/tool development, systematic reviews, opinion pieces, qualitative studies, peer-reviewed articles, books, reports, and unpublished theses.

- Studies were categorized based on their methodological designs, which guided the synthesis, risk of bias assessment, and presentation of results.

- Study protocols and conference abstracts were excluded due to insufficient information for this review.

Types of data

- Studies that evaluated the implementation of CPGs either independently or as part of a multifaceted intervention.

- Guidelines for evaluating CPG implementation.

- Inclusion of CPGs related to any context, clinical area, intervention, and patient characteristics.

- No restrictions were placed on publication date or language.

Exclusion criteria

- General guidelines were excluded, as this review focused on 'models for evaluating clinical practice guidelines implementation' rather than the guidelines themselves.

- Studies that focused solely on implementation determinants as barriers and enablers were excluded, as this review aimed to explore comprehensive models/frameworks.

- Studies evaluating programs and policies were excluded.

- Studies that only assessed implementation strategies (isolated actions) rather than the implementation process itself were excluded.

- Studies that focused solely on the impact or results of implementation (summative evaluation) were excluded.

Types of methods

Not applicable.

Outcomes

- All potential models or frameworks for assessing the implementation of CPG (evaluation models/frameworks), as well as their characteristics: name; specific objectives; levels of use (clinical, organizational, and policy); health system (public, private, or both); type of health service (community, ambulatorial, hospital, institutional, homecare); domains or outcomes evaluated; type of recommendation evaluated; context; limitations of the model.

- Model was defined as "a deliberated simplification of a phenomenon on a specific aspect" [21].

- Framework was defined as "structure, overview outline, system, or plan consisting of various descriptive categories" [21].

Exclusion criteria

- Models or frameworks used solely for the CPG development, dissemination, or implementation phase.

- Models/frameworks used solely for assessment processes other than implementation, such as for the development or dissemination phase.

Data sources and literature search

The systematic search was conducted on July 31, 2022 (and updated on May 15, 2023) in the following electronic databases: MEDLINE/PubMed, Centre for Reviews and Dissemination (CRD), the Cochrane Library, Cumulative Index to Nursing and Allied Health Literature (CINAHL), EMBASE, Epistemonikos, Global Health, Health Systems Evidence, PDQ-Evidence, PsycINFO, Rx for Change (Canadian Agency for Drugs and Technologies in Health, CADTH), Scopus, Web of Science and Virtual Health Library (VHL). The Google Scholar database was used for the manual selection of studies (first 10 pages).

Additionally, hand searches were performed on the lists of references included in the systematic reviews and citations of the included studies, as well as on the websites of institutions working on CPGs development and implementation: Guidelines International Networks (GIN), National Institute for Health and Care Excellence (NICE; United Kingdom), World Health Organization (WHO), Centers for Disease Control and Prevention (CDC; USA), Institute of Medicine (IOM; USA), Australian Department of Health and Aged Care (ADH), Healthcare Improvement Scotland (SIGN), National Health and Medical Research Council (NHMRC; Australia), Queensland Health, The Joanna Briggs Institute (JBI), Ministry of Health and Social Policy of Spain, Ministry of Health of Brazil and Capes Theses and Dissertations Catalog.

The search strategy combined terms related to "clinical practice guidelines" (practice guidelines, practice guidelines as topic, clinical protocols), "implementation", "assessment" (assessment, evaluation), and "models, framework". The free term "monitoring" was not used because it was regularly related to clinical monitoring and not to implementation monitoring. The search strategies adapted for the electronic databases are presented in an additional file (see Additional file 2).
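As an illustration of how the concept blocks described above could be combined, the following minimal sketch joins the synonyms within each block with OR and joins the blocks with AND. The term lists are taken from the paragraph above; the function name `build_query`, the splitting of "models, framework" into two terms, and the generic quoting syntax are assumptions made for illustration. The database-specific strategies actually used are those in Additional file 2.

```python
# Hypothetical sketch: combine concept blocks into one Boolean search string.
# Terms come from the text above; the syntax is generic, not database-specific.

CONCEPT_BLOCKS = {
    "clinical practice guidelines": [
        "practice guidelines",
        "practice guidelines as topic",
        "clinical protocols",
    ],
    "implementation": ["implementation"],
    "assessment": ["assessment", "evaluation"],
    # Assumption: the "models, framework" block is treated as two synonyms.
    "models, framework": ["models", "framework"],
}


def build_query(blocks):
    """OR the synonyms inside each block, then AND the blocks together."""
    groups = []
    for terms in blocks.values():
        quoted = [f'"{term}"' for term in terms]
        groups.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(groups)


query = build_query(CONCEPT_BLOCKS)
print(query)
```

The free term "monitoring" is deliberately absent from the blocks, mirroring the decision reported above.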

Study selection process

The results of the literature search from scientific databases, excluding the CRD database, were imported into Mendeley Reference Management software to remove duplicates. They were then transferred to the Rayyan platform (https://rayyan.qcri.org) [22] for the screening process. Initially, studies related to the "assessment of implementation of the CPG" were selected. The titles were first screened independently by two pairs of reviewers (first selection: four reviewers, NM, JB, SS, and JG; update: a pair of reviewers, NM and DG). The title screening was broad, including all potentially relevant studies on CPG and the implementation process. Following that, the abstracts were independently screened by the same group of reviewers. The abstract screening was more focused, specifically selecting studies that addressed CPG and the evaluation of the implementation process. In the next step, full-text articles were reviewed independently by a pair of reviewers (NM, DG) to identify those that explicitly presented "models" or "frameworks" for assessing the implementation of the CPG. Disagreements regarding the eligibility of studies were resolved through discussion and consensus, and by a third reviewer (JB) when necessary. One reviewer (NM) conducted manual searches, and the inclusion of documents was discussed with the other reviewers.

Risk of bias assessment of studies

The selected studies were independently classified and evaluated according to their methodological designs by two investigators (NM and JG). This review employed JBI’s critical appraisal tools to assess the trustworthiness, relevance and results of the included studies [23] and these tools are presented in additional files (see Additional file 3 and Additional file 4). Disagreements were resolved by consensus or consultation with the other reviewers. Methodological guidelines and noncomparative and before–after studies were not evaluated because JBI does not have specific tools for assessing these types of documents. Although the studies were assessed for quality, they were not excluded on this basis.

Data extraction

The data were independently extracted by two reviewers (NM, DG) using a Microsoft Excel spreadsheet. Discrepancies were discussed and resolved by consensus. The following information was extracted:

- Document characteristics: author; year of publication; title; study design; instrument of evaluation; country; guideline context;

- Usage context of the models: specific objectives; level of use (clinical, organizational, and policy); type of health service (community, ambulatorial, hospital, institutional); target group (guideline developers, clinicians; health professionals; health-policy decision-makers; health-care organizations; service managers);

- Model and framework characteristics: name, domain evaluated, and model limitations.

The set of information to be extracted, shown in the systematic review protocol, was adjusted to improve the organization of the analysis.

The "level of use" refers to the scope of the model used. "Clinical" was considered when the evaluation focused on individual practices, "organizational" when practices were within a health service institution, and "policy" when the evaluation was more systemic and covered different health services or institutions.

The "type of health service" indicated the category of health service where the model/framework was used (or can be used) to assess the implementation of the CPG, related to the complexity of healthcare. "Community" is related to primary health care; "ambulatorial" is related to secondary health care; "hospital" is related to tertiary health care; and "institutional" represented models/frameworks not specific to a particular type of health service.

The "target group" included stakeholders related to the use of the model/framework for evaluating the implementation of the CPG, such as clinicians, health professionals, guideline developers, health policy-makers, health organizations, and service managers.

The category "health system" (public, private, or both) mentioned in the systematic review protocol was not found in the literature obtained and was removed as an extraction variable. Similarly, the variables "type of recommendation evaluated" and "context" were grouped because the same information was included in the "guideline context" section of the study.
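The extraction variables and category definitions above can be pictured as a simple record structure. The sketch below is only an illustration of that structure: the class and field names are hypothetical, the review itself used a Microsoft Excel spreadsheet, and the example record contains placeholder values rather than data from any included document.

```python
from dataclasses import dataclass, field
from enum import Enum


class LevelOfUse(Enum):
    CLINICAL = "clinical"              # evaluation focused on individual practices
    ORGANIZATIONAL = "organizational"  # practices within a health service institution
    POLICY = "policy"                  # systemic, across services or institutions


class HealthService(Enum):
    COMMUNITY = "community"          # primary health care
    AMBULATORIAL = "ambulatorial"    # secondary health care
    HOSPITAL = "hospital"            # tertiary health care
    INSTITUTIONAL = "institutional"  # not specific to one type of service


@dataclass
class ExtractionRecord:
    # Document characteristics
    author: str
    year: int
    title: str
    study_design: str
    instrument: str
    country: str
    guideline_context: str
    # Usage context of the model
    specific_objectives: str
    levels_of_use: list = field(default_factory=list)
    health_services: list = field(default_factory=list)
    target_groups: list = field(default_factory=list)
    # Model and framework characteristics
    model_name: str = ""
    domains_evaluated: list = field(default_factory=list)
    limitations: str = ""


# Placeholder record, not taken from any included document.
example = ExtractionRecord(
    author="(placeholder)",
    year=2000,
    title="(placeholder)",
    study_design="cross-sectional",
    instrument="survey/questionnaire",
    country="(placeholder)",
    guideline_context="general",
    specific_objectives="(placeholder)",
    levels_of_use=[LevelOfUse.POLICY],
    health_services=[HealthService.INSTITUTIONAL],
)
print(example.levels_of_use[0].value, example.health_services[0].value)
```

Levels of use and health services are kept as lists because a single document can be classified under more than one category.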

Some selected documents presented models or frameworks recognized by the scientific field, including some that were validated. However, some studies adapted the model to their own context. Therefore, the domain analysis covered all model or framework domains evaluated by (or suggested for evaluation by) the document analyzed.

Data analysis and synthesis

The results were tabulated using narrative synthesis with an aggregative approach, without meta-analysis, aiming to summarize the documents descriptively for the organization, description, interpretation and explanation of the study findings [24, 25].

The model/framework domains evaluated in each document were studied according to Nilsen et al.’s constructs: "strategies", "context", "outcomes", "fidelity", "adaptation" and "sustainability". For this study, "strategies" were described as structured and planned initiatives used to enhance the implementation of clinical practice [26].

The definition of "context" varies in the literature. Despite that, this review considered it as the set of circumstances or factors surrounding a particular implementation effort, such as organizational support, financial resources, social relations and support, leadership, and organizational culture [26, 27]. The domain "context" was subdivided according to the level of health care into "micro" (individual perspective), "meso" (organizational perspective), "macro" (systemic perspective), and "multiple" (when there is an issue involving more than one level of health care).

The "outcomes" domain was related to the results of the implementation process (unlike clinical outcomes) and was stratified according to the following constructs: acceptability, appropriateness, feasibility, adoption, cost, and penetration. All these concepts align with the definitions of Proctor et al. (2011), although we decided to separate "fidelity" and "sustainability" as independent domains similar to Nilsen [26, 28].

"Fidelity" and "adaptation" were considered the same domain, as they are complementary pieces of the same issue. In this study, implementation fidelity refers to how closely guidelines are followed as intended by their developers or designers. On the other hand, adaptation involves making changes to the content or delivery of a guideline to better fit the needs of a specific context. The "sustainability" domain was defined as evaluations about the continuation or permanence over time of the CPG implementation.

Additionally, the domain "process" was utilized to address issues related to the implementation process itself, rather than focusing solely on the outcomes of the implementation process, as done by Wang et al. [14]. Furthermore, the "intervention" domain was introduced to distinguish aspects related to the CPG characteristics that can impact its implementation, such as the complexity of the recommendation.

A subgroup analysis was performed with models and frameworks categorized based on their levels of use (clinical, organizational, and policy) and the type of health service (community, ambulatorial, hospital, institutional) associated with the CPG. The goal is to assist stakeholders (politicians, clinicians, researchers, or others) in selecting the most suitable model for evaluating CPG implementation based on their specific health context.

Results

Search results

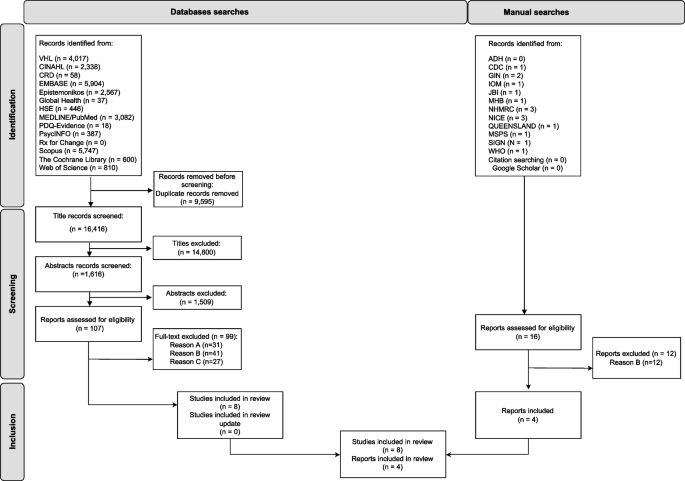

Database searches yielded 26,011 studies, of which 107 full texts were reviewed. During the full-text review, 99 articles were excluded: 41 studies did not mention a model or framework for assessing the implementation of the CPG, 31 studies evaluated only implementation strategies (isolated actions) rather than the implementation process itself, and 27 articles were not related to the implementation assessment. Therefore, eight studies were included in the data analysis. The updated search did not reveal additional relevant studies. The main reason for study exclusion was that they did not use models or frameworks to assess CPG implementation. Additionally, four methodological guidelines were included from the manual search (Fig. 1).
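The selection counts in the paragraph above can be reconciled with a short arithmetic check. This is a sketch using only the figures reported in that paragraph; the variable names are illustrative.

```python
# Counts reported in the paragraph above.
full_texts_reviewed = 107
excluded_by_reason = {
    "no model or framework mentioned": 41,
    "only implementation strategies evaluated": 31,
    "not related to implementation assessment": 27,
}
manual_search_guidelines = 4

excluded_total = sum(excluded_by_reason.values())
included_studies = full_texts_reviewed - excluded_total
total_documents = included_studies + manual_search_guidelines

print(excluded_total, included_studies, total_documents)  # 99 8 12
```

The total of 12 documents is the denominator used for the proportions reported in the following sections.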

PRISMA diagram. Acronyms: ADH—Australian Department of Health, CINAHL—Cumulative Index to Nursing and Allied Health Literature, CDC—Centers for Disease Control and Prevention, CRD—Centre for Reviews and Dissemination, GIN—Guidelines International Networks, HSE—Health Systems Evidence, IOM—Institute of Medicine, JBI—The Joanna Briggs Institute, MHB—Ministry of Health of Brazil, NICE—National Institute for Health and Care Excellence, NHMRC—National Health and Medical Research Council, MSPS – Ministerio de Sanidad Y Política Social (Spain), SIGN—Scottish Intercollegiate Guidelines Network, VHL – Virtual Health Library, WHO—World Health Organization. Legend: Reason A –The study evaluated only implementation strategies (isolated actions) rather than the implementation process itself. Reason B – The study did not mention a model or framework for assessing the implementation of the intervention. Reason C – The study was not related to the implementation assessment. Adapted from Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:n71. https://doi.org/10.1136/bmj.n71. For more information, visit:

Risk of bias assessment of studies

According to JBI’s critical appraisal tools, the overall assessment of the studies supported their inclusion in the systematic review.

The cross-sectional studies lacked clear information regarding "confounding factors" or "strategies to address confounding factors". This is understandable given the nature of these studies, in which such details are not typically reported. The reviewers therefore did not consider this lack of information critical, and the studies were included in the review. The results of this methodological quality assessment can be found in an additional file (see Additional file 5).

In the qualitative studies, there was some ambiguity regarding the questions: "Is there a statement locating the researcher culturally or theoretically?" and "Is the influence of the researcher on the research, and vice versa, addressed?". However, the reviewers decided to include the studies and deemed the methodological quality sufficient for the analysis in this article, based on the other information analyzed. The results of this methodological quality assessment can be found in an additional file (see Additional file 6).

Document characteristics (Table 1)

The documents were directed to several continents: Australia/Oceania (4/12) [31, 33, 36, 37], North America (4/12) [30, 32, 38, 39], Europe (2/12) [29, 35] and Asia (2/12) [34, 40]. The types of documents were classified as cross-sectional studies (4/12) [29, 32, 34, 38], methodological guidelines (4/12) [33, 35,36,37], mixed methods studies (3/12) [30, 31, 39] or noncomparative studies (1/12) [40]. In terms of the instrument of evaluation, half of the documents used a survey/questionnaire (6/12) [29,30,31,32, 34, 38], while three (3/12) used qualitative instruments (interviews, group discussions) [30, 31, 39], one used a checklist [37], one used an audit [33] and three (3/12) did not define a specific measurement instrument [35, 36, 40].

Considering the clinical areas covered, most studies evaluated the implementation of nonspecific (general) clinical areas [29, 33, 35,36,37, 40]. However, some studies focused on specific clinical contexts, such as mental health [32, 38], oncology [39], fall prevention [31], spinal cord injury [30], and sexually transmitted infections [34].

Usage context of the models (Table 1)

Specific objectives

All the studies highlighted the purpose of guiding the process of evaluating the implementation of CPGs, even if they evaluated CPGs from generic or different clinical areas.

Levels of use

The most common level of use of the models/frameworks identified to assess the implementation of CPGs was policy (6/12) [33, 35,36,37, 39, 40]. At this level, the model is used in a systematic way to evaluate all the processes involved in CPG implementation and is primarily related to methodological guidelines. This was followed by the organizational level of use (5/12) [30,31,32, 38, 39], where the model is used to evaluate the implementation of CPGs in a specific institution, considering its specific environment. Finally, the clinical level of use (2/12) [29, 34] focuses on individual practice and the factors that can influence the implementation of CPGs by professionals.

Type of health service

Institutional services were predominant (5/12) [33, 35,36,37, 40] and included methodological guidelines and a study of model development and validation. Hospitals were the second most common type of health service (4/12) [29,30,31, 34], followed by ambulatorial (2/12) [32, 34] and community health services (1/12) [32]. Two studies did not specify which type of health service the assessment addressed [38, 39].

Target group

The main target group was professionals directly involved in clinical practice (6/12) [29, 31, 32, 34, 38, 40], namely, health professionals and clinicians. Other stakeholders, less directly related to clinical practice, included guideline developers (2/12) [39, 40], health policy decision makers (1/12) [39], and healthcare organizations (1/12) [39]. The target group was not defined in the methodological guidelines, although all the mentioned stakeholders could be related to these documents.

Model and framework characteristics

Models and frameworks for assessing the implementation of CPGs

The Consolidated Framework for Implementation Research (CFIR) [31, 38] and the Promoting Action on Research Implementation in Health Services (PARiHS) framework [29, 30] were the most commonly employed frameworks within the selected documents. The other models mentioned were: Goal commitment and implementation of practice guidelines framework [32]; Guideline to identify key indicators [35]; Guideline implementation checklist [37]; Guideline implementation evaluation tool [40]; JBI Implementation Framework [33]; Reach, effectiveness, adoption, implementation and maintenance (RE-AIM) framework [34]; The Guideline Implementability Framework [39] and an unnamed model [36].

Domains evaluated

The number of domains evaluated (or suggested for evaluation) by the documents varied between three and five, with the majority focusing on three domains. All the models addressed the domain "context", with a particular emphasis on the micro level of the health care context (8/12) [29, 31, 34,35,36,37,38,39], followed by the multilevel (7/12) [29, 31,32,33, 38,39,40], meso level (4/12) [30, 35, 39, 40] and macro level (2/12) [37, 39]. The "Outcome" domain was evaluated in nine models. Within this domain, the most frequently evaluated subdomain was "adoption" (6/12) [29, 32, 34,35,36,37], followed by "acceptability" (4/12) [30, 32, 35, 39], "appropriateness" (3/12) [32, 34, 36], "feasibility" (3/12) [29, 32, 36], "cost" (1/12) [35] and "penetration" (1/12) [34]. Regarding the other domains, "Intervention" (8/12) [29, 31, 34,35,36, 38,39,40], "Strategies" (7/12) [29, 30, 33, 35,36,37, 40] and "Process" (5/12) [29, 31,32,33, 38] were frequently addressed in the models, while "Sustainability" (1/12) [34] was only found in one model, and "Fidelity/Adaptation" was not observed. The domains presented by the models and frameworks and evaluated in the documents are shown in Table 2.
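The proportions above follow directly from the reference lists attached to each domain. The sketch below tallies them; the reference numbers are transcribed from the paragraph above, while the dictionary layout and the function names `coverage` and `documents_covering` are assumptions made for illustration. Table 2 remains the authoritative source.

```python
# Reference numbers of the documents addressing each (sub)domain,
# transcribed from the paragraph above.
DOMAIN_REFS = {
    "context: micro": [29, 31, 34, 35, 36, 37, 38, 39],
    "context: multilevel": [29, 31, 32, 33, 38, 39, 40],
    "context: meso": [30, 35, 39, 40],
    "context: macro": [37, 39],
    "outcome: adoption": [29, 32, 34, 35, 36, 37],
    "outcome: acceptability": [30, 32, 35, 39],
    "outcome: appropriateness": [32, 34, 36],
    "outcome: feasibility": [29, 32, 36],
    "outcome: cost": [35],
    "outcome: penetration": [34],
    "intervention": [29, 31, 34, 35, 36, 38, 39, 40],
    "strategies": [29, 30, 33, 35, 36, 37, 40],
    "process": [29, 31, 32, 33, 38],
    "sustainability": [34],
}
N_DOCUMENTS = 12


def coverage(domain_refs, n_documents):
    """Return 'k/n' for each (sub)domain, counting distinct documents."""
    return {
        domain: f"{len(set(refs))}/{n_documents}"
        for domain, refs in domain_refs.items()
    }


def documents_covering(prefix, domain_refs):
    """Distinct documents cited under any (sub)domain starting with prefix."""
    docs = set()
    for domain, refs in domain_refs.items():
        if domain.startswith(prefix):
            docs.update(refs)
    return sorted(docs)


for domain, share in coverage(DOMAIN_REFS, N_DOCUMENTS).items():
    print(f"{domain}: {share}")

# Every one of the 12 documents appears under at least one context level.
print(len(documents_covering("context", DOMAIN_REFS)))  # 12
```

Counting distinct documents rather than list entries keeps the tally correct when a document is cited under several levels of the same domain.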

Limitations of the models

Only two documents mentioned limitations in the use of the models or frameworks. These two studies reported limitations in the use of CFIR: "is complex and cumbersome and requires tailoring of the key variables to the specific context", and "this framework should be supplemented with other important factors and local features to achieve a sound basis for the planning and realization of an ongoing project" [31, 38]. Limitations in the use of other models or frameworks were not reported.

Subgroup analysis

Following the subgroup analysis (Table 3), five different models/frameworks were utilized at the policy level by institutional health services. These included the Guideline Implementation Evaluation Tool [40], the NHMRC tool (model name not defined) [36], the JBI Implementation Framework + GRiP [33], Guideline to identify key indicators [35], and the Guideline implementation checklist [37]. Additionally, the "Guideline Implementability Framework" [39] was implemented at the policy level without restrictions based on the type of health service. Regarding the organizational level, the models used varied depending on the type of service. The "Goal commitment and implementation of practice guidelines framework" [32] was applied in community and ambulatory health services, while "PARiHS" [29, 30] and "CFIR" [31, 38] were utilized in hospitals. In contexts where the type of health service was not defined, "CFIR" [31, 38] and "The Guideline Implementability Framework" [39] were employed. Lastly, at the clinical level, "RE-AIM" [34] was utilized in ambulatory and hospital services, and PARiHS [29, 30] was specifically used in hospital services.
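The subgroup results above amount to a lookup from a level of use and a type of health service to candidate models. The sketch below encodes the paragraph as such a lookup; the key "not specified" (used where the service type was unrestricted or undefined) and the function name `candidate_models` are assumptions for illustration, and Table 3 remains the authoritative source.

```python
# (level of use, type of health service) -> models/frameworks reported above.
SUBGROUPS = {
    ("policy", "institutional"): [
        "Guideline Implementation Evaluation Tool",
        "NHMRC tool (model name not defined)",
        "JBI Implementation Framework + GRiP",
        "Guideline to identify key indicators",
        "Guideline implementation checklist",
    ],
    ("policy", "not specified"): ["Guideline Implementability Framework"],
    ("organizational", "community"): [
        "Goal commitment and implementation of practice guidelines framework",
    ],
    ("organizational", "ambulatory"): [
        "Goal commitment and implementation of practice guidelines framework",
    ],
    ("organizational", "hospital"): ["PARiHS", "CFIR"],
    ("organizational", "not specified"): [
        "CFIR",
        "Guideline Implementability Framework",
    ],
    ("clinical", "ambulatory"): ["RE-AIM"],
    ("clinical", "hospital"): ["RE-AIM", "PARiHS"],
}


def candidate_models(level, service):
    """Return the models reported for this combination, or an empty list."""
    return SUBGROUPS.get((level, service), [])


print(candidate_models("clinical", "hospital"))
print(candidate_models("policy", "hospital"))  # no model reported for this pair
```

An empty result means only that the included documents did not report a model for that combination, not that no suitable model exists.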

Discussion

Key findings

This systematic review identified 10 models/frameworks used to assess the implementation of CPGs in various health system contexts. These documents shared similar objectives in utilizing models and frameworks for assessment. The primary level of use was policy, the most common type of health service was institutional, and the main target group of the documents was professionals directly involved in clinical practice. The models and frameworks presented varied analytical domains, with sometimes divergent concepts used in these domains. This study is innovative in its emphasis on the evaluation stage of CPG implementation and in summarizing aspects and domains aimed at the practical application of these models.

The small number of documents contrasts with studies that present an extensive range of models and frameworks available in implementation science. The findings suggest that the use of models and frameworks to evaluate the implementation of CPGs is still in its early stages. Among the selected documents, there was a predominance of cross-sectional studies and methodological guidelines, which strongly influenced how the implementation evaluation was conducted. This was primarily done through surveys/questionnaires, qualitative methods (interviews, group discussions), and non-specific measurement instruments. Regarding the subject areas evaluated, most studies focused on a general clinical area, while others explored different clinical areas. This suggests that the evaluation of CPG implementation has been carried out in various contexts.

The models were chosen independently of the categories proposed in the literature, and some of them, such as CFIR and PARiHS, are categorized for purposes other than implementation evaluation. This practice was described by Nilsen et al., who suggested that models and frameworks from other categories can also be applied for evaluation purposes because they specify concepts and constructs that may be operationalized and measured [14, 15, 42, 43].

The results highlight the increased use of models and frameworks in evaluation processes at the policy level and institutional environments, followed by the organizational level in hospital settings. This finding contradicts a review that reported the policy level as an area that was not as well studied [44]. The use of different models at the institutional level is also emphasized in the subgroup analysis. This may suggest that the greater the impact (social, financial/economic, and organizational) of implementing CPGs, the greater the interest and need to establish well-defined and robust processes. In this context, the evaluation stage stands out as crucial, and the investment of resources and efforts to structure this stage becomes even more advantageous [10, 45]. Two studies (16.7%) evaluated the implementation of CPGs at the individual level (clinical level). These studies stand out for their potential to analyze variations in clinical practice in greater depth.

In contrast to the systemic approaches implied by the level of use and type of health service most strongly indicated in the documents, the target group most observed was professionals directly involved in clinical practice. This suggests an emphasis on evaluating individual behaviors. This same emphasis is observed in the analysis of the models, in which there is a predominance of evaluating the micro level of the health context and the "adoption" subdomain, in contrast with the underuse of domains such as "cost" and "process". Cassetti et al. observed the same phenomenon in their review, in which studies evaluating the implementation of CPGs mainly adopted a behavioral change approach to tackle those issues, without considering the influence of wider social determinants of health [10]. However, the literature widely reiterates that multiple factors impact the implementation of CPGs, and different actions are required to make them effective [6, 46, 47]. As a result, there is enormous potential for the development and adaptation of models and frameworks aimed at more systemic evaluation processes that consider institutional and organizational aspects.

In analyzing the model domains, most models focused on evaluating only some aspects of implementation (three domains). All models evaluated the "context", highlighting its significant influence on implementation [9, 26]. Context is an essential effect modifier for providing research evidence to guide decisions on implementation strategies [48]. Contextualizing a guideline involves integrating research or other evidence into a specific circumstance [49]. The analysis of this domain was adjusted to include all possible contextual aspects, even if they were initially allocated to other domains. Some contextual aspects presented by the models vary in comprehensiveness, such as the assessment of the "timing and nature of stakeholder engagement" [39], which includes individual engagement by healthcare professionals and organizational involvement in CPG implementation. While the importance of context is universally recognized, its conceptualization and interpretation differ across studies and models. This divergence is also evident in other domains, consistent with existing literature [14]. Efforts to address this conceptual divergence in implementation science are ongoing, but further research and development are needed in this field [26].

The main subdomain evaluated was "adoption" within the outcome domain. This may be attributed to the ease of accessing information on the adoption of the CPG, whether through computerized system records, patient records, or self-reports from healthcare professionals or patients themselves. The "acceptability" subdomain pertains to the perception among implementation stakeholders that a particular CPG is agreeable, palatable or satisfactory. On the other hand, "appropriateness" encompasses the perceived fit, relevance or compatibility of the CPG for a specific practice setting, provider, or consumer, or its perceived fit to address a particular issue or problem [26]. Both subdomains are subjective and rely on stakeholders' interpretations and perceptions of the issue being analyzed, making them susceptible to reporting biases. Moreover, obtaining this information requires direct consultation with stakeholders, which can be challenging for some evaluation processes, particularly in institutional contexts.

The evaluation of the subdomains "feasibility" (the extent to which a CPG can be successfully used or carried out within a given agency or setting), "cost" (the cost impact of an implementation effort), and "penetration" (the extent to which an intervention or treatment is integrated within a service setting and its subsystems) [26] was rarely observed in the documents. This may be related to the greater complexity of obtaining information on these aspects, as they involve cross-cutting and multifactorial issues. In other words, it would be difficult to gather this information during evaluations with health practitioners as the target group. This highlights the need for evaluation processes of CPGs implementation involving multiple stakeholders, even if the evaluation is adjusted for each of these groups.

Although the models do not establish the "intervention" domain, we thought it pertinent in this study to delimit the issues that are intrinsic to CPGs, such as methodological quality or clarity in establishing recommendations. These issues were quite common in the models evaluated but were considered in other domains (e.g., in "context"). Studies have reported the importance of evaluating these issues intrinsic to CPGs [47, 50] and their influence on the implementation process [51].

The models explicitly present the "strategies" domain, and its evaluation was usually included in the assessments. This is likely due to the expansion of scientific and practical studies in implementation science that involve theoretical approaches to the development and application of interventions to improve the implementation of evidence-based practices. However, these interventions themselves are not guaranteed to be effective, as reported in a previous review whose results were unclear as to whether the strategies had affected successful implementation [52]. Furthermore, the model domains do not cover all the complexity surrounding the strategies and their development and implementation process. For example, the "Guideline implementation evaluation tool" evaluates whether guideline developers have designed and provided auxiliary tools to promote the implementation of guidelines [40], but this does not mean that these tools would work as expected.

The "process" domain was identified in the CFIR [31, 38], JBI/GRiP [33], and PARiHS [29] frameworks. While it may be included in other domains of analysis, its distinct separation is crucial for defining operational issues when assessing the implementation process, such as determining if and how the use of the mentioned CPG was evaluated [3]. Despite its presence in multiple models, there is still limited detail in the evaluation guidelines, which makes it difficult to operationalize the concept. Further research is needed to better define the "process" domain and its connections and boundaries with other domains.

The domain of "sustainability" was only observed in the RE-AIM framework, which is categorized as an evaluation framework [34]. In its acronym, the letter M stands for "maintenance" and corresponds to the assessment of whether the user maintains use, typically longer than 6 months. The presence of this domain highlights the need for continuous evaluation of CPGs implementation in the short, medium, and long term. Although the RE-AIM framework includes this domain, it was not used in the questionnaire developed in the study. One probable reason is that the evaluation of CPGs implementation is still conducted on a one-off basis and not as a continuous improvement process. Considering that changes in clinical practices are inherent over time, evaluating and monitoring changes throughout the duration of the CPG could be an important strategy for ensuring its implementation. This is an emerging field that requires additional investment and research.

The "Fidelity/Adaptation" domain was not observed in the models. These emerging concepts involve the extent to which a CPG is being conducted exactly as planned or whether it is undergoing adjustments and adaptations. Whether or not there is fidelity or adaptation in the implementation of CPGs does not presuppose greater or lesser effectiveness; after all, some adaptations may be necessary to implement general CPGs in specific contexts. The absence of this domain in all the models and frameworks may suggest that they are not relevant aspects for evaluating implementation or that there is a lack of knowledge of these complex concepts. This may suggest difficulty in expressing concepts in specific evaluative questions. However, further studies are warranted to determine the comprehensiveness of these concepts.

It is important to note the customization of the domains of analysis, with some domains presented in the models not being evaluated in the studies, while others were complementarily included. This can be seen in Jeong et al. [34], where the inclusion of the "intervention" domain in the evaluation with the RE-AIM framework reinforced that the aim of theoretical approaches is to guide the process rather than to determine norms. Despite this, few limitations were reported for the models, suggesting that the use of models in these studies reflects the application of these models to defined contexts without a deep critical analysis of their domains.

Limitations

This review has several limitations. First, only a few studies and methodological guidelines that explicitly present models and frameworks for assessing the implementation of CPGs have been found. This means that few alternative models could be analyzed and presented in this review. Second, this review adopted multiple analytical categories (e.g., level of use, health service, target group, and domains evaluated), whose terminology has varied enormously in the studies and documents selected, especially for the "domains evaluated" category. This difficulty in harmonizing the taxonomy used in the area has already been reported [26] and has significant potential to confuse. For this reason, studies and initiatives are needed to align understandings between concepts and, as far as possible, standardize them. Third, in some studies/documents, the information extracted was not clear about the analytical category. This required an in-depth interpretative process of the studies, which was conducted in pairs to avoid inappropriate interpretations.

Implications

This study contributes to the literature and clinical practice management by describing models and frameworks specifically used to assess the implementation of CPGs based on their level of use, type of health service, target group related to the CPG, and the evaluated domains. While there are existing reviews on the theories, frameworks, and models used in implementation science, this review addresses aspects not previously covered in the literature. This valuable information can assist stakeholders (such as politicians, clinicians, researchers, etc.) in selecting or adapting the most appropriate model to assess CPG implementation based on their health context. Furthermore, this study is expected to guide future research on developing or adapting models to assess the implementation of CPGs in various contexts.

Conclusion

The use of models and frameworks to evaluate the implementation of CPGs remains a challenge. Studies should clearly state the level of model use, the type of health service evaluated, and the target group. The domains evaluated in these models may need adaptation to specific contexts. Nevertheless, utilizing models to assess CPG implementation is crucial as they can guide a more thorough and systematic evaluation process, aiding in the continuous improvement of CPG implementation. The findings of this systematic review offer valuable insights for stakeholders in selecting or adjusting models and frameworks for evaluating CPG implementation, supporting future theoretical advancements and research.

Availability of data and materials

Not applicable.

Abbreviations

- ADH: Australian Department of Health and Aged Care

- CADTH: Canadian Agency for Drugs and Technologies in Health

- CDC: Centers for Disease Control and Prevention

- CFIR: Consolidated Framework for Implementation Research

- CINAHL: Cumulative Index to Nursing and Allied Health Literature

- CPG: Clinical practice guideline

- CRD: Centre for Reviews and Dissemination

- GIN: Guidelines International Networks

- GRiP: Getting Research into Practice

- HSE: Health Systems Evidence

- IOM: Institute of Medicine

- JBI: The Joanna Briggs Institute

- MHB: Ministry of Health of Brazil

- MSPS: Ministerio de Sanidad y Política Social

- NHMRC: National Health and Medical Research Council

- NICE: National Institute for Health and Care Excellence

- PARiHS: Promoting action on research implementation in health systems framework

- PRECEDE-PROCEED: Predisposing, Reinforcing and Enabling Constructs in Educational Diagnosis and Evaluation-Policy, Regulatory, and Organizational Constructs in Educational and Environmental Development

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- PROSPERO: International Prospective Register of Systematic Reviews

- RE-AIM: Reach, effectiveness, adoption, implementation, and maintenance framework

- SIGN: Healthcare Improvement Scotland

- USA: United States of America

- VHL: Virtual Health Library

- WHO: World Health Organization

References

Institute of Medicine. Crossing the Quality Chasm: A New Health System for the 21st Century. 2001. Available from: http://www.nap.edu/catalog/10027. Cited 2022 Sep 29.

Field MJ, Lohr KN. Clinical Practice Guidelines: Directions for a New Program. Washington DC: National Academy Press. 1990. Available from: https://www.nap.edu/read/1626/chapter/8 Cited 2020 Sep 2.

Dawson A, Henriksen B, Cortvriend P. Guideline Implementation in Standardized Office Workflows and Exam Types. J Prim Care Community Heal. 2019;10. Available from: https://pubmed.ncbi.nlm.nih.gov/30900500/. Cited 2020 Jul 15.

Unverzagt S, Oemler M, Braun K, Klement A. Strategies for guideline implementation in primary care focusing on patients with cardiovascular disease: a systematic review. Fam Pract. 2014;31(3):247–66. Available from: https://academic.oup.com/fampra/article/31/3/247/608680. Cited 2020 Nov 5.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):1–13. Available from: https://implementationscience.biomedcentral.com/articles/10.1186/s13012-015-0242-0. Cited 2022 May 1.

Mangana F, Massaquoi LD, Moudachirou R, Harrison R, Kaluangila T, Mucinya G, et al. Impact of the implementation of new guidelines on the management of patients with HIV infection at an advanced HIV clinic in Kinshasa, Democratic Republic of Congo (DRC). BMC Infect Dis. 2020;20(1):N.PAG-N.PAG. Available from: https://search.ebscohost.com/login.aspx?direct=true&db=c8h&AN=146325052&.

Browman GP, Levine MN, Mohide EA, Hayward RSA, Pritchard KI, Gafni A, et al. The practice guidelines development cycle: a conceptual tool for practice guidelines development and implementation. 2016;13(2):502–12. https://doi.org/10.1200/JCO.1995.13.2.502.

Killeen SL, Donnellan N, O’Reilly SL, Hanson MA, Rosser ML, Medina VP, et al. Using FIGO Nutrition Checklist counselling in pregnancy: A review to support healthcare professionals. Int J Gynecol Obstet. 2023;160(S1):10–21. Available from: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85146194829&doi=10.1002%2Fijgo.14539&partnerID=40&md5=d0f14e1f6d77d53e719986e6f434498f.

Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3(1):1–12. Available from: https://bmcpsychology.biomedcentral.com/articles/10.1186/s40359-015-0089-9. Cited 2020 Nov 5.

Cassetti V, M VLR, Pola-Garcia M, AM G, J JPC, L APDT, et al. An integrative review of the implementation of public health guidelines. Prev Med reports. 2022;29:101867. Available from: http://www.epistemonikos.org/documents/7ad499d8f0eecb964fc1e2c86b11450cbe792a39.

Eccles MP, Mittman BS. Welcome to implementation science. Implementation Science BioMed Central. 2006. Available from: https://implementationscience.biomedcentral.com/articles/10.1186/1748-5908-1-1.

Damschroder LJ. Clarity out of chaos: Use of theory in implementation research. Psychiatry Res. 2020;1(283):112461.

Handley MA, Gorukanti A, Cattamanchi A. Strategies for implementing implementation science: a methodological overview. Emerg Med J. 2016;33(9):660–4. Available from: https://pubmed.ncbi.nlm.nih.gov/26893401/. Cited 2022 Mar 7.

Wang Y, Wong ELY, Nilsen P, Chung VC ho, Tian Y, Yeoh EK. A scoping review of implementation science theories, models, and frameworks — an appraisal of purpose, characteristics, usability, applicability, and testability. Implement Sci. 2023;18(1):1–15. Available from: https://implementationscience.biomedcentral.com/articles/10.1186/s13012-023-01296-x. Cited 2024 Jan 22.

Moullin JC, Dickson KS, Stadnick NA, Albers B, Nilsen P, Broder-Fingert S, et al. Ten recommendations for using implementation frameworks in research and practice. Implement Sci Commun. 2020;1(1):1–12. Available from: https://implementationsciencecomms.biomedcentral.com/articles/10.1186/s43058-020-00023-7. Cited 2022 May 20.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322. Available from: /pmc/articles/PMC1508772/?report=abstract. Cited 2022 May 22.

Asada Y, Lin S, Siegel L, Kong A. Facilitators and Barriers to Implementation and Sustainability of Nutrition and Physical Activity Interventions in Early Childcare Settings: a Systematic Review. Prev Sci. 2023;24(1):64–83. Available from: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85139519721&doi=10.1007%2Fs11121-022-01436-7&partnerID=40&md5=b3c395fdd2b8235182eee518542ebf2b.

Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, et al., editors. Cochrane Handbook for Systematic Reviews of Interventions. version 6. Cochrane; 2022. Available from: https://training.cochrane.org/handbook. Cited 2022 May 23.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372. Available from: https://www.bmj.com/content/372/bmj.n71. Cited 2021 Nov 18.

M C, AD O, E P, JP H, S G. Appendix A: Guide to the contents of a Cochrane Methodology protocol and review. Higgins JP, Green S, eds Cochrane Handb Syst Rev Interv. 2011;Version 5.

Kislov R, Pope C, Martin GP, Wilson PM. Harnessing the power of theorising in implementation science. Implement Sci. 2019;14(1):1–8. Available from: https://implementationscience.biomedcentral.com/articles/10.1186/s13012-019-0957-4. Cited 2024 Jan 22.

Ouzzani M, Hammady H, Fedorowicz Z, Elmagarmid A. Rayyan-a web and mobile app for systematic reviews. Syst Rev. 2016;5(1):1–10. Available from: https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/s13643-016-0384-4. Cited 2022 May 20.

JBI. JBI’s Tools Assess Trust, Relevance & Results of Published Papers: Enhancing Evidence Synthesis. Available from: https://jbi.global/critical-appraisal-tools. Cited 2023 Jun 13.

Drisko JW. Qualitative research synthesis: An appreciative and critical introduction. Qual Soc Work. 2020;19(4):736–53.

Pope C, Mays N, Popay J. Synthesising qualitative and quantitative health evidence: A guide to methods. 2007. Available from: https://books.google.com.br/books?hl=pt-PT&lr=&id=L3fbE6oio8kC&oi=fnd&pg=PR6&dq=synthesizing+qualitative+and+quantitative+health+evidence&ots=sfELNUoZGq&sig=bQt5wt7sPKkf7hwKUvxq2Ek-p2Q#v=onepage&q=synthesizing=qualitative=and=quantitative=health=evidence&. Cited 2022 May 22.

Nilsen P, Birken SA, Edward Elgar Publishing. Handbook on implementation science. 542. Available from: https://www.e-elgar.com/shop/gbp/handbook-on-implementation-science-9781788975988.html. Cited 2023 Apr 15.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):1–15. Available from: https://implementationscience.biomedcentral.com/articles/10.1186/1748-5908-4-50. Cited 2023 Jun 13.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76. Available from: https://pubmed.ncbi.nlm.nih.gov/20957426/. Cited 2023 Jun 11.

Bahtsevani C, Willman A, Khalaf A, Östman M. Developing an instrument for evaluating implementation of clinical practice guidelines: a test-retest study. J Eval Clin Pract. 2008;14(5):839–46. Available from: https://search.ebscohost.com/login.aspx?direct=true&db=c8h&AN=105569473&. Cited 2023 Jan 18.

Balbale SN, Hill JN, Guihan M, Hogan TP, Cameron KA, Goldstein B, et al. Evaluating implementation of methicillin-resistant Staphylococcus aureus (MRSA) prevention guidelines in spinal cord injury centers using the PARIHS framework: a mixed methods study. Implement Sci. 2015;10(1):130. Available from: https://pubmed.ncbi.nlm.nih.gov/26353798/. Cited 2023 Apr 3.

Breimaier HE, Heckemann B, Halfens RJGG, Lohrmann C. The Consolidated Framework for Implementation Research (CFIR): a useful theoretical framework for guiding and evaluating a guideline implementation process in a hospital-based nursing practice. BMC Nurs. 2015;14(1):43. Available from: https://search.ebscohost.com/login.aspx?direct=true&db=c8h&AN=109221169&. Cited 2023 Apr 3.

Chou AF, Vaughn TE, McCoy KD, Doebbeling BN. Implementation of evidence-based practices: Applying a goal commitment framework. Health Care Manage Rev. 2011;36(1):4–17. Available from: https://pubmed.ncbi.nlm.nih.gov/21157225/. Cited 2023 Apr 30.

Porritt K, McArthur A, Lockwood C, Munn Z. JBI Manual for Evidence Implementation. JBI Handbook for Evidence Implementation. JBI; 2020. Available from: https://jbi-global-wiki.refined.site/space/JHEI . Cited 2023 Apr 3.

Jeong HJJ, Jo HSS, Oh MKK, Oh HWW. Applying the RE-AIM Framework to Evaluate the Dissemination and Implementation of Clinical Practice Guidelines for Sexually Transmitted Infections. J Korean Med Sci. 2015;30(7):847–52. Available from: https://pubmed.ncbi.nlm.nih.gov/26130944/. Cited 2023 Apr 3.

Grupo de trabajo sobre implementación de GPC. Implementación de Guías de Práctica Clínica en el Sistema Nacional de Salud. Manual Metodológico. 2009. Available from: https://portal.guiasalud.es/wp-content/uploads/2019/01/manual_implementacion.pdf. Cited 2023 Apr 3.

Commonwealth of Australia. A guide to the development, implementation and evaluation of clinical practice guidelines. National Health and Medical Research Council; 1998. Available from: https://www.health.qld.gov.au/__data/assets/pdf_file/0029/143696/nhmrc_clinprgde.pdf.

Queensland Health. Guideline implementation checklist: Translating evidence into best clinical practice. 2022.

Quittner AL, Abbott J, Hussain S, Ong T, Uluer A, Hempstead S, et al. Integration of mental health screening and treatment into cystic fibrosis clinics: Evaluation of initial implementation in 84 programs across the United States. Pediatr Pulmonol. 2020;55(11):2995–3004. Available from: https://www.embase.com/search/results?subaction=viewrecord&id=L2005630887&from=export. Cited 2023 Apr 3.

Urquhart R, Woodside H, Kendell C, Porter GA. Examining the implementation of clinical practice guidelines for the management of adult cancers: A mixed methods study. J Eval Clin Pract. 2019;25(4):656–63. Available from: https://search.ebscohost.com/login.aspx?direct=true&db=c8h&AN=137375535&. Cited 2023 Apr 3.

Yinghui J, Zhihui Z, Canran H, Flute Y, Yunyun W, Siyu Y, et al. Development and validation for evaluation of an evaluation tool for guideline implementation. Chinese J Evidence-Based Med. 2022;22(1):111–9. Available from: https://www.embase.com/search/results?subaction=viewrecord&id=L2016924877&from=export.

Breimaier HE, Halfens RJG, Lohrmann C. Effectiveness of multifaceted and tailored strategies to implement a fall-prevention guideline into acute care nursing practice: a before-and-after, mixed-method study using a participatory action research approach. BMC Nurs. 2015;14(1):18. Available from: https://search.ebscohost.com/login.aspx?direct=true&db=c8h&AN=103220991&.

Lai J, Maher L, Li C, Zhou C, Alelayan H, Fu J, et al. Translation and cross-cultural adaptation of the National Health Service Sustainability Model to the Chinese healthcare context. BMC Nurs. 2023;22(1). Available from: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85153237164&doi=10.1186%2Fs12912-023-01293-x&partnerID=40&md5=0857c3163d25ce85e01363fc3a668654.

Zhao J, Li X, Yan L, Yu Y, Hu J, Li SA, et al. The use of theories, frameworks, or models in knowledge translation studies in healthcare settings in China: a scoping review protocol. Syst Rev. 2021;10(1):13. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7792291.

Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43(3):337–50. Available from: https://pubmed.ncbi.nlm.nih.gov/22898128/. Cited 2023 Apr 4.

Phulkerd S, Lawrence M, Vandevijvere S, Sacks G, Worsley A, Tangcharoensathien V. A review of methods and tools to assess the implementation of government policies to create healthy food environments for preventing obesity and diet-related non-communicable diseases. Implement Sci. 2016;11(1):1–13. Available from: https://implementationscience.biomedcentral.com/articles/10.1186/s13012-016-0379-5. Cited 2022 May 1.

Buss PM, Pellegrini FA. A Saúde e seus Determinantes Sociais. PHYSIS Rev Saúde Coletiva. 2007;17(1):77–93.

Pereira VC, Silva SN, Carvalho VKSS, Zanghelini F, Barreto JOMM. Strategies for the implementation of clinical practice guidelines in public health: an overview of systematic reviews. Heal Res Policy Syst. 2022;20(1):13. Available from: https://health-policy-systems.biomedcentral.com/articles/10.1186/s12961-022-00815-4. Cited 2022 Feb 21.

Grimshaw J, Eccles M, Tetroe J. Implementing clinical guidelines: current evidence and future implications. J Contin Educ Health Prof. 2004;24 Suppl 1:S31-7. Available from: https://pubmed.ncbi.nlm.nih.gov/15712775/. Cited 2021 Nov 9.

Lotfi T, Stevens A, Akl EA, Falavigna M, Kredo T, Mathew JL, et al. Getting trustworthy guidelines into the hands of decision-makers and supporting their consideration of contextual factors for implementation globally: recommendation mapping of COVID-19 guidelines. J Clin Epidemiol. 2021;135:182–6. Available from: https://pubmed.ncbi.nlm.nih.gov/33836255/. Cited 2024 Jan 25.

Lenzer J. Why we can’t trust clinical guidelines. BMJ. 2013;346(7913). Available from: https://pubmed.ncbi.nlm.nih.gov/23771225/. Cited 2024 Jan 25.

Molino C de GRC, Ribeiro E, Romano-Lieber NS, Stein AT, de Melo DO. Methodological quality and transparency of clinical practice guidelines for the pharmacological treatment of non-communicable diseases using the AGREE II instrument: A systematic review protocol. Syst Rev. 2017;6(1):1–6. Available from: https://systematicreviewsjournal.biomedcentral.com/articles/10.1186/s13643-017-0621-5. Cited 2024 Jan 25.

Albers B, Mildon R, Lyon AR, Shlonsky A. Implementation frameworks in child, youth and family services – Results from a scoping review. Child Youth Serv Rev. 2017;1(81):101–16.

Acknowledgements

Not applicable

Funding

This study is supported by the Fundação de Apoio à Pesquisa do Distrito Federal (FAPDF). FAPDF Award Term (TOA) nº 44/2024—FAPDF/SUCTI/COOBE (SEI/GDF – Process 00193–00000404/2024–22). The content in this article is solely the responsibility of the authors and does not necessarily represent the official views of the FAPDF.

Author information

Contributions

NFM and JOMB conceived the idea and the protocol for this study. NFM conducted the literature search. NFM, SNS, JMG and JOMB conducted the data collection with advice and consensus gathering from JOMB. NFM and JMG assessed the quality of the studies. NFM and DFG conducted the data extraction. NFM performed the analysis and synthesis of the results with advice and consensus gathering from JOMB. NFM drafted the manuscript. JOMB critically revised the first version of the manuscript. All the authors revised and approved the submitted version.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

13012_2024_1389_MOESM1_ESM.docx

Additional file 1: PRISMA checklist. Description of data: Completed PRISMA checklist used for reporting the results of this systematic review.

13012_2024_1389_MOESM3_ESM.doc

Additional file 3: JBI’s critical appraisal tools for cross-sectional studies. Description of data: JBI’s critical appraisal tools to assess the trustworthiness, relevance, and results of the included studies. This is specific for cross-sectional studies.

13012_2024_1389_MOESM4_ESM.doc

Additional file 4: JBI’s critical appraisal tools for qualitative studies. Description of data: JBI’s critical appraisal tools to assess the trustworthiness, relevance, and results of the included studies. This is specific for qualitative studies.

13012_2024_1389_MOESM5_ESM.doc

Additional file 5: Methodological quality assessment results for cross-sectional studies. Description of data: Methodological quality assessment results for cross-sectional studies using JBI’s critical appraisal tools.

13012_2024_1389_MOESM6_ESM.doc

Additional file 6: Methodological quality assessment results for the qualitative studies. Description of data: Methodological quality assessment results for qualitative studies using JBI’s critical appraisal tools.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Freitas de Mello, N., Nascimento Silva, S., Gomes, D.F. et al. Models and frameworks for assessing the implementation of clinical practice guidelines: a systematic review. Implementation Sci 19, 59 (2024). https://doi.org/10.1186/s13012-024-01389-1

DOI: https://doi.org/10.1186/s13012-024-01389-1