Abstract

Background

The Hospital Value Based Purchasing Program (HVBP) in the United States, announced in 2010 and implemented since 2013 by the Centers for Medicare and Medicaid Services (CMS), introduced payment penalties and bonuses based on hospital performance on patient 30-day mortality and other indicators. Evidence on the impact of this program is limited and reliant on the choice of program-exempt hospitals as controls. As program-exempt hospitals may have systematic differences with program-participating hospitals, in this study we used an alternative approach wherein program-participating hospitals are stratified by their financial exposure to penalty, and examined changes in hospital performance on 30-day mortality between hospitals with high vs. low financial exposure to penalty.

Methods

Our study examined all hospitals reimbursed through the Medicare Inpatient Prospective Payment System (IPPS) – which include most community and tertiary acute care hospitals – from 2009 to 2016. A hospital’s financial exposure to HVBP penalties was measured by the share of its annual aggregate inpatient days provided to Medicare patients (“Medicare bed share”). The main outcome measures were annual hospital-level 30-day risk-adjusted mortality rates for acute myocardial infarction (AMI), heart failure (HF) and pneumonia patients. Using difference-in-differences models we estimated the change in the outcomes in high vs. low Medicare bed share hospitals following HVBP.

Results

In the study cohort of 1902 US hospitals, average Medicare bed share was 61% and 41% in high (n = 540) and low (n = 1362) Medicare bed share hospitals, respectively. High Medicare bed share hospitals were more likely to have smaller bed size and less likely to be teaching hospitals, but ownership type was similar in both Medicare bed share groups. Among low Medicare bed share (control) hospitals, baseline (pre-HVBP) 30-day mortality was 16.0% (AMI), 10.9% (HF) and 11.4% (pneumonia). In both high and low Medicare bed share hospitals, 30-day mortality showed a secular decrease for AMI and an increase for HF and pneumonia; differences in the pre-post change between the two hospital groups were small (< 0.12%) and not significant for any of the three conditions.

Conclusions

HVBP was not associated with a meaningful change in 30-day mortality across hospitals with differential exposure to the program penalty.

Background

The three aims of better health, better care, and lower costs of the U.S. Department of Health and Human Services’ National Quality Strategy are at the focal-point of the Centers for Medicare and Medicaid Services’ (CMS’) notion of value [1]. The Affordable Care Act (ACA), passed in 2010, introduced hospital-based reforms that sought to move from volume-based to value-based care through financial incentives to hospitals to improve performance [2]. The Hospital Value Based Purchasing Program (HVBP) is a key mechanism through which CMS ties financial payment adjustments to hospital performance for acute care hospitals paid under the Inpatient Prospective Payment System (IPPS), covering most community and tertiary acute care hospitals in the US [3]. Starting in fiscal year (FY) 2013, IPPS hospitals were subject to a financial penalty or bonus – in the form of a percentage reduction or increase not just in the reimbursement for these admission cohorts but for all admissions of Medicare patients – depending on their past performance along multiple domains of inpatient care: patient experience; 30-day mortality; clinical process of care; and efficiency [4]. The 30-day mortality measure, accounting for 25% of the total score, was introduced into the HVBP performance scoring in 2014, and applied to acute myocardial infarction (AMI), heart failure (HF) and pneumonia admission cohorts. Maximum penalty was 1% (reduction in Medicare reimbursement) in 2013 and increased to 1.75% in 2016 and 2% since 2017.

Evidence on the impact of HVBP on the target outcomes is limited. Two prior studies, which found that HVBP was not associated with any change in 30-day mortality, compared mortality changes in IPPS hospitals with those in non-IPPS hospitals [5, 6]. These studies used critical access hospitals or hospitals in the state of Maryland as the comparison group because neither were subject to the HVBP penalties. However, critical access hospitals are small rural hospitals (with fewer than 25 inpatient beds) whose operations may be structurally different from IPPS hospitals [7]. Furthermore, as these hospitals are not mandated to report their performance, those who voluntarily report performance may be selectively different from those that do not. Similarly, while hospitals in the state of Maryland were not exposed to the HVBP, as they are not paid under IPPS, they were exposed to a similar set of state imposed financial incentives to improve quality during the relevant time period [8, 9]. Moreover, these studies had limited follow-up data – one to two years – after the start of the HVBP and were unable to assess longer-term changes in the mortality outcomes. In this study, we focused only on IPPS hospitals and compared the performance of hospitals in which Medicare patients account for a high share of bed days with hospitals in which Medicare patients account for a low share of bed days using 4 years of follow-up data on mortality since the start of the HVBP. Our rationale, based on prior work on the impact of pay-for-performance programs, is that the incentives from the HVBP payment adjustments will have a larger impact on hospitals that rely on Medicare patients to a greater extent [6, 10]. Hospitals with lower reliance on Medicare patients (“low Medicare share hospitals”) will effectively experience a lower “dose” of the incentive compared to those with higher reliance (“high Medicare share hospitals”).

Using publicly reported data on hospital performance from 2009 to 2016, we examined the association between HVBP incentive size and changes in 30-day mortality by comparing pre- to post-HVBP changes in hospital 30-day mortality among high Medicare share hospitals with those of low Medicare share hospitals. We examined 30-day mortality changes for the three admission cohorts (AMI, HF and pneumonia) that were all introduced into the HVBP in 2014.

Methods

Data sources and sample

The data for this study come from four publicly available sources: CMS Hospital Compare [11] (2009–2016), the CMS Final Rule Impact File [12] (2009–2016), the American Hospital Association Annual Survey [13] (2009) and the Census Bureau’s American Community Survey [14]. The study sample comprised all IPPS hospitals from 2009 to 2016 (2756 IPPS hospitals in 2009 and 2607 IPPS hospitals in 2016); critical access hospitals (CAHs), hospitals in the State of Maryland, pediatric hospitals, long term care facilities, psychiatric hospitals, rehabilitation hospitals, and Veterans Affairs hospitals were excluded [4]. Additionally, hospitals were excluded if their IPPS status changed during the study period or if publicly reported 30-day risk-adjusted mortality was unavailable for any study year. Therefore, all included hospitals have balanced data for all study years (2009–2016).

Outcomes, independent variable and hospital characteristics

Our outcomes were hospital-level 30-day risk adjusted mortality rates for AMI, HF, and pneumonia reported annually by the CMS Hospital Compare program [15]; these refer to mortality experienced by Medicare patients admitted to a hospital. Our analytic data consisted of annual longitudinal observations for each of these three diagnoses at IPPS hospitals. According to the Hospital Compare program, for each hospital, the risk adjusted rate of 30-day mortality reported each year for each cohort is based on eligible admissions during that year and in the preceding 2 years, and adjusted for compositional differences across hospitals in patient age, sex, comorbid health conditions and other unobserved, but systematic, hospital effects [16]. For the pneumonia cohort, we did not include 2016 performance since CMS’ identification criteria for pneumonia admissions was modified in 2016 to include admissions with aspiration pneumonia as a principal discharge diagnosis, and admissions with sepsis as a principal discharge diagnosis that have a secondary diagnosis of pneumonia [17].

Our identification of high vs. low Medicare share hospitals was based on the share of aggregate inpatient days of care provided to Medicare-reimbursed patients out of total inpatient days for all patients in 2009 (baseline pre-HVBP year). Specifically, we defined a dichotomous indicator that classifies hospitals with a Medicare share of inpatient days greater than or equal to 55% as high Medicare share hospitals and all other hospitals as low Medicare share hospitals. Because there is little prior work or theoretical guidance on grouping hospitals by Medicare share, we also examined alternative cut-offs and the sensitivity of study findings to them [18, 19]. As findings were similar across the different specifications, we report findings based on the 55% cut-off as our preferred estimate; estimates from alternative cut-offs (50 and 60%) are reported in the Appendix.
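The grouping rule described above can be illustrated with a minimal sketch. The function names, the hospital labels and the inpatient-day counts below are hypothetical and chosen only for illustration; they are not taken from the study data.

```python
def medicare_bed_share(medicare_days: float, total_days: float) -> float:
    """Share of aggregate inpatient days provided to Medicare patients (0 to 1)."""
    if total_days <= 0:
        raise ValueError("total_days must be positive")
    return medicare_days / total_days


def is_high_medicare_share(share: float, cutoff: float = 0.55) -> bool:
    """True if the baseline-year share is at or above the cut-off.

    The 0.55 default mirrors the main analysis; 0.50 and 0.60 correspond
    to the alternative cut-offs used in the sensitivity analyses.
    """
    return share >= cutoff


# Hypothetical hospitals: (Medicare inpatient days, total inpatient days) in 2009.
hospitals = {"A": (6100, 10000), "B": (4100, 10000), "C": (5500, 10000)}

groups = {
    name: is_high_medicare_share(medicare_bed_share(m, t))
    for name, (m, t) in hospitals.items()
}
print(groups)
```

Because the rule uses "greater than or equal to", a hospital exactly at the cut-off (hospital C in this sketch) falls in the high Medicare share group.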

The hospital characteristics included in our analysis as regression covariates were: bed size (less than 100, 100–199, and 200 or more); teaching hospital status - a binary variable indicating membership in the Council of Teaching Hospitals; ownership (not-for-profit, government non-federal, and for-profit); and region (Northeast, Midwest, South, West). In addition, we also included time-varying characteristics: hospital average daily patient census; proportion of low income patients at hospital level (disproportionate share hospital [DSH] %); state-level annual poverty rate; state-level annual unemployment rate.

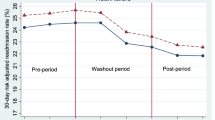

Statistical analysis

We compared the hospital characteristics of the high vs. low Medicare share hospitals in the baseline year 2009. We plotted annual average mortality rate for each condition from 2009 to 2016 for high and low Medicare share hospitals; compared the baseline difference in mortality rate between high and low Medicare share hospitals for each condition; and estimated linear time series models to capture average change in annual mortality rates over the study period for the targeted conditions among the two groups of hospitals. We evaluated the association of the HVBP incentive size with the mortality outcomes using a difference-in-differences type approach, whereby pre- vs. post-HVBP changes in the outcome in high Medicare share hospitals were contrasted with corresponding changes in low Medicare share hospitals. In identifying pre- and post-HVBP periods, we note that while the HVBP was announced in March 2010, with passage of the ACA, the 30-day mortality measures for AMI, HF, and pneumonia were introduced in FY 2014. However, hospital performance on 30-day mortality was based on all eligible hospitalizations during the prior 3 year period, and for the first program year (FY 2014) hospital performance was based on discharges during July 1, 2011 – June 30, 2013 [15]. Since this evaluation plan was announced in 2010 soon after the passage of the ACA, we consider the start date of the HVBP to be 2011, the first year in which patient outcome measures were evaluated to determine payment adjustments for FY 2014. We defined a dichotomous measure of the post-HVBP period that was equal to 1 for the time period 2011–2016 (post-HVBP) and 0 for observations between 2009 and 2010 (pre-HVBP) (Fig. 1). 
As the post-HVBP period is longer than the pre-HVBP period, in sensitivity analyses we examined whether using shorter post-HVBP periods, an earlier one (2011–2013) and a later one (2014–2016) analyzed separately, affects the main findings. To examine heterogeneity in mortality change, we used a three-way difference-in-differences regression model specification and estimated mortality changes for hospitals grouped by teaching status, ownership and bed size.
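The logic of the pre/post contrast can be sketched with an unadjusted two-by-two comparison of group means. This is a simplified illustration and not the regression model used in the study: it omits covariates, year effects and hospital effects, and the function names and mortality values are hypothetical.

```python
from statistics import mean


def post_hvbp(year: int) -> int:
    """1 for the post-HVBP period (2011 onward), 0 for 2009-2010."""
    return 1 if year >= 2011 else 0


def did_estimate(rows):
    """Unadjusted difference-in-differences from group-by-period means.

    rows: iterable of (high_share: bool, year: int, mortality_rate: float).
    Returns (post - pre change in high share) minus (post - pre change in low share).
    """
    cells = {(g, p): [] for g in (0, 1) for p in (0, 1)}
    for high, year, rate in rows:
        cells[(int(high), post_hvbp(year))].append(rate)
    m = {key: mean(values) for key, values in cells.items()}
    return (m[(1, 1)] - m[(1, 0)]) - (m[(0, 1)] - m[(0, 0)])


# Hypothetical hospital-year mortality rates (%), not study data.
rows = [
    (True, 2009, 16.2), (True, 2010, 16.0), (True, 2012, 15.0), (True, 2015, 14.2),
    (False, 2009, 16.0), (False, 2010, 15.8), (False, 2012, 14.9), (False, 2015, 14.1),
]
print(round(did_estimate(rows), 3))
```

In this sketch both groups show a decline, and the estimate captures only the part of the decline in the high share group that exceeds the decline in the low share group.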

For our main analysis, to estimate the association between hospital mortality performance and the degree of exposure to the HVBP, we used linear regression models with difference-in-differences specifications that included indicators for high Medicare share hospitals and post-HVBP time period, and for the interaction (product) of high Medicare share hospitals and post-HVBP. We also included as covariates, indicators of the aforementioned hospital characteristics measured in the baseline year (2009). The coefficient estimate of the interaction term gives the excess pre- to post-HVBP change in mortality rate for high Medicare share hospitals compared to that for low Medicare share hospitals [20, 21]. As the difference-in-differences specification assumes similarity in pre-HVBP longitudinal changes in each mortality measure between high vs. low Medicare share hospitals, we tested the validity of this assumption (“parallel trends test”) using alternative difference-in-differences models using only pre-HVBP data [22]. The results are reported in Table 3 in Appendix. We used a linear (hospital-level) random effects regression model with heteroscedasticity-robust standard errors [23, 24]. In sensitivity analysis with respect to the random effects specification, we also performed similar estimation using a fixed effects specification to control for systematic time-invariant unobserved hospital characteristics [24]. For each model, we included year fixed effects to adjust for secular trends in the mortality outcomes.
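One way to write the specification described above is shown below. The notation is assumed here for illustration and does not appear in the original analysis: $Y_{ht}$ is the 30-day risk-adjusted mortality rate for hospital $h$ in year $t$, $\mathrm{High}_h$ and $\mathrm{Post}_t$ are the two indicators, $X_h$ collects the baseline (2009) hospital characteristics, $\tau_t$ are year effects, and $u_h$ is the hospital-level random effect.

```latex
Y_{ht} = \beta_0 + \beta_1\,\mathrm{High}_h + \beta_2\,\mathrm{Post}_t
       + \beta_3\,(\mathrm{High}_h \times \mathrm{Post}_t)
       + X_h^{\top}\gamma + \tau_t + u_h + \varepsilon_{ht}
```

In this form, $\beta_3$ is the difference-in-differences coefficient, that is, the excess pre- to post-HVBP change in mortality for high Medicare share hospitals. With a full set of year effects $\tau_t$, the main effect of $\mathrm{Post}_t$ would generally be absorbed by them, while $\beta_3$ remains identified.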

Given potential changes in 30-day readmission rates for AMI, HF, and pneumonia associated with the Hospital Readmissions Reduction Program (HRRP), another CMS incentive program that was introduced alongside the HVBP in 2010, there may be unintended spillover effects of the HRRP on 30-day mortality rates for these conditions [25]. As a sensitivity analysis, we re-estimated variants of our main models that included the 30-day risk adjusted readmission rate for the corresponding admission cohort as an additional covariate; a significant difference between the difference-in-differences estimates in the models without and with adjustment for the readmission rate would indicate possible spillover effects.

All statistical analyses in this study were performed using Stata version 14.1 [26]. The Institutional Review Board of the Boston University School of Medicine considered this study exempt from human subjects review as no person-level data was involved.

Results

The final study sample comprised 1902 hospitals in each year between 2009 and 2016. We compared the characteristics of the high Medicare share hospitals vs. the low Medicare share hospitals in 2009 (Table 1). The mean share of Medicare inpatient days was 61% for high Medicare share hospitals and 41% for low Medicare share hospitals. A significantly lower proportion of high Medicare share hospitals compared to low Medicare share hospitals were teaching hospitals (1.1% vs. 17.2%). Hospital ownership types were similar across both hospital groups, but a larger proportion of the high Medicare share hospitals had smaller bed size (≤99 beds; 28.1% vs. 13.2%) and were in the South (45.2% vs. 34.3%).

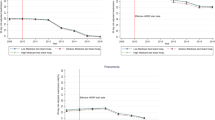

Figure 2 presents the longitudinal trends in the 30-day risk adjusted mortality rate for AMI, HF, and pneumonia. The average annual change in mortality rate between 2009 and 2016 for low vs. high Medicare share hospitals for AMI was − 2.0% vs. 2.04% (p-value for difference between the two groups = 0.53), for HF was 1.0% vs. 1.1% (p-value = 0.48) and for pneumonia was − 0.2% vs. 0.2% (p-value = 0.003).

The results of the association between a hospital’s degree of reliance on Medicare patients (and thus the effective incentive size under the HVBP) and 30-day risk adjusted mortality rate for AMI, HF, and pneumonia using the difference-in-differences analysis are presented in Table 2 (full regression estimates in Table 4 in Appendix). There was a secular decrease in 30-day AMI mortality rates between pre- and post-HVBP periods in both high and low Medicare share hospitals. Adjusted for trends and covariate differences, the difference in change in the high Medicare share hospitals was not significant (0.03, 95% confidence interval (CI) [− 0.13, 0.19%]). Similarly, for HF and pneumonia admissions, there was no significant difference in the changes in high vs. low Medicare share hospitals. We found that high patient census was associated with lower mortality across all three admission cohorts, while mortality was higher in government non-federal hospitals (for AMI and pneumonia admissions) and in the South.

The validity of our difference-in-differences results rests on the assumption that there were no pre-HVBP trend differences between high and low Medicare share hospitals. Therefore, we compared the pre-HVBP mortality trends between high and low Medicare share hospitals (“parallel trends test”). For HF and pneumonia admission cohorts the pre-HVBP trends were similar among the two hospital groups (Table 3 in Appendix); for the AMI cohort, the difference in change between low vs. high Medicare share hospitals was significant but small and not meaningful, amounting to less than 9% of standard deviation in 30-day mortality in 2009.

Sensitivity analysis limiting the post-period to 3 years (2011–2013 and 2014–2016), instead of the 6 years in the main analysis, showed no significant change in mortality associated with HVBP, except for the pneumonia cohort using 2014–2016 data, where the change was significant but not meaningful (Tables 5 and 6 in Appendix). Alternative cutoffs of 50 and 60% in identifying high Medicare bed share hospitals also showed no change in findings, with one exception (Tables 7 and 8 in Appendix): using a lower threshold (50%) showed a significant increase in mortality for pneumonia admissions; however, the magnitude of this change was small, amounting to 10% of the baseline standard deviation in mortality. An alternative regression model specification including hospital fixed effects also produced similar results (Table 9 in Appendix). Including hospital 30-day readmission rate as a covariate showed no change in mortality associated with HVBP and no association between mortality and readmission rates (Table 10 in Appendix). In models examining heterogeneity, we found a significant increase in mortality (of up to 25% of the standard deviation in mortality rate) for low bed size (AMI mortality) and privately owned (HF and pneumonia mortality) hospitals (Table 11 in Appendix).
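The comparison of an estimate with the baseline standard deviation, used above to judge whether a change is meaningful, can be sketched as follows. The function name and the numbers are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not state which form was used.

```python
from statistics import stdev
from typing import Sequence


def standardized_change(estimate: float, baseline_rates: Sequence[float]) -> float:
    """Absolute estimate as a fraction of the baseline-year standard deviation.

    Uses the sample standard deviation (n - 1 denominator); this is an
    assumption made for the sketch.
    """
    return abs(estimate) / stdev(baseline_rates)


# Hypothetical baseline (2009) hospital mortality rates (%) and a hypothetical estimate.
baseline = [10.0, 11.0, 12.0, 13.0, 14.0]
fraction = standardized_change(0.15, baseline)
print(f"{fraction:.1%} of the baseline standard deviation")
```

Expressing an estimate this way puts it on the scale of between-hospital variation, which is how the small but statistically significant changes above are interpreted.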

Discussion

Our main findings showed that exposure to larger HVBP penalties was not associated with differential change in 30-day mortality for AMI, HF and pneumonia patients across hospitals. These findings were largely reiterated in extensive sensitivity analyses; although in a few cases we found a significant change in mortality associated with HVBP exposure, in all these instances the magnitude of change was small (under 25% of the standard deviation in the mortality measure at baseline). These results are broadly consistent with findings from other studies that used critical access hospitals or hospitals in the state of Maryland as the comparison group [5, 6]. Additionally, we did not find evidence of mortality spillover effects from changes in readmission rates due to the HRRP (i.e., higher mortality rates for targeted conditions). Our study adds to the body of evidence in the literature that has found no significant impact of pay-for-performance programs on patient outcomes [27], but extends existing work in two important ways. First, we utilized the design of the HVBP to identify hospitals that differed in the extent of HVBP incentives. This provided the method to compare IPPS hospitals that were more likely to be affected by the program (larger effective penalty) with IPPS hospitals that were less likely to be impacted by the program (smaller effective penalty), in the post-HVBP period compared to the pre-HVBP period. Previous studies relied on non-IPPS hospitals to serve as a control group for hospitals that were exposed to the program; as noted previously, non-IPPS hospitals were different from IPPS hospitals in ways that potentially limited their comparability in the 30-day mortality experience [5, 6]. 
Nevertheless, our finding of no meaningful change in mortality following the HVBP, based on a comparison between hospitals that differed in the effective incentive size under HVBP, is comparable to the results of prior studies using non-IPPS hospitals as controls; taken together, these studies reinforce the finding of no HVBP-associated changes in 30-day mortality. Second, while other studies have assessed the mortality outcomes over a relatively short follow-up period (one to two years) after the start of the HVBP, we evaluated the outcomes of the IPPS hospitals over a longer follow-up period (4 years), reducing the risk of missing late effects of the HVBP [5, 6].

There are a number of possible explanations for the lack of evidence of improvement in patient outcomes under the HVBP. First, the financial incentives under the HVBP may have been too small – the maximum penalty was 1% (2013), 1.25% (2014), 1.5% (2015), and 1.75% (2016) – to elicit performance changes from hospitals [28, 29]. Second, the HVBP is spread out over multiple domains – patient experience, process of care, patient outcomes, and efficiency – with the result that the effect of the program may be dispersed and hard to appreciate [1, 5]. Third, even though we have 4 years of follow-up data, bringing about systematic changes in hospital care that can result in improvement in mortality may require even more time. It remains to be seen whether reductions in mortality will require a longer time to materialize. Fourth, it may be that pay-for-performance schemes that give financial rewards or penalties to providers for better performance are not effective enough to impact the outcomes we examined. In fact, prior studies have failed to find any evidence that pay-for-performance schemes have improved patient outcomes [27, 30].

Our study has several limitations. First, although reliance on Medicare revenues may be smaller for the low Medicare share hospitals, these hospitals still have an incentive to improve patient outcomes since part of their revenue comes from Medicare payments. However, the magnitude of this incentive is likely to be substantially smaller in comparison with that for high Medicare share hospitals. Thus, to the extent that incentives influence hospital behavior, efforts to reduce mortality under the HVBP should have been substantially greater in high-Medicare share hospitals. Second, the HVBP program was launched during the time that quality of care was improving [1]. While this secular trend may limit our ability to disentangle the effects of the HVBP from the concurrent changes in hospital quality of care, our use of a difference-in-differences analysis is the approach most likely to achieve this. Third, our models do not account for time-varying factors, such as changes in regional hospital competition and state healthcare policy (e.g., Medicaid policy), that may systematically affect high and low Medicare share hospitals differently, leading to potential bias in our estimates of the change in mortality associated with HVBP.

Conclusion

In conclusion, our results indicate that HVBP was not associated with differential improvement in mortality outcomes across hospitals that differed in their risk of penalty over the 4 years since the implementation of the program. Policy makers should re-evaluate whether providing monetary incentives to hospitals is an effective mechanism to motivate hospitals to perform better. There is considerable concern that this program may have unintended adverse consequences, particularly for financially vulnerable hospitals, by exacerbating the resource constraints on interventions to improve patient care; it may also lead to undesirable gaming responses, such as patient cherry-picking or use of alternative diagnostic codes for non-targeted conditions [31]. Alternatively, CMS should consider working collaboratively – rather than punitively – with hospitals to identify and prioritize quality problems that are most relevant to individual providers, create and support learning systems that focus on collecting data for learning and quality improvement, and provide more financial support for quality improvement efforts at hospitals that lack resources.

Availability of data and materials

Following are the four sources of data used for this study; all data are publicly available.

1) CMS Hospital Compare.

Centers for Medicare & Medicaid Services: Hospital Compare Data Archive. https://data.medicare.gov/data/archives/hospital-compare; 2018.

2) CMS Final Rule Impact File.

Centers for Medicare & Medicaid Services: Final Rule Impact File Data. https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/AcuteInpatientPPS/Historical-Impact-Files-for-FY-1994-through-Present.html; 2017.

3) AHA Annual Survey.

American Hospital Association: AHA Annual Survey Database. Chicago: www.ahadata.com; 2017.

4) American Community Survey.

U.S. Census Bureau: American Community Survey. Washington, DC: U.S. Census Bureau; 2017. https://factfinder.census.gov/.

Abbreviations

- ACA: Affordable Care Act
- AMI: Acute myocardial infarction
- CMS: Centers for Medicare and Medicaid Services
- HVBP: Hospital Value Based Purchasing Program
- HF: Heart failure
- HRRP: Hospital Readmissions Reduction Program
- IPPS: Inpatient Prospective Payment System

References

VanLare JM, Conway PH. Value-based purchasing--national programs to move from volume to value. N Engl J Med. 2012;367(4):292–5.

Patient Protection and Affordable Care Act. Patient protection and affordable care act. Public law. 2010;111(48):759–62.

Norton EC, Li J, Das A, Chen LM. Moneyball in Medicare. J Health Econ. 2018;61:259–73.

Lake Superior Quality Innovation Network: Understanding Hospital Value-Based Purchasing. 2019. https://www.lsqin.org/wp-content/uploads/2017/12/VBP-Fact-Sheet.pdf.

Ryan AM, Krinsky S, Maurer KA, Dimick JB. Changes in hospital quality associated with hospital value-based purchasing. N Engl J Med. 2017;376(24):2358–66.

Figueroa JF, Tsugawa Y, Zheng J, Orav EJ, Jha AK. Association between the value-based purchasing pay for performance program and patient mortality in US hospitals: observational study. BMJ. 2016;353. https://www.ncbi.nlm.nih.gov/pubmed/27160187.

Joynt KE, Harris Y, Orav EJ, Jha AK. Quality of care and patient outcomes in critical access rural hospitals. JAMA. 2011;306(1):45–52.

Calikoglu S, Murray R, Feeney D. Hospital pay-for-performance programs in Maryland produced strong results, including reduced hospital-acquired conditions. Health Aff (Millwood). 2012;31(12):2649–58.

Rajkumar R, Patel A, Murphy K, Colmers JM, Blum JD, Conway PH, Sharfstein JM. Maryland's all-payer approach to delivery-system reform. N Engl J Med. 2014;370(6):493–5.

Jha AK, Joynt KE, Orav EJ, Epstein AM. The long-term effect of Premier pay for performance on patient outcomes. N Engl J Med. 2012;366(17):1606–15.

Centers for Medicare & Medicaid Services: Hospital Compare Data Archive: 2018. https://data.medicare.gov/data/archives/hospital-compare

Centers for Medicare & Medicaid Services: Final Rule Impact File Data: 2017. https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/AcuteInpatientPPS/Historical-Impact-Files-for-FY-1994-through-Present.html.

American Hospital Association. AHA Annual Survey Database. Chicago; 2016. www.ahadata.com. Accessed 1 Jan 2017.

U.S. Census Bureau. American community survey. Washington, DC: U.S. Census Bureau; 2017. https://factfinder.census.gov/

Centers for Medicare & Medicaid Services: Hospital Compare: http://www.medicare.gov/hospitalcompare/search.html; 2015.

Yale New Haven Health Services Corporation/Center for Outcomes Research and Evaluation. 2018 Condition-Specific Measures Updates and Specifications Report Hospital-Level 30-Day Risk-Standardized Mortality Measures. New Haven: Centers for Medicare & Medicaid Services; 2018. www.qualitynet.org

Yale New Haven Health Services Corporation/Center for Outcomes Research and Evaluation. 2016 Condition-Specific Measures Updates and Specifications Report: Hospital-Level 30-Day Risk-Standardized Mortality Measures. Baltimore: Centers for Medicare & Medicaid Services; 2016.

McGarry BE, Blankley AA, Li Y. The impact of the Medicare hospital readmission reduction program in New York state. Med Care. 2016;54(2):162–71.

Werner RM, Kolstad JT, Stuart EA, Polsky D. The effect of pay-for-performance in hospitals: lessons for quality improvement. Health Aff (Millwood). 2011;30(4):690–8.

Dimick JB, Ryan AM. Methods for evaluating changes in health care policy: the difference-in-differences approach. JAMA. 2014;312(22):2401–2.

Wooldridge JM. Econometric analysis of cross-section and panel data. Cambridge: The MIT Press; 2002.

Ryan AM, Burgess JF Jr, Dimick JB. Why we should not be indifferent to specification choices for difference-in-differences. Health Serv Res. 2015;50(4):1211–35.

Bertrand M, Duflo E, Mullainathan S. How much should we trust differences-in-differences estimates? Q J Econ. 2004;119(1):249–75.

Cameron AC, Trivedi PK. Microeconometrics: methods and applications. New York: Cambridge University Press; 2005.

Dharmarajan K, Wang Y, Lin Z, et al. Association of changing hospital readmission rates with mortality rates after hospital discharge. JAMA. 2017;318(3):270–8.

StataCorp. Stata Statistical Software: Release 14. College Station: StataCorp LP; 2016.

Eijkenaar F, Emmert M, Scheppach M, Schoffski O. Effects of pay for performance in health care: a systematic review of systematic reviews. Health Policy. 2013;110(2–3):115–30.

Ryan AM. Will value-based purchasing increase disparities in care? N Engl J Med. 2013;369(26):2472–4.

Werner RM, Dudley RA. Medicare's new hospital value-based purchasing program is likely to have only a small impact on hospital payments. Health Aff (Millwood). 2012;31(9):1932–40.

Doran T, Maurer KA, Ryan AM. Impact of provider incentives on quality and value of health care. Annu Rev Public Health. 2017;38:449–65.

Himmelstein DU, Ariely D, Woolhandler S. Pay-for-performance: toxic to quality? Insights from behavioral economics. Int J Health Serv. 2014;44(2):203–14.

Acknowledgements

None.

Funding

This study was funded by the National Institutes of Health (Grant: R01HL127212; Principal Investigator: Amresh D. Hanchate). The National Institutes of Health had no role in any stage of the conduct of the research reported in this study, including the study design, data analysis, interpretation of the findings, and writing of the manuscript. The contents are those of the author(s) and do not necessarily represent the official views of, nor an endorsement, by the National Institutes of Health.

Author information

Contributions

SB and ADH were responsible for the conception and design of the study, and analysis and interpretation of the data. SB drafted the manuscript. ADH and ML were responsible for obtaining and developing the analytic data. DM, MPO and ML contributed to interpretation of the findings, and were involved in revising the manuscript for important intellectual content. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the Institutional Review Board at the Boston University School of Medicine, and a waiver of consent was granted as no individual-level data were used.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

This document contains (a) estimates of all covariates from the regression models, and (b) estimates from regression models of sensitivity analyses.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Banerjee, S., McCormick, D., Paasche-Orlow, M.K. et al. Association between degree of exposure to the Hospital Value Based Purchasing Program and 30-day mortality: experience from the first four years of Medicare’s pay-for-performance program. BMC Health Serv Res 19, 921 (2019). https://doi.org/10.1186/s12913-019-4562-7

DOI: https://doi.org/10.1186/s12913-019-4562-7