Abstract

Background

This paper identifies and describes measures of constructs relevant to the adoption or implementation of innovations (i.e., new policies, programs or practices) at the organizational level. This work is intended to advance the field of dissemination and implementation research by helping scientists identify existing measures and by highlighting methodological issues that require additional attention.

Methods

We searched 11 bibliographic databases for quantitative, empirical studies published between 1973 and 2013 that presented outcome data related to adoption and/or implementation of an innovation. Included studies had to assess latent constructs related to the “inner setting” of the organization, as defined by the Consolidated Framework for Implementation Research.

Results

Of the 76 studies included, most (86%) were cross-sectional and nearly half (49%) were conducted in health care settings. Nearly half (46%) involved implementation of evidence-based or “best practice” strategies; roughly a quarter (26%) examined use of new technologies. The primary outcomes most often assessed were innovation implementation (57%) and adoption (34%); 4% of included studies assessed both outcomes. There was wide variability in conceptual and operational definitions of organizational constructs. The two most frequently assessed constructs were “organizational climate” and “readiness for implementation.” More than half (55%) of the studies did not articulate an organizational theory or conceptual framework guiding the inquiry; about a third (34%) referenced Diffusion of Innovations theory. Overall, only 46% of articles reported psychometric properties of measures assessing latent organizational characteristics. Of these, 94% (33/35) described reliability and 71% (25/35) reported on validity.

Conclusions

The lack of clarity associated with construct definitions, inconsistent use of theory, absence of standardized reporting criteria for implementation research, and the fact that few measures have demonstrated reliability or validity were among the limitations highlighted in our review. Given these findings, we recommend that increased attention be devoted toward the development or refinement of measures using common psychometric standards. In addition, there is a need for measure development and testing across diverse settings, among diverse population samples, and for a variety of types of innovations.


Background

The gap between the generation of new evidence and translation of this evidence into practice is the single biggest chasm in biomedical and community-based research today [1, 2]. The lag between discovery and implementation is partly due to a failure to understand organizational factors that affect adoption and implementation of innovations (i.e., new policies, programs or practices) [3]. Adoption has been defined as the decision of an organization or a community to commit to and initiate an evidence-based intervention (EBI), whereas implementation involves the process of putting to use or integrating an EBI within a setting [4]. Successful dissemination of EBIs requires an understanding of how and why organizations and systems adopt innovations, as well as their capacity to deliver and maintain them over time.

While there is a growing literature on organizational characteristics related to adoption and implementation of EBIs, the field is still in need of validated conceptual frameworks and measures. There are important lessons to be learned from other reviews [3, 4] and other disciplines (e.g., education, community psychology, health services) [5,6,7,8,9,10,11,12]. However, to our knowledge, a systematic review of organizational characteristics and methods of measurement across disciplines and settings (e.g., health care, technology firms, schools) has not yet been conducted. To move the field of dissemination and implementation (D&I) in public health forward, there is a need for reliable and valid measures to assess organizational characteristics and to validate conceptual frameworks that seek to guide strategies to promote adoption and implementation of EBI strategies broadly.

Given the early stage of D&I research in health services and public health, coupled with the rapid pace of advances in science, this is an opportune time to evaluate measures of organizational characteristics and to consider next steps to strengthen them. Thus, the aims of this systematic review are to: (1) identify measures of organizational characteristics hypothesized to be associated with the adoption and/or implementation of innovations across a range of disciplinary fields; (2) describe the characteristics and psychometric properties of the measures; and (3) provide recommendations to improve the measurement of constructs in future studies. While the terms organizational “predictors,” “factors,” and “measures” are often used interchangeably, we chose the term organizational “construct” to refer to the characteristic being evaluated [13]. In this review, we focus specifically on latent constructs [14], or those that cannot be directly observed, and examine the psychometric properties of the variables used to measure those constructs.

Methods

Conceptual model

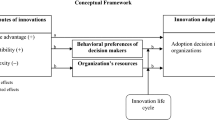

We used the Consolidated Framework for Implementation Research (CFIR) to guide this systematic review [15]. The CFIR is a meta-theoretical framework that integrates constructs from relevant D&I theories [16] and addresses multiple domains, including: (1) the inner organizational setting; (2) characteristics of the intervention or EBI; (3) the outer setting; (4) characteristics of those implementing the intervention; and (5) processes put in place for implementation. The CFIR is becoming a widely used framework in D&I research [15, 17] and thus provided a useful organizing framework for the current review.

Our main goal was to identify and review measures of organizational characteristics associated with adoption and/or implementation of innovations. Our review focused on latent constructs associated with CFIR’s “inner organizational setting” because we were particularly interested in describing psychometric properties of existing measures. Thus, we excluded structural features of organizations that are directly observable, such as organizational size. The latent constructs described under the inner organizational setting include: (a) networks and communications (e.g., teamwork, relationships among individuals within the organization); (b) culture (e.g., norms, values); (c) implementation climate, which is described as “shared receptivity to change”; and (d) readiness for implementation. Implementation climate comprises six sub-constructs: tension for change, compatibility of change with organizational values, relative priority (shared perception of importance of change), organizational incentives and rewards, goals and feedback (“extent to which goals are clearly communicated, acted upon”), and learning climate [15]. According to the CFIR, an inner organizational setting that is stable, has clear and decentralized decision-making authority, has a capacity for change, and has collective receptivity to change is more likely to adopt and/or implement an innovation than one lacking these characteristics [15].
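
Because measures in this review were classified under CFIR inner-setting categories rather than under the authors’ own labels, it can help to write the target categories down as a lookup table. The sketch below is hypothetical: the `CFIR_INNER_SETTING` dictionary and the `classify` helper are illustrative names, exact-label matching is a simplification of what was in practice a consensus judgment by the coding team, and readiness for implementation is listed as its own inner-setting construct following the CFIR [15].

```python
from __future__ import annotations

# Hypothetical lookup table of the latent CFIR inner-setting constructs used to
# organize measures. Observable structural features (e.g., organizational size)
# are deliberately absent because the review excluded them.
CFIR_INNER_SETTING: dict[str, list[str]] = {
    "networks and communications": [],
    "culture": [],
    "implementation climate": [
        "tension for change",
        "compatibility",
        "relative priority",
        "organizational incentives and rewards",
        "goals and feedback",
        "learning climate",
    ],
    "readiness for implementation": [],
}


def classify(label: str) -> tuple[str, str | None] | None:
    """Return (construct, sub_construct) for an exact CFIR label, else None.

    Matching is case-insensitive but otherwise literal; mapping an author's
    idiosyncratic construct name onto the CFIR still requires human judgment.
    """
    key = label.strip().lower()
    for construct, sub_constructs in CFIR_INNER_SETTING.items():
        if key == construct:
            return construct, None
        if key in sub_constructs:
            return construct, key
    return None


print(classify("Learning climate"))     # ('implementation climate', 'learning climate')
print(classify("organizational size"))  # None
```

Returning `None` for unmatched labels makes the “needs discussion” cases explicit instead of silently forcing them into a category.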

Identification of eligible articles

With assistance from a Health Services Reference Librarian, we generated a comprehensive list of search terms and used database thesauri to tailor the list of terms for each of 11 relevant electronic databases. Databases searched included: PubMed (Web-based), EMBASE (DIALOG platform), Cumulative Index to Nursing and Allied Health Literature (CINAHL; EBSCO platform), ABI/INFORM (DIALOG), Business Source Premier (EBSCO), PsycINFO (DIALOG), EconLit (DIALOG), Social Sciences Abstracts (DIALOG), SPORTDiscus (EBSCO), ILLUMINA and Educational Resource Information Center (ERIC). In PubMed, the following Medical Subject Headings (MeSH) terms were selected and combined with relevant title and text words: organizational culture, organizational objectives, leadership, organizational policy, organizational innovation, diffusion of innovations, and “efficiency, organizational.” Title and text words defined the concepts of organizational characteristics, organizational factors, organizational capacity, measures, adoption, implementation, sustainability, decision-making, and readiness. Although papers offered various definitions for adoption and implementation specific to the innovation studied, adoption was generally conceptualized as the decision process of an organization to commit to and utilize an innovation. Implementation was conceptualized as putting to use or integrating an innovation within a setting. Search strategies for the remaining databases were adapted to each database’s syntax using a combination of thesauri and text words. Database searches were conducted between January and March of 2013. Included papers had to: (a) be written in English; (b) be published in a peer-reviewed journal; (c) report results of original research; (d) quantitatively assess outcomes related to adoption and/or implementation of innovations; and (e) assess latent constructs of the “inner organizational setting” as defined by the CFIR.
We chose 1973 as the start date because we wanted to include contributions to D&I from other disciplines, and D&I research appeared to become increasingly active around that time. All eligible reports published from January 1973 to March 2013 were identified and retrieved.
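
To illustrate how MeSH headings, title/abstract words, and the date window described above combine into a single PubMed search, here is a hypothetical sketch. The Boolean grouping, the exact heading spellings, and the use of only a subset of the listed text words are assumptions; the review’s actual search strings are not reproduced here. The field tags themselves (`[MeSH Terms]`, `[tiab]`, `[dp]`, `[lang]`) are standard PubMed search syntax.

```python
# Hypothetical sketch: the grouping below is an assumption, not the review's
# actual search string.

# MeSH headings named in the text (exact PubMed heading spellings assumed).
MESH_TERMS = [
    "Organizational Culture",
    "Organizational Objectives",
    "Leadership",
    "Organizational Policy",
    "Organizational Innovation",
    "Diffusion of Innovation",
    "Efficiency, Organizational",
]
# A subset of the title/text words, split into two concept groups (assumed).
ORGANIZATION_WORDS = [
    "organizational characteristics",
    "organizational factors",
    "organizational capacity",
]
OUTCOME_WORDS = ["adoption", "implementation", "sustainability", "decision-making", "readiness"]


def or_block(terms: list[str], tag: str) -> str:
    """Quote each term, attach a PubMed field tag, and join the terms with OR."""
    return "(" + " OR ".join(f'"{term}"[{tag}]' for term in terms) + ")"


def build_query(start: str = "1973/01/01", end: str = "2013/03/31") -> str:
    """AND the concept blocks together, then restrict by publication date and language."""
    organization = f"({or_block(MESH_TERMS, 'MeSH Terms')} OR {or_block(ORGANIZATION_WORDS, 'tiab')})"
    outcomes = or_block(OUTCOME_WORDS, "tiab")
    dates = f'("{start}"[dp] : "{end}"[dp])'
    return f"{organization} AND {outcomes} AND {dates} AND English[lang]"


print(build_query())
```

Synonyms within a concept are joined with OR and distinct concepts with AND; this is the usual structure of database searches, which is why the per-database adaptation described above mostly changes field tags and thesaurus terms rather than the overall logic.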

Data abstraction and coding

We abstracted the following variables: year of publication, study location (country), study design, year of data collection (as reported within the study), sample characteristics, study setting (e.g., health care, worksite), type of innovation (e.g., evidence-based/best practice strategies), outcomes (i.e., adoption, implementation, implementation and adoption, organizational readiness), use of theory, name of theory (e.g., Diffusion of Innovations), constructs assessed, inclusion/exclusion of psychometric properties (i.e., reliability, validity), and characteristics of measures used to assess each construct. We did not assess study quality (i.e., potential bias). Our main goal was to describe the psychometric properties of the widest possible range of available measurement instruments, and a study’s overall quality does not necessarily indicate the quality of its organizational measures.

To ensure consistency of coding, a standardized codebook was created based on a prior measurement review [18]. As a next step, all research team members reviewed three of the included studies and abstracted relevant data. Team members then reviewed the classification of measures for each of the constructs in each of the papers and discussed any disagreements until consensus was reached. The codebook was thereafter refined. During the subsequent coding process, three research team members independently reviewed full texts against pre-determined inclusion and exclusion criteria and independently coded all included papers; one additional team member then verified all coding. Data were entered into an Access database; after cleaning, the data were exported to Excel files, and evidence tables were constructed and organized by CFIR inner organizational construct category. All excluded papers underwent full-text review by at least three authors to verify exclusion criteria.

Discrepancies in coding within triads were resolved through discussion with the research team. We opted for a group consensus method because of the large number of papers and the enormous variability in terminology used to describe organizational characteristics across studies. We elected to follow standard definitions of organizational constructs provided by the CFIR [12], as opposed to the terminology selected by the authors, due to this wide variability. We used the PRISMA guidelines [19] to report the process and results of this review.
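
The review resolved coding disagreements by group discussion rather than with an agreement statistic. As a hypothetical sketch of how a triad’s disagreements for one paper could be surfaced for that discussion, the snippet below compares each coder’s abstraction variable by variable; the coder labels, variable names, and codes are invented for illustration.

```python
def discrepancies(codings: dict[str, dict[str, str]]) -> dict[str, dict[str, str]]:
    """For each variable on which coders disagree, return every coder's value.

    `codings` maps a coder label to that coder's {variable: code} abstraction
    for a single paper. A variable one coder left blank counts as a disagreement.
    """
    variables = set().union(*(coding.keys() for coding in codings.values()))
    flagged = {}
    for var in sorted(variables):
        values = {coder: coding.get(var, "<missing>") for coder, coding in codings.items()}
        if len(set(values.values())) > 1:
            flagged[var] = values
    return flagged


def percent_agreement(codings: dict[str, dict[str, str]]) -> float:
    """Share of variables on which all coders assigned the same code."""
    variables = set().union(*(coding.keys() for coding in codings.values()))
    if not variables:
        raise ValueError("no coded variables")
    return 1 - len(discrepancies(codings)) / len(variables)


# Hypothetical abstraction of one paper by a three-person coding triad.
example_paper = {
    "coder_a": {"design": "cross-sectional", "outcome": "implementation", "construct": "readiness for implementation"},
    "coder_b": {"design": "cross-sectional", "outcome": "adoption", "construct": "readiness for implementation"},
    "coder_c": {"design": "cross-sectional", "outcome": "implementation", "construct": "readiness for implementation"},
}
print(discrepancies(example_paper))  # only "outcome" is flagged for discussion
print(round(percent_agreement(example_paper), 3))
```

Listing every coder’s value, rather than only a majority code, keeps the minority reading visible so the team can discuss it instead of outvoting it.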

Results

Citations included

The yield from each phase of the search process is depicted in Fig. 1. After duplicates were removed, a total of n = 651 unique citations were identified and reviewed for eligibility as described. A total of n = 332 (51%) articles were excluded during the initial review process. Of the n = 319 (49%) articles that underwent full-text review, n = 243 (76%) were ultimately excluded because they did not meet one or more of the inclusion criteria. Nearly half of exclusions (46%) were due to article type (e.g., not a peer-reviewed article); 22% of excluded studies lacked outcome data. This multi-stage review process yielded n = 76 articles for inclusion in the review (Table 1).
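
The counts in the screening flow above are internally consistent. The short script below recomputes them from the three reported totals (651 unique citations, 332 initial exclusions, 243 full-text exclusions), rounding percentages to whole numbers as in the text.

```python
unique_citations = 651
excluded_initial = 332
full_text = unique_citations - excluded_initial   # articles sent to full-text review
excluded_full_text = 243
included = full_text - excluded_full_text          # articles retained in the review


def pct(part: int, whole: int) -> int:
    """Percentage rounded to the nearest whole number."""
    return round(100 * part / whole)


print(excluded_initial, pct(excluded_initial, unique_citations))  # 332 51
print(full_text, pct(full_text, unique_citations))                # 319 49
print(excluded_full_text, pct(excluded_full_text, full_text))     # 243 76
print(included)                                                   # 76
```

Note that the 76% exclusion rate is relative to the 319 full-text articles, not to the 651 unique citations.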

Characteristics of included studies

Table 2 shows the characteristics of the studies included in the review. A majority (63%) took place in the U.S. Most (86%) were cross-sectional, and over half (59%) used mailed surveys for data collection. Nearly half (49%) of studies were conducted in health settings, which included physical and mental health service agencies. Studies in worksites (22%) and schools (16%) were also relatively common. About half (46%) of the “innovations” studied were evidence-based strategies or “best practices,” although a substantial number (26%) addressed technological innovations.

More of the literature focused on implementation (57%) than adoption (34%), with few (4%) addressing both. Nearly half (45%) of the studies specifically cited a theory or conceptual model guiding the investigation; of those that did, the largest percentage (34%) cited Diffusion of Innovations Theory.

Psychometric properties of measures

Details of each of the studies are included in Additional file 1. Overall, of the 76 included studies, approximately 46% (n = 35) included psychometric information pertaining to organizational measures. Because many studies assessed more than one inner organizational construct, the number of studies included in the table (n = 35) is smaller than the total number of construct measures identified (n = 83). Of the 35 studies that included some psychometric information about measures, 94% (33/35) described reliability and 71% (25/35) reported on validity.

Among those studies with reliability reported (n = 33), most reported internal consistency reliability using Cronbach’s alpha (85%, n = 28/33). One study did not report actual measures of reliability or validity directly in the manuscript but did provide a citation referencing reliability and validity. Among those studies with validity reported (n = 25), the most common forms of validity were content, discriminant, and convergent validity. Potential biases within and across studies were not assessed in this review but are addressed more broadly in the Discussion.
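
Since most studies reporting reliability used Cronbach’s alpha, a minimal sketch of the statistic may help readers interpret those values. It implements the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), on a hypothetical three-item scale; the scores are invented for illustration.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items scored by the same n respondents.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores)).
    Sample variances (n - 1 denominator) are used throughout; the choice cancels
    out as long as it is applied consistently.
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha requires at least two items")
    if len({len(item) for item in items}) != 1:
        raise ValueError("every item must have a score for every respondent")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's scale score
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical 3-item scale answered by 5 respondents (1-5 Likert scores).
scale = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
print(round(cronbach_alpha(scale), 3))  # 0.886
```

Alpha rises with the number of items and with inter-item correlation, so a high value on a long scale is not by itself evidence that the scale measures a single construct; this is one reason validity evidence is reported separately.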

There were a total of 83 measures of CFIR constructs identified. Across all of the studies included in this review, only one measure was used in more than one study [20]. Across studies, definitions of constructs varied widely. For example, “structural characteristics” included, but were not limited to, organizational structure, formalization, and centralization. The two most frequently reported organizational constructs across the studies were “readiness for implementation” (60%, n = 21/35) and “organizational climate” (54%, n = 19/35). The number of items used to assess each construct ranged from one to 32, with the majority of measures consisting of three to six items.
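
The percentages in this section use different denominators: the 76 included studies, the 35 studies reporting psychometric information, or the 33 studies reporting reliability. Recomputing them side by side makes the bases explicit. Construct percentages can sum to more than 100% because a single study could assess more than one construct.

```python
def reporting_rates() -> dict[str, int]:
    """Return each reported proportion as a whole-number percentage."""
    counts = {
        "psychometrics reported (of 76 studies)": (35, 76),
        "reliability (of 35)": (33, 35),
        "validity (of 35)": (25, 35),
        "Cronbach's alpha (of 33 reporting reliability)": (28, 33),
        # The two construct rows overlap: one study may assess both constructs.
        "readiness for implementation (of 35)": (21, 35),
        "organizational climate (of 35)": (19, 35),
    }
    return {label: round(100 * k / n) for label, (k, n) in counts.items()}


for label, percent in reporting_rates().items():
    print(f"{label}: {percent}%")
```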

Discussion

While a few reports on measurement in the field of D&I research have been published [3, 21, 22], we believe this systematic review adds to the literature in that it was guided by an explicit and widely-used conceptual model (i.e., CFIR), included studies of both adoption and implementation, integrated literature from multiple disciplines, and considered a variety of settings and innovation types. This review confirms the lack of standardized or validated instruments to assess organizational characteristics.

Inconsistent definitions of constructs and lack of information on reliability and validity for organizational measures

Our findings are similar to those of others who have examined the issue of measurement in D&I studies [3, 8] and found inconsistent definitions of constructs and a lack of information on reliability and validity for organizational constructs. For example, Wisdom and colleagues [23] studied factors influencing adoption of innovations across multiple theoretical frameworks and in multiple settings (e.g., health care, government bodies), noting that implementation cannot occur without adoption [23]. While organizational-level measures were among the most likely to be assessed in the adoption process, the authors found no consistency in measures across studies in their review. Martinez and colleagues [24] reviewed instrumentation challenges facing the field of implementation science and recommended that investigators utilize the Society for Implementation Research Collaboration (SIRC) Instrument Review Project and the Grid-Enabled Measures (GEM) databases to identify existing instruments [21]. We agree with this recommendation but note that there remains a need for additional research and development on organizational level measures. Kruse and colleagues [25] conducted a systematic review of factors influencing adoption of the electronic health record in medical practice and produced a conceptual model of internal and external organizational factors that they believe should guide future empirical tests [12]. However, their review combined a wide array of internal and external factors and did not clearly delineate organizational factors from individual or interpersonal factors that might influence the adoption decision. More recently, Clinton-McHarg and colleagues [22] identified gaps in reporting of psychometric properties of organizational measures and concluded that such omissions limit the utility of implementation-focused theoretical frameworks.
A systematic review by Kirk and colleagues [21] found increasing use of the CFIR in D&I studies but advocated explicit justification for the inclusion or exclusion of particular constructs within the framework. Each of the above-mentioned reviews provides important contributions to the field, and together they demonstrate a broad consensus regarding the need for improved scrutiny and development of organizational level measures.

Relative to other fields of study, D&I research in public health and health care is a relatively new priority [9]. As such, it is not surprising that there is a lack of standardized or validated measures to assess salient organizational characteristics. However, increased attention to the development of measures of organizational characteristics is required to improve our understanding of important and potentially modifiable influences on adoption and/or implementation of health program or service-related innovations. Given the few standardized or validated measures identified in this review, we emphasize the need for the development of measures using common psychometric standards [24, 26]. To advance the field, it is crucial that all measures of latent constructs undergo rigorous evaluation of reliability and validity across diverse settings, among diverse population samples, and for a variety of types of innovations.

NIH-funded grid-enabled measures (GEM) database

A positive step toward improved measures is the NIH-funded Grid-Enabled Measures (GEM) database, a web-based tool to collect and create a national database of measures that can enhance the current state of the science on organizational measures. GEM contains behavioral, social science, and other relevant scientific measures organized by theoretical constructs. The website also contains “workspaces” related to specific scientific disciplines to promote collaboration among researchers while building consensus regarding the use of quality measures. The GEM-D&I workspace currently contains over 130 measures; however, organizational level constructs have received relatively little attention to date [19]. The Instrument Review Project (IRP) led by the Society for Implementation Research Collaboration (SIRC) [26] is an exciting new effort that seeks to address this gap in organizational level measures in mental health, health care and school settings. In addition to providing reviews of existing D&I methods, the SIRC IRP provides a synthesis of implementation science instruments and measures, citations to the sources of the measures, and the actual measures [20]. Having a central repository of instruments will allow D&I researchers to obtain and use measures more thoughtfully, enable cross-comparison of study findings, and facilitate the sharing of harmonized data.

Theoretical models or frameworks

Another way to enhance the likelihood of successful adoption and implementation is to address an important limitation we found in our review: a lack of studies that use conceptual models or frameworks to guide the research. This problem has been cited as a barrier to theory-based inquiry [9], but the good news is that new frameworks have been gaining traction in recent years [12, 15, 27, 28]. These frameworks can provide the basis for greater conceptual clarity in construct definitions, guide the designation of variables as mediators or moderators, and assist with the operationalization of measures.

This review found that Rogers’s Diffusion of Innovations [16] was the most commonly cited theory. Diffusion of Innovations [16] has been applied in clinical health care settings [29,30,31] and in other industries (e.g., steel firms [32], home building [33]). Thus, it is likely that organizational measures related to Diffusion of Innovations applied in one setting could be developed and/or adapted for use across different industry sectors, types of innovations, populations, and cultural groups [34]. More theory-guided research that incorporates organizational-level measures will enhance both research and practice.

Recommendations for future research

Based on these results, our team summarized several important recommendations for future research. First, we advocate the use of standardized reporting guidelines, such as CONSORT for clinical trials [35], TREND for non-randomized designs [36], and STROBE for observational studies [37], perhaps adapted to include specific information relevant to D&I studies. Such detail could help to ensure the availability of information required to evaluate the strengths and limitations of research. The inclusion of additional detail about the development or adaptation of existing measures, as well as strategies to assess validity and reliability, would facilitate methodological and programmatic advancements. Very recently, Neta et al. [38] proposed a framework for enhancing the value of D&I research that emphasized the importance of better reporting at several steps along the research process: planning, delivery, evaluation/results reporting, and long-term outcomes. To optimize the value of D&I research, they advocate distinct reporting guidelines at each of these steps, along with relevant measures. Yet, they acknowledge that “the major barrier to reporting of these measures is the lack of practical, validated, well-accepted instruments (and analytic approaches)” [38].

Our team also recommends more mixed methods research, in which both qualitative and quantitative methodologies are brought to bear on D&I questions. Our understanding of implementation is rapidly evolving; we are not yet sure which theoretical models are most appropriate and/or applicable to particular situations, nor are we clear about the best definitions of all constructs included in theoretical models or conceptual frameworks. Qualitative research methods provide an excellent way to conduct exploratory work that will yield important insights for designing new measures and identifying salient factors that might not currently be reflected in existing conceptual models. Indeed, qualitative investigations of organizational characteristics associated with adoption or implementation [39,40,41,42,43,44,45,46,47,48,49,50,51,52] have greatly enhanced our understanding of relevant factors and helped to refine existing theories. By combining the strengths of qualitative and quantitative methods, researchers can also strengthen measure design, development, and testing.

Results of this review revealed that organizational characteristics can be conceptualized and measured at multiple levels. We recommend that researchers specify and ensure congruence among the level of theory, the level of measurement, and the level of analysis [53,54,55]. Culture, climate, leadership, power, participation, and communication are constructs of potential importance to adoption and implementation that can be conceptualized at the organizational level of theory, even though the source of data for the construct resides at the level of an individual [13]. When individuals are asked to report their perceptions of organizational characteristics, it will be important to employ methods to reduce the potential for social desirability bias. This can be accomplished by using neutral question wording, asking respondents to focus on organizational characteristics rather than their individual opinions or attitudes, and/or collecting data from multiple individuals within a single organization. In working with these organizational constructs, we also recommend that researchers specify the composition model that links the lower-level data to the higher-level construct [53,54,55,56,57]. Several composition models exist (e.g., direct consensus, referent shift, dispersion, and frog-pond), and researchers can use several statistical measures to verify that the functional relationship specified in the composition model holds for the data in question [54]. Specifying the composition model and assessing its fit to the data increase confidence in the reliability and validity of higher-level constructs measured with lower-level data.
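
Under a direct-consensus or referent-shift composition model, individual reports are typically aggregated to the organization only after checking that members agree and that organizations differ. As a minimal sketch, assuming equal-sized groups and a single 5-point item, the code below computes two commonly used indices: the single-item r_wg within-group agreement index (observed variance compared with that of a uniform “no agreement” distribution) and ICC(1) from a one-way ANOVA. The review does not prescribe these particular statistics, and the clinic ratings are hypothetical.

```python
from statistics import mean, variance

# Hypothetical data: four staff members per clinic rate "organizational climate"
# on a 5-point scale. Clinic names and ratings are invented for illustration.
CLINICS = {
    "clinic_a": [4, 4, 5, 4],
    "clinic_b": [2, 3, 2, 2],
    "clinic_c": [3, 3, 4, 3],
}


def rwg(ratings: list[float], n_options: int) -> float:
    """Single-item within-group agreement against a uniform (no-agreement) null.

    The variance of a uniform distribution over A response options is
    (A**2 - 1) / 12. Negative values are possible and are often set to 0.
    """
    expected_var = (n_options ** 2 - 1) / 12
    return 1 - variance(ratings) / expected_var


def icc1(groups: list[list[float]]) -> float:
    """ICC(1) from a one-way ANOVA; this sketch assumes equal group sizes."""
    k = len(groups[0])
    if len(groups) < 2 or k < 2 or any(len(g) != k for g in groups):
        raise ValueError("need at least two groups of equal size >= 2")
    grand = mean(x for g in groups for x in g)
    ss_between = k * sum((mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    ms_between = ss_between / (len(groups) - 1)
    ms_within = ss_within / (len(groups) * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


for name, ratings in CLINICS.items():
    print(name, round(rwg(ratings, n_options=5), 3))
print("ICC(1):", round(icc1(list(CLINICS.values())), 3))
```

High r_wg supports the claim that a clinic mean represents a shared perception; a non-trivial ICC(1) indicates that clinics genuinely differ on the construct. A dispersion model would instead treat the within-group spread itself as the organizational variable.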

Limitations

Some may view our use of the CFIR as an organizing framework for this systematic review as a limitation of the research. While the CFIR is a widely accepted meta-theoretical framework, it is only one of a growing number of theories, models, and frameworks. Theoretical models and conceptual frameworks to guide D&I inquiries are receiving increased attention [58]. On the other hand, we are unaware of any other theory-guided reviews and believe that this is a major strength of our study. In a recent review, Tabak and colleagues identified more than 61 theoretical models and conceptual frameworks used in implementation science [28] and the number is still growing, as noted in a recent webinar from the Agency for Healthcare Research and Quality (AHRQ) [59]. Nevertheless, even when a single construct that cuts across multiple theories and models is studied (e.g., organizational readiness), there is no consensus regarding conceptual or operational definitions [60].

Another limitation is that relatively little is known about how characteristics of the CFIR “inner organizational setting” relate to outcomes of adoption and implementation [21]. All studies included in this review had an explicit outcome related to adoption and/or implementation, but the extent of adoption or degree of implementation was not consistently identified. In addition, the vast majority of studies were cross-sectional rather than longitudinal or experimental. Thus, we could make only limited comparisons across many factors (level, completeness, frequency, intensity and duration of use) [9]. There is a clear need for more studies that examine other domains of the CFIR and test the relationship between key organizational measures and selected adoption or implementation outcomes. Finally, publication bias may have affected our results, given that one of our inclusion criteria was publication in a peer-reviewed journal.

Conclusions

The lack of clarity in construct definitions, inconsistent use of theory, absence of standardized reporting criteria for D&I research, and the fact that few measures have demonstrated reliability or validity were among the limitations highlighted in our review. Given these findings, we recommend that increased attention be devoted toward the development or refinement of measures using common psychometric standards. In addition, there is a need for measure development and testing across diverse settings, among diverse population samples, and for a variety of types of innovations.

References

Bowen DJ, Sorensen G, Weiner BJ, Campbell M, Emmons K, Melvin C. Dissemination research in cancer control: where are we and where should we go? Cancer Causes Control. 2009;20:473–85.

Emmons KM, Viswanath K, Colditz GA. The role of transdisciplinary collaboration in translating and disseminating health research: lessons learned and exemplars of success. Am J Prev Med. 2008;35:S204–10.

Chaudoir SR, Dugan AG, Barr CH. Measuring factors affecting implementation of health innovations: a systematic review of structural, organizational, provider, patient, and innovation level measures. Implement Sci. 2013;8:22.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Durlak JA, DuPre EP. Implementation matters: a review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008;41:327–50.

Gagnon MP, Desmartis M, Labrecque M, Car J, Pagliari C, Pluye P, Fremont P, Gagnon J, Tremblay N, Legare F. Systematic review of factors influencing the adoption of information and communication technologies by healthcare professionals. J Med Syst. 2012;36:241–77.

Graham CR, Woodfield W, Harrison JB. A framework for institutional adoption and implementation of blended learning in higher education. Internet High Educ. 2013;18:4–14.

Rabin BA, Glasgow RE, Kerner JF, Klump MP, Brownson RC. Dissemination and implementation research on community-based cancer prevention: a systematic review. Am J Prev Med. 2010;38:443–56.

Fleuren M, Wiefferink K, Paulussen T. Determinants of innovation within health care organizations: literature review and Delphi study. Int J Qual Health Care. 2004;16:107–23.

Cresswell K, Sheikh A. Organizational issues in the implementation and adoption of health information technology innovations: an interpretative review. Int J Med Inform. 2013;82:e73–86.

Addington D, Kyle T, Desai S, Wang J. Facilitators and barriers to implementing quality measurement in primary mental health care: systematic review. Can Fam Physician. 2010;56:1322–31.

Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Quarterly. 2004;82:581–629.

Larsen KR, Michie S, Hekler EB, Gibson B, Spruijt-Metz D, Ahern D, Cole-Lewis H, Ellis RJ, Hesse B, Moser RP, Yi J. Behavior change interventions: the potential of ontologies for advancing science and practice. J Behav Med. 2017;40(1):6–22. doi:10.1007/s10865-016-9768-0.

Bollen KA. Latent variables in psychology and the social sciences. Annu Rev Psychol. 2002;53:605–34.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Rogers EM. Diffusion of innovations. New York: Free Press; 2003.

Damschroder LJ, Lowery JC. Evaluation of a large-scale weight management program using the consolidated framework for implementation research (CFIR). Implement Sci. 2013;8:1–17.

Allen JD, Coronado GD, Williams RS, Glenn B, Escoffery C, Fernandez M, Tuff RA, Wilson KM, Mullen PD. A systematic review of measures used in studies of human Papillomavirus (HPV) vaccine acceptability. Vaccine. 2010;28:4027–37.

Moher D, Liberati A, Tetzlaff J, Altman DG, The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Int J Surg. 2010;8:336–41.

Steckler A, Goodman RM, McLeroy KR, Davis S, Koch G. Measuring the diffusion of innovative health promotion programs. Am J Health Promot. 1992;6:214–24.

Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the consolidated framework for implementation research. Implement Sci. 2016;11:72.

Clinton-McHarg T, Yoong SL, Tzelepis F, Regan T, Fielding A, Skelton E, Kingsland M, Ooi JY, Wolfenden L. Psychometric properties of implementation measures for public health and community settings and mapping of constructs against the consolidated framework for implementation research: a systematic review. Implement Sci. 2016;11:148.

Wisdom JP, Chor KH, Hoagwood KE, Horwitz SM. Innovation adoption: a review of theories and constructs. Adm Policy Ment Health. 2014;41(4):480–502. doi:10.1007/s10488-013-0486-4.

Martinez RG, Lewis CC, Weiner BJ. Instrumentation issues in implementation science. Implement Sci. 2014;9:118.

Kruse CS, Mileski M, Alaytsev V, Carol E, Williams A. Adoption factors associated with electronic health record among long-term care facilities: a systematic review. BMJ Open. 2015;5(1):e006615. doi:10.1136/bmjopen-2014-006615.

Lewis CC, Stanick CF, Martinez RG, Weiner BJ, Kim M, Barwick M, Comtois KA. The society for implementation research collaboration instrument review project: a methodology to promote rigorous evaluation. Implement Sci. 2015;10:1–18.

Wandersman A, Duffy J, Flaspohler P, Noonan R, Lubell K, Stillman L, Blachman M, Dunville R, Saul J. Bridging the gap between prevention research and practice: the interactive systems framework for dissemination and implementation. Am J Community Psychol. 2008;41:171–81.

Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43:337–50.

Gosling AS, Westbrook JI, Braithwaite J. Clinical team functioning and IT innovation: a study of the diffusion of a point-of-care online evidence system. J Am Med Inform Assoc. 2003;10:244–51.

Dopson S, FitzGerald L, Ferlie E, Gabbay J, Locock L. No magic targets! Changing clinical practice to become more evidence based. Health Care Manag Rev. 2010;35:2–12.

Nystrom PC, Ramamurthy K, Wilson AL. Organizational context, climate and innovativeness: adoption of imaging technology. J Eng Technol Manag. 2002;19:221–47.

Oster S. The diffusion of innovation among steel firms: the basic oxygen furnace. Bell J Econ. 1982;13:45–56.

Blackley DM, Shepard EM III. The diffusion of innovation in home building. J Hous Econ. 1996;5:303–22.

Bou Malham P, Saucier G. The conceptual link between social desirability and cultural normativity. Int J Psychol. 2016;51(6):474–80. doi:10.1002/ijop.12261.

Moher D, Hopewell S, Schulz KF, Montori V, Gotzsche PC, Devereaux PJ, Elbourne D, Egger M, Altman DG. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. Int J Surg. 2012;10:28–55.

Des Jarlais DC, Lyles C, Crepaz N. Improving the reporting quality of nonrandomized evaluations of behavioral and public health interventions: the TREND statement. Am J Public Health. 2004;94:361–6.

von Elm E, Altman DG, Egger M, Pocock SJ, Gotzsche PC, Vandenbroucke JP. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: guidelines for reporting observational studies. Int J Surg. 2014;12:1495–9.

Neta G, Glasgow RE, Carpenter CR, Grimshaw JM, Rabin BA, Fernandez ME, Brownson RC. A framework for enhancing the value of research for dissemination and implementation. Am J Public Health. 2015;105:49–57.

Rosemond CA, Hanson LC, Ennett ST, Schenck AP, Weiner BJ. Implementing person-centered care in nursing homes. Health Care Manag Rev. 2012;37:257–66.

Jacobs SR. Multilevel predictors of cancer clinical trial enrollment among CCOP physicians. PhD thesis. Chapel Hill: Management; 2014.

Helfrich CD, Savitz LA, Swiger KD, Weiner BJ. Adoption and implementation of mandated diabetes registries by community health centers. Am J Prev Med. 2007;33(1 Suppl):S50–8; S59–65.

McAlearney AS, Reiter KL, Weiner BJ, Minasian L, Song PH. Challenges and facilitators of community clinical oncology program participation: a qualitative study. J Healthc Manag. 2013;58:29–46.

Minasian LM, Carpenter WR, Weiner BJ, Anderson DE, McCaskill-Stevens W, Nelson S, Whitman C, Kelaghan J, O'Mara AM, Kaluzny AD. Translating research into evidence-based practice: the National Cancer Institute Community Clinical Oncology Program. Cancer. 2010;116:4440–9. doi:10.1002/cncr.25248.

Nickel NC, Taylor EC, Labbok MH, Weiner BJ, Williamson NE. Applying organisation theory to understand barriers and facilitators to the implementation of baby-friendly: a multisite qualitative study. Midwifery. 2013;29:956–64.

Teal R, Bergmire DM, Johnston M, Weiner BJ. Implementing community-based provider participation in research: an empirical study. Implement Sci. 2012;7:41. doi:10.1186/1748-5908-7-41.

Weiner BJ, Haynes-Maslow L, Kahwati LC, Kinsinger LS, Campbell MK. Implementing the MOVE! weight management program in the Veterans Health Administration, 2007–2010: a qualitative study. Prev Chronic Dis. 2012;9:E16.

Weiner BJ, Helfrich CD, Savitz LA, Swiger KD. Adoption and implementation of strategies for diabetes management in primary care practices. Am J Prev Med. 2007;33(1 Suppl):S35–44; S45–9.

Nelson CC, Allen JD, McLellan D, Pronk N, Davis KL. Integrating health promotion and occupational safety and health in manufacturing worksites: perspectives of leaders in small-to-medium sized businesses. Work (Reading, Mass). 2015;52:169–76.

Negron R, Leyva B, Allen J, Ospino H, Tom L, Rustan S. Leadership networks in Catholic parishes: implications for implementation research in health. Soc Sci Med. 2014;122:53–62.

Cucciniello M, Lapsley I, Nasi G, Pagliari C. Understanding key factors affecting electronic medical record implementation: a sociotechnical approach. BMC Health Serv Res. 2015;15:268.

Brewster AL, Curry LA, Cherlin EJ, Talbert-Slagle K, Horwitz LI, Bradley EH. Integrating new practices: a qualitative study of how hospital innovations become routine. Implement Sci. 2015;10:168.

Breslau ES, Weiss ES, Williams A, Burness A, Kepka D. The implementation road: engaging community partnerships in evidence-based cancer control interventions. Health Promot Pract. 2015;16:46–54.

Klein KJ, Dansereau F, Hall RJ. Levels issues in theory development, data collection, and analysis. Acad Manag Rev. 1994;19:195–229.

Klein KJ, Kozlowski SWJ. From micro to meso: critical steps in conceptualizing and conducting multilevel research. Organ Res Methods. 2000;3:211–36.

Rousseau D. Issues of level in organizational research: multilevel and cross-level perspectives. In: Cummings LL, Staw BM, editors. Research in organizational behavior. Volume 7. Greenwich: JAI Press; 1985. p. 1–37.

Chan D. Functional relations among constructs in the same content domain at different levels of analysis: a typology of composition models. J Appl Psychol. 1998;83:234–46.

James LR. Aggregation bias in estimates of perceptual agreement. J Appl Psychol. 1982;67:219–29.

Levin SK, Nilsen P, Bendtsen P, Bulow P. Structured risk assessment instruments: a systematic review of implementation determinants. Psychiatry Psychol Law. 2016;23(4):602–28. doi:10.1080/13218719.2015.1084661.

AHRQ-Sponsored Continuing Education Activities. [http://www.ahrq.gov/professionals/education/continuing-ed/index.html]. Accessed 15 May 2016.

Gagnon MP, Attieh R, Ghandour el K, Legare F, Ouimet M, Estabrooks CA, Grimshaw J. A systematic review of instruments to assess organizational readiness for knowledge translation in health care. PLoS One. 2014;9:e114338.

Acknowledgements

We wish to thank the following individuals for their thoughtful input on this process: Russ Glasgow, Meridith Eastman, Matthew Chenoweth, Kia Davis, Candace Nelson, Marcia G. Ory, Sarfaraz Shaikh, Niko Pronk.

Funding

Research for this publication was supported by the Centers for Disease Control and Prevention (CDC) and the National Cancer Institute (NCI) cooperative agreements for the Cancer Prevention and Control Research Networks (CPCRN) at the Harvard School of Public Health/Boston School of Public Health (U48DP001946); the National Institute for Occupational Safety and Health for the Harvard School of Public Health Center for Work, Health and Well-Being (U19 OH008861); the Central Texas Cancer Prevention and Control Research Network (CTxCPCRN) at the Texas A&M School of Public Health Center for Community Health Development (5U48DP001924); and the University of California at Los Angeles (U48 DP001934). Lisa DiMartino's work on this project was supported by the National Cancer Institute (R25 CA057726).

Availability of data and materials

All data generated and analyzed during this study are included in this published article. The data were culled from existing publications and are cited within the manuscript.

Author information

Contributions

All authors read and approved the final manuscript. JA conceived of the study, developed data collection and abstraction protocols, abstracted data from included papers, drafted the manuscript, and read and approved the final manuscript. SDT abstracted and coded data from included papers, participated in the literature review and drafting of the manuscript, and approved the final version. AEM abstracted and coded data from included papers, participated in the literature review and drafting of the manuscript, and revised, read and approved the final manuscript. LD abstracted and coded data from included papers, participated in the literature review and drafting of the manuscript, and revised, read and approved the final manuscript. DB contributed to the design and conceptualization of the study, and reviewed, revised, read and approved the final manuscript. BL participated in the literature review and drafting of the manuscript, and revised, read and approved the final manuscript. LL contributed to the design and conceptualization of the study, and reviewed, revised, read and approved the final manuscript. BW contributed to the design and conceptualization of the study, abstracted and coded data from included papers, and reviewed, revised, read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable; all data were drawn from published manuscripts.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

Characteristics of studies (n=35) and CFIR constructs (n=83) for which psychometric information was reported. (DOCX 104 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Allen, J.D., Towne, S.D., Maxwell, A.E. et al. Measures of organizational characteristics associated with adoption and/or implementation of innovations: A systematic review. BMC Health Serv Res 17, 591 (2017). https://doi.org/10.1186/s12913-017-2459-x

DOI: https://doi.org/10.1186/s12913-017-2459-x