Abstract

Background

Clinical competence is essential for providing effective patient care. Clinical Governance (CG) is a framework for learning and assessing clinical competence. A portfolio is a work-placed-based tool for monitoring and reflecting on clinical practice. This study aimed to investigate the effect of using an e-portfolio on the practitioner nurses’ competence improvement through the CG framework.

Methods

This was a quasi-experimental study with 30 nurses in each intervention and control group. After taking the pretests of knowledge and performance, the participants attended the in-person classes and received the educational materials around CG standards for four weeks. In addition, nurses in the intervention group received the links to their e-portfolios individually and filled them out. They reflected on their clinical practice and received feedback. Finally, nurses in both groups were taken the post-tests.

Results

Comparing the pre-and post-test scores in each group indicated a significant increase in knowledge and performance scores. The post-test scores for knowledge and performance were significantly higher in the intervention group than in the control one, except for the initial patient assessment.

Conclusion

This study showed that the e-portfolio is an effective tool for the improvement of the nurses’ awareness and performance in CG standards. Since the CG standards are closely related to clinical competencies, it is concluded that using portfolios effectively improves clinical competence in practitioner nurses.

Similar content being viewed by others

Introduction

Clinical competence is essential for nurses to provide safe and effective patient care [1]. It refers to applying knowledge, attitude, skills, critical thinking, and decision-making in the clinical setting independently to provide optimal patient care. Clinical competence, which is the outcome of nursing education [2] has an association with other outcomes such as safety and satisfaction of patients, quality of care [3], low rate of job burnout [4], high-quality working life [5], self-efficacy, professional confidence, and effective use of clinical skills for nurses [6], which are crucial in the health care system.

Although different studies indicate that the clinical ability of nurses has different aspects such as professional responsibility, care management, interpersonal relationships and interprofessional care, and quality improvement [7], its goal, as mentioned earlier, is providing safe and effective patient care. Clinical competence develops during nursing education [8] and continues in the workplace [9]. Therefore, in addition to improving nursing students’ clinical competence [10, 11], we should also look for a framework to monitor and improve this competence in nurses working in clinical environments.

An accreditation framework that is used to evaluate and improve clinical performance is clinical governance (CG), which refers to the processes, systems, and structures that healthcare organizations use to ensure high-quality patient care [12]. It includes a quality assurance process that often leads to quality improvement activities [13]. CG standards cover items like prevention and health, care and treatment, management of nursing services, compliance with the rights of the service recipient, and drug and equipment management; which are closely related to the nursing staff duties. Hence, training nurses about CG standards is of great importance. CG provides a structure for clinical staff competence to be continually assessed and improved. It can be achieved through audit, feedback, and clinical supervision. The CG framework ensures continuous monitoring and evaluation of the quality of care provided by nurses and supports their ongoing professional development, which leads to delivering high-quality care to patients [14]. The guidelines for CG implementation in hospitals are scrutinized and set as standards. These standards cover not only the requirements of achieving clinical governance but also address local and context-based needs [15]. Clinical staff, including nurses, need to be familiar with these standards and become competent to apply them in practice [14]. So, there is a need to use appropriate learning and assessment tools in this regard.

A portfolio is one of the work-placed-based tools that can help nurses reflect, develop, and monitor their clinical competencies [16, 17]. As a structured, evidence-based tool, a portfolio shows the fulfillment of a profession’s standards for everyone practicing that career. It includes their vision of future growth and development [18] and provides the chance for capacity building in nursing continuous education [19] through reflecting on the practice and comparing the skills with the standards set by regulatory bodies. It offers the opportunity for self-assessment, identification of learning needs, and development of learning plans [20]. Thus, the portfolio can be used for learning and evaluation purposes in nursing [21].

A portfolio can be delivered either paper-based or electronically. Electronic portfolios (e-portfolios) have several advantages over paper-based ones, including more accessibility by many learners, easier shareability and storage, more portability and transferability, enhanced dynamicity, the possibility of uploading multimedia, and the chance of more learner expression [17, 22]. Despite these advantages, an e-portfolio is a relatively new tool for nursing education, and there is a need for further evaluation of its usage in clinical practice [16]. Furthermore, learners’ computer literacy level and access to the appropriate devices and the Internet are challenges for implementing e-portfolios [17, 19, 23]. Therefore, it is important to implement a user-friendly and accessible tool for designing the e-portfolio. Google Docs tool can be a suitable platform for this purpose, because of its availability and compatibility to different devices.

Finally, a scoping review, published in 2022, gathered evidence on the role of e-portfolios in scaffolding learning in healthcare disciplines including nursing. The authors recommend that there is a need to conduct further interventional and longitudinal studies, especially in continuous professional development after graduation. Assessing measurable learning outcomes with a focus on specific competencies helps to enrich the literature about the impact of e-portfolios on clinical performance. Also, optimizing e-portfolio development to guarantee its accessibility and user-friendliness is suggested [24]. These recommendations are aligned with providing evidence to use an e-portfolio to overcome some barriers to enhance the clinical competencies of nursing staff, which is the aim of CG implementation, like training being relevant to real work needs [11, 14], delivering high-quality training programs, learning through real clinical practice [14], covering the gap between the knowledge and clinical practice, and lack of feedback to clinical performance [11].

Therefore, considering (a) the importance of fostering nurses’ clinical competence, (b) the alignment of implementing the CG standards with this purpose, (c) the role of nurse training in achieving these standards, (d) the need to apply appropriate user-friendly and accessible learning and assessment tools, and (e) the need to assess the role of e-portfolio as a learning tool in acquiring clinical competencies; this study aimed to investigate the effect of using an e-portfolio on the nurses’ knowledge and clinical competence. We believe that the results of this study would add new perspectives to the literature to implement such easy-to-use educational strategies in clinical training.

Methods

This was a quasi-experimental study with a pretest-posttest non-equivalent control group design performed in Imam Sajjad Shahriar General Hospital of Tehran University of Medical Sciences from September to November 2021. The nurses of this hospital needed to participate in Continuous Professional Development (CPD) programs for their annual promotion. The hospital’s Clinical Governance Committee (CGC) decided to include training courses about CG in their CPD agenda; so that the nurses would have enough incentive to participate in them. We selected two Internal Medicine wards with 34 and 37 nurses and randomly assigned them to the intervention and control groups. These two wards had a duration of patients’ hospitalization of over 12 h.

The inclusion criterion for nurses was having at least two years of work experience in the Internal Medicine ward. Finally, 30 nurses from each ward could participate in the study.

The Ethics Committee of Tehran University of Medical Sciences approved the study (reference code: IR.TUMS. VCR.REC.1399.379).

Creating the contents

We used the Iranian GC standards as the framework for learning and reflecting. The CGC devised the educational objectives required for nurses to learn about CG based on the related national standards. These standards were classified into “initial patient assessment,” “surgical and anesthesia care,” “prevention and control of infection,” “drug management,” “laboratory and blood transfusion services,” and “service recipient support.” To cover the standards and their related learning objectives, we developed a 71-page booklet, six podcasts (a total of 112 min), and a 20-minute multimedia e-content to be delivered to both control and intervention groups. The CGC confirmed the contents’ coverage of the intended objectives.

Study instruments

We used two instruments to assess the participants’ knowledge and practice before and after the intervention in both study groups. To investigate the knowledge, we used an electronic quiz consisting of 40 multiple-choice questions with a maximum score of 40 which was devised based on the learning objectives. Three experts in the field and two medical educationalists validated the questions ensuring their appropriate coverage of the learning objectives and correctness. Additionally, the test’s internal consistency of 0.79 was calculated using the Kuder-Richardson Formula 20. Appendix 1 includes some sample questions of the knowledge test.

Furthermore, we assessed participants’ performance using six checklists with a total of 100 items that were related to CG standards’ categories. Table 1 shows the checklists alongside their number of items and sample covered duties and tasks. The performance in each item was scored as “appropriate,” “inappropriate,” and “not performed,” with a maximum score of 100. Five CGC members and two medical educationalists approved the validity of the checklists.

Conducting the intervention

The study duration was six weeks for each of the control and intervention groups, including one week for taking the pre-tests, four weeks for the instruction, and one week for getting the post-tests. Four weeks seemed to be a reasonable time for nurses to apply all CG standards to patient care. To avoid contamination, we first conducted the study in the control group. Moreover, there was a long distance between the two wards included in the study on the hospital campus, and their facilities like the pavilion and restaurant were separate. Meanwhile, for more certainty, the questions were loaded one by one on each web page and the test links were deactivated for each participant immediately after submitting the test. To minimize the pre-test effect, the sequence of the questions and items and the wording were different between the pre and post-tests, though the questions were the same. Also, a six-week interval between the pre and post-tests seems to be adequate for not recalling the questions.

The conducted steps in the control group were as follows: The participants signed the informed consent form and then took part in the knowledge test, which we delivered electronically through the hospital’s Learning Management System (LMS). In addition, two trained supervisor nurses referred to the ward together and filled out the performance checklists for each participant in the real work setting within a week. They assessed nurses’ performance either based on the records or by observing them while performing duties or tasks. They would discuss any inconsistencies in filling out the checklists until they reached a consensus.

After obtaining the pre-tests, the participants took part in three one-hour face-to-face classes taught by supervisor nurses and CGC members. These classes were delivered through an interactive lecture teaching strategy and were repeated three times so that the nurses could participate in them at their convenience. In addition, the nurses received the above-mentioned educational contents via the hospital’s LMS. This training was conducted for four weeks during which reminder messages were sent to the nurses every week to encourage them to review the taught materials and refer to the educational contents. Finally, the post-tests were obtained just the same as the pre-tests within the last week.

In the intervention group, the participants underwent the same steps as the control group. The only difference was receiving an e-portfolio besides the above-mentioned training, in which they could reflect on their performance and receive feedback. We devised the e-portfolio using the Google Docs tool. In this e-portfolio, indicators of each standard devised by the CGC were included. Table 2 shows a sample of the e-portfolio questions designed for the indicator of “patient authentication before surgery” related to the “surgical and anesthesia care” category of CG standards. The nurses were asked every week to first self-assess their performance based on a 5-point Likert scale (ranging from very good to very poor); second, reflect on their performance in the assessment week, and write the points of strengths and weaknesses (with a focus on identifying the missed tasks); third, reflect on their performance in comparison with the previous week(s); and fourth, mention their educational needs for further learning. At the end of each week, a trained supervisor nurse provided feedback to each nurse, trying to guide them to improve the quality of care provision. The nurses performed this reflection and feedback cycle for four weeks.

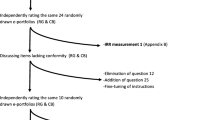

The approach to providing feedback was based on the “Stop”, “Keep”, and “Start” (SKS) model, which promotes the active and regular solicitation of feedback and is useful for encouraging in-depth reflection [25]. In this model, the participants received feedback with a focus on three questions; “what should they start doing?”, “what should they keep doing?”, and “what should they stop doing? Hence, they had the chance to contemplate their behaviors, skills, and choices [26]. The summary of the study steps is illustrated in Fig. 1.

Data analysis

IBM SPSS Statistics for Windows, Version 21.0., was used to analyze the data using independent and paired t-tests to compare the mean scores. Also, ANCOVA was used to eliminate the effect of confounding factors.

Results

All 60 nurses participated in the study from the beginning to the end. There were no significant differences between the two groups concerning gender and years of nursing experience (Table 3).

The shape of the curve and Shapiro Wilk test confirmed the normality of all the knowledge and performance scores, so we used parametric tests for data analysis. No significant differences existed in pretest mean scores for knowledge and performance (P-values of 0.71 and 0.14, respectively). Within-group comparison of pre-and post-test scores indicated the significant achievement in knowledge and performance in both groups (P-values = 0.00). Finally, comparing the post-test scores between the intervention and control groups showed a significant difference in knowledge scores (P-value = 0.00) and no significant difference in performance scores (P-value = 0.16) (Table 4).

To eliminate the effect of the pretest, we used ANCOVA, considering the pretest scores as the covariate, post-test scores as the dependent variable, and the groups (intervention and control) as a factor. The results showed a significant difference between the post-test scores for knowledge and performance (P-values = 0.00) (Table 5).

In addition, we compared the mean scores of the performance post-tests of different categories of CG standards between the two groups. There were significant improvements in post-test scores in the intervention group in all categories except for the initial patient assessment (Table 6).

Discussion

In this study, two groups of nurses participated in the lecture-based classes and received the educational content. Meanwhile, nurses in the intervention group had to fill out an e-portfolio that aimed at reflecting on their clinical competence. The results showed significant increases in both the knowledge and performance scores in the intervention group compared to the control one.

The findings of the present research are in alliance with other studies like the ones of Bangalan [27], Lai [28], and Tsai [29], who confirmed the positive effects of e-portfolios on the knowledge and practice of learners. However, according to Lai [28], although an e-portfolio made progress in the theory and practice of nursing students, some occasional student stress was reported because of technical challenges. That was the reason for choosing the easy-to-use Google Docs tool for designing the e-portfolio in this study. We estimated that only some participants would be able to use more complicated software because of their computer literacy or device issues. As a result of implementing such an easy tool, participants of this study had minimal technical problems while using the e-portfolio.

Furthermore, we found a study in which no specific positive effect was observed for using an e-portfolio in a non-nursing context. Safdari and Torabi [30] implemented an e-portfolio as a formative assessment tool to improve the English writing skills of their learners and found no significant effect. The reason for that is more emphasis on self-assessment in their study, which is a must-to-be part of any portfolio. In contrast, continuous self-assessment was considered a key component in the present study. The nurses not only had to reflect on their performance weekly but also had the opportunity to compare their reflections with the previous week(s). This continuous self-assessment helped the nurses learn through personal reflections on their practice, resulting in self-estimation of the level of knowledge, skill, and understanding, as it is focused on in the literature [31]. To do so, the design for the e-portfolio in this study followed the recommended structure by Cope & Murray [17], including reflection on recent experiences; clarification of positive and negative aspects; self-identification of strength points and areas in need of further improvement; and notification of raising potential learning opportunities.

In this study, the e-portfolio effectively improved the nurses’ performance in all areas of CG except for the initial patient assessment. The participants had to assess different patient problems. Being able to solve one problem successfully is not a good predictor of the capability for other conditions [32]. So, initial patient assessment is case-specific, and nurses may need more encounters to fulfill this standard.

Although health professionals must be trained for clinical competencies during college or in actual practice, there are some concerns. For instance, more financial resources may limit the delivery of high-quality training programs [14]. Our experience indicates that an e-portfolio, which does not need so many financial resources, may solve this challenge.

We experienced some of the advantages mentioned in the literature for e-portfolios, which helped the intervention group acquire higher knowledge and better performance. Like the Hoveyzian study [21], the present e-portfolio, as a learning tool, provided the chance to link theory and practice, and nurses became aware of their strengths and weaknesses through reflection and with the help of provided feedback. In addition, just as in the experience of Pennbrant [33], learning through the e-portfolio method could help nurses understand the expectations in their practice.

This study had some points of strength and limitations. Considering the positive results of this study and the advantages of the e-portfolio, this strategy can be adopted in other hospitals and clinical environments. The barrier of providing appropriate software for e-portfolio development can be overcome using the free Google Docs facility, as implemented in our experience. In addition, we performed a real workplace assessment by observing the nurses’ performance according to the validated checklists, which makes the results more applicable. Despite these advantages, we had no possibility for randomization of the participants between the control and intervention groups, which could result in the same limitations of quasi-experimental studies. In addition, although we used some strategies mentioned before to avoid the contamination bias, we could not eliminate the risk. Other potential limitations are the short duration of the instruction (four weeks) in each group and the possibility of pre-test bias in the case of nurses remembering the questions of the knowledge test and the items of the checklists.

We suggest longer interventions with more self-paced usage of e-portfolios. Also, further studies in different contexts and other populations are recommended. Conducting studies to understand users’ experiences of such tools explores their being truly user-friendly, accessible, and efficient for individuals. Also, examining the long-term impact of using e-portfolios on professional growth, skill development, and clinical performance may be useful.

Conclusion

Considering the importance of clinical competence in improving the quality of patient care, implementing appropriate training strategies to enhance nurses’ competencies is necessary. Based on the findings of this study, the Google Docs-based e-portfolio contributed to enhancing the overall clinical competence of the nurses in various aspects. So, we recommend using an e-portfolio as a learning tool for improving nurses’ clinical performance. Meanwhile, if it is not possible to provide professional e-portfolio software, accessible and user-friendly tools like Google Docs can be customized to serve as the infrastructure.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

References

Lysaght RM, Altschuld JW. Beyond initial certification: the assessment and maintenance of competency in professions. Eval Program Plann. 2000;23(1):95–104.

Raman S, Labrague LJ, Arulappan J, Natarajan J, Amirtharaj A, Jacob D. Traditional clinical training combined with high-fidelity simulation-based activities improves clinical competency and knowledge among nursing students on a maternity nursing course. Nurs Forum (Auckl). 2019;54(3):434–40.

Batbaatar E, Dorjdagva J, Luvsannyam A, Savino MM, Amenta P. Determinants of patient satisfaction: a systematic review. 2016;137(2):89–101.

Kim K, Han Y, Kwak Y, Kim JS. Professional quality of life and clinical competencies among Korean nurses. Asian Nurs Res (Korean Soc Nurs Sci). 2015;9(3):200–6.

Mokhtari S, Ahi G, Sharifzadeh G. Investigating the role of self-compassion and clinical competencies in the prediction of nurses’ professional quality of life. Iran J Nurs Res. 2018;12(6):1–9.

Adib Hajbaghery M, Eshraghi Arani N. Assessing nurses’ clinical competence from their own viewpoint and the viewpoint of head nurses: a descriptive study. Iran J Nurs. 2018;31(111):52–64.

Ajorpaz NM, Tafreshi MZ, Mohtashami J, Zayeri F, Rahemi Z. Psychometric testing of the Persian version of the perceived perioperative competence scale–revised. J Nurs Meas. 2017;25(3):E162–72.

Vernon R, Chiarella M, Papps E. Confidence in competence: legislation and nursing in New Zealand. Int Nurs Rev. 2011;58(1):103–8.

Thrysoe L, Hounsgaard L, Dohn NB, Wagner L. Newly qualified nurses — experiences of interaction with members of a community of practice. Nurse Educ Today. 2012;32(5):551–5.

Hsieh PL, Chen CM. Nursing competence in geriatric/long term care curriculum development for baccalaureate nursing programs: a systematic review. J Prof Nurs. 2018;34(5):400–11.

Alavi NM, NabizadehGharghozar Z, Ajorpaz NM. The barriers and facilitators of developing clinical competence among master’s graduates of gerontological nursing: a qualitative descriptive study. BMC Med Educ. 2022;22(1):1–10.

Lester HE, Eriksson T, Dijkstra R, Martinson K, Tomasik T, Sparrow N. Practice accreditation: the European perspective. Br J Gen Pract. 2012;62(598):e390–2.

Desveaux L, Mitchell JI, Shaw J, Ivers NM. Understanding the impact of accreditation on quality in healthcare: a grounded theory approach. Int J Qual Health Care [Internet]. 2017;29(7):941–7.

Dehnavieh R, Ebrahimipour H, Jafari Zadeh M, Dianat M, Noori Hekmat S, Mehrolhassani MH. Clinical governance: the challenges of implementation in Iran. Int J Hosp Res. 2013;2(1):1–10.

Macfarlane AJ. What is clinical governance? BJA Educ. 2019;19(6):174.

Green J, Wyllie A, Jackson D. Electronic portfolios in nursing education: a review of the literature. Nurse Educ Pract. 2014;14(1):4–8.

Cope V, Murray M. Use of professional portfolios in nursing. Nurs Stand. 2018;32(30):55–63.

Ferdosi M, Ziyari FB, Ollahi MN, Salmani AR, Niknam N. Implementing clinical governance in Isfahan hospitals: barriers and solutions, 2014. J Educ Health Promot. 2016;5(1):20.

Andrews T, Cole C. Two steps forward, one step back: the intricacies of engaging with e-portfolios in nursing undergraduate education. Nurse Educ Today. 2015;35(4):568–72.

Bryant T, Posey L. Evaluating transfer of continuing education to nursing practice. J Contin Educ Nurs. 2019;50(8):375–80.

Hoveyzian SA, Shariati A, Haghighi S, Latifi SM, Ayoubi M. The effect of portfolio-based education and evaluation on clinical competence of nursing students: a pretest–posttest quasiexperimental crossover study. Adv Med Educ Pract. 2021;12:175–82.

Nicolls BA, Cassar M, Scicluna C, Martinelli S. Charting the competency-based e-portfolio implementation journey. International Conference on Higher Education Advances. 2021;1409–17.

Dening KH, Holmes D, Pepper A. Implementation of e-portfolios for the professional development of Admiral nurses. Nurs Stand. 2018;32(22):46–52.

Janssens O, Haerens L, Valcke M, Beeckman D, Pype P, Embo M. The role of ePortfolios in supporting learning in eight healthcare disciplines: a scoping review. Nurse Educ Pract. 2022;103418.

Van De Ridder JMM, Stokking KM, McGaghie WC, Ten Cate OTJ. What is feedback in clinical education? Med Educ [Internet]. 2008;42(2):189–97.

Sefcik D, Petsche E. The cast model: enhancing medical student and resident clinical performance through feedback. J Am Osteopath Assoc. 2015;115(4):196–8.

Bangalan RC, Hipona JB. E-Portfolio: a potential e-learning tool to support student-centered learning, reflective learning and outcome-based assessment. Globus- An International Journal of Management and IT. 2020;12(1):32–7.

Lai CY, Wu CC. Promoting nursing students’ clinical learning through a mobile e-portfolio. CIN - Computers Informatics Nursing. 2016;34(11):535–43.

Tsai PR, Lee TT, Lin HR, Lee-Hsieh J, Mills ME. Nurses’ perceptions of e-portfolio use for on-the-job training in Taiwan. CIN - Computers Informatics Nursing. 2015;33(1):21–7.

Torabi S, Safdari M. The effects of electronic portfolio assessment and dynamic assessment on writing performance. Computer-Assisted Lang Learn Electron J 21(2):52–69.

Eva KW, Regehr G. I’ll never play professional football and other fallacies of self-assessment. J Continuing Educ Health Professions. 2008;28(1):14–9.

Norman G, Bordage G, Page G, Keane D. How specific is case specificity? Med Educ. 2006;40(7):618–23.

Pennbrant S, Nunstedt H. The work-integrated learning combined with the portfolio method-A pedagogical strategy and tool in nursing education for developing professional competence. J Nurs Educ Pract. 2017;8(2):8.

Acknowledgements

The authors would like to thank the CGC members of Imam Sajjad Shahryar Hospital and all the nurses who participated in the study.

Funding

None.

Author information

Authors and Affiliations

Contributions

RM and NN initiated the idea of the research. RM, AM, NN, and AZ planned, designed, analyzed, interpreted, and wrote the study manuscript. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

This research was performed following the Declaration of Helsinki and was approved by the University Ethical Committee of The Ethics Committee of Tehran University of Medical Sciences approved the study (reference code: IR.TUMS.VCR.REC.1399.379). The researchers explained to the participants and obtained their informed and voluntary consent.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

At the time of conducting this research, Afagh Zarei was a PhD student at Tehran University of Medical Sciences and a member of the research team.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Najaffard, N., Mohammadi, A., Mojtahedzadeh, R. et al. E-portfolio as an effective tool for improvement of practitioner nurses’ clinical competence. BMC Med Educ 24, 114 (2024). https://doi.org/10.1186/s12909-024-05092-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12909-024-05092-z