Abstract

Background

The viva, or traditional oral examination, is a process in which examiners ask questions and the candidate answers them. Although the traditional viva has many disadvantages, including subjectivity, low validity, and low reliability, it is advantageous for assessing knowledge, clinical reasoning, and self-confidence, which cannot be assessed by written tests. To overcome these disadvantages, the structured viva was developed; it is claimed to be highly valid, reliable, and acceptable, but this claim has not been confirmed by an overall systematic review or meta-analysis. This research aims to review the studies and reach an overall decision on the quality of structured viva as an assessment tool, judged against the agreed standards in medical education in terms of validity, reliability, and acceptability.

Methods

This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-analysis (PRISMA) guidelines. PubMed, Best Evidence Medical Education (BEME) website reviews, Google Scholar, and ScienceDirect were searched for any article addressing the research questions from inception to December 2022. Data analysis was done with the open-source OpenMeta[Analyst] application (Windows 10 version).

Results

A total of 1385 studies were identified, of which 24 were included in the review. Three of the reviewed studies supported the validity of structured viva through a positive linear correlation with criterion measures: multiple-choice questions (MCQs); MCQs and Objective Structured Clinical Examination (OSCE); and a structured theory exam. In the reviewed studies, the reliability of structured viva was high, with Cronbach alpha α = 0.80 and α = 0.75 in two different settings, while it was low (α = 0.50) for the traditional viva. In the meta-analysis, using the available numeric data of 12 studies, the overall acceptability of structured viva was 79.8% (P < 0.001) among all learners who participated in structured viva as examinees at different levels in health professions education. The heterogeneity of the data was high (I^2 = 93.506, P < 0.001); thus, the analysis was done using the binary random-effects model.

Conclusion

Structured viva or structured oral examination has high levels of validity, reliability, and acceptability as an assessment tool in health professions education compared to traditional viva.

Introduction

Assessment of the students is a cornerstone in medical education science and thus proper assessment is crucial to get quality medical graduates who eventually meet society’s needs and promote the health of the community [1]. Traditional Viva, Viva-voce, or traditional oral examination is a process between the examiners who ask questions and a candidate who must reply to them [2].

Viva or oral examination is popular as it is a part of many undergraduate and postgraduate programs in health professions education. It is usually used in situations like the decision to pass or fail marginal students in basic sciences, giving a prize to the best student as well as in defending the theses.

The disadvantages of traditional viva include poor content validity, low inter-rater and inter-case reliability, inconsistency in marking, and lack of standardization. However, studies have shown that validity and reliability can be increased by using standardized or structured formats [2].

Structured viva was properly described as a separate assessment tool by Oakley and Hencken in 2005, but it was described and used in health professions education as early as 1993 by Thomas et al. and 1989 by Tutton et al. [3,4,5].

Structured viva has the advantage of being structured, objective and it is claimed to be fair and reliable, but this was not confirmed by an overall decision such as a systematic review or meta-analysis of the studies [6]. Generally, viva usually assesses knowledge (recall), in-depth clinical reasoning and attitude of the candidate on specific topics, and self-confidence which cannot be assessed by written exams [7]. It should not be influenced by age, gender, race, or socioeconomic status [8].

Reliability is the ratio of the true score variance to the observed score variance. It can be measured with Cronbach alpha, which measures the internal consistency of the marks of an assessment tool; a value of 0.8 or more is considered reliable. Some educationists use the reliability coefficient to measure reliability [5].
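As an illustration of the internal-consistency measure described above, the following is a minimal sketch of the standard Cronbach alpha formula, assuming a score matrix with one row per examinee and one column per viva question. The function name `cronbach_alpha` and the sample marks are hypothetical and are not taken from any of the reviewed studies.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach alpha for a list of rows (one row per examinee, one column per item).

    Uses sample variances: alpha = k/(k-1) * (1 - sum(item variances) / variance(totals)).
    """
    if len(scores) < 2:
        raise ValueError("need at least two examinees")
    k = len(scores[0])
    if k < 2 or any(len(row) != k for row in scores):
        raise ValueError("need at least two items and equal-length rows")

    items = list(zip(*scores))                      # transpose: one tuple per item
    item_variance_sum = sum(variance(col) for col in items)
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical marks for four examinees on three structured viva questions.
example_scores = [[2, 3, 3], [4, 4, 5], [1, 2, 2], [5, 4, 5]]
alpha = cronbach_alpha(example_scores)
print(f"Cronbach alpha = {alpha:.2f}")
```

Under the threshold quoted above, a result of 0.8 or more from such a calculation would be read as reliable.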

Validity is whether the tool measures what it is supposed to measure, whereas reliability, as noted above, is the ratio of the true score variance to the observed score variance [9].

According to the standards in medical education, validity, reliability and acceptability are among the criteria used for determining the usefulness of a particular method of assessment. Together with feasibility and educational impact, these five elements are the criteria of a good assessment tool [10].

All assessment methods have strengths and intrinsic flaws. Viva or oral examination is widely used in health professions education; thus, the aim of this review was to provide a further summary and overview of the studies that have assessed structured viva as an assessment tool in health professions education in terms of validity, reliability and acceptability in comparison to other forms of assessment.

The conceptual framework of the study is to systematically review all the studies related to structured viva validity, reliability and acceptability and to do a meta-analysis following the PRISMA guidelines and protocols, which are mainly:

- Studies selection criteria including the flow diagram.
- Study characteristics.
- Studies bias.
- Study limitations.
- Funding.

All the study details following the PRISMA guidelines are mentioned step by step in the following sections. The importance or purpose of studying this topic is to ensure the quality of structured viva as an assessment tool according to the agreed standards in medical education in terms of validity, reliability and acceptability thus ensuring learners’ competency, fairness and medical education development.

Materials and methods

This review was conducted according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines. PubMed, Google Scholar, Best Evidence Medical Education (BEME) website reviews, and ScienceDirect were used for the systematic search for any published article in English addressing the research question up to December 2022. When the authors disagreed about whether to include a study, it was reviewed in detail against all inclusion and exclusion criteria, step by step. The study received no funding.

The search formula combined the keywords (“Viva” OR “Viva voce” OR “Structured Viva” OR “Structural Viva” OR “Structured oral examination”) AND (“Validity” OR “reliability” OR “acceptability”) AND (“Medical” OR “Nursing” OR “Dental” OR “Pharmacy” OR “laboratory sciences”). The words “OR” and “AND” were used as Boolean operators, as they function in the database search algorithms. Overall, all research on structured viva or structured oral examination in any health profession addressing validity, reliability, or acceptability was searched.

Inclusion criteria

All studies published in English that evaluated the validity, reliability, or acceptability of structured viva as an assessment tool in health professions education were included.

Exclusion criteria

1. Traditional viva exams.
2. Online/Virtual structured viva exams.
3. Semi-structured viva exams.
4. No available abstract or full theses.
5. Articles not in the English language.

All articles retrieved from the search were screened for inclusion in this review based on their titles and abstracts. After that, relevant studies were reviewed for inclusion (full text) according to the eligibility criteria. The researchers assessed the quality of the included studies using the Medical Education Research Study Quality Instrument (MERSQI), a validated study tool used to appraise the methodological quality of medical education studies based on ten items reflecting six domains: study design, sampling, data type, the validity of the evaluation instrument, data analysis, and outcomes.

The two researchers screened the articles themselves, and both took part in the quality assessment blindly.

We aimed to assess the validity, reliability and acceptability of the structured viva. Therefore, we summarized the correlation coefficients, Cronbach alpha values, and percentages of learners’ acceptability of structured viva reported in the reviewed studies. The pooled summary prevalence was calculated with the random-effects model because of the notable heterogeneity. The statistical analysis was carried out using the Windows 10 version of OpenMeta[Analyst], a completely open-source, cross-platform software for advanced meta-analysis.
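To make the pooling step concrete, here is a minimal sketch of a random-effects pooled proportion using the DerSimonian-Laird estimator on untransformed proportions. This is an assumption for illustration only: the text does not state which estimator or transformation was selected in OpenMeta[Analyst], and the function name `pool_proportions_dl` and the counts in the usage lines are hypothetical, not the data of the 12 studies.

```python
import math


def pool_proportions_dl(events, totals, z=1.96):
    """Random-effects pooled proportion (DerSimonian-Laird, raw proportions).

    events[i] = learners who accepted the tool in study i, totals[i] = sample size.
    Returns (pooled proportion, (lower, upper) confidence bounds, tau squared).
    """
    if len(events) != len(totals) or len(events) < 2:
        raise ValueError("need at least two studies with matching lengths")
    props = [e / n for e, n in zip(events, totals)]
    if any(p <= 0.0 or p >= 1.0 for p in props):
        # Raw-proportion variances are zero at 0 or 1; a transformation or
        # continuity correction would be needed, which this sketch omits.
        raise ValueError("proportions of exactly 0 or 1 are not supported")

    variances = [p * (1 - p) / n for p, n in zip(props, totals)]
    weights = [1 / v for v in variances]             # fixed-effect weights
    sum_w = sum(weights)
    fixed = sum(w * p for w, p in zip(weights, props)) / sum_w

    # Cochran's Q and the between-study variance tau squared.
    q = sum(w * (p - fixed) ** 2 for w, p in zip(weights, props))
    df = len(props) - 1
    c = sum_w - sum(w ** 2 for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0

    re_weights = [1 / (v + tau2) for v in variances]  # random-effects weights
    sum_re = sum(re_weights)
    pooled = sum(w * p for w, p in zip(re_weights, props)) / sum_re
    se = math.sqrt(1 / sum_re)
    return pooled, (pooled - z * se, pooled + z * se), tau2


# Hypothetical acceptability counts from three studies.
pooled, (low, high), tau2 = pool_proportions_dl([45, 80, 60], [60, 90, 100])
print(f"pooled = {pooled:.3f}, 95% CI = ({low:.3f}, {high:.3f}), tau^2 = {tau2:.4f}")
```

When the studies disagree strongly, tau squared grows and the random-effects weights become more equal across studies, which is why this model is preferred under high heterogeneity.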

Results

A total of 1385 studies were found. Titles and abstracts were screened against the inclusion and exclusion criteria, which yielded 60 relevant articles. After review of these 60 articles, 24 were found to meet the inclusion criteria [1, 5, 6, 11–31].

The mean MERSQI score for the 24 studies was 12.16 out of an 18-point scale. The schematic flow of the studies selection process is presented in Fig. 1 and the main characteristics of the 24 articles included in the review are in Table 1.

Validity

Three studies [22, 25, 26] estimated the validity of structured viva by the correlation coefficient (the linear relation to a criterion variable). All of them showed a highly significant correlation coefficient (r = 0.52, p < 0.01) between the results of structured viva and MCQs. Another study showed a statistically significant correlation (0.48 to 0.51) with multiple-choice questions (MCQs) and OSCE [27]. A positive, highly significant correlation (r = 0.442, p = 0.001) was seen between marks scored in structured viva and structured theory exam, while the correlation was not significant (r = 0.202, p = 0.151) between the marks of traditional viva and structured theory exam [28].

Reliability

The reliability of structured viva in the reviewed health professions studies was determined by Cronbach alpha and the correlation coefficient. Cronbach alpha for structured viva was α = 0.80, compared with α = 0.50 for the conventional viva, as described by Madhukumar et al. [1]; it was α = 0.75 as described by Anastakis et al. [27]. It reached as high as α = 0.79 for parts of Jefferies et al.’s structured oral exam of postgraduate training. The reliability coefficient was 0.7 to 0.8 when a structured rating procedure was used for viva, compared with 0.3 to 0.4 for an unstructured viva exam, while that of the multiple-choice test was usually > 0.8, as described by Tutton et al. [5]. One study showed significant inter-rater reliability for each pair of examiners and each question (r = 0.78 to 0.91; p < 0.0001) [27]. Another study showed inter-rater agreement of 86.7%, as described by Roh et al. [22].

Acceptability

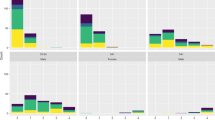

Twelve studies had sufficient data to calculate the overall acceptability rate of structured viva among the participants. The assessment tool was described as clear and fair and had a reasonable level of difficulty. Based on the available numeric data of the 12 studies, overall acceptability was 79.8% (P < 0.001) among all learners who participated in structured viva as examinees at different levels in health professions education. The heterogeneity of the data was high (I^2 = 93.506, P < 0.001); thus, the analysis was done using a binary random-effects model (Fig. 2). The Begg and Mazumdar rank correlation test also showed Kendall’s Tau = 0.47 with a p-value of 0.016; this indicates heterogeneity in the meta-analysis studies regarding the structured viva.
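For readers unfamiliar with the I^2 statistic reported above, the following minimal sketch shows how it is conventionally derived from Cochran's Q using inverse-variance weights. The function name `heterogeneity_i2` and the effect sizes in the usage lines are hypothetical; the sketch is not a reproduction of the OpenMeta[Analyst] output for the 12 studies.

```python
def heterogeneity_i2(effects, variances):
    """Return (Q, I^2 in percent) for study effects and their within-study variances.

    Q = sum(w_i * (y_i - pooled)^2) with w_i = 1 / v_i, and
    I^2 = max(0, (Q - df) / Q) * 100, where df = number of studies - 1.
    """
    if len(effects) != len(variances) or len(effects) < 2:
        raise ValueError("need at least two studies with matching lengths")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")

    weights = [1 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, i2


# Hypothetical acceptability proportions and variances from three studies.
q, i2 = heterogeneity_i2([0.62, 0.81, 0.93], [0.002, 0.001, 0.0015])
print(f"Q = {q:.2f}, I^2 = {i2:.1f}%")
```

I^2 expresses the share of the total variation across studies that is attributed to between-study differences rather than chance, so a value above 90% signals very high heterogeneity.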

Discussion

Health professions education requires learners to develop competencies in addition to knowledge, such as professionalism, psychomotor skills, and communication skills. Therefore, the viva-voce examination is appealing because it gives the examiner an opportunity to assess a student’s depth of understanding and their ability to express it in a defined manner [2]. Education consists of purposeful activities directed at achieving certain aims, and assessment in health professions education is mandatory to ensure that these aims are met, that quality and adherence to standards are maintained for both individual learners and faculty members, and that performance improves through identifying areas for improvement and judging individual competence. Students may be able to tolerate bad teaching, but they cannot tolerate bad assessment, and assessment drives learning; assessment in health professions education therefore stands together with the learning objectives and learning methods as a vital cornerstone of any curriculum [32]. Differences in assessment results can be due to factors such as the anxiety of the examinee and the inconsistency of the examiners, which need re-evaluation [33, 34].

Validity

The validity of structured viva in the reviewed health professions research was estimated by the correlation coefficient i.e. the linear relation to a criterion variable. There was a highly significant correlation coefficient (r = 0.52, p < 0.01) between the results of structured viva and multiple-choice questions (MCQs) [18].

Correlation means a linear relationship, and a positive correlation means that the two sets of marks rise together, which indicates a degree of similarity. MCQs are considered a standard assessment tool in terms of validity and reliability in health professions education. A positive correlation with a highly significant p-value means that structured viva is positively related to one of the highest-validity tools in health professions education (MCQs), which serves here as the criterion variable.
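The correlation coefficient discussed above can be sketched as a plain Pearson calculation between two sets of marks. The function name `pearson_r` and the marks in the usage lines are hypothetical and serve only to show the arithmetic; the reviewed studies' raw data are not available here.

```python
import math


def pearson_r(x, y):
    """Pearson linear correlation coefficient between two equal-length mark lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least two values")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical marks: structured viva scores and MCQ scores for six students.
viva_marks = [12, 15, 9, 18, 14, 11]
mcq_marks = [55, 70, 48, 66, 72, 50]
print(f"r = {pearson_r(viva_marks, mcq_marks):.2f}")
```

A value near +1 means students who score well on one tool tend to score well on the other, which is the sense in which a criterion correlation is taken as evidence of validity.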

Another study reported a positive, statistically significant correlation (0.48 to 0.51) with MCQs and Objective Structured Clinical Examination (OSCE) [19]. Thus, structured viva is also positively correlated with MCQs together with one of the best clinical assessment tools (OSCE) in terms of standard validity and reliability.

Both studies above described the validity of structured viva by the correlation coefficient, i.e. the statistical linear relation with standard assessment tools such as MCQs and OSCE. The correlation coefficient was positive with a highly significant p-value. This positive correlation with standard assessment tools may be considered a statistical indicator of acceptable and good validity of structured viva.

A positive, highly significant correlation (r = 0.442, P = 0.001) was seen between marks scored in structured viva and structured theory exam, while the correlation was not significant (r = 0.202, P = 0.151) between the marks of traditional viva and structured theory exam. This indicates how far traditional viva falls below structured viva in terms of validity as measured by statistical correlation [20].

These results may help to resolve the conflicts and debates across different studies in medical education regarding the validity and reliability of the traditional oral examination and the structured oral examination. For example, the study of de Silva V et al. defended the traditional oral examination in the psychiatric postgraduate exam but also called for ways to improve its reliability and validity [33]. One way to improve the traditional oral examination, as the researchers in this study suggest, is to convert it to a structured oral examination.

Reliability

The reliability of the assessment tool is the ratio of the true score variance to the observed score variance. Types of reliability include internal consistency reliability, inter-rater reliability, and inter-case reliability.
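The ratio definition above can be written out as a short formula. The notation is an assumption borrowed from classical test theory, where an observed score X is modelled as a true score T plus an independent error E; the symbols do not appear in the reviewed studies.

```latex
\rho_{XX'} \;=\; \frac{\sigma_T^{2}}{\sigma_X^{2}}
          \;=\; \frac{\sigma_T^{2}}{\sigma_T^{2} + \sigma_E^{2}},
\qquad 0 \le \rho_{XX'} \le 1
```

Under this model, reliability approaches 1 as the error variance shrinks, which is why standardizing questions and marking schemes is expected to raise it.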

Reliability in terms of internal consistency is measured by Cronbach alpha (a value of 0.7 or greater is acceptable, 0.8 or greater is good, and 0.9 or greater is excellent). The reliability of structured viva in the reviewed health professions studies was determined by Cronbach alpha and the correlation coefficient.

Cronbach alpha for structured viva was α = 0.80, compared with α = 0.50 for the traditional viva, as described by Madhukumar et al. in 2022, while it was α = 0.75 as described by Anastakis et al. [1, 19]. It reached as high as α = 0.79 for parts of the Jefferies et al. 2011 exam of postgraduate training [21]. Cronbach alpha is considered high when it is 0.8 or more; all reviewed studies had relatively high values of Cronbach alpha, which indicates good reliability of structured viva as an assessment tool in health professions education.

The reliability coefficient may also be used to measure reliability [5]. In the study by Tutton et al., the reliability coefficient was 0.7 to 0.8 when a structured rating procedure was used for viva, compared with 0.3 to 0.4 for an unstructured viva exam, while that of multiple-choice questions (MCQs) is usually > 0.8 [5]. These high values of the reliability coefficient, compared with the low values for unstructured or traditional viva, indicate the superiority of structured viva over traditional viva in terms of reliability.

One study showed significant inter-rater reliability for each pair of examiners (r = 0.78 to 0.91; p < 0.0001) [16]. A study by Hye Rin Roh et al. reported an inter-rater agreement percentage of 86.7% in their structured viva exam [22]. Another study showed correlations of > 0.4 in 80% of the scores and > 0.7 in 50%, indicating fair to good intra-rater and inter-rater reliability using the structured oral format [34].
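As a simple illustration of an inter-rater agreement percentage, the sketch below counts how often two examiners give the same rating to the same candidate. This is the plain percent-agreement calculation and is an assumption for illustration; the text does not describe how Roh et al. computed their figure, and the function name `percent_agreement` and the ratings are hypothetical.

```python
def percent_agreement(rater_a, rater_b):
    """Percentage of candidates on whom two raters gave the identical rating."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    matches = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return 100.0 * matches / len(rater_a)


# Hypothetical pass/fail decisions by two examiners for eight candidates.
examiner_1 = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail"]
examiner_2 = ["pass", "pass", "fail", "fail", "fail", "pass", "pass", "pass"]
print(f"agreement = {percent_agreement(examiner_1, examiner_2):.1f}%")
```

Percent agreement does not correct for agreement expected by chance, so it is usually read alongside a correlation or a chance-corrected statistic.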

Overall judgment after review of the studies is that structured viva has acceptable and good reliability when compared to traditional viva which has low reliability.

Acceptability

Health professions education is in the era of “Constructivism” and “adult learning theories”, in which learners are self-motivated, problem-oriented, and aware, and have the right to be involved in curriculum delivery and assessment tools [1]. This important cornerstone of modern health professions education gave rise to the term “acceptability”.

It is vital for the educational process that learners are involved in, and highly accepting of, learning methods and assessment tools. Learners’ acceptance of a new assessment tool, for example structured viva compared with the traditional or conventional viva, varies from a high acceptance rate to a low acceptance rate across the published studies; thus, it was important to calculate one overall acceptance percentage.

Overall acceptability of structured viva, meaning the learners’ perception that the assessment tool is clear, fair, and of a reasonable level of difficulty, together with their acceptance of introducing it in the curriculum or their overall acceptance, was calculated from the forest plot of the numeric data of 12 studies in the Windows 10 version of OpenMeta[Analyst]. It was 79.8% (P < 0.001) using a binary random-effects model, chosen because of the heterogeneity of the data (I^2 = 93.506, P < 0.001). This acceptability rate of around 80% is considered high and reasonable for an assessment tool, i.e. the majority of the participants or examinees accepted structured viva and commented positively on it.

Finally, these results show that, in addition to retaining the traditional viva’s value in testing higher cognitive levels, problem-solving and communication skills, structured viva has relatively high acceptability and good validity and reliability. This outcome makes structured viva a successful alternative that preserves the advantages of traditional viva in testing higher cognitive levels while adding validity, reliability, and acceptability, thus eliminating its disadvantages.

Limitations

There were few studies that systematically calculated structured viva statistical parameters of the validity and reliability; thus, the meta-analysis effect size could not be calculated for the validity and reliability. Also, there was limited access to some of the published studies.

Conclusion

This review showed that structured viva has acceptable validity and reliability as an assessment tool in health professions education compared to traditional viva. Acceptability of structured viva was high among learners in health professions education who participated in it as examinees. The researchers recommend converting all traditional viva to structured viva/oral exams to be fair and to avoid subjectivity, low validity, and low reliability. More research should be done to calculate the overall statistical effect size for validity and reliability.

Data Availability

The datasets used during the current study are available from the corresponding author upon reasonable request.

Abbreviations

- BEME: Best Evidence Medical Education
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-analysis
- MCQs: Multiple Choice Questions
- BC: Before Christ
- OSCE: Objective Structured Clinical Examination
- OSPE: Objective Structured Practical Examination
- MERSQI: Medical Education Research Study Quality Instrument

References

Madhukumar, Suwarna MB, Pavithra NS, Amrita. Conventional viva and structured viva—comparison and perception of students. Indian J Public Health Res Dev. 2022;13(2):167–72.

Davis MH, Karunathilake I. The place of the oral examination in today’s assessment systems. Med Teach. 2005;27(4):294–7.

Oakley B, Hencken C. Oral examination assessment practices:effectiveness and change with a first year undergraduate cohort. J Hosp Leis Sport Tour Educ. 2005;4:3–14.

Thomas CS, Mellsop G, Callender K, Crawshaw J, Ellis PM, Hall A, et al. The oral examination: a study of academic and non-academic factors. Med Educ. 1993;27(5):433–39. https://doi.org/10.1111/j.1365-2923.1993.tb00297.x.

Tutton PJM, Glasgow EF. Reliability and predictive capacity of examinations in anatomy and improvement in the reliability of viva voce (oral) examinations by the use of a structured rating system. Clin Anatomy: Official J Am Association Clin Anatomists Br Association Clin Anatomists. 1989;21:29–34.

Mallick, AyazKhurram AK, Mallick A, Patel. Comparison of structured viva examination and traditional viva examination as a tool of assessment in biochemistry for medical students. Eur J Mol Clin Med. 2020;7(6):1785–93.

Iqbal I, Naqvi S, Abeysundara L, Narula A. The value of oral assessments: a review. Bull R Coll Surg Engl. 2010;92(7):1–6.

Rahman G. Appropriateness of using oral examination as an assessment method in medical or dental education. J Educ Ethics Dent. 2011;1(2):46.

UKEssays. Validity and reliability of assessment in medical education [Internet]. November 2018. [Accessed 18 September 2022]; Available from: https://www.ukessays.com/essays/psychology/validity-and-reliability-of-assessment-in-medical-education-psychology-essay.php?vref=1.

Epstein RM. Assessment in medical education. N Engl J Med. 2007;356(4):387–96.

Ganji KK. Evaluation of reliability in structured viva voce as a formative assessment of dental students. J Dent Educ. 2017;81(5):590–96.

Chhaiya SB, Mehta DS, Trivedi MD, Acharya TA, Joshi KJ. Objective structured viva voce examination versus traditional viva voce examination-comparison and students’ perception as assessment methods in pharmacology among second M.B.B.S students. Natl J Physiol Pharm Pharmacol. 2022;12(10):1533–1537.

Khalid A, Sadiqa A. Perception of first and second year medical students to improve structured viva voce as an assessment tool. Ann Jinnah Sindh Med Uni. 2022;8(1):15–19

Dhasmana DC, Bala S, Sharma R, Sharma T, Kohli S, Aggarwal N, Kalra J. Introducing structured viva voce examination in medical undergraduate pharmacology: A pilot study. Indian J Pharmacol. 2016; 48(Suppl 1):S52-S56.

Khakhkhar TM, Khuteta N, Khilnani G. A comparative evaluation of structured and unstructured forms of viva voce for internal assessment of undergraduate students in Pharmacology. Int J Basic Clin Pharmacol. 2019;8:616–21.

Sadiqa A, Khalid A. Appraisal of objectively structured viva voce as an assessment tool by the medical undergraduate students through feedback questionnaire. PJMHS. 2016;13:1.

Dangre-Mudey G, Damke S, Tankhiwale N, Mudey A. Assessment of perception for objectively structured viva voce amongst undergraduate medical students and teaching faculties in a medical college of central India. Int J Res Med Sci. 2016;4:2951–4.

Rohini Bhadre A, Sathe MB, Mosamkar S. Comparison of objective structured viva voce with traditional viva voce. Int J Healthc Biomedical Res. 2016;5(1):62–7.

Waseem N, Iqbal K. Importance of structured viva as an assessment tool in anatomy. J Univ Med Dent Coll. 2016;7(2):29–4.

Bagga IS, Singh A, Chawla H, Goel S, Goya P. Assessment of Objective Structured viva examination (OSVE) as a tool for formative assessment of undergraduate medical students in Forensic Medicine. Sch J App Med Sci Nov. 2016;4(11A):3859–62.

Vankudre AJ, Almale BD, Patil MS, Patil AM. Structured oral examination as an assessment tool for third year indian MBBS undergraduates in Community Medicine. MVP J Med Sci. 2016;3(1):33.

Gor SK, Budh D, Athanikar BM. Comparison of conventional viva examination with objective structured viva in second year pathology students. Int J Med Sci Public Health. 2014;3:537–9.

Rizwan Hashim A, Ayyub F-Z, Hameed S, Ali S. Structured viva as an assessment tool: perceptions of undergraduate medical students. Pak Armed Forces Med J. 2015;65(1):141–4.

Khilnani AK, Charan J, Thaddanee R, Pathak RR, Makwana S, Khilnani G. Structured oral examination in pharmacology for undergraduate medical students: factors influencing its implementation. Indian J Pharmacol 2015 Sep-Oct;47(5):546–50. https://doi.org/10.4103/0253-7613.165182.

Shenwai MR, B Patil K. Introduction of Structured Oral Examination as A Novel Assessment tool to First Year Medical Students in Physiology. J Clin Diagn Res. 2013 Nov;7(11):2544-7. https://doi.org/10.7860/JCDR/2013/7350.3606.

Jefferies, Ann, et al. "Assessment of multiple physician competencies in postgraduate training: utility of the structured oral examination." Advances in Health Sciences Education 16 (2011): 569–577.

Anastakis, Dimitri J., Robert Cohen, and Richard K. Reznick. "The structured oral examination as a method for assessing surgical residents." The American journal of surgery 162.1 (1991): 67–70.

Ahsan M, AyazKhurram, Mallick. A study to assess the reliability of structured viva examination over traditional viva examination among 2nd-year pharmacology students. J DattaMeghe Inst Med Sci Univ. 2022;17(3):589.

Roh, Hye Rin, et al. "Experience of implementation of objective structured oral examination for ethical competence assessment." Korean Journal of Medical Education 21.1 (2009): 23–33.

Kearney RA, Puchalski SA, Yang HY, Skakun EN. The inter-rater and intra-rater reliability of a new Canadian oral examination format in anesthesia is fair to good. Can J Anaesth. 2002;49(3):232.

Imran M, Doshi C, Kharadi D. Structured and unstructured viva voce assessment: a double-blind, randomized, comparative evaluation of medical students. Int J Health Sci (Qassim). 2019 Mar-Apr;13(2):3–9. PMID:30983939; PMCID: PMC6436443.

Hamad B. Sudan Medical Specialization Board (SMSB) educational assessment program:principles, policies and procedures. 1st ed. Sudan: SMSB; 2017.

Ghosh A, Mandal A, Das N, Tripathi SK, Biswas A, Bera T. Student’s performance in written and viva voce components of final summative pharmacology examination in MBBS curriculum: a critical insight. Indian J Pharmacol. 2012;44:274–5.

Haque M, Yousuf R, Abu Bakar SM, Salam A. Assessment in undergraduate medical education: Bangladesh perspectives. Bangladesh J Med Sci. 2013;12:357–63.

Acknowledgements

We acknowledge the Master of Health Professions Committee at Sudan Medical Specialization Board for their encouragement and support.

Funding

No fund.

Author information

Contributions

(AA) undertook research design; (AA and WN) participated in searching databases, articles screening, quality assessment and data extraction. All authors interpreted the results and drafted the manuscript. All authors revised and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Abuzied, A.I.H., Nabag, W.O.M. Structured viva validity, reliability, and acceptability as an assessment tool in health professions education: a systematic review and meta-analysis. BMC Med Educ 23, 531 (2023). https://doi.org/10.1186/s12909-023-04524-6

DOI: https://doi.org/10.1186/s12909-023-04524-6