Abstract

Background

Audience response systems make it possible to activate the audience and to obtain direct feedback from participants during lectures. Modern systems no longer require proprietary hardware; students can respond directly on their smartphones. Several studies have reported a high level of student satisfaction when audience response systems are used; however, their impact on learning success is still unclear.

Methods

In order to evaluate the impact of an audience response system on learning success, we integrated the audience response system eduVote into a seminar series and performed a controlled crossover study on its impact on assessments. One hundred fifty-four students in nine groups were taught the same content. In four groups, eduVote was integrated for the first topic, while five groups were taught this topic without the audience response system. For a second topic, the groups were switched: the groups that had previously been taught with eduVote were now taught without the audience response system and vice versa. We then analysed the impact of the audience response system on the students’ performance in a summative assessment and specifically focused on questions dealing with the topic for which the audience response system was used during teaching. We further assessed the students’ perception of the use of eduVote using questionnaires.

Results

In our controlled crossover study, we could not confirm an impact of the audience response system eduVote on long-term persistence, i.e. the students’ performance in the summative assessment. Our evaluation revealed that students assessed the use of eduVote very positively, felt more strongly engaged and better motivated to deal with the respective topics, and would prefer its integration into additional courses as well. In particular, we identified that students who feel uncomfortable with answering questions in front of others profit from the use of an audience response system during teaching.

Conclusions

Audience response systems motivate and activate students and increase their engagement during classes. However, their impact on long-term persistence and summative assessments may be limited. Audience response systems do, however, specifically allow the activation of students who cannot be reached by the traditional way of asking questions without such an anonymous tool.

Background

Audience response systems, also known as classroom response systems, are tools to activate learners during a lecture, to motivate them to participate and to actively follow the instruction. Moreover, tutors can use audience response systems to monitor learning progress, to receive rapid feedback and to flexibly adapt the contents of the lecture to the learners’ needs.

Today, most students (95% in the age group of 14–29 years) [1] own a smartphone. Therefore, proprietary audience response systems, which demand considerable effort as well as high purchase and running costs, are no longer required, as smartphone-based audience response systems can be used instead. Browser-based audience response systems do not even require the installation of an app. Examples of such systems are SMILE (Smartphones in der Lehre) [2, 3], eduVote (SimpleSoft - Buchholz Wengst GbR, Braunschweig, Germany) [4] and Socrative (Showbie Inc., Edmonton, AB). They are versatile and only require a device with an internet connection and a web browser. While SMILE can be used for free, both eduVote and Socrative require a paid license. In comparison with traditional systems, Socrative was found to support active participation in the lecture [5].

The use of audience response systems is highly accepted among students. Students assess their use positively [5,6,7], and audience response systems were reported to increase students’ satisfaction with a given lecture.

There are contradictory results regarding the impact of audience response systems on the results of assessments [6,7,8,9]. However, many of these studies were done in lectures and used proprietary audience response systems. Few, if any, studies have been reported on the use of audience response systems in seminars or on smartphone-based systems such as eduVote.

For this reason, we integrated the smartphone-based audience response system eduVote into our classes. EduVote allows a direct integration into PowerPoint presentations. Students access eduVote on their smartphone using a specific website. The students are then able to answer a specific question on their smartphone once it is activated by the lecturer. With the press of a button, the answers of the participants directly appear as bar charts in the PowerPoint presentation in a summarized fashion and can be discussed with the audience.

Here we tested the impact of the audience response system eduVote in a seminar setting on the results of a summative assessment as well as on the students’ perception of the use of this audience response system during class.

Methods

Study setting and design

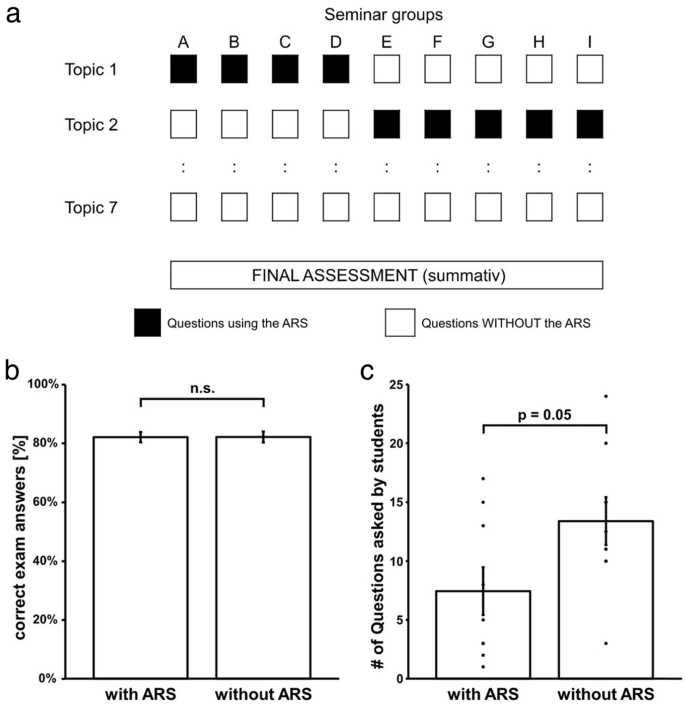

This study was conducted in the summer term of 2019 as a crossover study [10] during the seminar series “Human Genetics”. This seminar series is taught by the Institute of Medical Genetics and Applied Genomics at the University of Tuebingen for the study program in Human Medicine (for students in their 10th semester / 6th clinical semester). The seminar is part of the curricular teaching program in Human Genetics and complements a lecture series in “Applied Human Genetics - Clinical Genetics Part I” (5th semester / 1st clinical semester) and “Part II” (10th semester / 6th clinical semester). While the lectures were held in plenary sessions for all students, the 154 students of the seminar “Human Genetics” were taught the same content for each topic in nine seminar groups (groups A-I). Collective 1 (seminar groups A-D) was asked interactive multiple-choice questions using the audience response system while being taught topic 1, whereas topic 2 was taught without this interactive part (i.e. the questions were included in the presentation and briefly discussed, but students were not asked for their individual answers). Collective 2 (seminar groups E-I) received interactive questions during topic 2; accordingly, topic 1 was taught without the interactive part.
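The crossover allocation described above can be written down as a small lookup. The following is only an illustrative sketch: the names `COLLECTIVES`, `ARS_TOPIC`, `collective_of` and `condition` are hypothetical and are not taken from the study materials.

```python
# Hypothetical encoding of the crossover design (names are illustrative only).
COLLECTIVES = {
    1: ["A", "B", "C", "D"],       # collective 1: ARS used for topic 1
    2: ["E", "F", "G", "H", "I"],  # collective 2: ARS used for topic 2
}
ARS_TOPIC = {1: 1, 2: 2}  # collective -> topic taught with the ARS


def collective_of(group: str) -> int:
    """Return the collective (1 or 2) a seminar group belongs to."""
    for collective, groups in COLLECTIVES.items():
        if group in groups:
            return collective
    raise ValueError(f"unknown seminar group: {group!r}")


def condition(group: str, topic: int) -> str:
    """Return 'with ARS' or 'without ARS' for a seminar group and topic."""
    if topic not in (1, 2):
        raise ValueError("only topics 1 and 2 were part of the study")
    if ARS_TOPIC[collective_of(group)] == topic:
        return "with ARS"
    return "without ARS"


if __name__ == "__main__":
    # Print the condition of every group for both topics.
    for g in "ABCDEFGHI":
        print(g, condition(g, 1), "|", condition(g, 2))
```

Every group appears once in each condition, which is the property that lets each student act as their own control.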

The summative assessment took place on average about 3 weeks (23.9 days) after the last seminars included in this study and about 1 week (8.5 days) after the end of the whole seminar series. In this assessment, all groups were asked questions on both topic 1 and topic 2. For analysis, we correlated the success rate in the summative assessment (i.e. the percentage of correct answers) for topics 1 and 2 with the affiliation to collective 1 or 2, respectively (and thereby with the use of interactive questions for the specific topic).

The seminar tutors were highly experienced experts in the seminar topic. Most tutors had already conducted the seminar many times over several years and had attended dedicated training in didactics. During the whole seminar series, different teaching-learning methods, adapted to the topic of the respective seminar, were used, including collective discussion, problem solving, the use of simulated patients, interactive drawing of family trees, instruction in a lecture-based format, questions to (re-)activate the students etc.

In anonymous questionnaires, we further assessed the students’ demographics, their usual behaviour if a tutor asks questions and their perception of the use of the audience response system. These questionnaires were completed by the students immediately after the respective seminar with the audience response system was taught. The questionnaire was self-developed with external expertise and evaluated in a pilot study [11].

Sample definition and recruitment of participants

Each semester, about 154 students of Human Medicine participate in the seminar “Human Genetics”. All participants of the seminar were invited to participate in the study. Participation in the research study was voluntary and had no impact on the students' grades or on whether they passed the course. No part of the study was linked to any form of incentive based on the answers given. Only the final assessment results of those students who gave their written consent were included in the study. The students were further invited to complete an anonymous questionnaire. The sample therefore consists of a) all students who gave their written consent to a statistical analysis of their summative assessment and b) all students who completed an anonymous questionnaire.

Statistical procedure

The independent variable was the affiliation to either collective 1 (groups A-D) or collective 2 (groups E-I), which determined the topic taught with the audience response system. The dependent variable, with the summative assessment as measuring instrument, was the assessment result for topics 1 and 2. We used a paired t-test to compare the assessment results with the audience response system (collective 1: results for topic 1 + collective 2: results for topic 2) with the assessment results without the audience response system (collective 1: results for topic 2 + collective 2: results for topic 1).
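As a reminder of what the paired comparison computes, the test statistic is the mean of the per-student differences divided by its standard error, t = mean(d) / (s_d / sqrt(n)), with n - 1 degrees of freedom. The sketch below uses only the Python standard library and made-up numbers; the function name `paired_t` is hypothetical, and the p-value would still have to be obtained from a t distribution with a statistics package.

```python
import math
import statistics


def paired_t(with_ars, without_ars):
    """Return (t, df) for a paired t-test on two equal-length sequences."""
    if len(with_ars) != len(without_ars):
        raise ValueError("paired samples must have the same length")
    # One difference per student: score with ARS minus score without ARS.
    diffs = [a - b for a, b in zip(with_ars, without_ars)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


if __name__ == "__main__":
    # Made-up percentages of correct answers for five students (not study data).
    with_ars = [80.0, 100.0, 60.0, 83.3, 80.0]
    without_ars = [83.3, 80.0, 66.7, 100.0, 60.0]
    t, df = paired_t(with_ars, without_ars)
    print(f"t = {t:.3f}, df = {df}")
```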

Sample size estimation

The sample size of about 154 students participating in this study was comparable to the number of participants in other studies which achieved statistically significant results [5,6,7]. Due to the use of dummy coding, each student was both part of the intervention and of the control cohort. This means that a student who e.g. attended for topic 1 a seminar with the audience response system and for topic 2 a seminar without the audience response system served both as proband for topic 1 and as control for topic 2. The statistical total number of cases was therefore 2*n = 308.
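One possible reading of this dummy coding is a long-format table in which every student contributes two observations, one flagged as "with ARS" and one as "without ARS". The sketch below illustrates that reading under stated assumptions: the record keys (`id`, `collective`, `topic1`, `topic2`) and the function `to_long_format` are hypothetical and not part of the study's data handling.

```python
def to_long_format(students):
    """Expand one record per student into two observations.

    `students` is a list of dicts with the hypothetical keys 'id',
    'collective' (1 or 2), 'topic1' and 'topic2' (percent correct).
    """
    rows = []
    for s in students:
        # Collective 1 used the ARS for topic 1, collective 2 for topic 2.
        ars_topic = "topic1" if s["collective"] == 1 else "topic2"
        for topic in ("topic1", "topic2"):
            rows.append({
                "id": s["id"],
                "topic": topic,
                "ars": 1 if topic == ars_topic else 0,  # dummy variable
                "score": s[topic],
            })
    return rows


if __name__ == "__main__":
    # Two made-up students, one per collective (not study data).
    demo = [
        {"id": "s1", "collective": 1, "topic1": 83.3, "topic2": 80.0},
        {"id": "s2", "collective": 2, "topic1": 66.7, "topic2": 100.0},
    ]
    for row in to_long_format(demo):
        print(row)
```

With n students the table has 2*n rows, half of them with the dummy variable set to 1, which matches the total of 308 cases stated above for n = 154.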

Results

Study setting

In order to study the impact of an audience response system on a summative assessment in a seminar setting, we chose the seminar series “Human Genetics”. This seminar series consists of seven different seminars/topics, and every student/group was required to attend all seven seminars/topics. Participation of the students was tracked. Either topic 1 (“autosomal recessive diseases”) or topic 2 (“autosomal dominant diseases”) was taught using the audience response system eduVote (SimpleSoft - Buchholz Wengst GbR, Braunschweig, Germany) (Fig. 1a).

Fig. 1 Study design and results. a In the seminar series, 154 students of medicine in nine seminar groups (A-I) were instructed in the same topics. When the students in seminar groups A-D were instructed in topic 1, they answered interactive questions using the audience response system (ARS), while topic 2 was taught without this interactive part. This design was flipped in seminar groups E-I. We analysed the impact of the audience response system on the results of the final summative assessment. b The results of the summative assessment were independent of the use of the audience response system (ARS). Shown is the mean % (± SEM) of correct answers given by students in the summative assessment for the respective topic for which the audience response system was used during teaching (with ARS, 82.1% ± 1.7%) and for the respective topic for which no audience response system was used (without ARS, 82.2% ± 1.9%). There was no difference between both groups (paired t-test, p = 0.98). c Questions of students in relation to the use of an audience response system. When no audience response system (ARS) was used, the students tended to ask more questions (13.4 ± 2.0) than in seminars in which the ARS was employed (7.4 ± 2.0), although this difference did not reach significance (p = 0.055). Mean ± SEM

Participant demographics

One hundred fifty-four students participated in the nine groups of the seminar with a mean of 16 students (n = 9 to 22) per group. One hundred fourteen students (74% of 154) further completed an anonymous questionnaire. This allowed us to further specify the participant demographics: The study cohort consisted of 51% male and 48% female students (1% preferred not to specify the gender). 49% of students were in the age group of 21–25 years. One third (34%) was between 26 and 30 years old and 17% were older than 31 years (Table 1).

Impact of the audience response system on the assessment results

One hundred nineteen students (77% of all seminar participants) gave their written consent that the results of their final assessment could be statistically analysed for this study. Sixty-one students (51% of 119) were taught topic 1 (“autosomal recessive diseases”) with the use of the audience response system (ARS). For 58 students (49% of 119), the ARS was used for topic 2 (“autosomal dominant diseases”). During the 90 min of the seminar, the participants answered a multiple-choice question with five discriminators approximately every 30 min (mean 28 min). The aggregated results of all students were displayed after the poll and all answers were subsequently discussed with the audience. We thereby provided formative feedback to all students who participated in the poll. The respective other topic was taught without the use of eduVote, i.e. the respective questions were shown to the students but no individual answers were requested and thereby no formative feedback was given. The summative assessment contained additional multiple-choice questions with five discriminators for either topic. These questions were different to the ones asked in the seminar but covered the respective topic (6 questions for topic 1 and 5 questions for topic 2).

We then analysed the answers given by each student in the summative assessment with regard to whether the audience response system (ARS) was employed in the seminar the student attended for the specific topic and calculated the percentage of correct answers with ARS and without ARS for each student. We then compared the percentages for all students but could not identify a difference between both cohorts (Fig. 1b). We concluded that the results of the summative assessment were independent of the use of the audience response system (ARS) in the respective seminar i.e. that the use of the ARS had no impact on the assessment results.
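The per-student calculation described here can be sketched as follows. This is a minimal illustration, assuming the question counts stated in the text (6 for topic 1, 5 for topic 2); the function names `percent_correct` and `student_percentages` are hypothetical.

```python
# Number of summative assessment questions per topic, as stated in the text.
N_QUESTIONS = {1: 6, 2: 5}


def percent_correct(n_correct: int, topic: int) -> float:
    """Return the percentage of correct answers for one topic."""
    total = N_QUESTIONS[topic]
    if not 0 <= n_correct <= total:
        raise ValueError(f"topic {topic} has {total} questions")
    return 100.0 * n_correct / total


def student_percentages(collective: int, correct_topic1: int, correct_topic2: int):
    """Return (with_ars, without_ars) percent correct for one student.

    Collective 1 was taught topic 1 with the ARS, collective 2 topic 2.
    """
    if collective not in (1, 2):
        raise ValueError("collective must be 1 or 2")
    p1 = percent_correct(correct_topic1, 1)
    p2 = percent_correct(correct_topic2, 2)
    return (p1, p2) if collective == 1 else (p2, p1)


if __name__ == "__main__":
    # A made-up student from collective 1 with 6/6 and 4/5 correct answers.
    print(student_percentages(1, 6, 4))
```

The resulting pairs are the input to the paired comparison of the "with ARS" and "without ARS" conditions.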

Participants’ perception of the use of an audience response system

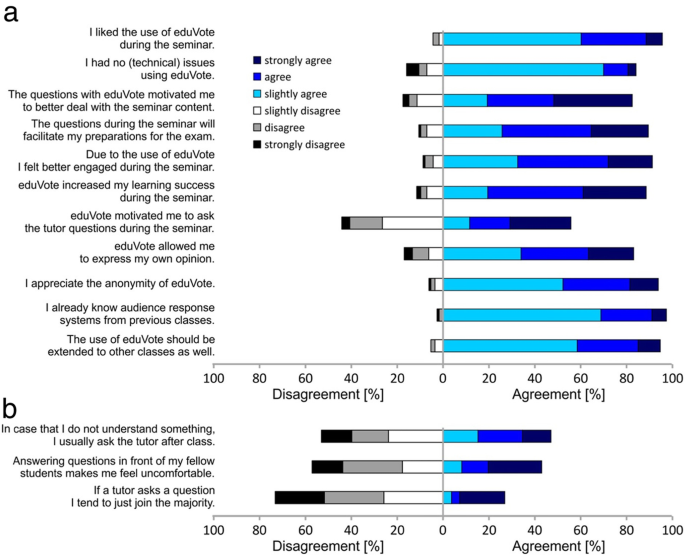

We then evaluated the use of the audience response system using anonymous questionnaires. The students’ opinions were assessed immediately after the respective seminar using a 6-point Likert scale [12] (from 1 “strongly agree” to 6 “strongly disagree”; Fig. 2, Supplementary Table 1). Most students already knew audience response systems like eduVote from previous classes (mean of 1.5 on a 6-point Likert scale ± SEM of 0.1). The students liked the use of eduVote (1.6 ± 0.1) and felt better engaged during the seminar (2.1 ± 0.1). They reported that eduVote motivated them to engage more with the seminar content (2.6 ± 0.1), that it increased their learning success (2.4 ± 0.1) and that the questions with eduVote would facilitate their preparations for the exam (2.2 ± 0.1). They further appreciated that eduVote allowed them to express their own opinion (2.3 ± 0.1) and also appreciated the anonymity of eduVote (1.8 ± 0.1).
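The mean ± SEM values reported for each questionnaire item follow the usual definition, SEM = s / sqrt(n), where s is the sample standard deviation. The sketch below shows how such a per-item summary (most frequent answer, median, mean, SEM) could be computed with the Python standard library; the function name `likert_summary` and the example responses are hypothetical.

```python
import math
import statistics


def likert_summary(responses):
    """Summarise responses on a 6-point Likert scale (1 to 6)."""
    if len(responses) < 2:
        raise ValueError("need at least two responses to compute the SEM")
    for r in responses:
        if r not in (1, 2, 3, 4, 5, 6):
            raise ValueError(f"not a valid 6-point Likert response: {r!r}")
    n = len(responses)
    return {
        "n": n,
        "mode": statistics.mode(responses),      # most frequent answer
        "median": statistics.median(responses),
        "mean": statistics.mean(responses),
        # Standard error of the mean: sample SD divided by sqrt(n).
        "sem": statistics.stdev(responses) / math.sqrt(n),
    }


if __name__ == "__main__":
    # Made-up responses to a single item (not study data).
    summary = likert_summary([1, 1, 2, 2, 3, 1, 2, 4])
    print({k: round(v, 2) for k, v in summary.items()})
```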

Fig. 2 Evaluation results regarding the use of an audience response system. a Immediately after the respective seminar, students were asked about their agreement or disagreement with specific statements regarding the use of the audience response system eduVote using a 6-point Likert scale [12] (from 1 “strongly agree” to 6 “strongly disagree”). Shown is the agreement or disagreement with each statement in % of the answers given (n = 114). Overall, we noticed a high degree of satisfaction with the use of the audience response system. b Assessment of the students’ general perception and handling regarding questions asked by a tutor or to be addressed to a tutor. Shown is the % of agreement or disagreement with each statement

While we observed no major differences between younger and older students, two questions revealed differences between male and female students: While only 7% of male students (1.45 ± 0.1 on a 6-point Likert scale) reported technical difficulties when using eduVote, 24% of female students had technical difficulties (2.11 ± 0.15) (p = 0.017). Interestingly, while 36% (4.07 ± 0.1) of male students reported that they typically join the majority when a teacher asks a question, only 15% of female students (4.62 ± 0.15) do so (p = 0.019).

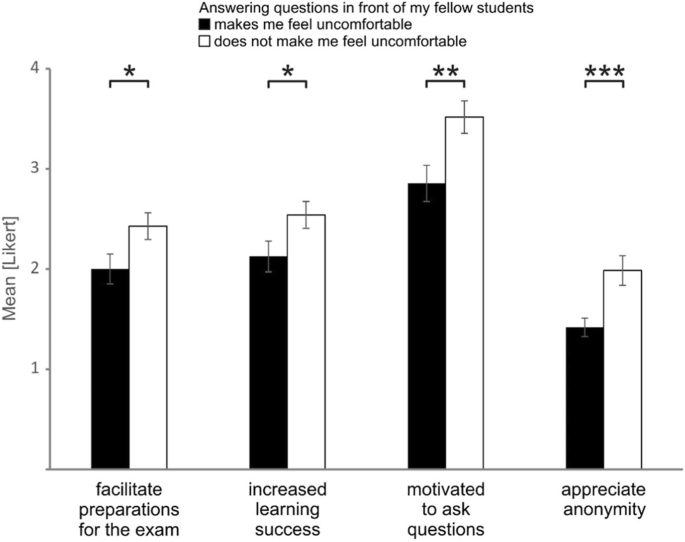

We then asked whether other specific groups of students especially profit from the use of eduVote. We therefore grouped the students by their answers in the questionnaire and tested for specific differences between those groups. We identified that students who feel uncomfortable with answering questions in front of others especially profit from the use of the audience response system. For these students, we noticed a higher level of agreement (2.00 ± 0.15 on a 6-point Likert scale) with the statement that the questions during the seminar will facilitate their preparations for the exam than for other students (2.43 ± 0.13). They also agreed more strongly that eduVote increased their learning success (2.12 ± 0.15 vs. 2.54 ± 0.13) and felt more motivated by eduVote to ask the tutor questions during the seminar (2.85 ± 0.18 vs. 3.51 ± 0.16). As one may expect, students who feel uncomfortable with answering questions in front of others especially appreciated the anonymity of eduVote (1.42 ± 0.09 vs. 1.95 ± 0.15) (Fig. 3).

Fig. 3 Students who indicated that they feel uncomfortable when answering questions in front of their fellow students especially profited from the use of the audience response system. Compared are the levels of agreement (mean Likert scale) of those students who indicated that “Answering questions in front of my fellow students makes me feel uncomfortable” (Likert scale 1–3, black bars, n = 48) with the answers of those students who did not agree to this statement (Likert scale 4–6, white bars, n = 64). Students who feel uncomfortable with answering questions in front of others showed a higher level of agreement with the statements “The questions during the seminar will facilitate my preparations for the exam.” (facilitate preparations for the exam, p = 0.034), “eduVote increased my learning success during the seminar.” (increased learning success, p = 0.044), “eduVote motivated me to ask the tutor questions during the seminar.” (motivated to ask questions, p = 0.008), and “I appreciate the anonymity of eduVote.” (appreciate anonymity, p = 0.002). *, p < 0.05; **, p < 0.01; ***, p < 0.005
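The grouping used for this comparison (Likert 1–3 counted as agreement, 4–6 as disagreement) can be sketched as below. The item keys and function names are hypothetical, respondents with a missing answer are skipped as an assumption, and the statistical test behind the reported p-values is not named in the text, so the sketch stops at the group means.

```python
import statistics


def split_by_agreement(respondents, split_item, target_item):
    """Split target_item answers by agreement (1-3) or disagreement (4-6)
    with split_item. Respondents missing either answer are skipped."""
    agree, disagree = [], []
    for r in respondents:
        split_value = r.get(split_item)
        target_value = r.get(target_item)
        if split_value is None or target_value is None:
            continue
        if split_value <= 3:
            agree.append(target_value)
        else:
            disagree.append(target_value)
    return agree, disagree


def mean_difference(agree, disagree):
    """Mean Likert score of the disagreeing group minus the agreeing group.

    On a scale where 1 means 'strongly agree', a positive value means the
    agreeing group endorsed the target statement more strongly.
    """
    return statistics.mean(disagree) - statistics.mean(agree)


if __name__ == "__main__":
    # Made-up questionnaire records (not study data).
    demo = [
        {"uncomfortable": 1, "anonymity": 1},
        {"uncomfortable": 3, "anonymity": 2},
        {"uncomfortable": 4, "anonymity": 3},
        {"uncomfortable": 6, "anonymity": 1},
        {"uncomfortable": None, "anonymity": 2},
    ]
    a, d = split_by_agreement(demo, "uncomfortable", "anonymity")
    print(a, d, mean_difference(a, d))
```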

Most students (56%) reported that eduVote would motivate them to ask the tutor questions during the seminar. While we could not track who asked specific questions, we recorded the total number of questions asked by the students in each seminar. We wanted to know whether the students indeed asked more questions during the seminars when eduVote was used. Surprisingly, the opposite was true: we observed a tendency towards more questions being asked by the students when no ARS was used (Fig. 1c).

Taken together, while we could not confirm in our controlled crossover study that the use of the audience response system has an impact on the assessment results of students, we observed a high level of satisfaction with the use of eduVote and identified that students who hesitate to answer questions especially profit from the use of an anonymous audience response system like eduVote.

Discussion

Our memory is aimed at making decisions and is prepared to forget [13]. Furthermore, without repetition, just 60% of newly learned material can be recalled after 20 min, and after 1 h more than half of it is lost [14]. Only through repetition and/or processing is information transferred from short-term to long-term memory [15]. Additionally, students attend many lectures passively without reaching an active or even interactive state of learning [16]. However, active learning has a high impact on learning success and performance in assessments [17]. Beyond that, the attention of learners strongly declines after 20–25 min of classical teacher-centred lectures [18], so that a change, such as questions using an audience response system, is required in order to raise attention again.

Moreover, audience response systems serve another important purpose: The questions using the audience response system and the following discussion of the wrong and correct answers provide the students important and prompt formative feedback during the learning process and about their learning progress [19,20,21] which especially enhances the positive effect of multiple-choice testing [22]. It is difficult to accurately measure the effectiveness of feedback [23]. However, one can at least state that feedback has overall a medium-high effect on student learning and is especially effective for cognitive outcome measures [24] as it activates both fast and slow learning and memory processes in the brain [25].

For these reasons, we integrated an audience response system into our seminar as audience response systems aim at knowledge required for decision making, repeat the contents taught, establish interactivity, activate students, and provide individualized formative feedback.

It was therefore fair to assume that audience response systems should also impact the results of assessments. Previous studies on the impact of audience response systems on assessments showed contradictory results [6, 7, 9]. One needs to consider that it is difficult if not impossible to form appropriate control groups if the impact of audience response systems is assessed in plenary lectures.

Here, we conducted a controlled educational research study in a seminar setting which allowed a direct comparison between topics taught with an audience response system and topics without this additional interactive part. Furthermore, the dummy coding procedure allowed us to reach a considerably high number of study participants compared with other studies [5,6,7, 9].

Students answered interactive questions during their classes using the audience response system eduVote. Control groups were instructed without this interactive part. We analysed the results of the final assessment of students for an impact of the use of the audience response system. However, we could not demonstrate a positive long-term impact of the audience response system on learning as measured by the summative assessment.

It is possible that our seminar “Human Genetics” was already interactive enough and that the additional activation using the audience response system had no further effect as the participants already have reached an interactive state of learning [16]. One major bias may have been an overlearning of students in preparation to a summative assessment [26]. This effect may have covered the effect of the teaching methods on the results of the summative assessment.

Due to the nature of this educational research study, students could not be blinded to the fact that they were exposed to the audience response system. However, as different groups were exposed to the audience response system for different topics, students were blinded when they served as control group. For organizational reasons, we could not randomly assign individual students to study arms as the groups were precomposed. However, we randomly assigned each group to the study arms. In order to exclude bias by different teachers, tutors were randomly assigned to the different seminar and control groups. In order to exclude any dropouts, we carefully tracked whether students participated at the right time, in the right group and in the right room.

Strengths of our study include the large sample size with clear intervention and control cohorts consisting of multiple independent groups. Importantly, our study was not conducted in a laboratory setting with e.g. pre-recorded lectures or artificial questions but during regular classes with real students and tutors. The tutors were highly qualified and experienced. Our results were unaffected by incentives, and we still noted a high motivation of the students to participate in our study and to share their opinions and exam results with us. Furthermore, eduVote turned out to be an easy-to-use and reliable audience response system, and our intervention could easily be adapted to other classes and courses.

After the class, we evaluated the use of eduVote. In our questionnaire, the students gave very positive feedback regarding the use of the audience response system. The students further stressed that they especially appreciated the anonymity of the audience response system and that they did not feel forced to join the majority.

Interestingly, we observed that we could specifically reach and activate students who feel uncomfortable with answering questions in front of others by changing the traditional way of asking questions through the use of an audience response system. While others proposed such an effect of audience response systems before [27], we here provide evidence for this assumption.

Our results are in line with previous results indicating that the main advantage of audience response systems would be more the motivation of students and the generation of a stimulating learning environment than the improvement of assessment grades [6].

Conclusion

Audience response systems are important tools to activate and engage students during classes. However, in our controlled education research study we could not demonstrate a long-term effect of the audience response system on assessment results. Interestingly, we observed that the audience response system specifically reaches and activates students who feel uncomfortable with answering questions in front of others which may not be reachable without such an anonymous tool.

Availability of data and materials

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

ARS: Audience response system

References

1. Statista. https://de.statista.com/statistik/daten/studie/459963/umfrage/anteil-der-smartphone-nutzer-in-deutschland-nach-altersgruppe/. Accessed 26 Feb 2020.

2. Feiten L, Weber K, Becker B. SMILE: Smartphones in der Lehre – ein Rück- und Überblick. In: INFORMATIK 2013, Gesellschaft für Informatik (GI), Matthias Horbach, P-220, p. 255–69.

3. Weber K, Becker B. Formative Evaluation des mobilen Classroom-Response-Systems SMILE. In: E-Learning zwischen Vision und Alltag, Claudia Bremer, Detlef Krömker (Hrsg.), Medien in der Wissenschaft, Band 64, Waxmann, Münster / New York / München / Berlin, 2013; p. 277–89.

4. Eichhorn M. Elektronische Abstimmungssysteme in der Hochschullehre–Empirische Untersuchung zu Erfahrungen mit dem Audience Response System eduVote. In: Lucke U, et al., editors. Die 14. E-learning Fachtagung Informatik, lecture notes in informatics (LNI). Bonn: Gesellschaft für Informatik; 2016. p. 15–26.

5. Guarascio AJ, Nemecek BD, Zimmerman DE. Evaluation of students’ perceptions of the Socrative application versus a traditional student response system and its impact on classroom engagement. Curr Pharm Teach Learn. 2017;9(5):808–12.

6. Barbour ME. Electronic voting in dental materials education: the impact on students’ attitudes and exam performance. J Dent Educ. 2008;72(9):1042–7.

7. Datta R, Datta K, Venkatesh MD. Evaluation of interactive teaching for undergraduate medical students using a classroom interactive response system in India. Med J Armed Forces India. 2015;71(3):239–45.

8. Kay RH, LeSage A. Examining the benefits and challenges of using audience response systems: a review of the literature. Comput Educ. 2009;53(3):819–27.

9. Mains TE, Cofrancesco J Jr, Milner SM, Shah NG, Goldberg H. Do questions help? The impact of audience response systems on medical student learning: a randomised controlled trial. Postgrad Med J. 2015;91(1077):361–7.

10. Sambandan G, Turcu-Stiolica A. Clinical Trials. In: Clinical Pharmacy Education, Practice and Research; 2019. p. 323–44.

11. Schmidt T, Buchert R, Mau-Holzmann U. Integration moderner Lehrmethoden in den Humangenetik-Unterricht in Tübingen. medgen. 2019;31:313–319.

12. Likert R. A technique for the measurement of attitudes. Arch Psychol. 1932;22:140–55.

13. Richards BA, Frankland PW. The persistence and transience of memory. Neuron. 2017;94(6):1071–84.

14. Ebbinghaus H. Über das Gedächtnis: Untersuchungen zur experimentellen Psychologie. Leipzig: Duncker&Humblot; 1885.

15. Craik FIM, Lockhart RS. Levels of processing: a framework for memory research. J Verbal Learning Verbal Behav. 1972;11:671–84.

16. Chi MT, Wylie R. The ICAP framework: linking cognitive engagement to active learning outcomes. Educ Psychol. 2014;49(4):219–43.

17. Freeman S, Eddy SL, McDonough M, Smith MK, Okoroafor N, Jordt H, Wenderoth MP. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci U S A. 2014;111(23):8410–5.

18. Kadmon M, Strittmatter-Haubold V, Greifeneder R, Ehlail F, Lammerding-Köppel M. The sandwich principle--introduction to learner-centred teaching/learning methods in medicine. Z Evid Fortbild Qual Gesundhwes. 2008;102(10):628–33.

19. Rust C. The impact of assessment on student learning. How can the research literature practically help to inform the development of departmental assessment strategies and learner-centred assessment practices? Act Learn High Educ. 2002;3(2):145–58.

20. Rushton A. Formative assessment: a key to deep learning? Med Teach. 2005;27(6):509–13.

21. Norman G, Neville A, Blake JM, Mueller B. Assessment steers learning down the right road: impact of progress testing on licensing examination performance. Med Teach. 2010;32(6):496–9.

22. Butler AC, Roediger HL 3rd. Feedback enhances the positive effects and reduces the negative effects of multiple-choice testing. Mem Cogn. 2008;36(3):604–16.

23. Price M, Handley K, Millar J, O’Donovan B. Feedback: all that effort, but what is the effect? Assess Eval High Educ. 2010;35:277–89.

24. Wisniewski B, Zierer K, Hattie J. The power of feedback revisited: a meta-analysis of educational feedback research. Front Psychol. 2020;10:3087.

25. Ernst B, Steinhauser M. Feedback-related brain activity predicts learning from feedback in multiple-choice testing. Cogn Affect Behav Neurosci. 2012;12(2):323–36.

26. Driskell JE, Willis RP, Copper C. Effect of overlearning on retention. J Appl Psychol. 1992;77(5):615–22.

27. Hassanin H, Essa K, El-Sayed MA, Attallah MM. Enhancement of student learning and feedback of larger group engineering lectures using audience response systems. J Materials Educ. 2016;3856:175–90.

Acknowledgments

The authors thank the students contributing to this study and the Medical Faculty of Tuebingen for supporting this study. The first author thanks the Medical Faculty of Tuebingen for supporting his studies of Medical education. We further acknowledge support by the Open Access Publishing Fund of the Eberhard Karls University of Tuebingen.

Funding

This study was supported by the Medical Faculty of Tuebingen within the PROFIL program. As part of its teaching quality initiative, the Medical Faculty of Tuebingen uses the PROFIL and PROFILplus programs to support the continuous development of teaching programs, including the adaptation of existing curricula and innovations in teaching and learning. The funder had no role in the design of the study, collection, analysis, and interpretation of data, or preparation of the manuscript.

Author information

Contributions

TS and KPS designed and supervised the study. TS and UAMH acquired funding to support the study. TS wrote the manuscript. UAMH and AG generated questions. AG, AR, OR, KGH, RF, MS, TH and UAMH conducted the respective seminars. TS, JS and MA collected and analysed data. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was reviewed and approved by the local ethics committee of the University Hospital Tübingen (Project number 222/2019BO2). Personalized information (exam results) was included in this study only from those students who gave their written consent to participate. The analysis of the additional questionnaires did not require ethics approval as this part of the study was completely anonymous. It was not possible to track the identity of the person who completed a respective questionnaire after the questionnaire was handed in.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1: Supplementary Table 1.

Detailed evaluation results regarding the use of eduVote. Students evaluated the use of the audience response system eduVote using a 6-point Likert scale (from 1 “strongly agree” to 6 “strongly disagree”). Shown are details for individual items/questions: The most frequent answer, the median, the mean and the standard error of the mean (SEM) are listed (n = 114).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Schmidt, T., Gazou, A., Rieß, A. et al. The impact of an audience response system on a summative assessment, a controlled field study. BMC Med Educ 20, 218 (2020). https://doi.org/10.1186/s12909-020-02130-4

DOI: https://doi.org/10.1186/s12909-020-02130-4