Abstract

Background

Occupational safety and health (OSH) surveillance systems track work-related fatalities, injuries and illnesses as well as the presence of workplace hazards and exposures to inform prevention efforts. Periodic evaluation is critical to improving these systems so that they meet the demand for more timely, complete, accurate and efficient data processing and analysis. Despite the existence of general guidance for public health surveillance evaluation, no tailored guidance exists to date for evaluating OSH surveillance systems. This study used the Delphi technique to reach consensus among experts in the United States on surveillance elements (components, attributes and measures) to inform the development of a tailored evaluation framework.

Methods

A Delphi study with three survey rounds invited an expert panel to rate and comment on potential OSH surveillance evaluation framework elements, producing an optimal list of elements through panel consensus. Additionally, experts reviewed the OSH surveillance systems they worked with and answered questions regarding the development of an evaluation framework. Descriptive statistics of the ratings were compiled for the Delphi process. Major themes in the experts' comments were identified using content analysis to provide context for their choices.

Results

Fifty-four potential experts across the United States were contacted to participate in the Delphi study. Ten experts participated in the first survey round, and eight and seven experts continued in the second and third rounds, respectively. A total of 64 surveillance components, 31 attributes, and 116 example measures were selected for the final list through panel consensus, with 134 (63.5%) reaching high consensus. Major themes regarding current OSH surveillance focused on resources and feasibility, data collection, flexibility, and the inter-relatedness among elements.

Conclusions

A Delphi process identified tailored OSH surveillance elements and major themes regarding OSH surveillance. The identified elements can serve as a preliminary guide for evaluating OSH surveillance systems. A more detailed evaluation framework is under development to incorporate these elements into a standard yet flexible approach to OSH surveillance evaluation.


Background

Work-related hazards and exposures affect human health and well-being globally as well as in the United States (US). According to the Bureau of Labor Statistics, there were approximately 2.8 million nonfatal occupational injuries and illnesses and more than 5000 fatal occupational injuries in the US in 2019, corresponding to 2.8 cases per 100 full-time equivalent workers (FTEs) and 3.5 fatalities per 100,000 FTEs, respectively [1]. Established occupational safety and health (OSH) surveillance systems systematically collect important data on work-related fatalities, injuries and illnesses, as well as on the presence of workplace hazards and exposures, to inform prevention efforts. In most countries, including the US, OSH surveillance is largely undertaken by national and state government agencies that have the legal authority to require disease and injury reporting and access to various data sources containing OSH information [2, 3]. Formalized in the US in 1970, OSH surveillance has undergone continuous development at both the national and state levels. Major national systems for work-related injuries, illnesses, and deaths have been implemented, including the Bureau of Labor Statistics (BLS) national Survey of Occupational Injuries and Illnesses (SOII) and the Census of Fatal Occupational Injuries (CFOI) [4]. These are supplemented by other programs, such as the national Adult Blood Lead Epidemiology and Surveillance System (ABLES) and the National Electronic Injury Surveillance System—Occupational Supplement (NEISS-Work) [5]. Recognizing the pivotal role of states in OSH surveillance, the National Institute for Occupational Safety and Health (NIOSH) has been collaborating with and funding state agencies for OSH surveillance since the 1980s. In collaboration with the Council of State and Territorial Epidemiologists (CSTE), occupational health indicators (OHIs) surveillance has been proposed as the core activity for state-based surveillance since 2000 [6]. As of 2021, 26 states have established state-based OSH surveillance systems to conduct OHIs surveillance and other expanded surveillance, following guidelines on minimum and comprehensive activities recommended by NIOSH [7].
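The rates above normalize case counts by hours worked. The sketch below assumes the standard BLS convention that one full-time equivalent (FTE) worker works 2,000 hours per year (40 hours per week for 50 weeks). Under that convention, 100 FTEs correspond to 200,000 hours and 100,000 FTEs correspond to 200,000,000 hours. The function name `rate_per_ftes` and the workforce figures in the usage lines are hypothetical. They were chosen only to produce rates of the same magnitude as those quoted and are not BLS data.

```python
# Assumption: BLS convention of 2,000 hours per full-time equivalent (FTE)
# worker per year (40 hours/week x 50 weeks/year).
HOURS_PER_FTE = 40 * 50


def rate_per_ftes(cases: int, hours_worked: int, per_ftes: int) -> float:
    """Return cases per `per_ftes` full-time equivalent workers.

    per_ftes=100 corresponds to the 200,000-hour base used for nonfatal cases;
    per_ftes=100_000 corresponds to the 200,000,000-hour base used for fatalities.
    """
    return cases * per_ftes * HOURS_PER_FTE / hours_worked


# Hypothetical workforce of 1,000 FTEs (2,000,000 hours) with 28 nonfatal cases
print(rate_per_ftes(28, 2_000_000, 100))  # 2.8 cases per 100 FTEs
# Hypothetical workforce of 1,000,000 FTEs (2,000,000,000 hours) with 35 deaths
print(rate_per_ftes(35, 2_000_000_000, 100_000))  # 3.5 fatalities per 100,000 FTEs
```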

Despite tremendous improvement in OSH surveillance methodologies and techniques in recent decades, there have been calls for more accurate and complete data. There have also been calls for more timely and efficient processing, from data intake to the dissemination of interpretable health information, to guide better prevention and intervention practices [8,9,10]. Under-reporting and under-estimation of occupational injuries and illnesses have continued to be a significant concern regarding OSH surveillance data reliability [11,12,13]. Studies have shown under-estimation of as much as 69% in national statistics of nonfatal work-related injuries and illnesses [14,15,16]. Gaps and challenges also exist in other aspects of OSH surveillance, including:

- multi-source surveillance and data integration;
- expanding surveillance from lagging indicators (e.g., injuries and diseases) to leading indicators (e.g., workplace exposures and hazards, safety behaviors);
- maintaining high confidentiality and privacy standards in data reporting and sharing;
- ongoing challenges related to funding, organizational capacity and resources [8, 9, 17,18,19].

Meanwhile, emerging information technologies provide new opportunities for improving health surveillance but also impose requirements for system infrastructure, staff expertise, and coordination among systems [20, 21].

Evaluation, defined as the systematic collection of information about the activities, characteristics, and outcomes of a program, is critical to the implementation and continuous improvement of a surveillance system [22, 23]. Periodic evaluation identifies gaps and opportunities for improvement. It also helps to ensure that problems are being monitored efficiently and effectively and that the system is of high quality and useful [24]. However, surveillance evaluation can be challenging due to the lack of consistent operational definitions and of detailed guidance for different types of health surveillance. As a result, evaluations can be incomplete, not comparable, and of limited use in guiding system improvement. Evaluating OSH surveillance systems can be particularly challenging, and no tailored guidelines have been developed for this type of surveillance. The development of surveillance systems for occupational safety and health conditions in the US has historically lagged behind that for other public health conditions, and so has its evaluation practice. There is a large body of literature on evaluations of infectious disease surveillance and other types of public health surveillance, but few published evaluations of OSH surveillance [25]. As a result, there is limited reference for this specific type of evaluation. A tailored evaluation framework that takes into account the characteristics of OSH surveillance systems can guide more effective and consistent OSH surveillance evaluation practice. It can thus contribute to the continuous development and improvement of OSH surveillance.

Existing guidelines and frameworks

The US Centers for Disease Control and Prevention (CDC) published Guidelines for evaluating surveillance systems in 1988 [26], on which the Updated guidelines for evaluating public health surveillance systems, released in 2001, were based [24]. These two guidelines are probably the best known and the de facto authoritative guidelines in public health surveillance evaluation. They established an evaluation method that uses attributes to describe and assess the characteristics and performance of a surveillance system. Such attributes include system simplicity, flexibility, acceptability, data sensitivity and quality. This method has been widely adopted in existing surveillance evaluations [27,28,29,30,31,32,33,34,35,36]. It has also provided the basis for many other surveillance evaluation guidelines and frameworks [37,38,39,40,41,42,43]. However, the CDC guidelines provide only generic recommendations and are insufficient for guiding the evaluation of different types of surveillance systems [36, 44,45,46].

A published systematic literature review reported 15 guiding approaches for public health and animal health surveillance [46]. As of 2021, we had identified a total of 34 published guidelines and frameworks. The search methods are described in the Methods section below, and the major guidelines and frameworks, with brief introductions, are listed in Additional File 1. Nearly 90% of them have been published since 2000, indicating increased interest in developing surveillance evaluation guidelines. However, most of these guidelines and frameworks are intended to be universally applicable or are geared towards communicable disease and animal health surveillance. We found only one existing guideline focusing on occupational safety and health surveillance, which was developed for national registries of occupational diseases in European Union countries [47].

Further, existing guidelines and frameworks do not provide a comprehensive list of attributes and evaluation questions, nor practical guidance on selecting, defining and assessing these attributes [46]. We have reported elsewhere the results and challenges of an OSH surveillance evaluation in Oregon [36]. In that evaluation, we found it difficult to locate consistent definitions of attributes and evaluation questions in existing references that were appropriate for the system under evaluation. To address the lack of tailored guidelines for evaluating OSH surveillance in the US, and thus help to improve evaluation practice, we propose a guiding framework model. The model incorporates components (i.e., the main functions and activities of a system) relevant to OSH surveillance systems in the US, as well as a comprehensive list of attributes corresponding to these components. In addition, a list of accessible and appropriate measures adds to the practicality of the framework. This paper describes the first step towards this end: a Delphi study designed and conducted to solicit opinions and suggestions from a panel of experts to: 1) develop a comprehensive list of framework elements, including surveillance components, attributes and example measures; and 2) pilot the selected elements with current OSH surveillance systems in the US. We expect that the findings of this study will improve understanding of OSH surveillance and inform the development of a tailored evaluation framework.

Methods

As a consensus method, the Delphi technique is commonly used in research and guideline development. A modified Delphi study with three internet-based survey rounds and a background survey was designed following recommendations from common Delphi theoretical frameworks and practices in healthcare and related fields [48,49,50,51,52,53,54,55,56,57,58]. Experts across the US were invited to rate and comment on framework elements and to review their respective OSH surveillance systems.

The study received ethics review approval from the Institutional Review Board at Oregon State University (Review#: 8797). All participants gave informed consent. The study's experimental protocol was in accordance with the guidelines and standards set forth in the Common Rule (45 CFR 46) by the US Department of Health and Human Services.

Framework elements

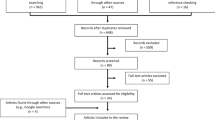

Common surveillance components, attributes, and example measures were compiled from a thorough literature review completed by January 2019. A combination of three methods was used to systematically search for publications on guiding approaches for public health surveillance evaluation:

1) searching three search engines/databases (PubMed, Web of Science and Google Scholar) using combinations of the following keywords and wildcards (*): surveillance; and evaluat* OR assess*; and occupational OR "work related" OR workplace OR worker (the set of occupation-related keywords was not used for Google Scholar);
2) in Google Scholar, searching all publications that cited the two CDC guidelines, Guidelines for evaluating surveillance systems (1988) and Updated guidelines for evaluating public health surveillance systems (2001); and
3) checking the references of acquired articles.

The literature search yielded a total of 2,685 articles and documents, from which we identified 34 publications aiming to provide guidelines and frameworks for evaluating public health surveillance systems.
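The keyword groups in method 1) can be combined mechanically into a Boolean query. The sketch below is only an illustration: `build_query` and its helpers are hypothetical, and they produce a generic `AND`/`OR` string. PubMed, Web of Science and Google Scholar each accept a different syntax, so the search strings actually used in the review may have looked different.

```python
from __future__ import annotations

SURVEILLANCE_TERMS = ["surveillance"]
EVALUATION_TERMS = ["evaluat*", "assess*"]
OCCUPATION_TERMS = ["occupational", '"work related"', "workplace", "worker"]


def or_group(terms: list[str]) -> str:
    """Join synonyms with OR, adding parentheses when there is more than one."""
    joined = " OR ".join(terms)
    return f"({joined})" if len(terms) > 1 else joined


def build_query(include_occupation_terms: bool = True) -> str:
    """AND together the keyword groups; the occupation group is optional."""
    groups = [SURVEILLANCE_TERMS, EVALUATION_TERMS]
    if include_occupation_terms:
        groups.append(OCCUPATION_TERMS)
    return " AND ".join(or_group(g) for g in groups)


print(build_query())       # PubMed / Web of Science variant
print(build_query(False))  # Google Scholar variant: occupation terms omitted
```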

We thoroughly reviewed the 34 publications for their merit in guiding OSH surveillance evaluation, based on our subject matter knowledge and experience. This review yielded 21 guidelines and frameworks, which are listed in Additional File 1. During the review, we also abstracted surveillance components and attributes and their definitions, as well as example evaluation measures deemed relevant to OSH surveillance. The abstracted elements were further refined to unify terms with similar connotations. They were then grouped into surveillance components and sub-components, with attributes and measures matched to specific components.

The finalized list contained a total of 59 surveillance components (including 47 sub-components), 32 attributes and 133 example measures. These elements were organized into a logic model that was provided to the expert panel to review and amend (see Fig. 1 for the logic model showing the components and attributes selected through the Delphi process). For example, "Infrastructure" was proposed as a component under "Inputs" in the initial logic model, with four sub-components: "Legislation and regulations", "Funding mechanism", "Organizational structure", and "Resources". Three attributes were proposed to assess the "Infrastructure" component: "Legislative support", "Compliance" and "Sustainability". Multiple measures were suggested for each attribute. Examples include "Are there mandatory requirements on the establishment of the system?", "Is the system in compliance with all legal and regulatory requirements?", and "Is funding secure for short-term and long-term future?".
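The hierarchy described here maps naturally onto a small nested data structure. Components have sub-components and are assessed by attributes, and each attribute is in turn operationalized by example measures. The sketch below encodes the "Infrastructure" example. The class names are hypothetical. Pairing each quoted measure with a specific attribute is our reading of the example, not a mapping stated in the text.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Attribute:
    """An attribute used to assess a component, with its example measures."""
    name: str
    measures: list[str] = field(default_factory=list)


@dataclass
class Component:
    """A surveillance component placed in one column of the logic model."""
    name: str
    stage: str  # logic model column, e.g. "Inputs"
    sub_components: list[str] = field(default_factory=list)
    attributes: list[Attribute] = field(default_factory=list)

    def measure_count(self) -> int:
        """Total number of example measures across this component's attributes."""
        return sum(len(a.measures) for a in self.attributes)


infrastructure = Component(
    name="Infrastructure",
    stage="Inputs",
    sub_components=[
        "Legislation and regulations",
        "Funding mechanism",
        "Organizational structure",
        "Resources",
    ],
    attributes=[
        # Assumed attribute-measure pairing (illustrative only)
        Attribute("Legislative support",
                  ["Are there mandatory requirements on the establishment of the system?"]),
        Attribute("Compliance",
                  ["Is the system in compliance with all legal and regulatory requirements?"]),
        Attribute("Sustainability",
                  ["Is funding secure for short-term and long-term future?"]),
    ],
)

print(infrastructure.name, len(infrastructure.sub_components), infrastructure.measure_count())
```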

Delphi panel composition

The panel included experts with experience in at least one of two main specialty areas: 1) the operation of OSH surveillance systems in the US; and 2) the evaluation of OSH surveillance systems. Potential participants were identified through multiple channels: 1) websites of national and state-level OSH surveillance systems in the US; 2) authors of peer-reviewed publications on OSH surveillance evaluation; 3) recommendations from professionals in OSH surveillance; and 4) participants in the CSTE Occupational Health meeting in December 2018.

Recruitment emails were sent to 54 identified individuals, drawn from 27 national and state-level OSH surveillance systems in the US as well as from authors and researchers in OSH surveillance. The emails sought their participation and/or their recommendation of possible participants. Considering the scope of the study and the resources available, we aimed for an expert panel of 10–20 participants. The literature suggests that 5–10 experts are sufficient for a heterogeneous panel, while reasonable results can be obtained with 10–15 experts in a homogeneous group [59, 60]. A total of 14 people expressed interest and were invited to the first Delphi round.

Six criteria (Table 1) were evaluated for each potential panelist. A panelist had to satisfy at least three criteria to qualify as an “expert”.

Survey rounds

Three rounds of internet-based surveys were administered from March 2019 to August 2019. In each round, an invitation email was sent to panelists who had completed the previous round, followed by at least two reminder emails. Panelists completed each round in 2 to 4 weeks. The first two rounds were to review 1) surveillance components and attributes, and 2) example measures, respectively. Panelists rated these framework elements on a 5-point Likert scale based on their relevance to OSH surveillance in the US and its evaluation, with 1 indicating the least relevant and 5 the most relevant. They also provided justifications, suggested revisions, additional elements, and other relevant thoughts. Statistics of the ratings and qualitative summaries for each element along with the panelist’s own rating in the first review were sent back to each panelist for a second review in the next round, where the panelist had the opportunity to reconsider his/her ratings for elements that did not have a high panel consensus. In the third round, the panelists also gave a brief review of the OSH surveillance systems they work with and answered questions regarding their system’s operation and evaluation, including strengths and weaknesses. Using selected attributes from the previous two rounds, the panelists scored their system’s performance on a scale from 0 (worst performance) to 10 (best performance) (based upon their best understanding of the systems without collecting actual evaluation evidence). Questions regarding the development of the framework were included in each round. A background survey to collect panelists’ educational and professional experience was completed between the first and the second rounds.
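The feedback step between the first and second reviews can be sketched as follows. This is a hypothetical illustration, not the study's instrument. `build_feedback` is an invented helper, and ratings are assumed to be stored as `{element: {panelist_id: rating}}`. The set of high-consensus elements is assumed to be computed beforehand. The qualitative summaries that accompanied the statistics are not modelled.

```python
from statistics import mean, multimode


def build_feedback(ratings_by_element, panelist, high_consensus):
    """Collect second-review feedback for one panelist.

    ratings_by_element: {element: {panelist_id: first-review rating (1-5)}}
    high_consensus: elements already at high consensus, which are not re-rated
    """
    report = {}
    for element, ratings in ratings_by_element.items():
        # Skip settled elements and elements this panelist did not rate
        if element in high_consensus or panelist not in ratings:
            continue
        values = list(ratings.values())
        report[element] = {
            "panel_mean": round(mean(values), 2),
            "panel_modes": multimode(values),
            "pct_rated_3_plus": round(100 * sum(v >= 3 for v in values) / len(values), 1),
            "own_rating": ratings[panelist],  # shown alongside the panel statistics
        }
    return report


# Hypothetical first-review ratings from three panelists
ratings = {
    "Timeliness": {"p1": 4, "p2": 2, "p3": 3},
    "Compliance": {"p1": 5, "p2": 5, "p3": 4},
}
print(build_feedback(ratings, "p2", high_consensus={"Compliance"}))
```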

Data analysis and selection of elements

Mean and mode (the most frequent rating) are common statistics used in the Delphi process to measure the central tendency of panelists' ratings, or the "average group estimate". Other statistics measure the divergence of group opinions, or the amount of panel disagreement [57, 61, 62]. These include the difference between the highest and lowest ratings, the SD (standard deviation of ratings), and the percentage of experts giving a certain rating or selecting a certain category. For each element, these quantitative statistics were calculated to reflect panel opinions. Panelists' comments were taken into consideration when editing the element.
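The per-element statistics named above can be computed with Python's standard `statistics` module. The study computed its statistics in R, so this is a minimal sketch and not the authors' code. It assumes the sample standard deviation (n − 1 denominator, as in R's `sd`) and reports all modes when several ratings tie.

```python
from statistics import mean, multimode, stdev


def panel_summary(ratings):
    """Central tendency and divergence statistics for one element's ratings."""
    return {
        "mean": mean(ratings),                          # average group estimate
        "modes": multimode(ratings),                    # most frequent rating(s)
        "difference": max(ratings) - min(ratings),      # highest minus lowest rating
        "sd": stdev(ratings),                           # sample SD (n - 1 denominator)
        "pct_rated_3_plus": 100 * sum(r >= 3 for r in ratings) / len(ratings),
    }


# Hypothetical 1-5 Likert ratings from five panelists for one element
print(panel_summary([5, 4, 4, 3, 5]))
```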

The following predetermined consensus criteria selected elements into three groups:

1) High consensus: mean rating and mode ≥ 4, 80% or more of panelists rated 3 or higher, difference of panel ratings ≤ 2 (e.g., range from 3 to 5), and no significant edits based on panelists' comments;

2) Low consensus/Selected for further confirmation: mean rating and mode ≥ 3 and < 4, 60% to 80% of panelists rated 3 or higher;

3) Dropped: mean rating < 3, or mode < 3, or less than 60% of panelists rated the element 3 or higher.
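The three-way rule can be written as a single function. This sketch makes several assumptions:

- `classify_element` is a hypothetical name.
- When several ratings tie as most frequent, the lowest is used as the mode. This is a conservative choice that the text does not specify.
- Whether an element needed "significant edits" is a qualitative judgment, passed in as a flag.
- Elements that are not dropped but miss any high-consensus threshold are treated as low consensus. An example is a mean ≥ 4 with a difference of 3. The written criteria leave this case open.

```python
from statistics import mean, multimode


def classify_element(ratings, significant_edits=False):
    """Assign one element's 1-5 Likert ratings to a consensus group."""
    avg = mean(ratings)
    # Assumption: if several ratings tie as most frequent, use the lowest (conservative)
    mode = min(multimode(ratings))
    pct_3_plus = 100 * sum(r >= 3 for r in ratings) / len(ratings)
    difference = max(ratings) - min(ratings)

    if avg < 3 or mode < 3 or pct_3_plus < 60:
        return "dropped"
    if (avg >= 4 and mode >= 4 and pct_3_plus >= 80
            and difference <= 2 and not significant_edits):
        return "high"
    # Assumption: everything else that is not dropped goes to further confirmation
    return "low"


print(classify_element([5, 5, 4, 4, 5, 4, 3, 5]))  # high
print(classify_element([4, 3, 3, 4, 2, 3, 5, 4]))  # low
print(classify_element([2, 2, 3, 1, 4, 2, 3]))     # dropped
```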

All other questions were analyzed using descriptive statistics including mean and percentage. Content analysis was performed for all comments on the elements and responses to open-ended questions in each round to identify major themes regarding the current status of OSH surveillance systems and their evaluation. Data were initially processed using Microsoft Excel. Quantitative analyses were conducted using R (version 3.6.1). Qualitative analysis was conducted using NVivo 12.

Results

Delphi panel participation and composition

Of the 14 people who expressed interest, 10 completed the first round survey and were included in the Delphi panel. Eight of the ten panelists completed the second round and seven completed the third round. The initial 10 panelists were geographically distributed across the US, representing 12 states across all five US regions. Of the 10 participants, seven worked for state health departments and three were from an academic setting. The panelists' experience working with OSH surveillance systems in the US ranged from 2 to 5 years, with a mean of 4.3 years. Eight of the 10 panelists had experience with OSH surveillance evaluation. All panelists held a master's degree or higher and had peer-reviewed publications and/or conference presentations relevant to OSH surveillance. All panel members qualified as experts based on the preset criteria (Table 1).

Consensus and opinion convergence

The number of elements selected and the consensus statistics from the Delphi rounds are presented in Table 2. The process resulted in a final list of 64 components (including 50 sub-components), 31 attributes, and 116 example measures, with 134 (63.5%) reaching high consensus. In general, components and attributes received higher mean ratings and modes, as well as smaller differences and SDs, than example measures. Compared to elements with low consensus, elements that received high consensus tended to have much higher means and modes and much smaller differences and SDs. More than 98% of panel experts rated these high-consensus elements ≥ 3.
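For reference, the high-consensus share is computed over all 211 selected elements:

\[
\frac{134}{64 + 31 + 116} = \frac{134}{211} \approx 0.635 = 63.5\%
\]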

Changes in statistics across rounds were further examined to investigate opinion convergence and panel agreement. The SD and difference of elements rated in the second review decreased (Wilcoxon signed-rank test P-values < 0.05), indicating increased opinion convergence. More elements had a small difference in the final list than in the initial list (data not shown). For example, the percentage of measures with a difference of 2 or below increased from 66.9% to 92.2% in the second review.
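The paired comparison of dispersion between reviews can be sketched with a Wilcoxon signed-rank test. The authors used R, and the pure-Python version below is our own illustration. It uses the large-sample normal approximation with a tie correction, without a continuity correction or exact small-sample p-values. Its p-values may therefore differ slightly from those of R's `wilcox.test`. The SD values in the usage lines are hypothetical.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank(before, after):
    """Two-sided Wilcoxon signed-rank test using the normal approximation.

    Zero differences are dropped, tied |differences| receive average ranks, and
    the variance includes the usual tie correction. Returns (W_plus, z, p_value).
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank |differences| from smallest to largest, averaging ranks within ties
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # sorted positions i..j hold 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return w_plus, z, p_value


# Hypothetical per-element SDs in the first and second reviews
sd_first = [1.2, 0.9, 1.1, 1.4, 0.8, 1.0, 1.3, 0.7]
sd_second = [0.6, 0.5, 0.9, 0.7, 0.5, 0.4, 0.8, 0.6]
w, z, p = wilcoxon_signed_rank(sd_first, sd_second)
print(f"W+ = {w}, z = {z:.2f}, p = {p:.4f}")
```

Because every hypothetical SD decreases, no positive differences remain, so W+ is 0 and the test indicates a significant reduction.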

An OSH surveillance logic model was finalized to include components and attributes that were selected after two panel reviews (Fig. 1). A complete list of all selected elements as well as their finalized description can be found in Additional File 2.

Piloting selected elements with OSH surveillance systems

Six state-level OSH surveillance systems and one national OSH surveillance system in the US were evaluated by panelists in the last Delphi round. The majority of the systems were supported by national funds (86%). More than half of the systems (57%) conducted more than one type of OSH surveillance. The three most common types of surveillance were OHIs surveillance (86%), injury surveillance (71%), and ABLES (57%). Nearly half of the systems (43%) conducted only secondary data analysis. More than half of the systems (57%) were never or rarely evaluated, and no system was evaluated frequently. Among systems that had been evaluated, common purposes were to assess implementation (80%) and performance (60%). Needs assessments, cost-effectiveness analyses and data quality assessments were rarely or never conducted. Seven attributes were scored high (> 8.0) in these systems. Compliance and confidentiality received the highest score (9.0), followed by significance, relevance, adherence, accuracy, and clarity. Three attributes received the lowest scores: legislative support (5.9), timeliness (5.7), and integration (3.9). Commonly perceived weaknesses related to high-quality data sources, the ability to conduct various surveillance activities, dissemination, and producing outcomes and impacts. Major constraints included a lack of resources and legislative support, and limited recognition of and integration into the general public health domain.
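Aggregating the 0–10 self-assessed scores into per-attribute averages, as reported above, is straightforward. In this sketch, `summarize_scores` and the three systems' scores are hypothetical. We assume that each attribute's score is the mean across the systems that rated it. The text's cutoff of > 8.0 is used to flag high-scoring attributes.

```python
from statistics import mean


def summarize_scores(scores_by_system, high_cutoff=8.0):
    """Return per-attribute mean scores and the attributes scoring above the cutoff.

    scores_by_system: {system_name: {attribute: score on a 0-10 scale}}
    """
    attributes = {attr for scores in scores_by_system.values() for attr in scores}
    means = {
        attr: mean([s[attr] for s in scores_by_system.values() if attr in s])
        for attr in attributes
    }
    # Highest-scoring attributes first; ties broken alphabetically
    high = sorted((a for a, m in means.items() if m > high_cutoff),
                  key=lambda a: (-means[a], a))
    return means, high


# Hypothetical self-assessments from three systems (not the study's data)
scores = {
    "System A": {"Compliance": 9, "Timeliness": 6, "Integration": 4},
    "System B": {"Compliance": 10, "Timeliness": 5, "Integration": 3},
    "System C": {"Compliance": 8, "Timeliness": 6},
}
means, high = summarize_scores(scores)
print(high)
```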

Major themes identified

Content analysis of the extensive commentary from panel experts over the three Delphi rounds revealed the themes described below. Table 3 presents the theme categories and the most frequent codes with their frequencies.

Status quo and issues

Resource constraints and feasibility were common issues noted by the panel. One expert commented that "the reality is that resources for this type of work [OSH surveillance] are historically VERY limited." Funding was reported to be unstable, and a panelist wrote that it would be "best if … built into the regular funding cycle." Technological resources and legislative support were reported as basic or even lacking. Comments on the lack of resources also addressed the dependence of current OSH surveillance on external data. An expert commented that "without them [legislation and regulations] one may end up using existing data not designed for OSH". Another wrote that "most of the data systems in use currently for OSH surveillance were designed for an entirely separate purpose (i.e. financial tracking and reimbursement)". Reported issues with existing data sources included the lack of information/variables critical to OSH surveillance (e.g., industry and occupation information) and data timeliness. A panelist commented, "Often there is a substantial lag time in the availability of surveillance data for public health actions". A recurring message from the panel experts was to have "realistic" expectations about current OSH surveillance.

Ideal OSH surveillance

Some system requirements may not seem readily feasible given limited resources and thus belong to an "ideal" OSH surveillance system. Experts' comments helped to outline this ideal OSH surveillance. In it, all necessary infrastructure and resources, including funding, legislation, human resources, technological platforms and stakeholder collaboration, are well integrated. They support key surveillance activities from data collection to dissemination, as well as interoperability among data systems. Strategic planning was emphasized in the ideal OSH surveillance: "Having a well thought out plan…is critical to developing a streamlined system". Plans and protocols should cover both existing and potential surveillance activities.

Implications for evaluation and a framework

Nearly all experts held positive expectations about the development of a tailored framework for OSH surveillance evaluation. One expert commented that “It would be helpful to have an evaluation framework specific to OSH surveillance …” and another noted that “we've been struggling with developing a systematic evaluation approach, so it will be good to have consensus on components of an evaluation”. Experts called for flexibility in OSH surveillance evaluation, because “a ‘one-size fits all’ mentality of program evaluation is often unnecessary”, and an evaluation framework should “allow for ‘picking and choosing’ of those evaluation components”. Factors such as differences between systems, changing surveillance objectives and OSH conditions over time, and non-routine activities affect the evaluation choices. One expert commented that “… evaluating it [the infrastructure] seems difficult as it constantly changes and differs from state to state”. Another expert stated, “Case definitions are often lacking for OSH across jurisdictions, states, areas.” As to assessing the significance of surveillance, one expert wrote that “Priorities change and the most useful surveillance systems are those that have a historical trove of information about priority conditions that emerge as ‘significant concerns’ at some point.”

Inter-relatedness among components and attributes

Fundamental and critical components such as funding, resources, organizational structure, management and training, standards and guidelines, and strategies were commonly described as affecting the system's performance and stability. For example, experts commented that "Infrastructure is necessary for an effective and efficient public health surveillance system" and that the surveillance strategy "impacts standardization and consistency over time and among data sources". Some elements were regarded as more central in relation to other elements. For example, experts commented that stakeholders' acceptance and participation were affected by the system's compliance, transparency, simplicity and usefulness, and in turn affected various aspects of the system's performance. They stated that "without the willingness, the data is not as good", "whether a system will function smoothly, meets the intended purpose and can be sustained also depends on its acceptability by stakeholders." Attributes such as data quality, data timeliness and usefulness were also regarded as central elements.

Discussion

The lack of comparability and replicability in OSH surveillance evaluations limits their usefulness. Agreed-upon terms and descriptions facilitate understanding of OSH surveillance and thus contribute to the improvement of surveillance and its evaluation. This three-round survey study based on the Delphi technique sought experts' consensus on framework elements. Experts' comments on the elements and on the current status of their respective OSH surveillance systems provided context for their choices.

Surveillance elements

This study used a combination of central tendency and divergence measures to differentiate the framework elements. The resulting logic model and list (Fig. 1 and tables in Additional File 2) contain elements receiving high and low levels of consensus. A key to a successful Delphi process is allowing panelists to reconsider and potentially change their opinions after referring to the views of their peers [54, 63]. The second review helped the panel converge on elements that it had rated low, or on which it had held more dispersed views, in the first review. After the second review, both the difference and the SD decreased significantly. The change in ratings in this study suggests that the "group opinions" guided the panel experts' reconsideration of their stances. A strength of this Delphi study is that experts received feedback in both quantitative and qualitative summaries. The literature suggests that qualitative feedback is an effective way to mitigate experts' propensity to stick to their initial opinions [64, 65].

Many elements in the "Inputs" section of the logic model were considered fundamental and critical to OSH surveillance. The panel also identified the need for more relevant OSH surveillance data sources and stakeholders. Regarding surveillance activities, not surprisingly, data processing, ongoing monitoring and data dissemination, and their sub-components (except case investigation), were considered relevant to OSH surveillance. These activities are also well-accepted components of public health surveillance to date [66].

The study revealed that some components and attributes were less feasible for current OSH surveillance, partly due to limited resources. One example is the component "early detection" and its three sub-components, "provide timely data", "detect clusters and unusual events" and "guide immediate actions". Guiding immediate action has been proposed for an ideal OSH surveillance system [9]. However, the panel experts thought that these activities were less relevant to current OSH surveillance because they were "resources intensive". Among the low-consensus attributes, "simplicity" is worth mentioning. In general, a surveillance system should be effective and as simple as possible [24, 67, 68]. Interestingly, the panelists held conflicting perspectives on simplicity: some considered OSH surveillance fairly simple, while others noted that surveillance systems can be intricate.

Example measures received lower mean ratings and modes, as well as larger differences and SDs, than components and attributes in the Delphi process, reflecting greater divergence of panel opinions. We speculate that components and attributes tend to appear more often in existing evaluation guidelines, with relatively well-established definitions and connotations. Measures, by contrast, operationalize attributes into measurable indicators and tend to be more flexible, selected or developed at the evaluator's discretion. As such, the expert panel might hold more diverse opinions on the relevance of a measure to OSH surveillance. Different measures may target different aspects of the attributes. The development of a standard yet flexible guiding framework can therefore further test the comprehensiveness and applicability of the selected measures, including any customization of evaluation metrics.

Current OSH surveillance status and implications

This study showed that nearly half of the OSH surveillance systems reviewed by the panel experts conducted only secondary data analysis. The most common type was OHIs surveillance, which focuses on secondary analysis of multiple external data sources. These findings are in line with the recent report by the National Academies of Sciences, Engineering, and Medicine that, “there is no single, comprehensive OSH surveillance system in the US, but rather an evolving set of systems using a variety of data sources that meet different objectives, each with strengths and weaknesses” [9]. Limitations of currently available data sources present challenges to OSH surveillance in the US as well as in many other countries [69]. For example, data completeness and accuracy are often compromised when a single data source is used, owing to potential under-coverage and under-reporting [70]. Conversely, data integration remains a challenge for surveillance that draws on multiple data sources. We previously reported on the use of workers’ compensation data from different sources for injury research and surveillance and encountered methodological challenges in developing a crosswalk between different data coding systems [71].

Both the experts’ comments and their reviews of their respective systems highlighted limited resources for OSH surveillance, which is also associated with absent or infrequent evaluation of these systems. Experts reported barriers to evaluation including staff time and budget, as well as difficulty reaching stakeholders. A major implication is the need to consider flexibility and feasibility when deciding evaluation objectives and criteria. Although feasibility is always a consideration, it appeared especially salient for OSH surveillance, as the panel experts pointed out that many criteria were ideal but not readily feasible due to limited resources.
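To illustrate the crosswalk challenge noted above, the Python sketch below maps codes from one hypothetical coding scheme to a shared set of categories and reports any codes that lack a mapping. The codes, the categories and the `harmonize` function are invented for illustration; they are not the coding systems or the method used in [71].

```python
# Hypothetical crosswalk from one scheme's "nature of injury" codes to shared
# categories. Codes and categories are invented for illustration only.
CROSSWALK = {
    "A10": "sprain_strain",
    "A11": "sprain_strain",  # many-to-one: finer source detail is collapsed
    "A20": "fracture",
    "A30": "laceration",
}

def harmonize(records, crosswalk):
    """Attach a shared category to each record and collect unmapped source codes."""
    mapped, unmapped = [], set()
    for rec in records:
        category = crosswalk.get(rec["code"])
        if category is None:
            unmapped.add(rec["code"])  # needs manual review or a new crosswalk entry
        else:
            mapped.append({**rec, "category": category})
    return mapped, sorted(unmapped)

records = [
    {"id": 1, "code": "A10"},
    {"id": 2, "code": "A20"},
    {"id": 3, "code": "Z99"},
]
mapped, unmapped = harmonize(records, CROSSWALK)
print(len(mapped), unmapped)  # 2 ['Z99']
```

Returning the unmapped codes explicitly, rather than silently dropping those records, makes the completeness cost of an imperfect crosswalk visible, and the many-to-one entries show how harmonization can trade away detail.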

The network relationships identified among elements are similar to those reported in an existing study of animal health surveillance systems [72]. For example, the expert panel pointed out that some surveillance elements, such as organization and management, resources and training, were fundamental because they affected the other elements the most, while others, such as acceptability, data completeness, stability and sustainability, could both be strongly affected by and affect other elements, serving an intermediate role in the relationship network. These findings can guide the prioritization of evaluation focuses. Because this topic was outside the main focus of this study, it was not investigated in depth. Further research is needed to comprehensively understand interactions among OSH surveillance components and attributes and to investigate weights for elements based on their interactions.
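One simple way to operationalize the “fundamental” and “intermediate” roles described above is to treat elements as nodes in a directed graph and compare how many elements each one influences (out-degree) with how many influence it (in-degree). The Python sketch below is hypothetical: most element names come from the text (“usefulness” is added as an example outcome), but the links, the three role labels and the `classify` function are invented for illustration and do not reproduce the panel's responses or the method of [72].

```python
from collections import Counter

# Hypothetical directed "A influences B" links. Element names are mostly from
# the text; the links themselves are illustrative, not the panel's responses.
EDGES = [
    ("resources", "data completeness"),
    ("resources", "stability"),
    ("training", "data completeness"),
    ("organization and management", "acceptability"),
    ("organization and management", "sustainability"),
    ("acceptability", "data completeness"),
    ("data completeness", "usefulness"),
    ("stability", "sustainability"),
    ("sustainability", "usefulness"),
]

def classify(edges):
    """Label each element by whether it influences others, is influenced, or both."""
    out_deg = Counter(src for src, _ in edges)  # how many elements it impacts
    in_deg = Counter(dst for _, dst in edges)   # how many elements impact it
    roles = {}
    for node in set(out_deg) | set(in_deg):
        if out_deg[node] and not in_deg[node]:
            roles[node] = "fundamental"    # only influences others
        elif out_deg[node] and in_deg[node]:
            roles[node] = "intermediate"   # both influenced and influencing
        else:
            roles[node] = "outcome"        # only influenced
    return roles

for element, role in sorted(classify(EDGES).items()):
    print(f"{element}: {role}")
```

With these invented links, resources, training and organization and management come out as fundamental, while acceptability, data completeness, stability and sustainability come out as intermediate. Weighted links or centrality measures could refine such a classification, which is one possible route to the element weights suggested above.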

This study highlighted the need for tailored guidelines for OSH surveillance evaluation. According to their comments in the Delphi process, the panel experts struggled to develop a systematic evaluation approach and found current guidelines insufficient for systems targeting non-communicable diseases and for systems that rely on multiple data sources, such as OSH surveillance. The components, attributes and measures identified in this study can guide more relevant evaluation of OSH surveillance systems. The study team is developing a more detailed evaluation framework based on these findings to bridge this gap (manuscript in preparation).

Limitations

A few limitations should be recognized in this study. The study formed a Delphi panel with 10 experts in the first round. Although the panel size met our goal, it was relatively small compared with existing Delphi studies, in which panel sizes have ranged from around 10 to more than 1,000 [73]. In general, the reliability of panel judgement tends to increase with the number of panel experts. However, larger panels may raise issues of manageability and attrition, which may adversely affect study reliability and validity [62, 73]. Researchers have noted that Delphi reliability is likely to decline rapidly with fewer than six participants, whereas improvements in reliability are subject to diminishing returns after the first round once a panel has 12 or more members [54]. To date, there is no consensus on Delphi panel size; panel sizes in existing studies have usually varied according to the scope of the problem and the resources (time and budget) available [62, 73].

Participation rates in the second and third rounds were 80% and 70%, respectively. Drop-out is recognized as a particular challenge in the Delphi process because its iterative nature demands the panelists’ time commitment. Through communication with the panelists who dropped out, we learned that time conflicts were the main reason they stopped. A review paper showed that the response rate in the third round can be as low as 16% for large Delphi studies [49]. Although we adopted multiple strategies recommended in the literature to increase response, including seeking experts recommended by the field, sending frequent reminders and actively contacting panelists even after the specified deadlines [52], drop-out in the second and third rounds may have biased the information gathered in this study to some extent. We had to assume that the expert(s) who dropped out in the second round held the same views as in their first-round responses, but this may not have been true.
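The participation rates above follow directly from the panel sizes in each round (10, 8 and 7 experts). The Python sketch below reproduces that arithmetic and shows one common way to operationalize the assumption that dropped-out experts kept their earlier views: carrying each expert's last available rating forward. The `carry_forward` helper and the example ratings are hypothetical; the text does not specify exactly how this assumption was implemented in the analysis.

```python
# Panel sizes per round, as reported in the text (10, 8 and 7 experts).
PANEL_SIZE = {1: 10, 2: 8, 3: 7}

def participation_rates(panel_size):
    """Percentage of first-round experts still participating in each later round."""
    baseline = panel_size[1]
    return {rnd: 100 * n / baseline for rnd, n in panel_size.items() if rnd > 1}

def carry_forward(rounds, experts):
    """Fill each expert's missing rating with their most recent earlier rating.

    `rounds` is a list of {expert_id: rating} dicts for one item, in round order.
    Hypothetical helper illustrating a last-observation-carried-forward assumption.
    """
    filled, last_seen = [], {}
    for ratings in rounds:
        current = {}
        for expert in experts:
            if expert in ratings:
                last_seen[expert] = ratings[expert]
            if expert in last_seen:
                current[expert] = last_seen[expert]
        filled.append(current)
    return filled

print(participation_rates(PANEL_SIZE))  # {2: 80.0, 3: 70.0}

# Invented ratings for one item: E3 drops out after round 1, E2 after round 2.
example = [{"E1": 4, "E2": 5, "E3": 3}, {"E1": 4, "E2": 4}, {"E1": 5}]
print(carry_forward(example, ["E1", "E2", "E3"]))
```

Carrying ratings forward keeps the number of ratings per item constant across rounds, but, as noted above, it assumes that dropped-out experts would not have changed their opinions, which may not hold.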

As with other consensus methods, the Delphi technique is subject to cognitive bias in information exchange and decision-making related to the participants’ backgrounds and personal traits [74]. Potential participants were identified using multiple information sources; however, despite efforts to recruit a panel representing both national- and state-level OSH surveillance systems, most panelists in this study were from state-level surveillance systems.

Researchers have warned that even the most well-planned Delphi may yield neither an exhaustive nor an all-inclusive set of ideas [51]. The finalized list in this study may not cover every aspect of OSH surveillance, given the limited existing references in this field. With continued efforts to promote OSH surveillance and its evaluation, more elements that are relevant and practical for OSH surveillance evaluation may be added to the list.

Conclusion

This paper described the development of a comprehensive list of components, attributes, and example measures relevant to OSH surveillance systems in the US through a three-round Delphi survey of experts across the US with experience in OSH surveillance operations and/or surveillance evaluation.

OSH surveillance components, attributes and example measures identified in this study can serve as a preliminary guide for evaluating OSH surveillance systems. Evaluators can choose the components and attributes appropriate to their systems and use the example measures to develop their own evaluation metrics. Future research and practice are needed to further refine and enrich the list of elements and to explore its applicability to OSH surveillance evaluation. The research team is developing a more detailed evaluation framework based on these findings to provide standard yet flexible guidance on selecting and prioritizing these elements when evaluating different types and stages of OSH surveillance systems.

Availability of data and materials

All data generated or analyzed during this study are included in this published article and its supplementary information files.

Abbreviations

- ABLES: Adult Blood Lead Epidemiology and Surveillance System
- BLS: Bureau of Labor Statistics
- CDC: Centers for Disease Control and Prevention
- CFOI: Census of Fatal Occupational Injuries
- CSTE: Council of State and Territorial Epidemiologists
- FTE: Full-Time Equivalent
- NEISS-Work: National Electronic Injury Surveillance System–Occupational Supplement
- NIOSH: National Institute for Occupational Safety and Health
- OHIs: Occupational Health Indicators
- OSH: Occupational Safety and Health
- SOII: Survey of Occupational Injuries and Illnesses

References

Bureau of Labor Statistics. Injuries, Illnesses, and Fatalities (IIF). Published 2021. Accessed 19 Jul 2021. https://www.bls.gov/iif/home.htm#news

Levy BS, Wegman DH, Baron SL, Sokas RK. Occupational and Environmental Health. Vol 1. Oxford University Press; 2017. https://doi.org/10.1093/oso/9780190662677.001.0001

Koh D, Aw TC. Surveillance in Occupational Health. Occup Environ Med. 2003;60(9):705–10. https://doi.org/10.1136/oem.60.9.705.

Bureau of Labor Statistics. Injuries, Illnesses, and Fatalities (IIF). Published 2020. Accessed 20 Mar 2020. https://www.bls.gov/iif/home.htm

National Institute for Occupational Safety and Health. Worker Health Surveillance - Our Current Surveillance Initiatives. Published 2019. Accessed 15 May 2020. https://www.cdc.gov/niosh/topics/surveillance/data.html

Council of State and Territorial Epidemiologists. Putting Data to Work: Occupational Health Indicators from Thirteen Pilot States for 2000. Council of State and Territorial Epidemiologists (CSTE) in collaboration with National Institute for Occupational Safety and Health, Centers for Disease Control and Prevention; 2005. https://stacks.cdc.gov/view/cdc/6748/.

Stanbury M, Anderson H, Bonauto D, Davis L, Materna B, Rosenman K. Guidelines for Minimum and Comprehensive State-Based Public Health Activities in Occupational Safety and Health. National Institute for Occupational Safety and Health (NIOSH); 2008.

Landrigan PJ. Improving the surveillance of occupational disease. Am J Public Health. 1989;79(12):1601–2.

National Academies of Sciences, Engineering, and Medicine. A Smarter National Surveillance System for Occupational Safety and Health in the 21st Century. The National Academies Press; 2018. Accessed 20 Jan 2018. http://www8.nationalacademies.org/onpinews/newsitem.aspx?RecordID=24835

Thacker SB, Qualters JR, Lee LM. Public Health Surveillance in the United States: Evolution and Challenges. Morb Mortal Wkly Rep. 2012;61(3):3–9.

Stout N, Bell C. Effectiveness of source documents for identifying fatal occupational injuries: a synthesis of studies. Am J Public Health. 1991;81(6):725–8. https://doi.org/10.2105/AJPH.81.6.725.

Leigh JP, Du J, McCurdy SA. An estimate of the U.S. government’s undercount of nonfatal occupational injuries and illnesses in agriculture. Ann Epidemiol. 2014;24(4):254–9. https://doi.org/10.1016/j.annepidem.2014.01.006.

Pransky G, Snyder T, Dembe A, Himmelstein J. Under-reporting of work-related disorders in the workplace: a case study and review of the literature. Ergonomics. 1999;42(1):171–82. https://doi.org/10.1080/001401399185874.

Leigh JP, Marcin JP, Miller TR. An Estimate of the U.S. Government’s Undercount of Nonfatal Occupational Injuries. J Occup Environ Med. 2004;46(1):10–8. https://doi.org/10.1097/01.jom.0000105909.66435.53.

Rosenman KD, Kalush A, Reilly MJ, Gardiner JC, Reeves M, Luo Z. How Much Work-Related Injury and Illness is Missed By the Current National Surveillance System? J Occup Environ Med. 2006;48(4):357–65. https://doi.org/10.1097/01.jom.0000205864.81970.63.

Ruser JW. Allegations of Undercounting in the BLS Survey of Occupational Injuries and Illnesses. In: Joint Statistical Meetings, Section on Survey Research Methods. Vancouver: American Statistical Association; 2010. p. 2851–65.

Arrazola J. Assessment of Epidemiology Capacity in State Health Departments - United States, 2017. Morb Mortal Wkly Rep. 2018;67:935–9. https://doi.org/10.15585/mmwr.mm6733a5.

Hadler JL, Lampkins R, Lemmings J, Lichtenstein M, Huang M, Engel J. Assessment of Epidemiology Capacity in State Health Departments - United States, 2013. MMWR Morb Mortal Wkly Rep. 2015;64(14):394–8.

Shire JD, Marsh GM, Talbott EO, Sharma RK. Advances and Current Themes in Occupational Health and Environmental Public Health Surveillance. Annu Rev Public Health. 2011;32(1):109–32. https://doi.org/10.1146/annurev-publhealth-082310-152811.

Thacker SB, Stroup DF. Future Directions for Comprehensive Public Health Surveillance and Health Information Systems in the United States. Am J Epidemiol. 1994;140(5):383–97. https://doi.org/10.1093/oxfordjournals.aje.a117261.

Savel TG, Foldy S; Centers for Disease Control and Prevention. The role of public health informatics in enhancing public health surveillance. MMWR Surveill Summ. 2012;61(2):20–4.

Centers for Disease Control and Prevention (CDC), Office of Strategy and Innovation. Introduction to Program Evaluation for Public Health Programs: A Self-Study Guide. Atlanta, GA: Centers for Disease Control and Prevention; 2011. http://www.cdc.gov/eval/guide/cdcevalmanual.pdf.

Centers for Disease Control and Prevention, Program Performance and Evaluation Office. Framework for program evaluation in public health. Morb Mortal Wkly Rep. 1999;48(No.RR-11):1–40.

Centers for Disease Control and Prevention. Updated guidelines for evaluating public health surveillance systems: recommendations from the guidelines working group. Morb Mortal Wkly Rep. 2001;50(13):1–36.

Drewe JA, Hoinville LJ, Cook AJC, Floyd T, Stärk KDC. Evaluation of animal and public health surveillance systems: a systematic review. Epidemiol Infect. 2012;140(4):575–90. https://doi.org/10.1017/S0950268811002160.

Centers for Disease Control and Prevention. Guidelines for evaluating surveillance systems. Morb Mortal Wkly Rep. 1988;37(No. S-5). https://www.cdc.gov/mmwr/preview/mmwrhtml/00001769.htm

Adamson PC, Tafuma TA, Davis SM, Xaba S, Herman-Roloff A. A systems-based assessment of the PrePex device adverse events active surveillance system in Zimbabwe. PLoS ONE. 2017;12(12): e0190055. https://doi.org/10.1371/journal.pone.0190055.

Jefferson H, Dupuy B, Chaudet H, et al. Evaluation of a syndromic surveillance for the early detection of outbreaks among military personnel in a tropical country. J Public Health Oxf Engl. 2008;30(4):375–83. https://doi.org/10.1093/pubmed/fdn026.

Jhung MA, Budnitz DS, Mendelsohn AB, Weidenbach KN, Nelson TD, Pollock DA. Evaluation and overview of the National Electronic Injury Surveillance System-Cooperative Adverse Drug Event Surveillance Project (NEISS-CADES). Med Care. 2007;45(10 Suppl 2):S96-102. https://doi.org/10.1097/MLR.0b013e318041f737.

A Joseph, N Patrick, N Lawrence, O Lilian, A Olufemi. Evaluation of Malaria Surveillance System in Ebonyi state, Nigeria, 2014. Ann Med Health Sci Res. Published online 2017. Accessed 30 Dec 2017. https://www.amhsr.org/abstract/evaluation-of-malaria-surveillance-system-in-ebonyi-state-nigeria-2014-3901.html

Kaburi BB, Kubio C, Kenu E, et al. Evaluation of the enhanced meningitis surveillance system, Yendi municipality, northern Ghana, 2010–2015. BMC Infect Dis. 2017;17:306. https://doi.org/10.1186/s12879-017-2410-0.

Liu X, Li L, Cui H, Jackson VW. Evaluation of an emergency department-based injury surveillance project in China using WHO guidelines. Inj Prev. 2009;15(2):105–10.

Pinell-McNamara VA, Acosta AM, Pedreira MC, et al. Expanding Pertussis Epidemiology in 6 Latin America Countries through the Latin American Pertussis Project. Emerg Infect Dis. 2017;23(Suppl 1):S94–100. https://doi.org/10.3201/eid2313.170457.

Thomas MJ, Yoon PW, Collins JM, Davidson AJ, Mac Kenzie WR. Evaluation of Syndromic Surveillance Systems in 6 US State and Local Health Departments. J Public Health Manag Pract. Published online Sept 2017. https://doi.org/10.1097/PHH.0000000000000679

Velasco-Mondragón HE, Martin J, Chacón-Sosa F. Technology evaluation of a USA-Mexico health information system for epidemiological surveillance of Mexican migrant workers. Rev Panam Salud Pública. 2000;7:185–92. https://doi.org/10.1590/S1020-49892000000300008.

Yang L, Weston C, Cude C, Kincl L. Evaluating Oregon’s occupational public health surveillance system based on the CDC updated guidelines. Am J Ind Med. 2020;63(8):713–25. https://doi.org/10.1002/ajim.23139.

Hoffman S, Dekraai M. Brief Evaluation Protocol for Public Health Surveillance Systems. University of Nebraska: The Public Policy Center; 2016.

Centers for Disease Control and Prevention. Evaluating an NCD-Related Surveillance System. Centers for Disease Control and Prevention. 2013.

Holder Y, Peden M, Krug E, et al. Injury Surveillance Guidelines. 2001.

Centers for Disease Control and Prevention. Framework for evaluating public health surveillance systems for early detection of outbreaks: recommendations from the CDC Working Group. Morb Mortal Wkly Rep. 2004;53(No. RR-5):1–13.

Salman MD, Stärk KDC, Zepeda C. Quality assurance applied to animal disease surveillance systems. Rev Sci Tech Int Off Epizoot. 2003;22(2):689–96.

Meynard JB, Chaudet H, Green AD, et al. Proposal of a framework for evaluating military surveillance systems for early detection of outbreaks on duty areas. BMC Public Health. 2008;8(1):146.

Hendrikx P, Gay E, Chazel M, et al. OASIS: an assessment tool of epidemiological surveillance systems in animal health and food safety. Epidemiol Infect. 2011;139(10):1486–96.

Auer AM, Dobmeier TM, Haglund BJ, Tillgren P. The relevance of WHO injury surveillance guidelines for evaluation: learning from the aboriginal community-centered injury surveillance system (ACCISS) and two institution-based systems. BMC Public Health. 2011;11(744):1–15. https://doi.org/10.1186/1471-2458-11-744.

Patel K, Watanabe-Galloway S, Gofin R, Haynatzki G, Rautiainen R. Non-fatal agricultural injury surveillance in the United States: A review of national-level survey-based systems. Am J Ind Med. 2017;60(7):599–620. https://doi.org/10.1002/ajim.22720.

Calba C, Goutard FL, Hoinville L, et al. Surveillance systems evaluation: a systematic review of the existing approaches. BMC Public Health. 2015;15(448):448. https://doi.org/10.1186/s12889-015-1791-5.

Spreeuwers D, de Boer AG, Verbeek JH, van Dijk FJ. Characteristics of national registries for occupational diseases: international development and validation of an audit tool (ODIT). BMC Health Serv Res. 2009;9:194. https://doi.org/10.1186/1472-6963-9-194.

Boulkedid R, Abdoul H, Loustau M, Sibony O, Alberti C. Using and Reporting the Delphi Method for Selecting Healthcare Quality Indicators: A Systematic Review. Wright JM, ed. PLoS One. 2011;6(6):e20476. https://doi.org/10.1371/journal.pone.0020476.

Foth T, Efstathiou N, Vanderspank-Wright B, et al. The use of Delphi and Nominal Group Technique in nursing education: A review. Int J Nurs Stud. 2016;60:112–20. https://doi.org/10.1016/j.ijnurstu.2016.04.015.

Habibi A, Sarafrazi A, Izadyar S. Delphi Technique Theoretical Framework in Qualitative Research. Int J Eng Sci. 2014;3(4):8–13.

Hasson F, Keeney S. Enhancing rigour in the Delphi technique research. Technol Forecast Soc Change. 2011;78(9):1695–704. https://doi.org/10.1016/j.techfore.2011.04.005.

Hsu CC, Sandford BA. Minimizing Non-Response in The Delphi Process: How to Respond to Non-Response. Pract Assess Res Eval. 2007;12(17):1–6.

Linstone HA, Turoff M. The Delphi Method: Techniques and Applications. Addison-Wesley Educational Publishers Inc.; 2002. Accessed 10 Mar 2016. http://www.academia.edu/download/29694542/delphibook.pdf

Murphy MK, Black NA, Lamping DL, et al. Consensus development methods, and their use in clinical guideline development. Health Technol Assess. 1998;2(3). https://doi.org/10.3310/hta2030

Powell C. The Delphi technique: myths and realities. J Adv Nurs. 2003;41(4):376–82. https://doi.org/10.1046/j.1365-2648.2003.02537.x.

Rajendran S, Gambatese JA. Development and Initial Validation of Sustainable Construction Safety and Health Rating System. J Constr Eng Manag. 2009;135(10):1067–75. https://doi.org/10.1061/(ASCE)0733-9364(2009)135:10(1067).

Rowe G, Wright G. The Delphi technique as a forecasting tool: issues and analysis. Int J Forecast. 1999;15(4):353–75. https://doi.org/10.1016/S0169-2070(99)00018-7.

Sinha IP, Smyth RL, Williamson PR. Using the Delphi Technique to Determine Which Outcomes to Measure in Clinical Trials: Recommendations for the Future Based on a Systematic Review of Existing Studies. PLoS Med. 2011;8(1): e1000393. https://doi.org/10.1371/journal.pmed.1000393.

Adler M, Ziglio E. Gazing Into the Oracle: The Delphi Method and Its Application to Social Policy and Public Health. London and Philadelphia: Jessica Kingsley Publishers; 1996.

Clayton MJ. Delphi: a technique to harness expert opinion for critical decision-making tasks in education. Educ Psychol. 1997;17(4):373–86. https://doi.org/10.1080/0144341970170401.

Greatorex J, Dexter T. An accessible analytical approach for investigating what happens between the rounds of a Delphi study. J Adv Nurs. 2000;32(4):1016–24.

Hsu CC, Sandford BA. The Delphi Technique: Making Sense Of Consensus. Pract Assess Res Eval. 2007;12(10):1–8.

Makkonen M, Hujala T, Uusivuori J. Policy experts’ propensity to change their opinion along Delphi rounds. Technol Forecast Soc Change. 2016;109:61–8. https://doi.org/10.1016/j.techfore.2016.05.020.

Bolger F, Wright G. Improving the Delphi process: Lessons from social psychological research. Technol Forecast Soc Change. 2011;78(9):1500–13. https://doi.org/10.1016/j.techfore.2011.07.007.

Meijering JV, Tobi H. The effect of controlled opinion feedback on Delphi features: Mixed messages from a real-world Delphi experiment. Technol Forecast Soc Change. 2016;103:166–73. https://doi.org/10.1016/j.techfore.2015.11.008.

Choi BCK. The Past, Present, and Future of Public Health Surveillance. Scientifica. 2012;2012:1–26. https://doi.org/10.6064/2012/875253.

Health Canada. Framework and Tools for Evaluating Health Surveillance Systems. Population and Public Health Branch, Health Canada; 2004. Accessed 18 Oct 2017. http://publications.gc.ca/site/eng/260337/publication.html

World Health Organization. Communicable Disease Surveillance and Response Systems: Guide to Monitoring and Evaluating. Geneva: World Health Organization; 2006. http://apps.who.int/iris/bitstream/10665/69331/1/WHO_CDS_EPR_LYO_2006_2_eng.pdf.

Smith GS. Public health approaches to occupational injury prevention: do they work? Inj Prev. 2001;7(suppl 1):i3–10. https://doi.org/10.1136/ip.7.suppl_1.i3.

National Research Council. Protecting Youth at Work: Health, Safety, and Development of Working Children and Adolescents in the United States. The National Academies Press; 1998. https://doi.org/10.17226/6019

Yang L, Branscum A, Bovbjerg V, Cude C, Weston C, Kincl L. Assessing disabling and non-disabling injuries and illnesses using accepted workers compensation claims data to prioritize industries of high risk for Oregon young workers. J Safety Res. Published online 30 Mar 2021. https://doi.org/10.1016/j.jsr.2021.03.007

Peyre MI, Hoinville L, Haesler B, et al. Network analysis of surveillance system evaluation attributes: a way towards improvement of the evaluation process. In: Proceedings ICAHS - 2nd International Conference on Animal Health Surveillance “Surveillance against the Odds”, Havana, Cuba, 7–9; 2014. Accessed 18 Oct 2017. http://agritrop.cirad.fr/573676/

Williams PL, Webb C. The Delphi technique: a methodological discussion. J Adv Nurs. 1994;19(1):180–6. https://doi.org/10.1111/j.1365-2648.1994.tb01066.x.

Winkler J, Moser R. Biases in future-oriented Delphi studies: A cognitive perspective. Technol Forecast Soc Change. 2016;105:63–76. https://doi.org/10.1016/j.techfore.2016.01.021.

Acknowledgements

The authors thank all panel experts for their participation. The authors thank the Council of State and Territorial Epidemiologists Occupational Subcommittee for facilitating the study recruitment and results dissemination.

Funding

The authors received no specific funding for this work.

Author information


Contributions

LY contributed substantially to the conceptualization and design of the study; the acquisition, analysis and interpretation of data; and the writing of the manuscript. LK guided the study and contributed substantially to its design. LK and AB contributed substantially to the analysis and interpretation of data. All authors critically revised the manuscript and approved the final version for publication. All authors agree to be accountable for all aspects of the work.


Ethics declarations

Ethics approval and consent to participate

The study obtained ethics approval from the Institutional Review Board at Oregon State University (Review#: 8797). All participants gave informed consent. The study’s experimental protocol was in accordance with the guidelines and standards set forth in the Common Rule (45 CFR 46) by the US Department of Health and Human Services.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Table 1-1.

Major guidelines/frameworks referenced in this study.

Additional file 2: Table 2-1.

OSH surveillance components selected with high/low consensus. Table 2-2. OSH surveillance attributes selected with high/low consensus. Table 2-3. Example measures selected with high/low consensus.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Yang, L., Branscum, A. & Kincl, L. Understanding occupational safety and health surveillance: expert consensus on components, attributes and example measures for an evaluation framework. BMC Public Health 22, 498 (2022). https://doi.org/10.1186/s12889-022-12895-6


DOI: https://doi.org/10.1186/s12889-022-12895-6