Abstract

Background

The COVID-19 pandemic has forced physicians to quickly determine patients’ conditions and choose treatment strategies. This study aimed to build and validate a simple tool that can quickly predict the deterioration and survival of COVID-19 patients.

Methods

A total of 351 COVID-19 patients admitted to the Third People’s Hospital of Yichang between 9 January and 25 March 2020 were retrospectively analyzed. Patients were randomly assigned to a training (n = 246) or validation (n = 105) dataset. Risk factors associated with deterioration were identified using univariate logistic regression and least absolute shrinkage and selection operator (LASSO) regression, and the selected factors were incorporated into a nomogram. Kaplan-Meier analysis was used to compare survival between the low- and high-risk groups defined by the nomogram cut-off point.

Results

LASSO regression was used to construct the nomogram from four parameters (white blood cells, C-reactive protein, lymphocytes ≥ 0.8 × 10⁹/L, and lactate dehydrogenase ≥ 400 U/L). In the training cohort, the nomogram showed good discriminative performance, with an area under the receiver operating characteristic curve (AUROC) of 0.945 (95% confidence interval: 0.91–0.98), and good calibration (P = 0.539). The nomogram also showed good discrimination and calibration in the validation and total cohorts (AUROC = 0.979 and 0.954, respectively). Decision curve analysis demonstrated that the model had clinical application value. Kaplan-Meier analysis showed that low-risk patients had a significantly higher 8-week survival rate than high-risk patients (100% vs. 71.41%, P < 0.0001).

Conclusion

A simple-to-use nomogram with excellent performance in predicting deterioration risk and survival of COVID-19 patients was developed and validated. However, it is necessary to verify this nomogram using a large-scale multicenter study.


Introduction

Coronavirus disease 2019 (COVID-19) is the respiratory disease caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). It has been spreading globally since December 2019 [1, 2]. Knowledge of COVID-19 is becoming more detailed as clinical cases accumulate. Most patients recover clinically. However, some patients deteriorate because of progressive pneumonia, severe dyspnea, gastrointestinal bleeding, or multiple organ failure, and some die [3,4,5]. Critically ill patients are at a higher risk of death, with a mortality rate of up to 49% [3]. Currently, there is no specific drug treatment for COVID-19 [6,7,8,9]. Therefore, factors that can quickly predict the deterioration of COVID-19 patients are urgently needed.

Cheng et al. [10] showed that the MuLBSTA score (multilobular infiltration, hypolymphocytosis, bacterial coinfection, smoking history, hypertension, and age) can be used to predict COVID-19 pneumonia mortality. However, in that study, only two of the 11 deaths were consistent with the MuLBSTA score. Furthermore, disease severity and the MuLBSTA score are discrepant in clinical trials, and the disease can progress rapidly in some patients with a low MuLBSTA score. Ji et al. [11] showed that the CALL score (comorbidity, age, lymphocyte, and lactate dehydrogenase) can be used to predict progression risk in COVID-19 patients. Liang et al. [12] reported that a clinical risk score is associated with critical illness. Their score comprises chest radiographic abnormality, age, hemoptysis, dyspnea, unconsciousness, comorbidities, cancer history, neutrophil-to-lymphocyte ratio, lactate dehydrogenase, and direct bilirubin. However, these methods are complex. Some require professional radiologists and respiratory physicians to assess infiltration of multiple lung lobes, which is especially burdensome during a severe epidemic.

Therefore, this study aimed to construct a simple nomogram based on common clinical features. The nomogram is intended for the early identification of COVID-19 patients at risk of rapid deterioration and to help clinicians quickly choose better treatment strategies.

Methods

Study participants

Consecutive COVID-19 patients admitted to the Third People’s Hospital of Yichang between 9 January and 25 March 2020 were enrolled. Inclusion required either a positive fluorescence reverse transcription-polymerase chain reaction (RT-PCR) assay of nasal and pharyngeal swab specimens or specific IgM or IgG antibodies in serum. Disease condition at admission was evaluated and classified into four groups (Mild, Moderate, Severe, and Critical) according to the “COVID-19 diagnosis and treatment program” (7th version) issued by the National Health Commission of China [13]. Patients were reclassified if their condition deteriorated and met the criteria of a higher classification.

Disease deterioration, the primary outcome, was defined as a change in disease severity during hospitalization from Mild to Moderate/Severe/Critical, from Moderate to Severe/Critical, or from Severe to Critical [13]. The secondary outcome was mortality during treatment.

Candidate predictors

Candidate variables were obtained from electronic medical records. They included demographics (age, gender, and smoking history) and comorbid conditions: diabetes mellitus (DM), hypertension, coronary heart disease (CHD), cardiovascular disease (CVD), chronic obstructive pulmonary disease (COPD), cancer, and immunodeficiency. Laboratory variables were obtained from blood samples. Whole blood count, coagulation function, routine biochemistry, C-reactive protein (CRP), and procalcitonin (PCT) were measured in the laboratory.

Statistical analysis

Normally distributed continuous variables were expressed as mean ± standard deviation (SD). Non-normally distributed continuous variables were expressed as median and interquartile range (IQR). Categorical variables were expressed as numbers and proportions (%). The total cohort was randomly divided into training and validation cohorts at a 7:3 ratio, with 246 (70.1%) patients in the training dataset and 105 (29.9%) in the validation dataset. The risk model was established in three steps to select the best predictors of disease deterioration. First, candidate predictors with significant P values in univariate logistic regression analysis were selected. Second, the optimal subset of predictors was screened using least absolute shrinkage and selection operator (LASSO) regression [14], choosing the lambda (λ) parameter that minimized the expected model deviance. Finally, the nomogram was constructed using the coefficient of each predictor provided by the LASSO regression.
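The LASSO selection step can be illustrated with a small L1-penalized logistic regression. This is a minimal sketch, not the authors' code. The study used R, and glmnet [14] fits the whole LASSO path by coordinate descent with a cross-validated λ. This stand-alone Python version instead uses proximal gradient descent (ISTA) at a single, fixed λ. The function names `lasso_logistic` and `make_toy_data`, the toy data, and the λ values are hypothetical. Predictors are assumed to be standardized, and the intercept is not penalized.

```python
import math
import random


def soft_threshold(z, gamma):
    """Proximal operator of gamma * |b|: shrink z toward zero by gamma."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def lasso_logistic(X, y, lam, n_iter=500):
    """Hypothetical helper: L1-penalized logistic regression via ISTA.

    Minimizes mean log-loss + lam * sum(|beta_j|); the intercept b0 is not penalized.
    X: list of rows (standardized columns assumed), y: list of 0/1 outcomes.
    """
    n, p = len(X), len(X[0])
    # Upper bound on the Lipschitz constant of the mean log-loss gradient
    # (intercept column of ones included), so the step size 1/lip is safe.
    lip = sum(1.0 + sum(v * v for v in row) for row in X) / (4.0 * n)
    step = 1.0 / lip
    b0, beta = 0.0, [0.0] * p
    for _ in range(n_iter):
        # Residuals p_i - y_i at the current coefficients
        resid = [sigmoid(b0 + sum(b * v for b, v in zip(beta, row))) - yi
                 for row, yi in zip(X, y)]
        g0 = sum(resid) / n
        grad = [sum(r * row[j] for r, row in zip(resid, X)) / n for j in range(p)]
        b0 -= step * g0  # plain gradient step for the unpenalized intercept
        # Gradient step followed by soft-thresholding sets weak predictors to exactly 0
        beta = [soft_threshold(b - step * g, step * lam) for b, g in zip(beta, grad)]
    return b0, beta


def make_toy_data(n=300, seed=0):
    """Hypothetical data: x1 drives the outcome, x2 is pure noise."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        x1, x2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        X.append([x1, x2])
        y.append(1 if x1 + rng.gauss(0.0, 1.0) > 0 else 0)
    return X, y


X, y = make_toy_data()
b0, beta = lasso_logistic(X, y, lam=0.15)
print(beta)  # informative x1 keeps a positive coefficient; noise x2 is shrunk to 0
```

Raising λ shrinks more coefficients to exactly zero. This is how a set of candidates, such as the 24 univariate-significant variables, can be reduced to a few predictors. In practice λ would be chosen by cross-validation rather than fixed by hand.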

The area under the receiver operating characteristic (ROC) curve (AUC) was used to evaluate the discriminative performance of the nomogram. A calibration curve was used to assess nomogram calibration, and the Hosmer-Lemeshow test was used to examine goodness of fit [15]. The clinical usefulness of the nomogram was assessed using decision curve analysis (DCA), which quantifies net benefit across a range of threshold probabilities [16, 17]. Net benefit is calculated by subtracting the proportion of false-positive patients from the proportion of true-positive patients. False positives are weighted by the relative harm of unnecessary treatment compared with the harm of withholding needed treatment. Net benefit is expressed in units of true positives. For instance, a net benefit of 0.07 means “7 true positives for every 100 patients in the target population.” A nomogram is considered clinically useful if its decision curve lies above those for treating all patients and treating no patients. The performance of the nomogram was further validated in the validation and total cohorts using the same methods. SPSS 20.0 (IBM, Chicago, IL) and R version 3.6.0 were used for all data analyses. A two-sided P < 0.05 was considered statistically significant.
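The net-benefit calculation in this paragraph can be written as NB = TP/n − (FP/n) × pt/(1 − pt). Here pt is the threshold probability, and pt/(1 − pt) is the weight placed on false positives. The sketch below computes this for a model and for the treat-all strategy; treat-none always has a net benefit of 0. The helper names and toy counts are hypothetical. With 8 true positives and 4 false positives among 100 patients at pt = 0.20, it reproduces the net benefit of 0.07 used in the example.

```python
def net_benefit(y_true, y_prob, pt):
    """Net benefit of treating patients whose predicted risk is >= threshold pt."""
    n = len(y_true)
    tp = sum(1 for y, p in zip(y_true, y_prob) if p >= pt and y == 1)
    fp = sum(1 for y, p in zip(y_true, y_prob) if p >= pt and y == 0)
    # False positives are weighted by the threshold odds pt / (1 - pt)
    return tp / n - (fp / n) * pt / (1.0 - pt)


def net_benefit_treat_all(y_true, pt):
    """Net benefit of treating everyone; 'treat none' is always 0."""
    prevalence = sum(y_true) / len(y_true)
    return prevalence - (1.0 - prevalence) * pt / (1.0 - pt)


# Toy cohort of 100: 8 true positives, 4 false positives, 2 false negatives, 86 true negatives
y_true = [1] * 8 + [0] * 4 + [1] * 2 + [0] * 86
y_prob = [0.9] * 12 + [0.1] * 88
print(round(net_benefit(y_true, y_prob, 0.20), 4))    # 0.07
print(round(net_benefit_treat_all(y_true, 0.20), 4))  # -0.125
```

A decision curve is simply these values evaluated over a grid of pt, for example from 0.01 to 0.99.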

Results

Patient characteristics

A total of 360 consecutive records were collected. Nine records were excluded: six were duplicates and three lacked laboratory data. Finally, 351 COVID-19 patients met the study requirements. A random number table was used to divide the total cohort into a training cohort (n = 246, 70%) and a validation cohort (n = 105, 30%). The clinical characteristics of the two cohorts are shown in Table 1. Except for continuous renal replacement therapy (CRRT), the baseline characteristics of the two cohorts were comparable. This indicated that they were suitable for use as training and validation cohorts.
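A software equivalent of the random number table is a seeded shuffle followed by a 7:3 cut. The sketch below is an illustrative assumption: the study used a random number table, not this code. The function name, seed, and patient IDs are hypothetical. Applied to 351 patients, it yields 246 training and 105 validation patients.

```python
import random


def split_cohort(patient_ids, train_frac=0.7, seed=2020):
    """Hypothetical helper: randomly split patient IDs into training and validation sets.

    A seeded shuffle stands in for the random number table; the seed is arbitrary.
    """
    ids = list(patient_ids)
    rng = random.Random(seed)  # private generator, so the split is reproducible
    rng.shuffle(ids)
    n_train = round(len(ids) * train_frac)
    return ids[:n_train], ids[n_train:]


train_ids, valid_ids = split_cohort(range(1, 352))  # 351 hypothetical patient IDs
print(len(train_ids), len(valid_ids))  # 246 105
```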

Selection of predicting factors associated with deterioration risk

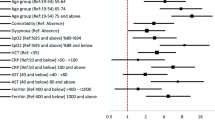

A total of 35 potential predictors were considered during model development. In univariate logistic regression of the training cohort, 24 factors were significantly associated with deterioration risk (Table 2). These included age, hypertension, DM, CHD, CVD, cancer, white blood cells (WBC), neutrophils (N), hemoglobin (HB), monocytes (Mono), lymphocyte count (Lym) ≥ 0.8 × 10⁹/L, platelets (PLT), prothrombin time (PT), fibrinogen (Fib), D-dimer, lactate dehydrogenase (LDH) ≥ 400 U/L, aspartate aminotransferase (AST), alanine aminotransferase (ALT), peak AST (ASTpeak), peak ALT (ALTpeak), albumin (ALB), creatinine, peak creatinine, CRP, and PCT. LASSO regression was used to build the model because the sample size was insufficient to satisfy the recommended number of events per variable [18]. The optimal tuning parameter λ = 0.07 (log(λ) = −2.659) was selected using the minimum criterion. In the training cohort, the 24 relevant variables were reduced to four potential predictors (Fig. 1S). These four variables (WBC, CRP, Lym ≥ 0.8 × 10⁹/L, and LDH ≥ 400 U/L) had non-zero coefficients and were retained in the final model (Table 3).

Prediction nomogram development in the training cohort

A nomogram incorporating these four independent predictors was built (Fig. 1a). The AUC was calculated to compare the performance of this nomogram with that of the published CALL model [11] (comorbidity, age, lymphocyte, and LDH). In the training cohort, the AUC of the nomogram was 0.945, higher than that of the CALL model (AUC = 0.909) (Fig. 1b). The cut-off value for risk probability in this model was 0.188, with a sensitivity of 87.7% and a specificity of 92.9%. The calibration curve showed good agreement between the observed and predicted deterioration risks in the training cohort (Fig. 1c). The non-significant Hosmer-Lemeshow test (Chi-square = 7.951, P = 0.539) indicated a good model fit. The clinical value of the nomogram was evaluated using DCA (Fig. 1d). For threshold probabilities between 30% and 80%, the decision curve showed that predicting deterioration with this nomogram provided greater net benefit than either the “treat-all” or the “treat-none” strategy.
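The AUC, sensitivity, and specificity reported in this section can be computed from predicted probabilities as sketched below. The AUC is estimated as the Mann-Whitney concordance probability, with ties counted as one half. The text does not state how the 0.188 cut-off was chosen. The Youden index (sensitivity + specificity − 1) is shown only as one common choice, and all function names are hypothetical.

```python
def auc_mann_whitney(y_true, y_score):
    """AUC as the probability that a random deteriorated patient outranks a random
    non-deteriorated one (ties count 0.5). O(n_pos * n_neg), fine for a few hundred."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = 0.0
    for sp in pos:
        for sn in neg:
            if sp > sn:
                wins += 1.0
            elif sp == sn:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def sensitivity_specificity(y_true, y_prob, cutoff):
    """Classify as high risk when predicted probability >= cutoff."""
    tp = fn = tn = fp = 0
    for y, p in zip(y_true, y_prob):
        if y == 1:
            if p >= cutoff:
                tp += 1
            else:
                fn += 1
        elif p >= cutoff:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


def youden_cutoff(y_true, y_prob):
    """Candidate cut-off maximizing sensitivity + specificity - 1 (first one on ties)."""
    best_j, best_c = None, None
    for c in sorted(set(y_prob)):
        se, sp = sensitivity_specificity(y_true, y_prob, c)
        j = se + sp - 1.0
        if best_j is None or j > best_j:
            best_j, best_c = j, c
    return best_c


y = [0, 0, 1, 1]
s = [0.1, 0.4, 0.35, 0.8]
print(auc_mann_whitney(y, s))  # 0.75
```

Comparing two models, such as the nomogram and the CALL score, amounts to computing this AUC for each model's scores on the same patients. A formal test of the difference, for example DeLong's test, is not shown.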

Nomogram predicting the deterioration risk of COVID-19 patients in the training dataset. a Nomogram; b ROC curve (AUC = 0.945); c Calibration plot; d DCA. The y-axis represents the net benefit and the x-axis the threshold probability. The red line represents the nomogram, and the gray and black lines represent the assumptions that all patients and no patients deteriorate, respectively. Abbreviations: COVID-19, coronavirus disease 2019; ROC, receiver operating characteristic; AUC, area under the curve; DCA, decision curve analysis

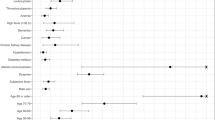

Validation of nomogram performance in the validation and total cohorts

The nomogram also showed high discrimination for deterioration of COVID-19 patients in the validation and total cohorts (AUC = 0.979 and 0.954, respectively; Fig. 2a-b). Calibration plots showed substantial agreement between the risk predicted by the nomogram and the observed deterioration in both cohorts (Fig. 2c-d). The Hosmer-Lemeshow tests were not statistically significant (Chi-square = 2.172, P = 0.988; and Chi-square = 6.577, P = 0.681, respectively), suggesting a good fit. In addition, DCA showed a positive net benefit for the nomogram, supporting its potential clinical utility (Fig. 2e-f).
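The Hosmer-Lemeshow statistic sorts patients by predicted risk and splits them into g groups. It then sums (O − E)² / [E(1 − E/n_g)] over the groups and compares the result with a chi-square distribution, conventionally on g − 2 degrees of freedom. The number of groups used in the study is not reported, so the default `groups=10` below is an assumption. The closed-form tail probability is valid only for even degrees of freedom and is included only to keep the sketch free of dependencies. The function names are hypothetical.

```python
import math


def hosmer_lemeshow(y_true, y_prob, groups=10):
    """Hosmer-Lemeshow statistic with groups formed from sorted predicted risks.

    Groups whose mean predicted risk is exactly 0 or 1 would divide by zero;
    this sketch does not handle them.
    """
    pairs = sorted(zip(y_prob, y_true), key=lambda t: t[0])
    n = len(pairs)
    stat = 0.0
    for g in range(groups):
        chunk = pairs[g * n // groups:(g + 1) * n // groups]
        if not chunk:
            continue
        expected = sum(p for p, _ in chunk)  # E_g: sum of predicted risks
        observed = sum(y for _, y in chunk)  # O_g: observed deteriorations
        mean_p = expected / len(chunk)
        stat += (observed - expected) ** 2 / (expected * (1.0 - mean_p))
    return stat


def chi2_sf_even_df(x, df):
    """Upper-tail chi-square probability; this closed form is valid only for even df."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term = total = 1.0
    for k in range(1, df // 2):
        term *= half / k
        total += term
    return math.exp(-half) * total
```

A large statistic, and therefore a small P value, signals disagreement between predicted and observed risk. A non-significant result, as reported in this section, is consistent with adequate calibration.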

Validation of the nomogram in the validation and total cohorts. a-b ROC curves of the nomogram in the validation and total cohorts (AUC = 0.979 and 0.954, respectively); c-d Calibration plots of the nomogram in the validation and total cohorts; e-f DCA of the nomogram in the validation and total cohorts. The y-axis represents the net benefit and the x-axis the threshold probability. The red line represents the nomogram, and the gray and black lines represent the assumptions that all patients and no patients deteriorate, respectively. Abbreviations: ROC, receiver operating characteristic; AUC, area under the curve; DCA, decision curve analysis

Nomogram for predicting the disease severity and the survival of COVID-19 patients

Total points were calculated for 322 patients in the total cohort using the nomogram constructed in the training cohort. The remaining 29 patients were excluded because of insufficient information. Total points increased with disease severity (P < 0.001, Fig. 3a). Patients with deterioration had higher total points than those without (P < 0.001, Fig. 3b), and total points differed significantly between survivors and non-survivors (P < 0.001, Fig. 3c).
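Nomogram total points are a linear rescaling of the logistic model's linear predictor, so they can be converted back into a probability. The sketch below follows a common convention, which is an assumption here. The predictor with the widest effect range spans 0 to 100 points. Binary predictors such as Lym ≥ 0.8 × 10⁹/L or LDH ≥ 400 U/L enter as 0/1. A 50% predicted probability corresponds to a linear predictor of 0. The coefficients in Table 3 are not reproduced here, so the intercept, coefficients, predictor names, and the `make_nomogram` helper are hypothetical.

```python
import math


def make_nomogram(intercept, coefs, ranges):
    """Hypothetical helper: point scale for a logistic model drawn as a nomogram.

    coefs: {predictor: beta}; ranges: {predictor: (low, high)} shown on each axis.
    Returns (points, probability, points_for_probability) functions.
    """
    lows = {k: min(b * ranges[k][0], b * ranges[k][1]) for k, b in coefs.items()}
    spans = {k: abs(b) * (ranges[k][1] - ranges[k][0]) for k, b in coefs.items()}
    scale = 100.0 / max(spans.values())  # points per unit of linear predictor

    def points(patient):
        # Each predictor contributes points proportional to beta * x above its minimum
        return sum(scale * (coefs[k] * patient[k] - lows[k]) for k in coefs)

    def probability(total_points):
        lp = intercept + sum(lows.values()) + total_points / scale
        return 1.0 / (1.0 + math.exp(-lp))

    def points_for_probability(prob):
        lp = math.log(prob / (1.0 - prob))
        return scale * (lp - intercept - sum(lows.values()))

    return points, probability, points_for_probability


# Hypothetical model: binary predictor "a" and a continuous predictor "b" on 0-4
points, probability, points_for = make_nomogram(-2.0, {"a": 1.0, "b": -0.5},
                                                {"a": (0, 1), "b": (0, 4)})
print(points({"a": 1, "b": 0}))  # 150.0
print(points_for(0.5))           # 200.0
```

In this toy model, 200 points plays the role that 160 points plays in the study's nomogram. It marks a 50% predicted probability of deterioration.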

Nomogram for predicting the deterioration and survival of COVID-19 patients in the total cohort (n = 322). a Violin plot of nomogram total points by clinical type of COVID-19; b Violin plot comparing nomogram total points between patients with and without deterioration; c Violin plot of nomogram total points in surviving and deceased COVID-19 patients; d Kaplan-Meier survival curves of the low- and high-risk groups defined by the cut-off point (P < 0.0001); low risk, total points < 160; high risk, total points ≥ 160; e Time-dependent ROC curve analysis of the nomogram for predicting survival of COVID-19 patients. Abbreviations: COVID-19, coronavirus disease 2019; ROC, receiver operating characteristic; AUC, area under the curve; DCA, decision curve analysis

A total score of 160 points, corresponding to a 50% probability of disease deterioration on the nomogram, was defined as the cut-off point between high- and low-risk patients. The 322 patients in the total cohort were divided into low-risk (total points < 160, n = 289) and high-risk (total points ≥ 160, n = 33) groups. The average follow-up time was 56 days. Kaplan-Meier analysis showed a significant difference in overall survival (OS) between the groups. High-risk patients had poorer OS than low-risk patients (8-week survival rate: 71.41% vs. 100%, log-rank P < 0.0001, Fig. 3d). Time-dependent ROC curve analysis showed an AUC of 0.959 at 8 weeks (Fig. 3e), indicating excellent performance for survival prediction.
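The Kaplan-Meier estimator behind Fig. 3d multiplies together the conditional survival 1 − d_t/n_t at each distinct death time t. Here d_t is the number of deaths at t and n_t is the number of patients still at risk. Censored patients leave the risk set after their last follow-up time. Below is a minimal sketch with hypothetical function names and toy data; the log-rank comparison is not shown.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier survival curve.

    times: follow-up time per patient (e.g. days); events: 1 = death, 0 = censored.
    Returns [(t, S(t))] at each distinct death time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    surv = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = leaving = 0
        # Everyone whose follow-up ends at t (deaths and censorings) is still at risk at t
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            leaving += 1
            i += 1
        if deaths:
            surv *= 1.0 - deaths / at_risk
            curve.append((t, surv))
        at_risk -= leaving
    return curve


def survival_at(curve, t):
    """Step-function lookup: S at the last death time <= t (1.0 before any death)."""
    s = 1.0
    for time, value in curve:
        if time > t:
            break
        s = value
    return s


curve = kaplan_meier([1, 2, 2, 3, 4], [1, 1, 0, 1, 0])
print(survival_at(curve, 2.5))  # approximately 0.6
```

An 8-week survival rate, such as those compared in this section, would correspond to `survival_at(curve, 56)` evaluated on each risk group's follow-up data, with time measured in days. A group with no deaths, like the low-risk group here, stays at 1.0.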

Discussion

COVID-19 has clinical manifestations ranging from asymptomatic infection to pneumonia and life-threatening complications. During diagnosis and treatment, some patients deteriorate rapidly and may even die [19]. Presently, there is no specific therapy for COVID-19. Therefore, early identification of risk factors that predict the likelihood of disease deterioration is important for reducing mortality [12].

Univariate logistic regression analysis identified 24 variables related to COVID-19 deterioration, and LASSO regression was used to construct the prediction model. LASSO regression selects the best predictive subset from a high-dimensional dataset [14]. This may have improved the accuracy of predicting deterioration risk in this study. Based on LASSO regression, a simple nomogram with four common clinical predictors was developed: WBC, CRP, Lym ≥ 0.8 × 10⁹/L, and LDH ≥ 400 U/L. Liang et al. [12] found that low lymphocyte count and high LDH were independent predictors of critical illness. The CALL study, with a sample of 208 patients [11], found that they were independent predictors of disease progression. Both findings are consistent with the results of this study. The AUC of this nomogram (0.945) was higher than that of the CALL score (0.909), indicating excellent predictive performance. Notably, lymphocyte count and LDH were dichotomized at thresholds based on clinical experience rather than analyzed as continuous variables. Furthermore, WBC and CRP were included in the final risk model as markers of inflammation. Importantly, because it uses simple and common clinical features, this nomogram can help clinicians make quick and better decisions.

The nomogram had excellent discrimination (AUC = 0.945), suggesting strong potential for clinical translation. It also showed good calibration, making it a convenient tool with clinical value. In addition, total points increased with disease severity. To further assess its performance in predicting survival, patients in the total cohort were divided into high- and low-risk groups, which differed significantly in survival rate. Time-dependent ROC analysis gave an AUC of 0.959 at 8 weeks, indicating excellent performance for survival prediction. Remarkably, all patients in the low-risk group survived.

However, this study has some limitations. First, this was a single-center retrospective study, which limits the generalizability of the nomogram to other centers. Multiple external validations in different settings and populations are required to fully understand the transportability of the nomogram. Second, patients were enrolled during the first 3 months of the pandemic. The Chinese government then effectively controlled the epidemic. As a result, no newly diagnosed patients from the same institution were available at a later stage to serve as a validation cohort, which also limits the generalizability of the model. Finally, the small sample size may affect the interpretation of the results.

Conclusion

In conclusion, the simple nomogram showed excellent performance in predicting deterioration risk in COVID-19 patients. It can help optimize the use of limited resources, especially in areas with large numbers of cases and/or shortages of medical resources.

Availability of data and materials

The datasets analyzed in this study are not publicly available but will be made available from the corresponding author on reasonable request.

Abbreviations

- ALT: Alanine aminotransferase
- APTT: Activated partial thromboplastin time
- ARDS: Acute respiratory distress syndrome
- AST: Aspartate aminotransferase
- AUC: Area under the curve
- CHD: Coronary heart disease
- CI: Confidence interval
- CK: Creatine kinase
- CKMB: Creatine kinase-MB
- COPD: Chronic obstructive pulmonary disease
- COVID-19: Coronavirus disease 2019
- CRP: C-reactive protein
- CRRT: Continuous renal replacement therapy
- CVD: Cardiovascular disease
- DBIL: Direct bilirubin
- DCA: Decision curve analysis
- DM: Diabetes mellitus
- HL: Hosmer-Lemeshow
- IQR: Interquartile range
- LASSO: Least absolute shrinkage and selection operator
- LDH: Lactate dehydrogenase
- NIVV: Non-invasive ventilation
- OR: Odds ratio
- PCT: Procalcitonin
- PT: Prothrombin time
- ROC: Receiver operating characteristic
- RT-PCR: Reverse transcription-polymerase chain reaction
- SD: Standard deviation
- TBIL: Total bilirubin
- WBC: White blood cells

References

Cucinotta D, Vanelli M. WHO declares COVID-19 a pandemic. Acta Biomed. 2020;91(1):157–60. https://doi.org/10.23750/abm.v91i1.9397.

Mahase E. Covid-19: WHO declares pandemic because of "alarming levels" of spread, severity, and inaction. BMJ. 2020;368:m1036.

Guan WJ, Ni ZY, Hu Y, Liang WH, Ou CQ, He JX, et al. Clinical characteristics of coronavirus disease 2019 in China. N Engl J Med. 2020;382:1708–20.

Lai CC, Shih TP, Ko WC, Tang HJ, Hsueh PR. Severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) and coronavirus disease-2019 (COVID-19): the epidemic and the challenges. Int J Antimicrob Agents. 2020;55(3):105924. https://doi.org/10.1016/j.ijantimicag.2020.105924.

Xie J, Tong Z, Guan X, Du B, Qiu H. Clinical characteristics of patients who died of coronavirus disease 2019 in China. JAMA Netw Open. 2020;3(4):e205619. https://doi.org/10.1001/jamanetworkopen.2020.5619.

Cao B, Wang Y, Wen D, Liu W, Wang J, Fan G, et al. A trial of lopinavir-ritonavir in adults hospitalized with severe Covid-19. N Engl J Med. 2020;382:1787–99.

Grein J, Ohmagari N, Shin D, Diaz G, Asperges E, Castagna A, et al. Compassionate use of Remdesivir for patients with severe Covid-19. N Engl J Med. 2020;382(24):2327–36. https://doi.org/10.1056/NEJMoa2007016.

Antinori S, Cossu MV, Ridolfo AL, Rech R, Bonazzetti C, Pagani G, et al. Compassionate remdesivir treatment of severe Covid-19 pneumonia in intensive care unit (ICU) and non-ICU patients: clinical outcome and differences in post-treatment hospitalisation status. Pharmacol Res. 2020;158:104899. https://doi.org/10.1016/j.phrs.2020.104899.

Sanders JM, Monogue ML, Jodlowski TZ, Cutrell JB. Pharmacologic treatments for coronavirus disease 2019 (COVID-19): a review. JAMA. 2020;323:1824–36.

Cheng Z, Lu Y, Cao Q, Qin L, Pan Z, Yan F, et al. Clinical features and chest CT manifestations of coronavirus disease 2019 (COVID-19) in a single-center study in Shanghai, China. AJR Am J Roentgenol. 2020;215:121–6.

Ji D, Zhang D, Xu J, Chen Z, Yang T, Zhao P, et al. Prediction for progression risk in patients with COVID-19 pneumonia: the CALL score. Clin Infect Dis. 2020;71(6):1393–9. https://doi.org/10.1093/cid/ciaa414.

Liang W, Liang H, Ou L, Chen B, Chen A, Li C, et al. Development and validation of a clinical risk score to predict the occurrence of critical illness in hospitalized patients with COVID-19. JAMA Intern Med. 2020;180(8):1081–9. https://doi.org/10.1001/jamainternmed.2020.2033.

National Health Commission of the People’s Republic of China. Diagnosis and treatment plan of novel coronavirus pneumonia (Trial version Seventh). 2020.

Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. J Stat Softw. 2010;33(1):1–22.

Kramer AA, Zimmerman JE. Assessing the calibration of mortality benchmarks in critical care: the Hosmer-Lemeshow test revisited. Crit Care Med. 2007;35:2052–6.

Vickers AJ, Cronin AM, Elkin EB, Gonen M. Extensions to decision curve analysis, a novel method for evaluating diagnostic tests, prediction models and molecular markers. BMC Med Inform Decis Mak. 2008;8(1):53. https://doi.org/10.1186/1472-6947-8-53.

Vickers AJ, van Calster B, Steyerberg EW. A simple, step-by-step guide to interpreting decision curve analysis. Diagn Progn Res. 2019;3(1):18. https://doi.org/10.1186/s41512-019-0064-7.

Peduzzi P, Concato J, Kemper E, Holford TR, Feinstein AR. A simulation study of the number of events per variable in logistic regression analysis. J Clin Epidemiol. 1996;49(12):1373–9. https://doi.org/10.1016/S0895-4356(96)00236-3.

Pascarella G, Strumia A, Piliego C, Bruno F, Del Buono R, Costa F, et al. COVID-19 diagnosis and management: a comprehensive review. J Intern Med. 2020;288(2):192–206. https://doi.org/10.1111/joim.13091.

Acknowledgements

None.

Funding

The National Natural Science Foundation of China (No. 81400160 and 82070218), the Natural Science Foundation of Fujian Province (No. 2017J01185), and the Novel Coronavirus Pneumonia Prevention and Control Project of Fujian Medical University (2020YJ007) supported this study.

Author information


Contributions

ZYZ, DBD, GPC, and DZK conceptualized and designed the study, interpreted the data, and drafted the manuscript. CHW, YYF, YMH, and MHL were responsible for the acquisition of data and administrative and technical support. ZLL and SDF performed data analysis, and ZLL and YY conducted the statistical analysis. All authors contributed to the writing of the manuscript, read and approved the final submitted version.


Ethics declarations

Ethics approval and consent to participate

The ethics committee of the First Affiliated Hospital of Fujian Medical University approved this study. The study was conducted following the Declaration of Helsinki guidelines. The Ethics Board of the First Affiliated Hospital of Fujian Medical University waived the requirement of written informed consent.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Figure 1S

Potential feature selection using least absolute shrinkage and selection operator (LASSO) regression. (a) The tuning parameter (λ) of the LASSO model was selected by 10-fold cross-validation using the minimum criterion. Mean-squared error (MSE) values were plotted against log(λ). The numbers across the top of the plot represent the number of features remaining. Dotted vertical lines were drawn at the optimal values selected by the minimum criterion and by the one-standard-error rule (1-SE criterion). The optimal λ value of 0.07, with log(λ) = −2.659, was selected using the minimum criterion. (b) LASSO coefficient profiles of the 24 features. A coefficient profile plot was produced against the log(λ) sequence. The four features with non-zero coefficients at the λ selected by 10-fold cross-validation were retained.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Zeng, Z., Wu, C., Lin, Z. et al. Development and validation of a simple-to-use nomogram to predict the deterioration and survival of patients with COVID-19. BMC Infect Dis 21, 356 (2021). https://doi.org/10.1186/s12879-021-06065-z
