Abstract

We discuss the status and prospects of the Large Hadron Collider beauty (LHCb) experiment, one of the four large detectors based at the LHC. The physics programme of the experiment is discussed by highlighting the status of rare b-quark decays, charged-current semileptonic decays and the searches for CP violation. These areas make a strong case for a second upgrade of LHCb, which will fully harness the HL-LHC’s potential as a flavour physics machine while maintaining a rich and diverse research programme. The upgrade also provides an opportunity for the development of novel detector technologies during an exciting period of anticipation in preparation for the future circular collider currently foreseen.

1 Introduction

At this unique juncture in particle physics, a multitude of theories once believed to be ideal complements to the Standard Model (SM) have been either ruled out or so tightly constrained that they no longer hold the same prominence. However, the open issues of the SM that were prevalent before the start of the Large Hadron Collider (LHC) remain unresolved. These circumstances have led some to consider this to be a period of stagnation. Historically, such periods have often served as precursors to significant breakthroughs. The challenge we face is that we cannot predict whether this impending revolution will occur in the coming decade or will require a longer timeline. The initial two runs of the LHC have largely corroborated the SM, exceeding our own expectations. In this scenario, where there is no clear picture of the scale and structure of New Physics (NP), indirect searches and precision measurements emerge as highly promising avenues. Among these, precision measurements in flavour physics are of particular interest as they could potentially elucidate the flavour puzzle: the distinctive pattern of masses and couplings across different families that, although described, remains unexplained within the SM. In more technical terms, the intriguing aspect is that the gauge portion of the SM Lagrangian has a large global symmetry, \(U(3)^5\), which is broken by the Yukawa couplings to the Higgs field and is suggestive of concealed dynamics at work.

Among the various flavour-physics experiments, LHCb has been one of the most prominent for the last 15 years. This is due to its unique sensitivity, which means that results from both the original detector and its upgrades have the potential to significantly influence the future direction of particle physics. The LHCb experiment [1] is one of the four large detectors located at the LHC and is specifically designed to study the decays of hadrons containing beauty and charm quarks. Unlike the ATLAS and CMS detectors, LHCb is designed more like a fixed-target experiment, with the collision point situated on one side of the detector. This single-arm forward spectrometer geometry provides the maximum amount of space to instrument in a single direction, which is crucial to the performance of particle identification (ID) and momentum resolution. This comes at the expense of a smaller acceptance, as only the pseudorapidity range \(2< \eta < 5.0\) is instrumented. However, due to the particular nature of \(b\bar{b}\) production at the LHC, most beauty quarks are produced in the forward direction, and around 20% of them decay within the LHCb acceptance.

Being situated at the LHC, the LHCb detector operates in a hadronic environment, with hundreds of particles produced in every hard pp interaction. This is in contrast to the B-factories, where the two B mesons are much more isolated and kinematically constrained. LHCb can compete in this environment because of the huge production rate at the LHC. For example, starting from 2035, the high-luminosity LHC will produce O(100 M) beauty quark pairs every second [2]. This is equivalent to producing a B-factory dataset every 10 s, albeit in much harsher conditions. The exploitation of this huge dataset for the purposes of heavy flavour physics is a formidable yet, as we will discuss later, rewarding challenge.
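The rate comparison above can be checked with a few lines of arithmetic. The sketch below is only an order-of-magnitude illustration: the production rate is the O(100 M) figure quoted in the text, while the B-factory sample size of \(10^9\) pairs is an assumed round number, not a value taken from this review.

```python
# Order-of-magnitude comparison of b-quark production rates.
BB_PAIRS_PER_SECOND = 1e8  # O(100 M) beauty quark pairs per second, as quoted in the text
B_FACTORY_DATASET = 1e9    # ASSUMPTION: round order of magnitude for a B-factory sample


def seconds_to_match(dataset_size: float, rate_per_second: float) -> float:
    """Time needed to produce `dataset_size` pairs at a constant rate."""
    return dataset_size / rate_per_second


print(seconds_to_match(B_FACTORY_DATASET, BB_PAIRS_PER_SECOND))  # 10.0
```

This counts produced quark pairs only; acceptance, trigger and reconstruction efficiencies are deliberately left out.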

2 Status of the LHCb experiment

Aside from its geometry, the LHCb detector has a few features that distinguish it from the other experiments at the LHC. Firstly, the vertex locator (VELO) is placed only 5.1 mm from the beam, allowing for an excellent impact parameter resolution of roughly 35 \(\mu \hbox {m}\), depending on the transverse momentum of the particle. This is vitally important for the decay-time resolution and for rejecting particles that originate from the primary pp collision. Another feature of LHCb that is crucially important for heavy flavour physics is the ability to identify hadrons. This is provided by ring imaging Cherenkov (RICH) detectors, situated upstream and downstream of a dipole magnet. The tracking system comprises the VELO, a silicon tracking system situated upstream of the magnet and further tracking stations downstream. Together these provide an excellent momentum resolution, resulting in a mass resolution of \(\sim \)20 MeV for a fully reconstructed b-hadron decay.

The LHCb experiment does not operate at the maximum luminosity of \(2\times 10^{34}\) cm\(^{-2}\) s\(^{-1}\) currently accessible at the LHC. The first reason for this choice is the very large occupancy in the forward region of the LHC, which would make particle reconstruction prohibitively complicated. The second reason is the extremely high signal rate, which would result in unfeasible requirements for any trigger or data acquisition (DAQ) system realisable with current technology. The luminosity is therefore ‘levelled’ by defocusing the beam at the interaction point, resulting in a more manageable data rate and events that are simpler to analyse. During runs I and II, the luminosity at LHCb was levelled to a maximum of \(4\times 10^{32}\) cm\(^{-2}\) s\(^{-1}\), which resulted in around 1–2 hard pp interactions per bunch crossing. In this environment the first level of the trigger system, based on a partial readout of the detector such as high transverse energy clusters in the calorimeters, was able to achieve good efficiency for a wide variety of signatures.

For the ongoing LHC run III, the luminosity will be levelled to \(2\times 10^{33}\) cm\(^{-2}\) s\(^{-1}\), a five-fold increase with respect to the previous runs. At this luminosity, there are around 7 pp interactions per bunch crossing, which is a significantly more challenging environment for both triggering and reconstruction. The LHCb detector was therefore upgraded [3] to meet this challenge. The tracking systems were replaced: the strips in the VELO were replaced by a pixel detector; the Tracker Turicensis was replaced by the Upstream Tracker, improving the material budget and increasing the acceptance; and finally the Outer and Inner Trackers were replaced by Scintillating Fibres. These upgrades reduce the occupancy and improve the radiation hardness, both of which are mandatory to deal with the increased data rate.

The second important aspect of the LHCb upgrade 1 is the near-complete replacement of the readout electronics, allowing the full detector to be read out at the bunch crossing rate of 30 MHz. This allows for a trigger system that is fully implemented in software. The main advantage of this is the ability to exploit the large impact parameter of particles that originate from beauty and charm hadron decays. This permits softer transverse momentum requirements than in the original trigger system, which substantially improves the efficiency for fully hadronic decays. Among other things, these decays are of crucial importance for LHCb’s CP-violation programme discussed later in this review. The LHCb upgrade 1 has now been completed, and the detector is currently being commissioned. A total of 50 fb\(^{-1}\) is expected to be collected during the next two run periods until 2032, which will allow for significantly more precise measurements in addition to a broader programme enabled by the new trigger system.

The LHCb physics programme includes a large and diverse set of measurements, including studies of charm decays, electroweak physics and analyses of heavy-ion collisions. In this review, we focus on measurements performed with b-hadron decays, which remain a core part of the experiment’s physics potential. The b physics programme is summarised by highlighting three areas: studies of rare b decays, measurements of charged-current semileptonic decays and searches for new sources of CP violation.

3 Rare decays

Rare decays of b-hadrons serve as promising probes for NP: because they are suppressed in the SM, contributions from NP can be competitive with the SM decay amplitude. A particularly sensitive set of rare decays are those proceeding via flavour-changing neutral currents (FCNC), where a b-quark transitions into either a strange or a down quark. FCNCs have had a significant historical impact on the construction of the SM; for example, their suppression led Glashow, Iliopoulos, and Maiani to predict the existence of the charm quark (c) [4]. Interestingly, when operators of dimension greater than four are added to the SM, FCNC transitions naturally emerge, which is a key reason why rare decays are often targeted in the search for NP. Rare decays play an outsized role in the physics programme of LHCb as the experiment has a unique sensitivity, thanks to the vast number of b-quarks produced at the LHC and its exceptional performance in particle identification and in momentum and vertex resolution, which are crucial for background control.

In a scenario where we postulate that there are no new particles below the electroweak scale, we can characterise \(b\rightarrow s\ell ^+\ell ^-\) transitions using an effective Lagrangian encompassing only light SM fields. The main distinctions between the SM and NP effective Lagrangians, renormalized at a scale \(\mu \sim m_b\), are the number of effective operators, which can be larger in the NP case, and the values of the corresponding coefficients.
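In a commonly used convention, given here as an assumption since the overall normalisation and sign differ between references, the effective Lagrangian takes the form

\[ {\mathcal {L}}_{\textrm{eff}} = \frac{4 G_F}{\sqrt{2}}\, V_{tb} V_{ts}^{*}\, \frac{\alpha _e}{4\pi } \sum _i {\mathcal {C}}_i(\mu )\, {\mathcal {O}}_i(\mu ), \]

with \(V_{tb}\) and \(V_{ts}\) the relevant CKM elements, \(\alpha _e\) the electromagnetic coupling, \({\mathcal {C}}_i\) the Wilson coefficients and \({\mathcal {O}}_i\) the local operators,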

where \(G_F\) denotes the Fermi constant, and where the index i indicates the following set of dimension-six operators (treated independently for \(\ell =e\) and \(\mu \)):
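A typical choice of basis for the operators whose coefficients are discussed in this section is sketched below. The chirality assignments follow a widespread convention and are an assumption, and the electromagnetic dipole operator is omitted for brevity:

\[ {\mathcal {O}}_{9}^{(\prime )} = (\bar{s}\gamma _\mu P_{L(R)} b)(\bar{\ell }\gamma ^\mu \ell ), \qquad {\mathcal {O}}_{10}^{(\prime )} = (\bar{s}\gamma _\mu P_{L(R)} b)(\bar{\ell }\gamma ^\mu \gamma _5 \ell ), \]

\[ {\mathcal {O}}_{S}^{(\prime )} = (\bar{s} P_{R(L)} b)(\bar{\ell }\ell ), \qquad {\mathcal {O}}_{P}^{(\prime )} = (\bar{s} P_{R(L)} b)(\bar{\ell }\gamma _5 \ell ), \]

where \(P_{L,R} = (1\mp \gamma _5)/2\) are the chirality projectors. In the SM only \({\mathcal {C}}_{9}\) and \({\mathcal {C}}_{10}\) are sizeable in this set.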

This methodological approach is highly effective in correlating different decays, not only facilitating a combined interpretation of different measurements for enhanced sensitivity but also leveraging the coherence of various modes to mitigate the impact of statistical and systematic effects.

In recent years, several discrepancies in rare decays have emerged, which form a large part of the so-called flavour anomalies. The anomalies related to rare decays comprise three types of measurements: lower than predicted branching fractions for various \(b\rightarrow s\mu \mu \) decays [5,6,7], a deviation in the angular distribution of \(B\rightarrow K^*\mu ^+\mu ^-\) decays [8, 9], and previous deviations in tests of lepton flavour universality. Furthermore, discrepancies with respect to the SM in tree-level semitauonic decays, proceeding through \(b\rightarrow c\tau \nu \) processes, have been identified and will be elaborated upon in the next section.

The first anomaly observed in rare decays was a discrepancy in the angular distribution of the decay \(B\rightarrow K^*\mu ^+\mu ^-\) [10], specifically in the observable \(P_5'\) [11, 12], which was later corroborated by further measurements [8, 9]. An effective field theory analysis of the deviation points towards a lower than expected value of the Wilson coefficient \({\mathcal {C}}_{9}\), the coupling associated with the vector current to the di-lepton pair, at the level of around 3 standard deviations. One of the striking features of this interpretation is that such a shift is numerically consistent with the lower branching fractions seen in other decay modes, which reduces the likelihood of a statistical fluctuation. Despite this intriguing coherence, it appears that a theoretical breakthrough is necessary for definitive conclusions, as the measurements indicate a shift in \({\mathcal {C}}_{9}\), which can also be affected by long-distance contributions from \(b\rightarrow s \bar{c} c\) decays, where the \(c\bar{c}\) pair annihilates to form the two leptons. As the \(c\bar{c}\) annihilation proceeds via a photon, the contribution interferes with the vector contribution of the pure semileptonic \(b\rightarrow s \mu \mu \) decay and can mimic a NP effect. As this \(c\bar{c}\) contribution is fully hadronic, it is very difficult to compute, and controlling it is therefore one of the main avenues to clarify the situation. Approaches using data [13,14,15,16,17] have been proposed and implemented in order to help constrain the theoretical predictions. At present there is not yet a consensus on the size of this effect, but progress is steadily being made (see for example Ref. [18]).

Fortunately, this issue is not present for fully leptonic rare decays of the type \(B_{(s)}\rightarrow \ell ^+\ell ^-\), as in the SM they proceed only via an axial-vector coupling to the two leptons. A famous example of this type is the decay \(B_s\rightarrow \mu ^+\mu ^-\), which has long been considered a critical channel for the search for NP because it is suppressed in the SM both by helicity and by the GIM mechanism. The decay can be significantly enhanced in models with an extended Higgs sector, such as the MSSM, where the branching ratio is proportional to \(\tan ^6\beta \) [19,20,21,22]. In terms of effective operators, NP can contribute through new scalar/pseudoscalar operators, yielding non-zero values for the Wilson coefficients \(C_{S,P}^{(\prime )}\), or by modifying the value of the axial-vector coefficient \(C_{10}\). The main experimental challenge for this decay is to distinguish it from background sources, which have much larger branching fractions than the ultra-rare signal.

The latest LHCb measurement yields \({{{\mathcal {B}}}}(B_{s}\rightarrow \mu \mu )=(3.09^{+0.48}_{-0.44}) \times 10^{-9}\) [23], which is consistent with the SM prediction [24, 25]. More recently, the CMS experiment updated their result to \({{{\mathcal {B}}}}(B_{s}\rightarrow \mu \mu )=(3.66\pm 0.14) \times 10^{-9}\) [26]. A combination of the various measurements of this branching ratio, including one from ATLAS [27], limits potential NP contributions to \(C_{10}\).

In the absence of substantial theoretical advancement for rare semileptonic decays, one viable approach to address the anomalies observed in \(b\rightarrow s\mu ^{+}\mu ^{-}\) processes, assuming they are a result of NP, would be to relate them to other phenomena and look for predicted signatures in those systems. Consequently, when tests of lepton universality in \(b\rightarrow s\ell ^{+}\ell ^{-}\) processes at LHCb displayed discrepancies with respect to the SM, it was reasonable to consider these discrepancies as potentially related (see Refs. [28, 29] and references therein). The following ratios of branching fractions, denoted by \(R(X_s, q_{min}^2, q_{max}^2)\), are considered:
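In its conventional form, stated here as an assumption about the exact notation, the ratio compares the muon and electron modes integrated over the same window of the squared di-lepton mass \(q^2\):

\[ R(X_s, q_{min}^2, q_{max}^2) = \frac{\displaystyle \int _{q_{min}^2}^{q_{max}^2} \frac{d{\mathcal {B}}(B\rightarrow X_s \mu ^+\mu ^-)}{dq^2}\, dq^2}{\displaystyle \int _{q_{min}^2}^{q_{max}^2} \frac{d{\mathcal {B}}(B\rightarrow X_s e^+e^-)}{dq^2}\, dq^2}, \]

where \(X_s\) denotes the strange hadronic system, for example \(K\) or \(K^*\). Away from the di-lepton mass threshold this ratio is expected to be very close to unity in the SM.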

Lepton Universality is an accidental symmetry of the SM. Hence, it would not be surprising for extensions of the SM to break it. Although the quantity in Eq. (3) is theoretically clean (see Refs. [30, 31] for discussions on theory uncertainty), its experimental measurement is more challenging, particularly at LHCb.

These experimental challenges arise from the significantly larger bremsstrahlung radiation of electrons compared to muons, which results in poorer momentum resolution. This, in turn, leads to lower efficiency, poorer invariant mass resolution, and ultimately, more complex handling of various backgrounds.

LHCb had initially measured the observables \(R_K\) [32], \(R_{K^*}\) [33], \(R_{K_S}\) [34] and \(R_{pK}\) [35] to be all below unity, with a local combined significance [36] exceeding four standard deviations. This brought considerable attention to the flavour anomalies, as they appeared to present a coherent pattern involving a non-lepton-universal NP coupling \(C_V=C_9-C_{10}\).

The general analysis strategy comprises the following steps. The primary trigger for electron events utilizes the electromagnetic calorimeter, a method that is less efficient than triggering muons, which is accomplished through the muon detector. The remainder of the event is also utilized as an additional trigger line. To alleviate the issue of poor resolution caused by bremsstrahlung radiation, a bremsstrahlung recovery algorithm is implemented for the electrons. This involves adding to the electron momentum the momentum of bremsstrahlung photons that are compatible with the electron’s trajectory.
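The bremsstrahlung recovery step can be illustrated with a toy sketch. It is not the LHCb algorithm: compatibility is reduced here to a simple opening-angle cut with respect to the electron direction, assumed to be the direction measured upstream of the magnet, and the function names and the 2 mrad threshold are hypothetical.

```python
import math


def opening_angle(u, v):
    """Angle in radians between two 3-momentum vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    cos_angle = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.acos(cos_angle)


def recover_bremsstrahlung(electron_p, photons, max_angle=0.002):
    """Add to the electron momentum every photon within `max_angle` of it.

    electron_p: (px, py, pz) of the electron before the magnet (assumed).
    photons: iterable of (px, py, pz) photon candidates.
    max_angle: hypothetical compatibility threshold in radians.
    """
    corrected = list(electron_p)
    for photon_p in photons:
        if opening_angle(electron_p, photon_p) < max_angle:
            corrected = [c + g for c, g in zip(corrected, photon_p)]
    return tuple(corrected)


# A collinear photon is added; one at a large angle is ignored.
electron = (0.0, 0.0, 10.0)
photons = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0)]
print(recover_bremsstrahlung(electron, photons))  # (0.0, 0.0, 15.0)
```

In a real detector the matching is performed in the calorimeter plane and must deal with photons shared between nearby tracks, which this sketch ignores.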

The primary difference between the latest LHCb analysis [37, 38] and previous ones lies in the treatment of misidentified \(B\rightarrow hhh X\) type backgrounds that contaminate the electron channel. To account for all potential hadron misidentified backgrounds, a data-driven procedure was developed. This involves creating a hadron background-enriched sample by inverting the PID criteria. The candidates are weighted according to their misidentification probability, obtained with control channels. After subtracting the residual electron component, a high-purity background sample for single and double hadron misidentification is obtained. This provides a template for fitting the invariant mass distributions, as shown in Fig. 1.
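The weighting step of such a data-driven procedure can be sketched as follows. This is a generic "fail-to-pass" transfer-weight illustration rather than the method of Refs. [37, 38]: the weight \(p/(1-p)\), the function names and the example numbers are all assumptions, and the subtraction of the residual electron component is omitted.

```python
import math


def transfer_weight(misid_probability):
    """Weight mapping a candidate that fails the electron PID onto the
    expected number that would pass it, for misID probability p."""
    if not 0.0 <= misid_probability < 1.0:
        raise ValueError("misID probability must be in [0, 1)")
    return misid_probability / (1.0 - misid_probability)


def weighted_template(masses, misid_probabilities, bin_edges):
    """Histogram inverted-PID candidates using per-candidate weights."""
    counts = [0.0] * (len(bin_edges) - 1)
    for mass, prob in zip(masses, misid_probabilities):
        for i in range(len(counts)):
            if bin_edges[i] <= mass < bin_edges[i + 1]:
                counts[i] += transfer_weight(prob)
                break
    return counts


# Hypothetical candidates: invariant masses in MeV and misID probabilities
# that would in practice be taken from control channels.
masses = [5000.0, 5100.0, 5300.0]
probabilities = [0.5, 0.2, 0.5]
template = weighted_template(masses, probabilities, [4900.0, 5200.0, 5500.0])
print(template)
```

For candidates with two misidentified hadrons the per-hadron weights would be multiplied; the resulting histogram plays the role of the background template in the invariant mass fit.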

Fig. 1 Invariant mass distributions of \(B^+\rightarrow K^+\ell ^+\ell ^-\) and \(B^0\rightarrow K^{*0}\ell ^+\ell ^-\) for the muon mode (top four figures) and electron mode (bottom four figures) with the fit to data superimposed. Reproduced from Ref. [37]

The latest LHCb measurements of \(R_K\) and \(R_{K^*}\) are performed in two different regions of \(q^2\), resulting in four different measurements. These measurements are compatible with SM predictions, significantly altering the broader picture. Figure 2 compares the results of previous analyses [32, 33] with the latest results [37, 38] in the four bins of \(q^2\). It is worth emphasising that while these measurements agree with the SM, they can still accommodate a 5-10% violation of lepton universality (LU). The new results have strongly influenced global fits for the flavour anomalies, which were approaching a significance of five standard deviations prior to these analyses (see e.g. Ref. [39]). While different groups obtain compatible values under the same assumptions, papers reporting different significances differ mainly in how they handle non-local contributions from charm. Given the updated results and the current uncertainty on the \(c\bar{c}\) contribution, the situation for the rare decay anomalies remains unresolved.

Fig. 2 Summary of the measurements of \(R_{K}\) and \(R_{K^*}\) across different \(q^2\) regions, as reported by LHCb. Previous measurements refer to [32, 33]. The more recent measurements [37, 38] have introduced improved background handling techniques and thus supersede the previous ones. Note that the measurements of \(R_{K^*}\) in the low-\(q^2\) region, as specified in the cited references, were carried out within two distinct \(q^2\) ranges: \(0.045<q^2<1.1\) \(\hbox {GeV}^2\) and \(0.01<q^2<1.1\) \(\hbox {GeV}^2\), respectively

Importantly, the uncertainty of the measurements is dominated by the statistical component, so LHCb and its upgrades will remain the primary experiment for LU tests in rare decays. This is significant because any LU violation would be a clear indication of NP. The anomalies have inspired innovative theoretical models that provide an intriguing explanation for the flavour puzzle. Many of these compelling models regard the third family as unique. For these models, LU tests with the tau in the final state are critical, while electron/muon LU violation can be minimal without causing issues for the models. Consequently, a promising way to test these models, besides semitauonic measurements, would be rare decays with taus in the final state of the type \(b\rightarrow s\tau \tau \).

Most models that account for the flavour anomalies through the addition of NP also predict potentially significant effects in \(b\rightarrow s\tau \tau \) transitions due to a hierarchical coupling to the different generations. The current best limits are as follows:

These were established by the LHCb [40], BaBar [41] and Belle [42] experiments. Evidently, rare decays involving tau particles in the final state pose greater challenges than their counterparts involving electrons and muons, as tau particles decay before reaching the detector. The upgrade of the Belle experiment, Belle II, is expected to achieve a limit of \(5.4\times 10^{-4}\) for \(B^0\rightarrow K^{*0}\tau \tau \) with a luminosity of 50 \(\hbox {ab}^{-1}\) [43].

Such decays prove even more experimentally challenging at the LHCb experiment, where there is a high density of particles and the momentum of the decaying B-meson is unknown, in contrast to the Belle II experiment. Regardless, LHCb established the world’s first limit on \(B_s \rightarrow \tau \tau \) and (assuming no contribution of \(B_s\)), the best limit on \(B^0 \rightarrow \tau \tau \) [40]. Studies on \(B\rightarrow X_s \tau \tau \) at LHCb are ongoing.

Moreover, a significant NP contribution to \(b\rightarrow s\tau \tau \) could be investigated indirectly by fitting the dimuon spectrum of \(B^+\rightarrow K^+\mu \mu \), as suggested in Ref. [44]. While this technique requires assumptions on the theoretical model of the dimuon spectrum, it has the potential to yield results competitive with direct searches. Thus, such indirect searches could hold particular relevance for the LHCb upgrade II.

Finally, the search for lepton flavour violation (LFV) represents a fascinating area of study, as this accidental symmetry is already violated in the SM due to neutrino oscillations. However, the LFV induced by the SM is at an unmeasurably low level. Many extensions of the SM, including models aimed at explaining the flavour anomalies, predict LFV at a level that upcoming particle physics experiments may be capable of measuring. Searches for LFV at the LHCb upgrades complement those of dedicated experiments. Though it is inherently more challenging to set LFV limits for the third family of quarks and leptons than for the second family, the potential preferential coupling of NP to the third family might offset these experimental constraints. A summary of LFV limits set by the LHCb experiment can be found in Table 1.

Limits on \(\tau \rightarrow 3 \mu \) are currently dominated by the Belle experiment, with Belle II anticipated to reach the level of a few times \(10^{-10}\) assuming no background [43]. For LHCb, surpassing the limits set by Belle II will pose a considerable challenge. However, sensitivity studies indicate that LHCb Upgrade II could also explore the level of \(10^{-9}\). It is worth mentioning that LHCb might be the only experiment capable of potentially confirming a discovery by Belle II.

LHCb is leading in setting limits on \(b\rightarrow s\mu e\) across all channels, as well as on \(b\rightarrow s\tau \mu \). All these limits are statistically limited and are expected to remain so after Run 3 and Run 4. It is important to note that LHCb has begun to explore an intriguing region for \(b\rightarrow s\tau \mu \), making future measurements especially interesting.

4 Tree-level semileptonic decays

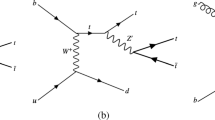

In the SM, tree-level semileptonic decays of the type \(b\rightarrow c,u \ell ^{-}\bar{\nu }_{\ell }\) proceed via a charged-current interaction mediated by the W boson. The presence of the weakly interacting neutrino in the final state is an unambiguous signal of a short-distance interaction, substantially simplifying the theoretical treatment as no long-distance contributions are present. The price to pay for this theoretical clarity is large, however, as the signal is necessarily partially reconstructed. Therefore, it was a surprise that LHCb has contributed as significantly as it has in this system.

Given their theoretical appeal, tree-level semileptonic decays are the ideal system to measure the magnitudes of the CKM elements \(V_{ub}\) and \(V_{cb}\). There are two main ways to determine these parameters. The first is to study inclusive \(b\rightarrow c (u) \ell \nu _{\ell }\) decays, where all hadronic final states of the charm or the up quark are included in the signal. The second is to reconstruct an exclusive hadronic final state such as \(B\rightarrow \pi \ell \nu \). Measurements based on exclusive and inclusive methods have disagreed for more than a decade for both \(|V_{ub}|\) and \(|V_{cb}|\) [45]. This disagreement is one of the issues that should be resolved to maximise the sensitivity of the test of CKM unitarity.

Determining a CKM magnitude requires control over the absolute normalisation of the production rate and efficiency, which is difficult at a hadron collider. Therefore, the strategy at LHCb is to normalise the \(b\rightarrow u\) transition to a corresponding \(b \rightarrow c\) transition, thus performing a measurement of \(|V_{ub}|/|V_{cb}|\). This has been done with \(\Lambda _b^{0} \rightarrow p \mu \nu \) and \(B_{s}^{0} \rightarrow K^{+}\mu ^{-}\nu \) decays, which are easier signatures at LHCb than the canonical \(B\rightarrow \pi \mu \nu \) decay used at the B-factories, due to the large number of pions that originate from background sources. The main strategy of the analysis is to take advantage of the high signal yields at LHCb to apply very tight selection criteria, which improve the purity and consequently reduce systematic uncertainties associated with the background. An example of this is to select candidates that have the best vertex resolution, as these are more easily distinguished from background and have better kinematic resolution.
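Schematically, and with notation that is introduced here purely for illustration, the measured ratio of branching fractions for a \(b\rightarrow u\) signal decay \(H_b\rightarrow H_u\mu \nu \) and a \(b\rightarrow c\) normalisation decay \(H_b\rightarrow H_c\mu \nu \) relates to the CKM elements as

\[ \frac{{\mathcal {B}}(H_b\rightarrow H_u \mu \nu )}{{\mathcal {B}}(H_b\rightarrow H_c \mu \nu )} = \frac{|V_{ub}|^2}{|V_{cb}|^2}\, R_{FF}, \]

where \(R_{FF}\) is the ratio of the form-factor integrals of the two decays over the selected \(q^2\) regions and has to be supplied by theory. The production rate of the parent hadron \(H_b\) cancels in the ratio, which is what removes the need for an absolute normalisation.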

When using lattice QCD, the results from LHCb agree with the B-factory results based on \(B\rightarrow \pi \mu \nu \). This agreement significantly strengthens the confidence in the exclusive determinations of \(|V_{ub}|\) which will be crucial to finally solving the inclusive-exclusive puzzle. The uncertainties are also competitive with those from the B-factories and are expected to reach an ultimate precision of 1% after the second LHCb upgrade [46], which relies on further progress on lattice QCD techniques.

On the \(|V_{cb}|\) side, the determination is strongly affected by the treatment of the hadronic form factors and how they are combined with the experimental data [47,48,49,50]. The differential shape of the branching fraction as a function of the decay kinematics, such as the squared di-lepton mass \(q^{2}\), provides a large amount of experimental information with which to constrain the theory parameters. This is where LHCb could provide a complementary measurement: the large signal yields will result in very precise measurements, albeit in wider kinematic bins owing to the poorer resolution compared to the B-factories. These measurements will have a significant impact, provided that systematic uncertainties remain below the very high statistical precision.

Due to their large branching fractions, semileptonic decays are a unique place to study decays involving the notoriously difficult \(\tau \)-lepton. Despite its large SM amplitude, the decay \(b\rightarrow c\tau ^{-}\bar{\nu }_{\tau }\) is highly sensitive to NP due to the large number of third-generation fermions involved in the decay. The branching fractions of these decays are tested with respect to the SM by normalising them to their muonic counterparts to form lepton universality ratios
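In the standard notation, assumed here to match the \(R(D^{(*)})\) symbols used later in this section, these ratios read

\[ R(D^{(*)}) = \frac{{\mathcal {B}}(B\rightarrow D^{(*)}\tau ^{-}\bar{\nu }_{\tau })}{{\mathcal {B}}(B\rightarrow D^{(*)}\mu ^{-}\bar{\nu }_{\mu })}, \]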

which are predicted to be around 30% in the SM due to the kinematic suppression caused by the large mass of the \(\tau \)-lepton. \(\tau \)-leptons are notoriously difficult to reconstruct as they decay with at least one neutrino and have a low visible branching fraction. For semitauonic decays, this means that at least two neutrinos are missing from the decay, resulting in a broad signal shape that is highly susceptible to backgrounds.

There are two decay modes of the \(\tau \)-lepton that are considered. The first is the leptonic decay \(\tau ^{-} \rightarrow \mu ^{-}\bar{\nu }_{\mu }\nu _{\tau }\), which suffers from three missing neutrinos but has a large signal yield and a well understood \(\tau \) decay. The second is the three-prong decay \(\tau ^{-}\rightarrow \pi ^{-}\pi ^{+}\pi ^{-}\nu _{\tau } (\pi ^{0})\) which allows for a better kinematic reconstruction and higher purity at the expense of a smaller signal yield. For both modes, the most dangerous background is from \(X_{b}\rightarrow X_{c}\bar{X}_{c} (X)\) decays, where one of the \(X_{c}\) hadrons mimics a \(\tau \) decay. Due to the similarity in the mass and lifetime, the kinematics of the \(X_{c}\) decay products can be fiendishly difficult to disentangle from the signal.

The signal yields are determined by three-dimensional fits to variables that discriminate between signal and the various backgrounds. Examples include the \(q^{2}\) distribution or the \(\tau ^{-}\) decay time. The latest LHCb measurements [51, 52] of \(R(D^{(*)})\) are shown in Fig. 3 together with those of the B-factories [53,54,55,56,57,58]. The fact that the hadron collider measurements are competitive with those from the B-factories is a huge success story of the LHCb experiment. The SM prediction is also depicted, which is 3.3\(\sigma \) from the world average. Given the complexity of the measurements, caution should be taken when interpreting this discrepancy as a sign of NP, but it is interesting to note that three separate experiments consistently measure higher semitauonic yields than predicted, and in more than one \(\tau \) decay channel. Further measurements, including those only accessible at the LHC such as those using \(\Lambda _b\) baryons [59], will be crucial to clarify the situation in the future.

Fig. 3 Measurements and SM predictions (black) of the lepton universality ratios \(R(D)\) and \(R(D^*)\) [45]

5 Searches for CP violation

The observed level of baryon asymmetry in the universe mandates sources of CP violation beyond the SM [60]. The LHCb experiment is contributing to the search for new sources of CP violation in several different ways. The first that we will discuss is the contribution from LHCb in testing the unitarity of the CKM matrix. Here, LHCb dominates the precision on the CKM angle \(\gamma \), provides world-leading results on the CKM parameter \(\sin (2\beta )\) and makes important contributions to the CKM magnitude ratio \(|V_{ub}|/|V_{cb}|\).

When LHCb started collecting data in 2009, the angle \(\gamma \) was the least precisely known angle of the CKM unitarity triangle, with a precision of \(\sim \)25% [61]. Over a decade of LHCb measurements sensitive to the interference between \(b\rightarrow c\) and \(b\rightarrow u\) transitions have improved the precision by over a factor of six. Unlike for the CKM parameter \(\sin (2\beta )\), there is no golden mode for \(\gamma \) measurements, which means that the ultimate precision is obtained by combining several different \(B\rightarrow D h\) analyses. Therefore, the ability to reconstruct a wide variety of fully hadronic final states is a key requirement for the experiment. Of particular importance is \(K\)-\(\pi \) separation, as the main sensitivity arises from CKM-suppressed decays \(B^{+}\rightarrow \bar{D}^{0} K^{+}\), which would be swamped by the more abundant decay \(B^{+}\rightarrow \bar{D}^{0}\pi ^{+}\) if no particle ID requirements were applied. More recently, decay modes involving \(\pi ^{0}\) mesons were included [62] to further boost the sensitivity, using charm decays such as \(D\rightarrow h^{+} h^{'-}\pi ^{0}\). The ability to reconstruct neutral pions and obtain physics sensitivity from decays involving them was a surprise given the large occupancy in the calorimeters. Other novel reconstruction techniques involve determining the signal yield of \(B\rightarrow D^{*0} h\) decays without reconstructing the \(\pi ^{0}\) from the \(D^{*0}\rightarrow D^{0} \pi ^{0}\) decay. This is possible due to the low Q-value of the \(D^{*0}\) decay. All the different \(B\rightarrow Dh\) decays have a complementary sensitivity to \(\gamma \) and the associated hadronic nuisance parameters. Their combination results in \(\gamma = (65.4^{+3.8}_{-4.2})^\circ \), where the charm mixing parameters are also measured simultaneously [63]. Most of the measurements that contribute to this average use the full LHCb dataset of 9 fb\(^{-1}\), although there are a few that are yet to be updated, such as that from \(B_{s}\rightarrow D_{s} K^{+}\) [64]. Being based on fully hadronic decays, these measurements will particularly benefit from the improved trigger system in run III.

The CKM parameter \(\sin (2\beta )\) is another area where LHCb is currently world leading. Here, the golden mode is the decay \(B^{0}\rightarrow J/\psi K^{0}_{s}\), where the decay into a CP eigenstate allows for interference between the mixing and decay amplitudes. There are two key experimental challenges associated with this analysis. The first is the ability to reconstruct the long-lived \(K_{s}^{0}\) meson, which has an average decay length of around one metre in the LHCb detector. This is significantly longer than at the B-factories due to the larger boost at the LHC centre-of-mass energy, and results in a comparatively lower reconstruction efficiency. This is one of the reasons why the precision on \(\gamma \) has been dominated by LHCb measurements for years, whereas the world’s most precise measurement of \(\sin (2\beta )\) has only recently come from LHCb.

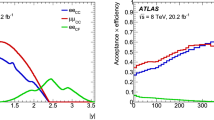

The other challenge for this measurement is flavour tagging, as the final state does not determine the flavour of the \(B^{0}\) meson at production. Flavour tagging at a hadron collider is much more difficult than at the B-factories, where the two B mesons are quantum entangled and are produced in isolation. Flavour tagging at LHCb relies on two aspects of \(b\bar{b}\) events in LHC collisions. The first is to exploit correlations of accompanying particles in the hadronisation of the b-hadron of interest, known as same-side tagging. The second is to exploit anticorrelations in the decay products of the other b-hadron produced in the event, known as opposite-side tagging. The performance of flavour tagging is quantified by the tagging power, which represents the size, relative to the full sample, of a perfectly tagged dataset that would have equal precision. The tagging power is a result of the exploitation of several different weak correlations and has steadily improved as more sophisticated techniques have been employed to utilise more inclusive decay pathways. This can be seen in Fig. 4, which shows the tagging power reported in LHCb publications as a function of the preprint date. This improvement is one of the reasons why the latest precision of \(\sin (2\beta )\) has improved beyond the naive scaling with luminosity.
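The tagging power is conventionally written as \(\varepsilon _{\textrm{eff}} = \varepsilon _{\textrm{tag}}(1-2\omega )^{2}\), where \(\varepsilon _{\textrm{tag}}\) is the fraction of candidates that receive a tag decision and \(\omega \) is the fraction of tagged candidates assigned the wrong flavour. The minimal sketch below evaluates this expression. The function names and the numerical inputs are illustrative assumptions and are not LHCb results.

```python
def tagging_power(tag_efficiency: float, mistag_fraction: float) -> float:
    """Return eps_tag * (1 - 2*omega)^2 for the given tagging performance."""
    if not 0.0 <= tag_efficiency <= 1.0:
        raise ValueError("tag_efficiency must lie in [0, 1]")
    if not 0.0 <= mistag_fraction <= 1.0:
        raise ValueError("mistag_fraction must lie in [0, 1]")
    dilution = 1.0 - 2.0 * mistag_fraction  # equals 0 for a random tag decision
    return tag_efficiency * dilution ** 2


def equivalent_perfect_sample(n_candidates: float,
                              tag_efficiency: float,
                              mistag_fraction: float) -> float:
    """Size of a perfectly tagged sample with the same statistical power."""
    return n_candidates * tagging_power(tag_efficiency, mistag_fraction)


if __name__ == "__main__":
    # Hypothetical inputs: half of the candidates tagged, a quarter of those wrongly.
    print(tagging_power(0.5, 0.25))
    print(equivalent_perfect_sample(100_000, 0.5, 0.25))
```

Because the mistag fraction enters quadratically through the dilution factor, a modest reduction in \(\omega \) can matter more than a comparable gain in tagging efficiency.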

Beyond the unitarity of the CKM matrix, CP violation in \(B_{s}^{0}\) mixing is also highly sensitive to many NP models which favour second and third generation couplings [65]. This means that the measurement of the CP-violating phase in the \(B_{s}^{0}\) system, \(\phi _{s}\), has unique NP potential. The golden mode for this observable is \(B_{s}^{0} \rightarrow J/\psi hh\), where hh is either a pair of pions or kaons. In addition to flavour tagging and particle ID, a key aspect of the LHCb performance is the decay-time resolution, which is needed because of the very fast oscillations of the \(B_{s}^{0}\) meson. This is where the large boost provided in the high-energy LHC environment is highly advantageous, leading to a time resolution of 35–45 fs. This performance is demonstrated by an ultra-precise measurement of the \(B_{s}^{0}\) oscillation frequency [66] using \(B_{s}^{0}\rightarrow D_{s}^{-} \pi ^{+}\) decays. The decay-time distribution of candidates that have or have not undergone an oscillation is shown in Fig. 5, demonstrating that the remarkably fast oscillations can be resolved by the LHCb detector. This level of fidelity is crucial for the measurement of \(\phi _{s}\), which has recently been performed with the full LHCb Run 1–2 dataset of 9 \(\mathrm{fb}^{-1}\) [67]. The result is \(-0.039\pm 0.022\pm 0.006\) rad, which is consistent with the SM and is two times more precise than any other measurement. Despite the remarkable precision, the comparison to the SM is still limited by the statistical uncertainty of the measurement, meaning that there are excellent prospects for the upgraded LHCb detector in the future. Such progress will rely on control of the penguin pollution, which can be constrained using the SU(3)-related decay \(B_{s}^{0}\rightarrow J/\psi K^{*0}\) [68].
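To illustrate why a resolution of a few tens of femtoseconds matters, one can use the standard result that a Gaussian decay-time resolution \(\sigma _{t}\) damps an oscillation of angular frequency \(\Delta m_{s}\) by a factor \(\exp (-\Delta m_{s}^{2}\sigma _{t}^{2}/2)\). The sketch below evaluates this dilution for the quoted 35–45 fs range. The oscillation frequency of roughly 17.77 ps\(^{-1}\) is an approximate value assumed here for illustration and is not quoted in the text, and the function name is hypothetical.

```python
import math

# Assumed, approximate B_s oscillation frequency in ps^-1 (not taken from the text).
DELTA_M_S_PER_PS = 17.77


def resolution_dilution(sigma_t_fs: float,
                        delta_m_per_ps: float = DELTA_M_S_PER_PS) -> float:
    """Damping of the oscillation amplitude for a Gaussian time resolution."""
    if sigma_t_fs < 0.0:
        raise ValueError("sigma_t_fs must be non-negative")
    sigma_t_ps = sigma_t_fs / 1000.0  # convert femtoseconds to picoseconds
    return math.exp(-0.5 * (delta_m_per_ps * sigma_t_ps) ** 2)


if __name__ == "__main__":
    for sigma in (35.0, 45.0, 100.0):
        print(f"sigma_t = {sigma:5.1f} fs -> dilution = {resolution_dilution(sigma):.3f}")
```

Under these assumptions most of the oscillation amplitude survives at 35–45 fs, whereas a resolution of 100 fs would wash out the bulk of it.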

Fig. 5 Decay-time distribution of \(B_{s}^{0}\rightarrow D_{s}^{-} \pi ^{+}\) decays, split between cases in which the \(B_{s}^{0}\) has undergone oscillation or not [66]

In order to observe CP violation directly in the decay of a particle, one needs two decay amplitudes with different weak and strong phases. The size of the CP asymmetry is maximised when the magnitudes of the two amplitudes are of similar size. Fully hadronic charmless B decays are perfect laboratories for studying such large effects, as both tree- and loop-level diagrams contribute to the final state. Furthermore, the CKM suppression of the tree-level \(b\rightarrow u\) amplitude allows for a large CP asymmetry, as it brings the magnitude of that amplitude to a level similar to that of the loop diagram. The loop diagram typically occurs through a gluonic penguin, which ensures a strong phase difference with respect to the tree-level amplitude. Indeed, for three-body \(B^{+}\rightarrow h^{+}h^{-}h^{+}\) decays, an 80% CP asymmetry was observed in certain kinematic regions [69].
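The dependence on the two amplitudes can be made explicit with a two-amplitude toy model. Writing the decay amplitude as \(a_{1} + a_{2}e^{i(\delta +\phi )}\) and its CP conjugate as \(a_{1} + a_{2}e^{i(\delta -\phi )}\), with strong phase difference \(\delta \), weak phase difference \(\phi \) and \(r = a_{2}/a_{1}\), the asymmetry of the squared magnitudes is \(2r\sin \delta \sin \phi /(1+r^{2}+2r\cos \delta \cos \phi )\). The following sketch evaluates this expression. The overall sign depends on the convention chosen for the asymmetry, and the function name and inputs are illustrative assumptions.

```python
import math


def direct_cp_asymmetry(r: float, strong_phase: float, weak_phase: float) -> float:
    """Two-amplitude asymmetry (|Abar|^2 - |A|^2) / (|Abar|^2 + |A|^2).

    r is the ratio of amplitude magnitudes; phases are differences in radians.
    """
    numerator = 2.0 * r * math.sin(strong_phase) * math.sin(weak_phase)
    denominator = 1.0 + r * r + 2.0 * r * math.cos(strong_phase) * math.cos(weak_phase)
    if denominator == 0.0:
        raise ValueError("amplitudes cancel exactly; the asymmetry is undefined")
    return numerator / denominator


if __name__ == "__main__":
    delta, phi = 1.0, 1.0  # hypothetical phase differences in radians
    for ratio in (0.1, 0.5, 1.0, 2.0, 10.0):
        print(f"r = {ratio:5.1f} -> A_CP = {direct_cp_asymmetry(ratio, delta, phi):+.3f}")
```

The asymmetry vanishes if either phase difference is zero and, for fixed phases, is largest at \(r = 1\), which is the sense in which amplitudes of similar size maximise the effect.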

The complication with interpreting CP asymmetries in charmless B decays is that their size depends on the amplitude magnitudes and the strong phase, which are difficult to calculate. Fully hadronic decays cannot be factorised in the same way that semileptonic decays can, which makes theoretical calculations much more challenging. For this reason, cancellations between similar decays can be useful to bring uncertainties from QCD under control. A well-known example of this is to compare the CP asymmetry of \(B^{0} \rightarrow K^{+}\pi ^{-}\) decays with that of its isospin partner, \(B^{+} \rightarrow K^{+} \pi ^{0}\). Given that only the spectator quark is different between the two decays, one would expect the CP asymmetry to be very similar if not the same. However, the CP asymmetries of the two decays, \(A_{CP}(B^{0}\rightarrow K^{+}\pi ^{-}) = -0.084 \pm 0.004\) and \(A_{CP}(B^{+}\rightarrow K^{+}\pi ^{0}) = 0.040 \pm 0.021\), are over five standard deviations from each other, indicating either unexpectedly large hadronic effects or even contributions from NP. This difference is surprising and is known in the field as the \(K\pi \) puzzle. Given the difficult signature of the decay \(\pi ^{0}\rightarrow \gamma \gamma \), the uncertainty is dominated by the \(B^{+}\) transition. As there is only one charged particle in the signature, there is no B vertex to reconstruct, which is normally a vital signature for identifying b-hadron decays. A measurement of the \(B^{+}\rightarrow K^{+}\pi ^{0}\) decay was therefore considered impossible at a hadron collider. However, due to the very large production rate at the LHC, a tight selection could be employed using the four-momentum of the \(\pi ^{0}\), which reduced the background enough to determine the signal for a CP measurement [70]. Although the signal is not as clean as in fully charged b-hadron decays, it is still of remarkable purity given the challenging signature. The LHCb analysis provided the most precise measurement of this CP asymmetry in the world, which significantly increased the significance of the \(K\pi \) puzzle. The success of this measurement gives encouragement for other related decays such as \(B^{+}\rightarrow \pi ^{+} K^{0}\), which should pose a similar challenge and are crucial to understand the \(K\pi \) puzzle further via a sum-rule relation [71].
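The size of the discrepancy can be checked directly from the two quoted asymmetries. The sketch below assumes that the two uncertainties are Gaussian and uncorrelated, which is a simplification made here for illustration, and combines them in quadrature. The function name is hypothetical.

```python
import math


def difference_significance(value_a: float, sigma_a: float,
                            value_b: float, sigma_b: float) -> float:
    """Difference of two measurements in units of its combined uncertainty.

    Assumes Gaussian, uncorrelated uncertainties (added in quadrature).
    """
    combined_sigma = math.hypot(sigma_a, sigma_b)
    if combined_sigma == 0.0:
        raise ValueError("at least one uncertainty must be non-zero")
    return abs(value_a - value_b) / combined_sigma


if __name__ == "__main__":
    # Asymmetries quoted in the text for B0 -> K+ pi- and B+ -> K+ pi0.
    n_sigma = difference_significance(-0.084, 0.004, 0.040, 0.021)
    print(f"difference = {n_sigma:.1f} standard deviations")
```

With these inputs the difference is close to six standard deviations, consistent with the statement that the two asymmetries differ by more than five, and the combined uncertainty is almost entirely that of the \(B^{+}\) mode.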

Finally, all the searches described in this section so far have used B meson decays. Despite the connection to baryogenesis, no observation of CP violation has yet been made in baryons themselves. Hope was raised by evidence reported in \(\Lambda _b^{0}\rightarrow p \pi ^{+}\pi ^{-}\pi ^{+}\) decays [72], but it unfortunately faded when the analysis was repeated with a larger dataset [73]. Searches are ongoing in several different decay modes of both \(\Lambda _b\) and \(\Xi _{b}\) baryons. Given the lack of baryon oscillations in the SM, the main area of focus is charmless decays. Given the amplitudes involved, it is inevitable that CP violation will eventually be seen in baryons, which will mark another important milestone in the history of CP violation attained by LHCb after the observation of CP violation in charm decays [74].

6 The case for a second LHCb upgrade

The second LHCb upgrade [46, 75] aims for an unprecedented luminosity of \(1.5\times 10^{34}\) cm\(^{-2}\) s\(^{-1}\), which is expected to cause approximately 40 visible proton–proton interactions per crossing, yielding about 2000 charged particles within the LHCb acceptance. To handle this substantial increase, changes are envisioned for the existing spectrometer components, including increasing their granularity, reducing the material within the detector, and incorporating precise timing. This initiative will strive to preserve or enhance the current performance of the detector, particularly in areas such as track-finding efficiency and momentum resolution.

The LHCb Upgrade II will fully harness the HL-LHC potential as a flavour physics machine while maintaining a rich and diverse research programme. A glimpse of the potential available at the LHC can be found in Fig. 6, which shows the vast increase of the number of b-hadrons that will be produced. Some of the measurements that LHCb Upgrade II will undertake will constrain theoretical models for decades to come. From the conclusion of the HL-LHC to the commencement of the future circular collider (FCC), it will be crucial to have a robust set of measurements to guide future theoretical work. Neglecting to fully exploit the third family’s flavour potential could result in a missed opportunity.

Although the LHCb Upgrade II presents great opportunities, it also poses substantial technological challenges. The key points of the upgrade for tracking, particle identification, and data processing are summarised below.

The VELO is a critical component of the LHCb upgrade physics agenda, designed to perform real-time track reconstruction from all LHC bunch crossings within the software trigger system. The enhanced luminosity projected for Upgrade II necessitates a new and more capable VELO. This includes the ability to manage an enlarged data output, higher radiation levels, and an increased occupancy. Innovative techniques are being devised to facilitate real-time pattern recognition and correct association of each b-hadron to its originating primary vertex (PV). This effort will involve the development of a novel pixel detector with superior rate and timing capabilities, and a more efficient mechanical design.

To better associate the decay products of heavy-flavour hadrons with their parent PV, effective use of timing information is critical. Studies indicate that with a timing precision of 50–100 ps, the level of PV mis-association can be reduced from about 20% to approximately 5%. Further, reducing the pixel pitch from its current size of \(55\,\mu \)m is considered advantageous at Upgrade II, especially for the VELO’s innermost region. Timing will not only aid the track reconstruction process but also help conserve computational resources. R&D for the VELO is currently ongoing, with the major candidate solutions for the sensors including planar sensors, Low Gain Avalanche Diodes (LGADs) and 3D sensors.
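The benefit of timing for PV association can be pictured with a deliberately simplified toy: a track is assigned to the vertex that minimises a chi-square built from its longitudinal position and, optionally, its time. This is an illustrative sketch only and is not the LHCb association algorithm, which relies on quantities such as the impact parameter. The class, function, resolutions and vertex values below are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Vertex:
    z_mm: float  # longitudinal position of the primary vertex
    t_ps: float  # time of the proton-proton interaction


def associate(track_z_mm: float, track_t_ps: float, vertices: Sequence[Vertex],
              sigma_z_mm: float, sigma_t_ps: Optional[float] = None) -> int:
    """Return the index of the vertex with the smallest chi-square.

    If sigma_t_ps is None, only the spatial term is used (no timing).
    """
    def chi2(vertex: Vertex) -> float:
        value = ((track_z_mm - vertex.z_mm) / sigma_z_mm) ** 2
        if sigma_t_ps is not None:
            value += ((track_t_ps - vertex.t_ps) / sigma_t_ps) ** 2
        return value

    return min(range(len(vertices)), key=lambda i: chi2(vertices[i]))


if __name__ == "__main__":
    # Two vertices that overlap in space but are well separated in time.
    pvs = [Vertex(z_mm=10.0, t_ps=-100.0), Vertex(z_mm=10.2, t_ps=150.0)]
    # A track that truly comes from pvs[0] but extrapolates closer to pvs[1] in z.
    print(associate(10.12, -80.0, pvs, sigma_z_mm=0.2))                   # space only
    print(associate(10.12, -80.0, pvs, sigma_z_mm=0.2, sigma_t_ps=50.0))  # with timing
```

In this toy the space-only assignment picks the wrong vertex, while adding a time term with an assumed 50 ps resolution recovers the correct one, because the two interactions are separated in time by several resolution units.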

Upgrade II also plans changes to the downstream tracking system, which currently comprises a silicon strip detector (UT) and three tracking stations (T-stations). The redesigned system will need to accommodate higher occupancies by enhancing detector granularity and minimizing incorrect matches between upstream and downstream track segments.

For the inner portions of the T-stations, as well as the UT, Monolithic Active Pixel Sensors (MAPS) are being considered. Optimization efforts for the UT are ongoing, and both HV- and LV-CMOS solutions are under consideration. Regarding the inner part of the T-stations, a preliminary prototype of the MightyPix silicon detector, based on HV-CMOS technology, has been tested. Significant synergies exist with R&D work conducted in the context of both the Mu3e experiment and the ATLAS Upgrade. The requisite number of detector layers is currently being investigated. As with the VELO, the integration of timing information into the tracking system could substantially improve the accuracy of matching upstream and downstream track segments.

High-quality particle identification (PID) is a vital component of precision flavour measurements. The PID subdetectors of the current experiment will be upgraded and, in some cases, extended for Upgrade II. The primary enhancements include improved granularity and, for select subdetectors, fast timing with a precision of a few tens of picoseconds. The improved timing helps to associate signals with only a few of the proton–proton interactions in the bunch crossing.

The RICH system for Upgrade II builds on the current detectors, consisting of an upstream RICH 1 for lower momentum tracks, and a downstream RICH 2. To manage increased track multiplicity, it will be necessary to replace the current MaPMTs with more granular photodetectors. Multiple technologies are under consideration, with SiPMs being a prime candidate.

The installation of a TORCH detector is under consideration as an opportunity to enhance low-momentum hadron-identification capabilities. This detector measures time-of-flight by detecting internally reflected Cherenkov light produced in a thin quartz plate. The TORCH can help identify kaons in the region below 10 GeV/c.
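The timing precision that such a time-of-flight measurement requires can be estimated from relativistic kinematics: a particle of mass \(m\) and momentum \(p\) takes a time \((L/c)\sqrt{1+m^{2}/p^{2}}\) to travel a path of length \(L\). The sketch below computes the kaon–pion arrival-time difference. The flight path of 9.5 m is an assumption made here for illustration and is not stated in the text, the masses are approximate reference values, and the function names are hypothetical.

```python
import math

C_M_PER_NS = 0.299792458     # speed of light in metres per nanosecond
KAON_MASS_GEV = 0.493677     # approximate charged-kaon mass
PION_MASS_GEV = 0.139570     # approximate charged-pion mass
ASSUMED_FLIGHT_PATH_M = 9.5  # assumption: distance from the interaction point


def time_of_flight_ns(momentum_gev: float, mass_gev: float, path_m: float) -> float:
    """Time of flight over path_m for a particle of given momentum and mass."""
    if momentum_gev <= 0.0:
        raise ValueError("momentum must be positive")
    energy_gev = math.hypot(momentum_gev, mass_gev)
    beta = momentum_gev / energy_gev
    return path_m / (beta * C_M_PER_NS)


def kaon_pion_delay_ps(momentum_gev: float,
                       path_m: float = ASSUMED_FLIGHT_PATH_M) -> float:
    """Arrival-time difference between a kaon and a pion, in picoseconds."""
    delay_ns = (time_of_flight_ns(momentum_gev, KAON_MASS_GEV, path_m)
                - time_of_flight_ns(momentum_gev, PION_MASS_GEV, path_m))
    return 1000.0 * delay_ns


if __name__ == "__main__":
    for p in (2.0, 5.0, 10.0):
        print(f"p = {p:4.1f} GeV/c -> delay = {kaon_pion_delay_ps(p):7.1f} ps")
```

Under these assumptions the kaon–pion delay at 10 GeV/c is a few tens of picoseconds and grows rapidly towards lower momentum, which is why timing at the level of tens of picoseconds or better is needed for separation in this region.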

The electron, photon, and \(\pi ^{0}\) identification provided by the current electromagnetic calorimeter (ECAL) has proved essential. A suitably designed new ECAL will be necessary for achieving high performance in key modes and for ensuring a broad physics programme at Upgrade II. One of the challenges for the ECAL at Upgrade II will be the harsh radiation environment. Solutions under consideration include reducing the Molière radius of the converter, moving to a smaller cell size, and using fast timing information.

Upgrade II aims to provide ECAL performance at least as good as that of the current detector in Run 1 and Run 2 conditions. An active R&D programme has begun to investigate potential technologies for the Upgrade II ECAL. It is possible that the final detector will use multiple solutions due to the rapid variation of multiplicity and radiation flux with position.

The current muon system would suffer degraded performance under the higher background flux foreseen for Upgrade II. Enhanced shielding will be necessary in front of the muon detector to mitigate the incidence of punch-through particles. This could involve substituting the HCAL with up to 1.7 m of iron, thereby adding an extra four interaction lengths compared to the existing setup. As the HCAL’s main function of contributing to the hardware trigger becomes obsolete from Run 3 onwards, this change is feasible. In terms of the muon system, upgrades are needed for the detectors in the most central areas of all stations, aiming for a design with both superior granularity and rate capabilities.

Potential solutions are the micro-resistive WELL detector (\(\mu \)-RWELL) for the higher occupancy region, and MWPC or RPC detectors in the lower flux region. R&D is ongoing to study all of these possibilities.

The LHCb Upgrade II detector is expected to generate data in the range of 200 Tb per second. This vast amount of data necessitates real-time processing and a substantial reduction before it can be stored. Advances in radiation-resistant optical links and commercial networking technology, along with the specific design of the LHCb that positions the readout cables outside of the detector acceptance, should enable the transmission of this massive data volume to a computational farm by the time of Upgrade II.
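To put the quoted data rate in perspective, the short sketch below converts it into bytes per second and into an average data volume per bunch crossing. It assumes that "Tb" denotes terabits and uses the nominal LHC bunch-crossing frequency of 40 MHz, which is an assumption made here since the text does not state the crossing rate. The constant and function names are hypothetical.

```python
DATA_RATE_BITS_PER_S = 200e12      # 200 Tb/s as quoted, read as terabits
ASSUMED_CROSSING_RATE_HZ = 40e6    # assumption: nominal LHC bunch-crossing rate


def bytes_per_second(bits_per_second: float) -> float:
    """Convert a rate in bits per second to bytes per second."""
    return bits_per_second / 8.0


def bytes_per_crossing(bits_per_second: float, crossing_rate_hz: float) -> float:
    """Average data volume produced per bunch crossing, in bytes."""
    if crossing_rate_hz <= 0.0:
        raise ValueError("crossing_rate_hz must be positive")
    return bytes_per_second(bits_per_second) / crossing_rate_hz


if __name__ == "__main__":
    rate = bytes_per_second(DATA_RATE_BITS_PER_S)
    per_crossing = bytes_per_crossing(DATA_RATE_BITS_PER_S, ASSUMED_CROSSING_RATE_HZ)
    print(f"{rate / 1e12:.0f} TB/s, about {per_crossing / 1e3:.0f} kB per crossing")
```

Under these assumptions the detector would deliver tens of terabytes every second, or several hundred kilobytes per crossing, which illustrates why the data must be reduced in real time before storage.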

Data processing in Upgrade II presents a significant challenge. Traditional trigger methods will not suffice for substantial data rate reduction, even at the initial trigger stages. Instead, the data processing approach for Upgrade II will focus on pile-up suppression, prioritizing the early-stage discarding of detector hits not associated with the specific proton–proton interaction of interest. Accurate timing data is vital for rapidly selecting reconstructed objects based on their originating proton–proton interaction. Therefore, access to detailed timing information across multiple subdetectors is imperative. It is equally important to carry out an early-stage reconstruction of charged particle trajectories and neutral particle clusters created within the LHCb acceptance, to optimally associate specific heavy flavour signatures with proton–proton interactions. LHCb has already demonstrated its ability to perform a comprehensive offline-quality detector alignment, calibration, and reconstruction in a near-real-time environment during Run 2, as well as its capacity to perform precision physics measurements using this real-time data processing.

Adapting this capability to the more rigorous conditions of Upgrade II will require the construction of a processor farm leveraging whichever technology or technologies are deemed most commercially sustainable over a decade from now. Given the increasing trend towards more diverse computing architectures, with CPU server farms being augmented with GPU or FPGA accelerators, it becomes crucial to sustain and expand collaborations with computing institutions that specialize in the design of these hybrid architectures. Research has been carried out on FPGA-based downstream tracking. Collaborations with industry partners will be instrumental in ensuring that LHCb’s trigger and reconstruction algorithms are optimally calibrated to make the best use of the most cost-effective architecture.

In conclusion, despite the technological challenges of the LHCb Upgrade II, it also offers a unique opportunity to develop novel detector technologies during an exciting period of anticipation while we await the FCC. The combination of achieving cutting-edge physics goals and advancing technological capabilities underscores the significance of the LHCb Upgrade II.

7 Summary

In 2008, shortly before the LHCb experiment was due to start collecting data, the experiment published a roadmap [76] of six key measurements to be performed with high priority in the upcoming run. These were dominated by decays with fully charged final states, where the only leptons considered were muons. In this review we highlighted three areas of b physics, but even within that highlight one can see a huge diversity of signatures, involving electrons, \(\tau \)-leptons, neutrinos and neutral pions. The last 15 years have brought about a huge expansion of the LHCb programme beyond what was considered possible at a hadron collider, which is one of the great successes of the LHCb experiment. The next 15 years will certainly contain more surprises in addition to the expected improvements to the measurements already established within the programme. The motivation for the LHCb upgrades is clear.

This is not to mention the huge amount of physics potential which we could not cover in this review. We did not cover the pentaquark discovery [77] and associated measurements in exotic spectroscopy, which are of huge interest both in and outside the field. Beyond b-physics, the LHCb physics programme includes a large and diverse set of measurements, which has steadily expanded over the last decade. In flavour physics, this includes a wide variety of charm-quark measurements, from oscillations to spectroscopy. In particular, one of LHCb’s landmark measurements has been the discovery of CP violation in \(D\rightarrow hh\) decays [74], which is a milestone in the history of the field. Beyond flavour, there is a broad programme of electroweak physics measurements, from Drell–Yan to top quark measurements. Highlights here include the W boson mass measurement [78] and ion-proton cross-section measurements [79], which inform background estimates in astrophysics.

Given the unique opportunity of the HL-LHC, pushing the LHCb experiment to its maximal capability is mandatory. There is a possibility that the current discrepancies in \(b\rightarrow s\mu ^{+}\mu ^{-}\) and \(b\rightarrow c\tau ^{-}\bar{\nu }_{\tau }\) could be resolved or that new anomalies could emerge. In both cases, the LHCb Upgrade II will be a fundamental resource for future theoretical work and, in turn, for upcoming experiments. It also provides an important bridge to design and implement new detector technologies in the time period between the final upgrades of ATLAS and CMS and the new generation accelerator complex, the FCC. It is clear to us that neither LHCb nor b-hadron physics will lose any relevance over the next decade, whether or not NP is discovered in that time.

Data availability

No data are associated with the manuscript.

References

A.A. Alves Jr. et al., The LHCb detector at the LHC. JINST 3, S08005 (2008). https://doi.org/10.1088/1748-0221/3/08/S08005

G. Apollinari, O. Brüning, T. Nakamoto, L. Rossi, High luminosity large hadron collider HL-LHC. CERN Yellow Rep. 5, 1–19 (2015). https://doi.org/10.5170/CERN-2015-005.1. arXiv:1705.08830 [physics.acc-ph]

R. Aaij et al., The LHCb upgrade I (2023). arXiv:2305.10515 [hep-ex]

S.L. Glashow, J. Iliopoulos, L. Maiani, Weak interactions with Lepton–Hadron symmetry. Phys. Rev. D 2, 1285–1292 (1970). https://doi.org/10.1103/PhysRevD.2.1285

R. Aaij et al., Differential branching fractions and isospin asymmetries of \(B \rightarrow K^{(*)} \mu ^+ \mu ^-\) decays. JHEP 06, 133 (2014). https://doi.org/10.1007/JHEP06(2014)133. arXiv:1403.8044 [hep-ex]

R. Aaij et al., Measurements of the S-wave fraction in \(B^{0}\rightarrow K^{+}\pi ^{-}\mu ^{+}\mu ^{-}\) decays and the \(B^{0}\rightarrow K^{\ast }(892)^{0}\mu ^{+}\mu ^{-}\) differential branching fraction. JHEP 11, 047 (2016). https://doi.org/10.1007/JHEP11(2016)047. arXiv:1606.04731 [hep-ex] [Erratum: JHEP 04, 142 (2017)]

R. Aaij et al., Branching Fraction Measurements of the Rare \(B^0_s\rightarrow \phi \mu ^+\mu ^-\) and \(B^0_s\rightarrow f_2^\prime (1525)\mu ^+\mu ^-\)- Decays. Phys. Rev. Lett. 127(15), 151801 (2021). https://doi.org/10.1103/PhysRevLett.127.151801. arXiv:2105.14007 [hep-ex]

R. Aaij et al., Measurement of \(CP\)-Averaged Observables in the \(B^{0}\rightarrow K^{*0}\mu ^{+}\mu ^{-}\) Decay. Phys. Rev. Lett. 125(1), 011802 (2020). https://doi.org/10.1103/PhysRevLett.125.011802. arXiv:2003.04831 [hep-ex]

R. Aaij et al., Angular analysis of the \(B^{+}\rightarrow K^{\ast +}\mu ^{+}\mu ^{-}\) decay. Phys. Rev. Lett. 126(16), 161802 (2021). https://doi.org/10.1103/PhysRevLett.126.161802. arXiv:2012.13241 [hep-ex]

R. Aaij et al., Measurement of Form-Factor-Independent Observables in the Decay \(B^{0} \rightarrow K^{*0} \mu ^+ \mu ^-\). Phys. Rev. Lett. 111, 191801 (2013). https://doi.org/10.1103/PhysRevLett.111.191801. arXiv:1308.1707 [hep-ex]

J. Matias, F. Mescia, M. Ramon, J. Virto, Complete Anatomy of \(\bar{B}_d -> \bar{K}^{* 0} (-> K \pi )l^+l^-\) and its angular distribution. JHEP 04, 104 (2012). https://doi.org/10.1007/JHEP04(2012)104. arXiv:1202.4266 [hep-ph]

S. Descotes-Genon, T. Hurth, J. Matias, J. Virto, Optimizing the basis of \(B\rightarrow K^*ll\) observables in the full kinematic range. JHEP 05, 137 (2013). https://doi.org/10.1007/JHEP05(2013)137. arXiv:1303.5794 [hep-ph]

R. Aaij et al., Measurement of the phase difference between short- and long-distance amplitudes in the \(B^{+}\rightarrow K^{+}\mu ^{+}\mu ^{-}\) decay. Eur. Phys. J. C 77(3), 161 (2017). https://doi.org/10.1140/epjc/s10052-017-4703-2. arXiv:1612.06764 [hep-ex]

T. Blake, U. Egede, P. Owen, K.A. Petridis, G. Pomery, An empirical model to determine the hadronic resonance contributions to \(\overline{B}{} ^0 \!\rightarrow \overline{K}{} ^{*0} \mu ^+ \mu ^- \) transitions. Eur. Phys. J. C 78(6), 453 (2018). https://doi.org/10.1140/epjc/s10052-018-5937-3. arXiv:1709.03921 [hep-ph]

C. Bobeth, M. Chrzaszcz, D. van Dyk, J. Virto, Long-distance effects in \(B\rightarrow K^*\ell \ell \) from analyticity. Eur. Phys. J. C 78(6), 451 (2018). https://doi.org/10.1140/epjc/s10052-018-5918-6. arXiv:1707.07305 [hep-ph]

M. Chrzaszcz, A. Mauri, N. Serra, R. Silva Coutinho, D. van Dyk, Prospects for disentangling long- and short-distance effects in the decays \(B\rightarrow K^* \mu ^+\mu ^-\). JHEP 10, 236 (2019). https://doi.org/10.1007/JHEP10(2019)236. arXiv:1805.06378 [hep-ph]

N. Gubernari, D. van Dyk, J. Virto, Non-local matrix elements in \(B_{(s)}\rightarrow \{K^{(*)},\phi \}\ell ^+\ell ^-\). JHEP 02, 088 (2021). https://doi.org/10.1007/JHEP02(2021)088. arXiv:2011.09813 [hep-ph]

N. Gubernari, M. Reboud, D. van Dyk, J. Virto, Improved theory predictions and global analysis of exclusive \(b \rightarrow s\mu ^+\mu ^-\) processes. JHEP 09, 133 (2022). https://doi.org/10.1007/JHEP09(2022)133. arXiv:2206.03797 [hep-ph]

C.-S. Huang, W. Liao, Q.-S. Yan, The promising process to distinguish supersymmetric models with large tan Beta from the standard model: B -\(>\) X(s) mu+ mu-. Phys. Rev. D 59, 011701 (1999). https://doi.org/10.1103/PhysRevD.59.011701. arXiv:hep-ph/9803460

C. Hamzaoui, M. Pospelov, M. Toharia, Higgs mediated FCNC in supersymmetric models with large tan Beta. Phys. Rev. D 59, 095005 (1999). https://doi.org/10.1103/PhysRevD.59.095005. arXiv:hep-ph/9807350

S.R. Choudhury, N. Gaur, Dileptonic decay of B(s) meson in SUSY models with large tan Beta. Phys. Lett. B 451, 86–92 (1999). https://doi.org/10.1016/S0370-2693(99)00203-8. arXiv:hep-ph/9810307

K.S. Babu, C.F. Kolda, Higgs mediated \(B^0 \rightarrow \mu ^{+} \mu ^{-}\) in minimal supersymmetry. Phys. Rev. Lett. 84, 228–231 (2000). https://doi.org/10.1103/PhysRevLett.84.228. arXiv:hep-ph/9909476

R. Aaij et al., Analysis of neutral B-meson decays into two muons. Phys. Rev. Lett. 128(4), 041801 (2022). https://doi.org/10.1103/PhysRevLett.128.041801. arXiv:2108.09284 [hep-ex]

C. Bobeth, M. Gorbahn, T. Hermann, M. Misiak, E. Stamou, M. Steinhauser, \(B_{s, d} \rightarrow l^+ l^-\) in the standard model with reduced theoretical uncertainty. Phys. Rev. Lett. 112, 101801 (2014). https://doi.org/10.1103/PhysRevLett.112.101801. arXiv:1311.0903 [hep-ph]

M. Beneke, C. Bobeth, R. Szafron, Power-enhanced leading-logarithmic QED corrections to \(B_q \rightarrow \mu ^+\mu ^-\). JHEP 10, 232 (2019). https://doi.org/10.1007/JHEP10(2019)232. arXiv:1908.07011 [hep-ph]

A. Tumasyan et al., Measurement of the \(\text{ B}^0_{\text{ S }} \rightarrow \mu ^+\mu ^-\) decay properties and search for the \(\text{ B}^0\rightarrow \mu ^+\mu ^-\) decay in proton–proton collisions at \(\sqrt{s} = 13 TeV\). Phys. Lett. B 842, 137955 (2023). https://doi.org/10.1016/j.physletb.2023.137955. arXiv:2212.10311 [hep-ex]

M. Aaboud et al., Study of the rare decays of \(B^0_s\) and \(B^0\) mesons into muon pairs using data collected during 2015 and 2016 with the ATLAS detector. JHEP 04, 098 (2019). https://doi.org/10.1007/JHEP04(2019)098. arXiv:1812.03017 [hep-ex]

B. Bhattacharya, A. Datta, D. London, S. Shivashankara, Simultaneous explanation of the \(R_K\) and \(R(D^{(*)})\) puzzles. Phys. Lett. B 742, 370–374 (2015). https://doi.org/10.1016/j.physletb.2015.02.011. arXiv:1412.7164 [hep-ph]

D. Buttazzo, A. Greljo, G. Isidori, D. Marzocca, B-physics anomalies: a guide to combined explanations. JHEP 11, 044 (2017). https://doi.org/10.1007/JHEP11(2017)044. arXiv:1706.07808 [hep-ph]

M. Bordone, G. Isidori, A. Pattori, On the standard model predictions for \(R_K\) and \(R_{K^*}\). Eur. Phys. J. C 76(8), 440 (2016). https://doi.org/10.1140/epjc/s10052-016-4274-7. arXiv:1605.07633 [hep-ph]

G. Isidori, S. Nabeebaccus, R. Zwicky, QED corrections in \( \overline{B}\rightarrow \overline{K}{\rm \ell }^{+}{\rm \ell }^{-} \) at the double-differential level. JHEP 12, 104 (2020). https://doi.org/10.1007/JHEP12(2020)104. arXiv:2009.00929 [hep-ph]

R. Aaij et al., Test of lepton universality in beauty-quark decays. Nature Phys. 18(3), 277–282 (2022). https://doi.org/10.1038/s41567-021-01478-8. arXiv:2103.11769 [hep-ex]

R. Aaij et al., Test of lepton universality with \(B^{0} \rightarrow K^{*0}\ell ^{+}\ell ^{-}\) decays. JHEP 08, 055 (2017). https://doi.org/10.1007/JHEP08(2017)055. arXiv:1705.05802 [hep-ex]

R. Aaij et al., Tests of lepton universality using \(B^0\rightarrow K^0_S \ell ^+ \ell ^-\) and \(B^+\rightarrow K^{*+} \ell ^+ \ell ^-\) decays. Phys. Rev. Lett. 128(19), 191802 (2022). https://doi.org/10.1103/PhysRevLett.128.191802. arXiv:2110.09501 [hep-ex]

R. Aaij et al., Test of lepton universality with \( {\Lambda }_b^0\rightarrow {pK}^{-}{\rm \ell }^{+}{\rm \ell }^{-} \) decays. JHEP 05, 040 (2020). https://doi.org/10.1007/JHEP05(2020)040. arXiv:1912.08139 [hep-ex]

D. Lancierini, G. Isidori, P. Owen, N. Serra, On the significance of new physics in \(b\rightarrow s\ell ^+\ell ^-\) decays. Phys. Lett. B 822, 136644 (2021). https://doi.org/10.1016/j.physletb.2021. arXiv:2104.05631 [hep-ph]

Test of lepton universality in \(b \rightarrow s \ell ^+ \ell ^-\) decays. Phys. Rev. Lett. 131, 051803 (2023). https://doi.org/10.1103/PhysRevLett.131.051803. arXiv:2212.09152 [hep-ex]

Measurement of lepton universality parameters in \(B^+\rightarrow K^+\ell ^+\ell ^-\) and \(B^0\rightarrow K^{*0}\ell ^+\ell ^-\) decays. Phys. Rev. D 108, 032002 (2023). https://journals.aps.org/prd/abstract/10.1103/PhysRevD.108.032002. arXiv:2212.09153 [hep-ex]

G. Isidori, D. Lancierini, P. Owen, N. Serra, On the significance of new physics in \(b\rightarrow s\ell ^+\ell ^-\) decays. Phys. Lett. B 822, 136644 (2021). https://doi.org/10.1016/j.physletb.2021.136644. arXiv:2104.05631 [hep-ph]

R. Aaij et al., Search for the decays \(B_s^0\rightarrow \tau ^+\tau ^-\) and \(B^0\rightarrow \tau ^+\tau ^-\). Phys. Rev. Lett. 118(25), 251802 (2017). https://doi.org/10.1103/PhysRevLett.118.251802. arXiv:1703.02508 [hep-ex]

J.P. Lees et al., Search for \(B^{+}\rightarrow K^{+} \tau ^{+}\tau ^{-}\) at the BaBar experiment. Phys. Rev. Lett. 118(3), 031802 (2017). https://doi.org/10.1103/PhysRevLett.118.031802. arXiv:1605.09637 [hep-ex]

T.V. Dong et al., Search for the decay \(B^0\rightarrow K^{*0}\tau ^+\tau ^-\) at the Belle experiment. Phys. Rev. D 108(1), 011102 (2023). https://doi.org/10.1103/PhysRevD.108.L011102. arXiv:2110.03871 [hep-ex]

L. Aggarwal et al., Snowmass White Paper: Belle II physics reach and plans for the next decade and beyond (2022). arXiv:2207.06307 [hep-ex]

C. Cornella, G. Isidori, M. König, S. Liechti, P. Owen, N. Serra, Hunting for \(B^+\rightarrow K^+ \tau ^+\tau ^-\) imprints on the \(B^+ \rightarrow K^+ \mu ^+\mu ^-\) dimuon spectrum. Eur. Phys. J. C 80(12), 1095 (2020). https://doi.org/10.1140/epjc/s10052-020-08674-5. arXiv:2001.04470 [hep-ph]

Y.S. Amhis et al., Averages of b-hadron, c-hadron, and \(\tau \)-lepton properties as of 2021. Phys. Rev. D 107(5), 052008 (2023). https://doi.org/10.1103/PhysRevD.107.052008. arXiv:2206.07501 [hep-ex]

R. Aaij et al., Physics case for an LHCb Upgrade II—opportunities in flavour physics, and beyond, in the HL-LHC era (2018). arXiv:1808.08865 [hep-ex]

F.U. Bernlochner, Z. Ligeti, D.J. Robinson, N = 5, 6, 7, 8: nested hypothesis tests and truncation dependence of \(|V_{cb}|\). Phys. Rev. D 100(1), 013005 (2019). https://doi.org/10.1103/PhysRevD.100.013005. arXiv:1902.09553 [hep-ph]

P. Gambino, M. Jung, S. Schacht, The \(V_{cb}\) puzzle: an update. Phys. Lett. B 795, 386–390 (2019). https://doi.org/10.1016/j.physletb.2019.06.039. arXiv:1905.08209 [hep-ph]

M. Bordone, M. Jung, D. van Dyk, Theory determination of \(\bar{B}\rightarrow D^{(*)}\ell ^-\bar{\nu }\) form factors at \({\mathcal O} (1/m_c^2)\). Eur. Phys. J. C 80(2), 74 (2020). https://doi.org/10.1140/epjc/s10052-020-7616-4. arXiv:1908.09398 [hep-ph]

D. Ferlewicz, P. Urquijo, E. Waheed, Revisiting fits to \(B^{0} \rightarrow D^{*-} \ell ^{+} \nu _{\ell }\) to measure \(|V_{cb}|\) with novel methods and preliminary LQCD data at nonzero recoil. Phys. Rev. D 103(7), 073005 (2021). https://doi.org/10.1103/PhysRevD.103.073005. arXiv:2008.09341 [hep-ph]

R. Aaij et al., Measurement of the ratios of branching fractions \(\mathcal{R}(D^{*})\) and \(\mathcal{R}(D^{0})\). Phys. Rev. D 108, 012018 (2023). https://journals.aps.org/prd/abstract/10.1103/PhysRevD.108.012018. arXiv:2302.02886 [hep-ex]

R. Aaij et al., Test of lepton flavour universality using \(B^0 \rightarrow D^{*-}\tau ^+\nu _{\tau }\) decays with hadronic \(\tau \) channels (2023). arXiv:2305.01463 [hep-ex]

J.P. Lees et al., Evidence for an excess of \(\bar{B} \rightarrow D^{(*)} \tau ^-\bar{\nu }_\tau \) decays. Phys. Rev. Lett. 109, 101802 (2012). https://doi.org/10.1103/PhysRevLett.109.101802. arXiv:1205.5442 [hep-ex]

J.P. Lees et al., Measurement of an excess of \(\bar{B} \rightarrow D^{(*)}\tau ^- \bar{\nu }_\tau \) decays and implications for charged Higgs bosons. Phys. Rev. D 88(7), 072012 (2013). https://doi.org/10.1103/PhysRevD.88.072012. arXiv:1303.0571 [hep-ex]

Y. Sato et al., Measurement of the branching ratio of \(\bar{B}^0 \rightarrow D^{*+} \tau ^- \bar{\nu }_{\tau }\) relative to \(\bar{B}^0 \rightarrow D^{*+} \ell ^- \bar{\nu }_{\ell }\) decays with a semileptonic tagging method. Phys. Rev. D 94(7), 072007 (2016). https://doi.org/10.1103/PhysRevD.94.072007. arXiv:1607.07923 [hep-ex]

M. Huschle et al., Measurement of the branching ratio of \(\bar{B} \rightarrow D^{(\ast )} \tau ^- \bar{\nu }_\tau \) relative to \(\bar{B} \rightarrow D^{(\ast )} \ell ^- \bar{\nu }_\ell \) decays with hadronic tagging at Belle. Phys. Rev. D 92(7), 072014 (2015). https://doi.org/10.1103/PhysRevD.92.072014. arXiv:1507.03233 [hep-ex]

G. Caria et al., Measurement of \({\mathcal R} (D)\) and \({\mathcal R} (D^*)\) with a semileptonic tagging method. Phys. Rev. Lett. 124(16), 161803 (2020). https://doi.org/10.1103/PhysRevLett.124.161803. arXiv:1910.05864 [hep-ex]

S. Hirose et al., Measurement of the \(\tau \) lepton polarization and \(R(D^*)\) in the decay \(\bar{B} \rightarrow D^* \tau ^- \bar{\nu }_\tau \) with one-prong hadronic \(\tau \) decays at Belle. Phys. Rev. D 97(1), 012004 (2018). https://doi.org/10.1103/PhysRevD.97.012004. arXiv:1709.00129 [hep-ex]

R. Aaij et al., Observation of the decay \( \Lambda _b^0\rightarrow \Lambda _c^+\tau ^-\overline{\nu }_{\tau }\). Phys. Rev. Lett. 128(19), 191803 (2022). https://doi.org/10.1103/PhysRevLett.128.191803. arXiv:2201.03497 [hep-ex]

A.D. Sakharov, Violation of CP invariance, C asymmetry, and baryon asymmetry of the universe. Sov. Phys. Uspekhi 34(5), 392–393 (1991). https://doi.org/10.1070/pu1991v034n05abeh002497

D. Asner et al., Averages of \(b\)-hadron, \(c\)-hadron, and \(\tau \)-lepton properties (2010). arXiv:1010.1589 [hep-ex]

R. Aaij et al., Constraints on the CKM angle \(\gamma \) from \(B^\pm \rightarrow Dh^\pm \) decays using \(D\rightarrow h^\pm h^{\prime \mp }\pi ^0\) final states. JHEP 07, 099 (2022). https://doi.org/10.1007/JHEP07(2022)099. arXiv:2112.10617 [hep-ex]

R. Aaij et al., Simultaneous determination of CKM angle \(\gamma \) and charm mixing parameters. JHEP 12, 141 (2021). https://doi.org/10.1007/JHEP12(2021)141. arXiv:2110.02350 [hep-ex]

R. Aaij et al., Measurement of \(CP\) asymmetry in \(B_s^0 \rightarrow D_s^{\mp } K^{\pm }\) decays. JHEP 03, 059 (2018). https://doi.org/10.1007/JHEP03(2018)059. arXiv:1712.07428 [hep-ex]

L. Di Luzio, M. Kirk, A. Lenz, Updated \(B_s\)-mixing constraints on new physics models for \(b\rightarrow s\ell ^+\ell ^-\) anomalies. Phys. Rev. D 97(9), 095035 (2018). https://doi.org/10.1103/PhysRevD.97.095035. arXiv:1712.06572 [hep-ph]

R. Aaij et al., Precise determination of the \(B_{\rm s }^0\)-\(\overline{B}_{\rm s }^0\) oscillation frequency. Nat. Phys. 18(1), 1–5 (2022). https://doi.org/10.1038/s41567-021-01394-x. arXiv:2104.04421 [hep-ex]

R. Aaij et al., Improved measurement of \(CP\) violation parameters in \(B^0_s\rightarrow J/\psi K^{+}K^{-}\) decays in the vicinity of the \(\phi (1020)\) resonance (2023). arXiv:2308.01468 [hep-ex]

R. Aaij et al., Measurement of CP violation parameters and polarisation fractions in \(B_s^0\rightarrow J/\psi \overline{K}^{*0}\) decays. JHEP 11, 082 (2015). https://doi.org/10.1007/JHEP11(2015)082. arXiv:1509.00400 [hep-ex]

R. Aaij et al., Direct CP violation in charmless three-body decays of \(B^{\pm }\) mesons. Phys. Rev. D 108(1), 012008 (2023). https://doi.org/10.1103/PhysRevD.108.012008. arXiv:2206.07622 [hep-ex]

R. Aaij et al., Measurement of CP violation in the decay \(B^{+} \rightarrow K^{+} \pi ^{0}\). Phys. Rev. Lett. 126(9), 091802 (2021). https://doi.org/10.1103/PhysRevLett.126.091802. arXiv:2012.12789 [hep-ex]

M. Gronau, A precise sum rule among four \(B\rightarrow K\pi \) CP asymmetries. Phys. Lett. B 627, 82–88 (2005). https://doi.org/10.1016/j.physletb.2005.09.014. arXiv:hep-ph/0508047

R. Aaij et al., Measurement of matter–antimatter differences in beauty baryon decays. Nat. Phys. 13, 391–396 (2017). https://doi.org/10.1038/nphys4021. arXiv:1609.05216 [hep-ex]

R. Aaij et al., Search for \(CP\) violation and observation of \(P\) violation in \(\Lambda _b^0 \rightarrow p \pi ^- \pi ^+ \pi ^-\) decays. Phys. Rev. D 102(5), 051101 (2020). https://doi.org/10.1103/PhysRevD.102.051101. arXiv:1912.10741 [hep-ex]

R. Aaij et al., Observation of CP violation in charm decays. Phys. Rev. Lett. 122(21), 211803 (2019). https://doi.org/10.1103/PhysRevLett.122.211803. arXiv:1903.08726 [hep-ex]

LHCb Collaboration, Framework TDR for the LHCb Upgrade II: opportunities in flavour physics, and beyond, in the HL-LHC era. Technical report, CERN, Geneva (2021). https://cds.cern.ch/record/2776420

B. Adeva et al., Roadmap for selected key measurements of LHCb. Technical report, CERN, Geneva, 379 p. (2010). https://cds.cern.ch/record/1224241

R. Aaij et al., Observation of \(J/\psi p\) resonances consistent with Pentaquark states in \(\Lambda _b^0 \rightarrow J/\psi K^- p\) decays. Phys. Rev. Lett. 115, 072001 (2015). https://doi.org/10.1103/PhysRevLett.115.072001. arXiv:1507.03414 [hep-ex]

R. Aaij et al., Measurement of the W boson mass. JHEP 01, 036 (2022). arXiv:2109.01113 [hep-ex]

R. Aaij et al., Measurement of antiproton production from antihyperon decays in \(p\text{He}\) collisions at \(\sqrt{s_{\text{NN}}} = 110\, \text{GeV}\). Eur. Phys. J. C 83(6), 543 (2023). https://doi.org/10.1140/epjc/s10052-023-11673-x. arXiv:2205.09009 [hep-ex]

Funding

Open access funding provided by University of Zurich.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Owen, P., Serra, N. Status and prospects of the LHCb experiment. Eur. Phys. J. Spec. Top. 233, 225–240 (2024). https://doi.org/10.1140/epjs/s11734-023-01010-4

DOI: https://doi.org/10.1140/epjs/s11734-023-01010-4