Abstract

Quantum amplitude estimation (QAE) is a pivotal quantum algorithm to estimate the squared amplitude a of the target basis state in a quantum state \(|{\Phi}\rangle \). Various improvements on the original quantum phase estimation-based QAE have been proposed for resource reduction. One of such improved versions is iterative quantum amplitude estimation (IQAE), which outputs an estimate â of a through the iterated rounds of the measurements on the quantum states like \(G^{k}|{\Phi}\rangle \), with the number k of operations of the Grover operator G (the Grover number) and the shot number determined adaptively. This paper investigates the bias in IQAE. Through the numerical experiments to simulate IQAE, we reveal that the estimate by IQAE is biased and the bias is enhanced for some specific values of a. We see that the termination criterion in IQAE that the estimated accuracy of â falls below the threshold is a source of the bias. Besides, we observe that \(k_{\mathrm{fin}}\), the Grover number in the final round, and \(f_{\mathrm{fin}}\), a quantity affecting the probability distribution of measurement outcomes in the final round, are the key factors to determine the bias, and the bias enhancement for specific values of a is due to the skewed distribution of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\). We also present a bias mitigation method: just re-executing the final round with the Grover number and the shot number fixed.

Similar content being viewed by others

1 Introduction

Among various quantum algorithms, quantum amplitude estimation (QAE) is one of the prominent ones. It is originally proposed in [1] as a method to estimate the squared amplitude a of the target basis state \(|{\phi _{1}}\rangle \) in a quantum state \(|{\Phi}\rangle \). If we have the oracle A to generate \(|{\Phi}\rangle \) and the reflection operator S with respect to \(|{\phi _{1}}\rangle \), we can obtain an ϵ-approximation of a,Footnote 1 querying A and S \(O(1/\epsilon )\) times. More concretely, the method in [1] is based on quantum phase estimation (QPE) [2]: using A and S, we construct the Grover operator G (defined later), which acts on \(|{\Phi}\rangle \) to amplify the amplitude of \(|{\phi _{1}}\rangle \), and then operate the controlled version of G \(O(1/\epsilon )\) times followed by an inverse quantum Fourier transform, which yields an approximation of a.

One reason why QAE is important is that it is the basis of other quantum algorithms. For example, it is used in the quantum algorithm for Monte Carlo integration (QMCI) [3], which estimates the expectation of a random variable quadratically faster than the classical counterpart. Furthermore, QMCI has many applications in industry, e.g., derivative pricing [4–9] in finance.

Partly because of such practical importance, many improvements to the original version of QAE have been proposed so far. In particular, some methods without QPE have been devised [10–21]. Since the controlled G requires a larger gate cost than the uncontrolled one, replacing the former with the latter leads to gate cost reduction. The first algorithm in such a direction is QAE based on the maximum likelihood estimation (MLE) [10], which we hereafter call MLEQAE. In this method, we apply G to \(|{\Phi}\rangle \) k times and make N measurements on \(G^{k}|{\Phi}\rangle \) by which we distinguish \(|{\phi _{1}}\rangle \) and other basis states, increasing k according to some given schedule. We hereafter call this k, the number of operations of G, the Grover number. The outcomes of the measurements, namely the numbers of times we get \(|{\phi _{1}}\rangle \) for the various k, give us the information on \(a_{k}=\sin ^{2}\left ((2k+1)\times \arcsin (\sqrt{a})\right )\), the squared amplitude of \(|{\phi _{1}}\rangle \) in \(G^{k}|{\Phi}\rangle \), and thus the information on a. Then, we use this to construct the likelihood function of a and obtain an estimate of a as the maximum likelihood point.

Afterward, the paper [13] proposed iterative quantum amplitude estimation (IQAE), which this paper focuses on. Like MLEQAE, in IQAE we use the outcomes of the measurements on \(G^{k}|{\Phi}\rangle \) with varying k, but in a different way. Starting from \(|{\Phi}\rangle \), which corresponds to \(k=0\), we obtain the confidence interval (CI) of a by the MLE based on the outcomes of the measurements on \(|{\Phi}\rangle \). We then choose the next k adaptively in the way explained later, and make the measurements on \(G^{k}|{\Phi}\rangle \). Via MLE, this yields the CI on \(a_{k}\), which is translated into the CI of a narrower than the previous one. We repeat these steps, each of which is called a round, increasing the Grover number and narrowing the CI, until the CI width reaches the required accuracy ϵ. In addition to this adaptive increment of the Grover number, another feature of IQAE is that N the number of the measurements (the shot number) in one round is increased gradually: if the CI of a derived from measurements with current k is so narrow that we can determine the next k, we stop the current round and go to the next round with the next k, and otherwise, we add more measurements with the current k. The advantage of such an adaptive increment of k and N is that it can lead to saving the total number of queries to G compared to fixing k and N in advance.Footnote 2

In this paper, we focus on the bias in IQAE, which can be an issue in some situations but has not been focused on in previous studies. Note that the estimate â of a by IQAE is stochastic since it is derived from outcomes of measurements on quantum states, which are intrinsically random. Although IQAE guarantees that the magnitude of the error \(\hat{a}-a\) is below the tolerance with high probability, it might be biased, that is, the expectation of the error might not be zero: \(b:=\mathbb{E}[\hat{a} - a]\ne 0\), where \(\mathbb{E}[\cdot ]\) is the expectation with respect to the randomness of the measurement outcomes. We hereafter call b the bias and the residual \(\hat{a}-a-b\) as the random part.

The motivation to focus on the bias in QAE, including IQAE, is the possibility that it may matter more than random part in some cases. That is, when we want some quantities given as a combination of many outputs of different QAE runs, biases can accumulate and, even if small in each output, become significant in total. In worst cases, biases in the sum of N estimates scales as \(O(N)\), whereas according to the central limit theorem, the random parts cancel each other and scale as \(O(\sqrt{N})\). An example of situations where we combine many QAE outputs is calculating the total value of a portfolio of derivative contracts, where each contract is priced by individual runs of QAE. Another example is calculating Gibbs partition functions in statistical mechanics [22]. In the quantum algorithm in [22], a partition function is expressed as the product of expectations of certain random variables, each of which is estimated by QAE.

As far as the author knows, there has been no study on bias in IQAE, while bias in other types of QAE has been studied so far [19, 21, 22]. Thus, in this paper, we investigate the bias in IQAE. We conduct numerical experiments to reveal the nature of the bias in IQAE. One of our key findings from the numerical experiments is that IQAE is in fact biased, and its termination criterion induces the bias. That is, the procedure that the algorithm ends when the CI width of a reaches the required accuracy leads to the bias. This is because the CI is statistically inferred and its width depends on the realized value of the estimate â of a. The algorithm tends to end when â accidentally takes a value that yields a narrow CI. This effect affects the expectation of â and then induces the bias.

In particular, we observe that the bias is enhanced for some specific values of a. This phenomenon is explained by the distribution of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\). Here, \(k_{\mathrm{fin}}\) is the Grover number in the final round, and \(f_{\mathrm{fin}}\in [0,1]\) is a quantity determined by \(k_{\mathrm{fin}}\) (see the definition later), which rapidly varies by a slight change of \(k_{\mathrm{fin}}\) and largely affects the bias. Note that \(k_{\mathrm{fin}}\) is also a random variable depending on the measurement outcomes, and thus so is \((k_{\mathrm{fin}},f_{\mathrm{fin}})\). For a value of a other than the specific ones, \(f_{\mathrm{fin}}\) takes the various values distributed widely in the range \([0,1]\) when \(k_{\mathrm{fin}}\) varies. Then, over the wide distribution of realized values of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) in the 2D plane, the various values of the bias for the various values of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) are canceled out on average, which yields a small bias in total. On the other hand, for the specific values of a, the realized values of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) are not distributed widely but concentrated in a small part of the 2D plane, in fact, on a few curves. Thus, the bias cancellation does not occur and the resultant bias remains considerable.

We also propose a simple way to mitigate the bias: just re-executing the final round. If the algorithm ends at the final round with the Grover number \(k_{\mathrm{fin}}\) and the shot number \(N_{\mathrm{fin}}\), we perform another round with the same Grover number and shot number and obtain an estimate of a from the measurement outcomes in this additional round. Now, the gradual increment of the shot number and the termination criterion on the CI width are no longer adopted: we perform just \(N_{\mathrm{fin}}\) shots and stop. This largely diminishes the bias by the termination criterion, as confirmed by the numerical experiments. We also confirm that, although this re-executing solution definitely increases the total number of queries to G, the increase rate is modest – about 25% in our experiment. This is because the final round does not dominate the other rounds in terms of the query number.

The rest of this paper is organized as follows. Section 2 is a preliminary one, where we outline QAE and IQAE. Section 3 is the main part of this paper, where the results of our numerical experiments are presented. We first show the magnitude of the bias for various values of a, which is enhanced for some specific values of a, and then elaborate the aforementioned understanding of such a phenomenon. We finally propose the bias mitigation method by re-executing the final round along with the numerical result. Section 4 summarizes this paper.

2 Preliminaries

2.1 Quantum amplitude estimation

In this paper, QAE is a generic term that means quantum algorithms to estimate the amplitude of a target basis state in a superposition state. Concretely, our aim is described as the following problem.

Problem 1

Let \(\epsilon ,\alpha \in (0,1)\). Suppose that we are given access to the following two quantum circuits A and S on a n-qubit register. A acts as

where \(a\in [0,1]\), \(|{0}\rangle \) is the computational basis state in which all the n qubits take \(|{0}\rangle \), and \(|{\phi _{0}}\rangle \) and \(|{\phi _{1}}\rangle \) are quantum states orthogonal to each other. S acts as

Also suppose that we can measure an observable corresponding to a Hermitian H on the same system, for which \(|{\phi _{0}}\rangle \) and \(|{\phi _{1}}\rangle \) are eigenstates with different eigenvalues. Then, we want to get an estimate of a with accuracy ϵ with probability at least \(1-\alpha \).

Although the setup of Problem 1 seems quite simple, previous studies [1, 10–21] have generally considered this setup, and in fact, many applications can be boiled down to this form. For example, in QMCI [3], the expected value of a random variable is encoded into a quantum state like Eq. (1) as the squared amplitude a of a basis state \(|{\phi _{1}}\rangle \), and through estimating this amplitude, we get an approximation of the expectation.

In many use cases of QAE, \(|{\phi _{1}}\rangle \) and \(|{\phi _{0}}\rangle \) are distinguished by whether a specific qubit takes \(|{1}\rangle \) or \(|{0}\rangle \). In this case, S is the Z gate on the qubit and H is the projective measurement in the computational basis on the qubit.

[1] posed this problem and presented an algorithm for it based on QPE. We have the following theorem on its query complexity.

Theorem 1

([1], Theorem 12, modified)

Suppose that we are given access to the oracles A and S in Eqs. (1) and (2). Then, for any \(\epsilon ,\alpha \in (0,1)\), there exists a quantum algorithm that outputs \(\hat{a}\in (0,1)\) such that \(|\hat{a}-a|\le \epsilon \) with probability at least \(1-\alpha \) calling A and S

times.

Although the success probability in the original algorithm in [1] is lower bounded by a constant \(8/\pi ^{2}\), it can be enhanced to an arbitrary value \(1-\alpha \) at the expense of an \(O\left (\log (1/\alpha )\right )\) overhead in the query complexity. This is done by a trick of taking the median of the results in the multiple runs of the algorithm [3], which is based on the powering lemma in [23].

We do not give the full details of this QPE-based QAE but present its outline briefly. The key ingredient is the Grover operator G, which is defined as

Here, \(S_{0}\) is a unitary such that

which can be implemented as a combination of X gates and a multi-controlled Z gate. The key property of G is that for any \(k\in \mathbb{N}\) it acts as

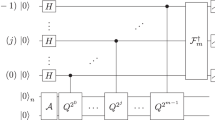

where \(\theta _{a}:=\arcsin \left (\sqrt{a}\right )\). That is, G rotates the statevector by an angle \(2\theta _{a}\) in the 2-dimensional Hilbert space spanned by \(|{\phi _{1}}\rangle \) and \(|{\phi _{0}}\rangle \). Because of this property, we have the following QPE-based approach to estimate a. We prepare a register \(R_{G}\) on which G acts and an ancillary m-qubit register \(R_{\mathrm{anc}}\). Then, using \(G,G^{2},\ldots ,G^{2^{m-1}}\) controlled by the first, second, …, m-th qubits in \(R_{\mathrm{anc}}\), respectively, we generate the state \(\frac{1}{2^{m/2}}\sum _{k=0}^{2^{m}-1}|{k}\rangle G^{k}|{\Phi}\rangle \). Finally, we operate the inverse quantum Fourier transform on \(R_{\mathrm{anc}}\), which, thanks to the property in Eq. (6), yields an m-bit precision estimate of \(\theta _{a}\) and thus that of \(a=\sin ^{2} \theta _{a}\). In this process, the number of uses of (controlled) G is \(1+2+\cdots +2^{m-1}=2^{m}\), and thus A and S are queried \(O(2^{m})\) times, which implies the \(O(1/\epsilon )\) query complexity for accuracy ϵ as shown in Theorem 1. See [1] for more details.

Compared to a naive way for estimating a by repeating generations of \(|{\Phi}\rangle \) and measurements on it and letting the frequency of obtaining \(|{\phi _{1}}\rangle \) be an estimate of a, in which the number of queries to A scales as \(\widetilde{O}(1/\epsilon ^{2})\), QAE achieves the quadratic speedup with respect to ϵ. This is the origin of the quadratic speedups in quantum algorithms built upon QAE, e.g., QMCI in comparison to the classical Monte Carlo method.

2.2 Iterative quantum amplitude estimation

After the original QAE was proposed, some variants have been proposed so far, aiming at the reduction of the resource. Avoiding the use of QPE is a common approach since QPE requires the controlled version of G, for which the resource for the implementation increases compared to the uncontrolled one.

IQAE [13] is in such a direction. The basic idea is as follows. By iterating the generation of \(|{\Phi}\rangle \) and the measurement on it, we get an estimate of a as the frequency of obtaining \(|{\phi _{1}}\rangle \) in the measurements. This is in fact a kind of MLE, since for \(\mathrm{Be}(p)\), the Bernoulli distribution with probability of 1 equal to p, the maximum likelihood estimate of p from multiple trials is nothing but the realized frequency of 1. We also have the CI of a, which contains the true value of a with high probability. Next, for some \(k\in \mathbb{N}\), we repeat generating \(G^{k}|{\Phi}\rangle \) and the measurement on it, and from the measurement outcomes we get an estimate of

and then that of a. Here, due to the periodicity of \(a_{k}\) as the function of a, the \(2k+1\) different values in \([0,1]\) can be the maximum likelihood estimates of a. However, since we already have the CI from the previous round consisting of the measurements on \(|{\Phi}\rangle \), combining it with the measurements on \(G^{k}|{\Phi}\rangle \) yields the new CI as a single interval in \([0,1]\), whose width is narrower than the previous one. We repeat this procedure for a sufficient number of rounds, making the width of the CI narrower. When the CI width reaches the required accuracy ϵ, we have an estimate of a with an error below ϵ. The CI width in each round is taken so that the probability that in every round the obtained CI successfully encloses the true value a is at least some required success probability \(1-\alpha \).

Concretely, we present this algorithm as Algorithm 1. This is a modification of the algorithm [20], which is already a modified version of the original IQAE algorithm in [13].

Leaving the full details of this algorithm to [20], we just present a theorem on the query complexity and some comments.

Theorem 2

(Theorem 3.1 in [20])

Let \(\epsilon ,\alpha \in (0,1)\). Suppose that we are given access to the oracles A in Eq. (1) and S in Eq. (2). Then, Algorithm 1 outputs an ϵ-approximation â of a with probability at least \(1-\alpha \), making \(O\left (\frac{1}{\epsilon}\log \frac{1}{\alpha}\right )\) queries to A and S in total.

Let us make some comments that help us to understand the outline of the algorithm. In Algorithm 1, \([a^{l}_{k_{i},j}, a^{u}_{k_{i},j}]\) and \([\theta ^{l}_{k_{i},j}, \theta ^{u}_{k_{i},j}]\) are the CIs of a and the angle \(\theta _{a}\), respectively. In the ith round, we set the Grover number to \(k_{i}\), and we calculate the maximum likelihood estimate \(\hat{a}_{k_{i},j}\) of \(a_{k_{i}}\) and its CI \([a^{l}_{k_{i},j},a^{u}_{k_{i},j}]\) from the outcomes of the measurements on \(G^{k_{i}}|{\Phi}\rangle \). They are translated to the maximum likelihood estimate \(\hat{a}_{i,j}\) of a and the CIs \([\theta ^{l}_{i,j},\theta ^{u}_{i,j}]\) and \([a^{l}_{i,j},a^{u}_{i,j}]\). The ith round ends if we find the Grover number in the next round by the procedure FindNextK shown as Algorithm 2. In this procedure, we search the next k greedily. Namely, we set it as large as possible, requiring that \([K\theta ^{l}_{i,j},K\theta ^{u}_{i,j}]\) the CI of \(\theta _{a}\) multiplied by \(K=2k+1\) lies in a single quadrant, which enables us to determine the CIs of a and \(\theta _{a}\) as single intervals. If we cannot find such k in the region that \(K \ge r_{\mathrm{min}} (2k_{i}+1)\), we continue the ith round. When \(\Delta a_{i,j}\), the accuracy of the estimate of a from the measurement outcomes so far, goes below the predetermined accuracy level ϵ, the algorithm stops and outputs the estimate at that time.

In the rounds of this process, we operate \(G^{k_{1}},G^{k_{2}},\ldots \) with \(k_{i}\) increasing exponentially as \(K_{i+1} \ge r_{\mathrm{min}}K_{i}\), and its value in the final round is of order \(O(1/\epsilon )\). This means that G is queried \(O(1/\epsilon )\) times in total, and so are A and S, as stated in Theorem 2.

Note that the parameters \(N_{\mathrm{shot}}\) and \(r_{\mathrm{min}}\) in Algorithm 1 are not mentioned in the statement of Theorem 2. Although there may be various settings on these that make the algorithm work, we adopt the following setting in this paper. According to [20], \(N_{\mathrm{shot}}\) can be set to 1, which means that we search the next k every time we make one measurement on \(G^{k_{i}}|{\Phi}\rangle \), and it reduces the query complexity keeping the accuracy. We thus set \(N_{\mathrm{shot}}=1\) hereafter. On \(r_{\mathrm{min}}\), there may be some choices such as 2 in [13] and 3 in [20], and we adopt the former hereafter.

We also note that a slight modification in Algorithm 1 from the algorithm in [20]: the former outputs the maximum likelihood estimate â when \(\Delta a_{i,j}\) becomes smaller than the required accuracy ϵ, while the latter outputs the midpoint of \([a^{l}_{i,j},a^{u}_{i,j}]\) when \(\theta ^{u}_{i,j}-\theta ^{l}_{i,j}\), the width of the CI of \(\theta _{a}\), becomes smaller than 2ϵ. The easily checked relationship \(|a^{u}_{i,j}-a^{l}_{i,j}|\le |\theta ^{u}_{i,j}-\theta ^{l}_{i,j}|\) implies that, if we impose the termination criterion on \(\theta _{a}\) even though we want to guarantee the accuracy of a, we may take unnecessarily many iterations. We thus adopt the termination criterion on a, and in fact, we confirmed that this leads to a smaller query number than the criterion on \(\theta _{a}\) in the later numerical experiment, although we will not show the result in the latter setting.

3 Numerical experiments on the bias in IQAE

3.1 Biases for various a

Hereafter, in order to understand the bias in IQAE and how it arises, we conduct some numerical experiments.

First of all, since the current problem is characterized by a, the amplitude we want to estimate, we run IQAE and see the bias for the various values of a. Here, “run” does not mean running Algorithm 1 on a real quantum computer or a quantum circuit simulator but a classical simulation of the algorithm based on the probability distribution of the outcomes of the measurements made in the algorithm. Concretely, we replace the step 32 in Algorithm 1 with “Draw \(n_{\mathrm{shot}}\) samples from \(\mathrm{Be}\left (a_{k_{i}}\right )\) and let the number of 1’s be \(n_{1}\)”. Note that, if we know the value of a, we know the probability distribution of the outcome in measuring \(G^{k_{i}}|{\Phi}\rangle \), that is, \(|{\phi _{1}}\rangle \) with probability \(a_{k_{i}}\) and \(|{\phi _{0}}\rangle \) with probability \(1-a_{k_{i}}\), which is equivalent to \(\mathrm{Be}\left (a_{k_{i}}\right )\). Therefore, with the above replacement, we can produce the output of Algorithm 1 under the probability distribution it obeys. We hereafter call this simulation procedure Algorithm 1′.

For the estimator â of a, we define its bias as

where \(\mathbb{E}[\cdot ]\) denotes the expectation with respect to the randomness of the measurement outcomes in Algorithm 1. In order to estimate the magnitude of the bias for various values of a, we ran Algorithm 1′ \(N_{\mathrm{run}}\) times, and calculate the value of the average of the errors in the runs

and the standard error of \(\bar{b}(a)\)

where \(\hat{a}^{(n)}\) is the output in the nth run. We can grasp the magnitude of the bias by comparing \(\bar{b}(a)\) and \(\bar{\sigma}(a)\), since, if \(b(a)=0\), \(\bar{b}(a)\) is comparable to \(\bar{\sigma}(a)\) with high probability, e.g., \(|\bar{b}(a)|\le 2\bar{\sigma}(a)\) with about 95%, for sufficiently large \(N_{\mathrm{run}}\). The result is shown in Fig. 1. In this figure, we set a to the 201 equally spaced points in \([0.001,0.999]\) including the ends. The other parameters are set as \(N_{\mathrm{run}}=10^{4}\), \(\epsilon =0.001\), \(\alpha =0.05\), which also applies hereafter. From this figure, we see that, for a considerable fraction of the examined values of a, \(|\bar{b}(a)|\) exceeds \(2\bar{\sigma}(a)\), which implies â is biased. In particular, \(\bar{b}(a)\) takes much larger values for specific values of a than other values. Namely, the bias is enhanced for some specific values of a.

For the various values of the amplitude a, \(|\bar{b}(a)|\), the absolute value of the average of the errors in the 10,000 runs of IQAE (Eq. (13)) is plotted in blue, and \(2\bar{\sigma}(a)\), the standard error of this average (Eq. (14)) times 2 is plotted in orange. We set \(\epsilon =0.001\), \(\alpha =0.05\)

3.2 Reason why the bias is enhanced for specific values of a

We then investigate the reason why the bias is enhanced for specific values of a, fixing a to 0.2505, one of such values. What makes the situation that \(a=0.2505\) different from the others?

To make the investigation as simple as possible, we should note some points. First, we note that it is sufficient to focus on the final round in Algorithm 1. The output of Algorithm 1 is determined by the result of the MLE in the final round. Therefore, as long as the CI obtained in the round just before the final one successfully encloses a, which occurs with high probability at least \(1-\alpha \), the error is determined by the final round only. Second, we note that the final round is characterized by only \(k_{\mathrm{fin}}\), the Grover number in that round: other quantities that define the procedure in the final round are automatically determined when \(k_{\mathrm{fin}}\) is fixed, including \(R_{\mathrm{fin}}\), as long as the CI of a encloses its true value. We finally make a note on notation: here and hereafter, like \(k_{\mathrm{fin}}\) and \(R_{\mathrm{fin}}\), when we write quantities that have \(i_{\mathrm{fin}}\), the index indicating the final round, in the subscript, we replace \(i_{\mathrm{fin}}\) with fin for conciseness.

Based on the above discussion and noting that \(k_{\mathrm{fin}}\) is also a random variable affected by the measurement outcomes in the previous rounds, we write the bias as

where \(\mathcal{G}_{k_{\mathrm{fin}}^{\prime}}\) is the event that \(k_{\mathrm{fin}}\) takes a value \(k_{\mathrm{fin}}^{\prime}\), and \(p_{k_{\mathrm{fin}}^{\prime}}\) is its probability.

3.2.1 Bias conditioned on the final Grover number

We then consider how \(k_{\mathrm{fin}}\) affects \(b_{k_{\mathrm{fin}}}(a)\), the bias conditioned on \(k_{\mathrm{fin}}\). First, we rewrite â the output of Algorithm 1 as

where \(\hat{\gamma}_{\mathrm{fin}}\) is the value of \(\hat{\gamma}_{\mathrm{fin},j}\) when

holds for the first time. Note that \(\gamma ^{l}_{\mathrm{fin},j}\), \(\gamma ^{u}_{\mathrm{fin},j}\) and \(\hat{\gamma}_{\mathrm{fin},j}\) are random variables determined by the measurement outcomes in Algorithm 1, and so is \(\hat{\gamma}_{\mathrm{fin}}\). When \(k_{\mathrm{fin}}\gg 1\), which is a typical situation for small ϵ, the most sensitive dependence of â’s distribution on \(k_{\mathrm{fin}}\) is through the distributions of \(\gamma ^{l}_{\mathrm{fin},j}\), \(\gamma ^{u}_{\mathrm{fin},j}\) and \(\hat{\gamma}_{\mathrm{fin},j}\), while \(k_{\mathrm{fin}}\) also affects â through \(K_{\mathrm{fin}}\) and \(R_{\mathrm{fin}}\) in Eqs. (17) and (18). This is because the distributions of \(\hat{\gamma}_{\mathrm{fin},j}\) etc. largely change even when \(k_{\mathrm{fin}}\) changes slightly. Under the definition in Eq. (9), the distributions of \(\hat{\gamma}_{\mathrm{fin},j}\) etc. are determined by the distribution of \(\hat{a}_{k_{\mathrm{fin}},j}\) and \(\mathfrak{p}(R_{\mathrm{fin}})\), the parity of \(R_{\mathrm{fin}}\). The distribution of \(\hat{a}_{k_{\mathrm{fin}},j}\) is determined by that of the outcome of the measurement on \(G^{k_{\mathrm{fin}}}|{\Phi}\rangle \), which is equivalent to \(\mathrm{Be}(a_{k_{\mathrm{fin}}})\), and \(a_{k_{\mathrm{fin}}}\) can change largely even by shifting \(k_{\mathrm{fin}}\) by 1. When \(\mathfrak{p}(R_{\mathrm{fin}})\) flips, the form of \(\hat{\gamma}_{\mathrm{fin},j}\) as a function of \(\hat{a}_{k_{\mathrm{fin}},j}\) also flips and so does the distribution of \(\hat{\gamma}_{\mathrm{fin},j}\). These observations imply that we should focus on \(a_{k_{\mathrm{fin}}}\) and \(\mathfrak{p}(R_{\mathrm{fin}})\) as key factors for \(b_{k_{\mathrm{fin}}}(a)\). However, as an equivalent to this, we instead focus on how \(b_{k_{\mathrm{fin}}}(a)\) is affected by

where \(\mathrm{frac}(x):=x-\lfloor x \rfloor \) is the fractional part of \(x\in \mathbb{R}\). \(f_{\mathrm{fin}}\) has a one-to-one correspondence to the pair \((a_{k_{\mathrm{fin}}},\mathfrak{p}(R_{\mathrm{fin}}))\), and taking a single quantity \(f_{\mathrm{fin}}\) as a key factor rather than the pair \((a_{k_{\mathrm{fin}}},\mathfrak{p}(R_{\mathrm{fin}}))\) makes the following discussion simpler.

Since \(f_{\mathrm{fin}}\) is also a quantity rapidly changing with respect to \(k_{\mathrm{fin}}\), in order to understand how it affect \(b_{k_{\mathrm{fin}}}(a)\), we temporarily deal with \(k_{\mathrm{fin}}\) and \(f_{\mathrm{fin}}\) as independent variables, even though \(k_{\mathrm{fin}}\) determines \(f_{\mathrm{fin}}\). We set \(k_{\mathrm{fin}}\) and \(f_{\mathrm{fin}}\) separately, and, as an estimate of \(b_{k_{\mathrm{fin}}}(a)\), calculate the quantity \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) by the procedure in Algorithm 3. That is, we run one round of IQAE many times for fixed \(k_{\mathrm{fin}}\) and \(f_{\mathrm{fin}}\), and take the average of the resultant errors in the runs in which IQAE itself is terminated, neglecting the other runs, which move to the next round. We calculate the average only when at least 1000 runs out of the 10,000 total runs yield outputs other than NaN, since averaging a small number of random results leads to an inaccurate estimate. Note that, in compensation for setting \(k_{\mathrm{fin}}\) and \(f_{\mathrm{fin}}\) independently, the true amplitude a (now, 0.2505) is slightly adjusted to ã so that Eq. (19) holds. Nevertheless, we expect that \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) gives us a good illustration of the behavior of \(b_{k_{\mathrm{fin}}}(a)\). Also note that the setting in Eq. (21) corresponds to the assumption that the true amplitude ã is enclosed in the CI in the previous round.

In Fig. 2, we show the results for the various values of \(f_{\mathrm{fin}}\) with \(k_{\mathrm{fin}}\) set to several values. For \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) that yields NaN \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\), we do not plot a point.

\(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) calculated by Algorithm 3 for various values of \((k_{\mathrm{fin}}, f_{\mathrm{fin}})\). \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) indicates the bias generated in the final round of IQAE conditioned on the Grover number \(k_{\mathrm{fin}}\) and \(f_{\mathrm{fin}}\) in Eq. (19). For \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) that yields NaN \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\), we do not plot a point

We can intuitively understand how the bias is generated by noting the following two points.

First, we note that \(\Delta a_{i,j}\), the estimated accuracy in the intermediate step in Algorithm 1, is also a random variable. It is determined by \(\hat{a}_{k_{i},j}\), the realized frequency of \(|{\phi _{1}}\rangle \) in the repeated measurements on \(G^{k_{i}}|{\Phi}\rangle \), and \(N_{i,j}\), the number of the measurements. How \(\Delta a_{i,j}\) depends on \(\hat{a}_{k_{i},j}\) is understood as follows. Because of the upper bound 1 and lower bound 0 of \(\hat{a}_{k_{i},j}\), the width of the CI \([a^{l}_{k_{i},j},a^{u}_{k_{i},j}]\) of \(a_{k_{i}}\) given as Eq. (8) already depends on not only \(N_{i,j}\) but also \(\hat{a}_{k_{i},j}\): if \(\hat{a}_{k_{i},j}\) is closer to 0 or 1, the CI width becomes smaller. In addition to this, the derivation of \(\Delta a_{i,j}\) from \(\hat{a}_{k_{i},j}\) and \([a^{l}_{k_{i},j},a^{u}_{k_{i},j}]\) by the nonlinear relationship introduce the dependence of \(\Delta a_{i,j}\) on \(\hat{a}_{k_{i},j}\). Besides, since \(\hat{a}_{k_{i},j}\) has the one-to-one correspondence to \(\hat{a}_{i,j}\), we can regard \(\Delta a_{i,j}\) as a function of \(\hat{a}_{i,j}\). Figure 3 illustrates this. Figure 3(a) shows \(a^{u}_{i,j}\) and \(a^{l}_{i,j}\), the upper and lower ends of the CI of a versus the realized value of \(\hat{a}_{i,j}\) after \(N=100\) shots in a round with the Grover number \(k=200\), and Fig. 3(b) shows \(\Delta a_{i,j}\) versus \(\hat{a}_{i,j}\).

In Fig. 3(a), the blue (resp. red) solid line is the upper end \(a^{u}_{i,j}\) (resp. lower end \(a^{l}_{i,j}\)) of the CI of the amplitude a versus the realized value of the estimate \(\hat{a}_{i,j}\) after \(N=100\) shots in an IQAE round with the Grover number \(k=200\). The black dashed line is a diagonal line just for reference, on which the vertical coordinate is equal to \(\hat{a}_{i,j}\). Figure 3(b) shows the estimated accuracy \(\Delta a_{i,j}\), which is determined by \(a^{u}_{i,j}\), \(a^{l}_{i,j}\), and \(\hat{a}_{i,j}\) as Eq. (11), in the same setting. In these figures, the curves are shown over the range of \(\hat{a}_{i,j}\) such that \(\left \lfloor (2k+1)\theta _{\hat{a}_{i,j}}/(\pi /2)\right \rfloor = \left \lfloor (2k+1)\theta _{a=0.2505}/(\pi /2)\right \rfloor \). This is the set of the values \(\hat{a}_{i,j}\) can take in the IQAE round if the CI at the beginning of the round encloses \(a=0.2505\)

The second point is the termination criterion \(\Delta a_{i,j}\le \epsilon \) in Step 32 in Algorithm 1, which means that IQAE ends when the estimated accuracy \(\Delta a_{i,j}\) becomes smaller than ϵ. This criterion, along with the aforementioned dependence of \(\Delta a_{i,j}\) on \(\hat{a}_{i,j}\), leads to the bias. If \(\hat{a}_{i,j}\) goes to the region where \(\Delta a_{i,j}\) is relatively small, Algorithm 1 tends to end early, and, if \(\hat{a}_{i,j}\) goes to the region with larger \(\Delta a_{i,j}\), the algorithm tends to take more shots by the end, or even go to the next round. This makes the probability that the algorithm ends with \(\hat{a}_{i,j}\) corresponding to smaller \(\Delta a_{i,j}\) and outputs such \(\hat{a}_{i,j}\) as â higher. Such an effect yields the probability distribution of â asymmetric about the point \(\hat{a}=a\), and the nonzero bias \(b_{k_{\mathrm{fin}}}\ne 0\).

Although this understanding on how the bias arises is an intuitive one, we will indirectly confirm its validity by seeing in the numerical experiment in Sect. 3.3 that re-executing the final round without the termination criterion mitigates the bias.

With the above understanding, we can also see why the bias \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) depends on \(f_{\mathrm{fin}}\) with \(k_{\mathrm{fin}}\) fixed. As explained above, changing \(f_{\mathrm{fin}}\) corresponds to changing a. Assuming that â mainly distributes in the neighborhood of a, the shape of \(\Delta a_{i,j}\) as a function of \(\hat{a}_{i,j}\) around the point \(\hat{a}_{i,j}=a\) affects the bias, which causes the dependence of the bias on a, and then \(f_{\mathrm{fin}}\). For example, if \(\Delta a_{i,j}\) is increasing in the neighborhood of \(\hat{a}_{i,j}=a\), the preference of \(\hat{a}_{i,j}\) to values corresponding to small \(\Delta a_{i,j}\) leads to the negative bias.

We also note that, in Fig. 2, the graph of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) is nearly antisymmetric with respect to reflection about the vertical line \(f_{\mathrm{fin}}=\frac{1}{2}\), that is,

except the case of \(k_{\mathrm{fin}}=300\). This antisymmetricity is understood as follows. We temporarily ignore the process for the transition to the next round. Then, note that, with \(k_{\mathrm{fin}}\) fixed, the transform \(f_{\mathrm{fin}}\rightarrow 1-f_{\mathrm{fin}}\) conserves \(a_{k_{\mathrm{fin}}}\) and flips \(\mathfrak{p}(R_{\mathrm{fin}})\). Thus, by this transform, the distribution of \(\hat{a}_{k_{\mathrm{fin}},j}\) is unchanged and the relationship between \(\hat{\gamma}_{\mathrm{fin},j}\) and \(\hat{a}_{k_{\mathrm{fin}},j}\) in Eq. (10) is switched. Therefore, assuming that \(k_{\mathrm{fin}}\gg 1\) and neglecting the slight change of a by this transform, we see that this transform flips the distribution of the error \(\hat{a}-a\): \(p(\hat{a}-a;k_{\mathrm{fin}},f_{\mathrm{fin}}) \approx p(-(\hat{a}-a);k_{ \mathrm{fin}},1-f_{\mathrm{fin}})\), where \(p(\cdot ;k_{\mathrm{fin}},f_{\mathrm{fin}})\) is the probability density of \(\hat{a}-a\) conditioned by \(k_{\mathrm{fin}}\) and \(f_{\mathrm{fin}}\). This means that the transform \(f_{\mathrm{fin}}\rightarrow 1-f_{\mathrm{fin}}\) also flips the bias as Eq. (23). In fact, this discussion is not rigorous, since the process for the transition to the next round breaks the symmetry with respect to \(f_{\mathrm{fin}}\rightarrow 1-f_{\mathrm{fin}}\). This makes the antisymmetricity disappear in Fig. 2(b) for \(k_{\mathrm{fin}}=300\). Nevertheless, this antisymmetricity holds for the wide region of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\), and becomes a key for the phenomenon that the bias is enhanced only for the specific values of a, as seen below.

3.2.2 Distribution of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\)

Next, we consider the distribution of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\). Since \(f_{\mathrm{fin}}\) is determined by \(k_{\mathrm{fin}}\) with a fixed and \(k_{\mathrm{fin}}\) takes natural numbers, \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) distributes in the 2D plane not continuously but as discrete points. In Fig. 4(a), we show the realized values of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) for \(a=0.2505\) in the 10,000 runs of Algorithm 1′. We see that the plotted points are concentrated only on the three lines. This is not a phenomenon observed for general a. For example, in Fig. 4(b), the similar plot for \(a=0.2006\), for which the observed bias \(\bar{b}(a)\) is much smaller than \(a=0.2505\), the points are distributed more broadly than those for \(a=0.2505\).

The realized values of the Grover number \(k_{\mathrm{fin}}\) in the final round and \(f_{\mathrm{fin}}\) given by Eq. (19) in the 10,000 runs of IQAE for (a) \(a=0.2505\) and (b) \(a=0.2006\) are plotted as transparent black points. Darker regions consisting of many overlapped points indicate that there are many realizations in it and lighter regions indicates the opposite. In Fig. 4(a), the blue, red, and orange solid lines represent \(\mathrm{frac}\left ((2k_{\mathrm{fin}}+1)\theta _{a}/\pi \right )\) for \(k_{\mathrm{fin}}\) such that \(k_{\mathrm{fin}}\equiv 0,1\), and 2 modulo 3, respectively, and the blue, red, and orange horizontal dashed lines represent \(f_{\mathrm{fin}}=1/6\), \(1/2\), and \(5/6\), respectively

The distribution of the points for \(a=0.2505\) is understood as follows. We note that, for \(\theta \in (0,\pi /2)\) written as \(\theta =l\pi /2m\) with positive integers l and m such that \(l < m\), \(f_{\theta}(k):=\mathrm{frac}\left ((2k+1)\theta /\pi \right )\) is a periodic function of \(k\in \mathbb{Z}\) with period m. Thus, it takes at most m values, and, if we plot \(f_{\theta}(k)\) versus k, the points lie on at most m horizontal lines. If we slightly shift θ from such a value, \(f_{\theta}(k)\) can take different values from the original ones, which means the horizontal lines transform into lines with small slopes. \(\theta _{a}\) for \(a=0.2505\) applies to such a case. It can be written in the form of

with small \(m\in \mathbb{N}\) and \(\delta \in \mathbb{R}\) such that \(|\delta |\ll 1\). Concretely, \(l=1\), \(m=3\) and \(\delta \approx 0.000577\). For this a, \(f_{\theta _{a}}(k)\) consists of only three slightly tilted lines shown in Fig. 4(a) as solid lines, which were dashed horizontal lines in the same figure if \(\delta =0\).

We now plot the distribution of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) on the heatmap of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\), which is highly illustrative for understanding why the bias is enhanced for specific values of a. In Fig. 5, we show the results for \(a=0.2505, 0.2006\), and, in addition, \(a=0.25\), for which the 10,000 runs of Algorithm 1′ are conducted too.

The same realized values of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) as Fig. 4 are plotted on the heatmap of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) calculated by Algorithm 3. \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) indicates the bias generated in the final round of IQAE conditioned on the Grover number \(k_{\mathrm{fin}}\) and \(f_{\mathrm{fin}}\) in Eq. (19). Now, the case of \(a=0.25\) is added. Again, the points are in transparent black, and thus darker regions indicate that there are many realizations in it and lighter regions indicate the opposite. In the heatmap, the color of the regions with NaN \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) is set to white. For \(a=0.2505\), the realized values of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) are concentrated on a few lines, whereas for \(a=0.2006\), \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) is distributed more broadly. For \(a=0.25\), the distribution is concentrated on a few lines but has a reflection symmetry about \(f_{\mathrm{min}}=0.5\)

We obtain \(b(a)\) by averaging the values of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) over the realized values of \((k_{\mathrm{fin}}, f_{\mathrm{fin}})\), which is represented by the black points, with the weight proportional to the realization frequency, which is represented by the darkness of the points. In the case of \(a=0.2505\), as seen above, the realized values of \((k_{\mathrm{fin}}, f_{\mathrm{fin}})\) are not broadly distributed but located on a few specific lines. Contrary to this, in the case of \(a=0.2006\), the points are distributed more broadly, and the values of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) on the points take various values, both positive and negative, canceling out each other when averaged. This cancellation tends not to occur in the case of \(a=0.2505\) due to the concentration of the points. In fact, we see that the lines consisting of the realized values of \((k_{\mathrm{fin}}, f_{\mathrm{fin}})\) mainly go through the regions where \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) is positive, which leads to positive \(b(a)\).

Of course, even if Eq. (24) holds and thus the realized values of \((k_{\mathrm{fin}}, f_{\mathrm{fin}})\) concentrate on a few lines, the values of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) on the points may accidentally cancel out each other, resulting in a relatively small \(b(a)\). Conversely, even if the points are distributed broadly, the distribution is not uniform and the heatmap of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) is not completely antisymmetric, which means that the bias cancellation is not perfect. Nevertheless, the distribution of realized \((k_{\mathrm{fin}}, f_{\mathrm{fin}})\) and the cancellation of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) on the points roughly describe the phenomenon that the bias is enhanced for the specific values of a.

We remark on the case that \(\theta _{a}\) can be exactly written in the form of \(l\pi /2m\) with small m. For example, \(a=0.25\) gives \(\theta _{a}=l\pi /2m\) with \(l=1\) and \(m=3\). Although \(a=0.25\) has only a slight difference from \(a=0.2505\), \(a=0.25\) does not yield the large bias: for it, \(b(a=0.25)=3.5\times 10^{-6}\), which is one order of magnitude smaller than \(b(a=0.2505)=3.7\times 10^{-5}\). Figure 5(c) shows the distribution of realized \((k_{\mathrm{fin}}, f_{\mathrm{fin}})\) on the heatmap of \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) for \(a=0.25\). We see that the points are distributed on the two horizontal linesFootnote 3 located at the symmetric positions with respect to reflection about the line \(f_{\mathrm{fin}}=1/2\). Since \(\tilde{b}(k_{\mathrm{fin}}, f_{\mathrm{fin}})\) is nearly antisymmetric about this line, its values at the realized points largely cancel out each other, yielding the relatively small \(b(a)\).

3.3 Mitigation of the bias

Finally, we propose a simple method to mitigate the bias: just re-executing the final round. Namely, we modify Algorithm 1 as follows. Suppose that we get the CI of a such that \(\Delta a_{i,j} \le \epsilon \) in the round with the Grover number \(k_{\mathrm{fin}}\) and the total shot number \(N_{\mathrm{fin}}\). Then, we perform just one additional round using the same \(k_{\mathrm{fin}}\) and \(N_{\mathrm{fin}}\), and let the resultant maximum likelihood estimate â in the added round be the output of the algorithm. In this additional round, we do not impose the termination criterion \(\Delta a_{i,j} \le \epsilon \). We make \(N_{\mathrm{fin}}\) measurements on \(G^{k_{\mathrm{fin}}}|{\Phi}\rangle \) even if \(\Delta a_{i,j} \le \epsilon \) is satisfied in the middle. We show the modified algorithm as Algorithm 4.

The reason why we expect that this re-execution-based bias mitigation works is as follows. Recall that, in Algorithm 1, the error is generated only in the final round as long as the second-to-last round yields the CI of a enclosing the true value, and the bias is induced by the termination criterion \(\Delta a_{i,j} \le \epsilon \). We thus consider that running an additional round without the criterion \(\Delta a_{i,j} \le \epsilon \) and taking the result as the final output will mitigate the bias.

In Fig. 6(a), we plot \(\left |\bar{b}(a)\right |\) in Algorithm 1, which is plotted also in Fig. 1, and that in Algorithm 4. As this figure shows, the enhanced biases for the specific values of a in Algorithm 1 are largely reduced in Algorithm 4, which indicates that re-executing the final round mitigates the bias. If we take, among the examined values of a, those for which \(\left |\bar{b}(a)\right |\ge 2\bar{\sigma}(a)\), the average and maximum of the bias reduction rates for these values of a are 57.8% and 99.2%, respectively.

For the various values of the amplitude a, we plot (a) \(|\bar{b}(a)|\), the absolute value of the average of the errors in the 10,000 runs of IQAE (Eq. (13)) and (b) the average number of queries to G in the same runs of IQAE. The blue line denotes the result for Algorithm 1, which does not incorporate bias mitigation, and the orange one denotes that for Algorithm 4, which includes bias mitigation

An obvious drawback of this bias mitigation method is the increase of the total number of queries to G by running an additional round. We have confirmed that, at least in our numerical experiments, this query number increase is mild. Figure 6(b) shows the average number of queries to G in the 10,000 run of Algorithm 1 and Algorithm 4 .Footnote 4 The ratio of the average query number is about 1.25 for any examined value of a. This mild increase is because the final round in Algorithm 1 is not dominant among all the rounds with respect to query number. Namely, on average, the final round accounts for about 25% of the total number of queries to G across all the rounds.

4 Summary

In this paper, we focused on the bias in IQAE, a widely studied version of QAE. We saw that the bias is enhanced for the specific values of the estimated amplitude a. The termination criterion that the estimated accuracy \(\Delta a_{i,j}\) of a falls below the predetermined accuracy ϵ is a source of the bias. Decomposing the bias into the bias conditioned by \(k_{\mathrm{fin}}\) the Grover number in the final round, we found a key factor that determines the magnitude of the bias: the distribution of the realized values of \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) in the landscape of the conditional bias. Here, \(f_{\mathrm{fin}}\) is defined as Eq. (19), and a main factor to determine the probability distribution of the IQAE estimate â and thus its bias. We found that for a such that Eq. (24) holds with small m and tiny δ, the points of realized \((k_{\mathrm{fin}},f_{\mathrm{fin}})\) are located only on a few lines in a 2D plane, and the conditional biases at the points do not tend to cancel each other so much, resulting in the large bias. We also proposed a simple bias mitigation method by re-executing the final round with the same Grover number \(k_{\mathrm{fin}}\) and shot number \(N_{\mathrm{fin}}\). We saw that the increase of the total number of queries to G is mild, about 25%.

Data Availability

The data generated and analysed during the current study can be obtained from the corresponding author upon a reasonable request.

Notes

For \(x\in \mathbb{R}\), we say that \(y\in \mathbb{R}\) is an ϵ-approximation of x if \(|y-x|\le \epsilon \).

Also in the framework of MLEQAE, some recent studies proposed setting the shot number adaptively [17].

Although \(f_{\mathrm{fin}}\) can take \(\frac{1}{2}\) for \(a=0.25\), none of the 10,000 runs ended with \(f_{\mathrm{fin}}=\frac{1}{2}\), and thus there is no point on the line \(f_{\mathrm{fin}}=\frac{1}{2}\) in Fig. 5(c).

The reason why the curves in Fig. 6(b) have several peaks is as follows. In each round in Algorithm 1, we search the next Grover number \(k_{i+1}\) by Algorithm 2, and depending on the current Grover number \(k_{i}\) and the true angle \(\theta _{a}\), this search tends to require many shots in order to narrow down the CI \([\theta ^{l}_{i,j}, \theta ^{u}_{i,j}]\) of \(\theta _{a}\) so much that \([K_{i+1}\theta ^{l}_{i,j}, K_{i+1}\theta ^{u}_{i,j}]\) lies within a single quadrant. This can happen for any \(\theta _{a}\), but for specific values of \(\theta _{a}\), the probability of this phenomenon is relatively high, which leads to the larger total query number in expectation.

Abbreviations

- QAE:

-

quantum amplitude estimation

- IQAE:

-

iterative quantum amplitude estimation

- QPE:

-

quantum phase estimation

- QMCI:

-

quantum algorithm for Monte Carlo integration

- MLE:

-

maximum likelihood estimation

- MLEQAE:

-

maximum likelihood estimation-based quantum amplitude estimation

- CI:

-

confidence interval

References

Brassard G, Hoyer P, Mosca M, Tapp A. Quantum amplitude amplification and estimation. Contemp Math. 2002;305:53.

Kitaev AY. Quantum measurements and the Abelian stabilizer problem. Electron Colloq Comput Complex. 1995;3:22.

Montanaro A. Quantum speedup of Monte Carlo methods. Proc R Soc A. 2015;471:20150301.

Rebentrost P, Gupt B, Bromley TR. Quantum computational finance: Monte Carlo pricing of financial derivatives. Phys Rev A. 2018;98:022321.

Stamatopoulos N, Egger DJ, Sun Y, Zoufal C, Iten R, Shen N, Woerner S. Option pricing using quantum computers. Quantum. 2020;4:291.

Chakrabarti S, Krishnakumar R, Mazzola G, Stamatopoulos N, Woerner S, Zeng WJ. A threshold for quantum advantage in derivative pricing. Quantum. 2021;5:463.

Miyamoto K. Bermudan option pricing by quantum amplitude estimation and Chebyshev interpolation. EPJ Quantum Technol. 2022;9:3.

Kaneko K, Miyamoto K, Takeda N, Yoshino K. Quantum pricing with a smile: implementation of local volatility model on quantum computer. EPJ Quantum Technol. 2022;9:7.

Doriguello JF, Luongo A, Bao J, Rebentrost P, Santha M. Quantum algorithm for stochastic optimal stopping problems with applications in finance. In: 17th conference on the theory of quantum computation, communication and cryptography (TQC 2022). 2022. p. 2:1–2:24.

Suzuki Y, Uno S, Raymond R, Tanaka T, Onodera T, Yamamoto N. Amplitude estimation without phase estimation. Quantum Inf Process. 2020;19:75.

Aaronson S, Rall P. Quantum approximate counting, simplified. In: Symposium on simplicity in algorithms (SOSA). 2020. p. 24–32.

Nakaji K. Faster amplitude estimation. Quantum Inf Comput 2020;20:1109–23. https://doi.org/10.26421/QIC20.13-14-2.

Grinko D, Gacon J, Zoufal C, Woerner S. Iterative quantum amplitude estimation. npj Quantum Inf. 2021;7:52.

Tanaka T, Suzuki Y, Uno S, Raymond R, Onodera T, Yamamoto N. Amplitude estimation via maximum likelihood on noisy quantum computer. Quantum Inf Process. 2021;20:293.

Giurgica-Tiron T, Kerenidis I, Labib F, Prakash A, Zeng W. Low depth algorithms for quantum amplitude estimation. Quantum. 2022;6:745.

Uno S, Suzuki Y, Hisanaga K, Raymond R, Tanaka T, Onodera T, Yamamoto N. Modified Grover operator for quantum amplitude estimation. New J Phys. 2021;23:083031.

Wada K, Fukuchi K, Yamamoto N. Quantum-enhanced mean value estimation via adaptive measurement. 2022. https://doi.org/10.48550/arXiv.2210.15624.

Tanaka T, Uno S, Onodera T, Yamamoto N, Suzuki Y. Noisy quantum amplitude estimation without noise estimation. Phys Rev A. 2022;105:012411.

Callison A, Browne DE. Improved maximum-likelihood quantum amplitude estimation. 2022. https://doi.org/10.48550/arXiv.2209.03321.

Fukuzawa S, Ho C, Irani S, Zion J. Modified iterative quantum amplitude estimation is asymptotically optimal. In: 2023 proceedings of the symposium on algorithm engineering and experiments (ALENEX). 2023. p. 135–47.

Lu X, Lin H. Random-depth quantum amplitude estimation. 2023. https://doi.org/10.48550/arXiv2301.00528.

Cornelissen A, Hamoudi Y. A sublinear-time quantum algorithm for approximating partition functions. In: Proceedings of the 2023 annual ACM-Siam symposium on discrete algorithms (SODA). 2023. p. 1245–64.

Jerrum MR, Valiant LG, Vazirani VV. Random generation of combinatorial structures from a uniform distribution. Theor Comput Sci. 1986;43:169.

Acknowledgements

Not applicable.

Funding

The author is supported by MEXT Quantum Leap Flagship Program (MEXT Q-LEAP) Grant no. JPMXS0120319794, JSPS KAKENHI Grant no. JP22K11924, and JST COI-NEXT Program Grant No. JPMJPF2014.

Author information

Authors and Affiliations

Contributions

KM as the sole author of the manuscript, conceived, designed, and performed the analysis; he also wrote and reviewed the paper. The author read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

The author has approved the publication. The research in this work did not involve any human, animal or other participants.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miyamoto, K. On the bias in iterative quantum amplitude estimation. EPJ Quantum Technol. 11, 42 (2024). https://doi.org/10.1140/epjqt/s40507-024-00253-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1140/epjqt/s40507-024-00253-x