Abstract

We introduce the Real Quantum Amplitude Estimation (RQAE) algorithm, an extension of Quantum Amplitude Estimation (QAE) which is sensitive to the sign of the amplitude. RQAE is an iterative algorithm which offers explicit control over the amplification policy through an adjustable parameter. We provide a rigorous analysis of the RQAE performance and prove that it achieves a quadratic speedup, modulo logarithmic corrections, with respect to unamplified sampling. Besides, we corroborate the theoretical analysis with a set of numerical experiments.

1 Introduction, motivation and main results

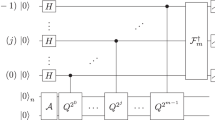

Quantum Amplitude Estimation (QAE) is an algorithm which retrieves information stored in the amplitude of a quantum state. It is argued to have a quadratic speedup over simple repeated sampling of the quantum state. For this reason, QAE is a central subroutine in quantum computation for various applications, e.g. in chemistry [1, 2], finance [3–5], and machine learning [6, 7]. The original QAE algorithm [8] is built by composing Quantum Phase Estimation (QPE) [9] and Grover’s algorithm [10]. Standard QPE relies on a Quantum Fourier Transform (QFT), which is very demanding in terms of computational resources, especially in the Noisy Intermediate-Scale Quantum (NISQ) era.

Several approaches have been proposed to reduce the resources needed by QAE, both in terms of qubits and circuit depth, while approximately preserving the same speedup. These approaches can be broadly categorized into three families.

1. The first family consists of techniques which take advantage of classical post-processing. As an example, in [11] the authors show how to replace QPE by a set of Grover iterations combined with a Maximum Likelihood Estimation (MLE) post-processing algorithm. To correctly assess the overall performance of such techniques, one needs to include the overhead due to the classical post-processing in the total cost of the algorithm, which diminishes the potential speedup. Moreover, at the time of writing, no rigorous proof of the correctness of the proposed algorithms has been given.

2. The second family includes strategies which still rely on phase estimation, but eliminate the need for a QFT. The main idea is to replace the QFT with Hadamard tests [12]. This variation of QPE was first suggested by Kitaev [13] and is called “iterative phase estimation”. In papers following this approach, such as [12], it is not even clear how to control the accuracy of the algorithm other than possibly increasing the number of measurements. Besides, they do not give a rigorous proof of the correctness of the algorithm.

3. The methods belonging to the third and last family are based entirely on Grover iterations and do not require any post-processing. The main difference among the algorithms of this family is in the amplification policy. Representative examples of this approach are the Iterative Quantum Amplitude Estimation (IQAE) and the Quantum Amplitude Estimation Simplified (QAES) algorithms [14, 15]. Both provide rigorous proofs of the correctness of the techniques. Although the strategy described in [15] achieves the desired asymptotic complexity exactly (i.e. without logarithmic factors), the constants involved are very large, and likely to render the algorithm impractical. The strategy in [14] does not exactly match the desired asymptotic complexity, yet the constants involved are much lower.

RQAE can be thought of as a generalization of the Quantum Coin algorithm [16, 17] and it is based on an iterative strategy, like [14, 15]. In particular, RQAE utilizes a set of auxiliary amplitudes which allow one to shift, in a controlled fashion, the amplitude to be retrieved. Such a shift can be easily and efficiently implemented following the methods presented in [18]. Relying on this, we propose a specific strategy to iteratively choose the amplification factor k (i.e. the Grover exponent) and the shift b at each iteration, progressively improving the estimation of the quantum amplitude to be retrieved, i.e. the target amplitude.

We prove a set of tight bounds for the RQAE algorithm. Moreover, the bounds for RQAE depend on a free parameter q which directly controls the amplification policy. More specifically, the parameter q is a lower bound for the ratio between the amplifications of consecutive steps:

$$ q \leq \frac {2k_{i+1}+1}{2k_{i}+1}. $$(1)

The parameter q affects both the depth of the circuit and the performance (in terms of calls to the oracle) of the algorithm, thus offering a handle to discuss the trade-off between the two.

The other feature that distinguishes RQAE from alternative amplitude estimation algorithms is the possibility of recovering the sign of the amplitude to be retrieved, hence the name Real Quantum Amplitude Estimation (RQAE). Concretely, RQAE is a parametric algorithm that depends on a real input amplitude \(b_{1}\), which provides a reference through which we can unambiguously assign a phase to every other amplitude in the quantum register. Then, when referring to the sign of an amplitude, we mean the relative phase between such amplitude and \(b_{1}\). As the notation already suggests, \(b_{1}\) is the shift mentioned above for the first iteration. The new sensitivity to the relative sign of an amplitude allows one to tackle a wider variety of problems that are inaccessible to standard algorithms.

The remainder of this paper is organized as follows. Section 2 introduces the intuition behind the construction of the algorithm. In Sect. 3 we state some theoretical results on the performance of the algorithm for a specific set of parameters (for the rigorous proof see Appendix A). Moreover, we confirm the theoretical properties with a set of simulated experiments. To conclude, we discuss the results and related open questions in Sect. 4.

2 Real quantum amplitude estimation

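Consider a one-parameter family of oracles \(\mathcal{A}_{b}\) that, acting on the state \(\mathopen{\lvert }0\mathclose{\rangle }\), yield

$$ \mathcal{A}_{b}\mathopen{\lvert }0\mathclose{\rangle } = \mathopen{\lvert }\psi \mathclose{\rangle } = (a+b)\mathopen{\lvert }\phi \mathclose{\rangle }+\sqrt{1-(a+b)^{2}}\,\mathopen{\lvert }\phi ^{\perp}\mathclose{\rangle }_{b}, $$(2)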

where a is a real number, b is an auxiliary, continuous and real parameter that we call “shift”, and \(\mathopen{\lvert }\phi \mathclose{\rangle }\) is a specified direction in the Hilbert space \({\mathcal{H}}\). The RQAE algorithm estimates the amplitude a by exploiting the possibility of tuning the shift b iteratively. The ket \(\mathopen{\lvert }\psi \mathclose{\rangle }\) belongs to the plane \(\Pi _{b} = \text{span}\{\mathopen{\lvert }\phi \mathclose{\rangle },\mathopen{\lvert }\phi ^{\perp}\mathclose{\rangle }_{b}\} \subset { \mathcal{H}}\) for which the kets \(\mathopen{\lvert }\phi \mathclose{\rangle }\) and \(\mathopen{\lvert }\phi ^{\perp}\mathclose{\rangle }_{b}\) provide an orthonormal basis. Note that all the quantities with a sub-index b depend on the actual value of the shift. In practice, the construction of oracles such as \(\mathcal{A}_{b}\) from a given un-shifted oracle \(\mathcal{A}\) is generally not difficult. In most cases, a controlled shift of an amplitude can be efficiently implemented via Hadamard gates and some controlled operations. We give an example of how to build such a shifted oracle in Appendix B. In particular, its implementation is straightforward in the framework described in [18].

Given a precision level ϵ and a confidence level \(1-\gamma \), the goal of the algorithm is to compute an interval \((a_{I}^{\min},a_{I}^{\max}) \subset [-1,1]\) of width smaller than 2ϵ which contains the value of a with probability greater than or equal to \(1-\gamma \) (see Fig. 1). Here, I denotes the number of iterations needed to achieve the prescribed accuracy. We take as a representative of the interval its center, \(a_{I} = \frac{a_{I}^{\min}+a_{I}^{\max}}{2}\), thus admitting a maximum error of ϵ:

$$ \mathbb{P} \bigl[ \vert a-a_{I} \vert \geq \epsilon \bigr]\leq \gamma . $$(3)

Fig. 1: a is the target amplitude to be estimated, 2ϵ is the width of the estimation interval with bounds \((a_{I}^{\min},a_{I}^{\max})\), and \(a_{I}\) is the center of the confidence interval. In the image we distinguish three possibilities to emphasize that the algorithm described below is sensitive to the sign of a.

It is convenient to express the amplitudes in terms of their corresponding angles, that is, we consider the generic mapping \(\theta _{x} = \arcsin (x)\) for any real amplitude x. Note that the angle representation is particularly suited to describe Grover amplifications, which indeed admit an interpretation as rotations in the plane \(\Pi _{b}\). As an example, the state \(\mathopen{\lvert }\psi \mathclose{\rangle }\) given in (2) can be written as

$$ \mathopen{\lvert }\psi \mathclose{\rangle } = \sin (\theta _{a+b} )\mathopen{\lvert }\phi \mathclose{\rangle }+\cos (\theta _{a+b} )\mathopen{\lvert }\phi ^{\perp}\mathclose{\rangle }_{b}, $$(4)

where \(\theta _{a+b}\) represents a rotation in the plane \(\Pi _{b}\) defined above. Throughout the paper, we will be changing back and forth from the representation in terms of the actual amplitude or its associated angle whenever needed. To avoid notational clutter, we henceforth drop the sub-index b on the perpendicular ket, leaving its dependence on the shift as understood. Actually, such dependence does not play any role for the algorithm.

In the following subsections we address the details of the procedure describing all the steps contained in each iteration.

2.1 First iteration: estimating the sign

This step achieves a first estimation of the bounds of the confidence interval \((a_{1}^{\min},a_{1}^{\max})\). Normally, this estimation would not be sensitive to the sign of the underlying amplitude because, when sampling from a quantum state, we obtain the square of the amplitude. Nevertheless, taking advantage of the shift b we can circumvent this limitation. In order to compute the sign, we will combine two different pieces of information: the result of measuring the two oppositely shifted states \(\mathopen{\lvert }\psi _{1}\mathclose{\rangle }_{\pm}\) defined as:

$$ \mathopen{\lvert }\psi _{1}\mathclose{\rangle }_{\pm} = \mathcal{A}_{\pm b_{1}}\mathopen{\lvert }0\mathclose{\rangle } = (a\pm b_{1})\mathopen{\lvert }\phi \mathclose{\rangle }+\sqrt{1-(a\pm b_{1})^{2}}\,\mathopen{\lvert }\phi ^{\perp}\mathclose{\rangle }, $$(5)

for an arbitrary real shift \(b_{1}\). The sign of \(b_{1}\) has to be decided at the start of the algorithm to have a clear reference. In practice, in some setups it is possible to measure both states at the same time by taking advantage of Hadamard gates, as in the quantum arithmetic techniques discussed in [18] (more details are given in Appendix B). As a and \(b_{1}\) are real numbers, we have the identity:

$$ (a+b_{1})^{2}-(a-b_{1})^{2} = 4ab_{1}, $$(6)

and we can build a first empirical estimation \(\hat{a}_{1}\) of a as follows:

$$ \hat{a}_{1} = \frac {\hat{p}_{\text{sum}}-\hat{p}_{\text{diff}}}{4b_{1}}, $$(7)

where \(\hat{p}_{\text{sum}}\) and \(\hat{p}_{\text{diff}}\) are the empirical probabilities of getting \(\mathopen{\lvert }\phi \mathclose{\rangle }\) when measuring \(\mathopen{\lvert }\psi _{1}\mathclose{\rangle }_{+}\) and \(\mathopen{\lvert }\psi _{1}\mathclose{\rangle }_{-}\), respectively. Throughout the paper, when we measure, we will use p̂ to denote the empirical probability obtained from direct sampling. As an example, if in iteration i we sample the state \(N_{i}\) times, getting \(\mathopen{\lvert }\phi \mathclose{\rangle }\) as an outcome \(\hat{N}_{i}\) times, the estimated probability of \(\mathopen{\lvert }\phi \mathclose{\rangle }\) will be \(\hat{p}_{i} = \frac {\hat{N}_{i}}{N_{i}}\).
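As a minimal classical sketch of this sign-recovery step, the snippet below inverts the identity \((a+b_{1})^{2}-(a-b_{1})^{2} = 4ab_{1}\) to recover a signed amplitude. It assumes exact probabilities in place of the empirical frequencies \(\hat{p}_{\text{sum}}\) and \(\hat{p}_{\text{diff}}\); the function names `shifted_probabilities` and `estimate_signed_amplitude` are hypothetical and introduced only for illustration.

```python
def shifted_probabilities(a: float, b1: float) -> tuple[float, float]:
    """Exact probabilities of |phi> for the states shifted by +b1 and -b1."""
    p_sum = (a + b1) ** 2
    p_diff = (a - b1) ** 2
    return p_sum, p_diff


def estimate_signed_amplitude(p_sum: float, p_diff: float, b1: float) -> float:
    """Invert (a + b1)^2 - (a - b1)^2 = 4 * a * b1 for a."""
    if b1 == 0:
        raise ValueError("the reference shift b1 must be non-zero")
    return (p_sum - p_diff) / (4 * b1)


# A negative amplitude is recovered with its sign (relative to b1 > 0).
p_sum, p_diff = shifted_probabilities(a=-0.3, b1=0.5)
a_hat = estimate_signed_amplitude(p_sum, p_diff, b1=0.5)
```

With sampled frequencies instead of exact probabilities, the same formula applies, and the statistical error on each probability propagates to the estimate of a.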

From (6) and (7), we can obtain a first confidence interval:

where the max and the min operations are introduced because we know a priori that probabilities are bounded between 0 and 1. The assignment of an error \(\epsilon ^{p}_{1}\) to the empirical result \(\hat{p}_{1}\) relies on a statistical analysis and depends on the employed statistical bound, such as the Chebyshev, Chernoff (Hoeffding) or Clopper-Pearson bounds. Here one of the main differences with respect to the other algorithms in the literature becomes obvious: although the probabilities are bounded between 0 and 1, the estimated amplitude obtained from (7) satisfies \(-1\leq a_{1}\leq 1\), that is, it can be negative (see Fig. 2). Note that the sign of the amplitude depends on the sign of \(b_{1}\), which is taken to be positive for simplicity. However, this choice is arbitrary and \(b_{1}\) could equally be chosen negative.

Fig. 2: Left: the dot corresponds to a, namely the amplitude to be estimated; \(a_{1}^{\min}\) and \(a_{1}^{\max}\) define the confidence interval whose width is \(2\epsilon _{1}\). Right: the same confidence interval represented in terms of angles. Note that the “true value” (represented by either a or \(\theta _{a}\)) falls in a generic position within the confidence interval. To avoid clutter, here we are not representing the central value of the confidence interval.

2.2 Following iterations: amplifying the probability and shrinking the interval

On consecutive iterations, given an input confidence interval \((a^{\min}_{i},a^{\max}_{i})\) (see Fig. 3a) we want to obtain a tighter one \((a^{\min}_{i+1},a^{\max}_{i+1})\) and iterate the process until the desired precision ϵ is reached. At each iteration, the process for narrowing the interval starts by choosing a new shift according to

$$ b_{i+1} = -a^{\min}_{i}. $$(9)

This choice is not unique. Again, we could have chosen \(b_{i+1} = -a^{\max}_{i}\). Always keep in mind that the phase that we are obtaining is relative to the original value of \(b = b_{1}\). Considering the choice (9), we force our lower bound to match exactly zero (see Fig. 3b). The boundaries of the confidence interval \((a^{\min}_{i},a^{\max}_{i})\), when shifted and then expressed in terms of the corresponding angles, become:

$$\begin{aligned} \alpha _{i}^{\min} &= \arcsin \bigl(a^{\min}_{i}+b_{i+1} \bigr) = 0, \\ \alpha _{i}^{\max} &= \arcsin \bigl(a^{\max}_{i}+b_{i+1} \bigr) = \arcsin \bigl(a^{\max}_{i}-a^{\min}_{i} \bigr). \end{aligned}$$(10)

The angular region \(\alpha _{i}^{\min} \leq \alpha _{i} \leq \alpha _{i}^{\max}\) represents the confidence interval and we refer to it as the confidence fan.

Fig. 3: Graphical representation of the steps performed in the \(i+1\) iteration. The solid grey line represents the unknown target value (which is not necessarily the center of the confidence interval). The dashed lines represent the bounds obtained at the i-th step, while the dotted lines represent the bounds obtained at step \(i+1\).

The next step takes advantage of the Grover operator, defined as

where

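and \(\mathcal{A}_{b}\) is the oracle defined in (2). The Grover operator applied \(k_{i+1}\) times transforms the generic angle θ into \((2k_{i+1}+1)\theta \), see Fig. 3c. Hence, the state \(\mathopen{\lvert }\psi _{i+1}\mathclose{\rangle }_{+}\) is transformed to:

$$\begin{aligned} {\mathcal{G}}^{k_{i+1}}\mathopen{\lvert }\psi _{i+1}\mathclose{\rangle }_{+} ={}& \sin \bigl( (2k_{i+1}+1)\theta _{a+b_{i+1}} \bigr)\mathopen{\lvert }\phi \mathclose{\rangle }+\cos \bigl( (2k_{i+1}+1)\theta _{a+b_{i+1}} \bigr)\mathopen{\lvert }\phi ^{\perp}\mathclose{\rangle } \\ ={}& \sin \bigl( (2k_{i+1}+1)\theta _{i+1} \bigr)\mathopen{\lvert }\phi \mathclose{\rangle }+\cos \bigl( (2k_{i+1}+1)\theta _{i+1} \bigr)\mathopen{\lvert }\phi ^{\perp}\mathclose{\rangle }, \end{aligned}$$(13)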

where the sub-index “+” is employed as in (5), \({\mathcal{G}}^{k_{i+1}}\) indicates the Grover operator applied \(k_{i+1}\) times and, in the second equality, we use (9). Moreover, in (13) we have implicitly defined the angle \(\theta _{i+1} \equiv \arcsin (a-a_{i}^{\text{min}} )\) for which we will use the synonym notation \(\theta _{a-a_{i}^{\text{min}}}=\theta _{i+1}\), according to convenience of presentation.
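The rotation picture can be checked numerically with a small sketch restricted to the two-dimensional plane spanned by \(\mathopen{\lvert }\phi \mathclose{\rangle }\) and \(\mathopen{\lvert }\phi ^{\perp}\mathclose{\rangle }\). It assumes the standard form of the Grover operator (a sign flip of the \(\mathopen{\lvert }\phi \mathclose{\rangle }\) component followed by a reflection about the initial state), which is a convention chosen here for illustration; the helper names `grover_step` and `amplify` are hypothetical.

```python
import math


def grover_step(state, psi):
    """Apply one Grover operator in the (|phi>, |phi_perp>) plane.

    Both arguments are 2-tuples of real components (phi, phi_perp).
    """
    x, y = state
    # Reflection 1 - 2|phi><phi|: flip the sign of the |phi> component.
    x = -x
    # Reflection 2|psi><psi| - 1 about the initial state |psi>.
    overlap = psi[0] * x + psi[1] * y
    return (2 * overlap * psi[0] - x, 2 * overlap * psi[1] - y)


def amplify(amplitude, k):
    """Return the |phi> component after k Grover steps applied to
    sin(theta)|phi> + cos(theta)|phi_perp>, with theta = arcsin(amplitude)."""
    theta = math.asin(amplitude)
    psi = (math.sin(theta), math.cos(theta))
    state = psi
    for _ in range(k):
        state = grover_step(state, psi)
    return state[0]


# Three Grover steps turn the angle theta into (2*3 + 1) * theta.
amplified = amplify(0.1, k=3)
expected = math.sin((2 * 3 + 1) * math.asin(0.1))
```

Each step composes two reflections and therefore rotates the state by twice the initial angle, which is why k steps produce the factor \(2k+1\).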

In order to avoid ambiguities due to the lack of a bijective correspondence angle/amplitude, when measuring amplified probabilities, we cannot allow the amplified angles to go beyond \([0,\frac {\pi}{2} ]\). Namely, we need the amplified confidence fan to stay within the first quadrant. Relying on (10), we choose the Grover amplification exponent as:

$$ k_{i+1} = \biggl\lfloor \frac {\pi}{4\alpha _{i}^{\max}}-\frac {1}{2} \biggr\rfloor , $$(14)

so that we maximize the amplification factor while respecting the angle constraint.
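The first-quadrant constraint \((2k+1)\alpha ^{\max} \leq \frac {\pi}{2}\) can be turned into a short sketch that returns the largest admissible exponent. The function name `grover_exponent` is hypothetical, and the sketch ignores any additional cap on the amplification that a full implementation may impose.

```python
import math


def grover_exponent(alpha_max: float) -> int:
    """Largest k >= 0 with (2k + 1) * alpha_max <= pi / 2."""
    if not 0 < alpha_max <= math.pi / 2:
        raise ValueError("alpha_max must lie in (0, pi/2]")
    return math.floor(math.pi / (4 * alpha_max) - 0.5)


# Confidence fan of a shifted interval (0, 0.02).
alpha_max = math.asin(0.02)
k = grover_exponent(alpha_max)
```

One more Grover step than the returned value would push the upper edge of the fan past \(\frac {\pi}{2}\), where the map from angle to probability stops being one-to-one.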

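Now, we measure the state \(\mathopen{\lvert }\psi _{i+1}\mathclose{\rangle }\) in the amplified space, obtaining the empirical probability

$$ \hat{p}_{i+1}\approx \sin ^{2} \bigl( (2k_{i+1}+1)\theta _{i+1} \bigr), $$(15)

with the statistical error \(\epsilon ^{p}_{i+1}\), and define:

$$\begin{aligned} p^{\max}_{i+1} &= \min \bigl(\hat{p}_{i+1}+\epsilon ^{p}_{i+1},1 \bigr), \\ p^{\min}_{i+1} &= \max \bigl(\hat{p}_{i+1}-\epsilon ^{p}_{i+1},0 \bigr), \end{aligned}$$(16)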

where the max and min functions play an analogous role as in Sect. 2.1 (see Fig. 3d).

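In the next step we transform the angles corresponding to \(p^{\max}_{i+1}\) and \(p^{\min}_{i+1}\) to the non-amplified space:

$$ \theta ^{\max}_{i+1} = \frac {\arcsin \bigl(\sqrt{p^{\max}_{i+1}} \bigr)}{2k_{i+1}+1},\qquad \theta ^{\min}_{i+1} = \frac {\arcsin \bigl(\sqrt{p^{\min}_{i+1}} \bigr)}{2k_{i+1}+1}. $$(17)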

In other words, we have just “undone” the amplification (see Fig. 3e).

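Finally, we have to undo the shift (9), actually performing an opposite shift (see Fig. 3f). Using definitions analogous to those given in (8), we finally obtain:

$$ a^{\max}_{i+1} = \sin \bigl(\theta ^{\max}_{i+1} \bigr)-b_{i+1},\qquad a^{\min}_{i+1} = \sin \bigl(\theta ^{\min}_{i+1} \bigr)-b_{i+1}. $$(18)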

Recall that the goal is to reduce the width of the confidence interval until the desired precision ϵ is reached. To this end, one has to repeat the iteration just described until the goal is met.
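The classical post-processing of one iteration (clip the probability bounds, undo the amplification, undo the shift) can be sketched as follows. This is an illustration under simplifying assumptions: the measured probability is replaced by its exact value, the statistical error `eps_p` is supplied by hand, and the name `shrink_interval` is hypothetical.

```python
import math


def shrink_interval(a_min, p_hat, eps_p, k):
    """Map an amplified measurement back to bounds on the amplitude a.

    a_min is the previous lower bound (the shift is b = -a_min),
    p_hat the measured probability after k Grover steps,
    eps_p its statistical error.
    """
    shift = -a_min
    # Clip the probability bounds to [0, 1].
    p_max = min(p_hat + eps_p, 1.0)
    p_min = max(p_hat - eps_p, 0.0)
    # Undo the amplification: divide the angle by (2k + 1).
    theta_max = math.asin(math.sqrt(p_max)) / (2 * k + 1)
    theta_min = math.asin(math.sqrt(p_min)) / (2 * k + 1)
    # Undo the shift.
    return math.sin(theta_min) - shift, math.sin(theta_max) - shift


# Noise-free illustration: true a = 0.3, previous interval (0.2, 0.4), k = 3.
a_true, a_min, k = 0.3, 0.2, 3
p_exact = math.sin((2 * k + 1) * math.asin(a_true - a_min)) ** 2
new_min, new_max = shrink_interval(a_min, p_exact, eps_p=0.05, k=k)
```

In this example the interval width drops from 0.2 to roughly 0.014 while still containing the true amplitude, which illustrates how the factor \(2k+1\) compresses the statistical error when the amplification is undone.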

Remark 2.1

Throughout this section we have addressed the general structure of the algorithm. Nevertheless, we have not specified the values of all the parameters involved. More specifically, we have not discussed how \(\epsilon ^{p}_{i}\) is obtained. This parameter strictly depends on the number of shots on each iteration, \(N_{i}\), and the confidence required on each iteration, \(1-\gamma _{i}\), through a set of bounds such as Hoeffding’s inequality or Clopper-Pearson bound (see [19, 20]). In Sect. 3, more insight about these choices is provided.
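For concreteness, the dependence of the statistical error on the number of shots and the confidence level can be sketched with Hoeffding's inequality, \(\mathbb{P} [ \vert \hat{p}-p \vert \geq \epsilon ]\leq 2e^{-2N\epsilon ^{2}}\). The function names `hoeffding_error` and `hoeffding_shots` are hypothetical; other bounds, such as Clopper-Pearson, would give different numbers.

```python
import math


def hoeffding_error(shots: int, gamma: float) -> float:
    """Half-width eps with P[|p_hat - p| >= eps] <= gamma for `shots` samples."""
    return math.sqrt(math.log(2.0 / gamma) / (2.0 * shots))


def hoeffding_shots(eps: float, gamma: float) -> int:
    """Smallest number of shots guaranteeing half-width eps at confidence 1 - gamma."""
    return math.ceil(math.log(2.0 / gamma) / (2.0 * eps ** 2))


# Error assigned to a probability estimated from 1000 shots at 95% confidence.
eps_p = hoeffding_error(shots=1000, gamma=0.05)
```

The two helpers are inverses of each other up to rounding, which makes the trade-off explicit: halving the error requires four times as many shots.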

3 RQAE: configuration and properties

As mentioned before, in order to complete the RQAE method, we need to incorporate a particular choice for the parameter \(\epsilon ^{p}_{i}\) and, thus, the parameters involved in its computation, i.e., \(N_{i}\) and \(1-\gamma _{i}\), the number of shots and confidence level of the i-th iteration, respectively. With the aim of being able to theoretically characterize the algorithm, we propose to take, ∀i, the following constant values:

where

Once the free parameters are selected, the RQAE is completed and ready to be used. Of course, other choices can be made. In Algorithm 1, the RQAE algorithm is schematically described. Note that our selection of the parameters is uniquely determined by the input quantities q, ϵ and γ. As we will see in Sect. 3.1, the choice considered here presents several interesting properties.

3.1 Properties

Given the proposed configuration in Algorithm 1, the RQAE algorithm presents several properties which are listed in the next theorem.

Theorem 3.1

Given ϵ, γ and q, and taking the parameters:

with

then,

1. The error at each iteration is bounded by:

$$ \epsilon ^{p}_{i}\leq \epsilon ^{p}. $$(24)

2. We get the amplification policy:

$$ q_{i} = \frac {2k_{i+1}+1}{2k_{i}+1}\geq q. $$(25)

3. The depth of the circuit is bounded by:

$$ k_{I}\leq \biggl\lceil \frac {1}{2} \frac {\arcsin (\sqrt{2\epsilon ^{p}} )}{\arcsin (2\epsilon )}- \frac {1}{2} \biggr\rceil =k^{\max}. $$(26)

4. The algorithm finishes before \(T(q,\epsilon )\) iterations:

$$ T>I. $$(27)

5. The algorithm obtains a precision ϵ with confidence \(1-\gamma \) (Proof of Correctness):

$$ \mathbb{P} \bigl[a\notin \bigl(a^{\min}_{I},a^{\max}_{I} \bigr) \bigr]\leq \gamma . $$(28)

6. The total number of calls to the oracle is bounded by:

$$\begin{aligned} N_{\textit{oracle}}< {}& \frac {1}{\sin ^{4} \left( \frac {\pi}{2(q+2)} \right) }\log \left[ \frac {2\sqrt{e}\log _{q} \left( \frac {q^{2}\pi}{2(q+2)\arcsin (2\epsilon )} \right) }{\gamma} \right] \\ &{}\cdot \biggl(\frac {\pi}{2(q+2)\arcsin (2\epsilon )}+2 \biggr) \biggl(1+ \frac {q}{q-1} \biggr) . \end{aligned}$$(29)

The proof of Theorem 3.1 can be found in Appendix A.
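To get a feel for the size of these bounds, the following sketch evaluates the right-hand sides of (26) and (29) numerically. It assumes that the outer `log` in (29) is the natural logarithm, takes \(\epsilon ^{p}\) as an explicit input to (26), and uses the hypothetical names `max_depth` and `oracle_call_bound`.

```python
import math


def max_depth(eps_p: float, eps: float) -> int:
    """Bound (26) on the last Grover exponent k_I."""
    ratio = math.asin(math.sqrt(2 * eps_p)) / math.asin(2 * eps)
    return math.ceil(0.5 * ratio - 0.5)


def oracle_call_bound(q: float, eps: float, gamma: float) -> float:
    """Right-hand side of bound (29); log is assumed to be the natural logarithm."""
    angle = math.pi / (2 * (q + 2))
    target = math.asin(2 * eps)
    rounds = math.log(q ** 2 * angle / target, q)  # logarithm in base q
    prefactor = 1.0 / math.sin(angle) ** 4
    log_term = math.log(2 * math.sqrt(math.e) * rounds / gamma)
    return prefactor * log_term * (angle / target + 2) * (1 + q / (q - 1))


# Tightening the precision by a factor of 10 at fixed q and confidence.
coarse = oracle_call_bound(q=2, eps=1e-2, gamma=0.05)
fine = oracle_call_bound(q=2, eps=1e-3, gamma=0.05)
```

For these inputs the bound grows by a factor close to 10 rather than 100, in line with a quadratic speedup up to logarithmic corrections.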

The first important feature is the fifth property, where we see that the algorithm achieves the desired precision ϵ with confidence at least \(1-\gamma \), which was our goal in the beginning (see Equation (3)). Property number two justifies why we call the parameter q the “amplification policy”: q is indeed the minimum ratio between the amplification of an iteration and that of the previous one, thus controlling the amplification policy. The depth of the circuit is intimately related to the amplification policy. In property number three we have a clear bound \(k^{\max}\) for the depth. To get a clearer idea of this bound, in Fig. 4 we depict the maximum depth of the circuit \(k^{\max}\) in terms of the precision ϵ for different amplification policies q. As we see, the depth decreases with q, but at the cost of increasing the number of shots at each iteration (see Equation (19)). This directly leads to the question of which option costs less overall: performing more shots per iteration with fewer iterations (and thus less circuit depth), or performing fewer shots per iteration at the cost of increasing the total number of iterations. This question is answered by the sixth property, where we have a bound for the total number of calls to the oracle in terms of the required precision ϵ, the confidence \(1-\gamma \) and the amplification policy q. To facilitate the interpretation of the expression, in Fig. 5 we represent the theoretical number of calls to the oracle in terms of the precision ϵ for different amplification policies q and a fixed confidence level of \(1-\gamma = 0.95\). The dash-dotted line, the black solid thin line and the gray solid thin lines are there for comparison purposes. We know that, without amplification, the number of executions of the circuit needed to achieve a precision ϵ grows as:

$$ N\sim \frac {1}{\epsilon ^{2}}, $$

as it is given by classical bounds such as Chebyshev or Clopper-Pearson. A quadratic speedup would then be obtaining the same precision with a total number of oracle calls:

$$ N_{\textit{oracle}}\sim \frac {1}{\epsilon}. $$

As we see in Fig. 5 the number of calls needed in our method to achieve a precision ϵ grows approximately as \(1/\epsilon \). This means that we have approximately achieved a quadratic speedup compared with unamplified sampling. Furthermore, we see that it is more efficient in terms of the total number of calls to the oracle to use lower amplification policies.

In general, the appropriate amplification policy depends on each specific case. On the one hand, the optimal q depends both on the precision ϵ and the confidence level \(1-\gamma \). On the other hand, in real hardware it could be more interesting to choose higher values of q, as we cannot run arbitrarily long circuits.

Last, we have depicted the theoretical bound for the IQAE algorithm which, to our knowledge, represents the state of the art. As we see, the RQAE performance is better than that of the IQAE for some values of q. Note that the comparison with IQAE is not direct since IQAE estimates the probability whereas RQAE estimates the amplitude. However, in Appendix C we show that, under some weak assumptions, these bounds are comparable.

3.2 Empirical performance

The sixth property of Theorem 3.1 gives us an upper bound on the number of calls to the oracle. However, it is always interesting to see how this theoretical bound compares with a real execution of the algorithm. Using the Quantum Learning Machine (QLM) developed by Atos we build a circuit with a total of 5 qubits and we estimate one of the amplitudes encoded in the circuit with our method. Our algorithm is executed with a confidence level of \(1-\gamma = 0.95\) and different amplification policies \(q \in \{2,10,20\}\). For each level of precision ϵ we perform 100 experiments. As we see in Fig. 6, the theoretical bound for the total number of calls to the oracle is respected by the experiments and it is not too loose. Furthermore, the empirical behavior of \(N_{\text{oracle}}\) with respect to ϵ also follows the qualitative trend expected theoretically.

Fig. 6: Number of calls to the oracle \(N_{\text{oracle}}\) versus the required precision ϵ. The picture is in a log-log scale with the x-axis inverted. The experimental points (circles, squares and diamonds) represent the mean number of oracle calls obtained in the experiments, while the error bars stretch from the maximum to the minimum value obtained in the same experiments. The lines are the corresponding theoretical bounds from Equation (29).

In Fig. 7 we see the empirical results and the theoretical bounds for the amplification in the last iteration and the number of rounds performed. To explain the behaviour we will focus on the results for \(q = 20\). The first five points correspond to \(I = 1\), as we can see in the right picture. That is the same as saying that for the first five points \(k_{I} = 0\). As the y-axis of the left picture is in log scale we cannot see these points. This phenomenon occurs when the first estimation \(\epsilon ^{a}_{1}\) is lower than the required ϵ. In this situation the algorithm does not need to perform more than one iteration. Next, we see in the right picture that we jump to two iterations. In the left figure we see two different regimes. In the first one the amplification matches the maximum amplification \(k^{\max}\). In the second one it settles at \(k_{I} = 10\). To explain this, we first compute the minimum possible \(k_{2}\) with \(q= 20\), using \(k_{1} = 0\) in the amplification policy (25):

$$ \frac {2k_{2}+1}{2k_{1}+1}\geq q \quad \Longrightarrow \quad k_{2}\geq \frac {q-1}{2} = 9.5. $$

As \(k_{2}\) can only take natural values we conclude that \(k_{2}\) is at least 10. Now the explanation of the two regimes becomes clearer. Since the first iteration does not achieve the required precision ϵ, we need to do another iteration. In this second iteration, as \(q=20\), we are sure that \(k_{2}\) is at least 10. But this amplification is too much for the required precision, so the condition in Algorithm 1 on the maximum amplification permitted is activated, matching the value of the last amplification to that of the maximum amplification needed, \(k_{I} = k^{\max}\). This happens until we reach \(k_{2} = 10\). At this point the maximum bound on the number of amplifications of the last round exceeds the number of amplifications governed by q (\(k_{2} \approx 10\)) and the previous condition deactivates. This new number of amplifications is more than enough to attain the required precision, so the algorithm maintains the same level of amplification and the same number of rounds until the point where that number of amplifications is no longer enough to obtain the required precision. At that moment the algorithm performs another iteration and the number of amplifications of the last round is again fixed to \(k^{\max}\).
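The arithmetic behind the value 10 can be reproduced with a short sketch that solves the amplification policy \(\frac {2k_{i+1}+1}{2k_{i}+1}\geq q\) for the smallest integer \(k_{i+1}\). The function name `min_next_exponent` is hypothetical.

```python
import math


def min_next_exponent(k_prev: int, q: float) -> int:
    """Smallest integer k_next with (2*k_next + 1) / (2*k_prev + 1) >= q."""
    return math.ceil((q * (2 * k_prev + 1) - 1) / 2)


# First iteration has no amplification (k_1 = 0); policy q = 20.
k2_min = min_next_exponent(k_prev=0, q=20)
```

Here the real-valued threshold is 9.5, so the first admissible integer exponent is 10, matching the plateau described above.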

Fig. 7: In the left figure we plot the amplification of the last iteration \(k_{I}\) versus the target precision ϵ. In the right figure we plot the number of rounds I versus the target precision ϵ. The left picture is in a log-log scale with the x-axis inverted. The right picture has a logarithmic x-axis, also inverted. Each experimental point (circles and diamonds) represents the mean of the last amplification and of the number of rounds over 100 experiments. The error bars stretch from the maximum to the minimum value obtained in the same 100 experiments. The lines are the corresponding theoretical bounds from Equation (26) and Equation (27). To avoid clutter, we have omitted the results for \(q =10\).

4 Conclusion

Throughout this paper we have proposed a new methodology for estimating an amplitude encoded in a quantum circuit. This methodology depends on the possibility of defining a new class of shifted oracles which are easily built, at least in some cases of practical interest. For a suitable choice of parameters described in Algorithm 1, we have proven a set of interesting properties. They include a proof of correctness, a bound on the maximum depth of the algorithm and an upper bound on the total number of shots, hence characterizing the performance of the algorithm.

If we compare RQAE to similar algorithms in the literature such as QAE, QAES and IQAE we have three advantages and one caveat. The caveat is obviously that we are constrained by the possibility of constructing an appropriate oracle including the shift. The first advantage is that we are extracting more information from the quantum circuit than just the modulus of the amplitude. This feature can be of extreme importance for certain applications where the result can be positive or negative. The second advantage is that we can control to some extent the depth of the circuit, a crucial feature in the current NISQ era. The third advantage is that the total number of calls to the oracle is lower than that of QAES and IQAE with an appropriate choice of the amplification policy. It is true that QAES has a better order of convergence; however, the constants involved are very large, making the method infeasible for most values of ϵ used in practice (see the comparison done in [14]).

The choice of parameters considered in the present paper is not unique and alternative choices could be more efficient in terms, for example, of the total number of shots. In fact, we believe it is interesting to explore different parameter settings in the future. One such possibility consists in changing the number of shots at each iteration dynamically. This is motivated by the fact that, in the early stages, q has to be large in order to ensure that we get amplification while, as we increase the number of amplifications, we can lower the value of q while still getting amplification. In this sense, we could consider our current proposal as a “static scheme” which could be generalized to a “dynamical” or “adaptive” scheme. Moreover, since we have observed that lower values of q tend to be better in terms of the total number of calls to the oracle, a suitable dynamical strategy could further improve the performance of the method. One could even pose a more ambitious question: finding the scheme that minimizes the total number of oracle calls. The generalization to a dynamical scheme is not particularly difficult from either the conceptual or the implementation viewpoint. Nonetheless, proving rigorous theoretical bounds becomes more challenging than for the static scheme considered here.

Another interesting direction in which to extend the RQAE algorithm presented here would be that of retrieving not only the sign but the full phase of a complex amplitude. In spirit, we would then move in the opposite direction with respect to the standard QAE. Namely, instead of using the QPE to perform a QAE, we would use a QAE algorithm to define an alternative QPE algorithm. Such an extension would probably be challenging in terms of proving rigorous bounds for the performance of the algorithm.

Availability of data and materials

Not applicable.

Notes

Although there exist tighter bounds, they are much less tractable from an analytic point of view. One such example is Clopper-Pearson [20].

References

1. Knill E, Ortiz G, Somma RD. Optimal quantum measurements of expectation values of observables. Phys Rev A. 2007;75(1):012328.

2. Kassal I et al. Polynomial-time quantum algorithm for the simulation of chemical dynamics. Proc Natl Acad Sci. 2008;105(48):18681–6.

3. Rebentrost P, Gupt B, Bromley TR. Quantum computational finance: Monte Carlo pricing of financial derivatives. Phys Rev A. 2018;98(2):022321.

4. Woerner S, Egger DJ. Quantum risk analysis. npj Quantum Inf. 2019;5(1):1–8.

5. Gómez A et al. A survey on quantum computational finance for derivatives pricing and VaR. In: Archives of computational methods in engineering. 2022.

6. Wiebe N, Kapoor A, Svore K. Quantum algorithms for nearest-neighbor methods for supervised and unsupervised learning. Quantum Inf Comput. 2015;15(3):318–58.

7. Wiebe N, Kapoor A, Svore K. Quantum Perceptron Models. In: Proceedings of the 30th International Conference on Neural Information Processing Systems. 2016. p. 4006–14.

8. Brassard G et al. Quantum amplitude amplification and estimation. In: Quantum computation and information. 2002. p. 53–74.

9. Nielsen MA, Chuang IL. Quantum computation and quantum information. Phys Today. 2001;54:2.

10. Grover LK. A fast quantum mechanical algorithm for database search. In: Proceedings of the twenty-eighth annual ACM symposium on theory of computing. 1996. p. 212–9.

11. Suzuki Y et al. Amplitude estimation without phase estimation. Quantum Inf Process. 2020;19:2.

12. Wie CR. Simpler quantum counting. Quantum Inf Comput. 2019;19:11–2.

13. Kitaev AY. Quantum measurements and the Abelian Stabilizer Problem. Electron Colloq Comput Complex. 1995;TR96.

14. Grinko D et al. Iterative quantum amplitude estimation. npj Quantum Inf. 2021;7:1.

15. Aaronson S, Rall P. Quantum approximate counting, simplified. In: Symposium on simplicity in algorithms. 2020. p. 24–32.

16. Abrams DS, Williams CP. Fast quantum algorithms for numerical integrals and stochastic processes (1999).

17. Shimada NH, Hachisuka T. Quantum coin method for numerical integration. Comput Graph Forum. 2020;39:243–57.

18. Manzano A et al. A modular framework for generic quantum algorithms. Mathematics. 2022;10:785.

19. Hoeffding W. Probability inequalities for sums of bounded random variables. J Am Stat Assoc. 1963;58(301):13–30.

20. Clopper CJ, Pearson ES. The use of confidence or fiducial limits illustrated in the case of the binomial. Biometrika. 1934;26(4):404–13.

Acknowledgements

The authors would like to thank Elías Combarro, Vedran Dunjko, Andrés Gómez, Javier Más, María R. Nogueiras, Gustavo Ordóñez, Juan Santos Suárez and Carlos Vázquez for fruitful discussions on some aspects of the present work. Part of the computational resources for this project were provided by the Galician Supercomputing Center (CESGA).

Funding

All authors acknowledge the European Project NExt ApplicationS of Quantum Computing (NEASQC), funded by Horizon 2020 Program inside the call H2020-FETFLAG-2020-01 (Grant Agreement 951821). A. Manzano and Á. Leitao wish to acknowledge the support received from the Centro de Investigación de Galicia “CITIC”, funded by Xunta de Galicia and the European Union (European Regional Development Fund - Galicia 2014-2020 Program), by grant ED431G 2019/01. D. Musso acknowledges support from the Physics Department at Oviedo University (UNIOVI), the High Energy Physics Group (FPAUO) and the Institute of Space Sciences and Technologies of Asturias (ICTEA).

Author information

Contributions

All authors worked on the conceptualization of the idea. A.M. and D.M. implemented and tested the algorithms. A.M. and D.M. wrote the main manuscript text. A.M. prepared the figures. All authors reviewed the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Alberto Manzano and Daniele Musso contributed equally to this work.

Appendices

Appendix A: Proof of Theorem 3.1

Below, we prove each of the statements contained in Theorem 3.1 in order of appearance.

A.1 First proposition

When finding an empirical estimate p̂ of a probability p, we can assign to it a confidence interval (i.e. we can estimate an associated statistical error) by using Hoeffding’s inequality [19]:

$$ \epsilon _{p} = \sqrt{\frac{1}{2n}\log \left (\frac{2}{\gamma}\right )}, $$(31)

where \(\epsilon _{p}\) is the precision, \(1-\gamma \) is the confidence level and n is the number of shots (i.e. samplings) used for the measurement. As we fixed the values for \(N_{i}\) and \(\gamma _{i}\) in Equations (19) and (20), using (31) we get a fixed value for \(\epsilon ^{p}_{i}\):

Rewriting the previous expression in terms of \(\epsilon ^{p}_{i}\) we have:

where we used (19) and defined

Then, inequality (33) follows from the trivial inequality \(\lceil N \rceil \geq N\). We have thus proven the first proposition.
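The interplay between shots, confidence and precision in this proposition can be illustrated numerically. The following is a minimal sketch that assumes the standard two-sided Hoeffding bound, \(\epsilon _{p} = \sqrt{\log (2/\gamma )/(2n)}\); the function names are hypothetical, and the sketch does not reproduce the specific choices made in Equations (19) and (20). It only shows why rounding the number of shots up with a ceiling can never worsen the precision.

```python
import math


def hoeffding_precision(n_shots, gamma):
    """Half-width of the two-sided Hoeffding interval at confidence 1 - gamma."""
    return math.sqrt(math.log(2.0 / gamma) / (2.0 * n_shots))


def shots_for_precision(epsilon_p, gamma):
    """Smallest integer number of shots whose Hoeffding half-width is <= epsilon_p.

    The ceiling mirrors the argument in the text: since ceil(N) >= N,
    the precision actually achieved is at least as good as the target.
    """
    return math.ceil(math.log(2.0 / gamma) / (2.0 * epsilon_p ** 2))


# Illustrative values (assumptions, not taken from the paper).
n = shots_for_precision(epsilon_p=0.01, gamma=0.05)
achieved = hoeffding_precision(n, gamma=0.05)
print(n, achieved <= 0.01)
```

Because the number of shots is rounded up, `achieved` is never larger than the requested `epsilon_p`.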

A.2 Second proposition

By definition, we have that:

From Equation (14), this expression can be rewritten as:

We now consider the fact that \(\lfloor x \rfloor \geq x-1\) thus obtaining:

Since \(k_{i}\geq 0\), we have also

Now we focus on the term \((2k_{i}+1)\arcsin (2\epsilon _{i}^{a})\) which can be rewritten in terms of \(\epsilon ^{p}_{i}\) as

where we have used (18) and (16) after an obvious relabelling of the index. Next, we define the following functions:

and

The function f̅ is useful because:

and we employ it to bound expression (38):

As \(\epsilon ^{p}_{i}\leq \epsilon ^{p}\leq \frac {1}{2}\) then

By the definition of \(\epsilon ^{p}\) we have that:

and we have proven the second proposition. So far, we have not treated the first iteration, \(i= 1\), which we now consider explicitly:

where we have recalled that \(k_{1} = 0\). We focus our attention on the term

where we have considered (8). Following the same strategy as before we define:

An upper bound for (48) is:

which can be directly obtained from (48). Hence, from Equation (46), we have that:

where we have used

derived from (8).

Finally, by the definition of \(b_{1}\) we have:

A.3 Third proposition

Using (18), we have

Following the same reasoning as in Equation (40) we have that:

where we have used the first proposition and the definition of \(k^{\max}\). We have proven the third proposition.

A.4 Fourth proposition

In this subsection we bound the maximum number of iterations needed to achieve the target accuracy ϵ. First note that, if I represents the last iteration, we have that

otherwise the algorithm would already have stopped at iteration \(I-1\), contradicting the hypothesis that I is the last iteration. To write (55) we have used (18) with \(I=i+1\). Using arguments similar to those in the previous section, we bound \(\epsilon ^{a}_{I-1}\) by

We can rewrite (56) as

where we have used \(\epsilon ^{p}_{i}\leq \epsilon ^{p}\) and we have introduced the positive number T. Still from (57), we obtain that

Using the second proposition \(q_{i}\geq q\) we get

This means that we have an upper bound T for the number of iterations I whenever, at each new iteration, we increase the amplification \(K_{i}=2k_{i}+1\) by at least a factor q. We have thus proven the fourth proposition. Moreover, from (58) and \(q_{i}\geq q\) we have that:

A.5 Fifth proposition

We now want to ensure that the precision ϵ is met with confidence \(1-\gamma \). In order to achieve this, note that:

where we have used the definitions and the third proposition. Recall that \(1-\gamma _{i}\) represents the confidence level of a single iteration.

A.6 Sixth proposition

We want to find the maximum number of calls to the oracle needed to obtain a target precision ϵ with confidence \(1-\gamma \). Suppose that we finish after I iterations; then the number of calls to the oracle is given by

As the number of shots \(N_{i}\) of the individual iteration is constant we have that:

where we have used \(K_{1} = 1\), i.e. \(k_{1}=0\). Then, using the inequality for \(q^{T}\) in (60), we obtain

where, in order to perform the second step, we have used that \(q>1\). Developing the expression, we have

where we have used \(I\geq 1\) and \(\frac {q}{q-1}q^{T-I}> 1\). Finally, using the definition of T and denoting \(K^{\max} = 2k^{\max}+1\) we obtain:

It is straightforward to define an upper bound for the number of shots:

Thus, we can have

Finally, expressing \(\epsilon ^{p}\) in terms of q we get a bound for the number of calls to the oracle in terms of the input parameters ϵ, γ and q:

and we have proven the sixth proposition.

The proof above counts the number of calls to the oracle as \(\sum_{i=1}^{I} N_{i}k_{i}\), as is done for IQAE (see [14]). However, this only counts the calls to the Grover oracle, which is not a fair comparison with the unamplified case, where one counts the calls to the original oracle \(\mathcal{A}\). For that reason, we now derive the bound for the number of calls to the oracle \(\mathcal{A}\), counting a call to \(\mathcal{A}^{\dagger}\) as a call to \(\mathcal{A}\). The number of calls is then defined as:

where the factor \(2k_{i}\) appears because each call to the Grover oracle involves one call to \(\mathcal{A}\) and one call to \(\mathcal{A}^{\dagger}\). The term +1 accounts for the first application of the oracle (recall that we are calling the operator \(\mathcal{A}\mathcal{G}^{k}\)). In the first iteration we do not call the Grover oracle, so we only make \(N_{1}\) calls to \(\mathcal{A}\). Following the same reasoning as before, we can bound the term \(1+\sum_{i = 2}^{I-2}\prod_{j=1}^{i}q_{j}\):

Finally we see that:
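The difference between the two counting conventions discussed in this subsection can be made concrete with a short sketch. It assumes a constant number of shots per iteration, as stated above, and counts \(N_{i}k_{i}\) Grover-oracle calls and \(N_{i}(2k_{i}+1)\) calls to \(\mathcal{A}\) per iteration, the latter being inferred from the description of the \(2k_{i}\) and +1 terms. The function name and the schedule of \(k_{i}\) values are hypothetical.

```python
def count_calls(shots, ks):
    """Return (grover_calls, oracle_calls) for a schedule of Grover exponents.

    shots: number of shots per iteration (assumed constant).
    ks:    Grover exponents k_i, one per iteration, with k_1 = 0.
    """
    # Convention used in the main proof: only Grover-oracle calls are counted.
    grover_calls = sum(shots * k for k in ks)
    # Convention for a fair comparison: each Grover call costs one A and one
    # A^dagger, plus one initial application of A per shot.
    oracle_calls = sum(shots * (2 * k + 1) for k in ks)
    return grover_calls, oracle_calls


# Hypothetical schedule in which K_i = 2 k_i + 1 triples at every iteration.
grover_calls, oracle_calls = count_calls(shots=100, ks=[0, 1, 4, 13])
print(grover_calls, oracle_calls)
```

In this example the first iteration contributes no Grover calls but still contributes `shots` calls to \(\mathcal{A}\), which is why the second count is more than twice the first.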

Appendix B: Constructing the shifted states

Given an oracle \(\mathcal{G}\) such that:

we can build an oracle \(\mathcal{A}_{\theta _{b}}\) such that:

We start by applying a Hadamard gate to the auxiliary register:

Next, apply \(\mathcal{G}\) controlled in the auxiliary register:

We continue by applying a y-rotation with angle \(\theta _{b}\) controlled by the auxiliary register:

Finally, applying a Hadamard gate to the first register we get:
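The four steps above can be checked on a toy example with one auxiliary qubit and one main qubit. The following is a minimal sketch under several assumptions that are not fixed by the text: the oracle is modelled as a real rotation that maps \(\vert 0\rangle \) to \(a\vert 0\rangle +\sqrt{1-a^{2}}\vert 1\rangle \), it acts when the auxiliary qubit is 0, the y-rotation acts on the main qubit when the auxiliary qubit is 1, and the rotation convention is \(R(\theta )\vert 0\rangle = \cos \theta \vert 0\rangle + \sin \theta \vert 1\rangle \). All function names are hypothetical.

```python
import math


def ry(theta):
    """Real 2x2 rotation R with R|0> = cos(theta)|0> + sin(theta)|1> (assumed convention)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def apply_on_main(state, gate, control_value):
    """Apply a 2x2 gate to the main qubit when the auxiliary qubit equals control_value."""
    new = list(state)
    base = 2 * control_value  # state index = 2 * aux + main
    for row in range(2):
        new[base + row] = sum(gate[row][col] * state[base + col] for col in range(2))
    return new


def hadamard_on_aux(state):
    """Apply a Hadamard gate to the auxiliary qubit."""
    r = 1.0 / math.sqrt(2.0)
    new = [0.0] * 4
    for main in range(2):
        zero, one = state[main], state[2 + main]
        new[main] = r * (zero + one)
        new[2 + main] = r * (zero - one)
    return new


def shifted_amplitude(a, theta_b):
    """Amplitude of |0>_aux |0>_main after the four steps, for a toy oracle with amplitude a."""
    state = [1.0, 0.0, 0.0, 0.0]  # |0>_aux |0>_main
    state = hadamard_on_aux(state)
    state = apply_on_main(state, ry(math.acos(a)), control_value=0)  # toy oracle
    state = apply_on_main(state, ry(theta_b), control_value=1)       # shift rotation
    state = hadamard_on_aux(state)
    return state[0]


a, b = -0.3, 0.5
amplitude = shifted_amplitude(a, math.acos(b))
print(amplitude, (a + b) / 2)
```

Under these assumptions the amplitude of the marked component is \((a+\cos \theta _{b})/2\), so the sign of a survives in the measured probability once a known shift is added.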

Appendix C: Estimation of the probability

In Appendix A we have proven some bounds for the amplitudes. However, in the literature one typically finds analogous bounds proven for the probabilities (i.e. for the square of the amplitudes). In this Appendix we show that, under some restrictions, given an estimation for the amplitudes \((a_{\min},a_{\max})\) such that \(a_{\max}- a_{\min}\leq 2\epsilon \), we can build a pair of bounds for the probabilities \((p_{\min},p_{\max})\) such that \(p_{\max}- p_{\min}\leq 2\epsilon \). Hence, we can refer interchangeably to the properties of the method when estimating amplitudes or when estimating probabilities.

1. Case 1: \(a_{\max}>0\) and \(a_{\min}>0\).

We build the bounds for the probability as \(p_{\max} = a_{\max}^{2}\), \(p_{\min} = a_{\min}^{2}\).

$$ \begin{aligned}[b] p_{\max}-p_{\min} &= a_{\max}^{2}-a_{\min}^{2} = (a_{\max}+a_{\min}) (a_{\max}-a_{\min}) \\ &\leq (a_{\max}-a_{\min})\leq 2\epsilon , \end{aligned} $$(76)

where we have used that \(\vert a_{\max} \vert \leq 0.5\), \(\vert a_{\min} \vert \leq 0.5\).

2. Case 2: \(a_{\max}<0\) and \(a_{\min}<0\).

We build the bounds for the probability as \(p_{\max} = a_{\min}^{2}\), \(p_{\min} = a_{\max}^{2}\).

$$ \begin{aligned}[b] p_{\max}-p_{\min} &= a_{\min}^{2}-a_{\max}^{2} = (-a_{\max}-a_{\min}) (a_{\max}-a_{\min}) \\ &\leq (a_{\max}-a_{\min})\leq 2\epsilon , \end{aligned} $$(77)

where we have used that \(\vert a_{\max} \vert \leq 0.5\), \(\vert a_{\min} \vert \leq 0.5\).

3. Case 3: \(a_{\max}>0\) and \(a_{\min}<0\) and \(|a_{\max}|>|a_{\min}|\).

We build the bounds for the probability as \(p_{\max} = a_{\max}^{2}\), \(p_{\min} = 0\).

$$ p_{\max}-p_{\min} = a_{\max}^{2}\leq a_{\max}-0\leq a_{\max}-a_{\min} \leq 2\epsilon . $$(78)

4. Case 4: \(a_{\max}>0\) and \(a_{\min}<0\) and \(|a_{\max}|<|a_{\min}|\).

We build the bounds for the probability as \(p_{\max} = a_{\min}^{2}\), \(p_{\min} = 0\).

$$ p_{\max}-p_{\min} = a_{\min}^{2}\leq \vert a_{\min} \vert \leq a_{\max}-a_{\min} \leq 2 \epsilon . $$(79)
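The four cases can be collected in a single helper. The following is a minimal sketch with a hypothetical function name; the handling of boundary values (an endpoint equal to zero, or \(|a_{\max}|=|a_{\min}|\)) is an assumption, since those situations are not listed explicitly in the cases.

```python
def probability_bounds(a_min, a_max):
    """Map amplitude bounds (a_min, a_max) to probability bounds (p_min, p_max)."""
    if a_min > a_max:
        raise ValueError("expected a_min <= a_max")
    if a_min >= 0:
        # Case 1: both bounds non-negative, squaring preserves the order.
        return a_min ** 2, a_max ** 2
    if a_max <= 0:
        # Case 2: both bounds non-positive, squaring reverses the order.
        return a_max ** 2, a_min ** 2
    # Cases 3 and 4: the interval contains zero, so the lower bound is 0 and
    # the upper bound comes from the endpoint with the larger magnitude.
    return 0.0, max(a_max ** 2, a_min ** 2)


# Illustrative intervals of width 0.04, one per case (values are assumptions).
examples = [(0.10, 0.14), (-0.14, -0.10), (-0.01, 0.03), (-0.03, 0.01)]
widths = []
for a_lo, a_hi in examples:
    p_lo, p_hi = probability_bounds(a_lo, a_hi)
    widths.append((p_hi - p_lo, a_hi - a_lo))
    print((a_lo, a_hi), "->", (p_lo, p_hi))
```

In each example the width of the probability interval does not exceed the width of the amplitude interval, in agreement with (76) to (79).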

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Manzano, A., Musso, D. & Leitao, Á. Real quantum amplitude estimation. EPJ Quantum Technol. 10, 2 (2023). https://doi.org/10.1140/epjqt/s40507-023-00159-0

DOI: https://doi.org/10.1140/epjqt/s40507-023-00159-0