Abstract

We develop a quantum learning scheme for binary discrimination of coherent states of light. This is a problem of technological relevance for the reading of information stored in a digital memory. In our setting, a coherent light source is used to illuminate a memory cell and retrieve its encoded bit by determining the quantum state of the reflected signal. We consider a situation where the amplitude of the states produced by the source is not fully known, but instead this information is encoded in a large training set comprising many copies of the same coherent state. We show that an optimal global measurement, performed jointly over the signal and the training set, provides higher successful identification rates than any learning strategy based on first estimating the unknown amplitude by means of Gaussian measurements on the training set, followed by an adaptive discrimination procedure on the signal. By considering a simplified variant of the problem, we argue that this is the case even for non-Gaussian estimation measurements. Our results show that, even in absence of entanglement, collective quantum measurements yield an enhancement in the readout of classical information, which is particularly relevant in the operating regime of low-energy signals.

1 Introduction

Programmable processors are expected to automate information processing tasks, lessening human intervention by adapting their functioning according to some input program. This adjustment, that is, the process of extracting and assimilating relevant information in order to perform some task efficiently, is often called learning, borrowing a word most naturally linked to living beings. Machine learning is a well-established and interdisciplinary research field, broadly fitting within the umbrella of Cybernetics, that seeks to endow machines with this sort of ability, rendering them able to ‘learn’ from past experiences, perform pattern recognition and identification in scrambled data, and ultimately self-regulate [1, 2]. Algorithms featuring learning capabilities have numerous practical applications, including speech and text recognition, image analysis, and data mining.

Whereas conventional machine learning theory implicitly assumes the training set to be made of classical data, a more recent variation, which can be referred to as quantum machine learning, focuses on the exploration and optimisation of training with fundamentally quantum objects. Quantum learning [3], as an area of strong foundational and technological interest, has recently attracted considerable attention. In particular, the use of programmable quantum processors has been investigated to address machine learning tasks such as pattern matching [4], binary classification [5–8], feedback-adaptive quantum measurements [9], learning of unitary transformations [10], ‘probably approximately correct’ learning [11], and unsupervised clustering [12]. Quantum learning algorithms not only provide performance improvements over some classical learning problems, but also naturally have a wider range of applicability. Quantum learning also has strong links with quantum control theory [13], and is thus becoming an increasingly significant element of the theoretical and experimental quantum information processing toolbox.

In this paper, we investigate a quantum learning scheme for the task of discriminating between two coherent states. Coherent states stand out for their relevance in quantum optical communication theory [14–16], quantum information processing implementations with light, atomic ensembles, and interfaces thereof [17, 18], and quantum optical process tomography [19]. Lasers are widely used in current telecommunication systems, and the transmission of information can be theoretically modelled in terms of bits encoded in the amplitude or phase modulation of a laser beam. The basic task of distinguishing two coherent states in an optimal way is thus of great importance, since lower chances of misidentification translate into higher transfer rates between the sender and the receiver.

The discrimination of coherent states has been considered, so far, within two main approaches, namely minimum-error and unambiguous discrimination, although the former is more developed. Generally, a logical bit can be encoded in two possible coherent states \(\vert \alpha \rangle\) and \(\vert -\alpha \rangle\), via a phase shift, or in the states \(\vert 0 \rangle\) and \(\vert 2\alpha \rangle\), via amplitude modulation. Both encoding schemes are equivalent, since one can move from one to the other by applying Weyl’s displacement operator \(\hat{D}(\alpha)\) to both states (Footnote 1). In the minimum-error approach, the theoretical minimum for the probability of error is given by the Helstrom formula for discriminating two pure states [20]. A variety of implementations have been devised to achieve this task, e.g., the Kennedy receiver [21], based on photon counting; the Dolinar receiver [22], a modification of the Kennedy receiver with real-time quantum feedback; and the homodyne receiver (Footnote 2). Concerning the unambiguous approach to the discrimination problem, results include the unambiguous discrimination between two known coherent states [24, 25], and its programmable version, i.e., when the information about the amplitude α enters the discrimination device in a quantum form [26–28].
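To make the minimum-error benchmark concrete, the following minimal sketch compares the Helstrom bound with two simple receivers for the pair \(\vert 0 \rangle\), \(\vert \alpha \rangle\) at equal priors. The function names are hypothetical, the photon-counting receiver is taken to be plain on/off detection in this amplitude-modulated encoding, and the homodyne formula assumes an ideal detector thresholding the quadrature along α midway between the two means; none of these expressions are copied from the paper.

```python
import math

def helstrom_error(alpha: complex) -> float:
    """Helstrom bound for |0> vs |alpha> with equal priors."""
    overlap_sq = math.exp(-abs(alpha) ** 2)      # |<0|alpha>|^2
    return 0.5 * (1.0 - math.sqrt(1.0 - overlap_sq))

def photon_counting_error(alpha: complex) -> float:
    """On/off detection: the only error is |alpha> producing zero clicks."""
    return 0.5 * math.exp(-abs(alpha) ** 2)

def homodyne_error(alpha: complex) -> float:
    """Ideal homodyne along alpha, threshold midway between the two means."""
    return 0.5 * math.erfc(abs(alpha) / math.sqrt(2.0))

for a in (0.5, 1.0, 2.0):
    print(f"alpha={a}: Helstrom={helstrom_error(a):.5f}, "
          f"counting={photon_counting_error(a):.5f}, "
          f"homodyne={homodyne_error(a):.5f}")
```

In this sketch the Helstrom value is never exceeded by either receiver, which is the sense in which it serves as the reference for known amplitudes.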

The goal of this paper is to explore the fundamental task of discriminating between two coherent states with minimum error, when the available information about their amplitudes is incomplete. The simplest instance of such a problem is a partial knowledge situation: the discrimination between the (known) vacuum state, \(\vert 0 \rangle\), and some coherent state, \(\vert \alpha \rangle\), where the value of α is not provided beforehand in the classical sense, but instead encoded in a number n of auxiliary modes in the state \(\vert \alpha \rangle^{\otimes n}\). Such a discrimination scheme can be cast as a learning protocol with two steps: a first training stage where the auxiliary modes (the training set) are measured to obtain an estimate of α, followed by a discrimination measurement based on this estimate. We then investigate whether this two-step learning procedure matches the performance of the most general quantum protocol, namely a global discrimination measurement that acts jointly over the auxiliary modes and the state to be identified.

Before proceeding with the derivation of our results and in order to motivate further the problem investigated in this paper, let us define the specifics of the setting in the context of a quantum-enhanced readout of classically-stored information.

Imagine a classical memory register modelled by an array of cells, where each cell contains a reflective medium with two possible reflectivities \(r_{0}\) and \(r_{1}\). To read the information stored in the register, one shines light into one of the cells and analyses its reflection. The task essentially consists in discriminating the two possible states of the reflected signal, which depend on the reflectivity of the medium and thus encode the logical bit stored in the cell. In a seminal paper on quantum reading [29], the author takes advantage of ancillary modes to prepare an initial entangled state between those and the signal. The reflected signal is sent together with the ancillae to a detector, where a joint discrimination measurement is performed. A purely quantum resource - entanglement - is thus introduced, enhancing the probability of a successful identification of the encoded bit (Footnote 3). This model has later been extended to the use of error correcting codes, thus defining the notion of quantum reading capacity [30], which has also been studied in the presence of various optical limitations [31]. The idea of using nonclassical light to improve the performance of classical information tasks can be traced back to precursory works on quantum illumination [32, 33], where the presence of a low-reflectivity object in a bright thermal-noise bath is detected with higher accuracy when entangled light is sent to illuminate the target region. For more recent theoretical and experimental developments in optical quantum imaging, illumination and reading, including studies on the role of nonclassical correlations beyond entanglement, see, e.g., Refs. [34–44].

In this paper we consider a reading scenario with an imperfect coherent light source and no initial entanglement involved. The proposed scheme is as follows (see Figure 1). We model an ideal classical memory by a register made of cells that contain either a transparent medium (\(r_{0}=0\)) or a highly reflective one (\(r_{1}=1\)). A reader, comprising a transmitter and a receiver, extracts the information of each cell. The transmitter is a source that produces coherent states of a certain amplitude α. The value of α is not known with certainty due, for instance, to imperfections in the source, but it can be statistically localised in a Gaussian distribution around some (known) \(\alpha_{0}\). A signal state \(\vert \alpha \rangle\) is sent towards a cell of the register and, if it contains the transparent medium, it goes through; if it hits the highly reflective medium, it is reflected back to the receiver in an unperturbed form. This means that we have two possibilities at the entrance of the receiver upon arrival of the signal: either nothing arrives - and we represent this situation as the vacuum state \(\vert 0 \rangle\) - or the reflected signal bounces back - which we denote by the same signal state \(\vert \alpha \rangle\). To aid in the discrimination of the signal, we alleviate the effects of the uncertainty in α by considering that n auxiliary modes are produced by the transmitter in the global state \(\vert \alpha \rangle^{\otimes n}\) and sent directly to the receiver. The receiver then performs measurements over the signal and the auxiliary modes and outputs a binary result, corresponding with some probability to the bit stored in the irradiated cell.

Figure 1. A quantum reading scheme that uses a coherent signal \(\vert \alpha \rangle\), produced by a transmitter, to illuminate a cell of a register that stores a bit of information. A receiver extracts this bit by distinguishing between the two possible states of the reflected signal, \(\vert 0 \rangle\) and \(\vert \alpha \rangle\), assisted by n auxiliary modes sent directly by the transmitter.

We set out to answer the following questions: (i) what is the optimal (unrestricted) measurement, in terms of the error probability, that the receiver can perform? and (ii) is a joint measurement, performed over the signal together with the auxiliary modes, necessary to achieve optimality? To this end, we first obtain the optimal minimum-error probability considering collective measurements (Section 2). Then, we contrast the result with that of the standard estimate-and-discriminate (E&D) strategy, consisting in first estimating α by measuring the auxiliary modes, and then using the acquired information to determine the signal state by a discrimination measurement tuned to distinguish the vacuum state \(\vert 0 \rangle\) from a coherent state with the estimated amplitude (Section 3). In order to compare the performance of the two strategies, we focus on the asymptotic limit of large n. The natural figure of merit is the excess risk, defined as the excess of the asymptotic average error per discrimination over the error obtained when α is perfectly known. We show that a collective measurement provides a lower excess risk than any Gaussian E&D strategy, and we conjecture (and provide strong evidence) that this is the case for all local strategies (Section 4). We conclude with a summary and discussion of our results (Section 5), while some technical derivations and proofs are deferred to the Appendices.

2 Collective strategy

The global state that arrives at the receiver can be expressed as either \([ \alpha ]^{\otimes n} \otimes[0]\) or \([ \alpha ]^{\otimes n} \otimes[\alpha]\), where the shorthand notation \([ \cdot]\equiv \vert \cdot \rangle \langle \cdot \vert \) will be used throughout the paper. For simplicity, we take equal a priori probabilities of occurrence of each state. We will always consider the signal state to be that of the last mode, and all the previous modes will be the auxiliary ones. First of all, note that the information carried by the auxiliary modes can be conveniently ‘concentrated’ into a single mode by means of a sequence of unbalanced beam splitters (Footnote 4). The action of a beam splitter over a pair of coherent states \(\vert \alpha \rangle\otimes \vert \beta \rangle\) yields

where T is the transmissivity of the beam splitter, R is its reflectivity, and \(T+R=1\). A balanced beam splitter (\(T=R=1/2\)) acting on the first two auxiliary modes thus returns \(\vert \alpha \rangle\otimes \vert \alpha \rangle\longrightarrow|\sqrt{2}\alpha\rangle \otimes \vert 0 \rangle\). Since the beam splitter preserves the tensor product structure of the two modes, one can treat separately the first output mode and use it as input in a second beam splitter, together with the next auxiliary mode. By choosing appropriately the values of T and R, the transformation \(|\sqrt{2}\alpha\rangle\otimes \vert \alpha \rangle \longrightarrow|\sqrt {3}\alpha \rangle\otimes \vert 0 \rangle\) can be achieved. Applying this process sequentially over the n auxiliary modes, we perform the transformation

Note that this is a deterministic process, and that no information is lost, for it is contained completely in the complex parameter α. This operation allows us to effectively deal with only two modes. The two possible global states entering the receiver hence become \([\sqrt {n}\alpha]\otimes[0]\) and \([\sqrt{n}\alpha]\otimes[\alpha]\).
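The concentration step can be sketched at the level of amplitudes. The snippet below assumes the beam-splitter convention \((a,b)\to(\sqrt{T}a+\sqrt{R}b,\,-\sqrt{R}a+\sqrt{T}b)\) with \(T+R=1\) (the sign convention is an assumption, not taken from the paper) and the choice \(T_{k}=k/(k+1)\) at the k-th step; the function names are hypothetical.

```python
import math

def beam_splitter(a: complex, b: complex, T: float) -> tuple[complex, complex]:
    """Map coherent amplitudes (a, b) through a beam splitter of transmissivity T."""
    R = 1.0 - T
    return (math.sqrt(T) * a + math.sqrt(R) * b,
            -math.sqrt(R) * a + math.sqrt(T) * b)

def concentrate(alpha: complex, n: int) -> tuple[complex, list[complex]]:
    """Merge n modes of amplitude alpha into one mode, one beam splitter at a time."""
    collected = alpha                 # amplitude gathered so far: sqrt(k) * alpha
    leftovers = []                    # second output ports, expected to be vacuum
    for k in range(1, n):
        collected, dump = beam_splitter(collected, alpha, T=k / (k + 1))
        leftovers.append(dump)
    return collected, leftovers

alpha = 0.3 + 0.4j
out, rest = concentrate(alpha, n=5)
print(out, math.sqrt(5) * alpha)        # the two amplitudes should agree
print(max(abs(x) for x in rest))        # residual amplitude in the emptied modes
```

With \(T_{1}=1/2\) the first step is the balanced beam splitter mentioned in the text, and each later step empties one more auxiliary mode into the collecting mode.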

The parameter α is not known with certainty. Adopting a Bayesian viewpoint, we embed this lack of information into the analysis by considering averaged global states over the possible values of α, where the choice of the prior probability distribution accounts for the prior knowledge that we might already have. One readily sees though that a flat prior probability distribution for α, representing a limiting situation of complete ignorance, is not reasonable in this particular setting. On the one hand, such a prior would yield divergent average states of infinite energy, since the phase space is infinite. On the other hand, in a practical situation it is not realistic to assume that all amplitudes α are equally probable (Footnote 5). A way to overcome this apparent difficulty and assign a reasonable prior probability distribution to α is to sacrifice a small number of auxiliary modes (e.g., such that we are left with \(\tilde {n}=n^{1-\epsilon}\) modes) and use them to construct a rough estimator of α, by means of some isotropic quantum tomography procedure. Then, it can be shown that α belongs to a neighbourhood of size \(n^{-1/2+\epsilon}\) centred at a fixed amplitude \(\alpha_{0}\), with probability converging to one (this is shown, though in a classical statistical context, in [47]). Therefore, the asymptotic behaviour of any statistical inference problem that uses this model is determined by the structure of a local (Gaussian) quantum model around a fixed coherent state \(\vert \alpha_{0} \rangle \) (Footnote 6). In our case, we consider this ‘localisation’ of the prior probability distribution as an innocuous preparatory process in both the collective and the E&D strategies, in the sense that the comparison between their asymptotic discrimination power will not be affected.

Under these considerations, the initial prior for α will be a Gaussian probability distribution centred at \(\alpha_{0}\), whose width goes as \(\sim1/\sqrt{n}\). That is, we can express the true amplitude α as

where the parameter u follows the Gaussian distribution

To avoid divergences, we have introduced the free parameter μ as a temporary energy cut-off that defines the width of \(G(u)\). After obtaining expressions for the excess risks in the asymptotic regime of large n, we will remove the cut-off dependence by taking the limit \(\mu\to\infty\).
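As a sanity check on the role of μ, the sketch below assumes the isotropic Gaussian form \(G(u)=e^{-|u|^{2}/\mu^{2}}/(\pi\mu^{2})\) (an assumption, chosen to be consistent with the coefficients \(c_{k}\) quoted later in this section) and verifies numerically that averaging the projectors \([u]\) over it gives thermal Fock populations \(\mu^{2k}/(\mu^{2}+1)^{k+1}\) with mean photon number \(\mu^{2}\). Function names and integration parameters are hypothetical.

```python
import math

def c_k(k: int, mu: float) -> float:
    """Thermal population with mean photon number mu^2."""
    return mu ** (2 * k) / (mu ** 2 + 1) ** (k + 1)

def averaged_population(k: int, mu: float,
                        steps: int = 20_000, t_max: float = 60.0) -> float:
    """<k| (integral of G(u)[u] d^2u) |k>, by a midpoint rule in t = |u|^2.

    The angular integral contributes d^2u -> pi dt, which cancels the 1/pi in G.
    """
    h = t_max / steps
    total = 0.0
    for i in range(steps):
        t = (i + 0.5) * h
        prior = math.exp(-t / mu ** 2) / mu ** 2                 # pi * G(u)
        poisson = math.exp(-t) * t ** k / math.factorial(k)      # |<k|u>|^2
        total += prior * poisson * h
    return total

mu = 1.5
for k in range(4):
    print(k, averaged_population(k, mu), c_k(k, mu))
print(sum(k * c_k(k, mu) for k in range(200)), mu ** 2)          # mean photon number
```

The mean photon number grows as \(\mu^{2}\), which is why μ acts as an energy cut-off that has to be removed at the end.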

Exploiting the prior information acquired through the rough estimation, that is using Eqs. (3) and (4), we compute the average global states arriving at the receiver

The optimal measurement to determine the state of the signal is the Helstrom measurement for the discrimination of the states \(\sigma_{1}\) and \(\sigma_{2}\) [20], which yields the average minimum-error probability (Footnote 7)

where \(\Vert M \Vert _{1}=\operatorname{tr} \sqrt{M^{\dagger}M}\) denotes the trace norm of the operator M. The technical difficulty in computing \(P_{\mathrm{e}}^{\mathrm {opt}}(n)\) resides in the fact that \(\sigma_{1}-\sigma_{2}\) is an infinite-dimensional full-rank matrix, hence its trace norm does not have a computable analytic expression for arbitrary finite n. Despite this, one can still resort to analytical methods in the asymptotic regime \(n\to \infty \) by treating the states perturbatively.
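Although no closed form is available at finite n, the Helstrom error can always be evaluated numerically on a truncated Fock space. The sketch below uses the standard equal-prior expression \(P_{\mathrm{e}}=\frac{1}{2}(1-\frac{1}{2}\Vert \sigma_{1}-\sigma_{2}\Vert_{1})\) and checks it on the known pair \([0]\), \([\alpha]\); the helper names and the truncation dimension are assumptions made for illustration, and the averaged states of the paper are not constructed here.

```python
import numpy as np

def trace_norm(M: np.ndarray) -> float:
    """Trace norm of a Hermitian matrix: the sum of |eigenvalues|."""
    return float(np.sum(np.abs(np.linalg.eigvalsh(M))))

def helstrom_error(sigma1: np.ndarray, sigma2: np.ndarray) -> float:
    """Minimum error probability for two equiprobable states."""
    return 0.5 * (1.0 - 0.5 * trace_norm(sigma1 - sigma2))

def coherent(alpha: complex, dim: int) -> np.ndarray:
    """Fock amplitudes of |alpha>, truncated to `dim` levels and renormalised."""
    amps = np.empty(dim, dtype=complex)
    amps[0] = 1.0
    for k in range(1, dim):
        amps[k] = amps[k - 1] * alpha / np.sqrt(k)
    return amps / np.linalg.norm(amps)

def projector(psi: np.ndarray) -> np.ndarray:
    return np.outer(psi, psi.conj())

alpha, dim = 1.0, 30
p_num = helstrom_error(projector(coherent(0.0, dim)), projector(coherent(alpha, dim)))
p_exact = 0.5 * (1.0 - np.sqrt(1.0 - np.exp(-abs(alpha) ** 2)))
print(p_num, p_exact)
```

The same `helstrom_error` helper would apply to any pair of truncated density matrices, at the cost of a truncation error that has to be checked case by case.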

To ease this calculation, we first apply the displacement operator

to the states \(\sigma_{1}\) and \(\sigma_{2}\), where \(\hat{D}_{1}\) (\(\hat {D}_{2}\)) acts on the first (second) mode, and we obtain the displaced global states

Since both states have been displaced by the same amount, the trace norm does not change, i.e., \(\Vert \sigma_{1}-\sigma_{2} \Vert _{1}=\Vert \bar {\sigma}_{1}-\bar{\sigma}_{2} \Vert _{1}\). Eq. (9) directly yields

where \(c_{k} = \mu^{2k}/[(\mu^{2}+1)^{k+1}]\) and \(\{\vert k \rangle\}\) is the Fock basis. Note that, as a result of the average, the first mode in Eq. (11) corresponds to a thermal state with average photon number \(\mu^{2}\). Note also that the n-dependence is entirely in \(\bar{\sigma}_{2}\). In the limit \(n\to\infty\), we can expand the second mode of \(\bar{\sigma}_{2}\) as

Then, up to order \(1/n\) its asymptotic expansion gives

Inserting Eq. (13) into Eq. (10) and computing the corresponding averages of each term in the expansion, we obtain a state of the form

We can now use Eqs. (11) and (14) to compute the trace norm \(\Vert \bar{\sigma }_{1}-\bar{\sigma}_{2} \Vert _{1}\) in the asymptotic regime of large n, up to order \(1/n\), by applying perturbation theory. The explicit form of the terms in Eq. (14), as well as the details of the computation of the trace norm, are given in Appendix 1. Here we just show the result: the average minimum-error probability \(P_{\mathrm{e}}^{\mathrm{opt}}(n)\), defined in Eq. (7), can be written in the asymptotic limit as

where \(\Lambda_{\pm}^{(2)}\) is given by Eq. (60).
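For orientation, the weak-coherent-state expansion behind this perturbative treatment can be written out explicitly. The lines below are the standard Fock-basis expansion of \(\vert u/\sqrt{n} \rangle\) and of its projector, offered as a sketch and not as a transcription of Eqs. (12) and (13); the grouping of terms is an assumption.

\[
\vert u/\sqrt{n} \rangle = e^{-|u|^{2}/2n}\sum_{k=0}^{\infty}\frac{(u/\sqrt{n})^{k}}{\sqrt{k!}}\,\vert k \rangle
= \Bigl(1-\frac{|u|^{2}}{2n}\Bigr)\vert 0 \rangle+\frac{u}{\sqrt{n}}\,\vert 1 \rangle+\frac{u^{2}}{\sqrt{2}\,n}\,\vert 2 \rangle+O\bigl(n^{-3/2}\bigr),
\]

\[
[u/\sqrt{n}] = \Bigl(1-\frac{|u|^{2}}{n}\Bigr)\vert 0 \rangle\langle 0 \vert
+\frac{1}{\sqrt{n}}\bigl(u\,\vert 1 \rangle\langle 0 \vert+u^{*}\vert 0 \rangle\langle 1 \vert\bigr)
+\frac{1}{n}\Bigl(|u|^{2}\,\vert 1 \rangle\langle 1 \vert+\frac{u^{2}}{\sqrt{2}}\,\vert 2 \rangle\langle 0 \vert+\frac{u^{*2}}{\sqrt{2}}\,\vert 0 \rangle\langle 2 \vert\Bigr)+O\bigl(n^{-3/2}\bigr).
\]

The trace is preserved order by order, since the \(-|u|^{2}/n\) correction to the vacuum term is compensated by the \(|u|^{2}/n\) weight on \(\vert 1 \rangle\langle 1 \vert\). Because the same u also appears in the first mode \([u]\), the terms linear and quadratic in u do not simply average to zero in the two-mode state.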

2.1 Excess risk

The figure of merit that we use to assess the performance of our protocol is the excess risk, which we have defined as the difference between the asymptotic average error probability \(P_{\mathrm {e}}^{\mathrm{opt}}\) and the average error probability for the optimal strategy when α is perfectly known. As we said at the beginning of the section, the true value of α is \(\alpha_{0}+u/\sqrt{n}\) for a particular realisation, thus knowing u is equivalent to knowing α. The minimum-error probability for the discrimination between the known states \(\vert 0 \rangle\) and \(\vert \alpha _{0}+u/\sqrt{n} \rangle\), \(P_{\mathrm{e}}^{*}(u,n)\), averaged over the Gaussian distribution \(G(u)\), takes the form

To compute this integral we do a series expansion of the overlap in the limit \(n\rightarrow\infty\) and integrate the resulting terms (see Appendix 4). After some algebra we obtain

where

The excess risk is then given by Eqs. (15) and (17) as

Finally, we remove the cut-off imposed at the beginning by taking the limit \(\mu\rightarrow\infty\) and we obtain

Note that the excess risk depends only on the modulus of \(\alpha_{0}\), i.e., on the average distance between \(\vert \alpha \rangle\) and \(\vert 0 \rangle\). The excess risk is thus phase-invariant, as it should be.
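The known-amplitude benchmark that enters the excess risk can also be estimated by direct sampling. The sketch below assumes the Gaussian prior \(G(u)=e^{-|u|^{2}/\mu^{2}}/(\pi\mu^{2})\) and the pure-state Helstrom formula for \(\vert 0 \rangle\) versus \(\vert \alpha_{0}+u/\sqrt{n} \rangle\); the function names, sample size and seed are hypothetical, and this Monte Carlo average is not the series expansion used in the paper.

```python
import numpy as np

def helstrom_known(alpha):
    """Helstrom error for |0> vs |alpha>, equal priors (vectorised)."""
    return 0.5 * (1.0 - np.sqrt(1.0 - np.exp(-np.abs(alpha) ** 2)))

def averaged_known_error(alpha0: complex, n: int, mu: float,
                         samples: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo average of the known-alpha error over the prior G(u)."""
    rng = np.random.default_rng(seed)
    # Density exp(-|u|^2/mu^2)/(pi mu^2): each quadrature has variance mu^2/2.
    sigma = mu / np.sqrt(2.0)
    u = rng.normal(0.0, sigma, samples) + 1j * rng.normal(0.0, sigma, samples)
    return float(np.mean(helstrom_known(alpha0 + u / np.sqrt(n))))

for n in (10, 100, 10_000):
    print(n, averaged_known_error(1.0, n, mu=1.0), helstrom_known(1.0))
```

As n grows the average approaches the Helstrom error at \(\alpha_{0}\), and rotating the phase of \(\alpha_{0}\) changes the estimate only within sampling noise, in line with the phase invariance noted above.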

Eq. (20) is the first piece of information we need to address the main question posed at the beginning, namely whether the optimal performance of the collective strategy is achievable by an estimate-and-discriminate (E&D) strategy. We now move on towards the second piece.

3 Estimate & Discriminate strategy

An alternative - and more restrictive - strategy to determine the state of the signal consists in the natural combination of two fundamental tasks: state estimation, and state discrimination of known states. In such an E&D strategy, all auxiliary modes are used to estimate the unknown amplitude α. Then, the obtained information is used to tune a discrimination measurement over the signal that distinguishes the vacuum state from a coherent state with the estimated amplitude. In this section we find the optimal E&D strategy based on Gaussian measurements and compute its excess risk \(R^{\mathrm{ E\&D}}\). Then, we compare the result with that of the optimal collective strategy \(R^{\mathrm{ opt}}\).

The most general Gaussian measurement that one can use to estimate the state of the auxiliary mode \(|\sqrt{n}\alpha\rangle\) is a generalised heterodyne measurement, represented by a positive operator-valued measure (POVM) with elements

i.e., projectors onto pure Gaussian states with amplitude \(\bar{\beta}\) and squeezing r along the direction ϕ. The outcome of such a heterodyne measurement \(\bar{\beta}=\sqrt{n}\beta\) produces an estimate for \(\sqrt{n}\alpha\), hence β stands for an estimate of α (Footnote 8). Upon obtaining \(\bar{\beta}\), the prior information that we have about α gets updated according to Bayes’ rule, so that now the signal state can be either \([0]\) or some state \(\rho(\beta)\). The form of this second hypothesis is given by

where \(p(\alpha|\beta)\) encodes the posterior information that we have acquired via the heterodyne measurement. It represents the conditional probability of the state of the auxiliary mode being \(\vert \sqrt{n}\alpha \rangle\), given that we obtained the outcome \(\bar{\beta}\). Bayes’ rule dictates

where \(p(\beta|\alpha)\) is given by (see Appendix 2)

\(p(\alpha)\) is the prior information of α before the heterodyne measurement, and

is the total probability of giving the estimate β.

The error probability of the E&D strategy, averaged over all possible estimates β, is then

Note that the estimate β depends ultimately on the number n of auxiliary modes, hence the explicit dependence in the left-hand side of Eq. (26).
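The Bayesian update can be illustrated in the simplest case of an unsqueezed heterodyne measurement (\(r=0\)), written in local coordinates where the outcome is v and the unknown is u. The sketch assumes the likelihood \(p(v|u)=e^{-|v-u|^{2}}/\pi\) (the Q-function of a coherent state) and the Gaussian prior \(G(u)=e^{-|u|^{2}/\mu^{2}}/(\pi\mu^{2})\); the squeezed likelihood of Appendix 2 is not reproduced, and all names and grid parameters are hypothetical.

```python
import numpy as np

def posterior_on_grid(v: complex, mu: float,
                      half_width: float = 8.0, points: int = 401):
    """Return (posterior density on a grid, posterior mean, evidence p(v))."""
    xs = np.linspace(-half_width, half_width, points)
    X, Y = np.meshgrid(xs, xs)
    U = X + 1j * Y
    prior = np.exp(-np.abs(U) ** 2 / mu ** 2) / (np.pi * mu ** 2)
    likelihood = np.exp(-np.abs(v - U) ** 2) / np.pi
    joint = prior * likelihood
    cell = (xs[1] - xs[0]) ** 2              # area element d^2u
    evidence = joint.sum() * cell            # total probability of outcome v
    post = joint / evidence                  # Bayes' rule
    mean = (post * U).sum() * cell
    return post, mean, evidence

v, mu = 0.8 - 0.5j, 1.2
_, mean, evidence = posterior_on_grid(v, mu)
print(mean, v * mu ** 2 / (mu ** 2 + 1))                                   # shrunk estimate
print(evidence, np.exp(-abs(v) ** 2 / (mu ** 2 + 1)) / (np.pi * (mu ** 2 + 1)))
```

In this Gaussian setting the posterior mean is the outcome shrunk towards the prior centre by the factor \(\mu^{2}/(\mu^{2}+1)\), which tends to one as the cut-off is removed.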

We are interested in the asymptotic expression of Eq. (26), so let us now focus on the \(n\to\infty\) scenario. Recall that an initial rough estimation of α permits the localisation of the prior \(p(\alpha)\) around a central point \(\alpha _{0}\), such that \(\alpha\approx\alpha_{0} + u/\sqrt{n}\), where u is distributed according to \(G(u)\), defined in Eq. (4). Consequently, the estimate β will also be localised around the same point, i.e., \(\beta\approx\alpha_{0}+v/\sqrt{n}\), \(v\in\mathbb{C}\). As a result, we can effectively shift from amplitudes α and β to a local Gaussian model around \(\alpha_{0}\), parameterised by u and v. According to this new model, we make the following transformations:

where, for simplicity, we have assumed \(\alpha_{0}\) to be real. Note that this can be done without loss of generality. Note also that, by the symmetry of the problem, this assumption implies \(\phi=0\).

The shifting to the local model transforms the trace norm in Eq. (26) as

where

To compute the explicit expression of \(\rho(v)\) we proceed as in the collective strategy. That is, we expand \([u/\sqrt{n}]\) in the limit \(n\rightarrow\infty\) up to order \(1/n\), as in Eq. (13), and we compute the trace norm using perturbation theory (see Appendix 3 for details). The result allows us to express the asymptotic average error probability of the E&D strategy as

where \(\Delta^{\mathrm{E\&D}}\) is given by Eq. (66).

3.1 Excess risk

The excess risk associated to the E&D strategy is generally expressed as

where \(P_{\mathrm{ e}}^{*}\) is the error probability for known α, given in Eq. (17), and \(P_{\mathrm{ e}}^{\mathrm{ E\& D}}\) is the result from the previous section, i.e., Eq. (33). The full analytical expression for \(R^{\mathrm{ E\&D}}(r)\) is given in Eq. (67). Note that we have to take the limit \(\mu\to\infty\) in the excess risk, as we did for the collective case. Note also that all the expressions calculated so far explicitly depend on the squeezing parameter r (apart from \(\alpha_{0}\)). This parameter stands for the squeezing of the generalised heterodyne measurement in Eq. (21), which we have left unfixed on purpose. As a result, we now define, through the squeezing r, the optimal heterodyne measurement over the auxiliary mode to be that which yields the lowest excess risk (34), i.e.,

To find the optimal r, we look at the parameter estimation theory of Gaussian models (see, e.g., [51]). In a generic two-dimensional Gaussian shift model, the optimal measurement for the estimation of a parameter \(\theta= (q,p)\) is a generalised heterodyne measurement (Footnote 9) of the type (21). Such a measurement yields a quadratic risk of the form

where \(p(\theta)\) is some probability distribution, \(\hat{\theta}\) is an estimator of θ, and G is a two-dimensional matrix. One can always switch to the coordinate system in which G is diagonal, \(G=\operatorname{ diag}(g_{q},g_{p})\), to write

It can be shown [51] that the optimal squeezing of the estimation measurement, i.e., that for which the quadratic risk \(R_{\hat {\theta}}\) is minimal, is given by

We can then simply compare Eq. (37) with Eq. (34) to deduce the values of \(g_{q}\) and \(g_{p}\) for our case. By doing so, we obtain that the optimal squeezing reads

where

Eq. (39) tells us that the optimal squeezing r is a function of \(\alpha_{0}\) that takes negative values, and asymptotically approaches zero when \(\alpha_{0}\) is large (see Figure 2). This means that the optimal estimation measurement over the auxiliary mode is composed of projectors onto coherent states, antisqueezed along the line between \(\alpha_{0}\) and the origin (which represents the vacuum) in phase space. In other words, the estimation is tailored to have better resolution along that axis because of the subsequent discrimination of the signal state. This makes sense: since the error probability in the discrimination depends primarily on the distance between the hypotheses, it is more important to estimate α accurately along this axis than along the orthogonal direction. For large amplitudes, the estimation converges to a (standard) heterodyne measurement with no squeezing. As \(\alpha_{0}\) approaches 0 the states of the signal become more and more indistinguishable, and the projectors of the heterodyne measurement approach infinitely squeezed coherent states, thus converging to a homodyne measurement.

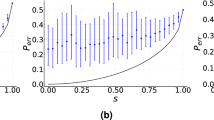

Inserting Eq. (39) into Eq. (35) we finally obtain the expression of \(R^{\mathrm { E\& D}}\) as a function of \(\alpha_{0}\), which we can now compare with the excess risk for the collective strategy \(R^{\mathrm{ opt}}\), given in Eq. (20). We plot both functions in Figure 3. For small amplitudes, say in the range \(0.3 \lesssim \alpha_{0} \lesssim1.5\), there is a noticeable difference in the performance of the two strategies, reaching more than a factor of two at some points. We also observe that the gap closes for large amplitudes \(\alpha_{0} \rightarrow\infty\); this behaviour is expected, since the problem becomes essentially classical when the energy of the signal is sufficiently large. Interestingly, very weak amplitudes \(\alpha_{0} \rightarrow0\) also render the two strategies almost equivalent.

4 General estimation measurements

We have shown that a local strategy based on the estimation of the auxiliary state via a generalised heterodyne measurement, followed by the corresponding discrimination measurement on the signal mode, performs worse than the most general (collective) strategy. However, the considered E&D procedure does not encompass all local strategies. The heterodyne measurement, although with some nonzero squeezing, still detects the phase space around \(\alpha_{0}\) in a Gaussian way, i.e., up to second moments. In principle, one might expect that a more general measurement that produces a non-Gaussian probability distribution for the estimate β might perform better in terms of the excess risk, and even possibly match the optimal performance, closing the gap between the curves in Figure 3. Here we show that the observed difference in performance between the collective and the local strategy is not due to a lack of generality of the latter. We do so by considering a simplified, although nontrivial, version of the problem that allows us to obtain a fully general solution.

The intuitive reason why one could think, at first, that a non-Gaussian probability distribution for β might give an advantage is the following. Imagine that α is further restricted to be on the positive real axis. Then, the true α is either to the left of \(\alpha_{0}\) or to the right, depending on the sign of the local parameter u. In the former case, α is closer to the vacuum, so the error in discriminating between the two is larger than for the states on the other side. One would then expect that an ideal strategy should estimate the negative values of the parameter u better than the positive ones. Gaussian measurements like the heterodyne detection do not contemplate this situation, as they are translationally invariant, and that might be the reason behind the gap in Figure 3.

To test this, we design the following simple example. Since the required methods are a straightforward extension of the ones used in the previous sections, we only sketch the procedure without showing any explicit calculation. Imagine now that the true value of α is not Gaussian distributed around \(\alpha_{0}\), but it can only take the two values \(\alpha=\alpha_{0} \pm1/\sqrt{n}\), representing the states that are closer to the vacuum and further away. Having only two possibilities for α allows us to solve analytically the most general local strategy, since estimating the auxiliary state becomes a discrimination problem between the coherent states \(\vert \sqrt{n}\alpha_{0}+1 \rangle\) and \(\vert \sqrt {n}\alpha _{0}-1 \rangle\). The measurement that distinguishes the two possibilities is a two-outcome POVM \(\mathcal{E}=\{[e_{+}],[e_{-}]\}\) (Footnote 10). We use the displacement operator (8) to shift to the local model around \(\alpha_{0}\), such that the possible coherent states of the auxiliary mode are now \(\vert 1 \rangle\) and \(\vert {-1} \rangle \). Note that, without loss of generality, one can confine the POVM vectors to the (Bloch) plane spanned by \(\vert 1 \rangle\) and \(\vert -1 \rangle \), so that \(\vert e_{+} \rangle\) and \(\vert e_{-} \rangle\) are real linear combinations of these. Indeed, any component orthogonal to this plane would give no aid to distinguish the hypotheses. This allows us to express the probabilities of correctly identifying each state as

where \(\chi=\langle{1}|{-1}\rangle=e^{-2} \), and the overlap c completely parametrises the measurement \(\mathcal{E}\). If the optimal estimation measurement is indeed asymmetric, it should happen that \(p_{+} < p_{-}\), i.e., that the probability of a correct identification is greater for the state \(\vert -1 \rangle\) than for \(\vert 1 \rangle\).

From now on we proceed as for the Gaussian E&D strategy. We first compute the posterior state of the signal mode according to Bayes’ rule. Then, we compute the optimal error probability in the discrimination of \([-\alpha_{0}]\) and the posterior state, which is a combination of \([1/\sqrt{n}]\) and \([-1/\sqrt{n}]\), weighted by the corresponding posterior probabilities. The c-dependence is carried by these probabilities. Going to the asymptotic limit \(n\to\infty\), applying perturbation theory to compute the trace norm, and averaging the result over the two possible outcomes in the discrimination of the signal state, we finally obtain the asymptotic average error probability for the local strategy as a function of c. The asymptotic average error probability for the optimal collective strategy in this simple case is obtained exactly along the same lines as shown in Section 2, and the one for known states is given by the asymptotic expansion of Eq. (16), substituting the average over \(G(u)\) appropriately.

Now we can compute the excess risk for the local and collective strategies, and optimise the local one over c. As anticipated earlier, the optimal solution yields \(c^{*}= (\sqrt{1+\chi }+\sqrt{1-\chi} )/2\), and therefore \(p_{+}=p_{-}\). That is, the POVM \(\mathcal{E}\) is symmetric with respect to the vectors \(\vert {1} \rangle\) and \(\vert {-1} \rangle\), hence both hypotheses receive the same treatment by the measurement in charge of determining the state of the auxiliary mode. Moreover, the gap between the excess risks of the two strategies remains. This result leads us to conjecture that the optimal collective strategy performs better than any local strategy.
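The symmetry of the optimal measurement can be checked numerically with a minimal sketch. The parametrisation used here is an assumption made for illustration and is not taken from the paper: c is identified with the real overlap \(\langle e_{+}|1\rangle\), and \(\vert e_{-} \rangle\) is the unit vector orthogonal to \(\vert e_{+} \rangle\) in the plane spanned by \(\vert 1 \rangle\) and \(\vert {-1} \rangle\). Under this assumption, \(p_{+}=c^{2}\) and \(p_{-}=(\chi\sqrt{1-c^{2}}+c\sqrt{1-\chi^{2}})^{2}\). The function name `success_probabilities` is hypothetical.

```python
import math

# Overlap of the two auxiliary-mode hypotheses: <1|-1> = exp(-2).
CHI = math.exp(-2.0)


def success_probabilities(c, chi=CHI):
    """Return (p_plus, p_minus) for a projective measurement {[e+], [e-]}.

    ASSUMPTION (illustrative, not from the paper): c = <e+|1> is real and
    |e-> is orthogonal to |e+> within the plane spanned by |1> and |-1>.
    """
    s = math.sqrt(1.0 - chi**2)
    p_plus = c**2
    p_minus = (chi * math.sqrt(1.0 - c**2) + c * s) ** 2
    return p_plus, p_minus


# Value of c quoted in the text for the optimal local strategy.
c_star = (math.sqrt(1.0 + CHI) + math.sqrt(1.0 - CHI)) / 2.0
p_plus, p_minus = success_probabilities(c_star)

# Helstrom success probability for two equiprobable pure states with overlap chi.
helstrom = 0.5 * (1.0 + math.sqrt(1.0 - CHI**2))

print(p_plus, p_minus, helstrom)
```

With this parametrisation, \(c^{*}\) gives \(p_{+}=p_{-}\), and both coincide with the Helstrom success probability for two equiprobable pure states of overlap χ, which is what one would expect of a measurement that treats the two hypotheses symmetrically.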

5 Discussion

In this paper we have proposed a learning scheme for coherent states of light. We have presented it in the context of a quantum-enhanced readout of classically-stored binary information, following a recent research line initiated in [29]. The reading of information, encoded in the state of a signal that comes reflected by a memory cell, is achieved by measuring the signal and deciding its state to be either the vacuum state or some coherent state of unknown amplitude. The effect of this uncertainty is mitigated by supplying a large number of auxiliary modes in the same coherent state. We have presented two strategies that make different uses of this (quantum) side information to determine the state of the signal: a collective strategy, consisting of measuring all modes at once and making the binary decision, and a local (E&D) strategy, based on first estimating (learning) the unknown amplitude, then using the acquired knowledge to tune a discrimination measurement over the signal. We have shown that the former outperforms any E&D strategy that uses a Gaussian estimation measurement over the auxiliary modes. Furthermore, we conjecture that this is indeed the case for any (even possibly non-Gaussian) local strategy, based on evidence obtained within a simplified version of the original setting that allowed us to consider completely general measurements.

Previous works on quantum reading rely on the use of specific preparations of nonclassical (namely, entangled) states of light to improve the reading performance of a classical memory [29, 34–36]. Our results indicate that, when there exists some uncertainty in the states produced by the source (and, consequently, the possibility of preparing a specific entangled signal state is highly diminished), alternative quantum resources, namely collective measurements, still enhance the reading of classical information using uncorrelated, classical coherent light. It is worth mentioning that there are precedents of quantum phenomena of this sort providing enhancements for statistical problems involving coherent states. As an example, in the context of estimation of product coherent states, the optimal measure-and-prepare strategy on identical copies of \(\vert \alpha \rangle\) can be achieved by local operations and classical communication (according to the fidelity criterion), but bipartite product states \(\vert \alpha \rangle \vert \alpha ^{*} \rangle\) require entangled measurements [52].

On a final note, the quantum enhancement found here is relevant in the regime of low-energy signals (small coherent amplitudes; see footnote 11). This is in accordance with the advantage regime provided by nonclassical light sources, as discussed in other works [29, 35, 37]. A low-energy readout of memories is, in fact, of very practical interest. While, mathematically, the success probability of any readout protocol could be arbitrarily increased by sending signals with diverging energy, there are many situations where this is highly discouraged. For instance, the readout of photosensitive organic memories requires a high level of control over the amount of energy irradiated per cell. In those situations, the use of signals with very low energy benefits from quantum-enhanced performance, whereas highly energetic classical light could easily damage the memory.
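The energy dependence mentioned above can be illustrated with a short Python sketch. It evaluates the standard Helstrom minimum error probability for discriminating the vacuum from a coherent state of known amplitude, \(\frac{1}{2}(1-\sqrt{1-e^{-|\alpha|^{2}}})\). Equal prior probabilities and the function name `helstrom_error` are assumptions made for illustration, and this is the idealised known-amplitude case rather than the learning scenario analysed in this paper.

```python
import math


def helstrom_error(alpha_abs):
    """Minimum error probability for vacuum vs. coherent state |alpha>.

    ASSUMPTIONS: equal priors, amplitude perfectly known.
    Uses |<0|alpha>|^2 = exp(-|alpha|^2).
    """
    overlap_sq = math.exp(-alpha_abs**2)
    return 0.5 * (1.0 - math.sqrt(1.0 - overlap_sq))


# The error vanishes only as the signal energy |alpha|^2 grows.
for amplitude in (0.1, 0.5, 1.0, 2.0, 3.0):
    print(f"|alpha| = {amplitude:3.1f}  P_e = {helstrom_error(amplitude):.3e}")
```

The output decreases monotonically with the amplitude, which shows why low-energy readout is the regime where any improvement in the measurement strategy matters most.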

Notes

For a single mode with annihilation and creation operators \(\hat{a}\) and \(\hat{a}^{\dagger}\) respectively, the displacement operator is \(\hat{D}(\alpha) = \exp (\alpha\hat {a}^{\dagger}- \alpha^{\ast}\hat{a} )\).

While the latter is the simplest procedure, it does not achieve optimality. However, for weak coherent states (\(|\alpha|^{2} \lesssim0.4\)), it yields an error probability very close to the optimal value \(P_{\mathrm{e}}\), and it is optimal among all Gaussian measurements [23]. In fact, among the three mentioned, only the Dolinar receiver is globally optimal.

In particular, in [29] a two-mode squeezed vacuum state is shown to outperform any classical light, in the regime of few photons and high reflectivity memories.

See, e.g., Section III A in [45] for details.

Nonetheless, for finite dimensional systems, assuming a uniform prior can be better justified and very useful; see, e.g., [46].

Note that, sensu stricto, the dependence of \(P_{ \mathrm{e}}^{\mathrm{ opt}}(n)\) on the localisation parameter \(\alpha_{0}\) should be made explicit. Keep in mind that, in general, all quantities computed in this paper will depend on \(\alpha_{0}\). For the sake of notational clarity, we omit it hereafter when no confusion arises.

In our notation, the outcome of the measurement also labels the estimate, so β stands for both indistinctly. This should generate no confusion, since the trivial guess function that uses the outcome \(\bar{\beta}\) to produce the estimate β does not vary throughout the paper.

This is the case whenever the covariance of the Gaussian model is known, and the mean is a linear transformation of the unknown parameter.

Note that we have chosen the POVM elements to be rank-1 projectors. This is no loss of generality. Due to the convexity properties of the trace norm, POVMs with higher-rank elements cannot be optimal.

Note that here we have only considered sending a single-mode signal. However, as far as coherent states are concerned, increasing the number of modes of the signal and increasing the energy of a single mode are equivalent operations.

Note that the zero-order eigenvalues \(\gamma_{i,\varepsilon}^{(0)}\) are nondegenerate, hence Eq. (52) presents no divergence problems.

References

MacKay DJC. Information theory, inference, and learning algorithms. Cambridge: Cambridge University Press; 2003.

Bishop CM. Pattern recognition and machine learning. Berlin: Springer; 2006.

Aïmeur E, Brassard G, Gambs S. Machine learning in a quantum world. In: Lamontagne IL, Marchand M, editors. Advances in artificial intelligence. Lecture notes in computer science. vol. 4013. Berlin: Springer; 2006. p. 431-42.

Sasaki M, Carlini A. Quantum learning and universal quantum matching machine. Phys Rev A. 2002;66(2):022303. doi:10.1103/PhysRevA.66.022303.

Guta M, Kotlowski W. Quantum learning: asymptotically optimal classification of qubit states. New J Phys. 2010;12(12):123032. doi:10.1088/1367-2630/12/12/123032.

Neven H, Denchev VS, Rose G, Macready WG. Training a large scale classifier with the quantum adiabatic algorithm (2009).

Sentís G, Calsamiglia J, Muñoz-Tapia R, Bagan E. Quantum learning without quantum memory. Sci Rep. 2012;2:708. doi:10.1038/srep00708.

Pudenz KL, Lidar DA. Quantum adiabatic machine learning. Quantum Inf Process. 2013;12(5):2027-70. doi:10.1007/s11128-012-0506-4.

Hentschel A, Sanders BC. Machine learning for precise quantum measurement. Phys Rev Lett. 2010;104(6):063603. doi:10.1103/PhysRevLett.104.063603.

Bisio A, Chiribella G, D’Ariano GM, Facchini S, Perinotti P. Optimal quantum learning of a unitary transformation. Phys Rev A. 2010;81(3):032324. doi:10.1103/PhysRevA.81.032324.

Servedio RA, Gortler SJ. Equivalences and separations between quantum and classical learnability. SIAM J Comput. 2004;33(5):1067-92. doi:10.1137/S0097539704412910.

Lloyd S, Mohseni M, Rebentrost P. Quantum algorithms for supervised and unsupervised machine learning. 2013. arXiv:1307.0411.

Dong D, Petersen IR. Quantum control theory and applications: a survey. IET Control Theory Appl. 2010;4(12):2651-71. doi:10.1049/iet-cta.2009.0508.

Glauber R. Coherent and incoherent states of the radiation field. Phys Rev. 1963;131(6):2766-88. doi:10.1103/PhysRev.131.2766.

Cahill K, Glauber R. Ordered expansions in boson amplitude operators. Phys Rev. 1969;177(5):1857-81. doi:10.1103/PhysRev.177.1857.

Cahill K, Glauber R. Density operators and quasiprobability distributions. Phys Rev. 1969;177(5):1882-902. doi:10.1103/PhysRev.177.1882.

Cerf N, Leuchs G, Polzik ES, editors. Quantum information with continuous variables of atoms and light. London: Imperial College Press; 2007.

Grosshans F, Van Assche G, Wenger J, Brouri R, Cerf NJ, Grangier P. Quantum key distribution using Gaussian-modulated coherent states. Nature. 2003;421(6920):238-41. doi:10.1038/nature01289.

Lobino M, Korystov D, Kupchak C, Figueroa E, Sanders BC, Lvovsky AI. Complete characterization of quantum-optical processes. Science. 2008;322(5901):563-6. doi:10.1126/science.1162086.

Helstrom CW. Quantum detection and estimation theory. New York: Academic Press; 1976.

Kennedy RS, Hoversten EV, Elias P, Chan V. Processing and transmission of information. Research Laboratory of Electronics, Massachusetts Institute of Technology (MIT), Quarterly Process Report. 1973;108:219.

Dolinar SJ. Processing and transmission of information. Research Laboratory of Electronics, Massachusetts Institute of Technology (MIT), Quarterly Process Report. 1973;111:115.

Takeoka M, Sasaki M. Discrimination of the binary coherent signal: Gaussian-operation limit and simple non-Gaussian near-optimal receivers. Phys Rev A. 2008;78(2):022320. doi:10.1103/PhysRevA.78.022320.

Chefles A, Barnett SM. Optimum unambiguous discrimination between linearly independent symmetric states. Phys Lett A. 1998;250(4-6):223-9. doi:10.1016/S0375-9601(98)00827-5.

Banaszek K. Optimal receiver for quantum cryptography with two coherent states. Phys Lett A. 1999;253(1-2):12-5. doi:10.1016/S0375-9601(99)00015-8.

Sedlák M, Ziman M, Pribyla O, Bužek V, Hillery M. Unambiguous identification of coherent states: searching a quantum database. Phys Rev A. 2007;76(2):022326. doi:10.1103/PhysRevA.76.022326.

Sedlák M, Ziman M, Bužek V, Hillery M. Unambiguous identification of coherent states. II. Multiple resources. Phys Rev A. 2009;79(6):062305. doi:10.1103/PhysRevA.79.062305.

Bartušková L, Černoch A, Soubusta J, Dušek M. Programmable discriminator of coherent states: experimental realization. Phys Rev A. 2008;77(3):034306. doi:10.1103/PhysRevA.77.034306.

Pirandola S. Quantum reading of a classical digital memory. Phys Rev Lett. 2011;106(9):090504. doi:10.1103/PhysRevLett.106.090504.

Pirandola S, Lupo C, Giovannetti V, Mancini S, Braunstein SL. Quantum reading capacity. New J Phys. 2011;13(11):113012. doi:10.1088/1367-2630/13/11/113012.

Lupo C, Pirandola S, Giovannetti V, Mancini S. Quantum reading capacity under thermal and correlated noise. Phys Rev A. 2013;87(6):062310. doi:10.1103/PhysRevA.87.062310.

Lloyd S. Enhanced sensitivity of photodetection via quantum illumination. Science. 2008;321(5895):1463-5. doi:10.1126/science.1160627.

Tan S-H, Erkmen B, Giovannetti V, Guha S, Lloyd S, Maccone L, Pirandola S, Shapiro J. Quantum illumination with Gaussian states. Phys Rev Lett. 2008;101(25):253601. doi:10.1103/PhysRevLett.101.253601.

Nair R. Discriminating quantum-optical beam-splitter channels with number-diagonal signal states: applications to quantum reading and target detection. Phys Rev A. 2011;84(3):032312. doi:10.1103/PhysRevA.84.032312.

Spedalieri G, Lupo C, Mancini S, Braunstein SL, Pirandola S. Quantum reading under a local energy constraint. Phys Rev A. 2012;86(1):012315. doi:10.1103/PhysRevA.86.012315.

Tej JP, Devi ARU, Rajagopal AK. Quantum reading of digital memory with non-Gaussian entangled light. Phys Rev A. 2013;87(5):052308. doi:10.1103/PhysRevA.87.052308.

Ragy S, Adesso G. Nature of light correlations in ghost imaging. Sci Rep. 2012;2:651. doi:10.1038/srep00651.

Lopaeva ED, Ruo Berchera I, Degiovanni IP, Olivares S, Brida G, Genovese M. Experimental realization of quantum illumination. Phys Rev Lett. 2013;110:153603. doi:10.1103/PhysRevLett.110.153603.

Zhang Z, Tengner M, Zhong T, Wong FNC, Shapiro JH. Entanglement’s benefit survives an entanglement-breaking channel. Phys Rev Lett. 2013;111:010501. doi:10.1103/PhysRevLett.111.010501.

Ragy S, Berchera IR, Degiovanni IP, Olivares S, Paris MGA, Adesso G, Genovese M. Quantifying the source of enhancement in experimental continuous variable quantum illumination. J Opt Soc Am B. 2014;31(9):2045-50. doi:10.1364/JOSAB.31.002045.

Farace A, De Pasquale A, Rigovacca L, Giovannetti V. Discriminating strength: a bona fide measure of non-classical correlations. New J Phys. 2014;16(7):073010.

Adesso G. Gaussian interferometric power. Phys Rev A. 2014;90:022321. doi:10.1103/PhysRevA.90.022321.

Weedbrook C, Pirandola S, Thompson J, Vedral V, Gu M. Discord empowered quantum illumination. arXiv:1312.3332 (2013).

Roga W, Buono D, Illuminati F. Device-independent quantum reading and noise-assisted quantum transmitters. arXiv:1407.7063 (2014).

Sedlák M, Ziman M, Bužek V, Hillery M. Unambiguous comparison of ensembles of quantum states. Phys Rev A. 2008;77(4):042304. doi:10.1103/PhysRevA.77.042304.

Sentís G, Bagan E, Calsamiglia J, Muñoz-Tapia R. Multicopy programmable discrimination of general qubit states. Phys Rev A. 2010;82(4):042312. doi:10.1103/PhysRevA.82.042312. See also Erratum, Physical Review A 2011;83:039909.

Gill RD, Levit BY. Applications of the Van Trees inequality: a Bayesian Cramér-Rao bound. Bernoulli. 1995;1(1-2):59-79.

Guta M, Jencova A. Local asymptotic normality in quantum statistics. Commun Math Phys. 2007;276(2):341-79. doi:10.1007/s00220-007-0340-1.

Guta M, Kahn J. Local asymptotic normality for qubit states. Phys Rev A. 2006;73:052108. doi:10.1103/PhysRevA.73.052108.

Kahn J, Guta M. Local asymptotic normality for finite dimensional quantum systems. Commun Math Phys. 2009;289(2):597-652. doi:10.1007/s00220-009-0787-3.

Gill RD, Guta M. On asymptotic quantum statistical inference. In: Banerjee M, Bunea F, Huang J, Koltchinskii V, Maathuis MH, editors. From probability to statistics and back: high-dimensional models and processes – a festschrift in honor of Jon A. Wellner. Beachwood: Institute of Mathematical Statistics; 2013. p. 105-27. doi:10.1214/12-IMSCOLL909.

Niset J, Acín A, Andersen U, Cerf N, García-Patrón R, Navascués M, Sabuncu M. Superiority of entangled measurements over all local strategies for the estimation of product coherent states. Phys Rev Lett. 2007;98(26):260404. doi:10.1103/PhysRevLett.98.260404.

Acknowledgements

We acknowledge John Calsamiglia, Ramon Muñoz-Tapia and Stefano Pirandola for fruitful discussions and feedback. GS was supported by the Spanish MINECO, through Contract No. FIS2013-40627-P, the Generalitat de Catalunya CIRIT Project No. 2014 SGR 966 and FPI Grant No. BES-2009-028117. MG was supported by the EPSRC Grant No. EP/J009776/1. GA was supported by the Foundational Questions Institute Grant No. FQXi-RFP3-1317.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed to the derivation and analysis as well as to the preparation of the manuscript. GS had the leading role in performing the technical calculations.

Appendices

Appendix 1: Trace norm for the collective strategy

The global states that need to be discriminated in the collective strategy are \(\bar{\sigma}_{1}\) and \(\bar{\sigma}_{2}\). As shown in the main text, the first can be expressed as [cf. Eq. (11)]

whereas the second admits an asymptotic expansion [cf. Eq. (14)]

as the result of taking the limit \(n\to\infty\) up to order \(1/n\) in Eq. (10). Computing the arising averages (see Appendix 4), the terms in Eq. (43) take the explicit form

where

We now apply perturbation theory to compute the trace norm \(\Vert \bar {\sigma}_{1}-\bar{\sigma}_{2} \Vert _{1}\) in the asymptotic limit \(n\to\infty\), up to order \(1/n\), using Eqs. (42) and (43). We start by expressing the trace norm as

where \(A=\bar{\sigma}_{1} -\bar{\sigma}_{2}^{(0)}\), \(B=-\bar{\sigma }_{2}^{(1)}\), \(C=-\bar{\sigma}_{2}^{(2)}\), and \(\gamma_{j}\) is the jth eigenvalue of Γ, which admits an expansion of the type \(\gamma_{j} = \gamma_{j}^{(0)} + \gamma_{j}^{(1)}/\sqrt{n} + \gamma_{j}^{(2)}/n\). The matrix Γ belongs to the Hilbert space \(\mathcal{H}_{\infty}\otimes\mathcal{H}_{3}\), i.e., the first mode is described by the infinite dimensional space generated by the Fock basis, and the second mode by the three-dimensional space spanned by the linearly independent vectors \(\{\vert -\alpha_{0} \rangle, \vert 0 \rangle ,\vert 1 \rangle\}\) (we will see that the contribution of \(\vert 2 \rangle\) vanishes, hence it is not necessary to consider a fourth dimension). Writing the eigenvalue equation associated to \(\gamma_{j}\) and separating the expansion orders, we obtain the set of equations

where \(\psi_{j}\) is the eigenvector associated to \(\gamma_{j}\), which also admits the expansion \(\psi_{j}=\psi_{j}^{(0)}+\psi_{j}^{(1)}/\sqrt {n}+\psi _{j}^{(2)}/n\). Equation (48) tells us that \(\gamma_{j}^{(0)}\) is an eigenvalue of A with associated eigenvector \(\psi_{j}^{(0)}\). We multiply (49) and (50) by \(\langle \psi _{j}^{(0)}\vert \) to obtain

Note that Eq. (52) assumes that there is no degeneracy in the spectrum of Γ at zero order (as we will see, this is indeed the case). From the structure of A we can deduce that the form of its eigenvector \(\psi_{j}^{(0)}\) is

where we have replaced the index j by the pair of indices i, ε. The index i represents the Fock state \(\vert i \rangle\) in the first mode, and the vectors \(\vert v_{\varepsilon}\rangle \) are eigenvectors of \([-\alpha_{0}]-[0]\) and form a basis of \(\mathcal{H}_{3}\) in the second mode. Every eigenvalue of Γ is now labelled by the pair of indices i, ε, where \(i=0,\ldots,\infty\) and \(\varepsilon=+,-,0\): the second mode in A has a positive, a negative, and a zero eigenvalue, to which we associate eigenvectors \(\vert v_{+} \rangle\), \(\vert v_{-} \rangle\) and \(\vert v_{0} \rangle\), respectively. It is straightforward to see that the first two are

where \(N_{\pm}= \sqrt{1 \pm e^{-|\alpha_{0}|^{2}/2}}\). The zero-order eigenvalues of Γ with \(\varepsilon=\pm\) are

The third eigenvector \(\vert v_{0} \rangle\) is orthogonal to the subspace spanned by \(\vert -\alpha_{0} \rangle\) and \(\vert 0 \rangle \), and corresponds to the eigenvalue \(\gamma_{i,0}^{(0)} = 0\) (see footnote 12). This eigenvector only plays a role through the overlap \(\langle {1}|{v_{0}}\rangle\), which arises in Eqs. (51) and (52). We thus do not need its explicit form; it suffices to express \(\langle{1}|{v_{0}}\rangle\) in terms of known overlaps.
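The order-by-order expansion of the eigenvalues described above can be checked numerically with a minimal Python sketch. It assumes that Eqs. (51) and (52) take the standard form of nondegenerate Rayleigh-Schrödinger perturbation theory, namely \(\gamma_{j}^{(1)}=\langle\psi_{j}^{(0)}|B|\psi_{j}^{(0)}\rangle\) and \(\gamma_{j}^{(2)}=\langle\psi_{j}^{(0)}|C|\psi_{j}^{(0)}\rangle+\sum_{l\neq j}|\langle\psi_{l}^{(0)}|B|\psi_{j}^{(0)}\rangle|^{2}/(\gamma_{j}^{(0)}-\gamma_{l}^{(0)})\). The matrices below are small random Hermitian stand-ins, not the operators A, B and C of this appendix, and all variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def random_hermitian(d):
    """Random d x d Hermitian matrix (illustrative stand-in for B and C)."""
    m = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
    return (m + m.conj().T) / 2


d, n = 4, 1.0e6
# Zero-order operator with a nondegenerate spectrum, as the expansion requires.
A = np.diag([-1.0, -0.3, 0.4, 1.2]).astype(complex)
B, C = random_hermitian(d), random_hermitian(d)

# Exact eigenvalues of Gamma = A + B/sqrt(n) + C/n (ascending order).
gamma_exact = np.linalg.eigvalsh(A + B / np.sqrt(n) + C / n)

# Perturbative eigenvalues up to order 1/n.
g0, psi0 = np.linalg.eigh(A)
Bp = psi0.conj().T @ B @ psi0  # B in the zero-order eigenbasis
Cp = psi0.conj().T @ C @ psi0
g1 = np.real(np.diag(Bp))
g2 = np.real(np.diag(Cp)).copy()
for j in range(d):
    for l in range(d):
        if l != j:
            g2[j] += abs(Bp[l, j]) ** 2 / (g0[j] - g0[l])
gamma_pert = g0 + g1 / np.sqrt(n) + g2 / n

# The trace norm is the sum of the absolute values of the eigenvalues.
trace_norm_exact = np.abs(gamma_exact).sum()
trace_norm_pert = np.abs(gamma_pert).sum()
print(trace_norm_exact, trace_norm_pert)
```

The two trace norms should agree up to corrections of order \(n^{-3/2}\), which is the accuracy needed for an excess risk defined at order \(1/n\).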

From Eqs. (51) and (53) we readily see that \(\gamma_{i,\varepsilon}^{(1)}=0 \). Using Eqs. (45), (46), (52) and (53) we can express \(\gamma _{i,\varepsilon }^{(2)}\) as

where we have used that, by definition, \(\langle{0}|{v_{0}}\rangle =\langle{-\alpha _{0}}|{v_{0}}\rangle=0\). The overlaps in (56) are

Now that we have computed the eigenvalues of Γ, we are finally in a position to evaluate the sum on the right-hand side of Eq. (47). Incorporating the relevant eigenvalues, given by Eqs. (55) and (56), it reads

where

(recall that \(\sum_{i=0}^{\infty}c_{i} = 1\)), and

Appendix 2: Conditional probability \(p(\beta|\alpha)\), Eq. (24)

Given two arbitrary Gaussian states \(\rho_{A}\), \(\rho_{B}\), the trace of their product is

where \(V_{A}\) and \(V_{B}\) are their covariance matrices and δ is the difference of their displacement vectors. For the states \(\rho_{A} \equiv [\sqrt{n}\alpha]\) and \(\rho_{B} \equiv E_{\bar{\beta}}\), we have

where \(\alpha= a_{1} + i a_{2}\), \(\bar{\beta} = \bar{b}_{1} + i \bar{b}_{2}\), r is the squeezing parameter, and ϕ indicates the direction of squeezing in the phase space. In terms of α and \(\bar{\beta}\), Eq. (61) reads

Appendix 3: Trace norm for the E&D strategy

For assessing the performance of the E&D strategy, we want to obtain the error probability in discriminating the state \([0]\) and the posterior state \(\rho(\beta)\), resulting from a heterodyne estimation of the state of the auxiliary mode that provides the estimate β. Under a local Gaussian model around \(\alpha_{0}\) parametrised by the complex variables u and v, these states transform into \([-\alpha _{0}]\) and \(\rho(v)\), respectively, where the second is given by

and where \(p(u|v)\) is given by Eq. (30). The error probability is determined by the trace norm \(\Vert [-\alpha _{0}]-\rho (v) \Vert _{1}\) [cf. Eq. (31)]. To compute it, we first series expand \(\rho(v)\) in the limit \(n\to\infty\), up to order \(1/n\). We name the appearing integrals of u, \(u^{*}\), \(|u|^{2}\), \(u^{2}\), and \((u^{*})^{2}\) over the probability distribution \(p(u|v)\) as \(I_{1}\), \(I_{1}^{*}\), \(I_{2}\), \(I_{3}\), and \(I_{3}^{*}\), respectively. This allows us to write the trace norm as

where

and \(\lambda_{\kappa}\) is the κth eigenvalue of Φ, which admits the perturbative expansion \(\lambda_{\kappa}= \lambda_{\kappa }^{(0)}+\lambda_{\kappa}^{(1)}/\sqrt{n}+\lambda_{\kappa}^{(2)}/n\), just as its associated eigenvector \(\varphi_{\kappa}= \varphi_{\kappa}^{(0)} + \varphi_{\kappa }^{(1)}/\sqrt {n} + \varphi_{\kappa}^{(2)}/n\). Up to order \(1/n\), the matrix Φ has effective dimension 4 since it belongs to the space spanned by the set of linearly independent vectors \(\{\vert -\alpha_{0} \rangle, \vert 0 \rangle, \vert 1 \rangle, \vert 2 \rangle\}\). Hence the index κ has in this case four possible values, i.e., \(\kappa=+,-,3,4\). The zero-order eigenvalues \(\lambda_{\kappa}^{(0)}\), which correspond to the eigenvalues of the rank-2 matrix \(A'\), are

(recall that \(\alpha_{0}\in\mathbb{R}\)). Their associated eigenvectors are \(|\varphi_{\kappa}^{(0)}\rangle= \vert v_{\kappa}\rangle\), where \(\vert v_{\pm}\rangle\) is given by Eq. (54), and, by definition, \(\langle{v_{\kappa}}|{-\alpha _{0}}\rangle=\langle{v_{\kappa}}|{0}\rangle=0\) for \(\kappa=3,4\). From analogous expressions to Eqs. (51) and (52) we can write the first and second-order eigenvalues as

The needed overlaps for computing \(\lambda_{\kappa}^{(1)}\) and \(\lambda _{\kappa}^{(2)}\) are given by Eqs. (57), (58), and

The expressions for the overlaps (62) and (63) actually depend on the dimension of the space that we are considering (four in this case), and they are not unique: there are infinitely many possible orientations of the orthogonal pair of vectors \(\{\vert v_{3} \rangle, \vert v_{4} \rangle\}\) such that both of them are orthogonal to the plane formed by \(\{\vert -\alpha_{0} \rangle, \vert 0 \rangle\}\), which is the only requirement we have. However, one can verify that this choice does not affect the trace norm \(\Vert \Phi \Vert _{1}\), thus we are free to choose the particular orientation that, in addition, verifies \(\langle {v_{3}}|{2}\rangle=0\), yielding the simple expressions (62) and (63).
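The structure of the zero-order problem, two nonzero eigenvalues within the plane spanned by \(\vert -\alpha_{0} \rangle\) and \(\vert 0 \rangle\) and null directions orthogonal to it, can be checked numerically. The following Python sketch diagonalises the operator \([-\alpha_{0}]-[0]\), whose eigenvectors \(\vert v_{\pm}\rangle\) are used at zero order in both appendices, in a truncated Fock basis. The value `alpha0 = 0.8`, the truncation `dim = 40` and the helper `coherent` are assumptions chosen for illustration. For two pure states with overlap \(\langle -\alpha_{0}|0\rangle = e^{-\alpha_{0}^{2}/2}\), the nonzero eigenvalues are expected to be \(\pm\sqrt{1-e^{-\alpha_{0}^{2}}}\).

```python
import numpy as np
from math import factorial


def coherent(alpha, dim):
    """Coherent state |alpha> (real alpha) in a Fock basis truncated at dim."""
    k = np.arange(dim)
    norms = np.sqrt(np.array([float(factorial(int(i))) for i in k]))
    return np.exp(-abs(alpha) ** 2 / 2) * alpha**k / norms


alpha0, dim = 0.8, 40  # illustrative values, not taken from the paper
minus_a0 = coherent(-alpha0, dim)
vac = coherent(0.0, dim)

# Difference of the two rank-1 projectors, [-alpha0] - [0].
op = np.outer(minus_a0, minus_a0.conj()) - np.outer(vac, vac.conj())
eigs = np.linalg.eigvalsh(op)  # ascending order

expected = np.sqrt(1.0 - np.exp(-alpha0**2))
print(eigs[0], eigs[-1], expected)
```

All remaining eigenvalues vanish up to numerical precision, so any orthonormal basis of the orthogonal complement can serve as the null eigenvectors, consistent with the freedom in orienting \(\{\vert v_{3} \rangle, \vert v_{4} \rangle\}\) noted above.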

Finally, we write down the trace norm as

which we now use to obtain the asymptotic expression for the average error probability, defined in Eq. (26). Recall Eq. (29) and note that we have to average Eq. (64) over the probability distribution \(p(v)\). Regarding this average, it is worth taking into account the following considerations. First, the v-dependence of the eigenvalues comes from \(I_{1}\), \(I_{2}\), \(I_{3}\), and their complex conjugates. The integrals needed are given in the last part of Appendix 4. Second, the integration yields \(\lambda_{\kappa}^{(1)}=0\) and hence the order \(1/\sqrt{n}\) term vanishes, as it should. And third, the second-order eigenvalues \(\lambda _{3}^{(2)}\) and \(\lambda_{4}^{(2)}\) are v-independent and positive, so we can ignore the absolute values in Eq. (64). Putting everything together, we can express the asymptotic average error probability of the E&D strategy as

where

Making use of Eqs. (65) and (17) we can readily compute the excess risk of the E&D strategy:

Appendix 4: Gaussian integrals

At many points in this paper, we integrate complex-valued functions over the complex plane, weighted by the bidimensional Gaussian probability distribution \(G(u)\). This section gathers the integrals that we need. Recall that \(G(u)\) is defined as

Expressing u either in polar or Cartesian coordinates in the complex plane, i.e., \(u = r e^{i\theta} = u_{1}+i u_{2}\), one can readily check that \(G(u)\) is normalised:

The average of a coherent state \([u]\) over the probability distribution \(G(u)\) can be computed by expressing \(\vert u \rangle\) in the Fock basis \(\{ \vert {k} \rangle\}\). It gives

Note that the result of averaging a coherent state over \(G(u)\) is nothing but a thermal state with average photon number \(\mu^{2}\).
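This statement can be verified numerically with a short Python sketch. It assumes the explicit form \(G(u)=e^{-|u|^{2}/\mu^{2}}/(\pi\mu^{2})\), which is the isotropic Gaussian consistent with an average photon number \(\mu^{2}\); the value of μ, the quadrature degree and the function names are illustrative choices. The off-diagonal Fock elements of the averaged state vanish after integrating over the phase of u, so only the populations \(\int d^{2}u\, G(u)\, e^{-|u|^{2}}|u|^{2k}/k!\) need to be compared with the thermal distribution \(\mu^{2k}/(1+\mu^{2})^{k+1}\).

```python
import numpy as np
from math import factorial


def averaged_fock_populations(mu, kmax, deg=40):
    """Fock populations of the coherent state [u] averaged over G(u).

    ASSUMPTION: G(u) = exp(-|u|^2 / mu^2) / (pi mu^2).
    With x = |u|^2 / mu^2 the radial integral becomes
    int_0^inf exp(-x) * exp(-mu^2 x) * (mu^2 x)^k / k! dx,
    which is evaluated by Gauss-Laguerre quadrature.
    """
    x, w = np.polynomial.laguerre.laggauss(deg)
    t = mu**2 * x  # t = |u|^2
    return np.array(
        [np.sum(w * np.exp(-t) * t**k) / factorial(k) for k in range(kmax + 1)]
    )


def thermal_populations(nbar, kmax):
    """Photon-number distribution of a thermal state with mean photon number nbar."""
    k = np.arange(kmax + 1)
    return nbar**k / (1.0 + nbar) ** (k + 1)


mu = 0.7  # illustrative width of the Gaussian prior
averaged = averaged_fock_populations(mu, kmax=6)
thermal = thermal_populations(mu**2, kmax=6)
print(np.max(np.abs(averaged - thermal)))
```

The two sets of populations should coincide to within the quadrature error.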

Variations of the previous integral with different complex functions that we use are

and

For the computations in Appendix 3 we also need to perform Gaussian integrals, this time over the probability distribution \(p(v)\), defined in Eq. (29). We make use of

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sentís, G., Guţă, M. & Adesso, G. Quantum learning of coherent states. EPJ Quantum Technol. 2, 17 (2015). https://doi.org/10.1140/epjqt/s40507-015-0030-4
