Abstract

Tracking droplets in microfluidics is a challenging task. The difficulty lies in choosing a tool that can analyze general microfluidic videos and infer physical quantities from them. The state-of-the-art object detection algorithm You Only Look Once (YOLO) and the object tracking algorithm Simple Online and Realtime Tracking with a Deep Association Metric (DeepSORT) are customizable for droplet identification and tracking. The customization consists of training the YOLO and DeepSORT networks to identify and track the objects of interest. We trained several YOLOv5 and YOLOv7 models and the DeepSORT network for droplet identification and tracking from microfluidic experimental videos. We compare the performance of the droplet tracking applications with YOLOv5 and YOLOv7 in terms of training time and time to analyze a given video across various hardware configurations. Although the latest YOLOv7 is 10% faster, real-time tracking is achieved only by the lighter YOLO models on an RTX 3070 Ti GPU machine, because the DeepSORT algorithm adds a significant droplet tracking cost. This work is a benchmark study for the YOLOv5 and YOLOv7 networks with DeepSORT in terms of the training time and inference time for a custom dataset of microfluidic droplets.

1 Introduction

A subset of machine learning-based tools, called computer vision tools, deals with object identification, classification and tracking in images or videos. State-of-the-art computer vision tools can read handwritten text [1,2,3,4], find objects in images [5,6,7,8], find product defects [8, 9], make a diagnosis from medical images with accuracy surpassing that of humans [10, 11] and track objects [12, 13], to name just a few applications. In the last few years, they have increasingly consolidated their place in all scientific fields and industries as reliable and fast analysis methods.

Computer vision tools have shown remarkable success in studying microfluidic systems. Artificial neural networks, for example, can predict physical observables, such as flow rate and chemical composition, from images of microfluidic systems with high accuracy, thus reducing the hardware required to measure these quantities in a microfluidics experiment [14, 15]. More recently, a convolutional autoencoder model was trained to distinguish stable from unstable droplets within a concentrated emulsion based on their shapes [16].

Another application of computer vision tools in microfluidics is tracking droplets. Droplet recognition and tracking in experiments such as those in Refs. [17,18,19] and in simulation studies [20, 21] can yield rich information without human intervention. For example, counting droplets, measuring the flow rate, observing the droplet size distribution and computing statistical quantities are cumbersome when the droplets must be marked manually across several frames. Two natural questions arise when using computer vision tools for image analysis: i) how accurately the application finds and tracks the objects, and ii) how fast the application analyzes each image. A typical digital camera operates at 30 frames per second (fps), so one challenge is to analyze the images at the same or a higher rate for real-time applications.

Along with a few other algorithms, You Only Look Once (YOLO) can analyze images at a few hundred frames per second [22, 23] and, in its standard pre-trained form, detects 80 classes of objects in a given image. The very first version of YOLO was introduced in 2015, and subsequent versions have focused on making the algorithm faster and more accurate at detecting objects. The latest release of YOLO is its 7th version [24], with a reported significant gain in speed and accuracy for object detection on standard datasets containing several objects in realistic scenes. In our previous study, we trained YOLO version 5 and DeepSORT for real-time droplet identification and tracking in microfluidic experiments and simulations [25, 26], and we reported the image analysis speed for various YOLOv5 models. In this work, we train the latest YOLOv7 models along with DeepSORT and compare the performance and image analysis speed of these models with those of the previous version. In particular, this paper studies and compares the training time, droplet detection accuracy and inference time for an application that combines YOLOv5/YOLOv7 with DeepSORT for droplet recognition and tracking.

2 Experimental methods

The images analyzed in this study were obtained from a microfluidic device that generates droplets using a flow-focusing configuration (scheme of the device in Fig. 1). The device has two inlets for oil flow (length: 7 mm, width: 300 \(\upmu \hbox {m}\), depth: 500 \(\upmu \hbox {m}\)), one inlet for the flow of an aqueous solution (length: 5 mm, width: 500 \(\upmu \hbox {m}\), depth: 500 \(\upmu \hbox {m}\)), a Y-shaped junction for droplet generation and an expansion channel. The latter is connected to an outlet for collecting the two-phase emulsion. The device was fabricated using a stereolithography system (Envisiontec, Micro Plus HD) with E-shell®600 (Envisiontec) as the pre-polymer. The continuous phase consists of silicone oil (Sigma-Aldrich, oil viscosity 350 cSt at \(25^{\circ }\hbox {C}\)), while an aqueous solution constitutes the dispersed phase. The latter was made by dissolving 7 mg of a black pigment (Sigma-Aldrich, Brilliant Black BN) in 1 mL of distilled water. Both phases were injected through the inlets at constant flow rates by a programmable syringe pump with two independent channels (Harvard Apparatus, model 33). The images analyzed in this study were obtained using flow rates of 10 \(\upmu \)l/min and 150 \(\upmu \)l/min for the dispersed phase and the continuous phase, respectively. The droplets have an average diameter of 185 \(\upmu \hbox {m}\). The droplet formation is imaged using a stereomicroscope (Leica, MZ 16 FA) and a camera (Photron, fastcam APX RS). The fast camera acquired the images at 3000 frames per second (fps). This image capture rate is far higher than the real-time object detection capability of any present algorithm. The image playback rate is set to 30 fps. The sequences of images were stored as AVI video files. Later, images from the video were used to train the YOLO and DeepSORT models as described in the following section.
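To make the gap between capture rate and real-time analysis concrete, the short sketch below converts the two frame rates quoted above into a per-frame time budget. It uses only the 3000 fps and 30 fps figures from the text; the function and variable names are illustrative.

```python
# Frame-time budget at the capture and playback rates quoted in the text.
CAPTURE_FPS = 3000   # high-speed camera acquisition rate
PLAYBACK_FPS = 30    # playback rate of the stored video


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


# How much slower the stored video plays compared with the real event.
slowdown = CAPTURE_FPS / PLAYBACK_FPS

print(f"slow-motion factor: {slowdown:.0f}x")
print(f"budget at capture rate: {frame_budget_ms(CAPTURE_FPS):.3f} ms/frame")
print(f"budget at playback rate: {frame_budget_ms(PLAYBACK_FPS):.1f} ms/frame")
```

At the playback rate, an analysis pipeline has roughly 33 ms per frame, whereas keeping up with the camera itself would leave only about a third of a millisecond.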

3 Training YOLOv5 and YOLOv7 models

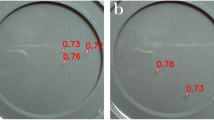

The steps required to train YOLOv5 and YOLOv7 are identical. First, a training dataset is prepared by manually annotating 1000 images taken from a microfluidics experiment as described in Sect. 2. Each image in this dataset contains approximately 13 to 14 droplets. One example from the training dataset is shown in Fig. 2. The droplets in these images are identified, and the dimensions of a rectangle that fully covers each droplet are recorded in a separate text file called the label file. We used the PyTorch implementations of YOLOv5 [27] and YOLOv7 [28] to train several YOLO models on a single node of an HPC system containing two Intel(R) Xeon(R) Gold 6240 CPUs @ 2.60 GHz (Cascade Lake) and an NVIDIA Tesla V100 GPU with 32 GB VRAM. The YOLOv5 and YOLOv7 models differ in the number of trainable parameters (see Table 1). The YOLOv7 algorithm includes extended efficient layer aggregation networks to enhance the features learned by different feature maps and to improve the use of parameters and calculations over its previous versions [22]. Typical training times are listed in Table 1.
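As an illustration of what a label file entry can look like, the sketch below converts a pixel-space bounding box into the normalized `class x_center y_center width height` line used by the YOLO text label format. The image size, the box coordinates and the helper name `to_yolo_label` are assumptions made for this example; they are not taken from the dataset used in this study.

```python
def to_yolo_label(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space box to one line of a YOLO label file.

    The YOLO text format stores the box centre and size, each
    normalized by the image width or height, so all values lie in [0, 1].
    """
    x_c = (x_min + x_max) / 2.0 / img_w   # normalized centre x
    y_c = (y_min + y_max) / 2.0 / img_h   # normalized centre y
    w = (x_max - x_min) / img_w           # normalized box width
    h = (y_max - y_min) / img_h           # normalized box height
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


# Hypothetical droplet box in a hypothetical 640 x 480 image; class 0 = droplet.
line = to_yolo_label(0, 100, 200, 140, 240, img_w=640, img_h=480)
print(line)
```

With a single object class, every line in the label file starts with the same class index, and an image with 13 to 14 droplets yields a label file with as many lines.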

During the training phase, a subset of the data (called a batch) is passed through the network and a loss value is computed from the difference between the network’s predictions and the ground truth provided in the label file. The loss value is then used to update the network’s trainable parameters so as to minimize the loss in subsequent passes. An epoch is completed when all of the training data has passed through the network. YOLO’s loss calculation takes into account the error in bounding box prediction, the error in object detection and the error in object classification [22]. The loss components computed with training and validation data are shown in Fig. 3.
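The bookkeeping described above can be sketched in a few lines. The example below counts the batches in one epoch and combines the three loss components into a single value as a weighted sum. The batch size, the loss values and the unit weights are placeholders chosen for illustration, and the sketch assumes that all 1000 annotated images are used for training; the actual settings used in this study are not specified here.

```python
import math


def batches_per_epoch(n_images: int, batch_size: int) -> int:
    """Number of parameter updates needed to see every image once."""
    return math.ceil(n_images / batch_size)


def total_loss(box_loss, obj_loss, cls_loss, w_box=1.0, w_obj=1.0, w_cls=1.0):
    """Weighted sum of the three YOLO loss components.

    The weights are hypothetical placeholders, not tuned hyperparameters.
    """
    return w_box * box_loss + w_obj * obj_loss + w_cls * cls_loss


# Hypothetical batch size of 16 on 1000 training images.
n_batches = batches_per_epoch(1000, 16)
print(f"batches per epoch: {n_batches}")

# With a single object class, the classification term is typically zero.
print(f"total loss: {total_loss(0.5, 0.25, 0.0)}")
```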

4 Inference with YOLO and DeepSORT

During the training phase, the quality of the YOLOv5 and YOLOv7 models is measured with the well-known mean average precision (mAP), calculated with an Intersection over Union (IoU) threshold of 0.5 (see Fig. 4). For both versions, the mAP value quickly saturates to unity after 20 epochs of training. Similarly, the mAP averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05 lies between 0.9 and 0.94 for the YOLOv5 models and between 0.8 and 0.9 for the YOLOv7 models. These differences in the mAP values are practically insignificant for droplet detection with the YOLOv5 and YOLOv7 models.
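For readers unfamiliar with the IoU criterion behind these mAP values, the following minimal sketch computes the IoU of two axis-aligned boxes and lists the ten thresholds from 0.5 to 0.95. The box coordinates are made up for illustration, and the sketch does not reproduce the full mAP computation performed by the YOLO training code.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes do not overlap).
    iw = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    ih = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = iw * ih
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# IoU thresholds used for the averaged mAP: 0.5, 0.55, ..., 0.95.
thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]

# Hypothetical prediction shifted by half a box width from the ground truth.
value = iou((0, 0, 10, 10), (5, 0, 15, 10))
matched_at = [t for t in thresholds if value >= t]
print(f"IoU = {value:.3f}, counted as a match at thresholds: {matched_at}")
```

A prediction counts as a correct detection at a given threshold only if its IoU with the ground-truth box reaches that threshold, which is why the averaged mAP is more sensitive to bounding box accuracy than the mAP at 0.5 alone.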

After the models are trained, they can be deployed for real-world applications. One challenging milestone for any computer vision application is to run in real time, i.e., with an image analysis speed that exceeds 30 fps, a commonly accepted threshold for real-time tracking. YOLO models on their own do deliver real-time performance. In Tables 2 and 3, we show the total time for droplet identification and tracking, combining YOLOv5/YOLOv7 with DeepSORT on two hardware configurations. Here, we measured the YOLO and DeepSORT times as the time taken by the functions that contain the respective algorithms to analyze the input. The time to load the input and write the output is not taken into account. The benchmarking study was carried out on an MSI G77 Stealth laptop with an i7-12700H CPU, 32 GB RAM and an NVIDIA RTX 3070 Ti GPU with 8 GB VRAM. The two ’X’ entries in the table mark the YOLOv7 models that require more than 8 GB VRAM, which makes them infeasible to run on the RTX 3070 Ti GPU. Running on the GPU, we observe an approximately 10% improvement in the inference speed of YOLOv7 over YOLOv5. However, the additional time taken by the object tracking algorithm DeepSORT is comparable with the inference time of the heavier YOLO models. The single application combining object identification and tracking can deliver real-time tracking with the lighter YOLO models (YOLOv5s, YOLOv5m, YOLOv7-tiny and YOLOv7-x), but it falls below the real-time tracking mark with the heavier YOLO models. Finally, a video of droplet tracking is provided in the supplemental material (see SM1.avi).
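The timing convention described above, in which only the detection and tracking functions are timed and the combined rate is compared with 30 fps, can be sketched as follows. The helper names and the per-frame times are hypothetical and are not taken from Tables 2 and 3; they only show how a heavier detector can push the combined pipeline below the real-time mark even when the tracking cost stays the same.

```python
import time

REAL_TIME_FPS = 30.0  # real-time threshold used in the text


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed seconds), excluding any I/O outside fn."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def fps_from_times(yolo_s: float, deepsort_s: float) -> float:
    """Frames per second when detection and tracking run back to back on each frame."""
    return 1.0 / (yolo_s + deepsort_s)


# Hypothetical per-frame times in seconds (NOT measured values from this study).
light = fps_from_times(yolo_s=0.010, deepsort_s=0.020)
heavy = fps_from_times(yolo_s=0.025, deepsort_s=0.020)

for name, fps in [("lighter model", light), ("heavier model", heavy)]:
    status = "real time" if fps >= REAL_TIME_FPS else "below real time"
    print(f"{name}: {fps:.1f} fps ({status})")

# Example of timing a stand-in function in place of a real detector call.
_, elapsed = timed(sum, range(1000))
print(f"stand-in call took {elapsed * 1e3:.3f} ms")
```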

5 Conclusion

This paper studied two versions of YOLO object detector models coupled with the DeepSORT tracking algorithm in a single tool we call DropTrack. DropTrack produces bounding boxes and unique IDs for the detected droplets, which help in constructing droplet trajectories across sequential frames. This allows one to compute derived quantities in real time, such as the droplet flow rate, the droplet size distribution, the distance between droplets and local order parameters, which are desired observables in other applications [29,30,31,32]. The benchmarks studied in this work serve as a guide to the computational resources required to train the networks and report the expected inference times for various models on different hardware configurations.
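As a rough illustration of how such derived quantities follow from bounding boxes and IDs, the sketch below groups per-frame detections into trajectories and computes a box-based size proxy and a centre-to-centre distance. The tuple layout `(frame, track_id, x_min, y_min, x_max, y_max)`, the function names and the sample values are assumptions made for this example and do not describe DropTrack's actual output format.

```python
import math
from collections import defaultdict


def build_trajectories(detections):
    """Group detections by track ID into time-ordered lists of (frame, cx, cy)."""
    tracks = defaultdict(list)
    for frame, tid, x1, y1, x2, y2 in sorted(detections):
        tracks[tid].append((frame, (x1 + x2) / 2, (y1 + y2) / 2))
    return dict(tracks)


def mean_box_size(detections):
    """Average of box width and height over all detections, as a size proxy in pixels."""
    sizes = [((x2 - x1) + (y2 - y1)) / 2 for _, _, x1, y1, x2, y2 in detections]
    return sum(sizes) / len(sizes)


def centre_distance(p, q):
    """Euclidean distance between two (cx, cy) centres."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


# Hypothetical tracker output: two droplets followed over two frames.
detections = [
    (0, 1, 0, 0, 10, 10), (0, 2, 30, 0, 40, 10),
    (1, 1, 4, 0, 14, 10), (1, 2, 34, 0, 44, 10),
]

trajectories = build_trajectories(detections)
print(trajectories[1])
print(mean_box_size(detections))
print(centre_distance(trajectories[1][0][1:], trajectories[2][0][1:]))
```

Converting these pixel quantities to physical units would additionally require the image scale, which is not given in this example.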

The YOLOv5 and YOLOv7 networks were trained with identical training datasets on the same HPC machine with an NVIDIA V100 GPU. The training time per epoch is comparable for the lighter YOLOv5 and YOLOv7 models, but the heavier YOLOv7 models take almost double the time to complete the training.

We observe an increase of approximately 10% in inference speed on the GPU for YOLOv7 models compared to their YOLOv5 counterparts, as one would expect. Moreover, we report the detailed computational costs of the object detection and object tracking routines and the overall performance of the combined application. Lighter YOLO models identify objects much more quickly than DeepSORT tracks them. However, the object identification time increases with the complexity of the object detection network. Thus, choosing the right YOLO network and hardware configuration is crucial for real-time tracking, which may come at the cost of bounding box accuracy.

Data Availability Statement

All data generated or analyzed during this study are included in this published article and its supplementary files.

References

L. Kang, P. Riba, M. Rusiñol, A. Fornés, M. Villegas, Pay attention to what you read: Non-recurrent handwritten text-line recognition. Pattern Recogn. 129, 108766 (2022). https://doi.org/10.1016/j.patcog.2022.108766

D. Coquenet, C. Chatelain, T. Paquet, End-to-end handwritten paragraph text recognition using a vertical attention network. IEEE Trans. Pattern Anal. Mach. Intell. 45(1), 508–524 (2023). https://doi.org/10.1109/TPAMI.2022.3144899

Darmatasia, M.I. Fanany, Handwriting recognition on form document using convolutional neural network and support vector machines (cnn-svm). in 2017 5th International Conference on Information and Communication Technology (ICoIC7), 1–6 (2017). https://doi.org/10.1109/ICoICT.2017.8074699

S. Ahlawat, A. Choudhary, A. Nayyar, S. Singh, B. Yoon, Improved handwritten digit recognition using convolutional neural networks (cnn). Sensors (2020). https://doi.org/10.3390/s20123344

Z. Zou, K. Chen, Z. Shi, Y. Guo, J. Ye, Object detection in 20 years: A survey. arXiv preprint arXiv:1905.05055 (2019)

K.J. Joseph, S. Khan, F.S. Khan, V.N. Balasubramanian, Towards open world object detection. in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 5830–5840 (2021)

J. Brownlee, Deep learning for computer vision: image classification, object detection, and face recognition in python. Mach. Learn. Mastery (2019). https://books.google.co.in/books?id=DOamDwAAQBAJ

A. Prabhu, K.V. Sangeetha, S. Likhitha, S. Shree Lakshmi, Applications of computer vision for defect detection in fruits: A review. in 2021 International Conference on Intelligent Technologies (CONIT), pp. 1–10 (2021). https://doi.org/10.1109/CONIT51480.2021.9498393

A. John Rajan, K. Jayakrishna, T. Vignesh, J. Chandradass, T.T.M. Kannan, Development of computer vision for inspection of bolt using convolutional neural network. Mater. Today Proc. 45, 6931–6935 (2021). https://doi.org/10.1016/j.matpr.2021.01.372. International Conference on Mechanical, Electronics and Computer Engineering 2020: Materials Science

A. Esteva, K. Chou, S. Yeung, N. Naik, A. Madani, A. Mottaghi, Y. Liu, E. Topol, J. Dean, R. Socher, Deep learning-enabled medical computer vision. npj Digital Medicine 4(1), 5 (2021). https://doi.org/10.1038/s41746-020-00376-2

A. Bhargava, A. Bansal, Novel coronavirus (covid-19) diagnosis using computer vision and artificial intelligence techniques: a review. Multimedia Tools Appl. 80(13), 19931–19946 (2021). https://doi.org/10.1007/s11042-021-10714-5

Z. Soleimanitaleb, M.A. Keyvanrad, A. Jafari, Object tracking methods: a review. in 2019 9th International Conference on Computer and Knowledge Engineering (ICCKE), pp. 282–288 (2019). https://doi.org/10.1109/ICCKE48569.2019.8964761

S. Xu, J. Wang, W. Shou, T. Ngo, A.-M. Sadick, X. Wang, Computer vision techniques in construction: A critical review. Arch. Comput. Methods Eng. 28(5), 3383–3397 (2021). https://doi.org/10.1007/s11831-020-09504-3

P. Hadikhani, N. Borhani, S.M.H. Hashemi, D. Psaltis, Learning from droplet flows in microfluidic channels using deep neural networks. Sci. Rep. 9, 8114 (2019)

Y. Mahdi, K. Daoud, Microdroplet size prediction in microfluidic systems via artificial neural network modeling for water-in-oil emulsion formulation. J. Dispersion Sci. Technol. 38(10), 1501–1508 (2017). https://doi.org/10.1080/01932691.2016.1257391

J.W. Khor, N. Jean, E.S. Luxenberg, S. Ermon, S.K.Y. Tang, Using machine learning to discover shape descriptors for predicting emulsion stability in a microfluidic channel. Soft Matter 15, 1361–1372 (2019). https://doi.org/10.1039/C8SM02054J

M. Bogdan, A. Montessori, A. Tiribocchi, F. Bonaccorso, M. Lauricella, L. Jurkiewicz, S. Succi, J. Guzowski, Stochastic jetting and dripping in confined soft granular flows. Phys. Rev. Lett. 128, 128001 (2022). https://doi.org/10.1103/PhysRevLett.128.128001

B. Kintses, L.D. van Vliet, S.R. Devenish, F. Hollfelder, Microfluidic droplets: new integrated workflows for biological experiments. Curr. Opin. Chem. Biol. 14(5), 548–555 (2010). https://doi.org/10.1016/j.cbpa.2010.08.013

S.-Y. Teh, R. Lin, L.-H. Hung, A.P. Lee, Droplet microfluidics. Lab Chip 8, 198–220 (2008). https://doi.org/10.1039/B715524G

A. Montessori, M. Lauricella, A. Tiribocchi, S. Succi, Modeling pattern formation in soft flowing crystals. Phys. Rev. Fluids 4(7), 072201 (2019). https://doi.org/10.1103/PhysRevFluids.4.072201

A. Montessori, M.L. Rocca, P. Prestininzi, A. Tiribocchi, S. Succi, Deformation and breakup dynamics of droplets within a tapered channel. Phys. Fluids 33(8), 082008 (2021). https://doi.org/10.1063/5.0057501

J. Redmon, S. Divvala, R. Girshick, A. Farhadi, You only look once: Unified, real-time object detection. in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 779–788 (2016). https://doi.org/10.1109/CVPR.2016.91

J. Redmon, A. Farhadi, Yolov3: An incremental improvement. ArXiv:1804.02767v1 (2018)

C.-Y. Wang, A. Bochkovskiy, H.-Y.M. Liao, YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv preprint arXiv:2207.02696 (2022)

M. Durve, A. Tiribocchi, F. Bonaccorso, A. Montessori, M. Lauricella, M. Bogdan, J. Guzowski, S. Succi, Droptrack - automatic droplet tracking with yolov5 and deepsort for microfluidic applications. Phys. Fluids 34(8), 082003 (2022). https://doi.org/10.1063/5.0097597

M. Durve, F. Bonaccorso, A. Montessori, M. Lauricella, A. Tiribocchi, S. Succi, Tracking droplets in soft granular flows with deep learning techniques. Eur. Phys. J. Plus 136(8), 864 (2021). https://doi.org/10.1140/epjp/s13360-021-01849-3

YOLOv5 git repository. https://github.com/ultralytics/yolov5

YOLOv7 git repository. https://github.com/WongKinYiu/yolov7

D. Ferraro, M. Serra, D. Filippi, L. Zago, E. Guglielmin, M. Pierno, S. Descroix, J.-L. Viovy, G. Mistura, Controlling the distance of highly confined droplets in a capillary by interfacial tension for merging on-demand. Lab Chip 19(1), 136–146 (2019). https://doi.org/10.1039/C8LC01182F

Y.-C. Tan, J.S. Fisher, A.I. Lee, V. Cristini, A.P. Lee, Design of microfluidic channel geometries for the control of droplet volume, chemical concentration, and sorting. Lab Chip 4, 292–298 (2004). https://doi.org/10.1039/B403280M

S. Hettiarachchi, G. Melroy, A. Mudugamuwa, P. Sampath, C. Premachandra, R. Amarasinghe, V. Dau, Design and development of a microfluidic droplet generator with vision sensing for lab-on-a-chip devices. Sens. Actuators, A 332, 113047 (2021). https://doi.org/10.1016/j.sna.2021.113047

A. Khater, M. Mohammadi, A. Mohamad, A.S. Nezhad, Dynamics of temperature-actuated droplets within microfluidics. Sci. Rep. 9(1), 3832 (2019). https://doi.org/10.1038/s41598-019-40069-9

Acknowledgements

The authors acknowledge funding from the European Research Council Grant Agreement No. 739964 (COPMAT) and ERC-PoC2 grant No. 101081171 (DropTrack). We gratefully acknowledge the HPC infrastructure and the Support Team at Fondazione Istituto Italiano di Tecnologia.

Funding

Open access funding provided by Istituto Italiano di Tecnologia within the CRUI-CARE Agreement.

Author information

Contributions

MD, AT, AM, ML and JMT performed data labeling, neural network training and output data analysis. SO and AC performed experiments to generate training data. SS and DP designed the study. MD, AT, ML and SO wrote and revised the manuscript.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Durve, M., Orsini, S., Tiribocchi, A. et al. Benchmarking YOLOv5 and YOLOv7 models with DeepSORT for droplet tracking applications. Eur. Phys. J. E 46, 32 (2023). https://doi.org/10.1140/epje/s10189-023-00290-x

DOI: https://doi.org/10.1140/epje/s10189-023-00290-x