Abstract

Particle physics today faces the challenge of explaining the mystery of dark matter, the origin of matter over anti-matter in the Universe, the origin of the neutrino masses, the apparent fine-tuning of the electro-weak scale, and many other aspects of fundamental physics. Perhaps the most striking frontier to emerge in the search for answers involves new physics at mass scales comparable to familiar matter, below the GeV-scale, or even radically below, down to sub-eV scales, and with very feeble interaction strength. New theoretical ideas to address dark matter and other fundamental questions predict such feebly interacting particles (FIPs) at these scales, and indeed, existing data provide numerous hints of such a possibility. A vibrant experimental program to discover such physics is under way, guided by a systematic theoretical approach firmly grounded in the underlying principles of the Standard Model. This document is the report of the FIPs 2022 workshop, held at CERN from 17 to 21 October 2022; it aims to give an overview of these efforts, their motivations, and the decadal goals that animate the community involved in the search for FIPs.

1 Executive summary

The origin of neutrino masses and oscillations, the nature of dark matter and dark energy, the mechanism generating the matter-antimatter asymmetry in our universe or the origin of the hierarchy of scales are some of the deepest mysteries in modern particle physics. So far the vast majority of experimental efforts to answer these questions have been driven by theoretical arguments favoring searches for new particles with sizeable couplings to the Standard Model (SM) and masses commensurate with the Higgs boson. Exploring this paradigm is one of the main goals of the Large Hadron Collider (LHC) at CERN.

An alternative framework to explain new phenomena introduces new light particles interacting only feebly with Standard Model fields. Feebly-interacting particles (FIPs) are currently one of the most debated and discussed topics in fundamental physics, recognized by the recent European Strategy for Particle Physics as one of the compelling fields to explore in the next decade. The workshop FIPs 2022, held at CERN between the 17th and 21st of October 2022, has played a central role in this worldwide discussion. This event was the second edition of a series of workshops fully dedicated to the physics of feebly-interacting particles, gathering experts from particle physics, nuclear physics, astroparticle physics and cosmology. About 320 participants from many countries, including representatives from all major particle physics laboratories, participated in the discussions.

Fig. 1: A schematic of low-mass hidden valleys/hidden sectors/FIPs. The hidden sector is separated from the visible sector by a barrier, which corresponds to feeble interactions mediated by either a heavy state (labeled here by the GUT scale), an intermediate scale like the weak scale, or some light state. Within the hidden sector(s), there are many types of states which can comprise the matter and force content, and we have named a few (far from complete) examples. Hidden valleys are motivated by many UV-complete theories, such as string theory and grand unification, both of which tend to give rise to a wide variety of states at low energy.

The workshop was organized around three main themes, which form the backbone of the present Report: (i) ultra-light FIPs and their connection with cosmology and astrophysics; (ii) light dark matter in particle physics, astroparticle physics and cosmology; (iii) heavy neutral leptons and their connection with active-neutrino physics and neutrinoless double beta decay experiments. In addition, dedicated sessions on New Ideas featured 20 talks given by brilliant young researchers, offering them an opportunity to present their work to renowned researchers while attending a series of presentations on a wide range of topics.

The objective of FIPs 2022 was to set a common vision for a multi-disciplinary and interconnected approach to fundamental questions in modern particle physics. The breadth and deep interconnection of these questions require, more than ever, a diversified research portfolio with a large set of experimental approaches and techniques, together with strong and focused theoretical involvement. No single experiment or laboratory can cover the large parameter space of masses and couplings suggested by FIP models, calling for a broad, diverse, but interconnected network of experimental projects.

FIPs 2022 aims to shape the scientific programme in Europe for the physics of feebly-interacting particles. Such a programme would extend far beyond the traditional searches performed at colliders and extracted beam lines, including elements from stellar evolution, cosmological models, indirect dark matter detection, neutrino physics, gravitational-wave physics and AMO (atomic-molecular-optical) physics. In this context, synergies between experimental facilities are paramount, calling for deeper collaboration across the main laboratories in the world.

In a geopolitical situation with unprecedented economic, political and climate-related challenges, we believe that a network of interconnected laboratories can become a sustainable, flexible, and efficient way of addressing the particle physics questions of this century.

2 Introductory talks

2.1 The FIP/hidden sector/valley paradigm: opportunities and challenges – K. Zurek

Author: Kathryn Zurek, <kzurek@caltech.edu>

Traditionally, high energy physics has focused on chasing particles at colliders at ever higher energies. However, light states may reside in a hidden sector, at mass scales well below the weak scale, and remain hidden from the visible sector on account of a high barrier, corresponding to sub-weak interactions between the sectors. This situation, known as the hidden valley (HV)/sector/feebly interacting particle (FIP) framework, encompasses a broad class of theories and is shown schematically in Fig. 1. A proposal to systematically search at colliders for light hidden sectors, drawn from a diverse class of theories, was made in Ref. [1], where this class of low-mass hidden sectors was called hidden valleys. A field of research around hidden sector theories and their phenomenological signatures has since grown up, which we attempt to overview here [2,3,4,5].

Fig. 2: Examples of three types of signatures that occur via the three types of mediators shown in Fig. 1. In the left panel, we show flavor-violating interactions mediated by a heavy state, as discussed in Ref. [6]. In the center panel, we show displaced decays to a light hidden sector through a weak-scale state. In the right panel, we show the production at intensity experiments of a light vector state which subsequently decays to dark matter [7].

The examination of HVs or FIP sectors has gained momentum along with a shift away from a single-minded focus on states at ever higher energies. What has emerged is a complex tapestry of new physics phenomena that occur at lower energies but are still very weakly coupled to the Standard Model (SM). Further, as emphasized in Ref. [1], hidden sectors appear generically in many UV complete theories, such as string theory. Such a hidden sector need not solve the SM’s problems, but it does naturally provide a dark matter candidate. One only requires some symmetry to stabilize at least one of the states in the hidden sector.

The structure of the hidden sector can take many forms: it can be strongly or weakly coupled, containing dark photons or Higgses and/or a dark SU(N) with \(N_f\) flavours. It can contain sterile neutrinos or asymmetric dark matter, and have a wide range of associated dynamics. The highly malleable nature of low-mass hidden sectors, which couple only weakly to the SM, has led in the last 10–15 years to a wide range of model-building efforts: light hidden supersymmetric sectors, composite dark matter (including asymmetric and flavored dark matter, in realizations such as nugget and atomic dark matter), and hidden valleys that do the work of baryogenesis. The list of theories is too broad to do justice to here, so we refer the reader to community reports [2,3,4] (and references therein) to grasp the scope of these efforts. Accordingly, there has been renewed interest in mechanisms that set the relic abundance, such as asymmetric dark matter, freeze-in, \(3 \rightarrow 2\) mechanisms, dark Affleck-Dine, and WIMPless miracles, to name a few.

While the model-building activity has been inspiring, the challenge is to detect such sectors through their interactions with the SM, which extend the symmetry structure of the SM. The types of FIP signatures depend on the nature of the mediator, as shown schematically in Fig. 2. Weak-scale mediators can be produced resonantly at high energy colliders. The mediators then decay to a high multiplicity of states in the hidden sector. Those light states may then decay back to the SM through the heavy mediators, in some cases with long lifetimes. This is the classic hidden valley set-up. If the mediator is much lighter than the weak scale, low energy intensity experiments can produce the FIP. A broad range of proposals has been made in the last decade, including the Heavy Photon Search, DarkLight and APEX (see Ref. [4] for details). Finally, heavy mediators between the hidden and visible sectors can induce rare decays or flavor-violating processes, as frequently occurs in models of asymmetric or flavored DM [6]. In short, the hidden valley/sector/FIP revolution has dramatically reshaped the theoretical and experimental particle physics landscape, and the corresponding search for physics Beyond the Standard Model (BSM).

Fig. 3: Dark matter relic density can be a guide for where to look for light dark matter. The same process that sets the relic abundance (or relic asymmetry) can also mediate a scattering process in a direct detection experiment; this is shown here for dark matter production through \(e^+e^-\) annihilation (freeze-in) and for asymmetric freeze-out. The top and bottom panels (taken from Refs. [3, 11]) show the predicted interaction cross-section as a shaded band.

Traditionally, the search for BSM physics has focused on new states at the weak scale, as solutions to the hierarchy problem. If we relax the requirement that new physics solve the SM's puzzles, where do we focus our attention? One powerful motivation is the search for suitable dark matter candidates, able to satisfy both the observed relic density and other cosmological constraints on dark sectors. The old paradigm is weak-scale dark matter, with the relic abundance fixed by freeze-out. Requiring the dark matter abundance to match the observed abundance naturally places the freeze-out cross-section in the electroweak range. This paradigm is highly testable, either through annihilation byproducts with the predicted cross-section, \(\langle \sigma v \rangle \simeq 3 \times 10^{-26}\,\text{cm}^3/\text{s}\), or through Higgs-interacting dark matter in direct detection experiments.
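The inverse relation between the freeze-out cross-section and the relic abundance can be made concrete with the commonly quoted order-of-magnitude estimate \(\Omega h^2 \approx 3\times 10^{-27}\,\text{cm}^3\,\text{s}^{-1}/\langle\sigma v\rangle\). The Python sketch below assumes s-wave annihilation and standard freeze-out in a radiation-dominated universe; it is an illustration, not a precise relic-density calculation.

```python
def relic_abundance(sigma_v_cm3_s: float) -> float:
    """Order-of-magnitude Omega*h^2 for a thermal relic.

    Assumes s-wave annihilation and standard freeze-out; sigma_v_cm3_s is the
    thermally averaged annihilation cross-section <sigma v> in cm^3/s.
    """
    if sigma_v_cm3_s <= 0:
        raise ValueError("<sigma v> must be positive")
    return 3e-27 / sigma_v_cm3_s


if __name__ == "__main__":
    for sv in (3e-27, 3e-26, 3e-25):
        # A larger cross-section keeps DM in equilibrium longer, leaving less of it.
        print(f"<sigma v> = {sv:.0e} cm^3/s -> Omega h^2 ~ {relic_abundance(sv):.2f}")
```

With \(\langle\sigma v\rangle \simeq 3\times10^{-26}\,\text{cm}^3/\text{s}\) the estimate gives \(\Omega h^2 \approx 0.1\), close to the observed value of about 0.12.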

However, even in a hidden sector, the relic abundance may still be set by interactions with the SM. These same interactions give rise to predictive signatures in terrestrial experiments, via crossing symmetry. For example, rare \(e^+e^-\) annihilations that produce DM through freeze-in predict a scattering cross-section that can be probed in direct detection experiments. In addition, asymmetric dark matter requires a minimum annihilation cross-section to be consistent with observations of the cosmic microwave background, which also gives rise to a predictive direct detection cross-section. These points are demonstrated in Fig. 3. More generally, light mediators yield large scattering cross-sections in direct detection experiments [3, 8, 9], and give rise to the possibility of direct DM production in intensity experiments, such as SHiP, FASER, CODEX-b and MATHUSLA (see Ref. [4] for a review). In some cases, the relic abundance requirement also gives rise to predictive production rates in intensity experiments such as LDMX, as emphasized in Ref. [10].

Fig. 4: A broad array of states and observational signatures is predicted in a UV-complete theory of dark matter. Here we show schematically a case developed in Ref. [13], where the unified structure of the theory gives rise to many states (left panel), as well as a broad range of signatures at colliders (right panel). These include the displaced decay of connector particles to a high multiplicity of final states, including the possibility of dark showers and semi-visible jets.

Alongside the effort to build and modify low energy intensity experiments to search for FIPs, a suite of experiments to search for sub-GeV dark matter through direct detection has been developed in the last decade. These efforts were summarized in the community document Ref. [4]. The standard nuclear recoil experiments, which are kinematically well matched to weak-scale dark matter, perform poorly when the dark matter is much lighter than the target nuclei, since such light particles transfer only a small recoil energy. Instead, research has turned to electronic excitations and collective modes such as phonons and magnons, which have smaller gaps (eV for electronic excitations and meV for collective excitations), opening the way to the detection of light dark matter that deposits less energy.
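The kinematic mismatch can be illustrated with elastic two-body kinematics. The sketch below, assuming a typical galactic speed \(v \approx 10^{-3}c\) and a xenon target (mass number 131) chosen only for illustration, compares the maximum nuclear recoil energy \(E_R^{\max} = 2\mu^2 v^2/m_N\) with the full dark matter kinetic energy \(\tfrac12 m_\chi v^2\), which bounds what an inelastic electronic excitation can absorb.

```python
AMU_GEV = 0.9315  # atomic mass unit in GeV (rounded)


def reduced_mass(m1: float, m2: float) -> float:
    """Reduced mass of a two-body system (same units as the inputs)."""
    return m1 * m2 / (m1 + m2)


def max_nuclear_recoil_ev(m_dm_gev: float, mass_number: float, v_over_c: float = 1e-3) -> float:
    """Maximum elastic nuclear recoil energy 2 mu^2 v^2 / m_N, returned in eV."""
    m_nucleus = mass_number * AMU_GEV
    mu = reduced_mass(m_dm_gev, m_nucleus)
    return 2.0 * mu**2 * v_over_c**2 / m_nucleus * 1e9


def max_energy_deposit_ev(m_dm_gev: float, v_over_c: float = 1e-3) -> float:
    """Total DM kinetic energy (1/2) m v^2, returned in eV."""
    return 0.5 * m_dm_gev * v_over_c**2 * 1e9


if __name__ == "__main__":
    for m in (100.0, 1.0, 0.1):
        print(f"m_DM = {m:6.1f} GeV: "
              f"E_R,max(Xe) = {max_nuclear_recoil_ev(m, 131):.3g} eV, "
              f"KE = {max_energy_deposit_ev(m):.3g} eV")
```

For a 100 MeV particle the nuclear recoil stays below 1 eV while the kinetic energy is about 50 eV, which is why targets with eV-scale gaps can reach lower masses.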

After this discussion, one may have the impression that there is a broad range of signatures in a broad range of models, and some may give up hope that any one of them will lead us to dark matter. While we are offered no guarantees, let me close by offering an example of a concrete model of dark matter which gives rise to a broad range of signals from a single, UV-complete model. We have argued that such a broad range of signals is the rule, rather than the exception, in hidden valley models. To be concrete, we consider asymmetric dark matter (ADM) as proposed in Ref. [12]. Asymmetric dark matter requires interactions that, from the SM perspective, violate baryon or lepton number (but conserve these global symmetries when the hidden dark matter sector is included). These operators are naturally of higher dimension and of the form

where M is the mass scale of the baryon- or lepton-number violating interaction, which will generally be above the weak scale. Such operators naturally induce flavor-violating interactions, as discussed at length in Ref. [6].

The ADM interaction generates an asymmetric yield of particles and anti-particles. The symmetric component must then be annihilated away, so that the small asymmetry can shine through. In the SM this occurs naturally through the presence of forces, e.g. \(e^+ e^- \rightarrow \gamma \gamma \). This most naturally suggests that ADM resides in a hidden sector with dark forces that mediate such annihilations, leaving only the asymmetric component. Both the light and heavy forces that mediate interactions in such an ADM model give rise to the observable signatures discussed above, such as flavor violation and displaced decays at colliders, as well as signatures at low energy colliders through kinetic mixing of the dark photon.
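A standard back-of-the-envelope consequence of this picture, not spelled out in the text above, is the natural ADM mass scale. Assuming that the dark and baryon asymmetries are related by a number ratio \(n_{\rm DM}/n_B\) and that the symmetric components are fully annihilated, \(m_{\rm DM} = (\Omega_{\rm DM}/\Omega_b)\, m_p\, (n_B/n_{\rm DM})\). The sketch uses approximate Planck 2018 densities as inputs.

```python
OMEGA_DM_H2 = 0.120   # cold dark matter density, approximate Planck 2018 value
OMEGA_B_H2 = 0.0224   # baryon density, approximate Planck 2018 value
M_PROTON_GEV = 0.938  # proton mass in GeV


def adm_mass_gev(n_dm_over_n_b: float = 1.0) -> float:
    """DM mass reproducing Omega_DM/Omega_b for a given number-density ratio.

    Uses Omega_DM/Omega_b = (m_DM n_DM) / (m_p n_B), assuming only the
    asymmetric components survive annihilation.
    """
    return (OMEGA_DM_H2 / OMEGA_B_H2) * M_PROTON_GEV / n_dm_over_n_b


if __name__ == "__main__":
    print(f"n_DM = n_B    -> m_DM ~ {adm_mass_gev():.1f} GeV")
    print(f"n_DM = 10 n_B -> m_DM ~ {adm_mass_gev(10.0):.2f} GeV")
```

Equal number asymmetries point to \(m_{\rm DM} \approx 5\) GeV; other ratios, set by the specific ADM operator, shift this scale.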

While the considerations just discussed arise very generically from a bottom-up approach, they also appear in a UV-complete theory of ADM. To be concrete, we use the model proposed recently in Ref. [13], in which the mass scale of ADM is generated by unification of the dark gauge group with SM SU(3), so that the confinement of both the dark and visible sectors has a common origin. A schematic is shown in Fig. 4. Hidden valleys were motivated from the top down (e.g. in string constructions or grand unification), but engineered from the bottom up. In dark unification, we generate the whole panoply of signatures at a collider, from connector particles that decay promptly to missing energy plus jets, to pairs of displaced vertices, as well as semi-visible jets.

To summarize, there is no single way to search for signatures of hidden sectors. In general, UV-complete models feature multiple signatures, and will require multiple different experiments working together to identify the nature of the dark sector. These include light dark matter detection, prompt and displaced decays of light states at colliders, searches for light states at intensity machines, flavor physics, and even gravitational wave signatures from a hidden sector. Fortunately, there is now a community of people dedicated to probing light dark sectors from many facets.

3 Ultra-light FIPs: theory and experiments

3.1 Introduction

The term ultralight FIP is normally used to indicate the lowest mass range of feebly interacting particles. There is no sharp definition of the ultralight mass region, but it is common to adopt the \(\sim \)keV threshold (see, e.g., Sect. 3.2). Even lighter FIPs, in the sub-eV mass region, are normally identified as WISPs, which stands for weakly interacting sub-eV (or slim) particles (see, e.g., Ref. [14]). WISPs may play an important role in cosmology, as discussed in Sect. 3.3 below.

Fig. 5: Landscape overview of experiments available to probe ultralight axion dark matter, in the context of canonical QCD axion models. Green regions denote mass ranges already probed with laboratory dark matter searches for the axion-photon coupling. Red and blue regions denote mass ranges to be probed with ongoing and future laboratory dark matter searches for the axion-photon and axion-nucleon couplings, respectively. Grey regions indicate astrophysical bounds on the axion-photon coupling. Figure from Ref. [23]

Ultralight FIPs have been studied extensively over the past several decades. Arguably, the best known and most studied example is the QCD axion. Originally proposed as a solution to the strong CP problem [15,16,17,18], axions were soon recognized as one of the primary dark matter candidates [19,20,21] (see Refs. [22, 23] for recent accounts). Furthermore, as discussed below (see, in particular, Sect. 3.4), they may play significant roles in astrophysics. Besides QCD axions, the ultralight FIP panorama includes other well-studied candidates such as axion-like particles (ALPs), dark photons and light scalars. On purely theoretical grounds, it is possible to construct several models of ultralight FIPs by considering different sets of low-dimension operators. The discussion of appropriate criteria, such as simplicity or technical naturalness, to classify the different models is among the aims of the FIP Physics Centre (see Sect. 3.2).

As discussed in the following contributions, the phenomenological motivations for ultralight FIPs are numerous, and span from particle physics to astrophysics and cosmology. A broad range of probes is available to study FIPs, including laboratory, astrophysical and cosmological probes. The types of signatures can be further categorised depending on whether the ultralight FIPs contribute to the dark matter, dark radiation or dark energy (i.e., are of a cosmological nature), are produced in stars (i.e., are of an astrophysical nature), or are produced in the laboratory (e.g., via FIP-photon interconversion or as virtual mediators of new forces). Searches for FIPs of these different natures generally involve different sets of assumptions, providing complementarity between these different types of searches.

Fig. 6: Landscape overview of experiments available to probe ultralight scalar and vector dark matter. Pink regions denote searches for scalar boson dark matter, green regions denote searches for vector boson dark matter via gauge couplings, and blue regions denote searches for vector boson dark matter via the kinetic mixing portal. Figure from Ref. [24]

A general feature of ultralight FIP models is the enormous potential of the astrophysical and cosmological signatures they can yield. Their feeble couplings can allow them to escape dense environments (such as stellar cores), providing a useful telescope into regions that are inaccessible to photons (see Sect. 3.4). Furthermore, being very light and feebly interacting, such FIPs are often stable (or have extremely long lifetimes), and are thus good dark matter candidates. Several non-thermal production mechanisms allow an efficient cosmological production of a non-relativistic population even in the case of extremely light (sub-eV) FIPs. In this sense, the sub-eV region is particularly interesting and well-motivated. If extremely light particles are to account for all (or a large fraction) of the dark matter in the universe, they must have huge occupation numbers and would thus exhibit wavelike behavior. This observation has rich consequences. In particular, the detection of wavelike dark matter requires specific techniques, notably precision measurements, which have advanced impressively in the past decade (see Figs. 5 and 6 for landscape overviews). An increasing number of these probes use quantum techniques and technologies. Numerous contributions in these proceedings present the progress in specific detection techniques as well as near-future expectations. We also report on the status of searches in some of the major laboratories in the world (see Sects. 3.5, 3.6 and 3.7).
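The statement that ultralight dark matter behaves as a classical wave can be checked with a rough estimate. The sketch below assumes a local density \(\rho_{\rm DM} = 0.4\) GeV/cm\(^3\) and a typical velocity \(v \approx 10^{-3}c\), and computes the de Broglie wavelength \(\lambda = 2\pi\hbar/(m v)\), the mean occupation number \(N \sim (\rho_{\rm DM}/m)\lambda^3\), and the coherence time \(\tau \sim h/(m v^2)\), roughly \(10^6\) oscillation periods. These are order-of-magnitude definitions; conventions for factors of \(2\pi\) differ in the literature.

```python
import math

HBAR_C_EV_CM = 1.97327e-5  # hbar*c in eV*cm
H_EV_S = 4.135667e-15      # Planck constant h in eV*s
RHO_DM_EV_CM3 = 0.4e9      # assumed local DM density: 0.4 GeV/cm^3 in eV/cm^3
V_OVER_C = 1e-3            # assumed typical galactic speed


def de_broglie_cm(m_ev: float) -> float:
    """de Broglie wavelength 2*pi*hbar/(m v) in cm."""
    return 2.0 * math.pi * HBAR_C_EV_CM / (m_ev * V_OVER_C)


def occupation_number(m_ev: float) -> float:
    """Number of particles per de Broglie volume, (rho/m) * lambda^3."""
    return (RHO_DM_EV_CM3 / m_ev) * de_broglie_cm(m_ev) ** 3


def coherence_time_s(m_ev: float) -> float:
    """Coherence time ~ h / (m c^2 (v/c)^2) in seconds."""
    return H_EV_S / (m_ev * V_OVER_C**2)


if __name__ == "__main__":
    for m in (1e-15, 1e-6, 1.0, 1e3):
        print(f"m = {m:.0e} eV: lambda = {de_broglie_cm(m):.2e} cm, "
              f"N = {occupation_number(m):.2e}, tau = {coherence_time_s(m):.2e} s")
```

Since \(N \propto m^{-4}\), under these assumptions the occupation number exceeds unity below a few tens of eV, and for \(m \sim 10^{-6}\) eV the coherence time is a few milliseconds.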

The relevance of experimental studies of FIPs in the ultralight region and the progress in specific detection techniques have been long recognized by the international community and have been discussed in recent strategy reports. The US Report on Basic Research Needs for Dark-Matter Small Projects New Initiatives [25] acknowledged that the “Discovery of dark matter waves would provide a glimpse into the earliest moments in the origin of the universe and the laws of nature at ultrahigh energies, beyond what can be probed in colliders”. Several strategic directions have been presented in numerous contributions to the Snowmass 2021 reports (see Figs. 5 and 6) [23, 24, 26,27,28].

In this section, we provide an overview of the main theoretical and experimental results and, in particular, an update on the most recent searches and results, as well as future perspectives. In Sect. 3.2, we open with an account of the theoretical panorama, presenting some general criteria to guide the theoretical development of ultralight FIP models; this defines the FIP Physics Centre approach to classifying and studying ultralight FIPs. There follow surveys of cosmological (Sect. 3.3) and astrophysical (Sect. 3.4) probes. We note that these probes are not necessarily limited to the sub-eV mass range (although this is the predominant mass region): for some astrophysical probes, the same methods adopted for the sub-eV mass range also apply to FIP masses up to several keV or even, in the case of supernovae, a few hundred MeV. The following three contributions present the status and perspectives from DESY (Sect. 3.5), the INFN laboratories of Frascati (LNF) and Legnaro (Sect. 3.6), and IBS-CAPP (Sect. 3.7). Following these general reports, Sects. 3.8 and 3.9 present updates on EDM searches, Sect. 3.10 discusses precision measurements of the fine-structure constant, and Sect. 3.11 discusses tests of fundamental symmetries with matter-antimatter conjugate systems. Sections 3.12 and 3.13 discuss the use of gravitational-wave detectors to search for FIPs. Sections 3.14 to 3.19 present some new theoretical and experimental ideas in the realm of ultralight FIPs. Finally, in Sect. 3.20, we summarise and discuss the outlook of the field.

3.2 Ultra-light FIPs: the FIP physics centre approach – M. Pospelov

Author: Maxim Pospelov, <pospelov@umn.edu> – Joint Session with PSI 2022

The benchmark models formulated by the Physics Beyond Colliders (PBC) Study Group at CERN have played a catalyzing role for the experimental and theoretical studies of MeV-to-GeV scale FIPs. At the moment, there is some interest in expanding this activity towards ultra-light field models, in the hope of a similar effect on the field. Here we present a possible approach to such a classification, and formulate some models that can serve as benchmarks.

3.2.1 Principles behind the classification

Ultra-light field (ULF) models are a particular class of FIPs in which the particle masses can be below the keV or eV scale, and sometimes far below. For example, quintessence models have mass parameters in the potential as small as the Hubble constant today, \(\sim 10^{-33}\) eV. In recent years, there has been an intensification of precision measurements and probes of this type of new physics. Many models exist in the literature, but a comprehensive list of them has not yet been compiled.
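The quoted scale follows from expressing \(H_0\) as an energy, \(\hbar H_0\). The sketch assumes \(H_0 \approx 67.7\) km/s/Mpc, an illustrative value close to the Planck determination; the conclusion, \(\hbar H_0 \sim 10^{-33}\) eV, does not depend on the exact choice.

```python
HBAR_EV_S = 6.582119569e-16  # reduced Planck constant in eV*s
KM_PER_MPC = 3.0857e19       # kilometres in one megaparsec


def hubble_energy_ev(h0_km_s_mpc: float) -> float:
    """Return hbar*H0 in eV for H0 given in km/s/Mpc."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC  # (km/s/Mpc) -> 1/s
    return HBAR_EV_S * h0_per_second


if __name__ == "__main__":
    print(f"hbar*H0 = {hubble_energy_ev(67.7):.2e} eV")
```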

In devising the models, one could adhere to the following general philosophy:

1. Relative simplicity is still a criterion, as we want a manageable parameter space, and there is no “clear winner” among the more complicated models.

2. Renormalizability is not a criterion, as it was for the majority of the earlier PBC benchmark models. (The reason is that these models are often in the same class as gravity, which by itself is not renormalizable.) This does not prevent us from treating all models within the effective field theory (EFT) framework.

3. Technical naturalness, i.e. the stability of the chosen model and all its parameters against radiative corrections without extreme fine-tuning or an unnaturally small cutoff, is the hardest criterion to address. In the past, the particle physics literature tended to adhere strictly to technical naturalness and to ignore models that contradict this principle. Other communities (the atomic-molecular-optical, or AMO, community and some cosmologists), on the other hand, tended to ignore it entirely, arguing that a more creative UV completion may avoid the problem (which sometimes proves to be correct).

Here we will give mild priority to models with explicit technical naturalness, but would not entirely discard unnatural models if they give promising phenomenology.

Lastly, we also want the formulated models to have some relevance for the ongoing experimental program of precision physics for BSM. If there is no connection, there is little impetus for the FIP Physics Centre (FPC) of the PBC study group to “maintain” such models. This is a practical consideration, rather than a criterion.

3.2.2 Possible benchmark cases

The set of models we consider is mostly bosonic, not least because bosons can have very large occupation numbers, which can partially offset the smallness of their couplings. More importantly, light bosons can saturate the dark matter abundance. Many models use the ansatz \(X \sim \sqrt{2\rho _{\textrm{DM}}/m_X^2}\) for the amplitude of the field X when it saturates the local dark matter energy density \(\rho _{\textrm{DM}}\).
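As a minimal numerical sketch of this ansatz (natural units, \(\hbar = c = 1\)), the code below converts an assumed local density \(\rho_{\rm DM} = 0.4\) GeV/cm\(^3\) to eV\(^4\) and evaluates \(X = \sqrt{2\rho_{\rm DM}}/m_X\). It also returns the oscillation frequency \(f = m_X c^2/h\) of the field, which sets the signal frequency in many searches.

```python
import math

HBAR_C_EV_CM = 1.97327e-5  # hbar*c in eV*cm, i.e. 1 eV^-1 = 1.97327e-5 cm
H_EV_S = 4.135667e-15      # Planck constant h in eV*s


def density_ev4(rho_gev_cm3: float = 0.4) -> float:
    """Convert an energy density from GeV/cm^3 to natural units (eV^4)."""
    return rho_gev_cm3 * 1e9 * HBAR_C_EV_CM**3


def field_amplitude_ev(m_ev: float, rho_gev_cm3: float = 0.4) -> float:
    """Amplitude X = sqrt(2 rho_DM)/m_X in eV, assuming X saturates rho_DM."""
    return math.sqrt(2.0 * density_ev4(rho_gev_cm3)) / m_ev


def oscillation_frequency_hz(m_ev: float) -> float:
    """Field oscillation frequency f = m c^2 / h in Hz."""
    return m_ev / H_EV_S


if __name__ == "__main__":
    for m in (1e-22, 1e-15, 1e-6):
        print(f"m_X = {m:.0e} eV: X = {field_amplitude_ev(m):.2e} eV, "
              f"f = {oscillation_frequency_hz(m):.2e} Hz")
```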

Below I give an account of some reasonable/popular models of ULFs. In the “A list” I include models that to a large extent conform to the idea of technical naturalness.

A category. Technically natural.

M0. “Model 0” is a non-interacting ULF model with a scalar field \(\phi \) and potential \(V(\phi )\) that gives it a slow roll and modifies the dark energy equation of state, w [29, 30]. These models have been extensively studied in cosmology, but unfortunately they have no other signatures. These models can be technically natural if there are no couplings to the SM, not least because it is not clear what the cutoff in these theories is.

M1. Axion-like particles. They are technically natural on account of the shift symmetry, which is broken softly by the mass. Possible space of mass and couplings: \(\{m_a, g_{a\gamma \gamma }, g_{aee}, g_{app}, g_{ann} \}\) or any combination of those. These are couplings to \(F\tilde{F}\) and to the spins of electrons and nucleons. These couplings can be relatively easily mapped to the models/couplings featured in the PBC set [4]. \(m_a\) can be anything, including 0. ALPs may or may not saturate DM, and may contribute to the cosmic history [22]. There is a large range of experimental and astrophysical searches for these models.

M2. ULF vector fields. One can have very light vector fields coupled to conserved currents, such as the EM current (dark photon) or the \(B-L\) current. These vector fields can possibly saturate the DM abundance. There are potentially many interesting applications, with broadband and resonant searches using a variety of methods depending on the mass [31,32,33].

M3. Light thermal freeze-in dark matter, with possibly sub-eV light mediators (e.g. dark photons). In the limit of massless mediators, the model becomes equivalent to millicharged dark matter. This class of models has many astrophysical and direct detection applications, as well as novel schemes for detecting light millicharged particles (see Ref. [8] for a review of light dark matter).

B category. These are models that could be natural under certain exotic conditions (see e.g. [34, 35]).

M4. Scalar field models (an oscillating scalar, or a smoothly evolving scalar as in M0) with linear non-derivative couplings to: (4a) the Higgs portal (relaxion) [36]; (4b) the trace of the stress-energy tensor; (4c) spins; (4d) \(F_{\alpha \beta }^2\) of electromagnetism [37]; etc. The reference list here is rather extensive. Saturating the dark matter density in such models is an option. This class of models offers large opportunities for AMO experiments, as these models generally predict an apparent time evolution of coupling constants and masses, or anomalous spin precession.

M5. ULF scalars derivatively coupled to matter fields. (Some variants are called “disformal couplings” in the cosmological literature [38].) The couplings are given by \(T_{\alpha \beta } \partial _\beta \phi \partial _\alpha \phi /\Lambda ^4\), \(H^\dagger H \partial _\alpha \phi \partial _\alpha \phi /\Lambda ^2\) [39], where \(T_{\alpha \beta }\) is the stress-energy tensor, and H is the SM Higgs field. There are few signatures here for precision physics, other than cosmological studies of \(N_{eff}\) and collider studies of missing energy. (Also, the model can be put in the “A list” if \(\Lambda \) is at or above the weak scale.)

C category. Technically unnatural, but with interesting phenomenology

M6. Chameleon-type models. (Mass increases inside matter [40].) The couplings needed in the simplest case are \(T_{\alpha \alpha } \phi ^2/\Lambda ^2\), \(H^\dagger H\phi ^2\) [41]. If the scalar field \(\phi \) contributes to DM, the chameleonic mass can keep it outside of over-dense regions, creating spatially varying properties of matter [41, 42].

M7. ULF models with nontrivial clustering properties due to self-interaction, for example DM made of lumps of light fields (Q-balls). Coupling to matter may introduce transient effects, e.g. a temporary change of atomic properties when a lump passes through [43, 44].

M8. ALP couplings to EDMs. This is inspired by the QCD axion, but the dipole coupling is taken to be much larger than the QCD-derived value [45]. Relevant for some experimental proposals.
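For the non-interacting model M0 above, the effect on dark energy enters through the equation of state of a homogeneous scalar field, \(w = (\dot\phi^2/2 - V)/(\dot\phi^2/2 + V)\). The minimal sketch below evaluates this standard expression; a slowly rolling field (\(\dot\phi^2 \ll V\)) gives \(w\) slightly above \(-1\), which is the kind of deviation such models produce. Inputs are in arbitrary but consistent units.

```python
def equation_of_state(phi_dot: float, potential: float) -> float:
    """w = (K - V)/(K + V) for a homogeneous scalar field with K = phi_dot^2 / 2."""
    kinetic = 0.5 * phi_dot**2
    rho = kinetic + potential
    if rho == 0.0:
        raise ValueError("total energy density must be non-zero")
    return (kinetic - potential) / rho


if __name__ == "__main__":
    for phi_dot in (0.0, 0.1, 0.5):
        print(f"phi_dot = {phi_dot}: w = {equation_of_state(phi_dot, 1.0):+.3f}")
```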

3.2.3 Suggested parameter space

M0. \(\mathcal{L} = \frac{1}{2} (\partial \phi )^2 -V(\phi )\). It is reasonable to try several generic possibilities, such as models with a “late motion of the field” under a constant linear force, where the value of the field is \(\phi (z=0) = 0\) by construction. Possible models for a massive field with some initial condition at early times are defined by the following potentials:

The first model has parameter space \(\left\{ V_0, V^{\prime }\right\} \), and is about dark energy. The last two models have parameter space \(\left\{ V_0, m_0, \phi _0\right\} \) and \(\left\{ V_0, V_1, f\right\} \) respectively, and have dark energy and dark matter associated with them.

M1. This is more or less the same parameter space as in ALPs models. Since we are talking about low energies, it is reasonable, of course, to switch to the language of nucleons rather than quarks and gluons, and therefore we have:

The parameter space is in principle the multi-dimensional space \(\{m_a, f_i\}\), and one could take different \(f_i\) slices of it. The initial value of the field at early times, \(a_0\), is also important: both a unique \(a_0\) and a randomly distributed \(a_0\) are possible. Alternatively, for all models that aspire to make some contribution to DM, we may quantify their late-time contribution to the DM energy density by a parameter \(\kappa _i \equiv \Omega _i/\Omega _{DM}\), \(i= a,\phi ,V\), etc.

M2. Ultra-light vector fields can have the following Lagrangian:

The parameter space is evidently \(\{m_V, \epsilon \}\) and \(\{m_V, g_{B-L} \}\). (If \(m_V\) is tiny, the model may prefer Dirac SM neutrinos).

M3. The model has the same parameter set as Benchmark model 2 (PBC set). Specifically, one takes small \(m_{A'}\), and \(m_\chi > O(\textrm{keV})\):

and the covariant derivative contains the dark gauge coupling \(g_{d}\). The model parameter space is \(\{ m_V, \epsilon , m_\chi , g_d \}\). The differences from the PBC models are a smaller mass range and freeze-in conditions for creating DM.

M4. In this class of models, we add the following terms to the scalar models encountered before (M0):

Comments: model A is a more restrictive realization of model C. Model D is basically the same as M1. The simultaneous presence of, e.g., C and D will lead to a mass-spin coupling, which is also searched for in experiments.

M5. The parameter space consists of the mass of the “disformal” scalar and its coupling, \(\{m_\phi , \Lambda \}\). (Representative couplings are given by \(T_{\alpha \beta } \partial _\beta \phi \partial _\alpha \phi /\Lambda ^4\) or \(H^\dagger H \partial _\alpha \phi \partial _\alpha \phi /\Lambda ^2\).) On theoretical grounds, we expect the coupling scale \(\Lambda \) to be larger than the EW scale. See Ref. [46] for recent work.

M6. Chameleon or “symmetron”-type models have a large variety. For example, one can consider

The in-medium value of \(H^\dagger H - \langle H^\dagger H\rangle \) is non-zero, and if \(m_\phi ^2\) is small, this has consequences for the spatial distribution of \(\phi \), especially if it is dark matter. If \(m_\phi ^2\) is negative, the \(\phi \) field will have a nonzero v.e.v. in vacuum, and matter effects can restore the symmetry.

M7. The parameter space of models with extended DM objects is difficult to describe with a few numbers. Let us approximate the DM field profile inside a “defect” by some Gaussian field:

Here \(\phi _0\) and R describe the amplitude and the extent of the field configuration, which will have a mass \(\propto \phi _0^2R\), so that the number density n of these objects should obey \(n\, \phi _0^2R \lesssim \rho _{DM}\). The interaction of such an object with matter can be described by Eqs. (7)–(11).
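As a rough numerical check of the scaling \(M \propto \phi _0^2 R\), the Python sketch below integrates the gradient energy of a spherically symmetric configuration. It assumes a specific profile, \(\phi (r) = \phi _0 \exp (-r^2/R^2)\), and assumes the energy is dominated by the gradient term, so a potential (mass) term, which would scale as \(\phi _0^2 R^3\), is neglected. Both are illustrative assumptions rather than part of the model definition; natural units are used and all function names are hypothetical.

```python
import math


def gaussian_gradient_energy(phi0: float, radius: float, steps: int = 20000) -> float:
    """Gradient energy of the assumed profile phi(r) = phi0 * exp(-r^2 / R^2).

    E = 4*pi * int_0^inf r^2 * (1/2) * (dphi/dr)^2 dr in natural units,
    evaluated with the trapezoid rule on [0, 10 R].
    """
    r_max = 10.0 * radius  # the integrand is ~exp(-200) there, i.e. negligible
    h = r_max / steps
    total = 0.0
    for k in range(steps + 1):
        r = k * h
        dphi_dr = -2.0 * r * phi0 / radius**2 * math.exp(-(r**2) / radius**2)
        integrand = 4.0 * math.pi * r**2 * 0.5 * dphi_dr**2
        weight = 0.5 if k in (0, steps) else 1.0  # trapezoid end-point weights
        total += weight * integrand
    return total * h


def closed_form_gradient_energy(phi0: float, radius: float) -> float:
    """Analytic result for the same profile: 3 pi^(3/2) / (4 sqrt(2)) * phi0^2 * R."""
    return 3.0 * math.pi**1.5 / (4.0 * math.sqrt(2.0)) * phi0**2 * radius


def max_number_density(rho_dm: float, object_mass: float) -> float:
    """Order-of-magnitude bound n <~ rho_DM / M if the objects make up at most all of the DM."""
    return rho_dm / object_mass


numeric = gaussian_gradient_energy(1.0, 1.0)
print(numeric, closed_form_gradient_energy(1.0, 1.0))
print(gaussian_gradient_energy(2.0, 1.0) / numeric)  # amplitude x2 -> energy x4
print(gaussian_gradient_energy(1.0, 2.0) / numeric)  # size x2 -> energy x2
```

Doubling \(\phi _0\) quadruples the energy while doubling R only doubles it, which is the \(\phi _0^2 R\) scaling used in the number-density bound.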

M8. Finally, a ULF coupling to an EDM can be described as

The parameter space of the model is then \(\{m_\phi , d_i/f, \Omega _\phi \}\).

Conclusion: This is a first, and incomplete, step towards the classification of ultra-light new physics. Much more effort is required to analyze these models and to assemble constraints on their parameter space.

3.3 Observational searches for ultra-light FIPs with cosmological surveys – B. Wallisch

Author: Benjamin Wallisch, <benjamin.wallisch@fysik.su.se>

3.3.1 Introduction

Cosmological observations are a sensitive probe of particle physics and have become precise enough to start complementing terrestrial experiments. For example, they have the potential to shed new light on the properties of neutrinos and to discover particles that are even more weakly coupled to the rest of the Standard Model (SM). In particular, measurements of the cosmic microwave background (CMB) and the large-scale structure (LSS) of the universe are remarkably sensitive to light, feebly-interacting particles (FIPs). Importantly, these observations already provide, and will continue to provide, interesting bounds on both hot (thermal) and cold (non-thermal) FIP populations, both leading and complementary to those from astrophysics, colliders and laboratories.

Light thermal relics of the hot big bang are one of the primary targets of current and especially future cosmological surveys. Apart from new massive particles, an interesting consequence of many proposals for physics beyond the Standard Model (BSM) are extra light species [28, 47, 48], such as axions (we will refer to both QCD axions and axion-like particles as axions for simplicity) [15, 17, 18, 49, 50], dark photons [51, 52] and light sterile neutrinos [53]. While searching for these particles is one of the main objectives of particle physics, their detection could be difficult in terrestrial experiments because their couplings might be too small or their masses too large. Interestingly, the temperature in the early universe was probably high enough to make the production of weakly-coupled and/or massive particles efficient. Their gravitational influence could then be detected if the energy density carried by these particles was significant. This will be the case for light relics which were in thermal equilibrium with the Standard Model at early times and subsequently decoupled from the SM degrees of freedom. In addition, even the absence of a detection would result in new insights by providing constraints on the SM couplings of these new particles. This sensitivity to extremely weakly interacting particles is a unique advantage of cosmological probes of BSM physics.

Classes of models with non-thermally produced light particles are another interesting target for BSM searches, for which cosmological surveys can uniquely contribute important information over a large range of scales. The very long wavelengths associated with the classical wave-like nature of ultra-light cold bosons mean that their main signatures can be found in astrophysical and especially cosmological environments. As for thermally produced light FIPs, a cold population of such particles arises in many SM extensions, including, for instance, the so-called axiverse in string theory, which contains many ultra-light axions [49]. There also exist several different production mechanisms, which can leave distinct observational signatures.

While cosmological probes of FIPs are a broad and growing field of study, we will focus on light thermal FIPs and briefly discuss (ultra-)light non-thermal axions at the end. (In the cosmological context, we usually refer to sub-eV particles as being light.) We will first describe in general terms the advantages and the physical origin of the constraining power of cosmology (and astrophysics) for light thermal relics. Then, we provide explicit examples of bounds on the SM couplings of these elusive particles, in particular axions. We finally give an introduction to ultra-light axions before we conclude with a summary. We note that we will mainly focus on the particle physics aspects of these searches and refer to [22, 28, 54,55,56,57,58,59,60,61,62,63,64,65] and references therein for details on the underlying cosmological probes and for more general reviews (see also the related literature on sub-MeV dark matter, such as [8, 66]).

3.3.2 The power of cosmology (and astrophysics)

The detection of new light species is difficult since their couplings to the Standard Model are constrained to be small. Given the resulting small scattering cross sections, searches in terrestrial experiments at the intensity or energy frontier of particle physics are challenging (see other contributions in these proceedings). The study of astrophysical and cosmological systems, however, provides access to high-density environments and/or the ability to follow the evolution over long time scales, which can overcome the small cross sections and allow a significant production of these extra particles. This allows us to place some of the best constraints on FIPs.

It is illustrative in this context [67] to consider the fractional change in the number densities of the particles involved in the production process, which is schematically given by the interaction rate \(\Gamma \sim n\sigma \), with number density n and thermally-averaged cross section \(\sigma \), times the interaction time \(\Delta t\):

\[\frac{\Delta n}{n} \sim \Gamma \, \Delta t \sim n \sigma \, \Delta t .\]

This highlights how small cross sections can be compensated for by high densities, e.g. \(n \sim (1\,\textrm{keV})^3\) and \((10\,\textrm{MeV})^3\) in stellar interiors and supernova explosions, and long time scales, such as the very long lifetime of stars, \(\Delta t \sim O(10^8)\,\textrm{yrs}\), or \(\Delta t \sim 10\,\textrm{s}\) for supernovae. We therefore find significant changes in the evolution of these astrophysical systems, \(\Delta n/n \gtrsim 1\), if \(\sigma \gtrsim (n \Delta t)^{-1} \sim \left( 10^{10}\,\textrm{GeV}\right) ^{\!-2}\). A similar argument can be applied to the early universe, which was dominated by radiation. The high densities of the early universe, \(n \sim T^3 \gg (1\,\textrm{MeV})^3\) before neutrino decoupling, allow light particles to have been in thermal equilibrium with the SM (and therefore efficiently produced) for time scales of \(\Delta t < 1\,\textrm{s}\). They can therefore make a significant contribution to the radiation density, and be detected in CMB and LSS observations. Based on this rough estimate, cosmological constraints should improve over astrophysical bounds for temperatures above \(10^4\,\textrm{GeV}\).
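The order-of-magnitude estimate above can be reproduced with a few lines of Python. The sketch converts the quoted densities and time scales into natural units (GeV) using \(\hbar \) and returns the scale \(\sqrt{n \Delta t}\), so that the threshold cross section is \(\sigma \sim (n\Delta t)^{-1}\). The constants, the Julian-year convention and the function names are choices made for illustration, and all O(1) factors are ignored.

```python
import math

HBAR_GEV_S = 6.582119569e-25   # reduced Planck constant in GeV*s
SECONDS_PER_YEAR = 3.15576e7    # Julian year


def seconds_to_inverse_gev(t_seconds: float) -> float:
    """Convert a time from seconds to natural units (GeV^-1)."""
    return t_seconds / HBAR_GEV_S


def threshold_scale_gev(density_scale_gev: float, delta_t_seconds: float) -> float:
    """Return sqrt(n * dt) in GeV, with n ~ (density scale)^3.

    Delta n / n ~ n * sigma * dt >~ 1 requires sigma >~ (n * dt)^-1 = scale^-2.
    """
    n = density_scale_gev**3                       # GeV^3
    dt = seconds_to_inverse_gev(delta_t_seconds)   # GeV^-1
    return math.sqrt(n * dt)


environments = {
    "stellar interior": (1e-6, 1e8 * SECONDS_PER_YEAR),  # n ~ (1 keV)^3, 10^8 yr
    "supernova": (1e-2, 10.0),                           # n ~ (10 MeV)^3, 10 s
}
for name, (scale, dt) in environments.items():
    print(f"{name}: sigma >~ ({threshold_scale_gev(scale, dt):.1e} GeV)^-2")
```

The two environments give scales of a few times \(10^9\) and \(10^{10}\,\textrm{GeV}\), i.e. \(\sigma \sim (10^{10}\,\textrm{GeV})^{-2}\) to within an order of magnitude, as stated in the text.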

Another advantage of cosmological observations is that they can provide broad constraints on phenomenological descriptions. Particle physics searches, on the other hand, can be blind to unknown or incompletely specified forms of new physics. This means that terrestrial experiments may give strong constraints on specific scenarios, while cosmological measurements are less sensitive to the details of the models and can compress large classes of BSM physics into broad categories. For example, cosmology can constrain all couplings of new particles to the Standard Model, while astrophysical systems and laboratory experiments are often only sensitive to a subset of these interactions, such as the coupling to photons. This universality of cosmological constraints is one of the reasons why the search for light thermal relics has been adopted as one of the main science targets of future cosmological surveys (see e.g. [68, 69]), such as the next-generation CMB and LSS experiments CMB-S4 [56, 70, 71] and Spec-S5 [72, 73], respectively. In the next section, we will provide a few explicit examples of the constraining power of cosmology for light thermal relics.

3.3.3 Cosmological constraints on relic axions

From both the particle physics and cosmology perspective, it is useful to theoretically describe light thermal relics within an effective field theory (EFT) framework organized according to their spin [74] and observationally search for their contribution to the radiation density in the early universe as parametrized by the effective number of relativistic species, \(N_\textrm{eff}\). We will therefore briefly review these topics before discussing bounds in the decoupling and rethermalization scenarios.

Given the universal sensitivity of cosmology, it is more efficient to study the interactions between the new species and the SM degrees of freedom within the framework of effective field theory [74], and thereby capture their main phenomenology, instead of working through BSM models one by one. Generally speaking, this means parametrizing these interactions as

\[\mathcal {L}_\textrm{int} = \frac{g}{\Lambda ^n}\, \mathcal {O}_X\, \mathcal {O}_\textrm{SM} ,\]

where \(g \sim O(1)\) is a dimensionless coupling constant, \(\Lambda \) is the associated energy scale, \(\mathcal {O}_X\) and \(\mathcal {O}_\textrm{SM}\) are operators of dark sector and SM fields of dimension \(\Delta _X\) and \(\Delta _\textrm{SM}\), respectively, and \(n=\Delta _X+\Delta _\textrm{SM}-4\). To prevent large quantum corrections to the small masses of X, we employ (approximate) symmetries which restrict the allowed couplings in (18). This naturally separates the EFT according to the spin of the new particle(s): shift symmetries for scalars, chiral and axial symmetries for spin-\(\frac{1}{2}\) particles and gauge symmetries for vector bosons. By dimensional analysis, the interaction rate scales as \(\Gamma _X \sim T^{2n+1}/\Lambda ^{2n}\), so that its ratio to the expansion rate grows with temperature T for \(n > \frac{1}{2}\), i.e. the new species is potentially in thermal equilibrium at high temperatures and decouples at a lower freeze-out temperature \(T_F\):

\[\frac{\Gamma _X}{H} \sim \frac{T^{2n-1} M_\textrm{pl}}{\Lambda ^{2n}} = \left( \frac{T}{T_F}\right) ^{2n-1}, \qquad T_F \sim \left( \frac{\Lambda ^{2n}}{M_\textrm{pl}}\right) ^{1/(2n-1)} ,\]

where we used the Hubble parameter during radiation domination in the early universe, \(H(T) \sim T^2/M_\textrm{pl}\). For \(\Lambda \ll M_\textrm{pl}\), decoupling therefore happens at \(T_F \ll \Lambda \), i.e. in the regime of validity of the EFT, provided that n is not too large. If the decoupling temperature is smaller than the reheating temperature of the universe, \(T_F < T_R\), the dark sector thermalizes with the Standard Model producing a detectable relic abundance (see below). Conversely, excluding such a relic abundance would lead to a bound on the interaction strength, \(\Lambda \gtrsim \left( T_R^{2n-1} M_\textrm{pl}\right) ^{1/(2n)}\), and severely constrain the currently available parameter space, in particular for high-scale reheating [75]. More generally, we can constrain the interaction terms (18) by measuring the relic density since it is governed by the decoupling temperature \(T_F(\Lambda )\).
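A minimal sketch of the freeze-out estimate, assuming the schematic scalings \(\Gamma _X \sim T^{2n+1}/\Lambda ^{2n}\) and \(H \sim T^2/M_\textrm{pl}\) and taking \(M_\textrm{pl} = 1.22\times 10^{19}\,\textrm{GeV}\) (an assumed numerical value; the reduced Planck mass would shift results by O(1) factors). All numerical prefactors (couplings, loop factors, \(g_*\)) are dropped, so the output only illustrates the scaling with \(T_R\) and n, not an actual published bound. The function names are hypothetical.

```python
M_PL_GEV = 1.22e19  # assumed value of the (non-reduced) Planck mass in GeV


def freeze_out_temperature(lam: float, n: float, m_pl: float = M_PL_GEV) -> float:
    """T_F from Gamma_X ~ T^(2n+1) / Lambda^(2n) = H ~ T^2 / M_pl (prefactors dropped)."""
    if n <= 0.5:
        raise ValueError("freeze-out at high temperature requires n > 1/2")
    return (lam ** (2 * n) / m_pl) ** (1.0 / (2 * n - 1))


def lambda_lower_bound(t_reheat: float, n: float, m_pl: float = M_PL_GEV) -> float:
    """Scale above which T_F > T_R, i.e. the species never thermalized after reheating."""
    if n <= 0.5:
        raise ValueError("freeze-out at high temperature requires n > 1/2")
    return (t_reheat ** (2 * n - 1) * m_pl) ** (1.0 / (2 * n))


t_r = 1e10  # GeV, example reheating temperature
for n in (1, 2):
    lam_min = lambda_lower_bound(t_r, n)
    t_f = freeze_out_temperature(lam_min, n)
    print(f"n = {n}: Lambda >~ {lam_min:.1e} GeV, T_F(Lambda_min) = {t_f:.1e} GeV")
```

For n = 1 the bound grows as \(T_R^{1/2}\); the round trip \(T_F(\Lambda _\textrm{min}) = T_R\) is a simple consistency check between the two expressions.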

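We usually infer the relic density \(\rho _X\) in terms of \(N_\textrm{eff}\), which parametrizes the radiation density \(\rho _r\) of the universe as

\[\rho _r = \rho _\gamma + \rho _\nu + \rho _X = \left[ 1 + \frac{7}{8}\left( \frac{4}{11}\right) ^{4/3} N_\textrm{eff}\right] \rho _\gamma .\]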

Here, we used the well-measured photon energy density \(\rho _\gamma \) and the neutrino energy density \(\rho _\nu \), given by the SM value of \(N_\textrm{eff}^\textrm{SM} = 3.044\) [76,77,78], which has now been theoretically calculated with an uncertainty in the fourth decimal place. The contribution to \(N_\textrm{eff}\) from any extra light relic is given by

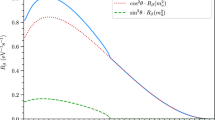

where \(g_{*,X} = 1, 7/4, 2\) is the effective number of degrees of freedom for a real scalar, Weyl fermion and massless vector boson, respectively, \(g_*(T)\) is the effective number of total relativistic degrees of freedom in thermal equilibrium at temperature T, and \(g_{*,\textrm{SM}} = 106.75\) is the maximum SM value at temperatures above the scale of electroweak symmetry breaking. We present this contribution to \(N_\textrm{eff}\) from a single thermally-decoupled species as a function of the decoupling temperature \(T_F\) and the spin of the particle in Fig. 7. Here, we assumed that there is no significant entropy production in the universe for \(T < T_F\) which would otherwise decrease \(\Delta N_\textrm{eff}\). If we additionally consider a minimal extension of the Standard Model, \(g_*(T \gg m_t) \approx g_{*,\textrm{SM}}\), with top mass \(m_t\), we get \(\Delta N_\textrm{eff} \ge 0.027 g_{*,X}\). (We refer to [67] for a detailed discussion of these assumptions and their implications.) This in particular implies that cosmological surveys which are sensitive to \(\Delta N_\textrm{eff} = 0.027\) will either detect the existence of any light particle that was ever thermalized in the history of the universe or provide strong constraints on their potential SM interactions. Interestingly, this threshold is within reach of future observations.

Contributions of a single massless particle, which decoupled from the Standard Model at a temperature \(T_F\), to the effective number of relativistic species, \(N_\textrm{eff} = N_\textrm{eff}^\textrm{SM} + \Delta N_\textrm{eff}\), with the SM expectation \(N_\textrm{eff}^\textrm{SM} = 3.044\) from neutrinos (from [28, 59, 67], to which we also refer for additional details). The purple, green and blue lines show the contributions for a real scalar, Weyl fermion and vector boson, respectively. The two vertical gray bands indicate neutrino decoupling and the QCD phase transition. The current limit on \(\Delta N_\textrm{eff}\) at 95% c.l. from Planck 2018 and BAO data [79], and the anticipated sensitivities of the Simons Observatory (SO) [80] and CMB-S4 [70, 71], as examples of upcoming and next-generation CMB experiments, are shown as dashed lines. The horizontal gray band illustrates the future sensitivity that might be achieved with a combination of cosmological surveys of the CMB and LSS, such as CMB-HD [81], Spec-S5 [73] and PUMA [82], cf. [57, 83, 84]. The values displayed on the right are the observational thresholds for particles with different spins and arbitrarily large decoupling temperatures in minimal scenarios.
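The thresholds quoted above can be checked directly. The Python sketch below evaluates the standard expressions \(\rho _r/\rho _\gamma = 1 + \frac{7}{8}(4/11)^{4/3} N_\textrm{eff}\) and \(\Delta N_\textrm{eff} = \frac{4}{7}\, g_{*,X}\, [(43/4)/g_*(T_F)]^{4/3}\) for a single species that decoupled when \(g_*(T_F)\) degrees of freedom were in equilibrium, assuming no significant entropy production after decoupling. The function names are illustrative.

```python
G_STAR_NU_DECOUPLING = 43.0 / 4.0   # g_* at neutrino decoupling: photons, e+-, 3 neutrinos
G_STAR_SM_MAX = 106.75              # maximal SM value above the electroweak scale


def radiation_over_photon(n_eff: float) -> float:
    """rho_r / rho_gamma for a given N_eff."""
    return 1.0 + (7.0 / 8.0) * (4.0 / 11.0) ** (4.0 / 3.0) * n_eff


def delta_n_eff(g_star_x: float, g_star_at_decoupling: float) -> float:
    """Delta N_eff of one species that decoupled at T_F (no later entropy injection)."""
    return (4.0 / 7.0) * g_star_x * (G_STAR_NU_DECOUPLING / g_star_at_decoupling) ** (4.0 / 3.0)


SPECIES = {"real scalar": 1.0, "Weyl fermion": 7.0 / 4.0, "vector boson": 2.0}

print(f"rho_r / rho_gamma for N_eff = 3.044: {radiation_over_photon(3.044):.3f}")
for name, g_x in SPECIES.items():
    print(f"{name}: Delta N_eff >= {delta_n_eff(g_x, G_STAR_SM_MAX):.3f}")
```

For \(g_*(T_F) = 106.75\) this prints 0.027, 0.047 and 0.054 for the three spins, the minimal contributions displayed on the right of Fig. 7.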

We can exemplify the potential sensitivity of cosmological \(N_\textrm{eff}\) measurements to constrain couplings of SM particles to BSM pseudo-Nambu-Goldstone bosons (pNGBs), which are scalar particles that arise as a consequence of the breaking of (approximate) global symmetries, such as axions (shift symmetry) [15, 17, 18, 49, 50], familons (flavor symmetry) [85,86,87,88] or majorons (neutrino masses) [89, 90]. For the dimension-5 interaction of photons and gluons with axions \(\phi \), \(\mathcal {L} \supset -\phi /(4\Lambda _{\gamma ,g}) X_{\mu \nu } \tilde{X}^{\mu \nu }\), with respective field-strength tensor \(X_{\mu \nu } = F_{\mu \nu }, G_{\mu \nu }^a\) and its dual \(\tilde{X}_{\mu \nu }\), we have (19) with \(n = 1\). Observationally excluding a contribution of \(\Delta N_\textrm{eff} = 0.027\) to the radiation density therefore implies reheating-temperature-dependent constraints on the interaction strengths of [75]

These limits would be stronger than current (astrophysical) constraints for reheating temperatures (or temperatures of similar entropy-production eras above the electroweak symmetry breaking scale) above about \(10^4\,\textrm{GeV}\) in the case of the axion-photon coupling, and generally in the case of the interaction with gluons. Similar bounds can also be inferred for couplings to SM fermions and neutrinos [75] (see also [91,92,93,94,95,96], for instance).

Another scenario occurs for effective operators with \(n < 1/2\) in (18), for which the new light particles are out of equilibrium at high temperatures and thermalize at lower temperatures, cf. (19). This is in particular the case for dimension-5 pNGB couplings to SM fermions \(\psi \), such as the coupling to the SM axial-vector current, \(\partial _\mu \phi \, \bar{\psi } \gamma ^\mu \gamma ^5 \psi \), which becomes an effective dimension-4 operator after electroweak symmetry breaking, leading to \(\Gamma _\phi \propto (m_i/\Lambda _i)^2\, T\), where \(m_i\) is the respective SM fermion mass. If these particles recouple to the SM at \(T \gtrsim m_i\), they will contribute to \(N_\textrm{eff}\) at an observable level; if recoupling would only occur at \(T \lesssim m_i\), their contribution is negligible since the fermion number density is then Boltzmann suppressed. In fact, such a population would be easier to detect, since its contribution to \(N_\textrm{eff}\) in this rethermalization scenario is larger than the equivalent freeze-out contribution.

At the same time, the absence of a detection would allow us to place direct constraints on the interaction strength between the light particles and the SM fermions: we require that the recoupling temperature is smaller than the effective decoupling temperature, so that the interaction rate is already Boltzmann suppressed when the pNGBs would rethermalize [75]. While these constraints are usually weaker than the freeze-out bounds, they do not make any assumptions about the reheating temperature (as long as \(T_R > m_i\)). As illustrated in Fig. 8, both current constraints on \(N_\textrm{eff}\) from Planck and, in particular, future measurements at the projected sensitivity of CMB-S4 set interesting constraints on the couplings of some SM fermions to axions and other pNGBs [75, 97,98,99,100,101,102,103]. At the moment, the cosmological constraints on axion-muon and axion-tau couplings are complementary to astrophysical constraints from supernova 1987A (cf. [104,105,106]) and white dwarf cooling, respectively, but future \(N_\textrm{eff}\) measurements are expected to make them competitive with, or even superior to, these bounds [97]. Upcoming CMB and LSS surveys will also be sensitive to the couplings of axions to heavy quarks, even though the derivation of precise constraints depends on the strong-coupling regime of quantum chromodynamics. More generally, the environment, the required modeling and the physical understanding of the early universe are quite different from those of stellar interiors, i.e. cosmological probes can be an important complementary test of these axion-matter couplings (see e.g. [107]).

3.3.4 Cosmological constraints on ultra-light axions

So far, we have considered a potential thermal population of light species, but there are also several ways of non-thermally producing light BSM particles, which may also constitute (part of) the dark matter. One such example is (ultra-)light axions, which have an interesting phenomenology and distinct observational signatures, with cosmological observations setting the current bounds in a sizable part of parameter space (see [22, 23, 60, 63, 108, 109] for recent reviews).

Non-thermally produced bosons with masses in the range \(10^{-33}\,\textrm{eV} \lesssim m_\phi \lesssim 10^{-2}\,\textrm{eV}\) are particularly interesting since they may contribute to the observed dark matter and dark energy densities. While the Hubble parameter is larger than their mass, \(H \gtrsim m_\phi \), the field is frozen by Hubble friction and these particles behave like dark energy with an equation of state \(w = -1\). Once H drops below \(m_\phi \) at later times, the axion field oscillates rapidly, behaving like (dark) matter with \(w = 0\) on average. This implies that, at any given time in the history of the universe, these FIPs contribute either to dark matter or to dark energy. An axion with \(m_\phi \lesssim 10^{-33}\,\textrm{eV}\) cannot be distinguished from a cosmological constant today since its Compton wavelength exceeds the cosmic horizon. If its mass lies below or above about \(10^{-27}\,\textrm{eV}\), it behaves like early dark energy or dark matter, respectively. Through this behavior, their wave-like nature with macroscopic de Broglie wavelengths and their broader phenomenology, these particles also have the potential to address some open questions in astrophysics and cosmology.
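To see where the lower end of this mass range comes from, one can compare \(m_\phi \) with today's Hubble rate expressed in eV. The Python sketch below assumes \(H_0 = 67.4\,\textrm{km}\,\textrm{s}^{-1}\,\textrm{Mpc}^{-1}\) (a Planck-like value chosen for illustration) and applies the rule of thumb that the field is frozen (dark-energy-like) while \(H \gtrsim m_\phi \) and oscillates (matter-like) once \(H \lesssim m_\phi \). The helper names are hypothetical.

```python
HBAR_EV_S = 6.582119569e-16          # reduced Planck constant in eV*s
KM_PER_MPC = 3.0856775814913673e19   # kilometres per megaparsec


def hubble_rate_ev(h0_km_s_mpc: float) -> float:
    """Convert H0 from km/s/Mpc to an energy in eV (natural units)."""
    h0_per_second = h0_km_s_mpc / KM_PER_MPC
    return h0_per_second * HBAR_EV_S


def field_regime(mass_ev: float, hubble_ev: float) -> str:
    """Rule of thumb: Hubble friction freezes the field while H > m."""
    if hubble_ev > mass_ev:
        return "frozen (dark-energy-like, w = -1)"
    return "oscillating (matter-like, w = 0 on average)"


h0 = hubble_rate_ev(67.4)
print(f"H0 ~ {h0:.2e} eV")
for m in (1e-34, 1e-22):
    print(f"m = {m:.0e} eV today: {field_regime(m, h0)}")
```

Today's Hubble rate comes out at about \(1.4\times 10^{-33}\,\textrm{eV}\), which is why axions lighter than roughly \(10^{-33}\,\textrm{eV}\) still act like a cosmological constant.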

Ultra-light axions have various observable implications that affect several cosmological probes (and other astrophysical measurements and terrestrial experiments). For instance, the slowly rolling axion field in the dark energy regime in particular impacts the largest angular scales and the wave-like nature of these FIPs suppresses density fluctuations on smaller scales below the (comoving) Jeans scale, \(\lambda _J = 0.1\,\textrm{Mpc}\, (m_\phi /10^{-22}\,\textrm{eV})^{-1/2}\, (1+z)^{1/4}\). These are signals that are especially imprinted in both the primary and secondary CMB anisotropies, and in LSS observations of galaxy clustering, weak gravitational lensing and the Lyman-\(\alpha \) forest. Current CMB and LSS surveys constrain the ultra-light axion energy density to \(\Omega _\phi \lesssim 0.01\) in the mass range \(10^{-32}\,\textrm{eV} \lesssim m_\phi \lesssim 10^{-26}\,\textrm{eV}\), with Lyman-\(\alpha \) observations adding additional constraints for \(10^{-23}\,\textrm{eV} \lesssim m_\phi \lesssim 10^{-20}\,\textrm{eV}\). Future surveys, especially CMB-S4, are forecasted to close this gap and improve the current bounds by one to two orders of magnitude (see Fig. 4 of [63] for a recent compilation of current and future CMB and LSS constraints [110,111,112,113,114,115,116,117,118,119,120]). An additional powerful signature of ultra-light axions is a spectrum of isocurvature fluctuations, which depends on whether the underlying U(1) symmetry of these FIPs is broken before or after inflation and may provide further insights together with CMB B modes in the former case (see e.g. [121,122,123,124,125]). Somewhat more model-dependent constraints may also be inferred through CMB birefringence observations (e.g. [126,127,128,129,130]), for instance, if these BSM particles couple to photons.
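The Jeans-scale formula quoted above is easy to evaluate. The short Python sketch below simply encodes it (the function name is ours) to make the scaling explicit: lowering the mass by two orders of magnitude raises \(\lambda _J\) by one order of magnitude, while the redshift dependence is weak.

```python
def jeans_scale_mpc(mass_ev: float, redshift: float = 0.0) -> float:
    """Comoving Jeans scale: 0.1 Mpc * (m / 1e-22 eV)^(-1/2) * (1 + z)^(1/4)."""
    return 0.1 * (mass_ev / 1e-22) ** -0.5 * (1.0 + redshift) ** 0.25


for m in (1e-26, 1e-24, 1e-22, 1e-20):
    print(f"m = {m:.0e} eV: lambda_J(z = 0) = {jeans_scale_mpc(m):.3g} Mpc")
```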

Contribution of a rethermalized light axion to \(\Delta N_\textrm{eff}\) as a function of its coupling \(\Lambda _i\) to different SM fermions \(\psi _i\) for the dimension-5 derivative coupling to the SM axial-vector current \(-\Lambda _i^{-1} \partial _\mu \phi \, \bar{\psi }_i \gamma ^\mu \gamma ^5 \psi _i\) (from [97], to which we also refer for a detailed discussion). The displayed values for the bottom and charm couplings are conservative and may be (significantly) larger. The horizontal dashed lines and gray band are the same as in Fig. 7. Given the displayed results, current and future constraints on \(N_\textrm{eff}\) can be directly translated into equivalent bounds on \(\Lambda _i\).

3.3.5 Summary

The wealth and precision of current CMB and LSS data allow us not only to probe the universe on large scales but also to search for BSM particles. These cosmological observations are particularly well suited to study light FIPs, such as axions, which arise in many well-motivated extensions of the Standard Model. Current surveys already provide interesting bounds on many scenarios through broad, model-independent data analyses, which in particular capture the gravitational influence of new species on the evolution of the universe. While the main parameters, the effective number of relativistic species \(N_\textrm{eff}\) and the axion energy density \(\Omega _\phi \) for a thermal and a non-thermal population of such particles, respectively, parametrize their energy density, these cosmological measurements can also shed light on additional properties, such as their (non-)free-streaming nature and, therefore, their self-interactions or dark couplings (cf. [55, 131,132,133,134,135,136,137,138,139]). Upcoming and future CMB and LSS surveys are designed to improve current bounds on \(N_\textrm{eff}\) and \(\Omega _\phi \) by at least one order of magnitude, which will translate into many orders of magnitude in coupling strength and cover large parts of currently viable parameter space. Cosmological probes of light FIPs therefore have a bright future, both on their own and through their combination and complementarity with astrophysical and terrestrial experiments.

3.4 Ultra-light FIPs: what we know from stars/supernovae/neutron stars/white dwarfs/etc – P. Carenza

Author: Pierluca Carenza, <pierluca.carenza@fysik.su.se>

Feebly interacting particles (FIPs) play a major role in a plethora of astrophysical phenomena. An extremely important phenomenological aspect is the impact that FIPs have on stellar evolution. Indeed, exotic particles with feeble interactions with ordinary matter, once produced in stars, escape and drain energy from the stellar core. Thus, stars are efficient FIP factories given their extreme density and temperature conditions.

Axions and axion-like particles (ALPs), collectively called ‘axions’, are among the most studied FIPs because of their intriguing connection to the strong CP problem and their possible role in explaining the nature of Dark Matter (see, e.g., Refs. [23, 140,141,142,143] for recent reviews). A very generic property of these particles is a coupling to photons through the following interaction Lagrangian

\[\mathcal {L}_{a\gamma } = -\frac{1}{4}\, g_{a\gamma }\, a\, F_{\mu \nu } \tilde{F}^{\mu \nu } ,\]

which enables axion production in external electromagnetic fields and, for massive axions, radiative decay. At low energies, another phenomenologically relevant coupling is the one to fermions

where \(\Psi \) is any fermion field (electrons, nucleons, etc.) with mass \(m_{f}\). This interaction is important in environments with a high density of electrons or nucleons, such as stellar interiors, where axions can be produced by various processes involving fermions; their consequences are discussed in detail below.

Neutrinos can also be regarded as FIPs, and their properties are efficiently probed by astrophysics. In particular, their electromagnetic properties, such as the magnetic moment, are of great interest to unveil their nature. A neutrino with a non-vanishing magnetic moment interacts with photons through the following Lagrangian

where the indices i, j indicate the neutrino flavors and \(F^{\mu \nu }\) is the electromagnetic field-strength tensor. This interaction makes it possible for right-handed (sterile) neutrinos to be produced by oscillations of left-handed neutrinos in an external electromagnetic field.

The last FIP we consider is an exotic gauge boson, usually called hidden photon or dark photon (DP). In general, DPs are kinetically mixed with ordinary photons by means of the following interaction

where \(\epsilon \) is the mixing angle and \(X_{\mu \nu }\) is the field tensor associated with DPs. This vertex allows DPs to convert into photons independently of external fields, unlike axions.

In the following we explore the phenomenology associated with FIPs produced in stars and their consequences on the stellar evolution.

3.4.1 Stellar bounds on FIPs

Stellar astrophysics is a well-developed branch of astrophysics that, while far from being a closed subject, provides an accurate and consistent picture of stellar evolution, with detailed information about the stellar interior. The properties of stars are strongly affected by the microphysics of their constituents. Therefore, stellar evolution is sensitive to particle physics, and the extreme conditions reigning in stellar interiors make stars ideal laboratories for particle physics. Every evolutionary stage has unique properties that can be used to test various FIPs. Stars are typically grouped according to their observable properties, such as luminosity and surface temperature. In the following we identify different types of stars and discuss how they can be used to probe FIPs. Details on the global analyses of stellar bounds on FIPs, especially axions, can be found in [144,145,146,147,148,149,150]. Here we provide only a brief summary with updated results.

Sun The Sun is the most studied and best-known star. Its interior is composed of ionized hydrogen, which is converted into helium to produce energy and counterbalance gravitational collapse. The detailed internal structure is revealed by helioseismology, the study of pressure waves propagating to the solar surface. Helioseismology allows one to reconstruct the sound-speed profile in the solar interior, which turns out to be a sensitive probe of density and temperature inside the star.

Axions coupled to photons through Eq. (23) are produced in the Sun via Primakoff conversion of thermal photons in the electrostatic field of ions, \(\gamma +Ze\rightarrow a+Ze\). The constant energy flux carried away from the Sun by axions during its evolution would change its equilibrium structure compared to the case without axions. This imprint would affect the sound-speed profile inside the Sun and also the surface helium abundance [151].

Solar neutrinos are precious messengers of the innermost structure of the Sun. The flux of neutrinos produced in nuclear reactions is extremely sensitive to the solar temperature, since the production rates have a steep temperature dependence. A sizable energy loss through FIP production might result in a higher core temperature and higher neutrino fluxes. Solar neutrino data (for the \(\,^{8}\textrm{B}\) neutrino flux) collected by the Sudbury Neutrino Observatory (SNO) were used to set an upper limit on exotic losses of \(L_{x}\lesssim 0.1 L_{\odot }\), where \(L_{\odot }=3.84\times 10^{33}~\textrm{erg}\,\textrm{s}^{-1}\) is the solar photon luminosity [152]. This probe is more sensitive to exotic losses than helioseismology, since \(L_{x}\lesssim 0.2 L_{\odot }\) is the constraint obtained from helioseismological studies [151].

A joint analysis of solar neutrinos and helioseismology leads to a constraint on the axion-photon coupling of \(g_{a\gamma }<4.1\times 10^{-10}~{\textrm{GeV}}^{-1}\) at \(99\%\) CL for \(m_{a}\lesssim 1~{\textrm{keV}}\) [153]. With a similar approach it is possible to constrain DPs, which are produced by the conversion of thermal photons. Specifically, in a plasma there are two photon modes (transverse and longitudinal) with different dispersion relations. Both modes contribute to DP production, especially under resonant conditions, when the DP mass (for resonant conversion of transverse modes) or its energy (for resonant conversion of longitudinal modes) matches the plasma frequency [154, 155]. Analyses of solar properties constrain the DP mixing and mass to \(\epsilon \, m_{\chi } < 1.8 \times 10^{-12}~{\textrm{eV}}\) at \(99\%\) CL [153]. Analogously, electromagnetic properties of neutrinos, such as a millicharge or a large magnetic moment, are also constrained by solar physics [156] (see also Ref. [157] for other FIP models).

So far the discussion has focused on how FIPs affect solar observables indirectly. However, the Sun is the closest star and potentially the brightest source of sub-keV FIPs, so FIP properties can also be constrained by direct-detection experiments pointed at the Sun. For example, light axions (\(m_{a}\lesssim {\textrm{keV}}\)) coupled to photons have a flux at Earth of \(\phi _{a}\simeq (g_{a\gamma }/10^{-10}~\textrm{GeV}^{-1})^{2}\,4\times 10^{11}~\textrm{cm}^{-2}\textrm{s}^{-1}\). This large flux could be detected through conversion of axions into X-rays in intense magnetic fields: this is the idea behind the helioscope design, of which the CERN Axion Solar Telescope (CAST) is the most advanced realization to date [158]. CAST has set the most stringent experimental constraint on the axion-photon coupling over a wide mass range, \(g_{a\gamma }<0.66\times 10^{-10}~\textrm{GeV}^{-1}\) at \(95\%\) CL for \(m_{a}\lesssim 20~\textrm{meV}\) [158, 159]. The planned International Axion Observatory (IAXO), a successor to CAST, is expected to probe photon couplings down to \(g_{a\gamma }\sim 10^{-12}~\textrm{GeV}^{-1}\) [160, 161]. Its first stage, BabyIAXO, is already expected to probe unexplored parameter space about a factor of 3 below the CAST bound [162].
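The flux formula above can be evaluated directly. The Python sketch below does so at the CAST bound and also estimates how much weaker the helioscope signal becomes at a coupling three times smaller. The second step assumes the standard helioscope scaling in which the detected photon rate goes as \(g_{a\gamma }^{4}\) (flux \(\propto g_{a\gamma }^{2}\) times conversion probability in the magnet \(\propto g_{a\gamma }^{2}\)); this scaling is our addition and is not stated in the text. Function names are illustrative.

```python
# Normalization of the solar Primakoff flux quoted in the text (cm^-2 s^-1),
# valid for light axions (m_a below ~keV).
FLUX_NORM = 4e11
G_REF = 1e-10  # reference coupling, GeV^-1


def solar_axion_flux(g_agamma: float) -> float:
    """Axion flux at Earth in cm^-2 s^-1 for a coupling g_agamma in GeV^-1."""
    return (g_agamma / G_REF) ** 2 * FLUX_NORM


def relative_signal(g_agamma: float, g_ref: float) -> float:
    """Helioscope photon-count ratio, assuming counts scale as g_agamma**4."""
    return (g_agamma / g_ref) ** 4


g_cast = 0.66e-10        # CAST bound, GeV^-1
g_baby = g_cast / 3.0    # "about a factor of 3 below" CAST, as quoted for BabyIAXO

print(f"flux at the CAST bound: {solar_axion_flux(g_cast):.2e} cm^-2 s^-1")
print(f"signal at g_baby relative to g_cast: {relative_signal(g_baby, g_cast):.4f}")
```

Under this assumption, a coupling three times smaller yields a signal weaker by a factor of 81, which gives a sense of the improvement in magnet and detector performance required.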

The remarkable sensitivity of these searches also allows other FIP properties to be probed. For example, the axion-electron coupling in Eq. (24) opens several axion production channels in the Sun: axion-Compton scattering \(\gamma e^{-}\rightarrow a e^{-}\), electron Bremsstrahlung on electrons or ions \(e^{-}+Ze\rightarrow a+e^{-}+Ze\), atomic axio-deexcitation \(I^{*}\rightarrow I\,a\) and axio-recombination \(e^{-}+I\rightarrow a+I^{-}\), where \(I\) represents any atomic species [163]. Bremsstrahlung is the main component of the solar axion flux at low energies, with a peak at \(\sim 1~\textrm{keV}\). Above \(\sim 5~\textrm{keV}\), axion-Compton scattering gives the dominant contribution, while atomic processes introduce characteristic spectral lines across the whole energy range. Constraints on the solar structure set a bound \(g_{ae}\lesssim 2.3\times 10^{-11}\) [152, 163], which is weaker than other stellar constraints. However, CAST can probe axions coupled both to electrons, which drive the production, and to photons, which enable the detection. The CAST bound is \(g_{a\gamma }g_{ae}<8.1\times 10^{-23}~\textrm{GeV}^{-1}\) at \(95\%\) CL for \(m_{a}\lesssim 10~\textrm{meV}\) [164]. Since IAXO will probe a well-motivated region of the axion parameter space, several studies have examined how, in case of a discovery, IAXO could distinguish between axion models [165], determine the axion mass [166] and probe solar properties [167] (see Ref. [168] for a comprehensive study).
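Since CAST constrains the product of the two couplings, fixing one of them turns the product bound into a bound on the other. The minimal Python sketch below performs this inversion; the example value of \(g_{ae}\) is simply the solar-structure bound quoted above, chosen for illustration, and the function name is our own.

```python
# CAST bound on the coupling product, in GeV^-1 (95% CL, m_a below ~10 meV).
PRODUCT_BOUND = 8.1e-23


def max_g_agamma(g_ae: float) -> float:
    """Largest g_agamma (GeV^-1) allowed by the product bound for a given g_ae."""
    if g_ae <= 0:
        raise ValueError("g_ae must be positive")
    return PRODUCT_BOUND / g_ae


# Example: an axion saturating the solar-structure bound g_ae ~ 2.3e-11.
print(f"g_agamma < {max_g_agamma(2.3e-11):.2e} GeV^-1")
```

Smaller values of \(g_{ae}\) relax the implied limit on \(g_{a\gamma }\) in inverse proportion.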

Solar axion observations can also probe the axion-nucleon coupling, a fundamental property of the most motivated axion models. The idea of axions produced in nuclear de-excitation processes is an old one [169, 170], and CAST data were already used to constrain axions coupled to both photons and nucleons [171, 172]. An updated analysis of the sensitivity of next-generation helioscopes was recently presented in Ref. [173]. In addition, axions produced in the \(p + d \rightarrow {}^{3}\textrm{He}+ a\,(5.49~\textrm{MeV})\) reaction have been probed, through their photon and electron interactions, by experiments designed for neutrinos such as Borexino [174], and the prospects for the future Jiangmen Underground Neutrino Observatory (JUNO) are promising [175].

This discussion illustrates why the Sun is an excellent axion source. However, not all the FIPs produced in the Sun escape its gravitational field: a small fraction is gravitationally trapped around the star, forming a basin [176]. The local density of non-relativistic FIPs is therefore expected to be enhanced, improving the prospects for these searches.

Red giant stars A star like the Sun, which burns hydrogen in its core, is called a main-sequence star. Most of the stars in the Universe are in this phase. When the hydrogen in the core is exhausted, the nuclear energy source that counteracts gravitational collapse disappears, and stars with a mass \(M\lesssim 2M_{\odot }\) develop an inert helium core surrounded by a hydrogen-burning shell. In this phase the outer layers of the star expand, and the stellar luminosity increases even though the surface temperature drops. This is the red giant (RG) phase. The helium produced in the hydrogen shell keeps settling onto the core, which becomes very dense, with degenerate electrons. To counteract gravitational collapse, the degenerate core contracts, increasing its degeneracy pressure. In this process the gravitational attraction at the edge of the core, where the hydrogen-burning shell lies, increases and heats up this layer. The luminosity grows with the temperature of the hydrogen shell, and this process is mostly determined by the core mass. Along with the hydrogen shell, the core temperature also increases until it suddenly becomes high enough to ignite helium. Given the steep temperature dependence of the helium-burning rate, ignition is very fast, hence the name helium flash. The properties of the RG at the helium flash determine the location of the so-called tip of the red giant branch (RGB tip) in the color-magnitude diagram.

The RGB tip is extremely sensitive to possible exotic losses: an additional loss delays helium ignition, allowing the core to grow further and thus making the star at the RGB tip brighter. This observable provides a very effective way to constrain the axion-electron coupling, since axions are produced very efficiently in RGs via Bremsstrahlung. The latest analyses constrain \(g_{ae}\lesssim 1.48\times 10^{-13}\) at \(95\%\) CL for light (sub-keV) axions [177, 178]. The standard energy loss in an RG core is dominated by plasmon decay into neutrinos, \(\gamma ^{*}\rightarrow \nu \bar{\nu }\), and this rate would be enhanced by a large neutrino magnetic moment (NMM). The constraint derived in Ref. [178] is \(\mu <1.2\times 10^{-12}~\mu _{\textrm{B}}\), where \(\mu _{\textrm{B}}\) is the Bohr magneton. These are among the most stringent bounds on these FIP properties, showing the power of astrophysical studies compared to laboratory experiments. As in the Sun, DPs can also be copiously produced in RGs through photon-DP oscillations, in the mass range \(3~\textrm{keV}\lesssim m_{\chi }\lesssim 30~\textrm{keV}\). A simple criterion, requiring the DP emissivity in the core to be less than \(10~\textrm{erg}\,\textrm{g}^{-1}\,\textrm{s}^{-1}\), excludes \(\epsilon \gtrsim 10^{-15}\) [32].

Horizontal branch stars As explained above, a relatively low-mass star ignites helium in its core after the RG phase. The resulting stable configuration, with a helium-burning core surrounded by a hydrogen-burning shell, is called a horizontal branch (HB) star. The high temperature reached during this stage (\(\sim 10\) keV) makes the electrons in the core non-degenerate, so HB stars efficiently produce axions coupled to photons via the Primakoff process. The energy carried away by axions shortens the HB phase, whereas the RG phase is essentially unaffected by axion production, since the high electron degeneracy in the RG core suppresses Primakoff conversion [179]. The duration of these phases can be measured by counting stars in each phase: the longer the duration, the more stars are found in it. The most relevant observable in this respect is the R parameter, defined as the ratio of the number of stars in the HB and RG phases, \(R = N_{\textrm{HB}}/N_{\textrm{RGB}}\). This quantity is typically measured by counting stars in globular clusters (GCs), gravitationally bound systems of coeval stars differing only in their initial mass. Ref. [180] obtained \(R=1.39\pm 0.03\) from the analysis of 39 GCs. Exotic losses would reduce this parameter, since the HB phase would be shortened relative to the RG one. Specifically, in the absence of exotic particles the HB phase lasts \(\tau _{\textrm{HB}}\simeq 88.4\) Myr, and the uncertainty on R translates into a maximal allowed reduction of \(\sim 15\%\) at \(2\sigma \).
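The arithmetic behind the last statement can be made explicit. Reading the quoted \(\sim 15\%\) as the maximal fractional reduction of \(\tau _{\textrm{HB}}\) itself (our interpretation), the sketch below returns the shortest HB lifetime still compatible with the observed R parameter. The helper name is hypothetical, and the sketch does not model how a given FIP coupling shortens the HB phase.

```python
TAU_HB_STANDARD_MYR = 88.4  # HB lifetime without exotic losses, from the text


def min_allowed_hb_lifetime(max_fractional_reduction: float) -> float:
    """Shortest HB lifetime (Myr) compatible with a maximal fractional reduction."""
    if not 0.0 <= max_fractional_reduction < 1.0:
        raise ValueError("fractional reduction must lie in [0, 1)")
    return TAU_HB_STANDARD_MYR * (1.0 - max_fractional_reduction)


# Interpreting the quoted ~15% (2 sigma) as a cut on tau_HB itself (our reading).
tau_min = min_allowed_hb_lifetime(0.15)
print(f"tau_HB must stay above ~{tau_min:.1f} Myr")
```

Under this reading, any exotic channel that shortens the HB phase below roughly 75 Myr would be excluded.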

Applying this criterion to axions coupled to photons yields \(g_{a\gamma }<0.65\times 10^{-10}~\textrm{GeV}^{-1}\) at \(95\%\) CL for massless axions [180, 181]. This constraint was later generalized to masses up to \(m_{a}\sim 300~\textrm{keV}\) [182] and refined by carefully accounting for radiative axion decays as a new energy-transfer channel [183]. The HB constraint is shown in Fig. 9 and compared to other bounds on heavy axions.

DP emission also shortens the HB phase, significantly so if the DP luminosity exceeds \(10\%\) of the standard HB luminosity (assumed to be \(60L_{\odot }\)). This criterion leads to a bound reaching down to \(\epsilon \lesssim 10^{-15}\) for \(m_{\chi }\sim 2.6~\textrm{keV}\) [32].
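The \(10\%\) criterion corresponds to a fixed DP luminosity threshold. The sketch below makes it explicit and converts it to erg per second with the solar luminosity quoted earlier in this section. The helper name is our own, and the sketch does not reproduce the mass-dependent DP emission rate behind the bound of Ref. [32].

```python
L_SUN_ERG_PER_S = 3.84e33  # solar photon luminosity quoted earlier in the text
L_HB_LSUN = 60.0           # standard HB luminosity assumed in the text, in L_sun
MAX_FRACTION = 0.10        # DP luminosity may not exceed 10% of L_HB


def dp_luminosity_excluded(l_dp_lsun: float) -> bool:
    """True if a DP luminosity (in L_sun) violates the 10% HB criterion."""
    return l_dp_lsun > MAX_FRACTION * L_HB_LSUN


threshold = MAX_FRACTION * L_HB_LSUN
print(f"threshold: {threshold:.1f} L_sun = {threshold * L_SUN_ERG_PER_S:.2e} erg/s")
```

The threshold is \(6L_{\odot }\), i.e. about \(2.3\times 10^{34}~\textrm{erg}\,\textrm{s}^{-1}\).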

Fig. 9 Bounds on the axion-photon coupling \(g_{a\gamma }\) for massive axions. The red region represents the HB bound [182, 183]. The SN 1987A bound (green) [184] and the experimental limits from Refs. [185, 186] are also shown. The light green bound refers to the constraint obtained in Ref. [187]. The orange constraint is obtained by applying the energy-loss criterion to low-energy SNe [188].

Eventually, an HB star burns all the helium in its core and is left with an inert carbon-oxygen core surrounded by a helium-burning shell. A star in this phase is on the asymptotic giant branch (AGB). This phase can also be used to test FIPs, since these stars are very bright and their properties are well known. In addition to revealing exotic energy losses, this phase offers a snapshot of nucleosynthesis and of its possible interplay with FIPs. The case of axions was studied in this context in Ref. [189], which concluded that axion energy losses would lower the mass of the remnant core, a white dwarf (WD), and modify the temperature of the helium-burning shell, thereby affecting the chemical composition of the star.

White dwarfs A WD is the final evolutionary stage of stars with a mass \(\lesssim 8\,M_{\odot }\). The chemical composition of a WD depends on its mass: WDs lighter than \(\sim 0.4M_{\odot }\) are composed of helium; up to \(\sim 1.05M_{\odot }\), carbon and oxygen accumulate in the core; more massive WDs are made of oxygen and neon. In a WD, gravitational collapse is balanced by the pressure of degenerate electrons, whose high thermal conductivity makes the core isothermal. The long evolution of a WD, on Gyr timescales, is essentially a cooling process. A young WD cools mostly through neutrinos produced by plasmon decay in the core, while older WDs lose energy mainly via surface photon emission. FIPs would change this picture by accelerating the cooling.
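The mass ranges above amount to a simple lookup. The Python sketch below encodes them with sharp cuts at the quoted boundaries; this is a simplification, since the text gives these boundaries only approximately (\(\sim 0.4\) and \(\sim 1.05\,M_{\odot }\)). The function name is hypothetical.

```python
def wd_composition(mass_msun: float) -> str:
    """Rough WD composition from its mass (in solar masses).

    The sharp cuts at 0.4 and 1.05 M_sun stand in for the approximate
    boundaries quoted in the text; they are for illustration only.
    """
    if mass_msun <= 0:
        raise ValueError("mass must be positive")
    if mass_msun < 0.4:
        return "He"
    if mass_msun <= 1.05:
        return "C/O"
    return "O/Ne"


for m in (0.3, 0.6, 1.2):
    print(f"{m:.2f} M_sun -> {wd_composition(m)}")
```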

Axions coupled to electrons can be produced in WDs via electron Bremsstrahlung [190] (see Ref. [191] for an extensive discussion of this process). For a sufficiently large axion-electron coupling, axion emission might compete with standard losses soon after neutrino emission ceases to dominate. The cooling rate of a WD can be measured with asteroseismology techniques, but monitoring the evolution of a single WD requires an accurate understanding of the star's internal structure and composition. Alternatively, global properties of WD populations can be reconstructed via the WD luminosity function (WDLF), which gives the number density of WDs as a function of their luminosity. However, the WDLF is sensitive to properties of the stellar population such as the initial mass function and the star formation rate. This second method was used to set a constraint \(g_{ae}\lesssim 1.4\times 10^{-13}\) on the axion-electron coupling [192]. As in the RG case, a large NMM would enhance neutrino production via plasmon decay; observations exclude \(\mu \gtrsim 5\times 10^{-12}\,\mu _{\textrm{B}}\) [193].

Another important observable concerns a special class of WDs: pulsating WDs. The secular drift of the pulsation period of a WD is governed by a competition between cooling, which increases the core degeneracy, and gravitational contraction. Pulsations are especially relevant for young WDs (see Ref. [194] for a comprehensive review). Since the period drift is tied to the cooling rate, measurements of the rate of change of the pulsation period can probe FIPs [195, 196]. Analyses of this observable have revealed a hint of a DFSZ axion with \(g_{ae}\sim 5\times 10^{-13}\) [197] and yielded a constraint \(g_{ae}\lesssim 7\times 10^{-13}\) [198]. Regarding non-standard neutrino properties, a large NMM can be similarly constrained, giving \(\mu \lesssim 5\times 10^{-12}\,\mu _{\textrm{B}}\) [199].
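For orientation, the upper bounds on \(g_{ae}\) quoted so far in this section can be collected and ranked. This is only a bookkeeping sketch: the bounds come from different observables, confidence levels and modelling assumptions, so ranking them by value alone is a simplification. The hint from Ref. [197] is a preferred value rather than a bound and is left out. Names are illustrative.

```python
# Upper bounds on g_ae (dimensionless) quoted in this section, keyed by observable.
G_AE_BOUNDS = {
    "solar structure [152, 163]": 2.3e-11,
    "RGB tip [177, 178]": 1.48e-13,
    "WD luminosity function [192]": 1.4e-13,
    "WD pulsations [198]": 7e-13,
}


def ranked_bounds(bounds: dict[str, float]) -> list[tuple[str, float]]:
    """Return (label, value) pairs sorted from the most to the least stringent bound."""
    return sorted(bounds.items(), key=lambda item: item[1])


for label, value in ranked_bounds(G_AE_BOUNDS):
    print(f"g_ae < {value:.2e}  ({label})")
```

The WDLF and RGB-tip bounds lie within about \(6\%\) of each other, while the solar-structure bound is two orders of magnitude weaker.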

Recently, it was pointed out that the WD initial-final mass relation (IFMR), which maps the initial mass of a main-sequence star to the mass of the WD into which it evolves, is sensitive to exotic physics [200]. This is a novel approach to constraining FIPs with WDs. When applied to axions, it leads to a constraint competitive with the HB one, especially for heavy (\(m_{a}\sim 300\) keV) axions [200].

Bounds from massive stars The use of massive stars to constrain FIPs has been considerably more limited. In recent years, however, some works have attempted to extract information on FIP-matter couplings from observations of these stars. Ref. [201] showed that even a relatively small neutrino magnetic moment, \((2-4) \times 10^{-11} \mu _B\) (below the experimental bound but above the WD and RG constraints), can cause observable changes in the evolution of a massive (\(10-20M_{\odot }\)) star. Specifically, it would shift the threshold masses for producing core-collapse supernovae and oxygen-neon-magnesium white dwarfs, and lead to a new type of supernova in which a partial carbon-oxygen core explodes within a massive star.