Abstract

Measurements of Higgs boson production, where the Higgs boson decays into a pair of \(\uptau \) leptons, are presented, using a sample of proton-proton collisions collected with the CMS experiment at a center-of-mass energy of \(\sqrt{s}=13\,\text {TeV} \), corresponding to an integrated luminosity of 138\(\,\text {fb}^{-1}\). Three analyses are presented. Two target Higgs boson production via gluon fusion and vector boson fusion: a neural-network-based analysis and an analysis based on an event categorization optimized on the ratio of signal over background events. These are complemented by an analysis targeting vector boson associated Higgs boson production. Results are presented in the form of signal strengths relative to the standard model predictions and products of cross sections and branching fraction to \(\uptau \) leptons, in up to 16 different kinematic regions. For the simultaneous measurements of the neural-network-based analysis and the analysis targeting vector boson associated Higgs boson production, signal strengths are found to be \(0.82\pm 0.11\) for inclusive Higgs boson production, \(0.67\pm 0.19\) (\(0.81\pm 0.17\)) for the production mainly via gluon fusion (vector boson fusion), and \(1.79\pm 0.45\) for vector boson associated Higgs boson production.

1 Introduction

In the standard model (SM) of particle physics [1,2,3], the masses of the W and Z bosons are obtained through their interaction with a fundamental field that enters the theory via the Brout–Englert–Higgs mechanism [4,5,6,7,8,9], in a process known as electroweak symmetry breaking. The Higgs boson (H) is the quantized manifestation of this field. A particle compatible with H was observed at the CERN LHC by the ATLAS and CMS experiments in the \(\upgamma \upgamma \), \({\textrm{ZZ}}\), and \({\textrm{WW}}\) final states using data collected in 2011–2012 at center-of-mass energies of \(\sqrt{s}=7\) and \(8\,\text {TeV} \) [10,11,12]. The properties of the new particle, including its couplings, spin, and CP eigenstate, are so far consistent with those predicted by the SM [14,15,16,17,18,19,20,21,22,23,24,25] for a Higgs boson with a mass of \(125.35\pm 0.14 \,\text {GeV}\) [13].

In the SM, the mass generation of fermions is introduced in the form of Yukawa couplings to the Brout–Englert–Higgs field. Extensions of the SM, like supersymmetry [26, 27], predict deviations of the H couplings, particularly to down-type fermions, such as the \(\uptau \) lepton or b quark, which further increases interest in the \({\textrm{H}} \rightarrow \uptau \uptau \) decay [28, 29].

The \({\textrm{H}} \rightarrow \uptau \uptau \) decay, which in the SM and its extensions offers a much larger branching fraction than \({\textrm{H}} \rightarrow \upmu \upmu \) and a reduced background compared with \({\textrm{H}} \rightarrow {\textrm{bb}}\), is the most promising channel to study H decays to fermions. Accordingly, the first evidence for the H coupling to (down-type) fermions was found in the \({\textrm{H}} \rightarrow \uptau \uptau \) decay channel using data collected at \(\sqrt{s}=7\) and \(8\,\text {TeV} \) [30,31,32]. A combination of the measurements performed by the ATLAS and CMS experiments at the same \(\sqrt{s}\) led to the first measurement of the H coupling to the \(\uptau \) lepton with a statistical significance of more than 5 standard deviations (s.d.) [15]. The first observation of \({\textrm{H}} \rightarrow \uptau \uptau \) decays with a single experiment was achieved by the CMS experiment by adding data collected at \(13\,\text {TeV} \) in 2016 to the data collected at 7 and \(8\,\text {TeV} \) [33].

In recent years, H production rates have been investigated in the framework of the simplified template cross section (STXS) scheme, introduced by the LHC Higgs Working Group [34]. This scheme defines a set of kinematic and topological phase space regions, referred to as STXS bins, for differential measurements. The STXS scheme supports the investigation of each production mode individually. It facilitates the combination of measurements across different H decay channels and across experiments. The STXS bins have been chosen to reduce the dependence on any underlying theoretical models embodied in the measurements. They have been defined in stages, corresponding to the anticipated statistical power of the data required to perform the measurements. In STXS stage-0, which was used in the analyses of the LHC Run-1 data, events have been assigned to basic categories according to their main H production mechanisms:

-

1.

gluon fusion;

-

2.

vector boson fusion (VBF);

-

3.

quark-initiated H production in association with a vector boson V (\({\textrm{VH}}\));

-

4.

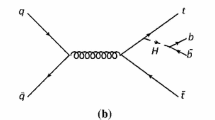

H production in association with a top anti-top quark pair (\({\textrm{t}}\bar{\textrm{t}} {\textrm{H}} \)).

Subsequent refinements to the STXS scheme resulted in the division of the basic categories into finer bins, called stage-1.1 [35], and in a redefinition of some of the stage-0 categories: gluon fusion and gluon-initiated \({\textrm{gg}}\rightarrow {\textrm{Z}} ({\textrm{qq}}){\textrm{H}} \) production with hadronic Z boson decays, which can be viewed as part of inclusive H production via gluon fusion at higher order in perturbation theory, are defined as a combined category, referred to as \({\textrm{ggH}}\). The VBF and quark-initiated \({\textrm{qq}}\rightarrow {\textrm{V}} ({\textrm{qq}}){\textrm{H}} \) production with hadronic V decays are defined as a combined category, referred to as \({\textrm{qqH}}\). The \({\textrm{VH}}\) category in turn refers to leptonic V decays with at least one charged lepton. A further update resulted in the STXS stage-1.2 scheme, which forms the basis for this paper. With respect to STXS stage-1.1, this update introduces a finer split of the \({\textrm{ggH}}\) bin with H transverse momentum (\(p_{\textrm{T}} ^{{\textrm{H}}}\)) larger than 200\(\,\text {GeV}\), which is the only difference of relevance for this paper.
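The assignment of production processes to the redefined stage-0 categories can be summarized as a small lookup. The Python sketch below is illustrative only: the process labels (`ggF`, `ggZH`, `VBF`, `qqVH`, `ttH`), the decay labels, and the function name are hypothetical, and assigning gluon-initiated ZH production with a leptonic Z boson decay to \({\textrm{VH}}\) is an assumption that applies the leptonic-V rule irrespective of the initial state.

```python
from typing import Optional

# Hypothetical production labels, used only for this illustration.
_GLUON_INITIATED = {"ggF", "ggZH"}
_QUARK_INITIATED = {"VBF", "qqVH"}


def stxs_stage0_category(production: str, v_decay: Optional[str] = None) -> str:
    """Map a production process to its redefined STXS stage-0 category.

    production: "ggF", "ggZH", "VBF", "qqVH", or "ttH" (hypothetical labels)
    v_decay:    "hadronic" or "charged_lepton", needed for the VH-type processes
    """
    if production == "ttH":
        return "ttH"
    if production in ("ggZH", "qqVH"):
        if v_decay == "charged_lepton":
            return "VH"  # leptonic V decays with at least one charged lepton
        if v_decay != "hadronic":
            raise ValueError("VH-type processes need v_decay='hadronic' or 'charged_lepton'")
    if production in _GLUON_INITIATED:
        return "ggH"  # gluon fusion plus gg -> Z(qq)H
    if production in _QUARK_INITIATED:
        return "qqH"  # VBF plus qq -> V(qq)H
    raise ValueError(f"unknown production label: {production!r}")
```

The hadronic VH-type processes thus fall back to the category of their initial state, which is exactly the grouping into \({\textrm{ggH}}\) and \({\textrm{qqH}}\) described above.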

The first STXS measurements combining the \({\textrm{H}} \rightarrow \upgamma \upgamma \), \({\textrm{ZZ}}\), \({\textrm{WW}}\), bb, \(\uptau \uptau \), and \(\upmu \upmu \) decay modes have been performed by the ATLAS [17] and CMS [16] Collaborations. First STXS measurements in the \({\textrm{H}} \rightarrow \uptau \uptau \) decay channel alone have been performed by the ATLAS Collaboration [36, 37]. In this paper, we report three STXS analyses in the \({\textrm{H}} \rightarrow \uptau \uptau \) decay channel performed by the CMS Collaboration. All analyses are based on the LHC data sets of the years 2016–2018, corresponding to an integrated luminosity of 138\(\,\text {fb}^{-1}\). The events are analyzed in the \({\textrm{e}} \upmu \), \({\textrm{e}} \uptau _\textrm{h} \), \(\upmu \uptau _\textrm{h} \), and \(\uptau _\textrm{h} \uptau _\textrm{h} \) final states based on the number of electrons, muons, and \(\uptau _\textrm{h}\) candidates in the event, where \(\uptau _\textrm{h}\) refers to a hadronic \(\uptau \) lepton decay. Results are reported in the form of signal strengths relative to the SM predictions and products of cross sections and branching fraction for the decay into \(\uptau \) leptons. They are provided in the redefined STXS stage-0 and -1.2 schemes. Some of the STXS stage-1.2 bins, to which the analyses are not yet sensitive, have been combined into supersets.

Two analyses target the simultaneous measurement of the \({\textrm{ggH}}\) and \({\textrm{qqH}}\) processes. One, referred to as the “cut-based” (CB) analysis, exploits an event categorization optimized on the ratio of signal over background events, together with one- (1D) and two-dimensional (2D) discriminants related to the properties of the \(\uptau \uptau \) and jet final state to distinguish between signal and backgrounds. The other, referred to as the neural network (NN) analysis, exploits NN event multiclassification, with the outputs of the NNs as the only discriminating observables. The NN-analysis is found to be more sensitive, with a 30% stronger constraint on the signal strength for the STXS stage-0 \({\textrm{qqH}}\) bin and 30% (40%) stronger constraints, on average, on the signal strengths for the STXS stage-1.2 \({\textrm{ggH}}\) (\({\textrm{qqH}}\)) bins, relative to the CB-analysis. In addition, a third analysis, referred to as the \({\textrm{VH}}\)-analysis, targets \({\textrm{VH}}\) production. The inclusive, STXS stage-0, and -1.2 signal strengths and products of the cross sections and branching fraction for the decay into \(\uptau \) leptons obtained from the combination of the NN- and \({\textrm{VH}}\)-analyses are considered the main results of the paper. A description of the CB-analysis is given because it facilitates comparisons of the selections documented in the paper with alternative simulations or theory models. It also serves as reference for a previous publication of differential cross section measurements in the \({\textrm{H}} \rightarrow \uptau \uptau \) decay channel [38], with which it shares the same object selections, strategy for signal extraction, and methods for the estimation of data-driven backgrounds, and as a detailed cross-check of the statistical methods exploited by the NN-analysis.

The remainder of this paper is organized as follows. In Sects. 2 and 3, the CMS detector and the reconstruction of the objects and event variables used in the analyses are introduced. The event selections commonly used in all three analyses are described in Sect. 4. The modeling of signals and backgrounds is described in Sect. 5. The STXS scheme used to classify events is introduced in Sect. 6. In Sects. 7, 8, and 9, the selection steps and event classifications specific to the individual analyses are described in more detail. Systematic uncertainties are discussed in Sect. 10. The results of the analyses are discussed in Sect. 11. The paper is summarized in Sect. 12.

2 The CMS detector

The central feature of the CMS apparatus is a superconducting solenoid of 6\(\,\text {m}\) internal diameter, providing a magnetic field of 3.8 \(\,\text {T}\). Within the solenoid volume are a silicon pixel and strip tracker, a lead tungstate crystal electromagnetic calorimeter (ECAL), and a brass and scintillator hadron calorimeter (HCAL), each composed of a barrel and two endcap sections. Forward calorimeters extend the pseudorapidity (\(\eta \)) coverage provided by the barrel and endcap detectors. Muons are detected in gas-ionization chambers embedded in the steel flux-return yoke outside the solenoid.

Events of interest are selected using a two-tiered trigger system. The first level (L1), composed of custom hardware processors, uses information from the calorimeters and muon detectors to select events at a rate of around 100\(\,\text {kHz}\) within a fixed latency of about 4\(\,\upmu \text {s}\) [39]. The second level, known as the high-level trigger (HLT), consists of a farm of processors running a version of the full event reconstruction software optimized for fast processing, and reduces the event rate to around 1\(\,\text {kHz}\) before data storage [40].

A more detailed description of the CMS detector, together with a definition of the coordinate system used and the relevant kinematic variables, can be found in Ref. [41].

3 Event reconstruction

The reconstruction of the proton-proton (\({\textrm{pp}}\)) collision products is based on the particle-flow (PF) algorithm [42], which combines the information from all CMS subdetectors to reconstruct a set of particle candidates (PF candidates), identified as charged and neutral hadrons, electrons, photons, and muons. In the 2016 (2017–2018) data sets the average number of interactions per bunch crossing was 23 (32). The fully recorded detector data of a bunch crossing defines an event for further processing. The primary vertex (PV) is taken to be the vertex corresponding to the hardest scattering in the event, evaluated using tracking information alone, as described in Ref. [43]. Secondary vertices, which are detached from the PV, might be associated with decays of long-lived particles emerging from the PV. Any other collision vertices in the event are associated with additional mostly soft inelastic \({\textrm{pp}}\) collisions called pileup (PU).

Electron candidates are reconstructed by combining clusters of energy deposits in the ECAL with hits in the tracker [44, 45]. To increase their purity, reconstructed electrons are required to pass a multivariate electron identification discriminant, which combines information on track quality, shower shape, and kinematic quantities. For the analyses presented here, a working point with an identification efficiency of 90% is used, with a misidentification rate of \({\approx }\,1\%\) from jets, in the kinematic region of interest. Muons in the event are reconstructed by performing a simultaneous track fit to hits in the tracker and in the muon chambers [46, 47]. The presence of hits in the muon chambers already leads to a strong suppression of particles misidentified as muons. Additional identification requirements on the track fit quality and the compatibility of individual track segments with the fitted track further reduce the misidentification rate. For the analyses presented here, muon identification requirements with an efficiency of \({\approx }\,99\%\) are chosen, with a misidentification rate below 0.2% for pions.

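The contributions from backgrounds to the electron (muon) selection are further reduced by requiring the corresponding lepton to be isolated from any hadronic activity in the detector. This property is quantified by an isolation variable

\[ I_{\text {rel}}^{{\textrm{e}} (\upmu )} = \frac{1}{p_{\textrm{T}} ^{{\textrm{e}} (\upmu )}} \left( \sum p_{\textrm{T}} ^{\text {charged}} + \max \left( 0,\, \sum E_{\textrm{T}} ^{\text {neutral}} + \sum E_{\textrm{T}} ^{\gamma } - p_{\textrm{T}} ^{\text {PU}} \right) \right) , \]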

where \(p_{\textrm{T}} ^{{\textrm{e}} (\upmu )}\) corresponds to the electron (muon) transverse momentum \(p_{\textrm{T}}\), and \(\sum p_{\textrm{T}} ^{\text {charged}}\), \(\sum E_{\textrm{T}} ^{\text {neutral}}\), and \(\sum E_{\textrm{T}} ^{\gamma }\) to the \(p_{\textrm{T}}\) (transverse energy \(E_{\textrm{T}}\)) sum of all charged particles, neutral hadrons, and photons, in a predefined cone of radius \(\varDelta R= \sqrt{\smash [b]{\left( \varDelta \eta \right) ^{2}+\left( \varDelta \phi \right) ^{2}}}\) around the lepton direction at the PV, where \(\varDelta \eta \) and \(\varDelta \phi \) (measured in radians) correspond to the angular distances of the particle to the lepton in the \(\eta \) and azimuthal \(\phi \) directions. The chosen cone size is \(\varDelta R=0.3\,(0.4)\) for electrons (muons). The lepton itself is excluded from the calculation. To mitigate the contamination from PU, only those charged particles whose tracks are associated with the PV are taken into account. Since an unambiguous association with the PV or PU is not possible for neutral hadrons and photons, an estimate of the contribution from PU (\(p_{\textrm{T}} ^{\text {PU}}\)) is subtracted from the sum of \(\sum E_{\textrm{T}} ^{\text {neutral}}\) and \(\sum E_{\textrm{T}} ^{\gamma }\). This estimate is obtained from tracks not associated with the PV in the case of \(I_{\text {rel}}^{\upmu }\) and from the mean energy flow per unit area in the case of \(I_{\text {rel}}^{{\textrm{e}}}\). If this difference is negative, it is set to zero. The isolation criteria given in Sect. 4 have an efficiency well above 95% for isolated electrons and muons from \(\uptau \) decays.
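A minimal Python sketch of the isolation sum follows: charged particles count only if associated with the PV, and the PU estimate is subtracted from the neutral-hadron plus photon sum, which is clamped at zero. It assumes that the lepton itself has already been removed from the particle list and that the PU estimate \(p_{\textrm{T}} ^{\text {PU}}\) is supplied by the caller, since its derivation differs for electrons and muons. The `Particle` class, its `kind` labels, and the function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Particle:
    kind: str             # "charged", "neutral", or "photon" (hypothetical labels)
    pt: float             # pT for charged particles, ET for neutrals and photons (GeV)
    eta: float
    phi: float
    from_pv: bool = True  # PV association, only used for charged particles


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance with delta-phi wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def relative_isolation(lep_pt, lep_eta, lep_phi, particles, cone, pu_estimate):
    """I_rel as defined in the text; `particles` must not contain the lepton itself."""
    if lep_pt <= 0:
        raise ValueError("lepton pT must be positive")
    charged = neutral = photons = 0.0
    for p in particles:
        if delta_r(lep_eta, lep_phi, p.eta, p.phi) >= cone:
            continue  # outside the isolation cone
        if p.kind == "charged":
            if p.from_pv:  # PU mitigation: keep only PV-associated tracks
                charged += p.pt
        elif p.kind == "neutral":
            neutral += p.pt
        elif p.kind == "photon":
            photons += p.pt
    # PU-corrected neutral component, set to zero if the difference is negative.
    neutral_corr = max(0.0, neutral + photons - pu_estimate)
    return (charged + neutral_corr) / lep_pt


# Example muon (cone 0.4): (2.0 + (1.5 + 1.0 - 0.5)) / 40.0 = 0.1
muon_iso = relative_isolation(
    40.0, 0.0, 0.0,
    [Particle("charged", 2.0, 0.1, 0.1),
     Particle("charged", 3.0, 0.1, 0.0, from_pv=False),  # PU track, ignored
     Particle("neutral", 1.5, 0.0, 0.2),
     Particle("photon", 1.0, 0.2, 0.0),
     Particle("charged", 10.0, 1.0, 0.0)],               # outside the cone
    cone=0.4, pu_estimate=0.5)
```

The resulting value would then be compared with the thresholds of Sect. 4, for example \(I_{\text {rel}}^{\upmu } <0.20\) in the \({\textrm{e}} \upmu \) final state.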

For further characterization of the event, all reconstructed PF candidates are clustered into jets using the anti-\(k_{\textrm{T}}\) jet clustering algorithm as implemented in the FastJet software package [48, 49] with a distance parameter of 0.4. Jets resulting from the hadronization of b quarks are used to separate signal from top anti-top quark pair (\({\textrm{t}}\bar{\textrm{t}}\)) events. These are identified using either the DeepCSV (CB- and \({\textrm{VH}}\)-analyses) or the DeepJet (NN-analysis) algorithm, as described in Refs. [50, 51]. The working points chosen for the DeepCSV algorithm correspond to b jet identification efficiencies of 70 and 80% for misidentification rates for jets originating from light quarks and gluons of 1 and 11%, respectively. For the DeepJet algorithm, a working point is chosen with an identification efficiency for b jets of 80% for a misidentification rate for jets originating from light quarks and gluons of 1% [52]. Jets with \(p_{\textrm{T}} >30\,\text {GeV} \) and \(|\eta |<4.7\), and b jets with \(p_{\textrm{T}} >20\,\text {GeV} \) and \(|\eta |<2.4\) (2.5 from 2017 onward), are used. In 2017, the ECAL endcaps were subject to an increased noise level affecting the reconstruction of jets. Jets with \(p_{\textrm{T}} <50\,\text {GeV} \) in the corresponding range of \(2.65<|\eta |<3.14\) have therefore been excluded from the analysis, resulting in an expected efficiency loss of \({\approx }4\%\) for \({\textrm{H}} \rightarrow \uptau \uptau \) events produced via VBF.
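The jet acceptance rules above, including the 2017-specific removal of soft jets in the noisy ECAL endcap region, can be written as two small predicates. This is a sketch under stated assumptions: the function names are hypothetical, the treatment of values exactly at the cut boundaries is a guess, and the b tagging discriminant requirement itself is not modeled.

```python
def select_jet(pt, abs_eta, year):
    """Jet acceptance as described in the text (pT in GeV)."""
    if pt <= 30.0 or abs_eta >= 4.7:
        return False
    # 2017 only: increased ECAL endcap noise, drop soft jets in 2.65 < |eta| < 3.14.
    if year == 2017 and pt < 50.0 and 2.65 < abs_eta < 3.14:
        return False
    return True


def b_jet_acceptance(pt, abs_eta, year):
    """Kinematic acceptance for b jet candidates (tagging requirement not included)."""
    eta_max = 2.4 if year < 2017 else 2.5
    return pt > 20.0 and abs_eta < eta_max
```

Keeping the year as an explicit argument makes the data-taking-period dependence of both rules visible at the call site.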

Jets are also used as seeds for the reconstruction of \(\uptau _\textrm{h}\) candidates. This is done by exploiting the substructure of the jets, using the “hadrons-plus-strips” algorithm [53, 54]. Decays into one or three charged hadrons with up to two neutral pions with \(p_{\textrm{T}} >2.5\,\text {GeV} \) are used. Neutral pions are reconstructed as strips with dynamic size from reconstructed electrons and photons contained in the seeding jet, where the strip size varies as a function of the \(p_{\textrm{T}}\) of the electron or photon candidates. The \(\uptau _\textrm{h}\) decay mode is then obtained by combining the charged hadrons with the strips. To distinguish \(\uptau _\textrm{h}\) candidates from jets originating from the hadronization of quarks or gluons, as well as from electrons and muons, the DeepTau (DT) algorithm [54] is used. This algorithm exploits the information of the reconstructed event record, comprising tracking, impact parameter, and ECAL and HCAL cluster information; the kinematic and object identification properties of the PF candidates in the vicinity of the \(\uptau _\textrm{h}\) candidate and the \(\uptau _\textrm{h}\) candidate itself; and several global characterizing quantities of the event. It results in a multiclassification output \(y^{\text {DT}} _{\alpha }\,(\alpha =\uptau ,\,{\textrm{e}},\,\upmu ,\,\text {jet} )\) equivalent to the Bayesian probability that the \(\uptau _\textrm{h}\) candidate originated from a \(\uptau \) lepton, an electron, a muon, or the hadronization of a quark or gluon. From this output, three discriminants are built according to

\[ D_{\alpha } = \frac{y^{\text {DT}} _{\uptau }}{y^{\text {DT}} _{\uptau } + y^{\text {DT}} _{\alpha }}, \qquad \alpha = {\textrm{e}},\,\upmu ,\,\text {jet} . \]

For the analyses presented here, predefined working points of \(D_{{\textrm{e}}}\), \(D_{\upmu }\), and \(D_{\text {jet}}\) are chosen [54]. The exact choice of working point depends on the analysis and \(\uptau \uptau \) final state, and is given in Table 1. For \(D_{{\textrm{e}}}\), the efficiencies range from 54% (Tight) to 71% (VVVLoose), for misidentification rates from 0.05 to 5.42%, where the letter V in VLoose and other working-point labels stands for “Very”. For \(D_{\upmu }\), the efficiencies range from 70.3% (Tight) to 71.1% (VLoose), for misidentification rates from 0.03 to 0.13%. For \(D_{\text {jet}}\), the efficiencies of the chosen working points range from 35% (VTight) to 49% (Medium), for misidentification rates from 0.14 to 0.43%. The misidentification rate of \(D_{\text {jet}}\) strongly depends on the \(p_{\textrm{T}}\) and quark flavor of the misidentified jet, so the quoted values of this rate should be regarded as approximate.
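To make the construction of the three discriminants concrete, the sketch below computes them from the four class scores, assuming the form \(D_{\alpha } = y^{\text {DT}} _{\uptau }/(y^{\text {DT}} _{\uptau } + y^{\text {DT}} _{\alpha })\) for \(\alpha = {\textrm{e}},\,\upmu ,\,\text {jet}\) and scores that sum to one. The function name and the example scores are hypothetical; the actual working-point thresholds are not given here and are therefore not included.

```python
import math


def deeptau_discriminants(y_tau, y_e, y_mu, y_jet):
    """Build D_e, D_mu, D_jet from the four DeepTau class scores.

    Assumes D_alpha = y_tau / (y_tau + y_alpha) and scores that sum to one.
    """
    if not math.isclose(y_tau + y_e + y_mu + y_jet, 1.0, abs_tol=1e-6):
        raise ValueError("class scores are expected to sum to one")
    result = {}
    for alpha, y_alpha in (("e", y_e), ("mu", y_mu), ("jet", y_jet)):
        denom = y_tau + y_alpha
        # Guard against 0/0 when both scores vanish.
        result[alpha] = y_tau / denom if denom > 0.0 else 0.0
    return result


# Hypothetical scores: D_e = 0.6/0.7, D_mu = 0.6/0.65, D_jet = 0.6/0.85
scores = deeptau_discriminants(0.6, 0.1, 0.05, 0.25)
```

Because each discriminant compares the \(\uptau \) hypothesis against only one alternative, a separate working point can be placed on each of \(D_{{\textrm{e}}}\), \(D_{\upmu }\), and \(D_{\text {jet}}\) per final state, as listed in Table 1.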

The missing transverse momentum vector \({\vec p}_{\textrm{T}}^{\text {miss}}\) is also used in event characterization. For the CB- and \({\textrm{VH}}\)-analyses, it is calculated as the negative vector \(p_{\textrm{T}}\) sum of all PF candidates [55]. For the NN-analysis, the pileup-per-particle identification algorithm [56] is applied to reduce the dependence of \({\vec p}_{\textrm{T}}^{\text {miss}}\) on PU. In this case, \({\vec p}_{\textrm{T}}^{\text {miss}}\) is computed from the PF candidates weighted by their probability to originate from the PV [57]. The \({\vec p}_{\textrm{T}}^{\text {miss}}\) is used to discriminate W boson production in association with jets (\({\textrm{W}} \)+jets) from signal by exploiting the transverse mass

\[ m_{\textrm{T}} ^{\ell } = \sqrt{2\,p_{\textrm{T}} ^{\ell }\,p_{\textrm{T}}^{\text {miss}} \left( 1-\cos \varDelta \phi \right) } , \]

where \(\ell \) stands for an electron or a muon, but might also refer to the vector sum of \({\vec p}_{\textrm{T}} ^{{\textrm{e}}}\) and \({\vec p}_{\textrm{T}} ^{\upmu }\), and \(\varDelta \phi \) stands for the azimuthal angle between \({\vec p}_{\textrm{T}} ^{\ell }\) and \({\vec p}_{\textrm{T}}^{\text {miss}}\). The \({\vec p}_{\textrm{T}}^{\text {miss}}\) is also used for a likelihood-based estimate of the invariant mass \(m_{\uptau \uptau }\) of the \(\uptau \uptau \) system before the decays of the \(\uptau \) leptons [58]. This estimate combines the measurement of \({\vec p}_{\textrm{T}}^{\text {miss}}\) and its covariance matrix with the measurements of the visible \(\uptau \uptau \) decay products, utilizing the matrix elements for unpolarized \(\uptau \) decays [59] for the decay into leptons and the two-body phase space [60] for the decay into hadrons. On average, the resolution of this estimate amounts to 10% in the \(\uptau _\textrm{h} \uptau _\textrm{h} \), 15% in the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \), and 20% in the \({\textrm{e}} \upmu \) final states, reflecting the increasing number of neutrinos that escape detection.
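As a worked illustration of the transverse mass \(m_{\textrm{T}} ^{\ell } = \sqrt{2\,p_{\textrm{T}} ^{\ell }\,p_{\textrm{T}}^{\text {miss}}(1-\cos \varDelta \phi )}\), the sketch below computes it from magnitudes and azimuthal angles, together with the transverse vector sum that can replace \({\vec p}_{\textrm{T}} ^{\ell }\) for the \({\textrm{e}} \upmu \) pair. The function names are hypothetical. A lepton back-to-back with \({\vec p}_{\textrm{T}}^{\text {miss}}\) gives a large \(m_{\textrm{T}}\), as expected for a leptonic W boson decay, whereas a collinear configuration gives zero.

```python
import math


def transverse_mass(pt_lep, phi_lep, met, phi_met):
    """m_T = sqrt(2 pT^l pT^miss (1 - cos dphi)); cos needs no phi wrapping."""
    return math.sqrt(2.0 * pt_lep * met * (1.0 - math.cos(phi_lep - phi_met)))


def vector_sum(pt1, phi1, pt2, phi2):
    """Return (pT, phi) of the transverse vector sum of two objects, e.g. e + mu."""
    px = pt1 * math.cos(phi1) + pt2 * math.cos(phi2)
    py = pt1 * math.sin(phi1) + pt2 * math.sin(phi2)
    return math.hypot(px, py), math.atan2(py, px)


# Back-to-back lepton and pT^miss of 40 GeV each: sqrt(2 * 40 * 40 * 2) = 80 GeV.
mt_back_to_back = transverse_mass(40.0, 0.0, 40.0, math.pi)
```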

4 Event selection

Common to all analyses presented in this paper is the selection of a \(\uptau \) pair. Depending on the final state, the online selection in the HLT step is based on the presence of an \({\textrm{e}} \upmu \) pair, a single electron or muon, an \({\textrm{e}} \uptau _\textrm{h} \) or \(\upmu \uptau _\textrm{h} \) pair, or a \(\uptau _\textrm{h} \uptau _\textrm{h} \) pair in the event [61,62,63]. In the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states, the presence of a lepton pair in the HLT step allows lower \(p_{\textrm{T}}\) thresholds on the light lepton candidate. The efficiency of the online selection is generally above 90%, without strong dependence on the kinematic properties of the leptons selected for the offline analysis, as verified with independent monitoring triggers. In the offline selection, further requirements on \(p_{\textrm{T}}\), \(\eta \), and \(I_{\text {rel}}^{{\textrm{e}} (\upmu )}\) are applied in addition to the object identification requirements described in Sect. 3 and summarized in Table 2.

In the \({\textrm{e}} \upmu \) final state, an electron and a muon with \(p_{\textrm{T}} >15\,\text {GeV} \) and \(|\eta | <2.4\) are required. Depending on the trigger path that has led to the online selection of an event, a stricter requirement of \(p_{\textrm{T}} >24\,\text {GeV} \) is imposed on one of the two leptons to ensure a sufficiently high efficiency of the HLT selection. Both leptons are required to be isolated from any hadronic activity in the detector according to \(I_{\text {rel}}^{{\textrm{e}} (\upmu )} <0.15\,(0.20)\).

In the \({\textrm{e}} \uptau _\textrm{h} \) (\(\upmu \uptau _\textrm{h} \)) final state, an electron (muon) with \(p_{\textrm{T}} >25\,(20)\,\text {GeV} \) is required if an event was selected by a trigger based on the presence of the \({\textrm{e}} \uptau _\textrm{h} \) (\(\upmu \uptau _\textrm{h} \)) pair in the event. From 2017 onward, this muon threshold is raised to 21\(\,\text {GeV}\). If the event was selected only by a single-electron trigger, the \(p_{\textrm{T}}\) requirement on the electron is increased to 26, 28, or 33\(\,\text {GeV}\) for the years 2016, 2017, or 2018, respectively. For muons, the \(p_{\textrm{T}}\) requirement is increased to \(23\,(25)\,\text {GeV} \) for 2016 (2017–2018), if selected only by a single-muon trigger. The electron (muon) is required to be contained in the central detector with \(|\eta |<2.1\), and to be isolated according to \(I_{\text {rel}}^{{\textrm{e}} (\upmu )} <0.15\). The \(\uptau _\textrm{h}\) candidate is required to have \(|\eta |<2.3\) and \(p_{\textrm{T}} >35\, (32)\,\text {GeV} \) if selected by an \({\textrm{e}} \uptau _\textrm{h} \,(\upmu \uptau _\textrm{h} )\) pair trigger, or \(p_{\textrm{T}} >30\,\text {GeV} \) if selected by a single-electron (single-muon) trigger. In the \(\uptau _\textrm{h} \uptau _\textrm{h} \) final state, both \(\uptau _\textrm{h}\) candidates are required to have \(|\eta |<2.1\) and \(p_{\textrm{T}} >40\,\text {GeV} \). The working points of the DT discriminants described in Sect. 3 are chosen depending on the final state and are given in Table 1.
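The trigger- and year-dependent kinematic thresholds for the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states can be tabulated as in the following sketch. It reads the text as "accept the event if any fired trigger path has its offline requirements met"; this combination logic, the path labels `cross` (lepton-\(\uptau _\textrm{h}\) pair trigger) and `single`, the strict inequalities, and all names are assumptions made for illustration. The DT working points of Table 1 are not included.

```python
# Offline pT thresholds in GeV transcribed from the text; labels are hypothetical.
SINGLE_E_PT = {2016: 26.0, 2017: 28.0, 2018: 33.0}
SINGLE_MU_PT = {2016: 23.0, 2017: 25.0, 2018: 25.0}


def path_thresholds(channel, year, path):
    """Return (light-lepton pT cut, tau_h pT cut) for one trigger path."""
    if path not in ("cross", "single"):
        raise ValueError(f"unknown trigger path: {path!r}")
    if channel == "etau":
        return (25.0, 35.0) if path == "cross" else (SINGLE_E_PT[year], 30.0)
    if channel == "mutau":
        if path == "cross":
            return (20.0 if year == 2016 else 21.0), 32.0
        return SINGLE_MU_PT[year], 30.0
    raise ValueError(f"unknown channel: {channel!r}")


def passes_lepton_tau(channel, year, fired_paths, lep_pt, lep_abs_eta, lep_irel,
                      tau_pt, tau_abs_eta):
    """Accept the event if any fired path has its offline requirements met."""
    # Path-independent requirements from the text.
    if lep_abs_eta >= 2.1 or lep_irel >= 0.15 or tau_abs_eta >= 2.3:
        return False
    for path in fired_paths:
        lep_cut, tau_cut = path_thresholds(channel, year, path)
        if lep_pt > lep_cut and tau_pt > tau_cut:
            return True
    return False
```

Under this reading, a 2016 \({\textrm{e}} \uptau _\textrm{h} \) event with a 27\(\,\text {GeV}\) electron and a 33\(\,\text {GeV}\) \(\uptau _\textrm{h}\) candidate fails the pair-trigger thresholds but is still accepted through the single-electron path if that trigger also fired.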

The selected \(\uptau \) decay candidates are required to be of opposite charges and to be separated by more than \(\varDelta R= 0.3\) in the \(\eta \)–\(\phi \) plane in the \({\textrm{e}} \upmu \) final state and 0.5 otherwise. This applies also to all selected \(\uptau \)-decay candidates in the \({\textrm{VH}}\)-analysis, where the final states comprise a combination of one or more light leptons (e or \(\upmu \)) and one or two \(\uptau _\textrm{h}\) candidates. The closest distance of the tracks to the PV is required to be \(d_{z}<0.2\,\text {cm} \) along the beam axis. For electrons and muons, an additional requirement of \(d_{xy}<0.045\,\text {cm} \) in the transverse plane is applied. In the CB- and NN-analyses, in the rare cases in which more than the expected number of \(\uptau _\textrm{h}\) candidates fulfilling all selection requirements is found in an event, the candidates with the highest \(D_{\text {jet}}\) scores are chosen until the expected number of \(\uptau _\textrm{h}\) candidates is reached. In subsequent steps, the analyses differ slightly in their selection requirements, as a consequence of the different analysis strategies employed in the CB- and NN-analyses and the different measurement target of the \({\textrm{VH}}\)-analysis. For the CB- and NN-analyses, events with additional leptons fulfilling looser selection criteria are excluded to avoid the assignment of single events to more than one \(\uptau \uptau \) final state. For the NN-analysis, which is more inclusive than the CB-analysis before event classification, a requirement of \(m_{\textrm{T}} ^{\ell }<70\,\text {GeV} \) is imposed in the \({\textrm{e}} \uptau _\textrm{h} \) (\(\upmu \uptau _\textrm{h} \)) final state, to keep an orthogonal control region for the estimate of the background from events with quark- or gluon-induced jets that are misidentified as \(\uptau _\textrm{h}\) candidates (\(\text {jet}\rightarrow \uptau _\textrm{h} \)), as discussed in Sect. 5.2.
In the \({\textrm{e}} \upmu \) final state, events with at least one b jet are excluded from the selection in order to suppress the background from \({\textrm{t}}\bar{\textrm{t}}\) production. Finally, to prevent kinematic event overlap with the analysis of \({\textrm{H}} \rightarrow {\textrm{WW}}\) events, \(m_{\textrm{T}} ^{{\textrm{e}} \upmu }\) calculated from \({\vec p}_{\textrm{T}} ^{{\textrm{e}}} +{\vec p}_{\textrm{T}} ^{\upmu } \) and \({\vec p}_{\textrm{T}}^{\text {miss}}\) is required to be less than 60\(\,\text {GeV}\) in the \({\textrm{e}} \upmu \) final state.
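The \({\textrm{e}} \upmu \) requirements collected in this section can be combined into a single selection function, as in the following sketch. The `Lepton` fields and all names are hypothetical; \(m_{\textrm{T}} ^{{\textrm{e}} \upmu }\) and the number of b jets are taken as precomputed inputs; the trigger-path dependence of the 24\(\,\text {GeV}\) requirement is simplified to "at least one lepton above 24\(\,\text {GeV}\)"; and the veto on additional leptons is not modeled.

```python
import math
from dataclasses import dataclass


@dataclass
class Lepton:
    pt: float      # GeV
    eta: float
    phi: float
    charge: int
    irel: float    # relative isolation I_rel
    dz: float      # cm, distance to the PV along the beam axis
    dxy: float     # cm, distance to the PV in the transverse plane


def delta_r(a, b):
    dphi = (a.phi - b.phi + math.pi) % (2 * math.pi) - math.pi
    return math.hypot(a.eta - b.eta, dphi)


def select_emu(ele, mu, n_bjets, mt_emu):
    """e-mu selection summarized from Sect. 4; mt_emu uses pT^e + pT^mu and pT^miss."""
    for lep in (ele, mu):
        if lep.pt <= 15.0 or abs(lep.eta) >= 2.4:
            return False
        if abs(lep.dz) >= 0.2 or abs(lep.dxy) >= 0.045:
            return False
    # Simplification: in the analyses this depends on the trigger path that fired.
    if max(ele.pt, mu.pt) <= 24.0:
        return False
    if ele.irel >= 0.15 or mu.irel >= 0.20:
        return False
    if ele.charge * mu.charge >= 0:  # opposite charges required
        return False
    if delta_r(ele, mu) <= 0.3:
        return False
    if n_bjets > 0:  # suppress ttbar production
        return False
    return mt_emu < 60.0  # avoid overlap with the H -> WW analysis
```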

Further selection details of the CB- and \({\textrm{VH}}\)-analyses are discussed in Sects. 8, 9.1, and 9.2.

5 Background and signal modeling

The main backgrounds in the CB- and NN-analyses originate from Z boson production in association with jets in the \(\uptau \uptau \) decay channel (\({\textrm{Z}} \rightarrow \uptau \uptau \)), \({\textrm{W}} \)+jets, \({\textrm{t}}\bar{\textrm{t}}\) production, and SM events where light quark- or gluon-induced jets are produced through the strong interaction, referred to as quantum chromodynamics (QCD) multijet production. Minor backgrounds originate from the production of two V bosons (diboson), single t quark production, and Z boson production in the \({\textrm{ee}}\) and \(\upmu \upmu \) final states (also denoted as \({\textrm{Z}} \rightarrow \ell \ell \)). We distinguish three ways in which these processes may contribute to the selected event samples:

1. they contain two genuine \(\uptau \) leptons in their final states;
2. at least one quark- or gluon-induced jet is misidentified as \(\uptau _\textrm{h}\) (\(\text {jet}\rightarrow \uptau _\textrm{h} \)), e, or \(\upmu \) (\(\text {jet}\rightarrow \ell \));
3. an isolated high-\(p_{\textrm{T}}\) electron or muon is misidentified as originating from a \(\uptau \) lepton decay or mistakenly identified as a \(\uptau _\textrm{h}\) candidate.

Event groups 1 and 2 are estimated from data, as will be discussed in the following sections. Event group 3 and the signal are estimated from simulation. Group 1, which still relies on the simulation of the \(\uptau \) decays, group 3, and the signal are subject to simulation-to-data corrections, which have been determined from dedicated control regions, as will be discussed in Sect. 10.1.
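The three event groups can be expressed as a simple decision rule on the generator-level origin of the selected \(\uptau \) decay candidates. In the sketch below, the `Origin` labels are hypothetical, and giving precedence to misidentified jets (any \(\text {jet}\rightarrow \uptau _\textrm{h} \) or \(\text {jet}\rightarrow \ell \) candidate places the event in group 2) is an assumption based on the wording of the list above. The lookup records only whether each group is estimated from data or from simulation in the CB- and NN-analyses.

```python
from enum import Enum


class Origin(Enum):
    """Hypothetical generator-level origin of a selected tau decay candidate."""
    GENUINE_TAU = "tau"       # decay product of a genuine tau lepton
    JET = "jet"               # quark- or gluon-induced jet
    PROMPT_LEPTON = "lepton"  # isolated e or mu not originating from a tau decay


# How each group is estimated in the CB- and NN-analyses (the signal uses simulation).
ESTIMATED_FROM = {1: "data", 2: "data", 3: "simulation"}


def event_group(origins):
    """Return group 1, 2, or 3 for the origins of the selected candidates."""
    if Origin.JET in origins:
        return 2  # at least one jet misidentified as tau_h, e, or mu
    if all(o is Origin.GENUINE_TAU for o in origins):
        return 1  # two genuine tau leptons
    return 3      # an e or mu misidentified as a tau decay product or as tau_h
```

Checking for misidentified jets first keeps the three groups mutually exclusive, so that no event is counted by both a data-driven and a simulation-based estimate.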

For the \({\textrm{VH}}\)-analysis, the backgrounds generally involve the production of an additional V boson. Details of the background estimation are given in Sect. 9.4.

5.1 Backgrounds with genuine \(\uptau \) lepton pairs

For events in the CB- and NN-analyses in which, e.g., the decay of a Z boson results in two genuine \(\uptau \) leptons, the \(\uptau \)-embedding method is used, as described in Ref. [64]. For this purpose \(\upmu \upmu \) events are selected in data. All energy deposits of the muons are removed from the event record and replaced by simulated \(\uptau \) lepton decays with the same kinematic properties as the selected muons. In this way, the method relies only on the simulation of the \(\uptau \) lepton decay and its energy deposits in the detector, while all other parts of the event, such as the reconstructed jets, their identification as originating from the PV, the identification of b jets, or the non-\(\uptau \) related parts of \(p_{\textrm{T}} ^\text {miss}\), are obtained from data. This results in an improved modeling of the data compared to the simulation of the full process and removes the need for several of the simulation-to-data corrections detailed in Sect. 10.1 for these events. A detailed discussion of the selection of the original \(\upmu \upmu \) events, the exact procedure itself, its range of validity, and related uncertainties can be found in Ref. [64].

Although the selected muons predominantly originate from Z boson decays, there are also contributions from other processes that result in two genuine \(\uptau \) leptons. For example, \({\textrm{t}}\bar{\textrm{t}}\) and diboson events where both W bosons decay into a muon and neutrino are included in the original selection of \(\upmu \upmu \) events, and replacing the selected muons by simulated \(\uptau \) lepton decays naturally leads to an estimate of these processes, as well. For the selection described in Sect. 4, 97% of the \(\upmu \upmu \) events selected for the \(\uptau \)-embedding method originate from Z boson decays, \({\approx }1\%\) from \({\textrm{t}}\bar{\textrm{t}}\) production, and the rest from other processes.

5.2 Backgrounds with jets misidentified as hadronic \(\uptau \) lepton decays

The main processes contributing to \(\text {jet}\rightarrow \uptau _\textrm{h} \) events in the \({\textrm{e}} \uptau _\textrm{h} \), \(\upmu \uptau _\textrm{h} \), and \(\uptau _\textrm{h} \uptau _\textrm{h} \) final states are QCD multijet, \({\textrm{W}} \)+jets, and \({\textrm{t}}\bar{\textrm{t}}\) production. These events are estimated using the “fake factor” or \(F_{\textrm{F}}\)-method described in Refs. [29, 65] and adapted to each corresponding analysis described in this paper. For this purpose, the signal region (SR) as defined by the event selection given in Sect. 4 is complemented by the disjoint application region (AR) and determination regions (DR\(^{i}\), where i stands for QCD, \({\textrm{W}} \)+jets, or \({\textrm{t}}\bar{\textrm{t}}\)). All other processes are estimated either from simulation or from the \(\uptau \)-embedding method and subtracted from the data in the AR, DR\(^{\text {QCD}}\), and DR\(^{{\textrm{W}} {+}\text {jets}}\). The SR and the AR differ only in the working point chosen for the identification of the \(\uptau _\textrm{h}\) candidate, where for the AR a looser working point is chosen and the events from the SR are excluded.

Depending on the final state, one or three independent sets of extrapolation factors \(F_{\textrm{F}} ^{i}\) are then derived. For the \(\uptau _\textrm{h} \uptau _\textrm{h} \) final state, where QCD multijet production contributes \({\approx }94\%\) of the events in the AR, only \(F_{\textrm{F}} ^{\text {QCD}}\) is determined, and \(F_{\textrm{F}} ^{{\textrm{W}} {+}\text {jets}}\) and \(F_{\textrm{F}} ^{{\textrm{t}}\bar{\textrm{t}}}\) are assumed to be similar to it. In the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states, where the contributions are more evenly shared, separate \(F_{\textrm{F}} ^{i}\) are used for QCD multijet, \({\textrm{W}} \)+jets, and \({\textrm{t}}\bar{\textrm{t}}\) production. In these final states, the largest fraction of events in the AR (55–70%) is expected to originate from \({\textrm{W}} \)+jets production, and the smallest fraction (2–5%) from \({\textrm{t}}\bar{\textrm{t}}\) production.

Each \(F_{\textrm{F}} ^{i}\) is determined in a dedicated DR\(^{i}\), defined to enrich the corresponding process. The \(F_{\textrm{F}} ^{i}\) are then used to estimate the yield \(N_{\text {SR}}\) and the kinematic properties of the combination of these backgrounds in the SR from the number of events \(N_{\text {AR}}\) in the AR according to

\[ N_{\text {SR}} = \Big (\sum _{i} w_{i}\, F_{\textrm{F}} ^{i}\Big )\, N_{\text {AR}}. \]

For this purpose, the \(F_{\textrm{F}} ^{i}\) are combined into a weighted sum, using the simulation-based estimate of the fractions \(w_{i}\) of each process in the AR. A template fit to the data in the AR yields a similar result for the \(w_{i}\).
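The weighted combination of the per-process fake factors can be illustrated with a few lines of Python. This is a minimal sketch. It assumes that the \(F_{\textrm{F}} ^{i}\) and the fractions \(w_{i}\) have already been evaluated for each AR event. The function names and all numerical values are hypothetical and are not taken from the analysis.

```python
import math


def combined_fake_factor(ff_by_process, fractions):
    """Return sum_i w_i * F_F^i for one application-region (AR) event.

    ff_by_process: dict process -> F_F^i evaluated for this event
    fractions:     dict process -> w_i, estimated fraction of process i in the AR
    """
    if not math.isclose(sum(fractions.values()), 1.0, abs_tol=1e-9):
        raise ValueError("the fractions w_i must sum to 1")
    return sum(w * ff_by_process[proc] for proc, w in fractions.items())


def estimate_sr_yield(ar_events):
    """Sum the per-event combined fake factor over all AR events to obtain N_SR."""
    return sum(combined_fake_factor(ff, w) for ff, w in ar_events)


# Hypothetical values for a single AR event in the mu-tau_h final state
ff = {"QCD": 0.20, "W+jets": 0.15, "ttbar": 0.10}
w = {"QCD": 0.30, "W+jets": 0.65, "ttbar": 0.05}
print(combined_fake_factor(ff, w))  # approximately 0.1625
```

Each AR event carries its own weight. The same weights can therefore fill histograms of any observable, which is how both the yield and the kinematic properties in the SR are obtained.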

For the estimation of \(F_{\textrm{F}} ^{\text {QCD}}\), the charges of the two selected \(\uptau \) decay products are required to be of the same sign. For the estimation of \(F_{\textrm{F}} ^{{\textrm{W}} {+}\text {jets}}\), high \(m_{\textrm{T}} ^{ \ell }\) and the absence of b jets are required. For \({\textrm{t}}\bar{\textrm{t}}\) production, a sufficiently pure DR in data with kinematic properties still similar to those of the SR cannot be defined. Instead, the \(F_{\textrm{F}} ^{{\textrm{t}}\bar{\textrm{t}}}\) are obtained from simulation and corrected to data using a selection with more than two jets, at least one b jet, and more than two leptons in the event.

The \(F_{\textrm{F}} ^{i}\) depend mainly on the \(p_{\textrm{T}}\) of the (leading) \(\uptau _\textrm{h}\) candidate and are applied on an event-by-event basis. For \(F_{\textrm{F}} ^{\text {QCD}}\), an additional dependency on the number of selected jets \(N_{\text {jet}}\) (0, 1, or \({\ge }2\)) is introduced. For \(F_{\textrm{F}} ^{{\textrm{W}} {+}\text {jets}}\), additional dependencies are introduced on \(N_{\text {jet}}\) and on the distance \(\varDelta R(\uptau _\textrm{h},{\textrm{e}} \,(\upmu ))\) between the \(\uptau _\textrm{h}\) and the \({\textrm{e}} \,(\upmu )\) in \(\eta \)–\(\phi \). Subleading dependencies on \({\vec p}_{\textrm{T}} ^{ {\textrm{e}} (\upmu )}\), \(I_{\text {rel}}^{{\textrm{e}} (\upmu )}\), the \(p_{\textrm{T}}\) of the second leading \(\uptau _\textrm{h}\) candidate, or the mass of the visible \(\uptau \uptau \) decay products (\(m_\text {vis}\)) enter via bias corrections obtained from additional control regions in data. These corrections are usually close to 1 but can range from 0.8 to 1.2, depending on the process and observable.

5.3 Backgrounds with jets misidentified as an electron or muon

The number of \(\text {jet}\rightarrow \ell \) events in the \({\textrm{e}} \upmu \) final state is estimated in a way similar to the \(F_{\textrm{F}}\)-method. In this case, an AR is distinguished from the SR by requiring the charges of the electron and muon to be of the same sign. A DR is defined by requiring \(0.2<I_{\text {rel}}^{\upmu } <0.5\). From this DR, a transfer factor \(F_{\textrm{T}}\) is obtained that extrapolates the number \(N_{\text {AR}}\) of events in the AR to the number \(N_{\text {SR}}\) of events in the SR according to

\[ N_{\text {SR}} = F_{\textrm{T}} \, N_{\text {AR}}. \]

\(F_{\textrm{T}}\) depends mainly on the distance \(\varDelta R({\textrm{e}},\upmu )\) between the e and \(\upmu \) trajectories at the PV in \(\eta \)–\(\phi \), and on \(N_{\text {jet}}\) (0, 1, or \({\ge }2\)). Subleading dependencies on \({\vec p}_{\textrm{T}} ^{{\textrm{e}}}\) and \({\vec p}_{\textrm{T}} ^{\upmu }\) are introduced via two corrections: a closure correction in the DR, and a bias correction that accounts for \(F_{\textrm{T}}\) having been determined from less-isolated muons. The bias correction is obtained from another control region with \(0.15\,(0.20)<I_{\text {rel}}^{{\textrm{e}}} <0.50\) for the (CB-) NN-analysis.
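The \(\varDelta R\) computation and an \(F_{\textrm{T}}\) lookup binned in \(\varDelta R({\textrm{e}},\upmu )\) and \(N_{\text {jet}}\) category can be sketched as follows. The bin edges and \(F_{\textrm{T}}\) values are hypothetical placeholders. The closure and bias corrections described above are omitted.

```python
import bisect
import math


def delta_r(eta1, phi1, eta2, phi2):
    """Distance in the eta-phi plane, with delta-phi wrapped into [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def transfer_factor(table, dr_edges, dr, n_jet):
    """Look up F_T binned in Delta R(e, mu) and N_jet category (0, 1, >=2).

    table[c][b] holds F_T for N_jet category c and Delta R bin b;
    values outside the edges are clamped to the first or last bin.
    """
    category = min(n_jet, 2)
    b = bisect.bisect_right(dr_edges, dr) - 1
    b = min(max(b, 0), len(dr_edges) - 2)
    return table[category][b]


# Hypothetical binning and values, for illustration only
DR_EDGES = [0.0, 2.0, 3.0, 6.0]
F_T_TABLE = [[2.1, 2.3, 2.6], [1.9, 2.0, 2.2], [1.7, 1.8, 1.9]]

# Each same-sign AR event enters the SR estimate with weight F_T
weight = transfer_factor(F_T_TABLE, DR_EDGES, delta_r(0.5, 3.0, -0.5, -3.0), n_jet=1)
```

The \(\phi\) difference must be wrapped. Two objects at \(\phi = 3\) and \(\phi = -3\) are close in azimuth even though the naive difference is 6.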

5.4 Simulated backgrounds and signal

In the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states, more than 80% of all backgrounds, after the selection described in Sect. 4, are obtained from one of the methods described in the previous sections. In the \(\uptau _\textrm{h} \uptau _\textrm{h} \) final state, this fraction is \({\gtrsim }\,95\%\). All remaining backgrounds—for example Z boson, \({\textrm{t}}\bar{\textrm{t}}\), or diboson production, where at least one decay of a V boson into an electron or muon is not covered by either of the methods—are obtained from simulation.

The production of Z bosons in the \({\textrm{ee}}\) and \(\upmu \upmu \) final states and W boson production are simulated at leading order (LO) precision in the strong coupling constant \(\alpha _\textrm{S}\). The MadGraph 5_amc@nlo 2.2.2 (2.4.2) event generator [66, 67] is used for the simulation of the data taken in 2016 (2017–2018), with the so-called MLM scheme for jet matching and merging of the matrix element calculation with the parton shower [68]. To increase the number of simulated events in regions of high signal purity, supplementary samples are generated with up to four outgoing partons in the hard interaction. For diboson production, MadGraph 5_amc@nlo is used at next-to-LO (NLO) precision in \(\alpha _\textrm{S}\). For the simulation of \({\textrm{t}}\bar{\textrm{t}}\) and single t quark production, the powheg 2 [69,70,71,72,73,74] event generator is used at NLO precision in \(\alpha _\textrm{S}\).

The signal samples are also obtained at NLO precision in \(\alpha _\textrm{S}\) using powheg for the five main production modes, gluon fusion [71, 74], VBF [75], \({\textrm{VH}}\) (split by \({\textrm{WH}}\) and \({\textrm{ZH}}\)) [76], and \({\textrm{t}}\bar{\textrm{t}} {\textrm{H}} \) [77]. For the production via gluon fusion the distributions of \(p_{\textrm{T}} ^{{\textrm{H}}}\) and the jet multiplicity in the simulation are tuned to match the next-to-NLO (NNLO) accuracy obtained from full phase space calculations with the nnlops generator [78, 79].

All signal samples have been produced assuming \(m_{{\textrm{H}}} =125\,\text {GeV} \). They are then rescaled to the cross sections and the branching fraction for the decay into \(\uptau \) leptons expected for \(m_{{\textrm{H}}} =125.38\,\text {GeV} \), following the recommendations of the LHC Higgs Working Group [80]. This rescaling reduces the cross sections for H production via gluon fusion and VBF by less than 1%, and the branching fraction for the decay into \(\uptau \) leptons by 1%.

For the generation of all processes, the NNPDF3.0 [81] (NNPDF3.1 [82]) set of parton distribution functions (PDFs) is used for the data taken in 2016 (2017–2018). All matrix element generators are interfaced with the pythia 8.230 event generator [83]. Pythia models parton showering, hadronization, and fragmentation, as well as the decay of the \(\uptau \) lepton. Multiparton interactions and the underlying event are parameterized with the CUETP8M1 [84] tune for the data taken in 2016 and with the CP5 [85] tune for the data taken from 2017 onward.

When comparing to data, Z boson, \({\textrm{t}}\bar{\textrm{t}}\), and single t quark events in the \({\textrm{tW}}\)-channel are normalized to their cross sections at NNLO precision in \(\alpha _\textrm{S}\) [86,87,88]. Single t quark production in the t-channel and diboson events are normalized to their cross sections at NLO precision in \(\alpha _\textrm{S}\) or higher [88,89,90]. The signal samples are normalized to their inclusive cross sections and branching fractions as recommended by the LHC Higgs Working Group [34], assuming an H mass of 125.38\(\,\text {GeV}\) [13].

Binning for \({\textrm{ggH}}\) production in the reduced STXS stage-1.2 scheme as exploited by the analyses presented in this paper. The dark gray boxes indicate the STXS bins measured by the analyses. Depending on the analysis, a split is applied to the 0-jet bin, as indicated by the dashed line. The boxes colored in light gray indicate a finer subdivision of the NN signal classes for \(N_{\text {jet}} \ge 2\) used for the classification task, as explained in Sect. 7. Thresholds on \(p_{\textrm{T}} ^{{\textrm{H}}}\) are given in \(\text {GeV}\).

For all simulated events, additional inclusive inelastic \({\textrm{pp}}\) collisions generated with pythia are added according to the expected PU profile in data to take the effect of the observed PU into account. All events generated are passed through a Geant4-based [91] simulation of the CMS detector and reconstructed using the same version of the CMS event reconstruction software as used for the data.

6 Simplified template cross section schemes

The analyses presented in this paper aim to measure inclusive and differential production cross sections for the signal at increasing levels of granularity, following the STXS scheme specified by the LHC Higgs Working Group [34]. Two stages are defined in this scheme. Stage-0 corresponds to the separation by production mode. Of these modes, \({\textrm{ggH}}\), \({\textrm{qqH}}\), and \({\textrm{VH}}\) are relevant for this paper. \({\textrm{ggH}}\) subsumes gluon fusion and \({\textrm{gg}}\rightarrow {\textrm{Z}} ({\textrm{qq}}){\textrm{H}} \) production, and \({\textrm{qqH}}\) comprises VBF and \({\textrm{qq}}\rightarrow {\textrm{V}} ({\textrm{qq}}){\textrm{H}} \) production. For each of these two modes, the simulated signals of the corresponding processes are combined for signal extraction, assuming relations between the processes as expected from the SM.

At stage-1.1 [35] and -1.2, these production modes are further split into STXS bins according to three quantities: the jet multiplicity at stable-particle level, the invariant mass of the two leading jets \(m_{\textrm{jj}}\) (if present in the event), and \(p_{\textrm{T}} ^{{\textrm{H}}}\). The \({\textrm{VH}}\) process is further split by the type of V boson and its \(p_{\textrm{T}}\) (\(p_{\textrm{T}} ^{{\textrm{V}}}\)). For all cross section measurements, the absolute value of the H rapidity is required to be less than 2.5. The only difference between stage-1.2 and stage-1.1 that is relevant for this paper concerns the \({\textrm{ggH}}\) bin with \(p_{\textrm{T}} ^{{\textrm{H}}} >200\,\text {GeV} \). At stage-1.2 this bin is split into a bin with \(200<p_{\textrm{T}} ^{{\textrm{H}}} \le 300\,\text {GeV} \) and a bin with \(300\,\text {GeV} <p_{\textrm{T}} ^{{\textrm{H}}} \).

Since the analyses under consideration do not have enough events to exploit all STXS bins defined in Ref. [35], the bins have been combined as shown in Figs. 1, 2 and 3 resulting in 7–8 measurements for \({\textrm{ggH}}\), 4 measurements for \({\textrm{qqH}}\), and 4 measurements for \({\textrm{VH}}\) production. In the figures, the gray boxes correspond to the measured STXS bins. For the CB-analysis, the \({\textrm{ggH}}\) 0-jet STXS bin is measured inclusively in \(p_{\textrm{T}} ^{{\textrm{H}}}\). For the NN-analysis this bin is split in \(p_{\textrm{T}} ^{{\textrm{H}}}\). Throughout the text the \({\textrm{qqH}}\) bin with \({<}2\) jets or \(0<m_{\textrm{jj}} <350\,\text {GeV} \) is also labeled as “non-VBF-topo”. For all STXS bins, histogram template distributions are obtained from the simulated signal processes discussed in Sect. 5.4. These are fitted to the data together with corresponding template distributions from each considered background process, for signal extraction in each given STXS bin, as discussed in Sect. 11.
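The assignment of simulated \({\textrm{qqH}}\) events to the four merged STXS bins can be sketched as a simple decision function. This is an illustration under stated assumptions. The thresholds at 350 and 700 GeV in \(m_{\textrm{jj}}\), at 200 GeV in \(p_{\textrm{T}} ^{{\textrm{H}}}\), and the \(|y_{{\textrm{H}}}|<2.5\) requirement are taken from the text. The definition of the fourth bin (\(m_{\textrm{jj}} \ge 350\,\text {GeV}\) and \(p_{\textrm{T}} ^{{\textrm{H}}} \ge 200\,\text {GeV}\)), the boundary conventions, and the bin labels are assumptions.

```python
def qqh_stxs_bin(n_jet, mjj, pt_h, y_h):
    """Assign a qqH event, using stable-particle-level quantities, to a merged STXS bin.

    Thresholds in GeV. The definition of the high-pT^H bin and the treatment
    of values exactly on a boundary are assumptions made for this sketch.
    """
    if abs(y_h) >= 2.5:
        return None  # outside the |y_H| < 2.5 acceptance of the measurements
    if n_jet < 2 or mjj < 350.0:
        return "non-VBF-topo"
    if pt_h >= 200.0:
        return "mjj>350, ptH>200"  # assumed definition
    if mjj < 700.0:
        return "350<mjj<700, ptH<200"
    return "mjj>700, ptH<200"
```

Each simulated signal event is routed to exactly one bin. Each bin then provides its own template for the fit.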

7 Neural network based analysis

For the NN-analysis, all selected events are provided as input to a set of multiclassification NNs. The outputs of these NNs are used to distribute the events into background classes depending on the \(\uptau \uptau \) final state, as shown in Table 3, and a number of signal classes.

The background classes, which are based on the experimental signatures of groups of processes rather than individual processes, closely resemble the background model discussed in Sect. 5. The genuine \(\uptau \), \(\text {jet}\rightarrow \uptau _\textrm{h} \), and \(\text {jet}\rightarrow \ell \) classes are trained on data. The \({\textrm{t}}\bar{\textrm{t}}\) (tt) and diboson (db) classes are trained on simulated events from \({\textrm{t}}\bar{\textrm{t}}\) and diboson production, excluding those parts of the processes that are already covered by the \(\uptau \)-embedding method. The db class additionally subsumes single t quark production. The \({\textrm{Z}} \rightarrow \ell \ell \) (zll) classes in the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states are trained on simulated \({\textrm{Z}} \rightarrow {\textrm{ee}}\) and \({\textrm{Z}} \rightarrow \upmu \upmu \) events. Finally, the miscellaneous (misc) classes comprise those processes that are either difficult to isolate or too small to be treated as single templates for signal extraction. These are \({\textrm{Z}} \rightarrow \ell \ell \) events in the \({\textrm{e}} \upmu \) final state; diboson and single t quark production in the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states; and \({\textrm{t}}\bar{\textrm{t}}\), \({\textrm{Z}} \rightarrow \ell \ell \), diboson, and single t quark production in the \(\uptau _\textrm{h} \uptau _\textrm{h} \) final state.

Depending on the stage of the STXS measurement that the analysis targets, two different sets of NNs are used that differ in the number of signal classes. Two signal classes are defined for the stage-0 measurement, corresponding to the \({\textrm{ggH}}\) and \({\textrm{qqH}}\) processes. For the stage-1.2 measurement, the stage-0 processes are divided into 15 subclasses, splitting the signal events for training by their jet multiplicity and kinematic properties at the stable-particle level. This subdivision follows the STXS stage-1.2 scheme as shown in Figs. 1 and 2. The exception is the signal class for \({\textrm{ggH}}\) events with \(N_{\text {jet}} \ge 2\), which is subdivided into four additional STXS bins according to \(p_{\textrm{T}} ^{{\textrm{H}}}\) and \(m_{\textrm{jj}}\) to accommodate future combined coupling measurements.

The goal of this strategy is to achieve not only the best possible separation between the signal and each of the most relevant backgrounds, but also across all individual STXS stage-1.2 bins.

7.1 Neural network layout

For each measurement, a distinct NN is trained for each of the four \(\uptau \uptau \) final states. All NNs have a fully connected feed-forward architecture with two hidden layers of 200 nodes each. The activation function for the hidden nodes is the hyperbolic tangent. For the output layers the nodes are defined by the classes discussed in the previous section and the activation function is chosen to be the softmax function [92]. The NN output function \(y_{l}\) of each output node l can be interpreted as a Bayesian conditional probability for an event to be associated with event class l, given its input features \(\vec {x}\). This conditional probability interpretation does not take the prior of the production rate of each corresponding process into account.
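The layout described above can be sketched as a NumPy forward pass. The sketch has two fully connected hidden layers of 200 nodes with hyperbolic-tangent activation and a softmax output layer. It is an inference-only sketch: training, dropout, and L2 regularization are omitted. The input size of 17 is an assumption, namely the 14 features listed in the next paragraph plus three one-hot flags for the data-taking years 2016–2018. The 7 output classes correspond to the stage-0 training in the \(\upmu \uptau _\textrm{h} \) final state. The Glorot-uniform initialization with zero biases mirrors the description in Sect. 7.2.

```python
import numpy as np


def glorot_uniform(rng, n_in, n_out):
    """Glorot (Xavier) uniform initialization of a weight matrix."""
    limit = np.sqrt(6.0 / (n_in + n_out))
    return rng.uniform(-limit, limit, size=(n_in, n_out))


def init_params(rng, n_inputs, n_classes, n_hidden=200):
    """Two hidden layers of n_hidden nodes; biases start at zero."""
    sizes = [n_inputs, n_hidden, n_hidden, n_classes]
    return [(glorot_uniform(rng, a, b), np.zeros(b)) for a, b in zip(sizes[:-1], sizes[1:])]


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward(params, x):
    """Return y_l for every event (row of x) and output class l (column)."""
    h = x
    for w, b in params[:-1]:
        h = np.tanh(h @ w + b)
    w, b = params[-1]
    return softmax(h @ w + b)


rng = np.random.default_rng(1)
params = init_params(rng, n_inputs=17, n_classes=7)
y = forward(params, rng.normal(size=(4, 17)))  # each row sums to 1
```

The softmax guarantees that the \(y_{l}\) of each event are positive and sum to one. This is what allows the probability-like interpretation given above.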

In the \({\textrm{e}} \uptau _\textrm{h} \), \(\upmu \uptau _\textrm{h} \), and \(\uptau _\textrm{h} \uptau _\textrm{h} \) final states, each NN has the same 14 input features:
- the \(p_{\textrm{T}}\) of both \(\uptau \) candidates and their vector sum;
- the \(p_{\textrm{T}}\) of the two leading jets, their vector sum, their difference in \(\eta \), and \(m_{\textrm{jj}}\);
- \(N_{\text {jet}}\);
- the number of b jets \(N_{\text {Btag}}\);
- \(m_{\uptau \uptau }\);
- \(m_\text {vis}\);
- the estimates of the momentum transfer of each exchanged vector boson under the VBF hypothesis, as used in Ref. [93].

In the \({\textrm{e}} \upmu \) final state, events containing b jets have been excluded from the analysis, so \(N_{\text {Btag}}\) carries no discriminating information. Instead, \(m_{\textrm{T}} ^{{\textrm{e}} \upmu }\), which has some separating power, has been added as an input feature. These variables have been selected from a larger feature space based on their importance for the classification task, derived from the metric defined in Ref. [94]. Moreover, to account for differences in data-taking conditions, the data-taking year is provided to each NN as an additional input through one-hot encoding. The entry for the data-taking year of the event is set to 1, and the entries for all other data-taking years are set to 0.

Before entering the NNs, all input features are standardized such that their distributions have mean 0 and standard deviation 1. Features can be missing in a given event, such as \(m_{\textrm{jj}}\) for events with fewer than two selected jets. Such features are assigned a default value that is close enough to the range of the transformed values not to influence the NN decision.
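The input preprocessing can be sketched as follows: standardization, a default value for missing features, and one-hot encoding of the data-taking year. In this sketch, missing features are marked as NaN before standardization. The default value of \(-10\) in standardized units is a hypothetical choice because the text does not quote the value used. Deriving the mean and standard deviation from the training sample is also an assumption.

```python
import numpy as np

YEARS = (2016, 2017, 2018)  # one-hot positions for the data-taking years
DEFAULT = -10.0  # hypothetical default for missing features, in standardized units


def fit_standardizer(x):
    """Per-feature mean and standard deviation; NaN marks a missing feature."""
    return np.nanmean(x, axis=0), np.nanstd(x, axis=0)


def prepare_inputs(x, years, mean, std):
    """Standardize features, fill missing ones, and append the one-hot year flags."""
    z = (x - mean) / std
    z = np.where(np.isnan(z), DEFAULT, z)
    one_hot = (np.asarray(years)[:, None] == np.asarray(YEARS)[None, :]).astype(float)
    return np.hstack([z, one_hot])


x = np.array([[1.0, np.nan], [3.0, 2.0], [5.0, 4.0]])  # e.g. columns: m_vis, m_jj
mean, std = fit_standardizer(x)
inputs = prepare_inputs(x, [2016, 2017, 2018], mean, std)
```

The year flags are appended after standardization. They remain exact 0/1 indicators rather than being shifted and scaled like the continuous features.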

7.2 Neural network training

The samples discussed in Sect. 5 are used for the training of the NNs. The processes that are part of the misc event class are weighted according to their expected production rates, so that they represent the event mixture expected in the test samples.

The parameters to be optimized during training are the weights (\(\{w_{a}\}\)) and biases (\(\{b_{b}\}\)) of \(y_{l}\). The classification task is encoded in the NN loss function, chosen to be the categorical cross entropy

\[ L^{(k)} = -\sum _{l} y_{p}^{\prime (k)} \ln y_{l} ^{(k)}\big (\{w_{a}\},\{b_{b}\},\{\vec {x}_{p}^{(k)} \}\big ), \]

where k indicates the event on which L is evaluated. All training events are implicitly labeled by the true process p to which they belong. The NN output function for event k to belong to event class l is given by \(y_{l} ^{(k)}\). The function \(y_{p}^{\prime (k)}\) encodes the prior knowledge of the training: it is 1 if the event class l coincides with p, and 0 otherwise. The \(y_{l} ^{(k)}\) depend on the weights, biases, and input features \(\{\vec {x}_{p}^{(k)} \}\) of event k to the corresponding NN. Before training, the weights are initialized with random numbers using the Glorot initialization technique [95], with values drawn from a uniform distribution, and the biases are initialized with zero. The training is then performed as a minimization of the empirical risk functional

\[ R\big (\{w_{a}\},\{b_{b}\}\big ) = \frac{1}{N}\sum _{k=1}^{N} L^{(k)}, \]

where the sum runs over the N events on which R is evaluated. The minimization is carried out in the space of \(\{w_{a}\}\) and \(\{b_{b}\}\). It uses randomly sampled mini-batches of 30 events per signal and background class and data-taking year, drawn from the training data set with a balanced batch approach [96]. This approach has shown improved convergence properties on training samples with highly imbalanced sizes across classes. The batch definition guarantees that all true event classes enter the evaluation of R with equal weight, i.e., with uniform prevalence. On each mini-batch, a gradient step is applied. The step is defined by the partial derivatives of L with respect to each weight, \(w_{a}\), and bias, \(b_{b}\), and uses the Adam minimization algorithm [97] with a constant learning rate of \(10^{-4}\).
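The balanced mini-batch sampling and the cross-entropy risk can be sketched in NumPy. This is a minimal sketch, assuming integer class labels and one-hot targets. Sampling with replacement within each (class, year) group is an assumption because the text does not specify it. The group labels are hypothetical. The gradient step itself, for example with Adam, is left to an autodiff framework and is not shown.

```python
import numpy as np


def balanced_batch(rng, indices_by_group, n_per_group=30):
    """Draw n_per_group event indices from every (class, year) group.

    Sampling with replacement is an assumption; it lets small groups fill their quota.
    """
    return np.concatenate([
        rng.choice(np.asarray(idx), size=n_per_group, replace=True)
        for idx in indices_by_group.values()
    ])


def cross_entropy(y_pred, true_class, eps=1e-12):
    """Per-event L = -sum_l y'_l ln y_l with one-hot targets y'_l."""
    picked = y_pred[np.arange(len(true_class)), true_class]
    return -np.log(np.clip(picked, eps, 1.0))


def empirical_risk(y_pred, true_class):
    """R as the mean of L over the events of the (mini-)batch."""
    return float(np.mean(cross_entropy(y_pred, true_class)))


rng = np.random.default_rng(0)
groups = {("ggH", 2016): np.arange(0, 1000), ("qqH", 2016): np.arange(1000, 1040)}
batch = balanced_batch(rng, groups)  # 30 + 30 indices despite the 1000:40 imbalance
```

With one-hot targets, only the \(y_{l}\) of the true class contributes to L. A network that outputs uniform probabilities over four classes therefore has \(R=\ln 4\).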

To guarantee statistical independence, events that are used for training are not used for any other step of the analysis. The performance of the NN during training is monitored by evaluating R on a validation subset comprising 25% of the events of the training sample, chosen at random and excluded from the gradient computation. The training is stopped if R evaluated on the validation data set does not decrease any further over 50 consecutive epochs, where an epoch is defined as 1000 mini-batches. The NNs used for the analysis are then defined by the weights and biases of the epoch with the minimal value of R on the validation sample. Two regularization techniques are used to improve the generalization of the NNs. First, a dropout layer with a dropout probability of 30% is added after each hidden layer. Second, the weights of the NNs are subject to an L2 (Tikhonov) regularization [98] with a regularization factor of \(10^{-5}\).
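The early-stopping rule can be written as a short function. It stops after 50 epochs without a decrease of the validation R and keeps the epoch with minimal R. For clarity, this sketch operates on a precomputed list of validation risks. In an actual training loop, R would be evaluated after each epoch of 1000 mini-batches. The history used below is hypothetical.

```python
def early_stopping(val_risks, patience=50):
    """Return (best_epoch, stop_epoch) for a sequence of validation risks R.

    Training stops once R has not decreased for `patience` consecutive epochs;
    the network of best_epoch (minimal R) is the one that is kept.
    """
    best_risk, best_epoch = float("inf"), -1
    for epoch, risk in enumerate(val_risks):
        if risk < best_risk:
            best_risk, best_epoch = risk, epoch
        elif epoch - best_epoch >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_risks) - 1


# Hypothetical validation history: R stops improving after epoch 2
history = [0.90, 0.80, 0.75] + [0.76] * 60
print(early_stopping(history))  # (2, 52)
```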

The NNs draw their power not only from the isolated values of each feature, but also from correlations across features. To provide an objective statistical measure of how well the inclusive NN feature space is modeled, goodness-of-fit (GoF) tests have been performed on the 1D histograms of all features and on the 2D histograms of all pairwise combinations of features. These tests have been performed prior to applying the NNs to the test data set and the data. They are based on a saturated likelihood model, as described in Ref. [99]. They exploit the data model described in Sect. 5 and include all systematic uncertainties of the model, and their correlations, as used for signal extraction. In these tests, we generally observe agreement of the model with the data within 5–10% of the event density. This is well within the combined systematic variations of the uncertainty model, which typically amount to 10–15% prior to the maximum likelihood fit used for signal extraction.
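For independent Poisson bins, the saturated-model test statistic is \(-2\ln (\mathcal {L}_\text {model}/\mathcal {L}_\text {sat})\). In the saturated model, each bin expectation equals the observed count. The sketch below shows this statistic for fixed expectations only. It is a simplified illustration. It does not profile the systematic nuisance parameters that enter the actual GoF tests, and it does not compute the p-value, which would typically be obtained from pseudo-experiments.

```python
import numpy as np


def saturated_gof(observed, expected):
    """-2 ln(L_model / L_saturated) for independent Poisson-distributed bins.

    The saturated model sets each expectation equal to the observed count;
    the term n ln(n / nu) is taken as 0 for empty bins.
    """
    n = np.asarray(observed, dtype=float)
    nu = np.asarray(expected, dtype=float)
    safe_n = np.where(n > 0, n, 1.0)  # avoids log(0); masked out below
    log_term = np.where(n > 0, n * np.log(safe_n / nu), 0.0)
    return float(2.0 * np.sum(nu - n + log_term))


print(saturated_gof([10, 0], [10, 2]))  # 4.0
```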

7.3 Characterization of the classification task

The overall success of the NNs in adapting to the given classification task is monitored with the help of confusion matrices. Examples of such confusion matrices for the STXS stage-0 and -1.2 measurements, evaluated on the full analyzed data set in the \(\upmu \uptau _\textrm{h} \) final state, are shown in Fig. 4. For the given representation the matrices have been normalized such that all entries in each column, corresponding to a given true event class, sum to unity. The values on the diagonals in the figure thus represent the sensitivity of the NN to each given true event class. Random association would lead to a uniform distribution across predicted event classes with a weight of one out of seven (twenty) for the stage-0 (-1.2) training.
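A column-normalized confusion matrix of the kind described above can be computed as follows. The sketch assumes integer class labels and optional per-event weights, which would be relevant for simulated events. Rows index the predicted class and columns the true class, so the diagonal holds the sensitivities.

```python
import numpy as np


def column_normalized_confusion(true_class, pred_class, n_classes, weights=None):
    """Confusion matrix with entry [predicted, true], normalized per true class.

    Each column (true class) sums to 1, so the diagonal holds the sensitivities.
    """
    true_class = np.asarray(true_class)
    pred_class = np.asarray(pred_class)
    w = np.ones(len(true_class)) if weights is None else np.asarray(weights, dtype=float)
    cm = np.zeros((n_classes, n_classes))
    np.add.at(cm, (pred_class, true_class), w)  # accumulate (weighted) event counts
    col_sums = cm.sum(axis=0, keepdims=True)
    return np.divide(cm, col_sums, out=np.zeros_like(cm), where=col_sums > 0)


cm = column_normalized_confusion([0, 0, 1, 1], [0, 1, 1, 1], n_classes=2)
# cm == [[0.5, 0.0], [0.5, 1.0]]
```

With n classes and random assignment, every entry would tend to 1/n, which is the reference value quoted above.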

Confusion matrices for the NN classification tasks used for the (upper) STXS stage-0/inclusive and (lower) STXS stage-1.2 cross section measurements described in Sect. 7. These confusion matrices have been evaluated on the full test data set, comprising all data-taking years in the \(\upmu \uptau _\textrm{h} \) final state. They are normalized such that all entries in each column, corresponding to a given true event class, sum to unity.

For the stage-0 classification, sensitivities of 70% or larger are observed for the \({\textrm{qqH}}\), genuine \(\uptau \), tt, and zll classes. The \(\text {jet}\rightarrow \uptau _\textrm{h} \) and misc classes have less prominent identifying features, partly because each comprises several different processes. The \({\textrm{ggH}}\) class also reveals ambiguities, especially with respect to the \({\textrm{qqH}}\) and genuine \(\uptau \) classes. Adding the fractions of true \({\textrm{ggH}}\) events in these three NN output classes gives a sensitivity of 79% for distinguishing \({\textrm{ggH}}\) events from events without genuine \(\uptau \) leptons in the final state. This hints at the importance of \(\uptau \)-related features for the NN response in this case. The distinction of \({\textrm{ggH}}\) from genuine \(\uptau \) events mostly relies on features related to \(p_{\textrm{T}} ^{{\textrm{H}}}\), such as the vector sum \(p_{\textrm{T}}\) of the two \(\uptau \) candidates. On the other hand, \({\textrm{ggH}}\) events with high \(p_{\textrm{T}} ^{{\textrm{H}}}\) tend to have higher jet multiplicities, which makes them harder to distinguish from \({\textrm{qqH}}\) events. These trends are confirmed by the confusion matrix of the stage-1.2 classification, which reveals larger off-diagonal elements in two places. \({\textrm{ggH}}\) events with \(N_{\text {jet}} =0\) and low \(p_{\textrm{T}} ^{{\textrm{H}}}\) are confused with genuine \(\uptau \) events, and \({\textrm{ggH}}\) events with \(N_{\text {jet}} \ge 2\) and \(m_{\textrm{jj}} >350 \,\text {GeV} \) with \({\textrm{qqH}}\) events.

The stage-1.2 classification reveals high sensitivities to the \({\textrm{qqH}}\) signal classes with high \(m_{\textrm{jj}}\) and the \({\textrm{ggH}}\) signal classes with high \(p_{\textrm{T}} ^{{\textrm{H}}}\). Larger confusion, standing out from the general trend, is observed for \({\textrm{qqH}}\) events with \(N_{\text {jet}} <2\) or \(m_{\textrm{jj}} <350\,\text {GeV} \). We observe that 70% of these events migrate from their original truth-labeled class into one of three \({\textrm{ggH}}\) NN output classes with \(N_{\text {jet}} \ge 2\) and \(m_{\textrm{jj}} <350\,\text {GeV} \). This can be explained by the similarity of the observable signatures. For \({\textrm{ggH}}\) events with \(N_{\text {jet}} \ge 2\) and \(m_{\textrm{jj}} <350\,\text {GeV} \), the reconstructed jets may originate from the matrix element calculation, but most probably they emerge from the parton-shower model. In the case of initial-state radiation, \(m_{\textrm{jj}}\) can take large values and thus mimic the VBF signature in the detector. The NN associates 76% of the \({\textrm{qqH}}\) events originating from the bin under discussion with NN output classes with \(N_{\text {jet}} \ge 2\) and \(m_{\textrm{jj}} <350\,\text {GeV} \). This indicates the experimental signature that the NN has identified as decisive for this classification. No migrations from the corresponding \({\textrm{ggH}}\) bins into the \({\textrm{qqH}}\) bin are observed. This can be explained by the more specific signatures in the \({\textrm{ggH}}\) bins, which are additionally split in \(p_{\textrm{T}} ^{{\textrm{H}}}\). The \({\textrm{qqH}}\) bin, which is inclusive in \(p_{\textrm{T}} ^{{\textrm{H}}}\), acts like a superclass to these \({\textrm{ggH}}\) bins in this respect. An event that is compatible with a certain \(p_{\textrm{T}} ^{{\textrm{H}}}\) hypothesis will be associated with one of the \({\textrm{ggH}}\) classes rather than the \({\textrm{qqH}}\) class.
The background processes are identified with a sensitivity comparable to the stage-0 training. The slightly larger confusion can be understood by the increased number of classes and therefore increased variety of signatures that a given event can be associated with.

We visualize the influence of single features and their pairwise linear correlations on the NN response using the metric \(\langle t_{\alpha }\rangle \), as described in Ref. [94]. The metric is based on Taylor expansions of the \(y_{l}\) after training with respect to the features \(\vec {x}\), up to second order. For a classic gradient descent training, \(\langle t_{\alpha }\rangle \) corresponds to the mean of the absolute values of the given Taylor coefficient, obtained from the whole sampled input space. In this way, we identify \(m_{\uptau \uptau }\), \(m_\text {vis}\), \(m_{\textrm{jj}}\), and especially correlations across these features as most influential for the NN classification. For the stage-0 \({\textrm{ggH}}\) event class, we identify \(m_{\uptau \uptau }\), \(m_\text {vis}\), and the corresponding (self-) correlations of \(m_{\uptau \uptau }\) and \(m_\text {vis}\) as the most important characteristics for identification. The term self-correlation corresponds to the second derivative in the Taylor expansion; it reveals, e.g., that the signal is peaking in the \(m_{\uptau \uptau }\) and \(m_\text {vis}\) distributions. These characteristics are shared across the \({\textrm{ggH}}\), \({\textrm{qqH}}\), and genuine \(\uptau \) event classes, which explains the relatively high degree of confusion across these categories for the stage-0 training. The separation of \({\textrm{ggH}}\) from \({\textrm{qqH}}\) events mostly relies on characteristics related to \(m_{\textrm{jj}}\). As discussed previously, this is more difficult for \({\textrm{ggH}}\) events with \(N_{\text {jet}} \ge 2\). The distinction of genuine \(\uptau \) events mostly relies on the vector \(p_{\textrm{T}}\) sum of the two \(\uptau \) candidates and on \(m_{\uptau \uptau }\).
For the tt event class, we find that the information that \({\textrm{t}}\bar{\textrm{t}}\) events are nonpeaking in \(m_\text {vis}\) and \(m_{\uptau \uptau }\) contributes as much as \(N_{\text {Btag}}\) and the jet properties to the observed high sensitivity. These findings, which similarly apply to all final states, demonstrate that the NNs have indeed captured the features that are expected to provide the best discrimination between event classes.
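A rough version of the \(\langle t_{\alpha }\rangle \) metric can be obtained numerically. The sketch below approximates the first- and second-order derivatives of a single output \(y_{l}\) with central finite differences and averages their absolute values over a sample of inputs. This is an illustration, not the implementation of Ref. [94]. The toy function stands in for a trained NN, and the step size is an assumption. Combinatorial factors such as the 1/2 of the quadratic Taylor terms are not applied.

```python
import numpy as np


def taylor_metrics(f, x, h=1e-3):
    """Mean absolute first- and second-order derivatives of a scalar NN output.

    f: callable mapping an (n_events, n_features) array to n_events outputs y_l
    x: sample of (standardized) input features
    Returns t1[i] = <|dy/dx_i|> and t2[i, j] = <|d2y/dx_i dx_j|>,
    both estimated with central finite differences.
    """
    n_feat = x.shape[1]
    step = np.eye(n_feat) * h
    t1 = np.array([np.mean(np.abs((f(x + step[i]) - f(x - step[i])) / (2 * h)))
                   for i in range(n_feat)])
    t2 = np.empty((n_feat, n_feat))
    for i in range(n_feat):
        for j in range(n_feat):
            d2 = (f(x + step[i] + step[j]) - f(x + step[i] - step[j])
                  - f(x - step[i] + step[j]) + f(x - step[i] - step[j])) / (4 * h * h)
            t2[i, j] = np.mean(np.abs(d2))
    return t1, t2


def toy_output(x):
    """Toy stand-in for one NN output: y = x0^2 + 3 x0 x1."""
    return x[:, 0] ** 2 + 3 * x[:, 0] * x[:, 1]


sample = np.random.default_rng(2).normal(size=(500, 2))
t1, t2 = taylor_metrics(toy_output, sample)
# t2 is close to [[2, 3], [3, 0]]: the x0 self-correlation and the x0-x1 correlation
```

A large diagonal entry such as `t2[0, 0]` flags curvature in a single feature, which is the "self-correlation" signature of a peaking distribution. Off-diagonal entries flag pairwise correlations.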

7.4 Classification and discriminants for signal extraction

For each event, the maximum of the \(y_{l}\) over all classes l defines the class to which the event is assigned. This maximum ranges from one over the number of classes, for events that cannot be assigned unambiguously, to one, for events that can clearly be associated with the corresponding class. For each class, the histogrammed distribution of \(y_{l}\) for the events assigned to it is used as input for signal extraction.
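The class assignment and the per-class histograms can be sketched as follows. Each event is assigned to the class with the largest \(y_{l}\), and that \(y_{l}\) is histogrammed on \([1/n_\text {classes}, 1]\). The equal-width binning is a hypothetical choice for illustration; the binning used in the analysis is not specified here.

```python
import numpy as np


def assign_classes(y):
    """Assign each event to the class with maximal y_l; return the class and that y_l."""
    y = np.asarray(y, dtype=float)
    cls = np.argmax(y, axis=1)  # ties resolve to the lowest class index
    return cls, y[np.arange(len(y)), cls]


def class_histograms(y, n_bins=10):
    """Histogram max(y_l) separately for each assigned class on [1/n_classes, 1]."""
    y = np.asarray(y, dtype=float)
    n_classes = y.shape[1]
    cls, y_max = assign_classes(y)
    edges = np.linspace(1.0 / n_classes, 1.0, n_bins + 1)
    return {l: np.histogram(y_max[cls == l], bins=edges)[0] for l in range(n_classes)}


y = [[0.5, 0.3, 0.2], [0.1, 0.8, 0.1], [1 / 3, 1 / 3, 1 / 3]]
hists = class_histograms(y)
```

The lower edge \(1/n_\text {classes}\) is the smallest value the maximum can take, reached only for a completely ambiguous event such as the third one above.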

For the stage-1.2 measurement, this leads to 18 input distributions for all signal and background classes in the \(\uptau _\textrm{h} \uptau _\textrm{h} \) final state and 20 input distributions in the \({\textrm{e}} \upmu \), \({\textrm{e}} \uptau _\textrm{h} \), and \(\upmu \uptau _\textrm{h} \) final states, for each data-taking year. Sample distributions of \(y_{l}\), combined for all data-taking years, are shown in Fig. 5. Here and in all figures of that kind the data are represented by the black points, where the error bars indicate the statistical uncertainty of the event count in each corresponding histogram bin. The processes of the background model are represented by the stacked filled histograms.

Observed and predicted distributions of \(y_{l}\) for the signal classes of the \({\textrm{ggH}}\) \(N_{\text {jet}} =1\) subspace in three increasing STXS stage-1.2 bins in \(p_{\textrm{T}} ^{{\textrm{H}}}\), and for the \({\textrm{qqH}}\) \(N_{\text {jet}} \ge 2\) bin with \(m_{\textrm{jj}} \ge 700\,\text {GeV} \) and \(p_{\textrm{T}} ^{{\textrm{H}}} \in [0, 200]\,\text {GeV} \), in the \(\upmu \uptau _\textrm{h} \) final state of the NN-analysis. The distributions show all data-taking years combined. The signal contributions of (red) the STXS bin corresponding to the given event class, and of the remaining inclusive (blue) \({\textrm{ggH}}\) and (orange) \({\textrm{qqH}}\) processes, excluding the STXS bin under consideration, are also shown as unstacked open histograms. All distributions are shown after the fit of the model used for the extraction of the STXS stage-1.2 signals to the data from all final states and data-taking years; the signal contributions in particular have been scaled according to the fit results. The lower panel of each plot shows the differences of either the corresponding additional signals or the data relative to the background expectation after the fit.

For the stage-0 measurement, the training with two signal categories is used, resulting in five event classes in the \(\uptau _\textrm{h} \uptau _\textrm{h} \) final state and seven event classes in the \({\textrm{e}} \upmu \), \({\textrm{e}} \uptau _\textrm{h} \), and \(\upmu \uptau _\textrm{h} \) final states, for each data-taking year. For this measurement, the two signal classes are combined into a 2D discriminant in \(y_{{\textrm{ggH}}}\) and \(y_{{\textrm{qqH}}}\). The binning scheme of this discriminant and the distribution of the unrolled discriminant for all data-taking years combined, in the \(\upmu \uptau _\textrm{h} \) final state, are shown in Fig. 6. The binning comprises up to 11 bins in \(y_{{\textrm{qqH}}}\) and up to seven bins in \(y_{{\textrm{ggH}}}\). Vertical gray dashed lines in Fig. 6 (left) indicate the main groups of bins in \(y_{{\textrm{qqH}}}\), with \(y_{{\textrm{qqH}}}\) increasing from group to group from left to right. For bins 0–20, the bins within each main group are ordered by increasing \(y_{{\textrm{ggH}}}\). The last main group, on the right of the figure, is ordered by \(y_{{\textrm{qqH}}}\) only. The same input distributions are also used for a measurement of the inclusive H production cross section. In both figures, the lower panel of each plot shows the differences of either the corresponding additional signals or the data relative to the background expectation after the fit used for signal extraction.
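The unrolling of the 2D discriminant into a single 1D distribution can be sketched as below. The bin edges are hypothetical placeholders (the actual scheme is the one shown in Fig. 6); the sketch only illustrates the ordering described above: main groups in \(y_{{\textrm{qqH}}}\) from left to right, sub-bins in \(y_{{\textrm{ggH}}}\) within each group, and a last group subdivided in \(y_{{\textrm{qqH}}}\) only.

```python
from bisect import bisect_right
from typing import Sequence, Tuple


def find_bin(value: float, edges: Sequence[float]) -> int:
    """Index i with edges[i] <= value < edges[i+1]; the upper edge belongs to the last bin."""
    if not edges[0] <= value <= edges[-1]:
        raise ValueError(f"{value} outside [{edges[0]}, {edges[-1]}]")
    return min(bisect_right(edges, value) - 1, len(edges) - 2)


def unrolled_index(y_qqh: float, y_ggh: float,
                   group_edges: Sequence[float],
                   sub_binning: Sequence[Tuple[str, Sequence[float]]]) -> int:
    """Map (y_qqH, y_ggH) to one bin of the unrolled 1D discriminant.

    group_edges defines the main groups in y_qqH. sub_binning[g] is either
    ("ggH", edges) to subdivide group g in y_ggH, or ("qqH", edges) to
    subdivide it in y_qqH only (as for the last group).
    """
    g = find_bin(y_qqh, group_edges)
    # All bins of the groups to the left come first in the unrolled histogram.
    offset = sum(len(edges) - 1 for _, edges in sub_binning[:g])
    variable, edges = sub_binning[g]
    value = y_ggh if variable == "ggH" else y_qqh
    return offset + find_bin(value, edges)
```

With two hypothetical main groups, `[0.0, 0.5, 1.0]`, the first split into three \(y_{{\textrm{ggH}}}\) bins and the second into two \(y_{{\textrm{qqH}}}\) bins, an event with \(y_{{\textrm{qqH}}} = 0.9\) falls into unrolled bin 4, after the three bins of the first group and the first bin of the second.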

Observed and predicted distributions of the 2D discriminant used for the extraction of the STXS stage-0 and inclusive signals, for all data-taking years combined, in the \(\upmu \uptau _\textrm{h} \) final state of the NN-analysis. The definition of each individual bin is shown on the right. The background distributions are shown after the fit of the model used for the extraction of the inclusive signal to the data from all final states and data-taking years. For this fit, some of the bins have been merged to ensure a sufficient population in each bin. The distributions are shown with the finest binning common to all data-taking years. The signal contributions of the (red) inclusive, (blue) \({\textrm{ggH}}\), and (orange) \({\textrm{qqH}}\) signals are also shown as unstacked open histograms, scaled according to the corresponding fit results. The vertical gray dashed lines indicate six primary bins in \(y_{{\textrm{qqH}}}\); in the four rightmost of these, the distributions have been scaled up by factors ranging from 3 to 15 for better visibility. The lower panel of the plot on the left shows the differences of either the corresponding additional signals or the data relative to the background expectation after the fit.

8 Cut-based analysis

For the CB-analysis, the event selection described in Sect. 4 is modified and extended in a few places. To reduce the contamination from \({\textrm{W}} \)+jets production in the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states, a requirement of \(m_{\textrm{T}} ^{\ell }<50\,\text {GeV} \) is imposed. To suppress the background from \({\textrm{t}}\bar{\textrm{t}}\) production, events that contain at least one b jet are excluded from the selection not only in the \({\textrm{e}} \upmu \) final state, but also in the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states. For similar reasons, an additional requirement of \(D_{\zeta } >-30\,\text {GeV} \) is imposed in the \({\textrm{e}} \upmu \) final state, on the event variable \(D_{\zeta }\) defined as

\[ D_{\zeta } = p_{\zeta }^\text {miss} - 0.85\, p_{\zeta }^\text {vis}, \qquad p_{\zeta }^\text {miss} = {\vec p}_{\textrm{T}}^{\text {miss}} \cdot \hat{\zeta }, \qquad p_{\zeta }^\text {vis} = \left( {\vec p}_{\textrm{T}}^{\,{\textrm{e}}} + {\vec p}_{\textrm{T}}^{\,\upmu } \right) \cdot \hat{\zeta }, \]

where \(\hat{\zeta }\) is the bisector of the electron and muon directions at the PV in the transverse plane [100]. The variables \(p_{\zeta }^\text {miss}\) and \(p_{\zeta }^\text {vis}\) can each take positive or negative values. Their linear combination has been chosen to optimize the sensitivity of the analysis in the \({\textrm{e}} \upmu \) final state. A more detailed discussion of the variable \(D_{\zeta }\) is given in Ref. [65]. Finally, the requirement on \(I_{\text {rel}}^{\upmu }\) is tightened from 0.20 to 0.15 in the \({\textrm{e}} \upmu \) final state.
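As an illustration of how \(D_{\zeta }\) is evaluated in the transverse plane, the following sketch computes the bisector \(\hat{\zeta }\) from the electron and muon directions and projects the missing and visible transverse momenta onto it. The weight of 0.85 on \(p_{\zeta }^\text {vis}\) is an assumption of this sketch and is exposed as a parameter; the function names are hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # (p_x, p_y) in GeV


def _unit(v: Vec2) -> Vec2:
    norm = math.hypot(v[0], v[1])
    if norm == 0.0:
        # e.g. exactly back-to-back leptons: the bisector is undefined
        raise ValueError("direction undefined for a zero vector")
    return (v[0] / norm, v[1] / norm)


def _dot(a: Vec2, b: Vec2) -> float:
    return a[0] * b[0] + a[1] * b[1]


def d_zeta(pt_e: Vec2, pt_mu: Vec2, pt_miss: Vec2, alpha: float = 0.85) -> float:
    """D_zeta = p_zeta^miss - alpha * p_zeta^vis (alpha = 0.85 assumed here)."""
    u_e, u_mu = _unit(pt_e), _unit(pt_mu)
    # zeta-hat: bisector of the electron and muon transverse directions
    zeta = _unit((u_e[0] + u_mu[0], u_e[1] + u_mu[1]))
    p_zeta_miss = _dot(pt_miss, zeta)
    p_zeta_vis = _dot((pt_e[0] + pt_mu[0], pt_e[1] + pt_mu[1]), zeta)
    return p_zeta_miss - alpha * p_zeta_vis


def passes_dzeta_cut(pt_e: Vec2, pt_mu: Vec2, pt_miss: Vec2, threshold: float = -30.0) -> bool:
    """Selection requirement D_zeta > -30 GeV of the e-mu final state."""
    return d_zeta(pt_e, pt_mu, pt_miss) > threshold
```

For an electron along \(x\) and a muon along \(y\), each with 30 GeV, and \({\vec p}_{\textrm{T}}^{\text {miss}} = (20, 20)\) GeV pointing along \(\hat{\zeta }\), the sketch gives \(D_{\zeta } = (40 - 0.85 \cdot 60)/\sqrt{2} \approx -7.8\) GeV, which passes the requirement; missing momentum pointing opposite to \(\hat{\zeta }\), as is more typical for \({\textrm{t}}\bar{\textrm{t}}\) events with additional neutrinos not aligned with the leptons, drives \(D_{\zeta }\) strongly negative.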

Signal composition of the subcategories of the CB-analysis in terms of the STXS stage-1.2 bins (in %). The rows correspond to the signal categories described in the text, including the signal categories of the \({\textrm{VH}}\)-analysis described in Sect. 9. The columns refer to the STXS bins specified in Figs. 1, 2, and 3. The STXS stage-1.2 \({\textrm{qqH}}\) bin with \(N_{\text {jet}} <2\) or \(m_{\textrm{jj}} <350\,\text {GeV} \) is labeled “qqH/non-VBF-topo”. This figure is based on all \(\uptau \uptau \) final states.

After the selection, event categories are designed to increase the sensitivity to the signal by isolating regions with large signal-to-background ratios, and to provide sensitivity to the stage-0 and -1.2 \({\textrm{ggH}}\) and \({\textrm{qqH}}\) STXS bins.

Events are distributed into categories that separate the H production modes corresponding to the stage-0 processes of the STXS scheme. A 0-jet category collects events with no reconstructed jet. This category predominantly contains background, but also some signal events, which mainly originate from \({\textrm{ggH}}\) production; it therefore acts mainly as a control region for backgrounds. Two event categories target \({\textrm{qqH}}\) production. Depending on the \(\uptau \uptau \) final state, they are defined by the presence of at least two jets with \(m_{\textrm{jj}} >350\,\text {GeV} \) or with a large separation in \(\eta \) (\(\varDelta \eta _\mathrm {{jj}}\)), and are distinguished by an estimate of \(p_{\textrm{T}} ^{{\textrm{H}}}\) (\(\hat{p}_{\textrm{T}}^{{\textrm{H}}}\)) larger or smaller than 200\(\,\text {GeV}\), where \(\hat{p}_{\textrm{T}}^{{\textrm{H}}}\) is obtained from the vector sum of the \({\vec p}_{\textrm{T}}\) of the two \(\uptau \) candidates and \({\vec p}_{\textrm{T}}^{\text {miss}}\). All other events enter two so-called “boosted” categories, which are distinguished by the presence of exactly one or at least two jets in the event. The boosted categories are expected to contain mostly \({\textrm{ggH}}\) events with the H recoiling against one or several jets, but they also contain contributions from \({\textrm{qqH}}\) events that did not pass the VBF category selection. This leads to five categories for each \(\uptau \uptau \) final state.
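The category logic can be summarized in a short sketch. It assumes that the VBF categories require at least two jets, as needed to define \(m_{\textrm{jj}}\), and uses the \(m_{\textrm{jj}} >350\,\text {GeV} \) criterion by default; since the text states that the jet criterion depends on the final state (the exact definitions are in Table 4), it is passed in as a replaceable predicate. The `Event` container, the category labels, and any \(\varDelta \eta _\mathrm {{jj}}\) threshold are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

Vec2 = Tuple[float, float]  # transverse momentum vector (p_x, p_y) in GeV


@dataclass
class Event:
    n_jets: int
    mjj: Optional[float]      # GeV; None if fewer than two jets
    deta_jj: Optional[float]  # |Delta eta| of the two leading jets; None if fewer than two jets
    tau1_pt: Vec2
    tau2_pt: Vec2
    met: Vec2


def pt_h_hat(ev: Event) -> float:
    """Estimate of p_T^H: magnitude of p_T(tau1) + p_T(tau2) + p_T^miss."""
    px = ev.tau1_pt[0] + ev.tau2_pt[0] + ev.met[0]
    py = ev.tau1_pt[1] + ev.tau2_pt[1] + ev.met[1]
    return math.hypot(px, py)


def mjj_criterion(ev: Event) -> bool:
    return ev.mjj is not None and ev.mjj > 350.0


def cb_category(ev: Event, vbf_jets: Callable[[Event], bool] = mjj_criterion) -> str:
    if ev.n_jets == 0:
        return "0-jet"
    if ev.n_jets >= 2 and vbf_jets(ev):
        return "VBF high-pT" if pt_h_hat(ev) > 200.0 else "VBF low-pT"
    # Everything else: boosted, split by jet multiplicity
    return "boosted 1-jet" if ev.n_jets == 1 else "boosted >=2-jet"
```

A \(\varDelta \eta _\mathrm {{jj}}\)-based selection can be supplied as, e.g., `vbf_jets=lambda e: e.deta_jj > 4.0`, where the threshold of 4.0 is purely a placeholder. The ordering of the checks mirrors the text: events failing both the 0-jet and VBF selections fall into the boosted categories.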

In each of these categories, 2D distributions are then built to provide more granularity for the analysis. The observables for these distributions are chosen to separate the signal from the backgrounds, but also to provide additional sensitivity to the individual STXS stage-1.2 bins. One of the observables is always chosen to be \(m_{\uptau \uptau }\). In the 0-jet category of the \({\textrm{e}} \uptau _\textrm{h} \) and \(\upmu \uptau _\textrm{h} \) final states, the \(p_{\textrm{T}}\) of the \(\uptau _\textrm{h}\) candidate is taken as a second observable, as the contribution from backgrounds with misidentified \(\uptau _\textrm{h}\) candidates significantly decreases with \(p_{\textrm{T}}\). In the \({\textrm{e}} \upmu \) and \(\uptau _\textrm{h} \uptau _\textrm{h} \) final states, where the sensitivity to 0-jet signal events is low, no second observable is chosen, and 1D distributions are used. In the VBF categories, the second observable is \(m_{\textrm{jj}}\). In addition to aligning with the definition of the STXS stage-1.2 \({\textrm{qqH}}\) bins, using this variable as an observable increases the analysis sensitivity to the \({\textrm{qqH}}\) process as a whole, since the signal-to-background ratio quickly increases with increasing values of \(m_{\textrm{jj}}\). In the boosted categories, the second observable is chosen to be \(\hat{p}_{\textrm{T}}^{{\textrm{H}}}\).
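The choice of observables per category and final state described above amounts to a small lookup, sketched here with hypothetical string labels for categories, final states, and observables.

```python
from typing import Tuple


def cb_observables(category: str, final_state: str) -> Tuple[str, ...]:
    """Observables of the CB-analysis distributions per category and final state.

    Labels are hypothetical: categories "0-jet", "VBF ...", "boosted ...";
    final states "emu", "etau_h", "mutau_h", "tau_htau_h".
    """
    if category == "0-jet":
        # tau_h pT separates backgrounds with misidentified tau_h candidates
        if final_state in ("etau_h", "mutau_h"):
            return ("m_tautau", "pt_tau_h")
        return ("m_tautau",)  # e-mu and tau_h-tau_h: 1D distributions
    if category.startswith("VBF"):
        return ("m_tautau", "m_jj")
    if category.startswith("boosted"):
        return ("m_tautau", "pt_H_hat")
    raise ValueError(f"unknown category: {category}")
```

In every case \(m_{\uptau \uptau }\) is the first observable; only the second one changes with the category.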

The category definitions and the observables used in each category are summarized in Table 4. Figure 7 shows the composition of the categories in terms of signal in the individual STXS stage-1.2 bins, integrated over all \(\uptau \uptau \) final states. The subcategories on the vertical axis of the figure follow from the categorization given in Table 4 and the binning in \(m_{\textrm{jj}}\) and \(\hat{p}_{\textrm{T}}^{{\textrm{H}}}\) of the corresponding 2D distributions. In the boosted categories, generally at least 50% of the signal originates from the corresponding STXS bin, but there are migrations between adjacent \(p_{\textrm{T}} ^{{\textrm{H}}}\) bins because of the limited resolution of \(\hat{p}_{\textrm{T}}^{{\textrm{H}}}\), as well as contributions from \({\textrm{ggH}}\) events with 0 jets in the low-\(\hat{p}_{\textrm{T}}^{{\textrm{H}}}\) subcategories, and from \({\textrm{qqH}}\) events with 1 or 2 jets and low \(m_{\textrm{jj}}\) in the boosted subcategories with high \(\hat{p}_{\textrm{T}}^{{\textrm{H}}}\). In the VBF categories, there is a mixture of \({\textrm{ggH}}\) and \({\textrm{qqH}}\) events with high \(m_{\textrm{jj}}\), as well as limited contributions from \({\textrm{ggH}}\) events with lower \(m_{\textrm{jj}}\) but high \(p_{\textrm{T}} ^{{\textrm{H}}}\). In the 2D distributions, the VBF categories with \(m_{\textrm{jj}} >700\,\text {GeV} \) are subdivided by additional \(m_{\textrm{jj}}\) thresholds of up to 1800\(\,\text {GeV}\), depending on the category and final state. This binning provides additional separation between \({\textrm{ggH}}\) and \({\textrm{qqH}}\) events in the VBF categories. The 2D distributions in the boosted \({\ge }2\)-jet category for the \({\textrm{e}} \upmu \), \(\ell \uptau _\textrm{h} \), and \(\uptau _\textrm{h} \uptau _\textrm{h} \) final states, for all data-taking years combined, are shown in Fig. 8.
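A composition matrix of the kind shown in Fig. 7 can be obtained by summing signal event weights per pair of reconstructed subcategory and generator-level STXS bin and normalizing each row to 100%. The following sketch assumes exactly that row normalization; the subcategory and bin labels in the usage example are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def signal_composition(events: Iterable[Tuple[str, str, float]]) -> Dict[str, Dict[str, float]]:
    """Per reconstructed subcategory, the percentage of signal from each STXS bin.

    events: (subcategory, stxs_bin, weight) for selected signal events.
    """
    sums: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for subcat, stxs_bin, weight in events:
        sums[subcat][stxs_bin] += weight
    composition: Dict[str, Dict[str, float]] = {}
    for subcat, row in sums.items():
        total = sum(row.values())
        composition[subcat] = {b: 100.0 * w / total for b, w in row.items()}
    return composition


def dominant_fraction(row: Dict[str, float]) -> Tuple[str, float]:
    """STXS bin contributing most to a subcategory, with its share in %."""
    b = max(row, key=row.get)
    return b, row[b]
```

A boosted subcategory receiving weights 3 from its target bin and 1 from the 0-jet bin has a 75% share from the target bin, above the 50% level quoted in the text; the remaining 25% represents the migrations discussed above.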

Observed and predicted 2D distributions in the \({\ge }2\)-jet category of the (upper) \({\textrm{e}} \upmu \), (middle) \(\ell \uptau _\textrm{h} \), and (lower) \(\uptau _\textrm{h} \uptau _\textrm{h} \) final states of the CB-analysis. The predicted signal and background distributions are shown after the fit used for the extraction of the inclusive signal. The “Others” background contribution includes events from diboson and single t quark production, as well as \({\textrm{H}} \rightarrow {\textrm{WW}}\) decays. The uncertainty bands account for all systematic and statistical sources of uncertainty, after the fit to the data.

9 VH production modes

Higgs boson production in association with a W or Z boson has a cross section that is much lower than the cross section for \({\textrm{ggH}}\) or \({\textrm{qqH}}\) production, but it provides an additional check of the predictions of the SM. The best sensitivity for \({\textrm{VH}}\) production is obtained using H decay modes with large branching fractions, such as \({\textrm{H}} \rightarrow \uptau \uptau \) and \({\textrm{H}} \rightarrow {\textrm{bb}}\).

Four final states are considered for \({\textrm{WH}}\) production, corresponding to the W boson decays into \({\textrm{e}} \upnu \) and \(\upmu \upnu \) combined with the \({\textrm{e}} \uptau _\textrm{h} \), \(\upmu \uptau _\textrm{h} \), and \(\uptau _\textrm{h} \uptau _\textrm{h} \) final states of the \({\textrm{H}} \rightarrow \uptau \uptau \) decay, excluding the final state with two electrons and one \(\uptau _\textrm{h}\) because of the large background from electron charge misidentification. The \({\textrm{e}} \upmu \) final state of the \({\textrm{H}} \rightarrow \uptau \uptau \) decay is not studied because of its small branching fraction and its overlap with the \({\textrm{WH}}\) analysis targeting \({\textrm{H}} \rightarrow {\textrm{WW}}\) decays. For \({\textrm{ZH}}\) production, six final states are studied, corresponding to the Z boson decays into \({\textrm{ee}}\) and \(\upmu \upmu \) combined with the \({\textrm{e}} \uptau _\textrm{h} \), \(\upmu \uptau _\textrm{h} \), and \(\uptau _\textrm{h} \uptau _\textrm{h} \) final states of the \({\textrm{H}} \rightarrow \uptau \uptau \) decay. The \({\textrm{e}} \upmu \) final state of the \({\textrm{H}} \rightarrow \uptau \uptau \) decay is not studied here, as it is already included in the \({\textrm{ZH}}\) analysis targeting \({\textrm{H}} \rightarrow {\textrm{WW}}\) decays. This analysis supersedes a previous analysis performed using 2016 data only, which found signal strengths relative to the SM prediction of \(3.39^{+1.68}_{-1.54}\) and \(1.23^{+1.62}_{-1.35}\) for \({\textrm{WH}}\) and \({\textrm{ZH}}\) production, respectively [101].
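The counting of final states can be checked by enumeration. In the sketch below (object labels hypothetical, neutrinos not counted), each V boson decay is combined with each considered \({\textrm{H}} \rightarrow \uptau \uptau \) final state and the resulting sets of visible objects are deduplicated. For \({\textrm{WH}}\), the \({\textrm{e}} \upmu \uptau _\textrm{h} \) state is reached both from \({\textrm{W}} \rightarrow {\textrm{e}} \upnu \) with \(\upmu \uptau _\textrm{h} \) and from \({\textrm{W}} \rightarrow \upmu \upnu \) with \({\textrm{e}} \uptau _\textrm{h} \), so the six combinations collapse to five distinct states, and four remain after removing \({\textrm{e}} {\textrm{e}} \uptau _\textrm{h} \).

```python
from itertools import product
from typing import Iterable, Sequence, Set, Tuple

ORDER = ("e", "mu", "tauh")   # canonical ordering of visible objects
State = Tuple[str, ...]


def final_states(v_decays: Sequence[State], h_decays: Sequence[State],
                 excluded: Iterable[State] = ()) -> Set[State]:
    """Distinct visible final states from all V-decay x H-decay combinations."""
    states = {tuple(sorted(v + h, key=ORDER.index)) for v, h in product(v_decays, h_decays)}
    return states - set(excluded)


H_TAUTAU = [("e", "tauh"), ("mu", "tauh"), ("tauh", "tauh")]  # e-mu not considered here
W_LNU = [("e",), ("mu",)]                                       # neutrino not counted
Z_LL = [("e", "e"), ("mu", "mu")]

wh = final_states(W_LNU, H_TAUTAU, excluded=[("e", "e", "tauh")])
zh = final_states(Z_LL, H_TAUTAU)
```

The resulting \({\textrm{WH}}\) set contains the \({\textrm{e}} \upmu \uptau _\textrm{h} \), \({\textrm{e}} \uptau _\textrm{h} \uptau _\textrm{h} \), \(\upmu \upmu \uptau _\textrm{h} \), and \(\upmu \uptau _\textrm{h} \uptau _\textrm{h} \) states, and the \({\textrm{ZH}}\) set has six entries, since the two Z boson decays never produce overlapping combinations.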

9.1 WH final states

After the trigger selection detailed in Sect. 4, the e, \(\upmu \), and \(\uptau _\textrm{h}\) candidates forming the \({\textrm{e}} \upmu \uptau _\textrm{h} \), \({\textrm{e}} \uptau _\textrm{h} \uptau _\textrm{h} \), \(\upmu \upmu \uptau _\textrm{h} \), and \(\upmu \uptau _\textrm{h} \uptau _\textrm{h} \) final states are required to satisfy the additional selection criteria listed in Table 5. Final states with a \(\upmu \) benefit from a lower \(p_{\textrm{T}}\) threshold at the trigger level. In addition, events with additional electrons, muons, or b jets are rejected in all \({\textrm{WH}}\) final states.