Abstract

We assess the status of a wide class of WIMP dark matter (DM) models in light of the latest experimental results using the global fitting framework GAMBIT. We perform a global analysis of effective field theory (EFT) operators describing the interactions between a gauge-singlet Dirac fermion and the Standard Model quarks, the gluons and the photon. In this bottom-up approach, we simultaneously vary the coefficients of 14 such operators up to dimension 7, along with the DM mass, the scale of new physics and several nuisance parameters. Our likelihood functions include the latest data from Planck, direct and indirect detection experiments, and the LHC. For DM masses below 100 GeV, we find that it is impossible to satisfy all constraints simultaneously while maintaining EFT validity at LHC energies. For new physics scales around 1 TeV, our results are influenced by several small excesses in the LHC data and depend on the prescription that we adopt to ensure EFT validity. Furthermore, we find large regions of viable parameter space where the EFT is valid and the relic density can be reproduced, implying that WIMPs can still account for the DM of the universe while being consistent with the latest data.


1 Introduction

Despite years of searching, the identity of dark matter (DM) remains a mystery. Nevertheless, the large number of past, present and future probes of its particle interactions makes it essential to regularly revisit the constraints on the most popular theoretical candidates, in order to guide future searches.

A favoured paradigm for the particle nature of dark matter is that of Weakly Interacting Massive Particles (WIMPs), due to the fact that it allows for a simple thermal mechanism to produce DM with the cosmologically-observed abundance [1]. Such models have also attracted attention due to the large number of possible signals they predict, none of which have been definitively observed so far. Although this has led some to make claims of the demise of WIMPs [2], others have argued that such predictions are premature [3].

A relatively agnostic approach to WIMP model building is to pursue a bottom-up, Effective Field Theory (EFT) approach, in which one enumerates all of the allowed higher dimensional operators which lead to interactions between DM and Standard Model (SM) particles. Any result described by an EFT can in general be explained by many high-energy theories. In this way, the EFT description is a model-independent one, as it does not depend on the Ultraviolet (UV) completion that describes an effective operator. This is, however, a double-edged sword: because an effective operator does not encode any information about the UV completion, it has no constraining power in distinguishing between the range of UV theories that can map to it – nor can all UV-complete theories be mapped to an EFT description for the energies we are interested in here.

In spite of these limitations, the bottom-up approach is well-advised given the lack of direct evidence pointing to the properties of DM. The EFT approach in particular is highly suitable for low-velocity environments such as direct detection [4,5,6,7,8,9,10] and indirect detection [11,12,13,14,15,16,17]. At higher energy scales, the EFT approach starts breaking down, such that simplified models have become the theories of choice for the interpretation of LHC searches [18, 19] (see also Refs. [20, 21] for a hybrid approach called “Extended Dark Matter EFT”). Nevertheless, there is an extensive literature on EFTs at colliders [22,23,24,25,26,27,28,29,30,31,32] including studies by ATLAS [33] and CMS [34], which may help to shed light on the nature of DM when interpreted with care.

A common approach to the analysis of EFTs for DM in the literature has been to consider a single operator at a time [35,36,37,38,39,40,41] and compare experimental bounds on the new physics scale \({\Lambda }\) with the values implied by the observed DM relic density. This method, however, severely limits the scope of the analysis and potentially leads to overly-aggressive exclusions, not only because it neglects (potentially destructive) interferences between different operators [42], but also because the relic density constraint can be considerably relaxed when several operators contribute to the DM annihilation cross-section. The first global study of EFTs for scalar, fermionic and vector DM taking interference effects into account was performed in Ref. [43], but no collider constraints were included in the analysis and no couplings to gluons were considered. More recently, Ref. [44] applied Bayesian methods to perform a global analysis of scalar DM, for which only a small number of effective operators need to be considered and collider constraints can be neglected. Examples of global studies considering subspaces of a general DM EFT include Refs. [45,46,47].

In the present work, we exploit the computational power of the GAMBIT framework [48] to perform the first global analysis of a very general set of effective operators up to dimension 7 that describe the interactions between a Dirac fermion DM particle (or a DM sub-component) and quarks or gluons. Such a set-up arises for example in many extensions of the SM gauge group, such as gauged baryon number [49] or other anomaly-free gauge extensions that require additional stable fermions [50, 51]. Our novel approach of considering many operators simultaneously enables us to study parameter regions where several types of DM interactions need to be combined in order to satisfy all constraints. Our analysis substantially improves upon the previous state-of-the-art in both the statistical rigour with which the DM EFT parameter space is interrogated, and in the new combinations of constraints that are simultaneously applied. We also increase the level of detail with which individual constraints are modelled, summarised as follows.

First, we include a much improved calculation of direct detection constraints using the GAMBIT module DarkBit [52]. We consider the renormalization group (RG) evolution of all effective operators from the electroweak to the hadronic scale and then match the relativistic operators onto the non-relativistic effective theory [53] relevant for DM-nucleon scattering. We then calculate event rates in direct detection experiments to leading order in the chiral expansion, including the contributions from operators that are naively suppressed in the non-relativistic limit, and determine the resulting constraints using detailed likelihood functions for a large number of recent experiments. In the process, we include a number of nuisance parameters to account for uncertainties in nuclear form factors and the astrophysical distribution of DM.

Second, we consider the most recent constraints on DM annihilations using gamma rays and the Cosmic Microwave Background (CMB). To include the latter, we employ the recently released GAMBIT module CosmoBit [54], which uses detailed spectra to calculate effective functions for the efficiency of the injected energy deposition and obtain constraints on the DM annihilation cross-section while varying cosmological parameters. For the calculation of annihilation cross-sections we make use of the new GAMBIT Universal Model Machine (GUM) [55, 56] to automatically generate the relevant code based on the EFT Lagrangian.

Third, we combine the above detailed astrophysical and cosmological constraints with a state-of-the-art implementation of LHC constraints on WIMP dark matter. A central concern for any study of EFTs is the range of validity of the EFT approach [57,58,59,60,61,62,63,64]. This is particularly true when considering constraints from the LHC, which may probe energies above the assumed scale of new physics. A naive application of the EFT in such a case may lead to unphysical predictions, such as unitarity violation. Whenever this is the case it becomes essential to adopt some form of truncation to ensure that only reliable predictions are used to calculate experimental constraints.

In the present work we address these challenges in two key ways. First, we separate the scale of new physics \({\Lambda }\) from the individual Wilson coefficients \(\mathcal {C}_a^{(d)}\) (rather than scanning over a combination such as \(\mathcal {C}_a^{(d)} / {\Lambda }^{d-4}\)), such that the former can be directly interpreted as the scale where the EFT breaks down and the latter can be constrained by perturbativity. Second, we check the impact of a phenomenological nuisance parameter that describes the possible modification of LHC spectra at energies beyond the range of EFT validity. The nuisance parameter smoothly interpolates between an abrupt truncation and no truncation at all.

Our analysis reveals viable parameter regions for general WIMP models across a wide range of new physics scales, including very small values of \({\Lambda }\) (\({\Lambda }< 200 \, \text {GeV}\)), where there are no relevant LHC constraints and very large values of \({\Lambda }\) (\({\Lambda }> 1.5 \,\text {TeV}\)), where LHC constraints are largely robust. Of particular interest are the intermediate values of \({\Lambda }\) (\({\Lambda }\sim 700{\text {--}} 900 \, \text {GeV}\)), for which our DM EFT partly accommodates several small LHC data excesses that could be interesting to analyse in more detail in the context of specific UV completions or simplified models. However, our analysis also reveals that there cannot be a large hierarchy between \({\Lambda }\) and the DM mass \(m_\chi \). In particular, even with the most general set of operators we consider, it is impossible to simultaneously have a small DM mass (\(m_\chi \lesssim 100 \, \text {GeV}\)) and a large new physics scale (\({\Lambda }> 200 \, \text {GeV}\)). In other words, for light DM to be consistent with all constraints, it is necessary for the new physics scale to be so low that the EFT approach breaks down for the calculation of LHC constraints. For heavier DM, on the other hand, thermal production of DM in the early universe would exceed the observed abundance whenever \({\Lambda }\) is more than one order of magnitude larger than \(m_\chi \) (up to the unitarity bound at a few hundred TeV [65], where the maximum possible value of \({\Lambda }\) approaches \(m_\chi \)).

This work is organised as follows. We introduce the DM EFT description in Sect. 2. In Sect. 3, we discuss the constraints used in this study, and our methods for computing likelihoods and observables. We present our results in Sect. 4. Finally, we present our conclusions in Sect. 5. The samples from our scans, the corresponding GAMBIT input files and the plotting scripts can be downloaded from Zenodo [66].

2 Dark matter effective field theory

In this study, we consider possible interactions of SM fields with a Dirac fermion DM field, \(\chi \), that is a singlet under the SM gauge group. For phenomenological reasons discussed in detail in Sect. 3, we focus on interactions between \(\chi \) and the quarks or gluons of the SM. We assume that the mediators that generate these interactions are heavier than the scales probed by the experiments under consideration. Following the notation of Refs. [67, 68], the interaction Lagrangian for the theory can be written as

$$\begin{aligned} \mathcal {L}_{\text {int}} = \sum _{a,d} \frac{\mathcal {C}_a^{(d)}}{{\Lambda }^{d-4}} \, \mathcal {Q}_a^{(d)}, \end{aligned}$$ (1)

where \(\mathcal {Q}_a^{(d)}\) is the DM-SM operator, \(d\ge 5\) is the mass dimension of the operator, \(\mathcal {C}_a^{(d)}\) is the dimensionless Wilson coefficient associated to \(\mathcal {Q}_a^{(d)}\), and \({\Lambda }\) is the scale of new physics (which can be identified with the mediator mass). The full Lagrangian for the theory is then

$$\begin{aligned} \mathcal {L} = \mathcal {L}_{\text {SM}} + \mathcal {L}_{\text {int}} + {\bar{\chi }} \left( i \gamma ^\mu \partial _\mu - m_\chi \right) \chi , \end{aligned}$$ (2)

such that the free parameters of the theory are the DM mass \(m_\chi \), the scale of new physics \({\Lambda }\), and the set of dimensionless Wilson coefficients \(\{\mathcal {C}_a^{(d)}\}\).

For sufficiently large \({\Lambda }\), the phenomenology at small energies is dominated by the operators of lowest dimension, and we therefore limit ourselves to \(d \le 7\). However, even this leaves a relatively large set of operators. The DM EFT that is valid below the electroweak (EW) scale (with the Higgs, W, Z and the top quark integrated out) contains 2 dimension five, 4 dimension six, and 22 dimension seven operators (not counting flavour multiplicities), while the DM EFT above the EW scale for a singlet Dirac fermion DM has 4 dimension five, 12 dimension six, and 41 dimension seven operators (again, not counting flavour multiplicities) [68]. The large set of possible operators poses a challenge for a global statistical analysis where bounds on \({\Lambda }\) and \(\mathcal {C}_a^{(d)}\) are derived from experimental observations (see Sect. 3 for details). An added complexity is that we consider both processes where the typical energy transfer is above the EW scale (such as collider searches and indirect detection) as well as processes in which the energy release is small (direct detection). The consistent implementation of these bounds requires the combination of both DM EFTs, together with the appropriate matching conditions between the two.

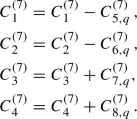

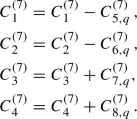

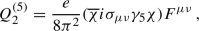

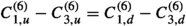

To make the problem tractable we focus in our numerical analysis on a subset of DM EFT operators - the dimension six operators involving DM, \(\chi \), and SM quark fields, q,

The difference between the DM EFT below the EW scale and the DM EFT above the EW scale is in this case very simple: above the EW scale the quark flavours run over all SM quarks, including the top quark, while below the EW scale the top quark is absent.

While the above set of operators does not span the full dimension six bases of the two DM EFTs, it does collect the most relevant operators. The full dimension six operator basis contains operators where quarks are replaced by the SM leptons. These are irrelevant for the collider and direct detection constraints we consider, and are thus omitted for simplicity. The basis of dimension six operators for the DM EFT above the EW scale contains, in addition, operators that are products of DM and Higgs currents. These are expected to be tightly constrained by direct detection to have very small coefficients such that they are irrelevant in other observables, and are thus also dropped for simplicity.

To explore to what extent the numerical analyses would change if the set of considered DM EFT operators were enlarged, we also perform global fits including, in addition to the dimension six operators (3)–(6), a set of dimension seven operators that comprise interactions with the gluon field either through the QCD field strength tensor \(G^a_{\mu \nu }\) or its dual \({\widetilde{G}}_{\mu \nu }=\frac{1}{2}\epsilon _{\mu \nu \rho \sigma }G^{\rho \sigma }\), as well as operators constructed from scalar, pseudoscalar and tensor bilinears:

The definition of the operators describing interactions with the gluons, […], includes a loop factor since in most new physics models these operators are generated at one loop. Similarly, the couplings to scalar and tensor quark bilinears, […], include a conventional factor of the quark mass \(m_q\), since they have the same flavour structure as the quark mass terms (coupling left-handed and right-handed quark fields). The \(m_q\) suppression of these operators is thus naturally encountered in new physics models that satisfy low energy flavour constraints, such as minimal flavour violation and its extensions. Note that, unless explicitly stated otherwise, \(m_q\) always refers to the running mass in the modified minimal subtraction (\(\overline{\mathrm{MS}}\)) scheme.

The complete dimension-seven basis below the EW scale contains eight additional operators with derivatives acting on the DM fields [68]. To simplify the discussion we do not include these operators in our analysis, partially because they do not lead to new chiral structures in the SM currents. Moreover, the direct detection constraints on these additional operators are expressible in terms of the operators that we do include in the global fits due to the non-relativistic nature of the scattering process.

Note that the operators […] are not invariant under EW gauge transformations, and are thus replaced in the DM EFT above the EW scale by operators of the form \(({\bar{\chi }}\chi )({\bar{q}}_Lq_R)H\), where H is the Higgs doublet. In all the processes we consider, H can be replaced by its vacuum expectation value – either because the emission of the Higgs boson is phase-space suppressed or suppressed by small Yukawa couplings, or both. This means that, up to renormalization group effects (to be discussed in Sect. 2.1), the operators […] can also be used in our fitting procedure above the EW scale.

In principle, analogous operators to […] exist for leptons instead of quarks [69, 70] and weak gauge bosons instead of gluons [71,72,73].Footnote 1 In general, these play a much smaller role in the phenomenology and will not be considered here. Similarly, throughout this work the Wilson coefficients of any dimension five operators are set to zero at the UV scale.

The Wilson coefficients of the operators defined above depend implicitly on the energy scale of the process under consideration. In our fits, all Wilson coefficients are specified at the new physics scale \({\Lambda }\). If this scale is larger than the top mass, \({\Lambda }> m_t\), all six quarks are active degrees of freedom and the Wilson coefficients need to be specified for \(q = u, d, s, c, b, t\). For \({\Lambda }< m_t\), the top quarks are integrated out, and only the Wilson coefficients for \(q = u, d, s, c, b\) need to be specified. This is done automatically in our fitting procedures, such that effectively both EFTs are used in the fit, according to the numerical value of the scale \({\Lambda }\).
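The flavour selection described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `active_flavours` and the numerical top-quark mass are hypothetical choices made for the example and are not taken from the GAMBIT implementation.

```python
# Illustrative sketch only: the names and the top-mass value are assumptions.
M_TOP_GEV = 173.0  # assumed approximate top-quark mass in GeV


def active_flavours(lambda_gev, m_top_gev=M_TOP_GEV):
    """Return the quark flavours whose Wilson coefficients are specified at Lambda."""
    light = ["u", "d", "s", "c", "b"]
    if lambda_gev > m_top_gev:
        # Six-flavour EFT: the top quark is an active degree of freedom.
        return light + ["t"]
    # Five-flavour EFT: the top quark is integrated out.
    # (The boundary case Lambda == m_t is assigned here by assumption.)
    return light


print(active_flavours(1000.0))
print(active_flavours(100.0))
```

The same scan can therefore cover both EFTs, with the value of \({\Lambda }\) at each parameter point deciding which set of coefficients is used.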

Although, a priori, the Wilson coefficients for each quark flavour are independent, we will restrict ourselves to the assumption of minimal flavour violation (which implies […] and […]), and the assumption of isospin invariance (which implies […]).Footnote 2 Hence, each operator comes with only one free parameter in addition to the global parameters \({\Lambda }\) and \(m_\chi \). Under these assumptions, the two EFTs above and below the EW scale have the same number of free parameters.

2.1 Running and mixing

For many applications, the RG running of the Wilson coefficients (i.e. their dependence on the energy scale \(\mu \)) can be neglected. In fact, the operators […], […], […] and […] have vanishing anomalous dimension, while […], […], […], […] as well as […] exhibit no running at one-loop order in QCD [77]. Nevertheless, there are two cases when the effects of running can be important:

-

1.

Mixing: Different operators can mix with each other under RG evolution, such that operators assumed negligible at one scale may give a relevant contribution at a different scale. This is particularly important in the context of direct detection, because for certain operators the DM-nucleon scattering cross-section is strongly suppressed in the non-relativistic limit. In such a case, the dominant contribution to direct detection may arise from operators induced only at the loop level [78, 79]. In our case, the dominant effects arise from the top quark Yukawa and are discussed below.

-

2.

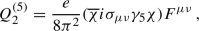

Threshold corrections: Whenever the scale \(\mu \) drops below the mass of one of the quarks, the number of active degrees of freedom is reduced and a finite correction to various operators arises. In our context, the only effect is the matching of the operators

onto the operators

onto the operators  at the heavy quark thresholds, which is given by

at the heavy quark thresholds, which is given by  (17)

(17)Mixing of the tensor operators

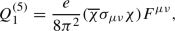

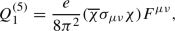

above the EW scale and subsequent matching gives rise to the dimension-five dipole operators

above the EW scale and subsequent matching gives rise to the dimension-five dipole operators  (18)

(18) (19)

(19)where \(F_{\mu \nu }\) is the electromagnetic field strength tensor and e is the electromagnetic charge. These operators give an important contribution to direct detection experiments and are thus kept.Footnote 3

In the present work we include these effects as follows. To calculate the Wilson coefficients at the hadronic scale \(\mu = 2\,\text {GeV}\) (relevant for direct detection) we make use of the public code DirectDM v2.2.0 [67, 68], which calculates the RG evolution of the operators defined above, including threshold corrections and mixing effects. The code furthermore performs a matching of the resulting operators at \(\mu = 2\,\text {GeV}\) onto the basis of non-relativistic effective operators relevant for DM direct detection (see Sect. 3.1).

DirectDM currently requires as input the Wilson coefficients in the five-flavour scheme given at the scale \(m_Z = 91.1876\,\text {GeV}\). For \({\Lambda }< m_t\) (five-flavour EFT), we can therefore directly pass the Wilson coefficients defined above to DirectDM. For \({\Lambda }> m_t\) (six-flavour EFT), there are three additional effects that are considered. First, as pointed out in Ref. [80], the operators […] give a contribution to the dipole operators […] at the one-loop level, which is given byFootnote 4

Second, as pointed out first in Ref. [81], the operator with an axial-vector top-quark current […] mixes into the operators […] with light quark vector currents. The relevant effects are given by [78]

after integrating out the Z boson at the weak scale. Here, \(s_w\equiv \sin \theta _w\) with \(\theta _w\) the weak mixing angle, and \(v=246\,\)GeV is the Higgs field vacuum expectation value. The flavour universal UV contributions […] largely compensate the mixing effect in the fit; the remnant effect, due to the isospin-breaking Z couplings, is small.

Third, in order to match the EFT with six active quark flavours onto the five-flavour scheme, we need to integrate out the top quark and apply the top quark threshold corrections given in Eq. (17). We neglect any other effects of RG evolution between the scales \({\Lambda }\) and \(m_Z\), i.e. all Wilson coefficients other than […] and […] are directly passed to DirectDM.Footnote 5

For the purpose of calculating the LHC constraints, we neglect the effects of running and do not consider loop-induced mixing between different operators, which is a good approximation for the operators […] and […]. For the operators […] mixing effects are known to be important in principle [82], but these operators are currently unconstrained by the LHC in the parameter region where the EFT is valid (see Sect. 2.2). Likewise we also calculate DM annihilation cross-sections at tree level. In particular, in these calculations we neglect the running of the strong coupling \((\alpha _s)\) and use the pole quark masses \((m_q^{\text {pole}})\) instead of the running quark masses. Moreover, we neglect a small loop-level contribution from the operators […] to the operators […].

2.2 EFT validity

A central concern when employing an EFT to capture the effects of new physics is that the scale of new physics must be sufficiently large compared to the energy scales of interest for the EFT description to be valid. Unfortunately, the point at which the EFT breaks down is difficult to determine from the low-energy theory alone. Considerations of unitarity violation make it possible to determine the scale where the EFT becomes unphysical, but in many cases the EFT description already fails at lower energies, in particular if the UV completion is weakly coupled.

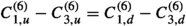

Fig. 1 Illustration of our approach for studying DM EFTs compared to a more naive approach, in which one only uses the experiment that yields the strongest bound on \(C / {\Lambda }^2\). The resulting exclusion is indicated by the red shaded region. By independently varying \({\Lambda }\), we can include additional information from experiments that give weaker bounds on \(C / {\Lambda }^2\) but for which the EFT has a larger range of validity. The additional exclusion obtained in this way is indicated by the blue shaded region. The region of parameter space that corresponds to non-perturbative values of the Wilson coefficient C is excluded in either approach (shaded brown).

To address this issue in the present study, we simultaneously vary the overall scale \({\Lambda }\), which corresponds to the energy where new degrees of freedom become relevant and the EFT description breaks down, and the Wilson coefficients \(\mathcal {C}_a^{(d)}\) for each operator. Doing so introduces a degeneracy, because cross sections are invariant under the rescaling \({\Lambda }\rightarrow \alpha {\Lambda }\) and \(\mathcal {C}_a^{(d)} \rightarrow \alpha ^{d-4} \mathcal {C}_a^{(d)}\). However, the advantage of this approach is that the parameter \({\Lambda }\) can be used to determine which constraints can be trusted in the EFT limit. This is illustrated in Fig. 1, which compares our approach of varying \({\Lambda }\) and \(\mathcal {C}_a^{(d)}\) separately to the naive approach where only \(\mathcal {C}_a^{(d)} / {\Lambda }^{d-4}\) is constrained.
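The degeneracy can be checked numerically with a short sketch. It assumes that an operator of mass dimension \(d\) enters observables only through its dimensionless coefficient divided by \({\Lambda }^{d-4}\); the function names `effective_coupling` and `rescale` and the numerical values are hypothetical and chosen purely for illustration.

```python
import math


def effective_coupling(c, lambda_gev, d):
    """Combination C / Lambda^(d-4) multiplying an operator of mass dimension d."""
    return c / lambda_gev ** (d - 4)


def rescale(c, lambda_gev, d, alpha):
    """Apply Lambda -> alpha * Lambda together with C -> alpha^(d-4) * C."""
    return c * alpha ** (d - 4), alpha * lambda_gev


# Illustrative values only (assumed, not taken from the scans).
c, lam, alpha = 0.5, 1000.0, 3.0
for d in (6, 7):
    c_new, lam_new = rescale(c, lam, d, alpha)
    # The effective coupling is unchanged, so cross sections cannot tell the points apart.
    assert math.isclose(effective_coupling(c, lam, d),
                        effective_coupling(c_new, lam_new, d))
```

The two points differ only in the value of \({\Lambda }\) itself, which is the information used to decide where the EFT can be trusted.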

We emphasize that this approach assumes the same new-physics scale for all effective operators, even though they may be generated through different mechanisms, and hence at different scales, in the UV. In practice, one should think of \({\Lambda }\) as the minimum of all of these scales, i.e. the energy at which new degrees of freedom first become relevant. These new degrees of freedom may not contribute to all processes, such that some effective operators may provide an accurate description even at energies above \({\Lambda }\). Whether or not this is the case cannot be determined from the low-energy viewpoint, such that we conservatively limit the EFT validity to energies below \({\Lambda }\).

For the purpose of direct detection constraints, the only requirement on \({\Lambda }\) is that it is larger than the hadronic scale, so that the effective operators can be written in terms of free quarks and gluons. This is the case for \({\Lambda }\gtrsim 2\,\text {GeV}\), which will always be satisfied in the present study. However, in order to evaluate direct detection constraints, it is necessary to determine the relic abundance of DM particles, which depends on the cross-sections for the processes \(\chi \chi \rightarrow q q\) or \(\chi \chi \rightarrow g g\), just as in the case of indirect detection constraints (see Sect. 3.3). For this calculation to be meaningful in the EFT framework, we require \({\Lambda }> 2 m_\chi \). Parameter points with smaller values of \({\Lambda }\) will thus be invalidated. A dedicated study of direct detection constraints for \({\Lambda }< 2 m_\chi \) will be left for future work.
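The two requirements on \({\Lambda }\) in this paragraph amount to a simple per-point check, sketched below. The function name `relic_calculation_is_valid` is hypothetical, and treating the approximate hadronic bound as a sharp threshold at 2 GeV is an assumption made for the example.

```python
HADRONIC_SCALE_GEV = 2.0  # lower limit on Lambda quoted in the text, treated as sharp here


def relic_calculation_is_valid(lambda_gev, m_chi_gev):
    """True if Lambda is above the hadronic scale and larger than 2 * m_chi."""
    above_hadronic_scale = lambda_gev >= HADRONIC_SCALE_GEV
    annihilation_in_eft = lambda_gev > 2.0 * m_chi_gev
    return above_hadronic_scale and annihilation_in_eft


# A point with Lambda = 1 TeV and m_chi = 100 GeV passes,
# while Lambda = 150 GeV with the same mass would be invalidated.
print(relic_calculation_is_valid(1000.0, 100.0))
print(relic_calculation_is_valid(150.0, 100.0))
```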

In the context of LHC searches for DM, EFT validity requires that the invariant mass of the DM pair produced in a collision satisfies \(m_{\chi \chi } < {\Lambda }\) [83]. To obtain robust constraints, only events with smaller energy transfer should be included in the calculation of likelihoods. The problem with this prescription is that \(m_{\chi \chi }\) does not directly correspond to any observable quantity (such as the missing energy \(E_T^{\text {miss}}\) of the event) and hence the impact of varying \({\Lambda }\) on predicted LHC spectra is difficult to assess. One possible way to address this issue would be to generate new LHC events for each parameter point and include only those events with small enough \(m_{\chi \chi }\) in the likelihood calculation, but this is not computationally feasible in the context of a global scan.

In the present work, we adopt the following simpler approach: Rather than comparing \({\Lambda }\) to the invariant mass of the DM pair, we compare it to the typical overall energy scale of the event, which can be estimated by the amount of missing energy produced. In other words, we do not modify the missing energy spectrum for \(E_T^{\text {miss}} < {\Lambda }\) and only apply the EFT validity requirement for larger values of \(E_T^{\text {miss}}\). This approach is less conservative than the one advocated, for instance, in Refs. [63, 64], where the energy scale of the event is taken to be the partonic centre-of-mass energy \(\sqrt{{\hat{s}}}\), but it has the crucial advantage that it can be applied after event generation, since the differential cross-section with respect to missing energy, \(\text {d}\sigma / \text {d}E_T^{\text {miss}}\), is exactly the quantity that is directly compared to data.Footnote 6

In the following, we will consider two different prescriptions for how to impose the EFT validity. The first one is to introduce a hard cut-off, i.e. to set \(\text {d}\sigma / \text {d}E_T^{\text {miss}} = 0\) for \(E_T^{\text {miss}} > {\Lambda }\). The second, more realistic, prescription is to introduce a smooth cut-off that leads to a non-zero but steeply falling missing energy spectrum above \({\Lambda }\). For this we make the replacement

\[ \frac{\text {d}\sigma }{\text {d}E_T^{\text {miss}}} \rightarrow \frac{\text {d}\sigma }{\text {d}E_T^{\text {miss}}} \left( \frac{E_T^{\text {miss}}}{{\Lambda }} \right) ^{-a} \]

for \(E_T^{\text {miss}} > {\Lambda }\). Here \(a\) is a free parameter that depends on the specific UV completion. The limits \( a \rightarrow 0 \) and \(a \rightarrow \infty \) correspond to no truncation and an abrupt truncation above the cut-off, respectively. For the case that the EFT results from the exchange of an s-channel mediator with mass close to \({\Lambda }\), one finds \(a \approx 2\) [30]. Rather than taking inspiration from a specific UV completion, we will instead keep \(a\) as a free parameter in the interval [0, 4] and find the value that gives the best fit to data at each parameter point. This approach typically leads to conservative LHC bounds in the sense that much stronger exclusions may be obtained in specific UV completions, if the heavy particles that generate the effective DM interactions can be directly produced at the LHC. However, this truncation procedure can lead to unrealistic spectral shapes with sharp features that may be tuned to fit fluctuations in the data. As will be discussed in more detail in Sect. 4, any explanation of data excesses through this approach must be interpreted with care.
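The two truncation prescriptions can be illustrated with a short Python sketch acting on a binned missing energy spectrum. It assumes that the smooth cut-off rescales each bin above \({\Lambda }\) by \((E_T^{\text {miss}}/{\Lambda })^{-a}\), evaluated at the bin centre, a form that reproduces the limits \(a \rightarrow 0\) and \(a \rightarrow \infty \) described above. The function name, the binning and the example numbers are hypothetical and are not taken from the GAMBIT code.

```python
import numpy as np

def truncate_spectrum(met_centres, dsigma_dmet, lam, a=None):
    """Apply an EFT-validity cut-off to a binned missing-energy spectrum.

    a=None : hard cut-off, spectrum set to zero above lam
    a>=0   : smooth cut-off, spectrum scaled by (MET/lam)**(-a) above lam
    """
    met = np.asarray(met_centres, dtype=float)
    spec = np.asarray(dsigma_dmet, dtype=float)
    above = met > lam
    if a is None:
        factor = np.where(above, 0.0, 1.0)
    else:
        factor = np.where(above, (met / lam) ** (-a), 1.0)
    return spec * factor

# Toy spectrum (arbitrary units) with a cut-off at 1 TeV.
met = np.array([300.0, 600.0, 1200.0, 2400.0])  # bin centres in GeV
spec = np.array([8.0, 4.0, 2.0, 1.0])
hard = truncate_spectrum(met, spec, lam=1000.0)
smooth = truncate_spectrum(met, spec, lam=1000.0, a=2.0)
```

In a scan along the lines described above, \(a\) would be varied in [0, 4] and the value giving the best fit retained at each parameter point.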

Without upper bounds on the Wilson coefficients, any requirement on EFT validity could be satisfied by making both \({\Lambda }\) and the Wilson coefficients arbitrarily large. We therefore impose an upper bound on the magnitude of all Wilson coefficients, which is necessary for a perturbative UV completion and ensures that there is no unitarity violation in the validity range of the EFT [62].

One drawback of this prescription is that the EFT validity requirement depends on the normalisation of the effective operators. For example, we have written some of the gluon operators with a prefactor \(\alpha _s / (12\pi )\) and others with a prefactor \(\alpha _s / (8\pi )\) to reflect the fact that in many UV completions, these operators would be generated at the one-loop level. If these operators are instead generated at tree level (e.g. from a strongly interacting theory), it would be more appropriate to write the prefactor as \(4\pi \alpha _s\). With the latter convention any constraint on the new physics scale \({\Lambda }\) becomes stronger by a factor \((48\pi ^2)^{1/3} \approx 7.8\) for the former and by a factor \((32\pi ^2)^{1/3} \approx 6.8\) for the latter, meaning that much larger values of \({\Lambda }\) are experimentally testable and the range of EFT validity is substantially increased. We have confirmed explicitly that the results presented in Sect. 4 do not depend on the specific definition of the Wilson coefficients for these operators.Footnote 7
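The size of this rescaling follows from simple arithmetic. Assuming, as the cube roots in the text suggest, that the operators in question are suppressed by \({\Lambda }^3\), replacing a prefactor \(\alpha _s/(12\pi )\) or \(\alpha _s/(8\pi )\) by \(4\pi \alpha _s\) at fixed Wilson coefficient rescales a bound on \({\Lambda }\) by the cube root of the ratio of prefactors. The helper name below is illustrative only.

```python
import math

def lambda_rescaling(old_prefactor, new_prefactor, power=3):
    """Factor by which a bound on Lambda changes when an operator normalised
    as prefactor * C / Lambda**power is rewritten with a new prefactor."""
    return (new_prefactor / old_prefactor) ** (1.0 / power)

alpha_s = 0.118  # illustrative value; it cancels in the ratio
f_12 = lambda_rescaling(alpha_s / (12 * math.pi), 4 * math.pi * alpha_s)
f_8 = lambda_rescaling(alpha_s / (8 * math.pi), 4 * math.pi * alpha_s)
print(f"{f_12:.1f}, {f_8:.1f}")  # prints "7.8, 6.8"
```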

2.3 Parameter ranges

In this study we focus on the following parameter regions. In order to be able to neglect QCD resonances in the process \(\chi {\bar{\chi }} \rightarrow q {\bar{q}}\), we restrict ourselves to \(m_\chi > 5\,\text {GeV}\). In order to have a sufficiently large separation of scales between the new physics scale \({\Lambda }\) and the hadronic scale, we also require \({\Lambda }> 20\,\text {GeV}\). As discussed in Sect. 2.2, we furthermore impose the upper bound on the magnitude of all Wilson coefficients and the bound \({\Lambda }> 2 m_\chi \). The upper bounds on \(m_\chi \) and \({\Lambda }\) depend on the details of the scans that we perform and will be discussed in Sect. 4.
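These cuts can be collected in a small validity check, sketched here in Python. The function name is hypothetical, and the bound on the Wilson coefficients is left as an argument `c_max` to be supplied by the caller; the value used in the example is a placeholder, not the one adopted in the scans.

```python
def in_scan_range(m_chi, lam, wilson_coeffs, c_max):
    """Return True if a parameter point passes the cuts listed above.

    m_chi, lam    : DM mass and new-physics scale in GeV
    wilson_coeffs : iterable of dimensionless Wilson coefficients
    c_max         : upper bound on |C| (caller-supplied)
    """
    return (
        m_chi > 5.0                 # avoid QCD resonances
        and lam > 20.0              # separation from the hadronic scale
        and lam > 2.0 * m_chi       # EFT validity for annihilation
        and all(abs(c) < c_max for c in wilson_coeffs)
    )

# Placeholder bound c_max = 1.0, for illustration only.
ok = in_scan_range(100.0, 1000.0, [0.5, -0.2], c_max=1.0)
too_light_scale = in_scan_range(100.0, 150.0, [0.5, -0.2], c_max=1.0)
```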

3 Constraints

In this section we describe the constraints relevant for our model. A summary of all likelihoods included in our scans is provided in Table 1. For each likelihood that directly constrains the interactions of the DM particle we also quote the background-only log-likelihood \(\ln {\mathcal {L}}^{\text {bg}}\) obtained when setting all Wilson coefficients to zero. For the remaining likelihoods we instead quote the maximum achievable value of the log-likelihood \(\ln {\mathcal {L}}^{\text {max}}\). The sum of all these contributions, \(\ln {\mathcal {L}}^{\text {ideal}} = -105.3\), will be used to calculate log-likelihood differences below.

3.1 Direct detection

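Direct detection experiments search for the scattering of DM particles from the Galactic halo off nuclei in an ultra-pure target by measuring the energy \(E_{\text {R}}\) of recoiling nuclei. The differential event rate with respect to recoil energy is given by

\[ \frac{\text {d}R}{\text {d}E_{\text {R}}} = \frac{\rho _0}{m_T \, m_\chi } \int _{v_{\text {min}}}^{\infty } v \, f(\mathbf {v}) \, \frac{\text {d}\sigma }{\text {d}E_{\text {R}}} \, \text {d}^3 v \, , \]

where \(\rho _0\) is the local DM density, \(m_T\) is the target nucleus mass, \(f(\mathbf {v})\) is the local DM velocity distribution and

\[ v_{\text {min}}(E_{\text {R}}) = \sqrt{\frac{m_T \, E_{\text {R}}}{2 \mu ^2}} \]

is the minimal DM velocity to cause a recoil carrying away a kinetic energy \(E_{\text {R}}\), where \(\mu = m_T \, m_\chi / (m_T + m_\chi )\) is the reduced mass of the DM-nucleus system.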
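To give a feeling for the kinematics, the following Python sketch evaluates the minimal velocity for elastic scattering, assuming the standard relation \(v_{\text {min}} = \sqrt{m_T E_{\text {R}} / (2\mu ^2)}\) in natural units. The function names and the example values (a xenon-like target of roughly 122 GeV, a 100 GeV DM particle and a 10 keV recoil) are illustrative choices, not inputs of the analysis.

```python
import math

C_KM_S = 299792.458  # speed of light in km/s

def reduced_mass(m_target_gev, m_chi_gev):
    """Reduced mass of the DM-nucleus system in GeV."""
    return m_target_gev * m_chi_gev / (m_target_gev + m_chi_gev)

def v_min(e_recoil_kev, m_target_gev, m_chi_gev):
    """Minimal DM speed in km/s needed to produce recoil energy E_R
    in elastic scattering off a nucleus of mass m_target."""
    mu = reduced_mass(m_target_gev, m_chi_gev)
    e_recoil_gev = e_recoil_kev * 1e-6  # keV -> GeV
    return C_KM_S * math.sqrt(m_target_gev * e_recoil_gev / (2.0 * mu ** 2))

v_example = v_min(10.0, 122.0, 100.0)  # of order 100 km/s
```

Since \(v_{\text {min}} \propto \sqrt{E_{\text {R}}}\), higher recoil thresholds probe only the high-velocity tail of the halo distribution, which is one reason why the velocity distribution enters as a nuisance.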

The local DM density and velocity distribution are not very well known and introduce sizeable uncertainties in the prediction of experimental signals (see the discussion of nuisance parameters in Sect. 3.6). Nevertheless, the greatest challenge in the present context is the calculation of the differential scattering cross-section \(\text {d}\sigma / \text {d}E_{\text {R}}\). For this purpose, one needs to map the effective interactions between relativistic DM particles and quarks or gluons defined above onto effective interactions between non-relativistic DM particles and nucleons \(N = p,n\). The EFT of non-relativistic interactions can be written as

\[ {\mathcal {L}}_{\text {NR}} = \sum _{i,N} c_i^N(q^2) \, {\mathcal {O}}^N_i \, , \]

where the operators \({\mathcal {O}}^N_i\) depend only on the DM spin \(\mathbf {S}_\chi \), the nucleon spin \(\mathbf {S}_N\), the momentum transfer \(\mathbf {q}\) and the DM-nucleon relative velocity \(\mathbf {v}\) [4, 53, 99].

The non-relativistic operators can be divided into four categories according to whether or not they depend on the nucleon spin \(\mathbf {S}_N\), such that scattering is suppressed for nuclei with vanishing spin, and whether or not they depend on \(\mathbf {q}\) and/or \(\mathbf {v}\), such that scattering is suppressed in the non-relativistic limit. Specifically, \({\mathcal {O}}^N_1\) leads to spin-independent (SI) unsuppressed scattering, \({\mathcal {O}}^N_4\) leads to spin-dependent (SD) unsuppressed scattering, \({\mathcal {O}}^N_5\), \({\mathcal {O}}^N_8\), \({\mathcal {O}}^N_{11}\) lead to SI momentum-suppressed scattering and \({\mathcal {O}}^N_6\), \({\mathcal {O}}^N_7\), \({\mathcal {O}}^N_9\), \({\mathcal {O}}^N_{10}\), \({\mathcal {O}}^N_{12}\) lead to SD momentum-suppressed scattering, which is typically unobservable. For the relativistic operators included in this study we give the dominant type of interaction they induce in the non-relativistic limit in Table 2.

The coefficients \(c_i^N(q^2)\) can be directly calculated from the Wilson coefficients of the relativistic operators at \(\mu = 2\,\text {GeV}\). The explicit dependence on the momentum transfer \(q = \sqrt{2 m_T E_{\text {R}}}\) is a result of two effects. First, under RG evolution some of the effective DM-quark operators mix into the DM dipole operators (see Eq. (20)). These operators then induce long-range interactions, i.e. contributions to the \(c_i^N(q^2)\) that scale as \(q^{-2}\). Since the momentum transfer can be very small in direct detection experiments, these contributions can be important in spite of their loop suppression. Second, the coefficients include nuclear form factors, obtained by evaluating expectation values of quark currents like \(\langle N' | {\overline{q}} \gamma ^\mu q | N \rangle \). These form factors can be calculated in chiral perturbation theory and exhibit a pion pole for axial and pseudoscalar currents, i.e. a divergence for \(q \rightarrow m_\pi \) [100, 101].

All of these effects are fully taken into account in \(\textsf {DirectDM} \), which calculates the coefficients \(c_i^N(q^2)\) for given Wilson coefficients at a higher scale (see App. A). These coefficients are then passed on to DDCalc v2.2.0 [52, 110], which calculates the differential cross-section for each operator \({\mathcal {O}}^N_i\) (including interference) and target element of interest. DDCalc also performs the velocity integrals needed for the calculation of the differential event rate, and the convolution with energy resolution and detector acceptance needed to predict signals in specific experiments:

\[ N_{\text {p}} = M \, T_{\text {exp}} \int \phi (E_{\text {R}}) \, \frac{\text {d}R}{\text {d}E_{\text {R}}} \, \text {d}E_{\text {R}} \, , \]

where M is the detector mass, \(T_{\text {exp}}\) is the exposure time and \(\phi (E_{\text {R}})\) is the acceptance function.
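As a rough illustration of this last step, the sketch below integrates an acceptance-weighted recoil spectrum over energy and multiplies by the exposure. It assumes that the predicted signal takes the usual form of detector mass times exposure time times the integral of \(\phi (E_{\text {R}})\) times the differential rate; the function name and all numbers are toy values, and the code does not reproduce the DDCalc interface.

```python
import numpy as np

def predicted_events(e_r_kev, dr_de, acceptance, mass_kg, t_days):
    """Predicted signal events: M * T * integral of phi(E_R) * dR/dE_R.

    e_r_kev    : recoil-energy grid in keV
    dr_de      : differential rate in events / (kg day keV) on that grid
    acceptance : acceptance function phi(E_R) on that grid
    """
    e_r = np.asarray(e_r_kev, dtype=float)
    integrand = np.asarray(acceptance, dtype=float) * np.asarray(dr_de, dtype=float)
    # Trapezoidal rule written out explicitly.
    integral = np.sum(0.5 * (integrand[1:] + integrand[:-1]) * np.diff(e_r))
    return mass_kg * t_days * integral

# Toy inputs: flat rate and flat 50% acceptance between 0 and 10 keV.
e_grid = np.linspace(0.0, 10.0, 101)
n_pred = predicted_events(e_grid, np.full(101, 1e-4), np.full(101, 0.5),
                          mass_kg=1000.0, t_days=100.0)
```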

By combining DirectDM and DDCalc, we can obtain likelihoods for a wide range of direct detection experiments. In the present analysis, we include constraints from the most recent XENON1T analysis [93], LUX 2016 [88], PandaX 2016 [91] and 2017 [92] analyses, CDMSlite [84], CRESST-II [85] and CRESST-III [86], PICO-60 2017 [89] and 2019 [90], and DarkSide-50 [87].

The hadronic inputs to DirectDM v2.2.0 [67] were updated with the most recent \(N_f=2+1\) lattice QCD results, following the FLAG quality requirements [107], see Table 3. All the inputs are evaluated at \(\mu =2\) GeV. The hadronic matrix elements for protons and neutrons are related using isospin conservation.

For operators with vector quark currents, the least well known are the hadronic matrix elements involving the strange quark, while the matrix elements for operators with u, d quark vector currents have negligible errors to the precision we are working with. Since the strange quark vector current vanishes at \(q^2=0\), the first non-vanishing contribution is obtained only at next-to-leading order in the chiral expansion, and depends on the strange quark charge radius, \(r_s^2 = -0.0045(14)\,\)fm\(^2\) [104, 105]. For the nuclear magnetic moment induced by the strange quark, \(\mu _s= -0.036(21)\) [104, 105], we inflate the errors according to the Particle Data Group prescription.

The scalar form factors at zero recoil are obtained from expressions in Ref. [103], namely

where the upper (lower) sign is for the proton (neutron). We use a rather conservative estimate \(\sigma _{\pi N}=(50\pm 15)\) MeV [101] that covers the spread between the lattice QCD [111,112,113,114,115,116,117,118] and pionic atom determinations [113, 114, 117, 119,120,121,122,123]. The other two parameters are \(\xi \equiv (m_d-m_u)/(m_d + m_u)= 0.36\, \pm 0.04\) and \(B c_5 \, (m_d-m_u)=(-0.51\pm 0.08)\) MeV [103].

The matrix elements of tensor currents are described by three sets of form factors, but only two, \(g_{T}^{q}\) and \(B_{T,10}^{q/N} (0)\), enter the chirally leading expressions. For \(g_{T}^{q}\), the only \(N_f=2+1\) result from Ref. [117] does not satisfy the FLAG quality requirements, so we use the \(N_f=2+1+1\) results from Ref. [106] instead; the difference between the \(N_f=2+1\) and \(N_f=2+1+1\) results is expected to be small. For \(B_{T,10}^{q/N}(0)\), we use the results from the constituent quark model in Ref. [109].

3.2 Relic abundance of DM

The Early Universe time evolution of the number density of the \(\chi \) particles, \(n_\chi \), is governed by the Boltzmann equation [124]

where \(n_{\chi ,\text {eq}}\) is the number density in equilibrium, H(t) is the Hubble rate and \(\langle \sigma v_{\text {{rel}}}\rangle \) is the thermally averaged cross-section times the relative (Møller) velocity, given by

where \(K_{1,2}\) are the modified Bessel functions and \(v_{\mathrm{lab}}\) is the velocity of one of the annihilating (anti-)DM particles in the rest frame of the other (for a discussion, see also Ref. [125]). We stress that there is no additional factor of 1/2 in the above equations. However, the fact that DM consists of Dirac particles implies that the total contribution to the observed DM density is given by \(n_\chi +n_{{{\bar{\chi }}}}=2n_\chi \) (disregarding the possibility of an initial asymmetry [126]).

We compute tree-level annihilation cross-sections using CalcHEP v3.6.27 [127, 128], where the implementation of the four-fermion interactions is generated by GUM [55, 56] from UFO files via the tool ufo_to_mdl (described in Appendix B). To ensure the EFT picture is valid, we invalidate points where \({\Lambda }\le 2 m_\chi \). We obtain the relic density of \(\chi \) by numerically solving Eq. (28) at each parameter point, assuming the standard cosmological historyFootnote 8 and using the routines implemented in DarkSUSY v6.2.2 [130, 131] via DarkBit. We then compare the prediction to the relic density constraint from Planck 2018: \({\varOmega }_{\text {DM}}\,h^2 = 0.120 \pm 0.001\) [98]. We include a \(1\%\) theoretical error on the computed values of the relic density, which we combine in quadrature with the observed error on the Planck measured value. More details on this prescription can be found in Refs. [48, 52].
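The combination of uncertainties can be sketched as follows. This is a minimal illustration that assumes a Gaussian likelihood and takes the 1% theoretical error relative to the predicted value; the exact prescription is the one given in Refs. [48, 52], and the function name is not part of DarkBit.

```python
import math

OMEGA_OBS = 0.120    # Planck 2018 central value quoted above
SIGMA_OBS = 0.001    # observational uncertainty quoted above
THEORY_FRAC = 0.01   # 1% theoretical error on the prediction

def lnlike_relic_density(omega_pred):
    """Gaussian log-likelihood with observational and theoretical
    uncertainties combined in quadrature (assumed form)."""
    sigma = math.hypot(SIGMA_OBS, THEORY_FRAC * omega_pred)
    chi2 = ((omega_pred - OMEGA_OBS) / sigma) ** 2
    return -0.5 * chi2 - math.log(math.sqrt(2.0 * math.pi) * sigma)

best = lnlike_relic_density(0.120)
worse = lnlike_relic_density(0.125)
```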

We note that our uncertainty estimate does not include uncertainties in the calculation of the annihilation cross-section very close to quark thresholds, which may be considerably larger. Moreover, our approach does not capture the potential effect of additional degrees of freedom on \(\langle \sigma v_\text {rel}\rangle \) during freeze-out. The resulting effects, such as resonances or coannihilations could both increase and decrease the resulting value of \({\varOmega }_\chi \) (see e.g. Refs. [132, 133]), so the relic density constraint should be interpreted with care for \({\Lambda }\sim 2m_\chi \), i.e. close to the EFT validity boundary (see Sect. 2.2).

The very nature of the EFT construction implies additional degrees of freedom above the energy scale \({\Lambda }\). Given the potential for a rich dark sector containing \(\chi \), and in particular, the possibility of additional DM candidates not captured by the EFT, we will by default not demand that the particle \(\chi \) constitutes all of the observed DM, i.e. we allow for the possibility of other DM species to contribute to the observed relic density. In practice, this means that we modify the relic density constraint in such a way that the likelihood is flat if the predicted value is smaller than the observed one. In this case, we rescale all predicted direct and indirect detection signals by

and \(f_\chi ^2\), respectively. In doing so, we assume that the fraction \(f_\chi \) is the same in all astrophysical systems and that any additional DM population does not contribute to signals in these experiments. In a second set of scans we then impose a stricter requirement, namely that the DM particle under consideration saturates the DM relic abundance (\(f_\chi \approx 1\)) rather than imposing the relic density as an upper bound (\(f_\chi \le 1\)).Footnote 9
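The rescaling logic can be sketched in Python as follows. The sketch assumes that \(f_\chi \) is the ratio of the predicted to the observed relic density, capped at one, and replaces the full likelihood by a simple one-sided penalty that vanishes for under-abundant points. All function names are hypothetical.

```python
OMEGA_OBS = 0.120  # observed DM relic density quoted above

def dm_fraction(omega_pred, omega_obs=OMEGA_OBS):
    """Assumed definition: share of the observed DM made up by chi, capped at 1."""
    return min(1.0, omega_pred / omega_obs)

def rescale_signals(direct, indirect, omega_pred):
    """Scattering signals scale with the DM density (f), annihilation
    signals with the density squared (f**2)."""
    f = dm_fraction(omega_pred)
    return f * direct, f ** 2 * indirect

def chi2_upper_bound(omega_pred, sigma, omega_obs=OMEGA_OBS):
    """One-sided penalty: zero (flat likelihood) for under-abundant points."""
    excess = max(0.0, omega_pred - omega_obs)
    return (excess / sigma) ** 2

dd, ind = rescale_signals(10.0, 10.0, omega_pred=0.06)  # half of the observed density
```

The different powers of \(f_\chi \) mean that under-abundant points are penalised less by indirect detection than by direct detection.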

3.3 Indirect detection with gamma rays

If DM is held in thermal equilibrium in the early universe via collisions with SM particles, then it can still annihilate today, especially in regions of high DM density. As with the relic abundance calculation, in order for the effective picture to hold for DM annihilation, we must impose \({\Lambda }> 2 m_\chi \).

Gamma rays from dwarf spheroidal galaxies (dSphs) are a particularly robust way of constraining annihilation signals from DM [134]. In general, for a given energy bin i, the DM-induced \(\gamma \)-ray flux from target k can be written in the factorised form \({\varPhi }_i \cdot J_k\), where details of the particle physics processes are encoded in \({\varPhi }_i\), and details of the astrophysics are encoded in \(J_k\). See the DarkBit manual [52] for more details.

In general, only operators that lead to s-wave annihilation give rise to observable gamma-ray signals; see, for instance, Table 2. For some operators, the leading contribution to the annihilation cross-section is instead p-wave suppressed, i.e. proportional to \(v_{\text {rel}}^2\). As DM in dSphs is extremely cold, with \(\langle v^2\rangle ^{1/2}\sim 10^{-4}\), this factor is very small, and the resulting limits are exceedingly weak. We therefore neglect p-wave contributions to all annihilation processes here.

For s-wave annihilation, one obtains

where \(f_\chi \) is the DM fraction defined in Eq. (30), \((\sigma v)_{0,j}\) denotes the zero-velocity limit of the cross-section for \(\chi {{\bar{\chi }}}\rightarrow j\) and \(N_{\gamma ,j}\) is the number of photons, per annihilation, resulting from the final state channel j. The prefactor 1/4 accounts for the Dirac nature of the DM particles (under the assumption that \(n_\chi =n_{{{\bar{\chi }}}}\)). Again, we use CalcHEP to compute annihilation cross-sections, with the CalcHEP model files generated by ufo_to_mdl via GUM (see Appendix B). The photon yields \({dN_{\gamma ,j}}/{dE}\) used in DarkBit are based on tabulated Pythia runs, as provided by DarkSUSY.

The J-factor for each dSph k is simply the line-of-sight integral over the DM distribution assuming an NFW density profile and the solid angle \({\varOmega }\),

where \(D_k\) is the distance to the dSph. In our analysis we use the Pass-8 combined analysis of 15 dSphs after 6 years of Fermi-LAT data [96]. We use the gamLike v1.0.1 interface within DarkBit [52] to compute the likelihood for the gamma-ray observations, \(\ln {\mathcal {L}}_{\mathrm{exp}}\), constructed from the product \({\varPhi }_i \cdot J_k\) and summed over all targets and energy bins,

We also include a contribution from profiling over the J-factors of each dSph, \(\ln {\mathcal {L}}_J = \sum _k \ln {\mathcal {L}}(J_k)\) [52, 96], such that the full likelihood reads

Gamma rays from the Galactic centre region provide a promising complementary way of constraining a signal from annihilating DM. The J-factor is expected to be significantly higher than for dSphs; however, this conclusion is largely based on the result of numerical simulations of gravitational clustering rather than on the direct analysis of kinematical data. The reason for this is that the gravitational potential within the solar circle is dominated by baryons, not by DM, which adds further uncertainty due to a dominant component of astrophysical gamma rays from this target region. As a result, Galactic centre observations with Fermi-LAT are somewhat less competitive than the dSph limits discussed above [135]. The upcoming Cherenkov Telescope Array (CTA), on the other hand, has a good chance of probing thermally produced DM up to particle masses of several TeV [136]. We will not include the projected CTA likelihoods in our scans, but indicate the reach of CTA when discussing our results.

3.4 Other indirect detection constraints

3.4.1 Solar capture

The presence of non-zero elastic scattering cross-sections with nuclei combined with self-annihilation to heavy SM states leads to an additional, unique signature of DM in the form of high-energy neutrinos from the Sun. If Milky Way DM scatters with solar nuclei and loses enough kinetic energy to fall below the local escape velocity, it will become gravitationally bound. As long as it is above the evaporation mass threshold \(\simeq 4\) GeV, captured DM will thermalize in a small region near the solar centre, and annihilate to SM products which then produce neutrinos via regular decay processes. These are distinct from the neutrinos from Solar fusion, as they are expected to have much higher energies than the \(\sim \) MeV scales of fusion processes. Leading constraints have been obtained by Super-Kamiokande down to a few GeV [137], and by the IceCube South Pole Neutrino Observatory, between 20 and 10\(^4\) GeV [138]. For typical annihilation cross-sections, the captured DM population reaches an equilibrium that is determined by the capture rate. For each likelihood evaluation, we obtain the non-relativistic effective operators (Eq. (25)) as described in Sect. 3.1, using DirectDM to obtain the non-relativistic Wilson coefficients. These are passed to the public code Capt’n General [139], which computes the DM capture rate via the integral over the solar radius r and DM halo velocity u:

where \(w(r) = \sqrt{u^2 + v^2_{{\text {esc}},\odot }(r)}\) is the DM velocity at position r, and

is the probability of scattering from velocity w to a velocity less than the local Solar escape velocity \(v_{\text {esc},\odot }(r)\), \({d\sigma _{i}}/{dE_{\text {R}}}\) is the DM-nucleus scattering cross-section, \(n_i(r)\) is the number density of species i with atomic mass \(m_{N,i}\), and \(\mu _i = m_\chi /m_{N,i}\). Version 2.1 of Capt’n General uses the method described in detail in Ref. [140], separating the DM-nucleus cross-section into factors proportional to non-relativistic Wilson coefficients, powers of w and exchanged momentum q, and operator-dependent nuclear response functions computed in Ref. [140] for the 16 most abundant elements in the Sun. Solar parameters are based on the Barcelona Group’s AGSS09ph Standard Solar Model [141, 142].

Annihilation cross-sections are computed as described in Sect. 3.2, via CalcHEP. Once the equilibrium population of DM in the Sun has been obtained, cross-sections and annihilation rates are passed to DarkSUSY, which computes the neutrino yields as a function of energy. These are finally passed to nulike v1.0.9 [143, 144], which computes event-level likelihoods based on a re-analysis of the 79-string IceCube search for DM annihilation in the Sun [97].

3.4.2 Cosmic microwave background

Additional constraints on the DM annihilation cross-section arise from the early universe, more specifically from observations of the Cosmic Microwave Background (CMB). Annihilating DM particles inject energy into the primordial plasma, which affects the reionisation history and alters the optical depth \(\tau \). The magnitude of this effect depends on the specific annihilation channel and how efficiently the injected energy is deposited. These details can be encoded in an effective efficiency coefficient \(f_{\text {eff}}\), which depends on the injected yields of photons, electrons and positrons, and thus on the DM mass and its branching ratios into different final states [145]. The CMB is then sensitive to the following parameter combination:

where \(\langle \sigma v_{\text {rel}} \rangle \approx (\sigma v)_0\) to a very good approximation during recombination; we thus also neglect p-wave contributions to all annihilation processes here.

In order to calculate \(p_{\text {ann}}\) for a given parameter point, one first needs to calculate the injected spectrum of photons, electrons and positrons and then convolve the result with suitable transfer functions that link the energy injection rate to the energy deposition rate [146]. The first part of this calculation has been automated within DarkSUSY and is accessible via DarkBit. The second part relies on DarkAges [147] (which is part of the ExoCLASS branch of CLASS) and is accessible via CosmoBit [54], see Appendix C for further details.Footnote 10

As the Planck collaboration only quotes the 95% credible interval for \(p_{\text {ann}}\) [98], the remaining challenge is to obtain a likelihood for \(p_{\text {ann}}\) from cosmological data. Although this likelihood can, in principle, be calculated for each parameter point individually using the CosmoBit interface to CLASS and the Planck likelihoods, carrying out such a large number of calculations would be prohibitively slow, in particular if the cosmological parameters of the \({\Lambda }\)CDM model are to be varied simultaneously. In the present work, we therefore adopt a simpler approach, where we first calculate the likelihood when varying \(p_{\text {ann}}\) (while profiling over the \({\Lambda }\)CDM and cosmological nuisance parameters). This approach yields

where \(p_{\text {ann}}^{28} \equiv p_{\text {ann}} / \left( 10^{-28} \, \text {cm}^3~\text {s}^{-1}~\text {GeV}^{-1} \right) \). In arriving at this result, we have included the Planck TT,TE,EE+lowE+lensing likelihoods (using the ‘lite’ likelihood for multipoles \( \ell \ge 30 \), which only requires one additional nuisance parameter [148]), as well as the BAO data of 6dF [149], SDSS DR7 MGS [150], and the SDSS BOSS DR12 galaxy sample [151]. This profile likelihood, which reproduces the 95% credible interval obtained by the Planck collaboration [98], can then be used in all subsequent scans, so that only \(p_{\text {ann}}\) needs to be calculated for each parameter point and it is no longer necessary to call CLASS or plc.
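The per-point calculation then reduces to a unit conversion, sketched below. The sketch assumes the commonly used form \(p_{\text {ann}} = f_{\text {eff}} \, \langle \sigma v \rangle / m_\chi \); any additional factors, such as the DM fraction or a factor for Dirac particles, are passed through a generic `prefactor` argument, which is a hypothetical name, as is the function itself. The example values are illustrative.

```python
def p_ann_28(sigma_v_cm3_s, m_chi_gev, f_eff, prefactor=1.0):
    """p_ann in units of 1e-28 cm^3 s^-1 GeV^-1, assuming
    p_ann = prefactor * f_eff * <sigma v> / m_chi."""
    p_ann = prefactor * f_eff * sigma_v_cm3_s / m_chi_gev
    return p_ann / 1e-28

# Illustrative point: <sigma v> = 3e-26 cm^3/s, m_chi = 100 GeV, f_eff = 0.2.
p28 = p_ann_28(3e-26, 100.0, 0.2)
```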

3.4.3 Charged cosmic rays

Finally, DM particles annihilating in the Galactic halo also produce positrons, antiprotons and, to a lesser degree, heavier anti-nuclei that could in principle be observed in the spectrum of charged cosmic rays. Positrons quickly lose their energy through synchrotron radiation, and are thus a robust probe of exotic contributions from the local Galactic environment; the resulting bounds on DM annihilating to quarks or gluons are, however, much weaker than the other indirect detection constraints discussed here [152].Footnote 11 Anti-nuclei, on the other hand, probe a significant fraction of the entire Galactic halo because energy losses are much less efficient in this case. For antiprotons, this generally leads to competitive constraints on DM annihilation signals [155,156,157], but it also means that such bounds necessarily strongly depend on uncertainties relating to modelling the production and propagation of cosmic rays in the Galactic halo. In addition to the dozen (or more) free parameters in the diffusion-reacceleration equations, there exist significant uncertainties on the energy dependence of the nuclear cross-sections responsible for the conventional antiproton flux [158] and possible correlated systematics [159]. A full statistical analysis, which would require a treatment of the large number of (effective) propagation parameters as nuisance parameters in our scans, is prohibitive in terms of computational costs [160] and hence beyond the scope of this work.

3.5 Collider physics

The effective operators defined in Sect. 2 allow for the pair production of WIMPs in the proton–proton collisions at the LHC. If one of the incoming partons radiates a jet through initial state radiation (ISR), one can observe the process \(pp \rightarrow \chi \chi j\) as a single jet associated with missing transverse energy (\(E_T^{\text {miss}}\)). In this study, we include the CMS [95] and ATLAS [94] monojet analyses based on \(36\,\mathrm {fb}^{-1}\) and \(139\,\mathrm {fb}^{-1}\) of data from Run II, respectively. ATLAS and CMS have performed a number of further searches for other types of ISR, leading for example to mono-photon signatures, but these are known to give weaker bounds on DM EFTs than monojet searches [24, 161, 162].

The expected number of events in a given bin of the missing transverse energy distribution is

\[ N = L \times \sigma \times (\epsilon A), \tag{38} \]

where \(L =36\,{\text {fb}}^{-1}\) or \(139\,{\text {fb}}^{-1}\) is the total integrated luminosity, \(\sigma \) the total production cross-section and the factor \((\epsilon A)\) is the efficiency times acceptance for passing the kinematic selection requirements for the analysis. Both \(\sigma \) and \((\epsilon A)\) can be obtained via Monte Carlo simulation, but given the dimensionality of the DM EFT parameter space it is computationally too expensive to perform these simulations on the fly during the parameter scan, as would be the standard approach to collider simulations within ColliderBit in GAMBIT.

Starting from UFO files generated using FeynRules v2.0 [163], we have therefore produced separate interpolations of \(\sigma \) and \(\epsilon A\) based on the output of Monte Carlo simulations with MadGraph_aMC@NLO v2.6.6 [164] (v2.9.2) for the CMS (ATLAS) analysis, interfaced to Pythia v8.1 [165] for parton showering and hadronization. The matching between MadGraph and Pythia is performed according to the CKKW prescription, and the detector response is simulated using Delphes v3.4.2 [166]. The ColliderBit code extension that enables \(\sigma \) and \((\epsilon A)\) interpolations to be used as an alternative to direct Monte Carlo simulation will be generalised and documented in the next major version of ColliderBit.
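As a rough illustration of how tabulated values can stand in for on-the-fly simulation, the sketch below linearly interpolates hypothetical grids of \(\sigma \) and \((\epsilon A)\) in the DM mass and multiplies them by the luminosity to obtain an expected yield. The grid values, the restriction to a one-dimensional grid and the function names `interp1d` and `expected_yield` are placeholders chosen for illustration; they are not taken from the ColliderBit tables or code.

```python
from bisect import bisect_right

def interp1d(x_grid, y_grid, x):
    """Piecewise-linear interpolation on a sorted grid; raises outside it."""
    if not x_grid[0] <= x <= x_grid[-1]:
        raise ValueError("point lies outside the tabulated grid")
    # Upper index of the grid cell containing x (clamped at the last node).
    hi = min(bisect_right(x_grid, x), len(x_grid) - 1)
    lo = hi - 1
    t = (x - x_grid[lo]) / (x_grid[hi] - x_grid[lo])
    return (1.0 - t) * y_grid[lo] + t * y_grid[hi]

# Hypothetical tables for one operator and one signal region (made-up numbers).
MASS_GEV = [10.0, 100.0, 500.0, 1000.0]
SIGMA_FB = [40.0, 30.0, 8.0, 1.0]        # production cross-section in fb
EFF_ACC = [0.010, 0.020, 0.040, 0.050]   # efficiency times acceptance

def expected_yield(m_chi, lumi_fb_inv):
    """Expected events: luminosity times cross-section times (eps A)."""
    sigma = interp1d(MASS_GEV, SIGMA_FB, m_chi)
    eps_a = interp1d(MASS_GEV, EFF_ACC, m_chi)
    return lumi_fb_inv * sigma * eps_a
```

In a realistic setting the grids would also span the Wilson coefficients, and one table would be needed per signal region; the one-dimensional version only shows the bookkeeping.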

We only include those dimension-6 and dimension-7 EFT operators that are relevant for collider searches. Other operators give a negligible contribution because they are suppressed either by the parton distribution functions (in the case of heavy quarks) or by a factor of the fermion mass (small in the case of light quarks).

To reduce the computation time for our study, we generate events in discrete grids of the Wilson coefficients and DM mass. Separate grids are defined for each set of operators that do not interfere, such that the total number of events is simply the sum of the contributions calculated from each grid. At dimension 6, there is interference between two pairs of operators. For these Wilson coefficients, we parametrize the tabulated grids in terms of a mixing angle \(\theta \).

The CMS and ATLAS analyses have 22 and 13 exclusive signal regions, respectively, corresponding to the individual bins in the missing transverse energy distributions. As discussed below, the publicly available information makes it possible to combine all signal regions for the CMS analysis, while for the ATLAS analysis, only a single signal region can be used at once. To maximize the sensitivity of the ATLAS analysis, we combine the three highest missing energy bins, for which systematic uncertainties in the background estimation (and hence their correlations) are negligible, such that the highest bin in our analysis contains all events above the lower edge of the third-highest ATLAS bin.Footnote 12 Once the predicted yields for all bins have been evaluated, taking into account the EFT validity constraint as described in Sect. 2.2, we compute a likelihood for each analysis as follows.

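For the CMS analysis, we follow the “simplified likelihood” method [167], since the required covariance matrix was published by CMS. In this approach, the full experimental likelihood function is approximated by a standard convolved Poisson–Gaussian form, with the systematic uncertainties on the background predictions treated as correlated Gaussian distributions:

\[ {\mathcal {L}}_{{\text {CMS}}}({\varvec{s}}, {\varvec{\gamma }}) = \prod _{i=1}^{22} \left[ \frac{(s_i + b_i + \gamma _i)^{n_i} \, e^{-(s_i + b_i + \gamma _i)}}{n_i!} \right] \times \frac{1}{\sqrt{\det (2\pi {\varvec{{\varSigma }}})}} \exp \left( -\frac{1}{2} {\varvec{\gamma }}^T {\varvec{{\varSigma }}}^{-1} {\varvec{\gamma }} \right) . \tag{39} \]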

For each signal region i, the observed yield, expected signal yield and expected background yield are given by \(n_i\), \(s_i\) and \(b_i\), respectively. The deviation from the nominal expected yield due to systematic uncertainties is given by \(\gamma _i\). The correlations between the different \(\gamma _i\) are encoded in the covariance matrix \({\varvec{{\varSigma }}}\) provided by CMS, where we also add the signal yield uncertainties in quadrature along the diagonal. We follow the procedure in Ref. [167] in treating the \(\gamma _i\) nuisance parameters as linear corrections to the expected yields. For every point in our scans of the DM EFT parameter space, we profile Eq. (39) over the 22 nuisance parameters in \({\varvec{\gamma }}\) to obtain a likelihood solely in terms of the set of DM EFT signal estimates \({\varvec{s}}\):

\[ {\mathcal {L}}_{{\text {CMS}}}({\varvec{s}}) \equiv {\mathcal {L}}_{{\text {CMS}}}({\varvec{s}}, \hat{\hat{{\varvec{\gamma }}}}) . \tag{40} \]

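In the case of the ATLAS analysis, for which such a covariance matrix is not available, the conservative course of action is to calculate a likelihood using only the signal region with the best expected sensitivity. The ATLAS likelihood is therefore given by

\[ {\mathcal {L}}_{{\text {ATLAS}}}({\varvec{s}}) \equiv {\mathcal {L}}_{{\text {ATLAS}}}(s_i, \hat{\hat{\gamma _i}}), \tag{41} \]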

where \({\mathcal {L}}_{{\text {ATLAS}}}(s_i, \hat{\hat{\gamma _i}})\) is the single-bin equivalent of Eq. (39), and i refers to the signal region with the best expected sensitivity, i.e. the signal region that would give the lowest likelihood in the case \(n_i = b_i\).
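A minimal sketch of the single-bin construction is given below. It assumes a Poisson term multiplied by a Gaussian constraint of width `sigma_b` on the yield shift \(\gamma _i\), for which the profiled value of \(\gamma _i\) follows from a quadratic equation. The selection rule compares expected log-likelihood differences with respect to the background-only case, which is one possible reading of "lowest likelihood in the case \(n_i = b_i\)"; the function names and the closed-form profiling are illustrative assumptions, not the ColliderBit implementation.

```python
import math

def profiled_loglike(n, s, b, sigma_b):
    """Single-bin Poisson x Gaussian log-likelihood, profiled over gamma.

    Assumes n > 0 and sigma_b > 0 so that the profiled rate is positive.
    """
    mu = s + b
    var = sigma_b ** 2
    # Setting the gamma-derivative to zero gives a quadratic equation;
    # this root keeps the Poisson rate lam = mu + gamma positive.
    lam = 0.5 * ((mu - var) + math.sqrt((mu - var) ** 2 + 4.0 * var * n))
    gamma = lam - mu
    return (n * math.log(lam) - lam - math.lgamma(n + 1.0)
            - 0.5 * gamma ** 2 / var - 0.5 * math.log(2.0 * math.pi * var))

def best_expected_region(signal, background, sigma_b):
    """Index of the region with the lowest expected log-likelihood difference,
    evaluated with the observed count set equal to the background."""
    def expected_delta(i):
        b = background[i]
        return (profiled_loglike(b, signal[i], b, sigma_b[i])
                - profiled_loglike(b, 0.0, b, sigma_b[i]))
    return min(range(len(signal)), key=expected_delta)
```

Using the difference to the background-only case, rather than the raw likelihood, avoids comparing regions whose likelihoods are normalised differently because of their very different typical yields.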

The total LHC log-likelihood is then given by \(\ln {\mathcal {L}}_{{\text {LHC}}} = \ln {\mathcal {L}}_{{\text {CMS}}} + \ln {\mathcal {L}}_{{\text {ATLAS}}}\). However, due to the per-point signal region selection required in the evaluation of \(\ln {\mathcal {L}}_{{\text {ATLAS}}}\), the variation in typical yields between the different signal regions would manifest as a large variation in the effective likelihood normalization between different parameter points. To avoid this, we follow the standard approach in ColliderBit of using the log-likelihood difference

\[ {\varDelta } \ln {\mathcal {L}}_{{\text {LHC}}} = \ln {\mathcal {L}}_{{\text {LHC}}}({\varvec{s}}) - \ln {\mathcal {L}}_{{\text {LHC}}}({\varvec{s}} = {\varvec{0}}) \tag{42} \]

as the LHC log-likelihood contribution in the parameter scan [168].

When presenting the results of a global fit we identify the maximum-likelihood point \({\varvec{{\Theta }}}_{\text {best-fit}}\) in the DM EFT parameter space and map out the \(1\sigma \) and \(2\sigma \) confidence regions defined using the likelihood ratio \({\mathcal {L}}({\varvec{{\Theta }}}) / {\mathcal {L}}({\varvec{{\Theta }}}_{\text {best-fit}})\). Thus, in cases where some region of the DM EFT parameter space can accommodate a modest excess in the collider data, other DM EFT parameter regions that might still perform better than the SM, or that are experimentally indistinguishable from the SM, can appear as excluded. While this is perfectly reasonable, given that the comparison is to the best-fit DM EFT point and not to the SM expectation, it is also interesting to study the global fit results under the assumption that mild excesses in the collider data indeed do not originate from a true new physics signal. A simple and pragmatic approach is then to replace \({\varDelta } \ln {\mathcal {L}}_{{\text {LHC}}}\) with a capped version,

\[ {\varDelta } \ln {\mathcal {L}}_{{\text {LHC}}}^{\text {cap}}({\varvec{s}}) = \min \left[ {\varDelta } \ln {\mathcal {L}}_{{\text {LHC}}}({\varvec{s}}), 0 \right] . \tag{43} \]

This will assign the same log-likelihood value, \({\varDelta } \ln {\mathcal {L}}_{{\text {LHC}}}^{\text {cap}} = 0\), to all DM EFT parameter points whose predictions fit the collider data as well as, or better than, the SM prediction (\(\mathbf{s} = \mathbf{0}\)) does. Thus, analogous to how exclusion limits from LHC searches are constructed to only exclude new physics scenarios that predict too many signal events, the capped likelihood only penalizes parameter points for performing worse than the background-only scenario. The result obtained from using \({\varDelta } \ln {\mathcal {L}}_{{\text {LHC}}}^{\text {cap}}\) in a fit is therefore close to the result one would obtain by constructing a joint exclusion limit for the LHC searches, and applying this limit as a hard cut on the parameter space favoured by the other observables. The main difference is that the capped LHC likelihood incorporates a continuous likelihood penalty.Footnote 13 A more detailed introduction to the capped likelihood construction can be found in Ref. [169].
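The effect of the cap can be seen in a toy example. The sketch below uses a single Poisson bin without systematic uncertainties, which is a deliberate simplification of the full LHC likelihood, and the function names and event counts are invented for illustration.

```python
import math

def poisson_loglike(n, lam):
    """Log of the Poisson probability of observing n events for mean lam."""
    return n * math.log(lam) - lam - math.lgamma(n + 1.0)

def delta_loglike(n, s, b):
    """Log-likelihood difference relative to the background-only case (s = 0)."""
    return poisson_loglike(n, s + b) - poisson_loglike(n, b)

def capped_delta_loglike(n, s, b):
    """Capped version: points that fit better than background-only get 0."""
    return min(delta_loglike(n, s, b), 0.0)

# Toy bin with a mild excess: 12 events observed on a background of 10.
mild = capped_delta_loglike(12, 2.0, 10.0)    # signal absorbs the excess, capped at 0
large = capped_delta_loglike(12, 10.0, 10.0)  # too much signal, negative penalty
```

In this toy bin, a signal of 2 events fits the excess better than the background alone, so the uncapped difference is positive and the cap sets it to zero, while a signal of 10 events overshoots the data and keeps its negative penalty.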

Below we will present some results using this capped LHC likelihood, and some using the full LHC likelihood in Eq. (42). In light of the discussion above, the two sets of results should be interpreted as answering slightly different questions: The fit results with the full LHC likelihood show what DM EFT scenario is in best agreement with the complete set of current data, and how much worse other DM EFT scenarios perform in comparison. The results with the capped LHC likelihood map out the DM EFT parameter space that is preferred by the non-collider observables and not excluded by a combination of the LHC searches.

3.6 Nuisance parameter likelihoods

In our scans we also vary a set of relevant nuisance parameters related to the DM observables and SM measurements. Most of these nuisance parameters are directly constrained by dedicated measurements, which we include through appropriate likelihood functions. In some cases, however, several conflicting measurements exist, indicating additional systematic uncertainties in the methodology. In these cases we constrain the nuisance parameters through effective likelihoods intended to give a conservative constraint on the allowed ranges. The nuisance parameters and \(3\sigma \) ranges used in this study are summarised in Table 4. We briefly cover each nuisance likelihood in turn below.

We follow the default prescription in DarkBit for the local DM density \(\rho _0\), where the likelihood is given by a log-normal distribution with central value \(\rho _0 = 0.40\) GeV cm\(^{-3}\) and error \(\sigma _{\rho _0}=0.15\) GeV cm\(^{-3}\). We scan over an asymmetric range in \(\rho _0\) to reflect the log-normal distribution – see Ref. [52] for more details.

We follow the same treatment of the Milky Way halo as in the GAMBIT Higgs portal study [110]. We utilise Gaussian likelihoods for parameters describing the Maxwell-Boltzmann velocity distribution, specifically the peak of the distribution \(v_{\mathrm{peak}} = 240\, \pm \,8\) km s\(^{-1}\) [170], and the galactic escape velocity \(v_{\mathrm{esc}} = 528 \pm 25\) km s\(^{-1}\), based on the Gaia data [171].
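The halo-related nuisance likelihoods described above can be sketched as follows. The central values and errors are those quoted in the text; the convention used to convert the quoted error on \(\rho _0\) into a width for \(\ln \rho _0\), namely \(\ln (1 + \sigma _{\rho _0}/\rho _0)\), is an assumption made here for illustration, and the function names are hypothetical rather than DarkBit's.

```python
import math

def gaussian_loglike(x, mean, sigma):
    """Log of a normalised Gaussian density."""
    return (-0.5 * ((x - mean) / sigma) ** 2
            - math.log(sigma * math.sqrt(2.0 * math.pi)))

def lognormal_loglike(x, central, sigma):
    """Log of a log-normal density in x.

    Assumed convention: the width of ln(x) is ln(1 + sigma / central).
    """
    width = math.log(1.0 + sigma / central)
    return (-0.5 * (math.log(x / central) / width) ** 2
            - math.log(x * width * math.sqrt(2.0 * math.pi)))

def halo_loglike(rho0, v_peak, v_esc):
    """Sum of the three halo nuisance log-likelihoods.

    Units: rho0 in GeV cm^-3, velocities in km s^-1.
    """
    return (lognormal_loglike(rho0, 0.40, 0.15)
            + gaussian_loglike(v_peak, 240.0, 8.0)
            + gaussian_loglike(v_esc, 528.0, 25.0))
```

The log-normal form penalises a density of 0.20 GeV cm\(^{-3}\) more strongly than one of 0.60 GeV cm\(^{-3}\), even though both lie equally far from the central value on a linear scale, which is why an asymmetric scan range is natural.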

We employ a Gaussian likelihood for the running top quark mass in the \(\overline{\text {MS}}\) scheme with a central value \(m_t (m_t) = 162.9\) GeV and an error of 2.0 GeV [172].Footnote 14 The top pole mass \((m_t^\text {pole})\) is then computed using the following formula:

\[ m_t^\text {pole} = m_t(m_t) \left[ 1 + \frac{4}{3\pi } \alpha _s(m_t) \right] . \tag{44} \]

We use only the one-loop QCD corrections in this shift in order to be consistent with the procedure carried out in Ref. [172]. We have checked that the above expression gives the expected result for the top pole mass and matches well with Ref. [172].
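For orientation, the size of this shift can be estimated with a few lines of code, assuming the standard one-loop QCD relation \(m_t^\text {pole} = m_t(m_t)\,[1 + 4\alpha _s(m_t)/(3\pi )]\). The value of the strong coupling passed in the usage line is an illustrative input of roughly the right size, not a number taken from the paper.

```python
import math

def top_pole_mass(mt_msbar, alpha_s):
    """One-loop QCD conversion from the MS-bar mass m_t(m_t) to the pole mass.

    mt_msbar: running mass in GeV; alpha_s: strong coupling at the scale m_t.
    """
    return mt_msbar * (1.0 + 4.0 * alpha_s / (3.0 * math.pi))

# Illustrative input: alpha_s(m_t) of about 0.108 (assumed value).
pole = top_pole_mass(162.9, 0.108)
```

With these inputs the one-loop correction raises the mass by a few per cent, i.e. by several GeV.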

For direct detection, we employ nuisance parameter likelihoods for a number of hadronic input parameters that are used to evaluate form factors at the nuclear scale. Specifically, we use a product of four Gaussian likelihoods to include the constraints on \(\sigma _{\pi N}\), \({\varDelta } s\), \(g_T^s\) and \(r_s^2\) quoted in Table 3. The remaining hadronic input parameters are fixed to the central values given in Table 3.

4 Results

We now present the results obtained from comprehensive scans of the parameter space introduced above. These scans were carried out with the differential evolution sampler Diver v1.4.0 [173] using a population of \(5 \times 10^4\) and a convergence threshold of either \(10^{-5}\) or \(3 \times 10^{-5}\). As we will analyse our scan results using profile likelihood maps, the sole aim of the scans is to map out the likelihood function in sufficient detail across the high-likelihood regions of parameter space. In particular, no statistical interpretation is associated with the density of parameter samples, and we can therefore combine samples from scans that use different metrics on the parameter space. To ensure that all parameter regions are properly explored, we perform two different types of scans:

- Full: We explore DM masses up to the unitarity bound (\(5 \, \text {GeV}< m_\chi < 150\,\text {TeV}\) and \(20 \, \text {GeV}< {\Lambda }< 300 \, \text {TeV}\)).Footnote 15 In these scans, \(m_\chi \) and \({\Lambda }\) are scanned on a logarithmic scale, while the Wilson coefficients are scanned on both a linear and a logarithmic scale (i.e. we combine the samples from both scanning strategies to achieve a thorough exploration of the whole parameter space).

- Restricted: We consider the parameter region where experimental constraints are most relevant (\(m_\chi < 500\,\text {GeV}\) and \({\Lambda }< 2 \, \text {TeV}\)). In these scans the DM mass is scanned on a linear scale, the scale \({\Lambda }\) on a logarithmic scale and the Wilson coefficients on a scale that is logarithmic on \([-4\pi ,-10^{-6}]\), linear on \([-10^{-6},10^{-6}]\) and logarithmic on \([10^{-6},4\pi ]\). This approach was found to achieve the optimum resolution of the LHC constraints while simultaneously ensuring that enough viable samples are also found for small \({\Lambda }\) when some or all of the Wilson coefficients are tightly constrained.
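The hybrid scale used for the Wilson coefficients in the restricted scans can be pictured as a map from the unit interval to \([-4\pi , 4\pi ]\). The sketch below is one possible realisation: the fraction of the unit interval assigned to the linear piece (`linear_fraction`) is an arbitrary illustrative choice, since the text does not specify how the three pieces are weighted, and the function name is hypothetical.

```python
import math

C_MIN, C_MAX = 1e-6, 4.0 * math.pi

def hybrid_scale(u, linear_fraction=0.2):
    """Map u in [0, 1] to a Wilson coefficient in [-4*pi, 4*pi].

    The outer parts of the unit interval are logarithmic in |C|, the central
    part is linear on [-1e-6, 1e-6]. Requires 0 < linear_fraction < 1.
    """
    if not 0.0 <= u <= 1.0:
        raise ValueError("u must lie in [0, 1]")
    log_fraction = 0.5 * (1.0 - linear_fraction)
    if u < log_fraction:
        # Negative logarithmic branch: t = 0 gives -4*pi, t = 1 gives -1e-6.
        t = u / log_fraction
        return -C_MAX * (C_MIN / C_MAX) ** t
    if u > 1.0 - log_fraction:
        # Positive logarithmic branch: t = 0 gives 1e-6, t = 1 gives 4*pi.
        t = (u - (1.0 - log_fraction)) / log_fraction
        return C_MIN * (C_MAX / C_MIN) ** t
    # Linear branch from -1e-6 to 1e-6.
    t = (u - log_fraction) / linear_fraction
    return C_MIN * (2.0 * t - 1.0)
```

A sampler that draws `u` uniformly then spends equal effort per decade of \(|C|\) on either side of zero while still passing smoothly through \(C = 0\).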

All nuisance parameters are scanned on a linear scale. In the first set of scans, we fix the Wilson coefficients for all dimension-7 operators to zero, so that there are 6 model parameters and 8 nuisance parameters. The second set of scans then includes all 14 Wilson coefficients, bringing the total number of parameters up to 24.