Abstract

We examine the capacity of the Large Hadron Collider to determine the mean proper lifetime of long-lived particles assuming different decay final states. We mostly concentrate on the high luminosity runs of the LHC and therefore develop our discussion in light of the high amount of pile-up and the various upgrades for the HL-LHC runs. We employ model-dependent and model-independent methods in order to reconstruct the proper lifetime of neutral long-lived particles decaying into displaced leptons, potentially accompanied by missing energy, as well as charged long-lived particles decaying into leptons and missing energy. We also present a discussion for lifetime estimation of neutral long-lived particles decaying into displaced jets, along with the challenges in the high PU environment of HL-LHC. After a general discussion, we illustrate and discuss these methods using several new physics models. We conclude that the lifetime can indeed be reconstructed in many concrete cases. Finally, we discuss to which extent including timing information, which is an important addition in the Phase-II upgrade of CMS, can improve such an analysis.

1 Introduction

The lack of observation of new physics at the Large Hadron Collider (LHC) has prompted a re-evaluation of the strategies aiming to probe signals of physics beyond the standard model (BSM). Initial expectations had been that new physics would reveal itself in prompt searches involving leptons, jets, and missing energy, or, eventually, in the form of exotic resonances. However, no smoking gun signal has appeared in such searches so far. It is, therefore, only reasonable to entertain the possibility that new physics may manifest itself in unexpected ways, in the form of non-standard signatures. Although such a terra incognita can be daunting to explore, we can appeal to well-motivated theoretical scenarios for guidance. An attractive possibility is that some of the produced particles are long-lived, i.e. that the secondary vertices through which they decay are macroscopically displaced with respect to the primary interaction point at which they are produced. Such signatures appear in a large variety of new physics frameworks such as supersymmetry [1,2,3,4,5,6,7,8,9,10,11,12,13,14,15], Twin Higgs models [16], gauge unification frameworks based on vector-like fermions [17], or Hidden Valley models [18,19,20], as well as in frameworks including dark matter [21,22,23,24,25,26,27,28,29] or baryogenesis [30].

Searches for long-lived particles (LLPs) have already been pursued at previous experiments like CDF and Dø, and are being pursued by ATLAS, CMS and LHCb at the LHC, see, e.g., Refs. [31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51]. They will be one of the primary focuses of new physics searches at the LHC in the coming years. For an overview of recent LLP searches, we refer the reader to Ref. [52] and references therein. In addition to the multi-purpose experiments ATLAS and CMS, dedicated detectors like FASER [53] and MATHUSLA [54] have been proposed to probe long-lived particles [55]. The range of new physics scenarios that such detectors can explore is both vast and very well-motivated [56, 57]. Moreover, these proposals aim at filling in a “lifetime gap” between prompt collider searches and cosmological constraints such as big bang nucleosynthesis (BBN), which is typically sensitive to lifetimes of the order of 0.1 s or longer [58].

In this paper we consider LLPs as states with a proper lifetime long enough such that they decay only after traversing some macroscopic distance (order of few cm) within the detector. Such lifetimes can be induced either by rather small couplings or in specific kinematic configurations involving small mass splittings between the particles participating in the process or large propagator masses. Regardless of the underlying physics, LLPs introduce significant additional complications for experimental searches as compared to promptly produced particles. If the LLP is heavy and charged, it will leave a distinct track in the detector, making detection easier, while neutral LLPs are more difficult to detect.

We place ourselves in the hopeful scenario that long-lived particles (LLPs) will be observed at the High Luminosity runs of the Large Hadron Collider (HL-LHC) and we examine its capacity to reconstruct the LLP lifetime. Extracting the lifetime information can not only provide crucial information in order to, at least partly, reconstruct features of the underlying microscopic model, notably the coupling strength between the LLP and its decay products, but may also lead to the establishment of more unexpected connections between LHC observations and, e.g., cosmology, for an example see Ref. [26]. Motivated by our simple analysis presented in Ref. [59], we explore several ways to estimate the lifetimes of different kinds of LLPs with multifarious decay modes.

Several studies have appeared in the literature concerning different ways to estimate the lifetime of LLPs. Our analysis builds upon and expands this body of work in a number of ways:

- Many of the existing analyses have focused on the potential for lifetime determination in searches for charged LLPs [60,61,62,63,64,65]. The advantage of charged LLPs is that their boost and mass can be inferred from their tracks in the detector, while in the case of charged massive stopped particles (CHAMPs) different methods can be employed which have been explored, e.g., in [66,67,68,69,70,71,72]. The – arguably, more challenging – case of neutral LLPs, in which one has to reconstruct their decay products in order to estimate their lifetime has, on the other hand, received less attention. In this work, we attempt to fill in this void.

- Many of the existing analyses have considered several thousands of LLP events in order to assess whether their lifetime can be reconstructed. Given the recent null results from numerous LHC searches, the current bounds only allow between a few hundred and a few thousand events. In this paper, we choose to work with cross-section values (Footnote 1) that conform with these latest limits. At the same time, we place ourselves in the framework of the High-Luminosity LHC (HL-LHC), which is expected to accumulate a total luminosity of \(3000 \, {\text {fb}}^{-1}\), thus increasing the possibility of discovering and measuring the properties of long-lived particles even for more moderate cross-section values.

- On the other hand, even though this luminosity increase will be extremely beneficial for LLP searches, with the accumulation of more data the pile-up (PU) events are going to negatively impact the prospects for observing LLPs and measuring their properties. Indeed, \(\sim 140\) PU events per bunch crossing are expected in the HL-LHC [73], compared to only 30–50 in the previous runs. However, the ATLAS and CMS detectors will also undergo major upgrades – both in the hardware and in the software fronts: increase in the rapidity range of the calorimeters, improved timing information, addition of timing layers which will help experimentalists to better understand and subtract pile-up events are but a few such examples. Although we are fully aware of the fact that, at the end of the day, these issues can only be analysed in full by the experimental collaborations themselves, we attempt to provide at least some preliminary discussion on how such factors may affect our capacity to reconstruct the lifetime of LLPs.

- Most existing analyses have employed \(\chi ^2\) methods in order to reconstruct the LLP lifetime. In this work, we discuss how likelihood-based methods and some machine learning algorithms fare against such canonical treatments.

In what follows we present ways to estimate the lifetime of LLPs considering various decay channels into different final states. We start with the simplest case of displaced leptons, continue with displaced jets and, eventually, study LLP decays involving invisible particles. Most of the analyses presented here can be applied to numerous LLP models involving such final states and also to several future colliders. Finally, we also study the prospect of the proposed MIP timing detector (MTD) [74], which will be included in the Phase-II upgrade of the CMS detector and will play a key role in improving the determination of the LLP lifetime during the HL-LHC runs. We should point out that at various stages we will allow for some leeway, in terms of the known quantities available to us, as well as potentially speculate on some uncertainties that will only be precisely estimated once the LHC resumes operation after the long shutdown 2 (LS2).

The paper is organised as follows: In Sect. 2, we start by recalling basic formulae related to the LLP lifetime and discuss why we restrict ourselves to LLP decays within the tracker. In Sect. 3, we discuss existing bounds on processes involving LLPs, we illustrate why the LLP lifetime cannot be reconstructed through a naive exponential fit, we propose alternative approaches and we study how these can be used to estimate the lifetime for different LLP decay modes. Section 4 is dedicated to a discussion of the MTD and how adding timing information can improve the situation, not only concerning the lifetime estimation but also in order to identify the model by reconstructing the mass of the LLP in cases where the LLP decays involve invisible particles. Our conclusions are presented in Sect. 5.

2 Long-lived particle lifetime reconstruction

We start this Section by reviewing some basic relations concerning the lifetime of long-lived particles (LLPs).

2.1 Kinematics of LLPs

In the laboratory frame, the decay length of a particle with mean proper decay time \(\tau \) (as measured in its own rest frame) is given by

\[ d = \beta \gamma c \tau , \]

where \(\gamma = E/m = (1-\beta ^2)^{-1/2}\) is the relativistic factor with \(\beta = v/c = |\vec {p}|/E\), v is the velocity of the decaying particle and c denotes the speed of light. For simplicity, we will refer to the mean proper decay lifetime (\(\tau \)) or the mean proper decay length (\(c\tau \)) as just “lifetime” or “decay length”, unless stated otherwise. The decay probability of such particles follows the same distribution as the one encountered in radioactive decays. If we consider the production of a number \(N_0\) of such unstable particles with mean proper lifetime \(\tau \), the expected number of surviving particles N(t) evolves as a function of time t through the usual exponentially decreasing distribution

\[ N(t) = N_0 \, e^{-t/\tau } . \]

By measuring the decay length \(d_i\) of each event, together with the corresponding kinematical factor \(\beta _i\), we can deduce the proper decay time associated to the event. Ideally, it is possible to infer the values of \(N_0\) and \(\tau \) by performing an exponential fit of the sample data, provided that enough statistics is available. If the proper decay length is large, the number of LLP decays within the detector volume will be very small, and therefore we will require a large enough statistical sample to perform a faithful fit.
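The conversion from measured quantities to proper decay times can be sketched as follows. This is only a toy illustration, not the analysis code used in this work: it draws proper decay times from an exponential, turns them into lab-frame decay lengths with an assumed fixed velocity, and then recovers \(\tau _i = d_i/(\beta _i \gamma _i c)\). For an untruncated sample, the maximum-likelihood estimate of \(\tau \) is simply the sample mean. The function names, the sample size, and the choice \(\beta = 0.8\) are illustrative assumptions.

```python
import math
import random

C_CM_PER_NS = 29.9792458  # speed of light in cm/ns


def proper_time_ns(d_cm, beta):
    """Proper decay time from lab-frame decay length d and velocity beta."""
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    return d_cm / (beta * gamma * C_CM_PER_NS)


def toy_sample(ctau_cm, beta, n, seed=1):
    """Toy (d_i, beta_i) pairs for an assumed c*tau and a fixed beta."""
    rng = random.Random(seed)
    gamma = 1.0 / math.sqrt(1.0 - beta * beta)
    tau_ns = ctau_cm / C_CM_PER_NS
    sample = []
    for _ in range(n):
        t = rng.expovariate(1.0 / tau_ns)  # proper decay time in ns
        sample.append((beta * gamma * C_CM_PER_NS * t, beta))
    return sample


def estimate_ctau_cm(sample):
    """MLE of c*tau for an untruncated exponential: the sample mean."""
    times = [proper_time_ns(d, b) for d, b in sample]
    return C_CM_PER_NS * sum(times) / len(times)


sample = toy_sample(ctau_cm=50.0, beta=0.8, n=20000)
ctau_hat = estimate_ctau_cm(sample)
print(f"estimated c*tau = {ctau_hat:.2f} cm")
```

In this idealised setting the estimate converges to the input value; the complications discussed later arise precisely because real samples are truncated by detector acceptance and selection cuts.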

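We note that the geometrical acceptance probability for an LLP with a decay length d as it traverses the detector is given by

\[ P = \frac{1}{4\pi } \int _{\Delta \Omega } d\Omega \int _{L_{1}}^{L_{2}} dL \, \frac{1}{d} \, e^{-L/d} , \]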

where \(L_{1}\) and \(L_{2}\) are the distances between the interaction point and the point where the LLP respectively enters and exits the decay volume, and \(\Delta \Omega \) is the geometric cross-section of the active detector volume [57]. Thus we clearly see that while the LHC can be sensitive to decays occurring within a certain displacement, the probability decreases if the displacement length is significant (Footnote 2).
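To make the last statement concrete, one can evaluate the radial part of the acceptance for a single flight direction. Assuming an exponential decay law with mean lab-frame decay length d, the probability to decay between \(L_1\) and \(L_2\) is \(e^{-L_1/d} - e^{-L_2/d}\). The short sketch below tabulates this for a 30 cm volume; the function name and the chosen values of d are illustrative assumptions, and the angular integration is ignored.

```python
import math


def decay_prob_between(l1_cm, l2_cm, d_cm):
    """P(L1 < decay distance < L2) for an exponential law with mean length d."""
    return math.exp(-l1_cm / d_cm) - math.exp(-l2_cm / d_cm)


for d in (1.0, 10.0, 100.0, 1000.0, 10000.0):
    p = decay_prob_between(0.0, 30.0, d)
    print(f"d = {d:8.0f} cm -> P(decay within 30 cm) = {p:.4f}")
```

For \(d \gg L_2\) the probability falls roughly as \((L_2 - L_1)/d\), which is why very long-lived particles require large event samples.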

In the following, we will consider the production of a variety of long-lived particles, hereafter denoted by X, with different decay modes. The long-lived particle can be charged or neutral and therefore its identification efficiency depends on the tracker and the energy deposition in the detector, among various other factors.

2.2 Restricting to decays within tracker

In order to estimate the lifetime of the long-lived particle X, in the following we will restrict ourselves to decays occurring inside the tracker region of the detector. This not only allows access to the position of the secondary vertex (SV), but also helps in reconstructing the charged decay products of X as well as in the measurement of the boost factor \(\beta \gamma \). However, this restriction will limit the number of observed LLP decays, especially in the case of particles characterised by longer lifetimes leading to decays outside the tracker region. In this Section we quantify the fraction of decays we can expect within the tracker for given ranges of LLP masses and lifetimes.

Consider the production of a pair of long-lived particles at the LHC, \(pp \rightarrow XX\), and their subsequent decays into Standard Model particles. Since the boost factor \(\beta \gamma \) of the particles X depends on their production mode, for illustration we focus on a supersymmetric (SUSY) model containing a LLE-type R-parity violating (RPV) coupling, for a review cf e.g. [75]. In this model a pair of sneutrino LLPs is produced through a quark-initiated s-channel process as in Fig. 1 and decays into two electron pairs, with a mean proper lifetime that is controlled by the LLP mass and the magnitude of the RPV coupling. Events have been simulated using PYTHIA6 [76] at \(\sqrt{s}=14 \, \text {TeV}\).

In Fig. 2 we show the variation of the fraction of LLP decays as a function of the LLP mass and proper decay length. We focus on two intervals for the decay length, namely a decay within 30 cm (left panel) or between 30 and 100 cm (right panel) from the beam line. The former corresponds to the transverse dimension of the ATLAS large area tracking setup as given in [77] and the latter to the rest of the tracker region [78].

For particles with masses in the TeV range, we observe that even for proper lifetimes of the order of a few meters, about 40% of the decays are expected to take place within 30 cm from the beam line, i.e. within the silicon detector. For lighter particles with masses around 10 GeV, this fraction drops to about 20%, provided the proper lifetime is \(c\tau \lesssim 1 \,\text {m}\). For even lighter particles, the sensitivity will be reduced, since they may have larger boost factors and consequently decay much later. Considering radial distances between 30 and 100 cm from the beam line, we find that we can expect at least \(10\%\) of the decays within this region for a mass of \(\sim 1~ \text {TeV}\) and a mean proper lifetime of \(c\tau \sim 10\, \text {m}\). The same fraction of decays is expected for a particle mass of about 10 GeV and a proper lifetime of \(c\tau \sim 1\, \text {m}\).

The small increase in the fraction of decays within \(30~ \text {cm}\) and a subsequent dip in the corresponding fraction in the region between 30 and \(100~ \text {cm}\), for LLP masses around 40–50 GeV is due to the presence of the Z-pole in the LLP pair-production cross-section. At these mass values, the LLPs will preferentially be produced by the decay of an on-shell Z-boson and hence they will have very little boost and smaller decay lengths.

We also notice that in the right panel plot of Fig. 2, a red region is sandwiched between two purple regions, i.e., the decay fraction in this region first rises and then falls again. This is because as the lifetime increases up to a particular value (\(c\tau \sim \) few \(100~ \text {mm}\)), we expect the decay fraction in this region to increase. However, when the lifetime becomes much higher, the decay length distribution becomes flatter and hence, the chances of the LLP to decay in regions of the detector outside the tracker also increase, making the decay fraction in this region decrease.
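This rise and fall can be made explicit with a simplified estimate. As an assumption made purely for illustration, take a single fixed lab-frame decay length \(d = \beta \gamma c\tau \) and ignore the angular distribution, so that radial distances are treated as flight distances. The probability to decay between \(L_1\) and \(L_2\) and its maximum are then

\[ P(d) = e^{-L_1/d} - e^{-L_2/d}, \qquad \frac{dP}{dd} = \frac{1}{d^2}\left( L_1 e^{-L_1/d} - L_2 e^{-L_2/d}\right) = 0 \;\; \Rightarrow \;\; d^{*} = \frac{L_2 - L_1}{\ln (L_2/L_1)} . \]

For \(L_1 = 30~ \text {cm}\) and \(L_2 = 100~ \text {cm}\) this gives \(d^{*} \approx 58~ \text {cm}\), where \(P(d^{*}) \approx 0.42\). For boost factors of order unity, this corresponds to \(c\tau \) of a few hundred mm, in line with the scale quoted above.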

In summary, we find that for a wide range of proper decay length values \(c\tau \), the probability of the LLP to decay within a distance of 30 cm from the beam line is substantial. In the following, we will therefore restrict ourselves to decays taking place within this radius, i.e. within the region of the ATLAS tracker where large area tracking is possible, since it will serve well in identifying the position of the secondary vertex.

3 Lifetime reconstruction for different LLP decay modes

After the previous preliminary remarks, let us now turn to our analysis. We will study to which extent the lifetime of a long-lived particle (LLP) can be reconstructed considering the following four decay scenarios:

- Displaced leptons: In this case we will assume a neutral LLP decaying into a pair of leptons. We will generate our Monte Carlo data set using a supersymmetric model containing a LLE-type R-parity violating coupling [8, 39, 75, 79,80,81,82]. In this model the sneutrino is the LLP and it decays into two electrons. Given this final state, the position of the secondary vertex as well as the boost (\(\beta \gamma \)) of the LLP can be experimentally measured.

- Displaced leptons with missing transverse energy (\({\mathrm{E}/}_{\mathrm{T}}\)): We will assume a neutral LLP decaying into a pair of leptons along with an invisible particle. Here we will employ a minimal gauge-mediated supersymmetry breaking (GMSB) model in which a long-lived lightest neutralino decays into a Z and a nearly massless gravitino [1, 5, 81, 83]. We will also discuss the feasibility of estimating the lifetime in a scenario with a heavier invisible particle in Sect. 4.1.2. For this signature, although the position of the secondary vertex can be measured, the boost of the LLP cannot be reconstructed since part of the final state is invisible.

- Kinked (or disappearing) tracks: We will consider a charged LLP decaying into a lepton along with an invisible particle. The model that we will use for this analysis is again LLE-type RPV SUSY [9, 35, 88], with a slepton LLP decaying into a charged lepton and a neutrino. In these signatures, the position of the kink or the position where the charged track disappears can provide the LLP decay position, and the \(\beta \gamma \) of the LLP can be calculated from the charged LLP track itself.

- Displaced jets: Here we will consider a neutral LLP decaying into two jets. In this analysis, we will again use the R-parity-violating supersymmetric framework, but with a LQD-type coupling [8, 75, 84] inducing the decay of a long-lived sneutrino into a jet pair. For the displaced jets signature, the position of the secondary vertex can also be measured. It is possible to reconstruct the \(\beta \gamma \) of the LLP from the jets, however, the reconstruction is plagued with important uncertainties due to the fact that the observed jets are quite different than the initial partons.

Let us also note that this choice of models should not be taken to reflect any theoretical prejudice. They have been chosen simply for convenience, as they are already incorporated in the PYTHIA6 framework and they can give rise to the experimental signatures that we will be studying in what follows. In the same spirit, we will not be concerned with the phenomenological viability of these models with regards to the full set of constraints that could be envisaged. Put simply, for the purposes of this work these models should be viewed as toy models. The results are general and should hold for most models exhibiting the respective topologies.

3.1 Typical cross-sections and triggering of LLPs

We start by briefly discussing the cross-section upper limits that have been obtained in various experimental searches by CMS and ATLAS and which are relevant for our processes. This will guide us in choosing realistic values for these cross-sections which are allowed by current observations.

- Displaced leptons (electrons or muons)

The CMS search for LLPs decaying to two leptons at \(\sqrt{s}=8 \, \text {TeV}\) with \(19.6 \, {\text {fb}}^{-1}\) of data sets an upper limit of few fb on the signal cross-section (production of LLPs from SM Higgs decays and branching of LLPs to electrons/muons) when the LLP mass is 20 GeV and it has a proper mean decay length (\(c\tau \)) of 1 cm [82].

The search for displaced vertices of oppositely charged leptons in ATLAS at \(\sqrt{s}=13 \, \text {TeV}\) with \(32.8 \, {\text {fb}}^{-1}\) of data rules out signal production cross-sections up to \(\sim 0.3 \, \text {fb}\) for the signal model where a pair of squarks of mass 700 GeV is produced and decays into a 500 GeV neutralino which is long-lived (\(c\tau \sim 2\)–\(3 \, \text {cm}\)) and decays into two charged leptons (electron/muon) and a neutrino via an RPV coupling [39].

- Displaced jets

The CMS search for displaced jets at \(\sqrt{s}=13 \, \text {TeV}\) with \(132 \, {\text {fb}}^{-1}\) data for the jet-jet model (where LLPs are pair produced and decay to two quarks) sets a cross-section upper limit of 1 fb for an LLP mass of 100 GeV having \(c\tau \) of 1 cm [85]. This study has imposed a bound of 0.07 fb at 95% confidence level for simplified models with pair-produced neutral LLPs decaying into quark-antiquark pairs for long-lived particle masses larger than 500 GeV and mean proper decay lengths between 2 and 250 mm. In Ref. [86], LLPs with mean proper decay length ranging between 0.1 and 100 mm have been studied. In the context of R-parity violating SUSY scenarios, a cross-section bound of 0.08 fb at 95% confidence level for neutralino and gluino masses between 800 GeV and 3 TeV, has been obtained. The mean proper decay length has been constrained between 1 mm and 25 mm for such pair-produced particles.

For ATLAS, the search for displaced hadronic jets in the inner detector and muon spectrometer at \(\sqrt{s}=13 \, \text {TeV}\) with \(33.0 \, {\text {fb}}^{-1}\) of data sets an upper limit of \(\sim 10^{-2}\) on the branching of SM Higgs to scalar LLPs which in turn decay to give displaced jets when the LLP has a mass of 25 GeV and \(c\tau \) of 60–70 cm [87].

- Disappearing tracks

In the recent CMS search for disappearing tracks [88] at \(\sqrt{s}=13 \, \text {TeV}\) with \(140 \, {\text {fb}}^{-1}\) of data, the upper limit on cross-section times branching of a chargino decaying into a wino-like neutralino is found to be \(\sim 5 \, \text {fb}\), for a 500 GeV chargino with \(c\tau = 100 \, \text {cm}\).

The disappearing tracks search at \(\sqrt{s}=13 \, \text {TeV}\) with \(36.1 \, {\text {fb}}^{-1}\) of data in ATLAS [35] excludes charginos with masses up to \(\sim \) 560 GeV having \(c\tau \) of 30 cm when \(\text {tan} \beta =5\) and \(\mu >0\).

From this discussion on the various cross-section upper limits we conclude that the strongest bounds until now are obtained by ATLAS in the displaced leptons search and the corresponding upper limit of \({\mathcal {O}}(0.1) \, \text {fb}\) applies for an LLP of mass 500 GeV having \(c\tau \) of \(2\)–\(3 \, \text {cm}\), with the LLP stemming from the decay of a 700 GeV particle. All other limits are mostly in the ballpark of at least a few fb for the \(c\tau \) values for which they are the most sensitive. In this work, we therefore choose typical cross-sections of 1 fb, 0.1 fb and 0.05 fb for the various LLP processes. These amount to 3000, 300 and 150 pairs of LLPs produced at the HL-LHC for \(3000 \, {\text {fb}}^{-1}\) of luminosity, respectively. Although these are conservative numbers for some of the scenarios in which the limits are weaker, which implies that the corresponding cross-sections can still be greater than 1 fb, they will provide us with an idea of how well the lifetime can be estimated even with a moderate number of observed events.
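The quoted event counts follow from \(N = \sigma \times {\mathcal {L}}\), before any acceptance or efficiency factors. A minimal sketch of this arithmetic, with illustrative variable names, reads:

```python
LUMINOSITY_FB_INV = 3000.0  # HL-LHC integrated luminosity quoted in the text


def expected_pairs(sigma_fb, lumi_fb_inv=LUMINOSITY_FB_INV):
    """Expected number of produced LLP pairs, N = sigma * L (no efficiencies)."""
    return sigma_fb * lumi_fb_inv


yields = {sigma: expected_pairs(sigma) for sigma in (1.0, 0.1, 0.05)}
for sigma, n_pairs in yields.items():
    print(f"sigma = {sigma:5.2f} fb -> {n_pairs:.0f} LLP pairs")
```

Since each event contains a pair of LLPs, the number of individual LLPs available before cuts is twice these values.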

The next step is to ensure that the LLP process can be triggered upon. If the event does not pass triggering criteria, it will be lost forever and this will severely hamper the prospects of discovering LLPs at the LHC (Footnote 3). Usually the first level of triggers is hardware-based and has very limited information available at a coarser resolution. These are followed by software-based triggers, which have access to the full detector granularity.

Until now no tracking information was available at the first level triggers, however, there is a proposal to include tracking at the level-1 (L1) in the Phase-II upgrade. This opens up the possibility to use the L1 tracking for designing dedicated LLP triggers. Several attempts have been made to develop dedicated triggers for LLPs, both by experimental collaborations [90, 91] and phenomenological studies [92, 93]. The Phase-II upgrade of the CMS L1 trigger [94] also has some discussions on how specific triggers can be developed for triggering events with displaced objects. Reference [94] has also discussed the prospect of using the ECAL timing for triggering on displaced jets, having a \(p_T\) as low as 20 GeV. In summary, even in the high PU environment of HL-LHC, triggering of LLPs will be possible making use of the Phase-II upgrades along with the unique features of processes involving LLPs.

3.2 A note on backgrounds

For any discovery at the LHC, one has to carefully consider the backgrounds. Despite the numerous challenges characterising long-lived particles, they generically tend to give rise to fairly clean channels with relatively low background rates. Therefore, throughout our analysis we have neglected processes and instrumental effects that can act as backgrounds to the LLP signatures that we consider.

As an example, the authors of [95] followed one CMS [96] and one ATLAS [97] analysis to estimate the double differential inclusive jet cross-section and the inclusive prompt photon cross-section, respectively. They found that if the probability to measure an object with a given lifetime is modelled as a gaussian smear with a time resolution of 30 ps (the standard deviation of the gaussian distribution), the number of background events mis-measured to have a time-delay of more than 1 ns was negligible, as 1 ns lies more than 30 standard deviations away from the central value of the distribution. They further showed that for objects with a worse resolution, such as electrons, a 60 ps time resolution would, similarly, lead to negligible backgrounds. Moreover, the authors estimated the number of pile-up events, which can also act as backgrounds. They computed the number of pile-up backgrounds to be around \(10^7\), considering the fake rate of jet \(\rightarrow \) photon \(\sim 10^{-4}\), fraction of track-less jets \(\sim 10^{-3}\), and the inclusive cross-section of \(\sigma _{\mathrm{inc}} \sim 80 \, \text {mb}\). Applying a gaussian smear with a slightly larger resolution of 190 ps, they found that the number of background events for a time delay of \(\Delta t> 1 \, \text {ns} \, (\Delta t > 2 \, \text {ns})\) is 0.7 (0). A recent CMS paper [43] and the LLP community white paper [52] further categorised various other sources of backgrounds. The respective number of background events from beam halo, satellite bunch crossings and cosmic rays was found to be of the order of 0.5, 1 and 1 respectively, after imposing \(p_T\) cuts on the objects. Other sources of backgrounds can arise from fake-particle signatures that mimic real particles. These usually arise from spurious detector noise and are very hard to model with Monte Carlo simulations.
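The order of magnitude of the pile-up estimate can be cross-checked with a Gaussian tail integral. The sketch below assumes a one-sided tail (an assumption on our part, not necessarily the exact procedure of Ref. [95]) and uses the numbers quoted above: about \(10^7\) pile-up background events and a 190 ps resolution.

```python
import math


def gaussian_tail(delta_t_ns, sigma_ns):
    """One-sided probability that a Gaussian-smeared time exceeds delta_t."""
    return 0.5 * math.erfc(delta_t_ns / (sigma_ns * math.sqrt(2.0)))


N_PILEUP_BKG = 1e7  # pile-up background count quoted in the text
SIGMA_NS = 0.190    # 190 ps timing resolution

n_above_1ns = N_PILEUP_BKG * gaussian_tail(1.0, SIGMA_NS)
n_above_2ns = N_PILEUP_BKG * gaussian_tail(2.0, SIGMA_NS)
print(f"expected background with delta_t > 1 ns: {n_above_1ns:.2f}")
print(f"expected background with delta_t > 2 ns: {n_above_2ns:.2e}")
```

A delay of 1 ns sits about 5.3 standard deviations from zero for this resolution, giving an expected background of order 0.7 events, while a 2 ns delay is more than 10 standard deviations away and the expectation is effectively zero.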

All in all, the low level of background rates that is expected in LLP searches allows us to neglect their impact, at least in this preliminary study. Needless to say that, in case one of the LHC experiments does observe an excess, they will have to be carefully studied.

3.3 Displaced leptons

We start with the experimentally simplest case in which a long-lived particle X decays into two leptons within the inner tracker of ATLAS. In this case, the position of the secondary vertex can be identified precisely by observing the lepton tracks in the tracker (Footnote 4). In general, the larger the number of tracks, the greater is the efficiency of reconstructing the secondary vertex, cf Ref. [99]. The position of the secondary vertex for a pair of displaced leptons can be reconstructed with a precision of few (\({\mathcal {O}}(10)\)) \(\mu \text {m}\) in the transverse direction if the decay occurs towards the inner tracker region, and becomes more uncertain for longer transverse displacements, cf Refs. [52, 99]. The mass of the decaying particle X can be inferred from the dilepton invariant mass distribution. Finally, the boost factor of the decaying particle X can be determined as \((\beta \gamma )_{\text {LLP}} = p/m\), where p and m are the absolute momentum and the invariant mass of the dilepton system, respectively. We present our analyses assuming two displaced electrons in the final state. In the case of muons, we need not restrict to the inner tracker and can rather consider decays up to the muon spectrometer [100]. In this case, we could also use the muon tracks in the muon spectrometer to reconstruct the secondary vertex as well as the four-momentum of the LLP.
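The determination of \((\beta \gamma )_{\text {LLP}} = p/m\) from the two leptons can be sketched as follows. The helper names and the example kinematics are hypothetical, and the electrons are treated as massless; no detector smearing is included.

```python
import math


def four_vector(pt, eta, phi, mass=0.0):
    """(E, px, py, pz) in GeV from collider coordinates."""
    px = pt * math.cos(phi)
    py = pt * math.sin(phi)
    pz = pt * math.sinh(eta)
    e = math.sqrt(px * px + py * py + pz * pz + mass * mass)
    return (e, px, py, pz)


def dilepton_mass_and_boost(lep1, lep2):
    """Invariant mass m and boost beta*gamma = |p|/m of the lepton pair."""
    e, px, py, pz = (a + b for a, b in zip(lep1, lep2))
    p = math.sqrt(px * px + py * py + pz * pz)
    m = math.sqrt(max(e * e - p * p, 0.0))
    return m, p / m


# Hypothetical electron pair (massless approximation)
l1 = four_vector(pt=60.0, eta=0.3, phi=0.2)
l2 = four_vector(pt=45.0, eta=-0.5, phi=2.4)
m_ll, beta_gamma = dilepton_mass_and_boost(l1, l2)
print(f"m_ll = {m_ll:.1f} GeV, beta*gamma = {beta_gamma:.2f}")
```

Combined with the measured displacement d of the secondary vertex, the proper decay length of each LLP then follows as \(c\tau _i = d_i/(\beta \gamma )_i\).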

3.3.1 Lessons from a naive exponential fit

Let us first attempt to reconstruct the LLP lifetime through a simple exponential fit (Footnote 5). We will see that experimental cuts introduce a bias on the sample and, hence, hamper the lifetime estimation. Solutions to this issue will be suggested in the following Sect. 3.3.4 and further elaborated upon in the subsequent Sections.

For this introductory exercise, we consider an ideal situation in which initial and final state radiation as well as smearing effects are absent, and the four-momenta of the long-lived particles X can be measured with infinite precision. We generate our data sample of parton-level events, \(pp \rightarrow XX\), at \(\sqrt{s}=14 \, \text {TeV}\) using PYTHIA6 for different masses and lifetimes of the particle X (Footnote 6). Note that the \(\beta \gamma \) distribution of the LLPs will vary depending on their production mode and is, therefore, a model-dependent quantity.

We demand that both the electrons coming from the decay of the long-lived particle X have transverse momentum \(p_T > 20 \, \text {GeV}\) and, in order to illustrate one key difference between the previous LHC runs and the HL-LHC, we impose two alternative pseudorapidity cuts, namely \(|\eta | < 2.4\) (previous Runs) or \(|\eta |<4.0\) (HL-LHC) [101]. The samples with only the \(p_T\) and \(\eta \) cuts applied will be referred to as “basic cuts” (BC). Since the reconstruction of the secondary vertex becomes more difficult and less precise as the latter approaches the outer surface of the tracker, we impose an additional condition on the displacement of the secondary vertex with respect to the interaction point. We restrict ourselves to events for which the transverse decay length \(d_T\) of X lies within the region of the ATLAS tracker where large area tracking is possible (which extends out to 30 cm in the radial direction) and the displacement \(|d_z|\) in the longitudinal direction is within 150 cm which corresponds to the half-length of the ATLAS tracker. The samples with these additional cuts on the decay length along with the basic cuts applied will be hereafter referred to as “extra cuts” (EC). All used cuts can be summarized as follows:

- (BC): \(p_T>20~ \text {GeV}\) and \(|\eta |<2.4\) (previous LHC Runs) or \(|\eta |<4.0\) (HL-LHC) for both electrons,

- (EC): \(0.1~ \text {cm}< d_T < 30~ \text {cm}\) and \(|d_z| < 150~ \text {cm}\) for the displacement of the secondary vertex, in addition to (BC).
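The two selections can be written as simple predicates. This is a sketch of the cut logic only, with hypothetical function names and an illustrative candidate; the thresholds are the ones listed above, and the pseudorapidity limit is left as a parameter to switch between the two scenarios.

```python
def passes_basic_cuts(electrons, eta_max=2.4, pt_min=20.0):
    """BC: both electrons satisfy pT > pt_min (GeV) and |eta| < eta_max."""
    return all(pt > pt_min and abs(eta) < eta_max for pt, eta in electrons)


def passes_extra_cuts(electrons, d_t_cm, d_z_cm, eta_max=2.4):
    """EC: BC plus 0.1 cm < d_T < 30 cm and |d_z| < 150 cm for the vertex."""
    in_tracker = 0.1 < d_t_cm < 30.0 and abs(d_z_cm) < 150.0
    return passes_basic_cuts(electrons, eta_max) and in_tracker


# Hypothetical LLP candidate: two electrons as (pT [GeV], eta), plus vertex
electrons = [(35.0, 1.1), (28.0, -3.0)]
bc_run2 = passes_basic_cuts(electrons, eta_max=2.4)    # False: |eta| = 3.0
bc_hllhc = passes_basic_cuts(electrons, eta_max=4.0)   # True
ec_hllhc = passes_extra_cuts(electrons, d_t_cm=12.0, d_z_cm=-40.0, eta_max=4.0)
```

The example candidate fails the \(|\eta |<2.4\) requirement but is recovered with the extended HL-LHC coverage, which is the difference the two pseudorapidity choices are meant to illustrate.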

For the lifetime estimate we use all the selected LLPs, and not specifically one or two LLPs per event. In Fig. 3 we show the impact of these cuts on the decay length \(d = \beta \gamma c \tau \), proper lifetime \(\tau \) and \(\beta \gamma \) distributions (top-left, top-right and bottom-left panels respectively), when applied individually as well as all combined.

We first focus on the case in which \(|\eta |<2.4\). We observe that the pseudorapidity cut on the electrons introduces a bias on the \(\beta \gamma \) distribution towards smaller values. This is explained by the fact that \(|\eta | < 2.4 \) restricts events to the central region, characterised by high \(p_{T}\) and low \(p_{z}\). The fraction of events with high values of \(\beta \gamma = p/m = \sqrt{p_x^2+p_y^2+p_z^2}/m\) in the central region is much smaller compared to the forward region (see footnote 7), and therefore the pseudorapidity cut rejects a large fraction of such events. On the other hand, we see that the cuts on the \(p_T\) of the electrons and on the transverse decay length of X affect the \(\beta \gamma \) distribution only slightly.

Effect of the cuts on the distribution of the reconstructed decay length d (upper left), the proper lifetime \(\tau \) (upper right), and the boost factor \(\beta \gamma \) (bottom left and right for two different pseudorapidity cuts \(|\eta |<2.4\) and \(|\eta |<4.0\) respectively). The reconstruction here is actually a pseudo-reconstruction in which detector effects and inefficiencies have not been taken into account. The distributions have been obtained assuming a mass of 100 GeV and a proper decay length of 50 cm. In each panel, we indicate the specific cuts that have been applied

For the decay length d, we observe that this distribution is mostly affected by the cuts on the transverse decay length of X, pushing the spectrum towards lower values of d. Similarly, these cuts also shift the proper lifetime \(\tau \) distribution towards lower values, hence, biasing our samples in favour of events characterised by smaller proper decay lengths. This implies that the observed distribution is overall skewed with respect to the underlying one, and our estimate for the lifetime will also be biased towards smaller values.

Based on the discussion presented in Sect. 2, we now attempt to estimate the lifetime of the particle X through a simple exponential fit using the TF1 class integrated in the ROOT environment [102]. The performance of this estimation depends both on the mean proper lifetime of the LLP and on its mass. To illustrate this dependence, we perform our fit assuming two different LLP masses, 100 GeV and 1 TeV, and three different mean decay lengths, 10 cm, 50 cm, and 1 m. The results of this exercise are summarised in Table 1, which shows the estimated lifetimes based on the samples without any cuts and with the various cuts applied for all six cases. For completeness, we also quote the number of LLPs remaining after each set of cuts is applied, starting with a sample of 10,000 LLPs (i.e., 5000 events, since in each event a pair of LLPs is produced; see footnote 8).

We observe that although the LLP mean decay length can be accurately reconstructed when the BC sample is used, once the (necessary) extra cuts are applied the result of the fitting procedure becomes incompatible with the actual underlying value. The situation becomes worse when the true decay length is large, since a larger fraction of the decays occurs beyond the limit of \(d_T < 30 \, \text {cm}\). The induced bias leads to results which can deviate from the actual decay length by almost one order of magnitude. The situation becomes marginally better when the mass of the LLP increases, since heavier LLPs are characterised by smaller \(\beta \gamma \) values and are more centrally produced, but it is clear that a naive exponential fit to the data does not constitute a viable option to reconstruct the LLP lifetime.
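The origin of this bias can be reproduced with a toy Monte Carlo. The sketch below is not the ROOT TF1 fit used in the text: it uses the sample mean of the proper decay length, which is the maximum-likelihood estimate of an exponential mean, and it assumes a purely illustrative flat \(\beta _T\gamma \) distribution between 0.5 and 3 in place of a real production model.

```python
import random


def naive_lifetime_estimates(ctau_true=50.0, n_llp=20000, d_t_max=30.0, seed=1):
    """Return (estimate without cuts, estimate after the d_T window, LLPs kept).

    The estimate is the sample mean of the proper decay length c*t [cm],
    i.e. the maximum-likelihood estimate for an untruncated exponential.
    """
    rng = random.Random(seed)
    all_ct, kept_ct = [], []
    for _ in range(n_llp):
        ct = rng.expovariate(1.0 / ctau_true)  # proper decay length [cm]
        boost_t = rng.uniform(0.5, 3.0)        # toy beta_T*gamma (assumption)
        d_t = boost_t * ct                     # transverse decay length [cm]
        all_ct.append(ct)
        if 0.1 < d_t < d_t_max:                # displacement part of the EC
            kept_ct.append(ct)
    mean_all = sum(all_ct) / len(all_ct)
    mean_kept = sum(kept_ct) / len(kept_ct)
    return mean_all, mean_kept, len(kept_ct)


if __name__ == "__main__":
    no_cut, with_cut, n_kept = naive_lifetime_estimates()
    print(f"no cuts: {no_cut:.1f} cm, after d_T window: {with_cut:.1f} cm "
          f"({n_kept} LLPs kept)")
```

Without cuts the estimate sits close to the input value, whereas after the \(d_T\) window it falls well below it, because decays with large proper decay lengths are preferentially removed.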

Moving to the case of the HL-LHC (\(|\eta | \lesssim 4.0\)), which will be our focus in everything that follows, we also show the effect of the looser \(\eta \) cut on the \(\beta \gamma \) distribution in the bottom-right panel of Fig. 3. We observe that in this case the \(\beta \gamma \) distribution is affected substantially less, implying that the HL-LHC upgrade may perform better in estimating the LLP lifetime. However, the effects of the extra cuts, which concern the position of the SV, remain. Moreover, as we already mentioned, pile-up is expected to become an important issue in the HL-LHC environment. In the next section, we discuss how the addition of 140 PU vertices per hard interaction affects the situation and how it can be remedied.

3.3.2 The high PU environment of HL-LHC

To simulate the high PU environment of HL-LHC, we generate 1 million soft QCD events using PYTHIA8 [103] and use these as the pile-up. We merge this PU with the hard process using the PileUpMerger of Delphes-3.4.2 [104], assigning to each event a number of PU events which is drawn from a Poisson distribution with an average value of 140. The average number of PU vertices follows from the peak luminosity of HL-LHC, which is proposed to be \(\sim 5\times 10^{34}\,{\mathrm{cm}}^{-2}{\mathrm{s}}^{-1}\). The vertices follow a two-dimensional Gaussian distribution, with \(z=0\) and \(t=0\) having the maximum probability of having a vertex, with \(\sigma _z= 5.3 \, \text {cm}\) and \(\sigma _t = 160 \, \text {ps}\) respectively. The total spread is 25 cm and 800 ps in the z and t directions, respectively. We use PYTHIA6 to generate the hard process at \(\sqrt{s}=14 \, \text {TeV}\) and Delphes-3.4.2 for detector simulation. The total integrated luminosity used in this work is \(3000 \, {\text {fb}}^{-1}\).
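As a rough illustration of the pile-up configuration described above, the following sketch draws the number of PU vertices from a Poisson distribution with mean 140 and places each vertex in z and t according to independent Gaussians. It is a toy stand-in for, not a reproduction of, the Delphes PileUpMerger. The function names are hypothetical, and no truncation to the quoted total spread is applied.

```python
import random


def poisson_draw(rng, mean):
    """Poisson variate: count exponential arrivals of rate `mean` in [0, 1]."""
    n = 0
    t = rng.expovariate(mean)
    while t < 1.0:
        n += 1
        t += rng.expovariate(mean)
    return n


def draw_pileup_vertices(rng, mean_pu=140.0, sigma_z=5.3, sigma_t=160.0):
    """Return a list of (z [cm], t [ps]) positions for the PU vertices."""
    n_pu = poisson_draw(rng, mean_pu)
    return [(rng.gauss(0.0, sigma_z), rng.gauss(0.0, sigma_t))
            for _ in range(n_pu)]


if __name__ == "__main__":
    rng = random.Random(7)
    vertices = draw_pileup_vertices(rng)
    print(len(vertices), "PU vertices; first:", vertices[0])
```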

As we saw, for the lifetime estimation of any particle we need the information of its decay position in the detector and the boost of the particle, where the latter can be reconstructed from its decay products. The distances are mostly measured with respect to the primary vertex, which is the vertex that has the maximum value of \(\sum _{n_{trk}} p_T^2\) or \(\sum _{n_{trk}} p_T^2/n_{trk}\), where \(n_{trk}\) is the number of tracks starting from that vertex. Then, in processes with LLPs which are not accompanied by many high-energy prompt particles, and in the presence of \(\sim 140\) other such vertices, the chances of misidentifying any PU vertex as being the PV will be higher (for a quantitative discussion cf. Ref. [92]). In such a scenario, the \(d_z\) of the secondary vertex calculated from the reconstructed PV will not be the same as that calculated from the vertex where the LLP is actually produced, which will affect the overall measured LLP decay length (d) in the lab frame. However, in all cases, the transverse distance of the SV (\(d_T\)) remains the same, and therefore, it is more convenient to use \(d_T\) in all further calculations, instead of d.
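The effect of a misidentified primary vertex can be made concrete with a short sketch. It assumes a hypothetical input format, a dictionary mapping a vertex label to the list of track \(p_T\) values attached to it, and it takes \(d_T\) relative to the beam line.

```python
import math


def sum_pt2(track_pts):
    """Sum of squared track transverse momenta for one vertex [GeV^2]."""
    return sum(pt * pt for pt in track_pts)


def select_primary_vertex(vertices):
    """Return the label of the vertex with the largest sum of track pT^2."""
    return max(vertices, key=lambda label: sum_pt2(vertices[label]))


def displacement(sv_xyz, pv_z):
    """Return (d_T, d_z) of a secondary vertex; d_T is taken from the beam line."""
    x, y, z = sv_xyz
    return math.hypot(x, y), z - pv_z


if __name__ == "__main__":
    # Toy event: the hard vertex has few prompt tracks, one PU vertex is harder.
    vertices = {"hard": [3.0, 2.5, 4.0], "pu_1": [1.0] * 10, "pu_2": [6.0, 5.5, 2.0]}
    print(select_primary_vertex(vertices))        # a PU vertex is picked
    sv = (3.0, 4.0, 12.0)                         # secondary vertex [cm]
    print(displacement(sv, pv_z=0.0))             # true production vertex
    print(displacement(sv, pv_z=4.5))             # misidentified PV: d_z shifts
```

In this toy event, only \(d_z\) changes when the wrong vertex is chosen, while \(d_T\) stays the same.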

The high amount of PU at HL-LHC, among other problems, will affect the electron isolation adversely, since a large number of tracks now enter the electron isolation cone. However, for displaced leptons we can restrict ourselves to the tracks which start close to the identified secondary vertex. We understand, then, that using such an isolation technique will not constitute a viable option at the trigger level, notably the L1 trigger. However, since here we are mostly concerned with the offline analysis, where we have fully reconstructed tracks, such an isolation can be applied and is helpful in identifying the displaced leptons even in the high PU environment of the HL-LHC.

Figure 4 (top panel) shows the isolation variable for electrons calculated from Delphes with an isolation cone of 0.3 and the isolation variable calculated using tracks starting within 10 mm of the identified secondary vertex of the electrons. We find that taking only displaced tracks improves the isolation drastically as expected. Also, we can see that for \(\langle \mathrm{PU}\rangle =140\), the RhoCorrected isolation is slightly better than the usual isolation variable, since it has some PU mitigation already present. When only displaced tracks starting near the SV are used for isolation, the amount of PU really does not affect the distributions much. As we can see from the bottom panel of Fig. 4, the \(c\tau \) distribution for the low and high PU scenarios are very similar, with no bias in any preferred direction. Also, since we are using \(d_T\) of the vertex, which remains the same irrespective of whether we identify the LLP production vertex as the PV, it does not depend on whether the added number of PU is high (around 140) or low (close to zero). Therefore, for simplicity, we merge our hard process with low PU.
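A displaced-track isolation of this kind might be sketched as follows. The relative definition (scalar \(p_T\) sum in the cone divided by the electron \(p_T\)), the dictionary layout of the tracks, and the assumption that the electron's own track is not in the list are illustrative choices, not details taken from the analysis.

```python
import math


def delta_r(eta1, phi1, eta2, phi2):
    """Angular distance with the azimuthal difference wrapped to [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)


def relative_isolation(electron, tracks, sv=None, cone=0.3, max_dist_mm=10.0):
    """Sum of track pT in the cone divided by the electron pT.

    If `sv` (x, y, z in mm) is given, only tracks whose origin lies within
    `max_dist_mm` of it are counted; otherwise all tracks in the cone count.
    """
    total = 0.0
    for trk in tracks:
        if delta_r(electron["eta"], electron["phi"], trk["eta"], trk["phi"]) >= cone:
            continue
        if sv is not None and math.dist(trk["origin"], sv) > max_dist_mm:
            continue
        total += trk["pt"]
    return total / electron["pt"]


if __name__ == "__main__":
    electron = {"pt": 40.0, "eta": 0.5, "phi": 1.0}
    sv = (100.0, 0.0, 50.0)
    tracks = [
        {"pt": 5.0, "eta": 0.55, "phi": 1.05, "origin": (0.0, 0.0, 20.0)},    # PU track
        {"pt": 2.0, "eta": 0.45, "phi": 0.95, "origin": (101.0, 0.0, 50.0)},  # near SV
        {"pt": 10.0, "eta": 1.50, "phi": 1.00, "origin": (100.0, 1.0, 50.0)}, # outside cone
    ]
    print(relative_isolation(electron, tracks))          # all tracks in the cone
    print(relative_isolation(electron, tracks, sv=sv))   # displaced tracks only
```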

3.3.3 Systematic uncertainties on the \(d_T\) and \(\beta _T\gamma \) distributions

In this section we study the effect of varying the parton distribution functions (PDF sets) and the scale of the \(2\rightarrow 2\) hard process on the \(d_T\) and \(\beta _T\gamma \) distributions. Since these two are the most important quantities required for the lifetime estimation of an LLP, we want to study the sources of systematic uncertainties and the ensuing uncertainty in their distributions. These effects depend on the decay length and mass of the LLP, and we perform this exercise for the same benchmark points as were chosen in Sect. 3.3.1. The results for an LLP of mass 100 GeV and decay length 10 cm are shown in Fig. 5. Overall, the uncertainties due to the scale variation are slightly greater than those due to PDF variation. However, for both the \(d_T\) and \(\beta _T\gamma \) distributions, the important observation is that changing the PDF set or the scale of the hard interaction does not drastically alter the shape of the distributions. For simplicity, we will demonstrate the impact of such systematic effects on the lifetime estimation by assuming a flat uncertainty of 10% and 20%. However, one might use the uncertainties of different bins for different lifetimes separately if they have been computed beforehand.

Uncertainties in the \(d_T\) (left) and \(\beta _T\gamma \) (right) distributions with variation of PDF (top) and scale for the \(2\rightarrow 2\) hard process (bottom) for an LLP of mass 100 GeV and \(c\tau \) of 10 cm. The error is not more than 0.5% in both the cases, even for varying \(c\tau \) values

3.3.4 Towards more realistic assessments

From the previous discussion we see that it is not possible to reconstruct the LLP lifetime in a fully model-independent manner based only on the measurement of its decay position, its mass, and its boost. The extra cuts (EC) introduce a bias towards smaller lifetimes in the sample because they restrict it to decays within a transverse distance of 30 cm from the beam line and hence reject decays characterised by larger proper lifetimes. We discuss a few approaches that can be envisaged in order to circumvent this problem, which we will further develop in the subsequent sections.

The first approach requires minimal experimental information but incorporates theoretical bias, as it relies on a model-dependent \(\chi ^2\) fitting. If, from experiment, we have the distribution of the transverse decay length \(d_T\) and the mass \(M_{X}\) of the LLP X, then we can simulate the process for that particular mass within the framework of a concrete model. Simulating events for different lifetimes and performing a binned \(\chi ^2\) analysis of the \(d_T\) distribution, we can obtain an estimate for the actual lifetime of X. The results that can be obtained in this way will be presented in Sect. 3.3.5.

The second approach is also based on a \(\chi ^2\) (or likelihood) analysis. In contrast to the previous method it is fairly model-independent, but requires additional experimental information. To be more precise, along with the \(d_T\) distribution, we will assume that we can obtain the transverse boost factor (\(\beta _T\gamma \)) distribution of X from experiment. It is then possible to fit the (normalised) \(\beta _T\gamma \) distribution by an appropriate function and use the latter as a probability density function to generate random numbers. As a second step, random numbers will also be generated for each \(c\tau \) distribution. Then, multiplying the two sets of random numbers leads to the \(d_T\) distribution for that particular lifetime. Based on this, we can vary the lifetime and perform a \(\chi ^2\) analysis comparing the experimental \(d_T\) distribution and the one generated using the procedure we just described to estimate the actual lifetime of X. This method will be discussed in Sect. 3.3.8.

3.3.5 \(\chi ^2\) fitting of \(\beta _T\gamma c\tau \) distribution: model-dependent analysis

Let us start with the model-dependent approach. For the case in which the LLP decays into lepton pairs, the transverse decay length distribution and the LLP mass \(M_X\) can be experimentally measured. Then, within the framework of a concrete model, we can simulate the process assuming different lifetimes and perform a \(\chi ^2\) analysis in a straightforward manner. The minimum of the resulting \(\chi ^2\) distribution provides an estimate for the lifetime of the LLP. Some knowledge about the underlying model is useful because, as already mentioned above, the \(\beta \gamma \) distribution depends on the production mechanism, and the decay length distribution is generated at the Monte-Carlo level by multiplication of the former with the lifetime distribution.

From now on we restrict our analysis to four out of the six benchmarks given in Table 1, which are characterised by mean proper decay lengths of 10 cm or 50 cm. For each configuration, we generate 3000, 300 and 150 events, corresponding to cross-sections of 1 fb, 0.1 fb and 0.05 fb respectively, and apply the EC on the obtained samples. The events passing these cuts constitute the “experimental” \(d_T\) distributions that we wish to fit. To this end, we generate the same process with the LLP mass set equal to the invariant mass of the two final state electrons and varying the lifetime. For each lifetime, we generate 5000 events and then apply the EC. We construct \(d_T\) distributions for LLPs simulated for different lifetimes with the same bin size as the \(d_T\) distribution of the discovered LLP that we obtain from the experiment. Here, we have set the bin size to 1 cm. We then calculate the \(\chi ^2\) value between these two distributions as

\[ \chi ^2 = \sum _{i=1}^{N} \frac{\left( n_{\mathrm{th}} - n_{\mathrm{exp}}\right) ^2}{(b-a)^2} \tag{4} \]

where N is the total number of bins in the distribution, and \(n_{\mathrm{exp}}\) and \(n_{\mathrm{th}}\) are the experimentally observed and theoretically expected number of events in each bin. The expected number of events in each bin (\(n_{\mathrm{th}}\)) is normalized to the total number of events observed in experiment. The denominator is the square of the 68% confidence level uncertainty in the observed number (\(n_{\mathrm{exp}}\)), which is equal to the difference between the \(68\%\) upper (b) and lower (a) limits on the value of \(n_{\mathrm{exp}}\). The latter are given by

where \(F^{-1}_{\chi ^2}\) is the quantile of the \(\chi ^2\) distribution with the number of degrees of freedom for the \(\chi ^2\) distribution given by \(n_d\) [105, Eq. 9.18]. The uncertainty taken here is exact and is equal to \(\sqrt{n}\) for large values of n.
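The ingredients of this \(\chi ^2\) can be sketched without a statistics library. The limits below are obtained by solving the Poisson tail conditions \(P(N\ge n;a)=\alpha \) and \(P(N\le n;b)=\beta \) by bisection, which is equivalent to the standard \(\chi ^2\)-quantile form \(a=\tfrac{1}{2}F^{-1}_{\chi ^2}(\alpha ;2n)\), \(b=\tfrac{1}{2}F^{-1}_{\chi ^2}(1-\beta ;2(n+1))\). The choice \(\alpha =\beta =0.16\) for a central 68% interval is an assumption, as are all function names. Following the text, the per-bin uncertainty passed to the \(\chi ^2\) is built from \(b-a\).

```python
import math


def poisson_cdf(n, mu):
    """P(N <= n) for a Poisson variable with mean mu."""
    if n < 0:
        return 0.0
    if mu <= 0.0:
        return 1.0
    return sum(math.exp(k * math.log(mu) - mu - math.lgamma(k + 1.0))
               for k in range(n + 1))


def _bisect(func, lo, hi, target, increasing, iters=200):
    """Solve func(x) = target for a monotonic func on [lo, hi]."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (func(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def poisson_interval(n_obs, tail=0.16):
    """Central interval (a, b) on the Poisson mean; tail=0.16 is an assumption."""
    if n_obs == 0:
        a = 0.0
    else:
        a = _bisect(lambda mu: 1.0 - poisson_cdf(n_obs - 1, mu),
                    0.0, float(n_obs), tail, True)
    hi = n_obs + 10.0 * math.sqrt(n_obs + 1.0) + 10.0
    b = _bisect(lambda mu: poisson_cdf(n_obs, mu), float(n_obs), hi, tail, False)
    return a, b


def chi2_binned(n_exp, n_th, sigma):
    """Sum over bins of (n_th - n_exp)^2 / sigma^2."""
    return sum((t - e) ** 2 / s ** 2 for e, t, s in zip(n_exp, n_th, sigma))


if __name__ == "__main__":
    observed = [12, 7, 3, 0]
    expected = [10.5, 8.0, 2.5, 0.6]
    sigma = [b - a for a, b in map(poisson_interval, observed)]
    print(chi2_binned(observed, expected, sigma))
```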

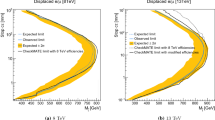

In our simulation, we vary the mean proper decay length between 1 cm and 150 cm with a step size of 1 cm. Figure 6 shows the resulting \(\chi ^2\) values as a function of the decay length for the four cases, with 1 fb cross-section for each. The reconstructed lifetimes, corresponding to the \(\chi ^2\) minimum for all the three assumed cross-sections, are summarized in Table 2 together with the \(1\sigma \) and \(2\sigma \) lower and upper limits on the lifetime in each case (see footnote 9). Here, the number of degrees of freedom (dof) for the \(\chi ^2\) analysis has been taken to be one less than the number of bins over which the sum in Eq. (4) has been carried out (\(N = 30\), \(dof=29\)).
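The scan itself can be illustrated with a toy version of the procedure. In this sketch, a log-normal \(\beta _T\gamma \) distribution stands in for the model-dependent boost spectrum, and the per-bin uncertainty is simplified to \(\sqrt{n_{\mathrm{exp}}}\) (with a floor of one event) instead of the exact Poisson interval. Both are assumptions made only to keep the example short, and the function names are hypothetical.

```python
import random


def dt_histogram(ctau, n_llp, rng, n_bins=30, d_max=30.0):
    """Histogram of d_T [cm] in 1 cm bins after the 0.1 cm < d_T < 30 cm window."""
    counts = [0] * n_bins
    for _ in range(n_llp):
        boost_t = rng.lognormvariate(0.0, 0.5)       # toy beta_T*gamma (assumption)
        d_t = boost_t * rng.expovariate(1.0 / ctau)  # d_T = beta_T*gamma * c*t
        if 0.1 < d_t < d_max:
            counts[min(int(d_t * n_bins / d_max), n_bins - 1)] += 1
    return counts


def chi2_scan(observed, ctau_grid, rng, n_template=5000):
    """Return {ctau: chi2} comparing `observed` with simulated templates."""
    n_obs = sum(observed)
    scan = {}
    for ctau in ctau_grid:
        template = dt_histogram(ctau, n_template, rng)
        scale = n_obs / max(sum(template), 1)        # normalise to the observed yield
        scan[ctau] = sum((t * scale - e) ** 2 / max(e, 1.0)
                         for e, t in zip(observed, template))
    return scan


if __name__ == "__main__":
    rng = random.Random(3)
    observed = dt_histogram(10.0, 3000, rng)         # pseudo-experiment, ctau = 10 cm
    scan = chi2_scan(observed, range(1, 151), rng)
    best = min(scan, key=scan.get)
    print("chi2 minimum at ctau =", best, "cm")
```

Because each template carries its own statistical fluctuations, the location of the minimum is only as precise as the template statistics and the 1 cm step allow.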

We observe that in our best-case scenario (\(\sigma = 1 \, \text {fb}\)) the mean proper lifetime can be reconstructed with a precision of \(\sim 20\%\) for decay lengths of the order of 10 cm, whereas for 50 cm we obtain the lifetime within roughly a factor of 2. Note also that, again, with increasing mass the \(\chi ^2\) minimum becomes more prominent. We find that the 1\(\sigma \) and 2\(\sigma \) lower and upper limits often have the exact same values. This is just due to the fact that the results here have been quoted with a precision of 1 cm, which corresponds to the bin size and scan interval. Even with less statistics, the lower decay length can be reconstructed, although the error band increases, and for the higher decay length benchmark, with 0.1 or 0.05 fb cross-section, only a weak lower bound can be placed on the lifetime. However, from our discussion on the typical allowed cross-sections of LLP processes, we found that the upper limits usually get weaker for LLPs with high \(c\tau \) values and, therefore, our optimistic scenarios are, indeed, viable.

Table 3 shows the effect of adding a flat systematic uncertainty due to the choice of PDF or scale of the hard interaction, on the number of events that is expected in a bin of the \(d_T\) histogram of the benchmark with \(M_X =100 \, \text {GeV}\) and \(c\tau =10 \, \text {cm}\) with cross-sections 1 fb (0.05 fb), as has been discussed in Sect. 3.3.3. We have ignored here the variation of this error with the decay length of the LLP. We observe that even with a systematic uncertainty of 20%, the results are not affected substantially. We have mentioned earlier that the error in identifying the secondary vertex is very small in the current LHC runs, where the PU is low. For HL-LHC, it might be difficult to correctly identify the position of the SV due to the high amount of PU which leads to large track multiplicity. Therefore, we add a 10 mm smearing on the position of the SV to see how it affects the lifetime estimation. Table 4 shows our results for an LLP of mass 100 GeV and decay length 10 cm decaying into electrons and having a cross-section of 1 fb (0.05 fb), whose \(d_T\) position has a Gaussian error of 10 mm. The error band on the estimated lifetime increases by about 10 cm for the low cross-section scenario, and for the higher cross-section, there is no change.

The results shown in Table 2 have been obtained using the same model as the one that was used in order to generate our pseudo-experimental sample. A reasonable question is how these results would change if we assumed the wrong model, such as a different production mode of the LLP X. In this case, we will obtain the wrong lifetime estimate but with comparable error bands. For example, if the actual underlying process was production of X from the decay of an on-shell resonance, and we had assumed non-resonant production, then the \(\chi ^2\) analysis would give minima at a wrong decay length due to differences in the boost factor distributions of the two processes. However, it is possible to identify such resonant LLP production and the mass of the intermediate resonance, if any, from the total invariant mass distribution of the two LLPs’ decay products. Moreover, the spin information of LLP X can also be inferred from the angular distributions of its decay products as discussed in Ref. [106] for sleptons and in Refs. [106,107,108] for the Higgs boson. Hence, it is possible to deduce several key features of the underlying model if we can reconstruct all the decay products of the LLP pair. Even for decays of LLPs involving invisible particles, there are methods to identify the model and its parameters, like the LLP mass, as we will discuss later.

3.3.6 Unbinned and binned likelihood estimators

When the number of observed events is lower, the \(\chi ^2\) analysis becomes less reliable, since the \(\chi ^2\) value as defined in Eq. (4) does not follow the \(\chi ^2\) distribution if the errors cannot be treated as Gaussian. In this case we can envisage other estimators such as the likelihood, the unbinned version of which is defined as:

\[ L_j = \prod _{i=1}^{N_{obs}} f(ct_i;c\tau _j) \tag{6} \]

where \(N_{obs}\) is the total number of observed events and \(f(ct_i;c\tau _j)\) is the probability of observing an LLP with decay length \(ct_{i}\) if the mean decay length is \(c\tau _j\). Therefore, the \(c\tau _j\) value which maximises \(L_j\) will provide an estimate of the lifetime of the LLP. However, one has to correctly identify the probability distribution function \(f(ct_{i};c\tau _j,W)\). If we had no cuts imposed on our sample, this would just be \(f(ct_{i};c\tau _j) \sim \mathrm{exp}(-ct_i/c\tau _j)\). Application of the cuts biases the sample as we have seen in Sect. 3.3.1, and the bias is dependent on \(c\tau _j\) as well as other factors like the mass of the LLP and its production mode, all of which we have denoted by W. The prescription for performing an unbinned likelihood analysis is to first fit the \(d_T\) or \(c\tau \) distributions for different \(c\tau _j\) within a particular model and for the mass of the LLP as obtained from experiment, and find suitable PDFs corresponding to each \(c\tau _j\). If all of them can be fitted well with a single function, say, \(f(ct_i;\vec {\theta })\), where \(\vec {\theta }\) is the set of parameters, then one might try fitting these parameters as functions of \(c\tau _j\), \(\vec {\Theta }(c\tau _j)\). Then Eq. (6) can be written as:

\[ L_j = \prod _{i=1}^{N_{obs}} f(ct_{i};\vec {\Theta }(c\tau _j)) \tag{7} \]

where the functions \(\vec {\Theta }(c\tau _j)\) and \(f(ct_{i};\vec {\Theta }(c\tau _j))\) are predetermined for a given model and LLP mass, and therefore the unbinned maximum likelihood estimator can be used in this case. In order to check the robustness of our estimation against different choices of distribution functions, we fitted 80 different functional forms from the Python fitter package [109], assuming different decay lengths of a 100 GeV LLP, produced as discussed in Sect. 2.2. While performing this exercise we found that for different \(c\tau \) values, different functions were providing the best fit: for example, for \(c\tau = 5 \, \text {cm}\) the \(\beta \) or \(\chi ^2\) functions appear to be optimal, whereas for \(c\tau = 20 \, \text {cm}\) the generalised exponential function (see footnote 10) provides the best fit. It is, therefore, difficult to generalise this procedure for all \(c\tau \) values as well as for LLPs with different mass and production modes.

Since the probability distribution function is unknown, we turn naturally to the binned likelihood analysis, where we can treat the number of events observed in each bin of the \(d_T\) distribution as coming from a Poisson distribution with \(\mu \) being the number of events expected from the theoretical simulation that we perform for different \(c\tau \) values assuming a specific model and mass of LLP. It is defined as:

\[ L = \prod _{i=1}^{N} \frac{n_{\mathrm{th}}^{\,n_{\mathrm{exp}}}\, e^{-n_{\mathrm{th}}}}{n_{\mathrm{exp}}!} \tag{8} \]

where the symbols have the same meaning as described in Sect. 3.3.5. In Eq. (8), we are therefore using N different Poisson PDFs with different expectation values coming from the theoretical simulations. As discussed in the case of unbinned likelihood, the value of \(c\tau \) which maximises L is the estimated lifetime of the LLP. One can also use \(-2\mathrm{ln} L\) as the estimator, and then one has to minimise this quantity. For data following a Gaussian distribution, \(\chi ^2 = -2\mathrm{ln} L\) and \(\Delta \chi ^2 = 2\Delta \mathrm{ln}L= F^{-1}_{\chi ^2 m}(1-\alpha ) \Rightarrow \Delta \mathrm{ln}L = F^{-1}_{\chi ^2 m}(1-\alpha )/2\) [110, Chapter 40], where \(F^{-1}_{\chi ^2 m}\) is the chi-square quantile for m degrees of freedom. We use this to quote the 1\(\sigma \) and 2\(\sigma \) error bands on the lifetime estimated using the \(-\mathrm{ln}L\) method. Figure 7 shows \(-\mathrm{ln} L\) as a function of \(c\tau \) for the benchmark with \(M_X =100 \, \text {GeV}\) and \(c\tau =10 \, \text {cm}\), for two different cross-sections − 1 fb (3000 events) and 0.05 fb (150 events). We find that the results are comparable to the estimates that we get from the \(\chi ^2\) analysis. However, as we discussed earlier, for a smaller number of observed events, the likelihood is probably a more reliable estimator.
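The binned estimator is straightforward to evaluate once the templates are available. The sketch below computes \(-\ln L\) as a sum of Poisson terms and reads an interval off a scan using \(\Delta \ln L = 1/2\), which corresponds to \(1\sigma \) for one fitted parameter (\(m=1\)). The choice of \(m\), the small floor protecting empty template bins, and the function names are assumptions.

```python
import math


def neg_log_likelihood(n_exp, n_th, floor=1e-9):
    """-ln L for independent Poisson bins with expectations n_th."""
    total = 0.0
    for e, t in zip(n_exp, n_th):
        mu = max(t, floor)  # avoid log(0) for empty template bins (assumption)
        total += mu - e * math.log(mu) + math.lgamma(e + 1.0)
    return total


def interval_from_scan(scan, delta=0.5):
    """Return (best, low, high): scan points with -ln L <= minimum + delta."""
    best = min(scan, key=scan.get)
    inside = [ctau for ctau, value in scan.items() if value <= scan[best] + delta]
    return best, min(inside), max(inside)


if __name__ == "__main__":
    observed = [12, 7, 3, 0]
    templates = {                      # toy expectations per ctau [cm]
        8: [14.0, 6.0, 1.8, 0.2],
        10: [11.5, 7.2, 2.9, 0.4],
        12: [9.5, 7.5, 3.8, 1.2],
    }
    scan = {ctau: neg_log_likelihood(observed, n_th)
            for ctau, n_th in templates.items()}
    print(interval_from_scan(scan))            # 1 sigma for m = 1
    print(interval_from_scan(scan, delta=2.0)) # 2 sigma for m = 1
```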

3.3.7 Lifetime estimation using machine learning based regression

Instead of using a \(\chi ^2\) (or likelihood) analysis to estimate the lifetime, one can also employ machine learning-based (ML) regression techniques. To illustrate how these more sophisticated techniques work, we use the DNN based KerasRegressor and the XGBRegressor, both from Scikit-learn [111], to compare their outcome with the one obtained from our \(\chi ^2\) analysis for some of our benchmarks. We use the same format of input as for the model-dependent \(\chi ^2\) discussed above. We train the networks with the simulated \(d_T\) distributions which are generated for a particular LLP mass within a specific model and for \(c\tau \) values between 1 and 150 cm. The inputs are, therefore, the bin entries for 30 bins from 1 to 30 cm of the \(d_T\) distribution normalised to the total number of observed events in the experiment. This set of 150 histograms from simulations is randomly split into training and validation sets, with 100 and 50 \(c\tau \) distributions respectively, and we check that the network is not overfitting. The trained network is then used to predict the decay length of the benchmark points from their \(d_T\) distributions. We have seen in the previous sections that addition of PU does not significantly affect our results for the case of displaced leptons. In order to train ML-based regressors it is better to use a larger sample size in the simulations in order to ensure that the histograms that are used for training are smooth. Since generating the process with PU is both resource and time consuming, we ignore PU for a simple illustration of the ML techniques for lifetime estimation, and generate 50,000 events (100,000 LLPs) to obtain the training data set. The testing is done with LLP samples having a cross-section of 1.67 fb (around 5000 events by the end of HL-LHC).

In the XGBRegressor case, we employ a GradientBoostingRegressor with the following parameters:

n_estimators=2500, max_depth=8, learning_rate=0.1,

min_samples_leaf=5, min_samples_split=5.

Using the quantile regression presented in [112], and taking as an example our benchmark of a 100 GeV LLP with \(c\tau =50\) cm, the reconstructed central value is 63 cm, the \(2\sigma \) lower limit is 47 cm, and the 2\(\sigma \) upper limit is 116 cm from the XGBRegressor. We can, hence, see that the performance is reasonably comparable to the \(\chi ^2\) analysis.
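A regression of this type can be sketched with scikit-learn's `GradientBoostingRegressor`, using the hyperparameters quoted above. Several choices here are assumptions made for illustration: the toy log-normal \(\beta _T\gamma \) spectrum, the unit-area normalisation of the histograms, a reduced default of 300 estimators to keep the run short, and the use of the built-in quantile loss at 2.5% and 97.5% as a stand-in for the quantile regression of Ref. [112].

```python
import numpy as np
from sklearn.ensemble import GradientBoostingRegressor


def dt_template(ctau, n_llp, rng, n_bins=30, d_max=30.0):
    """Unit-normalised d_T histogram (1 cm bins) for a given ctau [cm]."""
    boost_t = rng.lognormal(mean=0.0, sigma=0.5, size=n_llp)  # toy spectrum (assumption)
    d_t = boost_t * rng.exponential(scale=ctau, size=n_llp)
    d_t = d_t[(d_t > 0.1) & (d_t < d_max)]                    # displacement window
    counts, _ = np.histogram(d_t, bins=n_bins, range=(0.0, d_max))
    return counts / max(counts.sum(), 1)


def train_regressors(rng, ctau_values=range(1, 151), n_llp=100_000, n_estimators=300):
    """Train central and quantile regressors; return (models, validation MAE)."""
    x = np.array([dt_template(c, n_llp, rng) for c in ctau_values])
    y = np.array(list(ctau_values), dtype=float)
    order = rng.permutation(len(y))
    train, valid = order[:100], order[100:]                   # 100 / 50 split
    common = dict(n_estimators=n_estimators, max_depth=8, learning_rate=0.1,
                  min_samples_leaf=5, min_samples_split=5)
    models = {
        "central": GradientBoostingRegressor(**common),
        "lower": GradientBoostingRegressor(loss="quantile", alpha=0.025, **common),
        "upper": GradientBoostingRegressor(loss="quantile", alpha=0.975, **common),
    }
    for model in models.values():
        model.fit(x[train], y[train])
    mae = float(np.mean(np.abs(models["central"].predict(x[valid]) - y[valid])))
    return models, mae


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    models, mae = train_regressors(rng)
    test_hist = dt_template(50.0, 5000, rng).reshape(1, -1)   # pseudo-experiment
    print("validation MAE [cm]:", round(mae, 1))
    print({name: float(m.predict(test_hist)[0]) for name, m in models.items()})
```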

For the DNN based regressor (KerasRegressor [113]), we have used two fully connected hidden layers with 30 and 32 nodes respectively, with the ReLU activation function. The loss of the network is taken to be the mean squared error and the Adam optimiser has been used with its default learning rate of 0.001. We find that the lifetime estimates are 10 cm and 57 cm for the LLP benchmarks with \(M_X=100 \, \text {GeV}\) and \(c\tau = 10 \, \text {cm}\) and 50 cm respectively.

In both cases, we should point out that we have performed a naive optimization of the hyperparameters based on trial-and-error and further optimization may be possible. Such a study is, however, clearly beyond the scope of the present work.

The size of the training and validation samples might seem small compared to those used in other problems, like jet tagging. However, even with this smaller training sample, we observed that the ML-based regressors could perform a very good fit, and the predictions are close to the expected results. This is due to the fact that the lifetime depends on very few parameters and, therefore, the regressors can learn the variation in the \(d_T\) distribution with \(c\tau \) even with a smaller sample.

3.3.8 \(\chi ^2\) fitting of \(\beta _T\gamma c\tau \) distribution: model-independent analysis

Let us now turn to our model-independent method which, however, requires certain additional experimental information. As we already mentioned in Sect. 3.3.4, here we will assume that the \(\beta _T \gamma \) and \(d_T\) distributions can be extracted from experiment. The \(\beta _T \gamma \) distribution will be fitted with a suitable function which, if treated as a probability density function, can be used to generate a large number of random values for \(\beta _T\gamma \). In Fig. 8 we show the normalised \(\beta _T\gamma \) distribution we obtain for benchmark \((m_X, c\tau ) = (100~ \text {GeV},10~ \text {cm})\), along with the corresponding fit. Moreover, we employ an additional function of the form \(-c\tau \ln U[r] \), where U[r] generates a random number distributed uniformly between 0 and 1, to generate exponential lifetime distributions with different values for \(c\tau \). Multiplying the two sets of random numbers, we obtain a \(d_T\) distribution for various combinations of \(\beta _T\gamma \) and \(c\tau \). This \(d_T\) distribution can then be used to perform a \(\chi ^2\) analysis similar to the one described in Sect. 3.3.5 and obtain an estimate of the LLP lifetime. In this case, no specific model assumption is needed, since the knowledge of the \(\beta _T\gamma \) distribution encapsulates all the necessary model information. Therefore, this is indeed a model-independent approach, provided that the information on \(\beta _T \gamma \) is experimentally accessible.
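The generation step of this method can be sketched as follows. Instead of fitting an analytic function to the \(\beta _T\gamma \) distribution, the example samples from a histogram of the measured values by inverse-CDF sampling, which is a simpler stand-in. The binning and the function names are assumptions.

```python
import bisect
import math
import random


def build_boost_sampler(values, n_bins=50, lo=0.0, hi=10.0):
    """Return a function drawing beta_T*gamma from a histogram of `values`."""
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for v in values:
        if lo <= v < hi:
            counts[min(int((v - lo) / width), n_bins - 1)] += 1
    cdf, running = [], 0
    for c in counts:
        running += c
        cdf.append(running)                 # cumulative (unnormalised) counts
    total = cdf[-1]

    def sample(rng):
        k = min(bisect.bisect_right(cdf, rng.random() * total), n_bins - 1)
        return lo + (k + rng.random()) * width  # uniform inside the chosen bin

    return sample


def generate_dt(sample_boost, ctau, n, rng):
    """d_T = beta_T*gamma * (-ctau * ln U), with U uniform in (0, 1]."""
    out = []
    for _ in range(n):
        u = 1.0 - rng.random()              # avoids log(0)
        out.append(sample_boost(rng) * (-ctau * math.log(u)))
    return out


if __name__ == "__main__":
    rng = random.Random(11)
    measured = [rng.uniform(1.0, 2.0) for _ in range(5000)]  # toy "measured" boosts
    sampler = build_boost_sampler(measured)
    d_t = generate_dt(sampler, ctau=10.0, n=20000, rng=rng)
    print("mean d_T [cm]:", sum(d_t) / len(d_t))
```

The generated \(d_T\) values would then be histogrammed after the same displacement cuts and compared with the observed distribution for each trial \(c\tau \).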

Figure 9 shows the \(\chi ^2\) distribution obtained through this method as a function of the reconstructed decay length for the same four benchmark configurations as in Sect. 3.3.5, each having 1 fb cross-section. Table 5 shows the reconstructed decay length and the \(1\sigma \) and \(2\sigma \) lower and upper limits for each scenario for all the three assumed cross-sections. Similar to the model-dependent \(\chi ^2\) analysis presented previously, the number of degrees of freedom has been taken to be \(dof = N-1 = 29\).

Our findings show that for LLPs characterized by relatively short lifetimes or heavy LLPs, through this method it is possible to reconstruct the mean decay length with a precision comparable to that obtained when knowledge of the underlying model is assumed. For longer lifetimes and lower masses, and within the lifetime interval that we considered, we could only infer a lower limit from this \(\chi ^2\) analysis, as can be seen in the upper right panel of Fig. 9. Again with increasing mass, the lifetime estimation improves. These results are to be expected: as the mean proper lifetime and/or boost of the LLPs increases, the number of decays occurring within a radial distance of 30 cm from the beam line decreases. This means that our sampling of the \(\beta _T \gamma \) distribution carries larger uncertainties which, in turn, reflect upon our capacity to reconstruct the LLP lifetime. In a sense, a model-dependent fit corresponds to the limit at which the \(\beta _T \gamma \) distribution is known with infinite precision. Note also that the estimated lifetime using this method tends to be on the higher side because the \(\beta _T\gamma \) distribution is affected by the cuts, as shown in Fig. 3, and is biased towards lower values. Consequently, larger \(c\tau \) values are favoured in order to match the experimental \(d_T\) distribution.

Other estimators, like the binned likelihood and ML-based regressors, as discussed for the model-dependent approach, can also be employed in the model-independent case. We do not repeat the analysis here with these other estimators, since the procedure remains similar – in this case, instead of comparing the \(d_T\) distributions from a particular theoretical model with varying lifetimes with the experimentally observed \(d_T\), one would compare the latter with \(d_T\) distributions obtained from the product of experimentally observed \(\beta _T\gamma \) and the exponential \(c\tau \) distributions for various lifetimes.

3.4 Displaced leptons with missing transverse energy

We now consider the 3-body decay of a neutral long-lived particle (LLP) X into two leptons along with an invisible particle Y,

\[ X \rightarrow \ell ^+ \ell ^- + Y. \]

The presence of more than one lepton implies that the position of the secondary vertex (SV) can be identified. But in this class of LLP decays, measuring the \(\beta \gamma \) of X is more challenging since not all the decay products can be reconstructed. Hence, we need a lifetime estimation method which does not rely on the knowledge of the \(\beta \gamma \) information of the LLP. To the best of our knowledge, the only option in order to reconstruct the LLP lifetime in this class of decay modes is a model-dependent analysis.

An additional complication arises due to the lack of knowledge concerning the LLP mass. One possibility is to employ the dilepton invariant mass edge, which is determined by the difference between the mass of the LLP and that of the invisible particle,

\[ m_{\ell \ell }^{\text {edge}} = m_X - m_Y , \]

and to assume that particle Y is massless. We can then indeed estimate the mass of X from the edge of the dilepton invariant mass distribution. After determining the mass, we can follow the same procedure as in the case of displaced leptons.

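The massless invisible particle assumption can be avoided by employing the stransverse mass (MT2) variable to determine the masses of the mother particle as well as of its invisible decay product, as has been shown for the case of a gluino decaying to a neutralino and jets in Ref. [114]. The transverse mass of the decaying particle (the gluino in Ref. [114], the LLP here) is given as

\[ m_T^2 = m_{T,vis}^2 + m_Y^2 + 2\left( \sqrt{m_{T,vis}^2 + |\mathbf{p }^{vis}_T|^2}\, \sqrt{m_Y^2 + |\mathbf{p }^{Y}_T|^2} - \mathbf{p }^{vis}_T \cdot \mathbf{p }^{Y}_T \right) , \]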

where \(m_{T,vis}\) and \(\mathbf{p }^{vis}_T\) are the transverse invariant mass and transverse momentum of the visible system, respectively, while \(m_Y\) and \(\mathbf{p }^Y_T\) are the assumed mass and transverse momentum of the invisible system, respectively. Each event will involve two such LLP decays and the stransverse mass variable (MT2) is defined as

\[ m_{T2} = \min _{\mathbf{p }^{Y(1)}_T + \mathbf{p }^{Y(2)}_T = \mathbf{p }^{miss}_T} \left[ \max \left\{ m_T^{(1)}, m_T^{(2)} \right\} \right] , \]

where the maximum transverse mass of the two LLPs in each event is minimised over all possible values of \(\mathbf{p }^{Y(1)}_T\) and \(\mathbf{p }^{Y(2)}_T\) such that they always satisfy \(\mathbf{p }^{Y(1)}_T + \mathbf{p }^{Y(2)}_T = \mathbf{p }^{miss}_T\). The edge of the stransverse mass distribution (i.e. \(m_{T2}^{max}\)) gives the value of the LLP mass only if the correct mass of the invisible particle is used in Equation (10). Otherwise, \(m_{T2}^{max}\) has a different functional dependence on \(m_Y\) depending on whether the trial value is smaller or greater than the actual invisible particle mass. The two functions, however, intersect at the invisible particle mass. As has been shown in Ref. [114], this feature can be used to deduce the mass of the LLP. In Fig. 10 we show the variation of the maximum stransverse mass with the trial mass of the invisible particle, fitted with two different functions, and how the intersection of these functions can provide an estimate of both the LLP and the invisible particle masses.
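To make the minimisation concrete, the stransverse mass of a single event can be approximated by a brute-force scan over the splitting of the missing transverse momentum. This is a minimal sketch using the standard transverse-mass expression; the function names, the scan range `half_width` (in GeV) and the grid size are hypothetical choices, and dedicated bisection-based MT2 calculators would be far more efficient in practice.

```python
import numpy as np

def transverse_energy(mass, pt):
    """E_T = sqrt(m^2 + |p_T|^2), with pt an array whose last axis is (px, py)."""
    return np.sqrt(mass**2 + np.sum(np.square(pt), axis=-1))

def mt_squared(m_vis, pt_vis, m_y, pt_y):
    """Squared transverse mass of one visible system plus one invisible particle."""
    et_vis = transverse_energy(m_vis, pt_vis)
    et_y = transverse_energy(m_y, pt_y)
    return m_vis**2 + m_y**2 + 2.0 * (et_vis * et_y - np.sum(pt_vis * pt_y, axis=-1))

def mt2_grid(m_vis1, pt_vis1, m_vis2, pt_vis2, pt_miss, m_y_trial,
             half_width=500.0, n=201):
    """Approximate MT2 by scanning p_T^{Y(1)} on an n x n grid.

    p_T^{Y(2)} is fixed by p_T^{Y(1)} + p_T^{Y(2)} = p_T^{miss}.
    Assumption: the true minimum lies inside [-half_width, half_width]^2.
    """
    axis = np.linspace(-half_width, half_width, n)
    px, py = np.meshgrid(axis, axis, indexing="ij")
    pt_y1 = np.stack([px, py], axis=-1)                # shape (n, n, 2)
    pt_y2 = np.asarray(pt_miss, dtype=float) - pt_y1   # momentum conservation
    mt1_sq = mt_squared(m_vis1, np.asarray(pt_vis1, dtype=float), m_y_trial, pt_y1)
    mt2_sq = mt_squared(m_vis2, np.asarray(pt_vis2, dtype=float), m_y_trial, pt_y2)
    # Minimise the larger of the two transverse masses over the grid.
    return float(np.sqrt(np.min(np.maximum(mt1_sq, mt2_sq))))
```

Repeating the calculation over all events for a range of `m_y_trial` values and recording the endpoint of each distribution yields the curve whose kink is used for the mass determination.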

The reconstructed masses are \(483.8\pm 4.57~\text {GeV}\) and \(92.35\pm 8.62~\text {GeV}\) at parton level with 10,000 events for a \(500~\text {GeV}\) LLP decaying into a \(100~\text {GeV}\) invisible particle. Note that here we have performed a very simplistic analysis without considering any detector effects, just to illustrate that it is, indeed, possible to obtain at least a ballpark estimate of the particle masses even for signatures including missing transverse energy (even in the absence of timing). Still, in order to obtain an estimate of the impact of detector effects, we repeat the analysis using Delphes-3.4.2 with the CMS card. In the bottom panel of Fig. 10 we show the reconstruction of both the LLP and invisible particle mass using MT2 with the detector-level missing transverse energy. The reconstructed masses are \(475.97\pm 5.13~\text {GeV}\) and \(86.82\pm 5.13~\text {GeV}\), assuming 1000 events. We find that even with this number of events, this method can provide a reasonable estimate of the two masses involved in the process at the reconstruction level.

With the LLP and invisible masses at hand, and within the framework of a specific model, we can then perform a model-dependent \(\chi ^2\) analysis to reconstruct the LLP lifetime. It should be noted that the same method can also be applied to the case in which the LLP decays to two leptons and an invisible particle through an on-shell intermediate particle. For further details of this analysis the reader is referred to Ref. [114].

In Table 6 we present the reconstructed lifetime values along with their \(1\sigma \) (\(68\%\) CL) and \(2\sigma \) (\(95\%\) CL) lower and upper limits for the GMSB model presented in Sect. 3 for all the benchmarks considered with three different cross-sections. We observe that the true lifetime can be reconstructed with a precision of \(40\%\) (at \(68\%\) CL) for small masses and lifetimes, improving to roughly \(15\%\) for heavier (i.e. less boosted) LLPs. For longer lifetimes the latter number translates to roughly \(40\%\), whereas for a light LLP with a longer lifetime we could only infer a lower limit on \(c\tau \) within the considered interval.
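As an illustration of how lower and upper limits of this kind can be read off a \(\chi ^2\) scan, the sketch below finds the points where the curve rises by a fixed amount above its minimum. The thresholds \(\Delta \chi ^2 = 1\) (\(1\sigma \)) and \(\Delta \chi ^2 = 4\) (\(2\sigma \)) for a single fitted parameter are an assumption of this example, as are the function name and the linear interpolation between scan points; the text does not specify the exact prescription used.

```python
import numpy as np

def chi2_interval(ctau_grid, chi2, delta):
    """Return (best_ctau, lower, upper) where chi2 rises by `delta` above its minimum.

    A limit is None when the curve never reaches the threshold on that side
    within the scanned range, i.e. only a one-sided limit can be quoted.
    """
    ctau_grid = np.asarray(ctau_grid, dtype=float)
    chi2 = np.asarray(chi2, dtype=float)
    i_min = int(np.argmin(chi2))
    rise = chi2 - chi2[i_min]

    def crossing(indices):
        # Walk away from the minimum and interpolate at the first crossing.
        prev = i_min
        for i in indices:
            if rise[i] >= delta:
                t = (delta - rise[prev]) / (rise[i] - rise[prev])
                return ctau_grid[prev] + t * (ctau_grid[i] - ctau_grid[prev])
            prev = i
        return None

    lower = crossing(range(i_min - 1, -1, -1))
    upper = crossing(range(i_min + 1, len(chi2)))
    return ctau_grid[i_min], lower, upper
```

When the \(\chi ^2\) curve flattens out towards large \(c\tau \), the function returns `None` for the upper limit, which corresponds to the situation in which only a lower limit on the lifetime can be inferred within the considered interval.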

3.5 Charged LLP decaying into lepton and invisible particle

The last case we consider is that of a charged LLP decaying into a lepton and an invisible particle inside the tracker. If the mass difference between the charged LLP and the invisible particle is substantial, then the lepton will have sufficient transverse momentum and can be reconstructed, giving rise to a “kinked” track signature. If, on the other hand, the charged LLP and the invisible particle are degenerate in mass, the lepton will be too soft to be reconstructed, leading to a disappearing track in the Tracker. Here we will focus on the former case.

In the busy environment of the LHC, online triggering on a kinked track is challenging [80], especially if the LLP decay occurs towards the outer parts of the Tracker system. However, as stated in Ref. [80], off-line reconstruction of this kink could be attempted, which would then provide the position of the SV with some uncertainty. Moreover, from the track of the charged LLP we can calculate its momentum, while the rate of energy loss due to ionisation (i.e. the LLP’s dE/dx) can be used to estimate its mass. Then, it is – at least in principle – possible to retrieve all the information that is necessary in order to reconstruct the lifetime in a similar manner as we did for displaced leptons, and we can use any of the alternatives to estimate the lifetime.

Note that until now we have not discussed issues related to the efficiency with which displaced objects can be detected. Given the exceptionally challenging nature of kinked tracks, however, it is important to estimate, even in a crude manner, the efficiency of reconstructing such a signature in the first place. To this end, in what follows we will assume that the probability to reconstruct a kinked track can be expressed as the product of three factors. The first is the efficiency to identify the charged LLP track, which is about \(95\%\) if the LLP travels a distance of at least 12 cm before decaying, as shown in Ref. [115]. The second is the efficiency of identifying the (displaced) lepton track, which we take to be identical to that for ordinary displaced leptons and borrow from Ref. [77]. Finally, in order to be able to disentangle the two tracks, we demand that the angular separation (\(\Delta R\)) between the LLP and the lepton be greater than 0.1, so that the kink is prominent.
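The three ingredients above can be combined into a per-event weight along the following lines. This is a rough sketch under explicit assumptions: the LLP track efficiency is set to zero below 12 cm (the text only quotes the value above this distance), the displaced-lepton efficiency of Ref. [77] is not reproduced here and must be supplied by the caller as a function of the decay distance, and all function and parameter names are hypothetical.

```python
import math

def delta_r(eta1, phi1, eta2, phi2):
    """Angular separation sqrt(d_eta^2 + d_phi^2), with d_phi wrapped to [-pi, pi)."""
    dphi = (phi1 - phi2 + math.pi) % (2.0 * math.pi) - math.pi
    return math.hypot(eta1 - eta2, dphi)

def kinked_track_efficiency(decay_distance_cm, llp_eta, llp_phi, lep_eta, lep_phi,
                            lepton_efficiency, llp_track_efficiency=0.95,
                            min_distance_cm=12.0, min_delta_r=0.1):
    """Probability to reconstruct a kinked track for one LLP decay.

    lepton_efficiency: caller-supplied callable mapping the decay distance (cm)
    to the displaced-lepton track efficiency (placeholder for Ref. [77]).
    """
    # Assumption: no LLP track is reconstructed if it decays before 12 cm.
    if decay_distance_cm < min_distance_cm:
        return 0.0
    # The kink must be prominent enough to separate the two tracks.
    if delta_r(llp_eta, llp_phi, lep_eta, lep_phi) <= min_delta_r:
        return 0.0
    return llp_track_efficiency * lepton_efficiency(decay_distance_cm)
```

For example, with a flat hypothetical lepton efficiency of 0.8, a decay at 20 cm with \(\Delta R = 0.3\) between the LLP and the lepton would receive a weight of \(0.95 \times 0.8\).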

3.5.1 Model-dependent \(\chi ^2\) analysis

With the previous remarks in mind, we first perform a model-dependent \(\chi ^2\) analysis. In Table 7 we show the reconstructed decay length values for our four benchmarks along with the \(1\sigma \) and \(2\sigma \) lower and upper limits on the LLP lifetime for each scenario.

We see that a lifetime \(c\tau = 10 \, \text {cm}\) can be reconstructed with a precision of \(\sim 15\%\) (\(68\%\) CL) for a 100 GeV LLP, improving to \(10\%\) for a 1 TeV particle. As expected, the precision decreases as \(c\tau \) increases but, for \(c\tau = 50 \, \text {cm}\), we can still obtain results within a rough factor of 2.

3.5.2 Model-independent \(\chi ^2\) analysis

Let us now move to our model-independent analysis. One important point to note here is that the transverse decay length distribution as obtained from experiment is expected to be biased towards lower values because of the dependence of the displaced lepton track reconstruction efficiency on the decay length – the efficiency decreases as \(d_T\) increases. Since, however, these efficiencies are known, it should be possible to unfold the experimental \(d_T\) distribution accordingly and then compare it with the distributions that we obtain using the product of various \(c\tau \) distributions with the fitted \(\beta \gamma \) distribution. For the unfolding to work in experiment, however, one needs to know very well not only the efficiencies associated with tracking, but also the uncertainties associated with these efficiencies. Quantifying these experimentally is an arduous task, and it will affect the lifetime estimates and the sensitivities. Since we do not know the uncertainties yet, we perform the analysis assuming that the efficiencies are known precisely.
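In its simplest form, the correction described above amounts to dividing the observed \(d_T\) histogram by the known efficiency in each bin. The sketch below shows such a bin-by-bin correction as an illustration only: it neglects bin migration and, as in the text, any uncertainty on the efficiencies themselves, and the function name and the `min_efficiency` cut-off are hypothetical choices.

```python
import numpy as np

def efficiency_correct(counts, efficiency, min_efficiency=0.05):
    """Bin-by-bin efficiency correction of an observed d_T histogram.

    Bins whose efficiency is below `min_efficiency` are returned as NaN, since
    dividing by a very small efficiency would inflate statistical fluctuations.
    The returned errors propagate only the Poisson uncertainty of the raw counts.
    """
    counts = np.asarray(counts, dtype=float)
    efficiency = np.asarray(efficiency, dtype=float)
    usable = efficiency >= min_efficiency
    safe_eff = np.where(usable, efficiency, 1.0)   # avoid division by ~0
    corrected = np.where(usable, counts / safe_eff, np.nan)
    errors = np.where(usable, np.sqrt(counts) / safe_eff, np.nan)
    return corrected, errors
```

The corrected histogram, restricted to the usable bins, can then be compared with the trial distributions in the same way as in the uncorrected case.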

In Table 8 we present the reconstructed lifetime value for each of our benchmarks, along with their \(1\sigma \) (\(68\%\)) and \(2\sigma \) (\(95\%\)) lower and upper limits.

We observe that for all of our benchmarks, it is possible to obtain a reasonable reconstruction of the LLP lifetime, with a precision that is comparable to the one obtained through the model-dependent analysis presented in the previous section.

3.6 Displaced jets

Let us now move to the case of a neutral long-lived particle that decays into two quarks inside the tracker part of the detector. The observed LHC signature in this case consists of displaced jets. Since jets contain numerous charged particles, by extrapolating their tracks, it is possible to obtain the position of the secondary vertex quite accurately [98, 99]. In our analysis, we will assume that the positions of the secondary vertices are known with high precision and we will study the reconstruction of the mother particle’s, i.e. the LLP’s, \(\beta \gamma \) from its decay products.

In the high PU scenario of the HL-LHC, the displaced jets signature will be affected more strongly than final states consisting of displaced leptons. The 140 vertices per bunch crossing can increase the jet multiplicity to very high values, even when a higher \(p_T\) cut is applied, and in this busy environment it is difficult to identify the displaced jets coming from the LLPs. Considering narrow jets reduces the PU contribution and therefore proves useful for identifying the LLP jets, since the latter deposit energy in a smaller physical region, as has been discussed in Ref. [92]. Also, the timing layer proposed for the Phase-II upgrade of CMS (MTD), with a timing resolution of 30 ps, would help bring the effective PU down to the current level of around 30–50 vertices per bunch crossing [74]. Pile-up mitigation techniques, like PUPPI (pileup per particle identification), might also help. However, how these methods perform in the HL-LHC environment and how well one can recover the displaced jets require separate studies, which are clearly beyond the scope of the present work. Here, we present the analysis assuming that complete removal of PU is possible. As discussed in Ref. [116], Delphes does not by default handle displaced objects properly, owing to the absence of three-dimensional detector geometry and segmentation, and would need major modifications. Therefore, we present the analysis at Pythia level here.

As in the displaced lepton case, we use Pythia6 to generate events for pair-production of a long-lived particle X and its eventual decay into quarks. We use the same set of cuts (EC) for the displaced jets as for final states with displaced leptons, since these cuts act on the position of the secondary vertex to ensure that the displaced tracks can be reconstructed with good efficiency, as motivated by the extent of large-area tracking in ATLAS. We discuss the reconstruction of the boost of the LLP from displaced jets and show that the situation is less straightforward than in the case of displaced leptons, due to several complications affecting jet reconstruction. First, the mismatch between the actual energy of the quarks and the one measured from the jet affects the reconstruction of the \(\beta _T\gamma \) distribution of the LLP. Secondly, the reconstruction of jets may become more challenging at the LHC as their displacement increases. Concerning the second issue, since we are restricting ourselves to decays occurring within 30 cm from the beamline, we do not expect much difficulty in reconstructing the displaced jets. However, the measured jet energy can be quite different from that of the initial quark coming from the LLP decay. This may, in particular, affect the model-independent analysis, which crucially depends on the fitting of the transverse boost distribution of the LLP.

3.6.1 Reconstructing \(\beta _T\gamma \) of the LLP from displaced jets