Abstract

This is the third in a series of papers on three-loop computations of renormalization constants for lattice QCD. Our main interest is in results for the regularization defined by the Iwasaki gauge action and \(n_f=4\) Wilson fermions. Our results for quark bilinears renormalized in the RI’-MOM scheme can be compared with non-perturbative results. The latter are available for twisted mass QCD: since the scheme is defined in the chiral limit, the renormalization constants must be the same. We also address more general problems. In particular, we discuss a few methodological issues connected to summing the perturbative series, such as the effectiveness of boosted perturbation theory and the disentanglement of irrelevant and finite-volume contributions. In discussing these issues we consider not only the new results of this paper, but also those for the regularization defined by the tree-level Symanzik improved gauge action and \(n_f=2\) Wilson fermions, which we presented in a recent paper. Finally, we comment on the extent to which the techniques we employ in the NSPT context can provide a fresh look at the lattice version of the RI’-MOM scheme.

1 Introduction

Numerical Stochastic Perturbation Theory (NSPT [1, 2]) can be a powerful tool to address perturbative computations in lattice QCD up to orders that would be impossible to attain with standard diagrammatic approaches. A few years ago the Parma group applied NSPT to obtain three- (and even four-) loop Renormalization Constants (RCs) of finite quark bilinears for the regularization defined by the Wilson gauge action and Wilson fermions [3]. Very recently, [4] provided both finite and logarithmically divergent three-loop RCs for currents in the regularization defined by the tree-level Symanzik improved gauge action and two flavors of Wilson quarks. The inclusion of divergent RCs was made possible by the method first introduced in [5, 6]: when an anomalous dimension is in place, finite-size effects can be important in NSPT computations and have to be carefully taken into account. The main result of the current paper is the computation of quark current RCs in the regularization defined by the Iwasaki gauge action and four flavors of Wilson quarks (quenched computations will be reported as well, to enable a comparison). Preliminary results were quoted in [7]. For a complete discussion of our methodology the reader should refer to [4], which is largely devoted to a detailed discussion of the NSPT approach to the computation of renormalization constants (with main emphasis on the control over finite lattice spacing and finite-volume effects).

Both in the case of the \(n_f=2\) tree-level Symanzik gauge action and in the case of the \(n_f=4\) Iwasaki gauge action, our results can be compared with analogous non-perturbative computations for twisted mass fermions [8, 9] (the renormalization scheme is massless and thus the RCs are the same). In order to do that, perturbative series have to be summed. An important goal of this paper is a discussion of the issues that are related to summing the PT series for lattice QCD.

The overall structure of this paper is as follows:

- Section 2 presents an overview of our methodology. It is mainly intended to allow the reader to go through the paper without having to refer to other sources.

- In Sect. 3 we present our results for \(Z_S, Z_P, Z_V, Z_A\) for the Iwasaki gauge action and \(n_f=0,4\) Wilson fermions.

- In Sect. 4 we address the issue of summing the series; in particular, we deal with the explicit disentanglement of irrelevant (finite \(a\)) contributions, which is possible once finite-volume effects have also been corrected for. We take into account not only the results of the current paper, but also those of [4] (i.e., we compare the two regularizations).

- Section 5 contains a discussion of different ways of summing the series. We discuss the effectiveness of boosted perturbation theory, which turns out to be relevant in particular for the Symanzik action.

- In Sect. 6 we briefly discuss to what extent our approach can contribute to a better overall understanding of the lattice version of the RI’-MOM scheme.

2 Three-loop renormalization constants in NSPT

In this section we provide a brief account of our computational strategy. This is basically a summary of the discussion of [4], to which the interested reader is referred for an in-depth description of our method.

2.1 Three-loop RI’-MOM lattice computations

The lattice is a suitable regulator for the RI’-MOM renormalization scheme [10]. The definition of the latter for quark currents starts from the computation of Green functions on external quark states at fixed momentum \(p\),

The \(G_{\Gamma }(p)\) are then amputated to get vertex functions (\(S(p)\) is the quark propagator)

By projecting on the tree-level structure,

one gets the quantities \(O_\Gamma \) which enter the definition of the currents’ renormalization constants,

By choosing different \(\Gamma \) one obtains the different currents, i.e. the scalar (identity), pseudoscalar (\(\gamma _5\)), vector (\(\gamma _{\mu }\)), and axial (\(\gamma _5\gamma _{\mu }\)) cases. The master formula (Eq. (1)) is defined in terms of the quark field renormalization constant, which in turn reads

We adhere to the standard recipe of getting a mass-independent scheme by defining everything at zero quark mass.
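For orientation, the RI’-MOM conditions just described are often written as follows in a Euclidean convention in which the tree-level inverse propagator is \(S^{-1}(p) = i\,\gamma\cdot p\) (continuum notation). This is a sketch of a common form found in the literature; its normalizations and signs need not coincide with those of Eqs. (1) and (2). Traces run over Dirac and color indices, so that both \(O_\Gamma\) and \(Z_q\) equal 1 at tree level; for \(\Gamma=\gamma_\mu\) and \(\gamma_5\gamma_\mu\) no sum over \(\mu\) is implied.

```latex
\Lambda_\Gamma(p) = S^{-1}(p)\, G_\Gamma(p)\, S^{-1}(p), \qquad
O_\Gamma(p) = \frac{1}{12}\,\mathrm{Tr}\big[\Gamma^{-1}\,\Lambda_\Gamma(p)\big],
\\[4pt]
Z_q(\mu) = \left.\frac{-i}{12\,p^2}\,\mathrm{Tr}\big[(\gamma\cdot p)\,S^{-1}(p)\big]\right|_{p^2=\mu^2},
\qquad
\left. Z_q^{-1}(\mu)\,Z_\Gamma(\mu)\,O_\Gamma(p)\right|_{p^2=\mu^2} = 1
\;\;\Longrightarrow\;\;
Z_\Gamma(\mu) = \left.\frac{Z_q(\mu)}{O_\Gamma(p)}\right|_{p^2=\mu^2}.
```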

A key point of our strategy is to obtain the (divergent) logarithmic contributions to the renormalization constants from continuum computations: NSPT is only in charge of reconstructing the finite parts. The typical renormalization constant we want to compute (in the continuum limit) reads

where the lattice cutoff (\(a\)) is in place and the expansion is in the renormalized coupling. Divergences show up as powers of \({l}= \log (\mu a)^2\). By differentiating Eq. (3) with respect to \({l}\) one obtains the expression for the anomalous dimension,

whose expansion can be read from the continuum computations [11],

This is a scheme-dependent, finite quantity, with no dependence on the regulator left. By requiring that the expression obtained by differentiating Eq. (3) match Eq. (4), we obtain the expressions of all the \(d_n^{(i >0)} \, (n\le 3)\), which are thus given in terms of the \(\gamma _{m\le n}\), the \(d_{m \le n}^{(0)}\) and the coefficients of the \(\beta \)-function; the latter enter because part of the dependence on \(\mu \) in Eq. (4) comes through the coupling \(\alpha (\mu )\).
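The way the log coefficients are fixed can be made explicit at two loops. Under the conventions stated in the code comments (they are assumptions and need not match Eqs. (3) and (4) in signs or normalization), requiring \(d\log Z/d\log\mu^2\) to carry no \(l\) dependence gives \(d_1^{(1)}=\gamma_1\), \(d_2^{(1)}=\gamma_2+d_1^{(0)}(\gamma_1+b_0)\) and \(d_2^{(2)}=\gamma_1(\gamma_1+b_0)/2\). The sketch below checks this numerically by differentiating \(\log Z\) along the renormalization group flow; all numbers are arbitrary and the function names are hypothetical.

```python
import math

# Hypothetical conventions, chosen for illustration (they need not match Eqs. (3)-(4)):
#   Z(a, l) = 1 + a*(d10 + d11*l) + a**2*(d20 + d21*l + d22*l**2),  l = log((mu*a_lat)**2)
#   d log Z / d log(mu^2) = g1*a + g2*a**2 + O(a**3)
#   d a / d log(mu^2)     = -b0*a**2 + O(a**3)
# Matching the l^0 and l^1 terms order by order fixes the log coefficients below.


def log_coefficients(g1, g2, b0, d10):
    """Return (d11, d21, d22) in terms of anomalous dimension, beta function and d10."""
    d11 = g1
    d21 = g2 + d10 * (g1 + b0)
    d22 = g1 * (g1 + b0) / 2.0
    return d11, d21, d22


def log_z(a, l, d10, d20, logs):
    """log Z for the two-loop truncated expansion above."""
    d11, d21, d22 = logs
    return math.log1p(a * (d10 + d11 * l) + a**2 * (d20 + d21 * l + d22 * l**2))


def run(a, t, b0):
    """Exact solution of da/dt = -b0*a**2, with t the shift in log(mu^2)."""
    return a / (1.0 + b0 * a * t)


def gamma_numeric(a, l, b0, d10, d20, logs, h=1e-4):
    """d log Z / d log(mu^2) by a central difference: both l and a move with mu."""
    up = log_z(run(a, h, b0), l + h, d10, d20, logs)
    down = log_z(run(a, -h, b0), l - h, d10, d20, logs)
    return (up - down) / (2.0 * h)


# Arbitrary illustrative numbers (not taken from the paper).
g1, g2, b0, d10, d20 = 0.3, 0.1, 0.7, 0.2, 0.05
logs = log_coefficients(g1, g2, b0, d10)
a = 1e-3
for l in (0.0, 3.0):
    # Up to O(a**3) the result is l-independent and equals g1*a + g2*a**2.
    print(l, gamma_numeric(a, l, b0, d10, d20, logs), g1 * a + g2 * a**2)
```

Changing any of the three log coefficients by hand immediately reintroduces an \(l\) dependence at \(O(a^2)\), which is what makes the matching conditions unique.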

In the above discussion there was no reference to a (covariant) gauge parameter \(\lambda \). This is legitimate, since we compute in Landau gauge, i.e. \(\lambda =0\). In a generic covariant gauge, Eq. (3) depends on \(\lambda \). Moreover, the anomalous dimension of the gauge parameter enters when linking Eq. (3) to Eq. (4). Since the non-trivial dependence on the gauge parameter anomalous dimension is itself proportional to \(\lambda \), all this is immaterial in Landau gauge: if one keeps track of the whole \(\lambda \)-dependence and then sets \(\lambda =0\), one obtains the same result as by ignoring \(\lambda \) from the very beginning.

In our computations the \(Zs\) are expressed as an expansion in the bare lattice coupling \(\alpha _0\),

Equation (5) is obtained from Eq. (3) by plugging into the latter the matching of the renormalized coupling to the lattice bare one.
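Plugging a coupling matching into an expansion, as done to get Eq. (5) from Eq. (3), amounts to composing truncated power series. A minimal sketch, with placeholder coefficients and a hypothetical matching of the form \(\alpha = \alpha_0 + m_1\alpha_0^2 + m_2\alpha_0^3\); at fixed \(l\) the logarithms are simply numbers absorbed into the coefficients of \(Z\). The helper names `mul` and `compose` are illustrative.

```python
def mul(a, b, order):
    """Product of two truncated power series (lists of coefficients), kept up to x**order."""
    out = [0.0] * (order + 1)
    for i, ai in enumerate(a[: order + 1]):
        for j, bj in enumerate(b[: order + 1 - i]):
            out[i + j] += ai * bj
    return out


def compose(z, alpha, order):
    """Coefficients in x of z(alpha(x)), truncated at x**order; alpha has no constant term."""
    if alpha[0] != 0.0:
        raise ValueError("the matching must start at O(x)")
    out = [0.0] * (order + 1)
    power = [1.0] + [0.0] * order  # alpha(x)**0
    for zn in z[: order + 1]:
        for k in range(order + 1):
            out[k] += zn * power[k]
        power = mul(power, alpha, order)
    return out


# Placeholder coefficients (not the paper's): Z = 1 + 2 a + 3 a^2 + 4 a^3 in the
# renormalized coupling a, and a hypothetical matching a = a0 + 0.5 a0^2 + 0.25 a0^3.
z_in_a = [1.0, 2.0, 3.0, 4.0]
a_of_a0 = [0.0, 1.0, 0.5, 0.25]
z_in_a0 = compose(z_in_a, a_of_a0, 3)
print(z_in_a0)  # -> [1.0, 2.0, 4.0, 7.5]
```

Note that the one-loop coefficient is untouched by the matching, while each higher coefficient collects contributions from all lower orders.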

2.2 Two-loop matching of \(\alpha _\mathrm{{IWA}}\) to continuum

The matching of \(\alpha _\mathrm{{IWA}}\) to a continuum coupling is only known to one loop [12]. Since we need a two-loop matching to get Eq. (5), we had to compute it. This was done by first matching \(\alpha _\mathrm{{IWA}}\) to an intermediate scheme, which was chosen to be a potential scheme. The matching of the latter to \(\overline{\mathrm{MS}}\) is well known [13], and the results for the anomalous dimensions we read from [11] are expansions in \(\alpha _{{{\overline{\mathrm{MS}}}}}\). Computing the matching of the potential coupling \(\alpha _\mathrm{{V}}\) to \(\alpha _\mathrm{{IWA}}\) is thus a viable route. Here and in the following our notation makes explicit only the dependence of the scheme on the gluonic action: when we refer to the four-flavor case, the dependence on Wilson fermions is understood as well. The strategy of the computation is that of [14, 15]. The interested reader can find more technical details both in [4] and in [16].

We started from the NSPT computation of Wilson loops \(W(R,T)\), from which we obtained Creutz ratios

A potential for static sources at distance \(r=Ra\) can now be defined, and a coupling can be extracted from it according to

where one can see that, in a lattice regularization, a residual mass \(\delta m\) comes on top of the coupling. Here we need to rely on an approximation, since we cannot compute the limit in Eq. (6). For the same reason, our results are not in the continuum limit. Despite this, we could obtain a reasonable estimate of the matching, which in perturbation theory reads (\(r=Ra\))

where the expansion coefficients are functions of the scale parameters \(\Lambda \) and of the coefficients \(b_i\) of the \(\beta \)-functions

Reconstructing the one-loop result provided a check that the procedure is viable, and at two loops we could finally obtain

where the new piece of information is contained in the quantity \(X\), to which we will refer in what follows.
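On the lattice, Wilson loops carry contributions proportional to the perimeter and constant factors on top of the static potential; the static self-energy appears as a constant shift of the potential, like the \(\delta m\) above. Ratios of Wilson loops are the standard way to cancel such terms. In the toy sketch below the loops are modelled (hypothetically) as exponentials with an area-type term, a perimeter term and a constant, and the Creutz ratio is taken in its standard form, which we assume here (the paper's own definition may differ in detail). The ratio returns \(V(R)-V(R-1)\), with the perimeter term, the constant and the shift all removed. In NSPT the Wilson loops are computed order by order as series in \(\beta^{-1}\), so this only illustrates the cancellation, not the actual analysis.

```python
import math


def toy_wilson_loop(R, T, V, c=0.3, d=0.1):
    """Hypothetical toy model: W = exp(-V(R)*T - c*(R+T) - d), i.e. an 'area' term
    driven by the potential V, a perimeter term c*(R+T) and a constant d."""
    return math.exp(-V(R) * T - c * (R + T) - d)


def creutz_ratio(W, R, T):
    """chi(R,T) = -log[ W(R,T) W(R-1,T-1) / (W(R-1,T) W(R,T-1)) ]."""
    return -math.log(W(R, T) * W(R - 1, T - 1) / (W(R - 1, T) * W(R, T - 1)))


def V(R):
    """Coulomb-like toy potential plus a constant shift playing the role of delta m."""
    return -0.25 / R + 0.4


def W(R, T):
    return toy_wilson_loop(R, T, V)


for R in (2, 3, 4):
    # The perimeter term, the constant d and the shift 0.4 all drop out.
    print(R, creutz_ratio(W, R, 6), V(R) - V(R - 1))
```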

2.3 Three-loop critical mass

Staying at zero quark mass in our three-loop NSPT computation requires knowledge of the Wilson fermion critical mass at two loops, which is well known from the literature [17].

From now on, we switch to \(\beta ^{-1}\) as the expansion parameter for our results. Also we introduce a hat notation to denote dimensionless quantities, e.g. \(\hat{p} = pa\) (if needed, explicit factors of \(a\) will be later singled out).

The critical mass is computed from the inverse quark propagator (\(\hat{m}_W(\hat{p}) = \mathcal{O}(\hat{p}^2)\) is the irrelevant mass term generated at tree level)

More precisely, in the self-energy \(\hat{\Sigma }(\hat{p},\hat{m}_\mathrm{cr},\beta ^{-1})\) we single out the component along the (Dirac space) identity, the one along the gamma matrices, and the irrelevant one along the remaining elements of the Dirac basis

The critical mass can be read from \(\hat{\Sigma }_c\) Footnote 1 at zero momentum

The known one- and two-loop values of the critical mass were inserted (as counterterms): this is enough to have massless quarks at the order we are interested in (three loops). The novel three-loop result for the critical mass is not relevant for the computations at hand: it is simply a byproduct.

Computations were performed on different lattice sizes: \(32^4, 24^4, 20^4, 16^4, 12^4\). The left panel of Fig. 1 shows the three-loop computation of \(\hat{\Sigma }_c\) at different values of momentum on a \(32^4\) lattice, in the \(n_f=4\) case. We are interested in the zero-momentum value, which can be obtained by fitting our observable as an expansion in hypercubic invariants, e.g.

This is a general feature of all our computations. On each lattice size we obtained a different value, and an infinite-volume result could then be obtained by extrapolation. The right panel of Fig. 1 displays results plotted as a function of \(N^{-2}\), which is the power that best fits our data. \(N=L/a\) is the only significant quantity in the NSPT context (no value can be attached to the lattice spacing \(a\)). The infinite-volume extrapolation was first fitted keeping only the single power \(N^{-2}\). We then checked that the central values of fits including further powers were consistent with that result, within its error. This procedure, though limited by the number of available sizes, proved accurate enough, as we could check at one and two loops, where the expected zero value of the critical mass was obtained in the infinite-volume limit. Our final results for the three-loop contribution to the critical mass are \(\hat{m}_\mathrm{cr}^{(3)} = -0.98(1) \;\; (n_f=0)\) and \(\hat{m}_\mathrm{cr}^{(3)} = -0.78(2) \;\; (n_f=4)\).
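A minimal sketch of the leading-power extrapolation just described: an unweighted straight-line fit in \(x = N^{-2}\), whose intercept is the infinite-volume value. The data are synthetic (a hypothetical \(N^{-2}\) effect on top of an arbitrary constant), errors are ignored for brevity, and the `drop_smallest` option of the hypothetical helper `infinite_volume` is only a crude stand-in for the stability checks mentioned above.

```python
def linear_fit(xs, ys):
    """Unweighted least-squares fit y = c0 + c1*x; returns (c0, c1)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    c1 = sxy / sxx
    return my - c1 * mx, c1


def infinite_volume(sizes, values, drop_smallest=0):
    """Extrapolate to N -> infinity assuming values = c0 + c2 / N**2 (leading power only).
    `drop_smallest` removes the smallest lattices, a crude stability check."""
    pairs = sorted(zip(sizes, values))[drop_smallest:]
    xs = [1.0 / n**2 for n, _ in pairs]
    ys = [v for _, v in pairs]
    return linear_fit(xs, ys)[0]


# Synthetic data with a hypothetical N^-2 finite-size effect (not the paper's numbers).
sizes = [12, 16, 20, 24, 32]
values = [-0.9 + 5.0 / n**2 for n in sizes]
print(infinite_volume(sizes, values), infinite_volume(sizes, values, drop_smallest=1))
```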

2.4 Fitting irrelevant and finite-volume effects

In Eq. (1) the currents’ renormalization constants are defined in terms of the quark field renormalization constant. The latter can be computed (see Eq. (2)) from the quark self-energy, more precisely from its component along the gamma matrices, which at any finite value of the lattice spacing reads

Notice the tower of irrelevant contributions on top of the one expected in the continuum limit. All these contributions are contained in the definition of \(\widehat{\Sigma }_{\gamma }(\hat{p}, \nu )\). In the latter one recognizes a dependence on the direction \(\nu \), which enters through the length \(|\hat{p}_{\nu }|\). In the continuum limit this dependence drops out and, once the logarithmic contribution discussed in Sect. 2.1 is subtracted, the finite part of \(Z_q(\mu =p)\) is given by \(\lim _{a\rightarrow 0}\hat{\Sigma }_{\gamma }^{(0)}(\hat{p})\). The fact that the dependence of \(\widehat{\Sigma }_{\gamma }(\hat{p},\nu )\) on \(\nu \) becomes immaterial in the continuum limit should be borne in mind in what follows, e.g. in Eq. (15).

The second ingredient in Eq. (1) is given by the quantities \(O_{\Gamma }\). These have their lattice counterparts

For the vector and axial currents we eliminate dependences on directions like the one we have just discussed in the case of \(\widehat{\Sigma }_{\gamma }(\hat{p},\nu )\), e.g.

The reason for getting rid of this dependence in this case while retaining it in the case of \(\widehat{\Sigma }_{\gamma }(\hat{p},\nu )\) will become clear in a moment.

Our NSPT computations are performed on different, finite lattice sizes \(N=L/a\) (our lattices are always isotropic); therefore finite-size corrections must be expected. On dimensional grounds, they can depend on \(pL\). Since we want to compute the currents’ renormalization constants in both the continuum and the infinite-volume limit, we need to take two limits. This is done in the following form:

Equation (15) is our key formula and deserves a few comments:

- We only compute the finite parts. As made clear in Sect. 2.1, we know all the relevant logarithms entering the quantities we are concerned with. In particular, we can subtract their contribution: this is the meaning of the subscript \(\ldots |_\mathrm{log subtr }\) in the definition of \(\widehat{O}_\Gamma (\hat{p}, pL, \nu )\).

- Since in Eq. (15) we take the limits \(a \rightarrow 0\) and \(L \rightarrow \infty \) and we subtract the logs that come from the anomalous dimension \(\gamma _{O_\Gamma }\), \(\widehat{\Sigma }_{\gamma }(\hat{p}, pL, \nu )\) reconstructs the contribution of \(Z_q\) (and in this limit the dependence on \(\nu \) drops out). Notice that this is true because of the ratio that is taken. In order to determine \(Z_q\) itself one should look at \(\widehat{\Sigma }_{\gamma }(\hat{p}, pL, \nu )\) alone and subtract different logs (i.e., those connected to the quark field anomalous dimension).

- On a fixed lattice volume, the \(a \rightarrow 0\) limit of \(\widehat{O}_\Gamma (\hat{p}, pL,\nu )\) can be evaluated by computing the quantity at different momenta \(\hat{p}\) and fitting the results in terms of hypercubic invariants, e.g. those listed in Eq. (13). The possible terms are dictated by the symmetries of both \(\widehat{\Sigma }_{\gamma }(\hat{p}, pL, \nu )\) and \(\hat{O}_{\Gamma }(\hat{p}, pL)\) (a formal power counting fixes how many terms one should retain).

- We want to account for the limits \(a \rightarrow 0\) and \(L \rightarrow \infty \) simultaneously. This is done by computing \(\widehat{O}_\Gamma (\hat{p}, pL,\nu )\) on different volumes and performing a combined fit. The combined fit is made possible by defining finite-size corrections according to

$$\begin{aligned} \widehat{O}_{\Gamma }(\hat{p}, pL,\nu )&= \widehat{O}_{\Gamma }(\hat{p}, \infty ,\nu ) + \big( \widehat{O}_{\Gamma }(\hat{p}, pL,\nu ) - \widehat{O}_{\Gamma }(\hat{p}, \infty ,\nu )\big) \\&\equiv \widehat{O}_{\Gamma }(\hat{p}, \infty ,\nu ) + \Delta \widehat{O}_{\Gamma }(\hat{p}, pL,\nu ) \\&\simeq \widehat{O}_{\Gamma }(\hat{p}, \infty ,\nu ) + \Delta \widehat{O}_{\Gamma }(pL) \end{aligned} \qquad (16)$$

where the main rationale for the last (approximate) equality is that we neglect corrections on top of corrections. Since \(p_\mu L = {{2\pi n_\mu } \over {L} }L = 2\pi n_\mu \), there is only one finite-size correction for each 4-tuple \(\{n_\mu \,|\,\mu =1,2,3,4\}\), and no functional form has to be inferred for the correction.

All in all, a prototypal fitting form of ours reads

where, to keep things simple, we stopped at a very moderate order in \(a\) (actually lower than what we use in realistic fits). The relevant contribution to the finite part is \(c_1\). We recall once again that this is a combined fit to data taken on different lattice sizes, with the same \(\Delta \widehat{O}_{\Gamma }(pL)\) applying to all the data corresponding to the same momentum 4-tuple \(\{n_1,n_2,n_3,n_4\}\) (which corresponds to different values of the momentum on different lattice sizes). Notice that the inclusion of \(\Delta \widehat{O}_{\Gamma }(pL)\) leaves the fit unconstrained with respect to an overall shift. This is cured by including in the fit a few measurements (typically one to three) taken on the largest lattice in the high-momentum region: these are assumed to be free from finite-size effects and act as normalization points.
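The combined fit of Eq. (17) can be sketched as an ordinary linear least-squares problem. The sketch below is a deliberately simplified toy, not our production fit: it assumes momenta \(\hat{p}_\mu = 2\pi n_\mu/N\) without lattice sine factors, keeps only \(\hat{p}^2\) and \(\hat{p}^4/\hat{p}^2\) as irrelevant terms, ignores the \(\nu\) dependence, and uses synthetic data. It does reproduce the structure described above: one finite-size shift per 4-tuple shared by all lattice sizes, and a few normalization points on the largest lattice where the shift is set to zero, which removes the otherwise unconstrained overall shift. All function names are hypothetical.

```python
import math


def solve(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for k in range(col, n + 1):
                M[r][k] -= f * M[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][k] * x[k] for k in range(r + 1, n))) / M[r][r]
    return x


def least_squares(rows, ys):
    """Minimize |A x - y|^2 via the normal equations (adequate for this small toy)."""
    p = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    aty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(p)]
    return solve(ata, aty)


def invariants(n, N):
    """phat^2 and phat^4/phat^2 for phat_mu = 2*pi*n_mu/N (simplified: no sin, no nu)."""
    ph = [2.0 * math.pi * k / N for k in n]
    p2 = sum(q * q for q in ph)
    return p2, sum(q**4 for q in ph) / p2


def combined_fit(data, norm_tuples):
    """data: (n_tuple, N, value) triples. Hypothetical truncated model:
        value = c1 + c2*phat^2 + c3*phat^4/phat^2 + Delta[n_tuple],
    one finite-size shift per 4-tuple, shared by all N; Delta = 0 for norm_tuples."""
    free = sorted({n for n, _, _ in data if n not in norm_tuples})
    col = {n: 3 + i for i, n in enumerate(free)}
    rows, ys = [], []
    for n, N, value in data:
        p2, r = invariants(n, N)
        row = [1.0, p2, r] + [0.0] * len(free)
        if n in col:
            row[col[n]] = 1.0
        rows.append(row)
        ys.append(value)
    x = least_squares(rows, ys)
    return x[0], x[1], x[2], dict(zip(free, x[3:]))


# Synthetic example: known coefficients and a made-up finite-size shift per tuple.
c_true = (0.8, -0.05, 0.02)
tuples = [(1, 0, 0, 0), (1, 1, 0, 0), (1, 1, 1, 0), (2, 1, 0, 0), (2, 1, 1, 0), (2, 2, 1, 1)]
norm = {(4, 4, 4, 4), (4, 4, 4, 5)}


def model(n, N, delta):
    p2, r = invariants(n, N)
    return c_true[0] + c_true[1] * p2 + c_true[2] * r + delta


def fake_delta(n):
    return 0.1 / sum(k * k for k in n)


data = [(n, N, model(n, N, fake_delta(n))) for n in tuples for N in (16, 32)]
data += [(n, 32, model(n, 32, 0.0)) for n in norm]  # normalization points, largest lattice
c1, c2, c3, deltas = combined_fit(data, norm)
print(c1, c2, c3)
```

Without the normalization rows the design matrix would be rank deficient (the constant column equals the sum of the shift columns), which is the overall-shift ambiguity mentioned in the text.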

Finite-size effects can easily be spotted in the left panel of Fig. 2, where we plot the one-loop \(\widehat{O}_S(\hat{p}, pL, \nu )\) on both \(32^4\) (black symbols) and \(16^4\) (red, filled symbols) lattices, in the \(n_f=4\) case. Data are arranged in families (different symbols): this is a direct consequence of the dependence on \(\nu \) that can be seen e.g. in Eq. (17). There is one family for each length \(|\hat{p}_{\nu }|\). Because of finite-size effects, families do not join smoothly across different lattices. The right panel of Fig. 2 shows how things change once the finite-size corrections \(\Delta \widehat{O}_{S}(pL)\) are taken into account: families do join smoothly. This family mechanism provides a very effective handle to detect finite-size effects, which is the reason for retaining the \(\nu \) dependence in the definition of \(\widehat{O}_{\Gamma }(\hat{p}, pL,\nu )\). To be definite, we retain it in the numerator (i.e., in the contribution connected to \(Z_q\)); keeping it also in the denominator would result in an unwieldy fitting form.

Fig. 2 One-loop (\(n_f\!=4\)) \(\widehat{O}_S(\hat{p}, pL, \nu )\) (see Eq. 15) measured on a \(32^4\) (black, empty) and a \(16^4\) (red, filled) lattice, without (left) and with (right) finite-size corrections. Notice that, in the right panel, the two (black/empty and red/filled) square data points near \((pa)^2=2.5\) fall exactly on top of each other (as they should, if finite-size effects were indeed perfectly removed), so that only one can be seen

3 Results

In Table 1 we give a brief account of our statistics. Computations were performed on five different sizes (\(32^4, 24^4, 20^4, 16^4, 12^4\)) for both \(n_f=0\) and \(n_f=4\), the latter being our main point of interest. While two values of \(n_f\) are not enough to determine the coefficients of the \(n_f\) dependence,Footnote 2 it is interesting to have at least an indication of how sensitive the results are to the number of flavors.

Since NSPT requires the (order by order) integration of the Langevin equation, a finite-order integration scheme for this stochastic differential equation is needed: here we use the Euler scheme. An \(\epsilon \rightarrow 0\) extrapolation (a linear one, in the case at hand) is needed to remove the effects of the finite time step \(\epsilon \). Table 1 lists the statistics collected on each size. Notice that different values of \(\epsilon \) are chosen for \(n_f=0\) and \(n_f=4\) (see the discussion in [2]).
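The need for the \(\epsilon \rightarrow 0\) extrapolation can be seen in a toy model: a single Gaussian variable with action \(S(x)=x^2/2\), evolved with the Euler discretization of the Langevin equation. The stationary variance of the discretized process is \(1/(1-\epsilon/2)\) rather than the exact value 1, an \(O(\epsilon)\) bias, and a linear fit in \(\epsilon\) removes it up to \(O(\epsilon^2)\). This is only an illustration of time-step systematics: the toy is not NSPT, and the \(\epsilon\) values are arbitrary.

```python
def euler_variance(eps):
    """Stationary <x^2> of the Euler-discretised Langevin update
        x -> x - eps * x + sqrt(2 * eps) * eta,   <eta^2> = 1,
    for the toy action S(x) = x**2 / 2. Stationarity gives v = (1 - eps)**2 v + 2 eps,
    so v = 1 / (1 - eps / 2): an O(eps) bias with respect to the exact value 1."""
    return 1.0 / (1.0 - eps / 2.0)


def linear_fit(xs, ys):
    """Unweighted least-squares fit y = c0 + c1*x; returns (c0, c1)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    c1 = sxy / sxx
    return my - c1 * mx, c1


eps_values = [0.005, 0.01, 0.02]
measured = [euler_variance(e) for e in eps_values]
v0, slope = linear_fit(eps_values, measured)
print(measured, v0, slope)  # v0 is within O(eps^2) of the exact value 1
```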

Configurations were saved so that further observables can still be measured on them. The saving frequency was chosen on the basis of a rough analysis of autocorrelations; the analysis of each observable then takes care of residual autocorrelation effects.

In Table 2 we report the coefficients of the three-loop expansion of \(Z_S, Z_P, Z_V\) and \(Z_A\).Footnote 3 The results are reported for both \(n_f=4\) and \(n_f=0\). We remind the reader that the expansion parameter is \(\beta ^{-1}\). We stopped at three loops because this is the order to which the anomalous dimensions are known from [11]. For finite quantities there is in principle no limitation (other than practical ones dictated by statistics). Notice that [18] could now open new opportunities for even higher-order computations.

We also quote the analytic one-loop results [12]: the comparison is a first check of the effectiveness of our method. The reader may notice that the systematic deviations from the analytic results are somewhat smaller here than in [4]. This is because we took the normalization points for finite-size effects at slightly higher values of the momentum (see the discussion after Eq. (17)). To be definite, the normalization points are in the highest \((pa)^2\) region of Fig. 2, which in terms of 4-tuples reads \(\{4,4,4,4\}, \{4,4,4,5\}\); the two choices have been compared and the results found to be equivalent within errors. A more stringent confirmation of our results comes from the fitting of irrelevant contributions. This is a key ingredient of our approach, since fitting irrelevant contributions compliant with lattice symmetries is our handle on the continuum limit. A comparison with the results in [19] made us confident in our results: the leading irrelevant terms that we fitted are consistent with the diagrammatic results.

The errors we quote are dominated by the stability of the fits with respect to changes of fitting ranges, functional forms, and the number of lattice sizes simultaneously taken into account. Notice that the three-loop results for \(Z_S\) and \(Z_P\) have an extra source of error in the uncertainty on the coupling matching parameter \(X\) (see Eq. (9) and the discussion there).

All in all, our new results, i.e. the two- and three-loop contributions to \(Z_S, Z_P, Z_V\) and \(Z_A\) for the Iwasaki action, appear to be quite moderate, in particular at two loops: taking into account the typical values of \(\beta \) relevant to numerical simulations (\(\beta \sim 2\)), three-loop contributions are typically larger than two-loop ones. The coefficients themselves are smaller than those found for the tree-level Symanzik improved action [4], but this is not per se of any significance. First of all, the comparison in magnitude of two- and three-loop coefficients has to be corrected for the different regimes of \(\beta \) one is interested in (there is roughly a factor of 2). Even more important is the weight of two- and three-loop contributions relative to the leading one: what really makes the difference between the two regularizations is the relative weight of the one-loop contributions themselves.

Another general feature that emerges from our computations is that irrelevant corrections from hypercubic invariants which are not \(O(4)\) invariant appear to be in general quite significant for this action. All this is of course a numerical accident, but it is a relevant one when it comes to summing the series and assessing irrelevant effects.

4 Summing the series

We now come to the issue of summing the series. In this and in the following section we will deal not only with the results of this paper, but also with the ones for the regularization defined by the tree-level Symanzik improved gauge action and \(n_f=2\) Wilson fermions, i.e. those of [4].

In both cases one can compare perturbative and non-perturbative results; for the Iwasaki case, the latter can be found in [9]. As already said, [9] deals with the same massless RI’-MOM scheme with twisted mass fermions: results are presented at \(\beta =1.95\) and \(\beta =2.10\).

For \(Z_V\) we obtain

where the first error is the statistical one, while the second is a rough estimate of the truncation effects, which we simply take to be the highest-order contribution. This recipe is the conventional one: we are of course well aware of its roughness, even if applying it at three-loop level is more than what is usually done. On the other hand, we have already made the point that two-loop contributions are indeed small for the Iwasaki case (even smaller for \(n_f=4\) than for \(n_f=0\)). Since the three-loop contribution is thus relatively important, the net effect is a rather large estimate of the truncation errors. The other finite renormalization constant is \(Z_A\), for which we obtain

For \(Z_S\) we get in turn

Finally \(Z_P\) reads

One can directly observe a fair agreement with the results of [9]: basically the errors that result from our procedure make the perturbative and non-perturbative results fully consistent. Actually the (smaller) statistical errors would be enough to obtain a substantial agreement with non-perturbative results in the case of the finite renormalization constants. Notice that non-perturbative results in [9] are presented in two variants, referring to the different prescriptions “M1” and “M2” which the authors discuss. Here it suffices to say that the differences are to be ascribed to different treatments of irrelevant effects (and thus they are systematic effects): in general the two methods differ more for the divergent than for the finite renormalization constants. We will have more to say on irrelevant effects later in this section and then again in Sect. 6.
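For a finite constant such as \(Z_V\) or \(Z_A\), the summation recipe used above can be sketched as follows. The coefficients and errors are placeholders, not the entries of Table 2. Statistical errors are combined in quadrature, i.e. treated as uncorrelated between orders, which is an assumption (different orders are measured on the same configurations); the truncation error is the magnitude of the highest-order term, as stated after the result for \(Z_V\). The helper name `sum_series` is hypothetical.

```python
import math


def sum_series(coeffs, errors, beta):
    """Z = 1 + sum_i c_i * beta**(-i) for i = 1..len(coeffs).
    Returns (value, statistical error, truncation error). Statistical errors are added
    in quadrature (treated as uncorrelated between orders, an assumption); the
    truncation error is the size of the highest-order term."""
    terms = [c * beta ** -(i + 1) for i, c in enumerate(coeffs)]
    stat = math.sqrt(sum((s * beta ** -(i + 1)) ** 2 for i, s in enumerate(errors)))
    return 1.0 + sum(terms), stat, abs(terms[-1])


# Placeholder one-, two-, three-loop coefficients and errors (NOT the values of Table 2).
value, stat, trunc = sum_series([-0.5, -0.2, -0.3], [0.0, 0.01, 0.05], beta=2.10)
print(f"Z = {value:.4f}({stat:.4f})({trunc:.4f})")
```

For \(Z_S\) and \(Z_P\) the same summation applies to the coefficients evaluated at the chosen scale, i.e. with the logarithms included.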

Fig. 3 The quantity \(\sum _{i=1}^3 \beta ^{-i} \, {{1} \over {4} } \sum _{\nu =1}^4 \tilde{O}_V^{(i)}(\hat{p},\nu )\) for the Iwasaki (left; \(\beta =2.10\)) and Symanzik (right; \(\beta =4.05\)) cases. Black points quantify the impact of irrelevant (finite lattice spacing) effects on a determination of \(Z_V\)

We now move to the results for the regularization defined by the tree-level Symanzik improved gauge action and \(n_f=2\) Wilson fermions (i.e. those in [4]). We stress once again that in this case the two- and three-loop coefficients are larger, but this should be corrected by taking into account the regime of \(\beta \) one is interested in (typical values of \(\beta \) for the tree-level Symanzik action are roughly twice those for Iwasaki). Moreover, the convergence properties of the series are dominated by the relative weights of one-loop and higher-order contributions. The results we obtain by summing the series computed in [4] can be compared with the non-perturbative ones in [8]. In this case we make our comparison at the largest value of \(\beta \) discussed in [8] (the reason for this will become clear in a moment). For \(Z_V\) our results sum to

while

Moving to logarithmically divergent renormalization constants, we get

andFootnote 4

Error conventions are the same as before. In this case deviations are manifest, in particular for \(Z_S\) and \(Z_P\). In the end this does not come as a surprise, given the observations we have already made: convergence properties are strongly controlled by the relative weight of one-loop and higher-order contributions. This is the reason for not attempting to sum the series at values of \(\beta \) smaller than the largest one. While there is a tendency towards agreement for finite quantities, for logarithmically divergent constants the perturbative and non-perturbative results are fairly far from each other. This clearly motivates the step forward of summing the series in different couplings, which will be addressed in the following section. Before moving to that issue, we present a first discussion of how we can assess the impact of irrelevant effects once the series is summed.

Fig. 4 On both panels, the red/filled circles mark the quantity \(1+\sum _{i=1}^3 \beta ^{-i} \, \mathring{O}_V^{(i)}(\hat{p})\). The blue/empty circles denote the quantity \(1+\sum _{i=1}^3 \beta ^{-i} \, {{1} \over {4} } \sum _{\nu =1}^4 \, \bar{O}_V^{(i)}(\hat{p},\nu )\) on the left panel, and \(1+\sum _{i=1}^3 \beta ^{-i} \, \bar{O}_V^{(i)}(\hat{p},\nu )\) on the right panel. Data are for the Symanzik action at \(\beta =4.05\)

The results we have just reported hold in the continuum and infinite-volume limits, i.e. they are free from irrelevant and finite-size effects. To be definite, in the prototypal form of Eq. (17) this corresponds to retaining only \(c_1\). On the other hand, to assess the irrelevant effects we can discard the continuum-limit and finite-size contributions. Again, in the prototypal form of Eq. (17) this corresponds to discarding \(c_1\) (the continuum limit result) and \(\Delta \widehat{O}_{\Gamma }(pL)\) (the finite-size effects). This defines a new quantity, which we denote \(\tilde{O}_{\Gamma }(\hat{p}, \nu )\). At the same (very) moderate order as Eq. (17), a prototypal form for this quantity reads

All in all, in \(\tilde{O}_{\Gamma }(\hat{p}, \nu )\) everything depends on (powers of) \(\hat{p}\) and thus does not survive the continuum limit; on the other hand, there is no \(pL\) dependence, because that has been eliminated by subtracting \(\Delta \widehat{O}_{\Gamma }(pL)\). For divergent constants we obviously compute the finite parts only (i.e. these are log-subtracted quantities).

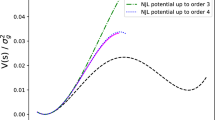

In Fig. 3 we plot the quantity

for the Iwasaki (left panel) and the Symanzik (right panel) case (the values of the coupling are once again \(\beta =2.10\) and \(\beta =4.05\), respectively). These can be regarded as the irrelevant contributions to \(Z_V\) (computed in infinite volume at three-loop accuracy). Notice that on the abscissa we report values of the momentum in dimensionless units (in other words, no value of the lattice spacing is involved). Notice also that in Fig. 3 we average over directions, which is the common practice. When computed in this way, irrelevant effects come out of our fit, which is necessarily an effective one: we have to stop at a given order in the lattice spacing. We stress nevertheless that the fit is performed at fairly high orders (typically \(a^6\)) and at three-loop level.

One can easily see how different the impact of violations of (continuum-like) rotational symmetry is in the two cases. It is true that one often tries to minimize these effects by a convenient choice of the momenta. One should nevertheless keep in mind the trivial observation that the amount of violation is not decided by the choice of momenta: in any case one should try to fit terms compliant with the lattice symmetries.

In Fig. 4 we plot (for the Symanzik case at \(\beta =4.05\)) the observable relevant for computing \(Z_V\) in two further ways. Let us consider once again the prototypal expansion of Eq. (17) and define two other quantities, which at the same (moderate) order read

In both cases we discard the finite-size contributions from the fit results; in the second quantity we also discard what depends on the length \(|\hat{p}_{\nu }|\), i.e. we cut part of the irrelevant effects.Footnote 5

On the left panel of Fig. 4 blue/empty circles denote the quantity

which is averaged over directions. Again on the left panel, red/filled circles denote instead

In the right panel, the red/filled circles are the same as on the left, while the blue/empty circles denote the quantity

for which there is no average over directions, so that families come into play again. There is one subtlety: in the right panel the blue/empty circles do not point in a simple way to the result one is interested in. In other words, when we keep the family structure, only the red/filled circles smoothly guide the eye to the correct extrapolated result.

5 Summing the series in different couplings

The Symanzik case displayed rather poor convergence properties. It is thus the prototypical situation in which one would like to resort to what is generically referred to as boosted perturbation theory [20]. One re-expresses the series as expansions in different couplings, looking of course for better convergence properties than in the original coupling. Often one does this with only a one-loop result available. As discussed in [3], this risks being an empty exercise: at one loop, nothing changes but the value of the coupling itself. The effectiveness of the procedure then relies entirely on choosing the coupling and scale that are really relevant for the computation at hand; this choice has to be so good that one loop essentially captures the complete result. This need not hold true and, strictly speaking, can only be assessed a posteriori. Only with at least a two-loop result available can one inspect how the series actually reshuffles and hope to learn more about its convergence properties. Our question is: can a three-loop computation be reliable enough to provide solid new information?
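The one-loop argument can be made explicit with a short worked example. Suppose, as a purely hypothetical parametrization (not one of the couplings \(x_1, x_2, x_3\) used in this paper), that a new coupling is related to the original one by \(x = x_0 + d_1 x_0^2 + d_2 x_0^3 + \dots\). Inverting gives \(x_0 = x - d_1 x^2 + (2d_1^2 - d_2)x^3 + \dots\), and substituting into \(Z = 1 + z_1 x_0 + z_2 x_0^2 + z_3 x_0^3\) yields

\[
Z = 1 + z_1\, x + (z_2 - d_1 z_1)\, x^2 + \bigl(z_3 - 2 d_1 z_2 + (2 d_1^2 - d_2)\, z_1\bigr)\, x^3 + O(x^4).
\]

The one-loop coefficient \(z_1\) is untouched: at that order only the numerical value of the coupling changes, and the reshuffling appears from two loops on. The Python sketch below performs the same re-expansion with generic truncated power series; all coefficients are made up.

```python
def series_mul(a, b, order):
    """Product of two truncated power series (coefficient lists), kept up to x^order."""
    c = [0.0] * (order + 1)
    for i, ai in enumerate(a[: order + 1]):
        for j, bj in enumerate(b[: order + 1 - i]):
            c[i + j] += ai * bj
    return c


def series_compose(f, g, order):
    """f(g(x)) truncated at x^order; requires g[0] == 0."""
    assert g[0] == 0.0
    result = [0.0] * (order + 1)
    power = [1.0] + [0.0] * order  # g(x)**0
    for fk in f[: order + 1]:
        result = [r + fk * pk for r, pk in zip(result, power)]
        power = series_mul(power, g, order)
    return result


def series_revert(g, order):
    """h such that g(h(x)) = x + O(x^(order+1)); requires g[0] == 0 and g[1] != 0."""
    h = [0.0, 1.0 / g[1]] + [0.0] * (order - 1)
    for k in range(2, order + 1):
        # With h[k] still zero, g(h(x)) has a spurious x^k term: cancel it.
        h[k] = -series_compose(g, h, order)[k] / g[1]
    return h


def reexpand(z, g, order):
    """Coefficients of Z in the new coupling x = g(x0), given Z's coefficients in x0."""
    return series_compose(z, series_revert(g, order), order)


z = [1.0, -0.5, 0.2, 0.1]  # made-up coefficients of Z in x0
g = [0.0, 1.0, 0.8, 0.5]   # made-up relation x = x0 + 0.8 x0^2 + 0.5 x0^3
z_new = reexpand(z, g, 3)  # one-loop coefficient unchanged, higher orders reshuffled
```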

Here we compare results obtained as expansions in the same couplings that were used in [3], i.e.

\(P\) is the basic \(1\times 1\) plaquette, for which we do have an expansion in \(\beta ^{-1}\). In the Symanzik, \(n_f=2\) case, the latter reads

For the Iwasaki, \(n_f=4\) case, we have (see Note 6)

We can thus work out the expansions we are interested in. \(x_2\) and \(x_3\) are quite popular as boosted couplings. In the end, we want to see whether or not the results obtained by summing the series in different couplings all approach the same value. The definition of \(x_1\) can be useful in this respect. Since convergence properties in the Iwasaki computations are fairly good in the original coupling, we will focus on the case of the Symanzik action, looking for better convergence. There is an overall ambiguity we have to live with: we do not have non-perturbative simulations in the same setting we are dealing with (Symanzik action and \(n_f=2\) Wilson fermions). As a consequence, we have no non-perturbative values for the different couplings. What we do have are estimates obtained by summing perturbative expansions of the plaquette (actually even at orders higher than three loops): these are the values we plug in. Alternatively, one could even take, as a first approximation, the values of the plaquette for the different regularization of [3]. This ambiguity is admittedly a limitation. Still, judging from its order of magnitude, the error one can attach to the value of the coupling turns out to be negligible compared with the other errors, typically the truncation errors, which remain the dominant ones. The latter are estimated as before (i.e., as the highest-order contribution) and are the only ones reported in the following. Table 3 summarizes our results.
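The truncation-error convention just described fits in a few lines. The Python sketch below is illustrative only: the coefficients and the coupling value are made up and are not entries of Table 3.

```python
def sum_truncated(coeffs, x):
    """Sum c_0 + c_1 x + ... + c_n x^n and return (value, truncation error).

    The error is the modulus of the highest-order term, i.e. the convention
    of quoting the last computed contribution as the truncation error.
    """
    terms = [c * x ** k for k, c in enumerate(coeffs)]
    return sum(terms), abs(terms[-1])


# Made-up three-loop series evaluated at a made-up coupling value.
value, error = sum_truncated([1.0, -0.5, 0.6, -0.61], 0.1)
```

This estimator does not shrink when the two- and three-loop contributions cancel each other: the sum may then be stable while the quoted error stays as large as the three-loop term, which is the situation met below for the \(x_3\) expansion.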

Let us start from \(Z_V\). Notice that, switching from \(x_0\) to \(x_1\) and then to \(x_2\), the values of the couplings get larger and larger. Results for the \(x_1\) and \(x_2\) expansions are quite close to each other, and both approach the results of [8]. We get even closer when we switch to \(x_3\). While the central value now lies right on top of the non-perturbative result, the error has become rather large. This simply reflects the fact that the series has started oscillating: already at one loop one essentially gets 0.66, and then the two- and three-loop contributions basically cancel each other.

We proceed to \(Z_A\). Once again, the \(x_3\) expansion has already started oscillating. All in all, it is fair to say that the results for the finite constants tend to get closer to the non-perturbative ones. Indeed, \(Z_V\) changes more than \(Z_A\), which is welcome, since the former deviated more than the latter from the non-perturbative results.

We proceed to the logarithmically divergent renormalization constants. If one takes the values of \(Z_S\) and \(Z_P\) after the various boosting procedures and compares them to the results in [8], one can still see quite significant discrepancies. So a sizeable gap remains for the divergent renormalization constants, which is not the case for the finite ones. It could well be that one simply needs more terms to definitively assess the convergence properties, but there is another issue worth considering. We have already noticed that the “M1” and “M2” results in [8] differ much more in the case of \(Z_S\) and \(Z_P\) than in the case of \(Z_A\) and \(Z_V\). One method tries to extract more information from the low-momentum region than the other. More precisely, one method simply subtracts the known leading one-loop \(a^2\) irrelevant effects and looks for a plateau region, while the other tries to fit further irrelevant effects in the low-momentum region. This region is precisely where a subtle interplay of UV and IR effects takes place: on the one hand, higher powers of \(pa\) are suppressed (which helps in assessing irrelevant contributions); on the other hand, this is exactly the region most prone to finite-size (IR) effects.
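A back-of-the-envelope way to see why the two requirements pull in opposite directions: assuming periodic boundary conditions on a lattice of extent \(L\) (in lattice units), an on-axis momentum has \(pa = 2\pi n/L\), so that \(pL = 2\pi n\) independently of \(L\). The modes with the smallest \((pa)^2\) are therefore also those with the smallest \(pL\). The Python lines below tabulate both quantities; \(L=32\) is an arbitrary illustrative value, and \(pL\) is only a kinematic indicator, not a measure of the actual size of finite-volume effects in either computation.

```python
import math


def uv_ir_table(L, n_max):
    """For on-axis modes n = 1..n_max return (n, (pa)^2, pL), with pa = 2*pi*n/L."""
    rows = []
    for n in range(1, n_max + 1):
        pa = 2.0 * math.pi * n / L
        rows.append((n, pa ** 2, pa * L))  # pa * L equals 2*pi*n for any L
    return rows


for n, pa2, pL in uv_ir_table(L=32, n_max=4):
    print(f"n={n}  (pa)^2={pa2:.4f}  pL={pL:.2f}")
```

Doubling \(L\) lowers \((pa)^2\) for a given \(n\) by a factor of four but leaves \(pL\) unchanged, so a larger lattice reaches smaller \(pa\) without pushing the lowest modes to larger \(pL\).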

All in all, boosted perturbation theory apparently solves the problem of the discrepancies between perturbative and non-perturbative results for \(Z_V\) and \(Z_A\). This is encouraging, also because different boosted couplings point to basically consistent results. Discrepancies remain for \(Z_S\) and \(Z_P\). While even higher-order terms may of course be needed, there is another possible explanation. Given the interplay of IR and UV effects, non-perturbative computations could suffer from finite-volume effects. These are not expected to be the same as those we find (and correct for) in our NSPT setting, but they could possibly be assessed: more on this in the following section.

As a final comment, we go back to the Iwasaki case, for which there was basically no compelling reason to resort to boosted couplings (although one could of course do so). We stress that this observation can be made only because we control the series at three-loop level.

6 Some general remarks on lattice RI’-MOM

Our computations teach us something interesting that goes beyond the comparison of perturbative and non-perturbative results.

First of all, we put forward a method to assess the (possible) presence of finite-size effects. In principle, there is no reason not to attempt the same in the non-perturbative case; we are actually working on this [21].

Moreover, the high-loop computations enabled by NSPT can provide a new handle for correcting non-perturbative computations with respect to irrelevant contributions. We briefly sketched this in [22]. Interestingly, another group is working along the same lines [23]. Basically, this amounts to the following simple recipe:

- One needs both a high-order NSPT computation and a standard non-perturbative computation.
- First of all, one should try to assess the possible presence of finite-size effects in both cases. We stress once again that these need not be the same at all. Once assessed, they should be corrected for in both computations.
- Once both results are corrected for (possible) finite-size effects, one can take the irrelevant effects as estimated via the fitting procedure we described (here and) in [4] and subtract them from the non-perturbative data.
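The last step of the recipe above can be summarized in a schematic Python sketch. Everything in it is hypothetical: the class and function names, the form of the loop-by-loop fit functions and the numbers are placeholders. The finite-size corrections of the second step are assumed to have been applied already to both inputs, and the irrelevant effects are assumed to be available as a truncated series in the same coupling used to evaluate them.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

Momentum = Sequence[float]


@dataclass
class IrrelevantFit:
    """Hypothetical container for loop-by-loop fits of irrelevant effects.

    terms[k] gives the fitted irrelevant contribution at (k+1) loops as a
    function of the lattice momentum, already free of finite-size terms.
    """
    terms: Sequence[Callable[[Momentum], float]]

    def evaluate(self, p: Momentum, x: float) -> float:
        """Sum over loops of x^(k+1) * f_k(p) in the expansion coupling x."""
        return sum(x ** (k + 1) * f(p) for k, f in enumerate(self.terms))


def subtract_irrelevant(np_data, fit: IrrelevantFit, x: float):
    """Remove the perturbatively estimated irrelevant effects from NP data.

    np_data: list of (momentum, Z value) pairs, assumed already corrected
    for finite-size effects.
    """
    return [(p, z - fit.evaluate(p, x)) for p, z in np_data]


def p_squared(p: Momentum) -> float:
    return sum(c * c for c in p)


# Made-up one- and two-loop irrelevant terms, both proportional to p^2.
fit = IrrelevantFit(terms=[lambda p: 0.1 * p_squared(p),
                           lambda p: 0.05 * p_squared(p)])
corrected = subtract_irrelevant([((0.5, 0.0, 0.0, 0.0), 0.8)], fit, x=0.1)
```

In practice the fit functions would be expressed in symmetry-compliant invariants and fitted at the orders quoted in the text; the sketch only fixes the bookkeeping of the subtraction.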

Subtracting irrelevant effects is by now common practice. There are many approaches to this, requiring different combinations of perturbative computations and fits of terms compliant with the lattice symmetries; see [24] for a recent contribution. In the end, our proposal is basically yet another variant, whose merits are worth investigating.

7 Conclusions and prospects

This work is a small milestone at the end of a path we set out on a few years ago. The point we wanted to make is that the three-loop computation of renormalization constants for lattice QCD is a realistic goal. In principle, there is no sharp constraint on the order at which finite constants can be computed, while for logarithmically divergent ones the limit is set by the available continuum computations, which in the RI’-MOM scheme reach three loops. These results make it possible to derive the leading logarithmic contributions one has to account for in the lattice regularization of the same RI’-MOM scheme. As for the finite parts, numerical stochastic perturbation theory can do the job. All this is under control because we can assess both finite lattice spacing (UV) and finite-volume (IR) effects.

As a general conclusion, it is fair to say that the NSPT approach to the computation of renormalization constants for lattice QCD can provide at least two valuable contributions. First, it is an approach completely independent of non-perturbative computations, with different systematic effects. Second, NSPT techniques provide a new method to correct non-perturbative computations with respect to irrelevant contributions.

Last but not least, the method we suggested for the correction of finite-size effects could be useful in non-perturbative cases as well.

Notes

1. This is, incidentally, the reason for the subscript \(c\).
2. At two loops one could of course pin down a number, but that would not even have the status of a fit.
3. The reader will notice a few significant corrections with respect to the preliminary results in [7].
4. We regret a typo in the value of \(Z_P\) reported in [4].
5. A more general effect of the recipe for \(\mathring{O}_{\Gamma }(\hat{p})\) can be seen by referring to Eq. (14): there the recipe amounts to singling out the contribution of the \(\hat{\Sigma }_{\gamma }^{(0)}(\hat{p})\) term.
6. By a mere numerical accident, in this case the error on the three-loop coefficient is actually smaller than that on the two-loop coefficient.

References

[1] F. Di Renzo, E. Onofri, G. Marchesini, P. Marenzoni, Four loop result in SU(3) lattice gauge theory by a stochastic method: Lattice correction to the condensate. Nucl. Phys. B 426, 675 (1994)

[2] F. Di Renzo, L. Scorzato, Numerical stochastic perturbation theory for full QCD. JHEP 04, 073 (2004)

[3] F. Di Renzo, V. Miccio, L. Scorzato, C. Torrero, High-loop perturbative renormalization constants for Lattice QCD. I. Finite constants for Wilson quark currents. Eur. Phys. J. C 51, 645 (2007)

[4] M. Brambilla, F. Di Renzo, High-loop perturbative renormalization constants for Lattice QCD (II): three-loop quark currents for tree-level Symanzik improved gauge action and \(n_f=2\) Wilson fermions. Eur. Phys. J. C 73, 2666 (2013)

[5] F. Di Renzo, E.-M. Ilgenfritz, H. Perlt, A. Schiller, C. Torrero, Two-point functions of quenched lattice QCD in numerical stochastic perturbation theory. (I) The ghost propagator in Landau gauge. Nucl. Phys. B 831, 262 (2010)

[6] F. Di Renzo, E.-M. Ilgenfritz, H. Perlt, A. Schiller, C. Torrero, Two-point functions of quenched lattice QCD in numerical stochastic perturbation theory. (II) The gluon propagator in Landau gauge. Nucl. Phys. B 842, 122 (2011)

[7] M. Hasegawa, M. Brambilla, F. Di Renzo, Three loops renormalization constants in numerical stochastic perturbation theory. PoS Lattice 2012, 240 (2012)

[8] M. Constantinou et al. [ETM Collaboration], Non-perturbative renormalization of quark bilinear operators with \(N_f = 2\) (tmQCD) Wilson fermions and the tree-level improved gauge action. JHEP 1008, 068 (2010)

[9] B. Blossier et al. [ETM Collaboration], Renormalisation constants of quark bilinears in lattice QCD with four dynamical Wilson quarks. PoS Lattice 2011, 233 (2011)

[10] G. Martinelli, C. Pittori, C.T. Sachrajda, M. Testa, A. Vladikas, A general method for nonperturbative renormalization of lattice operators. Nucl. Phys. B 445, 81 (1995)

[11] J.A. Gracey, Three loop anomalous dimension of nonsinglet quark currents in the RI-prime scheme. Nucl. Phys. B 662, 247 (2003)

[12] S. Aoki, K.I. Nagai, Y. Taniguchi, A. Ukawa, Perturbative renormalization factors of bilinear quark operators for improved gluon and quark actions in lattice QCD. Phys. Rev. D 58, 074505 (1998)

[13] Y. Schröder, The static potential in QCD to two loops. Phys. Lett. B 447, 321 (1999)

[14] F. Di Renzo, L. Scorzato, The residual mass in lattice heavy quark effective theory to \(\alpha ^3\) order. JHEP 0102, 020 (2001)

[15] F. Di Renzo, L. Scorzato, The \(N_f = 2\) residual mass in perturbative lattice-HQET for an improved determination of \(m_b^{{\overline{\rm MS}}}(m_b^{{\overline{\rm MS}}})\). JHEP 0411, 036 (2004)

[16] M. Brambilla, F. Di Renzo, Matching the lattice coupling to the continuum for the tree level Symanzik improved gauge action. PoS Lattice 2010, 222 (2010)

[17] A. Skouroupathis, M. Constantinou, H. Panagopoulos, Two-loop additive mass renormalization with clover fermions and Symanzik improved gluons. Phys. Rev. D 77, 014513 (2008)

[18] J.A. Gracey, Renormalization group functions of QCD in the minimal MOM scheme. J. Phys. A 46, 225403 (2013)

[19] M. Constantinou, V. Lubicz, H. Panagopoulos, F. Stylianou, \(O(a^2)\) corrections to the one-loop propagator and bilinears of clover fermions with Symanzik improved gluons. JHEP 0910, 064 (2009)

[20] G.P. Lepage, P. Mackenzie, On the viability of lattice perturbation theory. Phys. Rev. D 48, 2250 (1993)

[21] M. Brambilla, G. Burgio, F. Di Renzo (in preparation)

[22] M. Brambilla, F. Di Renzo, Finite size effects in lattice RI-MOM. PoS Lattice 2013, 322 (2013)

[23] J. Simeth, A. Sternbeck, E.-M. Ilgenfritz, H. Perlt, A. Schiller, Discretization errors for the gluon and ghost propagators in Landau gauge using NSPT. PoS Lattice 2013, 459 (2013)

[24] M. Constantinou, M. Costa, M. Göckeler, R. Horsley, H. Panagopoulos, H. Perlt, P.E.L. Rakow, G. Schierholz et al., Perturbatively improving regularization-invariant momentum scheme renormalization constants. Phys. Rev. D 87(9), 096019 (2013)

Acknowledgments

We warmly thank Luigi Scorzato and Christian Torrero, who took part in the long-lasting project of three-loop computation of LQCD renormalization constants and from whom we always received support and useful input. We are very grateful to M. Bonini, V. Lubicz, C. Tarantino, R. Frezzotti, P. Dimopoulos and H. Panagopoulos for all the stimulating discussions we had over the years. M.H. warmly thanks Roberto Alfieri for having introduced him to grid computing. This research is supported by the Research Executive Agency (REA) of the European Union under Grant Agreement No. PITN-GA-2009-238353 (ITN STRONGnet). We acknowledge partial support from both Italian MURST under contract PRIN2009 (20093BMNPR 004) and from I.N.F.N. under i.s. MI11 (now QCDLAT). We are grateful to the Research Center for Nuclear Physics and to the Cybermedia Center in Osaka University for the time that was made available to us on their computing facilities. We also acknowledge computer time on the Tramontana I.N.F.N. facility and on the Aurora system. We thank the AuroraScience Collaboration for the latter.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Funded by SCOAP3 / License Version CC BY 4.0.


Cite this article

Brambilla, M., Di Renzo, F. & Hasegawa, M. High-loop perturbative renormalization constants for Lattice QCD (III): three-loop quark currents for Iwasaki gauge action and \(n_f=4\) Wilson fermions. Eur. Phys. J. C 74, 2944 (2014). https://doi.org/10.1140/epjc/s10052-014-2944-x
