Abstract

Accurate, fast and lightweight dense target detection methods are highly important for precision agriculture. To detect dense apricot flowers using drones, we propose an improved dense target detection method based on YOLOv8, named D-YOLOv8. First, we introduce the Dense Feature Pyramid Networks (D-FPN) to enhance the model’s ability to extract dense features and Dense Attention Layer (DAL) to focus on dense target areas, which enhances the feature extraction ability of dense areas, suppresses features in irrelevant areas, and improves dense target detection accuracy. Finally, RAW data are used to enhance the dataset, which introduces additional original data into RAW images, further enriching the feature input of dense objects. We perform validation on the CARPK challenge dataset and constructed a dataset. The experimental results show that our proposed D-YOLOv8m achieved 98.37% AP, while the model parameters were only 13.2 million. The improved network can effectively support any task of dense target detection.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Introduction

Accurate detection and precise management of dense targets in orchard management face significant challenges, particularly in complex and dynamic environments. Despite the integration of advanced facilities in modern orchards1,2,3, existing approaches still encounter limitations, such as suboptimal detection accuracy with current algorithms and constraints imposed by traditional detection methods. In response to the intricacies of orchard environments, this study proposes a dense apricot flower detection method, D-YOLOv8, designed for unmanned aerial vehicle (UAV) Imagery4. The precise detection of apricot flowers can be applied to areas such as blossom period prediction and yield forecasting, contributing significantly to orchard management.

In academic research, we are adept at using technology to improve agricultural practices and guide development directions. Scholars tend to use deep learning technology and image technology to support the development of smart agriculture5. Agricultural mechanization is the basis for current highly developed agriculture, and the combination of new technologies is considered to be a prerequisite for future agricultural development. The development of agriculture is inseparable from the support of new technologies and the actual agricultural environment. After many years of agricultural benefit development, we have begun to turn our attention to green agriculture, emphasizing its precision and sustainability, which cannot be achieved solely by relying on traditional agricultural technology6. The development and combination of deep learning and computer vision technology will gradually become an important part of future agriculture and will be used to increase researchers’ understanding of crops, accurately predict crop growth, and complete automated precise control. This study is committed to using these new technologies to broaden the research and application fields of precision agriculture.

After rapid development, computer vision has always been a difficulty and challenge in the field of computer research7,8,9,10,11. It was not until the maturity of machine learning theory and computing power that it was rapidly innovated and widely used. Computer vision (CV) research faces complex scenarios and a large number of calculations, so it is often difficult to establish simple models and apply them. The powerful feature extraction capabilities of machine learning are highly suitable for complex CV tasks. With the early achievements of machine learning in CV, researchers have found that using deeper and more parameterized networks in CV can continue to improve performance, which further promotes the maturity of deep learning theory12,13. Imran et al.14 investigated the performance of deep learning methods and traditional methods in precision agriculture and found that deep learning methods performed better and were more robust. This research is based on deep learning technology and uses the more mature end-to-end detection model YOLO as a framework to improve dense target detection. YOLOv8 is a general target detection method that achieves good results on large and medium-scale targets, but does not perform well on dense targets15,16. Chunling et al.17 noted that YOLO has broad application potential for UAV devices; however, the effect of YOLO on small target detection still needs to be improved, because the UAV perspective is dominated by dense small targets. Peng et al.18 used an FPN in an SAR target detection task to improve the detection performance for small targets. Small targets such as ships in SAR images are mainly small targets. Inspired by this literature, D-FPN was introduced in our study to improve the detection performance of dense targets19,20. Wengjing et al.21 used an attention mechanism to improve the performance in building segmentation tasks. The attention mechanism can focus features on the target area while weakening the weight of the background area. Inspired by the attention mechanism’s ability to enhance feature extraction, DAL is proposed in our work to enhance the detection ability of dense features.

For dense target detection, we improved the model. In addition, we also made new attempts at improving the data collection and production methods. The main objective of the AIM 2019 Challenge22 is to map RAW data to RGB images. According to the literature and results, RAW data contain more bit-depth information, and some information is irreversibly lost during the mapping process23,24,25,26,27. Xu et al.28proposed a benchmark model for target detection using RAW data, in which RAW data captured by on-board sensors are used for direct target detection in the field of autonomous driving. The results demonstrated the effectiveness of using RAW data for target detection. Inspired by relevant challenges and the literature, we also introduced the use of RAW data to enhance the dataset in our work to further improve the performance of dense target detection.

Our work uses new technologies to solve practical problems in precision agriculture, including the latest deep learning detection models and RAW data processing methods in CV. The main contribution of this work is to propose a target detection method named D-YOLOv8, in which the feature extraction network and detection method are improved for dense targets. Another major contribution of this work is the introduction of RAW data for enhanced dense object detection, which is a new exploration in the field of visual detection. Our main contributions can be summarized as follows:

-

The D-FPN model is introduced into the model. This module directly extracts dense features from the shallow backbone network layer and uses simple and efficient structural filtering to extract effective features, which enhances the ability to extract dense features.

-

The DAL model is also introduced in the model, which further enhances the ability to extract dense features by focusing attention on dense areas from the spatial and channel directions.

-

During the data enhancement process, we pioneered the use of RAW data to construct a dense target detection dataset, which effectively enhanced the detection performance of dense targets.

The rest of the paper is structured as follows: the “Methods” section contributes the methods used in our main work; the “Dataset” section introduces the datasets we use; the Experiences section introduces the methods and environment of our experiments; and the “Results” section details the results and comparisons of the experiments we introduced. The “Discussion” section discusses the results, limitations and future directions of our research; and the “Conclusion” section summarizes the contributions of our work

Methods

YOLOv8 is a representative algorithm in single-stage object detection and is considered to be at the forefront of technology. Moreover, the proposed method has achieved significant improvements in detection performance and speed through critical enhancements. YOLOv8 inherits and combines the strengths and characteristics of previous YOLO versions, resulting in substantial progress in both detection performance and speed29. The objective of this research is to enhance the ablity of the YOLOv8 framework to address dense apricot flowers detection from a drone’s perspective, aiming to improve detection accuracy while also optimizing the model for efficiency.

D-YOLOv8 framework

While YOLOv8 is a representative algorithm in the YOLO series, delivering remarkable performance and speed in the field of object detection, the original YOLOv8 model is a general-purpose detection algorithm that has not been specifically optimized for particular scenarios30. Therefore, in this study, we introduce Dense-YOLOv8, abbreviated D-YOLOv8, to address the challenge of dense apricot flower detection from a drone’s perspective. The model architecture of D-YOLOv8 is illustrated in Fig. 1.

Given that flowers are often densely distributed as small objects from a drone’s perspective, the D-YOLOv8 model incorporates a series of optimizations. First, the Dense-FPN module is used to extract dense features from the backbone network’s shallow layers. Second, it efficiently extracts features and employs them for object detection through the DAL module. These improvements not only reduce the model’s structure but also increase the training speed while improving the real-time performance. Consequently, the D-YOLOv8 model is better suited for lightweight drone platforms, opening up new possibilities in the field of drone applications.

The D-YOLOv8 model employs a lightweight backbone network to extract multilevel features, catering to the demands of dense object detection. To further enhance the detection efficiency and accuracy, the model introduces the D-FPN module, which extracts shallow-level features and integrates multilevel positional information31. This improvement helps the model better understand the position and spatial distribution of dense objects32. Additionally, D-YOLOv8 introduces a dense attention layer, which applies attention weights more effectively to dense regions, further improving the efficiency of dense object detection. The features fused through the dense attention layer are fed into the detection head to obtain the final detection results.

During the training phase of the D-YOLOv8 model, the task of the detection head is to match the model’s outputs with ground truth values for loss calculation. To achieve this objective, D-YOLOv8 employs the matching strategy of a Task-Aligned Assigner33, as shown in Eq. 1. The Task-Aligned Assigner’s matching strategy multiplies the CIoU (complete intersection over union) and the prediction score to obtain a metric that measures the degree of alignment between the predictions and the ground truth. Specifically, the model’s generated predictions are first matched with the ground truth. This is done by calculating the “align metric,” which is obtained by multiplying the CIoU and the prediction score. Subsequently, the samples are ranked based on this metric, and the top K samples are selected.

where \(\alpha \) and \(\beta \) are hyperparameters for weight assignment.

During model training, selected samples are used to calculate the loss, where BCE (binary cross-entropy) is employed to compute the classification loss. This involves calculating the prediction probabilities and real label probabilities for sample classification, as shown in Eq. 2. The BCE loss formula quantifies the disparity between the model’s predicted classification probability \(P_c\) and the actual label classification probability \(Y_{c}\). Its objective is to minimize the loss function, thus achieving a better fit to the training data.

The calculation of the positional loss is relatively complex. Initially, the intersection over union (IoU) between the predicted and actual results is computed. Subsequently, the CIoU loss is computed, as illustrated in Eq. 3. The CIoU takes into account geometric information to a greater extent in the context of object detection.

where c and d represent the center position and diagonal of two bounding boxes, respectively. v serves as a penalty term to account for the orientation differences between the two bounding boxes. \(\lambda \) functions as an adjustable parameter to balance the influence of IoU and orientation differences. \(\epsilon \) is used to prevent division by zero in the denominator.

The distribution focal loss (DFL) is a novel localization loss function used to construct an anchor-free detection head, addressing the issue of class imbalance in positive and negative samples while improving model accuracy34. When calculating the detection boxes, DFL optimizes the probabilities of predicting the positions closest to the ground truth (GT) on both the left and right sides, allowing the network to quickly focus on the distribution in the vicinity of the object position, as shown in Eq. 4. This design is no longer constrained by Anchor-Boxes, enabling the prediction of more and denser objects.

in the equation, \(P_i\) and \(Y_i\) represent the predicted and ground truth distributions of the left and right bounding boxes, respectively. \(w_L\) and \(w_R\) represent the widths of the left and right bounding boxes, respectively. \(\text {CE}(P_{i,k}, Y_{i,k})\) denotes the cross-entropy loss used to compare the position distributions. \(\gamma \) and \(\delta \) are tuning parameters used to balance the left and right bounding box losses, respectively. N represents the number of samples.

In D-YOLOv8, to further improve detection accuracy, a combination of CIoU and DFL with appropriate weights is used to form the Bbox loss, while the Cls loss is constructed using BCE, as shown in Eq. 5.

where \(\tau \), \(\mu \), and \(\omega \) are hyperparameters representing the weights of each loss component. In this study, the default values are set to 7.5, 0.5, and 1.5.

The introduction of DFL into the D-YOLOv8 model has shown higher accuracy and performance in object detection tasks, especially in drone-based object detection tasks in complex scenarios with dense object distributions. The incorporation of DFL not only is significant for the field of object detection but also provides novel insights and methods for future research and applications in related domains.

The detection head calculates the position and classification of the detection results through nonmaximum suppression (NMS), yielding the final detection results, as illustrated in Fig. 235. The visualization of the detection results is shown. The D-YOLOv8m model, trained on the constructed dataset, was used for prediction on test images, and the detection results were annotated with green bounding boxes and confidence scores. To further emphasize the phenotypic characteristics of apricot flowers, a subset of blossoms is highlighted, allowing for a more detailed observation of the phenological stages of the apricot flowers. The visualizations demonstrated that the proposed D-YOLOv8 model performed well in detecting dense apricot flowers and correctly identified the majority of apricot flower targets.

As shown in Fig. 3, the flowchart of the D-YOLOv8 model illustrates the training and deployment process. Like other deep learning models, the D-YOLOv8 model needs to be trained on a high-performance GPU-equipped workstation until it converges36. Subsequently, the trained model can be applied to UAV detection devices for the purpose of detecting apricot flowers. Through this process, we can comprehensively validate the proposed object detection algorithm for apricot flowers from the perspective of UAVs and establish a theoretical and practical foundation for its application in other special environments that require the use of UAVs for research. This has significant implications for the advancement of UAV technology in fields such as agriculture and environmental monitoring.

Original YOLOv8

The design of YOLOv8 draws inspiration from YOLOv7 ELAN’s backbone network and neck section, while also taking cues from YOLOv5’s C3 structure, which has been replaced with a more feature-rich C2f structure37. Additionally, channel scaling has been adjusted based on different scenario applications38,39. This structural enhancement, as illustrated in Fig. 1, enables the model to better adapt to various scenarios, thereby improving its performance and versatility.

In addition, the head layer of YOLOv8 adopts the prevalent decoupled head structure, which is used for classification and detection tasks separately. It also employs an anchor-free detection head, eliminating the reliance on anchor boxes40. The structure is illustrated in Fig. 1.

Other algorithms in the YOLO series, such as YOLOv3, YOLOv5, and YOLOv7, have also achieved research outcomes and applications in various fields41,42. With the advancement of deep learning theories, more researchers are directing their focus towards increasingly complex scenarios and tasks. This trend has further propelled the integration of designed algorithms with specific application scenarios43,44,45,46. In many cases, algorithmic improvements are targeted not only towards accuracy and performance but also towards addressing specific tasks, such as dense object detection in our study.

As a novel object detection algorithm within the YOLO architecture, YOLOv8 enhances its network structure, loss functions, and data training processes, simultaneously improving detection accuracy and performance while maintaining a lightweight model. However, YOLOv8 was initially designed as a general-purpose object detection method, primarily focused on medium to large-sized objects, and may not retain high accuracy and performance in dense small object detection47. This is particularly critical in the domain of object detection based on UAVs, where detected objects often manifest as densely distributed small entities17. Therefore, our study proposes improvements specifically tailored to the frequent occurrence of dense small objects in UAV scenarios.

Dense feature pyramid networks

Deeper networks have the capability to extract higher-dimensional features but may also introduce issues such as feature loss and model overfitting48. To address the challenge of dense small object detection, we propose the D-FPN module, which is designed to extract sufficient features even from shallow layers. This module directly captures shallow-layer features and, through a combination of downsampling, upsampling, and convolution operations, connects them to the original features. The structure of this module is illustrated in Fig. 4.

The feature extraction blocks extract effective dense features through a series of downsampling and upsampling steps. During this process, downsampling helps filter out noise and irrelevant information from shallow features, while upsampling contributes to preserving overall detailed features. Taking the features extracted from the backbone network, P2 (size \(200 \times 200 \times 128\)) and P3 (size \(100 \times 100 \times 256\)), as examples, after multiple downsampling and upsampling operations, \(P2_{output}\) (size \(200 \times 200 \times 128\)) and \(P3_{output}\) (size \(100 \times 100 \times 256\)) are obtained as shown in Eq. 6.

Furthermore, we introduce the spatial pyramid pooling fusion (SPPF) module to extract features at different scales. This module takes the high-level features, denoted as \(SPPF_{input}\), extracted by the backbone network as input. It then connects the input feature with the features pooled at each layer through three layers of max-pooling, as calculated in Eq. 7. Max-pooling helps extract features at different scales, thereby enhancing the network’s multiscale perception capability.

Finally, spatial features are propagated to shallower layers through deconvolution and elementwise addition, enhancing the utilization of deep-layer features. \(SPPF_{output}\) is deconvolved to the same size as \(P3_{output}\) and then fused with shallow-layer features to obtain P3F (\(100 \times 100 \times 256\)), as calculated in Eq. 8. Furthermore, the fusion of P3F and \(P2_{output}\) results in P2F (\(200 \times 200 \times 128\)), as shown in Eq. 8. This approach of deconvolution and elementwise addition effectively facilitates the integration of spatial features from deep layers to shallow layers, thereby enhancing the ability of shallow layers to extract features related to dense objects.

where \(\circledast \) denotes elementwise summation.

The proposed D-FPN module achieves effective extraction and fusion of features related to dense small objects through multiple layers of operation. First, the feature extraction block of D-FPN extracts more dense features in the shallow layers, providing richer information about small objects. Second, the SPPF module extracts features at different scales, enhances multiscale perception and effectively fuses high-level spatial features. The introduction of the D-FPN module effectively addresses the challenges of feature extraction and fusion for dense small objects, improving the detection performance of the network, and enhancing the model’s accuracy and robustness. This is particularly significant for tasks involving dense small objects, such as drone-based object detection.

Dense attention layer

To further enhance the model’s ability to detect dense small objects, this study introduces a dense attention layer (DAL) block, inspired by the use of attention mechanisms in deep learning49,50. Attention mechanisms enable the model to focus its attention on relevant objects, allowing it to ignore irrelevant features, which benefits the model’s object detection capabilities51,52. The DAL block takes the features extracted by the D-FPN as input, employs an attention mechanism to extract attention weights, and applies these weights to the input features to obtain the final detection features, as illustrated in Fig. 5.

The proposed DAL block is a novel dense attention layer that enhances the advantages of various methods through the extraction and fusion of multiple attention features. The design of DAL aims to fully leverage different types of attention mechanisms to improve feature representation. First, we apply average pooling and max pooling operations with kernel size \(1 \times 1 \times C\) (where C is the number of channels) to the input feature D. These two pooling operations produce channelwise attention features, AvgPool(D) and MaxPool(D). Next, we elementwise multiply these two extracted features with the input feature F to obtain a new feature D1, as shown in the following equation (see Eq. 9). By fusing the channelwise attention features extracted by the pooling operations with the input feature, we enhance the expressive power of the input feature. The effectiveness of this strategy has been validated in experiments, as it fully harnesses the advantages of average pooling and max pooling, providing DAL with a significant accuracy advantage28,53.

where \(\odot \) denotes elementwise multiplication and \(+\) represents channelwise summation.

The adjusted feature F1, which has undergone channel attention, is used as the input. To further fuse features and focus more attention on spatial positions, we introduce spatialwise stochastic pooling and max pooling. Stochastic pooling is a pooling method in which values within the pooling kernel region are randomly selected to compute the maximum value. Compared to traditional max pooling, this stochastic pooling method increases the model’s robustness, especially when there may be noise interference in shallow features. For objects in dense areas, using a stochastic approach can also lead to the selection of certain features, thus reducing the interference of noisy features.

We combine both stochastic max pooling and max pooling in the spatial direction, using a kernel of size \(H \times W \times 1\), and then multiply the obtained weights with the elements of D1 in the spatial direction to obtain D2, as specified in the following equation (see Eq. 10). By combining stochastic max pooling with max pooling, we can further enhance features in the spatial direction and better handle noise interference.

where \(+\) represents the spatialwise summation.

After channel attention and spatial attention correction, the corrected weight features are fused with the input features from the feature extractor, as shown in Eq. 11. This fusion process introduces more attention information into the features, which aids in extracting more effective objects within dense features. Additionally, the attention information helps concentrate the features, aligning with the characteristics of dense object detection in UAV applications.

In the field of deep learning, attention mechanisms play a crucial role by allowing models to intelligently focus more computational resources on critical regions of data, significantly enhancing a model’s performance in object areas. In this context, we introduce the DAL module, which aims to concentrate on meaningful features in both channel and spatial dimensions to highlight essential features while suppressing irrelevant features54. This process helps ensure that feature information can be efficiently and effectively propagated throughout the neural network. The DAL module is designed as a lightweight, versatile module that can be easily integrated into deep neural network architectures, such as YOLOv8, for end-to-end training55. By introducing the DAL module, the model can intelligently select features to focus on, thereby improving not only the model’s performance but also its robustness. This module has a critical role in various computer vision tasks, helping the model better understand and utilize information within the data. It can be applied not only for dense detection from a UAV perspective but also for other dense detection tasks.

Raw imagex

We conducted a comprehensive investigation of current mainstream open-source datasets and found that they all store images in a compressed format, such as the Joint Photographic Experts Group (JPG). Furthermore, many commonly used image enhancement techniques are applied to these compressed image formats. The JPG format employs lossy compression algorithms, which means that some level of detail and information is lost during the image compression process, resulting in a reduction in image quality. While this lossy compression algorithm has distinct advantages in certain applications, such as capturing images on mobile devices and sharing on social media, it may not be rigorous enough for scientific research, especially in applications where high image detail is needed.

In scientific research, precise analysis and processing of images are essential, and lossy compression algorithms may lead to the loss of critical detail, potentially affecting the accuracy of the research results. To address this issue, researchers and scientists have increasingly turned to lossless image enhancement methods, as mentioned in this study, which involve the use of RAW images for lossless enhancement. Lossless image enhancement methods allow for the preservation of more detail and information without compression or loss, thereby improving image quality and fidelity. This approach is of paramount importance in scientific research, because it ensures that researchers can accurately analyze and process image data without being compromised by compression losses56.

A RAW image refers to unprocessed and uncompressed raw data directly captured from a CMOS sensor57. Each pixel in an RAW image represents the luminance value range of one of the RGB color channels, based on the structure of the CMOS sensor. In this study, RAW images from both the DJI Zenmuse P1 and Canon 80D cameras were used, with each color channel having a luminance range of 14 bits, equivalent to \(2^{14} = 16,384\) different luminance levels. When converting RAW images into commonly used RGB three-channel color images, it is necessary to reconstruct the RGB values for each pixel by computing the single-color channel values at the original positions and the values of adjacent color channels. It is important to note that the layout of CMOS sensors may vary between manufacturers, with one common layout being the Bayer pattern layout, where color channels are arranged according to Eq. 12.

in this layout, the four letters represent the order of color channels in a 2 \(\times \) 2 grid pattern, with “R” for red, “G” for green, and “B” for blue.

Comparison of exposure adjustments between JPG and RAW images. The images depict the same captured scene in two distinct formats: JPG, which is a compressed format generated by the drone’s default settings, and digital negative (DNG), which is the RAW format produced by the drone. The images are cropped at identical positions, and lens correction is applied to the JPG, potentially resulting in minor visual disparities compared to those in the RAW format. Each subfigure includes information specifying the image format and the magnitude of exposure adjustment. Exposure adjustment is accomplished using an adaptive histogram equalization algorithm, with the numerical values indicating the extent of adjustment.

RAW images encompass a wide range of up to 16,384 luminance levels, and this rich information is discarded when cameras export images in formats such as JPG and others, as illustrated in Fig. 6. Among the discarded information, especially after adjustments in the dark regions, there may be additional details. These additional details are highly valuable for our lossless image enhancement process.

In contrast, there are notable differences in adjusting the lighting between compressed formats, such as JPG, and RAW formats. JPG images typically cannot fully reconstruct dark details, whereas RAW images contain all the information required to recover these dark details after appropriate exposure adjustments. Furthermore, exposure adjustments in JPG images are limited, and further increasing the exposure can result in localized overexposure and increased noise. In contrast, when processed with suitable exposure adjustment algorithms, RAW images not only allow for overall brightness adjustments to increase image exposure but also enable the enhancement of underexposed areas and the suppression of overexposed highlights through dynamic algorithms58. Therefore, RAW images have found extensive applications in fields such as photography, filmmaking, and aerial photography, where post-processing adjustments, including exposure, provide a broader dynamic range and increased flexibility.

In this study, we collected data while simultaneously preserving RAW images. We used the rawpy library in Python to access the original information of the RAW images and applied adaptive histogram equalization to adjust the exposure, obtaining images at different exposure levels. This step allowed us to acquire images under various lighting conditions, including backlight, nighttime, and fill light, which are complex lighting scenarios. For drones, dealing with challenging lighting conditions is a significant challenge; therefore, these RAW images that have undergone lossless enhancement further enrich our dataset.

Experimental platform

We used a uniform and highly reproducible experimental approach in our work, and we cited widely recognized methods and the literature for comparison. We used the same workstation environment for the experiments, where the workstation was equipped with an i9-12900K CPU, an Nvidia RTX 4090 24G GPU, 128GB of DDR5 RAM, and a 1T SSD. The main software packages deployed on the workstation include Ubuntu20, Python 3.7, PyTorch 1.13, and CUDA 11.

Experimental details

The data collection and processing methods have been described in detail in the Dataset section. In different experiments, we used the same randomly divided datasets, with the training set, verification set and test set being 80:15:5. During training, we use weight parameters pretrained on the COCO dataset to speed up the model convergence process. We resize the image to 800 \(\times \) 800 pixels and then uniformly input it to the model. The CARPK challenge dataset was used in additional experiments to further verify the effectiveness of our proposed model.

During the training process, the model used stochastic gradient descent (SGD), the batch size was set to Auto, the training epoch was set to 600, the learning rate was set to 0.01, and the momentum was set to 0.937. Based on the Ultralytics YOLOv8 framework and source code, we implemented five different versions of the model59. The enhancement method was incrementally applied and tested on the validation dataset after each training iteration. In the experiment involving the CARPK dataset, we utilized the training and testing subsets from the CARPK dataset for model training and evaluation, respectively. Once fully trained and converged, the models were employed to make predictions on the test set, with predictions being counted when the confidence level exceeded 0.7.

Performance metrics

To validate the effectiveness of our proposed D-YOLOv8 algorithm, we conducted an evaluation using recognized validation methods. In this evaluation, we employed fundamental metrics such as precision, recall, average precision (AP), mean absolute error (MAE), and root mean square error (RMSE)60. the precision, as depicted in Eq. 14, represents the proportion of correctly detected objects among the total objects detected by the model. Recall, on the other hand, signifies the proportion of correctly detected objects relative to all actual objects. Furthermore, we utilized the AP to assess the overall performance of the model. The AP is calculated by measuring the area under the precision-recall curve (AUC-PROC), which provides a comprehensive evaluation of the model’s detection accuracy and recall, as demonstrated in Eq. 16.

where TP (true positives) denotes the count of correctly classified positive samples, FP (false positives) represents the number of incorrectly classified positive samples, FN (false negatives) signifies the count of incorrectly classified negative samples, and TN (true negatives) represents the number of correctly classified negative samples.

where \(y_i\) denotes the number of instances in the sample image, \({\hat{y}}_i\) represents the predicted number of instances in the sample image, and n indicates the total number of samples in the test set.

In addition to evaluating the detection accuracy, we also calculated the model’s floating point operations (FLOPs) to understand its computational requirements. FLOPs serve as a metric for assessing the model’s computational complexity, aiding in assessing its operational efficiency on different hardware devices and thereby providing insights for practical applications.

These evaluation methods have been verified by numerous studies and are unified evaluation parameters. Even if the objects and methods studied are different, the effectiveness of the research and the performance of the method can be compared through the evaluation parameters. The special RMSE and MAE are used to evaluate the deviation between the count results and the true results and which are commonly used parameters when evaluating performance on the CARPK dataset. A unified calculation and evaluation method is also used in this experiment to compare the performance and effectiveness of the proposed method with those of other methods in the literature.

Datasets

Raw image acquisition

Illustration of the data collection area and equipment. Map created in ArcGIS 10.2 , Inc. (https://www.esri.com/software/arcgis/arcgis-for-desktop). Boundaries made with free vector data provided by National Catalogue Service for Geographic Information (https://www.webmap.cn/commres.do?method=dataDownload).

The data collection efforts of this study aimed to gather image data from apricot flowers. The data were collected in an artificially cultivated apricot orchard located at the Henan Institute of Science and Technology (HIST) in Xinxiang, China. The object of this collection was the apricot tree variety “Armeniaca mume var. bungo Makino”, which is known for its high cold and disease resistance, making it the predominant cultivated variety in the region. Furthermore, this variety is esteemed for its unique flavor and high nutritional value.

The data were collected on March 6, 2023, and March 7, 2023, precisely during the apricot tree flowering period. Notably, the flowering period of apricot trees is relatively short, typically lasting only two to three days under favorable weather conditions, encompassing processes such as blooming, pollination, and withering61.

The DJI MATRICE 300 RTK drones and a Canon 80D digital single-lens reflex (DSLR) camera were used for data collection in this study. The MATRICE 300 RTK is an industry-grade UAV widely employed in the agricultural sector. It was equipped with the DJI DL 35mm F2.8 LS ASPH Lens on the Zenmuse P1 full-frame camera, capable of capturing images with resolutions of up to 45 million pixels. The Canon 80D DSLR camera was capable of capturing images with resolutions of up to 24.2 million pixels.

During the data collection process, images were acquired in two formats: DNG and RAW2. The DNG formatted images had a maximum resolution of 8192 \(\times \) 5456 pixels, while the RAW2 formatted raw images reached a maximum resolution of 6000 \(\times \) 4000 pixels. To assess the model’s robustness in challenging scenarios, a portion of the data was also collected under low-light conditions at night.

The region of data collection and the equipment used are illustrated in Fig. 7. By leveraging these advanced UAV and camera devices, we were able to obtain high-quality data, establishing a reliable foundation for subsequent experiments and research62. After organizing and selecting the collected data, we amassed a total of 900 images, comprising 726 images captured under complex conditions and 174 images obtained under low-light conditions at night. These image datasets will be employed in the subsequent object detection experiments of this study.

Raw image augmentation

In this study, we utilized RAW images as the data collection format. In contrast to the widely used highly compressed JPG and HEIF (High-Efficiency Image File Format) formats, RAW images are direct, uncompressed, and unprocessed raw data obtained from camera sensor. Consequently, RAW images retain more details and image quality56. Most importantly, RAW images possess a larger dynamic range, preserving the wider range of brightness and shadow details that the camera sensor captures. On the other hand, compression formats such as JPG, which we commonly encounter, are the result of camera processing of RAW images based on preset parameters. This processing may discard some details that are unnecessary when aiming to capture a perfect photo. However, for images that require further processing and editing, these details become invaluable. In Raw image Section , we delve more deeply into the methods for enhancing RAW images, demonstrating the contrast in detail preservation between RAW and JPG images in exposure adjustments. It is evident that in the case of JPG images, the enhancement of details in the dark areas after adjusting the exposure is quite limited. In contrast, when adjusted in the same manner, RAW images still retain a significant amount of features in the dark areas.

Illustration depicting lossless image augmentation. The left column illustrates image enhancement in complex scenarios, while the right column demonstrates image enhancement under challenging low-light conditions. Each subfigure is labeled with green text indicating the magnitude of the modifications. The terms ’Original’ and ’Exposure’ correspond to the original image and exposure adjustments, respectively. The ’Original’ image is in JPG format, has already been corrected for lens optical distortions, and may exhibit slight variations from the RAW image. The term ’Exposure’ followed by a numerical value denotes the extent of exposure adjustment.

In this work, we directly utilized RAW images for image enhancement. We created multiple 8-bit JPG images from the 14-bit pixel data of RAW images at different exposure values. These images exhibit varying exposure conditions, with the overall aim of preserving more image details. Specifically, we exported RAW images under different exposure conditions, resulting in four different exposure images each for complex scenarios and low-light conditions. Figure 8 illustrates our lossless image generation process.

Compared with traditional image enhancement techniques, the Raw image enhancement method effectively enhances image quality, preserves more details, enriches the dataset, and provides richer and higher-quality data support for subsequent object detection and flowering period management. By generating images in a lossless manner, we can better handle complex scenarios and low-light conditions, thus enhancing the model’s robustness and detection accuracy. This approach is highly important for research on orchard flowering period management and detection.

In this work, we also employed various enhancement techniques on images adjusted for exposure, including rotation, X or Y-axis flipping, scaling, blurring, and the addition of Gaussian noise. Each enhancement method was applied with a certain probability, resulting in the generation of multiple augmented images. These augmentation methods contribute to increased dataset diversity, accelerated model convergence, and improved model accuracy63. A visualization of the image enhancement effects is presented in Fig. 9.

Visualization of image augmentation techniques. Panels (a–h) showcase instances of image augmentation techniques, including the original image; resizing; horizontal and vertical flipping; rotation; translation; scaling within a cropped region; rotation within a cropped region; and the application of Gaussian blur.

By randomly applying image augmentation techniques, we can simulate the diversity and complexity present in real-world scenarios to some extent, thereby enriching the dataset and expanding its coverage. Such data augmentation strategies contribute to improving the model’s generalizability, enabling it to better adapt to data from various scenarios and environments and resulting in more accurate object detection and flower period management. Furthermore, enhanced images can help address specific situations, such as low lighting or image blurriness, thereby further enhancing the model’s robustness and stability. By comprehensively applying these augmentation methods, we can better explore and harness the potential of the data, providing more reliable and effective solutions for drone-based flower period management and detection in orchards.

Image annotation and building dataset

To construct a dense dataset for apricot flower object detection, we manually annotated the images enhanced through image augmentation. The purpose of this annotation was to create a dataset with rich labeling information for training high-quality object detection models. To accomplish this task, we employed Labelimg annotation software and saved the annotation results in XML (eXtensible Markup Language) format following the VOC (Visual Object Classes) standard.

We created two datasets from the annotated data to evaluate the performance of D-YOLOv8 in different scenarios: the Complex dataset and the LowLight dataset. The purpose of these datasets is to assess D-YOLOv8’s performance under varying conditions. The Complex dataset, labeled “Complex,” consists of 3600 images, encompassing images with diverse lighting conditions, backgrounds, and poses. Within these images, we annotated a total of 257.4k apricot flower instances. This portion of the data serves to evaluate D-YOLOv8’s ability to detect densely clustered objects. The Low-Light dataset, denoted as “LowLight,” comprises 870 images captured under low-light conditions such as nighttime or overcast weather. Within these images, we annotated a total of 43.5k apricot flower instances. This subset of the data is designed to assess D-YOLOv8’s dense detection performance under low-light conditions. The detailed statistics of the datasets are presented in Table 1.

CARPK dataset

The Car Parking Lot Dataset64 (CARPK) contains nearly 90,000 cars from 4 different parking lots collected by means of drones. The images were captured from the air by drones. The car objects in the images are manually labeled. These data support target detection and counting. This dataset has a similar task to that of the UAV dense target image detection dataset studied in this study. Therefore, we introduce these data to evaluate the performance of our proposed method.

Result

In this section, we first evaluate the performance of the D-YOLOv8 model on the constructed complex dataset (referred to as “Complex”) to assess its capabilities on this dataset. Next, we conduct comparative tests using a dataset simulating complex low-light conditions (referred to as “LowLight”) to analyze the differences in performance between the original YOLOv8 and D-YOLOv8 models under such conditions. Subsequently,ablation experiments are performed on the proposed improvements and modules to compare the impacts of different enhancements on the final model. Finally, the robustness of the model was further validated through performance comparisons with other mainstream detection models and scoring on the publicly available CARPK dataset.

Results of D-YOLOv8

In this experimental section, we conducted training on the D-YOLOv8 model using the constructed Complex dataset. The D-YOLOv8 model encompasses five versions, namely D-YOLOv8n, D-YOLOv8s, D-YOLOv8m, D-YOLOv8l, and D-YOLOv8x, each corresponding to different network scales. This design allows models of various scales to better adapt to different environments. Among them, D-YOLOv8n is the version with the least number of parameters, containing only 1.23 million parameters, achieving a refresh rate of up to 123 FPS (frames per second) in the testing environment. This enables this version of the model to achieve good real-time performance on embedded devices in drones. During the model training process, we monitored and recorded specific evaluation parameters for each model. The optimal Epoch model was tested on the verification set, and the results are shown in Table 2.

Visualization of Training Parameters for the D-YOLOv8 Model, with Y-Axis Bounds Restricted, such as Loss Limited to 0 to 1.5 or 2.0, Ignoring Early Random Losses Across Different Models and Emphasizing Contrasts in the Later Stages of Training. Each Subfigure Displays Training Iterations on the X-Axis and Corresponding Parameter Values on the Y-Axis, with Different Colors Representing Different Models. (a,b,c,f,g,h) Illustrate Variations in Box Loss, Class Loss, and DFL Loss During Training and Validation Phases. (d,e) Describe Model Accuracy and Recall Results. (i) Represents AP Results with a Confidence Threshold of 0.5. (j) Displays the Mean AP for All Confidence Thresholds Ranging from 0.5 to 0.95, with an Increment of 0.05.

Figure 10 illustrates the variation in various evaluation parameters during the model training process. The loss function continuously decreases with the progression of training epochs, indicating the gradual optimization of the model’s training. On the other hand, the precision, recall, and AP gradually increase with an increasing number of training epochs, indicating continuous improvement in the model’s performance. These results indicate that as training progresses, the model gradually converges, adapts better to the dataset, and achieves higher detection performance.

The training curves provide a visual representation of the changes in performance during the training process of the proposed D-YOLOv8 model in this study, as well as the differences between models of different scales. Figure 10a,b,c,f,g,h shows that as the number of training iterations increases, both the training and validation losses gradually converge. Larger-scale models, such as D-YOLOv8x, with more parameters, exhibit a higher level of convergence, indicating their greater potential for handling more complex tasks. Additionally, the differences in convergence for class loss (cls loss) and distribution focal loss (dfl loss) among the different-scale D-YOLOv8 models are smaller than those for bounding box loss (box loss). This phenomenon may be attributed to the focus of experiment on a single-object detection task, which involves a limited number of object categories, including only objects and background. This relatively simple classification task makes feature extraction relatively straightforward. Furthermore, the introduction of the DFL LOSS function may have reduced the issue of gradient vanishing, thereby expediting model convergence.

Figure 10d,e,i,j illustrates that as the losses decrease, the model’s precision and recall gradually increase, eventually converging to their maximum values. Due to its evaluation at a lower confidence level, AP@0.5 quickly converges to its maximum value in the early stages of training. On the other hand, AP@0.5–0.95 reflects the model’s precision at higher confidence intervals. With the progression of training, AP@0.5–0.95 experienced rapid initial improvement (approximately 50 iterations), followed by a slower ascent and eventual convergence. This finding suggested that the model’s performance is constrained to some extent by the number of parameters, with larger-scale models exhibiting better performance. However, in practical applications, confidence levels need to be dynamically adjusted according to specific tasks.

The experiments demonstrate that our proposed D-YOLOv8 model exhibits high accuracy and robustness on the constructed dataset. Among the different versions, D-YOLOv8n, the smallest-scale version, with a model file size of only 2.8 Mb and a computational complexity of only 10.2GFLOPs, achieved the highest frame rate of 123FPS on the test platform. Furthermore, it attained an AP of 89.8%, indicating its high performance in real-time drone detection tasks.

As the model scale and parameter count increase, the detection accuracy of the D-YOLOv8 series models also improves significantly. However, this improvement comes at the cost of reduced detection speed. Among them, D-YOLOv8x is the largest-scale model in this series, with a FLOPS of 268.9G. Simultaneously, this model achieved exceptionally high detection accuracy, with an AP@0.5 reaching 99.38%, and an AP@0.5–0.95 increasing to 97.61%. Figure 10j provides a more intuitive visualization of this model’s performance.

The experimental results demonstrate that the proposed D-YOLOv8 model performs excellently across different scales. Smaller-scale versions, such as D-YOLOv8n, maintain high accuracy while meeting the real-time detection requirements of drones. On the other hand, larger-scale versions such as D-YOLOv8x enhance accuracy but may demand additional computational resources, making them potentially better choices for certain tasks.

Visual comparison of D-YOLOv8 predictions at five distinct scales. The predicted results are delineated using green bounding boxes, accompanied by confidence scores displayed above the bounding boxes. All the images were visualized with a confidence threshold set at 0.5. The count and average confidence of the predictions are presented in blue text at the top left corner of each subfigure. Predictions that are poor or abnormal are annotated with red circles.

The trained D-YOLOv8 models were applied to the test dataset images, and the prediction results were visualized as shown in Fig. 11. By comparing different models, it can be observed that as the model scale increases, the number of detected apricot flowers decreases, while the average prediction confidence increases. This phenomenon may be attributed to the occurrence of erroneous predictions in models with fewer parameters.

As shown in Fig. 11a, the D-YOLOv8n model demonstrates good performance in predicting the number of apricot flowers but also leads to several erroneous predictions, such as mistakenly identifying the protrusion of the lower main branch as apricot flowers. Models with larger parameter counts can extract more features, especially in complex scenarios, but they also require more computational power, limiting their applicability. Therefore, we introduced multiple scales of D-YOLOv8 models to meet different accuracy and performance requirements in various scenarios. Depending on specific needs, one can choose a model suitable for a particular scenario, thereby enhancing the applicability of the D-YOLOv8 model proposed in this study.

In scenarios where real-time performance is a high priority, one can opt for smaller-scale models such as D-YOLOv8n. These models are capable of meeting the real-time detection requirements of drones while maintaining a relatively high level of accuracy. Conversely, in complex scenarios where high precision is essential, larger-scale models such as D-YOLOv8x can be selected to achieve superior detection accuracy.

Result under LowLight conditions

The operational environment of agricultural drones is not limited to daytime; sometimes, they need to work under low-light conditions. Especially during the brief blooming period of apricot flowers, agricultural drones must operate in complex environments, even during the nighttime. Therefore, this study specifically conducted comparative experiments focused on complex low-light scenarios and constructed the LowLight dataset. In this experiment, we exclusively used images captured during the evening and nighttime conditions to create this dataset. To capture these images, we relied on the high sensitivity of large-format CMOS image sensors and drone supplementary lighting. This dataset was used for training and validation purposes in both the original YOLOv8 and our proposed improved version, D-YOLOv8.

As shown in Table 3, under low-light and complex conditions, the D-YOLOv8 model outperforms the original YOLOv8 model. This improvement can likely be attributed to the dense object detection layer introduced in the D-YOLOv8 model, which helps enhance the model’s detection capability under low-light conditions.

Under complex low-light conditions, images can become darker due to insufficient exposure. To obtain clearer images, one can attempt to increase the CMOS sensitivity, extend the shutter time, or widen the aperture. However, these methods are not always feasible. For instance, increasing CMOS sensitivity can introduce additional noise, and extending the shutter time is nearly impractical for mobile shooting devices such as drones. Moreover, the aperture size is constrained by the lens design. Therefore, improving images under low-light conditions is quite limited.

In scenarios where operational drones, such as those used during the brief period of almond blossom flowering, may need to work directly under low-light conditions, we constructed the LowLight dataset and conducted comparative experiments between the proposed D-YOLOv8 and YOLOv8 models on this dataset. The experimental results show that the performance of D-YOLOv8 decreases under low-light conditions compared to that under general complex scenarios, particularly for the AP@0.5–0.95 metric. This indicates that the detection algorithm under low-light conditions is influenced by the loss of detail and noise caused by low light, which in turn affects the detection performance.

To further illustrate this situation, we present visualizations of detection results of the D-YOLOv8 and YOLOv8 models under low-light conditions in Fig. 12. It is evident from the images that there are significant missing details and noise in the low-light conditions, resulting in a decrease in the performance of the detection algorithm.

Comparison of detection results between D-YOLOv8 (n and x scale models) and the original YOLOv8 under low-light conditions. (a–d) correspond to different models. Correct detections are highlighted with green bounding boxes and their associated confidence scores, while areas with missed detections are indicated by red circles. The top left corner of each subfigure displays the number of detections and the average confidence score in green text. The right vertical column shows screenshots of the detection results.

From the detection result examples in the figure, it is evident that low-light conditions not only result in the loss of details and noise but also make the background more prone to being disregarded. This may be a key factor preventing further reduction in detection performance. Visualizing the experimental results, we observe that the proposed D-YOLOv8 model consistently outperforms the original YOLOv8 model, especially in scenarios with a high density of object objects. Specifically, when D-YOLOv8n was compared to YOLOv8n at the same scale, D-YOLOv8n correctly detected an additional 5 apricot flower objects, while D-YOLOv8n, compared to YOLOv8x at the same scale detected an additional 8 apricot flower objects. The experimental results indicate that apricot blossoming objects that are not correctly detected are typically found in complex situations, such as when the objects are small, when there is extensive overlap in the object areas, when there is significant occlusion, or when the objects are densely packed.

To address these challenges, we propose the D-YOLOv8 model, which incorporates specific optimizations for dense object detection. This enables the model to effectively detect smaller, less distinctive, and more densely clustered objects. Consequently, in complex orchard environments, the D-YOLOv8 model achieves notable results in dense object detection, especially under challenging low-light conditions. A detailed analysis of the experimental results reveals that the D-YOLOv8 model significantly outperforms the original YOLOv8 model in detecting dense objects within complex orchard settings. Particularly noteworthy is the improved performance of the D-YOLOv8 model under complex low-light conditions.

Ablation experiments

During model training, we applied various improvements to validate the effectiveness of the models, and the experiments were conducted using the D-YOLOv8m scale model. As shown in Table 4, the symbol \(\checkmark \) indicates that the model incorporates a specific improvement, and AP denotes the mean IoU from 0.5 to 0.95. The first row shows as the baseline results for the original YOLOv8 model on the dataset. The second row demonstrates the application of RAW image enhancement to construct the dataset, which results in a direct increase in the model’s AP from 83.64% to 87.97%, a 4.33% improvement while keeping the model parameters unchanged. The third row applies DFPN, reducing the model’s parameters by approximately 20%, and the AP increases to 92.19%. Finally, the application of DAL reduces the model’s parameter count by approximately half, with an increase in AP to 96.35%.

In a comprehensive ablation experiment, we evaluated the proposed improvements and summarize the results in the table. From the table, we observe that even without any modifications to the model, applying only the RAW image enhancement method can enhance accuracy. This highlights that RAW images indeed offer additional information for model training. Both DFPN and DAL are enhanced when targeted at dense targets. These methods involve pruning deeper layers of the network and extracting more effective information through interlayer structures. These enhancements are reflected in the reduced model parameters and improved accuracy. Through experimentation, our proposed improvements have proven to be effective in dense target detection, reducing model parameters, and enhancing model performance.

Evaluation of car counting accuracy

Both MAE and RMSE are employed in counting tasks to assess the overall error between true and predicted values, where smaller values indicate more accurate predictions. For the image in the entire test set, our D-YOLOv8 model predicts the number of cars as \({\hat{y}}\), and the ground truth indicates the number of cars as \(y_i\), as shown in Formula 17. The experimental results are presented in the table, indicating that our proposed method excels in terms of the MAE and RMSE in the field of dense target detection.

Based on the experimental results, we compare the performances of mainstream models on the CARPK dataset. The comparison results are shown in Table5, which indicates that our proposed method reaches reached the performance of mainstream methods and achieved lower RMSE and MAE values. In particular, as an end-to-end lightweight method, we aim to apply it to drone embedded devices. Our goal is not to lower the RMSE and MAE performance, but to achieve a balance in performance. Additionally, we visualize the detection results, as shown in Fig. 13.

Comparison experiment

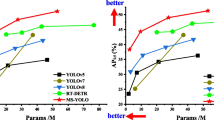

To further validate the model’s performance, we conducted comparisons on the constructed complex dataset, which included popular detection models such as SSD, Faster-RCNN, YOLOv3, YOLOv4, YOLOv5, YOLOv7, the original YOLOv8, Deformable-DETR, RT-DETR-R101, and our proposed improved D-YOLOv873,74. For the comparisons, we selected the medium-scale versions (m) of YOLOv5, YOLOv8, and D-YOLOv8 for experimentation, the result being shown in Table 6.

In addition to exhibiting good well in overall accuracy, the D-YOLOv8 model also achieved high scores on the AP@0.5–0.95 metric, which more accurately reflects the model’s performance in the high-confidence interval (confidence>0.5). Taking D-YOLOv8m as an example, this model achieved a high precision of 94.33% on AP@0.5–0.95, indicating that the model exhibits high confidence in detection and provides more accurate object recognition.

Furthermore, when analyzing the computational resources and model size required, we found that D-YOLOv8 demands fewer computational resources and has a smaller model size than most mainstream models. This is attributed to our lightweight design of the model, which expands its range of application scenarios. Additionally, D-YOLOv8 demonstrates advantages in terms of real-time performance and resource consumption on embedded devices such as drones.

Based on the analysis of the experimental results, we conclude that the proposed D-YOLOv8 model is more accurate than current mainstream detection models while preserving its lightweight characteristics. In contrast to other mainstream detection methods, the D-YOLOv8 model adopts a C2f structure, which provides richer gradient information, alleviating the problems of gradient vanishing and overfitting. Additionally, the D-YOLOv8 model utilizes a free anchor structure, enabling the model to more flexibly detect object shapes. On the constructed complex dataset with dense objects, the anchor-free model performs better than the other models, possibly because it allows the model to detect dense objects more flexibly.

In addition to its overall accuracy, the D-YOLOv8 model also achieves high scores in the AP@0.5–0.95 metric, which more accurately reflects the model’s performance in the high-confidence interval (confidence> 0.5). Taking D-YOLOv8m as an example, this model achieves a high precision of 96.35% in the AP@0.5–0.95 range, indicating high detection confidence and accurate object recognition. Further analysis of the computational power and model size required by the D-YOLOv8 model revealed that it is smaller than most mainstream models currently available. This is attributed to our lightweight model design, which makes the model applicable to a wider range of scenarios. Additionally, the D-YOLOv8 model offers advantages in terms of real-time performance and resource consumption, especially for embedded devices such as drones.

Discussion

A novel dense object detection method is proposed that uses drone images as inputs to extract features, achieving an accurate detection method for dense apricot flowers during the flowering period. Through the construction of data datasets, public data dataset evaluation, comparative experiments, and fusion experimental analysis, the effectiveness of this framework for dense target detection is proven. The proposed method pioneers the use of drone images for orchard management and inspection. The proposed method solves the challenge of low accuracy in dense target detection via traditional methods. These challenges include dense targets with fewer information pixels, severe image occlusion, and difficulty in achieving traditional methods. The existing literature lacks a detailed explanation of the methods for accurate detection of dense targets in apricot flowers and the challenges of using raw images for dense target detection; therefore, so new methods and ideas need to be proposed.

In order to overcome related challenges, this paper introduces a novel target detection framework to improve the accurate detection of dense targets in UAV images. This framework is designed to accurately detect dense almond blossoms in UAV images. This study provides a theoretical reference for similar dense target detection tasks and provides an effective solution for complex orchard environmental management. An important contribution of this work is to propose the proposal of a deep learning-based dense target detection method, which is designed to be used for dense target detection. This detection method introduces D-FPN and DAL modules to extract and analyze features of dense targets. The difference from existing methods is that it proposes to use relatively shallow features and quickly detect proposed for use, and dense targets are quickly detected through simple feature extraction modules, although this approach can cause a certain loss. accuracy but maintaining better lightweight and overall performance. The proposed model was compared and tested on the constructed dataset, and the experimental results showed that it was better than mainstream target detection methods such as SSD, Faster RCNN, YOLOv5, and YOLOv8. AblationThe ablation experimental results demonstrate the effectiveness of the proposed improvements.

The proposed model will also be evaluated to assess the dense target counting performance using the open-source dense dataset CARPARK. The experimental results reveal that the RMSE and MAE evaluation values reach those of the mainstream dense target detection method.

Another important contribution of this work is the proposed dense apricot dataset, which consists of raw images collected using high-quality image sensors. The raw images, such as traditional color images, contain the raw information of the image sensor. When this raw information is processed or compressed during the process of producing traditional images, these changes are completely irreversible. We innovatively introduce original images into dense target detection and use the powerful feature extraction capabilities of deep learning to directly extract information from the original images. This provides a new idea for target detection in complex scenes. The effectiveness of RAW images in dense object detection is demonstrated in the proposed fusion experiments. The literature on using RAW data for deep learning is relatively sparse, and most of these studies focus on proposing a model to replace the traditional DSP to improve the photo imaging effect of mobile phones or electronic cameras. With the development of autonomous driving in recent years, some research teams have begun to turn their perspective to obtaining raw information directly from CMOSs to detect current scenes and targets, thereby improving autonomous driving effects. The RAW data processing ideas and datasets used in this work will greatly enrich research in related fields, especially in the field of dense target detection. This work will also spur additional applied research, inspiring practitioners in related fields to integrate RAW domain data with mainstream technologies for new product development and iterations.

Conclusion

This study introduces a series of research efforts focused on dense object detection in UAV applications, achieving significant outcomes. We propose a novel dense object detection method, D-YOLOv8, incorporating the introduced D-FPN and DAL modules. Experimental evaluations conducted on both constructed and publicly available datasets demonstrate SOTA performance of the proposed method.

This study presents a new model for detecting dense objects in drone scenes, along with a dataset of dense apricot flowers that includes original image data. The proposed D-YOLOv8 model makes many improvements over the YOLOv8 model, including the introduction of D-FPN and DAL modules, a lightweight design for dense target detection in drone scenes and a unique shallow feature extraction method. The collected dense target dataset is collected using drones, in which the original format of the RAW image is stored, which provides more bits of information for deep learning and avoids irreversible modification of the original information by DSP during image processing. This approach provides novel ideas and methods for dense original image datasets and dataset production, which is crucial for the development of new models and data sets in the field of dense target detection, aiming to further improve detection accuracy and application performance.

The proposed D-YOLOv8 method was tested on both the constructed dataset and the public CARPK dataset. The experimental results show that D-YOLOv8 is better than SSD, Faster RCNN, YOLOv5, YOLOv8 and other methods. In particular, car counting in aerial parking lot images was evaluated on the CARPK public data set. D-YOLOv8 achieved good results in terms of the MAE and RMSE, indicating that the proposed method has better performance in dense target detection. Versatility. Despite this, there are still some cases where low-light or severely occluded targets cannot be detected correctly in experiments. Continuous optimization of D-YOLOv8 will be one of the main tasks of our future research. Even though this study is based on UAV images, it is hoped that the proposed method and dataset will not be limited by specific scenarios and that the methods of data processing and model improvement can be applied to a wider range of research. The optimization methods and data processing ideas proposed in this study will provide a large number of researchers with new research directions and can be applied to newer and more advanced models to solve more critical problems and adapt to more complex environments; this approach is another key task for future research.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Tang, Y., Zhou, H., Wang, H. & Zhang, Y. Fruit detection and positioning technology for a Camellia oleifera C. Abel orchard based on improved YOLOv4-tiny model and binocular stereo vision. Exp. Syst. Appl. 211, 118573. https://doi.org/10.1016/j.eswa.2022.118573 (2023).

Anders, M., Grass, I., Linden, V. M. G., Taylor, P. J. & Westphal, C. Smart orchard design improves crop pollination. J. Appl. Ecol. 60, 624–637. https://doi.org/10.1111/1365-2664.14363 (2023).

Liu, Q., Wang, X., Zhao, M. & Liu, T. Synergistic influence of the capture effect of western flower thrips (Frankliniella occidentalis) induced by proportional yellow-green light in the greenhouse. Int. J. Agric. Biol. Eng. 16, 88–94. https://doi.org/10.25165/j.ijabe.20231601.7562 (2023).

Aguilar-Ariza, A. et al. UAV-based individual Chinese cabbage weight prediction using multi-temporal data. Sci. Rep. 13, 20122. https://doi.org/10.1038/s41598-023-47431-y (2023).

Wang, X. et al. Lightweight SM-YOLOv5 tomato fruit detection algorithm for plant factory. Sensors 23, 3336. https://doi.org/10.3390/s23063336 (2023).

Wang, X., Onychko, V., Zubko, V., Wu, Z. & Zhao, M. Sustainable production systems of urban agriculture in the future: A case study on the investigation and development countermeasures of the plant factory and vertical farm in China. Front. Sustain. Food Syst. 7, 973341. https://doi.org/10.3389/fsufs.2023.973341 (2023).

Yang, K. & Song, Z. Deep Learning-Based Object Detection Improvement for Fine-Grained Birds. IEEE Access 9, 67901–67915. https://doi.org/10.1109/ACCESS.2021.3076429 (2021).

Xiang, W., Song, Z., Zhang, G. & Wu, X. Birds detection in natural scenes based on improved faster RCNN. Appl. Sci. 12, 6094. https://doi.org/10.3390/app12126094 (2022).

Xu, D. et al. M2da: Multi-modal fusion transformer incorporating driver attention for autonomous driving (2024). arXiv:2403.12552.

Song, Z. et al. Graphbev: Towards robust bev feature alignment for multi-modal 3D object detection (2024). arXiv:2403.11848.

Wang, Y., Liu, M., Ren, Y., Zhang, X. & Feng, G. Traffic sign attack via pinpoint region probability estimation network. Patt. Recogn. 146, 110035. https://doi.org/10.1016/j.patcog.2023.110035 (2024).

Yang, K. et al. Model optimization method based on vertical federated learning. in 2021 IEEE International Symposium on Circuits and Systems (ISCAS), 1–5, (IEEE, 2021). https://doi.org/10.1109/ISCAS51556.2021.9401521.

Qin, Y., Tang, Z., Wu, H. & Feng, G. Flexible tensor learning for multi-view clustering with Markov chain. IEEE Trans. Knowl. Data Eng. 36, 1552–1565. https://doi.org/10.1109/TKDE.2023.3305624 (2024).

Zualkernan, I., Abuhani, D. A., Hussain, M. H., Khan, J. & ElMohandes, M. Machine learning for precision agriculture using imagery from unmanned aerial vehicles (UAVs): A survey. Drones 7, 382. https://doi.org/10.3390/drones7060382 (2023).

Jiang, S., Luo, B., Jiang, H., Zhou, Z. & Sun, S. Research on dense object detection methods in congested environments of urban streets and roads based on DCYOLO. Sci. Rep. 14, 1127. https://doi.org/10.1038/s41598-024-51868-0 (2024).

Wang, X., Vladislav, Z., Viktor, O., Wu, Z. & Zhao, M. Online recognition and yield estimation of tomato in plant factory based on YOLOv3. Sci. Rep. 12, 8686. https://doi.org/10.1038/s41598-022-12732-1 (2022).

Chen, C. et al. YOLO-based UAV technology: A review of the research and its applications. Drones 7, 190. https://doi.org/10.3390/drones7030190 (2023).

Zhou, P. et al. PSFNet: Efficient detection of SAR image based on petty-specialized feature aggregation. IEEE J. Select. Top. Appl. Earth Observ. Rem. Sens.https://doi.org/10.1109/JSTARS.2023.3327344 (2023).

Song, Z. et al. Fast detection of multi-direction remote sensing ship object based on scale space pyramid. In 2022 18th International Conference on Mobility, Sensing and Networking (MSN), 1019–1024 (IEEE, 2022). https://doi.org/10.1109/MSN57253.2022.00165.

. Song, Z., Wu, P., Yang, K., Zhang, Y. & Liu, Y. MsfNet: A novel small object detection based on multi-scale feature fusion. In 2021 17th International Conference on Mobility, Sensing and Networking (MSN), 700–704 (IEEE, 2021) https://doi.org/10.1109/MSN53354.2021.00108.

Deng, W., Shi, Q. & Li, J. Attention-gate-based encoder–decoder network for automatical building extraction. IEEE J. Select. Top. Appl. Earth Observ. Rem. Sens. 14, 2611–2620. https://doi.org/10.1109/JSTARS.2021.3058097 (2021).

Ignatov, A. et al. AIM 2019 challenge on RAW to RGB mapping: methods and results. In 2019 IEEE/CVF International Conference on Computer Vision Workshop (ICCVW), 3584–3590 (2019). https://doi.org/10.1109/ICCVW.2019.00443.

Song, Z., Wei, H., Bai, L., Yang, L. & Jia, C. GraphAlign: Enhancing accurate feature alignment by graph matching for multi-modal 3D object detection. In 2023 IEEE/CVF International Conference on Computer Vision (ICCV), 3335–3346 (IEEE, 2023) https://doi.org/10.1109/ICCV51070.2023.00311.

Song, Z., Jia, C., Yang, L., Wei, H. & Liu, L. GraphAlign++: An accurate feature alignment by graph matching for multi-modal 3D object detection. IEEE Trans. Circuits Syst. Video Technol.https://doi.org/10.1109/TCSVT.2023.3306361 (2024).

Zhang, X. et al. Dual radar: A multi-modal dataset with dual 4D radar for autonomous driving (2023). arXiv:2310.07602.

Song, Z. et al. Robofusion: Towards robust multi-modal 3d object detection via sam (2024). arXiv:2401.03907.

Zhang, G. et al. Urformer: Unified representation lidar-camera 3D object detection with transformer. In Pattern Recognition and Computer Vision (eds Liu, Q. et al.) 401–413 (Springer Nature, 2024).

Xu, S. et al. Multi-sem fusion: Multimodal semantic fusion for 3D object detection (2023). arXiv:2212.05265.

Terven, J. & Cordova-Esparza, D. A comprehensive review of YOLO: From YOLOv1 and beyond (2023).https://doi.org/10.48550/arXiv.2304.00501. arXiv:2304.00501.

Wang, N., Liu, H., Li, Y., Zhou, W. & Ding, M. Segmentation and phenotype calculation of rapeseed pods based on YOLO v8 and mask R-convolution neural networks. Plants 12, 3328. https://doi.org/10.3390/plants12183328 (2023).

Song, Z., Zhang, Y., Liu, Y., Yang, K. & Sun, M. MSFYOLO: Feature fusion-based detection for small objects. IEEE Lat. Am. Trans. 20, 823–830. https://doi.org/10.1109/TLA.2022.9693567 (2022).

Chen, S. et al. Info-FPN: An informative feature pyramid network for object detection in remote sensing images. Exp. Syst. Appl. 214, 119132. https://doi.org/10.1016/j.eswa.2022.119132 (2023).

Feng, C., Zhong, Y., Gao, Y., Scott, M. R. & Huang, W. TOOD: Task-aligned one-stage object detection (2021). arXiv:2108.07755.

Li, X. et al. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. Adv. Neural Inf. Process. Syst. 33, 21002–21012 (2020).

Wang, L. et al. Fuzzy-NMS: Improving 3D object detection with fuzzy classification in NMS (2023). arXiv:2310.13951.

Jing, J., Gao, T., Zhang, W., Gao, Y. & Sun, C. Image feature information extraction for interest point detection: A comprehensive review. IEEE Trans. Patt. Anal. Mach. Intell. 45, 4694–4712. https://doi.org/10.1109/TPAMI.2022.3201185 (2023).

Tsai, F.-T., Nguyen, V.-T., Duong, T.-P., Phan, Q.-H. & Lien, C.-H. Tomato fruit detection using modified Yolov5m model with convolutional neural networks. Plants 12, 3067. https://doi.org/10.3390/plants12173067 (2023).

Wang, C.-Y., Bochkovskiy, A. & Liao, H.-Y. M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors (2022). arXiv preprintarXiv:2207.02696.