Abstract

This paper offers a novel approach to formulating efficient ratio estimators of the population variance using a transformed auxiliary variable. The impact of the transformation on the auxiliary information is also discussed. Incorporating a transformed auxiliary variable is observed to result in a high gain in efficiency. Theoretical properties of the newly developed estimators are derived. The empirical and simulation studies show that the suggested estimators outperform the existing estimators.


Introduction

Sampling is a crucial aspect of making well-informed decisions in various real-life domains. Inferences about statistical populations or data are drawn from samples, and it is imperative that a sample accurately represents every characteristic of the population of interest. Data are characterized by specific parameters, and estimating population parameters from a sample is a challenging task. Two essential measures for describing data are the measures of location and scale. This article focuses on the latter, specifically the estimation of the variance, a frequently used measure of scale. Estimating variance is vital in processes where precise quantification of data variation is necessary. From economics and business to the physical, biological and environmental sciences, sophisticated tools are required to measure variation for making informed decisions. For instance, economists analyze the variation in commodity prices, manufacturers assess taste preferences for customer satisfaction, and agriculturists study variability in climate factors to optimize yields and minimize costs.

Extensive research has sought to enhance the efficiency of ratio and product estimators for finite population variance, considering the correlation between survey variables and auxiliary variables. Auxiliary variables are those correlated with the main study variable, either positively or negatively. In environmental studies, for example, auxiliary variables like wind speed or temperature are considered when estimating air quality variance. Economic surveys may involve estimating household income variance using employment rates as an auxiliary variable. In healthcare planning, patient demographics or medical histories serve as auxiliary variables when estimating variance in patient recovery times.

Integrating auxiliary variables with the main survey variable to estimate variance provides additional information, refining the accuracy of estimates. This additional information includes contextual data, environmental factors, or supplementary metrics correlated with the main study variable. The use of auxiliary information often leads to more efficient and robust variance estimators. Transformations of the auxiliary variable play a similarly notable role in improving the efficiency of estimates. They make estimators flexible, allowing them to better utilize additional information from auxiliary variables, such as the mean, variance, skewness, kurtosis and quantiles. This flexibility, along with generalization and optimization constants, enhances the robustness of estimates against variations in the sample.

For these reasons, many survey statisticians prefer transformed auxiliary variables over auxiliary variables in their original form, and numerous efficient estimators of the population variance have been developed. Among the pioneering work on estimating the variance of a finite population using an auxiliary variable, ref. 1 proposed an unbiased estimator of variance, and ref. 2 compared variance estimators under various sampling designs with available auxiliary information, illustrating improved bias and mean squared error (MSE) over common estimators. Similarly, ref. 3 introduced chain estimators for finite population variance using double sampling and two auxiliary variables, and compared the estimators on the MSE criterion. Ref. 4 proposed a ratio-type exponential estimator for population variance that is consistently more efficient than previous estimators, with efficiency comparisons conducted mathematically and numerically. Ref. 5 introduced a family of estimators based on adaptations of previous work, showing efficiency through MSE comparisons. A generalized modified ratio-type estimator for population variance using known parameters of the auxiliary variable was suggested by ref. 6, which compared it with competing estimators on simulated and real data. Ref. 7 suggested a generalized class of estimators of finite population variance, derived the large-sample bias and MSE, considered special cases and provided numerical examples for comparison. Ref. 8 suggested an estimator of population variance utilizing information on two auxiliary variables under the SRSWOR scheme. A generalized exponential estimator for estimating population variance using two auxiliary variables was proposed by ref. 9, which demonstrated its efficiency through empirical and simulation studies using real and simulated data. Ref. 10 suggested an efficient formulation of population variance in simple random sampling using a supplementary variable.
Using Searls' constants, ref. 11 developed an efficient estimator of the population variance; the bias and MSE of the proposed estimator were obtained up to the first degree of approximation. Ref. 12 suggested chain ratio-type and chain ratio-type exponential estimators of the variance of a finite population using auxiliary information, gave an improved version of the suggested class of estimators along with its properties, and carried out an empirical study in support of the findings. Ref. 13 addressed estimation of the current population variance in the presence of random non-response and examined the proposed estimators through empirical studies, comparing them with estimators for complete-response situations. Ref. 14 suggested a class of estimators for finite population variance using an auxiliary attribute. Ref. 15 developed a variance estimator using the tri-mean and third quartile of the auxiliary variable, demonstrating its superior performance over various competing estimators in terms of sampling properties, bias and MSE. Ref. 16 suggested a finite population variance estimator using unconventional measures and demonstrated its efficacy and robustness through empirical and simulation studies on real data sets from various domains. Similarly, ref. 17 explored the effect of the distribution on suggested variance estimators, comparing twelve estimators across eight distributions through simulation studies. Ref. 18 formulated a Searls ratio-type estimator using the tri-mean and third quartile of the auxiliary variable and demonstrated its superiority in bias and MSE through theoretical comparison and empirical studies. Hybrid-type estimators of population variance were developed by ref. 19, which demonstrated their efficiency over competing estimators through theoretical and empirical comparisons. A generalized family of estimators of population variance was formulated by ref. 20, which demonstrated the performance of the estimators through empirical and simulation studies.
A robust ratio-type estimator for finite population variance, based on robust covariance matrices, was suggested by ref. 21, which derived conditions for efficiency against competing estimators and demonstrated the performance of the suggested estimators through empirical and simulation studies.

Novelty and significance

This work makes several contributions to the field of survey sampling. The paper puts forward three novel ratio estimators for finite population variance. These estimators use a transformed auxiliary variable under simple random sampling without replacement. The suggested estimators are shown to outperform existing methods when there is a positive correlation between the survey variable and the auxiliary variable. In addition to theoretical comparisons of mean squared errors (MSEs), the paper includes an empirical analysis using real data sets. A simulation study substantiates that the newly proposed estimators consistently outperform competing estimators across various scenarios, such as different correlations and sample sizes. The proposed estimators are versatile, as they can be adapted to other sampling methodologies. This includes applications in stratified random sampling, non-response sampling, and adaptive cluster sampling, leading to the derivation of efficient versions of the estimators. The applicability of the proposed estimators extends to diverse fields such as environmental studies, agriculture, and economics, especially in situations where a positive correlation between the study and auxiliary variables exists. The heightened efficiency of these estimators can significantly enhance the accuracy of population variance estimates, with practical implications for decision-making in real-world applications. The proposed estimators can also contribute to the estimation of parameters other than the variance, such as the mean, median and coefficient of variation.

In conclusion, this paper significantly contributes to the field of survey sampling by introducing novel estimators for finite population variance. The adaptability of these estimators to various sampling schemes and their practical implications underscores their potential impact on enhancing accuracy in real-world decision-making.

Methodology

Consider a finite population \(\Omega = \left\{ {\left( {y_{i} ,x_{i} } \right)} \right\},\;i = 1,2, \ldots ,N,\) of size N. Suppose a random sample of size n is drawn from the population under simple random sampling without replacement (SRSWOR). Let \(\left( {y_{i} ,x_{i} } \right)\) be the values of the main study variable and the auxiliary variable for the ith unit, and let \(z_{i} = t\left( {x_{i} } \right)\) be the transformed auxiliary variable observed on the sample, so that the sample consists of the pairs \(\left( {y_{i} ,z_{i} } \right)\). The auxiliary variable \(\left( x \right)\) is assumed to be positively correlated with the main study variable \(\left( y \right)\). Note that the correlation between \(\left( {y_{i} ,z_{i} } \right)\) is the same as that between \(\left( {y_{i} ,x_{i} } \right)\). Let \(\overline{y} = \frac{1}{n}\sum\limits_{i = 1}^{n} {y_{i} }\), \(\overline{x} = \frac{1}{n}\sum\limits_{i = 1}^{n} {x_{i} }\) and \(\overline{z} = \frac{1}{n}\sum\limits_{i = 1}^{n} {z_{i} }\) denote the sample means of the study variable, the auxiliary variable and the transformed auxiliary variable, respectively.

Similarly, let \(\overline{Y} = \frac{1}{N}\sum\limits_{i = 1}^{N} {y_{i} }\), \(\overline{X} = \frac{1}{N}\sum\limits_{i = 1}^{N} {x_{i} }\) and \(\overline{Z} = \frac{1}{N}\sum\limits_{i = 1}^{N} {z_{i} }\) be the population means of the variates \(\left( y \right)\), \(\left( x \right)\) and \(\left( z \right)\), respectively.
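The sampling setup above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `srswor_summary`, the synthetic population, and in particular the linear form `z = slope * x + shift` for the transformation \(t(x)\) are assumptions made only for this example. Under that assumed positive-slope linear transformation, the sample correlation of \((y, z)\) coincides with that of \((y, x)\), which is the property noted in the text.

```python
import numpy as np

def srswor_summary(y, x, n, rng, slope=1.0, shift=0.0):
    """Draw one SRSWOR sample of size n and summarise it.

    z = slope * x + shift is a hypothetical linear choice for t(x);
    the paper does not fix the transformation at this point.
    """
    idx = rng.choice(len(y), size=n, replace=False)  # sampling without replacement
    ys, xs = y[idx], x[idx]
    zs = slope * xs + shift
    return {
        "y_bar": ys.mean(), "x_bar": xs.mean(), "z_bar": zs.mean(),
        # ddof=1 gives the usual (n - 1)-divisor sample variances
        "s2_y": ys.var(ddof=1), "s2_x": xs.var(ddof=1), "s2_z": zs.var(ddof=1),
        "r_yx": np.corrcoef(ys, xs)[0, 1],
        "r_yz": np.corrcoef(ys, zs)[0, 1],
    }

# Synthetic population with a positively correlated auxiliary variable (illustrative only)
rng = np.random.default_rng(0)
x = rng.normal(50.0, 10.0, size=1000)
y = 2.0 * x + rng.normal(0.0, 9.0, size=1000)
out = srswor_summary(y, x, n=50, rng=rng, slope=3.0, shift=-4.0)
```

With a positive slope the two correlations in `out` agree up to floating-point error, while the sample variance of z is the squared slope times the sample variance of x.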

Let us define the random error due to sampling by \(\varepsilon_{0} = \frac{{s_{y}^{2} - S_{y}^{2} }}{{S_{y}^{2} }}\) and \(\varepsilon_{1} = \frac{{s_{x}^{2} - S_{x}^{2} }}{{S_{x}^{2} }}\), such that

where r and s are non-negative integers, \(\mu_{rs}\) are the bivariate moments, with \(\mu_{20}\) and \(\mu_{02}\) the second-order moments, and \(\phi_{rs}\) is the moment ratio.

where \(C_{y}^{2}\) and \(C_{x}^{2}\) are the squared coefficients of variation of the survey variable Y and the auxiliary variable X, respectively, \(\rho_{yx}\) is the correlation coefficient between the main study variable Y and the auxiliary variable X, and \(\beta_{(1)x}\) and \(\beta_{(2)x}\) are the coefficients of skewness and kurtosis of the auxiliary variable, respectively.
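The displays that belong after "such that" are not reproduced in this version. A hedged reconstruction is sketched here. The first line follows from the unbiasedness of \(s_{y}^{2}\) and \(s_{x}^{2}\) under SRSWOR, and the second moment of \(\varepsilon_{0}\) follows from Eq. (4), since \(E\left( {\varepsilon_{0}^{2} } \right) = Var\left( {s_{y}^{2} } \right)/S_{y}^{4}\); the remaining two entries are the analogous reading of the \(V_{rs}\) notation. The expression for \(\phi_{rs}\) is the conventional moment-ratio definition and is an assumption here, as is the \(N - 1\) divisor in \(\mu_{rs}\). The exact expression of \(V_{rs}\) in terms of \(\phi_{rs}\) is not recoverable from the text and is left out.

$$ E\left( {\varepsilon_{0} } \right) = E\left( {\varepsilon_{1} } \right) = 0,\quad E\left( {\varepsilon_{0}^{2} } \right) = V_{40} ,\quad E\left( {\varepsilon_{1}^{2} } \right) = V_{04} ,\quad E\left( {\varepsilon_{0} \varepsilon_{1} } \right) = V_{22} , $$

$$ \phi_{rs} = \frac{{\mu_{rs} }}{{\mu_{20}^{r/2} \mu_{02}^{s/2} }},\quad \mu_{rs} = \frac{1}{N - 1}\sum\limits_{i = 1}^{N} {\left( {y_{i} - \overline{Y}} \right)^{r} \left( {x_{i} - \overline{X}} \right)^{s} } . $$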

Some existing estimators of the population variance from the literature are given below.

1. The usual classical estimator of the population variance is given by

$$ \tau_{1} = s_{y}^{2} = \frac{1}{n - 1}\sum\limits_{i = 1}^{n} {\left( {y_{i} - \overline{y}} \right)^{2} } , $$(3)

where \(\tau_{1}\) is an unbiased estimator. Its variance is

$$ Var\left( {\tau_{1} } \right) = S_{y}^{4} V_{40} . $$(4)

2. Ref. 2 developed the following ratio estimator of the population variance:

$$ \tau_{2} = s_{y}^{2} \left( {\frac{{S_{x}^{2} }}{{s_{x}^{2} }}} \right). $$(5)

The MSE of \(\tau_{2}\) is as below.

3. Ref. 22 provided the following estimator of the population variance:

$$ \tau_{3} = s_{y}^{2} \exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right). $$(7)

The MSE of \(\tau_{3}\) is given by

4. The linear regression estimator of the population variance developed by ref. 2 is given by

$$ \tau_{4} = s_{y}^{2} + b\left( {S_{x}^{2} - s_{x}^{2} } \right), $$(9)

where \(b = \frac{{s_{y}^{2} V_{22} }}{{s_{x}^{2} V_{04} }}\) represents the sample regression coefficient.

The variance of \(\tau_{4}\) is as below

5. The transformed estimator of ref. 23 is as follows:

$$ \tau_{5} = \left\{ {k_{1} s_{y}^{2} + k_{2} \left( {S_{x}^{2} - s_{x}^{2} } \right)} \right\}\left\{ {\theta \left( {\frac{{aS_{x}^{2} + b}}{{as_{x}^{2} + b}}} \right) + \left( {1 - \theta } \right)\exp \left( {\frac{{a\left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{a\left( {S_{x}^{2} + s_{x}^{2} } \right) + 2b}}} \right)} \right\}, $$(11)

where \(k_{1}\) and \(k_{2}\) are optimization constants, \(\theta\) is a generalization constant taking values between 0 and 1, and a and b are some functions of the auxiliary variable. The optimum values of \(k_{1}\) and \(k_{2}\) are

$$ k_{{1\left( {opt} \right)}} = \left( {\frac{{1 - \tfrac{1}{8}q^{2} \left( {1 + 3\theta + 4\theta^{2} } \right)V_{04} }}{{1 - \tfrac{1}{4}q^{2} \theta \left( {1 + 3\theta } \right)V_{04}^{2} + V_{40} \left( {1 - \frac{{V_{22}^{2} }}{{V_{04} V_{40} }}} \right)}}} \right) $$

and

$$ k_{{2\left( {opt} \right)}} = \frac{{S_{y}^{2} }}{{S_{x}^{2} }}\left\{ {1 + q\left( {1 + \theta } \right) + k_{{1\left( {opt} \right)}} \left( {\frac{{V_{22} }}{{V_{04} }} - q\left( {1 + \theta } \right)} \right)} \right\}. $$

The minimum MSE at \(k_{{1\left( {opt} \right)}}\) and \(k_{{2\left( {opt} \right)}}\) is given by

where \(q = \frac{{aS_{x}^{2} }}{{aS_{x}^{2} + b}}.\)

The MSE of \(\tau_{5}\) is minimum at \(\left( {\theta ,a,b} \right) = \left( {1,1,0} \right)\).

6. Ref. 7 introduced the following difference-cum-exponential estimator of the population variance:

$$ \tau_{6} = \left[ {c_{11} s_{y}^{2} + c_{22} \left( {S_{x}^{2} - s_{x}^{2} } \right)} \right]\exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right), $$(13)

where \(c_{11}\) and \(c_{22}\) are optimization constants. The optimum MSE of \(\tau_{6}\) is given by

$$ MSE\left( {\tau_{6} } \right) = MSE\left( {\tau_{4} } \right) - \frac{{S_{y}^{4} \left[ {\left( {V_{40} - 1} \right) + 8V_{04} \left( {1 - \frac{{V_{22}^{2} }}{{V_{40} V_{04} }}} \right)} \right]}}{{64\left\{ {1 + \left\{ {V_{40} - \frac{{V_{22}^{2} }}{{V_{04} }}} \right\}} \right\}}}. $$(14)

7. Ref. 24 developed the following ratio estimator of the population variance:

$$ \tau_{7} = s_{y}^{2} \left[ {k_{11} \left( {\frac{{S_{x}^{2} }}{{s_{x}^{2} }}} \right) + k_{12} \left( {\frac{{s_{x}^{2} }}{{S_{x}^{2} }}} \right)} \right]\exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right). $$(15)

The optimum values of \(k_{11}\) and \(k_{12}\) are given by

where

The minimum MSE at \(k_{{11\left( {opt} \right)}} \,\,and\,\,k_{{12\left( {opt} \right)}}\) is given by

8. Ref. 25 advocated the following difference-cum-exponential type estimators of the population variance:

$$ \tau_{8} = \left[ {\frac{{s_{y}^{2} }}{2}\left\{ {\exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right) + \exp \left( {\frac{{s_{x}^{2} - S_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right)} \right\} + k_{1} \left( {S_{x}^{2} - s_{x}^{2} } \right) + k_{2} s_{y}^{2} } \right]\exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right), $$(17)

$$ \tau_{9} = \left[ {s_{y}^{2} \left\{ {\alpha \exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right) + \left( {1 - \alpha } \right)\exp \left( {\frac{{s_{x}^{2} - S_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right)} \right\} + k_{11} \left( {S_{x}^{2} - s_{x}^{2} } \right) + k_{22} s_{y}^{2} } \right]\left\{ {\delta \exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right) + \left( {1 - \delta } \right)\exp \left( {\frac{{s_{x}^{2} - S_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right)} \right\}, $$(18)

where \(k_{1}\) and \(k_{2}\) are optimization constants that minimize the MSE of \(\tau_{8}\), which is given by

$$ MSE\left( {\tau_{8} } \right) \cong MSE\left( {\tau_{4} } \right) - \frac{{S_{y}^{4} \left\{ {\left( {V_{40} - \frac{{V_{22}^{2} }}{{V_{04} }}} \right) + \frac{1}{4}V_{04}^{2} } \right\}}}{{\left\{ {1 + \left( {V_{40} - \frac{{V_{22}^{2} }}{{V_{04} }}} \right)} \right\}}}. $$(19)

$$ MSE\left( {\tau_{9} } \right) \cong S_{y}^{4} \left[ {\left\{ {V_{40} + \Omega \left( {\Omega V_{04} + 2V_{22} } \right)} \right\} - \frac{{D_{1} D_{5}^{2} + D_{2} D_{4}^{2} - 2D_{3} D_{4} D_{5} }}{{D_{1} D_{2} - D_{3}^{2} }}} \right], $$(20)

where

$$ \begin{gathered} D_{1} = R^{2} V_{04} , \hfill \\ D_{2} = 1 + \left\{ {V_{04} + 2V_{22} \left( {1 - 2\omega } \right) + \omega^{2} V_{04} } \right\}, \hfill \\ D_{3} = R\left[ {V_{22} + \left( {1 - 2\omega } \right)V_{04} } \right], \hfill \\ D_{4} = R\left[ {V_{22} + \Omega V_{04} } \right] \hfill \\ \end{gathered} $$

and

$$ D_{5} = \left[ {V_{40} + \left\{ {\left\{ {2\Omega + \frac{1 - 2\omega }{2}} \right\}V_{22} + \left\{ {\alpha \omega + \frac{1 - 2\omega }{2}} \right\}V_{04} } \right\}} \right]. $$

9. Ref. 20 suggested a general type of estimator of the population variance given by

$$ \tau_{{\left( {a,b} \right)}} = \left[ {\left( {\hat{t}_{{\left( {a,b} \right)}} + k\left( {S_{x}^{2} - s_{x}^{2} } \right)} \right)\exp \left( {\frac{{w_{1} \left( {\overline{X} - \overline{x}} \right)}}{{\overline{X} + \left( {\omega_{1} - 1} \right)\overline{x}}} + \frac{{w_{2} \left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{S_{x}^{2} + \left( {\omega_{2} - 1} \right)s_{x}^{2} }}} \right)} \right]. $$(21)

The particular case of the estimator \(\tau_{{\left( {a,b} \right)}}\) for estimating the variance is obtained by putting a = 0, b = 2, \(w_{1} = 0\), \(w_{2} = 1\) and \(\omega_{2} = 2\), as follows:

with MSE given by

where

In the case of the variance estimator, the MSE of \(\tau\) reduces to the following particular case:
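As an informal illustration of how the simpler literature estimators in (3), (5) and (7) behave, the following Python sketch estimates their empirical MSEs by repeated SRSWOR sampling. It is a sketch under stated assumptions, not part of the original study: the synthetic normal population, the sample size, the number of replications and all function names (`tau1`, `tau2`, `tau3`, `empirical_mse`) are hypothetical choices made for this example.

```python
import numpy as np

def tau1(s2y, s2x, S2x):
    """Classical estimator, Eq. (3): the sample variance itself."""
    return s2y

def tau2(s2y, s2x, S2x):
    """Ratio estimator, Eq. (5)."""
    return s2y * S2x / s2x

def tau3(s2y, s2x, S2x):
    """Exponential ratio-type estimator, Eq. (7)."""
    return s2y * np.exp((S2x - s2x) / (S2x + s2x))

def empirical_mse(y, x, n, reps, rng):
    """Average squared error of each estimator over repeated SRSWOR samples."""
    S2y, S2x = y.var(ddof=1), x.var(ddof=1)  # population variances (N - 1 divisor)
    estimators = {"tau1": tau1, "tau2": tau2, "tau3": tau3}
    sq_err = {name: 0.0 for name in estimators}
    for _ in range(reps):
        idx = rng.choice(len(y), size=n, replace=False)
        s2y, s2x = y[idx].var(ddof=1), x[idx].var(ddof=1)
        for name, f in estimators.items():
            sq_err[name] += (f(s2y, s2x, S2x) - S2y) ** 2
    return {name: total / reps for name, total in sq_err.items()}

# Hypothetical population: y strongly and positively correlated with x
rng = np.random.default_rng(1)
x = rng.normal(50.0, 10.0, size=5000)
y = 2.0 * x + rng.normal(0.0, 9.0, size=5000)
mse = empirical_mse(y, x, n=100, reps=1000, rng=rng)
```

For a population like this one, with a correlation of roughly 0.9, the ratio-type estimators would be expected to show a smaller empirical MSE than the classical estimator; with a weak correlation the ordering can reverse.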

Proposed estimators

The first proposed estimator

Motivated by ref. 24 and using a transformed auxiliary variable, the following class of transformed ratio-product type exponential estimators is suggested:

or

where \(c_{1}\) and \(c_{2}\) are optimization constants and \(\gamma_{1}\) and \(\gamma_{2}\) are suitable constants or some functions of the auxiliary variable.

The second proposed estimator

Motivated by ref. 2, we can write the proposed estimator as a linear combination of the usual ratio and exponential estimators as follows:

or

where \(\psi_{1}\) and \(\psi_{2}\) are optimization constants whose values are to be obtained so that the MSE of \(\tau_{P2}\) is minimum.

The third proposed estimator

Applying the transformation to the auxiliary variable in (26), we can write the third proposed estimator as follows:

or

where \(\psi_{3}\) and \(\psi_{4}\) are optimization constants whose values are to be determined so that the MSE of \(\tau_{P3}\) is minimum. Note that for \(\gamma_{1} = 1\) and \(\gamma_{2} = 0\), the third proposed estimator \(\tau_{P3}\) given by (27) becomes equivalent to the second proposed estimator \(\tau_{P2}\) given by (26).

Similarly, the first proposed estimator \(\tau_{P1}\) given by (25) becomes equivalent to \(\tau_{7}\), as suggested by Muneer et al. (ref. 9) and given by (15).
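The displays for the three proposed estimators are not reproduced in this version. Their general forms can, however, be inferred from the special cases \(\tau_{P1,k}\) and \(\tau_{P3,k}\) listed later in this section and from the statement that \(\tau_{P3}\) reduces to \(\tau_{P2}\) at \(\gamma_{1} = 1\), \(\gamma_{2} = 0\). The following is a hedged reconstruction on that basis, not a quotation of the original equations:

$$ \tau_{P1} = s_{y}^{2} \left[ {c_{1} \left( {\frac{{\gamma_{1} S_{x}^{2} - \gamma_{2} }}{{\gamma_{1} s_{x}^{2} - \gamma_{2} }}} \right) + c_{2} \left( {\frac{{\gamma_{1} s_{x}^{2} - \gamma_{2} }}{{\gamma_{1} S_{x}^{2} - \gamma_{2} }}} \right)} \right]\exp \left( {\frac{{\gamma_{1} \left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{\gamma_{1} \left( {S_{x}^{2} + s_{x}^{2} } \right) - 2\gamma_{2} }}} \right), $$

$$ \tau_{P2} = s_{y}^{2} \left\{ {\psi_{1} \left( {\frac{{S_{x}^{2} }}{{s_{x}^{2} }}} \right) + \psi_{2} \exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} }}} \right)} \right\}, $$

$$ \tau_{P3} = s_{y}^{2} \left\{ {\psi_{3} \left( {\frac{{\gamma_{1} S_{x}^{2} - \gamma_{2} }}{{\gamma_{1} s_{x}^{2} - \gamma_{2} }}} \right) + \psi_{4} \exp \left( {\frac{{\gamma_{1} \left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{\gamma_{1} \left( {S_{x}^{2} + s_{x}^{2} } \right) - 2\gamma_{2} }}} \right)} \right\}. $$

Setting \(\gamma_{1} = 1\) and \(\gamma_{2} = 0\) in the first expression gives the form of \(\tau_{7}\) in (15) with \(c_{1}, c_{2}\) in place of \(k_{11}, k_{12}\), and in the third expression gives \(\tau_{P2}\), which matches the equivalences stated above.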

Theoretical properties of the proposed estimators

This section derives the theoretical properties of the new estimators using the notation given in (1) and (2). Rewriting (25), (26) and (27), respectively, in terms of the error terms gives

and

where \(\pi_{k} = \frac{{\gamma_{1} S_{x}^{2} }}{{\gamma_{1} S_{x}^{2} - \gamma_{2} }}\), or

and

Taking expectations of both sides of (28), (29) and (30), respectively, and simplifying, we get

Squaring both sides of (31), (32) and (33), respectively, and taking expectations gives the MSEs of \(\tau_{P1}\), \(\tau_{P2}\) and \(\tau_{P3}\) as follows:

or

or

and

The optimum values of \(c_{1}\) and \(c_{2}\) are obtained by differentiating (37) with respect to \(c_{1}\) and \(c_{2}\), respectively, and equating to zero, as follows:

where

Putting the optimum values of \(c_{1}\) and \(c_{2}\) in (37), we get

where

Similarly, the optimum values of \(\psi_{1} ,\psi_{2} ,\psi_{3}\) and \(\psi_{4}\) are obtained by differentiating the MSEs of \(\tau_{P2}\) and \(\tau_{P3}\) with respect to \(\psi_{1} ,\psi_{2} ,\psi_{3}\) and \(\psi_{4}\) and equating to zero. Hence, after simplification, we obtain

and

where

The MSE is given by

Special Cases of \(\tau_{P1}^{{}} \,\,and\,\,\tau_{P3}^{{}}\) in response to the transformation introduced

1. For k = 1, with \(\gamma_{1} = 1\) and \(\gamma_{2} = \rho_{yx}\), \(\tau_{P1}\) and \(\tau_{P3}\) take the following form:

$$ \tau_{P1,1} = s_{y}^{2} \left[ {c_{1} \left( {\frac{{S_{x}^{2} - \rho_{yx} }}{{s_{x}^{2} - \rho_{yx} }}} \right) + c_{2} \left( {\frac{{s_{x}^{2} - \rho_{yx} }}{{S_{x}^{2} - \rho_{yx} }}} \right)} \right]\exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} - 2\rho_{yx} }}} \right), $$

$$ \tau_{P3,1} = s_{y}^{2} \left\{ {\psi_{3} \left( {\frac{{S_{x}^{2} - \rho_{yx} }}{{s_{x}^{2} - \rho_{yx} }}} \right) + \psi_{4} \exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} - 2\rho_{yx} }}} \right)} \right\}. $$

The bias and MSEs are given by

and

where \(V_{04,1} = \pi_{1}^{2} V_{04}\), \(V_{22,1} = \pi_{1} V_{22}\) and \(\pi_{1} = \frac{{S_{x}^{2} }}{{S_{x}^{2} - \rho_{yx} }}.\)

2. For k = 2, with \(\gamma_{1} = 1\) and \(\gamma_{2} = C_{x}\), \(\tau_{P1}\) and \(\tau_{P3}\) take the following form:

$$ \tau_{P1,2} = s_{y}^{2} \left[ {c_{1} \left( {\frac{{S_{x}^{2} - C_{x} }}{{s_{x}^{2} - C_{x} }}} \right) + c_{2} \left( {\frac{{s_{x}^{2} - C_{x} }}{{S_{x}^{2} - C_{x} }}} \right)} \right]\exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} - 2C_{x} }}} \right), $$

$$ \tau_{P3,2} = s_{y}^{2} \left\{ {\psi_{3} \left( {\frac{{S_{x}^{2} - C_{x} }}{{s_{x}^{2} - C_{x} }}} \right) + \psi_{4} \exp \left( {\frac{{S_{x}^{2} - s_{x}^{2} }}{{S_{x}^{2} + s_{x}^{2} - 2C_{x} }}} \right)} \right\}. $$

The bias and MSEs are given by

and

where \(V_{04,2} = \pi_{2}^{2} V_{04}\), \(V_{22,2} = \pi_{2} V_{22}\) and \(\pi_{2} = \frac{{S_{x}^{2} }}{{S_{x}^{2} - C_{x} }}.\)

3. For k = 3, with \(\gamma_{1} = \rho_{yx}\) and \(\gamma_{2} = C_{x}\), \(\tau_{P1}\) and \(\tau_{P3}\) take the following form:

$$ \tau_{P1,3} = s_{y}^{2} \left[ {c_{1} \left( {\frac{{\rho_{yx} S_{x}^{2} - C_{x} }}{{\rho_{yx} s_{x}^{2} - C_{x} }}} \right) + c_{2} \left( {\frac{{\rho_{yx} s_{x}^{2} - C_{x} }}{{\rho_{yx} S_{x}^{2} - C_{x} }}} \right)} \right]\exp \left( {\frac{{\rho_{yx} \left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{\rho_{yx} \left( {S_{x}^{2} + s_{x}^{2} } \right) - 2C_{x} }}} \right), $$

$$ \tau_{P3,3} = s_{y}^{2} \left\{ {\psi_{3} \left( {\frac{{\rho_{yx} S_{x}^{2} - C_{x} }}{{\rho_{yx} s_{x}^{2} - C_{x} }}} \right) + \psi_{4} \exp \left( {\frac{{\rho_{yx} \left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{\rho_{yx} \left( {S_{x}^{2} + s_{x}^{2} } \right) - 2C_{x} }}} \right)} \right\}. $$

The bias and MSEs are given by

and

where \(V_{04,3} = \pi_{3}^{2} V_{04}\), \(V_{22,3} = \pi_{3} V_{22}\) and \(\pi_{3} = \frac{{\rho_{yx} S_{x}^{2} }}{{\rho_{yx} S_{x}^{2} - C_{x} }}.\)

4. For k = 4, with \(\gamma_{1} = C_{x}\) and \(\gamma_{2} = \rho_{yx}\), the estimators \(\tau_{P1}\) and \(\tau_{P3}\) take the following form:

$$ \tau_{P1,4} = s_{y}^{2} \left[ {c_{1} \left( {\frac{{C_{x} S_{x}^{2} - \rho_{yx} }}{{C_{x} s_{x}^{2} - \rho_{yx} }}} \right) + c_{2} \left( {\frac{{C_{x} s_{x}^{2} - \rho_{yx} }}{{C_{x} S_{x}^{2} - \rho_{yx} }}} \right)} \right]\exp \left( {\frac{{C_{x} \left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{C_{x} \left( {S_{x}^{2} + s_{x}^{2} } \right) - 2\rho_{yx} }}} \right), $$

$$ \tau_{P3,4} = s_{y}^{2} \left\{ {\psi_{3} \left( {\frac{{C_{x} S_{x}^{2} - \rho_{yx} }}{{C_{x} s_{x}^{2} - \rho_{yx} }}} \right) + \psi_{4} \exp \left( {\frac{{C_{x} \left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{C_{x} \left( {S_{x}^{2} + s_{x}^{2} } \right) - 2\rho_{yx} }}} \right)} \right\}. $$

The bias and MSEs are obtained as

and

where \(V_{04,4} = \pi_{4}^{2} V_{04}\), \(V_{22,4} = \pi_{4} V_{22}\) and \(\pi_{4} = \frac{{C_{x} S_{x}^{2} }}{{C_{x} S_{x}^{2} - \rho_{yx} }}.\)

5. For k = 5, with \(\gamma_{1} = \overline{X}\) and \(\gamma_{2} = \rho_{yx}\), the estimators \(\tau_{P1}\) and \(\tau_{P3}\) take the following form:

$$ \tau_{P1,5} = s_{y}^{2} \left[ {c_{1} \left( {\frac{{\overline{X}S_{x}^{2} - \rho_{yx} }}{{\overline{X}s_{x}^{2} - \rho_{yx} }}} \right) + c_{2} \left( {\frac{{\overline{X}s_{x}^{2} - \rho_{yx} }}{{\overline{X}S_{x}^{2} - \rho_{yx} }}} \right)} \right]\exp \left( {\frac{{\overline{X}\left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{\overline{X}\left( {S_{x}^{2} + s_{x}^{2} } \right) - 2\rho_{yx} }}} \right), $$

$$ \tau_{P3,5} = s_{y}^{2} \left\{ {\psi_{3} \left( {\frac{{\overline{X}S_{x}^{2} - \rho_{yx} }}{{\overline{X}s_{x}^{2} - \rho_{yx} }}} \right) + \psi_{4} \exp \left( {\frac{{\overline{X}\left( {S_{x}^{2} - s_{x}^{2} } \right)}}{{\overline{X}\left( {S_{x}^{2} + s_{x}^{2} } \right) - 2\rho_{yx} }}} \right)} \right\}. $$

The bias and MSEs are given by

and

where \(V_{04,5} = \pi_{5}^{2} V_{04}\), \(V_{22,5} = \pi_{5} V_{22}\) and \(\pi_{5} = \frac{{\overline{X}S_{x}^{2} }}{{\overline{X}S_{x}^{2} - \rho_{yx} }}.\)

6. For k = 6, \(\gamma_{1} = \rho_{yx}\) and \(\gamma_{2} = 1\).

The bias and MSEs are given by

and

where \(V_{04,6} = \pi_{6}^{2} V_{04}\), \(V_{22,6} = \pi_{6} V_{22}\) and \(\pi_{6} = \frac{{\rho_{yx} S_{x}^{2} }}{{\rho_{yx} S_{x}^{2} - 1}}.\)
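The six special cases differ only in the pair \((\gamma_{1}, \gamma_{2})\), so they can be evaluated with one pair of functions. The Python sketch below assumes the general forms implied by the displayed special cases \(\tau_{P1,k}\) and \(\tau_{P3,k}\); the function names and the helper `special_case_constants` are hypothetical, and the optimization constants (`c1`, `c2`, `psi3`, `psi4`) are passed in by the caller because their optimum expressions are not reproduced in this section.

```python
import math

def pi_k(g1, g2, S2x):
    """pi_k = gamma1 * S_x^2 / (gamma1 * S_x^2 - gamma2)."""
    return g1 * S2x / (g1 * S2x - g2)

def _ratio(g1, g2, s2x, S2x):
    # Transformed ratio term (gamma1 * S_x^2 - gamma2) / (gamma1 * s_x^2 - gamma2)
    return (g1 * S2x - g2) / (g1 * s2x - g2)

def _expo(g1, g2, s2x, S2x):
    # Transformed exponential term shared by tau_P1 and tau_P3
    return math.exp(g1 * (S2x - s2x) / (g1 * (S2x + s2x) - 2.0 * g2))

def tau_p1(s2y, s2x, S2x, c1, c2, g1=1.0, g2=0.0):
    """First proposed estimator in the form implied by tau_{P1,k}."""
    r = _ratio(g1, g2, s2x, S2x)
    return s2y * (c1 * r + c2 / r) * _expo(g1, g2, s2x, S2x)

def tau_p3(s2y, s2x, S2x, psi3, psi4, g1=1.0, g2=0.0):
    """Third proposed estimator in the form implied by tau_{P3,k}."""
    return s2y * (psi3 * _ratio(g1, g2, s2x, S2x) + psi4 * _expo(g1, g2, s2x, S2x))

def special_case_constants(rho, cx, x_bar):
    """(gamma1, gamma2) for k = 1..6 as listed in the special cases."""
    return {1: (1.0, rho), 2: (1.0, cx), 3: (rho, cx),
            4: (cx, rho), 5: (x_bar, rho), 6: (rho, 1.0)}
```

As a usage example, `tau_p3(s2y, s2x, S2x, psi3, psi4, *special_case_constants(rho, cx, x_bar)[3])` evaluates the k = 3 case. With `g1=1`, `g2=0`, `psi3=1` and `psi4=0`, `tau_p3` reduces to the ratio estimator in (5), which is a convenient sanity check.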

Efficiency comparisons

This section aims to compare the MSEs of the newly developed estimators with the competing estimators discussed in the literature.

Condition 1. By using (37), (38), (39) and (4) we can write

Condition 2. By using (37), (38), (39) and (6) we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{2} } \right) < 0,\;\;{\text{i}}\, = \,{1},{2},{3}.\)

For i = 1 \(\frac{{S_{y}^{4} }}{{A_{3,k}^{{}} }}\left[ {\frac{1}{16}\left\{ \begin{subarray}{l} 16V_{22,k}^{{}} \left\{ {V_{22,k}^{{}} \left( {4V_{40}^{{}} - 3V_{04,k}^{{}} + 4} \right) + \left( {3V_{04,k}^{2} - 8V_{40}^{{}} V_{04,k}^{{}} } \right)} \right\} + \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,9V_{04,k}^{3} + 64V_{04,k}^{{}} \left( {V_{04,k}^{{}} - 1} \right)V_{40}^{{}} - 16A_{3,k}^{{}} \left( {V_{40} + V_{04} - 2V_{22} } \right) \end{subarray} \right\}} \right] < 0\).

For i = 2 \(\frac{{S_{y}^{4} }}{D}\left( \begin{gathered} \left( {V_{04}^{6} + \left( {25V_{40}^{2} - 10V_{22}^{2} } \right)V_{04}^{4} + V_{04}^{2} \left( {8V_{22}^{{}} - 40V_{22}^{{}} V_{40}^{2} } \right) + 16V_{22}^{2} V_{40}^{2} } \right) - \hfill \\ 16V_{04}^{2} V_{40}^{2} + 16V_{22}^{2} - D\left( {V_{40} + V_{04} - 2V_{22} } \right) \hfill \\ \end{gathered} \right) < 0\).

For i = 3 \(\frac{{S_{y}^{4} }}{{D_{k} }}\left( \begin{gathered} \left( \begin{gathered} V_{04,k}^{6} + \left( {25V_{40}^{2} - 10V_{22,k}^{2} } \right)V_{04,k}^{4} + V_{04,k}^{2} \left( {8V_{22,k}^{{}} - 40V_{22,k}^{{}} V_{40}^{2} } \right) \hfill \\ + 16V_{22,k}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, - 16V_{04,k}^{2} V_{40}^{2} + 16V_{22,k}^{2} - D_{k} \left( {V_{40} + V_{04} - 2V_{22} } \right) \hfill \\ \end{gathered} \right) < 0.\)

Condition 3. By using (37), (38), (39) and (8) we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{3} } \right) < 0\), i = 1,2,3.

For i = 1 \(\frac{{S_{y}^{4} }}{{A_{3,k}^{{}} }}\left[ {\frac{1}{16}\left\{ \begin{subarray}{l} 16V_{22,k}^{{}} \left\{ {V_{22,k}^{{}} \left( {4V_{40}^{{}} - 3V_{04,k}^{{}} + 4} \right) + \left( {3V_{04,k}^{2} - 8V_{40}^{{}} V_{04,k}^{{}} } \right)} \right\} + \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,9V_{04,k}^{3} + 64V_{04,k}^{{}} \left( {V_{04,k}^{{}} - 1} \right)V_{40}^{{}} - 16A_{3,k}^{{}} \left( {V_{40} + \frac{{V_{04} }}{4} - V_{22} } \right) \end{subarray} \right\}} \right] < 0\).

For i = 2 \(\frac{{S_{y}^{4} }}{D}\left( \begin{gathered} \left( {V_{04}^{6} + \left( {25V_{40}^{2} - 10V_{22}^{2} } \right)V_{04}^{4} + V_{04}^{2} \left( {8V_{22}^{{}} - 40V_{22}^{{}} V_{40}^{2} } \right) + 16V_{22}^{2} V_{40}^{2} } \right) \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, - 16V_{04}^{2} V_{40}^{2} + 16V_{22}^{2} - D\left( {V_{40} + \frac{{V_{04} }}{4} - V_{22} } \right) \hfill \\ \end{gathered} \right) < 0\).

For i = 3 \(\frac{{S_{y}^{4} }}{{D_{k} }}\left( \begin{gathered} \left( \begin{gathered} V_{04,k}^{6} + \left( {25V_{40}^{2} - 10V_{22,k}^{2} } \right)V_{04,k}^{4} + V_{04,k}^{2} \left( {8V_{22,k}^{{}} - 40V_{22,k}^{{}} V_{40}^{2} } \right) \hfill \\ + 16V_{22,k}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, - 16V_{04,k}^{2} V_{40}^{2} + 16V_{22,k}^{2} - D_{k} \left( {V_{40} + \frac{{V_{04} }}{4} - V_{22} } \right) \hfill \\ \end{gathered} \right) < 0.\)

Condition 4. By using (37), (38), (39) and (10) we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{4} } \right) < 0\), i = 1,2,3.

For i = 1 \(\frac{{S_{y}^{4} }}{{A_{3,k}^{{}} }}\left[ {\frac{1}{16}\left\{ \begin{subarray}{l} 16V_{22,k}^{{}} \left\{ {V_{22,k}^{{}} \left( {4V_{40}^{{}} - 3V_{04,k}^{{}} + 4} \right) + \left( {3V_{04,k}^{2} - 8V_{40}^{{}} V_{04,k}^{{}} } \right)} \right\} + \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,9V_{04,k}^{3} + 64V_{04,k}^{{}} \left( {V_{04,k}^{{}} - 1} \right)V_{40}^{{}} - 16A_{3,k}^{{}} V_{40} \left( {1 - \frac{{V_{22}^{2} }}{{V_{40} V_{04} }}} \right) \end{subarray} \right\}} \right] < 0\).

For i = 2 \(\frac{{S_{y}^{4} }}{D}\left( \begin{gathered} \left( {V_{04}^{6} + \left( {25V_{40}^{2} - 10V_{22}^{2} } \right)V_{04}^{4} + V_{04}^{2} \left( {8V_{22}^{{}} - 40V_{22}^{{}} V_{40}^{2} } \right) + 16V_{22}^{2} V_{40}^{2} } \right) \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, - 16V_{04}^{2} V_{40}^{2} + 16V_{22}^{2} - DV_{40} \left( {1 - \frac{{V_{22}^{2} }}{{V_{40} V_{04} }}} \right) \hfill \\ \end{gathered} \right) < 0\).

For i = 3 \(\frac{{S_{y}^{4} }}{{D_{k} }}\left( \begin{gathered} \left( \begin{gathered} V_{04,k}^{6} + \left( {25V_{40}^{2} - 10V_{22,k}^{2} } \right)V_{04,k}^{4} + V_{04,k}^{2} \left( {8V_{22,k}^{{}} - 40V_{22,k}^{{}} V_{40}^{2} } \right) \hfill \\ + 16V_{22,k}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, - 16V_{04,k}^{2} V_{40}^{2} + 16V_{22,k}^{2} - D_{k} V_{40} \left( {1 - \frac{{V_{22}^{2} }}{{V_{40} V_{04} }}} \right) \hfill \\ \end{gathered} \right) < 0.\)
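Reading Conditions 2 to 4 side by side, the term multiplied by \(A_{3,k}\), \(D\) or \(D_{k}\) in each inequality is the MSE of the competing estimator divided by \(S_{y}^{4}\). Under that reading, which is an inference from the conditions because the displays (6), (8) and (10) are not reproduced in this version, the first-order MSEs of the competing estimators would be

$$ MSE\left( {\tau_{2} } \right) \cong S_{y}^{4} \left( {V_{40} + V_{04} - 2V_{22} } \right), $$

$$ MSE\left( {\tau_{3} } \right) \cong S_{y}^{4} \left( {V_{40} + \frac{{V_{04} }}{4} - V_{22} } \right), $$

$$ MSE\left( {\tau_{4} } \right) \cong S_{y}^{4} V_{40} \left( {1 - \frac{{V_{22}^{2} }}{{V_{40} V_{04} }}} \right). $$

A short check on these expressions: \(MSE\left( {\tau_{2} } \right) - MSE\left( {\tau_{4} } \right) = S_{y}^{4} \left( {V_{04} - V_{22} } \right)^{2} /V_{04} \ge 0\) and \(MSE\left( {\tau_{3} } \right) - MSE\left( {\tau_{4} } \right) = S_{y}^{4} \left( {\tfrac{1}{2}V_{04} - V_{22} } \right)^{2} /V_{04} \ge 0\), so, to this order of approximation, the regression estimator is never less efficient than the ratio or exponential estimators, and Condition 4 is the most demanding of the three.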

Condition 5. By using (37), (38), (39) and (12) we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{5} } \right) < 0\), i = 1,2,3.

For i = 1 \(\frac{{S_{y}^{4} }}{{A_{3,k}^{{}} }}\left[ {\frac{1}{16}\left\{ \begin{gathered} 16V_{22,k}^{{}} \left\{ {V_{22,k}^{{}} \left( {4V_{40}^{{}} - 3V_{04,k}^{{}} + 4} \right) + \left( {3V_{04,k}^{2} - 8V_{40}^{{}} V_{04,k}^{{}} } \right)} \right\} + \hfill \\ 9V_{04,k}^{3} + 64V_{04,k}^{{}} \left( {V_{04,k}^{{}} - 1} \right)V_{40}^{{}} \hfill \\ \hfill \\ - 16A_{3,k}^{{}} \left( \begin{subarray}{l} \left\{ {1 - \frac{1}{4}q^{2} \left( {1 + \theta } \right)^{2} V_{04} } \right\} - \\ \frac{{\left\{ {1 - \tfrac{1}{8}q^{2} \left( {1 + 3\theta + 4\theta^{2} } \right)V_{04} } \right\}^{2} }}{{1 - \frac{1}{4}q^{2} \theta \left( {1 + 3\theta } \right)V_{04}^{2} + V_{40} \left( {1 - \frac{{V_{22}^{2} }}{{V_{04} V_{40} }}} \right)}} \end{subarray} \right) \hfill \\ \end{gathered} \right\}} \right] < 0\).

For i = 2 \(\frac{{S_{y}^{4} }}{D}\left( \begin{gathered} \left( \begin{gathered} V_{04}^{6} + \left( {25V_{40}^{2} - 10V_{22}^{2} } \right)V_{04}^{4} + \hfill \\ V_{04}^{2} \left( {8V_{22}^{{}} - 40V_{22}^{{}} V_{40}^{2} } \right) + 16V_{22}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04}^{2} V_{40}^{2} + 16V_{22}^{2} - \hfill \\ D\left( {\left\{ {1 - \frac{1}{4}q^{2} \left( {1 + \theta } \right)^{2} V_{04} } \right\} - \frac{{\left\{ {1 - \tfrac{1}{8}q^{2} \left( {1 + 3\theta + 4\theta^{2} } \right)V_{04} } \right\}^{2} }}{{1 - \frac{1}{4}q^{2} \theta \left( {1 + 3\theta } \right)V_{04}^{2} + V_{40} \left( {1 - \frac{{V_{22}^{2} }}{{V_{04} V_{40} }}} \right)}}} \right) \hfill \\ \end{gathered} \right) < 0\).

For i = 3 \(\frac{{S_{y}^{4} }}{{D_{k} }}\left( \begin{gathered} \left( \begin{gathered} V_{04,k}^{6} + \left( {25V_{40}^{2} - 10V_{22,k}^{2} } \right)V_{04,k}^{4} + \hfill \\ V_{04,k}^{2} \left( {8V_{22,k}^{{}} - 40V_{22,k}^{{}} V_{40}^{2} } \right) + 16V_{22,k}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04,k}^{2} V_{40}^{2} + 16V_{22,k}^{2} \hfill \\ - D_{k} \left\{ {1 - \frac{1}{4}q^{2} \left( {1 + \theta } \right)^{2} V_{04} } \right\} - \frac{{\left\{ {1 - \tfrac{1}{8}q^{2} \left( {1 + 3\theta + 4\theta^{2} } \right)V_{04} } \right\}^{2} }}{{1 - \frac{1}{4}q^{2} \theta \left( {1 + 3\theta } \right)V_{04}^{2} + V_{40} \left( {1 - \frac{{V_{22}^{2} }}{{V_{04} V_{40} }}} \right)}} \hfill \\ \end{gathered} \right) < 0.\)

Condition 6. By using (37), (38), (39) and (14) we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{6} } \right) < 0\), i = 1,2,3.

For i = 1. \(\begin{gathered} 16V_{22,k}^{{}} \left\{ {V_{22,k}^{{}} \left( {4V_{40}^{{}} - 3V_{04,k}^{{}} + 4} \right) + \left( {3V_{04,k}^{2} - 8V_{40}^{{}} V_{04,k}^{{}} } \right)} \right\} + \hfill \\ 9V_{04,k}^{3} + 64V_{04,k}^{{}} \left( {V_{04,k}^{{}} - 1} \right)V_{40}^{{}} - \hfill \\ A_{3,k}^{{}} \left( {16MSE\left( {\tau_{4} } \right) - \frac{{\left[ {\left( {V_{40}^{{}} - 1} \right) + 8V_{04}^{{}} \left( {1 - \frac{{V_{22}^{2} }}{{V_{40}^{{}} V_{04}^{{}} }}} \right)} \right]}}{{4\left\{ {1 + \left\{ {V_{40}^{{}} - \frac{{V_{22}^{2} }}{{V_{04}^{{}} }}} \right\}} \right\}}}} \right) < 0 \hfill \\ \end{gathered}\).

For i = 2.\(\begin{gathered} \left( \begin{gathered} V_{04}^{6} + \left( {25V_{40}^{2} - 10V_{22}^{2} } \right)V_{04}^{4} + \hfill \\ V_{04}^{2} \left( {8V_{22}^{{}} - 40V_{22}^{{}} V_{40}^{2} } \right) + 16V_{22}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04}^{2} V_{40}^{2} + 16V_{22}^{2} - \hfill \\ D\left( {MSE\left( {\tau_{4} } \right) - \frac{{\left[ {\left( {V_{40}^{{}} - 1} \right) + 8V_{04}^{{}} \left( {1 - \frac{{V_{22}^{2} }}{{V_{40}^{{}} V_{04}^{{}} }}} \right)} \right]}}{{64\left\{ {1 + \left\{ {V_{40}^{{}} - \frac{{V_{22}^{2} }}{{V_{04}^{{}} }}} \right\}} \right\}}}} \right) < 0 \hfill \\ \end{gathered}\).

For i = 3 \(\begin{gathered} \left( \begin{gathered} V_{04,k}^{6} + \left( {25V_{40}^{2} - 10V_{22,k}^{2} } \right)V_{04,k}^{4} + \hfill \\ V_{04,k}^{2} \left( {8V_{22,k}^{{}} - 40V_{22,k}^{{}} V_{40}^{2} } \right) + 16V_{22,k}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04,k}^{2} V_{40}^{2} + 16V_{22,k}^{2} \hfill \\ - D_{k} \left( {MSE\left( {\tau_{4} } \right) - \frac{{\left[ {\left( {V_{40}^{{}} - 1} \right) + 8V_{04}^{{}} \left( {1 - \frac{{V_{22}^{2} }}{{V_{40}^{{}} V_{04}^{{}} }}} \right)} \right]}}{{64\left\{ {1 + \left\{ {V_{40}^{{}} - \frac{{V_{22}^{2} }}{{V_{04}^{{}} }}} \right\}} \right\}}}} \right) < 0. \hfill \\ \end{gathered}\).

Condition 7. By using (37), (38), (39) and (16) we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{7} } \right) < 0\), i = 1,2,3.

For i = 1. \(\begin{gathered} 16V_{22,k}^{{}} \left\{ {V_{22,k}^{{}} \left( {4V_{40}^{{}} - 3V_{04,k}^{{}} + 4} \right) + \left( {3V_{04,k}^{2} - 8V_{40}^{{}} V_{04,k}^{{}} } \right)} \right\} + \hfill \\ 9V_{04,k}^{3} + 64V_{04,k}^{{}} \left( {V_{04,k}^{{}} - 1} \right)V_{40}^{{}} \hfill \\ - \frac{{A_{3,k}^{{}} }}{{A_{3}^{{}} }}\left[ {\left\{ \begin{gathered} 16V_{22}^{{}} \left\{ {V_{22}^{{}} \left( {4V_{40}^{{}} - 3V_{04}^{{}} + 4} \right) + \left( {3V_{04}^{2} - 8V_{40}^{{}} V_{04}^{{}} } \right)} \right\} \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, + 9V_{04}^{3} + 64V_{04}^{{}} \left( {V_{04}^{{}} - 1} \right)V_{40}^{{}} \hfill \\ \end{gathered} \right\}} \right] < 0 \hfill \\ \end{gathered}\).

For i = 2.\(\begin{gathered} \left( \begin{gathered} V_{04}^{6} + \left( {25V_{40}^{2} - 10V_{22}^{2} } \right)V_{04}^{4} + \hfill \\ V_{04}^{2} \left( {8V_{22}^{{}} - 40V_{22}^{{}} V_{40}^{2} } \right) + 16V_{22}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04}^{2} V_{40}^{2} + 16V_{22}^{2} - \hfill \\ \frac{D}{{16A_{3}^{{}} }}\left[ {\left\{ \begin{gathered} 16V_{22}^{{}} \left\{ {V_{22}^{{}} \left( {4V_{40}^{{}} - 3V_{04}^{{}} + 4} \right) + \left( {3V_{04}^{2} - 8V_{40}^{{}} V_{04}^{{}} } \right)} \right\} \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, + 9V_{04}^{3} + 64V_{04}^{{}} \left( {V_{04}^{{}} - 1} \right)V_{40}^{{}} \hfill \\ \end{gathered} \right\}} \right] < 0 \hfill \\ \end{gathered}\).

For i = 3. \(\begin{gathered} \left( \begin{gathered} V_{04,k}^{6} + \left( {25V_{40}^{2} - 10V_{22,k}^{2} } \right)V_{04,k}^{4} + \hfill \\ V_{04,k}^{2} \left( {8V_{22,k}^{{}} - 40V_{22,k}^{{}} V_{40}^{2} } \right) + 16V_{22,k}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04,k}^{2} V_{40}^{2} + 16V_{22,k}^{2} \hfill \\ - \frac{{D_{k} }}{{16A_{3}^{{}} }}\left[ {\left\{ \begin{gathered} 16V_{22}^{{}} \left\{ {V_{22}^{{}} \left( {4V_{40}^{{}} - 3V_{04}^{{}} + 4} \right) + \left( {3V_{04}^{2} - 8V_{40}^{{}} V_{04}^{{}} } \right)} \right\} \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, + 9V_{04}^{3} + 64V_{04}^{{}} \left( {V_{04}^{{}} - 1} \right)V_{40}^{{}} \hfill \\ \end{gathered} \right\}} \right] < 0. \hfill \\ \end{gathered}\).

Condition 8. By using (37), (38), (39) and (19), we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{8} } \right) < 0\), i = 1,2,3.

For i = 1. \( \begin{gathered} 16V_{{22,k}}^{{}} \left\{ {V_{{22,k}}^{{}} \left( {4V_{{40}}^{{}} - 3V_{{04,k}}^{{}} + 4} \right) + \left( {3V_{{04,k}}^{2} - 8V_{{40}}^{{}} V_{{04,k}}^{{}} } \right)} \right\} \hfill \\ + 9V_{{04,k}}^{3} + 64V_{{04,k}}^{{}} \left( {V_{{04,k}}^{{}} - 1} \right)V_{{40}}^{{}} \hfill \\ - A_{{3,k}}^{{}} \left\{ {\frac{{MSE\left( {\tau _{4} } \right)}}{{S_{y}^{4} }} - \frac{{\left\{ {\left( {V_{{40}}^{{}} - \frac{{V_{{22}} ^{2} }}{{V_{{04}}^{{}} }}} \right) + \frac{1}{4}V_{{04}} ^{2} } \right\}}}{{\left\{ {1 + \left( {V_{{40}}^{{}} - \frac{{V_{{22}} ^{2} }}{{V_{{04}}^{{}} }}} \right)} \right\}}}} \right\} < 0 \hfill \\ \end{gathered} \).

For i = 2.\( \begin{gathered} \left( \begin{gathered} V_{{04}}^{6} + \left( {25V_{{40}}^{2} - 10V_{{22}}^{2} } \right)V_{{04}}^{4} + \hfill \\ V_{{04}}^{2} \left( {8V_{{22}}^{{}} - 40V_{{22}}^{{}} V_{{40}}^{2} } \right) + 16V_{{22}}^{2} V_{{40}}^{2} \hfill \\ \end{gathered} \right) - 16V_{{04}}^{2} V_{{40}}^{2} + 16V_{{22}}^{2} \hfill \\ \,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\,\, - D\left\{ {\frac{{MSE\left( {\tau _{4} } \right)}}{{S_{y}^{4} }} - \frac{{\left\{ {\left( {V_{{40}}^{{}} - \frac{{V_{{22}} ^{2} }}{{V_{{04}}^{{}} }}} \right) + \frac{1}{4}V_{{04}} ^{2} } \right\}}}{{\left\{ {1 + \left( {V_{{40}}^{{}} - \frac{{V_{{22}} ^{2} }}{{V_{{04}}^{{}} }}} \right)} \right\}}}} \right\} < 0 \hfill \\ \end{gathered} \).

For i = 3 \( \begin{gathered} \left( \begin{gathered} V_{{04,k}}^{6} + \left( {25V_{{40}}^{2} - 10V_{{22,k}}^{2} } \right)V_{{04,k}}^{4} + \hfill \\ V_{{04,k}}^{2} \left( {8V_{{22,k}}^{{}} - 40V_{{22,k}}^{{}} V_{{40}}^{2} } \right) + 16V_{{22,k}}^{2} V_{{40}}^{2} \hfill \\ \end{gathered} \right) - 16V_{{04,k}}^{2} V_{{40}}^{2} + 16V_{{22,k}}^{2} \hfill \\ - D_{k} \left\{ {\frac{{MSE\left( {\tau _{4} } \right)}}{{S_{y}^{4} }} - \frac{{\left\{ {\left( {V_{{40}}^{{}} - \frac{{V_{{22}} ^{2} }}{{V_{{04}}^{{}} }}} \right) + \frac{1}{4}V_{{04}} ^{2} } \right\}}}{{\left\{ {1 + \left( {V_{{40}}^{{}} - \frac{{V_{{22}} ^{2} }}{{V_{{04}}^{{}} }}} \right)} \right\}}}} \right\} < 0. \hfill \\ \end{gathered} \).

Condition 9. By using (37), (38), (39) and (20), we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{9} } \right) < 0\), i = 1,2,3.

For i = 1 \(\frac{{S_{y}^{4} }}{{A_{3,k}^{{}} }}\left[ {\left\{ \begin{gathered} 16V_{22,k}^{{}} \left\{ {V_{22,k}^{{}} \left( {4V_{40}^{{}} - 3V_{04,k}^{{}} + 4} \right) + \left( {3V_{04,k}^{2} - 8V_{40}^{{}} V_{04,k}^{{}} } \right)} \right\} + \hfill \\ 9V_{04,k}^{3} + 64V_{04,k}^{{}} \left( {V_{04,k}^{{}} - 1} \right)V_{40}^{{}} \hfill \\ \hfill \\ - A_{3,k}^{{}} \left[ {\left\{ {V_{40}^{{}} + \Omega^{{}} \left( {\Omega V_{04}^{{}} + 2V_{22}^{{}} } \right)} \right\} - \frac{{D_{1}^{{}} D_{5}^{2} + D_{2}^{{}} D_{4}^{2} - 2D_{3}^{{}} D_{4}^{{}} D_{5}^{{}} }}{{D_{1}^{{}} D_{2}^{{}} - D_{3}^{2} }}} \right] \hfill \\ \end{gathered} \right\}} \right] < 0\).

For i = 2 \(\frac{{S_{y}^{4} }}{D}\left( \begin{gathered} \left( \begin{gathered} V_{04}^{6} + \left( {25V_{40}^{2} - 10V_{22}^{2} } \right)V_{04}^{4} + \hfill \\ V_{04}^{2} \left( {8V_{22}^{{}} - 40V_{22}^{{}} V_{40}^{2} } \right) + 16V_{22}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04}^{2} V_{40}^{2} + 16V_{22}^{2} \hfill \\ \,\, - D\left[ {\left\{ {V_{40}^{{}} + \Omega^{{}} \left( {\Omega V_{04}^{{}} + 2V_{22}^{{}} } \right)} \right\} - \frac{{D_{1}^{{}} D_{5}^{2} + D_{2}^{{}} D_{4}^{2} - 2D_{3}^{{}} D_{4}^{{}} D_{5}^{{}} }}{{D_{1}^{{}} D_{2}^{{}} - D_{3}^{2} }}} \right] \hfill \\ \end{gathered} \right) < 0\).

For i = 3 \(\frac{{S_{y}^{4} }}{{D_{k} }}\left( \begin{gathered} \left( \begin{gathered} V_{04,k}^{6} + \left( {25V_{40}^{2} - 10V_{22,k}^{2} } \right)V_{04,k}^{4} + \hfill \\ V_{04,k}^{2} \left( {8V_{22,k}^{{}} - 40V_{22,k}^{{}} V_{40}^{2} } \right) + 16V_{22,k}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04,k}^{2} V_{40}^{2} + 16V_{22,k}^{2} \hfill \\ - D_{k} \left[ {\left\{ {V_{40}^{{}} + \Omega^{{}} \left( {\Omega V_{04}^{{}} + 2V_{22}^{{}} } \right)} \right\} - \frac{{D_{1}^{{}} D_{5}^{2} + D_{2}^{{}} D_{4}^{2} - 2D_{3}^{{}} D_{4}^{{}} D_{5}^{{}} }}{{D_{1}^{{}} D_{2}^{{}} - D_{3}^{2} }}} \right] \hfill \\ \end{gathered} \right) < 0.\)

Condition 10. By using (37), (38), (39) and (24), we can write

\(MSE\left( {\tau_{Pi} } \right) - MSE\left( {\tau_{10} } \right) < 0\), i = 1,2,3.

For i = 1 \(\frac{{S_{y}^{4} }}{{A_{3,k}^{{}} }}\left[ {\left\{ \begin{gathered} 16V_{22,k}^{{}} \left\{ {V_{22,k}^{{}} \left( {4V_{40}^{{}} - 3V_{04,k}^{{}} + 4} \right) + \left( {3V_{04,k}^{2} - 8V_{40}^{{}} V_{04,k}^{{}} } \right)} \right\} + \hfill \\ 9V_{04,k}^{3} + 64V_{04,k}^{{}} \left( {V_{04,k}^{{}} - 1} \right)V_{40}^{{}} \hfill \\ \hfill \\ - A_{3,k}^{{}} \left[ {1 - \frac{{\left[ {\left\{ {f_{3} \left( {0,2} \right)} \right\}^{2} - 2f_{2} \left( {0,2} \right)V_{40} + \left( {V_{04} - 1} \right)\left\{ {f_{2} \left( {0,2} \right)} \right\}^{2} } \right]}}{{\left( {V_{04} - V_{40}^{2} - 1} \right)}}} \right] \hfill \\ \end{gathered} \right\}} \right] < 0\).

For i = 2 \(\frac{{S_{y}^{4} }}{D}\left( \begin{gathered} \left( \begin{gathered} V_{04}^{6} + \left( {25V_{40}^{2} - 10V_{22}^{2} } \right)V_{04}^{4} + \hfill \\ V_{04}^{2} \left( {8V_{22}^{{}} - 40V_{22}^{{}} V_{40}^{2} } \right) + 16V_{22}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04}^{2} V_{40}^{2} + 16V_{22}^{2} \hfill \\ \,\, - D\left[ {1 - \frac{{\left[ {\left\{ {f_{3} \left( {0,2} \right)} \right\}^{2} - 2f_{2} \left( {0,2} \right)V_{40} + \left( {V_{04} - 1} \right)\left\{ {f_{2} \left( {0,2} \right)} \right\}^{2} } \right]}}{{\left( {V_{04} - V_{40}^{2} - 1} \right)}}} \right] \hfill \\ \end{gathered} \right) < 0\).

For i = 3 \(\frac{{S_{y}^{4} }}{{D_{k} }}\left( \begin{gathered} \left( \begin{gathered} V_{04,k}^{6} + \left( {25V_{40}^{2} - 10V_{22,k}^{2} } \right)V_{04,k}^{4} + \hfill \\ V_{04,k}^{2} \left( {8V_{22,k}^{{}} - 40V_{22,k}^{{}} V_{40}^{2} } \right) + 16V_{22,k}^{2} V_{40}^{2} \hfill \\ \end{gathered} \right) - 16V_{04,k}^{2} V_{40}^{2} + 16V_{22,k}^{2} \hfill \\ - D_{k} \left[ {1 - \frac{{\left[ {\left\{ {f_{3} \left( {0,2} \right)} \right\}^{2} - 2f_{2} \left( {0,2} \right)V_{40} + \left( {V_{04} - 1} \right)\left\{ {f_{2} \left( {0,2} \right)} \right\}^{2} } \right]}}{{\left( {V_{04} - V_{40}^{2} - 1} \right)}}} \right] \hfill \\ \end{gathered} \right) < 0.\)

The conditions stated above hold for all types of real data in which the main study variable and the supplementary variable are positively correlated.

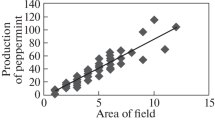

Empirical analysis

This section compares the performance of the proposed estimators with that of the competing estimators using data from real-life situations. Table 1 gives summary statistics for the data sets.

The percentage relative efficiency (PRE) of every estimator with respect to \(\tau_{1}\) is used as the performance index. The PRE is given by

\(PRE\left( {\tau_{*} ,\tau_{1} } \right) = \frac{{MSE\left( {\tau_{1} } \right)}}{{MSE\left( {\tau_{*} } \right)}} \times 100,\quad \tau_{*} = \tau_{i} ,\tau_{Pjk} ,\)

where \(i = 2,3, \ldots ,10\) indexes the existing estimators and \(j = 1,2,3\), \(k = 1,2, \ldots ,6\) index the proposed estimators.
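As a small illustration of the performance index, the sketch below computes a PRE from two mean squared errors, assuming the usual definition \(100 \times MSE(\tau_{1})/MSE(\tau)\). The function name `pre` and the numbers in the usage lines are hypothetical and are not taken from Table 2.

```python
def pre(mse_reference: float, mse_estimator: float) -> float:
    """Percentage relative efficiency of an estimator against a reference.

    Assumes the usual definition PRE = 100 * MSE(reference) / MSE(estimator);
    values above 100 mean the estimator has a smaller MSE than the reference.
    """
    if mse_estimator <= 0:
        raise ValueError("MSE of the estimator must be positive")
    return 100.0 * mse_reference / mse_estimator


# Hypothetical MSE values, for illustration only.
mse_tau1 = 4.0  # reference estimator tau_1
mse_new = 2.0   # some competing estimator
print(pre(mse_tau1, mse_new))
```

A PRE above 100 means the competing estimator has a smaller MSE than \(\tau_{1}\); a PRE of exactly 100 means the two are equally efficient.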

Table 2 shows that the newly transformed estimators perform better than the existing estimators for all the real data sets, and that the transformation yields a high gain in efficiency. The first proposed estimator, given by (25), is more efficient than its parent estimator suggested in ref. 24 and also outperforms all the competing estimators discussed in the literature. The second proposed estimator, given by (26), is more efficient than the first proposed estimator and all other competing estimators. Further, incorporating the transformed auxiliary variable in the second proposed estimator yields the third proposed estimators, given by (27), which are more efficient than all other estimators.

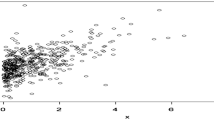

Simulation study

A simulation study is conducted to assess the performance of the suggested and existing estimators. Three populations of size 10,000 are generated with positive correlation between the main study and auxiliary variables. The correlation between the main study variable and the supplementary variable is high in the first population, moderate in the second, and low in the third.

Population 1: \(\mu = \left[ {\begin{array}{cc} {5.0} & {10.0} \\ \end{array} } \right]\), \(\Sigma = \left[ {\begin{array}{cc} {9.0} & {2.9} \\ {2.9} & {1.0} \\ \end{array} } \right]\), \(\rho = 0.9665\).

Population 2: \(\mu = \left[ {\begin{array}{cc} {5.0} & {10.0} \\ \end{array} } \right]\), \(\Sigma = \left[ {\begin{array}{cc} {10.0} & {3.0} \\ {3.0} & {1.5} \\ \end{array} } \right]\), \(\rho = 0.7559\).

Population 3: \(\mu = \left[ {\begin{array}{cc} {5.0} & {10.0} \\ \end{array} } \right]\), \(\Sigma = \left[ {\begin{array}{cc} {10.0} & {1.5} \\ {1.5} & {1.5} \\ \end{array} } \right]\), \(\rho = 0.3983\).
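The three populations can be generated along the following lines. This is a minimal sketch in standard-library Python rather than the R code used for the study; it assumes the first coordinate is the study variable \(y\) and the second the auxiliary variable \(x\), and the function names and the seed are hypothetical. The realized correlations depend on the random draw, so they will be close to, but not identical with, the values of \(\rho\) listed above.

```python
import math
import random


def bivariate_normal_population(mean, cov, size, seed=None):
    """Draw `size` pairs (y, x) from a bivariate normal distribution.

    Construction from independent standard normals z1, z2:
        y = mu_y + sd_y * z1
        x = mu_x + sd_x * (rho * z1 + sqrt(1 - rho^2) * z2)
    """
    rng = random.Random(seed)
    mu_y, mu_x = mean
    sd_y = math.sqrt(cov[0][0])
    sd_x = math.sqrt(cov[1][1])
    rho = cov[0][1] / (sd_y * sd_x)
    population = []
    for _ in range(size):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        y = mu_y + sd_y * z1
        x = mu_x + sd_x * (rho * z1 + math.sqrt(1.0 - rho**2) * z2)
        population.append((y, x))
    return population


def correlation(pairs):
    """Pearson correlation of a list of (y, x) pairs."""
    n = len(pairs)
    mean_y = sum(y for y, _ in pairs) / n
    mean_x = sum(x for _, x in pairs) / n
    syy = sum((y - mean_y) ** 2 for y, _ in pairs)
    sxx = sum((x - mean_x) ** 2 for _, x in pairs)
    syx = sum((y - mean_y) * (x - mean_x) for y, x in pairs)
    return syx / math.sqrt(syy * sxx)


MEAN = (5.0, 10.0)
COVARIANCES = {
    "Population 1": ((9.0, 2.9), (2.9, 1.0)),
    "Population 2": ((10.0, 3.0), (3.0, 1.5)),
    "Population 3": ((10.0, 1.5), (1.5, 1.5)),
}

# Seed chosen arbitrarily so that the sketch is reproducible.
populations = {
    name: bivariate_normal_population(MEAN, cov, size=10_000, seed=2024)
    for name, cov in COVARIANCES.items()
}
for name, pairs in populations.items():
    print(name, round(correlation(pairs), 4))
```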

Samples of sizes n = 50, 150 and 300 are considered for each population, drawn by simple random sampling without replacement (SRSWOR). The steps below summarize the whole simulation procedure, carried out in RStudio.

Step 1: Generate the population from a bivariate normal distribution with mean vector \(\mu\) and covariance matrix \(\Sigma\), e.g. \(\mu = \left( {5.0,\;10.0} \right)\) and \(\Sigma = \left[ {\begin{array}{cc} {9.0} & {2.9} \\ {2.9} & {1.0} \\ \end{array} } \right]\) for Population 1, and calculate all parameters of the auxiliary variable and the constants from the data.

Step 2: From each target population, draw samples of size \(n\) = 50, 150 and 300 using SRSWOR. Repeat the sampling 100,000 times and calculate the estimates in each iteration.

Step 3: Using the samples generated in Step 2, obtain the 100,000 values of \(\tau_{i} ,\;i = 1,2, \ldots ,10\), and \(\tau_{Pjk} ,\;j = 1,2,3\) and \(k = 1,2, \ldots ,6\), separately, using (4–20) and (23), (34) and (35), respectively.

Step 4: Store the estimates from each iteration in a matrix, calculate the PRE of each estimator by averaging over all iterations using formula (67), and report the results in Table 3.
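The four steps can be sketched as a short Monte Carlo loop. The code below is an illustration in standard-library Python, not the R code used for the study, and it rests on several assumptions: only the usual unbiased estimator \(s_{y}^{2}\) and the classical ratio estimator \(s_{y}^{2} S_{x}^{2} /s_{x}^{2}\) of Isaki (1983) are included as stand-ins, since the proposed estimators \(\tau_{Pjk}\) are not reproduced here; the PRE is taken as \(100 \times MSE(s_{y}^{2})/MSE(\text{ratio})\); the number of replications is cut to 2,000 to keep the run short; and all function names and the seed are hypothetical.

```python
import math
import random


def make_population(mean, cov, size, rng):
    """Step 1: bivariate normal (y, x) pairs from independent standard normals."""
    sd_y, sd_x = math.sqrt(cov[0][0]), math.sqrt(cov[1][1])
    rho = cov[0][1] / (sd_y * sd_x)
    pairs = []
    for _ in range(size):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        y = mean[0] + sd_y * z1
        x = mean[1] + sd_x * (rho * z1 + math.sqrt(1.0 - rho**2) * z2)
        pairs.append((y, x))
    return pairs


def variance(values):
    """Variance with divisor (number of values - 1)."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** 2 for v in values) / (n - 1)


def simulate_pre(population, n, reps, rng):
    """Monte Carlo PRE of the classical ratio estimator against s_y^2."""
    ys = [y for y, _ in population]
    xs = [x for _, x in population]
    var_y = variance(ys)  # target parameter S_y^2
    var_x = variance(xs)  # auxiliary parameter S_x^2, treated as known

    sq_err_usual = 0.0
    sq_err_ratio = 0.0
    for _ in range(reps):
        # Step 2: one SRSWOR sample of n units.
        idx = rng.sample(range(len(population)), n)
        s2_y = variance([ys[i] for i in idx])
        s2_x = variance([xs[i] for i in idx])
        # Step 3: evaluate each estimator on the sample.
        tau_usual = s2_y
        tau_ratio = s2_y * var_x / s2_x
        sq_err_usual += (tau_usual - var_y) ** 2
        sq_err_ratio += (tau_ratio - var_y) ** 2

    # Step 4: average the squared errors over iterations, then form the PRE.
    mse_usual = sq_err_usual / reps
    mse_ratio = sq_err_ratio / reps
    return 100.0 * mse_usual / mse_ratio


rng = random.Random(2024)  # arbitrary seed for reproducibility
population_1 = make_population((5.0, 10.0), ((9.0, 2.9), (2.9, 1.0)), 10_000, rng)
pre_ratio = simulate_pre(population_1, n=50, reps=2_000, rng=rng)
print(f"PRE of ratio estimator vs s_y^2 (Population 1, n=50): {pre_ratio:.1f}")
```

Running the same function with the other two covariance matrices and with n = 150 and 300 gives the remaining cells of a table laid out like Table 3, for these two stand-in estimators only.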

The simulation results indicate that the newly developed estimators are highly efficient compared with the conventional estimators in all situations. The estimators tend to be more efficient under high correlation (\(\rho = 0.9665\)) than under moderate (\(\rho = 0.7559\)) or low (\(\rho = 0.3983\)) correlation. Thus, the strength of the relationship between the study and supplementary variables plays a vital role in increasing efficiency. The newly developed estimator retains its efficiency in all three cases. Further, the transformation also plays a notable role in enhancing efficiency. As Table 3 shows, the first transformed proposed estimator is more efficient than its parent estimator \(\tau_{7}\) for different choices of \(\gamma_{1}\) and \(\gamma_{2}\). Similarly, the third proposed estimator is more efficient than both the conventional estimators and the second proposed estimator for different choices of \(\gamma_{1}\) and \(\gamma_{2}\).

Conclusion

The empirical and simulation findings highlight the remarkable efficiency of the newly developed estimators in comparison to conventional counterparts across diverse scenarios. Particularly noteworthy is the enhanced efficiency of these estimators in situations with higher correlation, emphasizing the pivotal role of the strength of the relationship between the study and supplementary variable. This aligns with the expectation that a stronger correlation contributes to increased estimator efficiency.

Furthermore, the robustness of the newly developed estimator is evident across all correlation levels—high, moderate, and low. This consistent performance underscores the versatility and reliability of the proposed estimators, making them applicable in a broad spectrum of scenarios.

The introduced transformation in the estimators emerges as a key contributor to enhanced efficiency. As depicted in Table 3, simulation results affirm that the first transformed proposed estimator consistently outperforms its parent estimator across various choices. Similarly, the third proposed estimator not only demonstrates efficiency over conventional estimators but also surpasses the second proposed estimator for different choices. This emphasizes the significant role played by transformations in elevating the overall performance of the estimators.

Additionally, the proposed estimators exhibit flexibility and effectiveness in various sampling designs, such as stratified random sampling, non-response sampling, and adaptive cluster sampling. The extension of these estimators to non-conventional sampling designs, including adaptive cluster sampling and stratified adaptive cluster sampling, is also under consideration for variance estimation. These estimators also leave room for potential improvements in estimating population parameters using two auxiliary variables.

In conclusion, the proposed estimators show remarkable efficiency in finite population variance estimation under simple random sampling without replacement. The encouraging findings suggest their applicability in diverse survey scenarios, and future research could further enhance their adaptability, extending their utility to more intricate sampling designs.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Sankhya, L. T. A general unbiased estimator for the variance of a finite population. Sankhya C 36, 23–32 (1974).

Isaki, C. T. Variance estimation using auxiliary information. J. Am. Stat. Assoc. 78, 117–123 (1983).

Al-Jararha, J. & Ahmed, M. S. The class of chain estimators for a finite population variance using double sampling. Int. J. Inf. Manage. Sci. 13, 13–18 (2002).

Shabbir, J. & Gupta, S. On improvement in variance estimation using auxiliary information. Commun. Stat. Theory Methods 36, 2177–2185 (2007).

Singh, R. & Malik, S. Improved estimation of population variance using information on auxiliary attribute in simple random sampling. Appl. Math. Comput. 235, 43–49 (2014).

Subramani, J. Generalized modified ratio type estimator for estimation of population variance. Sri Lankan J. Appl. Stat. 16, 69 (2015).

Yadav, S. K., Kadilar, C., Shabbir, J. & Gupta, S. Improved family of estimators of population variance in simple random sampling. J. Stat. Theory Pract. 9, 219–226 (2015).

Adichwal, N. K., Sharma, P. & Singh, R. Generalized class of estimators for population variance using information on two auxiliary variables. Int. J. Appl. Comput. Math. 3, 651–661 (2017).

Sanaullah, A., Asghar, A. & Hanif, M. General class of exponential estimator for estimating finite population variance. J. Reliab. Stat. Stud. 2017, 1–16 (2017).

Yadav, S. K., Misra, S., Mishra, S. S. & Khanal, S. P. Variance estimator using tri-mean and inter quartile range of auxiliary variable. Nepal. J. Stat. 1, 83–91 (2017).

Lone, H. A. & Tailor, R. Estimation of population variance in simple random sampling. J. Stat. Manag. Syst. 20, 17–38 (2017).

Singh, H. P., Pal, S. K. & Yadav, A. A study on the chain ratio-ratio-type exponential estimator for finite population variance. Commun. Stat. Theory Methods 47, 1442–1458 (2018).

Singh, G. N. & Khalid, M. Effective estimation strategy of population variance in two-phase successive sampling under random non-response. J. Stat. Theory Pract. 13, 4 (2018).

Shahzad, U., Hanif, M., Koyuncu, N. & Sanaullah, A. On the estimation of population variance using auxiliary attribute in absence and presence of non-response. Electron. J. Appl. Stat. Anal. 11, 608–621 (2018).

Yadav, S. K., Sharma, D. K. & Mishra, S. S. Searching efficient estimator of population variance using tri-mean and third quartile of auxiliary variable. Int. J. Business Data Anal. 1, 30–40 (2019).

Gulzar, M. A., Abid, M., Nazir, H. Z., Zahid, F. M. & Riaz, M. On enhanced estimation of population variance using unconventional measures of an auxiliary variable. J. Stat. Comput. Simul. 90, 2180–2197 (2020).

Harrison Oghenekevwe, E., Cecilia Njideka, E. & Chizoba Sylvia, O. Distribution effect on the efficiency of some classes of population variance estimators using information of an auxiliary variable under simple random sampling. SJAMS 8, 27 (2020).

Sharma, D. K., Yadav, S. K. & Sharma, H. Improvement in estimation of population variance utilising known auxiliary parameters for a decision-making model. Int. J Math. Model. Numer. Optim. 12, 15–28 (2022).

Niaz, I., Sanaullah, A., Saleem, I. & Shabbir, J. An improved efficient class of estimators for the population variance. Concurr. Comput. 34, e6620 (2022).

Kumar-Adichwal, N. et al. Estimation of general parameters using auxiliary information in simple random sampling without replacement. J. King Saud Univ. Sci. 34, 101754 (2022).

Zaman, T. & Bulut, H. An efficient family of robust-type estimators for the population variance in simple and stratified random sampling. Commun. Stat. Theory Methods 52, 2610–2624 (2023).

Singh, M. P. On the estimation of ratio and product of the population parameters. Sankhyā Indian J. Stat. Ser. B (1960-2002) 27, 321–328 (1965).

Yadav, S. K. & Kadilar, C. A two parameter variance estimator using auxiliary information. Appl. Math. Comput. 226, 117–122 (2014).

Muneer, S., Khalil, A., Shabbir, J. & Narjis, G. A new improved ratio-product type exponential estimator of finite population variance using auxiliary information. J. Stat. Comput. Simul. 88, 3179–3192 (2018).

Sanaullah, A., Niaz, I., Shabbir, J. & Ehsan, I. A class of hybrid type estimators for variance of a finite population in simple random sampling. Commun. Stat. Simul. Comput. 51, 5609–5619 (2022).

Murthy, M. N. Sampling theory and methods. Sampling Theory Methods 1967, 145 (1967).

Cochran, W. G. Sampling theory when the sampling-units are of unequal sizes. J. Am. Stat. Assoc. 37, 199–212 (1942).

Subramani, J. & Gnanasegaran, K. Variance estimation using quartiles and their functions of an auxiliary variable. Int. J. Stat. Appl. 2(5), 67–72 (2012).

Author information

Contributions

Hameed Ali: conceptualization, validation, investigation, data curation, methodology, writing, review & editing, formal analysis, visualization, original draft preparation. Syed Muhammad Asim: supervision, methodology, conceptualization, validation, review & editing, data curation. Muhammad Ijaz: supervision, validation, review & editing. Tolga Zaman: supervision, validation, review & editing. Soofia Iftikhar: supervision, validation, review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ali, H., Asim, S.M., Ijaz, M. et al. Improvement in variance estimation using transformed auxiliary variable under simple random sampling. Sci Rep 14, 8117 (2024). https://doi.org/10.1038/s41598-024-58841-x
