Abstract

In the context of gas processing and carbon sequestration, an adequate understanding of the solubility of acid gases in ionic liquids (ILs) under various thermodynamic circumstances is crucial. A poisonous, combustible, and acidic gas that can cause environmental damage is hydrogen sulfide (H2S). ILs are good choices for appropriate solvents in gas separation procedures. In this work, a variety of machine learning techniques, such as white-box machine learning, deep learning, and ensemble learning, were established to determine the solubility of H2S in ILs. The white-box models are group method of data handling (GMDH) and genetic programming (GP), the deep learning approach is deep belief network (DBN) and extreme gradient boosting (XGBoost) was selected as an ensemble approach. The models were established utilizing an extensive database with 1516 data points on the H2S solubility in 37 ILs throughout an extensive pressure and temperature range. Seven input variables, including temperature (T), pressure (P), two critical variables such as temperature (Tc) and pressure (Pc), acentric factor (ω), boiling temperature (Tb), and molecular weight (Mw), were used in these models; the output was the solubility of H2S. The findings show that the XGBoost model, with statistical parameters such as an average absolute percent relative error (AAPRE) of 1.14%, root mean square error (RMSE) of 0.002, standard deviation (SD) of 0.01, and a determination coefficient (R2) of 0.99, provides more precise calculations for H2S solubility in ILs. The sensitivity assessment demonstrated that temperature and pressure had the highest negative and highest positive affect on the H2S solubility in ILs, respectively. The Taylor diagram, cumulative frequency plot, cross-plot, and error bar all demonstrated the high effectiveness, accuracy, and reality of the XGBoost approach for predicting the H2S solubility in various ILs. The leverage analysis shows that the majority of the data points are experimentally reliable and just a small number of data points are found beyond the application domain of the XGBoost paradigm. Beyond these statistical results, some chemical structure effects were evaluated. First, it was shown that the lengthening of the cation alkyl chain enhances the H2S solubility in ILs. As another chemical structure effect, it was shown that higher fluorine content in anion leads to higher solubility in ILs. These phenomena were confirmed by experimental data and the model results. Connecting solubility data to the chemical structure of ILs, the results of this study can further assist to find appropriate ILs for specialized processes (based on the process conditions) as solvents for H2S.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Introduction

Hydrogen sulfide (H2S) exists in abundance in various gas fields, including synthesis gas, natural gas, and refinery gas, as well as in crude oil hydrodesulfurization operations1,2. Hydrogen sulfide must be eliminated from industrial processes due to its high toxicity, flammable gas, and acidity. H2S sequestration from natural gas generated from gas reservoirs is required to conform with environmental legislation, safety requirements, and selling gas production standards2,3,4. The most widely suggested approaches utilized in gas refineries and other associated industries are amine-based remedial activities, which employ aqueous systems of various alkylamines to discrete H2S and CO22,3,4,5,6,7. Absorption in alkanolamine-based solvents is now a commonly utilized technique for the elimination of acid ingredients. For this process, several industrially significant alkanolamines, namely diethanolamine (DEA), di-isopropanol-amine (DIPA), N-methyl-diethanolamine (MDEA), and mono-ethanolamine (MEA) are used. During the absorbent process, however, the alkanolamine solutions have certain drawbacks, including high economic expense, high energy utilization, and caustic by-product. As a result, to properly remove H2S, environmentally friendly and highly efficient absorbents are required4,5.

Ionic liquids (ILs) have recently earned much attentiveness as a suitable replacement for alkanolamine absorbents8. ILs are a novel family of solvents that have several prominent characteristics, such as non-inflammability, trivial vapor pressure, a vast liquid range, excellent temperature stability, good ion and electrical conductivity, electrochemical consistency, simplicity of recycling, high viscosity, and adjustable properties9,10,11,12. As a result, they have the potential to be highly useful in chemical processes, and petroleum engineering. Like salt molecules, ILs are formed of an anion (namely; tetrafluoroborate, chloride, and hexafluorophosphate anions) and a cation (like pyridinium and ammonium cations)13. Because of the small vapor pressure of ILs compared to that of other solvents, they are referred to as polar non-volatile solvents14,15. To develop, optimize, and regulate gas–liquid absorption processes, an exhaustive cognition of the gas solubility in ILs at different thermodynamic conditions is required16,17.

Knowing the gas solubility at various thermodynamic conditions is a key part of evaluating ILs for prospective utilization in gas sweetening systems16,18. Despite numerous recent papers that have been published on gas solubility in ILs, particularly CO2, real H2S/ILs solubility data is infrequent. This may be because of the time-consuming quiddity of laboratory measurements, the high toxicity of H2S, and the high cost of the procedures. As a result, establishing predictive techniques for evaluating the attributes of such systems under a variety of scenarios is highly recommended19,20,21,22,23,24.

The use of empirical correlations25,26, equations of state (EOSs)27,28,29,30,31,32,33, and molecular descriptors34,35 to investigate and model H2S solubility has already been discussed in the literature. These methods are usually restricted to a given system and composition, as well as a defined temperature and pressure range. Systems based on Artificial Intelligence (Al) and Machine Learning (ML) approaches can be appropriate and vigorous in calculating the H2S solubility in ILs since they can be constructed on data from an extensive range of materials under varied thermodynamic circumstances, particularly if a thorough database is utilized for model building36,37,38. Furthermore, AI and ML approaches have improved precision and generality when modeling a variety of phenomena in science and engineering domains such as environmental engineering39, petroleum engineering40,41,42,43,44,45, physical chemistry46,47,48, civil engineering49, earth science49, and chemical engineering46,49,50,51,52,53,54,55,56.

Many ILs have been synthesized during the last few decades using various types of cations and anions, and the solubility of various gases, such as O2, NH3, SO2, CO, N2, CO2, and, H2S in them has been studied at various temperatures and pressures57.

Based on 465 data points acquired from the published works, Ahmadi et al.58 presented a gene expression programming (GEP) system for the calculation of H2S solubility in ILs, where several thermodynamic properties, namely acentric factor (ω), critical pressure (Pc), pressure (P), critical temperature (Tc), and temperature (Mw) were selected as inputs. The results reveal that Soave–Redlich–Kwong (SRK) and Peng–Robinson (PR) EOSs are less accurate than the designed GEP model, which has an average absolute percent relative error (AAPRE) value of 0.04%. Shafiei et al.59 also used their databank to analyze particle swarm optimization (PSO) trained systems and backpropagation (BP). According to the findings, the PSO-ANN method was more precise than those of the BP-ANN system with an AAPRE value of 4.58%. This databank was also used by Ahmadi et al.60 and the findings of the least-squares support vector machine coupled with a genetic algorithm (LSSVM-GA) approach resulted in an AAPRE value of 7.01%. Amedi et al.61 developed three ML approaches to forecast H2S solubility in different ILs with parameters such as the Tc, Mw, and Pc of pure ILs for 664 solubility data points: Radial basis function (RBF), Multilayer perceptron (MLP), and Adaptive neuro-fuzzy inference system (ANFIS). Statistical evaluations indicated that the ANFIS, MLP, and RBF approaches, respectively, had mean absolute errors of 0.021, 0.0089, and 0.042. Zhao et al.62 reported that an extreme learning machine (ELM) system established with a large dataset including 1282 data points could accurately predict H2S/ILs solubility with an AAPRE value of 4.12%. Baghban et al.63 proposed an LSSVM method to predict the H2S solubility based on the chemical structure of cation and anion, P, and T with a vast data bank including 1282 solubility data, where the model's AAPRE value was 2.74%. Hosseini et al.17 employed 1243 data points in 33 different ionic liquids to develop an MLP model for calculating H2S solubility. Some factors were considered in this work, including pressure, acentric coefficient, and critical temperature, and the findings revealed that the proposed system can be utilized to predict H2S solubility with an absolute error of 2.57%. With the equal inputs as the prior work, Menad et al.18 proposed a novel committee machine intelligence system with genetic programming (CMIS-GP) to compute the hydrogen sulfide solubility in 33 ILs (1243 experimental data). The results demonstrate that the CMIS-GP model has a higher accuracy than the other proposed models, with an AAPRE of approximately 2.37%. Mousavi et al.53 established a convolutional neural network (CNN) model to estimate H2S solubility in ILs using constituent substructures of ILs and operating parameters with a large dataset containing 1516 data for 37 ILs. The result demonstrated that the CNN system can determine H2S solubility better than other methods, with an AAPRE value of 2.92%. Zhang et al.64 suggested a least-squares boosting (LSBoost) model based on Pc, Tc, P, the acentric factor of the ILs, and the temperature of biphasic systems to determine the solubility of supercritical CO2 in 24 ILs. The findings demonstrated that the developed LSBoost paradigm had RMSE and MAE of 2.78 and 1.62%, respectively. Zhang et al.65 designed a Gaussian process regression (GPR) model to inquire about the relationship between temperature, nanoparticle volume concentration and specific heat capacity, and the specific heat capacities of nanofluids and base liquids. The results demonstrated a high estimation precision as reflected in the estimation mean absolute error (MAE) and root mean square error (RMSE) which were 0.05% and 0.06% of the average experimental \({C}_{p-nf}\), respectively. Mosavi et al.66 implemented two bagging models and two boosting models for groundwater potential estimation with 339 groundwater resources, where bagging models had a higher undertaken than the boosting models. Mosavi et al.67 presented four novel predictive methods, namely multivariate adaptive regression spline (MARS), flexible discriminate analyses (FDA), generalized linear model (GLM), and random forest (RF), for susceptibility mapping for erosion and flood. The results indicated that in modeling the flood susceptibility, the MARS, FDA, ensemble models, RF, and GLM, respectively, had the area under the curve (AUC) of 0.89, 0.92, 0.94, 0.93, and 0.91. Also, ensemble models, MARS, FDA, GLM, and RF, respectively, had an AUC of 0.97, 0.89, 0.92, 0.93, and 0.96 in determining erosion susceptibility. Mousavi et al.68 considered boosted regression trees (BRT) and RF as two ensemble ML models for the susceptibility mapping of groundwater hardness. The ensemble model results were compared to the multivariate discriminant analysis (MDA) model. The RF, BRT, and MDA models, respectively, showed improved performance based on the modeling findings. For a total set of 171 snow avalanches susceptibility mapping, Mosavi et al.69 proposed an ensemble ML model of random subspace (RS) based on a classifier functional tree. To evaluate the proposed model's goodness-of-fit and estimation accuracy, four benchmark models—logistic model tree (LMT), logistic regression (LR), functional trees (FT), and alternating decision tree (ADT)—were utilized. The presented ensemble model (RSFT), according to the results, performed better than the other soft-computing benchmark systems. Kamran70 demonstrated the use of ensemble learning techniques to forecast the drilling rate index (DRI) of rocks, such as the decision tree (DT), RF, and adaptive boosting (AdaBoost) with 57 data points, where Sievers' J-miniature drill value (Sj), Brazilian tensile strength, Uniaxial compressive strength (UCS), and brittleness test (S20) are some of the mechanical factors of rocks that are utilized as inputs to train the model. Among the ensemble learning approaches, the RF method has the best predictive performance for both the training (RMSE: 0.85 and MAE: 0.21) and testing (RMSE: 2.64 and MAE: 1.93) datasets. Kamran71 revealed a total of 62 datasets to estimate back-break (BB) using a state-of-the-art catboost-based t-distributed stochastic neighbor embedding (t-SNE) method. In the training and testing datasets, the BB estimation model based on t-SNE + Catboost has an RMSE = 0.260, MAE = 0.207, and RMSE = 0.282, MAE = 0.224, respectively. Shahani et al.72 developed four gradient boosting ML approaches including, extreme gradient boosting (XGBoost), Catboost, gradient boosted regression (GBR), and light gradient boosting machine (LightGBM) for estimating UCS of soft sedimentary rocks using a 106-point dataset. According to the findings, the XGBoost approach with statistical parameters such as RMSE: 0.00079 and MAE: 0.00062 in the training step and RMSE: 0.00069 and MAE: 0.00054 in the testing phase was affirmed to be the most precise approach among the four suggested approaches. Ullah et al.73 suggested three methods, including K-means clustering, XGBoost, and t-SNE to forecast the short-term rock-burst risk utilizing 93 rock-burst patterns with six authoritative characteristics from micro-seismic monitoring events. The outcomes of the suggested models provide an excellent benchmark for accurate prediction of future short-term rock-burst levels. Shahani et al.74 indicated the machine learning technology to estimate drilling rate index (DRI) of rocks, namely; the random forest algorithm (RFA), long short-term memory (LSTM), and simple recurrent neural network (RNN), where the LSTM method showed the best estimation of DRI with the lowest RMSE and highest R2 in the training (0.13416, 0.999) and testing (0.19479, 0.998) step, respectively.

Most of intelligent models are black box which means the mathematical relationship is not clear and explicit. These models mostly need special software such as Matlab or Python for their applications. On the other hand, white box models are those ones which generate explicit mathematical formula and can be easily applied by a simple calculator and they do not need any special software for their applications75,76,77. In the present research, two white box methods including genetic programming (GP) and group method of data handling (GMDH) have been used, which are explained in detail.

The main objective of this work is to establish exact models for computing H2S solubility in ILs using wider datasets and powerful techniques. In order to construct genetic programming (GP), group method of data handling (GMDH), deep belief network (DBN), and extreme gradient boosting (XGBoost), a large collection of 1516 experimental data from 37 ILs is employed. Seven thermodynamic features of ILs are chosen as inputs, including T, P, Tc, Pc, ω, Mw, and boiling temperature (Tb). Considering that this research aims to compare the proficiency of intelligent methods with equations of state, therefore, the selected parameters have been tried to have the same agreement with the required features of the EOSs so that we can have an accurate comparison among different methods. The sensitivity assessment is employed to figure out the relative effect of inputs on the H2S/ILs solubility. Finally, the validity of the models is evaluated utilizing William’s plot. Figure 1 shows the steps of this study. One of the circumscriptions that can exist in this research, like all studies based on artificial intelligence techniques, is their data-driven nature, which makes predictions reliable only in the range of experimental data, although the model may be used for new data. Therefore, the bigger the database, the more comprehensive and practical the model can be.

Data collection and preparation

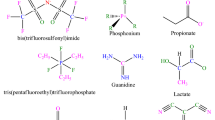

An extensive databank is essential for developing a predictive system with a high degree of trustiness and comprehensiveness. To create models, 1516 experimental data for H2S solubility of 37 ILs were acquired from the literature in the broad range of temperature range between 293.15 and 403.15 K and pressure between 0.001 and 96.3 bar. It is worth noting that H2S solubility in different ILs is from 0.0061 to 0.8900 mol fraction3,4,5,6,7,14,15,26,34,53,57,62,63,78,79,80,81,82,83,84,85,86,87,88,89,90. The provided data was apportioned into two categories before the model was developed. The training and test subsets comprise 80% and 20% of the whole data points, respectively. The test data was used to see how the generated system predict the output for new input parameters, while the training data was used to construct the original system. Features including T, P, Tc, Pc, ω, Tb, and Mw were used as inputs, while H2S solubility was expressed as a mole fraction as an output. Table 1 highlights key details about the ILs we utilized to create our systems. Table 2 also shows the statistical evaluation information, such as the maximum, mode, minimum, and mean of the seven inputs, and H2S solubility employed in the current research. The chemical structures of the diverse anions and cations used in the current work are shown in Fig. 2.

Chemical structures of different cations and anions of ILs53.

Modeling

Genetic programming (GP)

In many engineering fields, Koza91,92 presented a novel evolutionary-based technique known as genetic programming (GP) for addressing issues automatically by introducing an expression characterizing the examined phenomena. Symbolic optimization will be used to identify possible answers utilizing the well-known design of tree illustration91,92. Terminals and functions are used in this sort of illustration, with functions being mathematical algebraic operators (e.g., +, −, log, exp) and terminals being solution factors and input parameters. The potential solution, which incorporates functions and terminals, may therefore be shown using an expression tree91,92. Several genetic operators, such as mutation, crossover, and selection, are used to increase the population of potential solutions regularly. This iterative procedure ends when the system's number of iterations is met or an acceptable solution is found. As a result, a fitness function is created to verify the solution quality, particularly using the mean square error (MSE)93, which must be reduced. The initial stage in computing a fitness function is to train the model, after which it may be used for forecasting93. Figure 3 depicts the schematic of the GP model.

Group method of data handling (GMDH)

Ivakhnenko94 initially proposed the GMDH technique, which consists of mathematical methodologies and a black box nonlinear system characterization idea. He added a polynomial transfer function to the neuron, making it a more complicated unit. An automated technique for design procedure and weight modification was devised, and the links between layers of neurons were reduced. This approach may be thought of as a form of controlled Artificial Neural Network (ANN) that use natural selection to govern the system's size, intricacy, and precision. Simulation of complicated networks, function estimation, nonlinear regression, and pattern identification are the key applications of GMDH. The original model is created using the Volterra–Kolmogorov–Gabor (VKG) polynomial function as follows94:

In the above equation, \(X=({x}_{1}, {x}_{2}, \dots )\), y, \(A=({a}_{1}, {a}_{2}, \dots )\), and \({a}_{0}\) refer to the inputs vector, output vector, weights vector, and bias parameter, respectively.

The initial population of partial representations is created at the first stage of the system utilizing an algorithm that uses all conceivable combinations of two inputs. The sum of the two inputs \(l=\left(\genfrac{}{}{0pt}{}{m}{2}\right)\) determines the total number of neurons produced on the first layer. At each stage, layers are generated depending on error indicators. At each stage of the layer production process, performance is assessed. The next layer starts with the largest number of neurons feasible, determines weights, and then freezes95,96. This differs from the back propagation approach, which allows all layers to engage in the training procedure simultaneously. In the sth repetition, the training of the GMDH system to create second-order polynomials is written in the following form97:

Here w, X, and m signify the vector of factors in each neuron, the vector of system inputs, and the count of iterations of the system training step, respectively. The training and testing data are two subgroups of the sample dataset.

To recognize the count of neurons in each layer, a coefficient named "Selection pressure" should be specified as an appropriate threshold in this technique. After computing the parameters for all of the neurons, the ones that generate the worse results based on a previously set selection criterion should be deleted from the layer. RMSE is used as the threshold. The selection pressure can range from 0 to 1. All of the neurons had a similar configuration, with the best neurons being identified and progressing to the next step based on an external criterion. The precision of the obtained results may be impacted by the application of a critical threshold97.

It is worth noting that just one neuron is chosen in the final layer. Figure 4 demonstrates the schematic structure of the GMDH model.

Deep belief network (DBN)

A DBN is a combination of RBMs that can be trained by moving the RBMs from the bottom to the top layer98. The RBM in the bottom layer trains the initial inputs and takes measurements; the extracted measurements, on the other side, will be the feed of another RBM in the top layer. By continuing this process, further layers of RBM can be produced99. Figure 5 depicts a common DBN system configuration. A DBN system's training procedure may be broken down into two parts100:

First stage: Uncontrolled train each RBM layer individually to ensure that feature information is preserved as much as feasible when feature vectors map to various feature regions.

Second stage: Adding a back propagation (BP) system to the top of the RBM's final layer, which takes the RBM's final layer's output feature vectors, and controlled training the BP system.

Since each layer of the RBM can only ensure that the weights of each layer well select to the input vectors of each layer for individual training, and the vector representation of the entire DBN system does not accomplish the desirable, the entire DBN system must be fine-tuned top-down by the BP system that is established on top. The procedure of fine-tuning the DBN may also be compared to the initialization of weights in a deep BP neural network. When the network trains the BP system, this strategy aids the training process prevent flaws like local optimum and considerable time spent in multi-level training. Initial training in deep learning is the first phase, and fine-tuning is the next stage in the aforementioned training model. Because the RBM can be quickly taught by evaluating divergence, the DBN reduces the complex deep learning neural network training phase by combining the training of several RBMs, making it more efficient than other deep learning neural network systems. A significant number of studies indicate that DBN can overcome the challenges that classic BP algorithms require a significant number of tag samples and have a sluggish convergence time while training multilayer neural networks101.

Restricted Boltzmann machines (RBM)

Figure 6 depicts the structural layout of the RBM. When the status of the input layer is specified, the activation criterion of each concealed layer is independent; on the other hand, when the status of the concealed layer is provided, the activation criterion of each transparent layer is independent101,102. RBM's system energy may be calculated as103:

The activation possibility of the jth concealed unit, according to the formula is103:

Similar manner, when the concealed unit is supplied, the ith transparent unit's activation possibility is103:

When training data are provided, an RBM's variables are adjusted to suit the provided training datasets. It may be expressed as optimizing the logarithm probability function using mathematical terms as follows101:

Extreme gradient boosting (XGBoost)

A tree-based entity approach's primary idea is to utilize a set of classification and regression trees (CARTs) to accommodate training sets utilizing a regularized objective function reduction. The XGBoost is a tree-based algorithm, which is component of the GBoost decision tree architecture. To better illustrate the CART establishment, each CART is consisting of root, internal, and leaf nodes, as demonstrated in Fig. 7. The first, which shows the complete data set, is separated into internal nodes, while the leaf nodes indicate the final categories, based on binary decision procedure104. A collection of n tresses is required to train and forecast the output y for a particular dataset, where m and n indicate dimension characteristics and instances, respectively104.

In the above equation, the decision rule q(x) delineates the sample X to the binary leaf index. f denotes the area of regression trees, fk the kth independent tree, T the count of leaves on the tree, and w the weight of the leaf in Eqs. (1) and (2).

The reduction of the regularized objective function L: is employed to specified the collection of trees:

Here \(\Omega\) is the regularization parameter that aims to minimize overfitting by restricting system intricacy; l refers to a vicissitudinous convex loss function; \(\gamma\) represents the smallest loss minimization required to cleave a new leaf; and \(\lambda\) exhibits the regulation factor.

The objective function for each particular leaf is reduced in the gradient boosting strategy, and further leaves will be created repeatedly105.

The tth repetition of the given training process is denoted by t. The XGBoost technique greedily increases the area of regression trees to significantly amend the ensemble system, which is represented to as a "greedy algorithm". Consequently, the result is modified repeatedly by minimizing the objective function:

The XGBoost employs a shrinkage method that freshly affixed weights are adjusted by a learning component rate after each step of boosting. This reduces the danger of overfitting by limiting the influence of new further trees on each present particular tree106.

Results and discussion

Computational procedure

To properly estimate the H2S/ILs solubility throughout a high diversity of thermodynamic conditions, 1516 data points of 37 ILs were gathered in this work. In this regard, four smart strategies were developed after selecting the inputs: T, P, Tc, Pc, ω, Tb, and Mw. Figure 8 illustrates the pairwise correlation plot of the inputs in this work. The categories for training and testing were created at random from the collected data. To develop the method and find the best model configuration, 80% of the data sets (1212 data points) were chosen at random as the training set. The train set was utilized to study the physical rules that existed in the systems. To assess the suggested models' accuracy and validity, the remaining 20% of the data sets that contained 314 data points were used. As previously stated, the suggested GMDH strategy is the simplest established method for estimating H2S solubility in ILs. This approach is expressed as simple mathematical formulas, such as:

In the aforementioned relationships, T, Tb, and Tc are in (K), P and Pc are in (bar), ω is a unit-less variable, molecular weight is in (g/mole), and H2S solubility is in (mole fraction). As mentioned earlier, the developed GP smart model for computing H2S solubility in ILs is defined by the following correlations:

where

Statistical assessment of the developed methods

Statistical criteria were employed to assess the precision and vigorous of the suggested techniques in forecasting H2S/ILs solubility. The statistical variables used in the analysis were average absolute percent relative error (AAPRE%), root mean square error (RMSE), coefficient of determination (R2), standard deviation (SD), average percent relative error (APRE%), and a-20 index. The mathematical expression of the aforementioned criteria is as below:

-

1.

Average absolute percent relative error:

$$AAPRE=\frac{1}{n}\sum_{i=1}^{n}\left|\{({S}_{i exp.}-{S}_{i pred.})/ {S}_{i exp.}\}\times 100\right|$$(12) -

2.

Root mean square error:

$$RMSE= \sqrt{\frac{1}{n}{\sum }_{i=1}^{n}{\left({S}_{i exp.}-{S}_{i pred.}\right)}^{2}}$$(13) -

3.

Correlation coefficient:

$${R}^{2}=1-\frac{{\sum }_{i=1}^{n}{\left({S}_{i exp.}-{S}_{i pred.}\right)}^{2}}{{\sum }_{i=1}^{n}{\left({S}_{i exp.}-{\overline{S} }_{i exp.}\right)}^{2}}$$(14) -

4.

Standard deviation:

$$SD=\sqrt{\frac{1}{n-1}\sum_{i=1}^{n}{\left(\frac{{S}_{i exp.}-{S}_{i pred.}}{{S}_{i exp.}}\right)}^{2}}$$(15) -

5.

Average percent relative error:

$$APRE=\frac{1}{n}\sum_{i=1}^{n}[({S}_{i exp.}-{S}_{i pred.})/ {S}_{i exp.}]\times 100$$(16) -

6.

a-20 Index:

$$a-20\,\, Index=\frac{m20}{n}$$(17)

where, the count of data points in the original data set is denoted by n. \({S}_{ipred.}\) and \({S}_{iexp.}\), respectively, state the estimated and measured values of ith data. \({\overline{S} }_{iexp}\) defined the mean value of experimental data. The parameter m20 refers to the samples with a value of rate exp./pred. value between 0.8 and 1.2.

Table 3 indicates the computed values for these variables for the testing, training, and the whole dataset of all paradigms. The findings show that XGBoost gives the highest accurate predictions of all the evaluated models. Indeed, the XGBoost paradigm has the highest precise prediction of H2S/ILs solubility, with AAPRE values of 1.14% for the all data-points, 1.30% of testing set, 1.09% for the training.

Graphical analysis of the models

In addition to the presented error evaluation criteria, four graphical analyses were provided in this research to assess the effectiveness of the recommended paradigms. A succinct description of these charts is presented as follows:

-

1.

Cumulative frequency: In this graph, the cumulative frequency is shown versus the absolute relative deviation. The percentage of various error intervals is presented.

-

2.

Error bar: In this graph, the AAPRE of the suggested approaches is shown by bars.

-

3.

Cross-plot: The estimated value is displayed against the experimental value. In such a plot, the tight collection of data points along the Y = X line suggests that the model is the most correct.

-

4.

Taylor diagram: It demonstrates which of the numerous plausible representations of a system or phenomenon is the most realistic. The degree of connection between the estimated and real behavior is measured using three statistical criteria: RMSE, SD, and R2.

Figure 9 shows cross plots of actual values against predicted values for the four techniques utilized in this study, namely DBN, GMDH, GP, and XGBoost. Around the unit slope line, there is a considerable dispersion of data points, both testing and training ones, indicating that three smart models, GP, DBN, and GMDH, have an improper match between predicted and experimental data points. As can be shown from this chart, a tight aggregation of entire dataset is placed around the Y = X line for both training and testing sets for the developed XGBoost model. Indeed, the XGBoost approach outperforms other models in terms of accuracy and performance, and it can estimate the hydrogen sulfide/ILs solubility with less variation from real data (the same results as reported in Table 3). Cross plots of an estimated H2S solubility in ILs versus experimental data are displayed in Fig. 10 to compare the forecasting ability of the XGBoost model as the best model against available methods in the Mousavi et al.53 study, namely, CNN (R2train: 0.9991 and R2test: 0.9989), DBN (R2train: 0.9983 and R2test: 0.9912), and DJINN (R2train: 0.9975 and R2test: 0.9868). The previously developed techniques' data points are scattered around the unit slope line in this figure, but the established XGBoost (R2train: 0.9998 and R2test: 0.9998) model's training and test set data points are tightly gathered around the unit slope line. This reveals the developed XGBoost model's high precision as well as the inability of previous approaches to calculate H2S solubility in ILs. In the following step, Fig. 11 shows a comparison of the present study results and introduced methods by Mousavi et al.53. As this figure demonstrates, about 96% of XGBoost, 86% of CNN53, 75% of DBN and RNN models53, 70% of DJINN53, 57% of DBN, 26% of GMDH, and17% of GP predictions have errors less than 5%. This implies that the XGBoost smart method is the most practical method for calculating H2S solubility in ILs among all previously investigated techniques. Figure 12 compares the AAPRE % of the literature models to the smart methodologies employed in this work to analyze the model's capabilities. Examples of available techniques are including ELM1 (1318 measured data for 28 ILs)34, COSMO-RS (722 (15 ILs) measured data- ADF 2005)57, GEP (465 measured data for 11 ILs)58, SRK-EOS (465 (11 ILs) measured data, kij ≠ 0)58, SRK-EOS (kij = 0, 465 (11 ILs) measured data)58, PR-EOS (kij ≠ 0, 465 (11 ILs) measured data)58, COSMO-RS (722 (15 ILs) measured data—ADF 1998)57, Empirical model (664 measured data for for 14 ILs)89, QSPR1 (1282 measured data for 27 ILs)35, PR-EOS (kij ≠ 0, 664 (14 ILs) measured data)89, ELM (1282 data points for 27 ILs)62, ELM2 (1318 measured data for 28 ILs)34, QSPR2 (27 ILs—1282 data points)35, and Mousavi et al.53 which developed four smart models namely CNN, DBN, RNN, and DJINN for estimation H2S with a large databanks (1516 data-point in 37 various ILs). In compared to previous techniques for computing H2S solubility in ILs based on thermodynamic parameters, the XGBoost (AAPRE = 1.14%) model is clearly the most accurate and adaptable. Furthermore, Fig. 13 introduced Taylor chart, which combines a variety of evaluation criteria for a more intelligible design. This graphic illustrates all of the intelligent models in terms of how well they account H2S solubility in ILs. The RMSE, SD, and R2 of systems, namely XGBoost, DBN, GP, and GMDH, are implemented to quantify the difference between the calculated and actual data. The centered RMSE is calculated using a distance from the total measure as the reference point. The consummate predictive system, which is indicated by a point with R2 equal to 1, is next criterion in the Taylor diagram. The RMSE and R2 values for the developed XGBoost smart method in this study were 0.0024 and 0.999, respectively. The statistical parameters for the smart methods in the current study are shown in Fig. 13 after the models have been put into the Taylor diagram. Based on how far away from the desire instance, which is labeled as "measured" in this chart, each paradigm's overall performance is evaluated. The Taylor diagram further emphasizes XGBoost's domination because it is the closest to actual measurements when evaluating performance globally.

Sensitivity analysis

In the current survey, a sensitivity assessment was accomplished to detect the influence of each input variable (i.e., P, T, Pc, Tc, ω, Mw, and Tb) on the output prediction using the XGBoost as the best model developed. In this regard, the relevancy factor (r) was used to determine the degree of each parameter's effect on the H2S solubility in ILs as an output parameter55,107. The r value is shown below:

here \(\overline{\mathrm{O} }\) and \({\mathrm{O}}_{\mathrm{j}}\) are the mean jth values of the forecasted parameters (O \(=\) H2S solubility in ILs), respectively. While, \({\mathrm{inp}}_{\mathrm{i}}\) and \({\mathrm{inp}}_{\mathrm{i},\mathrm{j}}\) represent the mean of the ith input parameter and the jth value, respectively (\({\mathrm{inp}}_{\mathrm{i}}\) is P, T, Pc, Tc, ω, Mw, and Tb).

The relevance factor (r), which ranges from [− 1, 1], depicts the impression of inputs on the model's output as follows:

-

1.

If \(\left(r>0\right)\), each of the input variables has an increasing effect on the predicted parameter. In other words, the output value increases when the desired parameter is increased. As a result, the larger the impact, the closer the relevance factor is to 1.

-

2.

If \(\left(r=0\right)\), the output data and the input parameters have no relationship or this is increasing a region and decreasing in another region.

-

3.

If \(\left(r<0\right)\), the input variable's effect on the output is decreasing. Enhancing the targeted variable decreases the output parameter's value. The larger the effect, the closer the relevance factor is to − 1.

The relevancy factor value for the XGBoost model's inputs as the best paradigm is indicated in Fig. 14. According to this figure, each of input parameters including P, Mw, Tb, and Tc have direct effects on H2S solubility, whereas T, Pc, and ω have contrary effects. This means that changing the value of each input will change the value of H2S solubility. Among the positive input parameters, the pressure with a relevancy factor of + 0.537 has the greatest impact. As a result, the solubility of H2S increased as pressure increased. This phenomenon is explained by Henry's law, which expresses that the gas solubility in liquids is proportional to the pressure of that gas above the solution's surface. Gas molecules are driven into the solution as pressure increases, therefore this leads to higher amount of gas molecules dissolve in the solution58. On the other hand, the temperature with a relevancy value of − 0.230 has the highest influence through the negative input variables. This implies that when the temperature is raised, the solubility of H2S decreased. Consequently, heating the solution creates thermal energy, which overcome the gas/solvent molecules attraction, decreasing the gas solubility53.

Applicability domain of the XGBoost method

To evaluate the validity region of the suggested XGBoost system and to distinguish any data that is dubious, the Leverage approach was utilized108,109,110. The Leverage evaluation, that has been formed graphically through Williams' plot, is one of the most substantial methods of outlier distinction. This method includes computing residuals and the Hat matrix for the input data. The deviation of actual data and method predictions is represented by residual values (R), while the Hat matrix is determined as indicated here111:

where X is an (a × b) matrix, where, a is the number of data points and b is the number of method variables, \({X}^{t}\) is the transpose of the matrix X. Furthermore, H* (the Leverage limit) is calculated as \(\frac{3(a+1)}{b}\). In this study, the H* value for the XGBoost model was 0.015. The R values are then plotted against the hat variable to produce the Williams plot, which shows the suspicious data as well as the usable region of the model. The developed paradigm is regarded authentic and its forecasts are performed in the validity scope if majority of the data points were collected between the ranges of 0 ≤ H ≤ H∗, and − 3 ≤ R ≤ 3. Figure 15 shows the Williams plot of the XGBoost method. Virtually all data points in Fig. 15 appeared to be between 0 ≤ H ≤\(0.015\) and − 3 ≤ R ≤ 3. Therefore, only 56 data points are over and above the aforementioned range, according to the outlier’s identification findings. Indeed, 24 data points of whole data set (1.58% of databank) are suspected data, and the rest of the data (32 data points, 2.11% of all data) are outside the allowed leverage (0.015 ≤ H). The findings in Fig. 15 show that the XGBoost model used to predict H2S in ILs is statistically valid, and the datasets used to create the model are of sufficient quality to be used.

Qualitative investigation of H2S solubility in ILs

Hydrogen sulfide/ILs solubility increases with rising pressure and decreasing temperature, and a similar pattern is also evident for CO2 solubility in ionic liquids112. This gas dissolves due to its molecules interact with those of the liquid. Since heat is generated when these new attractive interactions create, dissolution is an exothermic process. Thermal energy is created when heat is applied to a solution, which prevails the gas/liquid molecules attractions, decreasing the gas solubility. In compared to the anion, the cation has a minor effect on H2S solubility. Figure 16 shows the influence of cation alkyl chain length on H2S solubility at 313.15 K with an identical anion. In comparison to ILs with shorter chains, those with longer alkyl chains may have greater H2S solubility ([C8MIm]+ > [C6MIm]+ > [C2MIm]+). This effect is explained by the verity that ILs with longer alkyl chains have larger free volumes, which allows them to raise van der Waals interactions while also admitting more H2S molecules5,6,62. Figure 17 shows the influence of fluorine content in anion on H2S solubility at 313.15 K for three ILs with various kinds of anions but the same cation. Three ILs are including: 1-(2-hydroxyethyl)-3-methyl-imidazolium bis(trifluoromethylsulfonyl)amide [C2OHMIM] [NTf2], 1-(2-hydroxyethyl)-3-methyl-imidazolium hexafluorophosphate [C2OHMIM] [PF6], and 1-(2-hydroxyethyl)-3-methyl-imidazolium trifluoromethanesulfonate [C2OHMIM] [TfO]62,63. As a result, increased H2S solubility is linked to higher fluorine content in anion ([NTf2] > [PF6] > [BF4])5,6. Finally, based on Figs. 16 and 17, it can be stated that the constructed XGBoost could predict this phenomenon as an accurate and highly reliable model.

Pressure trend analysis of the developed XGBoost approach

Different visual evaluations were performed as the last assessment step to examine the XGBoost model's capacity in the H2S solubility in various ILs. The validity of developed models, on the other hand, may be assessed by comparing the trend of measured variations to experimental and estimated data. Figure 18 illustrates the effect of pressure on H2S solubility for two different ILs, methyldiethanolammonium acetate [MEDAH] [Ac] and 1-ethyl-3-methylimidazolium propionate [C2MIM] [CH3CH2CO2], at temperatures of 303.2 K and 293.15 K, respectively. Figure 18 depicts that the suggested XGBoost model was able to correctly determine the physical trend of the behavior, as demonstrated in this diagram. The H2S becomes more soluble in ILs as the pressure increases. This phenomenon is explained by Henry's law58. Gas molecules are compelled into the solution as pressure rises, therefore this leads to a higher quantity of gas molecules incorporated in the solution. As a result, based on thermodynamic characteristics, temperature, and pressure, the proposed technique may produce exact and reliable predictions for the H2S solubility in various ILs. As previously stated, Fig. 14 depicts a sensitivity assessment of the inputs and output of the XGBoost. Pressure has a favorable influence on H2S solubility in various ILs, which is consistent with the findings shown in Fig. 18.

Conclusions

In the current study, 1516 real data points were used to forecast the H2S solubility in ILs. A comprehensive databank was prepared from literature and includes variables such as T, P, Tc, Pc, ω, Tb, and Mw. In this work, four intelligent models—DBN, GMDH, GP, and XGBoost—were developed to compute the H2S solubility in ILs. The statistical indices (namely; AAPRE, APRE, RMSE, SD, and R2) and graphical analyses (such as; cross-plot, Tylor diagram, error chart, and cumulative frequency) findings of these recently implemented tools were applied to assess the achievements of the suggested methods. The following findings are found from this research:

-

1.

The H2S solubility in ILs was estimated using four models in the current study, of which XGBoost was determined to be the most precise and valid.

-

2.

The newly established XGBoost model for computing the H2S solubility in ILs systems is smart enough to make very accurate predictions about the H2S solubility values (total AAPRE: 1.14%, train AAPRE: 1.09%, and test AAPRE: 1.30%).

-

3.

Furthermore, the XGBoost is more accurate and trustworthy when results are compared to those of other well-known earlier models.

-

4.

The relevancy factor was applied in order to examine how each input parameter affected the H2S solubility. Pressure (based on Henry’s law) has a more favorable impact on model results than the other input variables for the XGBoost method. While the solubility of H2S is inversely related to temperature.

-

5.

According to the leverage technique, the majority of the data are empirically valid, and only a small number of data points are found outside the XGBoost model's application area.

-

6.

In terms of pressure, the XGBoost model's output trends make logical.

-

7.

The developed XGBoost model is not only quick and inexpensive, but it also accurately predicts the solubility of H2S.

Data availability

All the data have been collected from literature. We cited all the references of the data in the manuscript. However, the data will be available from the corresponding author on reasonable request.

References

Rahmati-Rostami, M., Ghotbi, C., Hosseini-Jenab, M., Ahmadi, A. N. & Jalili, A. H. Solubility of H2S in ionic liquids [hmim][PF6],[hmim][BF4], and [hmim][Tf2N]. J. Chem. Thermodyn. 41, 1052–1055 (2009).

Kohl, A. L. & Nielsen, R. B. Gas Purification, 1997 (Elsevier, 2011).

Shiflett, M. B., Niehaus, A. M. S. & Yokozeki, A. Separation of CO2 and H2S using room-temperature ionic liquid [bmim][MeSO4]. J. Chem. Eng. Data 55, 4785–4793 (2010).

Jalili, A. H., Rahmati-Rostami, M., Ghotbi, C., Hosseini-Jenab, M. & Ahmadi, A. N. Solubility of H2S in ionic liquids [bmim][PF6],[bmim][BF4], and [bmim][Tf2N]. J. Chem. Eng. Data 54, 1844–1849 (2009).

Jalili, A. H. et al. Solubility of CO2, H2S, and their mixture in the ionic liquid 1-octyl-3-methylimidazolium bis (trifluoromethyl) sulfonylimide. J. Phys. Chem. B 116, 2758–2774 (2012).

Shokouhi, M., Adibi, M., Jalili, A. H., Hosseini-Jenab, M. & Mehdizadeh, A. Solubility and diffusion of H2S and CO2 in the ionic liquid 1-(2-hydroxyethyl)-3-methylimidazolium tetrafluoroborate. J. Chem. Eng. Data 55, 1663–1668 (2010).

Jalili, A. H., Shokouhi, M., Maurer, G. & Hosseini-Jenab, M. Solubility of CO2 and H2S in the ionic liquid 1-ethyl-3-methylimidazolium tris (pentafluoroethyl) trifluorophosphate. J. Chem. Thermodyn. 67, 55–62 (2013).

Barati-Harooni, A. et al. Prediction of H2S solubility in liquid electrolytes by multilayer perceptron and radial basis function neural networks. Chem. Eng. Technol. 40, 367–375 (2017).

Zhang, X. et al. Carbon capture with ionic liquids: Overview and progress. Energy Environ. Sci. 5, 6668–6681 (2012).

Zhang, S., Sun, N., He, X., Lu, X. & Zhang, X. Physical properties of ionic liquids: Database and evaluation. J. Phys. Chem. Ref. Data 35, 1475–1517 (2006).

Seddon, K. R. Ionic liquids for clean technology. J. Chem. Technol. Biotechnol. Int. Res. Process. Environ. Clean Technol. 68, 351–356 (1997).

Plechkova, N. V. & Seddon, K. R. Applications of ionic liquids in the chemical industry. Chem. Soc. Rev. 37, 123–150. https://doi.org/10.1039/b006677j (2008).

Davis, J. H. Jr. Task-specific ionic liquids. Chem. Lett. 33, 1072–1077 (2004).

Jou, F.-Y. & Mather, A. E. Solubility of hydrogen sulfide in [bmim][PF 6]. Int. J. Thermophys. 28, 490 (2007).

Pomelli, C. S., Chiappe, C., Vidis, A., Laurenczy, G. & Dyson, P. J. Influence of the interaction between hydrogen sulfide and ionic liquids on solubility: Experimental and theoretical investigation. J. Phys. Chem. B 111, 13014–13019 (2007).

Tiwikrama, A. H., Taha, M. & Lee, M.-J. Experimental and computational studies on the solubility of carbon dioxide in protic ammonium-based ionic liquids. J. Taiwan Inst. Chem. Eng. 112, 152–161 (2020).

Hosseini, M., Rahimi, R. & Ghaedi, M. Hydrogen sulfide solubility in different ionic liquids: An updated database and intelligent modeling. J. Mol. Liq. 317, 113984 (2020).

Amar, M. N., Ghriga, M. A. & Ouaer, H. On the evaluation of solubility of hydrogen sulfide in ionic liquids using advanced committee machine intelligent systems. J. Taiwan Inst. Chem. Eng. 118, 159–168 (2021).

Eslamimanesh, A., Gharagheizi, F., Mohammadi, A. H. & Richon, D. Artificial neural network modeling of solubility of supercritical carbon dioxide in 24 commonly used ionic liquids. Chem. Eng. Sci. 66, 3039–3044 (2011).

Torrecilla, J. S., Palomar, J., García, J., Rojo, E. & Rodríguez, F. Modelling of carbon dioxide solubility in ionic liquids at sub and supercritical conditions by neural networks and mathematical regressions. Chemom. Intell. Lab. Syst. 93, 149–159 (2008).

Ji, X. & Adidharma, H. Thermodynamic modeling of CO2 solubility in ionic liquid with heterosegmented statistical associating fluid theory. Fluid Phase Equilib. 293, 141–150 (2010).

Shariati, A., Ashrafmansouri, S.-S., Osbuei, M. H. & Hooshdaran, B. Critical properties and acentric factors of ionic liquids. Korean J. Chem. Eng. 30, 187–193 (2013).

Arce, P. F., Robles, P. A., Graber, T. A. & Aznar, M. Modeling of high-pressure vapor–liquid equilibrium in ionic liquids+ gas systems using the PRSV equation of state. Fluid Phase Equilib. 295, 9–16 (2010).

Zhang, Y. & Xu, X. Machine learning bioactive compound solubilities in supercritical carbon dioxide. Chem. Phys. 550, 111299 (2021).

Mortazavi-Manesh, S., Satyro, M. A. & Marriott, R. A. Screening ionic liquids as candidates for separation of acid gases: Solubility of hydrogen sulfide, methane, and ethane. AIChE J. 59, 2993–3005 (2013).

Mesbah, M. et al. Rigorous correlations for predicting the solubility of H2S in methylimidazolium-based ionic liquids. Can. J. Chem. Eng. 98, 441–452 (2020).

Yokozeki, A. & Shiflett, M. B. Gas solubilities in ionic liquids using a generic van der Waals equation of state. J. Supercrit. Fluids 55, 846–851 (2010).

Rahmati-Rostami, M., Behzadi, B. & Ghotbi, C. Thermodynamic modeling of hydrogen sulfide solubility in ionic liquids using modified SAFT-VR and PC-SAFT equations of state. Fluid Phase Equilib. 309, 179–189 (2011).

Al-fnaish, H. & Lue, L. Modelling the solubility of H2S and CO2 in ionic liquids using PC-SAFT equation of state. Fluid Phase Equilib. 450, 30–41 (2017).

Llovell, F., Marcos, R. M., MacDowell, N. & Vega, L. F. Modeling the absorption of weak electrolytes and acid gases with ionic liquids using the soft-SAFT approach. J. Phys. Chem. B 116, 7709–7718 (2012).

Shahriari, R., Dehghani, M. R. & Behzadi, B. A modified polar PHSC model for thermodynamic modeling of gas solubility in ionic liquids. Fluid Phase Equilib. 313, 60–72 (2012).

Shojaeian, A. Thermodynamic modeling of solubility of hydrogen sulfide in ionic liquids using Peng Robinson-Two State equation of state. J. Mol. Liq. 229, 591–598 (2017).

Panah, H. S. Modeling H2S and CO2 solubility in ionic liquids using the CPA equation of state through a new approach. Fluid Phase Equilib. 437, 155–165 (2017).

Kang, X., Qian, J., Deng, J., Latif, U. & Zhao, Y. Novel molecular descriptors for prediction of H2S solubility in ionic liquids. J. Mol. Liq. 265, 756–764 (2018).

Zhao, Y. et al. Predicting H 2 S solubility in ionic liquids by the quantitative structure–property relationship method using S $σ$-profile molecular descriptors. RSC Adv. 6, 70405–70413 (2016).

Atashrouz, S., Mirshekar, H., Hemmati-Sarapardeh, A., Moraveji, M. K. & Nasernejad, B. Implementation of soft computing approaches for prediction of physicochemical properties of ionic liquid mixtures. Korean J. Chem. Eng. 34, 425–439. https://doi.org/10.1007/s11814-016-0271-7 (2017).

Amar, M. N., Ghriga, M. A. & Hemmati-Sarapardeh, A. Application of gene expression programming for predicting density of binary and ternary mixtures of ionic liquids and molecular solvents. J. Taiwan Inst. Chem. Eng. 117, 63–74 (2020).

Mehrjoo, H., Riazi, M., Nait Amar, M. & Hemmati-Sarapardeh, A. Modeling interfacial tension of methane-brine systems at high pressure and high salinity conditions. J. Taiwan Inst. Chem. Eng. 114, 125–141. https://doi.org/10.1016/j.jtice.2020.09.014 (2020).

Shishegaran, A., Saeedi, M., Kumar, A. & Ghiasinejad, H. Prediction of air quality in Tehran by developing the nonlinear ensemble model. J. Clean. Prod. 259, 120825 (2020).

Ahmadi, M. A. & Chen, Z. Machine learning models to predict bottom hole pressure in multi-phase flow in vertical oil production wells. Can. J. Chem. Eng. 97, 2928–2940 (2019).

Ahmadi, M. A. & Ebadi, M. Evolving smart approach for determination dew point pressure through condensate gas reservoirs. Fuel 117, 1074–1084 (2014).

Ahmadi, M.-A., Masumi, M., Kharrat, R. & Mohammadi, A. H. Gas analysis by in situ combustion in heavy-oil recovery process: Experimental and modeling studies. Chem. Eng. Technol. 37, 409–418 (2014).

Ahmadi, M. A., Ebadi, M., Marghmaleki, P. S. & Fouladi, M. M. Evolving predictive model to determine condensate-to-gas ratio in retrograded condensate gas reservoirs. Fuel 124, 241–257 (2014).

Ahmadi, M. A., Ebadi, M. & Yazdanpanah, A. Robust intelligent tool for estimating dew point pressure in retrograded condensate gas reservoirs: Application of particle swarm optimization. J. Pet. Sci. Eng. 123, 7–19 (2014).

Al-Kharusi, A. S. & Blunt, M. J. Network extraction from sandstone and carbonate pore space images. J. Pet. Sci. Eng. 56, 219–231 (2007).

Korol, R. & Segal, D. Machine learning prediction of DNA charge transport. J. Phys. Chem. B. 123, 2801–2811 (2019).

Zhang, P., Shen, L. & Yang, W. Solvation free energy calculations with quantum mechanics/molecular mechanics and machine learning models. J. Phys. Chem. B. 123, 901–908 (2018).

Nait Amar, M., Ghriga, M. A., Ben Seghier, M. E. A. & Ouaer, H. Prediction of lattice constant of a2xy6 cubic crystals using gene expression programming. J. Phys. Chem. B. 124, 6037–6045 (2020).

Shishegaran, A., Khalili, M. R., Karami, B., Rabczuk, T. & Shishegaran, A. Computational predictions for estimating the maximum deflection of reinforced concrete panels subjected to the blast load. Int. J. Impact Eng. 139, 103527 (2020).

Mousavi, S. P. et al. Modeling thermal conductivity of ionic liquids: A comparison between chemical structure and thermodynamic properties-based models. J. Mol. Liq. 322, 114911 (2021).

Mousavi, S.-P. et al. Modeling surface tension of ionic liquids by chemical structure-intelligence based models. J. Mol. Liq. 11, 6961 (2021).

Ottman, N. et al. Soil exposure modifies the gut microbiota and supports immune tolerance in a mouse model. J. Allergy Clin. Immunol. 143, 1198–1206 (2019).

Mousavi, S. P. et al. Modeling of H2S solubility in ionic liquids using deep learning: A chemical structure-based approach. J. Mol. Liq. 351, 118418 (2022).

Nakhaei-Kohani, R. et al. Machine learning assisted structure-based models for predicting electrical conductivity of ionic liquids. J. Mol. Liq. 11, 9509 (2022).

Mousavi, S. P. et al. Viscosity of ionic liquids: Application of the Eyring’s theory and a committee machine intelligent system. Molecules 26, 156 (2020).

Zhang, Y. & Xu, X. Modeling oxygen ionic conductivities of ABO3 Perovskites through machine learning. Chem. Phys. 558, 111511 (2022).

Lei, Z., Dai, C. & Chen, B. Gas solubility in ionic liquids. Chem. Rev. 114, 1289–1326 (2014).

Ahmadi, M. A., Haghbakhsh, R., Soleimani, R. & Bajestani, M. B. Estimation of H2S solubility in ionic liquids using a rigorous method. J. Supercrit. Fluids 92, 60–69 (2014).

Shafiei, A. et al. Estimating hydrogen sulfide solubility in ionic liquids using a machine learning approach. J. Supercrit. Fluids 95, 525–534. https://doi.org/10.1016/j.supflu.2014.08.011 (2014).

Ahmadi, M. A., Pouladi, B., Javvi, Y., Alfkhani, S. & Soleimani, R. Connectionist Technique Estimates H2S Solubility in Ionic Liquids Through a Low Parameter Approach (Elsevier B.V, 2015). https://doi.org/10.1016/j.supflu.2014.11.009.

Amedi, H. R., Baghban, A. & Ahmadi, M. A. Evolving machine learning models to predict hydrogen sulfide solubility in the presence of various ionic liquids. J. Mol. Liq. 216, 411–422 (2016).

Zhao, Y. et al. Hydrogen sulfide solubility in ionic liquids (ILs): An extensive database and a new ELM Model mainly established by imidazolium-based ILs. J. Chem. Eng. Data 61, 3970–3978. https://doi.org/10.1021/acs.jced.6b00449 (2016).

Baghban, A., Sasanipour, J. & Habibzadeh, S. others, Estimating solubility of supercritical H2S in ionic liquids through a hybrid LSSVM chemical structure model. Chin. J. Chem. Eng. 27, 620–627 (2019).

Zhang, Y. & Xu, X. Solubility predictions through LSBoost for supercritical carbon dioxide in ionic liquids. New J. Chem. 44, 20544–20567 (2020).

Zhang, Y. & Xu, X. Machine learning specific heat capacities of nanofluids containing CuO and Al2O3. AIChE J. 67, e17289 (2021).

Mosavi, A. et al. Ensemble boosting and bagging based machine learning models for groundwater potential prediction. Water Resour. Manage. 35, 23–37 (2021).

Mosavi, A. et al. Ensemble models of GLM, FDA, MARS, and RF for flood and erosion susceptibility mapping: A priority assessment of sub-basins. Geocarto Int. 37, 2541–2560 (2022).

Mosavi, A. et al. Susceptibility prediction of groundwater hardness using ensemble machine learning models. Water 12, 2770 (2020).

Mosavi, A. et al. Towards an ensemble machine learning model of random subspace based functional tree classifier for snow avalanche susceptibility mapping. IEEE Access. 8, 145968–145983 (2020).

Kamran, M. A probabilistic approach for prediction of drilling rate index using ensemble learning technique. J. Min. Environ. 12, 327–337 (2021).

Kamran, M. A state of the art catboost-based T-distributed stochastic neighbor embedding technique to predict back-break at dewan cement limestone quarry. J. Min. Environ. 12, 679–691 (2021).

Shahani, N. M., Kamran, M., Zheng, X., Liu, C. & Guo, X. Application of gradient boosting machine learning algorithms to predict uniaxial compressive strength of soft sedimentary rocks at Thar Coalfield. Adv. Civ. Eng. 20, 21 (2021).

Ullah, B., Kamran, M. & Rui, Y. Predictive modeling of short-term rockburst for the stability of subsurface structures using machine learning approaches: T-SNE, K-Means clustering and XGBoost. Mathematics 10, 449 (2022).

Shahani, N. M., Kamran, M., Zheng, X. & Liu, C. Predictive modeling of drilling rate index using machine learning approaches: LSTM, simple RNN, and RFA. Pet. Sci. Technol. 40, 534–555 (2022).

Loyola-Gonzalez, O. Black-box vs white-box: Understanding their advantages and weaknesses from a practical point of view. IEEE Access. 7, 154096–154113 (2019).

Velez, M., Jamshidi, P., Siegmund, N., Apel, S. & Kästner, C. White-box analysis over machine learning: Modeling performance of configurable systems. In 2021 IEEE/ACM 43rd International Conference on Software Engineering, 1072–1084 (2021).

Haley, A. & Zweben, S. Development and application of a white box approach to integration testing. J. Syst. Softw. 4, 309–315 (1984).

Sakhaeinia, H., Jalili, A. H., Taghikhani, V. & Safekordi, A. A. Solubility of H2S in Ionic Liquids 1-Ethyl-3-methylimidazolium Hexafluorophosphate ([emim][PF6]) and 1-Ethyl-3-methylimidazolium Bis (trifluoromethyl) sulfonylimide ([emim][Tf2N]). J. Chem. Eng. Data 55, 5839–5845 (2010).

Sakhaeinia, H., Taghikhani, V., Jalili, A. H., Mehdizadeh, A. & Safekordi, A. A. Solubility of H2S in 1-(2-hydroxyethyl)-3-methylimidazolium ionic liquids with different anions. Fluid Phase Equilib. 298, 303–309 (2010).

Safavi, M., Ghotbi, C., Taghikhani, V., Jalili, A. H. & Mehdizadeh, A. Study of the solubility of CO2, H2S and their mixture in the ionic liquid 1-octyl-3-methylimidazolium hexafluorophosphate: Experimental and modelling. J. Chem. Thermodyn. 65, 220–232. https://doi.org/10.1016/j.jct.2013.05.038 (2013).

Huang, K. et al. Thermodynamic validation of 1-alkyl-3-methylimidazolium carboxylates as task-specific ionic liquids for H2S absorption. AIChE J. 59, 2227–2235 (2013).

Handy, H. et al. H2S–CO2 separation using room temperature ionic liquid [BMIM][Br]. Sep. Sci. Technol. 49, 2079–2084 (2014).

Huang, K. et al. Protic ionic liquids for the selective absorption of H2S from CO2: Thermodynamic analysis. AIChE J. 60, 4232–4240 (2014).

Nematpour, M., Jalili, A. H., Ghotbi, C. & Rashtchian, D. Solubility of CO2 and H2S in the ionic liquid 1-ethyl-3-methylimidazolium trifluoromethanesulfonate. J. Nat. Gas Sci. Eng. 30, 583–591. https://doi.org/10.1016/j.jngse.2016.02.006 (2016).

Jalili, A. H., Mehrabi, M., Zoghi, A. T., Shokouhi, M. & Taheri, S. A. Solubility of carbon dioxide and hydrogen sulfide in the ionic liquid 1-butyl-3-methylimidazolium trifluoromethanesulfonate. Fluid Phase Equilib. 453, 1–12 (2017).

Wang, X. et al. Selective separation of hydrogen sulfide with pyridinium-based ionic liquids. Ind. Eng. Chem. Res. 57, 1284–1293 (2018).

Jalili, A. H. et al. Measuring and modelling the absorption and volumetric properties of CO2 and H2S in the ionic liquid 1-ethyl-3-methylimidazolium tetrafluoroborate. J. Chem. Thermodyn. 131, 544–556 (2018).

Faúndez, C. A., Fierro, E. N. & Valderrama, J. O. Solubility of hydrogen sulfide in ionic liquids for gas removal processes using artificial neural networks. J. Environ. Chem. Eng. 4, 211–218 (2016).

Sedghamiz, M. A., Rasoolzadeh, A. & Rahimpour, M. R. The ability of artificial neural network in prediction of the acid gases solubility in different ionic liquids. J. CO2 Util. 9, 39–47 (2015).

Jalili, A. H. et al. Solubility and diffusion of CO2 and H2S in the ionic liquid 1-ethyl-3-methylimidazolium ethylsulfate. J. Chem. Thermodyn. 42, 1298–1303 (2010).

Koza, J. R., et al. Evolution of subsumption using genetic programming. In Proceedings of First European Conference on Artificial Life, 110–119 (1992).

Koza, J. R., Bennett, F. H., Andre, D. & Keane, M. A. Genetic programming: Biologically inspired computation that creatively solves non-trivial problems. In Evolution as Computer 95–124 (Springer, 2002).

Chakraborty, U. K. Static and dynamic modeling of solid oxide fuel cell using genetic programming. Energy 34, 740–751 (2009).

Ivakhnenko, A. G. The group method of data handling in prediction problems. Sov. Autom. Control 9, 21–30 (1976).

Najafzadeh, M., Barani, G.-A. & Hessami Kermani, M. R. Estimation of pipeline scour due to waves by GMDH. J. Pipeline Syst. Eng. Pract. 5, 6014002 (2014).

Najafzadeh, M., Barani, G.-A. & Azamathulla, H. M. GMDH to predict scour depth around a pier in cohesive soils. Appl. Ocean Res. 40, 35–41 (2013).

Ghazanfari, N., Gholami, S., Emad, A. & Shekarchi, M. Evaluation of GMDH and MLP networks for prediction of compressive strength and workability of concrete. Bull. Soc. R. Des. Sci. Liège 86, 855–868 (2017).

Larochelle, H., Bengio, Y., Louradour, J. & Lamblin, P. Exploring strategies for training deep neural networks. J. Mach. Learn. Res. 10, 25 (2009).

Hinton, G. E., Osindero, S. & Teh, Y.-W. A fast learning algorithm for deep belief nets. Neural Comput. 18, 1527–1554 (2006).

Fischer, A., & Igel, C. An introduction to restricted Boltzmann machines. In Iberoamerican Congress on Pattern Recognition, 14–36 (2012).

Tan, Q., Huang, W., & Li, Q. An intrusion detection method based on DBN in ad hoc networks. In Wireless Communications and Sensor Networks Proc. Int. Conf. Wirel. Commun. Sens. Netw. (WCSN 2015), 477–485 (2016).

Ackley, D. H., Hinton, G. E. & Sejnowski, T. J. A learning algorithm for Boltzmann machines. Cogn. Sci. 9, 147–169 (1985).

Hinton, G. E. A practical guide to training restricted Boltzmann machines. In Neural Networks: Tricks of the Trade 599–619 (Springer, 2012).

Chen, T., & Guestrin, C. In Proceedings of the 22nd ACM Sigkdd International Conference on Knowledge Discovery and Data Mining (2016).

Zhang, J. et al. A unified intelligent model for estimating the (gas+ n-alkane) interfacial tension based on the eXtreme gradient boosting (XGBoost) trees. Fuel 282, 118783 (2020).

Dev, V. A. & Eden, M. R. Gradient boosted decision trees for lithology classification. In Computer Aided Chemical Engineering 113–118 (Elsevier, 2019).

Mousazadeh, M. H. & Faramarzi, E. Corresponding states theory for the prediction of surface tension of ionic liquids. Ionics (Kiel). 17, 217–222 (2011).

Leroy, A. M., & Rousseeuw, P. J. Robust regression and outlier detection. Rrod (1987).

Goodall, C. R. 13 Computation using the QR decomposition (1993).

Gramatica, P. Principles of QSAR models validation: Internal and external. QSAR Comb. Sci. 26, 694–701 (2007).

Menad, N. A., Noureddine, Z., Hemmati-Sarapardeh, A. & Shamshirband, S. Modeling temperature-based oil-water relative permeability by integrating advanced intelligent models with grey wolf optimization: Application to thermal enhanced oil recovery processes. Fuel 242, 649–663 (2019).

Pérez-Salado Kamps, Á., Tuma, D., Xia, J. & Maurer, G. Solubility of CO2 in the ionic liquid [bmim][PF6]. J. Chem. Eng. Data 48, 746–749 (2003).

Author information

Authors and Affiliations

Contributions

S.P.M.: writing—original draft, visualization, data curation, software, R.N.-K.: writing—original draft, visualization, data curation, software, S.A.: supervision, conceptualization, methodology, reviewing and editing, investigation, F.H.: software, methodology, conceptualization, A.A.: methodology, investigation, software. A.H.-S.: supervision, conceptualization, reviewing and editing, methodology, investigation. A.M.: supervision, conceptualization, reviewing and editing.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mousavi, SP., Nakhaei-Kohani, R., Atashrouz, S. et al. Modeling of H2S solubility in ionic liquids: comparison of white-box machine learning, deep learning and ensemble learning approaches. Sci Rep 13, 7946 (2023). https://doi.org/10.1038/s41598-023-34193-w

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-34193-w

- Springer Nature Limited