Abstract

Humans perceive the external world by integrating information from different modalities, obtained through the sensory organs. However, the aforementioned mechanism is still unclear and has been a subject of widespread interest in the fields of psychology and brain science. A model using two reservoir computing systems, i.e., a type of recurrent neural network trained to mimic each other's output, can detect stimulus patterns that repeatedly appear in a time series signal. We applied this model for identifying specific patterns that co-occur between information from different modalities. The model was self-organized by specific fluctuation patterns that co-occurred between different modalities, and could detect each fluctuation pattern. Additionally, similarly to the case where perception is influenced by synchronous/asynchronous presentation of multimodal stimuli, the model failed to work correctly for signals that did not co-occur with corresponding fluctuation patterns. Recent experimental studies have suggested that direct interaction between different sensory systems is important for multisensory integration, in addition to top-down control from higher brain regions such as the association cortex. Because several patterns of interaction between sensory modules can be incorporated into the employed model, we were able to compare the performance between them; the original version of the employed model incorporated such an interaction as the teaching signals for learning. The performance of the original and alternative models was evaluated, and the original model was found to perform the best. Thus, we demonstrated that feedback of the outputs of appropriately learned sensory modules performed the best when compared to the other examined patterns of interaction. The proposed model incorporated information encoded by the dynamic state of the neural population and the interactions between different sensory modules, both of which were based on recent experimental observations; this allowed us to study the influence of the temporal relationship and frequency of occurrence of multisensory signals on sensory integration, as well as the nature of interaction between different sensory signals.

Similar content being viewed by others

Introduction

The brain uses numerous sensory inputs to recognize the external world and acquire knowledge. In addition, handling multiple pieces of information in an integrated manner increases the reliability of perceiving the external world and knowledge acquisition. Researchers have investigated this phenomenon, termed sensory integration or multisensory integration, in the fields of psychology, medicine, neuroscience, and artificial intelligence.

In psychology, the "McGurk effect" describes the perception of a third phoneme when people listen to one phoneme being vocalized while simultaneously seeing another phoneme being vocalized in a video1. The co-occurrence and combination of specific visual and auditory stimuli exert a significant impact on perception2,3. In neuroscience, most experimental studies employ the experimental design requiring perceptual decision-making to distinguish between multimodal and unimodal sensory effects4,5. The models based on Bayesian decision theory succeed in explaining the mechanism underlying multisensory integration, especially the mechanism of perceptual enhancement for combinations of multiple sensory stimuli. The models based on the drift–diffusion theory could explain reaction time in perceptual decision-making of multisensory stimuli6,7. These models are based on the hierarchical structure, namely feedforward structure, in which sensory information is processed in the corresponding unimodal sensory system (e.g., visual cortex) and then transferred to association areas (via a single pathway) for integration with other types of sensory information. According to evidence that the view of the feedforward system is not necessarily consistent with the results of perturbation studies, in which a relevant brain region is inactivated, systems for multimodal sensory integration consist of multiple pathways and are more multifaceted than the feedforward view8. Such interaction between sensory modalities and integration of sensory information through these pathways before it reaches the association cortex would allow for faster perception than the conventional feedforward processing. In addition, because responses of single neurons during multisensory integration are heterogenous, it is also suggested that the contribution of population-level dynamics to the mechanism should be considered8. From the perspective of computer science, one made attempts to model a visual system based on the convolutional neural network (CNN)9 and the mechanism for sound source localization10. Regarding audio-visual integration, the model with self-supervised learning, CNN, and Bayesian theory proposed the mechanism that utilizes correspondence and temporal coincidence between features, each of which is extracted from visual or auditory stimuli11,12,13,14,15,16,17,18,19,20,21,22,23,24.

Sensory information comprises an uninterrupted time series, and sensory organs transmit it as a continuous signal to downstream areas. Sensory neurons during such a sensory stimulus presentation exhibit dynamic firing patterns, but not steady firing intensities25. In the early stage of multimodal integration, sensory organs or low-level brain regions, such as the retina/primary visual cortex for the visual system or cochlea/primary auditory cortex for the auditory system, need to extract useful information from such a continuous signal and to encode extracted information as a dynamical state of neural activity. Most of the studies concerning multisensory integration have focused on how to utilize acquired information, i.e., the features obtained after processing the continuous signals, but not on how to extract information from continuous signals26,27. In addition, the encoded sensory information is assumed to be represented by steady firing rates of a neural population (i.e., a static vector), not by a dynamical state of them. Indeed, the time-varying activity of a neural population has been observed in various brain regions and showed its relevance to brain functions28. Perception of multisensory stimuli depends on the timings of stimuli such as stimuli presented with a certain delay3. This dependence of multisensory integration on the timings could be achieved only with the model based on the dynamical state. Furthermore, because the process is carried out in an unsupervised manner, it is likely that frequent simultaneous presentation of signals from different sensory modes should serve as the cue. Although how multimodal information is constructed from continuous signals has been studied22 from the perspective of machine learning, most of the studies utilize a steady state of the neural population, rather than a dynamical state, as the encoding relies on an artificially layered structure that is not seen in the brain11,12,13,14,15,16,17,18,19,20,21,22,23,24.

In this study, we aimed to propose a potent model for understanding the mechanism of multisensory integration in the early stage implemented by the brain based on the previously proposed model for chunking29. The model is based on reservoir computing (RC) because RC is a recurrent network model that handles time series data30,31. While most models for chunking focus on the transition probability between elements that make up a chunk32,33,34,35, the model utilizes trajectories of states of the neural network responding to sequential inputs. Because sensory signals are not always comprised of separable elements such as letters in a string, the model is more likely to provide a plausible mechanism. Furthermore, because the neuronal network in sensory organs, such as the retina for the visual system, rarely shows experience-dependent plasticity, RC in which recurrent connections are fixed is structurally closer to the system engaging in sensory processing than other types of recurrent neural networks in which all connections are supposed to be modified for learning. Similarly, a reservoir computing model based on predictive coding (PCRC) was proposed36. The PCRC model was constructed such that the sensory information of each modality was supposed to be encoded as predicted information, namely, the encoding neural activity is supposed to reproduce the input. Therefore, in the model, each sensory input is essentially unaffected by the other types of sensory signals, and interaction between different sensory modalities was not considered. On the other hand, the proposed model enables us to consider the effect of direct interactions between sensory modules, which has been suggested by several studies37,38,39. In this study, we intended to apply the previously proposed model29 to realize information integration between different modalities through the segmentation of specific stimuli co-occurrence in repeatedly presented time series data and to encode it as a dynamical state.

Results

Collaborative multimodal RC model

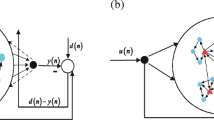

We demonstrated the performance of the proposed collaborative RC model for multimodal time-series data29. In the brain, there are different systems, each of which is responsible for the processing of a specific sensory signal, such as signals from the visual system or the auditory system. Therefore, in the model used in this study, two RC networks, each of which is later referred to as an RC module, interacted with each other by feeding their output to the other module for learning. As described below, the model received different modal inputs (images and text tones) to be integrated, each of which is processed by an RC module that corresponds to a sensory system. Each RC module consisted of rate-based neurons in a recurrent network and linear readout units in the output layer. An RC module received the time-varying inputs of a modality, which differed among the modules (Fig. 1A). A neuron in a reservoir received input from one of the input neurons. All neurons were mutually connected within each reservoir, and a portion of these neurons projected to the readout units, which projected back to all neurons belonging to similar RC modules. The two reservoirs had different recurrent wiring patterns and were not identical. Readout activity z(t) was determined by the weighted sum of the activities \({\varvec{r}}\left(t\right)\) of the reservoir neurons projecting to the readout:

Collaborative multimodal reservoir computing model and signals used. (A) In the collaborative multimodal reservoir computing (RC) model, two non-identical reservoirs are activated by different input neurons' activity. The readout weights of each RC system undergo supervised learning with a teaching signal generated by the output of the partner network. Each input neuron of RC receives different modality inputs. In this simulation, we used a text-tone signal and an image signal. (B) Example responses are provided for input neurons to the text-tone modality signal. (C) Example responses are provided for input neurons to the image modality signal. Our demonstration uses three chunks, with each chunk displaying a fruit, namely an apple, grape, and banana.

In addition, we set a higher layer in which the units received outputs of the readout units in the RC modules. \({o}_{i}\left(t\right)\) denotes the activity of a unit in the association layer, used to calculate the accuracy rate (refer to “Methods”). The weight vector \({{\varvec{W}}}^{{\varvec{o}}{\varvec{u}}{\varvec{t}}}\) was modified through the first-order reduced and controlled error (FORCE) learning algorithm40, whereas recurrent and feedback connections were non-plastic because the model could solve the present task without modifying these connections. In our model, each RC used the teaching signal generated by the output \({\varvec{z}}\left(t\right)\) of the partner network through the learning process of the FORCE algorithm, which enabled the model to demonstrate unsupervised learning29.

During the demonstration, we used chunks that consisted of modal input pairs, which mimicked the multimodal sensory inputs. In the present study, one RC received an image, whereas the other received text comprising a short tone signal representing a character (Fig. 1B). Text inputs were implemented as the input with a time course different from that of images. A letter input transiently activates the corresponding input neuron to the letter, that is, the number of input neurons equals the number of letters. Random sequence inputs were generated by randomly chosen letters, the length of which ranges from 5 to 8. Contrarily, after scaling up or down to an identical size, the image was decomposed into RGB components (Fig. 1C). Subsequently, each of these components was divided into 10 sections from top to bottom. A section was scanned from left to right in a window, followed by pooling the scanned image within the window. Eventually, an image was converted into a 30-dimensional series of data and the length of the scanned image was converted into time series data, according to the length of the text tone inputs. Thus, the number of input neurons coincided with 30 neurons. We used a Gaussian noise image as the random sequence, which was processed similar to image signals. Image signals can be constructed in other ways; for example, an image is encoded as a static vector, following which the value of an element in the vector is fed as a static input throughout the duration. However, our goal was to determine if the proposed model achieved responses to simultaneously presented time-varying signals with varying characteristics because they originated from different modalities. Therefore, we composed input signals such that a set of co-current time series represented a chunk, each of which originated from different modalities. We intended to demonstrate the behavior of the proposed model in response to inputs without co-occurrence.

Learning of multimodal multiple chunks

We demonstrated the mechanism by which the model learned multiple chunks. The input was made by alternating input sequence and a chunk that was randomly chosen with the same probability among the three. (Fig. 2A, left). In the model, the number of the readout units was set to three, \({z}_{i}^{RCj}\) for the jth RC module (i = 1, 2, 3). The teaching signals are defined by the following equations.

Learning of multimodal multiple chunks. (A) Left: Three chunks of apple (red), grape (blue), and banana (green) separated by random sequences recur at equal frequencies in the input. Right: These chunks are repeated without random sequence intervals. (B) The activity of the output units upon presenting each chunk while using input signals separated by random sequence intervals. The red, green, and blue shades indicate periods of learned input “apple,” input “banana,” and input “grape,” respectively. (C) The behavior of the output units upon presenting each chunk with input signals not separated by intervals. The red, green, and blue shades indicate periods of learned input “apple,” input “banana,” and input “grape,” respectively.

We trained the model by using input sequences in which three chunks appeared randomly and consecutively with equal probability (Fig. 2A, right). Thus, a similar RC system could easily learn multiple chunks (Fig. 2C). The selective response to each chunk was self-organized by stimulus co-occurrence upon each RC receiving input from different modalities.

Next, we investigated the mechanism by which activities of reservoir neurons encoded chunks. We trained the network on sequences containing three chunks and random sequences (Fig. 2A left). Each output neuron responded to the presentation of a specific chunk (Fig. 2B). Concerning reservoir neurons, some reservoir neurons responded to more than one chunk in each reservoir. As learning proceeds, the readout units could be classified into three groups, each of which exhibits selectivity to a specific chunk. The readout units showed time-locked responses to the chunk presentation (Fig. 2B). The activity emerged from the onset of the chunk presentation and then fell to the end of the presentation. This delayed response is presumably due to the teacher signal that is increased as selectivity becomes stronger. Readout activities of RC1 and RC2 in response to chunk presentation were considerably similar to each other. The detailed connection patterns within the reservoir units differed between the modules. Furthermore, these modules received time-varying inputs with different characteristics owing to dissimilar modalities. Nonetheless, the teacher signal based on the readout activity was provided to the partner RC module, such that the readout activity tended to imitate that of the partner RC module, thereby generating similar outputs.

Following learning, the norm of \({{\varvec{W}}}^{{\varvec{o}}{\varvec{u}}{\varvec{t}}}\) became smaller (Fig. 3A1 and A2), thus suggesting that a smaller portion of the units in the reservoir contributed to the readout activity after learning than before learning. As shown in Fig. 3B, the reservoir neurons projecting to readout units were organized such that those selectively respond to a chunk.

Evolution of reservoir-readout connections during and after learning. (A1) Time changes of distribution in the weight matrix connecting the reservoir internal unit to the readout unit. On the far right, a distribution of normalized connection magnitudes is shown for comparison with the distribution shapes across the stages of learning. The connections were normalized by the maximum value for each timing (A2). A detailed distribution of reservoir-readout connections is shown before, during, and after learning within the indicated range of Wout. White indicates that only a small portion of connections fall within a given range, whereas black indicates that many connections fall within a given range. (B) Selective decode responses to individual chunks (colored intervals) are self-organized. The input contains random sequences. The responses are gray-scaled according to their selectivity to the chunks. Left: the case where selectivity to a specific chunk by reservoir neuron projecting to readout units was clear. Right: the case where the selectivity was less clear.

Next, to characterize the dynamics of reservoir networks during chunk presentation, we performed the principal component analysis to all reservoir units N. During each chunk presentation, the activity of the RC internal nodes drew a specific trajectory in the PC1 to PC3 space (Fig. 4A)41,42,43. The trajectories of reservoir neurons in RC1 (Fig. 4A top) and RC2 (bottom) appear different even for the same chunk owing to different connection configurations of reservoir networks and different time courses of inputs. Next, we examined the degree to which the trajectories corresponding to each chunk are separated from each other. Figure 4B displays the time course of relative distances between the "apple" trajectory and the other trajectories. Those trajectories were separated during the stimulus presentation without passing near the "apple" trajectory. The averaged distances between the trajectories show that the separation is sufficient (Fig. 4C). Thus, the trajectories were well distinguished between the chunks. It has been reported that population neural activity responsible for cognitive tasks, such as sensory perception, decision making, and motor control, is embedded in a lower dimensional subspace of the dimension N of the neural population28. To verify if the present model can achieve this feature, we characterized the dimension of the reservoir dynamics by calculating the accumulative contributions from the eigenstate with the largest eigen value. As shown in Fig. 4D1, the characteristics of the cumulative contribution of eigenstates differed between RC1 and RC2, presumably due to differences in temporal features between stimuli. We found a common tendency of increase in the dimensions immediately after learning. The trajectories were encoded with a low dimension for the network before learning (C = 0, which stored no information at all), yet as learning progressed, fewer eigenstates were needed for reconstruction, suggesting that the trajectories of reservoir networks were embedded within the lower-dimensional spaces. Although the effective dimension44 for the trajectory of RC1 was smaller than that of RC2 (Fig. 4D2), the gradual decrease of both these trajectories and the increase in the learning iteration C were consistent with the pattern seen in the cumulative contribution ratio (Fig. 4D1). The difference between trajectories in RC1 and RC2 was examined by varying the input parameters, and the results suggest that this difference depends on the timescale and gain of the input signal (Supplementary Materials, Fig. S1).

The characteristics of the trajectories of reservoir dynamics during stimulus presentation. (A) Results of projecting the activity inside the reservoir computing model for individual chunks (colored intervals) into the space from PC1 to PC3. Responses are self-organized for each chunk. The input contains a random sequence. Responses are color-coded according to their selectivity for chunks. Top: for the RC1 module. Bottom: for the RC2 module. (B) Distances between chunks of low-dimensional trajectories of reservoir dynamics. The distances \({d}_{XY}\left(t\right)\) were measured with respect to the “apple” trajectory (Y was set to “apple”). The shaded area indicates the deviation \({\sigma }^{\text{X}}(t)\). Because presentation times vary between chunks, the midpoint of the presentation time was set to 0, and the relative time from that point was used to display the data. (C) The degree of separation \({r}_{\text{XY}}\) between trajectories. The left and right sides indicate RC1 and RC2, respectively. The degree was defined in the Methods sections. The smaller the value is, the longer the distance between trajectories. (D1) Change in the cumulative contribution ratio before and during learning. The ratios for RC1 and RC2 are displayed on the left and right sides, respectively. (D2) Changes in the effective dimension trajectories for RC1 and RC2 after an increasing number of learning iterations. See the “Methods” section for the calculation of the effective dimension. PC principal component.

These results used the optimal parameter set, obtained by parameter exploration. The effect of different parameters on performance is summarized in the Supplementary Materials. The performance was found to depend on the time constant (τ) of reservoir neurons and the strength (g) of recurrent connections among reservoir neurons (Supplementary Figs. S2, S4). In addition to the model parameters, the timescale of the inputs was also found to affect the performance (Supplementary Figs. S2, S3, S4).

Variations of interaction between sensory modules

As shown above, the interactive RC model could segment paired sensory signals from continuous inputs and encode them as the dynamical state of sensory RC modules. In the model, the interaction between sensory modules is implemented in the teaching signal. Some studies have reported that interaction between different sensory information is achieved by direct connections between different sensory systems, as well as by top-down control from the higher systems, such as the association cortex37,38,39. For the interactive RC model, we could consider an alternative form of interaction between sensory modules when the readout neurons are interpreted as elements within the corresponding sensory module (Fig. 5A). In a possible form shown in Fig. 5A1, a portion of reservoir neurons in an RC module projects to those in the other RC module. This is the straightforward model of direct interaction between sensory systems. In another possible variation, the readout neurons feedback not only to reservoir neurons in the same module but also to the ones in the other module (Fig. 5A2). In the last model, the readout neurons of both modules are connected (Fig. 5A3). Unlike the original model, outputs of RC1 and RC2 are not necessarily associated in the models A1 to A3, to examine whether the association was essential for the readout’s learning. Therefore, the neurons in the association layer must be responsible for the associations between the outputs from RC1 and RC2. To do this, we introduced a simple learning rule based on the Hebb rule into the connections from readouts of RC modules to neurons in the association layer (see “Methods”) while, as in the original model, connections from reservoir neurons to readouts were trained by FORCE learning. Figure 5B shows a comparison between these alternative models and the original model (Fig. 1A) in terms of learning accuracy. Among these variations from A1 to A3, model A2 performed the best, but it was worse than the original model. With respect to the models A1 to A3, it is necessary that readouts of each RC module should show selectivity to the corresponding sensory modal input of a specific chunk. To confirm this, the accuracy at the level of RC modules was examined (Fig. 5C). These results indicate that alternative models did not percept the corresponding sensory modal inputs well. According to the result that Fig. 5C are similar to Fig. 5B, the low accuracy of models A1 to A3 can be attributed to the processing at RC modules, suggesting that the ways of interaction in those models should not be appropriate.

Variations of the model that have direct interactions between sensory modules. (A) Variations of ways of interaction between sensory RC modules. A1: The reservoir neurons in an RC module send connections to those in the other RC module. The connection matrices between the reservoirs Win, inter were determined in the same manner as Win, but the probability of connections p was set to 0.1. A2: The readouts in an RC module feedback to reservoir neurons in the other RC module. The connection matrices of the inter-RC feedback Wback, inter were determined in the same manner as Wback with the same parameters as those for Wback. A3: The readouts in RC1 and RC2 were connected with each other. All-to-all connections determined the connectivity across RC modules. (B) Comparison of the accuracy between the original and alternative models A1 to A3. (C) The accuracy of the readout responses in RC1 (green) and RC2 (orange).

The importance of co-occurrence matters

The original model could recognize the co-occurrence of paired stimuli with different modalities. We demonstrated the model's response to inputs for missing paired stimuli and delayed stimuli. The co-occurrence of the corresponding stimuli in each modality was the key to activating the model. We divided the validation into a case where the model was trained with manipulated signals and the learning accuracy was verified with an unmanipulated input and a case where the manipulated signals were input to the model trained with unmanipulated signals in the validation phase. The accuracy was computed using o(t) and the integrated output from the model. The missing input signal replaced one of the signals in a chunk pair with another signal or a random sequence, with a probability m (Fig. 6A). This probability varied from 0.0 to 1.0 in increments of 0.1. The correct response rate tended to decrease with an increase in the non-co-occurrence rate of the stimulus (Fig. 6B, left).

Reactions activated by non-contingent input through replacement and delay. (A) Three chunks of an apple (red), grape (blue), and banana (green) separated by random sequences recur at equal frequencies in the input. In non-co-occurrence signals, these chunks are swapped with a certain probability m. (B) Left: The relationship between non-coincidence m and the accuracy in training signals. Right: The relationship between non-coincidence m and the accuracy in testing signals. (C) Three chunks of an apple (red), grape (blue), and banana (green) separated by random sequences recur at equal frequencies in the input. In non-co-occurrence signals, these chunks are delayed with a certain time d. (D) Left: The relationship between the delay time d and the accuracy in training signals. Right: The relationship between the delay time d and the accuracy in testing signals.

Next, we added a delay of time d to one of the signals, followed by its input (Fig. 6C). The delay time d varied from 0 to 250 in increments of 25 ms. Upon its input during learning, the accuracy decreased (Fig. 6D). Thus, in the case of missing signal input, the correct response rate tended to decrease with an increase in the inconsistency rate.

The aforementioned validation results indicated the importance of the temporal co-occurrence of specific stimulus patterns of each modality for model activation. In other words, the co-occurrence of specific information patterns in each heterogeneous modality was important for model activation and is key to information integration.

Discussion

The brain is likely to associate with simultaneously presented visual and auditory stimuli. Repetitive simultaneous presentation of stimuli of different modes would promote the integration of the stimuli into a chunk by the brain to recognize the external event. This may be possible even in cases where simultaneous stimuli are not necessarily attributable to an identical event or object. Considering the importance of the temporal relationship between stimuli and the frequency of occurrence, we proposed a possible mechanism to integrate time-varying stimuli from different modalities. By examining how interactions between sensory modules affect multisensory integration, we found that the best performance (among the examined ways of interaction) was by the interaction of feedback from the outputs of appropriately learned sensory modules with alignment between sensory modules, suggesting that anatomical connectivity facilitates such an interaction between different sensory systems.

Given that an input activates the i-th readout in either RC module, the teaching signal is supposed to reinforce the i-th readout in the partner RC module by adjusting reservoir-readout connections. Thus, the input will be encoded by the i-th readouts of both RC modules. Considering that the brain possesses sensory module such as visual and auditory cortices and the modules directly interact each other, the interaction between RC modules might be the key for understanding the brain mechanism of multisensory integration. The earliest region where different sensory information converges is the superior colliculus (SC)45,46. Input from the association cortex assists the SC neurons in learning how to respond to multisensory inputs. In the proposed model, neurons in the association layer correspond to SC neurons, and connections between readout and association neurons should be regulated by the association cortex. However, the proposed model could not incorporate such learning because the association neurons are simplified and the higher layer was not included. In the computational model of multisensory integration based on such anatomical connectivity47,48, the unisensory modules that send sensory signals to SC neurons are designed to compete with each other in a winner-take-all fashion during the learning phase; this corresponds with the teaching signals employed in the proposed model.

The PCRC model has been proposed as a model to process time-varying sensory signals and encode them as the dynamical states of neural activity36. In the model with a similar structure to the present model, each sensory signal is encoded by the corresponding sensory RC such that the error between a sensory input and the RC output is minimized. Then, a multisensory signal is encoded by combining visual and auditory sensory information processed by the sensory RCs at the upper layer. Because the PCRC model uses predictive coding for encoding49,50, each sensory RC is trained such that it can generate the output that is the same as the given sensory input. Therefore, a sensory signal of a modality is essentially processed within the corresponding sensory module, while the proposed models interact with the other sensory module. (It should be noted that feedback from readouts is affected by the other sensory module, even in the original model.) If direct interaction between sensory modalities is to be considered, it may be necessary to redefine the essential mechanism; for example, what an RC module predicts is the input of a single modality or integrated inputs of related sensory modalities. On the other hand, the interaction between modalities is incorporated in the proposed model, although it is designed as the teaching signal. Furthermore, the model can be transformed as shown in Fig. 6, allowing us to evaluate how the interactions should be, although we need to find a better learning mechanism. From the viewpoint of the learning principle, it is essential in reservoir computing to project an input into a higher dimensional complex time-series and to construct the desired output from the time-series. In the basic form of reservoir computing, these two processes can be performed simultaneously by training connections from reservoir units to readouts. While the former is achieved in both the PCRC and the proposed model, the latter is different between these two models. In the PCRC model, these processes are achieved so that the output of readouts can reproduce inputs by using existing inputs as teaching signals. Therefore, to construct outputs for a higher-level structure such as the integration module, separate processing is required. The proposed model avoids this by using outputs from the other module as teaching signals.

The co-occurrence of stimuli of different modes would be attributable to an identical cause, particularly in the case of frequent presentation. The proposed model was capable of integrating simultaneously presented paired stimuli, and its performance deteriorates with decreased probability of co-occurrence or an increased delay of the presentation of one of the paired stimuli (Fig. 6). Concerning the influence of the delay on multisensory integration, asynchronous presentation (i.e., with the delay) causes weak integration51. Perception by multimodal integration decreased as the delay became longer. The percentage of correct perception drops to 50% when the delay increases to 200–300 ms3. Although the accuracy shown in Fig. 6D is not necessarily comparable to the correct perception percentage, the model showed a similar dependence on the delay. However, the effect of the auditory and visual stimuli on the perception is asymmetric; for example, pairs of visual and auditory stimuli in which the visual one precedes the auditory one are sometimes recognized as a synchronous stimulus pair3. Furthermore, such perception highly depends on stimulus types (such as flash and beep, a tool and its sound, and speech with video), suggesting that a higher cortical area may be involved in the process. This property would be difficult to reproduce within the present model because the top-down control that would arise from such a higher structure is not represented in the present model.

The probability of co-occurrence of paired stimuli and the temporal delay between these stimuli displayed a strong association with the “McGurk effect”3 and “ventriloquism effect”52; the “McGurk effect” is a phenomenon in which an unfamiliar combination of sensory information, each of which is familiar, leads to the new perception, and “ventriloquism effect” is the auditory illusion that sound is perceived as coming from the different location, but not from the location where the sound is actually generated owing to visual stimuli. Both are major issues in multisensory integration because the dependence of perception on temporal aspects of the stimuli would imply that the integration process depends on dynamic shifts in properties of neural activity; the chunking mechanism based on neural population dynamics may also be involved in the illusional perception. The “McGurk effect” can be resolved by treating the multimodal information as a static representation of a joint probability distribution representing combined stimuli of different modalities53,54; however, it is impossible to treat the “ventriloquism effect” by such representation because it does not incorporate the time course of sensory signals. In particular, it is reported that the “ventriloquism effect” is affected by the temporal difference between visual and auditory stimuli and the symmetrical/asymmetrical effect between visual and auditory stimuli exits52. The present model would enable us to consider the effect of the temporal difference or how to interact between the modules. In the ventriloquism effect, when there is a mismatch between the location of auditory and visual stimuli, a calibration occurs in which the perception of the location of auditory stimulus is drawn to the location of visual presentation. Traditionally, it has been assumed that repeated experience of such mismatch stimuli is required for this calibration to be achieved, but one study found it to occur after only the immediately preceding trial55. Extensions of this model that can encode stimulus location may allow us to test whether such short-term calibration can occur via a bottom-up mechanism, such as memory retention by recurrent dynamics. Therefore, the proposed model may contribute to a unified understanding of how temporal characteristics affect multisensory integration efforts beyond the McGurk and ventriloquism effects.

In the proposed model, neurons in the higher association layer were modelled to encode the results of multimodal integration by responding to specific co-occurrences of paired stimuli. This layer should be modelled to integrate multimodal information from the lower layers in a self-organizing manner, which would enable each neuron in this layer to display its response to specific combinations of multisensory information among numerous combinations. This modified version could be tested as a possible underlying mechanism of the “McGurk effect.” For example, the model could be validated by evidence showing that the degree of the “McGurk effect” depends on the temporal order and intervals of auditory and visual stimuli2. In our model, the interaction between the different modal modules (RC1 and RC2) was direct. The interaction via a higher layer may be possible because perception requires top-down control from higher-order brain areas, such as the association cortices, whereas direct connections between primary sensory areas allow those areas to interact with each other. In the ventriloquism effect, the influence of visual information on auditory information could be stronger than vice-versa, although either is influenced by the accuracy of modal information.56,57. It is important to determine whether the above-mentioned asymmetric result is caused by the characteristics of bottom-up processing (which may arise from asymmetry between processing of different sensory signals), by an asymmetric interaction between different sensory modules, or by asymmetric top-down control56,57,58,59,60,61.

Methods

Details of the network models

In this study, we adopted a collaborative RC model that was originally developed to extract meaningful segments from information streams. The model consisted of two recurrent networks called echo state networks (ESNs)62, each of which has three layers: the input, reservoir, and output. The reservoir layer is a recurrent neural network that consists of N rate-based neurons. A neuron in the reservoir layer obeys the following dynamics:

where i(t) is the activity of the neuron in the input layer, g is the parameter that scales connections between neurons in the reservoir layer and controls the complexity of the network behavior; the reservoir network shows chaotic spontaneous activity for g > 1. W, Win, and Wback are connections within reservoir neurons, those from the input to the reservoir layer, and those from the reservoir to output layers, respectively. r(t) and Wout are the output of the reservoir neurons, and connection matrix from the reservoir neurons to output neurons that is also called the readout weight matrix, respectively. Each output neuron receives inputs from S reservoir neurons by the weights Wout. The output neurons projected back to all reservoir neurons. Learning was conducted by modifying the readout weight matrix Wout based on the FORCE algorithm that minimizes the error between the output and the teaching signal40. The elements of W are generated by a Gaussian distribution of N(0, 1/(pN)) where p is the probability of connections between reservoir neurons. The elements of Win were determined such that each row had only one non-zero element generated by a normal distribution N(0,1). Elements of Wback were drawn from a uniform distribution [-1, 1]. The initial values of Wout are generated by a Gaussian distribution N(0, 1/N). Table 1 summarizes parameter values. The time step of the simulation is 1 ms. All experiments were performed 60 times31,63.

Teaching signals

In the learning rule, we normalized the outputs such that the mean and the standard deviation of outputs were satisfied with 0 and unity, respectively:

where \({\varvec{\upmu}}\left(t\right)\) and \({\varvec{\upsigma}}\left(t\right)\) were calculated as follows:

with a sufficiently long period T = 15,000 ms.

The teacher signal for the proposed model was determined using the following equation:

where \({\widehat{z}}_{i}\left(t\right)\) is the i-th normalized output. The threshold linear function [x]+ returns 0 for x ≤ 0 and x for x > 0; therefore, the output value was positive. The numerator inside the function tanh() takes a positive value if the i-th readout showed the largest response because the second term of the numerator represents an average except the i-th readout. Therefore, when the i-th readout of an RC module responded to a chunk the most, the teacher signal reinforces the i-th readout of the other RC module. Each output neuron in an RC module received the teacher signal defined by Eq. (10) from the partner RC module. The signal exerted a collaborative or competitive influence depending on the chunk presentation. The type of chunk an output neuron should learn was not assigned in advance, and the model was self-organized based on the teacher signals. Because the teacher signal is symmetric concerning the permutation of the index, output neurons with the same index responded to the same chunk. In the results, output neurons in both RC modules showed responses similar to each other. Usage of the tanh function limited output ranges and prevented the model from responding to a specific chunk too much. β and γ were set to 3 and 0.5, respectively. The error between the teacher signal and the output was defined using Eq. (11).

Designed output

From the symmetry of the teacher signals for RC1 and RC2, \({z}_{i}^{{\text{RC}}1}\) and \({z}_{i}^{RC2}\) react to a similar chunk. However, multimodal information may lead to varied response profiles of the readout units for RC1 and RC2. We should integrate the RC1 and RC2 outputs to calculate the accuracy rate of the model. Therefore, we used oi(t) for the final performance comparison of the model. We set the constants to δ = 0.5.

In the alternative models presented in Fig. 6A1, A2, and A3, the teacher signal is closed in the own RC modules unlike the original model. Therefore, readouts between RC1 and RC2 do not always correspond. For example, the i-the readout in the RC1 is responsible for the chunk “apple”, while the j-th readout in the RC2 may respond to the chunk “apple”. The neurons in the association layer must organize the outputs of RC1 and RC2 so that each neuron in the layer represents one of the learned chunks. For this purpose, we introduced the learning rule into connections from the readouts of RC1 and RC2. Let \({w}_{ij}^{x}\) be the connection from the j-th readout of RCx to the i-th neuron in the association layer. The output of oi(t) and the dynamics \({w}_{ij}^{x}\) is defined as

where N is the number of readouts or neurons in the association layer (N = 3). The first and second term of the right-hand side represents the Hebbian rule and the normalization, respectively.

Evaluation method

Let o(t) be the output to be analyzed. Convolution of o(t) with the window function w(t): \({\int }^{t}w\left(\tau \right)o\left(\tau -t\right)d\tau\) was taken. For the window function, the Gaussian function was used. Then the value of convoluted output was normalized within the range of 0 and 1. The normalized time course of the convoluted output was segmented into periods of chunk presentation. For each period, the unit showing the largest response was determined. After this process, we found which output unit responded to each chunk presentation for all chunk presentation. According to which chunk an output unit most responded to, we determined the relationship between output units and chunks. We should note that “no responses” of all output neurons were regarded as the correct response to random sequences. Based on this result, we could obtain a confusion matrix: each row of the matrix represents a chunk, whereas each column represents an output unit. An element of the matrix includes the number of counts that the corresponding unit (column) responded to the corresponding chunk (row). If the indices of output units were sorted based on the indices of chunks that each unit was related to, then diagonal elements contain the counts of correct responses, which represent true-positives for binary discrimination.

According to the obtained confusion matrix, the accuracy rate was calculated as follows:

where C represents the total number of stimulus presentation epochs, including random sequences (the sum of the counts in all boxes of the matrix). cij represents the element in the i-th row and the j-th column. Therefore, the numerator is the sum of diagonal elements, indicating the sum of correct responses for all presentations. Considering learning was unsupervised, the stimulus selectivity was not explicitly imposed on each unit. Therefore, we determined the assignment of chunk selectivity for each unit such that the accuracy could be maximized.

Activities of the reservoir unit

To define the response selectivity of reservoir neurons, we sorted them based on their mean activation phases:

where \(\overline{{\varvec{r}} }\left(t\right)\) is the normalized output of a reservoir neuron. Each reservoir neuron exhibited a phasic response to a specific chunk, which indicated its selectivity. We considered only the reservoir neurons projecting to output neurons.

Calculation of relative distance between trajectories

A state of an RC module in low-dimensional space spanning from PC1 to PC3 at t when an input X is presented for the i-th trial is denoted by \({{\varvec{x}}}_{\text{X}}^{i}\left(t\right)=({x}_{\text{X}}^{i}\left(t\right), {y}_{\text{X}}^{i}\left(t\right), {z}_{\text{X}}^{i}\left(t\right))\) where X = apple, grape, or banana. The point at t averaged over trials, which is denoted as the center point at t for input X, is obtained as \(\overline{{{\varvec{x}} }_{\text{X}}}\left(t\right)=(\overline{{x }_{\text{X}}}\left(t\right), \overline{{y }_{\text{X}}}\left(t\right), \overline{{z }_{\text{X}}}\left(t\right))\), where \(\overline{{x }_{\text{X}}}\left(t\right)=\frac{1}{{n}_{\text{X}}}\sum_{i=1}^{{n}_{\text{X}}}{x}_{\text{X}}^{i}\left(t\right), \overline{{y }_{\text{X}}}\left(t\right)=\frac{1}{{n}_{\text{X}}}\sum_{i=1}^{{n}_{\text{X}}}{y}_{\text{X}}^{i}\left(t\right), \overline{{z }_{\text{X}}}\left(t\right)=\frac{1}{{n}_{\text{X}}}\sum_{i=1}^{{n}_{\text{X}}}{z}_{\text{X}}^{i}\left(t\right)\) And nX is the number of trials for input X.

Thus, the distance between the center points of input X and Y, \({d}_{\text{XY}}(t)\), is obtained by \({d}_{\text{XY}}\left(t\right)=\left|\overline{{{\varvec{x}} }_{\text{X}}}\left(t\right)-\overline{{{\varvec{x}} }_{\text{Y}}}\left(t\right)\right|\), where | | denotes the Euclidean norm. The variance in the direction of PC1 \({{\sigma }_{x}^{\text{X}}(t)}^{2}\) is \({{\sigma }_{x}^{\text{X}}(t)}^{2}=\frac{1}{{n}_{\text{X}}}{\sum }_{i=1}^{{n}_{\text{X}}}{({x}_{X}^{i}\left(t\right)-\overline{{x }_{\text{X}}(t)})}^{2}\). The deviation of the trajectories X around the center point at t is defined as \({\sigma }^{\text{X}}\left(t\right)=\sqrt{{{\sigma }_{x}^{\text{X}}(t)}^{2}+{{\sigma }_{y}^{\text{X}}(t)}^{2}+{{\sigma }_{z}^{\text{X}}(t)}^{2}}\).

Therefore, we defined the degree of separation between trajectories of input X and Y as \({r}_{\text{XY}}\), where \(r_{{{\text{XY}}}} = \sum _{{s = t - 100}}^{{t + 100}} \frac{{\sigma ^{X} \left( s \right) + \sigma ^{Y} (s)}}{{d_{{{\text{XY}}}} (s)}}\) .The trajectories have left transient states at the midpoint of presentation times t = 0. Therefore, we decided to characterize it by cutting out the 100 steps before and after t = 0 and integrating the time points.

The smaller the value of \({r}_{\text{XY}}\) is, the more separated the two trajectories are.

Effective dimension

Effective dimension is a measure of the effective number of principal components describing a trajectory44. For example, in the effective dimension, if n principal components equally share the total variance and the remaining N principal components have zero variance, then \({N}_{eff}=n\).

The effective dimension is given by

where \({\lambda }_{a}^{2}\) is the eigenvalue.

Data availability

The source code (Python code) is available at https://zenodo.org/record/5835322#.Yd0hUi2MuP0.

References

McGurk, H. & MacDonald, J. Hearing lips and seeing voices. Nature 264, 746–748 (1976).

Munhall, K. G., Gribble, P., Sacco, L. & Ward, M. Temporal constraints on the McGurk effect. Percept. Psychophys. 58, 351–362 (1996).

Stevenson, R. A. & Wallace, M. T. Multisensory temporal integration: Task and stimulus dependencies. Exp. Brain Res. 227, 249–261 (2013).

Gu, Y., Angelaki, D. E. & DeAngelis, G. C. Neural correlates of multisensory cue integration in macaque MSTd. Nat. Nerurosci. 11, 1201–1210 (2008).

Raposo, D., Sheppard, J. P., Schrater, P. R. & Churchland, A. K. Multisensory decision-making in rats and humans. J. Neurosci. 32, 3726–3735 (2012).

Ma, W. J., Beck, J. M., Latham, P. E. & Pouget, A. Bayesian inference with probabilistic population codes. Nat. Neurosci. 9, 1432–1438 (2006).

Schwartz, W. Diffusion, superposition and the redundant-targets effect. J. Math. Psychol. 38, 504–520 (1994).

Chandrasekaran, C. Computational principles and models of multisensory integration. Curr. Opin. Neurobiol. 43, 25–34 (2017).

Lindsay, G. W. Convolutional neural networks as a model of the visual system: Past, present, and future. J. Cogn. Neurosci. 33, 2017–2031 (2021).

van der Heijden, K. & Siamak, M. Goal-driven, neurobiological-inspired convolutional neural network models of human spatial hearing. Neurocomputing 470, 432–442 (2022).

Hu, D., Nie, L. X. Deep multimodal clustering for unsupervised audiovisual learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 9248–9257 (2019)

Owens, A., Wu, J., McDermott, J. H., Freeman, W. T., Torralba, A. Ambient sound provides supervision for visual learning. In European Conference on Computer Vision (Springer, 2016).

Pedro, M., Vasconcelos, N., Misra, I. Audio-visual instance discrimination with cross-modal agreement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (2021).

Owens, A., Efros, A. A. Audio-visual scene analysis with self-supervised multisensory features. In Proceedings of the European Conference on Computer Vision (ECCV) (2018).

Korbar, B., Tran, D., Torresani, L. Cooperative learning of audio and video models from self-supervised synchronization. Adv. Neural Inf. Process. Syst. 31 (2018).

Asano, Y., Patrick, M., Rupprecht, C. & Vedaldi, A. Labelling unlabelled videos from scratch with multi-modal self-supervision. Adv. Neural Inf. Process. Syst. 33, 4660–4671 (2020).

Arandjelovic, R., Zisserman, A. Look, listen and learn. In Proceedings of the IEEE International Conference on Computer Vision (2017).

Chung, J. S., Zisserman. A. Out of time: Automated lip sync in the wild. In Asian Conference on Computer Vision (Springer, 2016).

Alwassel, H. et al. Self-supervised learning by cross-modal audio-video clustering. Adv. Neural Inf. Process. Syst. 33, 9758–9770 (2020).

Aytar, Y., Vondrick, C., Torralba, A. Soundnet: Learning sound representations from unlabeled video. Adv. Neural Inf. Process. Syst. 29 (2016).

Jaegle, A., Borgeaud, S., Alayrac, J. B., Doersch, C., Ionescu, C., Ding, D., Koppula, S., Zoran, D., Brock, A., Shelhamer, E., Hénaff, O. Perceiver io: A general architecture for structured inputs and outputs. arXiv preprint arXiv:2107.14795 (2021).

Martínez, H. P., & Yannakakis, G. N. Deep multimodal fusion: Combining discrete events and continuous signals. In Proceedings of the 16th International Conference on Multimodal Interaction (ICMI '14) 34–41 (Association for Computing Machinery, 2014).

Zhu, H., Luo, M. D., Wang, R., Zheng, A. H. & He, R. Deep audio-visual learning: A survey. Int. J. Autom. Comput. 18, 351–376 (2021).

Tavanaei, A., Ghodrati, M., Kheradpisheh, S. R., Masquelier, T. & Maida, A. Deep learning in spiking neural networks. Neural Netw. 111, 47–63 (2019).

Okun, M. et al. Population rate dynamics and multineuron firing patterns in sensory cortex. J. Neurosci. 32, 17108–171019 (2012).

Ursino, M., Cuppini, C. & Magosso, E. Neurocmputational approaches to modelling multisensory integration in the brain: A review. Neural Netw. 60, 141–165 (2014).

Fang, Y. Y., Zhaofei, L. J. K. & Chen, F. A unified circuit of causal inference and multisensory integration. Neurocomput. 358, 355–368 (2019).

Duncker, L. & Sahani, M. Dynamics on the manifold: Identifying computational dynamical activity from neural population recordings. Curr. Opin. Neurobiol. 70, 163–170 (2021).

Asabuki, T., Hiratani, N. & Fukai, T. Interactive reservoir computing for chunking information streams. PLoS Comput. Biol. 14, e1006400 (2018).

Maass, W., Natschläger, T. & Markram, H. Real-time computing without stable states: A new framework. Neural Comput. 14, 2531–2560 (2002).

Jaeger, H. & Haas, H. Harnessing nonlinearity: Predicting chaotic systems and saving energy in wireless communication. Science 304, 78–80 (2004).

Dehaene, S., Meyniel, F., Wacongne, C., Wang, L. & Pallier, C. The neural representation of sequences: From transition probabilities to algebraic patterns and linguistic trees. Neuron 88, 2–19 (2015).

Perruchet, P. & Vinter, A. Parser: A model for word segmentation. J. Mem. Lang. 39, 246–263 (1998).

Wacongne, C., Changeux, J. P. & Dehaene, S. A neural model of predictive coding accounting for the mismatch negativity. J. Neurosci. 32, 3665–3678 (2012).

Botvinick, M. & Watanabe, T. From numerousity to ordinal rank: A gain-field model of serial order representation in cortical working memory. J. Neurosci. 27, 8636–8642 (2007).

Yonemura, Y. & Katori, Y. Network model of predictive coding based on reservoir computing for multi-modal processing of visual and auditory signals. Nonlinear Theory Appl. IECIE 12, 143–156 (2021).

Eckert, M. A. et al. A cross-modal system linking primary auditory and visual cortices: Evidence from intrinsic fMRI connectivity analysis. Hum. Brain Mapp. 29, 848–857 (2008).

Bizley, J. K., Nodal, F. R., Bajo, V. M., Nelken, I. & King, A. J. Physiological and anatomical evidence for multisensory interactions in auditory cortex. Cer. Cortex 17, 2172–2189 (2007).

Cappe, C., Rouiller, E. M. & Barone, P. Multisensory anatomical pathways. Hear. Res. 258, 28–36 (2009).

Sussillo, D. & Abbott, L. F. Generating coherent patterns of activity from chaotic neural networks. Neuron 65, 544–557 (2009).

Sussillo, D., Churchland, M. M., Kaufman, M. T. & Shenoy, K. V. A neural network that finds a naturalistic solution for the production of muscle activity. Nat. Neurosci. 18, 1025–1033 (2015).

Rivkind, A. & Barak, O. Local dynamics in trained recurrent neural networks. Phys. Rev. Lett. 118, 258101 (2017).

Newman, M. E. J. The structure and function of complex networks. SIAM Rev. 45, 167–256 (2003).

Abbott, L. F., Rajan, K., & Sompolinsky, H. Interactions between intrinsic and stimulus-evoked activity in recurrent neural networks. In The Dynamic Brain: An Exploration of Neuronal Variability and Its Functional Significance, 1–16. (2011).

Stein, B. E. & Stanford, T. R. Multisensory integration: Current issues from the perspective of the single neuron. Nat. Rev. Neurosci. 9, 255–266 (2008).

Alvarado, J. C., Stanford, T. R., Rowland, B. A., Vaughan, J. W. & Stein, B. E. Multisensory integration in the superior colliculus requires synergy among corticocollicular inputs. J. Neurosci. 29, 6580–6592 (2009).

Cuppini, C., Stein, B. E., Rowland, B. A., Magosso, E. & Ursino, M. A computational study of multisensory maturation in the superior colliculus (SC). Exp. Brain Res. 213, 341–349 (2011).

Cuppini, C., Stein, B. E. & Rowland, B. A. Development of the mechanisms governing midbrain multisensory integration. J. Neurosci. 38, 3453–3465 (2018).

Huang, Y. & Rao, R. P. N. Predictive coding. Wiley Interdiscip. Rev. Cogn. Sci. 2, 580–593 (2011).

Summerfield, C. et al. Predictive codes for forthcoming perception in the frontal cortex. Science 24, 1311–1314 (2006).

Liu, B., Jin, Z., Wang, Z. & Gong, C. The influence of temporal asynchrony on multisensory integration in the processing of asynchronous audio-visual stimuli of real-world events: An event-related potential study. Neuroscience 10, 254–264 (2010).

Vroomen, J., de Gelder, B. & Vroomen, J. Temporal ventriloquism: sound modulates the flash-lag effect. J. Exp. Psychol. Hum. Percept. Perform. 30, 513–518 (2004).

Magnotti, J. F. & Beauchamp, M. S. A causal inference model explains perception of the McGurk effect and other incongruent audiovisual speech. PLoS Comput. Biol. 13, e1005229 (2017).

Lindborg, A. & Andersen, T. S. Bayesian binding and fusion models explain illusion and enhancement effects in audiovisual speech perception. PLoS ONE 16, e0246986 (2021).

Wozny, D. R. & Shams, L. Recalibration of auditory space following milliseconds of cross-modal discrepancy. J. Neurosci. 31, 4607–4612 (2011).

Bonath, B. et al. Neural basis of the ventriloquist illusion. Curr. Biol. 17, 1697–1703 (2007).

Alais, D. & Burr, D. The ventriloquist effect results from near-optimal bimodal integration. Curr. Biol. 14, 257–262 (2004).

Choi, I., Lee, J. Y. & Lee, S. H. Bottom-up and top-down modulation of multisensory integration. Curr. Opin. Neurobiol. 52, 115–122 (2018).

Bruns, P. The ventriloquist illusion as a tool to study multisensory processing: An Update. Front. Integr. Neurosci. 13, 51 (2019).

Magosso, E., Cuppini, C. & Ursino, M. A neural network model of ventriloquism effect and aftereffect. PLoS ONE 7, e42503 (2012).

Chen, L. & Vroomen, J. Intersensory binding across space and time: A tutorial review. Atten. Percept. Psychophys. 75, 790–811 (2013).

Yildiz, I. B., Jaeger, H. & Kiebel, S. J. Re-visiting the echo state property. Neural Netw. 35, 1–9 (2012).

Toyoizumi, T. & Abbott, L. F. Beyond the edge of chaos: amplification and temporal integration by recurrent networks in the chaotic regime. Phys. Rev. E Stat. Nonlinear Soft Matter Phys. 84, 051908 (2011).

Acknowledgements

This work was supported by JSPS KAKENHI Grant Number 19H01140. We would like to thank Editage (www.editage.com) for English language editing.

Author information

Authors and Affiliations

Contributions

Conceptualization: I.K., K.K. Formal analysis: I.K. Investigation: I.K., K.K. Methodology: I.K. Supervision: K.K. Writing—original draft: I.K. Writing—review and editing: K.K.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kanemura, I., Kitano, K. Association between different sensory modalities based on concurrent time series data obtained by a collaborative reservoir computing model. Sci Rep 13, 173 (2023). https://doi.org/10.1038/s41598-023-27385-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-023-27385-x

- Springer Nature Limited