Abstract

Somatosensory processing can be probed empirically through vibrotactile psychophysical experiments. Psychophysical approaches are valuable for investigating both normal and abnormal tactile function in healthy and clinical populations. To date, the test-retest reliability of vibrotactile detection and discrimination thresholds has yet to be established. This study sought to assess the reproducibility of vibrotactile detection and discrimination thresholds in human adults using an established vibrotactile psychophysical battery. Fifteen healthy adults underwent three repeat sessions of an eleven-task battery that measured a range of vibrotactile measures, including reaction time, detection threshold, amplitude and frequency discrimination, and temporal order judgement. Coefficients of variation and intraclass correlation coefficients (ICCs) were calculated for the measures in each task. Linear mixed-effects models were used to test for length and training effects and differences between tasks within the same domain. Reaction times were shown to be the most reproducible (ICC: ~0.9) followed by detection thresholds (ICC: ~0.7). Frequency discrimination thresholds were the least reproducible (ICC: ~0.3). As reported in prior studies, significant differences in measures between related tasks were also found, demonstrating the reproducibility of task-related effects. These findings show that vibrotactile detection and discrimination thresholds are reliable, further supporting the use of psychophysical experiments to probe tactile function.

Similar content being viewed by others

Introduction

Psychophysical experiments can be used to probe somatosensory processing empirically. Quantitative measures of vibrotactile sensitivity, in particular, have been used to elucidate on the cortical mechanisms underlying such processing1,2. Such behavioural approaches have proven to be valuable and may reflect cortical mechanisms underlying somatosensory function. For example, GABAergic inhibition drives neuronal responses to sensory stimulation3,4 linked to behavioural outcomes. Measuring such behavioural outcomes may, therefore, provide information about cortical inhibition. The targeting of specific neuronal mechanisms of tactile function through psychophysics allows for the investigation of individual differences in healthy brain function or of impairments in disorders hypothesized to be driven by inhibitory dysfunction. Early work established the neurophysiological basis of tactile function in both nonhuman and human primates5,6,7,8,9,10. Psychophysical testing of sensory processing in general also has a long history11,12 upon which present-day research has been built.

A behavioural battery of psychophysical paradigms for assessing vibrotactile function13 was previously developed and shown to be successfully implementable in both adults and typically developing children. The vibrotactile stimuli used in the paradigms fall within the flutter range of touch (<50 Hz), which are processed by rapidly adapting (RA) I mechanoreceptors in the glabrous skin7,9. The neurophysiology of amplitude and frequency discrimination of low-frequency stimuli is well-characterized1,8,14,15, which has engendered the investigation of the neural coding linking sensation and complex cognition16. Thus, psychophysical testing using flutter-range stimuli permits interrogation of the somatosensory and higher-level systems in healthy and pathological neurobiology. This battery and its variants have been used to investigate tactile (dys)function in autism spectrum disorder17,18,19,20,21,22, Tourette syndrome23, and attention-deficit hyperactivity disorder24; in ageing25,26; in multimodal studies involving magnetic resonance spectroscopic measures of GABA27,28,29; in concussion30,31; and in conjunction with neurostimulation32. Dynamic detection thresholds, for example, are thought to reflect local feed-forward inhibitory mechanisms33,34,35. On the other hand, using an amplitude discrimination paradigm to present two stimuli simultaneously can probe lateral inhibition, where an adapting stimulus presented beforehand can sharpen the resulting response function15,36.

Despite the extensive use of vibrotactile stimulation paradigms in the literature, the reproducibility of detection and discrimination thresholds is as yet unknown. Determining their reproducibility is essential for the validation of past and future findings from basic science and clinical studies. It is also not well known whether these measures represent states or traits. Furthermore, as psychophysical experiments typically involve relatively long test sessions, it is worthwhile to investigate whether paradigms can be shortened without loss in reliability so that they may be applicable in clinical settings. Therefore, this study aimed to assess the test-retest reliability of vibrotactile detection and discrimination thresholds in healthy adults using an established vibrotactile processing battery comprised of paradigms (in both short and long forms) designed to target different aspects of tactile processing.

Methods

Participants

Fifteen adult participants (8 females/7 males) were recruited (mean age: 28.3 ± 3.9 years; age range: 21–37 years). Participants were required to meet the following eligibility criteria to take part in experiments: aged 18–40 years; no history of a clinically diagnosed neurological or psychiatric disorder; no history of concussion; right-handed; non-smoker; no recreational drug use (alcohol was permissible); not colour-blind; no stimulant medication use (birth control was permissible); educated to a high-school diploma level (or the equivalent) or higher. All criteria (except for handedness) were confirmed verbally by participants. All volunteers were right-handed, which was confirmed using the Edinburgh Handedness Inventory37. This study was approved by the Johns Hopkins Medicine Institutional Review Board and was performed in accordance with all relevant institutional guidelines and federal regulations. All participants provided written informed consent prior to their participation in the study.

Participants were tested three times over an approximately three-week period to test the reproducibility of their vibrotactile detection and discrimination thresholds. To the extent that was practicable given time constraints and participants’ availability, repeat sessions occurred approximately one week after another (mean session-to-session interval: 7.2 ± 2.0 days; interval range: 4–15 days).

Stimulus delivery

A CM-4 four-digit vibrotactile stimulator38 (Cortical Metrics, Carrboro, NC) was used for stimulus delivery. All stimuli were delivered to the glabrous skin of the left digits 2 and 3 (LD2 and LD3) using cylindrical probes (5-mm diameter) and presented within the flutter range (25–50 Hz) using sinusoidal pulses. The stimulator operates with 16-bit resolution and has a displacement accuracy of less than 1 μm. Its temporal accuracy is less than 1 ms39. Visual feedback, task responses and data collection were performed using a Dell Inspiron Mini laptop running Cortical Metrics software38 in Windows 7. Participants responded by clicking on the left or right buttons of a mouse using their right hand. The left mouse button corresponded to LD3 and the right mouse button correspond to LD2. In all tasks, stimuli were delivered pseudorandomly to LD2 and LD3. Great efforts were made in the design and fabrication of the vibrotactile stimulator to minimize any detectable noise. The internal mechanism of the CM-4 head unit is driven by a voice coil actuator and does not produce any audible cues during tactile stimulation38. This was confirmed from experimental data collected from three additional participants (see Data Availability statement for access to these results).

Vibrotactile paradigms

A previously published vibrotactile battery13 consisting of 11 separate paradigms was used in this study. The paradigms are illustrated in Fig. 1. Each task was preceded by three practice trials to familiarize participants with the goal of the specific paradigm. Participants were required to successfully complete all three trials to proceed to the testing component. Feedback was provided during training but not during the testing component. Participants underwent two versions of the battery: a short and long version. Each task in the short version had the same number of trials compared to the work published previously13 and was delivered twice over the three sessions (denoted Short(1) and Short(2)); tasks in the long version had twice the number of trials compared to the short, original, version, and was delivered only once over the three sessions (denoted Long). The short version took approximately 40 min to complete, while the long version took approximately 1 hr. Session order was randomized across participants. This experimental design allowed us to study the effect of version (Short vs. Long) and effect of training (week effect). Finally, the long version could be truncated to reflect a “Short(3)” measurement.

Schematic of the vibrotactile paradigms. (a) Simple and choice reaction time; (b) static detection threshold; (c) dynamic detection threshold; (d) amplitude discrimination with no adaptation; (e) amplitude discrimination with dual-/single-site adaptation; (f) sequential frequency discrimination; (g) simultaneous discrimination; (h) temporal order judgement; and (i) temporal order judge with carrier stimulus.

Simple (sRT) and choice (cRT) reaction time

A suprathreshold stimulus (frequency = 25 Hz; amplitude = 300 μm; duration = 40 ms) was delivered to LD2 or LD3, and participants were asked to respond as soon as they felt the stimulus (Fig. 1a). In the sRT task, participants simply needed to click any mouse button, whereas in the cRT task participants additionally had to indicate, using the left and right mouse buttons, on which finger they felt the stimulus (intertrial interval (ITI) = 3 s; Short(1) and Short(2): 20 trials; Long: 40 trials). For each individual, reaction times (for correct trials only in the cRT task) were calculated as the median reaction time. Intrasubject variability (ISV) was also calculated as the standard deviation of the values from all trials (outliers were removed using the method described in “Statistical analysis” below).

Static (sDT) and dynamic (dDT) detection threshold

In the sDT task, a suprathreshold stimulus (frequency = 25 Hz; starting amplitude = 20 μm; duration = 500 ms) was delivered to one of the two digits and participants were asked to respond on which finger they felt the stimulus (Fig. 1b,c). A one-up–one-down tracking paradigm (stimulus amplitude was decreased for a correct answer and increased for an incorrect answer) was used for the first 10 trials and a two-up–one-down (two correct answers were necessary for a reduction in test amplitude) was used for the remainder of the task (ITI = 5 s; Short(1) and Short(2): 24 trials; Long: 48 trials). sDT was calculated as the mean of the amplitudes of the last five values. In the dDT task, after a variable delay (0–2500 ms), a 25-Hz stimulus increased from zero amplitude (rate of amplitude increase = 2 μm/s). Participants were asked to respond as soon as they felt the stimulus and on which finger they felt it (ITI = 10 s; Short(1) and Short(2): 7 trials; Long: 14 trials). dDT was calculated as the mean stimulus amplitude at the time of pressing the button, across all correct trials. These thresholds were not corrected for reaction time. As was the case for all subsequent tasks, convergence scores were calculated as the accuracy of performance in the final five trials (i.e., the trials used to estimate thresholds).

Amplitude discrimination with no adaptation (nAD), dual-site adaptation (dAD) and single-site adaptation (sAD)

In the nAD task, participants were asked to choose which of two simultaneously delivered stimuli had the higher amplitude (frequency = 25 Hz; duration = 500 ms; standard stimulus amplitude = 100 μm; initial comparison stimulus amplitude = 200 μm) (Fig. 1d,e). In the dAD condition, each trial was preceded by dual-site-delivered adapting stimuli (frequency = 25 Hz; duration = 1 s, amplitude = 100 μm) and in the sAD task each trial was preceded by a single-site-delivered adapting stimulus (duration = 1 s, amplitude = 100 μm). The single-site adapting stimulus was presented to the same digit that received the test stimulus of higher amplitude. A one-up–one-down tracking paradigm (comparison stimulus amplitude was decreased for a correct answer and increased for a wrong answer) was used for the first 10 trials and a two-up–one-down (two correct answers were necessary for a reduction in comparison stimulus amplitude) was used for the remainder of the task (ITI = 5 s; Short(1) and Short(2): 20 trials; Long: 40 trials). Amplitude discrimination thresholds were calculated as the mean of the amplitudes of the last five values. In the Long nAD task, the initial comparison stimulus was inadvertently set to 300 μm. While inconsistent with the short versions of the task, this allowed for an investigation of nAD thresholds between short and long versions where the long version had a larger range of stimulus parameters (initial difference between stimuli = 200 μm).

Sequential (sqFD) and simultaneous (smFD) frequency discrimination

In the sqFD task, stimuli (duration = 500 ms; amplitude = 200 μm) were delivered to LD2 and LD3 sequentially (interstimulus interval (ISI) = 500 ms) (Fig. 1f,g). In the smFD task, the two stimuli were delivered simultaneously to both digits. One finger always received the standard stimulus (frequency = 30 Hz) while the other received the comparison stimulus (initial frequency = 40 Hz). The two stimuli were delivered to either location pseudorandomly. In both conditions, the participants were asked which finger received the higher frequency stimulus. An one-up–one-down tracking paradigm (the comparison stimulus frequency was decreased for a correct answer and increased for a wrong answer) was used for the first 10 trials and the two-up–one-down (two correct answers were necessary for a reduction in comparison stimulus frequency) was used for the remainder of the task (ITI = 5 s; Short(1) and Short(2): 20 trials; Long: 40 trials). Frequency discrimination thresholds were obtained as the mean of the frequencies of the last five trials.

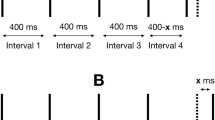

Temporal order judgment without (TOJs) and with carrier stimulus (TOJc)

In this task, two single-cycle vibrotactile pulses (duration = 40 ms; frequency = 25 Hz; amplitude = 200 μm) were delivered to LD2 and LD3 separated temporally by a starting ISI of 150 ms (the first pulse was assigned to either digit pseudorandomly) within a 1-s interval (Short(1) and Short(2): 20 trials; Long: 40 trials) (Fig. 1h,i). Participants were asked to respond which digit received the first pulse. TOJ thresholds were calculated as the mean of the ISI of the last five trials. In the TOJs condition, there was no concurrent stimulation, while in the TOJc condition a 25-Hz, 20-μm concurrent carrier stimulus was delivered throughout each 1-s trial interval.

Statistical analysis

All statistical analyses were performed in R40 (version 3.5.3). For each task, outliers were first detected using the median absolute deviation method41 with a threshold value of 2.542 by collapsing measurements from all three sessions together to better estimate the dispersion of the measurements. All results are presented with outliers removed. A repository for the raw data can be accessed on the Open Science Framework website (see Data Availability statement).

Between-subject (CVbs) (both within session and over all sessions) and within-subject (CVws) coefficients of variation were calculated for each task:

where σg and μg are the group standard deviations and means across subjects’ measurements, either within each session or over all three sessions (g); σs and μs are the standard deviation and mean of measurements across the three sessions for subject s. CVs are useful as they provide a standardized estimate of intra- and interindividual variability that can be compared across different measures. To assess test-retest reliability, intraclass correlation coefficients (ICCs) were calculated. The ICC is a relative measure of reliability and is formulated as:

where \({\sigma }_{g}^{2}\) is the between-subjects variance and \({\sigma }_{e}^{2}\) is the error variance, which includes biological or state variability, errors from subjects, and errors from the instrumentation or tester. As the ICC is driven by between-subjects variance, a large value indicates that the variability in a measure is predominately driven by individual differences (i.e., the true variance) rather than trial-to-trial variability that is due to error (i.e., the difference between the true variance and the observed variance). Several versions of the ICC exist; in the present study, the ICC was calculated using a two-way mixed-effects model with average measures of absolute agreement43,44 using the psych R package45.

Repeated-measures linear mixed-effects modelling was performed using the lme4 R package46 (using maximum likelihood for parameter estimation) to test (i) whether there were significant differences between the short and long versions of each task (a version effect), (ii) whether there were training effects within task (a time effect), and (iii) whether there were significant differences between tasks within a domain (a task effect). This is formulated as:

where yij is the threshold measurement of a given task (or tasks within the same domain) for subject j from session i; β0 is the model intercept (the grand mean); sj is the by-subject random effect (which accounts for variation across subjects) with mean 0 and variance \({\sigma }_{s}^{2}\); xij is the predictor variable (i.e., the fixed effect of version, time, or task) with a grand mean slope of β1; and εij is the residual error with mean 0 and variance \({\sigma }_{\varepsilon }^{2}\). For these analyses, Short(1) and Short(2) threshold measurements were collapsed in the mixed-effects models. Goodness-of-fit was calculated as a log-likelihood statistic. To test for significant effects, likelihood ratio tests were performed by comparing the log-likelihood statistic of one model to that of a reduced model (i.e., a model excluding the effect of interest). The alpha level was set at 0.05. Effect sizes were calculated as the proportional reduction in residual variance between the reduced model and the model of interest47. Where applicable, two-tailed post hoc comparisons were performed using Tukey’s HSD test. Multiple comparisons were corrected for Type I error rate inflation using the Holm-Bonferroni method48 using the multcomp R package49 (adjusted p-values are denoted: pHolm).

To test whether shortening the long version of the paradigms improved the test-retest reliability of detection and discrimination thresholds, a secondary analysis was performed where the number of recorded trials for the long version were truncated to the same number of trials of the short version and thresholds were subsequently calculated on the basis of the last five trials. CVs and ICCs were then recalculated and compared to those from the primary analysis.

To test whether there were any differences of convergence in the paradigms where staircase tracking was implemented, a convergence score was calculated as the accuracy of performance in the final five trials (i.e., the trials used to estimate thresholds). A convergence score of 5 would suggest that the participant did not make any inaccurate responses in the final five trials, whereas a score of 0 would mean that the participant did not make an accurate response in the final five trials. Note that these scores were not used to indicate whether convergence was good or bad. Rather, they simply allowed us to determine whether convergence was comparable between the tasks, versions, and sessions. While it is presumable that a convergence score of 0 or 5 rather than 3 would suggest worse convergence, a consensus on what is considered an acceptable level of convergence has not yet been established. Since the scope of this study was to assess the reproducibility of flutter-range tactile detection and discrimination thresholds, rather than reliability of convergence, only group comparisons of the convergence between tasks, versions and sessions is provided. To this end, convergence between the protocols was compared using repeated-measures linear mixed-effects modelling using the methods described above to determine whether there was (i) a task effect, (ii) a version effect, (iii) a time effect, (iii) a task × version effect, and (iv) a task × time effect.

Results

All participants were able to complete the 11 paradigms successfully. Table 1 summarizes the behavioural results for each paradigm, including the mean (±1 standard deviation) vibrotactile detection and discrimination thresholds, CVbs, CVws and ICCs. Bland-Altman plots displaying the agreement between participants’ repeated measurements are shown in Figs. 2 and 3. These are presented as the mean of pairs of measurements (e.g., the mean of Short(1) and Short(2) thresholds) versus their percentage difference (e.g., [Short(1) – Short(2) thresholds] / mean of Short(1) and Short(2) thresholds × 100). Table 2 shows the results following truncation of the long version of each paradigm.

Bland-Altman plots visualizing the pairwise agreement between each participant’s measurements between versions (i.e., Short(1) vs. Short(2) (grey), Short(1) vs. Long (orange), and Short(2) vs. Long (blue)) for simple and choice reaction time (RT), simple and choice RT intrasubject variability (ISV), static and dynamic detection threshold (DT), and amplitude discrimination (AD) with no, dual-site, and single-site adaptation. The comparisons are overlaid within each subplot to better illustrate the agreement across all three comparisons.

Bland-Altman plots for sequential and simultaneous frequency discrimination (FD) and temporal order judgment (TOJ) without and with carrier stimulus. Plot details as in Fig. 2.

Reaction time

The mean reaction times across the three sessions for the sRT and cRT tasks were 215.45 ± 51.13 ms and 427.60 ± 93.81 ms, respectively. The CVbs across all sessions were 23.7% and 21.9% and the CVws were 10.9% and 9.5%, respectively. The ICCs were 0.90 and 0.90. Truncating the long versions of these tasks resulted in ICCs of 0.91 and 0.88. No task showed a significant time or version effect. Reaction times for cRT were shown to be significantly longer compared to the reaction times for sRT [χ2(1) = 136.22, p < 0.001; effect size = 0.84], as shown in Fig. 4a. The mean ISV across the three sessions for the sRT and cRT tasks were 48.05 ± 18.07 ms and 73.17 ± 28.24 ms. The CVbs across all sessions were 37.6% and 38.6% and the CVws were 23.4% and 26.5%, respectively. The ICCs were 0.78 and 0.63. Truncating the long versions of these tasks resulted in ICCs of 0.77 and 0.56. There were no significant version or time effects for either task. The ISV for cRT was significantly greater compared to the ISV for sRT [χ2(1) = 29.97, p < 0.001; effect size = 0.33] (Fig. 4b).

Violin plots overlaid by boxplots displaying the distribution of vibrotactile detection and discrimination thresholds for each paradigm and for each version of each paradigm. Results for (a) reaction time; (b) intrasubject variability (ISV) of reaction time; (c) detection threshold; (d) amplitude discrimination; (e) frequency discrimination; and (f) temporal order judgment are shown. sRT, simple reaction time; cRT, choice reaction time; sDT, static detection threshold; dDT, dynamic detection threshold; nAD, amplitude discrimination with no adaptation; dAD, amplitude discrimination with dual-site adaptation; sAD, amplitude discrimination threshold with single-site adaptation; sqFD, sequential frequency discrimination; smFD, simultaneous frequency discrimination; TOJs, temporal order judgment without carrier stimulus; TOJc, temporal order judgment with carrier stimulus. **p < 0.01; ***p < 0.001; Holm-Bonferroni correction was applied on the amplitude discrimination threshold comparisons.

Detection threshold

The mean detection thresholds across the three sessions for the sDT and dDT tasks were 5.10 ± 2.14 μm and 8.32 ± 1.87 μm, respectively. The CVbs across all sessions were 42.0% and 22.5% and the CVws were 30.9% and 13.5%, respectively. The ICCs were 0.65 and 0.78. Truncating the long versions of these tasks resulted in ICCs of 0.34 and 0.67. There were no significant version or time effects for either task. As shown in Fig. 4c, dDTs were significantly higher than sDTs [χ2(1) = 54.64, p < 0.001; effect size = 0.57].

Amplitude discrimination

The mean AD threshold across the three sessions for the nAD, dAD and sAD tasks were 34.14 ± 18.81 μm, 21.95 ± 12.69 μm and 49.73 ± 24.68 μm, respectively. The CVbs across all sessions were 55.1%, 57.8% and 49.6% and the CVws were 40.5%, 45.7% and 31.4%, respectively. The ICCs were 0.44, 0.53 and 0.72. Truncating the long versions of these tasks resulted in ICCs of 0.56, 0.30 and 0.07. Only the nAD task showed a significant version effect [χ2(1) = 5.53, p = 0.02; effect size = 0.17]; no task showed a significant time effect. After accounting for the version effect in the nAD task, a significant difference in AD thresholds was found [χ2(2) = 42.84, p < 0.001; effect size = 0.30]. Post hoc pairwise comparisons showed that dAD thresholds were significantly lower than nAD (z = −3.06, pHolm = 0.002) and sAD thresholds (z = 7.14, pHolm < 0.001) (Fig. 4d). In addition, nAD thresholds were significantly lower than sAD thresholds (z = 4.07, pHolm < 0.001).

Frequency discrimination

The mean FD thresholds across the three sessions for the sqFD and smFD tasks were 6.85 ± 3.08 Hz and 7.82 ± 3.61 Hz, respectively. The CVbs across all sessions were 45.0% and 46.2% and the CVws were 41.7% and 37.0%, respectively. The ICCs were 0.25 and 0.43. Truncating the long versions of these tasks resulted in ICCs of < 0.01 and 0.30. There were no significant version or time effects for either task. No significant difference was found between the two tasks (effect size = 0.03) (Fig. 4e).

Temporal order judgment

The mean TOJ thresholds across the three sessions for the TOJs and TOJc tasks were 23.78 ± 12.75 ms and 34.93 ± 19.74 ms, respectively. The CVbs across all sessions were 53.6% and 56.5% and the CVws were 46.0% and 36.0%, respectively. The ICCs were 0.07 and 0.74. Truncating the long versions of these tasks resulted in ICCs of 0.72 and 0.62. Only the TOJs task showed a significant version effect [χ2(1) = 5.27, p = 0.02; effect size = 0.17]; no task showed a significant time effect. After accounting for the version effect in the TOJs task, TOJc thresholds were found to be significantly higher than TOJs thresholds [χ2(1) = 10.45, p = 0.001; effect size = 0.13], as shown in Fig. 4f.

Convergence

The mean convergence score was 3.42 ± 1.05 across all tasks. While there was a significant task effect [χ2(7) = 31.36, p < 0.001; effect size = 0.09], there was no significant version effect [χ2(1) = 0.05, p = 0.83; effect size = 0.00] or a time effect [χ2(1) = 0.98, p = 0.32; effect size = 0.00]. There was also no significant task × version effect [χ2(8) = 4.10, p = 0.85; effect size = 0.01] or task × time effect [χ2(8) = 3.66, p = 0.89; effect size = 0.00]. Post hoc pairwise comparisons showed that convergence scores were significantly lower for the simultaneous frequency discrimination (z = −4.87, pHolm < 0.001) and temporal order judgement (z = −4.13, pHolm = 0.001) paradigms compared to the static detection protocol. Convergence scores were also significantly lower for the simultaneous frequency discrimination paradigm compared to the amplitude discrimination with single-site adaptation (z = −3.28, pHolm = 0.03) and dual-site adaptation paradigms (z = −3.28, pHolm = 0.03). The convergence scores were otherwise comparable between paradigms.

Discussion

In this study, the test-retest reliability of vibrotactile detection and discrimination thresholds was assessed for the first time. As has been shown extensively in other behavioural studies50,51,52, simple and choice reaction times were highly reproducible. Additionally, the ISV of subjects’ reaction times was shown to have excellent test-retest reliability, a finding observed in other domains and modalities53,54. The reproducibility of the remaining measures (detection thresholds, amplitude discrimination, frequency discrimination, and TOJ) is less well-documented; this study presents some of the first evidence that these measures are reproducible to a variable extent. Moreover, the intersubject variability of the various thresholds falls in line with previous findings in adults13,27,28,29, with reaction times having around 20–30% variation, detection and frequency discrimination thresholds having 20–40% variation, and amplitude discrimination thresholds having 40–60% variation. The intrasubject variability of detection thresholds has been shown to be around ~25–30%55,56, which also falls in line with the present findings.

Notwithstanding the findings of this study, the interpretation of ICCs must be considered carefully, given that an ICC is a ratio of between-subject variability and the sum of between-subject variability and error—pointing to its sensitivity to interindividual differences. In clinical settings, it would be worthwhile to consider individuals’ inherent ability to perform each task. For instance, some participants may exhibit increased trial-to-trial variability that reflects specific pathological processes, rather than pointing toward an unreliable psychophysical measure of vibrotactile processing per se. An analysis of individual differences in detection and discrimination performance was beyond the scope of this study but is a potential avenue for future research. There is also the matter of what is considered a “good” ICC for clinically relevant behavioural measures. Based on Cicchetti’s guidelines57, we conclude that the vibrotactile reaction times have excellent reliability, the ISV of reaction times has good-to-excellent reliability, detection thresholds have good-to-excellent reliability, amplitude discrimination thresholds have fair-to-good reliability, frequency discrimination thresholds have poor-to-fair reliability, and TOJ has good reliability. The poorer reliability of the frequency discrimination thresholds can be explained in part by the difficulty of the task. As reported previously13, many participants stated that they were not able to discriminate between the two frequencies, with some having to repeat the practice trials multiple times to proceed to the testing component.

Task-related effects that have been shown previously were also replicated in this study. Differences between simple and choice reaction times are a well-established effect58, so it is unsurprising to see this replicated in this study. Significantly higher dynamic detection thresholds compared to static detection thresholds13,17,19,20,23,24,25, higher single-site-adaptation amplitude discrimination thresholds13,17,19,23,24,25,59 and lower dual-site-adaptation amplitude discrimination thresholds59 compared to no-adaptation amplitude discrimination thresholds, and higher TOJ thresholds with a carrier stimulus compared to simple TOJ thresholds17,22,24,60 also appear to be consistent effects in healthy, clinical, younger and older cohorts, demonstrating high reproducibility across the general population. However, this study failed to reproduce the effect of higher frequency discrimination thresholds when stimuli are simultaneously delivered rather than being sequentially delivered despite previous evidence of this13,23,24. The within- and between-subject variability of each of these measures will directly influence the likelihood of observing a differential effect in experimental designs (e.g., comparing measures from a clinical cohort and healthy controls). It is worth noting that increased measurement and participant variability could point toward specific somatosensory deficits, and more importantly, heterogeneity of phenotypes in clinical disorders such as autism spectrum61,62 and attention-deficit hyperactivity disorders63,64. To reiterate, systematic probing of individual differences in vibrotactile detection and discrimination thresholds would provide a different perspective on somatosensory (dys)function.

The tasks applied in this battery have been strongly linked to neurophysiological mechanisms, particularly those related to inhibitory function. Static detection threshold and its dynamic counterpart, in particular, have been linked to feed-forward inhibition and sensory gating. The significantly higher dynamic detection thresholds replicated in this study follow the theory that the ramping up of a stimulus from an undetectable level leads to increased thresholds as a result of initial adaptation from inhibitory interneurons, which have a lower spiking threshold25,33. The differential effects of single- and dual-site adaptation on amplitude discrimination—also replicated in this study—reflect the engagement of lateral inhibition that either enhances or diminishes the ability to distinguish between stimuli59,65. This is supported by the lack of such effects in ASD17,66, where inhibition is thought to be dysfunctional. Additionally, prior work has linked aberrant vibrotactile processing to abnormal GABA levels in the brain19,23,29. High ICCs suggest that vibrotactile thresholds as a measure are very stable in a healthy population and thus are useful in determining abnormal cortical physiology as well.

Psychophysical experiments historically involve hundreds of trials and low subject numbers. The vibrotactile battery used here was originally designed so that it could be completed within 50–60 min, making it suitable for a paediatric cohort. While this is somewhat atypical in psychophysical terms, there were no significant effects of battery length for most of the tasks, signifying that the original length of the battery is appropriate (at the very least for adults). Moreover, the relatively short length makes it more practical to collect data from a higher number of participants, which would counter the lower reliability of some of the vibrotactile measures.

While there did not appear to be any effects of training or battery length, several factors that may influence reproducibility were not taken into account. For instance, attentional effects were not systematically controlled for or assessed. There is evidence that attention can enhance the cortical dynamics of tactile processing67,68. Another possible drawback of this study was that was the order of tasks was kept fixed in each session. The less reproducible and ostensibly more difficult tasks (frequency discrimination) were always toward the end of the session, meaning fatigue effects could have played a detrimental effect on test-retest reliability. To counter such possible systematic effects, future implementations of vibrotactile paradigms would benefit from randomizing the presentation order of tasks. Additional effects not controlled for in the current study were caffeine consumption, sleep, and menstrual cycle, which could lead to different results in different sessions as well. We did, however, test whether participants could use audible cues to aid their performance (as was reported for a different device69), but this was not the case.

In conclusion, vibrotactile detection and discrimination thresholds show good reproducibility. This study lends further support to the value of psychophysical approaches to probe tactile function in an array of human populations.

Data availability

Raw data, R markdown files, and supplementary figures generated in this study are publicly available on the Open Science Framework: https://doi.org/10.17605/OSF.IO/5ZA9F.

References

Hernández, A., Zainos, A. & Romo, R. Neuronal correlates of sensory discrimination in the somatosensory cortex. Proc. Natl. Acad. Sci. 97, 6191–6196 (2000).

Romo, R., Hernández, A., Zainos, A., Lemus, L. & Brody, C. D. Neuronal correlates of decision-making in secondary somatosensory cortex. Nat. Neurosci. 5, 1217–1225 (2002).

Dykes, R. W., Landry, P., Metherate, R. & Hicks, T. P. Functional role of GABA in cat primary somatosensory cortex: shaping receptive fields of cortical neurons. J. Neurophysiol. 52, 1066–1093 (1984).

Juliano, S., Whitsel, B., Tommerdahl, M. & Cheema, S. Determinants of patchy metabolic labeling in the somatosensory cortex of cats: a possible role for intrinsic inhibitory circuitry. J. Neurosci. 9, 1–12 (1989).

Mountcastle, V. B., Talbot, W. H., Darian-Smith, I. & Kornhuber, H. H. Neural Basis of the Sense of Flutter-Vibration. Science 155, 597–600 (1967).

LaMotte, R. H. & Mountcastle, V. B. Capacities of humans and monkeys to discriminate vibratory stimuli of different frequency and amplitude: a correlation between neural events and psychological measurements. J. Neurophysiol. 38, 539–559 (1975).

Johansson, R. S. & Vallbo, A. B. Detection of tactile stimuli. Thresholds of afferent units related to psychophysical thresholds in the human hand. J. Physiol. 297, 405–422 (1979).

LaMotte, R. H. & Mountcastle, V. B. Disorders in somesthesis following lesions of parietal lobe. J. Neurophysiol. 42, 400–419 (1979).

Löfvenberg, J. & Johansson, R. S. Regional differences and interindividual variability in sensitivity to vibration in the glabrous skin of the human hand. Brain Res. 301, 65–72 (1984).

Vallbo, A. B. & Johansson, R. S. Properties of cutaneous mechanoreceptors in the human hand related to touch sensation. Hum. Neurobiol. 3, 3–14 (1984).

von Bekesy, G. Sensory Inhibition. (Princeton University Press, 1967).

Gescheider, G. A. Psychophysics: The Fundamentals. (Psychology Press, 1997).

Puts, N. A. J., Edden, R. A. E., Wodka, E. L., Mostofsky, S. H. & Tommerdahl, M. A vibrotactile behavioral battery for investigating somatosensory processing in children and adults. J. Neurosci. Methods 218, 39–47 (2013).

Mountcastle, V. B., Talbot, W. H., Sakata, H. & Hyvärinen, J. Cortical neuronal mechanisms in flutter-vibration studied in unanesthetized monkeys. Neuronal periodicity and frequency discrimination. J. Neurophysiol. 32, 452–484 (1969).

Whitsel, B. L. et al. Time-dependence of SI RA neuron response to cutaneous flutter stimulation. Somatosens. Mot. Res. 20, 45–69 (2003).

Romo, R. & Salinas, E. Flutter discrimination: Neural codes, perception, memory and decision making. Nat. Rev. Neurosci. 4, 203–218 (2003).

Puts, N. A. J., Wodka, E. L., Tommerdahl, M., Mostofsky, S. H. & Edden, R. A. E. Impaired tactile processing in children with autism spectrum disorder. J. Neurophysiol. 111, 1803–1811 (2014).

Wodka, E. L. et al. The Role of Attention in Somatosensory Processing: A Multi-trait, Multi-method Analysis. J. Autism Dev. Disord. 46, 3232–3241 (2016).

Puts, N. A. J. et al. Reduced GABA and altered somatosensory function in children with autism spectrum disorder. Autism Res. 10, 608–619 (2017).

Tavassoli, T. et al. Altered tactile processing in children with autism spectrum disorder. Autism Res. 9, 616–620 (2016).

Tommerdahl, M., Tannan, V., Cascio, C. J., Baranek, G. T. & Whitsel, B. L. Vibrotactile adaptation fails to enhance spatial localization in adults with autism. Brain Res. 1154, 116–123 (2007).

Tommerdahl, M., Tannan, V., Holden, J. K. & Baranek, G. T. Absence of stimulus-driven synchronization effects on sensory perception in autism: Evidence for local underconnectivity? Behav. Brain Funct. 4, 19 (2008).

Puts, N. A. J. et al. Reduced GABAergic inhibition and abnormal sensory symptoms in children with Tourette syndrome. J. Neurophysiol. 114, 808–817 (2015).

Puts, N. A. J. et al. Altered tactile sensitivity in children with attention-deficit hyperactivity disorder. J. Neurophysiol. 118, 2568–2578 (2017).

Zhang, Z., Francisco, E. M., Holden, J. K., Dennis, R. G. & Tommerdahl, M. Somatosensory Information Processing in the Aging Population. Front. Aging Neurosci. 3, 18 (2011).

Hanley, C. J., Burianová, H. & Tommerdahl, M. Towards Establishing Age-Related Cortical Plasticity on the Basis of Somatosensation. Neuroscience 404, 407–412 (2019).

Puts, N. A. J., Edden, R. A. E., Evans, C. J., McGlone, F. & McGonigle, D. J. Regionally Specific Human GABA Concentration Correlates with Tactile Discrimination Thresholds. J. Neurosci. 31, 16556–16560 (2011).

Mikkelsen, M., Harris, A. D., Edden, R. A. E. & Puts, N. A. J. Macromolecule-suppressed GABA measurements correlate more strongly with behavior than macromolecule-contaminated GABA+ measurements. Brain Res. 1701, 204–211 (2018).

Sapey-Triomphe, L., Lamberton, F., Sonié, S., Mattout, J. & Schmitz, C. Tactile hypersensitivity and GABA concentration in the sensorimotor cortex of adults with autism. Autism Res. 12, 562–575 (2019).

Tommerdahl, M. et al. Neurosensory Assessments of Concussion. Mil. Med. 181, 45–50 (2016).

Favorov, O. V. et al. Quantification of Mild Traumatic Brain Injury via Cortical Metrics: Analytical Methods. Mil. Med. 184, 228–236 (2019).

Hanley, C. J., Tommerdahl, M. & McGonigle, D. J. Stimulating somatosensory psychophysics: a double-blind, sham-controlled study of the neurobiological mechanisms of tDCS. Front. Cell. Neurosci. 9, 1–13 (2015).

Blankenburg, F. et al. Imperceptible stimuli and sensory processing impediment. Science 299, 1864 (2003).

Middleton, J. W., Omar, C., Doiron, B. & Simons, D. J. Neural Correlation Is Stimulus Modulated by Feedforward Inhibitory Circuitry. J. Neurosci. 32, 506–518 (2012).

Swadlow, H. A. Fast-spike Interneurons and Feedforward Inhibition in Awake Sensory Neocortex. Cereb. Cortex 13, 25–32 (2003).

Whitsel, B. L. et al. Dynamic processes govern the somatosensory cortical response to natural stimulation. in Sensory Processing in the Mammalian Brain. Neural Substrates and Experimental Strategies 84–116 (Oxford University Press, 1989).

Oldfield, R. C. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia 9, 97–113 (1971).

Holden, J. K. et al. A novel device for the study of somatosensory information processing. J. Neurosci. Methods 204, 215–220 (2012).

Holden, J. et al. Accuracy of different modalities of reaction time testing: Implications for online cognitive assessment tools. bioRxiv (2019) https://doi.org/10.1101/726364.

R Core Team. R: A Language and Environment for Statistical Computing. (2018).

Rousseeuw, P. J. & Croux, C. Alternatives to the Median Absolute Deviation. J. Am. Stat. Assoc. 88, 1273–1283 (1993).

Leys, C., Ley, C., Klein, O., Bernard, P. & Licata, L. Detecting outliers: Do not use standard deviation around the mean, use absolute deviation around the median. J. Exp. Soc. Psychol. 49, 764–766 (2013).

Shrout, P. E. & Fleiss, J. L. Intraclass correlations: Uses in assessing rater reliability. Psychol. Bull. 86, 420–428 (1979).

McGraw, K. O. & Wong, S. P. Forming inferences about some intraclass correlation coefficients. Psychol. Methods 1, 30–46 (1996).

Revelle, W. psych: Procedures for Psychological, Psychometric, and Personality Research. (2018).

Bates, D., Mächler, M., Bolker, B. M. & Walker, S. C. Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, (2015).

Peugh, J. L. A practical guide to multilevel modeling. J. Sch. Psychol. 48, 85–112 (2010).

Holm, S. A simple sequentially rejective multiple test procedure. Scand. J. Stat. 6, 65–70 (1979).

Hothorn, T., Bretz, F. & Westfall, P. Simultaneous Inference in General Parametric Models. Biometrical J. 50, 346–363 (2008).

Baker, S. J., Maurissen, J. P. J. & Chrzan, G. J. Simple Reaction Time and Movement Time in Normal Human Volunteers: A Long-Term Reliability Study. Percept. Mot. Skills 63, 767–774 (1986).

Lemay, S., Bédard, M.-A., Rouleau, I. & Tremblay, P.-L. Practice Effect and Test-Retest Reliability of Attentional and Executive Tests in Middle-Aged to Elderly Subjects. Clin. Neuropsychol. 18, 284–302 (2004).

Hockey, A. & Geffen, G. The concurrent validity and test-retest reliability of a visuospatial working memory task. Intelligence 32, 591–605 (2004).

Saville, C. W. N. et al. On the stability of instability: Optimising the reliability of intra-subject variability of reaction times. Pers. Individ. Dif. 51, 148–153 (2011).

Saville, C. W. N. et al. Is reaction time variability consistent across sensory modalities? Insights from latent variable analysis of single-trial P3b latencies. Biol. Psychol. 91, 275–282 (2012).

Güçlü, B. & Bolanowski, S. J. Vibrotactile thresholds of the Non-Pacinian I channel: I. Methodological issues. Somatosens. Mot. Res. 22, 49–56 (2005).

Güçlü, B. & Dinçer, Ş. M. Neural coding in the Non-Pacinian I tactile channel: A psychophysical and simulation study of magnitude estimation. Somatosens. Mot. Res. 30, 1–15 (2013).

Cicchetti, D. V. Guidelines, criteria, and rules of thumb for evaluating normed and standardized assessment instruments in psychology. Psychol. Assess. 6, 284–290 (1994).

Heitz, R. P. The speed-accuracy tradeoff: history, physiology, methodology, and behavior. Front. Neurosci. 8, 1–19 (2014).

Tannan, V., Simons, S., Dennis, R. G. & Tommerdahl, M. Effects of adaptation on the capacity to differentiate simultaneously delivered dual-site vibrotactile stimuli. Brain Res. 1186, 164–170 (2007).

Tommerdahl, M., Tannan, V., Zachek, M., Holden, J. K. & Favorov, O. V. Effects of stimulus-driven synchronization on sensory perception. Behav. Brain Funct. 3, 61 (2007).

Charman, T. et al. Defining the cognitive phenotype of autism. Brain Res. 1380, 10–21 (2011).

Jeste, S. S. & Geschwind, D. H. Disentangling the heterogeneity of autism spectrum disorder through genetic findings. Nat. Rev. Neurol. 10, 74–81 (2014).

Wåhlstedt, C., Thorell, L. B. & Bohlin, G. Heterogeneity in ADHD: Neuropsychological Pathways, Comorbidity and Symptom Domains. J. Abnorm. Child. Psychol. 37, 551–564 (2009).

Fair, D. A., Bathula, D., Nikolas, M. A. & Nigg, J. T. Distinct neuropsychological subgroups in typically developing youth inform heterogeneity in children with ADHD. Proc. Natl. Acad. Sci. 109, 6769–6774 (2012).

Goble, A. K. & Hollins, M. Vibrotactile adaptation enhances amplitude discrimination. J. Acoust. Soc. Am. 93, 418–424 (1993).

Tannan, V., Holden, J. K., Zhang, Z., Baranek, G. T. & Tommerdahl, M. A. Perceptual metrics of individuals with autism provide evidence for disinhibition. Autism Res. 1, 223–230 (2008).

Bauer, M., Oostenveld, R., Peeters, M. & Fries, P. Tactile Spatial Attention Enhances Gamma-Band Activity in Somatosensory Cortex and Reduces Low-Frequency Activity in Parieto-Occipital Areas. J. Neurosci. 26, 490–501 (2006).

van Ede, F., de Lange, F., Jensen, O. & Maris, E. Orienting Attention to an Upcoming Tactile Event Involves a Spatially and Temporally Specific Modulation of Sensorimotor Alpha- and Beta-Band Oscillations. J. Neurosci. 31, 2016–2024 (2011).

Rahman, S., Barnes, K. A., Crommett, L. E., Tommerdahl, M. & Yau, J. M. Multimodal frequency representations are embedded in modality-defined cortical sensory systems. bioRxiv https://doi.org/10.1101/628305 (2019).

Acknowledgements

This work was supported by NIH grants R00 MH107719, R01 MH106564, and R01 MH085328. Partial support was provided by the Office of Naval Research to M.T. The authors thank Abigail Benjamin for her assistance with testing room bookings and participant data management.

Author information

Authors and Affiliations

Contributions

N.A.J.P. designed the study. M.M. collected and analysed the data and drafted the manuscript. J.H. analysed the data. M.T. provided the vibrotactile stimulator and Cortical Metrics software. All authors reviewed, revised and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

M.T. is co-founder and president of Cortical Metrics, which designed and manufactured the vibrotactile stimulator used in this study. The rest of the authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mikkelsen, M., He, J., Tommerdahl, M. et al. Reproducibility of flutter-range vibrotactile detection and discrimination thresholds. Sci Rep 10, 6528 (2020). https://doi.org/10.1038/s41598-020-63208-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41598-020-63208-z

- Springer Nature Limited

This article is cited by

-

Could Sensory Differences Be a Sex-Indifferent Biomarker of Autism? Early Investigation Comparing Tactile Sensitivity Between Autistic Males and Females

Journal of Autism and Developmental Disorders (2024)

-

Atypical Tactile Perception in Early Childhood Autism

Journal of Autism and Developmental Disorders (2023)

-

Disorder-specific alterations of tactile sensitivity in neurodevelopmental disorders

Communications Biology (2021)