Abstract

Research has shown that the addition of abstention as an option transforms social dilemmas into rock-paper-scissors-type games, where defectors dominate cooperators, cooperators dominate abstainers (loners), and abstainers (loners), in turn, dominate defectors. In this way, abstention can sustain cooperation even under adverse conditions, although defection also persists due to cyclic dominance. However, to abstain or to act as a loner has, to date, always been considered as an independent, third strategy to complement traditional cooperation and defection. Here we consider probabilistic abstention, where each player is assigned a probability to abstain in a particular instance of the game. In the two limiting cases, the studied game reverts to the prisoner’s dilemma game without loners or to the optional prisoner’s dilemma game. For intermediate probabilities, we have a new hybrid game, which turns out to be most favorable for the successful evolution of cooperation. We hope this novel hybrid game provides a more realistic view of the dilemma of optional/voluntary participation.

Introduction

Over the last decades, the prisoner’s dilemma game has been adopted in a variety of studies which seek to explore and resolve the dilemma of cooperation1,2,3. These studies include the use of the network reciprocity mechanism4, which has gained increasing attention for its support of cooperative behaviour. In this mechanism, each agent is represented as a node in the network (graph) and is constrained to interact only with its neighbours, which are linked by edges in the network5,6. Research concerning network reciprocity includes the use of different topologies such as lattices7, scale-free graphs8,9,10, small-world graphs11,12,13, cycle graphs14, multilayer networks15,16,17 and bipartite graphs18,19 which have a considerable impact on the evolution of cooperation, and also favour the formation of different patterns and phenomena20,21. Moreover, approaches adopting coevolutionary networks, where both game strategies and the network itself are subject to evolution have also been investigated22,23,24,25,26,27,28,29,30,31.

In essence, evolutionary game theory and its most often used game, the prisoner’s dilemma (PD) game, provide a simple and powerful framework to study the conflict between choices that are beneficial to an individual and those that are good for the whole community. The game is played by pairs of agents, who simultaneously decide to either cooperate (C) or defect (D), receiving a payoff associated with their pairwise interaction as follows: R for mutual cooperation, P for mutual defection, S for cooperating with a defector and T for successfully defecting against a cooperator. The dilemma holds when T > R > P > S (ref. 32). In addition to theoretical research, there is also a substantial body of work using experimental games. The experimental prisoner’s dilemma has been used by several researchers to find mechanisms that promote cooperative behaviour, including the benefit-to-cost ratio of cooperation33, group size34,35 and dynamic spatial structure36,37, to name just a few examples.
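For reference, the four payoffs defined above can be arranged as the payoff matrix of the focal (row) player against its opponent (column). This is only a restatement of the definitions in the text; the row/column layout is a presentational choice.

\[
\begin{array}{c|cc}
 & \text{C} & \text{D} \\ \hline
\text{C} & R & S \\
\text{D} & T & P
\end{array}
\qquad \text{with } T > R > P > S .
\]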

Despite the large number of scenarios that can be described as a PD game, it has been argued that in many scenarios agents’ interactions are not compulsory, and in those cases the PD game is not suitable. Thus, extensions of this game such as the optional prisoner’s dilemma (OPD) game, also known as the prisoner’s dilemma game with voluntary participation, have been explored in order to allow agents to abstain from a game interaction, that is, to not play the game and instead receive the so-called loner’s payoff (L), which is the same regardless of the other agent’s strategy (i.e., if either one or both agents abstain, both agents will get L). The dilemma is maintained when T > R > L > P > S (refs 38,39). Studies reveal that the concept of abstaining can lead to entirely different outcomes and eventually help cooperators to avoid exploitation from defectors40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57. Of relevance for our research is also the literature on games with an exit strategy. For example, research has been done on the dictator game with an exit strategy58,59.
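The OPD payoff rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function name `opd_payoff`, the move labels `"C"`, `"D"`, `"A"` and the dictionary `PARAMS` are hypothetical, and the numerical values are simply those quoted in the figure captions of this paper (R = 1, P = 0, S = 0, L = 0.4) with one of the tested temptation values (T = 1.4).

```python
def opd_payoff(own, other, T, R, L, P, S):
    """Payoff to the focal player in one OPD interaction.

    Moves are "C" (cooperate), "D" (defect) or "A" (abstain).
    If either player abstains, the focal player receives the loner's payoff L.
    """
    if own == "A" or other == "A":
        return L
    table = {
        ("C", "C"): R,  # mutual cooperation
        ("C", "D"): S,  # sucker's payoff
        ("D", "C"): T,  # temptation to defect
        ("D", "D"): P,  # mutual defection
    }
    return table[(own, other)]


# Illustrative parameter values (assumption: taken from the figure captions).
PARAMS = dict(T=1.4, R=1.0, L=0.4, P=0.0, S=0.0)

print(opd_payoff("D", "C", **PARAMS))  # defector exploiting a cooperator
print(opd_payoff("C", "A", **PARAMS))  # opponent abstains
```

Note that abstention is symmetric in this sketch: the focal player receives L whether it abstains itself or its opponent does.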

However, we believe that in many situations involving voluntary participation, such as in human interactions, the use of abstention as a pure strategy may not be ideal to capture the social dilemma. In reality, depending on the context and the type of social relationships we are modelling, abstention can also mean laziness, shyness or lack of proactivity, and all those emotions, feelings or characteristics may exist within a certain range. Thus, we propose that in a round of interactions, some agents might be interested in interacting with all of their neighbours (i.e., never abstain), while others may be willing to interact with only a few of them and abstain from interacting with the rest. To give another example, in the context of a poll of a number of individuals, there might be some who vote and others who do not. In the latter case, considering all the non-voters as abstainers might be too simplistic. In reality, there might be some who abstain because they do not have a view at all and those who occasionally abstain out of convenience, lack of interest or because of some external event. In this way, we believe that abstention should be seen and explored as an extra attribute of each agent, and not as a pure strategy.

Given this motivation, in this paper, we introduce a prisoner’s dilemma with probabilistic abstention (PDPA), which is a hybrid of two well-known games in evolutionary game theory: the PD and the OPD game (also known as the PD game with voluntary participation). As occurs in the PD game, in the hybrid game each agent can choose either to cooperate or defect. The only difference is that in the PDPA game, in addition to the game strategy, each agent is defined by a value α ∈ [0, 1], which denotes its probability of abstaining from any interaction.

This work aims to investigate the differences between the PDPA game and the classic PD and OPD games. A number of Monte Carlo simulations are performed to investigate the effects of α on the evolution of cooperation. In order to have a more complete analysis of the evolutionary dynamics, both synchronous and asynchronous updating rules60,61,62 are explored.

Results

In order to better understand the outcomes of the hybrid game proposed in this paper (i.e., the prisoner’s dilemma game with probabilistic abstention, PDPA), in the following experiments we adopt ε = (1 − s)(1 − α) to denote the effective cooperation rate of an agent, where s ∈ {0, 1} and α ∈ [0, 1] correspond to the agent’s strategy and its probability of abstaining from a game interaction, respectively. Note that here s = 0 means cooperator, s = 1 means defector, α = 0 indicates that the agent never abstains and α = 1 indicates that the agent always abstains. In this way, we can have two types of agents for each strategy: the pure-cooperators and the pure-defectors (i.e., the agents who always play the game, α = 0); and the agents who sporadically play the game (i.e., the sporadic-cooperators and sporadic-defectors, 0 < α < 1). Thus, the value of ε makes it easy to distinguish a cooperator who always abstains (i.e., {s = 0, α = 1} ⇒ ε = 0) from the sporadic-cooperators (i.e., {s = 0, 0 < α < 1} ⇒ 0 < ε < 1) and the pure-cooperators (i.e., {s = 0, α = 0} ⇒ ε = 1).
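As a small worked example of the definition above, the following Python sketch evaluates ε for the agent types mentioned in the text and averages it over a population. The function names and the toy population are illustrative assumptions; only the formula ε = (1 − s)(1 − α) comes from the text.

```python
def effective_cooperation(s, alpha):
    """Effective cooperation rate ε = (1 - s)(1 - α).

    s = 0 denotes a cooperator and s = 1 a defector;
    alpha is the probability of abstaining, in [0, 1].
    """
    if s not in (0, 1):
        raise ValueError("s must be 0 (cooperator) or 1 (defector)")
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1 - s) * (1.0 - alpha)


def mean_effective_cooperation(agents):
    """Average ε over an iterable of (s, alpha) pairs."""
    agents = list(agents)
    return sum(effective_cooperation(s, a) for s, a in agents) / len(agents)


# A toy population: pure-cooperator, pure-defector,
# sporadic-cooperator and an always-abstaining defector.
population = [(0, 0.0), (1, 0.0), (0, 0.5), (1, 1.0)]
print(mean_effective_cooperation(population))
```

Every defector contributes ε = 0 regardless of α, so the population average only measures how often cooperators actually take part in the game.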

We start by comparing the outcomes of the PDPA game with those obtained for the classic prisoner’s dilemma (PD) and optional prisoner’s dilemma (OPD) games for both synchronous and asynchronous updating rules (Fig. 1). We test a number of randomly initialized populations of agents playing the PDPA game with three different setups:

- α = 0 for all agents (equivalent to the PD game);

- α is either 0 or 1 with equal probability (equivalent to the OPD game);

- α ∈ [0, 1], uniformly distributed.
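The three setups listed above can be sketched as a single initialization routine. This is a hedged illustration rather than the authors' implementation: the names `init_population` and `ALPHA_GRID` are hypothetical, strategies are assumed to be assigned with equal probability, and for the third setup α is drawn from the nine equally spaced values given in the Methods section instead of from a continuous distribution.

```python
import random

# Assumption: the discrete α values listed in Methods (0, 0.125, ..., 1).
ALPHA_GRID = [i / 8 for i in range(9)]


def init_population(n, setup, rng):
    """Return a list of (s, alpha) pairs for one of the three setups.

    s = 0 denotes a cooperator, s = 1 a defector.
    setup is "PD", "OPD" or "PDPA".
    """
    agents = []
    for _ in range(n):
        s = rng.choice([0, 1])  # random strategy, equal probability
        if setup == "PD":
            alpha = 0.0  # nobody ever abstains
        elif setup == "OPD":
            alpha = rng.choice([0.0, 1.0])  # always play or always abstain
        elif setup == "PDPA":
            alpha = rng.choice(ALPHA_GRID)  # graded abstention
        else:
            raise ValueError(f"unknown setup: {setup!r}")
        agents.append((s, alpha))
    return agents


rng = random.Random(42)  # fixed seed so the example is reproducible
pd_agents = init_population(100, "PD", rng)
opd_agents = init_population(100, "OPD", rng)
pdpa_agents = init_population(100, "PDPA", rng)
```

Seen this way, the PD and OPD games are just restrictions on the set of α values an agent may hold.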

Figure 1. Comparison of the average α (i.e., the probability of abstaining) and the average ε (i.e., the effective cooperation rate, ε = (1 − s)(1 − α), where s denotes the agent’s strategy) for different values of the temptation to defect T. The results are obtained by averaging 100 independent runs at the stationary state (after 10⁵ Monte Carlo steps) of the classic prisoner’s dilemma (PD), the optional prisoner’s dilemma (OPD) and their hybrid, i.e., the prisoner’s dilemma with probabilistic abstention (PDPA). All games are tested with both synchronous and asynchronous updating on a regular square lattice grid populated with N = 102 × 102 agents, for a fixed reward for mutual cooperation R = 1, punishment for mutual defection P = 0, sucker’s payoff S = 0 and loner’s payoff L = 0.4.

For all setups, we investigate the relationship between the fraction of effective cooperation ε and the probability of abstaining α for different values of the temptation to defect T and the loner’s payoff L.

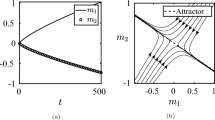

As shown in Fig. 1, for the synchronous rule the PDPA sustains higher levels of cooperation even for large values of the temptation to defect T. The difference between the outcomes of the synchronous and asynchronous versions of the classic games occurs as expected: cooperation has more chance of surviving when the updating rule is synchronous, with less stochasticity and more awareness of the neighbourhood’s behaviour, i.e., the agent knows who is the best player in its neighbourhood. Surprisingly, results indicate that when the PDPA is considered, this enhancement also holds for the asynchronous updating model, which is a well-known adverse scenario for both classic games62. In general, irrespective of the updating rule, the PDPA game is the most beneficial for the evolution of cooperation. Moreover, when comparing the OPD with the PDPA game, we see a correlation between their levels of abstention, suggesting that abstention may act as an important mechanism to maintain cooperation and avoid the dominance of defectors. This is also supported by Fig. 2, which features the time course of ε and α for both updating rules for agents playing the PDPA game with three values of the temptation to defect (i.e., T ∈ {1.1, 1.4, 1.9}).

Figure 2. Time course of the effective cooperation ε and the probability of abstaining α for different values of the temptation to defect T. All curves refer to the prisoner’s dilemma with probabilistic abstention (PDPA) game for a regular square lattice grid populated with N = 102 × 102 agents, for a fixed reward for mutual cooperation R = 1, punishment for mutual defection P = 0, sucker’s payoff S = 0 and loner’s payoff L = 0.4.

Given the nature of the classic PD and OPD games, it is known that in a well-mixed population, defection and abstention, respectively, are usually the dominant strategies. As discussed in previous work22,63, this happens because cooperators need to form clusters to be able to protect themselves against exploitation from defectors, and if we consider a randomly initialized population, it takes a few steps for cooperators to cluster. Meanwhile, the defection rate increases quickly in the initial steps until the agents reach a stage where defectors have more chance of finding another defector than a cooperator. Consequently, defection starts to be a bad strategy and, if abstention is an option, the agents prefer to abstain; otherwise defectors will hardly become cooperators, as they do not have the incentive to change their strategies. Interestingly, as shown in Fig. 2, a similar pattern can be observed in the PDPA game, i.e., in the initial steps, the rate of pure-defectors (α = 0) increases more quickly, causing the sporadic-cooperators (α > 0) to have a better performance. Then, with the increase of the pure-defectors, sporadic-defectors (α > 0) start to be a better choice. At this point, with fewer pure-defectors in the population, cooperators with smaller values of α start to perform better, producing a wave towards the decrease of α. This simple mechanism explains the initial bell-shaped curve in the average fraction of α in Fig. 2.

In order to further investigate the results obtained for the PDPA game, some typical distributions of the strategies, probability of abstaining α, and the effective cooperation rate ε are shown in Fig. 3. In addition to the similarities with the classic games, other interesting phenomena can be observed in the PDPA game, such as robust coexistence of cooperation and defection for different values of T and L. Results show that agents who always refuse to interact (α = 1) are wiped out in most scenarios when T < 1.9 and L < 0.8. That is, agents who interact at least once will usually have a better performance. Moreover, it was observed that irrespective of the high heterogeneity of values of α in the initialization, the population usually converges to two values of α for the synchronous model, and three distinct values of α for the asynchronous model. However, the higher heterogeneity of states in the initial steps plays a key role in increasing the performance of cooperators in the PDPA game. This happens because the intermediate values of α help to reduce the exposure of cooperators to the risk of being exploited by defectors too quickly.

Figure 3. Typical distributions of the effective cooperation ε, probability of abstaining α, and game strategy s for different values of the temptation to defect T. All screenshots refer to the prisoner’s dilemma game with probabilistic abstention (PDPA) at the 10⁵-th Monte Carlo step for a regular square lattice grid populated with N = 102 × 102 agents, with a fixed reward for mutual cooperation R = 1, punishment for mutual defection P = 0, sucker’s payoff S = 0 and loner’s payoff L = 0.4.

Finally, Fig. 4 shows the average fraction of ε and α on the plane T − L (i.e., temptation to defect vs loner’s payoff) for both PDPA and OPD games, with synchronous and asynchronous updating rules. It is possible to observe that the PDPA acts like an enhanced version of the OPD game. In addition, its performance with the asynchronous updating rules is remarkable; we see that when the concept of optionality is given in levels, i.e., the introduction of the probability of abstaining α, the population succeeds in controlling the dominance of abstention behaviour, which maintains the diversity of strategies and also helps to promote cooperation.

Figure 4. Heat map of the average effective cooperation ε and probability of abstaining α in the T − L plane (i.e., temptation to defect vs loner’s payoff) for 100 independent runs at the stationary state (i.e., after 10⁵ Monte Carlo steps). Both synchronous and asynchronous fashions of the optional prisoner’s dilemma game (OPD) and the prisoner’s dilemma game with probabilistic abstention (PDPA) are explored. A regular square lattice grid is adopted, populated with N = 102 × 102 agents, for a fixed reward for mutual cooperation R = 1, punishment for mutual defection P = 0, sucker’s payoff S = 0 and loner’s payoff L = 0.4.

Furthermore, although the PDPA game is more effective in promoting cooperation than the classic games, we observed that cooperation is the dominant strategy only if T is relatively small and the updating is synchronous. In summary, for both updating rules, the possibility of not interacting with all neighbours (α > 0) helps cooperators to decrease the risk of being exposed to defectors in the initial steps (when most of them could not yet cluster), which consequently allows them to survive even when T is very high. However, this possibility also prevents them from dominating the environment afterwards, which results in a robust state of coexistence of both strategies.

Discussion

We have studied a novel evolutionary game called the prisoner’s dilemma with probabilistic abstention (PDPA), which is essentially the merger of two well-known games: the prisoner’s dilemma (PD) game and the optional prisoner’s dilemma (OPD) game. A number of Monte Carlo simulations with both synchronous and asynchronous updating rules were carried out, where it was shown that the PDPA game is much more beneficial for promoting cooperation than the classic PD and OPD games.

We found that in most evolutionary scenarios (i.e., T < 1.9 and L < 0.8), the agents who interact at least once (α < 1) usually have a better performance. This indicates that intermediate values of α are a better option for promoting both cooperative behaviour and diversity of strategies (cyclic dominance) in the population. Moreover, results suggest that the higher heterogeneity of states in the initial steps plays a key role in slowing down the evolution of defection, which increases the chance of the formation of cooperative clusters. It is noteworthy that the precise role of heterogeneity in the PDPA game needs to be further explored. To conclude, it was observed that PDPA is, in fact, an enhanced version of the OPD game, which provides a more realistic representation of the concept of voluntary/optional participation.

Methods

This work considers the prisoner’s dilemma game with probabilistic abstention (PDPA), which is an evolutionary game with two pure competing strategies: cooperate (C) and defect (D). In this game, each agent is characterized by two different attributes: game strategy s and the probability of abstaining α, which determines how likely it is that an agent will abstain in each pairwise play. When an agent abstains from a game interaction, both agents acquire the same loner’s payoff L. In this way, α is a number from zero to one where α = 0 denotes an agent who never abstains (always plays the game), and α = 1 denotes an agent who always abstains (never plays the game). When both agents play the game, their payoffs follow the same structure as the classic prisoner’s dilemma game, i.e., the reward for mutual cooperation R = 1, punishment for mutual defection P = 0, T for the temptation to defect and the sucker’s payoff S = 0. To ensure the proper nature of the dilemma, 1 < T < 2 and 0 < L < 1 (ref. 38).
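The pairwise interaction described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function name `pdpa_payoffs` is hypothetical, and each agent's decision to abstain is assumed to be an independent random draw against its own α in every pairwise play.

```python
import random


def pdpa_payoffs(s_x, alpha_x, s_y, alpha_y, T, L, rng, R=1.0, P=0.0, S=0.0):
    """Return (payoff_x, payoff_y) for one pairwise PDPA play.

    s = 0 denotes a cooperator, s = 1 a defector.
    alpha is the probability that the agent abstains from this play.
    """
    # Assumption: each agent abstains independently with its own probability.
    # If either one abstains, both receive the loner's payoff.
    if rng.random() < alpha_x or rng.random() < alpha_y:
        return L, L
    # Otherwise the classic prisoner's dilemma payoffs apply.
    if s_x == 0 and s_y == 0:
        return R, R
    if s_x == 0 and s_y == 1:
        return S, T
    if s_x == 1 and s_y == 0:
        return T, S
    return P, P


rng = random.Random(1)
print(pdpa_payoffs(0, 0.0, 1, 0.0, T=1.4, L=0.4, rng=rng))  # both always play
print(pdpa_payoffs(0, 1.0, 1, 0.0, T=1.4, L=0.4, rng=rng))  # x always abstains
```

Because `rng.random()` returns a value in [0, 1), an agent with α = 0 never abstains and an agent with α = 1 always does, which recovers the two limiting cases described in the text.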

Without loss of generality, we discretize α into 2κ equal intervals, where κ is the agent’s degree; for the lattice used here (κ = 4), this gives nine equally spaced values from 0 to 1 in steps of 0.125. We adopt a regular square lattice grid with periodic boundary conditions (i.e., a toroid) fully populated with N = 102 × 102 agents playing the PDPA game. Each agent interacts with its four immediate neighbours (von Neumann neighbourhood) and is initially assigned a strategy s ∈ {C, D} and a probability of abstaining \(\alpha \in \{0, 0.125, 0.250, \ldots, 0.750, 0.875, 1.0\}\) with equal probability. The evolution process is performed through a number of Monte Carlo (MC) simulations64 in both synchronous and asynchronous fashion as follows60:
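The discretization of α and the lattice topology described above can be sketched as below. The helper names `alpha_levels` and `von_neumann_neighbours`, and the row-major indexing of agents, are illustrative assumptions rather than details taken from the paper.

```python
def alpha_levels(degree):
    """Equally spaced α values obtained by splitting [0, 1] into 2*degree intervals."""
    n_intervals = 2 * degree
    return [i / n_intervals for i in range(n_intervals + 1)]


def von_neumann_neighbours(index, side):
    """Indices of the four nearest neighbours on a side x side torus.

    Agents are numbered row by row (row-major order); the modulo
    operations implement the periodic boundary conditions.
    """
    row, col = divmod(index, side)
    return [
        ((row - 1) % side) * side + col,  # up
        ((row + 1) % side) * side + col,  # down
        row * side + (col - 1) % side,    # left
        row * side + (col + 1) % side,    # right
    ]


print(alpha_levels(4))
print(von_neumann_neighbours(0, 102))  # corner agent wraps around the grid
```

With periodic boundaries every agent has exactly four neighbours, so the same α grid applies to the whole population.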

- Synchronous updating: at each time step, all agents x in the population play the game once with each of their four neighbours y, acquiring the payoff \(p_{xy}\) for each interaction. After that, each agent copies the strategy and the value of α of the best-performing agent in its neighbourhood. In case of ties, or if x is the best in the neighbourhood, its strategy and α remain the same.

- Asynchronous updating: at each time step, each agent is selected once on average to play the game and update its strategy and α immediately. That is, in one time step, N agents are randomly selected to perform the following elementary procedures: the agent x plays the game with all neighbours y, acquiring the payoffs \(p_{xy}\) for each play (i.e., obtaining the utility \({u}_{x}=\sum {p}_{xy}\)); one randomly chosen neighbour y of x also acquires its payoffs \(p_{yz}\) by playing with all its neighbours z (i.e., obtaining the utility \({u}_{y}=\sum {p}_{yz}\)); finally, if \(u_y > u_x\), agent x copies the strategy and the value of α from its neighbour y with a probability:

\[W=\frac{1}{1+\exp [({u}_{x}-{u}_{y})/K]},\]

where K = 0.1 denotes the amplitude of noise21.
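The two updating rules above can be sketched as follows. Several points are assumptions and should be read as such: the copy probability is taken to be the Fermi function that is commonly used with a noise amplitude K in the spatial-games literature; a "tie" in the synchronous rule is interpreted as either x matching the best neighbour or several neighbours sharing the best utility; and all function names are hypothetical.

```python
import math
import random


def adoption_probability(u_x, u_y, K=0.1):
    """Assumed Fermi-type probability that x copies y, given utilities u_x and u_y."""
    return 1.0 / (1.0 + math.exp((u_x - u_y) / K))


def asynchronous_copy(x_state, y_state, u_x, u_y, rng, K=0.1):
    """Return x's new (s, alpha) state after comparing itself with neighbour y."""
    if u_y > u_x and rng.random() < adoption_probability(u_x, u_y, K):
        return y_state
    return x_state


def synchronous_update(states, utilities, neighbours):
    """Best-takes-over update applied to all agents at once.

    states[i] is the (s, alpha) pair of agent i, utilities[i] its accumulated
    payoff, and neighbours[i] the list of its neighbours' indices.
    """
    new_states = list(states)
    for x, nbrs in enumerate(neighbours):
        best_u = max(utilities[y] for y in nbrs)
        if best_u > utilities[x]:
            best = [y for y in nbrs if utilities[y] == best_u]
            if len(best) == 1:  # assumption: ties leave x unchanged
                new_states[x] = states[best[0]]
    return new_states


states = [(0, 0.0), (1, 0.0), (0, 0.5)]
utilities = [1.0, 2.0, 0.5]
neighbours = [[1, 2], [0, 2], [0, 1]]
print(synchronous_update(states, utilities, neighbours))
```

Both rules copy the strategy and α together, so the pair (s, α) is inherited as a single unit.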

In our experiments, all Monte Carlo simulations are run for 10⁵ steps, which is a sufficiently long thermalization time to determine the stationary states. Furthermore, to ensure proper accuracy and alleviate the effect of randomness in the approach, the final results are obtained by averaging 100 independent runs.

It is noteworthy that the PDPA game allows us to reproduce both classic games (PD and OPD). That is, by setting all agents to have α = 0, we ensure that they will always play the game, which is essentially the same as considering the classic PD game. Similarly, by restricting agents to α ∈ {0, 1} we ensure that some agents will purely abstain, while others will play the game, which is the same as considering the OPD game.

References

Perc, M. et al. Statistical physics of human cooperation. Physics Reports 687, 1–51 (2017).

Smith, J. M. Evolution and the Theory of Games (Cambridge University Press, 1982).

Axelrod, R. & Hamilton, W. The evolution of cooperation. Science 211, 1390–1396 (1981).

Nowak, M. A. Five rules for the evolution of cooperation. Science 314, 1560–1563 (2006).

Nowak, M. A., Tarnita, C. E. & Antal, T. Evolutionary dynamics in structured populations. Philosophical Transactions of the Royal Society of London B: Biological Sciences 365, 19–30 (2009).

Lieberman, E., Hauert, C. & Nowak, M. A. Evolutionary dynamics on graphs. Nature 433, 312–316 (2005).

Nowak, M. A. & May, R. M. Evolutionary games and spatial chaos. Nature 359, 826–829 (1992).

Szolnoki, A. & Perc, M. Leaders should not be conformists in evolutionary social dilemmas. Scientific Reports 6, 23633 (2016).

Xia, C.-Y., Meloni, S., Perc, M. & Moreno, Y. Dynamic instability of cooperation due to diverse activity patterns in evolutionary social dilemmas. EPL 109, 58002 (2015).

Santos, F. C. & Pacheco, J. M. Scale-free networks provide a unifying framework for the emergence of cooperation. Phys. Rev. Lett. 95, 098104 (2005).

Chen, X. & Wang, L. Promotion of cooperation induced by appropriate payoff aspirations in a small-world networked game. Physical Review E 77, 017103 (2008).

Fu, F., Liu, L.-H. & Wang, L. Evolutionary prisoner’s dilemma on heterogeneous Newman-Watts small-world network. The European Physical Journal B 56, 367–372 (2007).

Abramson, G. & Kuperman, M. Social games in a social network. Phys. Rev. E 63, 030901 (2001).

Altrock, P. M., Traulsen, A. & Nowak, M. A. Evolutionary games on cycles with strong selection. Phys. Rev. E 95, 022407 (2017).

Boccaletti, S. et al. The structure and dynamics of multilayer networks. Physics Reports 544, 1–122 (2014).

Wang, Z., Szolnoki, A. & Perc, M. Interdependent network reciprocity in evolutionary games. Scientific reports 3, 1183 (2013).

Gómez-Gardenes, J., Reinares, I., Arenas, A. & Floría, L. M. Evolution of cooperation in multiplex networks. Scientific reports 2, 620 (2012).

Peña, J. & Rochat, Y. Bipartite graphs as models of population structures in evolutionary multiplayer games. PLOS ONE 7, 1–13 (2012).

Gómez-Gardeñes, J., Romance, M., Criado, R., Vilone, D. & Sánchez, A. Evolutionary games defined at the network mesoscale: The public goods game. Chaos: An Interdisciplinary Journal of Nonlinear Science 21, 016113 (2011).

Perc, M., Gómez-Gardeñes, J., Szolnoki, A., Floría, L. M. & Moreno, Y. Evolutionary dynamics of group interactions on structured populations: a review. Journal of The Royal Society Interface 10, 20120997 (2013).

Szabó, G. & Fáth, G. Evolutionary games on graphs. Physics Reports 446, 97–216 (2007).

Cardinot, M., Griffith, J. & O’Riordan, C. A further analysis of the role of heterogeneity in coevolutionary spatial games. Physica A: Statistical Mechanics and its Applications 493, 116–124 (2018).

Li, D. et al. The co-evolution of networks and prisoner’s dilemma game by considering sensitivity and visibility. Scientific Reports 7, 45237 (2017).

Huang, K., Zheng, X., Li, Z. & Yang, Y. Understanding cooperative behavior based on the coevolution of game strategy and link weight. Scientific Reports 5, 14783 (2015).

Cao, L., Ohtsuki, H., Wang, B. & Aihara, K. Evolution of cooperation on adaptively weighted networks. Journal of Theoretical Biology 272, 8–15 (2011).

Zhang, C., Zhang, J., Xie, G., Wang, L. & Perc, M. Evolution of interactions and cooperation in the spatial prisoner’s dilemma game. PLOS ONE 6, 1–7 (2011).

Perc, M. & Szolnoki, A. Coevolutionary games – A mini review. Biosystems 99, 109–125 (2010).

Szolnoki, A. & Perc, M. Resolving social dilemmas on evolving random networks. EPL 86, 30007 (2009).

Zimmermann, M. G., Eguíluz, V. M. & San Miguel, M. Coevolution of dynamical states and interactions in dynamic networks. Physical Review E 69, 065102 (2004).

Ebel, H. & Bornholdt, S. Coevolutionary games on networks. Phys. Rev. E 66, 056118 (2002).

Zimmermann, M. G., Eguíluz, V. M. & Miguel, M. S. Cooperation, Adaptation and the Emergence of Leadership, 73–86 (Springer, Berlin, Heidelberg, 2001).

Rapoport, A. & Chammah, A. M. Prisoner’s dilemma: A study in conflict and cooperation, vol. 165 (University of Michigan Press, 1965).

Capraro, V., Jordan, J. J. & Rand, D. G. Heuristics guide the implementation of social preferences in one-shot prisoner’s dilemma experiments. Scientific Reports 4, 6790 (2014).

Barcelo, H. & Capraro, V. Group size effect on cooperation in one-shot social dilemmas. Scientific Reports 5, 7937 (2015).

Capraro, V. & Barcelo, H. Group size effect on cooperation in one-shot social dilemmas II: Curvilinear effect. PLoS ONE 10, e0131419 (2015).

Rand, D. G., Arbesman, S. & Christakis, N. A. Dynamic social networks promote cooperation in experiments with humans. Proc. Natl. Acad. Sci. USA 108, 19193–19198 (2011).

Suri, S. & Watts, D. J. Cooperation and contagion in web-based, networked public goods experiments. PLOS ONE 6, e16836 (2011).

Szabó, G. & Hauert, C. Evolutionary prisoner’s dilemma games with voluntary participation. Physical Review E 66, 062903 (2002).

Batali, J. & Kitcher, P. Evolution of altruism in optional and compulsory games. Journal of Theoretical Biology 175, 161–171 (1995).

Cardinot, M., O’Riordan, C. & Griffith, J. The impact of coevolution and abstention on the emergence of cooperation. ArXiv e-prints 1705.00094 (2017).

Jia, D. et al. The impact of loners’ participation willingness on cooperation in voluntary prisoner’s dilemma. Chaos Solitons Fractals 108, 218–223 (2018).

Mao, D. & Niu, Z. Donation of richer individual can support cooperation in spatial voluntary prisoner’s dilemma game. Chaos Solitons Fractals 108, 66–70 (2018).

Shen, C. et al. Cooperation enhanced by the coevolution of teaching activity in evolutionary prisoner’s dilemma games with voluntary participation. PLOS ONE 13, 1–8 (2018).

Wang, L., Ye, S.-Q., Cheong, K. H., Bao, W. & gang Xie, N. The role of emotions in spatial prisoner’s dilemma game with voluntary participation. Physica A: Statistical Mechanics and its Applications 490, 1396–1407 (2018).

Niu, Z., Mao, D. & Zhao, T. Impact of self-interaction on evolution of cooperation in voluntary prisoner’s dilemma game. Chaos Solitons Fractals 110, 133–137 (2018).

Priklopil, T., Chatterjee, K. & Nowak, M. Optional interactions and suspicious behaviour facilitates trustful cooperation in prisoners dilemma. Journal of Theoretical Biology 433, 64–72 (2017).

Canova, G. A. & Arenzon, J. J. Risk and interaction aversion: Screening mechanisms in the prisoner’s dilemma game. Journal of Statistical Physics (2017).

Geng, Y. et al. Historical payoff promotes cooperation in voluntary prisoner’s dilemma game. Chaos Solitons Fractals 105, 145–149 (2017).

Chu, C., Liu, J., Shen, C., Jin, J. & Shi, L. Win-stay-lose-learn promotes cooperation in the prisoner’s dilemma game with voluntary participation. PLOS ONE 12, 1–8 (2017).

Cardinot, M., Griffith, J. & O’Riordan, C. Cyclic dominance in the spatial coevolutionary optional prisoner’s dilemma game. In Greene, D., Namee, B. M. & Ross, R. (eds) Artificial Intelligence and Cognitive Science 2016, vol. 1751 of CEUR Workshop Proceedings, 33–44 (Dublin, Ireland, 2016).

Luo, C., Zhang, X. & Zhenga, Y. Chaotic evolution of prisoner’s dilemma game with volunteering on interdependent networks. Communications in Nonlinear Science and Numerical Simulation 47, 407–415 (2017).

Jeong, H.-C., Oh, S.-Y., Allen, B. & Nowak, M. A. Optional games on cycles and complete graphs. Journal of Theoretical Biology 356, 98–112 (2014).

Cardinot, M., Gibbons, M., O’Riordan, C. & Griffith, J. Simulation of an optional strategy in the prisoner’s dilemma in spatial and non-spatial environments. In From Animals to Animats 14 (SAB 2016), 145–156 (Springer International Publishing, Cham, 2016).

Chen, C.-L., Cao, X.-B., Du, W.-B. & Rong, Z.-H. Evolutionary prisoners dilemma game with voluntary participation on regular lattices and scale-free networks. Physics Procedia 3, 1845–1852 (2010).

Wu, Z.-X., Xu, X.-J., Chen, Y. & Wang, Y.-H. Spatial prisoner’s dilemma game with volunteering in Newman-Watts small-world networks. Phys. Rev. E 71, 037103 (2005).

Szabó, G. & Vukov, J. Cooperation for volunteering and partially random partnerships. Phys. Rev. E 69, 036107 (2004).

Hauert, C. & Szabo, G. Prisoner’s dilemma and public goods games in different geometries: compulsory versus voluntary interactions. Complexity 8, 31–38 (2003).

Lazear, E. P., Malmendier, U. & Weber, R. A. Sorting in experiments with application to social preferences. Am. Econ. Journal: Appl. Econ. 4, 136–163 (2012).

Capraro, V. The emergence of hyper-altruistic behaviour in conflictual situations. Sci. Reports 5, 9916 (2015).

Hauert, C. Effects of space in 2 × 2 games. International Journal of Bifurcation and Chaos 12, 1531–1548 (2002).

Nowak, M. A., Bonhoeffer, S. & May, R. M. Spatial games and the maintenance of cooperation. Proc. Natl. Acad. Sci. 91, 4877–4881 (1994).

Huberman, B. A. & Glance, N. S. Evolutionary games and computer simulations. Proc. Natl. Acad. Sci. 90, 7716–7718 (1993).

Szolnoki, A., Perc, M. & Danku, Z. Making new connections towards cooperation in the prisoner’s dilemma game. EPL 84, 50007 (2008).

Cardinot, M., O’Riordan, C. & Griffith, J. Evoplex: an agent-based modeling platform for networks. Zenodo. https://doi.org/10.5281/zenodo.1340734 (2018).

Acknowledgements

This work was supported by the National Council for Scientific and Technological Development (CNPq-Brazil). Grant number 234913/2014-2.

Contributions

M.C. conceived and conducted the experiments. All authors analysed the results and reviewed the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cardinot, M., Griffith, J., O’Riordan, C. et al. Cooperation in the spatial prisoner’s dilemma game with probabilistic abstention. Sci Rep 8, 14531 (2018). https://doi.org/10.1038/s41598-018-32933-x
