Abstract

Chaotic dynamics has been observed in neurons and neural networks, both in experimental data and in numerical simulations. Theoretical studies have proposed an underlying role of chaos in neural systems. Nevertheless, whether chaotic neural oscillators make a significant contribution to network behaviour, and whether the dynamical richness of neural networks is sensitive to the dynamics of isolated neurons, remain open questions. We investigated synchronization transitions in heterogeneous networks of neurons connected by electrical coupling in a small-world topology. The nodes in our model are oscillatory neurons that, when isolated, can exhibit either chaotic or non-chaotic behaviour, depending on conductance parameters. We found that the heterogeneity of firing rates and firing patterns makes a greater contribution than chaos to the steepness of the synchronization transition curve. We also show that chaotic dynamics of the isolated neurons does not always make a visible difference in the transition to full synchrony. Moreover, macroscopic chaos is observed regardless of the dynamical nature of the neurons. However, by performing a Functional Connectivity Dynamics analysis, we show that chaotic nodes can promote what is known as multi-stable behaviour, where the network dynamically switches between a number of different semi-synchronized, metastable states.

Introduction

Over the past decades, a number of observations of chaos have been reported in the analysis of time series from a variety of neural systems, ranging from the simplest to the more complex1,2. It is generally accepted that the inherent instability of chaos in nonlinear system dynamics facilitates the extraordinary ability of neural systems to respond quickly to changes in their external inputs3, to make transitions from one pattern of behaviour to another when the environment is altered4, and to create a rich variety of patterns endowing neuronal circuits with remarkable computational capabilities5. These features are all suggestive of an underlying role of chaos in neural systems (for reviews, see5,6,7); however, these ideas may not have been thoroughly tested.

Chaotic dynamics in neural networks can emerge in a variety of ways, including intrinsic mechanisms within individual neurons8,9,10,11,12 or interactions between neurons3,13,14,15,16,17,18,19,20,21. The first type of chaotic dynamics in neural systems is typically accompanied by microscopic chaotic dynamics at the level of individual oscillators. The presence of this chaos has been observed in networks of Hindmarsh-Rose neurons8 and biophysical conductance-based neurons9,10,11,12. The second type of chaotic firing pattern is synchronous chaos. Synchronous chaos has been demonstrated in networks of both biophysical and non-biophysical neurons3,13,15,17,22,23,24, where neurons display synchronous chaotic firing-rate fluctuations. In the latter cases, the chaotic behaviour is a result of network connectivity, since isolated neurons do not display chaotic dynamics or burst firing. More recently, it has been shown that asynchronous chaos, where neurons exhibit asynchronous chaotic firing-rate fluctuations, emerges generically from balanced networks with multiple time scales in their synaptic dynamics20.

Different modelling approaches have been used to uncover important conditions for observing these types of chaotic behaviour (in particular, synchronous and asynchronous chaos) in neural networks, such as the synaptic strength25,26,27, heterogeneity of the numbers of synapses and their synaptic strengths28,29, and lately the balance of excitation and inhibition21. The results obtained by Sompolinsky et al.25 showed that, when the synaptic strength is increased, neural networks display a highly heterogeneous chaotic state via a transition from an inactive state. Other studies demonstrated that chaotic behaviour emerges in the presence of weak and strong heterogeneities, for example a coupled heterogeneous population of neural oscillators with different synaptic strengths28,29,30. Recently, Kadmon et al.21 highlighted the importance of the balance between excitation and inhibition on a transition to chaos in random neural networks. All these approaches identify the essential mechanisms for generating chaos in neural networks. However, they give little insight into whether chaotic neural oscillators make a significant contribution to relevant network behaviour, such as synchronization. In other words, whether the dynamical richness of neural networks is sensitive to the dynamics of isolated neurons has not been systematically studied yet.

To address this question, in the present paper we studied synchronization transitions in heterogeneous networks of interacting neurons. Here we make use of an oscillatory neuron model (Huber & Braun model + Ih, referred to here as HB + Ih) that exhibits either chaotic or non-chaotic behaviour depending on parameter values. Compared to other conductance-based models that display a variety of firing patterns and chaos, the HB + Ih model consists of fewer variables and parameters while still retaining a biophysical meaning of its parameters and equations. Moreover, chaos is found in biologically plausible parameter regions, as we showed in our previous study12. Taking advantage of the mapping of chaotic regions that we previously performed, we simulated small-world31 neural networks consisting of a heterogeneous population of HB + Ih neurons connected by electrical synapses, with parameters sampled from either chaotic or non-chaotic regions of the parameter space.

Our first finding is that isolated chaotic neurons in networks do not always make a visible difference in the process of network synchronization. The heterogeneity of firing rates and the type of firing patterns make a greater contribution to the steepness of the synchronization transition curve. Moreover, macroscopic chaos is observed regardless of the dynamical nature of the neurons. However, the results of Functional Connectivity Dynamics (FCD) analysis show that chaotic nodes can promote what is known as multi-stable behaviour, where the network dynamically switches between a number of different semi-synchronized, metastable states. Finally, our results suggest that chaotic dynamics of the isolated neurons is not always a predictor of macroscopic chaos, but macroscopic chaos can be a predictor of meta- and multi-stability.

Materials and Methods

Single neuron dynamics

We use a parabolic bursting model inspired by the static firing patterns of cold thermoreceptors, in which a slow sub-threshold oscillation is driven by a combination of a persistent Sodium current (I sd ), a Calcium-activated Potassium current (I sr ) and a hyperpolarization-activated current (I h ). Depending on the parameters, it exhibits a variety of firing patterns including irregular, tonic regular, bursting and chaotic firing12,32. Based on the Huber & Braun (HB) thermoreceptor model11, here it will be referred to as the HB + Ih model.

The membrane action potential of a HB + Ih neuron follows the dynamics:

where V is the membrane potential; I d , I r , I sd , I sr are depolarizing (NaV), repolarizing (Kdr), slow depolarizing (NaP/CaT) and slow repolarizing (KCa) currents, respectively. I h stands for the hyperpolarization-activated current, I l represents the leak current, and lastly the term I syn is the synaptic current. Currents (except I syn ) are defined as:

where a i is an activation term that represents the open probability of the channels (a l ≡ 1), with the exception of a sr that represents intracellular Calcium concentration. Parameter g i is the maximal conductance density, E i is the reversal potential and the function ρ(T) is a temperature-dependent scale factor for the current.

The activation terms a r , a sd and a h follow the differential equations:

where

\(V_{i}^{0}\) is the half-activation voltage and s i is the voltage dependency (slope) of the sigmoid function. On the other hand, a sr follows

where η is a factor that relates the mixed Na/Ca I sd current to the increment of intracellular Calcium. This is made negative such that inward currents will produce an increase in a sr . κ is a rate for Calcium decrease, given by buffering and/or active extrusion. Finally,

The function ϕ(T) is a temperature factor for channel kinetics. The temperature-dependent functions for conductance ρ(T) in Eqs (2) and (3), and for kinetics ϕ(T) in Eqs (4) and (6) are given, respectively, by:

In the simulations, we vary the maximal conductance densities g sd , g sr and g h . Unless stated otherwise, the parameters used are given in Table 1.
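As a compact reference, the structure described in this subsection can be sketched as follows. This is a hedged summary built only from the definitions given in the text, not a verbatim copy of Eqs (1–8). The symbols \(C_m\) (membrane capacitance) and \(\tau_i\) (time constants) are assumed names, the sign with which \(I_{syn}\) enters the current balance is an assumed convention, and the instantaneous activation of \(I_d\) in the last line is an assumption taken from the usual form of Huber & Braun type models. The generic current expression applies to the currents with an activation term; the slow repolarizing current, whose variable a sr represents intracellular Calcium, may follow a different expression that is not reproduced here. The explicit forms of \(\rho(T)\) and \(\phi(T)\) are left unspecified.

\[
\begin{aligned}
C_m \frac{dV}{dt} &= -I_d - I_r - I_{sd} - I_{sr} - I_h - I_l + I_{syn} \\
I_i &= \rho(T)\, g_i\, a_i\, (V - E_i) \\
\frac{da_i}{dt} &= \phi(T)\, \frac{a_i^{\infty} - a_i}{\tau_i}, \qquad i \in \{r, sd, h\} \\
a_i^{\infty} &= \frac{1}{1 + \exp\left(-s_i\,(V - V_i^{0})\right)} \\
\frac{da_{sr}}{dt} &= \phi(T)\, \frac{-\eta\, I_{sd} - \kappa\, a_{sr}}{\tau_{sr}} \\
a_d &= a_d^{\infty}
\end{aligned}
\]

With this sign convention an inward (negative) \(I_{sd}\) makes the term \(-\eta I_{sd}\) positive, which matches the statement that inward currents increase a sr .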

Synaptic interactions

The synaptic input current into neuron k is given by:

In this article, the current I kl between neuron k and l is modelled as a gap junction (electrical synapse):

where g kl is the conductance (coupling strength) of synapse from cell k to cell l. In this work we selected a uniform value g kl = g for all connections within the neural networks, and simulations were performed with different values of g in order to observe the transition to total network synchrony.
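The net gap-junction input to every neuron can be computed in one vectorised step. The sketch below is illustrative only: the function name `gap_junction_current` is hypothetical, and the sign convention (current into neuron k proportional to V_l minus V_k, so that coupling pulls potentials together) is an assumption, since the convention depends on how I syn enters the membrane equation.

```python
import numpy as np

def gap_junction_current(V, C, g):
    """Net electrical-coupling current into each neuron.

    V : (N,) membrane potentials
    C : (N, N) symmetric 0/1 connectivity matrix
    g : uniform coupling conductance (g_kl = g for all connections)

    Assumed convention: I_kl = g * (V_l - V_k), summed over the
    neighbours l of neuron k.
    """
    V = np.asarray(V, dtype=float)
    C = np.asarray(C, dtype=float)
    # diff[k, l] = V[l] - V[k]
    diff = V[None, :] - V[:, None]
    return g * np.sum(C * diff, axis=1)

# Three neurons in a chain 0 - 1 - 2
V = np.array([-60.0, -50.0, -40.0])
C = np.array([[0, 1, 0],
              [1, 0, 1],
              [0, 1, 0]])
I_syn = gap_junction_current(V, C, g=0.1)
```

Because the matrix is symmetric and the conductance uniform, the currents sum to zero over the network: what one cell gains, its neighbour loses.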

Structural connectivity matrix

We define the connectivity matrix by C kl = 1 if the neuron k is connected to neuron l and C kl = 0 otherwise. We employed a Newman-Watts small world topology31, implemented as two basic steps of the standard algorithm33,34,35: (1) Create a ring lattice with N nodes of mean degree 2 K. Each node is connected to its K nearest neighbours on either side. (2) For each node in the graph, add an extra edge with probability p, to a randomly selected node. The added edge cannot be a duplicate or self-loop. Finally, as we are simulating electrical synapses, the matrices were made symmetric. The results presented in the main text correspond to networks with N = 250, K = 5, p = 0.1. Results obtained with other networks are shown in the Supplementary Material. The random seed for adding extra edges was controlled in order to use the same set of connectivity matrices under each condition. 10 or 20 different seeds were used and the results reported are averages of the different network realizations.
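The two construction steps above can be sketched as follows. This is a minimal illustration rather than the code used for the simulations: the function name `newman_watts` and the use of NumPy's `default_rng` for seeding are assumptions, and the sketch requires N > 2K so that the ring lattice has no duplicate edges.

```python
import numpy as np

def newman_watts(N, K, p, seed=None):
    """Symmetric 0/1 connectivity matrix with Newman-Watts shortcuts.

    N : number of nodes
    K : neighbours on each side in the ring lattice (degree 2K)
    p : per-node probability of adding one extra edge
    """
    rng = np.random.default_rng(seed)
    C = np.zeros((N, N), dtype=int)

    # Step 1: ring lattice, each node linked to K neighbours on either side
    for k in range(N):
        for d in range(1, K + 1):
            l = (k + d) % N
            C[k, l] = C[l, k] = 1

    # Step 2: for each node, add one shortcut with probability p
    for k in range(N):
        if rng.random() < p:
            # Valid targets: not already connected, not the node itself
            candidates = np.flatnonzero(C[k] == 0)
            candidates = candidates[candidates != k]
            if candidates.size > 0:
                l = int(rng.choice(candidates))
                C[k, l] = C[l, k] = 1  # keep the matrix symmetric
    return C

# Parameters quoted in the text; the seed value is arbitrary
C = newman_watts(N=250, K=5, p=0.1, seed=1)
```

Fixing the seed reproduces the same matrix, which is what allows the same set of networks to be reused under every condition.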

Quantifying chaos

A standard way to establish whether a system is chaotic is to compute its Lyapunov exponents. In particular, a maximal Lyapunov exponent (MLE) greater than zero is widely used as an indicator of chaos36,37,38,39. We calculated MLEs from trajectories in the full variable space, following a standard numerical method based on Sprott36 (see also Jones et al.37).
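A common numerical approach of this kind follows two nearby trajectories and renormalises their separation after every step. The sketch below illustrates that idea under stated assumptions and is not the code used for the results: the function name, the initial separation `d0` and the transient length are hypothetical choices, and for brevity the demonstration uses the logistic map (whose exponent at r = 4 is known to be ln 2) instead of the neuron model. For a differential equation, `step` would advance the state by one integration step and `dt` would be the step size.

```python
import math

def mle_two_trajectories(step, x0, n_steps, d0=1e-8, dt=1.0, n_transient=100):
    """Estimate the maximal Lyapunov exponent from two nearby trajectories.

    step : function mapping a state (list of floats) to the next state
    x0   : initial state
    d0   : separation restored after every step
    dt   : time advanced by one call to `step`
    """
    x = list(x0)
    for _ in range(n_transient):  # discard the initial transient
        x = step(x)

    y = list(x)
    y[0] += d0  # companion trajectory at distance d0

    log_sum, n_used = 0.0, 0
    for _ in range(n_steps):
        x, y = step(x), step(y)
        d1 = math.sqrt(sum((b - a) ** 2 for a, b in zip(x, y)))
        if d1 == 0.0:
            # Both states rounded to the same value: re-seed and skip
            y = list(x)
            y[0] += d0
            continue
        log_sum += math.log(d1 / d0)
        n_used += 1
        # Renormalise: keep the direction, reset the distance to d0
        y = [a + d0 * (b - a) / d1 for a, b in zip(x, y)]
    return log_sum / (n_used * dt)

def logistic(x, r=4.0):
    """One iteration of the logistic map, used only as a test system."""
    return [r * x[0] * (1.0 - x[0])]

mle = mle_two_trajectories(logistic, [0.3], n_steps=20000)
is_chaotic = mle > 0
```

The separation must be measured in the full state space, which is why the distance is taken over all variables rather than over the voltage alone.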

Measurement of network dynamics

The voltage trajectory of each neuron was low-pass filtered (50 Hz) and a continuous Wavelet transform40,41,42 was applied to determine in one step the predominant frequency and the instantaneous phase at that frequency. We use the complex Morlet wavelet as mother wavelet function to calculate instantaneous phase.
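The one-step extraction of predominant frequency and phase can be illustrated with a hand-written complex Morlet transform. This is a sketch under stated assumptions, not the analysis code of the paper: the function name `morlet_phase`, the number of cycles in the wavelet (`n_cycles`) and the candidate frequency grid are hypothetical, and the 50 Hz low-pass filtering step is omitted.

```python
import numpy as np

def morlet_phase(signal, fs, freqs, n_cycles=6.0):
    """Predominant frequency and instantaneous phase at that frequency.

    signal : (T,) real-valued trace, assumed longer than the widest wavelet
    fs     : sampling rate (samples per second)
    freqs  : candidate frequencies in Hz
    Returns (best_freq, phase) with phase in radians, shape (T,).
    """
    signal = np.asarray(signal, dtype=float)
    signal = signal - signal.mean()
    best_power, best_freq, best_coef = -np.inf, None, None
    for f in freqs:
        sigma_t = n_cycles / (2.0 * np.pi * f)   # temporal width of the wavelet
        half = int(np.ceil(4.0 * sigma_t * fs))  # truncate at 4 standard deviations
        t = np.arange(-half, half + 1) / fs
        envelope = np.exp(-t**2 / (2.0 * sigma_t**2))
        wavelet = envelope * np.exp(2j * np.pi * f * t)
        wavelet /= envelope.sum()                # equal gain at every frequency
        coef = np.convolve(signal, wavelet, mode="same")
        power = np.mean(np.abs(coef) ** 2)
        if power > best_power:
            best_power, best_freq, best_coef = power, float(f), coef
    return best_freq, np.angle(best_coef)

# Synthetic 5 Hz oscillation sampled at 1 kHz for 4 s
fs = 1000.0
t = np.arange(4000) / fs
trace = np.cos(2 * np.pi * 5.0 * t + 0.7)
freq, phase = morlet_phase(trace, fs, np.arange(2.0, 13.0, 1.0))
```

Normalising each wavelet by the sum of its envelope makes the response to a pure sinusoid independent of the wavelet frequency, so the frequency with the largest mean power can be compared fairly across the grid.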

We describe global dynamical behaviour of the neural networks using the mean and the standard deviation of the order parameter amplitude over a time-course, which indicate respectively the global synchrony and the global metastability of the system43,44. The order parameter45,46, R, describes the global level of phase synchrony in a system of N oscillators, given by:

where φ k (t) is the phase of oscillator k at time t, 〈 f 〉 N = ϕ c (t) denotes the average of f over all k in networks, |•| is absolute value and 〈 f 〉 t is the average in time. R = 0 corresponds to the maximally asynchronous (disordered) state, whereas R = 1 represents the state where all oscillators are completely synchronized (phase synchrony state). The global metastability χ of neural networks is given by:

Metastability is zero if the system is either completely synchronized or completely desynchronized during the full simulation; a high value is present only when periods of coherence alternate with periods of incoherence43. Δt in Eq. (12) is the time window used to quantify the global metastability.
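Both global measures follow directly from the instantaneous phases. The sketch below is a minimal illustration with a hypothetical function name; it takes metastability as the standard deviation over time of the order parameter amplitude, as described in the text, which is an assumption about the exact normalisation used in Eq. (12).

```python
import numpy as np

def order_parameter_stats(phases):
    """Global synchrony R and metastability chi from phases.

    phases : (N, T) array, phase of each of N oscillators at T time points
    R   = time average of |mean over oscillators of exp(i * phase)|
    chi = standard deviation over time of the same amplitude (assumed form)
    """
    phases = np.asarray(phases, dtype=float)
    amplitude = np.abs(np.mean(np.exp(1j * phases), axis=0))  # shape (T,)
    return amplitude.mean(), amplitude.std()

# Fully synchronized population: every oscillator has the same phase
sync = np.tile(np.linspace(0.0, 10.0, 50), (8, 1))
R_sync, chi_sync = order_parameter_stats(sync)

# Four oscillators spread evenly around the circle: maximally disordered
spread = np.tile((2 * np.pi * np.arange(4) / 4)[:, None], (1, 50))
R_spread, chi_spread = order_parameter_stats(spread)
```

The two toy cases correspond to the limits discussed in the text: R close to 1 for identical phases, R close to 0 for evenly spread phases, and zero metastability in both because the amplitude never changes.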

Functional Connectivity Dynamics

A series of functional connectivity (FC) matrices were calculated using phase synchrony (order parameter) in a pair-wise fashion. This was done in a series of M overlapping time windows T1, T2, T3, …, T M . The FC matrix at the window m is defined by:

where t corresponds to all times inside window m. We chose 2 s as the width of the time windows, with an overlap of 90% between consecutive windows. In this way, 20 to 25 oscillation cycles are included and the measured synchronization patterns reflect this time scale. Then, the Functional Connectivity Dynamics (FCD) matrix47,48 consists of a pair-wise comparison of all the FCs, revealing how similar or different the synchronization patterns found at different times are. We performed this comparison by taking the values in the lower triangle of each FC, discarding the diagonal and the values adjacent to it, and calculating a correlation matrix between the resulting vectors. Thus the FCD matrix is defined by

where cov (X, Y) is the covariance between vectors X and Y, and σ(X) is the standard deviation of X. Note that the (i, j) indices in Eq. (14) refer to FC matrices obtained at different times, while the (k, l) indices in Eq. (13) refer to network nodes. Finally, a histogram of the FCD values and their variance offer a rough measure of multi-stability (see Results section).
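The FC and FCD computation can be sketched as below. This is an illustration under stated assumptions: the function names are hypothetical, and the pair-wise synchrony is taken as the modulus of the time-averaged phase-difference phasor, which is one common pair-wise form of the order parameter and may differ from the exact expression in Eq. (13). Window width and step are given in samples, so a 2 s window with 90% overlap corresponds to a step of one tenth of the window.

```python
import numpy as np

def fc_matrices(phases, win, step):
    """Pair-wise phase synchrony in sliding windows.

    phases : (N, T) instantaneous phases
    win    : window width in samples
    step   : shift between consecutive windows in samples
    Returns an (M, N, N) array with
    FC[m, k, l] = |mean over window m of exp(i * (phase_k - phase_l))|.
    """
    z = np.exp(1j * np.asarray(phases, dtype=float))
    fcs = []
    for start in range(0, z.shape[1] - win + 1, step):
        zw = z[:, start:start + win]
        # zw @ zw^H sums exp(i * (phase_k - phase_l)) over the window
        fcs.append(np.abs(zw @ zw.conj().T) / win)
    return np.array(fcs)

def fcd_matrix(fcs):
    """Pearson correlation between the FC matrices of all window pairs."""
    n = fcs.shape[1]
    # Lower triangle without the diagonal and the values adjacent to it
    rows, cols = np.tril_indices(n, k=-2)
    vectors = fcs[:, rows, cols]  # shape (M, number of node pairs)
    return np.corrcoef(vectors)   # shape (M, M)

# Toy data: 6 noisy phase oscillators, 400 samples
rng = np.random.default_rng(0)
phases = np.cumsum(rng.normal(0.1, 0.3, size=(6, 400)), axis=1)
fcs = fc_matrices(phases, win=100, step=10)
fcd = fcd_matrix(fcs)
# Rough multi-stability measure: variance of the off-diagonal FCD values
fcd_var = np.var(fcd[np.tril_indices(fcd.shape[0], k=-1)])
```

The variance computed in the last line excludes only the main diagonal; with strongly overlapping windows it may be preferable to also drop the neighbouring off-diagonals, since consecutive windows share most of their data.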

Numerical integration

Equations (1–8) were solved by the Euler method with a fixed step size dt = 0.025. Most simulations were also repeated with an adaptive integration algorithm (odeint routine of Scipy package) without noticeable difference in the results. Data analysis and plotting were performed with Python and the libraries Numpy, Scipy, and Matplotlib. Example code used in this work is available at https://github.com/patoorio/HBIh-synchrony.

Results

Synchronization transitions with parameters drawn from fixed-size regions

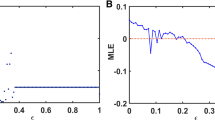

Our main goal is to study how the dynamics of isolated neural oscillators can propagate to the network level, in terms of relevant behaviours. To do this, we use a model of a neural oscillator that can display either chaotic or non-chaotic behaviour depending on the parameters (Figs 1 and 2A, also see12). We simulated networks of 250 neurons connected by electrical coupling (gap junctions) in a small-world topology. When drawing the g sd and g h parameters, we selected different regions of the parameter space that made the neurons behave as either chaotic or non-chaotic oscillators, while maintaining a similar average firing rate (Fig. 2A). Then, the inter-cellular conductance g was varied from 0 to 1 in order to reveal the transition from asynchrony to complete synchronization. The whole procedure was repeated with 10 different random seeds for the generation of the networks, to check that the results are not particular to a specific connectivity.

Figure 1. Examples of non-chaotic (A) and chaotic (B) oscillations of HB + Ih neurons at different combinations of conductance parameters (shown at the top together with the corresponding Maximum Lyapunov Exponent, MLE). Below each voltage time course, inter-spike interval (ISI) plots are shown. Right panels show three-dimensional phase space projections of variables a sd , a sr and a h .

Figure 2. Synchronization transitions in neural networks with parameters drawn from fixed-size regions of the parameter space. (A) Maximal Lyapunov exponent (MLE), firing rate (FR) and firing pattern (FP) obtained for each parameter combination. Red rectangles denote regions with chaotic oscillations, characterized by MLE > 0, while regions in green are non-chaotic oscillators (MLE ≤ 0). The colour bar of FP indicates: 0, no oscillations; 1, sub-threshold oscillations (no spikes); 2, oscillations and spikes with skipping; 3, regular tonic spiking; 4, burst firing (the shade represents the number of spikes per burst); 5, tonic with firing rate between 20 and 50 spikes/second; 6, firing rate higher than 50 spikes/second. (B) and (C) Transition dynamics in heterogeneous networks of 250 neurons, as the coupling value g is increased. Order parameter (R), metastability and MLE are shown. The results are averages of 10 realizations of the networks and the error bars indicate the standard deviation. Subplots B and C correspond to the parameters drawn from range 1 and range 2 of A, respectively. Throughout this article, Non-chaotic networks and Chaotic networks in the legend respectively denote networks built with non-chaotic and chaotic oscillators.

Figure 2B,C show the transition curves for networks built with parameters drawn from regions 1 and 2, showing the order parameter (global synchrony), metastability (time variability of the global synchrony) and the network MLE as a measure of global chaos. In the first pair of parameter regions, we observe that networks of chaotic oscillators show a shallower transition to synchrony, with a higher metastability and a higher network MLE. This suggests that the chaotic nature of the oscillators indeed impacts the network behaviour. In networks of non-chaotic oscillators, when g is between 10⁻⁴ and 10⁻², we observe that the networks become chaotic while transitioning from asynchrony (low R) to synchrony (high R). However, when phase synchronization is reached, chaos is lost. Thus, only asynchronous chaos is observed. In the case of chaotic neurons, we find that the networks exhibit chaotic behaviour over the whole range of synaptic coupling (g from 0 to 1). In that case, both asynchronous and synchronous chaos are observed. When the same analysis was applied to the second pair of parameter regions, we observed an unexpected behaviour of the non-chaotic oscillators, with a non-smooth transition associated with a higher metastability and network MLE. An inspection of the firing patterns (Fig. 2A, right) reveals that the regions labelled as ‘2’ contain transitions between different firing patterns: tonic regular to bursting for the non-chaotic region, and skipping to bursting for the chaotic region. This led us to suspect that the ‘kink’ observed in the curves of non-chaotic oscillators was due to a transition between firing patterns occurring in the network. Fig. 3A shows that, as synaptic coupling is increased, the bursting firing pattern disappears in networks of non-chaotic neurons, whereas it becomes more frequent in networks of chaotic neurons. Moreover, in non-chaotic neurons there is a rebound of bursting firing patterns at g = 0.0433. This finding suggests that the dramatic changes in firing patterns induce the non-smooth transition shown in Fig. 2C.

Figure 3. (A) Mean fraction of bursting events (MB) in networks of chaotic and non-chaotic oscillators from region 2. The fraction of bursting events for a given neuron k is defined as: b k = Nb/Te, where Nb and Te represent the number of bursts (two or more spikes) and total events for isolated neurons, respectively. The mean fraction of bursting events of the whole neural network is expressed as \(\frac{1}{N}\sum_{k=1}^{N} b_{k}\). (B) Histogram of the (isolated) firing rates in each pair of parameter regions that are shown in Fig. 2A.

A closer examination of the firing rates in each parameter range revealed another consequence of chaos. A histogram of the (isolated) firing rates in each parameter region (Fig. 3B) reveals that, when drawing from fixed-size regions of the parameter space, chaotic oscillators show a more heterogeneous distribution of firing rates than non-chaotic ones. Thus, the steeper transition to synchrony and lower metastability observed with non-chaotic oscillators could be due to a more homogeneous nature of the network, rather than to the chaotic oscillation by itself. Although this is already an effect of chaos, it is of dubious biological relevance because neurons will never control their levels of channel expression within a fixed range, as we did here. If anything, neurons control for function, and a simple approximation to this is to consider that they try to maintain a certain average firing rate with whatever combination of ion channel densities can attain it. Thus, we developed a parameter sampling procedure that replicated, for both chaotic and non-chaotic populations, a similar firing rate distribution rather than only a similar mean. We also shifted to another parameter subspace (g sd /g sr ) to take advantage of the complete absence of chaos when g h = 0 (see12). Nevertheless, the simulations that follow were also performed in the g sd /g h parameter subspace with very similar results (see Supplementary Material Fig. S1).

Synchronization transitions using same distribution of firing rates

Figure 4A shows a region of the g sr /g sd parameter subspace, plotting the maximal Lyapunov exponent and the firing rate obtained with each parameter pair. As an example, the target regions with the lower firing rate (from 3.0 to 4.5 spikes/s) are highlighted on the firing rate plots (Fig. 4A, middle and right). The regions shown in a darker tone of blue correspond, respectively, to chaotic (Fig. 4A, middle) and non-chaotic (Fig. 4A, right) oscillations. The 3.0–4.5 Hz interval was divided into bins of 0.1 Hz, and in each bin the same number of g sr /g sd combinations was randomly picked from each region (chaotic or non-chaotic). In addition, we picked parameter pairs from the model without the I h current (NoIh oscillators), which does not display chaotic behaviour under any parameter combination (see12 and Fig. 4B). The corresponding firing rate region is shown in Fig. 4B (bottom). The histogram of firing rates in each selected set of parameters (Fig. 4C) shows that they have the same distribution of firing rates. This is supported by pair-wise testing for equality of distributions using the non-parametric Kolmogorov-Smirnov test49. The same procedure was used to select parameter sets with a higher firing rate (from 7.0 to 9.5 Hz, not shown).
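The bin-wise selection can be sketched as follows. This is an illustration with hypothetical names, not the sampling code of the paper: `fr_a` and `fr_b` stand for the firing rates of candidate parameter pairs from two regions (for example chaotic and non-chaotic), and capping the count by the scarcer set in each bin is an assumed rule for bins with few candidates.

```python
import numpy as np

def sample_matched(fr_a, fr_b, lo, hi, bin_width, n_per_bin, seed=None):
    """Pick candidates from two sets so that both samples share the same
    firing-rate histogram. Returns index arrays (idx_a, idx_b)."""
    rng = np.random.default_rng(seed)
    fr_a = np.asarray(fr_a, dtype=float)
    fr_b = np.asarray(fr_b, dtype=float)
    n_bins = int(round((hi - lo) / bin_width))
    edges = np.linspace(lo, hi, n_bins + 1)
    idx_a, idx_b = [], []
    for left, right in zip(edges[:-1], edges[1:]):
        in_a = np.flatnonzero((fr_a >= left) & (fr_a < right))
        in_b = np.flatnonzero((fr_b >= left) & (fr_b < right))
        # Same number from each set in this bin (assumed cap: scarcer set)
        n = min(n_per_bin, in_a.size, in_b.size)
        if n == 0:
            continue
        idx_a.extend(rng.choice(in_a, size=n, replace=False))
        idx_b.extend(rng.choice(in_b, size=n, replace=False))
    return np.array(idx_a, dtype=int), np.array(idx_b, dtype=int)

# Synthetic candidates with different firing-rate distributions
rng = np.random.default_rng(0)
fr_chaotic = rng.uniform(3.0, 4.5, 2000)
fr_regular = rng.triangular(3.0, 3.75, 4.5, 2000)
idx_c, idx_r = sample_matched(fr_chaotic, fr_regular, 3.0, 4.5, 0.1, 5, seed=1)
```

The returned indices select the parameter pairs to keep from each candidate set. The equality of the two resulting distributions can then be checked with a two-sample Kolmogorov-Smirnov test, for instance `scipy.stats.ks_2samp`.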

Figure 4. Selection of parameter sets with the same distribution of firing rates. (A) Maximum Lyapunov Exponent (MLE) and Firing Rate (FR) obtained in the selected g sr /g sd parameter region. The FR plot is shown twice, highlighting in light blue either chaotic (MLE > 0, left) or non-chaotic (MLE ≤ 0, right) oscillations with a Firing Rate between 3.0 and 4.5 spikes/s. (B) MLE and FR in the same g sr /g sd region as in (A), for the model without I h . Highlighted in light blue, FR between 3.0 and 4.5 spikes/s. (C) Histogram of firing rates in each selected set of parameters and p-value from a pair-wise comparison of distributions with the non-parametric Kolmogorov-Smirnov test.

Networks of 250 neurons were built by randomly picking g sr /g sd pairs from the populations described above. Fig. 5 plots the synchrony transition curves for networks using the parameters drawn from the lower (Fig. 5A) and higher (Fig. 5B) firing rate ranges, showing the order parameter, metastability and the network MLE. In the lower firing rate regions, all types of networks show a similar slope in their transition to synchrony. Networks of chaotic oscillators, however, show a higher metastability and a higher network MLE. In the higher firing rate regions, we observe that networks of both chaotic and non-chaotic oscillators show not only a similar transition to synchrony, but also the same degree of metastability and network MLE. These results suggest that MLE at the network level (macroscopic chaos) can be a predictor of metastability; however, the chaotic nature of the isolated oscillators will not always translate to network chaos in a direct or predictable fashion. The blue curves of Fig. 5 show that networks of NoIh oscillators have a transition to synchrony at lower values of g, with a similar degree of metastability and lower values of network MLE compared to the other networks. However, it is worth mentioning that NoIh systems have 250 fewer dimensions (1 per node) and thus the magnitude of the Lyapunov exponents may not be comparable. Simulations presented in the Supplementary Material (see Fig. S3 for details) show that the transition dynamics is robust to network size.

Figure 5. Transition curves for networks with parameters g sr /g sd , as described in Fig. 4. Synchronization transition characterized by order parameter, metastability and the network MLE. (A) and (B) denote parameters drawn from the lower and higher firing rate ranges, respectively. NoIh networks refers to networks built with NoIh oscillators (g h = 0).

Multi-stable behaviour in neural networks

Finally, we measured the ability of our network models to display multi-stable behaviour by characterizing their functional connectivity dynamics (FCD). This analysis is being extensively applied to fMRI and M/EEG recordings47,48,50 and is explained in Fig. 6 and Methods. Briefly, the time series is divided in overlapping time windows and for each window a matrix of pair-wise synchrony between the nodes is calculated. Then, the synchrony matrices are compared against each other in the FCD matrix, where the axes represent time.

Figure 6. Functional Connectivity Dynamics Analysis. (A) Time course of 50 chaotic nodes (only 5 traces are shown), showing the time windows for synchrony analysis. (B) Functional Connectivity (FC) matrices obtained in 5 sample time windows. (C) All the FCs are compared against each other by Pearson correlation and this constitutes the FCD matrix. The dotted lines and the colour dots at right and top represent the FCs shown in (B).

The FCD matrices in Fig. 7A show distinctive patterns for the unsynchronized and synchronized situations. In the first case (synaptic conductance g = 0), all values outside the diagonal are 0 or close to 0. This means that the pair-wise synchronization patterns or FC matrices continuously evolve in time and never repeat during the simulation. On the other hand, when synaptic conductance is maximal, all the values in the matrix are equal to 1, meaning that the synchronization pattern is the same and is maintained throughout the simulation. However, at intermediate values of g, some FCD matrices show a mixture of values between 0 and 1, with noticeable ‘patches’ that reveal the transient maintenance of some synchronization patterns. We call this a multi-stable regime. The histograms of FCD values (shown in Fig. 7A below each FCD) are also useful in detecting the three situations described. As a rough measure of multi-stability, we took the variance of the FCD values (outside the diagonal) and plotted it against the synaptic conductance, averaging several simulations with different seeds for the random connectivity matrix (Fig. 7B). In the 3.0 to 4.5 spikes/s firing rate range (for the isolated oscillators), it is clear that chaotic nodes produce networks with higher multi-stability than both non-chaotic and NoIh nodes. Moreover, the g range in which the multi-stable behaviour is observed is wider. In the 7.0 to 9.5 spikes/s firing rate range, the variance of the FCD is not higher for chaotic nodes; however, the g range for multi-stability is still wider. This shows that FCDs with signatures of multi-stable behaviour are more easily obtained when the networks are composed of chaotic nodes. FCD analyses were also performed in the g sd /g h parameter subspace with very similar results (Fig. S2).

Figure 7. FCD in networks of chaotic and non-chaotic oscillators. (A) FCD matrices obtained at different values of synaptic conductance g in networks of either chaotic, non-chaotic or NoIh nodes. Below each matrix, a histogram of the values is shown. The diagonal and the neighbouring values were not included. (B) Variance of the FCD values (the same values plotted in the histograms) plotted against g. Average (±SEM) of 20 simulations with different random seeds for the small-world connectivity and parameters.

Discussion

In this work, we investigated how complex node dynamics can affect the synchronization behaviour of a heterogeneous neural network. While several works have focused on network connectivity, few studies have explored the impact of node dynamics on the network. An interesting study by Reyes et al.51 has shown that very small networks (2–3 neurons), when composed of irregular or chaotic nodes, can provide a wider frequency range than when the nodes are regular. Moreover, in medium-size networks but using much simpler node dynamics, Hansen et al.47 showed that bi-stable nodes enhance the dynamical repertoire of the network when looking at the FCD.

To focus on the dynamics of the nodes, we systematically controlled the chaotic nature of the oscillators, trying to keep other variables, such as network heterogeneity, constant. At first glance, our results are not as straightforward to interpret as those of the previously mentioned works. Chaotic node oscillations do not always make a visible difference in terms of mean network synchronization and, most notably, chaos at the network level (macroscopic chaos) was always obtained regardless of the nature of the nodes. Other factors, such as network heterogeneity and the transitions between different firing patterns, seem to be stronger determinants of the steepness of the synchronization transition curves. However, we found that chaotic nodes can promote multi-stable behaviour, where the network dynamically switches between a number of different semi-synchronized, metastable states. Our results suggest that macroscopic chaos can be a predictor of metastability, as the greatest values of this measure (as well as multi-stability) coincide with intermediate g values where the maximum MLEs were found. However, this must be taken with caution, as the MLE is not necessarily a quantitative measure of chaos52.

The chaotic nature of the isolated oscillators did not always translate into network chaos in a direct or predictable fashion. More specifically, our networks always showed chaotic behaviour at some g values regardless of the dynamics of the isolated nodes. This is not surprising, as chaotic behaviour arises in networks of very simple units and seems to depend more strongly on other factors such as synaptic weights and network topology25,26,27,53. Moreover, the high dimensionality of the systems alone seems to be enough to ensure that chaos will emerge under some conditions, for example through the quasi-periodic route to chaos in high-dimensional systems54. On the other hand, assessing chaos in a large network or in a high-dimensional system can be a difficult task. It is generally accepted that a unique intrinsic and observable signature of systems exhibiting deterministic chaos is a fluctuating power spectrum with an exponential frequency dependency55. Thus, some studies introduced the broad power spectrum to characterize the chaos of networks13,56. Here we use the most popular and direct method, the maximal Lyapunov exponent (MLE), to quantify chaos at the level of networks in the same way as we did for single cells12,36,37. As usual, we define the state of the network as chaotic if the MLE is greater than zero.

Network heterogeneity has been shown to promote synchrony in neural networks57,58, as well as in other fields of physics59,60,61. As the assumption of non-identical units in the network is the most realistic setting for a biophysically-inspired system, and in order to focus on the effect of node dynamics, we intended to keep a constant degree of heterogeneity between the different simulated networks. In a first approach, maintaining a constant distribution of parameters yielded oscillatory nodes that were functionally more heterogeneous in the case of chaotic nodes than in the case of non-chaotic nodes. Then we shifted to an approach consisting of obtaining sets (or ‘populations’) of chaotic, non-chaotic and NoIh nodes that shared the same distribution (or heterogeneity) in their firing rates. Still, this approach may be improved because the firing rate by itself may not be the most relevant measure to take into account for the promotion of synchrony in this system. The role of heterogeneity in the promotion of FCD, and the search for a more functionally relevant measure of it, can be the subject of future work.

Macroscopic chaos can arise from the network’s global properties, the propensity of isolated neurons to oscillate, the nature of synaptic dynamics, or a mixture of them, as shown in earlier works25,26,27,28,29. In this paper, the focus is different. First, finding both asynchronous and synchronous chaos in the same network, only by changing the synaptic strength, is new. Second, the route from asynchronous to synchronous chaos differs slightly between networks of chaotic and non-chaotic oscillators, a difference that has not been reported in previous studies. Specifically, networks composed of chaotic neurons switch directly from asynchronous to synchronous chaos, while networks of non-chaotic neurons usually go through four network states: asynchronous activity, asynchronous chaos, asynchronous activity again and, lastly, synchronous chaos.
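The network states named above can be told apart by combining a chaos measure with a synchrony measure. The sketch below is only illustrative and is not the analysis pipeline of this study: it assumes that synchrony is summarised by a time-averaged Kuramoto order parameter computed from node phases, and the threshold `sync_threshold = 0.5`, the function names and the synthetic phase data are hypothetical. The label "synchronous activity" is added for completeness and is not one of the states described in the text.

```python
import numpy as np

def kuramoto_order_parameter(phases):
    """Time-averaged Kuramoto order parameter R for phases of shape (n_times, n_nodes)."""
    phases = np.asarray(phases, dtype=float)
    r_t = np.abs(np.mean(np.exp(1j * phases), axis=1))  # R at each time point
    return float(np.mean(r_t))

def classify_network_state(mle, synchrony, sync_threshold=0.5):
    """Label a network state from its MLE and a synchrony index in [0, 1].

    sync_threshold is a hypothetical cut-off chosen for illustration only.
    """
    chaotic = mle > 0.0
    synchronous = synchrony >= sync_threshold
    if chaotic:
        return "synchronous chaos" if synchronous else "asynchronous chaos"
    return "synchronous activity" if synchronous else "asynchronous activity"

# Synthetic phases: one fully locked network and one with evenly spread phases.
n_nodes = 50
t = np.linspace(0.0, 10.0, 200)[:, None]
locked = np.tile(t, (1, n_nodes))
spread = t + np.linspace(0.0, 2.0 * np.pi, n_nodes, endpoint=False)[None, :]

r_locked = kuramoto_order_parameter(locked)
r_spread = kuramoto_order_parameter(spread)
print(classify_network_state(0.02, r_locked))
print(classify_network_state(0.02, r_spread))
print(classify_network_state(-0.01, r_spread))
```

Scanning the coupling strength g and applying such a labelling at each value would trace the sequence of states along the synchronization transition.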

While discussing chaos in neural systems we have used completely deterministic dynamics. Random variables were used to define network connectivity and node parameters, but the time evolution of the networks and nodes was calculated in the absence of noise. However, neural systems are subject to a number of noise sources, the most important being the stochastic opening and closing of ion channels and synaptic variability62. How the synchronization transitions and meta/multi-stable behaviour emerge in a noisy system remains to be studied, and it will be interesting to assess to what extent the dynamics introduced by chaos persist in the presence of noise.

In summary, we have shown that chaotic neural oscillators can make a significant contribution to relevant network behaviours, such as state transitions and multi-stability. Our results open a new avenue for the study of the dynamical mechanisms and computational significance of chaos in neuronal networks.

References

Arbib, M. A. The handbook of brain theory and neural networks. 208–212 (MIT press, 2003).

Korn, H. & Faure, P. Is there chaos in the brain? II. Experimental evidence and related models. Comptes rendus biologies 326, 787–840 (2003).

Hansel, D. & Sompolinsky, H. Synchronization and computation in a chaotic neural network. Physical Review Letters 68, 718–721 (1992).

Shriki, O., Hansel, D. & Sompolinsky, H. Rate models for conductance-based cortical neuronal networks. Neural computation 15, 1809–1841 (2003).

Rabinovich, M. & Abarbanel, H. The role of chaos in neural systems. Neuroscience 87, 5–14 (1998).

Rabinovich, M. I., Varona, P., Selverston, A. I. & Abarbanel, H. D. Dynamical principles in neuroscience. Reviews of Modern Physics 78, 1213–1265 (2006).

Breakspear, M. Dynamic models of large-scale brain activity. Nature Neuroscience 20, 340–352 (2017).

Hindmarsh, J. L. & Rose, R. A model of neuronal bursting using three coupled first order differential equations. Proceedings of the Royal Society of London B: Biological Sciences 221, 87–102 (1984).

Fan, Y.-S. & Chay, T. R. Generation of periodic and chaotic bursting in an excitable cell model. Biological Cybernetics 71, 417–431 (1994).

Chay, T. R. Chaos in a three-variable model of an excitable cell. Physica D: Nonlinear Phenomena 16, 233–242 (1985).

Braun, H., Huber, M., Dewald, M., Schäfer, K. & Voigt, K. Computer simulations of neuronal signal transduction: the role of nonlinear dynamics and noise. International Journal of Bifurcation and Chaos 8, 881–889 (1998).

Xu, K. et al. Hyperpolarization-activated current induces period-doubling cascades and chaos in a cold thermoreceptor model. Frontiers in Computational Neuroscience 11 (2017).

Hansel, D. & Sompolinsky, H. Chaos and synchrony in a model of a hypercolumn in visual cortex. Journal of computational neuroscience 3, 7–34 (1996).

Roxin, A., Brunel, N. & Hansel, D. Role of delays in shaping spatiotemporal dynamics of neuronal activity in large networks. Physical Review Letters 94, 238103 (2005).

Roxin, A., Brunel, N. & Hansel, D. Rate models with delays and the dynamics of large networks of spiking neurons. Progress of Theoretical Physics Supplement 161, 68–85 (2006).

Battaglia, D., Brunel, N. & Hansel, D. Temporal decorrelation of collective oscillations in neural networks with local inhibition and long-range excitation. Physical Review Letters 99, 238106 (2007).

Battaglia, D. & Hansel, D. Synchronous chaos and broad band gamma rhythm in a minimal multi-layer model of primary visual cortex. PLoS Comput Biol 7, e1002176 (2011).

Harish, O. Network mechanisms of working memory: from persistent dynamics to chaos. Ph.D. thesis, Paris 5 (2013).

Hoerzer, G. M., Legenstein, R. & Maass, W. Emergence of complex computational structures from chaotic neural networks through reward-modulated hebbian learning. Cerebral cortex 24, 677–690 (2014).

Harish, O. & Hansel, D. Asynchronous rate chaos in spiking neuronal circuits. PLoS Comput Biol 11, e1004266 (2015).

Kadmon, J. & Sompolinsky, H. Transition to chaos in random neuronal networks. Physical Review X 5, 041030 (2015).

Sussillo, D. & Abbott, L. F. Generating coherent patterns of activity from chaotic neural networks. Neuron 63, 544–557 (2009).

Barak, O., Sussillo, D., Romo, R., Tsodyks, M. & Abbott, L. From fixed points to chaos: three models of delayed discrimination. Progress in neurobiology 103, 214–222 (2013).

Toyoizumi, T. & Abbott, L. Beyond the edge of chaos: Amplification and temporal integration by recurrent networks in the chaotic regime. Physical Review E 84, 051908 (2011).

Sompolinsky, H., Crisanti, A. & Sommers, H.-J. Chaos in random neural networks. Physical Review Letters 61, 259 (1988).

Burioni, R., Casartelli, M., di Volo, M., Livi, R. & Vezzani, A. Average synaptic activity and neural networks topology: a global inverse problem. Scientific Reports 4 (2014).

Ostojic, S. Two types of asynchronous activity in networks of excitatory and inhibitory spiking neurons. Nature Neuroscience 17, 594–600 (2014).

Yazdanbakhsh, A., Babadi, B., Rouhani, S., Arabzadeh, E. & Abbassian, A. New attractor states for synchronous activity in synfire chains with excitatory and inhibitory coupling. Biological cybernetics 86, 367–378 (2002).

Teramae, J.-N. & Fukai, T. Local cortical circuit model inferred from power-law distributed neuronal avalanches. Journal of computational neuroscience 22, 301–312 (2007).

Pazó, D. & Montbrió, E. From quasiperiodic partial synchronization to collective chaos in populations of inhibitory neurons with delay. Physical Review Letters 116, 238101 (2016).

Newman, M. E. & Watts, D. J. Renormalization group analysis of the small-world network model. Physics Letters A 263, 341–346 (1999).

Orio, P. et al. Role of Ih in the firing pattern of mammalian cold thermoreceptor endings. Journal of neurophysiology 108, 3009–3023 (2012).

Newman, M., Barabasi, A.-L. & Watts, D. J. The structure and dynamics of networks (Princeton University Press, 2011).

Newman, M. Networks: an introduction (Oxford university press, 2010).

Chen, G., Wang, X. & Li, X. Fundamentals of complex networks: models, structures and dynamics (John Wiley & Sons, 2014).

Sprott, J. C. Chaos and time-series analysis, vol. 69 (Oxford: Oxford University Press, 2003).

Jones, D. S., Plank, M. & Sleeman, B. D. Differential equations and mathematical biology (CRC press, 2009).

Skokos, C. The lyapunov characteristic exponents and their computation. In Dynamics of Small Solar System Bodies and Exoplanets, 63–135 (Springer, 2010).

Lynch, S. Dynamical systems with applications using MATLAB (Springer, 2004).

Lee, D. T. & Yamamoto, A. Wavelet analysis: theory and applications. Hewlett Packard journal 45, 44–52 (1994).

Addison, P. The illustrated wavelet transform handbook: introductory theory and applications in science, engineering, medicine and finance (IOP Publishing, Bristol, 2002).

Hramov, A. E., Koronovskii, A. A., Makarov, V. A., Pavlov, A. N. & Sitnikova, E. Wavelets in Neuroscience (Springer, 2015).

Shanahan, M. Metastable chimera states in community-structured oscillator networks. Chaos: An Interdisciplinary Journal of Nonlinear Science 20, 013108 (2010).

Váša, F. et al. Effects of lesions on synchrony and metastability in cortical networks. Neuroimage 118, 456–467 (2015).

Ponce-Alvarez, A. et al. Resting-state temporal synchronization networks emerge from connectivity topology and heterogeneity. PLoS Comput Biol 11, e1004100 (2015).

Zhang, X., Zou, Y., Boccaletti, S. & Liu, Z. Explosive synchronization as a process of explosive percolation in dynamical phase space. Scientific Reports 4, 5200 (2014).

Hansen, E. C., Battaglia, D., Spiegler, A., Deco, G. & Jirsa, V. K. Functional connectivity dynamics: modeling the switching behavior of the resting state. Neuroimage 105, 525–535, https://doi.org/10.1016/j.neuroimage.2014.11.001 (2015).

Preti, M. G., Bolton, T. A. & Van De Ville, D. The dynamic functional connectome: State-of-the-art and perspectives. Neuroimage (2016).

Wilcox, R. R. Introduction to robust estimation and hypothesis testing (Academic press, 2011).

Cabral, J., Kringelbach, M. & Deco, G. Functional connectivity dynamically evolves on multiple time-scales over a static structural connectome: Models and mechanisms. Neuroimage (2017).

Reyes, M. B., Carelli, P. V., Sartorelli, J. C. & Pinto, R. D. A modeling approach on why simple central pattern generators are built of irregular neurons. PloS one 10, e0120314 (2015).

Dingwell, J. B. Lyapunov exponents. Wiley Encyclopedia of Biomedical Engineering (2006).

Wainrib, G. & Touboul, J. Topological and dynamical complexity of random neural networks. Physical Review Letters 110, 118101 (2013).

Anishchenko, V. S., Astakhov, V., Neiman, A., Vadivasova, T. & Schimansky-Geier, L. Nonlinear dynamics of chaotic and stochastic systems: tutorial and modern developments (Springer Science & Business Media, 2007).

Schuster, H. G. & Just, W. Deterministic chaos: an introduction (John Wiley & Sons, 2006).

Reynolds, A. M., Bartumeus, F., Kölzsch, A. & Van De Koppel, J. Signatures of chaos in animal search patterns. Scientific Reports 6, 23492 (2016).

Mejias, J. F. & Longtin, A. Optimal heterogeneity for coding in spiking neural networks. Physical Review Letters 108, https://doi.org/10.1103/physrevlett.108.228102 (2012).

Mejias, J. F. & Longtin, A. Differential effects of excitatory and inhibitory heterogeneity on the gain and asynchronous state of sparse cortical networks. Frontiers in Computational Neuroscience 8, https://doi.org/10.3389/fncom.2014.00107 (2014).

Braiman, Y., Lindner, J. F. & Ditto, W. L. Taming spatiotemporal chaos with disorder. Nature 378, 465 (1995).

Tessone, C. J., Mirasso, C. R., Toral, R. & Gunton, J. D. Diversity-induced resonance. Physical Review Letters 97, 194101 (2006).

Valizadeh, A., Kolahchi, M. & Straley, J. Single phase-slip junction site can synchronize a parallel superconducting array of linearly coupled josephson junctions. Physical Review B 82, 144520 (2010).

Faisal, A. A., Selen, L. P. & Wolpert, D. M. Noise in the nervous system. Nature Reviews Neuroscience 9, 292–303 (2008).

Acknowledgements

KX is funded by Proyecto Fondecyt 3170342 (Chile). JM is recipient of a Ph.D. grant FIB-UV from UV. SC is recipient of a Ph.D. fellowship grant from CONICYT 21140603 (Chile). PO is partially funded by the Advanced Center for Electrical and Electronic Engineering (FB0008 Conicyt, Chile). The Centro Interdisciplinario de Neurociencia de Valparaíso (CINV) is a Millennium Institute supported by the Millennium Scientific Initiative of the Ministerio de Economía (ICM-MINECON, Proyecto Codigo P09-022-F, CINV, Chile).

Author information

Contributions

K.X. and P.O. performed numerical simulations and analysis. J.M., S.C. and P.O. performed Functional Connectivity Dynamics Analysis. K.X. and P.O. wrote the manuscript. K.X., J.M., S.C. and P.O. revised and approved the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xu, K., Maidana, J.P., Castro, S. et al. Synchronization transition in neuronal networks composed of chaotic or non-chaotic oscillators. Sci Rep 8, 8370 (2018). https://doi.org/10.1038/s41598-018-26730-9
