Abstract

There is intense interest in uncovering design rules that govern the formation of various structural phases as a function of chemical composition in multi-principal element alloys (MPEAs). In this paper, we develop a machine learning (ML) approach built on the foundations of ensemble learning, post hoc model interpretability of black-box models, and clustering analysis to establish a quantitative relationship between the chemical composition and experimentally observed phases of MPEAs. The originality of our work stems from performing instance-level (or local) variable attribution analysis of ML predictions based on the breakdown method, and then identifying similar instances based on k-means clustering analysis of the breakdown results. We also complement the breakdown analysis with Ceteris Paribus profiles that showcase how the model response changes as a function of a single variable, when the values of all other variables are fixed. Results from local model interpretability analysis uncover key insights into variables that govern the formation of each phase. Our developed approach is generic, model-agnostic, and valuable to explain the insights learned by the black-box models. An interactive web application is developed to facilitate model sharing and accelerate the design of MPEAs with targeted properties.

Similar content being viewed by others

Introduction

Multi-principal element alloys (MPEAs) are made by combining multiple elements, where every element contributes a significant atom fraction to the alloy1. High entropy alloys (HEAs) represent a materials class within the broader family of MPEAs with outstanding mechanical, thermal, and electrochemical properties2,3,4,5,6,7,8,9,10,11,12. HEAs are unique amongst MPEAs because they contain multiple (at least five) principal alloying elements of nearly equi-atomic concentration and yet have a global crystal structure with well-defined Bragg reflections indicative of long-range order. HEAs are typically solid solutions of face centered cubic (FCC), body centered cubic (BCC), or hexagonally closed packed (HCP) phases. Recently, the community has started to explore high entropic versions of intermetallic and ceramic compounds13,14. To date, numerous elements in the periodic table have been explored to tune the properties of HEAs. However, not all compositions have resulted in the desired microstructure for application in extreme environments. In general, the physical and mechanical properties of HEAs vary depending on phase selection and their relative fractions in the microstructure15,16,17. In some applications, mixed phases are preferred18; whereas in others, a single-phase is desired19. Nonetheless, these observations have led many groups to develop effective and efficient phase prediction models for enabling discoveries of previously unexplored HEAs for targeted applications.

Traditional high-throughput approaches based on first-principles calculations are particularly not suitable to search for MPEAs due to the need for large supercells and complex crystal structure space involving multiple prototypes. Although computational thermodynamics-based methods have played an important role20,21, their limitations are also documented in the published literature22. More recently, various groups have demonstrated the potential of data-driven machine learning (ML) methods to guide the design of MPEAs and HEAs towards promising regions in the search space22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37.

One of the most explored ML implementations on MPEAs research is the phase classification problem, where the objective is to train ML models for predicting whether a given chemical composition will form in: (1) single-phase FCC, BCC, or HCP solid solutions, (2) FCC+BCC dual phase with varying phase fractions, (3) single-phase intermetallics, (4) mixed phases (FCC+intermetalics, BCC+intermetallics, FCC+BCC+intermetallics, two different intermetallics etc.), or (5) amorphous phase. ML models with fairly high accuracy (over 75%) have been trained using small and large data set sizes and different choices of outputs. Various elemental and thermodynamic properties have been considered as input features for the phase classification problem23,26,29,30,31,32,33. A number of published studies also report descriptor importance based on cross-entropy, Gini index, or permutation methods to gain some insight into the descriptor contribution to the overall predictive power of the model. There are also shortcomings in the current approaches. As an example, none of the published papers explain the predictions of the black-box models at the granularity of each observation. There is a lack of principled approach to glean insights that shed light on the formation of each phase in the training set. This is important because it is not straight-forward to compare the predictive performance of every published ML study using the data sets generated from different research groups because the ML models are not published along with the research paper.

In this work, we advance the application of ML methods in the MPEA phase classification problem in two significant ways. First, we apply two complementary instance-level (or local) post hoc model interpretability approaches, namely breakdown (BD) plots and Ceteris Paribus (CP) profiles, to glean insights into each observation. The BD method is based on the variable attribution principle, which decomposes the prediction of each individual observation into particular variable contributions38. In contrast, the more traditional global variable importance method provides a high-level or generic understanding of the inner workings of a black-box model and captures the relative importance of a given variable in impacting the overall model performance on the entire data set (that includes all phases). The CP profile method, on the other hand, evaluates the prediction response of a trained ML model to changes in a particular variable under the assumption that the values of all other variables do not change. We then develop an algorithm that combines the variable attribution data from the BD method with k-means clustering method to infer insights about similar instances. These results provide insight into explaining the relative variable contributions in the prediction of each phase or class label as inferred by the ML models. In addition, the CP profile plot captures the average partial relationship between the predicted response and the input variables. In this paper, we demonstrate the power of local model interpretability methods as a key post hoc model analysis tool for materials informatics research. We apply them to explain the predictions from an ensemble of support vector machine (eSVM) models trained on a high-dimensional, multi-class MPEA phase classification problem data set. SVMs belong to a class of black-box models that lack transparency39,40. More details about the eSVM approach are given in the “Methods” section. Although the idea of a global vs local model interpretability has been discussed before in the literature41, its impact is not fully realized in the materials informatics literature. Second, we build an interactive web application (https://adaptivedesign.shinyapps.io/AIRHEAD/) that allows the user to query our trained models directly and predict previously unexplored MPEA or HEA compositions with the desired phase. This effort is aimed at allowing interested researchers to examine carefully the model predictions and facilitate the decision-making process. Moreover, this will also allow the MPEA community to objectively compare future models and document the progress.

Results

Dataset

Our initial data set for ML was constructed by referring to several previous reports that meticulously compiled experimental data from the published literature22,32,42,43,44,45,46,47,48,49,50,51,52. The merged data set contained 3,715 compositions ranging from binary to multi-component alloys. The frequency of occurrence of elements in our data set is illustrated in Supplementary Fig. 1 and Supplementary Table 1, which indicates that the frequency of occurrence of d-block elements is higher compared to that of the p-block elements. Therefore, we anticipate our trained ML models to have a relatively large uncertainty when describing the chemical compositions containing the p-block elements compared to that of the the d-block elements. Each composition was also augmented with the phase information as reported in the literature. The phases were then simplified into seven classes: BCC, FCC, BCC+FCC, HCP, Amorphous (AM), Intermetallics (IM), and Mixed-phases (MP). The simplification mainly pertains to the IM and MP labels. As an example, the IM label indicates that the microstructure contains at least one intermetallic phase. Whereas, the MP label indicates the presence of complex mixtures of multiple phase combinations in the microstructure. For instance, we used the IM label to present ordered phases of B2, C14, and L12 structure type47. While the MP label was used to represent 2BCC+B2, B2+σ, BCC+B2, BCC+FCC+B2, BCC+IM, FCC+B2, FCC+C14, FCC+IM, FCC+σ, 2BCC, and 2FCC phases in the microstructure. A final data set with 1,817 observations were obtained by removing all the duplicate data, missing values, and excluding the alloys showing inconsistent phase data depending on the source. Each of the 1,817 observation was represented by a total number of 125 variables53,54. We did not track the processing history, which can have an impact on the thermodynamics and kinetics of phase formation in the MPEAs.

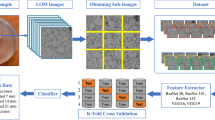

The number of variables were then reduced based on linear Pearson correlation coefficient (PCC)55. This is a common data pre-processing step in materials informatics and cheminformatics literature56,57,58,59,60. The workflow is shown in Fig. 1a. We considered two different absolute PCC threshold values (∣0.4∣ and ∣0.6∣) to down-select least linearly correlated input variables. Our choice of using a PCC criterion of ∣0.6∣ was motivated by the work of Pei et al.32. In addition, we also imposed a more stringent PCC criterion of ∣0.4∣ for further simplification. The PCC analysis resulted in identifying 12 and 20 variable sets for the ∣0.4∣ and ∣0.6∣ criterion, respectively. We note that the approach that we have explored in our work is more rigorous than some of the previous ML work on MPEAs in the literature55, where only one PCC criterion was used. We have tested two separate thresholds (PCC > ∣0.4∣ and PCC > ∣0.6∣) for feature selection. Unfortunately, there is no standard way to choose the thresholds, which makes the PCC analysis a challenging and an exploratory pre-processing step. In principle, one can resort to automated methods such as sure-independence screening61, but there are no convincing evidences in the literature showing that one method is better compared to the other on all data sets. In this work, we have made a concerted effort to retain features that have been well-studied in the literature (domain knowledge) so that we can show how our work advances the insights compared to the previous efforts. In our opinion, a brute-force, automated approach—agnostic of the domain knowledge—is not helpful in advancing the understanding of the MPEAs phase formation problem. The list of down-selected variables is given in Table 1. We can broadly subdivide the down-selected variables into three categories: (1) those that are chemistry-agnostic (e.g., Mixing Entropy), (2) those that depend on element pairs (e.g., DeltaHf), (3) those that depend on chemistry (everything else in Table 1).

The flow chart for a feature selection using Pearson correlation coefficients (PCC) and b machine learning and local model interpretability approach. In this work, we used an ensemble of support vector machines (eSVM) for multi-class classification learning, breakdown plots and Ceteris Paribus (CP) profiles for local model interpretability, and k-means clustering.

After the correlation analysis, we ended up with two pre-processed data sets (one with a 12 variable set and the other with a 20 variable set) for ML model building. The pre-processed data set was randomly split into two subsets with 75 and 25% data for training and testing, respectively. We used the eSVM algorithm for training the ML models. The optimal hyperparameters were determined using a grid search. The out-of-bag error rate was used to evaluate the performance. We systematically varied the number of bootstrap samples and found the 50 and 100 bootstrap eSVM models to show the best predictive performance on the test data for the 12 and 20 variable sets, respectively. Supplementary Tables 2 and 3 compare the relative performance of eSVM models on the test set in terms of accuracy, precision, recall, and F1-score. Both 12 and 20 feature sets of eSVM showed similar performance. Finally, we chose the simpler 12 feature set eSVM models for further analysis. The next step is the post hoc analysis of the trained eSVM models. We start with the global variable importance analysis, which is also the most common method within the ML MPEA community.

Global variable importance

The objective of global variable importance analysis is to evaluate the relative importance of each variable in impacting the overall predictive performance of the trained ML models. In this work, we used the well known permutation-method and cross-entropy loss function to assess the global variable importance62. In Fig. 2, we show the averaged global variable importance analysis from the 12 feature set eSVM model. All features appear to contribute to the prediction performance of the eSVM model. The error bar is the standard deviation from the 50 bootstrap samples. We note that Fig. 2 should be interpreted with caution because of the large standard deviation associated with the feature importance values. While it is not entirely common in the materials informatics literature to add error bars to the global feature importance analysis63,64,65, our work highlights the importance of adding them for improving the interpretability of the analysis. Mixing entropy, number of filled d or s valence electrons, covalent radius, and atomic weight are identified as relatively more important to affect the prediction performance. This result agrees well with the various ML papers in the literature23,26,29,30,31,33. While helpful, the global variable importance approach does not shed light on the following question: what variables contribute to the prediction of each phase (or class label) and how are these variables related to the predicted phase? This requires an implementation of local variable importance methods, which we discuss next.

Local variable importance

We focused on two complementary local model interpretability methods: (1) Breakdown plots and (2) Ceteris Paribus profiles. In the breakdown (BD) approach, we decompose the model prediction for a single observation into contributions that can be attributed to different input variables62,66. The BD analysis can start from either a null set of indexes or a full set of relaxed features, which are referred to as step-up and step-down approaches, respectively. In the case of step-down approach (as considered in our work), each contribution of input variable is calculated by sequentially removing a single variable from a set followed by variable relaxation in a way that the distance to the prediction is minimized. For example, in Fig. 3, a BD plot is shown for the NbTaTiV composition. The eSVM model predicted the composition to form in BCC with 100% probability score. Thus, we will obtain only one BD plot for this composition representing the BCC phase prediction. The BD plots resemble a bar graph. Each variable can either contribute positively (positive weight) or negatively (negative weight) to the overall prediction. The intercept of the BD plot indicates the baseline, which is the average prediction of the ensemble SVM model. The size of each bar shows the feature contributions to the difference between a final prediction and the baseline. As an example, the average MixingEntropy for the entire training set (1,364 compositions) is 9.025. However, for the specific NbTaTiV composition the MixingEntropy value corresponds to 11.526. According to the BD plot, the MixingEntropy value of 11.526 for NbTaTiV will reduce the baseline value by a small amount (negative contribution). In NbTaTiV, the mean_MeltingT, mean_NValence, and mean_NsValence variables carry the largest weight and are recognized as important for predicting the composition as forming in the BCC phase. In a similar manner, we calculated the BD plots for all compositions in the training data. Readers can access the BD plots through our Web App (https://adaptivedesign.shinyapps.io/AIRHEAD/).

The BD plot for NbTaTiV composition, which is predicted to form in BCC phase by the eSVM model. Each bar represents the averaged contribution for that variable towards the overall prediction. Both min_NpUnfilled and frac_pValence descriptors, strictly, take a value of 0 for NbTaTiV. However, it is important to note that the mean values for both min_NpUnfilled and frac_pValence in the entire training data set are not equal to 0. Thus, the plot indicates that when the min_NpUnfilled = frac_pValence = 0 for NbTiTaV, the lack of p-electrons in the valence configuration has a small but sizeable contribution to the overall prediction.

The Ceteris Paribus (CP) profiles convey complementary insights about the relationship between a variable and the response by showing how the prediction would be affected if we changed a value of one variable while keeping all other variables unchanged62. The method is based on the Ceteris Paribus principle; “Ceteris Paribus” is a Latin phrase meaning “other things held constant” or “all else unchanged”. CP profiles are an intuitive method to gain insights into how the black-box model works by investigating the influence of input variables separately, changing one at a time62. In essence, a CP profile shows the dependence of the conditional expectation of the dependent (or output) variable on the values of a particular input variable. In Fig. 4, we show a representative CP profile plot for the same NbTaTiV composition that was discussed in the previous BD section. Unlike the BD plot, we also observe the functional dependence of each variable on the model performance. In Fig. 4, x-axes are the input variables and the y-axes are the prediction probabilities from the eSVM models. There are seven curves in each panel and each curve represents a particular phase. For example, the red curve traces the prediction for the BCC phase. The CP profile plot highlights the presence of non-linear relationship between each of the features and the response. CP profiles for other compositions can be accessed through our Web App (https://adaptivedesign.shinyapps.io/AIRHEAD/).

The CP profile for NbTaTiV composition with respect to the 12 input variables. The black dots indicate the true feature values. The gray region indicates the upper and lower boundaries based on standard deviation. Line colors denote phase information: blue, MP; violet, AM; cyan, FCC; orange, BCC+FCC; lightblue, HCP; red, BCC; green, IM.

While the global variable importance analysis functions at the entire data set level, the BD and CP analyses function at the granularity of each instance or composition. These two methods represent the two extremes in the spectrum of post hoc model interpretability analysis. In addition, there is a need for model interpretability at the intermediate level that will yield insights specific to each phase in our data set (based on the collective similarity or clustering of similar observations). To address this question, we combined the BD plots with the k-means clustering analysis and CP profile data. The pseudocode is summarized in Algorithm 1, which describes the implementation sequence of the BD method, k-means clustering, and CP analysis.

Algorithm 1

Local interpretable ML algorithm using the BD and CP methods along with k-means clustering.

The algorithm starts with the BD analysis for each composition. For a given composition, the BD values are calculated from each trained SVM model in the ensemble and averaged across all 50 ensembles. The results are stored as a data frame. We then perform clustering analysis using the k-means algorithm, assigning a cluster label to each data point. We also construct CP profiles for each composition in the data set and group them according to the cluster labels. We then calculate the average CP profile for each cluster. The final outcome is two plots for each cluster: (1) averaged BD plots and (2) averaged CP profiles. Visualization of the two plots will yield phase-specific interpretation of the eSVM model. For k-means clustering, we plotted the total within sum of square as a function of the number of clusters to infer about the optimal k value (Supplementary Fig. 2a). The common recommendation in the literature is to select a k that provides the most useful or interpretable solution67. Although we could not find a clear elbow point, we selected k = 10 clusters after exploring several k-values. In Supplementary Fig. 2b, we project the high-dimensional data into two-dimensions using principal component analysis. The 10 clusters were then analyzed using histograms as shown in Fig. 5 and Supplementary Fig. 3, where we plot the frequency of occurrence of the number of components in the alloy composition for each cluster. Figure 5 shows that clusters 1, 5, 7, and 10 capture patterns that are representative of the binary systems. Given our interest in the design of HEAs, which normally consists of more than four components, we do not discuss the results from clusters 1, 5, 7, and 10. All other cluster provide important clues for uncovering phase-specific variable importance analysis that pertain to the MPEAs and HEAs. Instead of explaining each cluster in detail (which is beyond the scope of this paper), we only focused on specific clusters where the ML predictions agreed closely with the experimental labels in the data set.

In Supplementary Table 4, we compared the ML prediction accuracy for each of the 10 clusters. Figure 5 indicates that clusters 8 and 9 are representative of the MPEAs. Although cluster 4 is also representative of MPEAs (six-component alloys), it contained fewer data points than clusters 8 and 9. Therefore, we focused on clusters 8 and 9 for model interpretation. The prediction accuracy data from eSVM reveals that clusters 8 and 9 are representative of the BCC and AM phases, respectively. The averaged variable attribution analyses from the BD method for clusters 8 and 9 are shown in Fig. 6a, b, respectively. The mean_NsValence and maxdiff_AtomicWeight variables are identified as important variables for both BCC and AM phases. Since the maxdiff_AtomicWeight variable can be related to the atomic size mismatch, this result is in good agreement with the previous studies68,69. Figure 6a indicates that mean_MeltingT, maxdiff_NUnfilled, and mean_DeltaHf are key variables for the formation of BCC phase. From Fig. 6b, it can be inferred that maxdiff_Electronegativity, mean_NValence, and MixingEntropy are important for forming the AM phase. The relationship between mean_DeltaHf and BCC phase also agrees well with the previous published results70.

The averaged BD plots from other clusters are also displayed in Supplementary Fig. 4, and the interpretations are summarized in Supplementary Table 5. The analysis reveals similarities between BCC and IM phases, and between FCC and AM phases. The MP phase does not appear to have distinct characteristics. This may be due to the fact that the alloys of MP phase have a wider range of data distribution arising from relatively more abundant data and many different types of mixed phases compared to those with other phases that are more unique.

We next visualize the averaged CP profiles for clusters 8 and 9, which provide a more detailed account of the relationship between the input variables and the phases. The CP profiles for BCC and AM phases are shown in Fig. 7a, b, respectively. Not all input variables have unique functional relationships. For example, in Fig. 7a (representative of BCC phase), similar functional relationships are observed between: (1) frac_pValence, maxdiff_NUnfilled, and min_NpUnfilled, (2) mean_CovalentRadius and mean_DeltaHf, (3) dev_NdValence, maxdiff_Electronegativity, and mean_NValance, and (4) mean_NsValence and mean_MeltingT. The maxdiff_AtomicWeight and MixingEntropy are the only two variables that do not share a similar relationship with any other variable. From Fig. 7a (with all else being equal), we infer that any change in the MixingEntropy will not affect the overall composition-phase relationship of the elements in the BCC phases. This gives us the flexibility to tune the composition, without having to worry about any competition with other phases. However, we cannot infer the same for the compositions in Fig. 7b (that correspond to the AM phase). At lower values of MixingEntropy, the Blue curve (MP phase) has a higher predicted probability compared to the Violet curve (AM phase). The phase formation region of the AM phase is predicted to be relatively narrow. This example shows the promise of CP profile plots to uncover important insights that govern the composition-phase relationships in the MPEA family.

The averaged CP profiles for a cluster 8 (BCC phase) and b cluster 9 (AM phase) with respect to the 12 input variables. The black dots indicate the true feature values (normalized) for all the data points within that cluster. Line colors denote phase information: blue, MP; violet, AM; cyan, FCC; orange, BCC+FCC; lightblue, HCP; red, BCC; green, IM.

We also made an attempt to connect the averaged BD plots (Fig. 6a) with the averaged CP profiles (Fig. 7a) for the BCC phase. We found that high mean_MeltingT, high mean_NsValence, and mean_DeltaHf values between 0.3 and 0.5 favor BCC phase formation. From the standpoint of maxdiff_AtomicWeight and maxdiff_NUnfilled variables, MPEAs tend to form in BCC phase when the constituent elements have moderately different atomic weights and similar number of the unfilled valence orbitals. In the case of AM phase (Fig. 7b), while high mean_NsValence values are preferred, low mean_NValence values favor AM phase formation. Low MixingEntropy should be avoided, because it appears to favor the formation of mixed phase (blue curve in Fig. 7b). There is a window of values for maxdiff_AtomicWeight and maxdiff_Electronegativity that favor AM phase formation. In Fig. 7b, extreme values of maxdiff_AtomicWeight and maxdiff_Electronegativity appear to favor mixed phase.

So far, we have been comparing the averaged CP profiles within a cluster. We also observe some interesting patterns between the two clusters. For example, maxdiff_AtomicWeight, mean_CovalentRadius, mean_NValance, mean_NsValence, frac_pValence, mean_DeltaHf, and min_NpUnfilled have similar functional forms. In contrast dev_NdValence, maxdiff_Electronegativity, MixingEntropy, maxdiff_NUnfilled and mean_MeltingT show distinct functional dependencies. The implications of these results are not entirely clear, but showcases the potential of local model interpretability methods for in-depth examination of the black-box models.

In Fig. 8, we show the distribution of constituent elements in clusters 8 and 9. The elements on the left side of the d-block in the periodic table, along with Al, are found in the BCC cluster (cluster 8). In contrast, the compositions representing the AM phase (cluster 9) show a scattered distribution of elements from the d-block. The existence of Be atom in the AM cluster likely implies the connection between the AM phase and a large difference in atomic weight. From the pie charts, we can see that both Ti and Zr are the major elements in both BCC and AM clusters. When it comes to unique elemental constituents, the elements of Nb, Ta, Mo, and V are commonly found in the BCC phase, whereas Cu, Ni, and Al are in the AM phase. Other clusters are also analyzed in the same manner and the results are shown in Supplementary Fig. 5. For FCC, the constituent elements are distributed in the first and second rows of the d-block from the periodic table. The MP phase is similarly related to the first row of the d-block, but several of the p-block elements also participate in the formation of MP phase.

The constituent elements present in clusters 8 (BCC phase) and 9 (AM phase) are a depicted in the periodic table and b analyzed by pie charts, where each number shows their frequency of occurrence. The purple (dashed), red (solid), and blue (dotted) circles indicate the elements appearing in both BCC and AM phases, only BCC phase, and only AM phase, respectively.

Discussion

There is an increasing interest in the application of model interpretability tools to problems in materials science71,72,73,74,75. The expectation that the ML model should also explain the underlying patterns of materials phenomena in addition to the predictions has been steadily increasing. There are also papers from other disciplines, such as bioinformatics, that share similar goals76. We have developed a post hoc ML model interpretability framework for the MPEA phase classification problem. The methodology provide an in-depth analysis of the complex black-box models and extracts interpretable patterns from an ensemble of trained models. In the materials informatics literature, the results from global variable importance are widely used to interpret which variables are strongly related to the ML performance. We argue that phase-specific (or class label specific) variable importance analysis based on local model interpretability offers a distinctive way to gain much deeper insights into the global variable importance results. To illustrate this point, we also compared the global and local variable importance plots to glean additional insights (main results are distilled in Supplementary Table 6). Note that the top three variables from the global variable importance analysis (interpreted solely on the basis of the mean values), namely MixingEntropy, dev_NdValence, and mean_CovalentRadius, are not associated with either the single-phase BCC or FCC compositions that have attracted interest for tailoring the mechanical properties of the HEAs77. The fact that these variables are connected to the MP phase indicates that the presence of a large fraction of the MP phase in the dataset significantly affects (or biases) the global variable importance analysis. One can also find that the important variables for BCC and FCC from the BD plots are not ranked highly by the global variable importance. Therefore, pursuing MPEA design based solely from global variable importance analysis could potentially mislead the researchers especially from the context of a multi-class classification learning setting. Augmenting global variable importance analysis with local feature importance has many desirable characteristics for rationally tailoring MPEA compositions with desired properties.

Methods

Data preprocessing

The dataset collected from the literature consists of 1,817 compositions after deleting the duplicate data and missing values. Descriptors are generated by the Magpie program53 which is a package to compute the concentration-weighted values of materials using the elemental or pairwise properties of components. The mixing enthalpy is calculated based on the Miedema semi-empirical theory54 for binary alloys and the Pauling electronegativity is considered for electronegativity descriptors whose data are also available in the Magpie program. All the formulae used in the Magpie program are summarized in Supplementary Note 1. To find the independent descriptors among 125 descriptors, the feature values are normalized by min-max scaling and then analyzed using pair-wise Pearson correlation within the RSTUDIO environment78.

Machine learning

We employed the eSVM models for multi-class classification learning tasks79. The eSVM algorithm comprises multiple SVM models generated by the bootstrap sampling method80. We used the nonlinear Gaussian radial basis function kernel, as implemented in the e1071 package81. One can generate a large number of training sets using the bootstrap sampling, where samples are randomly drawn with replacement. Every resampling produces two types of samples: (1) in-bag and (2) out-of-bag (OOB), which are used for training and testing the ML models, respectively. The optimization of eSVM hyperparameters is done by the OOB evaluation using grid search.

Breakdown and Ceteris Paribus methods

To interpret the trained eSVM model, the BD and CP profile methods as implemented in the DALEX package62 were applied to compute the contributions of features and individual profiles to ML prediction, respectively. The k-mean clustering algorithm from the factoextra package82 was used to divide the dataset containing the BD values into clusters in an unsupervised fashion. Local feature importance is analyzed based on the averaged BD data by identifying the correlation between each cluster and the phase selections as predicted by the BD method. The global variable importance of the eSVM is obtained by averaging the outputs of global variable importance for each individual SVM part across all the bootstrap samples.

Web application

Applications developed with the Shiny package83 in the R programming language allow users to interactively engage with models defined in the server end (server.R). The front end of the application, contained in the user-interface script (ui.R), takes a user inputted string composed of element symbols followed by the amount of the element (e.g., Al1.0V1.0Nb1.0T1.0) representing the composition of the HEA. The trained eSVM model in the backend generates the phase probability for the given composition. Additionally, the users can obtain the set of 12 descriptors (Table 1), generated using an R script based on the Magpie package. For each composition, the user can add the phase probability and descriptor information to a dynamic history, able to be exported as a comma-separated value file at the end of the session. For each of the 1,367 points in the training set, users can see the associated BD plot and CP profiles. The web app can be accessed at https://adaptivedesign.shinyapps.io/AIRHEAD/.

Data availability

The dataset used for the ML study is freely available in our Web App (https://adaptivedesign.shinyapps.io/AIRHEAD/), on Figshare84, and on Supplementary Information.

Code availability

The code used are available from the corresponding author upon reasonable request

References

Senkov, O., Miller, J., Miracle, D. & Woodward, C. Accelerated exploration of multi-principal element alloys with solid solution phases. Nat. Commun. 6, 6529 (2015).

Cantor, B., Chang, I., Knight, P. & Vincent, A. Microstructural development in equiatomic multicomponent alloys. Mater. Sci. Eng.: A 375–377, 213–218 (2004).

Yeh, J.-W. et al. Nanostructured high-entropy alloys with multiple principal elements: Novel alloy design concepts and outcomes. Adv. Eng. Mater. 6, 299–303 (2004).

Zhang, Y. et al. Microstructures and properties of high-entropy alloys. Prog. Mater. Sci. 61, 1–93 (2014).

Senkov, O. N., Miracle, D. B., Chaput, K. J. & Couzinie, J.-P. Development and exploration of refractory high entropy alloys—A review. J. Mater. Res. 33, 3092–3128 (2018).

Kumar, A. & Gupta, M. An insight into evolution of light weight high entropy alloys: A review. Metals 6, 199 (2016).

Gandy, A. S. et al. High temperature and ion implantation-induced phase transformations in novel reduced activation Si-Fe-V-Cr (-Mo) high entropy alloys. Front. Mater. 6, 146 (2019).

Chen, J. et al. A review on fundamental of high entropy alloys with promising high-temperature properties. J. Alloy. Compd. 760, 15–30 (2018).

Miracle, D. B. et al. Exploration and development of high entropy alloys for structural applications. Entropy 16, 494–525 (2014).

Praveen, S. & Kim, H. S. High-entropy alloys: Potential candidates for high-temperature applications—an overview. Adv. Eng. Mater. 20, 1700645 (2018).

Miracle, D. High entropy alloys as a bold step forward in alloy development. Nat. Commun. 10, 1805 (2019).

George, E. P., Raabe, D. & Ritchie, R. O. High-entropy alloys. Nat. Rev. Mater. 4, 515–534 (2019).

Oses, C., Toher, C. & Curtarolo, S. High-entropy ceramics. Nat. Rev. Mater. 5, 295–309 (2020).

Zhou, N. et al. Single-phase high-entropy intermetallic compounds (HEICs): Bridging high-entropy alloys and ceramics. Sci. Bull. 64, 856–864 (2019).

Wong, S.-K., Shun, T.-T., Chang, C.-H. & Lee, C.-F. Microstructures and properties of Al0.3CoCrFeNiMnx high-entropy alloys. Mater. Chem. Phys. 210, 146–151 (2018).

Li, Z., Pradeep, K. G., Deng, Y., Raabe, D. & Tasan, C. C. Metastable high-entropy dual-phase alloys overcome the strength-ductility trade-off. Nature 534, 227–230 (2016).

Chen, R. et al. Composition design of high entropy alloys using the valence electron concentration to balance strength and ductility. Acta Mater. 144, 129–137 (2018).

Tang, Z., Zhang, S., Cai, R., Zhou, Q. & Wang, H. Designing high entropy alloys with dual fcc and bcc solid-solution phases: Structures and mechanical properties. Metall. Mater. Trans. A 50, 1888–1901 (2019).

Feuerbacher, M., Lienig, T. & Thomas, C. A single-phase bcc high-entropy alloy in the refractory Zr-Nb-Ti-V-Hf system. Scr. Mater. 152, 40–43 (2018).

Zhang, C. & Gao, M. C. High-Entropy Alloys 399–444 (Springer, 2016).

Feng, R. et al. High-throughput design of high-performance lightweight high-entropy alloys. Nat. Commun. 12, 4329 (2021).

Qi, J., Cheung, A. M. & Poon, S. J. High entropy alloys mined from binary phase diagrams. Sci. Rep. 9, 15501 (2019).

Islam, N., Huang, W. & Zhuang, H. L. Machine learning for phase selection in multi-principal element alloys. Comput. Mater. Sci. 150, 230–235 (2018).

Kim, G. et al. First-principles and machine learning predictions of elasticity in severely lattice-distorted high-entropy alloys with experimental validation. Acta Mater. 181, 124–138 (2019).

Zhou, Z. et al. Machine learning guided appraisal and exploration of phase design for high entropy alloys. npj Comput. Mater. 5, 128 (2019).

Huang, W., Martin, P. & Zhuang, H. L. Machine-learning phase prediction of high-entropy alloys. Acta Mater. 169, 225–236 (2019).

Li, Y. & Guo, W. Machine-learning model for predicting phase formations of high-entropy alloys. Phys. Rev. Mater. 3, 095005 (2019).

Qu, N., Chen, Y., Lai, Z., Liu, Y. & Zhu, J. The phase selection via machine learning in high entropy alloys. Procedia Manuf. 37, 299–305 (2019).

Kaufmann, K. & Vecchio, K. S. Searching for high entropy alloys: A machine learning approach. Acta Mater. 198, 178–222 (2020).

Zhang, L. et al. Machine learning reveals the importance of the formation enthalpy and atom-size difference in forming phases of high entropy alloys. Mater. Des. 193, 108835 (2020).

Dai, D. et al. Using machine learning and feature engineering to characterize limited material datasets of high-entropy alloys. Comput. Mater. Sci. 175, 109618 (2020).

Pei, Z., Yin, J., Hawk, J. A., Alman, D. E. & Gao, M. C. Machine-learning informed prediction of high-entropy solid solution formation: Beyond the Hume-Rothery rules. npj Comput. Mater. 6, 50 (2020).

Zhang, Y. et al. Phase prediction in high entropy alloys with a rational selection of materials descriptors and machine learning models. Acta Mater. 185, 528–539 (2020).

Risal, S., Zhu, W., Guillen, P. & Sun, L. Improving phase prediction accuracy for high entropy alloys with machine learning. Comput. Mater. Sci. 192, 110389 (2021).

Lee, S. Y., Byeon, S., Kim, H. S., Jin, H. & Lee, S. Deep learning-based phase prediction of high-entropy alloys: Optimization, generation, and explanation. Mater. Des. 197, 109260 (2021).

Beniwal, D. & Ray, P. Learning phase selection and assemblages in high-entropy alloys through a stochastic ensemble-averaging model. Comput. Mater. Sci. 197, 110647 (2021).

Yan, Y., Lu, D. & Wang, K. Accelerated discovery of single-phase refractory high entropy alloys assisted by machine learning. Comput. Mater. Sci. 199, 110723 (2021).

Staniak, M. & Biecek, P. Explanations of model predictions with live and breakDown Packages. R. J. 10, 395 (2019).

Cortes, C. & Vapnik, V. Support-vector networks. Mach. Learn. 20, 273–297 (1995).

Vapnik, V. N. Estimation of Dependences Based on Empirical Data: Empirical Inference Science: Afterword of 2006 2nd edn (Springer, 2006).

Murdoch, W. J., Singh, C., Kumbier, K., Abbasi-Asl, R. & Yu, B. Definitions, methods, and applications in interpretable machine learning. Proc. Natl Acad. Sci. USA 116, 22071–22080 (2019).

Yang, X. & Zhang, Y. Prediction of high-entropy stabilized solid-solution in multi-component alloys. Mater. Chem. Phys. 132, 233–238 (2012).

Hu, Q. et al. Parametric study of amorphous high-entropy alloys formation from two new perspectives: Atomic radius modification and crystalline structure of alloying elements. Sci. Rep. 7, 39917 (2017).

Senkov, O. & Miracle, D. A new thermodynamic parameter to predict formation of solid solution or intermetallic phases in high entropy alloys. J. Alloy. Compd. 658, 603–607 (2016).

Guo, S., Hu, Q., Ng, C. & Liu, C. More than entropy in high-entropy alloys: Forming solid solutions or amorphous phase. Intermetallics 41, 96–103 (2013).

Toda-Caraballo, I. & del Castillo, P. R.-D. A criterion for the formation of high entropy alloys based on lattice distortion. Intermetallics 71, 76–87 (2016).

Miracle, D. & Senkov, O. A critical review of high entropy alloys and related concepts. Acta Mater. 122, 448–511 (2017).

Parlinski, K., Li, Z. Q. & Kawazoe, Y. First-principles determination of the soft mode in cubic ZrO2. Phys. Rev. Lett. 78, 4063–4066 (1997).

Gao, M. et al. Thermodynamics of concentrated solid solution alloys. Curr. Opin. Solid State Mater. Sci. 21, 238–251 (2017).

Tan, Y., Li, J., Tang, Z., Wang, J. & Kou, H. Design of high-entropy alloys with a single solid-solution phase: Average properties vs. their variances. J. Alloy. Compd. 742, 430–441 (2018).

Ye, Y., Wang, Q., Lu, J., Liu, C. & Yang, Y. High-entropy alloy: Challenges and prospects. Mater. Today 19, 349–362 (2016).

Borg, C. K. H. et al. Expanded dataset of mechanical properties and observed phases of multi-principal element alloys. Sci. Data 7, 430 (2020).

Ward, L., Agrawal, A., Choudhary, A. & Wolverton, C. A general-purpose machine learning framework for predicting properties of inorganic materials. npj Comput. Mater. 2, 16028 (2016).

Takeuchi, A. & Inoue, A. Classification of bulk metallic glasses by atomic size difference, heat of mixing and period of constituent elements and its application to characterization of the main alloying element. Mater. Trans. 46, 2817–2829 (2005).

Kelleher, J. D., D'Arcy, A. & Namee, B. M. Fundamentals of Machine Learning for Predictive Data Analytics: Algorithms, Worked Examples, and Case Studies (The MIT Press, 2020).

Kunka, C., Shanker, A., Chen, E. Y., Kalidindi, S. R. & Dingreville, R. Decoding defect statistics from diffractograms via machine learning. npj Comput. Mater. 7, 67 (2021).

Wang, Q. et al. Predicting the propensity for thermally activated β events in metallic glasses via interpretable machine learning. npj Comput. Mater. 6, 194 (2020).

Im, J. et al. Identifying Pb-free perovskites for solar cells by machine learning. npj Comput. Mater. 5, 37 (2019).

Medasani, B. et al. Predicting defect behavior in B2 intermetallics by merging ab initio modeling and machine learning. npj Comput. Mater. 2, 1 (2016).

Shen, M., Xiao, Y., Golbraikh, A., Gombar, V. K. & Tropsha, A. Development and validation of k-nearest-neighbor QSPR models of metabolic stability of drug candidates. J. Med. Chem. 46, 3013–3020 (2003).

Fan, J. & Lv, J. Sure independence screening for ultrahigh dimensional feature space. J. R. Stat. Soc.: Ser. B (Stat. Methodol.) 70, 849–911 (2008).

Biecek, P., Maksymiuk, S. & Baniecki, H. moDel Agnostic Language for Exploration and eXplanation. https://dalex.drwhy.ai, https://github.com/ModelOriented/DALEX. R package version 2.2.0 (2021).

Lu, Z. et al. Interpretable machine-learning strategy for soft-magnetic property and thermal stability in Fe-based metallic glasses. npj Comput. Mater. 6, 187 (2020).

Pimachev, A. K. & Neogi, S. First-principles prediction of electronic transport in fabricated semiconductor heterostructures via physics-aware machine learning. npj Comput. Mater. 7, 93 (2021).

Liu, Z. et al. Machine learning on properties of multiscale multisource hydroxyapatite nanoparticles datasets with different morphologies and sizes. npj Comput. Mater. 7, 142 (2021).

Staniak, M. & Biecek, P. Explanations of model predictions with live and breakDown Packages. R. J. 10, 395–409 (2018).

Friedman, J., Hastie, T. & Tibshirani, R. The Elements of Statistical Learning Vol. 1 (Springer, 2001).

Takeuchi, A. & Inoue, A. Calculations of mixing enthalpy and mismatch entropy for ternary amorphous alloys. Mater. Trans., JIM 41, 1372–1378 (2000).

Takeuchi, A. & Inoue, A. Classification of bulk metallic glasses by atomic size difference, heat of mixing and period of constituent elements and its application to characterization of the main alloying element. Mater. Trans. 46, 2817–2829 (2005).

Zhang, Y., Zhou, Y., Lin, J., Chen, G. & Liaw, P. Solid-solution phase formation rules for multi-component alloys. Adv. Eng. Mater. 10, 534–538 (2008).

Ling, J. et al. Building data-driven models with microstructural images: Generalization and interpretability. Mater. Discov. 10, 19–28 (2017).

Xie, T. & Grossman, J. C. Crystal graph convolutional neural networks for an accurate and interpretable prediction of material properties. Phys. Rev. Lett. 120, 145301 (2018).

Lopez, S. A., Sanchez-Lengeling, B., de Goes Soares, J. & Aspuru-Guzik, A. Design principles and top non-fullerene acceptor candidates for organic photovoltaics. Joule 1, 857–870 (2017).

Gurnani, R., Yu, Z., Kim, C., Sholl, D. S. & Ramprasad, R. Interpretable machine learning-based predictions of methane uptake isotherms in metal-organic Frameworks. Chem. Mater. 33, 3543–3552 (2021).

Xiong, J., Shi, S.-Q. & Zhang, T.-Y. Machine learning of phases and mechanical properties in complex concentrated alloys. J. Mater. Sci. Technol. 87, 133–142 (2021).

Clough, J. R. et al. Global and local interpretability for cardiac mri classification. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 656–664 (Springer, 2019).

George, E., Curtin, W. & Tasan, C. High entropy alloys: A focused review of mechanical properties and deformation mechanisms. Acta Mater. 188, 435–474 (2020).

R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria (2012). http://www.R-project.org/.

Vapnik, V. The Nature of Statistical Learning Theory (Springer-Verlag, 2000).

MacKinnon, D. P., Lockwood, C. M. & Williams, J. Confidence limits for the indirect effect: Distribution of the product and resampling methods. Multivar. Behav. Res. 39, 99–128 (2004).

Meyer, D., Dimitriadou, E., Hornik, K., Weingessel, A. & Leisch, F. e1071: Misc Functions of the Department of Statistics, Probability Theory Group (Formerly: E1071), TU Wien. http://CRAN.R-project.org/package=e1071. R package version 1.6-7. (2015).

Kassambara, A. & Mundt, F. Extract and Visualize the Results of Multivariate Data Analyses. http://www.sthda.com/english/rpkgs/factoextra. R package version 1.0.7. (2020).

Chang, W., Cheng, J., Allaire, J., Xie, Y. & McPherson, J. shiny: Web Application Framework for R . https://CRAN.R-project.org/package=shiny. R package version 1.5.0. (2020).

Lee, K., Ayyasamy, M. V., Delsa, P., Hartnett, T. Q. & Balachandran, P. V. Phase classification of multi-principal element alloys via interpretable machine learning. figshare https://doi.org/10.6084/m9.figshare.15098094.v2 (2021).

Acknowledgements

Research was sponsored by the Defense Advanced Research Project Agency (DARPA) and The Army Research Office and was accomplished under Grant Number W911NF-20-1-0289. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of DARPA, the Army Research Office, or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein.

Author information

Authors and Affiliations

Contributions

The study was planned by K.L., T.Q.H., and P.V.B. The manuscript was prepared by K.L, M.V.A., P.D., T.Q.H., and P.V.B. The data set construction was done by K.L. and T.Q.H. The machine learning studies were performed by K.L. and M.V.A. The web app was built by P.D., K.L., and P.V.B. All authors discussed the results, wrote, and commented on the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, K., Ayyasamy, M.V., Delsa, P. et al. Phase classification of multi-principal element alloys via interpretable machine learning. npj Comput Mater 8, 25 (2022). https://doi.org/10.1038/s41524-022-00704-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1038/s41524-022-00704-y

- Springer Nature Limited

This article is cited by

-

Impact of ratings of content on OTT platforms and prediction of its success rate

Multimedia Tools and Applications (2024)

-

Machine learning-assisted efficient design of Cu-based shape memory alloy with specific phase transition temperature

International Journal of Minerals, Metallurgy and Materials (2024)

-

Structure-Phase Status of the High-Entropy AlNiNbTiCo Alloy

Russian Physics Journal (2024)

-

Machine Learning-Based Classification, Interpretation, and Prediction of High-Entropy-Alloy Intermetallic Phases

High Entropy Alloys & Materials (2023)

-

Overview: recent studies of machine learning in phase prediction of high entropy alloys

Tungsten (2023)