Abstract

The major goal of this paper is to provide a numerical approach for approximating the solutions of a coupled system of convection–diffusion equations with Robin boundary conditions (RBCs). We devise a novel method that uses generalized shifted Legendre polynomials (GSLPs) to generate basis functions that satisfy the four homogeneous RBCs. We provide new operational matrices for the derivatives of the developed polynomials. These operational matrices, combined with the collocation approach, are used to find approximate solutions of the system under consideration. With this technique, the given system subject to RBCs is converted into a system of algebraic equations that can be solved by any suitable numerical method. Theoretical convergence and error estimates are investigated. Finally, we present three illustrative examples that demonstrate the practical implementation of the theoretical study and highlight the validity, usefulness, and applicability of the developed approach. The computed numerical results are compared with those obtained by other approaches. The presented tables and figures show close agreement between the approximate and exact solutions.



1 Introduction

The convection–diffusion equation combines the diffusion and convection equations. It describes many physical phenomena in which particles, energy, or other physical quantities are transferred within a physical system by two distinct processes: diffusion and convection. The name of the equation varies with the context in which it is used: it is also called the drift-diffusion equation or the generic scalar transport equation [7, p.64].

The study of singularly perturbed coupled systems of convection–diffusion equations has a rich history in applied mathematics. Such systems arise in many scientific disciplines, including fluid mechanics, chemical engineering, and environmental modeling. In these systems, convection and diffusion act together: convection is the transport of a substance by the bulk motion of the fluid, and diffusion is the spreading of the substance due to random molecular motion. In the singularly perturbed regime, one of these effects dominates the other, so the associated coefficients differ greatly in magnitude. As a result, the solution exhibits multiscale behavior: it varies rapidly in thin transition layers and slowly away from them. Accurately approximating such solutions is challenging because the steep gradients in these layers must be resolved.

The choice of boundary conditions is crucial when solving the singularly perturbed coupled system of convection–diffusion equations. Different types of boundary conditions, including Dirichlet, Neumann, and Robin, can be considered [10, 11, 21, 25]. This paper focuses on Robin boundary conditions. Robin boundary conditions, also called impedance or mixed boundary conditions, combine Dirichlet and Neumann conditions by prescribing a weighted combination of the solution and its derivative on the boundary. They offer modeling flexibility for systems in which both the value of the solution and its derivative govern the exchange or transmission of a substance across a boundary [9, 20]. Incorporating Robin boundary conditions into the coupled convection–diffusion system therefore allows such physical phenomena to be represented more faithfully.

This research addresses the Robin boundary value problem for a coupled system of singularly perturbed convection–diffusion equations of the form:

subject to the set of four RBCs:

where \(F_{1}\) and \(F_{2}\) are linear or nonlinear functions, \(0\le \varepsilon _{j}\ll 1,\,j=1,2,\) are the perturbation parameters, and \(\alpha _{ij},\,\beta _{ij},\,\gamma _{ij},\,i,j=1,2,\) are constants.

When \(F_{1}\) and \(F_{2}\) are linear, this system has been the subject of several related investigations, including an adaptive grid method [21], hybrid difference schemes on the Shishkin mesh [26], an upwind finite difference scheme on Shishkin meshes [6], and a parameter-uniform numerical method [13].

Three well-known spectral methods are the collocation, tau, and Galerkin methods. These methods provide highly accurate approximations for a wide range of differential equations. Their specific form depends on the type of differential equation being solved and on the boundary conditions under consideration. Operational matrices can be used to construct efficient versions of these methods that reduce the computational cost of solving differential equations (see, for instance, [1,2,3,4,5, 8, 22, 24]).

To the best of our knowledge, no Galerkin operational matrix based on basis functions that satisfy the homogeneous RBCs (i.e., (1.2) with \(\gamma _{i,j}= 0,\,i,j=1,2\)) has been reported in the literature. This gap motivates our interest in such an operational matrix. A further motivation is its use in the numerical solution of BVP (1.1)–(1.2).

The primary goals of this study are as follows:

- (i) Using GSLPs to build a new class of basis polynomials that satisfy the four homogeneous Robin boundary conditions; we call them Robin-Modified Legendre polynomials (RMLP).

- (ii) Constructing operational matrices for the derivatives of these polynomials.

- (iii) Developing a numerical technique for solving BVP (1.1)–(1.2) based on the collocation method and the resulting operational matrices of derivatives.

- (iv) Estimating the error of the approximate solution.

The paper is organized as follows. Section 2 reviews the Legendre polynomials and their generalized shifted versions (GSLPs). Section 3 constructs RMLP that satisfy the homogeneous RBCs. Section 4 derives new operational matrices for the derivatives of RMLP, which are used to handle BVP (1.1)–(1.2). Section 5 applies the collocation method to obtain numerical solutions of BVP (1.1)–(1.2). Section 6 discusses theoretical convergence and error estimates. Section 7 presents three examples together with comparisons with other approaches from the literature. Finally, Sect. 8 presents some conclusions.

2 An Overview of Legendre Polynomials and Their Shifted Ones

One of several ways to define the Legendre polynomials of degree \(n\), \(L_{n}(t)\), is the recurrence formula [14]:

They are orthogonal on \([-1,1]\) and satisfy the relation

The generalized shifted Legendre polynomials (GSLPs) \(L^*_{n}(x;a,b)\) are defined as follows:

and their orthogonality relation is
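As a minimal sketch, assuming the standard three-term recurrence \((n+1)L_{n+1}(t)=(2n+1)tL_{n}(t)-nL_{n-1}(t)\), \(L_{0}(t)=1\), \(L_{1}(t)=t\), and the usual affine definition \(L^*_{n}(x;a,b)=L_{n}\big ((2x-a-b)/(b-a)\big )\) (our assumption for the displays that are not reproduced here), the following Python code evaluates the GSLPs and checks numerically that \(\int _a^b L^*_{m}(x;a,b)L^*_{n}(x;a,b)\,dx=\frac{b-a}{2n+1}\delta _{mn}\). The helper names `gslp` and `gram_matrix` are hypothetical.

```python
import numpy as np

def gslp(n, x, a=0.0, b=1.0):
    """Evaluate L*_n(x; a, b) = L_n((2x - a - b) / (b - a)) via the three-term recurrence."""
    t = (2.0 * np.asarray(x, dtype=float) - a - b) / (b - a)   # map [a, b] onto [-1, 1]
    p_prev, p = np.ones_like(t), t                             # L_0(t) and L_1(t)
    if n == 0:
        return p_prev
    for k in range(1, n):
        # (k + 1) L_{k+1}(t) = (2k + 1) t L_k(t) - k L_{k-1}(t)
        p_prev, p = p, ((2 * k + 1) * t * p - k * p_prev) / (k + 1)
    return p

def gram_matrix(n_max, a, b, n_quad=40):
    """Matrix of int_a^b L*_m L*_n dx for m, n <= n_max, by Gauss-Legendre quadrature."""
    nodes, weights = np.polynomial.legendre.leggauss(n_quad)
    x = 0.5 * (b - a) * nodes + 0.5 * (a + b)   # quadrature nodes mapped to [a, b]
    w = 0.5 * (b - a) * weights                 # weights scaled by the Jacobian
    V = np.array([gslp(n, x, a, b) for n in range(n_max + 1)])
    return (V * w) @ V.T

a, b = -1.0, 3.0
G = gram_matrix(6, a, b)
expected = np.diag([(b - a) / (2 * n + 1) for n in range(7)])
print(np.allclose(G, expected))               # orthogonality relation holds numerically
print(gslp(5, a, a, b), gslp(5, b, a, b))     # endpoint values L*_n(a) = (-1)^n, L*_n(b) = 1
```

The quadrature with 40 nodes is exact for the polynomial integrands involved, so any deviation from the diagonal matrix reflects only rounding.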

Lemma 2.1

The GSLPs can be represented as

where

Proof

The known shifted Legendre polynomials \(L^*_{n}(x;0,1)\) are expressed analytically as

Then

Substituting the relation

into Eq. (2.6), expanding, and collecting like terms, one can show after some lengthy manipulation that \(L^{*(q)}_{n}(0;a,b),\,q\le n,\) take the form (2.4); this completes the proof of Lemma 2.1. \(\square\)
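To make the steps of this proof concrete, the sketch below (our own illustration, not the closed form (2.4)) starts from the standard explicit formula \(L^*_{n}(y;0,1)=\sum _{k=0}^{n}(-1)^{n+k}\frac{(n+k)!}{(n-k)!\,(k!)^{2}}y^{k}\), substitutes \(y=(x-a)/(b-a)\), and expands each binomial to obtain the power coefficients \(c_q=L^{*(q)}_{n}(0;a,b)/q!\). We assume this substitution is the relation used in the proof; the result is cross-checked against NumPy's own Legendre-to-power-basis conversion. The function names are hypothetical.

```python
from math import comb, factorial
import numpy as np
from numpy.polynomial import Legendre, Polynomial

def shifted01_coeffs(n):
    """Power coefficients of L*_n(y; 0, 1) = sum_k (-1)^(n+k) (n+k)! / ((n-k)! (k!)^2) y^k."""
    return [(-1) ** (n + k) * factorial(n + k) // (factorial(n - k) * factorial(k) ** 2)
            for k in range(n + 1)]

def gslp_power_coeffs(n, a, b):
    """Power coefficients c_q of L*_n(x; a, b) = sum_q c_q x^q, obtained by substituting
    y = (x - a) / (b - a) into the [0, 1] formula. By Taylor's theorem,
    c_q = L*_n^{(q)}(0; a, b) / q!."""
    L = b - a
    c = [0.0] * (n + 1)
    for k, s_k in enumerate(shifted01_coeffs(n)):
        # ((x - a) / L)^k = L^(-k) * sum_q C(k, q) x^q (-a)^(k - q)
        for q in range(k + 1):
            c[q] += s_k * comb(k, q) * (-a) ** (k - q) / L ** k
    return np.array(c)

# Cross-check against NumPy's Legendre series on the domain [a, b].
n, a, b = 6, 1.0, 4.0
reference = Legendre.basis(n, domain=[a, b]).convert(kind=Polynomial).coef
print(np.allclose(gslp_power_coeffs(n, a, b), reference))
```

Working in the power basis is convenient for illustration but becomes ill-conditioned for large \(n\), which is one reason closed-form expressions such as (2.4) are preferable in practice.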

It follows directly from Lemma 2.1 that

Note 2.1

Here, recall that the generalized hypergeometric function is defined as follows (see Luke [23]):

where \(b_j\notin \{0,-1,-2,\dots \}\) for all \(1\le j\le q\).
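For reference, the standard defining series \({}_pF_q(a_1,\dots ,a_p;b_1,\dots ,b_q;z)=\sum _{k\ge 0}\frac{(a_1)_k\cdots (a_p)_k}{(b_1)_k\cdots (b_q)_k}\frac{z^k}{k!}\) can be evaluated by truncation. The minimal Python sketch below (the name `hyp_pfq` is ours) accumulates terms through the ratio of consecutive terms and checks two classical identities: the terminating representation \(L_{n}(t)={}_2F_1\big (-n,n+1;1;\tfrac{1-t}{2}\big )\) and \({}_2F_1(1,1;2;z)=-\ln (1-z)/z\). It is intended for terminating series or moderate \(|z|<1\), not as a robust general-purpose implementation.

```python
import math

def hyp_pfq(a_params, b_params, z, max_terms=500, tol=1e-16):
    """Truncated series pFq(a; b; z) = sum_k [prod_i (a_i)_k / prod_j (b_j)_k] z^k / k!.
    The b_j must not be 0 or negative integers; if some a_i is a non-positive integer,
    the series terminates and pFq is a polynomial in z."""
    total, term = 0.0, 1.0
    for k in range(max_terms):
        total += term
        # Ratio of consecutive terms: prod(a_i + k) / (prod(b_j + k) * (k + 1)) * z
        num = 1.0
        for a in a_params:
            num *= a + k
        den = float(k + 1)
        for b in b_params:
            den *= b + k
        term *= num / den * z
        if term == 0.0 or abs(term) < tol * abs(total):
            break
    return total

t = 0.3
# Legendre polynomial as a terminating 2F1 series: L_3(t) = (5t^3 - 3t) / 2
print(math.isclose(hyp_pfq([-3, 4], [1], (1 - t) / 2), (5 * t**3 - 3 * t) / 2))
# A non-terminating case: 2F1(1, 1; 2; z) = -ln(1 - z) / z
print(math.isclose(hyp_pfq([1, 1], [2], 0.5), -math.log(0.5) / 0.5))
```

The restriction on \(b_j\) shows up directly in the code: a factor \(b_j+k=0\) would cause a division by zero.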

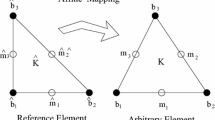

3 Robin-Modified Legendre Polynomials

This section introduces two new classes of polynomials, denoted by \(\varphi _{j,k}(x),\,j=1,2,\) and referred to as RMLP, which satisfy the homogeneous RBCs:

respectively. To this end, we seek RMLP of the form

where the constants \(\mathbb {A}_{j,k},\,\mathbb {B}_{j,k},\,j=1,2,\) are determined so that \(\varphi _{j,k}(x)\) satisfy the conditions (3.1), respectively. Substituting \(\varphi _{j,k}(x)\) into (3.1) yields the following two systems for \(j=1,2\):

respectively, whose solutions are

where \(d_{k}=k^2+k\), \(L=b-a\), \(r=a+b\) and

The proposed RMLP have the special values
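The coefficients \(\mathbb {A}_{j,k}\) and \(\mathbb {B}_{j,k}\) can also be computed numerically. The sketch below assumes the ansatz \(\varphi _{k}(x)=L^*_{k}(x;a,b)+\mathbb {A}_{k}L^*_{k+1}(x;a,b)+\mathbb {B}_{k}L^*_{k+2}(x;a,b)\) and the sign convention \(\alpha _{1}u(a)+\beta _{1}u'(a)=0\), \(\alpha _{2}u(b)+\beta _{2}u'(b)=0\). Both are assumptions, since the displays above are not reproduced here. It relies only on the standard endpoint values \(L^*_{m}(a)=(-1)^m\), \(L^*_{m}(b)=1\), \(L^{*\prime }_{m}(a)=(-1)^{m+1}d_m/L\), \(L^{*\prime }_{m}(b)=d_m/L\), with \(d_m=m^2+m\) and \(L=b-a\) as in the text. The helper names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Legendre

def rmlp_coefficients(k, a, b, alpha, beta):
    """Hypothetical helper: (A_k, B_k) such that L*_k + A_k L*_{k+1} + B_k L*_{k+2} satisfies
    alpha[0] u(a) + beta[0] u'(a) = 0 and alpha[1] u(b) + beta[1] u'(b) = 0."""
    L = b - a
    d = lambda m: m * m + m
    # Robin operators applied to L*_m, using the endpoint values of L*_m and L*_m'
    left = lambda m: (-1) ** m * (alpha[0] - beta[0] * d(m) / L)
    right = lambda m: alpha[1] + beta[1] * d(m) / L
    M = np.array([[left(k + 1), left(k + 2)], [right(k + 1), right(k + 2)]])
    rhs = -np.array([left(k), right(k)])
    A, B = np.linalg.solve(M, rhs)
    return A, B

def rmlp(k, a, b, alpha, beta):
    """phi_k as a NumPy Legendre series on [a, b] (mapped onto [-1, 1] internally)."""
    A, B = rmlp_coefficients(k, a, b, alpha, beta)
    return Legendre([0.0] * k + [1.0, A, B], domain=[a, b])

def robin_residuals(p, a, b, alpha, beta):
    """Left and right Robin residuals of the polynomial p."""
    dp = p.deriv()
    return alpha[0] * p(a) + beta[0] * dp(a), alpha[1] * p(b) + beta[1] * dp(b)

a, b = 0.0, 1.0
alpha, beta = (1.0, 1.0), (-1.0, 1.0)      # u(0) - u'(0) = 0 and u(1) + u'(1) = 0
for k in range(6):
    r_left, r_right = robin_residuals(rmlp(k, a, b, alpha, beta), a, b, alpha, beta)
    print(k, abs(r_left) < 1e-10, abs(r_right) < 1e-10)
```

For this choice, \(k=0\) gives \(\mathbb {A}_{0}=0\) and \(\mathbb {B}_{0}=-1/7\), i.e. \(\varphi _{0}(x)=\tfrac{6}{7}(1+x-x^2)\), which indeed satisfies \(\varphi _0(0)-\varphi _0'(0)=0\) and \(\varphi _0(1)+\varphi _0'(1)=0\). When the left coefficients have the form \(\alpha u(a)-\beta u'(a)\) and the right ones \(\alpha u(b)+\beta u'(b)\) with \(\alpha ,\beta >0\), the \(2\times 2\) determinant never vanishes, so the construction is well defined for every \(k\).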

4 Operational Matrix of Derivatives of RMLP

In this section, we develop operational matrices of derivatives for \(\varphi _{j,n}(x),\,n=0,1,2,\dots\); these are new Galerkin operational matrices of derivatives. We first prove the following theorem:

Theorem 4.1

For all \(n\ge 0\), the derivatives \(D\varphi _{j,n}(x),\,j=1,2,\) have the following expansions:

and

where \(a_{0}(n),\,a_{1}(n),\,\dots ,a_{n-1}(n)\) satisfy the system

while the coefficients \(\tilde{a}_{0}(n),\,\tilde{a}_{1}(n),\,\dots ,\tilde{a}_{n-1}(n)\) satisfy the system

where \(\textbf{a}_{n}=[a_{0}(n),\,a_{1}(n),\,\dots ,a_{n-1}(n)]^{T}\), \(\tilde{\textbf{a}}_{n}=[\tilde{a}_{0}(n),\,\tilde{a}_{1}(n),\,\dots ,\,\tilde{a}_{n-1}(n)]^{T}\), \(\textbf{G}_{n}=(g_{i,j}(n))_{0\le i,j\le n-1}\), \(\tilde{\textbf{G}}_{n}=(\tilde{g}_{i,j}(n))_{0\le i,j\le n-1}\), \(\textbf{B}_{n}=[b_{0}(n),\,b_{1}(n),\,\dots ,b_{n-1}(n)]^{T}\) and \(\tilde{\textbf{B}}_{n}=[\tilde{b}_{0}(n),\,\tilde{b}_{1}(n),\,\dots ,\tilde{b}_{n-1}(n)]^{T}\). The elements of \(\textbf{G}_{n}\), \(\tilde{\textbf{G}}_{n}\), \(\textbf{B}_{n}\) and \(\tilde{\textbf{B}}_{n}\) are defined as follows:

and

In addition, \(e_{i}(n)\) and \(\tilde{e}_{i}(n)\), \(i=0,1,\) have the forms:

Proof

It is easy to see that \(e_{0}(n)\) and \(e_{1}(n)\) take the forms in (4.5). This enables us to write (4.1) in the form:

Using the Maclaurin series of \(\varphi _{1,j}(x)\) and \(D\varphi _{1,n}(x)\), Eq. (4.6) takes the form:

This gives the following triangular system of \(n\) equations in the unknowns \(a_{j}(n),\,j=0,1,\dots ,n-1\),

which can be written in the matrix form (4.3). Similarly, one can show that \(D\varphi _{2,n}(x)\) has the expansion (4.2), where \(\tilde{e}_{0}(n)\) and \(\tilde{e}_{1}(n)\) take the forms in (4.5) and \(\tilde{a}_{j}(n),\,j=0,1,\dots ,n-1,\) satisfy the system (4.4). This completes the proof of Theorem 4.1. \(\square\)
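The expansions of Theorem 4.1 can be assembled numerically into a first-derivative matrix. The sketch below assumes the ansatz \(\varphi _{k}=L^*_{k}+\mathbb {A}_{k}L^*_{k+1}+\mathbb {B}_{k}L^*_{k+2}\) with the sign convention \(\alpha _{1}u(a)+\beta _{1}u'(a)=0\), \(\alpha _{2}u(b)+\beta _{2}u'(b)=0\), and assumes that the correction term in (4.1) is the linear polynomial \(e_{0}(n)+e_{1}(n)x\). Instead of the closed-form system (4.3), it determines \(a_{j}(n)\), \(e_{0}(n)\), \(e_{1}(n)\) by matching power coefficients. It then checks \(\frac{d}{dx}\varvec{\Phi }(x)=\textbf{H}\varvec{\Phi }(x)+\textbf{E}[1,x]^T\) and the second-derivative identity \(\frac{d^2}{dx^2}\varvec{\Phi }=\textbf{H}^2\varvec{\Phi }+\textbf{H}\textbf{E}[1,x]^T+\textbf{E}[0,1]^T\) at sample points. Our \(\textbf{H}\) and \(\textbf{E}\) appear to play the roles of \(\varvec{H}_{1}\) and \(\varvec{\epsilon }_{1}(x)\) in Corollary 4.1, but this correspondence is our reading, since the corollary's displays are not reproduced here.

```python
import numpy as np
from numpy.polynomial import Legendre, Polynomial

def rmlp_basis(N, a, b, alpha, beta):
    """Hypothetical RMLP basis phi_0..phi_N, phi_k = L*_k + A_k L*_{k+1} + B_k L*_{k+2},
    with alpha[0] u(a) + beta[0] u'(a) = 0 and alpha[1] u(b) + beta[1] u'(b) = 0."""
    L, d = b - a, (lambda m: m * m + m)
    left = lambda m: (-1) ** m * (alpha[0] - beta[0] * d(m) / L)
    right = lambda m: alpha[1] + beta[1] * d(m) / L
    basis = []
    for k in range(N + 1):
        M = [[left(k + 1), left(k + 2)], [right(k + 1), right(k + 2)]]
        A, B = np.linalg.solve(M, [-left(k), -right(k)])
        basis.append(Legendre([0.0] * k + [1.0, A, B], domain=[a, b]))
    return basis

def power_coef(p, size):
    """Power-basis coefficients of p, zero-padded to the requested length."""
    c = p.convert(kind=Polynomial).coef
    return np.pad(c, (0, size - len(c)))

def derivative_matrices(basis):
    """H (strictly lower triangular) and E with phi_n' = sum_{j<n} H[n, j] phi_j + E[n, 0] + E[n, 1] x."""
    N = len(basis) - 1
    H, E = np.zeros((N + 1, N + 1)), np.zeros((N + 1, 2))
    for n in range(N + 1):
        size = n + 2                                   # phi_n' has degree n + 1
        cols = [power_coef(basis[j], size) for j in range(n)]
        cols += [np.eye(size)[0], np.eye(size)[1]]     # the monomials 1 and x
        sol = np.linalg.solve(np.column_stack(cols), power_coef(basis[n].deriv(), size))
        H[n, :n], E[n] = sol[:n], sol[n:]
    return H, E

a, b = 0.0, 1.0
basis = rmlp_basis(5, a, b, (1.0, 1.0), (-1.0, 1.0))  # u(0) - u'(0) = 0, u(1) + u'(1) = 0
H, E = derivative_matrices(basis)
x = np.linspace(a, b, 9)
Phi = np.array([p(x) for p in basis])                  # row n holds phi_n at the points x
ones_x = np.vstack([np.ones_like(x), x])               # rows: 1 and x
zeros_ones = np.vstack([np.zeros_like(x), np.ones_like(x)])  # derivative of [1, x]
dPhi = np.array([p.deriv()(x) for p in basis])
d2Phi = np.array([p.deriv(2)(x) for p in basis])
print(np.allclose(dPhi, H @ Phi + E @ ones_x))
print(np.allclose(d2Phi, H @ H @ Phi + H @ E @ ones_x + E @ zeros_ones))
```

The linear system for each \(n\) is triangular in degree because \(\varphi _{j}\) has exact degree \(j+2\) (this requires \(\mathbb {B}_{j}\ne 0\)). Higher derivatives follow by repeatedly applying the first-derivative identity, as the second check illustrates.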

The primary objective of this section is to introduce the operational matrices of derivatives of the vectors

which are given in the following corollary:

Corollary 4.1

The mth derivatives of the vectors \(\varvec{\Phi }_{j}(x),\,j=1,2,\) have the forms:

where \({\varvec{\epsilon }_{1}}(x)=\left[ \epsilon _{0}(x),\epsilon _{1}(x),\dots ,\epsilon _{N}(x)\right] ^T\), \({\varvec{\epsilon }_{2}}(x)=\left[ \tilde{\epsilon }_{0}(x),\tilde{\epsilon }_{1}(x),\dots ,\tilde{\epsilon }_{N}(x)\right] ^T\), \({\varvec{H}}_{1}=\big (h_{i,j}\big )_{0\le i,j\le N}\) and \({\varvec{H}}_{2}=\big (\tilde{h}_{i,j}\big )_{0\le i,j\le N}\),

For instance, if \(N=5, a = 0,b = 1,\,\alpha _{i1}=\beta _{i1}=1,\,\alpha _{i2}=1,\,\beta _{i2}=-1,\,i=1,2\), we get

and

5 A Collocation Algorithm for Handling the System (1.1) Subject to RBCs (1.2)

In this section, we describe how to obtain numerical solutions of BVP (1.1)–(1.2) using the operational matrices of Corollary 4.1.

5.1 Homogeneous Boundary Conditions

In this subsection, we consider the homogeneous case of BCs (1.2), i.e., \(\gamma _{i,j}=0,\,i,j=1,2\). In this case, we approximate \(u_{1}(x)\) and \(u_{2}(x)\) as follows:

and

Corollary 4.1 allows us to express the derivatives \(u^{(m)}_{j,N}(x)\), \(m,j=1,2,\) as follows:

Using (5.3), we define the residuals of the two equations in (1.1) as follows:

To solve the system (1.1)–(1.2) (with \(\gamma _{i,j}=0,\,i,j=1,2\)) numerically, we propose a spectral technique, the Robin shifted Legendre collocation operational matrix method (RSLCOMM). The collocation points \(x_{i},\,i=0,1,...,N,\) are chosen either as the \(N+1\) zeros of \(L^{*}_{N+1}(x;a,b)\) or as the equally spaced points \(x_{i}=\dfrac{i+1}{N+2}\) (for the interval \([0,1]\)), so that

Solving the system (5.5) then gives the coefficients \(c_i\) and \(\tilde{c}_i\,(i=0,1,...,N)\). This algebraic system is linear when \(F_{1}\) and \(F_{2}\) are linear and nonlinear otherwise.
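The following Python sketch illustrates the collocation step on a hypothetical linear test system of our own choosing (not one of the paper's examples): \(-\varepsilon _{1}u_{1}''-u_{1}'+2u_{1}-u_{2}=f_{1}\), \(-\varepsilon _{2}u_{2}''-u_{2}'-u_{1}+2u_{2}=f_{2}\) on \([0,1]\). It assumes the RMLP ansatz \(\varphi _{j,k}=L^*_{k}+\mathbb {A}_{j,k}L^*_{k+1}+\mathbb {B}_{j,k}L^*_{k+2}\) with the sign convention \(\alpha u(a)+\beta u'(a)=0\), \(\alpha u(b)+\beta u'(b)=0\) (both assumptions). It uses the equally spaced points \(x_i=(i+1)/(N+2)\). For brevity, it differentiates the basis directly with NumPy rather than through the operational matrices of Corollary 4.1. The exact solutions are manufactured inside the trial spaces, so the computed errors should be at rounding level.

```python
import numpy as np
from numpy.polynomial import Legendre

def rmlp_basis(N, a, b, alpha, beta):
    """Hypothetical RMLP basis phi_k = L*_k + A_k L*_{k+1} + B_k L*_{k+2}, k = 0..N, with
    alpha[0] u(a) + beta[0] u'(a) = 0 and alpha[1] u(b) + beta[1] u'(b) = 0."""
    L, d = b - a, (lambda m: m * m + m)
    left = lambda m: (-1) ** m * (alpha[0] - beta[0] * d(m) / L)
    right = lambda m: alpha[1] + beta[1] * d(m) / L
    basis = []
    for k in range(N + 1):
        M = [[left(k + 1), left(k + 2)], [right(k + 1), right(k + 2)]]
        A, B = np.linalg.solve(M, [-left(k), -right(k)])
        basis.append(Legendre([0.0] * k + [1.0, A, B], domain=[a, b]))
    return basis

def values(basis, x, m=0):
    """Matrix whose column k holds the m-th derivative of phi_k at the points x."""
    return np.column_stack([p.deriv(m)(x) if m else p(x) for p in basis])

def collocate(N, a, b, eps, bc1, bc2, f1, f2):
    """Collocation for the hypothetical linear test system on [a, b]
         -eps[0] u1'' - u1' + 2 u1 - u2 = f1,
         -eps[1] u2'' - u2' - u1 + 2 u2 = f2,
    with u_j = sum_k c_k phi_{j,k} built from the homogeneous RBCs bc_j = (alpha, beta)."""
    P1, P2 = rmlp_basis(N, a, b, *bc1), rmlp_basis(N, a, b, *bc2)
    x = a + (b - a) * (np.arange(N + 1) + 1) / (N + 2)   # x_i = (i + 1)/(N + 2) mapped to [a, b]
    V1, D1, DD1 = values(P1, x), values(P1, x, 1), values(P1, x, 2)
    V2, D2, DD2 = values(P2, x), values(P2, x, 1), values(P2, x, 2)
    A = np.block([[-eps[0] * DD1 - D1 + 2 * V1, -V2],
                  [-V1, -eps[1] * DD2 - D2 + 2 * V2]])
    c = np.linalg.solve(A, np.concatenate([f1(x), f2(x)]))
    u1 = sum(float(ck) * p for ck, p in zip(c[:N + 1], P1))
    u2 = sum(float(ck) * p for ck, p in zip(c[N + 1:], P2))
    return u1, u2

# Manufactured test: exact solutions lie in the trial spaces, so they should be recovered.
a, b, N, eps = 0.0, 1.0, 6, (1e-8, 1e-5)
bc1 = ((1.0, 1.0), (-1.0, 1.0))    # u1(0) - u1'(0) = 0,   u1(1) + u1'(1) = 0
bc2 = ((2.0, 1.0), (-1.0, 3.0))    # 2 u2(0) - u2'(0) = 0, u2(1) + 3 u2'(1) = 0
P1, P2 = rmlp_basis(N, a, b, *bc1), rmlp_basis(N, a, b, *bc2)
u1_ex = P1[0] - 0.5 * P1[2] + 0.25 * P1[4]
u2_ex = 2.0 * P2[1] + 0.1 * P2[3]
f1 = lambda x: -eps[0] * u1_ex.deriv(2)(x) - u1_ex.deriv()(x) + 2 * u1_ex(x) - u2_ex(x)
f2 = lambda x: -eps[1] * u2_ex.deriv(2)(x) - u2_ex.deriv()(x) - u1_ex(x) + 2 * u2_ex(x)
u1, u2 = collocate(N, a, b, eps, bc1, bc2, f1, f2)
xs = np.linspace(a, b, 201)
err1 = np.max(np.abs(u1(xs) - u1_ex(xs)))
err2 = np.max(np.abs(u2(xs) - u2_ex(xs)))
print(err1, err2)
```

Because each basis function already satisfies its RBCs, no boundary rows are needed: the \(2(N+1)\) collocation equations alone determine the \(2(N+1)\) coefficients. For nonlinear \(F_j\), the same residuals would instead be passed to an iterative solver.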

5.2 Nonhomogeneous Boundary Conditions

A crucial step in the construction of the suggested method is to transform system (1.1) with the nonhomogeneous RBCs (1.2) into a problem with homogeneous conditions. To this end, we use the following transformation:

where

As a result, it is sufficient to solve the system

subject to the homogeneous RBCs:
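One common way to realize such a transformation, which we assume here because the displays above are not reproduced, is \(u_{j}(x)=v_{j}(x)+\ell _{j}(x)\) with a linear lift \(\ell _{j}(x)=p_{0}+p_{1}x\) chosen to satisfy the nonhomogeneous RBCs, so that \(v_{j}\) satisfies the homogeneous ones. The Python sketch below (hypothetical helper names; sign convention \(\alpha _{1}u(a)+\beta _{1}u'(a)=\gamma _{1}\), \(\alpha _{2}u(b)+\beta _{2}u'(b)=\gamma _{2}\) assumed) computes such a lift with Cramer's rule.

```python
def linear_lift(a, b, alpha, beta, gamma):
    """Hypothetical helper: coefficients (p0, p1) of l(x) = p0 + p1 x with
    alpha[0] l(a) + beta[0] l'(a) = gamma[0] and alpha[1] l(b) + beta[1] l'(b) = gamma[1]."""
    # Rows of the 2x2 system M @ (p0, p1) = gamma, using l'(x) = p1
    m00, m01 = alpha[0], alpha[0] * a + beta[0]
    m10, m11 = alpha[1], alpha[1] * b + beta[1]
    det = m00 * m11 - m01 * m10
    if abs(det) < 1e-14:
        raise ValueError("no linear lift exists for these Robin coefficients")
    p0 = (gamma[0] * m11 - m01 * gamma[1]) / det      # Cramer's rule
    p1 = (m00 * gamma[1] - gamma[0] * m10) / det
    return p0, p1

def robin(l0, l1, x0, alpha_i, beta_i):
    """Robin operator alpha_i * l(x0) + beta_i * l'(x0) for l(x) = l0 + l1 x."""
    return alpha_i * (l0 + l1 * x0) + beta_i * l1

# Example on [0, 1]: u(0) - u'(0) = 2 and u(1) + u'(1) = 3
p0, p1 = linear_lift(0.0, 1.0, (1.0, 1.0), (-1.0, 1.0), (2.0, 3.0))
print(p0, p1)                                                        # approximately 7/3 and 1/3
print(robin(p0, p1, 0.0, 1.0, -1.0), robin(p0, p1, 1.0, 1.0, 1.0))   # approximately 2 and 3
```

After the substitution, the terms produced by \(\ell _{j}\) are moved to the right-hand side of the equations for \(v_{j}\). A linear lift fails when the determinant vanishes (for example, pure Neumann conditions with \(\alpha _{1}=\alpha _{2}=0\)); a higher-degree lift would then be needed.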

6 Convergence and Error Estimates for RSLCOMM

In this section, we analyze the convergence and error estimates of the proposed technique. For a nonnegative integer \(N\), consider the two spaces \(S_{j,N},\,j=1,2,\) defined by

Furthermore, we denote the errors between the functions \(u_{j}(x)\) and their approximations \(u_{j,N}(x)\) by

We measure the errors of the suggested method in the \(L_{2}\) norm,

and in the \(L_{\infty }\) norm,

The proof of the following theorem is similar to the proofs of related theorems in [5, 15,16,17,18,19, 27].

Theorem 6.1

Assume that \(u_{j}^{(i)}(x)\in C[a,b],\,i=0,1,...,N+1,\) with \(|u_{j}^{(N+1)}(x)|\le M_j, \forall x\in [a,b],\,j=1,2\). Assume further that \(u_{j,N}(x),\,j=1,2,\) given by the expansions (5.1) and (5.2), respectively, are the best approximations of \(u_{j}(x)\) from \(S_{j,N}, j=1,2\). Then the following estimates hold:

and

Proof

The proof of this theorem is similar to that of [5, Theorem 6.1]. \(\square\)

The following corollary shows that the errors decay rapidly as \(N\) increases.

Corollary 6.1

For all \(N\ge 1\), the following two estimates hold:

and

Proof

The proof of this corollary is similar to that of [5, Corollary 6.1]. \(\square\)
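The \(L_{2}\) and \(L_{\infty }\) errors used in this section can be approximated numerically. Assuming the usual definitions \(\Vert e\Vert _{2}=\big (\int _a^b e^{2}(x)\,dx\big )^{1/2}\) and \(\Vert e\Vert _{\infty }=\max _{x\in [a,b]}|e(x)|\) with \(e=u_{j}-u_{j,N}\), the Python sketch below (function names are ours) uses Gauss–Legendre quadrature for the \(L_{2}\) norm and dense sampling for the \(L_{\infty }\) norm. Sampling only gives a lower bound on the true maximum. The demonstration uses truncated Taylor polynomials of \(e^x\), not RSLCOMM, to show the rapid decay typical of smooth functions.

```python
import math
import numpy as np

def l2_error(u, u_N, a, b, n_quad=64):
    """||u - u_N||_2 on [a, b], computed with Gauss-Legendre quadrature."""
    t, w = np.polynomial.legendre.leggauss(n_quad)
    x = 0.5 * (b - a) * t + 0.5 * (a + b)        # nodes mapped to [a, b]
    e = u(x) - u_N(x)
    return math.sqrt(0.5 * (b - a) * np.sum(w * e**2))

def linf_error(u, u_N, a, b, n_samples=2001):
    """max |u - u_N| on [a, b], approximated on a uniform grid of sample points."""
    x = np.linspace(a, b, n_samples)
    return float(np.max(np.abs(u(x) - u_N(x))))

# Illustration only (not RSLCOMM): truncated Taylor polynomials of exp on [0, 1].
for N in (2, 4, 8):
    taylor = lambda x, N=N: sum(x**k / math.factorial(k) for k in range(N + 1))
    print(N, l2_error(np.exp, taylor, 0.0, 1.0), linf_error(np.exp, taylor, 0.0, 1.0))
```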

7 Computational Simulations

In this section, we demonstrate the flexibility and accuracy of the algorithm proposed in Sect. 5. To evaluate the precision of RSLCOMM, we define the following error:

and the order of convergence \(R_{N}\) by

By solving three numerical problems, we show that RSLCOMM yields reliable results. Moreover, when the given system has polynomial solutions \(u_{j}(x),\,j=1,2,\) of degree less than or equal to \(N\), these solutions take the forms:

In addition, as Tables 1 and 3 show, RSLCOMM produces errors of order \(10^{-15}\) for \(N = 10,11\). Table 2 compares our technique with the approaches of [21] and shows that RSLCOMM is more accurate than these approaches.

Moreover, Figs. 3 and 4 show that the exact and numerical solutions of Problem 7.2 agree closely for the two cases \(\varepsilon _{1}=10^{-8}\), \(\varepsilon _{2}=10^{-5}\) and \(\varepsilon _{1}=10^{-8}\), \(\varepsilon _{2}=10^{-10}\); the corresponding errors are shown in Figs. 1 and 2. Figures 5a and 6a show the absolute error functions \(E_{j,N}(x),\,j=1,2,\) of Problem 7.3 for different values of \(N\); they highlight the dependence of the error on \(N\) and confirm the convergent behavior of the numerical solutions computed by RSLCOMM. Furthermore, Figs. 5b and 6b illustrate the stability of the solutions.

Example 7.1

Consider the following system as a linear test problem to illustrate the theoretical results for RSLCOMM:

subject to the set of four RBCs

where the exact solutions are \(u_{1}(x)=U^{*}_{3}(x;0,1)\) and \(u_{2}(x)=U^{*}_{2}(x;0,1)\). In this problem, the basis polynomials used have the forms

Applying RSLCOMM yields the exact solution for \(N\ge 1\):

Errors \(E_{1,11}(x)\) and \(E_{2,11}(x)\) using \(\varepsilon _{1}=10^{-8}\) and \(\varepsilon _{2}=10^{-5}\) for Problem 7.2

Errors \(E_{1,11}(x)\) and \(E_{2,11}(x)\) using \(\varepsilon _{1}=10^{-8}\) and \(\varepsilon _{2}=10^{-10}\) for Problem 7.2

Exact and Approximate solutions for Problem 7.2 using \(N=11\), \(\varepsilon _{1}=10^{-8}\) and \(\varepsilon _{2}=10^{-5}\)

Exact and Approximate solutions for Problem 7.2 using \(N=11\), \(\varepsilon _{1}=10^{-8}\) and \(\varepsilon _{2}=10^{-10}\)

Example 7.2

Consider the singularly perturbed convection–diffusion system:

subject to the set of four RBCs

where \(f_{1}(x)\) and \(f_{2}(x)\) are chosen such that the exact solutions are

In this problem, the computed basis polynomials take the forms:

where

Applying RSLCOMM gives the following numerical solutions \(u_{1,11}(x)\) and \(u_{2,11}(x)\) for \(\varepsilon _{1}=10^{-8}\) and \(\varepsilon _{2}=10^{-k}\), \(k=5,10\):

and

respectively. As shown in Table 1, these solutions agree with the exact solutions to a precision of order \(10^{-15}\).

Example 7.3

Consider the singularly perturbed convection–diffusion system:

subject to the set of four RBCs

where \(f_{1}(x)\) and \(f_{2}(x)\) are chosen such that the exact solutions are

In this problem, the computed basis polynomials have the forms (7.10). Applying RSLCOMM gives the following numerical solutions \(u_{1,9}(x)\) and \(u_{2,9}(x)\) for \(\varepsilon _{1}=10^{-10}\) and \(\varepsilon _{2}=10^{-4}\), and \(u_{1,10}(x)\) and \(u_{2,10}(x)\) for \(\varepsilon _{1}=10^{-10}\) and \(\varepsilon _{2}=10^{-8}\):

and

respectively. As shown in Table 3, these solutions agree with the exact solutions to a precision of order \(10^{-15}\).

Error results at \(\varepsilon _{1}=10^{-10}\), \(\varepsilon _{2}=10^{-8}\) for Problem 7.3

Error results at \(\varepsilon _{1}=10^{-10}\), \(\varepsilon _{2}=10^{-8}\) for Problem 7.3

8 Conclusion

In this article, we constructed two families of RMLP that satisfy the four homogeneous Robin boundary conditions (3.1). Combining these polynomials with the spectral collocation technique yields approximate solutions of the system (1.1)–(1.2). The proposed technique, RSLCOMM, was tested on three problems, which confirmed its high accuracy and efficiency and demonstrated its applicability and utility. Theoretical convergence and error analysis were also studied. The theoretical insights offered in this paper may be applied to a variety of systems of ordinary, partial, and fractional differential equations. Future studies could explore the connection between this research and inverse scattering problems, drawing on ideas from [12, 28, 29], to investigate possible extensions of the method to such problems.

Availability of Data and Materials

Not Applicable.

References

Abd-Elhameed, W.M., Ahmed, H.M.: Tau and Galerkin operational matrices of derivatives for treating singular and Emden–Fowler third-order-type equations. Int. J. Mod. Phys. C 33(05), 2250061 (2022). https://doi.org/10.1142/S0129183122500619

Abd-Elhameed, W.M., Youssri, Y.H.: Spectral solutions for fractional differential equations via a novel Lucas operational matrix of fractional derivatives. Rom. J. Phys. 61(5–6), 795–813 (2016)

Abd-Elhameed, W.M., Ahmed, H.M., Youssri, Y.H.: A new generalized Jacobi Galerkin operational matrix of derivatives: two algorithms for solving fourth-order boundary value problems. Adv. Differ. Equ. 2016(1), 1–16 (2016). https://doi.org/10.1186/s13662-016-0753-2

Abd-Elhameed, W.M., Al-Harbi, M.S., Amin, A.K., Ahmed, H.M.: Spectral treatment of high-order Emden–Fowler equations based on modified Chebyshev polynomials. Axioms 12(2), 99 (2023). https://doi.org/10.3390/axioms12020099

Ahmed, H.M.: Highly accurate method for boundary value problems with Robin boundary conditions. J. Nonlinear Math. Phys., 1–25 (2023). https://doi.org/10.1007/s44198-023-00124-6

Ansari, A.R., Hegarty, A.F.: Numerical solution of a convection diffusion problem with Robin boundary conditions. J. Comput. Appl. Math. 156(1), 221–238 (2003). https://doi.org/10.1016/S0377-0427(02)00913-5

Atangana, A.: Fractional operators with constant and variable order with application to geo-hydrology. Academic Press (2017)

Balaji, S., Hariharan, G.: An efficient operational matrix method for the numerical solutions of the fractional Bagley–Torvik equation using Wavelets. J. Math. Chem. 57(8), 1885–1901 (2019). https://doi.org/10.1007/s10910-019-01047-8

Basha, P.M., Shanthi, V.: A numerical method for singularly perturbed second order coupled system of convection–diffusion Robin type boundary value problems with discontinuous source term. Int. J. Appl. Comput. Math. 1, 381–397 (2015). https://doi.org/10.1007/s40819-014-0021-7

Bellew, S., O’Riordan, E.: A parameter robust numerical method for a system of two singularly perturbed convection–diffusion equations. Appl. Numer. Math. 51(2–3), 171–186 (2004)

Cen, Z.: Parameter-uniform finite difference scheme for a system of coupled singularly perturbed convection–diffusion equations. Int. J. Comput. Math. 82(2), 177–192 (2005)

Gao, Y., Liu, H., Wang, X., Zhang, K.: On an artificial neural network for inverse scattering problems. J. Comput. Phys. 448, 110771 (2022)

Geetha, N., Tamilselvan, A.: Parameter uniform numerical method for a weakly coupled system of second order singularly perturbed turning point problem with Robin boundary conditions. Procedia Eng. 127, 670–677 (2015). https://doi.org/10.1016/j.proeng.2015.11.363

Hussaini, M.Y., Zang, T.A.: Spectral methods in fluid dynamics. Annu. Rev. Fluid Mech. 19(1), 339–367 (1987). https://doi.org/10.1146/annurev.fl.19.010187.002011

Izadi, M.: A comparative study of two Legendre-collocation schemes applied to fractional logistic equation. Int. J. Appl. Comput. Math. 6, 1–18 (2020). https://doi.org/10.1007/s40819-020-00823-4

Izadi, M., Cattani, C.: Generalized Bessel polynomial for multi-order fractional differential equations. Symmetry 12(8), 1260 (2020). https://doi.org/10.3390/sym12081260

Izadi, M., Samei, M.E.: Time accurate solution to Benjamin–Bona–Mahony–Burgers equation via Taylor–Boubaker series scheme. Bound. Value Probl. 2022(1), 17 (2022). https://doi.org/10.1186/s13661-022-01598-x

Izadi, M., Srivastava, H.M.: Fractional clique collocation technique for numerical simulations of fractional-order Brusselator chemical model. Axioms 11(11), 654 (2022). https://doi.org/10.3390/axioms11110654

Kazem, S., Abbasbandy, S., Kumar, S.: Fractional-order Legendre functions for solving fractional-order differential equations. Appl. Math. Model. 37(7), 5498–5510 (2013). https://doi.org/10.1016/j.apm.2012.10.026

Lawley, S.D., Keener, J.P.: A new derivation of Robin boundary conditions through homogenization of a stochastically switching boundary. SIAM J. Appl. Dyn. Syst. 14(4), 1845–1867 (2015). https://doi.org/10.1137/15M1015182

Liu, L.-B., Liang, Y., Bao, X., Fang, H.: An efficient adaptive grid method for a system of singularly perturbed convection-diffusion problems with Robin boundary conditions. Adv. Differ. Equ. 2021(1), 1–13 (2021). https://doi.org/10.1186/s13662-020-03166-y

Loh, J.R., Phang, C.: Numerical solution of Fredholm fractional integro-differential equation with Right-sided Caputo’s derivative using Bernoulli polynomials operational matrix of fractional derivative. Mediterr. J. Math. 16(2), 28 (2019). https://doi.org/10.1007/s00009-019-1300-7

Luke, Y.L.: The Special Functions and Their Approximations, vol. 2. Academic Press (1969)

Napoli, A., Abd-Elhameed, W.M.: A new collocation algorithm for solving even-order boundary value problems via a novel matrix method. Mediterr. J. Math. 14(4), 170 (2017). https://doi.org/10.1007/s00009-017-0973-z

O’Riordan, E., Stynes, J., Stynes, M.: A parameter-uniform finite difference method for a coupled system of convection–diffusion two-point boundary value problems. Numer. Math. Theor. Meth. Appl 1(2), 176–197 (2008)

Priyadharshini, R., Ramanujam, N.: Uniformly-convergent numerical methods for a system of coupled singularly perturbed convection–diffusion equations with mixed type boundary conditions. Math. Model. Anal. 18(5), 577–598 (2013). https://doi.org/10.3846/13926292.2013.851629

Srivastava, H.M., Adel, W., Izadi, M., El-Sayed, A.A.: Solving some Physics problems involving fractional-order differential equations with the Morgan–Voyce polynomials. Fractal Fract. 7(4), 301 (2023). https://doi.org/10.3390/fractalfract7040301

Yin, Y., Yin, W., Meng, P., Liu, H.: On a hybrid approach for recovering multiple obstacles. Commun. Comput. Phys. 31(3), 869–892 (2022)

Zhang, P., Meng, P., Yin, W., Liu, H.: A neural network method for time-dependent inverse source problem with limited-aperture data. J. Comput. Appl. Math. 421, 114842 (2023)

Acknowledgements

The author would like to thank the editor for his assistance, as well as the referees for the comments and suggestions that have improved the paper in its current form.

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB). No funding was received to assist with the preparation of this manuscript.

Author information


Contributions

Not Applicable.


Ethics declarations

Conflict of interest

The author declares no competing interests.

Ethical Approval

I hereby confirm that this article is not under consideration by other journals.

Consent for Publication

Not Applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ahmed, H.M. Highly Accurate Method for a Singularly Perturbed Coupled System of Convection–Diffusion Equations with Robin Boundary Conditions. J Nonlinear Math Phys 31, 17 (2024). https://doi.org/10.1007/s44198-024-00182-4


DOI: https://doi.org/10.1007/s44198-024-00182-4

Keywords

- Legendre polynomials

- Generalized hypergeometric functions

- Collocation method

- Boundary value problems

- Robin boundary conditions