Abstract

Convolutional neural network (CNN) has shown dissuasive accomplishment on different areas especially Object Detection, Segmentation, Reconstruction (2D and 3D), Information Retrieval, Medical Image Registration, Multi-lingual translation, Local language Processing, Anomaly Detection on video and Speech Recognition. CNN is a special type of Neural Network, which has compelling and effective learning ability to learn features at several steps during augmentation of the data. Recently, different interesting and inspiring ideas of Deep Learning (DL) such as different activation functions, hyperparameter optimization, regularization, momentum and loss functions has improved the performance, operation and execution of CNN Different internal architecture innovation of CNN and different representational style of CNN has significantly improved the performance. This survey focuses on internal taxonomy of deep learning, different models of vonvolutional neural network, especially depth and width of models and in addition CNN components, applications and current challenges of deep learning.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

For many disorders, medical imaging is a crucial screening method. In 1895, Roentgen revealed that X-rays could be utilized to examine into the internal organs without causing any harm. Shortly after, X-ray radiology evolved into the earliest method for diagnosing diseases. Since then, a variety of imaging techniques have been created, with Computed Tomography (CT) scanning, Positron Emission Tomography (PET), ultrasonography and Magnetic Resonance Imaging (MRI) being some of the most widely utilized. Additionally, increasingly intricate scanning techniques have been created. At numerous points in the healthcare system, encompassing diagnosis, characterization, grading, clinical outcome evaluation, tracking of tumor recurrence, as well as directing intervention treatments, surgeries and radiosurgery, image information is vital to judgment and finalizing decision.

Minimal two-dimensional (2D) imagegraphs are used for a specific individual instance, but several are used for 3D imaging and vast numbers are used for 4D interactive imaging. The quantity of image data that has to be evaluated is significantly increased by the use of multi-modality imaging. It is challenging for medical practitioners and radiologists and doctors to sustain operational efficiencies while employing all the diagnostic data at their disposal to increase reliability and individual treatment as a result of the rising burden. The promise and necessity of creating computerized tools to aid medical practitioners and radiologists in image interpretation and detection have been recognized as a significant field of study and advancement in medical imaging in light of current developments in computer vision and computational approaches.

Beginning in the 1960 s, attempts were made to use machines to autonomously interpret healthcare images [1,2,3]. Numerous research showed that using a machine to analyze clinical data was feasible, but the research received little focus, most likely due to the lack of accessibility to slightly elevated digitized visual information and computing power. In the 1980 s, [4] at the University of Chicago’s Kurt Rossmann Laboratory started systematically developing machine learning and image analysis methods for medical data with the intention of creating Computer-Aided Diagnosis (CAD) as a better solution to support radiologists in visual explanation [5]. In order to recognize microcalcifications on mammography, [6] created a CAD platform and carried out the initial spectator ability research that showed how well CAD improved breast radiologists’ capability to recognize microcalcifications [7]. In 1998, the Food and Drug Administration (FDA) authorized the use of the initial professional CAD system as a backup for diagnostic radiography. Over the recent decades, one of the main areas of study and innovation in diagnostic imaging has been CAD and computer-assisted image recognition.

Multiple medical disorders in both youngsters and people can now be diagnosed and treated more accurately thanks to medical imaging. Medical imaging processes come in a variety of forms, or modalities, and each one employs a unique set of tools and methods. Ionizing radiation is used by radiography, particularly Computed Tomography (CT), mammography and fluoroscopy to provide images of the human body. The risk of acquiring disease throughout the course of one’s lifetime may increase if an individual is exposed to ionising radiation, a type of radiation with enough intensity to possibly harm DNA.

The remaining paper is organized as follows. Section 1 describes the history of artificial intelligence and usage in CAD. Section 2 explain the medical imaging modalities. In Sect. 3, we explain the development of Computer Aided Diagnosis (CAD) and usage and Sect. 4 elaborate the selection criteria of Literature review. In Sect. 5, we explain the deep learning in depth and further we identify the major CNN model in Sect. 6. In Sect. 7, we explain the major development in medical image analyses using deep learning and Sect. 8 elaborate the anatomical structure of medical images. Further, in Sect. 9, we explain the deep learning model development for medical image analysis and Sect. 10 depict the basic tool used in deep learning. Section 11 summarized the extensive survey.

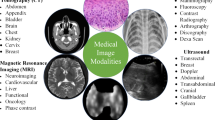

2 Medical Imaging Modalities

Each imaging technique in the healthcare profession has particular data and features. As illustrated in Table 1 and Fig. 1, the various electromagnetic (EM) scanning techniques utilized for monitoring and diagnosing various disorders of the individual anatomy span the whole spectrum. Each scanning technique uses a distinct frequency and wavelength and exhibits various qualities [8]. EM waves are dispersed, mirrored, or received by an item whenever they come into contact with it. The magnetic field produced by Magnetic Resonance Imaging causes the body’s natural protons to coordinate. When a radio-frequency signal is delivered to the individual, protons are triggered and begin to swirl at an angle to the magnetic force. The greater region of 3 × 1016 to 3 × 1019 Hz of Computed Tomography and X-ray imaging is extremely radiation and detrimental to public health. Gamma rays are used in nuclear imaging techniques like Positron Emission Tomography and Single Photon Emission Computed Tomography to diagnose biological functions in a person’s tissues. The frequency of gamma rays is larger than 1019 Hz, and their wavelength is fewer than 10 picometers. Magnetic Resonance Image and Ultrasound are governed by the non-ionization concept, whereas X-rays, Single Photon Emission Computed Tomography, Computed Tomography, and Positron Emission Tomography are dependent on the ionization theory [9].

3 Computer Aided Diagnosis

Despite an increase in CAD development, relatively several CAD solutions are commonly employed in clinical settings. One of the main causes might be that CAD solutions created using traditional machine learning techniques may not have attained the excellent productivity needed to satisfy radiologist and medical practitioners demands for increasing both diagnosis reliability and operational effectiveness. Given the development of deep learning in numerous computer vision and Artificial Intelligence (AI) based systems over the previous few decades, including text and signal processing, face identification, driverless cars, board games and go, there are unrealistic hopes that deep learning will lead to an innovation in CAD effectiveness and mass adoption of deep-learning-based CAD or AI for a variety of activities in the individual treatment plan. The passion has inspired a large number of deep learning projects and articles in CAD. In this section, we’ll talk about a number of problems and obstacles that have arisen while trying to design CAD for diagnostic imaging that is founded on deep learning, as well as the things that should be taken into account when doing so.

The development of CAD based applications uses techniques for conventional machine learning. Image processing techniques were utilized in the traditional machine learning methodology to CAD in diagnostic imaging to identify abnormalities of cancer and discriminate between several categories of features, such as healthy or aberrant, cancerous or mild, on the images. Depending on subject matter expertise, CAD designers create image analysis and separation of features algorithms to describe the image features that may differentiate between the numerous states. The competence of the theoretical conceptions or practical image processing approaches that are meant to convert the image features to quantitative data, as well as the subject experience of the CAD designers, are frequently factors that affect how well the feature classifiers perform. A method employs the extracted features as incoming response variable and a forecast strategy is created by varying the weights of the different characteristics based on numerical characteristics of a series of training instances to determine the likelihood that a image corresponds to a particular categories. Although if they have observed a plethora of cases from the user community, the individual programmer might not be capable of converting the complicated illness trends into a limited amount of feature descriptors using the traditional machine learning technique. The hand-engineered characteristics could also have trouble standing up to the public’s wide range of ordinary and atypical behaviors. The generated CAD system frequently performs poorly in terms of classifier or generalization, leading to a large percentage of wrongful convictions at great sensitivity or conversely.

In several domains, deep learning has become the cutting-edge machine learning technique. Deep learning is a kind of representation learning technique that employs a sophisticated multi-layer neural network topology autonomously trains data interpretations by abstracting the raw data into several layers. Deep convolutional neural networks (DCNN) represent the most widely utilised deep learning systems for sequence identification applications in images. By continuously modifying its parameters through training algorithm, DCNN may be taught to autonomously retrieve pertinent features from the training instances for a specific job. CNN model does not demand explicitly generated features as input because feature representations are discovered during training. The DCNN features are anticipated to outperform hand-engineered features in terms of selectivity and in-variance if adequately trained using a sizable training set that is representative of the population of interest. Deep learning will rapidly examine dozens or hundreds of examples that even individual specialists would not be capable of seeing and memories in their lifetimes since the training procedure is mechanized. As provided as the training dataset is big and diversified sufficient for it to evaluate, deep learning can consequently be highly resilient to the vast scope of changes in characteristics across distinct groups to be discriminated [17]. Machine learning algorithms are well-known to discover and learn the relationship between data and explore to retrieve hidden information from the data. Machine learning have influential techniques and methods that can learn from previous actions. The machine learning algorithms observe and interact with environment to improve the efficacy of objective functions.

Image recognition, tracking and identification is an essential research area in machine learning that is used in a wide range of technologies such as gesture identification, driver-less cars, medical assessment such as medical image processing, tumor cell categorization, imagegrammetry and so on. Object spotting is the task of locating many objects in images and recognizing their locations and categorization. Object identification is regarded to be one of the challenging tasks in the area of machine sensing since the appearance of objects varies substantially based on a range of factors [18, 19].

All machine learning technologies and associated artificial intelligence (AI) models, medical evidence and image analysis may offer the highest opportunity for creating a significant, long-term impact on individual experiences in a relatively short period of time [17]. Image searching, production, computer vision and image-based visualization are all components of the software evaluation and interpretation of medical images [20]. In numerous dimensions, medical image analysis has evolved to encompass image preprocessing techniques, pattern matching, data mining especially image based dataset and deep learning [21]. Deep learning is a popular way for determining the correctness of the future situation. This offered up additional possibilities for interpretation of medical images. Computational intelligence approaches in healthcare handle a diverse range of concerns, from early diagnosis to infection tracking to individualized therapy recommendations. A vast amount of material is now available to clinicians via multiple media platforms such as mri scans, genetic sequencing and pathological imaging [22]. To convert each of this input into valuable advice, the particularly related utilized for patient data include X-ray, Ultrasound, Positron Emission Tomography (PET), Magnetic Resonance Imaging (MRI) and Computed Tomography (CT) [23, 24]. Deep learning is the process of discovering trends in complex objects by utilizing neural network models comprised of multiple convolution layers (comprising several nodes) of artificial neurons [25]. An artificial neuron is a sort of cell that act like a real brain, accepts numerous input images, does a computation and then delivers the optimal outcome [26,27,28]. This straightforward approach uses a nonlinear activation function before a linear input pattern matching form [26]. Numerous often deployed nonlinear activation functions of a system include the sigmoid transition, ReLU and their variations and tanh (hyperbolic tangent) [29,30,31].

In recent years, advances in health science and image processing have been made thanks to deep learning algorithms [26, 32,33,34]. Deep learning techniques using CNNs and computational techniques, in especially, execute better when analyzing large amounts of data, and this has received a lot of study interest. Recent studies imply that the application of deep-learning-based computer-aided identification in medical practice can dramatically decrease the amount of time needed to analyze films and increase diagnosing effectiveness [35, 36].

For healthcare system, numerous research have explored CADe/CADx technology [37,38,39]. Studies that have already been published examined CADe/CADx methods for medical images using deep learning algorithms [40, 41]. All of these investigations, meanwhile, are missing explanations of the imaging procedures diagnostic procedures and supporting documentation used in the different engineering solutions. Additionally, released assessments must offer a much more thorough assessment of contemporary literature. As a result, we thoroughly review the most recent state-of-the-art implementations of cutting-edge CADe/CADx ideas that address medical images using CNN deep learning algorithms and computational techniques. Due to the dearth of papers using additional models from deep learning in CADe/CADx, we did not include them. We also creatively divide the four phases in the conventional CADe/CADx of medical images into two phases by gaining knowledge from the diagnostic imaging methodology in order to straightforwardly relate their pivotal technology superior properties, taking into account that the primary technology components of these research findings lie in various medical steps. By discussing the implementation, benefits, and drawbacks of CNN in the identification of medical images, as well as potential approaches for investigators to address these challenges, we may indicate the path of future study in this area and potentially other healthcare domains.

4 Literature Review Selection Criteria

For this analysis benchmark, there were four steps: (1) keyword search queries in the IEEE Xplore, ACM Digital Library, Scopus, Google Scholar, Science Direct, PubMed, and Web of Science libraries and databases; (2) gathering relevant things and eliminating multiple copies; (3) choosing the benchmark of use; only the lung nodule detection technology based on CT image deep learning was maintained; and (4) evaluating detection systems utilizing the established metrics. The database search terms and logical expressions that we used are listed below: ‘deep learning’ or ‘deep convolutional neural network’ or ‘convolutional neural network’ or ‘CNN’ or ‘DCNN’ and ‘healthcare’ or ‘health-care’ or ‘health care’ or ‘medical’ or ‘clinical’ or ‘image’ or ‘images’ or ‘brain’ or ‘brain injury’ or ‘head’ or ‘head injury’ or ‘skin’ or ‘breast’ or ‘chest’ or ‘pulmonary’ or ‘lung’ or ‘lungs’, and ‘nodule’ or ‘nodules’ or ‘tumor’ or ‘tumors’ or ‘cancer’ and ‘detection’ or ‘detect’ or ‘detected’ or ‘detecting’ or ‘computer-aided detection (CADe)’or ‘computer-aided diagnosis (CADx)’ or ‘CAD’ or ‘CADx’ or ‘CAD’ or ‘CADe’ and ‘histology’ or ‘histopathology’ or ‘histopathological’ or ‘X-ray’ or ‘Xray’ or ‘CXR’ or ‘MRI’ or ‘Magnetic Resonance Imaging’ or ‘Computed Tomography Scan’ or ‘CT Scan’ or ‘CT-Scan’ or ‘Computed Tomography’ or ‘CT’.

5 Deep Learning Methodologies

Deep learning-based medical image segmentation is a popular topic in image classification, registration, segmentation and tumor detection research and has great use in the medical field. Deep learning technology can improve computer-aided diagnosis accuracy and efficacy while also easing resource constraints in healthcare, decreasing doctor stress, and reducing reliance on expert knowledge. An overview of some of the most well-known deep learning frameworks is provided below.

The purpose of this part is to formally introduce and define the deep learning ideas, methods, and frameworks that we discovered in the articles on medical image interpretation that were analyzed for this study.

5.1 Neural Network

The fact that neural networks are generic function capable of approximating, or that they are able estimate any mathematical expression to any degree of precision, is a crucial characteristic of neural networks. In other sense, if any process-whether biological or not-can be conceptualized as a function of a collection of variables, then that behavior may be simulated to any unlimited amount of precision, constrained only by the magnitude or complication of the system. Although the aforementioned concept of general approximation is not theoretically precise, it does illustrate one factor that has contributed to the long-standing curiosity in neural networks. This assurance, however, does not offer a mechanism to identify the neural network model’s ideal characteristics that will yield the closest estimation for a particular dataset. Additionally, there is no assurance that the system will deliver precise forecasts for fresh data. All artificial neural models’ underlying components are synthetic neurons. A mathematical expression that specifically translates sources into outcomes is all that constitutes an artificial neuron. Any amount of input numbers are accepted by a single artificial neuron, which then processes them using a particular mathematical expression to produce an outcome [42].

A network system which is used in machine learning is known as Neural Network. It took inspiration from human brain and works similar to human brain. The network architecture of Neural Network is made up of Artificial Neurons. It is a network that has weights on it, you can adjust those weights so that it can learn from it. A neural network has a number of layers which groups the number of neurons together. Each of them has its own function. Network’s complexity depends on the number of layers. That is why the Neural Network is also known as multi-layer perceptron. There are three types of neural network layers. (1) Input Layer, (2) Hidden Layer and (3) Output Layer. Each of them has its own specific purpose. These layers are made up of nodes and each of them has its own domain of knowledge. Neural Networks are highly efficient because they can learn very quickly.

5.2 Multi-layer Perceptrons

An neural network model’s another very basic structure is layer upon layer of densely integrated and interconnected neurons. In this structure, a set quantity of “input neurons” stand in for the input feature values that are determined from the records and transmitted to the sub net, and each linkage among a couple of neurons stands in for one learnable weight parameter. Artificial neural learning refers to the process of maximizing these components, which are the primary variables that may be changed in a neural network [43,44,45].

The network’s ultimate results are represented by a quantity of output nodes at the opposite end of the network. When properly set up, a network of this kind may be used to create hierarchical, sophisticated judgments regarding the input since each neuron in a particular layer obtains information from every neuron in the layer below. The earliest networks helpful for bioinformatics implementation were layers of neurons arranged in this straightforward manner; these layers are sometimes referred to as “multilayer perceptrons.”

5.3 Feed Forward Neural Networks

The earliest and most fundamental model of a neuron is the perceptron. A group of inputs are taken, added, and then an activation function is applied before sending the results to the output layer. A fundamental class of neural networks is called a feed forward neural network (FFNN). The intermediate levels are buried, with the input layer at the top and the output layer at the bottom. There is no feedback in the entire network as the signal propagates unidirectional from the input layer to the output layer [46,47,48].

Multilayer perceptrons is another name for Feed Forward Neural Network depicted in Eq. 1.

5.4 Recurrent Neural Networks (RNNs)

When processing data with time series characteristics, RNN excels. It can also help with data analysis and mining for information about time series features and semantic change. The weight of the single unit that makes up the RNN layer is shared. Each training sample example will only go through one unit in the state of the various time series, after which its weight will be changed continuously [49,50,51].

5.4.1 Long-Short Term Memory (LSTM)

The vanishing/exploding gradient issue is solved with gates and an explicitly designated memory cell in Long-Short Term Memory (LSTM) networks. The main driving force behind these is electronics, not biology. Input, output, and forget gates, together with a memory cell, are all present in every neuron. These gates’ function is to safeguard information by controlling the flow of it or blocking it [52,53,54]. The Eq. 2 show the LSTM’s feedforward calculation procedure in more detail.

Among them, \(W_{hi}\), \(W_{hf}\), \(W_{ho}\), \(W_{hi}\) represent the matrix parameters related to the input and 3 Gates, and then \(b_{i}\), \(b_{f}\), \(b_{o}\), \(b_{c}\) represent the bias parameters related to the input and the three Gates, represents the Sigmoid function, and finally, 0 represents the same position of the two vectors Elements are multiplied together.

5.4.2 Gated Recurrent Units (GRU)

Although the gradient problem in RNN can be greatly reduced by LSTM, a single LSTM unit has four times as many parameters as a single RNN unit due to the addition of three extra Gates. Greater parameters necessitate more computing power. Although it has fewer parameters than LSTM because it lacks an output gate, GRU is similar to LSTM with forget gate [55].

A very powerful LSTM neural network variant is the GRU. GRU makes the structure shallower and computationally less expensive while maintaining the LSTM effect. It is also the most used type of neural network at the moment because it has a simpler structure and better results than an LSTM network. Due to the fact that GRU is a variation of LSTM, it can alleviate the lengthy reliance issue in RNN [56]. The GRU feedforward calculation is displayed in Eq. 3.

\({\textbf{W}}_{xr}, {\textbf{W}}_{xz} \in {\mathbb {R}}^{d \times h}\) represent the input training parameters and \({\textbf{W}}_{hr}, {\textbf{W}}_{hz} \in {\mathbb {R}}^{h \times h}\) are bias parameters, and the two Multiply elements of the same position of the vector, respectively.

5.4.3 Bidirectional Recurrent Neural Networks

Bidirectional Long Short-Term Memory Networks (BiLSTM), Bidirectional Gated Recurrent Units (BiGRU), and Bidirectional Recurrent Neural Networks (BiRNN) all resemble their unidirectional counterparts in appearance. On the other hand, conventional RNNs cannot process data for the future and can only process input in one direction. The forward, backward, and output Eq. 4 indicate how these bidirectional networks can extract complete temporal information at time t by combining past and future data, improving the model’s performance on sequence issues [57, 58].

5.5 Unsupervised Models

Unsupervised learning research’s major objective is to pre-train a deep learning model (also known as a “discriminator” or “encoder”) that will be utilized for many other challenges. The encoder characteristics must be broad sufficient to be applied to classification techniques, such as training on ImageNet and producing outcomes that are as near to supervised models as feasible [59].

As of right now, supervised models consistently outperform unsupervised trained models. This is to ensure that the model can more effectively incorporate the dataset’s features thanks to the supervision. However, if the model is subsequently extended to other activities, monitoring may likewise become less effective. Unsupervised training is hoped to be able to offer more universal characteristics for learning to complete any work in this respect [60, 61].

5.5.1 Autoencoders (AEs)

In that they are an adaptation of FFNNs rather than a fundamentally unique design, autoencoders (AEs) are similar to FFNNs in this regard. The only thing to keep in mind is that the number of input characteristics (number of neurons) in the input layer and the output layer should match (the number of neurons). Check to see if the input and output are both equal. The basic idea behind autoencoders is to automatically compress data rather than encrypt it, hence the name. The entire network has hidden layers that are thinner than the input and output layers and is structured like an hourglass [62, 63]. The Variational Autoencoders (VAEs) is depicted in equation ??.

A unique network called Sparse Autoencoders (SAEs) adds sparsity to the network. Sparse Autoencoder wants to use the original data to create a low-dimensional representation. The preparation of the image typically involves sparse automatic coding to decrease the size of the data and retrieve possibly useful information.

5.5.2 Boltzmann Machine (BM)

Unbalanced connections make up a Boltzmann machine. In terms of graph theory, it is a full graph. Any device is linked. Neurons and other components will make the decision to switch on or off. Initially, BM was solely employed to refer to models that contained just binary variables. The limited Boltzmann machine primarily adds “restriction” as compared to the Boltzmann machine. To make the complete graph a bipartite graph is the alleged restriction. In in addition to other things, restricted Boltzmann machines may be employed to automatically learn (hidden state outcomes are features), develop deep belief networks, and decrease complexity (fewer hidden layers) [64, 65].

5.5.3 Deep Belief Network (DBN)

Multi-layer Restricted Bolt Machine (RBM) based neural networks are called Deep Belief Networks (DBN). It can be categorized as either a discriminative or generative model. To put on weight, the training strategy adopts the unsupervised hungry layer-by-layer approach. Deep Belief Network learning has been finished layer by layer. After being employed to estimate the hidden layer in each level, the array of objects is then utilised as the data vector for the following (higher) layer. Numerous Restricted BMs must be “connected in series” to form a DBN, with the outcome of one BM serving as the intake of the following and the hidden layer of the first BM serving as the feature map of the second. During the learning phase, it is essential to thoroughly train the Restricted BM of the former layer before learning the Restricted BM of the current layer to the previous layer. Hua et al.[66, 67].

5.5.4 Generative Adversarial Network (GAN)

Generative Adversarial Networks (GANs) are essentially a training mode and not a final network structure. The fundamental tenet of Generative Adversarial Network is that the discriminator and generator work in tandem. The discriminator must attempt to differentiate among real samples (such as real pictures) and fake samples produced by the generator, or “Fake images.” The ideal competitive environment is one where both sides continuously improve, where the capability to differentiate between them becomes increasingly stronger, and where the capacity to produce deception becomes greater as well. The outcome of the opposition is unimportant. What matters most in the conclusion is the generator’s capacity to produce instances that are sufficiently equivalent to the real instances, resemble the sample data in the training set, and have a dispersion that is substantially close to that of the training examples distribution. Xin et al. [68, 69]. Define the objective function that GAN must optimize, which is represented by the expression in Eq. 6.

5.6 Convolutional Neural Networks (CNNs)

An uncommon variety of neural network is called a convolutional neural network (CNN) or a deep convolutional neural network (DCNN). They can be used for other types of input, such as audio, though image processing is where they are most frequently used. Convolutional neural networks (CNNs/ConvNets) use neurons with biases and weights that can be learned. The classification score is generated after each neuron computes the dot product using the data it has been given. By incorporating specific characteristics into the network structure using CNN, we can improve the effectiveness of the feedforward function and pass parameters [70]. People who share reduce many variables. Equation 7 explain the input and output capabilities of the CNN as well as the network’s overall non linearity and cost function are depicted in Eqs. 8 and 9 respectively.

5.7 Convolutional Layer

Convolutional Layer is a first layer of Convolutional Neural Network. This layer consists of sets of Filters or Kernel. Their job is to use a Convolutional operation to the input and passing the result to the succeeding layer. The filter takes a subset of the input data. The territorial relationship between pixels by learning image options using tiny squares of input data ensures by this layer. The Convolutional layer’s vector is the final output. This layer performs linear multiplications with the goal of extracting the input image’s high-level characteristics as a convolution operational activity. Due to the linear nature of the convolutional process at this layer, the final size is also produced by layering the activation maps of all filters on the depth dimension. Similar to an old neural network, linear operation primarily entails the multiplication of weights with the input [71, 72].

5.8 Deconvolution

Deconvolution also known as transposed convolution is a mathematical operation by which the effect of convolution reverses. It is exactly the multivariate Convolutional function’s inverse. For example, giving input to the Convolutional layer and getting output then give the same output to deconvolutional layer can get you to the same input you given first. Deconvolution is just to reverse the input back to large size [73, 74].

5.9 Dilated Convolution (Atrous Convolution)

A type of convolution by which the kernel inflates by inserting holes between the kernel elements. Another parameter to the Convolutional layers has been introduced by dilated convolution called the dilation rate. Additional parameter of dilation rate as indicates that how much the kernel is expended. It’s targeted to increase the size of reception field by avoiding the increase in parameter sizes. There are normally spaces inserted between the elements of kernel [75].

5.10 Striding

Striding defines the step size of the kernel while sliding through the image. Stride of one defines that the kernel slides through the image pixel by pixel. Stride of two defines that the kernel slides through image by moving 2 pixels per step i.e., skipping of 1 pixel. Stride (\(\ge 2\)) can be used for down-sampling an image [76].

5.11 Padding

How the border of an image is handled is defined by padding. Spatial output dimensions kept equal to the input image by padded convolution, by padding 0 around the input boundaries (if necessary). On the other side, without adding 0 around the input boundaries, unpadded convolution only perform convolution on the pixels of the input image [77]. The size of output is smaller than the input size. For an input image, size i, kernel size k, padding p and stride s, from convolution the output image has size o:

5.12 Pooling Layer

A new layer added after the Convolutional layer is a pooling layer. Specially, after the Convolutional layer applies a nonlinearity to the feature maps output. The inclusion of pooling layer right after the Convolutional layer is a usual pattern used in the ordering of layers in a convolutional neural network and can be repeated once or more then once in a given model. The pooling layer separately operates upon each feature map so that it can create a new set of pooled feature maps of same number. Pooling involves selection of pooling operation just like a filter going to be applied on feature maps. Size of pooling filter or operation is smaller in size than the size of the feature map. Mainly, it is always 2 × 2 pixels applied with a 2 pixels stride. It means that pooling layer always going to reduce the size by a factor of 2 of each feature map [78]. Two of the common functions used in pooling operation are given below:

-

1.

Average Pooling A convolutional neural network’s layers repeatedly apply learnt filters to input images to produce feature maps that list the features present in the image. For each region on the feature map, determine the average value. In order to construct a down-sampled (pooled) feature map, the average value for regions of a feature map is calculated using the average pooling method. It is frequently applied following a Convolutional layer. It provides a tiny bit of translation in-variance, which means that changing the image’s size slightly has little impact on the numbers of the majority of pooled outcomes. Max Pooling collects higher obvious characteristics like edges, although it collects characteristics more uniformly.

-

2.

Maximum Pooling A feature map is down-sampled (pooled) by using the Max Pooling pooling procedure, which determines the highest value for regions of the feature map. It is frequently used following a Convolutional layer. It adds a tiny bit of translation in-variance, which means that changing the image’s size slightly has little impact on the numbers of the majority of pooled outcomes [79].

5.13 Fully Connected Layer

Fully connected layer is the very important component of the neural networks. It is very successful in the field of recognizing and classifying the images. Fully connected layer is the classic neural network architecture. Fully connected layers are those layers where all the inputs from one layer is connected to every activation unit of the next layer. In this layer, all the input units have a separable weight to each output unit. There are two of fully connected layer one is for input and other is for output. The fully connected input layer flattens the input and change it into the vector for the input of next stage. Then the next stage analyzes it and apply weight to project the right label. Then the fully connected output provides the expected labels to each label [80].

5.14 Activation Functions

Mathematical equations that determine the output of a neural network are known as activation functions. The function is attached with each and every neuron in the network. It determines whether it should be fired (activated) or not based on whether the input of each neuron is relevant for the model’s prediction. Activation functions are also help normalize the output of each neuron to a specific range between -1 and 1 or between 1 and 0. Activation functions must be computationally efficient because for each data sample they are calculated across thousands or millions of neurons. Modern day neural networks use a backpropagation technique to train the model which places increased computational strain on activation function and its derivative function [81].

5.15 Batch Normalization

Batch normalization is also known as batch norm. It’s a layer which allows each and every layer of network to do learning more independently. Its use is to normalize the previous layers output. The input layer is scaled by the activation in normalization. By using batch normalization, learning becomes more efficient. Moreover, to avoid over-fitting of a model it can be used as a regularization. To standardize the input or the outputs, the layer is added to the sequential model. It can be used at some several points in between layers of model. It is placed right after defining sequential model and after the convolution layers and pooling layers [82].

5.16 Dropout

The dropout term relates to “dropping out units” in a neural network. The thing dropping a unit out means removing the unit temporarily from the network and all the connections whether incoming or outgoing all are removed. To prevent neural networks from over-fitting the Dropout method is used. In simple way dropout ignores the units during the time period of training of the system [83].

5.17 Softmax

SoftMax is a function, it is additionally referred as soft argmax or normalized exponential function. This function which is used as the activation function in output layer of the neural network models that predict the multinomial probability distribution. It is a function that changes the K real value into the vector of K real value whose sum is 1. The input value can be anything like positive, negative less than or greater than one, but this function changes the value to 0 and 1, for the probability. If the input is greater than this function will change the value in large probability or if the input is lesser than the function will change the value in small probability, but the value will always lie between 0 or 1 [83].

5.18 Optimizer

Optimizer is the techniques or algorithms which are used to change the attributes of the neural networks. They are used to solve optimization issues by minimizing the function. Optimizer is the one which is used to reduce the losses and to provide us with accurate results [84]. Some strategies are used to initialize the weight and with the period of time it is updated by using the following equation:

This equation is used to update the weight and to get the accurate results.

5.19 Momentum

Neural network momentum is a simple technique or method which improves accuracy and training speed both. Momentum helps in flattening the variations if there is continuous change in the direction of the gradient. The momentum value is used to avoid the situation of getting stuck in local minima. It is the value which is between 0 and 1. If the value of momentum is greater than the learning is kept smaller. The larger value of the momentum is also considered as that the convergence will occur rapidly. The small value of the momentum cannot avoid local minima and can also result in the delay of systems training [85].

5.20 Learning Rate

Learning rate are the hyper-parameters in the configuration of the neural networks. It used in the training of neural networks that has small positive value. It controls the adaptation of the model to the problem. Learning rate controls how much change in the model is required in response to estimate the error when the values of weights are updated. It is very challenging to choose learning rate because its values are too small which may result in a lengthy training process [86].

6 CNN Model Zoo

6.1 LeNet

LeNet architecture is very compact and simplified. LeNet was introduced by Yann LeCunn in 1989 [87]. It is simplified to such an extent that it can be trained on CPU if you do not have any kind of GPU support but if you have any kind of GPU support for your computer, a lot faster results could be achieved. LeNet has many versions which are LeNet-5, LeNet-4, LeNet-1, Boosted LeNet-4. LeNet is used for handwritten words recognition. Mostly it was used with the applications related to MNIST dataset. It consists upon basic CNN parts like pooling layers, Convolutional layers and fully connected layers [88]. (Figs. 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23 and 24)

LeNet is a classic convolutional neural network employing the use of convolutions, pooling and fully connected layers. It was used for the handwritten digit recognition task with the MNIST dataset(dataset of 60,000 small square 28 × 28 pixel gray-scale images of handwritten single digits between 0 and 9) [87]

LeNet-5 CNN architecture is made up of 7 layers. The layer composition consists of 3 Convolutional layers, 2 subsampling layers and 2 fully connected layers.

-

The first layer is the input layer. In this layer you have a simple digit range from 0-9. It is a 32 × 32 grayscale image and each grayscale image have a digit range from 0-9. You need to classify what digit image has in it. The first you used a Convolutional technique in which you are using a filter size of 5 × 5 and stride is 1. The next layer is of size 28 × 28 × 6 where 6 is the no. of filters you’ve used.

-

Next the subsampling technique in which you used a avg pooling where the filter size is 2 × 2 and stride is of 2. The next layer is of size 14 × 14 × 6 where 6 is the no. of filters you’ve used.

-

Further they employed Convolutional with 5× 5 filter and stride is of 1. The next layer is of size 10 × 10 × 16 where 16 is the no. of filters you’ve used.

-

They applied average pooling where the filter size is 2 × 2 and stride is of 2. The next layer is of size 5 × 5 × 16 where 16 is the no. of filters you’ve used. Here 5 × 5 × 16 means 400 neurons. Number of layers are 16 and each layer have 25 neurons in it.

-

For dense connection, they employ fully connection each 400 neurons to first hidden layer having 180 neurons which have 180 × 400 connections.

-

These 180 neurons are fully connected to the next hidden layer having 84 neurons size of 84 × 180 connections.

-

Finally the output layer will be of 10 × 10 which is fully connected to the previous layer size of 10 × 84 connections.

The main disadvantage of LeNet-5 is at time padding technique is not used to it takes some extra effort to retain the size of the image.

6.2 AlexNet

AlexNet CNN architecture is more complex as compared to the LeNet architecture. AlexNet was introduced by Alex Krizhevsky. In 2012, AlexNet competed in ImageNet Large Scale Visual Recognition Challenge. AlexNet won the competition and was at par then the opponent. AlexNet was able to get 15.3% top-5 error which was 10.8% lower then the opponent. AlexNet requires more depth in its architecture as compare to LeNet. AlexNet consist of pooling layers, Convolutional layers and fully connected hidden layer [89].

AlexNet is a CNN architecture and its basic building blocks are max pooling and dense layer. It has imageNet classification with the deep learning techniques. This architecture will be use in pre trained model for the object detection in computer vision [89]

AlexNet CNN architecture is made up of 8 layers. The first 5 are Convolutional layers, some of them followed by 3 max pooling layer and last 3 are fully connected layers. It also uses ReLU Nonlinearity, multiple GPU’s (on two parallel GPU’s) and overlapping pooling.

-

The first layer is the input layer. It is a 224 × 224 × 3 RGB image. The first you used a Convolutional technique in which you are using a filter size of 11 × 11 and stride is 4 and pool size of 2. The next layer is of size 55 × 55 × 96 where 96 is the no. of filters you’ve used.

-

Next the pooling technique in which you used a max pooling where the filter size is 3 × 3 and stride is of 2. The next layer is of size 27 × 27 × 96 where 96 is the no. of filters you’ve used.

-

They use same Convolutional with 5 × 5 × 96 filter. The next layer is of size 27 × 27 × 256 which means there are total of 256 filters and each filter have 5 × 5 × 96 filters in it.

-

Furthermore, they employ max pooling where the filter size is 3 × 3 and stride is of 2. The next layer is of size 13 × 13 × 296 where 256 is the no. of filters you’ve used.

-

They apply same Convolutional with 3 × 3 × 256 filter. The next layer is of size 13 × 13 × 384 which means there are total of 384 filters and each filter have 3 × 3 × 256 filters in it.

-

They engage same Convolutional with 3 × 3 × 384 filter. The next layer is of size 13 × 13 × 354 which means there are total of 384 filters and each filter have 3 × 3 × 384 filters in it.

-

Next the same Convolutional with 13 × 13 × 384 filter is used. The next layer is of size 13 × 13 × 256 which means there are total of 256 filters and each filter have 3 × 3 × 384 filters in it.

-

They enforce max pooling where the filter size is 3 x 3 and stride is of 2. The next layer is of size 6 × 6 × 256. Here 6 × 6 × 256 means 9216 neurons. Number of layers are 256 and each layer have 36 neurons in it.

-

For dense connection, they employ fully connect each 9216 neurons to first hidden layer having 4096 neurons which have 4096 × 9216 connections.

-

These 4096 neurons are fully connected to the next hidden layer having 4096 neurons size of 4096 × 4096 connections.

-

Finally the output layer will be of 1000 neurons which is fully connected to the previous layer size of 100 × 4096 connections.

The main difference between LeNet-5 and AlexNet is that it uses ReLU activation function that will output the input directly if it is positive, otherwise, it will output zero.

6.3 ZfNet

ZfNet CNN is an improvement over the AlexNet. ZfNet was introduced in 2013 in ILSVRC (ImageNet Large Scale Visual Recognition Challenge). In ZfNet the size of Filter is reduced and Convolutional strides are also reduced. The design of ZfNet came across the motivation of visualizing intermediate feature layers and classifiers operation [90].

ZfNet is a classic convolutional neural network. The design was motivated by visualizing intermediate feature layers and the operation of the classifier [90]

-

The first layer is the input layer. It is a 224 × 224 × 3 RGB image. The first you used a Convolutional technique in which you are using a filter size of 7 × 7 and stride is 2 and pool size of 3. The next layer is of size 55 × 55 × 96 where 96 is the no. of filters you’ve used.

-

Used the pooling technique in which you used a max pooling where the filter size is 3 × 3 and stride is of 2. The next layer is of size 27 × 27 × 96 where 96 is the no. of filters you’ve used.

-

Next they employ Convolutional with 5 × 5 × 96 filter. The next layer is of size 7 × 7 × 256 which means there are total of 256 filters and each filter have 5 × 5 × 256 filters in it.

-

Further, they apply max pooling where the filter size is 3 × 3 and stride is of 2. The next layer is of size 13 × 13 × 256 where 256 is the no. of filters you’ve used.

-

They used interactively used same Convolutional with 3 × 3 × 256 filter. The next layer is of size 13 × 13 × 384 which means there are total of 384 filters and each filter have 3 × 3 × 256 filters in it.

-

They employ same Convolutional with 3 × 3 × 384 filter. The next layer is of size 13 × 13 × 348 which means there are total of 384 filters and each filter have 3 × 3 × 384 filters in it.

-

Further, they employ same Convolutional with 3 × 3 × 384 filter. The next layer is of size 13 × 13 × 256 which means there are total of 256 filters and each filter have 3 × 3 × 384 filters in it.

-

They apply max pooling where the filter size is 3x3 and stride is of 2. The next layer is of size 6 × 6 × 256. Here 6 × 6 × 256 means 9216 neurons. Number of layers are 256 and each layer have 36 neurons in it.

-

For dense connection, they employ fully connect each 9216 neurons to first hidden layer having 4096 neurons which have 4096 × 9216 connections.

-

These 4096 neurons are fully connected to the next hidden layer having 4096 neurons size of 4096 × 4096 connections.

-

Finally the output layer will be of 1000 neurons which is fully connected to the previous layer size of 1000 × 9216 connections.

6.4 VGG

The convolutional neural network architecture called the VGG framework, or VGGNet, that covers 16 layers is also known as VGG16. It was developed by A. Zisserman and K. Simonyan from the University of Oxford. The study article titled “Very Deep Convolutional Networks for Large-Scale Image Recognition” contains the framework that these authors released. In ImageNet, the VGG16 model outperforms top-5 accuracy results of about 92.7%. A resource called ImageNet has over 14 million photos that fall into about 1000 categories. It was also among the most well-liked models presented at ILSVRC-2014 [91].

The convolution neural network receives a fixed-size RGB image with a dimension of 224 by 224. The primary preprocessing it performs is removing out of each pixel the average RGB numbers calculated from the training sample. The image is then sent via a series of convolutional (Conv.) layers that contain filters with a tiny 3 × 3 input patch, the smallest number necessary to retain the concepts of up/down, left/right and center portion [91]

VGGNet architecture is made up of 16 layers. The layer composition consists of 13 Convolutional layers, 5 pooling layer and 3 fully connected layers.

-

The first layer is the input layer. It is a 224 × 224 × 3 RGB image. The first you used a Convolutional technique in which you are using a filter size of 3 × 3 and stride is 1 and same padding size. You Convolutional this layer 2 times with the same filter size. The next layer is of size 224 × 224 × 64 where 64 is the no. of filters you’ve used.

-

Further, they employ the pooling technique in which you used a max pooling where the filter size is 2 × 2 and stride is of 2. The next layer is of size 112 × 112 × 64 where 64 is the no. of filters you’ve used.

-

Regularly, they use the same Convolutional filter size of 3 × 3 and stride is 1 and same padding size. Again Convolutional this layer 2 times. The next layer is of size 112 × 112 × 128 which means there are total of 128 filters.

-

Repeatedly they use max pooling where the filter size is 2 × 2 and stride is of 2. The next layer is of size 56 × 56 × 128 where 128 is the no. of filters you’ve used.

-

They continuously employ the same Convolutional filter size of 3 × 3 and stride is 1 and same padding size. But Convolutional this layer 3 times. The next layer is of size 56 × 56 × 256 which means there are total of 256 filters.

-

They use max pooling where the filter size is 2 × 2 and stride is of 2. The next layer is of size 28 × 28 × 256 where 256 is the no. of filters you’ve used.

-

Repeatedly use same Convolutional filter size of 3 × 3 and stride is 1 and same padding size. Again Convolutional this layer 3 times. The next layer is of size 28 × 28 × 512 which means there are total of 512 filters.

-

Regularly apply max pooling where the filter size is 2 × 2 and stride is of 2. The next layer is of size 14 × 14 × 512 where 512 is the no. of filters you’ve used.

-

They employ the same Convolutional filter size of 3 × 3 and stride is 1 and same padding size. Again Convolutional this layer 3 times. The next layer is of size 14 × 14 × 512 which means there are total of 512 filters.

-

Use max pooling where the filter size is 2x2 and stride is of 2. The next layer is of size 7 × 7 × 512 where 512 is the no. of filters you’ve used. Here 7 × 7 × 512 means 25088 neurons. Number of layers are 512 and each layer have 49 neurons in it.

-

For dense connection, fully connect each 25088 neurons to first hidden layer having 4096 neurons which have 4096 × 25088 connections.

-

These 4096 neurons are fully connected to the next hidden layer having 4096 neurons size of 4096 × 4096 connections.

-

Finally the output layer will be of 1000 neurons which is fully connected to the previous layer size of 1000 × 4096 connections.

6.5 GoogleNet

GoogleNet was introduced in 2014 by a team at google. GoogleNet was the 2014 winner of ILSVRC (ImageNet Large Scale Visual Recognition Challenge). GoogleNet achieve top-5 error rate of 6.67% which was very close of the error rate of human level. GoogleNet used the convolutional neural network inspired by the LeNet CNN. GoogleNet implemented Inception module in it. GoogleNet used RMSprop, image distortions and batch normalization [92].

GoogLeNet is a deep CNN and it has a 22-layer architecture and researchers at Googles motivated by visualizing intermediate feature layers and the operation of the classifier [92]

The GoogLeNet architecture consists of 22 layers (27 layers including pooling layers) and part of these layers are a total of 9 inception modules.

-

The first layer is the input layer. It is a 224 × 224 × 3 RGB image. The first you used a convolutional technique in which you are using a filter size of 7 × 7 and stride is 2. The next layer is of size 112 × 112 × 64 where 64 is the no. of filters you’ve used.

-

Further, they employ the pooling technique in which you used a max pooling where the filter size is 3 × 3 and stride is of 2. The next layer is of size 56 × 56 × 54 where 64 is the no. of filters you’ve used.

-

They apply Convolutional with 3 × 3 filter and stride 1. The next layer is of size 56 × 56 × 192 where 192 is the no. of filters you’ve used.

-

Frequently apply max pooling where the filter size is 3 × 3 and stride is of 2. The next layer is of size 28 × 28 × 192 where 192 is the no. of filters you’ve used.

-

The inception technique is used in which you perform 3 filters (1 × 1),(3 × 3),(5 × 5) and then the max pooling. The next layer will be of size 28 × 28 × 256.

-

Regularly they employ the inception technique, the next layer will be of size 28 × 28 × 480.

-

They apply max pooling where the filter size is 3 x 3 and stride is of 2. The next layer is of size 14 × 14 × 480 where 480 is the no. of filters you’ve used.

-

The inception technique is used 5 times and you have the next layer of size 14 × 14 × 832.

-

Further, they apply max pooling where the filter size is 3 × 3 and stride is of 2. The next layer is of size 7 × 7 × 832 where 832 is the no. of filters you’ve used.

-

Do the inception 2 × times and you found the next layer size of 7 × 7 × 1024.

-

Regularly, they apply average pooling with the filter size of 7 × 7 and stride 1. You have the next layer of size 1 × 1 × 1024 and drop the 40% and you have finally 1 × 1 × 100 output.

GoogleNet has the benefit of training more quickly than VGG. Pre-trained GoogleNets are less in size than VGGs. GoogleNet does have a volume of only 96MB, but a VGG model might have > 500 MBs.

6.6 ResNet

ReNet is an application of Keras. ResNet is a short form of Residual Network. It was introduced in 2015 and it changed the research community forever by ground breaking results. There are many version of ResNet and each version is different from other and serve a different purpose. The different version of ResNet are ResNet-18, ResNet-34, ResNet-50, ResNet-101, ResNet-110, ResNet-152, ResNet-164, ResNet-1202 [93, 94].

The really quite initial point we can see in the accompanying graphic is that there is a direct link that bypasses various model levels. The core of leftover blocks is a link known as a “skip connection.” This skip connection causes the outcome to differ. Input ’X is calculated by the layers weight in the absence of the skip connection, and then a bias factor is added [94]

ResNet based on two intuitions:

-

1.

Error rate shouldn’t decline as we add more layers and go deeper.

-

2.

To reconcile the expected with the real, continue to training the residuals.

These are the functions of a Residual Network.

Residual Block [94]

These two are the equations used where x & y are input and output vectors.

6.7 Highway Networks

Highway networks are used to increase the depth of the neural network and it also does networks optimization. Highway networks uses gating functions approach to regulate information flow. Highway networks are inspired by Long-Short Term Memory recurrent neural networks (LTSM). Highway networks are mostly used in speech recognition tasks and sequence labeling [95].

It enables direct communication between neurons in various levels. Data transmission is regulated by a gating technique. Data can go through various levels of neural networks thanks to gating processes [95]

In highway network, two non-linear transforms T and C are introduced:

where T is the Transform Gate and C is the Carry Gate. In particular, C = 1 - T,

We can have below conditions for particular T values:

When \(\hbox {T}=0\), we pass the input as output directly which creates an information highway. That’s why it is called Highway Network. When \(\hbox {T}=1\), we use the non-linear activated transformed input as output.

6.8 DenseNet

DenseNet is used in object recognition. DenseNet has proven better than the ResNet because it outperforms ResNet in object Recognition. The architecture of DenseNet and ResNet are almost similar but there is a slight change that plays an important part in outperforming ResNet. DenseNet uses concatenation between layers while ResNet uses additive method. DenseNet requires GPU support because it uses concatenation [96].

ResNet and DenseNet are relatively similar, however there are several key distinctions. While DenseNet combine the outcome of the prior level to the next level, it employs the addition technique(+) that combines the previous layer with the subsequent layer [96]

Its main advantage is that it doesn’t allow data to vanish from the input layer to the output layer.

6.9 Wide ResNet

Wide ResNet is a modified version of ResNet. Wide ResNet is called wide Residual Network because there is increase in feature map size per each layer. WRN architecture is quite identical to the ResNet architecture but there is increase in the feature map size per layer it means that there is increase in the number of channels created in per convolutional layer [97].

It has same accuracy as ResNet but number of layers is reduced and training time is shorter. WRN-22-8 outperform ResNet-1001 by 0.92% on CIFAR-10 and 3.46% on CIFAR-100. WRN-40-4 is 8 times faster than ResNet-1001 [97]

In WideResNet, order changed to BN-RELU-CONV. It add more convolutional layers per block and Increases filter size in convolutional layers. It has widening factor k and network includes 40 layers with \(\hbox {k}=2\) times wider than the original would in WRN-40-2 and 4 times in WRN-40-4.

6.10 Pyramidal Net

It has two approaches (1) Bottom Up Pathway is the feedforward calculation of the spine Convolutional Net. It is characterized that one pyramid level is for each stage. (2)Top down pathway and Lateral Connection—The higher goal highlights are up inspected spatially coarser, yet semantically more grounded, include maps from higher pyramid levels. All the more explicitly, the spatial goal is up examined by a factor of 2 utilizing the closest neighbor for straightforwardness [98].

Pyramidal Net: [98] Two popular approaches top down and bottom-up are used in pyramidal network of CNN

Specifically, the element maps from base up pathway goes through 1 × 1 convolutions to lessen the channel measurements and the element maps from the base up pathway and the top-down pathway are converged by component astute expansion. Finally, a 3 × 3 convolution is annexed on each combined guide to create the last component map, which is to diminish the associating impact of up inspecting. This last arrangement of highlight maps is called P2, P3, P4, P5, relating to C2, C3, C4, C5 that are separately of similar spatial sizes.

6.11 Inception

Inception is a convolutional neural network (CNN) that is used for object detection and image analysis. Contrarily, the Inception structure was intricate (heavily engineered). It employed several strategies to increase speed and accuracy of execution. Numerous variations of the network were produced as a result of its ongoing development. Deep neural networks are costly to compute. The researchers reduce the cost by including an additional 1 × 1 convolution w preceding 3 × 3 and 5 × 5 convolution layers. This reduces the amount of input vectors. Contrary to what would seem logical, 1 × 1 convolutions are significantly more affordable than 5 × 5 convolution layers, and the fewer input streams also aid. But keep in mind that the 1 × 1 convolution is added after the max pooling layer, not before [99].

-

Inception V1 It performs convolution on the input with 3 different size i.e. (1 × 1, 3 × 3, 5 × 5).

-

It also performed max pooling.

-

The output will be concatenated and send to the next inception module.

-

Inception V2

-

It performs convolutional on the input with 2 different size i.e. (1 × 1, 3 × 3) as the major change is that 5 × 5 replaced to two 3 × 3 convolution.

-

This decrease computational time and thus increase computational speed.

-

3 × 3 convolutional is 2.78 lesser than 5 × 5.

-

It also converts \(\hbox {n}\times \hbox {n}\) factorization to 1xn and nx1.

-

It is 33% cheaper than the nxn factorization.

-

Inception V3 It is similar to V2 with the following changes:

-

RMSprop optimizer.

-

Batch normalization in the fully connected layer.

-

7 × 7 factorized conv.

-

Label Smoothing Regularization.

Inception V1 [99]

Inception V2 [100]

Inception V3 [101]

6.12 Xception

Xception is a convolutional neural network that was introduced by Francois Chollet. Xecption net is the further extension of Inception neural network. Its architecture is similar to the architecture of the inception model but it is further extended by using depth wise separable convolutions. In Xception network the model parameters are used efficiently and that’s why it outperforms inception model. The channel-wise \(\hbox {n}times\hbox {n}\) spatial convolution is known as depth-wise convolution. If there are five channels in the aforementioned diagram, we will get five \(\hbox {n}times\hbox {n}\) spatial convolution. The 1x1 convolution used to adjust the scale via pointwise convolution [102].

Xception is a convolutional neural network architecture that relies solely on depth wise separable convolution layers [102]

Although Xception uses the same amount of parameter estimation as Inception V3, it does so more effectively. This framework, known as Xception, considerably surpasses Inception V3 on a bigger image classification sample that consists of 350 million images and 17,000 classes, outperforming Inception V3 on the ImageNet dataset (about which Inception V3 was built). The model is substantially lightweight and has fewer components, which is its major benefit.

6.13 ResNeXt

ResNeXt is designed for efficient image classification. The architecture of ResNeXt consist of stacks of topology blocks. Width and Filter size is also shared which are also known as hyper-parameters. It uses cardinality which refers to the size of the set of the transformations. It is an important part in the addition to dimensions [103].

A straightforward, extremely modular design network framework called ResNeXt is used to classify images. Recurring a building block that combines a group of transformations with the identical structure forms the foundation of our network [103]

Comparable to an Inception Module, it collects a collection of transformations using a “split-transform-merge” technique. Our straightforward approach yields a homogenous, multi-branch framework with a minimal amount of hyper-parameters. In contrast to the measurements of depth and breadth, this technique also reveals a new aspect, which we refer to as “cardinality” (the magnitude of the collection of transformations).

6.14 Squeeze Net

Squeeze Net is a deep convolutional neural network that was introduced in 2016. Squeeze Net was introduced over Caffe framework but later on it has been formed on different networks. It is a CNN model that uses different design strategies to minimize the parameters. Smaller network so less communication across serves within training phase. Due to the lesser so its require minimum bandwidth to export a new model from the cloud to an autonomous car [104]. More flexible to deploy on FPGAs and other types of hardware with limited memory. It uses the following strategy for uniqueness:

-

Replace filters (replace the 3 × 3 filters with 1 × 1)

-

Decreases the number of input (decreases the number of input channels to 3 × 3 filters if layer has 3 × 3 filters so total parameters in layer is (number of input channels) × (number of filters) × (3 × 3)

-

Down Sampling (Down sample late in the networks do convolutional layers have large activation function maps.

When we use Fire modules so we set 1 × 1 to be less than (\(\hbox {e1}\times 1+\hbox {e}3 \times 3\)), so the squeeze layer helps to limit the number of input channels to the 3 × 3 filters.

Fire module is comprised of squeeze convolutional layer feeding into an expand layer and that has a mix of 1 × 1 and 3 × 3 convolution filters [104]

Squeeze Net is smaller network architecture that was designed as a more compact replacement for AlexNet and it perform 3 times faster and it is used in mage classification [104]

In left side begins with s single layer followed by 8 Fire module (fire 2–9) ending with final layer (Convolutional 10). Both complex and simple bypass connection yield an accuracy improvement over the vanilla Squeeze Net architecture. With using the fire module, we can reduce the size of model while maintaining the prediction the accuracy.

6.15 U-Net

U-Net is a convolutional neural network that was introduced for image segmentation in the biomedical field. It was introduced by Olag Ronneberger. Its architecture consist upon encoder and decoder. The encoder in its architecture deals with covenant layers that are followed by the pooling operation. The Decoder part in U-Net architecture uses transport convolutions for localization [105].

UNet is a CNN network architecture that expanded with few changes in CNN architecture. This model was invented to deal with biomedical field I this image can take input and this model target was not only classifying the image it can also identify the infection as well as infection location or area [105]

According to the image this model architecture looks like u and its justify the name. this model architecture consists of three section

-

Contraction

-

Bottleneck

-

Expansion section

Contraction section is made of many blocks and each block takes input applies × convolutional layers followed by a 2 × 2 max pooling. We can say that main part or heart of this architecture lies in expansion section this section was similar to the contraction like make block and each block several layers etc. But each block of feature mas used by convolutional layer get half to maintain the symmetry. Loss Calculation in UNet so it is the energy function that is computed by a pixel wise soft max over the final feature map combined with the cross entropy loss function. Image segmentation is important so UNet contributed significantly in such manner to help and solve the problem.

6.16 V-Net

V-Net (Volumetric-Convolutional Network) is a Convolutional network that was introduced medical image segmentation of 3D images. Before Vnet most of the bio medical image segmentation was done using 2D images. Vnet CNN model is trained on 3D MRI scans to perform image segmentation [106].

It is convolutional neural network architecture that can handle biomedical field and help out to perform the segmentation so it called volumetric network because detailed medical terms are not easy so experts required for detail which is high cost and automatic segmentation can help to reduce the cost in other words we can say that to achieve the high accuracy [106]

Left part of the architecture consists of the compression path and right part decompress the signal until its original size reached.

-

Left Side This side divided into different stages and every stage comprise one to three convolutional layers. Each stage residual function is learnt all layers connected and added to the last output convolutional layer of that stage in order to enable learning a residual function. Convolutional performed in each stage use volumetric kernels having size 5 × 5 × 5 voxels. (Voxels represent the value on s regular grid in 3d space). Output of the pooling layers needed for back propagation. Down sampling also perform because it helps to increase the respective field. In this architecture PReLU is used as activation function.

-

Right Side This side of network extract the features and expand the spatial of the lower resolution feature maps in degree to collect and generate the important information to output two channel V segmentation.

At each stage reverse of the convolutional layer perform deconvolution to increase the size of the input followed by one to three convolutional layers. Residual function learnt is similar to the left part. Last convolutional layer computed two feature maps having 1 × 1 × 1 kernel size and produce the outputs of the same size as input volume. These features maps are the probabilistic segmentation of foreground and background regions by applying soft max function voxel wise. Feature extracted from early stages of left part so that will have forwarded to the right part through this connection and this will help to provide location information to the right side so that’s cause improve or better the quality of the final contour prediction.

6.17 SegNet

SegNet is a convolutional network that was introduced by the researchers of the University of Cambridge. SegNet architecture consist of encoder and decoder. SegNet uses encoder and decoder based architecture for multiclass pixel-wise segmentation. SegNet performs very well in indoor scene understanding [107].

It is deep encoder and decoder architecture for multi class and in this we can segmentation pixel wise [107]

In encoder convolutional layers with batch normalization and a ReLU non-linearity followed by non-overlapping max pooling and subsampling in other words we can say that down sampling. In this network there are 13 convolutional layers from VGG-16. During the 2 × 2 max pooling corresponding max pooling locations can be stored. In the decoder convolutional as well as up sampling are performed in the end softmax classifier is present for each pixel. In above image show during the up sampling max poling indices the corresponding encoder layer then it is called up sampling. So ate end soft max classifier is present which can predict class for each pixel.

6.18 Unet++

Unet++ is the modified version of Unet CNN. It provides more accuracy with a series of nested, dense and skip pathways. The changes that are made in Unet to modify it into a Unet++ are redesigned skip pathways, dense skip connections, deep supervision [108].

Unet is a CNN architecture that expanded with few changes in the CNN. This architecture was invented to segment the bio medical images like not only classify the image it can also identify the infection as well as infection area [108]

Unet++ is uses the dense block and its differ from Unet with three ways:

-

CL (Convolutional layers) on skip pathways.

-

Dense skip connections on skip pathways.

-

Deep supervision which enables model pruning.

In first point or step which bridges the semantic gap between encoder and decoder and second point will tell improves the gradient flow third and last point tells achieve comparable performance to using only one loss layer. This network starts with encoder and decoder sub-network and backbone followed by a decoder sub network. There are re-designed skip pathways green and blue that connect the two sub networks and use of deep supervision (red). Above figure shows that how the feature map travel through top skip pathway of unet++. Each layer in convolutional is preceded by a concatenation layer that fuses the output from previous layer of the same dense block with corresponding up sample output.

6.19 FCNN

Fully convolutional neural network model is a deep learning model based on simple and classic convolutional neural network. It can take input in any arbitrary size. In Fully convolutional neural network there are no fully connected convolutional layers. FCNN has learning filters everywhere. FCNN also has filters in decision making layers.

6.20 Fast-RCNN

Fast-RCNN was introduced in 2015 by [109]. Fast-RCNN is used for faster object detection. Its architecture is pretty much similar to the RCNN but in Fast-RCNN we direct give input image to convolutional neural network to generate a convolutional feature map. The reason that the fast-RCNN is faster than the RCNN is that there is no need to give input a lot of region proposals to CNN every time.

6.21 Mask R-CNN

Mask R-CNN is used for instance segmentation. Mask R-CNN is formed on the base of Faster R-CNN. Mask R-CNN uses anchor boxes to detect multiple objects and overlapping objects in an image. This method increases its speed [110].

6.22 DeepLab

DeepLab was introduced in 2016 by Google. It is one of the best semantic segmentation models present. DeepLab has been modified and improved over the years and now there are many promising versions of DeepLab present [111]. The following are the versions of DeepLab:

-

1.

DeepLab-v2 DeepLabv2 was built on DeepLab it contained an atrous spatial pooling scheme. It consist of parallel dilated convolutions with different rates applied in the input feature map.

-

2.

DeepLabv3 DeepLabv3 was an improvement made over DeepLabv2, in this version the problem of segmenting objects at multiple scale was solved by designing and employing atrous in cascade to capture multiscale context by adopting multiple atrous rates.

-

3.

DeepLabv3+ Deeplabv3+ is an improvement over DeepLabv3+. In this version semantic labels are assigned to every pixel in the given input image.

6.23 RetinaNet

RetinaNet is used for one stage object detection models. RetinaNet provides good results in dense and small objects detection. RetinaNet is mostly used with aerial and satellite object imagery. RetinaNet is formed by making improvements in existing object detecting models which are Feature Pyramid networks and Focal Loss [112].

6.24 YOLO

YOLO (You only look once) is a network that is used for object detection in deep learning. YOLO is faster than its competition and is mostly used in real time object detection. YOLO does object detection by classifying different objects in the given frame [113]. Yolo has been modified year by year and has been converted into different versions:

-

1.

YOLOv4 YOLOv4 is an object detection network that can be operated on single GPU with a smaller mini batch size. YOLOv4 increases the speed of object detection for the systems with a single GPU.

-

2.

PP-YOLO PP-YOLO (Paddle Paddle-YOLO) is an improvement over YOLOv4 it has improved inference speed and mAP score.

Channel (Input) exploitation based CNNs The lack of variance and the absence of distinct information within the input might have an effect on CNN’s performance as an individual that’s why the concept of channel boosting was proposed to boost the representation of the networks.

Attention is the method that is used to copy cognitive attentions. This effect will magnify the important parts of input data and will fade out the all-other data.

7 Deep Learning for Medical Image Analysis and CAD