Abstract

Breast cancer remains one of the most prevalent cancers among women. In 2022, around 287,800 new cases were diagnosed, and about 43,200 women died of the disease. Analysis and processing of mammogram images are vital for early detection, which helps to reduce mortality and enables effective treatment. Accordingly, several deep-learning techniques have emerged for mammogram classification; however, the task remains challenging and calls for more reliable solutions. This study proposes an automated computer-aided framework for breast cancer classification. The work begins by enhancing mammogram contrast using a haze-reduced adaptive technique, followed by augmentation. Afterward, a pre-trained EfficientNet-B4 architecture is trained separately on the original and enhanced sets of mammograms using static hyperparameter initialization. This yields 1792 features per mammogram for each set, and the two feature sets are then fused using a serial mid-value-based approach. The fused feature vectors are optimized using a chaotic-crow-search optimization algorithm. Finally, the selected feature vectors are classified using machine learning algorithms. The evaluation is carried out on the INbreast and CBIS-DDSM databases. The proposed framework attained a balanced computation time with maximum classification accuracies of 98.459% and 96.175% on the INbreast and CBIS-DDSM databases, respectively.


1 Introduction

Breast cancer is among the deadliest disorders affecting women [1]. It first affects the tissues of the breast and can then spread to other areas of the body. As per a study by the World Health Organization (WHO), only around 8% are diagnosed and around 6% have passed away [2]. In addition, around 8% of females are affected by this cancer type at some point in their lives. In 2022, this type of cancer claimed around 43,000 lives [3]. Breast tumors are categorized as malignant or benign. Malignant tumors invade surrounding tissues, whereas benign tumors do not, so malignant tumors are the most hazardous to women's lives [4]. Many imaging modalities are available for tumor diagnosis. Among the available diagnostic methods, biopsy has proven effective owing to its good accuracy. At the same time, several studies insist that women should avoid multiple biopsies for further diagnosis and treatment [5]. Moreover, computer-aided diagnosis (CAD) assists early cancer diagnosis using ultrasound (US) breast images [6]. Breast ultrasound can act as a supplementary imaging tool that helps to discriminate between solid masses and fluid-filled cysts [6]. Its advantages are that it is non-invasive, involves no radiation exposure, and can guide clinicians during biopsy procedures.

Breast MRI is an imaging modality that uses powerful magnets and radio waves to generate breast images. It is recommended for patients at high risk of breast tumors [7]. In addition, positron emission tomography (PET) is employed to identify breast regions with increased metabolic activity, so it can be used for staging breast cancer and monitoring treatment response [41]. PET scanning relies on gamma rays for diagnosing breast tumors. Mammography, in turn, has become the most widely adopted approach for early tumor identification [7]. It examines the breast with a low X-ray dose, so mammogram images allow early tumor identification at low risk, and the disease can be tackled at an early stage [7]. On the other hand, the interpretation of mammograms by doctors can be unreliable owing to factors such as bias and fatigue, and the complex appearance of tumors in mammograms makes visual interpretation by clinicians difficult [7]. Mammographic procedures are classified into two categories: screening and diagnostic [40]. In screening mammography, mammograms are used to detect early signs of breast tumors (microcalcifications and masses) even before they are sensed by the patient. In diagnostic mammography, mammograms provide detailed information for accurate diagnosis once abnormalities are found [41]. Here, CAD systems are highly useful for better interpretation and subsequent classification. Accordingly, different artificial intelligence approaches have become predominant in detecting and classifying breast tumors over the past two decades.

1.1 Challenges and Objectives of the Research

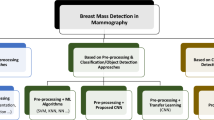

In medical imaging and analysis, and specifically in the classification of breast tumors, deep learning (DL) approaches face several challenges. The first is the limited availability of mammograms, since DL requires a large amount of input data for effective training and reliable results [8]. The second is feature engineering: the extracted deep features often contain considerable redundancy, which affects the overall classification performance and computation [9]. Accordingly, this work proposes a CAD framework that optimally fuses deep features from enhanced and original mammograms. The contributions of this work are:

- Two different mammographic databases are employed.
- An improved contrast enhancement technique, termed the haze-reduced adaptive technique (HRAT), is proposed.
- Mammogram classification is examined with and without contrast enhancement.
- Feature fusion using a serial mid-value-based (SMVB) approach is investigated for the features extracted through EfficientNet-B4.
- An improved Chaotic-Crow Search Optimization algorithm (CCSOA) is implemented for selecting the optimal feature set.

The points discussed above regarding the proposed work for the classification of breast tumors are illustrated in Fig. 1.

2 Background and Related Works

As discussed in the previous section, breast cancer has become the most prevalent cancer type. Globally, around 1.7 million cases among women were reported in 2012. Contributing factors include age, family history, and medical background [9]. Alarmingly, around 2 million new cases are anticipated every year. Around 60,000 women were endangered by cancer disorders, whereas, for breast cancer, 15% of women were subjected to mortality in 2018 [10]. Both the detection and classification of breast tumors often rely on CAD frameworks, and deep-learning (DL) approaches in particular are improving the performance of CAD tools. However, several challenges related to the appearance of tumors in mammogram images can lead to inaccurate predictions [11]. This makes it all the more important to use CAD frameworks with appropriate DL approaches for reliable breast cancer diagnosis.

Tan et al. [12] proposed a convolutional neural network (CNN)-based system to classify digital mammograms as benign, normal, or malignant. Their methodology involved preprocessing the mammogram images, followed by training a DL model for feature extraction. The features extracted from the final layer were then classified using a CNN model with the Softmax function. This framework substantially improved the accuracy of mammography image classification, and the reported results indicate that the model outperformed existing methods, achieving accuracies of 0.85 and 0.82, respectively. Falconí et al. [13] proposed an approach for classifying breast tumor cells using transfer learning (TL). The study explored multiple DL models and identified two top-performing architectures, MobileNet and ResNet50, which achieved classification accuracies of around 74% and 78%, respectively. In addition, the work applied different preprocessing methods to enhance the classification performance. Samee et al. [14] introduced a hybrid approach combining logistic regression (LR) and principal component analysis (PCA), evaluated on the mini-MIAS and INbreast databases. The hybrid approach attained an accuracy of around 98% on MIAS data and 98.6% on INbreast mammogram data.

Hekal et al. [15] introduced a CAD-based approach for classifying two types of mammographic tumors. An Otsu thresholding-based approach was employed to identify microcalcifications in mammogram images. Afterward, two deep CNNs (ResNet50 and AlexNet) were used to process the identified tumor-like regions and extract mammographic features. The work was evaluated on two distinct datasets and attained accuracies of 84% and 91%, respectively. Siddeeq et al. [16] proposed a customized neural network based on the ResNet architecture. The CAD model was evaluated on an unbalanced dataset after appropriate pyramid scaling and data augmentation. The results revealed that classification performance on INbreast data increases as the amount of training data increases.

Hikmah et al. [17] introduced a CAD approach for enhancing tumor classification based on multi-view screening. A texture-based technique was implemented to segment tumor regions in digital mammograms using a first-order local entropy function. The extracted features were then used to compute the area and radius of malignant regions. Detection accuracies of 80.5% for MLO views and 88% for CC views were obtained. Alruwaili et al. [18] presented a CAD-based approach that exploits transfer learning (TL). Different augmentations of the mammograms were applied to mitigate overfitting, and the study reported a substantial improvement in classification performance for tumor diagnosis. Almalki et al. [19] introduced a CAD-based approach that was evaluated on a larger set of mammographic inputs. The work involved several stages, including removal of noise and the pectoral region from mammogram samples, followed by contrast enhancement and segmentation to detect abnormal spots in the input samples. The work attained 92% accuracy in classifying the BIRADS database with multi-class targets. Several other DL-based studies on breast tumor problems are also available, including a CNN with fuzzy c-means and median support [20] and an optimized stacking learning model [21].

From the above discussion, it is evident that breast cancer classification demands reliable approaches to save human lives. In addition to the valuable approaches in the literature, two more steps are required to attain robust classification performance: contrast enhancement of mammograms and appropriate feature selection. The proposed work therefore applies mammogram contrast enhancement, and feature optimization after deep feature extraction. This reduces computational time, mitigates overfitting, and thereby improves the overall classification performance of the model.

3 Materials and Methods

This section describes the input databases, contrast enhancement, data augmentation, and the proposed framework for classifying microcalcifications in digital mammograms.

3.1 Mammogram Datasets

The research employed mammograms from two standard databases: INbreast [22] and CBIS-DDSM [23]. The first is a collection of full-field digital mammograms (FFDM). The second is a curated subset of the standard Digital Database for Screening Mammography (DDSM), focusing on several breast imaging samples. The FFDM samples of the INbreast data are found to be more informative than the mammogram samples of the DDSM data [24]. Several researchers have used these databases to evaluate the effectiveness of proposed CAD models, which has made them widely popular and standard for evaluation in the breast tumor research community [24]. A sample mammogram image from each of the INbreast and CBIS-DDSM databases is illustrated in Fig. 2.

3.2 Contrast Enhancement Using Haze-Reduced Adaptive Technique (HRAT)

Contrast enhancement of digital mammograms is highly desirable for the effective classification of breast tumors. The conventional haze-removal algorithm aims to produce higher-quality reconstructed images through a balanced adjustment of saturation and contrast [25]. In mammogram images, haze-reduction algorithms make lesions and microcalcifications clearly visible. Accordingly, the study proposes a contrast enhancement approach that combines haze removal [25] and adaptive global–local transformations [26], termed the Haze-Reduced Adaptive Technique (HRAT). The original and contrast-enhanced sample mammograms from the INbreast and CBIS-DDSM datasets are given in Fig. 3. The algorithmic description of HRAT is given next.

Algorithm for HRAT approach

Step 1: Load the mammogram input from the INbreast and CBIS-DDSM datasets.

Step 2: Haze removal based on the dark channel prior method

- Calculate the dark channel prior of the mammogram input using a window size for local analysis.
- For each pixel \((x, y)\) in the mammogram, compute the minimum value in the local patch \(\Omega (x)\) over all image channels.
- Take this minimum as the dark channel value \({\varvec{J}}(x, y)\) at pixel \((x, y)\), as given mathematically in Eq. (1).

$${\varvec{J}}(x, y) = \underset{c}{\text{min}}\left(\underset{\left({x}^{\prime},{y}^{\prime}\right)\in \boldsymbol{\Omega }\left(x\right)}{\text{min}}\; {\varvec{I}}(x^{\prime}, y^{\prime}, c)\right)$$(1)

In Eq. (1), \({\varvec{J}}(x, y)\) denotes the dark channel value at pixel \((x, y),\) \({\varvec{I}}(x^{\prime}, y^{\prime}, c)\) represents the intensity in channel \(c\) at pixel \((x^{\prime}, y^{\prime})\), and \(\boldsymbol{\Omega }(x)\) denotes a local patch centered at pixel \((x, y)\).

Step 3: Adaptive global–local transformation

- Enhance the contrast of the mammograms using an adaptive transformation.
- The study uses standard adaptive histogram equalization, as given in Eq. (2).

$${\varvec{T}}(I(x, y, c)) = {\text{AdEHist}}(I(x, y, c))$$(2)

In Eq. (2), \(T(I(x, y, c))\) denotes the enhanced pixel value at location \((x, y)\) in channel \(c\), and \({\text{AdEHist}}(I(x, y, c))\) denotes the adaptive histogram equalization operation.

Step 4: Integration of haze removal and adaptive transformation

- Adjust the mammogram contrast enhancement by controlling the contributions of haze removal and adaptive transformation using the parameter \(\alpha\).
- This is given mathematically in Eq. (3).

$${\varvec{E}}{\varvec{I}}(x, y, c) = \boldsymbol{\alpha } * {\varvec{J}}(x, y, c) + (1 - \boldsymbol{\alpha }) * {\varvec{T}}(I(x, y, c))$$(3)

In Eq. (3), \(EI(x, y, c)\) represents the enhanced pixel value at location \((x, y)\) in channel \(c\), and \(\alpha\) denotes the weighting coefficient for controlling the influence of haze removal.

Step 5: Repetition of the above steps for all pixels and channels of mammograms.
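
The steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: intensities are assumed to be scaled to [0, 1], the patch size and \(\alpha = 0.5\) are placeholder values (the text does not report them), and a simple tile-wise histogram equalization without interpolation stands in for the AdEHist operator of Eq. (2). All function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(img, patch=15):
    """Eq. (1): minimum over channels, then minimum over a local patch (odd size)."""
    if img.ndim == 2:                       # grayscale mammogram: add a channel axis
        img = img[..., None]
    min_c = img.min(axis=2)                 # minimum over channels c
    pad = patch // 2
    padded = np.pad(min_c, pad, mode="edge")
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))         # minimum over the patch Omega(x)


def tile_hist_eq(gray, tiles=4, bins=256):
    """ASSUMED stand-in for AdEHist in Eq. (2): equalize each tile independently."""
    out = np.empty(gray.shape, dtype=np.float64)
    rows = np.linspace(0, gray.shape[0], tiles + 1, dtype=int)
    cols = np.linspace(0, gray.shape[1], tiles + 1, dtype=int)
    for r0, r1 in zip(rows[:-1], rows[1:]):
        for c0, c1 in zip(cols[:-1], cols[1:]):
            tile = gray[r0:r1, c0:c1]
            hist, edges = np.histogram(tile, bins=bins, range=(0.0, 1.0))
            cdf = hist.cumsum() / max(tile.size, 1)
            # Map each intensity through the tile's cumulative distribution.
            out[r0:r1, c0:c1] = np.interp(tile.ravel(), edges[1:], cdf).reshape(tile.shape)
    return out


def hrat(gray, alpha=0.5, patch=15):
    """Eq. (3): EI = alpha * J + (1 - alpha) * T(I); alpha is an assumed value."""
    j = dark_channel(gray, patch)
    t = tile_hist_eq(gray)
    return np.clip(alpha * j + (1.0 - alpha) * t, 0.0, 1.0)
```

Setting `alpha` closer to 1 weights the dark-channel term more heavily, while values closer to 0 favour the equalized image.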

3.3 Augmentation of Mammograms

To generate more mammogram images from the data available in the INbreast and CBIS-DDSM datasets, the mammograms were rotated by 45, 90, 135, 180, 235, and 270 degrees and flipped horizontally and vertically. In this way, more mammogram data are generated for robust training of the employed deep-learning model. Table 1 summarizes the number of mammograms generated compared with the original mammogram datasets.
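
The augmentation described above can be sketched as follows. This is an illustrative sketch only: the rotation angles are taken as listed in the text, while nearest-neighbour sampling, zero fill outside the image, and the function names are assumptions rather than details reported by the authors.

```python
import numpy as np

ANGLES = (45, 90, 135, 180, 235, 270)   # rotation angles as listed in the text


def rotate_nn(img, angle_deg):
    """Rotate about the image centre with nearest-neighbour sampling (zero fill)."""
    h, w = img.shape[:2]
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: for each output pixel, locate the source pixel.
    ys = cy + (yy - cy) * np.cos(theta) - (xx - cx) * np.sin(theta)
    xs = cx + (yy - cy) * np.sin(theta) + (xx - cx) * np.cos(theta)
    yi, xi = np.rint(ys).astype(int), np.rint(xs).astype(int)
    valid = (yi >= 0) & (yi < h) & (xi >= 0) & (xi < w)
    out = np.zeros_like(img)
    out[valid] = img[yi[valid], xi[valid]]
    return out


def augment(img):
    """Return the rotated copies followed by the horizontal and vertical flips."""
    rotated = [rotate_nn(img, a) for a in ANGLES]
    return rotated + [np.fliplr(img), np.flipud(img)]
```

Each input mammogram yields eight additional images under this scheme: six rotations and two flips.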

3.4 EfficientNet-B4 Architecture

In deep transfer learning, researchers try to improve models by making them wider, deeper, or higher in resolution. However, such scaling can saturate the model for the classification problem and produce false results [27]. In EfficientNet architectures, width, resolution, and depth are all scaled in an effective and controlled manner to obtain better performance [28]. Specifically, this research employed the EfficientNet-B4 architecture [29] for breast tumor classification because it offers a good balance between model size and performance [29]. As illustrated in Fig. 4, MBConv denotes the mobile inverted bottleneck convolution block, which is an inverted residual block. It applies depthwise separable convolutions to its inputs, which allows more diverse features to be learned from the mammogram inputs [29]. Another advantage is that this learning requires fewer parameters and less computation. In this way, the MBConv blocks of the EfficientNet-B4 model provide a robust representation of feature vectors. As in Fig. 4, the feature maps are then passed to the subsequent global average pooling (GAP) block [30]. This reduces the spatial dimension and improves the global representation of the feature maps. This process is expressed mathematically in Eq. (4) [30].
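
Under the standard definition of global average pooling, and using the symbols defined for Eq. (4), the operation on a single feature map can be written as follows (a reconstruction from those definitions):

$${\text{GAP}}(x) = \frac{1}{H \times W}\sum_{i=1}^{H}\sum_{j=1}^{W} x(i, j)$$(4)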

In Eq. (4), \({\text{GAP}}(x)\) represents the global average pooling result, \(x(i, j)\) denotes the value of the feature map at position \((i, j)\), and \(H\) and \(W\) are the height and width of the feature maps, respectively. As shown in the architecture of Fig. 4, the GAP block follows the convolutional blocks. This helps the overall architecture by reducing the feature dimensions and capturing higher-level representations. The output of the fully connected layers serves as the deep features extracted from the mammogram images, with dimension \(N\times 1792\) for each mammogram database. A sample visualization of intermediate activations of the EfficientNet-B4 model for an input mammogram from the CBIS-DDSM database is illustrated in Fig. 5. The plot shows the intermediate activations of the 'block2a_expand_activation' layer of EfficientNet-B4. It indicates the depth of the learned representation, which yields robust feature vectors. In addition, Fig. 5 shows the significance of contrast in training deep learning models.

3.5 Serial Mid-Value Based (SMVB) Feature Fusion

Fusing feature vectors is a key factor in improving the quality of features used in many applications. At the same time, fusion is meaningless if the features are combined using invalid operations [31]. In medical imaging analysis, serial feature fusion is the approach most commonly used by researchers [32]. The technique is simple and powerful because the fused data holds all the feature vectors. On the other hand, the fused data contains both relevant and redundant vectors, which, for classification problems, increases the computational complexity and the chance of a higher error rate [32]. For this reason, the work employed a serial mid-value-based (SMVB) approach for improved fusion of vectors. This method uses a mid-value-based function to compute a central value from which efficient fused data are generated. Thus, the SMVB approach balances the fusion process against computational complexity and, in turn, enhances the quality of the fused features.

For each database, the feature matrices obtained from the original and enhanced mammograms using the EfficientNet-B4 architecture are \(N\times 1792\) and \(N\times 1792\), respectively. The serially fused vectors therefore have dimension \(N\times 3584\), as represented mathematically in Eq. (5).

In Eq. (5), \({f}_{1}\) and \({f}_{2}\) denote the feature vectors of the original and enhanced mammograms of the two datasets. Based on Eq. (5), the extracted features are combined for fusion. Next, as per the approach, the mid-value is computed from the fused feature vectors, as illustrated mathematically in Eq. (6).

Based on the calculation of Eq. (7), the obtained mid-value is passed to the threshold function to determine the final set of fused feature vectors, as given mathematically in Eq. (8).

In Eq. (8), \(T\) represents the threshold function and \({\text{Fu}}\left(.\right)\) is the fused feature vector of the INbreast database. In a similar way, the SMVB approach is applied to the CBIS-DDSM database following Eqs. (5) to (8). In this research, the dimensions of the final fused feature vectors are \(N\times 1906\) and \(N\times 1932\) for the INbreast and CBIS-DDSM databases, respectively.
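
The fusion pipeline described above (serial concatenation, a mid-value, then a threshold) can be sketched as follows. The concatenation follows the text directly; the per-feature score (standard deviation), the choice of the median as the mid-value, and the keep rule are assumptions made purely for illustration, since the exact functions of Eqs. (6) to (8) are not reproduced here. The function name is hypothetical.

```python
import numpy as np


def smvb_fuse(f1, f2):
    """Serial fusion followed by an ASSUMED mid-value threshold on feature scores."""
    fused = np.concatenate([f1, f2], axis=1)   # serial fusion: N x (d1 + d2)
    score = fused.std(axis=0)                  # ASSUMED per-feature relevance score
    mid = np.median(score)                     # ASSUMED mid-value (central value)
    keep = score >= mid                        # ASSUMED threshold rule
    return fused[:, keep], keep
```

With two \(N\times 1792\) inputs, the concatenated matrix is \(N\times 3584\) before thresholding. A median rule keeps about half of the columns, so the reported sizes of \(N\times 1906\) and \(N\times 1932\) imply a mid-value or threshold that differs from this placeholder.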

3.6 Chaotic-Crow-Search Optimization Algorithm (CCSOA) for Feature Selection

The crow-search algorithm, which is inspired by the behavior of crows, is used to solve various optimization problems [33]. In this research, the simple crow-search algorithm is combined with chaotic maps to select dominant features from the fused feature vectors described above. This improves the quality of the feature vectors by either maximizing or minimizing an objective function. For this, the algorithm employs two quantities, the fitness function (\(F\)) and the complexity penalty (\(P\)). The fitness function measures the quality of a feature subset. The complexity penalty penalizes feature subsets with a larger number of features. Together, the two quantities balance model performance against the number of selected features. The objective function (\(O\)) can be expressed mathematically as given in Eq. (9).

\(\lambda\) in Eq. (9) represents a trade-off parameter between model performance and simplicity. After trial-and-error experiments based on the accuracy of the KNN model, the value of \(\lambda\) was tuned to 0.15. In Python, this tuning was done using the NumPy function 'np.logspace(-3, 0, 10)', which creates an array of \(10\) candidate values for the trade-off parameter \(\lambda\), ranging between \(0.001\) and \(1.0\) on a logarithmic scale. Logarithmic exploration was chosen to cover a wide range of \(\lambda\) values while emphasizing smaller values in regulating the performance. As discussed above, the work used a chaotic map function to implement an efficient search for optimal solutions. In this work, CCSOA is used to obtain a significant set of features by maximizing the objective function.
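
The logarithmic grid and a trade-off objective of this kind can be sketched as follows. The call to `np.logspace` is the one quoted in the text; the subtractive form of the objective and the helper names `objective` and `pick_lambda` are assumptions for illustration, not the authors' definitions.

```python
import numpy as np

# Ten candidate values between 0.001 and 1.0, evenly spaced on a log scale.
lambdas = np.logspace(-3, 0, 10)


def objective(fitness, penalty, lam):
    """ASSUMED form of the trade-off: reward fitness F, penalise complexity P."""
    return fitness - lam * penalty


def pick_lambda(evaluate, grid=lambdas):
    """Return the grid value with the best score.

    `evaluate(lam)` is a hypothetical callback that runs feature selection with
    the given lambda and returns the resulting validation accuracy.
    """
    scores = [evaluate(lam) for lam in grid]
    return float(grid[int(np.argmax(scores))])
```

The grid points nearest to the reported value of 0.15 are 0.1 and approximately 0.215.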

The steps involved are given below:

- Initialization: CCSOA begins with an initial population of solutions. Each solution encodes a binary feature subset, in which \(1\) indicates that the feature is significant and \(0\) indicates that it is not.
- Evaluation of the objective function: the objective is evaluated for each solution in the population.
- Chaotic-map-based search: the employed chaotic maps (tent maps) [34] support the search process. Chaotic sequences are generated to update the solutions, which provides an effective exploration of the search space for the considered task.
- Social behavior of crows: as in the social behavior of crows, the solutions interact with each other. These interactions share information about fitness and features, which helps to generate better solutions.
- Update of solutions: the solutions are finally updated according to the chaotic sequences, fitness values, and information shared by other solutions. The process is repeated for \(20\) iterations.

During the feature selection process discussed above, the binary representation of the solutions yields the optimal features: features marked \(1\) are 'selected', while those marked \(0\) are 'not significant'. This is illustrated mathematically in Eq. (10).
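
Consistent with this description, and assuming \(n\) denotes the number of fused features, the binary solution can be written as:

$$S = \left[{s}_{1}, {s}_{2}, \ldots, {s}_{n}\right], \quad {s}_{i} \in \{0, 1\}$$(10)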

\(S\) in Eq. (10) represents the binary representation of a solution, and \({s}_{i}\) denotes a binary value indicating whether the \({i}\)th feature is selected. The fitness discussed above is given in Eq. (11).

The selected feature set is updated based on Eqs. (10) and (11). Thus, CCSOA-based feature selection explores the feature space iteratively, and the feature subset is generated by maximizing the objective function. In summary, CCSOA uses chaotic maps [34] and the social interactions of crows [33] to achieve effective feature selection. As given in Eq. (11), a simple but powerful K-Nearest Neighbor (KNN) [35] algorithm is adopted as the fitness function. The final selected features are then passed to the machine learning classifiers for further experimentation. The dimensions of the selected features are \(N\times 1157\) and \(N\times 1181\), which shows that the approach substantially reduces the feature vector size.
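
The selection loop described in this section can be sketched as follows. This is a simplified illustration, not the authors' CCSOA: the tent-map breakpoint, the awareness probability `ap`, the flight length `fl`, the population size, the 0.5 binarization threshold, the leave-one-out 1-NN fitness, and the subtractive objective are all assumptions; only the use of tent maps, a KNN-based fitness, \(\lambda = 0.15\), and 20 iterations come from the text.

```python
import numpy as np


def tent_map(x, mu=0.7):
    """Advance chaotic states by one tent-map step; mu is an assumed breakpoint."""
    x = np.where(x < mu, x / mu, (1.0 - x) / (1.0 - mu))
    return np.clip(x, 1e-6, 1.0 - 1e-6)     # keep states strictly inside (0, 1)


def knn_accuracy(X, y, mask):
    """Leave-one-out 1-NN accuracy on the selected columns (stand-in for F)."""
    if not mask.any():
        return 0.0
    Z = X[:, mask]
    d = ((Z[:, None, :] - Z[None, :, :]) ** 2).sum(axis=2)
    np.fill_diagonal(d, np.inf)             # a sample may not be its own neighbour
    return float((y[d.argmin(axis=1)] == y).mean())


def ccsoa_select(X, y, n_crows=8, n_iter=20, lam=0.15, ap=0.1, fl=2.0, seed=0):
    rng = np.random.default_rng(seed)
    dim = X.shape[1]
    pos = rng.random((n_crows, dim))                     # continuous positions
    chaos = rng.uniform(0.05, 0.95, size=(n_crows, dim)) # chaotic states

    def to_mask(p):
        return p > 0.5                                   # 1 = selected, 0 = not

    def objective(p):
        m = to_mask(p)
        return knn_accuracy(X, y, m) - lam * m.mean()    # ASSUMED: F - lambda * P

    mem = pos.copy()                                     # best position per crow
    mem_fit = np.array([objective(p) for p in mem])
    for _ in range(n_iter):
        chaos = tent_map(chaos)                          # chaotic numbers replace random draws
        for i in range(n_crows):
            j = rng.integers(n_crows)                    # crow i follows crow j
            if chaos[i, 0] >= ap:                        # crow j is unaware: move toward its memory
                new = pos[i] + chaos[i] * fl * (mem[j] - pos[i])
            else:                                        # crow j is aware: jump to a chaotic position
                new = chaos[i].copy()
            pos[i] = np.clip(new, 0.0, 1.0)
            fit = objective(pos[i])
            if fit > mem_fit[i]:                         # remember improvements only
                mem[i], mem_fit[i] = pos[i].copy(), fit
    best = int(mem_fit.argmax())
    return to_mask(mem[best]), float(mem_fit[best])
```

The returned boolean mask plays the role of \(S\): `X[:, mask]` gives the reduced feature matrix that would be passed on to the classifiers.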

4 Experimentation Results and Discussion

4.1 Experimental Setup

The experimental results obtained for the proposed CAD framework on two open mammographic datasets are presented here in detail. The evaluation uses the augmented INbreast and CBIS-DDSM databases with and without contrast enhancement. The mammograms are split 70:30 into training and testing sets, with 10-fold cross-validation. The EfficientNet-B4 model is fine-tuned with a learning rate of 0.004, a momentum of 0.7226, one hundred epochs, a batch size of 32, and stochastic gradient descent as the optimizer. In the testing phase, ten-fold cross-validation is performed for each experiment. The results are computed and presented for each database as follows: (i) classification using deep features extracted from the original database, (ii) classification using deep features extracted from the contrast-enhanced database, (iii) fusion of the deep features of the original and enhanced databases using the serial mid-value-based (SMVB) feature fusion approach, and (iv) feature selection using the Chaotic Crow-Search Optimization algorithm. The machine learning algorithms employed for classification are: ensemble subspace KNN (EKNN), fine KNN (FKNN), weighted KNN (WKNN) [36], linear SVM (LSVM), cubic SVM (CSVM), multi-kernel SVM (MKSVM), quadratic SVM (QSVM) [37], medium neural networks (MNN), wide neural networks (TNN), and bi-layered neural network (BLNN) [38]. All experiments were run on a computer with 16 GB of RAM, an 8 GB graphics card, and 2 TB of storage, running Windows 10 Pro with Python 3.6. The performance metrics employed are sensitivity (Sen), specificity (Spc), accuracy (Acc), precision (Prc), F1 score, and Kappa [39]. The mathematical equations of these metrics [40, 41] are given in Eqs. (12) to (17). In addition, the computation time is measured and compared across experiments.
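
The six metrics listed above follow from the entries of a binary confusion matrix. The sketch below uses their standard definitions, with Cohen's kappa for the Kappa score; the function name and the example counts are illustrative and are not taken from the paper's tables.

```python
def binary_metrics(tp, fn, fp, tn):
    """Standard binary metrics from confusion-matrix counts (positive = malignant)."""
    n = tp + fn + fp + tn
    sen = tp / (tp + fn)                    # sensitivity (recall)
    spc = tn / (tn + fp)                    # specificity
    acc = (tp + tn) / n                     # accuracy
    prc = tp / (tp + fp)                    # precision
    f1 = 2 * prc * sen / (prc + sen)        # harmonic mean of precision and recall
    # Cohen's kappa: observed agreement corrected for chance agreement p_e.
    p_e = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / n ** 2
    kappa = (acc - p_e) / (1 - p_e)
    return {"Sen": sen, "Spc": spc, "Acc": acc, "Prc": prc, "F1": f1, "Kappa": kappa}
```

For example, the hypothetical counts `tp=40, fn=10, fp=5, tn=45` give a sensitivity of 0.80, a specificity of 0.90, an accuracy of 0.85, and a kappa of 0.70.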

4.2 Results Using INbreast Database

(i) Classification results obtained for deep-extracted features of the original database

Table 2 provides the outcomes of the classifiers applied to the original INbreast data. In this experiment, the deep features are extracted through the EfficientNet-B4 architecture shown in Fig. 4. The table gives insight into the performance of the different classification algorithms. As Table 2 shows, the WKNN and EKNN algorithms perform better on this problem than FKNN. Among the neural network-based classifiers, the BLNN algorithm provided the best accuracy, 92.180%. The MKSVM algorithm is reported as the best performer, with a sensitivity of 89.88%, specificity of 92.11%, accuracy of 91.039%, precision of 91.29%, F1 score of 90.58%, Kappa of 0.820, and a computation time of around 31.64 s.

(ii) Classification results obtained for deep-extracted features of the contrast-enhanced data

Table 3 presents the results of the classification algorithms applied to the HRAT-based contrast-enhanced INbreast data using deep features extracted by the EfficientNet-B4 model. Overall, the results reveal that the classification algorithms, especially the MKSVM and BLNN models, achieved higher accuracy and precision, indicating robust performance on the contrast-enhanced INbreast data. The BLNN model achieved a sensitivity of 93.45%, specificity of 93.20%, accuracy of 93.322%, precision of 92.68%, F1 score of 93.06%, and Kappa of 0.866. The MKSVM model achieved a sensitivity of 93.45%, specificity of 93.75%, accuracy of 93.607%, precision of 93.23%, F1 score of 93.34%, and Kappa of 0.872, which indicates its superior performance in correctly classifying both positive and negative cases. Hence, the MKSVM algorithm achieved the highest accuracy and precision, with a computation time of around 12.87 s.

(iii) Classification results obtained for SMVB-fused features

Figure 6 shows the confusion matrix obtained using the WKNN algorithm applied to the SMVB-fused feature vectors of the INbreast database. Table 4 presents the results of this experiment for all classification algorithms. The results show that the classification algorithms, especially WKNN, MKSVM, and BLNN, achieved higher values of accuracy, precision, and F1 score. In particular, the WKNN algorithm achieved a sensitivity of 95.83%, specificity of 95.39%, accuracy of 95.605%, precision of 95.04%, F1 score of 95.44%, and Kappa score of 0.912. This indicates robust performance of the WKNN model in classifying INbreast data with features fused through the SMVB approach. However, the computation time increased substantially for all classification algorithms. The highest computation time, 55.97 s, was recorded for the FKNN algorithm. This is because the FKNN model makes finely detailed discriminations between benign and malignant mammogram cases, with the number of neighbours set to \(1\) and Euclidean distance used to find the nearest neighbours. The lowest computation time, 14.99 s, was attained by the CSVM classification model.

(iv) Classification results obtained for CCSOA-based feature selection approach

Figure 7 shows the confusion matrix obtained using the MKSVM algorithm with the CCSOA-based selected features of the INbreast database. Table 5 lists the results of this experimentation for all classification algorithms. The WKNN, MKSVM, and BLNN algorithms in particular achieved higher accuracy, precision, and F1 score. The MKSVM model outperformed the others with a sensitivity of 98.21%, specificity of 98.68%, accuracy of 98.459%, precision of 98.57%, F1 score of 98.39%, and Kappa of 0.969, with a computation time of 7.92 s.

The results of the four experimentations on the INbreast database are compared graphically in Fig. 8. The WKNN algorithm performed consistently, achieving high sensitivity, specificity, accuracy, and F1 score across multiple experimentations. However, the MKSVM algorithm gave the best performance overall, making it a robust model for accurate classification of breast tumors using the INbreast database. It is also noted that the TNN algorithm provided a decent classification performance, with an accuracy of 95.320% at very low computational complexity, as shown in Fig. 8. Thus, the proposed work with the MKSVM model offers a good balance between classification performance and computational complexity.
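The measures reported in these experiments (sensitivity, specificity, accuracy, precision, F1 score, and Kappa) can all be derived from the counts of a confusion matrix. The following is a minimal sketch, assuming a binary problem with malignant as the positive class and Kappa computed as Cohen's kappa; the function name `binary_metrics` and the example counts are hypothetical and are not taken from the paper's tables.

```python
def binary_metrics(tp, fn, fp, tn):
    """Compute common classification measures from confusion-matrix counts.

    tp/fn: positive cases predicted correctly/incorrectly.
    tn/fp: negative cases predicted correctly/incorrectly.
    """
    total = tp + fn + fp + tn
    sensitivity = tp / (tp + fn)   # true positive rate (recall)
    specificity = tn / (tn + fp)   # true negative rate
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)

    # Cohen's kappa: agreement beyond what chance alone would produce.
    p_chance = ((tp + fn) * (tp + fp) + (tn + fp) * (tn + fn)) / total**2
    kappa = (accuracy - p_chance) / (1 - p_chance)

    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "precision": precision,
        "f1": f1,
        "kappa": kappa,
    }


# Hypothetical counts, chosen only to illustrate the calculation.
for name, value in binary_metrics(tp=90, fn=10, fp=5, tn=95).items():
    print(f"{name}: {value:.4f}")
```

When the two classes are equally represented among both the true and the predicted labels, the chance agreement is 0.5 and Kappa reduces to twice the accuracy minus one.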

4.3 Results Using CBIS-DDSM Database

(i) Classification results obtained for deep-extracted features of the original database

Table 6 provides the outcomes of the classifiers applied to the original CBIS-DDSM data. In this experimentation, the deep features are extracted through the EfficientNet-B4 architecture as given in Fig. 4. As seen in Table 6, the EKNN, FKNN, and WKNN algorithms provided better sensitivity and accuracy, and the WKNN algorithm provided a balanced F1 score. The Support Vector Machine (SVM) variants (LSVM, QSVM, CSVM, MKSVM) and neural network variants (MNN, TNN, BLNN) consistently showed balanced performance in sensitivity, specificity, and accuracy. The EKNN algorithm provided the best classification results, with a sensitivity (Sen) of 91.84%, specificity (Spc) of 90.14%, accuracy (Acc) of 91.046%, precision (Prc) of 91.42%, F1 score of 91.63%, and Kappa of 0.820. On the other hand, the EKNN model had a higher computational complexity than the other models in this experimentation.

(ii) Classification results obtained for deep-extracted features of the contrast-enhanced data

Table 7 presents the results of the classification algorithms applied to the HRAT (Haze Reduced Adaptive Technique) based contrast-enhanced CBIS-DDSM data using deep features extracted by the EfficientNet-B4 model. Overall, the EKNN, FKNN, and WKNN algorithms provided better sensitivity and accuracy, with EKNN providing the highest sensitivity. The Support Vector Machine (SVM) models (LSVM, QSVM, CSVM, MKSVM) and neural network models (MNN, TNN, BLNN) consistently showed balanced performance in terms of sensitivity, specificity, and accuracy. The EKNN model provided the best results, with a sensitivity (Sen) of 93.01%, specificity (Spc) of 91.56%, accuracy (Acc) of 92.337%, precision (Prc) of 92.65%, F1 score of 92.83%, and Kappa of 0.846. Its high sensitivity and specificity result in a balanced accuracy. However, the model had a longer runtime of 596.32 s.

(iii) Classification results obtained for SMVB-fused features

Figure 9 shows the confusion matrix obtained using the MKSVM algorithm with the SMVB-based fused features of the CBIS-DDSM database. Table 8 lists the results of this experimentation for all classification algorithms. The results revealed that the EKNN model provided the highest sensitivity and accuracy but had a considerably longer runtime. On the other hand, the FKNN, WKNN, QSVM, and CSVM algorithms consistently showed balanced performance in sensitivity, specificity, and accuracy. The MKSVM algorithm, in turn, provided a sensitivity of 94.78%, specificity of 93.13%, accuracy of 94.012%, precision of 94.04%, F1 score of 94.41%, and Kappa of 0.880. Thus, the MKSVM model provided robust performance with higher sensitivity and accuracy, although it took 139.96 s to execute the classification task.

(iv) Classification results obtained for CCSOA-based feature selection approach

Figure 10 shows the confusion matrix obtained using the EKNN algorithm with the CCSOA-based selected features of the CBIS-DDSM database. Table 9 lists the results of this experimentation for all classification algorithms. The EKNN and MKSVM algorithms in particular achieved the highest sensitivity, specificity, and accuracy. It is also noted that the FKNN, WKNN, QSVM, CSVM, and MNN algorithms yielded balanced performance with good accuracy and F1 score. The EKNN algorithm provided a sensitivity (Sen) of 96.81%, specificity (Spc) of 95.45%, accuracy (Acc) of 96.175%, precision (Prc) of 96.05%, F1 score of 96.43%, and Kappa of 0.923. Thus, the EKNN model yielded the highest sensitivity and accuracy, indicating excellent performance in identifying positive cases. The EKNN algorithm took a moderate runtime of 165.74 s.

The results of the four experimentations on the CBIS-DDSM database are compared graphically in Fig. 11. From this plot, the EKNN algorithm consistently provided higher sensitivity and accuracy, which makes it a robust performer in the classification of the CBIS-DDSM database. It is also noted that the WKNN algorithm consistently attained the highest sensitivity and accuracy, indicating the effectiveness of SMVB-based feature fusion and CCSOA-based feature selection. Moreover, the FKNN, LSVM, and QSVM models consistently delivered balanced performance across the experimentations. The HRAT-based contrast enhancement (Table 7) and SMVB-based feature fusion (Table 8) improved sensitivity and accuracy, particularly for the WKNN algorithm. The CCSOA-based feature selection (Table 9) further enhanced sensitivity and accuracy for the WKNN and EKNN models. As a final point, for the CBIS-DDSM dataset of mammograms, the MKSVM algorithm consistently achieved higher sensitivity and accuracy with reasonable runtimes, as given in Fig. 11.

4.4 Comparison with State-of-the-Art Approaches

Finally, the proposed framework is compared against existing research studies, and the summary is listed in Table 10. The comparison shows that the proposed framework provided a robust performance in classifying breast tumors, as also illustrated in the plots of Figs. 8 and 11.

5 Conclusion and Future Work

The paper proposed a new framework applied to digital mammograms taken from two public databases, namely INbreast and CBIS-DDSM. The proposed approach comprises several experimentations, starting with database selection and ending with the analysis of classification performance. First, contrast enhancement is carried out on the mammograms. The EfficientNet-B4 pretrained architecture is then trained on the contrast-enhanced mammograms, and the outcomes are compared with those of the same experimentation on the original mammogram data. This revealed that the experimentation using contrast-enhanced mammograms performs better. To improve the performance further, serial mid-value-based fusion is employed, which results in substantially improved performance. However, this improvement comes with increased computation time. Chaotic Crow-Search optimization is therefore applied to select significant features, which enhances the classification in terms of both accuracy and computation. Finally, the proposed framework attained maximum classification accuracies of 98.459% (MKSVM algorithm) and 96.175% (EKNN algorithm) with better computation time on the INbreast and CBIS-DDSM databases, respectively. Future work is directed towards segmenting tumor regions before enhancement and employing breast ultrasound clinical images with U-Net variant architectures to build a robust CAD framework for breast cancer diagnosis.
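The fusion and selection stages summarized above can be pictured with a small sketch. It covers only the serial (end-to-end) joining of two feature vectors and the retention of a subset through a binary mask. The mid-value-based step of the fusion and the Chaotic Crow-Search optimization that would produce the mask are not reproduced here. The function names, the toy vectors, and the hand-written mask are all hypothetical.

```python
def serial_fuse(original_features, enhanced_features):
    """Join two feature vectors end to end (serial fusion)."""
    return list(original_features) + list(enhanced_features)


def select_features(features, mask):
    """Keep only the features whose mask entry is 1."""
    if len(features) != len(mask):
        raise ValueError("mask must have one entry per feature")
    return [value for value, keep in zip(features, mask) if keep]


# Hypothetical toy vectors standing in for the two deep-feature sets.
original = [0.12, 0.55, 0.31]
enhanced = [0.80, 0.07, 0.44]

fused = serial_fuse(original, enhanced)       # length 3 + 3 = 6
mask = [1, 0, 1, 1, 0, 0]                     # placeholder for an optimizer's output
selected = select_features(fused, mask)

print(len(fused), selected)
```

Serial fusion doubles the length of the feature vector, which is consistent with the longer computation times reported for the fused features, while the selection step shortens the vector again before classification.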

Data Availability

Data used for the findings will be shared by the corresponding author upon request.

References

Ali Salman, R.: Prevalence of women breast cancer. Cell. Mol. Biomed. Rep. 3(4), 185–196 (2023)

Trieu, P.D.Y., Mello-Thoms, C.R., Barron, M.L., Lewis, S.J.: Look how far we have come: BREAST cancer detection education on the international stage. Front. Oncol. 12, 1023714 (2023)

Arnold, M., Morgan, E., Rumgay, H., Mafra, A., Singh, D., Laversanne, M., Vignat, J., et al.: Current and future burden of breast cancer: global statistics for 2020 and 2040. Breast 66, 15–23 (2022)

Acs, B., Leung, S.C.Y., Kidwell, K.M., Arun, I., Augulis, R., Badve, S.S., Bai, Y., et al.: Systematically higher Ki67 scores on core biopsy samples compared to corresponding resection specimen in breast cancer: a multi-operator and multi-institutional study. Mod. Pathol. 35(10), 1362–1369 (2022)

Sannasi Chakravarthy, S.R., Rajaguru, H.: SKMAT-U-Net architecture for breast mass segmentation. Int. J. Imaging Syst. Technol. 32(6), 1880–1888 (2022)

SR, S.C., Rajaguru, H.: A systematic review on screening, examining and classification of breast cancer. In: 2021 Smart Technologies, Communication and Robotics (STCR), pp. 1–4 (2021)

Bai, J., Posner, R., Wang, T., Yang, C., Nabavi, S.: Applying deep learning in digital breast tomosynthesis for automatic breast cancer detection: a review. Med. Image Anal. 71, 102049 (2021)

Samieinasab, M., Torabzadeh, S.A., Behnam, A., Aghsami, A., Jolai, F.: Meta-health stack: a new approach for breast cancer prediction. Healthc. Anal. 2, 100010 (2022)

Nardin, S., Mora, E., Varughese, F.M., Davanzo, F., Vachanaram, A.R., Rossi, V., Saggia, C., Rubinelli, S., Gennari, A.: Breast cancer survivorship, quality of life, and late toxicities. Front. Oncol. 10, 864 (2020)

Bray, F., Ferlay, J., Soerjomataram, I., Siegel, R.L., Torre, L.A., Jemal, A.: Global cancer statistics 2018: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 68(6), 394–424 (2018)

Dar, R.A., Rasool, M., Assad, A.: Breast cancer detection using deep learning: datasets, methods, and challenges ahead. Comput. Biol. Med. 149, 106073 (2022)

Tan, Y.J., Sim, K.S., Ting, F.F.: Breast cancer detection using convolutional neural networks for mammogram imaging system. In: 2017 International Conference on Robotics, Automation and Sciences (ICORAS), pp. 1–5. IEEE (2017)

Falconí, L.G., Pérez, M., Aguilar, W.G.: Transfer learning in breast mammogram abnormalities classification with MobileNet and NASNet. In: 2019 International Conference on Systems, Signals and Image Processing (IWSSIP), pp. 109–114. IEEE (2019)

Samee, N.A., Alhussan, A.A., Ghoneim, V.F., Atteia, G., Alkanhel, R., Al-Antari, M.A., Kadah, Y.M.: A hybrid deep transfer learning of CNN-based LR-PCA for breast lesion diagnosis via medical breast mammograms. Sensors 22(13), 4938 (2022)

Hekal, A.A., Elnakib, A., Moustafa, H.E.-D.: Automated early breast cancer detection and classification system. SIViP 15, 1497–1505 (2021)

Siddeeq, S., Li, J., Bhatti, H.M.A., Manzoor, A., Malhi, U.S.: Deep learning RN-BCNN model for breast cancer BI-RADS classification. In: Proceedings of the 2021 4th International Conference on Image and Graphics Processing, pp. 219–225. (2021)

Hikmah, N.F., Sardjono, T.A., Mertiana, W.D., Firdi, N.P., Purwitasari, D.: An image processing framework for breast cancer detection using multi-view mammographic images. EMITTER Int. J. Eng. Technol. 136–152 (2022)

Alruwaili, M., Gouda, W.: Automated breast cancer detection models based on transfer learning. Sensors 22(3), 876 (2022)

Almalki, Y.E., Soomro, T.A., Irfan, M., Alduraibi, S.K., Ali, A.: Computerized analysis of mammogram images for early detection of breast cancer. In: Healthcare, vol. 10, no. 5, p. 801. MDPI (2022)

Girija, O.K., Elayidom, S.: Mammogram pectoral muscle removal using fuzzy C-means ROI clustering and MS-CNN based multi classification. Optik 170465 (2022)

Sannasi Chakravarthy, S.R., Rajaguru, H.: Performance analysis of ensemble classifiers and a two-level classifier in the classification of severity in digital mammograms. Soft. Comput. 26(22), 12741–12760 (2022)

Moreira, I.C., Amaral, I., Domingues, I., Cardoso, A., Cardoso, M.J., Cardoso, J.S.: Inbreast: toward a full-field digital mammographic database. Acad. Radiol. 19(2), 236–248 (2012)

Lee, R.S., Gimenez, F., Hoogi, A., Miyake, K.K., Gorovoy, M., Rubin, D.L.: A curated mammography data set for use in computer-aided detection and diagnosis research. Sci. Data 4(1), 1–9 (2017)

Sannasi Chakravarthy, S.R., Bharanidharan, N., Rajaguru, H.: Multi-deep CNN based experimentations for early diagnosis of breast cancer. IETE J. Res. 1–16 (2022)

Huang, S., Liu, Y., Wang, Y., Wang, Z., Guo, J.: A new haze removal algorithm for single urban remote sensing image. IEEE Access 8, 100870–100889 (2020)

Chakravarthy, S.R.S., Bharanidharan, N., Rajaguru, H.: Processing of digital mammogram images using optimized ELM with deep transfer learning for breast cancer diagnosis. Multimed. Tools Appl. 1–25 (2023)

Chakravarthy, S.R.S., Rajaguru, H.: Automatic detection and classification of mammograms using improved extreme learning machine with deep learning. IRBM 43(1), 49–61 (2022)

Tan, M., Le, Q.: Efficientnet: rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning, pp. 6105–6114. PMLR (2019)

Zhang, P., Yang, L., Li, D.: EfficientNet-B4-Ranger: a novel method for greenhouse cucumber disease recognition under natural complex environment. Comput. Electron. Agric. 176, 105652 (2020)

Espejo-Garcia, B., Malounas, I., Mylonas, N., Kasimati, A., Fountas, S.: Using EfficientNet and transfer learning for image-based diagnosis of nutrient deficiencies. Comput. Electron. Agric. 196, 106868 (2022)

Meng, T., Jing, X., Yan, Z., Pedrycz, W.: A survey on machine learning for data fusion. Inf. Fusion 57, 115–129 (2020)

Khan, S., Khan, M.A., Alhaisoni, M., Tariq, U., Yong, H.-S., Armghan, A., Alenezi, F.: Human action recognition: a paradigm of best deep learning features selection and serial based extended fusion. Sensors 21(23), 7941 (2021)

Askarzadeh, A.: A novel metaheuristic method for solving constrained engineering optimization problems: crow search algorithm. Comput. Struct. 169, 1–12 (2016)

Naskar, P.K., Bhattacharyya, S., Nandy, D., Chaudhuri, A.: A robust image encryption scheme using chaotic tent map and cellular automata. Nonlinear Dyn. 100, 2877–2898 (2020)

Rajaguru, H., Sannasi Chakravarthy, S.R.: Analysis of decision tree and k-nearest neighbor algorithm in the classification of breast cancer. Asian Pac. J. Cancer Prev. APJCP 20(12), 3777 (2019)

Uddin, S., Haque, I., Lu, H., Moni, M.A., Gide, E.: Comparative performance analysis of K-nearest neighbour (KNN) algorithm and its different variants for disease prediction. Sci. Rep. 12(1), 6256 (2022)

Kumar, B., Vyas, O.P., Vyas, R.: A comprehensive review on the variants of support vector machines. Mod. Phys. Lett. B 33(25), 1950303 (2019)

Farizawani, A.G., Puteh, M., Marina, Y., Rivaie, A.: A review of artificial neural network learning rule based on multiple variant of conjugate gradient approaches. J. Phys. Conf. Ser. 1529(2), 022040 (2020)

Sannasi Chakravarthy, S.R., Rajaguru, H.: Detection and classification of microcalcification from digital mammograms with firefly algorithm, extreme learning machine and non-linear regression models: a comparison. Int. J. Imaging Syst. Technol. 30(1), 126–146 (2020)

He, Z., Lin, M., Xu, Z., Yao, Z., Chen, H., Alhudhaif, A., Alenezi, F.: Deconv-transformer (DecT): a histopathological image classification model for breast cancer based on color deconvolution and transformer architecture. Inf. Sci. 608, 1093–1112 (2022)

Fu, C., Wu, Z., Chang, W., Lin, M.: Cross-domain decision making based on criterion weights and risk attitudes for the diagnosis of breast lesions. Artif. Intell. Rev. 1–29 (2023)

Surendiran, B., Ramanathan, P., Vadivel, A.: Effect of BIRADS shape descriptors on breast cancer analysis. Int. J. Med. Eng. Inform. 7(1), 65–79 (2015)

Ragab, D.A., Sharkas, M., Marshall, S., Ren, J.: Breast cancer detection using deep convolutional neural networks and support vector machines. PeerJ 7, e6201 (2019)

Falconí, L., Pérez, M., Aguilar, W., Conci, A.: Transfer learning and fine tuning in mammogram bi-rads classification. In: 2020 IEEE 33rd International Symposium on Computer-Based Medical Systems (CBMS), pp. 475–480. IEEE (2020)

Mohiyuddin, A., Basharat, A., Ghani, U., Peter, V., Abbas, S., Naeem, O.B., Rizwan, M.: Breast tumor detection and classification in mammogram images using modified YOLOv5 network. Comput. Math. Methods Med. 2022, 1–16 (2022)

Li, H., Niu, J., Li, D., Zhang, C.: Classification of breast mass in two-view mammograms via deep learning. IET Image Proc. 15(2), 454–467 (2021)

El Houby, E.M.F., Yassin, N.I.R.: Malignant and nonmalignant classification of breast lesions in mammograms using convolutional neural networks. Biomed. Signal Process. Control 70, 102954 (2021)

Muduli, D., Dash, R., Majhi, B.: Automated diagnosis of breast cancer using multi-modal datasets: a deep convolution neural network based approach. Biomed. Signal Process. Control 71, 102825 (2022)

Baccouche, A., Garcia-Zapirain, B., Elmaghraby, A.S.: An integrated framework for breast mass classification and diagnosis using stacked ensemble of residual neural networks. Sci. Rep. 12(1), 12259 (2022)

Funding

This research received no external funding.

Author information

Contributions

SC and BN took care of the review of literature and methodology. VKV and TRM have done the formal analysis, data collection and investigation. JRA has done the initial drafting and statistical analysis. VKV, RS and TRM have supervised the overall project. All the authors of the article have read and approved the final article.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable, as the work is carried out on publicly available datasets.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chakravarthy, S., Nagarajan, B., Kumar, V.V. et al. Breast Tumor Classification with Enhanced Transfer Learning Features and Selection Using Chaotic Map-Based Optimization. Int J Comput Intell Syst 17, 18 (2024). https://doi.org/10.1007/s44196-024-00409-8
