Abstract

This article presents a perspective that the interplay between high-level ethical principles, ethical praxis, plans, situated actions, and procedural norms influences ethical AI practices. This is grounded in six case studies, drawn from fifty interviews with stakeholders involved in AI governance in Russia. Each case study focuses on a different ethical principle—privacy, fairness, transparency, human oversight, social impact, and accuracy. The paper proposes a feedback loop that emerges from human-AI interactions. This loop begins with the operationalization of high-level ethical principles at the company level into ethical praxis, and plans derived from it. However, real-world implementation introduces situated actions—unforeseen events that challenge the original plans. These turn into procedural norms via routinization and feed back into the understanding of operationalized ethical principles. This feedback loop serves as an informal regulatory mechanism, refining ethical praxis based on contextual experiences. The study underscores the importance of bottom-up experiences in shaping AI's ethical boundaries and calls for policies that acknowledge both high-level principles and emerging micro-level norms. This approach can foster responsive AI governance, rooted in both ethical principles and real-world experiences.

1 Introduction

Research in Human-AI Interaction (HAII) often investigates the distinct ways individuals perceive interactions with humans vis-à-vis computers [28, 31]. Since the Turing test (Turing 1950), this has been a topic of debate. Contemporary HAII studies suggest that humans perceive interactions with AI systems differently from human-to-human interactions, with a discernible preference for the latter [1, 27]. However, the HAII process is often overlooked in discussions about AI governance, as ethical and policy frameworks tend to neglect such ground-level issues. This article underscores the significance of individual-level interactions with AI in the development of comprehensive governance approaches by integrating micro-level situated interactions into wider normative frameworks.

While there is a considerable amount of academic research examining AI technology governance at a macro scale, focusing on high-level ethical principles [33], the everyday contextual experiences that shape AI use and perception often garner less attention. This paper delves into how, in the absence of clear-cut policy and regulatory guidance for AI systems, informal norms emerge from the HAII process. These norms, influenced by specific ethical values, are shaped by context-specific actions in real-world settings. This process can be understood through a five-part conceptual model: international, national, or industry-level ethical principles; company-level ethical praxis (operationalization of ethical principles); plans; situated actions; and procedural norms.

The discussion is structured as follows. A literature review first provides an overview of previous research on HAII and AI governance. The next section explains the methodology used for data collection and analysis, followed by case studies illustrating the emergence of informal norms in HAII. Subsequent sections present the conceptual framework, which is derived from the inductive analysis of the data, and the conclusion summarizes the findings and their implications.

2 Literature review

Policy documents for AI often argue that there is a need to embed ethical principles into the technology's design [45], utilizing a variety of technical and organizational methods. These methods may be instituted by the developers [2] or by including end-users in the decision-making process to define the ethical criteria in the technology's design [30].

Much like other academic literature addressing AI governance issues, the literature on HAII governance extensively explores various normative principles that could shape the HAII process. These principles often encompass explainability and interpretability [5, 9, 16, 43], transparency [16, 51], usability [34, 48], human-in-the-loop considerations [3, 10, 19, 53], and human-centered AI [12, 26, 40].

However, the mere existence of ethical principles does not guarantee their successful integration into the technology's design [23, 25]. Early evidence indicates the prevalence of 'ethics washing' practices [37], and corporate lobbying for self-regulatory regimes is a common occurrence [22].

The high-level ethical principles articulated in policy documents are seen as disconnected from actual industry practices, and the development of tangible governance mechanisms trails behind [20, 23, 25, 29]. Consequently, the academic literature often focuses on case studies where macro-ethical issues arise, such as companies breaching high-level principles in ways that lead to theft, harm, or privacy violations. However, these investigations seldom delve into micro-ethical issues, that is, how individuals respond to ethical dilemmas [4].

While a growing body of research addresses which ethical principles AI systems need and how these principles can be translated into practice, little empirical, highly contextual research examines the issues that emerge once the systems are implemented and interact with people in everyday working settings [6]. This highlights a significant gap between research on principles and empirical investigation of industrial practices [24]. Research in the latter domain indicates that existing ethical metrics, regardless of their technological efficacy, are often met with skepticism in practice [15].

The challenges inherent in the process of HAII can be uniquely different from human interactions with other technologies, given the high degree of uncertainty surrounding the capabilities of AI systems once implemented. These systems can produce increasingly complex outputs due to their adaptive nature [52]. Moreover, the continuous learning and evolving behavior of AI systems render HAII a complex socio-technical mechanism with a feedback loop between the system and its operators [8]. Notably, not all AI systems necessitate human involvement in the decision-making loop. Some systems can fully automate specific activities or form hybrid decision-making structures, where artificial and human agents collectively make decisions [11, 49].

The reality of interacting with AI systems frequently deviates from the original design intent. The interface between AI systems and their human operators necessitates further study, regardless of whether it involves the translation of ethical principles in highly contextual environments [23, 25] or the deployment of systems without overt ethical considerations. As ethical controversies in AI companies multiply and signs of employee pushback become increasingly evident, studies suggest that while institutional pressures shape how AI companies tackle ethical dilemmas, they do not solely determine how they can be resolved [50]. A significant portion of these dilemmas is tackled at the individual level rather than the organizational one.

Yet, this micro-social dimension of the socio-technical apparatus frequently gets sidetracked in academic discussions. This holds true even as ethical concerns converge across diverse AI application fields [36], underscoring the urgent need for more holistic research approaches that fully acknowledge the intricate dynamics of AI systems in contextual settings.

This paper addresses this gap in the literature. Rather than examining how high-level ethical principles can be translated into practice in a top-down manner [21], it investigates how individuals interact with AI systems in their everyday work and what kind of bottom-up practices emerge from these interactions [7]. The actual problems that AI users face in their work differ drastically from how AI systems are problematized in policy documents, because practitioners do “not consider ethics and, more specifically, ethical principles to be genuine responses to the actual problems that they were facing ‘on the ground’” [17].

This issue is approached using the concepts of plans and situated actions: plans can be interpreted as the intended functioning of the machines by design, while situated actions represent how these machines are employed in reality [42]. When applied to empirical cases, this distinction reveals that certain practices stemming from contextual experiences are turned into procedural norms (informal rules) over time by AI practitioners and operate as a substitute for abstract ethical principles that often bear little relevance to ground-level practices.

3 Data and methodology

The project was approved by the Human Research Ethics Committee of the Hong Kong University of Science and Technology with Protocol Number: HREP-2021–0071. The project is based on 50 interviews with individuals from AI companies of various sizes, and representatives from the academic and public sectors who are immersed in AI governance and regulatory issues within Russia (see Table 1). The respondents were sent an email invitation to participate in the interview based on online information about their companies. Additionally, a snowballing strategy was utilized to interview other participants with relevant experience. The main areas of focus during these interviews were the difficulties encountered in governing AI, the operationalization of AI ethics in practice, and the broader regulatory obstacles associated with this technology. Field research was carried out in Russia during the months of January and February 2022, with all personal and company data anonymized to guarantee participant confidentiality. Each semi-structured interview lasted between 60 and 90 minutes.

The interviews were recorded and later transcribed into text documents. By utilizing the grounded theory approach [14], the texts were subjected to inductive coding using NVivo software. Inductive coding was implemented as a systematic process to distill raw interview data into meaningful themes without preconceived categories. Initially, the interview transcripts were thoroughly read multiple times to gain a deep understanding of the content. Using NVivo software, the codes were extracted directly from the participants' language, capturing the essence of their experiences and perspectives. These codes—980 in total—were identified organically.

As the research unfolded, the analysis of interview transcripts led to the identification of a recurring pattern of codes related to plans and situated actions. Six representative case studies related to this issue were chosen for further analysis. These case studies were not preconceived, but rather emerged from the data, reflecting the grounded theory approach [14]. This methodology allows for theories to be developed based on the data, rather than trying to fit the data into existing theories.

These instances were unique in their ability to distinctly highlight the practical application of AI ethics in the face of real-world AI development challenges. They also provided insight into the gap between high-level ethical principles and the realities faced by AI companies. These six instances, therefore, were chosen as case studies because they each encapsulated a unique aspect of the broader themes that emerged from the analysis.

It was observed that each of the case studies describes a certain interplay between the operationalization of ethical principles and using AI technologies in practice. This allowed for the creation of a conceptual framework, which is derived from the data. The framework highlights that ethical principles often exist at a level of abstraction that leaves too much room for superficial operationalization. As such, companies create their own organizational and technical interpretations for each of these principles (praxis). Based on such an operationalized understanding (for example, of the transparency principle), they adjust their work to align with it.

This praxis dictates the development of plans for the technology, where developers strive to ensure that the way they design a technological solution and the way they envision its usage are in line with the ethical praxis.

Situated actions are the real-world behaviors and decisions that occur when individuals interact with AI. These actions are influenced by the context in which the AI is used. It is within these contexts that the best-laid plans encounter reality, leading to moments of compliance, conflict, or compromise.

Over time, the repeated situated actions give rise to procedural norms. These are the established ways of interacting with AI that are recognized and shared among users. They can be thought of as the unwritten rules that govern AI interactions, filling the gaps left by formal regulations. These norms are not static; they evolve as the collective experience with AI grows, leading to a continuous feedback loop to the ethical praxis at the company level.

In the next section, each of the six case studies will be analyzed, showing how they relate to the conceptual framework, which will be introduced in more detail in a subsequent section.

4 Case studies

This section introduces six case studies, each related to one of the high-level ethical principles associated with the governance of AI. Each case study follows the same structure: the background and summary of the case are introduced first, followed by quotes from interview respondents relevant to the case. The case is then analyzed through four categories: ethical praxis, plans, situated actions, and procedural norms.

4.1 Privacy

A company was developing facial recognition software for potential implementation at an automobile showroom. The client's objective was to identify returning customers as they entered the showroom, display their name and purchase history, and enable staff to provide personalized service. However, this seemingly innocuous request had far-reaching privacy implications, as revealed when the system inadvertently disclosed sensitive personal information, leading to a privacy scandal.

While this request treads a fine line in terms of privacy protection, it is not explicitly unethical. However, a single representative situated action altered the company's approach to such requests in the future. This event is described in the quote that follows.

“Ivan Ivanovich – the director of a company, a wealthy man – came with a family to choose a new car. Toyota Land Cruiser. He comes (to the showroom), and the sales manager comes to him and says that Ivan Ivanovich bought a Toyota Yaris three months ago, asking whether he is satisfied with the car. Then Ivan Ivanovich’s wife asks what kind of Toyota Yaris he bought. Because the Toyota Yaris was bought for his mistress, as it often happens with such Ivan Ivanovichs”. Company CEO

According to the CEO, this situation made the company that developed this solution rethink its attitude toward privacy issues. Before the incident, technological capacities were the only limit to the kind of services the company would provide, but this attitude changed afterward.

“This is an impudent intervention in the private life of a person. We should not do it anymore. It’s good that you know a lot about a person. You may know his history of purchases and what kind of things you can approach him with. But you shouldn’t be so upfront about saying, ‘hey dude, I remember you, and I know everything about you’”. Company CEO

Analyzing this case study using the categories within the conceptual framework can provide a deeper understanding of the dynamics of ethical AI development in a real-world context.

Ethical Praxis: The company's original operational definition of the privacy principle did not consider the use of facial recognition technology to greet returning customers as a privacy violation. Although the omission of this interpretation of the principle in the initial planning stage was unintentional, it led to the development of a solution that was eventually recognized as an infringement on privacy.

Plans: The company intended to develop a facial recognition system for an automobile showroom that would identify returning customers and display their names and purchase histories. The goal was to enhance customer service by providing personalized greetings and follow-ups on their satisfaction with previous purchases.

Situated Actions: The actual implementation of this plan resulted in an unexpected event or "situated action" in the showroom. The system exposed sensitive personal information, which led to a scandal. This event was not anticipated in the original planning stage and occurred as a result of applying the system in a real-world context.

Procedural Norms: The scandal prompted a significant shift in the company's understanding of the ethical boundaries of its solutions. The CEO acknowledged that their technology had intruded upon a customer's privacy in an inappropriate manner and decided that such behavior would be unacceptable in the future.

Ethical Praxis: Stemming from the real-world application of their technology, this acknowledgment fed back into the company's ethical praxis, establishing new behavioral norms based on a refined operationalization of the privacy principle.

4.2 Fairness

A company was commissioned to design a credit scoring algorithm for one of Russia's poorest regions. As the company processed the region's behavioral data, it discovered that a strictly accurate scoring algorithm would deny credit to nearly all residents due to their economic circumstances. Consequently, the system had to be designed to be more lenient, allowing for credit approvals despite not accurately reflecting the region's economic reality.

The situation is aptly summarized in the following quote:

“We have an underdeveloped region and a scoring system. This scoring system will always deny credits to the inhabitants of this underdeveloped region. We think that this algorithm is unethical because it is not fair. But the problem is not that this algorithm is unfair; it is unfair that we have poor regions. This algorithm is unfair not because it creates this data but because it anchors it. It is correct in how it simulates the behavior of a real human who would also deny credit to these people. But it does not fix this problem. And when we are changing the mathematics of the scoring system—at this moment, the algorithms start working incorrectly… We are discussing the bias of the algorithm and data, that our data is wrong, our developers are wrong, and the companies are wrong. Everyone is wrong, but the real world is right. If we get rid of our biases and start reading the information from the ideal real world, then the algorithm would work perfectly—this is wrong, of course. The reality that we face in our practice is harrowing and different…The true solution to this problem is not making changes in the scoring algorithm, but investments in the region”. Company CEO

Viewed through the lens of the research framework, this case study yields the following observations:

Ethical Praxis: The principle of fairness is central to this case. The company acknowledged that an algorithm designed purely for accuracy in credit scoring would unjustly deny nearly all residents of a certain region access to credit, given their economic circumstances.

Plans: The initial plan involved developing a credit scoring algorithm that would accurately reflect the ability of individuals in a specific region to repay credit. The company aimed to create a system that would determine creditworthiness fairly and accurately based on behavioral data.

Situated Actions: However, the real-world implementation of this plan revealed an unexpected issue. When applied to actual data from the region, the system would have excluded almost all residents from access to credit. Recognizing this outcome as unjust, the company decided to modify the algorithm to be less strict, despite it resulting in a less accurate reflection of the region's economic reality. This decision represents a situated action that diverged from the initial plans due to the real-world context.

Procedural Norms: The company's decision to adjust the algorithm for fairness, taking into account the region's economic challenges, established a new procedural norm. To meet the needs of its client (a lending organization), the company modified its algorithms accordingly.

Ethical Praxis: This modification prompted a reevaluation of what fairness meant for the company, paving the way for a potential re-operationalization of the principle.
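
The trade-off described in this case can be illustrated with a minimal sketch. The source does not state how the company relaxed its algorithm, so the cutoff-based approach below is only one hypothetical way to make a scoring rule more lenient. The function name `approval_rate`, the cutoff values, and the predicted default probabilities are all invented for illustration and are not data from the study.

```python
def approval_rate(default_probs, max_default_prob):
    """Share of applicants whose predicted default probability
    is at or below the acceptance cutoff."""
    if not default_probs:
        raise ValueError("need at least one applicant")
    approved = sum(1 for p in default_probs if p <= max_default_prob)
    return approved / len(default_probs)


# Hypothetical predicted default probabilities for ten applicants
# from an economically depressed region (not real data).
region = [0.35, 0.42, 0.28, 0.55, 0.31, 0.47, 0.12, 0.38, 0.60, 0.33]

strict_cutoff = 0.15   # assumed cutoff reflecting a strictly risk-based rule
lenient_cutoff = 0.40  # assumed relaxed cutoff

print("strict:", approval_rate(region, strict_cutoff))
print("lenient:", approval_rate(region, lenient_cutoff))
```

Under these assumed numbers, the strict cutoff approves one applicant in ten, while the relaxed cutoff approves six. The additional approvals come from applicants the model itself rates as riskier, which mirrors the respondent's point that changing the mathematics of the scoring system makes it less accurate by its own standard.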

4.3 Transparency

In a project focused on digitizing the Customer Relationship Management (CRM) system for a country’s public service, a company designed a system to collect data on numerous processes within public facilities and monitor the performance and time management of the employees. The intent was to suggest actions to enhance productivity. However, the transparency provided by the system about employees' work habits, time allocation, and effectiveness led to discontent among workers.

This attitude is captured in the following quote:

“The increase of transparency is traditionally not suitable, is not beneficial, and is not needed. Especially if we are talking about the employees of the lower level, for whom the implementation of the procedural system, which controls the time, the responsibility, and the quality of the service –is not always seen in a positive light. Because people feel different about their work, not everyone is fully devoted to it”. Head of Machine Learning Department

Analyzing this case study using the four categories within the research framework can shed light on the intricate dynamics involving transparency in AI applications:

Ethical Praxis: The ethical principle at stake in this case is transparency. Although transparency is generally regarded as a positive attribute in AI systems, its application to human performance in the workplace unexpectedly resulted in discontent. Therefore, the original operationalization of the principle did not consider its potential impact on human actions.

Plans: The initial plan was to digitize the CRM system for a public service, aiming to collect data on various processes within public facilities, monitor employee performance and time management, and suggest actions to improve productivity.

Situated Actions: However, the implementation of this plan revealed that the transparency provided by the AI system about employees' work habits and effectiveness led to resentment. This adverse reaction was an unforeseen situated action that emerged from applying the plan in a real-world context. The developers had not predicted that transparency, typically valued in AI systems, would encounter resistance when directed at human behavior in the workplace.

Procedural Norms: The unexpected employee response necessitated a reevaluation of the methods used to monitor human performance and disclose behavioral patterns.

Ethical Praxis: The recognition that transparency, while beneficial for AI, can lead to negative reactions when applied to human aspects, prompted the company to broaden its interpretation of what the principle of transparency entails.

4.4 Human oversight

A company developed a recommender AI system for an industrial facility to suggest actions for tuning chemical equipment based on predictive analytics of ongoing chemical reactions. However, the system's implementation led to two distinct operator behavioral patterns: distrust in the system and lack of motivation to use it. These behaviors were influenced by the real-world context at the industrial facility and the operators' past experiences.

The following quote encapsulates this dynamic:

“We distinguish distrust in the system and the lack of motivation to use it. Distrust arises when a system lies to a human. Initially, the operator has no reason not to trust the system. Suppose he was involved in the process of the development of the system if he knew that there are no imaginary causal mechanisms. When the pressure in the barrel grows, the amount of product exiting from below will also increase, and the temperature will rise. But the air humidity will not affect how the product moves from one reservoir to another. These correlations are physically impossible, so we do not account for them in the system. When everything is explained to the operator, he has no reason not to trust the system. But distrust arises only when the system makes a mistake, and the operator is punished. Or he sees that it is making a mistake in real time and tries to give him the wrong instructions. The distrust grows…

On the other hand, the lack of motivation to use the system grows when the system tells him what to do. And this contradicts his usual patterns of working behavior. Then a stark resistance increases in the operator. He is used to working in a specific range of temperatures. For example, hold the temperature in the oven between 500 and 510 degrees. But the system tells him to change the temperature to 512 degrees… He starts to feel uncomfortable. ‘Why does the system recommend me something? I have been working here for 20 years, and everything is good and stable. If I follow the recommendation, something will change, I will stop the production and not fulfill the plan, and I will be punished. I’d rather sit quietly and not follow the recommendations’”. Company CEO

This case study can be interpreted through the lens of the research framework as follows:

Ethical Praxis: The principle in focus here is human oversight. In AI regulatory documents, this principle is frequently advocated as a safeguard against the perils of full automation. The underlying assumption is that human involvement in the decision-making process will maintain control over critical decisions, particularly in high-risk situations.

Plans: The intention was to implement a recommender AI system at an industrial facility. This system was engineered to propose specific actions for adjusting chemical equipment, utilizing predictive analytics of ongoing chemical reactions.

Situated Actions: However, the practical application of the system prompted unexpected reactions from the operators. Two distinct behavioral patterns emerged: distrust in the system and a decrease in motivation to engage with it. These behaviors were shaped by the real-world environment of the industrial facility and the operators' previous experiences. For example, if the system's error resulted in repercussions for an operator, distrust would intensify. Conversely, if the system's suggestions conflicted with the operator’s established work routines, resistance and a decline in motivation would ensue.

Procedural Norms: These unforeseen reactions brought about the insight that the principle of human oversight does not inherently engender trust between the operator and the AI system. The designers recognized that situational contexts and personal experiences significantly impact the successful integration of an AI system in the workplace. Consequently, training sessions were established for system operators to educate them about the system's capabilities and limitations.

Ethical Praxis: From this experience, a more sophisticated operationalization of the human oversight principle evolved. It accounts for the complexities of fostering trust between the operator and the AI system.

4.5 Social impact

This case study revolves around an automated device that tests whether industrial or construction workers are under the influence of alcohol, using facial recognition to verify the identity of the person being tested. The implementation of this device led to strong opposition from employees, resulting in a legal clash that eventually saw the system deemed illegal under Russian law. Under the law, only a qualified medical professional can determine whether a person is intoxicated. This ruling forced the company to uninstall the systems.

This situation is aptly summarized in the quote below:

“Alcoholism is a real problem… We’ve had a big client in the far eastern region. There were problems like this. A new foreman joins the company. The same day a delegation of workers came to him saying they hadn’t been paid for six months. He gave out the salary on the same day. The next day nobody came to work. Everyone went on a bender… One facility installed the devices. Every device costs around 1.5 million rubles. They installed 12 devices in the facility. Big money was spent—more than 20 million rubles. The next day the system was turned off because someone wrote a complaint to the prosecutor’s office… The complaint was that employees are forced to undergo a procedure that is not mandated and regulated by law. They do not have to undergo it. There is a presumption of guilt. Though, according to law, the employee has to be sober at work, no regulations would allow the company to test the employee. Except for the classical situation, when his actions are suspicious, he can be sent for a medical examination… According to the law, the only way to determine if someone is alcoholically intoxicated is for the medical worker to examine according to the protocols… In the case of our devices, you cannot fire a person just because our device shows that he is drunk. You cannot fire, fine, or implement sanctions against him. The only thing you can do is to ask if he agrees with the results of the test. If he says yes and signs it on the paper, then you can sanction him. If he says no, the only option is to send him to the medical examination”. Company CEO

This case study can be analyzed using the provided framework as follows:

Ethical Praxis: The principle at stake in this case is social impact, with particular emphasis on privacy rights and the use of automated devices to monitor employee behavior.

Plans: The initial plan involved introducing an automated system at an industrial site designed to detect alcohol influence among employees. This system employed facial recognition technology to verify the identity of the individuals being tested.

Situated Actions: The deployment of this system met with considerable resistance from employees, who felt that it infringed upon their privacy rights. A formal complaint was filed with the local prosecutor's office, which concurred with the employees and declared that the system violated Russian law. Consequently, the devices were removed.

Procedural Norms: This incident precipitated a reassessment of the approach to deploying such systems. The company recognized the necessity of engaging legal services to establish appropriate regulatory procedures before the installation of similar devices, to reduce legal risks and employee resistance.

Ethical Praxis: This acknowledgment—that systems intended to promote a positive social impact (such as maintaining a sober workforce to potentially reduce workplace accidents) might encounter resistance—has influenced the refinement of the social impact principle.

4.6 Accuracy

This case highlights the challenge of ensuring accuracy in AI systems, particularly those used for pattern recognition in medical imaging. The case focuses on the inconsistencies and inaccuracies that can arise during the data labeling process, which is crucial for training AI models.

This issue is particularly prevalent in AI technologies used for pattern recognition in medical imaging services [18]. Initial image labeling for model training must be conducted by professional medical radiologists. However, when radiologists label the data, there can be considerable differences in their interpretations. One radiologist might identify a clear pathology, while another may see nothing of concern. Moreover, a single radiologist's opinion about a particular image could change over time.

Respondents indicated that radiologists reach complete consensus on only one-third of the images. Although such systems are therefore unlikely to be validated and benchmarked against a definitive ground truth, they continue to be developed and implemented as part of pilot projects in various clinics across the country. These concerns were voiced by several companies working with AI systems for medical pattern recognition and are encapsulated in the following quotes:

“Variations in the answers of radiologists is the talk of the town… One-third of all the answers with full consensus is very little”. Head of Machine Learning Department

“A general opinion about data labeling by doctors is that it is [obscenity]. Generally, labeling of medical images is [obscenity]. Because it is not consistent. There is never ground truth. Because nobody knows what it is. When one doctor sees a pathology, another one can see nothing. The same doctor can label the same image differently. It is a big [obscenity] [obscenity]”. Head of Machine Learning Department

“Another problem is that data labeling happens in a vacuum, as all images are anonymized. In real life, the doctor sees not only the medical image but also a patient; he can ask him something. Here it is a full vacuum. Both the doctors and the system work without this meta-information. This is very, very difficult. To overcome this, we are cross-labeling the data with the help of 3-5 doctors for each research project. This, on the one hand, helps but also brings additional difficulties. Because it is hard to understand how to train the model on this data and to validate it later”. Company CEO

“The main problem stemming from the lack of the ground truth is that you cannot validate the model. The model can learn certain features. And even if the labeling is bad, sometimes it can make a good prediction. But the problem is that you cannot validate it”. Company CEO
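To make the respondents' one-third figure concrete, the following is a minimal Python sketch of how agreement among annotators could be quantified. It is an illustration only, not a procedure described by the interviewed companies: the function names, the label values, and the six example images are hypothetical, and the example data are constructed so that two of the six images reach full consensus.

```python
from itertools import combinations


def full_consensus_rate(labels_per_image):
    """Fraction of images on which every annotator gave the same label."""
    if not labels_per_image:
        raise ValueError("need at least one image")
    unanimous = sum(1 for labels in labels_per_image if len(set(labels)) == 1)
    return unanimous / len(labels_per_image)


def mean_pairwise_agreement(labels_per_image):
    """Average, over images, of the share of annotator pairs that agree."""
    scores = []
    for labels in labels_per_image:
        pairs = list(combinations(labels, 2))
        if pairs:  # images with a single annotator carry no agreement signal
            scores.append(sum(a == b for a, b in pairs) / len(pairs))
    if not scores:
        raise ValueError("need at least one image with two or more labels")
    return sum(scores) / len(scores)


# Hypothetical labels from three radiologists for six anonymized images.
example = [
    ["pathology", "pathology", "pathology"],
    ["normal", "normal", "normal"],
    ["pathology", "normal", "pathology"],
    ["normal", "pathology", "normal"],
    ["pathology", "normal", "normal"],
    ["normal", "normal", "pathology"],
]

print(round(full_consensus_rate(example), 3))      # 0.333
print(round(mean_pairwise_agreement(example), 3))  # 0.556
```

The two measures answer different questions: the first counts only unanimous images, while the second gives partial credit when most annotators agree. Neither supplies the missing ground truth that the respondents describe; they only describe how much the annotators diverge.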

This case study can be evaluated using the provided framework as follows:

Ethical Praxis: The central principle in this case is accuracy. AI systems are expected to deliver reliable and precise results, particularly in critical domains such as healthcare, where inaccuracies can have dire consequences.

Plans: The initial strategy was to utilize AI technology for pattern recognition in medical imaging, with the images used for model training labeled by professional medical radiologists.

Situated Actions: The rollout of this strategy uncovered significant challenges due to divergent interpretations among radiologists. Disparities in diagnoses from different radiologists for the same image, as well as fluctuations in a single radiologist's assessments over time, introduced inconsistencies. These discrepancies compromised the quality of the data used to train the AI models, ultimately affecting the systems' outputs. Despite these issues, the development and testing of the systems continue across various clinics.

Procedural Norms: In response to these challenges, a new protocol was adopted. Medical staff are now advised to consider the system's output as a consultative second opinion, not a conclusive diagnosis. To enhance accuracy, some organizations have begun employing multiple radiologists, often 3–5, to collaboratively annotate each set of data for research purposes. Nevertheless, the fundamental problem of inconsistent labeling remains an ongoing obstacle, complicating the validation and training processes for these models.

Ethical Praxis: Consequently, the operationalization of the accuracy principle in this scenario has become less rigid, allowing the continued development and implementation of these systems despite the lack of comprehensive validation. In addition, a new method of pursuing higher accuracy, cross-labeling by multiple radiologists, was introduced.
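The cross-labeling practice described in this case, in which 3–5 radiologists annotate each image, can be sketched as a simple majority vote that also records how strong the agreement was. This is an assumed aggregation rule chosen for illustration: the respondents did not specify how the labels are combined, and they noted that training and validating models on such data remains difficult. The function name, the 0.75 review threshold, and the label values are hypothetical.

```python
from collections import Counter


def aggregate_labels(labels, min_agreement=0.75):
    """Combine several annotators' labels for one image.

    Returns (majority_label, agreement, needs_review). The majority label is
    None when the top labels are tied. `min_agreement` is an assumed cut-off
    below which the image is flagged for further expert review.
    """
    if not labels:
        raise ValueError("need at least one label")
    counts = Counter(labels)
    top_label, top_count = counts.most_common(1)[0]
    agreement = top_count / len(labels)
    # A tie means no label has a strict plurality, so no label is returned.
    tied = sum(1 for c in counts.values() if c == top_count) > 1
    needs_review = tied or agreement < min_agreement
    return (None if tied else top_label), agreement, needs_review


# Hypothetical annotations from five radiologists for two images.
print(aggregate_labels(["pathology"] * 4 + ["normal"]))
# ('pathology', 0.8, False)
print(aggregate_labels(["pathology", "normal", "pathology", "normal", "normal"]))
# ('normal', 0.6, True)
```

Keeping the agreement score next to the label, rather than discarding it, preserves the information that a label was contested. This fits the procedural norm of treating the system's output as a consultative second opinion rather than a conclusive diagnosis.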

5 Conceptual framework

The conceptual framework, derived from the inductive analysis of the data, consists of five interrelated categories that serve as a lens for analyzing the empirical case studies in Sect. 4: international, national, or industry-level ethical principles; company-level ethical praxis (the operationalization of ethical principles); plans; situated actions; and procedural norms.

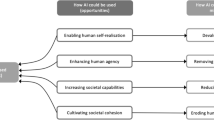

First, high-level ethical principles are operationalized at the company level in the form of ethical praxis (Fig. 1). Ethical praxis then informs the plans for the technology, which align with the operationalized ethical principles. These plans are subsequently reshaped by situated actions, which become routinized into procedural norms. The norms feed back into and refine the ethical praxis, altering the understanding of how high-level ethical principles can be operationalized based on contextual experiences. Each of these categories is introduced in more detail in the following paragraphs.

In the realm of AI governance, high-level ethical principles function as guiding frameworks. These frameworks primarily outline the ultimate objectives at the international, national, or industry level, such as embedding ethical principles like transparency and fairness into the system's design. However, they often lack specific guidelines for AI practitioners, leaving much of the execution to individual discretion.

This ambiguity regarding the actual meaning of the principles paves the way for varying interpretations and operationalizations at the company level. Given that there are no unified guidelines on how to operationalize principles such as transparency in different contexts, two companies may have different understandings of what this principle means. The manner in which a company decides to operationalize high-level ethical principles and embed them in its technological development can be referred to as ethical praxis. Ethical praxis, therefore, embodies the practical steps and methodologies that organizations adopt to interpret and integrate ethical principles into their AI systems, ensuring these principles are not just theoretical ideals but active components of the socio-technical fabric. Because the reinterpretation of ethical principles and their role in practice is a continuous process, one that also includes ways of carrying out a task without any explicit ethical motivation behind it, praxis is the more holistic term: it encompasses a wider variety of actions than conducting a certain practice in a regulated way.

To align the design and envisioned usage of technologies with the ethical praxis, companies create plans for them. In her seminal work "Human–Machine Reconfigurations," Lucy Suchman [42] defines a plan as a series of actions designed to achieve a predetermined outcome. This model treats action as a form of problem-solving, wherein an individual must chart a course from a starting point to a desired goal, given certain constraints. Plans serve as general guidelines for conducting specific activities. These guidelines aid individuals in effectively navigating tasks [41].

By observing how “first-time” users of a Xerox photocopier interacted with the machine to conduct specific tasks, Suchman found that in-situ interactions often take a very different shape from the interactions initially planned by the designers of the machine [42], because the execution of any plan always involves additional actions and work beyond what was specified in the original plan [35]. Once placed in its contextual environment, a plan can be followed, reformulated, worked around, or abandoned, depending on how external contextual factors influence the purposeful action [35].

This notion has been conceptualized as situated actions: “the view that every course of action depends in essential ways on its material and social circumstances” [42]. As such, plans represent future action, but the action in a real-life situation can be drastically different from how it was envisaged in the plan. However, plans and situated actions should not be understood through a dichotomous lens (actions in the head versus actions in the world [32]), as they are not opposed to each other [38], despite often being perceived as such [47].

Situated actions, then, can be understood as a pragmatist effect produced when plans are implemented in real-world circumstances, including the ways in which those implementing the plans must adjust their behavior for the plans to be executed [39]. The social circumstances within which the action is to be completed influence the process of decision-making by narrowing down the number of options for possible actions and allowing for unintended (unplanned) consequences to occur, which shows that the possibility of mistake is embedded in the idea of situated actions [46]. The concept of situated actions does not, however, claim that the success of every action derives from the environment within which it is conducted; it also acknowledges the importance of planning [32].

Over time, a series of situated actions within a routine environment can evolve into a practiced behavior independent of the original plan [13]—a procedural norm. These norms then feed back into the understanding of what ethical praxis entails, by enriching it with contextual peculiarities of its application in a specific situation.
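The loop described in this section can be illustrated with a small data model. The sketch below is one possible reading of the framework, not a formalization proposed in the article: the class and function names are hypothetical, and the numeric `threshold` for routinization is an assumption introduced only to make the example executable, since the article describes routinization qualitatively.

```python
from dataclasses import dataclass, field


@dataclass
class SituatedAction:
    """An unplanned adjustment observed when a plan meets real-world use."""
    description: str
    occurrences: int = 1  # hypothetical count of how often it was observed


@dataclass
class EthicalPraxis:
    """A company-level operationalization of one high-level principle."""
    principle: str
    interpretation: str
    procedural_norms: list = field(default_factory=list)


def routinize(actions, threshold=3):
    """Return descriptions of actions repeated often enough to count as norms.

    The threshold is an assumption of this sketch, not a claim of the article.
    """
    return [a.description for a in actions if a.occurrences >= threshold]


def refine_praxis(praxis, actions, threshold=3):
    """Feed routinized actions back into praxis without mutating the input."""
    new_norms = [n for n in routinize(actions, threshold)
                 if n not in praxis.procedural_norms]
    return EthicalPraxis(praxis.principle, praxis.interpretation,
                         praxis.procedural_norms + new_norms)


# Hypothetical values loosely modeled on the accuracy case (Sect. 4.6).
praxis = EthicalPraxis("accuracy", "outputs validated against ground truth")
observed = [
    SituatedAction("treat system output as a second opinion", occurrences=5),
    SituatedAction("one-off relabeling of a disputed image", occurrences=1),
]
refined = refine_praxis(praxis, observed)
print(refined.procedural_norms)
# ['treat system output as a second opinion']
```

In this reading, only the repeated adjustment becomes a procedural norm and enriches the praxis, while the one-off action leaves it unchanged. The high-level principle itself is never modified, which mirrors the point that the loop refines company-level praxis rather than the principles.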

6 Discussion

The six case studies presented illustrate the intricate dynamics between high-level ethical principles, ethical praxis, plans, situated actions, and procedural norms in the realm of AI development and implementation. Each case study sheds light on a distinct ethical principle: privacy, fairness, transparency, human oversight, social impact, and accuracy. Analyzing each case study through the proposed framework highlights that the relationships between these categories form a complex feedback loop that shapes the ethical boundaries of the design and use of AI systems.

While all the case studies originate from fieldwork conducted in Russia, a country with a distinct regulatory environment for AI, their insights remain applicable globally. This is largely because, despite ongoing discussions about formal AI regulatory initiatives, the majority of AI applications worldwide still operate in a regulatory void. Consequently, the feedback loop model conceptualized in this article holds relevance across any region where the implementation of AI systems remains largely unregulated.

Firstly, high-level ethical principles serve as the cornerstone for AI development, setting the moral compass for what the technology should and should not do. However, these principles can be overlooked, misinterpreted, or insufficiently operationalized as part of the ethical praxis, leading to unintended ethical implications during the implementation phase. For instance, the case study on privacy (4.1) demonstrates how the lack of an all-encompassing operationalized definition of personal privacy led to a system that infringed on this principle. Similarly, the case studies on fairness (4.2) and accuracy (4.6) highlight how the initial plans, although ethically sound, resulted in unfair and inaccurate outcomes due to real-world complexities.

Situated actions refer to the unforeseen situations and problems that arise when the planned AI systems are implemented in real-world contexts. As seen in the case studies, these actions often reveal ethical considerations that were not addressed or anticipated in the planning stage. For example, the case studies on transparency (4.3), human oversight (4.4), and social impact (4.5) demonstrate how real-world implementation can lead to employee discontent, distrust, and legal disputes, respectively.

The feedback loop is completed by procedural norms, which emerge from the practical experience of implementing AI systems. These norms reflect the lessons learned from the situated actions and have the potential to influence new ways to operationalize ethical principles at a company level (praxis). For instance, the case study on social impact (4.5) shows how a legal clash led to a new procedural norm where legal services are engaged before AI systems are installed. Similarly, the case study on accuracy (4.6) reveals a new practice of treating the AI system's prediction as a second opinion and cross-labeling the data with the help of multiple doctors.

The framework derived from these case studies indicates that the ethical development and implementation of AI is an iterative process, where each execution of the feedback loop refines the alignment between ethical praxis and actual usage of technologies. The process keeps evolving as the landscape of AI implementation changes, and it in turn shapes the evolution of ethical praxis.

However, establishing a fully closed feedback loop that also encompasses high-level ethical principles is not currently feasible (Fig. 1). This limitation is primarily due to the absence of systematic investigations into the range of ethical practices at the company level. Consequently, it remains speculative whether different companies are exhibiting similar behaviors as they navigate the framework presented here. Comprehensive studies involving a diverse array of companies—varying in size and focusing on AI solutions across different sectors—should be conducted to identify such patterns. Such research could integrate high-level ethical principles into the feedback loop, refining abstract categories through empirical evidence from the ground up.

7 Conclusion

This article captures a feedback loop between high-level ethical principles, ethical praxis, plans, situated actions, and procedural norms. The study traces how situated actions that challenge original plans for ethical operationalization turn into procedural norms over time for each of the case studies. These real-world examples underscore the significance of context in the application of these technologies and the pivotal role of human factors in this process. It is human behavior that often catalyzes changes to preconceived plans for technology use. Situated actions arise at the crossroads of human and machine behavior in a deeply contextual manner. When these actions become routine, they evolve into informal norms that dictate how technologies are utilized.

From a practical standpoint, the evidence gathered calls for a more granular approach to AI governance, particularly within corporate entities. The study demonstrates that, beyond adhering to overarching ethical tenets, it is vital for corporate stakeholders—such as Chief Legal Officers, Risk Managers, or CEOs in smaller firms—to actively engage with and shape the informal norms that arise from HAII. These ground-level norms, emerging from direct operational experiences, exert a profound impact on the application of AI technologies.

Therefore, it is crucial for organizational policies to be crafted with a keen awareness of these evolving norms, incorporating them into the existing governance structures. Such an approach would lead to a more holistic and efficacious AI governance strategy that truly reflects the intricate and evolving nature of HAII. This strategy should be initiated at the inception of an AI use case, encompassing the design, development, and continuous operation phases, ensuring that the feedback loop informs each stage.

The communication between corporate officers and data science leaders within the company is pivotal in championing this adaptive governance. They should begin by embedding these considerations into the AI lifecycle at the earliest conceptual stage and continue to adapt and refine these norms throughout the system’s active use. By doing so, governance would not only be grounded in high-level ethical principles but also deeply connected to the hands-on experiences and emerging standards from AI's practical application. The result would be a governance model that is both resilient and flexible, capable of guiding ethical AI development and deployment at every level.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Ashktorab Z., Liao, Q. V., Dugan, C., Johnson, J., Pan, Q., Zhang, W., Kumaravel, S., & Campbell, M. (2020). Human-AI Collaboration in a Cooperative Game Setting: Measuring Social Perception and Outcomes. Proceedings of the ACM on Human-Computer Interaction, 4(CSCW2), 96:1–96:20. https://doi.org/10.1145/3415167

Ayling, J., Chapman, A.: Putting AI ethics to work: Are the tools fit for purpose? AI and Ethics (2021). https://doi.org/10.1007/s43681-021-00084-x

Benedikt, L., Joshi, C., Nolan, L., Henstra-Hill, R., Shaw, L., & Hook, S. (2020). Human-in-the-loop AI in government: A case study. Proceedings of the 25th International Conference on Intelligent User Interfaces, 488–497. https://doi.org/10.1145/3377325.3377489

Bezuidenhout, L., Ratti, E.: What does it mean to embed ethics in data science? An integrative approach based on microethics and virtues. AI & Soc. 36(3), 939–953 (2021). https://doi.org/10.1007/s00146-020-01112-w

Bhatt, U., Andrus, M., Weller, A., & Xiang, A. (2020). Machine Learning Explainability for External Stakeholders. arXiv:2007.05408 [Cs]. http://arxiv.org/abs/2007.05408

Broomfield, H., Reutter, L.: In search of the citizen in the datafication of public administration. Big Data Soc. 9(1), 20539517221089304 (2022). https://doi.org/10.1177/20539517221089302

Brusseau, J.: From the ground truth up: Doing AI ethics from practice to principles. AI & Soc. (2022). https://doi.org/10.1007/s00146-021-01336-4

Calero Valdez, A., & Ziefle, M. (2018). Human Factors in the Age of Algorithms. Understanding the Human-in-the-loop Using Agent-Based Modeling. In G. Meiselwitz (Ed.), Social Computing and Social Media. Technologies and Analytics (pp. 357–371). Springer International Publishing. https://doi.org/10.1007/978-3-319-91485-5_27

Carvalho, D. V., Pereira, E. M., & Cardoso, J. S. (2019). Machine Learning Interpretability: A Survey on Methods and Metrics. Electronics, 8(8), Article 8. https://doi.org/10.3390/electronics8080832

Crockett, K., Garratt, M., Latham, A., Colyer, E., Goltz, S.: Risk and trust perceptions of the public of artifical intelligence applications. Int. Joint Conf. Neural Netw. (IJCNN) 2020, 1–8 (2020). https://doi.org/10.1109/IJCNN48605.2020.9207654

Enarsson, T., Enqvist, L., Naarttijärvi, M.: Approaching the human in the loop—legal perspectives on hybrid human/algorithmic decision-making in three contexts. Inform. Commun. Technol. Law 31(1), 123–153 (2022). https://doi.org/10.1080/13600834.2021.1958860

Garcia-Gasulla, D., Cortés, A., Alvarez-Napagao, S., & Cortés, U. (2020). Signs for Ethical AI: A Route Towards Transparency. arXiv:2009.13871 [Cs]. http://arxiv.org/abs/2009.13871

Gherardi, S. (2008). Situated knowledge and situated action: What do practice-based studies promise? (pp. 516–525). https://doi.org/10.4135/9781849200394.n89

Glaser, B., Strauss, A.: Discovery of grounded theory: strategies for qualitative research. Routledge (2017). https://doi.org/10.4324/9780203793206

Gordon, M., Zhou, K., Patel, K., Hashimoto, T., & Bernstein, M. (2021). The Disagreement Deconvolution: Bringing Machine Learning Performance Metrics In Line With Reality (p. 14). https://doi.org/10.1145/3411764.3445423

Hagras, H.: Toward human-understandable, explainable AI. Computer 51(9), 28–36 (2018). https://doi.org/10.1109/MC.2018.3620965

Henriksen, A., Enni, S., & Bechmann, A. (2021). Situated Accountability: Ethical Principles, Certification Standards, and Explanation Methods in Applied AI (p. 585). https://doi.org/10.1145/3461702.3462564

Kawamleh, S.: Against explainability requirements for ethical artificial intelligence in health care. AI Ethics 3(3), 901–916 (2023). https://doi.org/10.1007/s43681-022-00212-1

Koulu, R.: Human control over automation: EU policy and AI ethics. Euro. J. Legal Stud. 12, 9–46 (2020). https://doi.org/10.2924/EJLS.2019.019

Larsson, S. (2020). On the Governance of Artificial Intelligence through Ethics Guidelines. Asian Journal of Law and Society, 1–15. https://doi.org/10.1017/als.2020.19

Li, B., Qi, P., Liu, B., Di, S., Liu, J., Pei, J., Yi, J., & Zhou, B. (2022). Trustworthy AI: From Principles to Practices (arXiv:2110.01167). arXiv. https://doi.org/10.48550/arXiv.2110.01167

Lock, I., Seele, P.: Deliberative lobbying? Toward a noncontradiction of corporate political activities and corporate social responsibility? J. Manag. Inq. 25(4), 415–430 (2016). https://doi.org/10.1177/1056492616640379

Morley, J., Elhalal, A., Garcia, F., Kinsey, L., Mökander, J., Floridi, L.: Ethics as a service: a pragmatic operationalisation of AI ethics. Mind. Mach. 31(2), 239–256 (2021). https://doi.org/10.1007/s11023-021-09563-w

Morley, J., Floridi, L., Kinsey, L., & Elhalal, A. (2019). From What to How: An Initial Review of Publicly Available AI Ethics Tools, Methods and Research to Translate Principles into Practices. arXiv:1905.06876 [Cs]. http://arxiv.org/abs/1905.06876

Morley, J., Kinsey, L., Elhalal, A., Garcia, F., Ziosi, M., Floridi, L.: Operationalising AI ethics: Barriers, enablers and next steps. AI & Soc. (2021). https://doi.org/10.1007/s00146-021-01308-8

Morris, A., Siegel, H., & Kelly, J. (2020). Towards a Policy-as-a-Service Framework to Enable Compliant, Trustworthy AI and HRI Systems in the Wild. arXiv:2010.07022 [Cs]. http://arxiv.org/abs/2010.07022

Mou, Y., Xu, K.: The media inequality: comparing the initial human-human and human-AI social interactions. Comput. Hum. Behav. 72, 432–440 (2017). https://doi.org/10.1016/j.chb.2017.02.067

Mühlhoff, R.: Human-aided artificial intelligence: Or, how to run large computations in human brains? Toward a media sociology of machine learning. New Media Soc. 22(10), 1868–1884 (2020). https://doi.org/10.1177/1461444819885334

Munn, L.: The uselessness of AI ethics. AI and Ethics 3(3), 869–877 (2023). https://doi.org/10.1007/s43681-022-00209-w

Nakao, Y., Stumpf, S., Ahmed, S., Naseer, A., & Strappelli, L. (2022). Towards Involving End-users in Interactive Human-in-the-loop AI Fairness (arXiv:2204.10464). arXiv. https://doi.org/10.48550/arXiv.2204.10464

Neumayer, C., Sicart, M.: Probably not a game: Playing with the AI in the ritual of taking pictures on the mobile phone. New Media Soc. 25(4), 685–701 (2023). https://doi.org/10.1177/14614448231158654

Norman, D. A. (1993). Cognition in the Head and in the World: An Introduction to the Special Issue on Situated Action. Cognitive Science, 17(1), 1–6. https://doi.org/10.1207/s15516709cog1701_1

Prem, E.: From ethical AI frameworks to tools: a review of approaches. AI Ethics 3(3), 699–716 (2023). https://doi.org/10.1007/s43681-023-00258-9

Pyarelal, S., & Das, A. K. (2018). Automating the design of user interfaces using artificial intelligence. NordDesign 2018. DS 91: Proceedings of NordDesign 2018, Linköping, Sweden, 14th - 17th August 2018. https://www.designsociety.org/publication/40913/Automating+the+design+of+user+interfaces+using+artificial+intelligence

Rooksby, J. (2013). Wild in the Laboratory: A Discussion of Plans and Situated Actions. ACM Transactions on Computer-Human Interaction (TOCHI), 20. https://doi.org/10.1145/2491500.2491507

Ryan, M., Antoniou, J., Brooks, L., Jiya, T., Macnish, K., Stahl, B.: Research and practice of AI ethics: a case study approach juxtaposing academic discourse with organisational reality. Sci. Eng. Ethics 27(2), 16 (2021). https://doi.org/10.1007/s11948-021-00293-x

Schultz, M.D., Seele, P.: Towards AI ethics’ institutionalization: Knowledge bridges from business ethics to advance organizational AI ethics. AI and Ethics (2022). https://doi.org/10.1007/s43681-022-00150-y

Sharrock, W., Button, G.: Plans and situated action ten years on. J. Learn. Sci. 12(2), 259–264 (2003)

Shilling, C.: Physical capital and situated action: a new direction for corporeal sociology. Br. J. Sociol. Educ. 25(4), 473–487 (2004)

Shneiderman, B. (2020). Bridging the Gap Between Ethics and Practice: Guidelines for Reliable, Safe, and Trustworthy Human-centered AI Systems. ACM Transactions on Interactive Intelligent Systems, 10(4), 26:1–26:31. https://doi.org/10.1145/3419764

Suchman, L.: Writing and reading: a response to comments on plans and situated actions. J. Learn. Sci. 12(2), 299–306 (2003). https://doi.org/10.1207/S15327809JLS1202_10

Suchman, L. (2006). Human-machine reconfigurations: Plans and situated actions (2nd Edition).

Tomsett, R., Preece, A., Braines, D., Cerutti, F., Chakraborty, S., Srivastava, M., Pearson, G., Kaplan, L.: Rapid trust calibration through interpretable and uncertainty-aware AI. Patterns 1(4), 100049 (2020). https://doi.org/10.1016/j.patter.2020.100049

Turing, A. M. (1950). Computing Machinery and Intelligence. Mind, LIX(236), 433–460. https://doi.org/10.1093/mind/LIX.236.433

Urquhart, L., & Rodden, T. (2016). A Legal Turn in Human Computer Interaction? Towards ‘Regulation by Design’ for the Internet of Things [SSRN Scholarly Paper]. https://doi.org/10.2139/ssrn.2746467

Vaughan, D.: Rational choice, situated action, and the social control of organizations. Law Soc. Rev. 32(1), 23–61 (1998). https://doi.org/10.2307/827748

Vera, A.H., Simon, H.A.: Situated action: a symbolic interpretation. Cogn. Sci. 17(1), 7–48 (1993). https://doi.org/10.1207/s15516709cog1701_2

Vorm, E.S.: Computer-centered humans: why human-AI interaction research will be critical to successful AI integration in the DoD. IEEE Intell. Syst. 35(04), 112–116 (2020). https://doi.org/10.1109/MIS.2020.3013133

Wiethof, C., & Bittner, E. (2021). Hybrid Intelligence – Combining the Human in the Loop with the Computer in the Loop: A Systematic Literature Review. ICIS 2021 Proceedings. https://aisel.aisnet.org/icis2021/ai_business/ai_business/11

Winecoff, A.A., Watkins, E.A.: Artificial Concepts of Artificial Intelligence: Institutional Compliance and Resistance in AI Startups. (2022). https://doi.org/10.1145/3514094.3534138

Wu, W., Huang, T., Gong, K.: Ethical principles and governance technology development of AI in China. Engineering 6(3), 302–309 (2020). https://doi.org/10.1016/j.eng.2019.12.015

Yang, Q., Steinfeld, A., Rosé, C., & Zimmerman, J. (2020). Re-examining Whether, Why, and How Human-AI Interaction Is Uniquely Difficult to Design. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1–13). Association for Computing Machinery. https://doi.org/10.1145/3313831.3376301

Zetzsche, D. A., Arner, D. W., Buckley, R. P., & Tang, B. (2020). Artificial Intelligence in Finance: Putting the Human in the Loop (SSRN Scholarly Paper ID 3531711). Social Science Research Network. https://papers.ssrn.com/abstract=3531711

Funding

Open access funding provided by Hong Kong University of Science and Technology.

Ethics declarations

Conflict of interest

The author states that there is no conflict of interest.

Research involving human participants

The project was approved by the Human Research Ethics Committee of the Hong Kong University of Science and Technology with Protocol Number: HREP-2021–0071.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Papyshev, G. Governing AI through interaction: situated actions as an informal mechanism for AI regulation. AI Ethics (2024). https://doi.org/10.1007/s43681-024-00446-1

DOI: https://doi.org/10.1007/s43681-024-00446-1