Abstract

Many papers in the intersection of theoretical and applied algorithms show that a simple, asymptotically less efficient algorithm performs better than the best complex theoretical algorithms on random data or in specialized “real world” applications. This paper considers the Knuth–Morris–Pratt automaton and shows a counter-intuitive practical result. The classical pattern matching paradigm is that of seeking occurrences of one string (the pattern) in another (the text), where both strings are drawn from an alphabet set \(\Sigma\). Assuming the text length is n and the pattern length is m, this problem can naively be solved in time O(nm). In Knuth, Morris and Pratt’s seminal paper of 1977, an automaton was developed that allows solving this problem in time O(n) for any alphabet. This automaton, which we will refer to as the KMP-automaton, has proven useful in solving many other problems. A notable example is the parameterized pattern matching model. In this model, a consistent renaming of symbols from \(\Sigma\) is allowed in a match. The parameterized matching paradigm has proven useful in problems in software engineering, computer vision, and other applications. It has long been believed that for texts where the symbols are uniformly random, the naive algorithm will perform as well as the KMP algorithm. In this paper, we examine the practical efficiency of the KMP algorithm versus the naive algorithm on randomly generated text. We analyze the time under various parameters, such as alphabet size, pattern length, and the distribution of pattern occurrences in the text. We do this for both the original exact matching problem and parameterized matching. While the folklore wisdom is vindicated by these findings for the exact matching case, surprisingly, the KMP algorithm always works significantly faster than the naive algorithm in the parameterized matching case. We check this hypothesis for DNA texts and image data and observe behavior similar to that on random text.
We also show a very structured exact matching case where the automaton is much more efficient.


Introduction

Algorithm design has two almost distinct tracks. The theoretical track devotes itself to ingenious algorithms and data structures for idealized versions of problems, which offer efficient asymptotic complexity. The applications track solves real problems with their specialized inputs and the need for concrete fast solutions. These cross purposes may lead to different approaches to the solution of the same problem.

There is some research at the intersection of these two tracks. The nature of this research has, traditionally, two purposes:

1. Experimental tests that show the superiority of a simple, asymptotically less efficient algorithm when applied to random or concrete data (see e.g., [14, 15, 20,21,22,23, 25, 30, 33, 36]).

2. Experimental tests that try to pinpoint the size or type of data where the theoretically sophisticated algorithm does perform better [6].

The main goal of this paper is to present a case where the naive algorithm performs worse than the sophisticated algorithm even for small-sized uniformly random data. We also show a few concrete “real world” applications where this is the case. The results were counter-intuitive to us and to many pattern matching researchers. Yet, when we analyzed these results, the simple theoretical explanation was, indeed, extremely convincing. So much so that we all were a bit stupefied as to why our intuition was “wrong” to begin with. The data structure we study is the Knuth–Morris–Pratt automaton, or the KMP automaton [32].

The KMP automaton is one of the most well-known data structures in computer science. It allows solving the exact string matching problem in linear time. The exact string matching problem has input text T of length n and pattern P of length m, where the strings are composed of symbols from a given alphabet \(\Sigma\). The output is all text locations where the pattern occurs in the text. The naive way of solving the exact string matching problem takes time O(nm). This can be achieved by sliding the pattern to start at every text location, and comparing each of its elements to the corresponding text symbol. Using the KMP automaton, this problem can be solved in time O(n). In fact, analysis of the algorithm shows that at most 2n comparisons need to be done.

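It has long been known in the folklore (see Notes) that if the text is composed of uniformly random alphabet symbols, the naive algorithm’s time is also linear. This belief is bolstered by the fact that the naive algorithm’s mean number of comparisons for text and pattern over a binary alphabet is bounded by

\[(n-m+1)\sum _{i=0}^{m-1}\frac{1}{2^{i}} < 2n,\]

since at each of the \(n-m+1\) alignments the \((i+1)\)st comparison is made only if the first i pattern symbols match, which happens with probability \(2^{-i}\).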

The number of comparisons in the KMP algorithm is also bounded by 2n. However, because control in the naive algorithm is much simpler, it may be practically faster than the KMP algorithm.
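The folklore argument is easy to check empirically. The following is a minimal Python sketch of the naive algorithm (ours, not the authors' C++ implementation; the name `naive_exact` is illustrative) that also counts symbol comparisons. On a uniformly random binary text the average number of comparisons per text position should come out close to 2.

```python
import random


def naive_exact(text, pattern):
    """Naive exact matching: return (0-based occurrences, number of comparisons)."""
    n, m = len(text), len(pattern)
    occurrences, comparisons = [], 0
    for s in range(n - m + 1):           # try every text location as a start
        i = 0
        while i < m:
            comparisons += 1             # one symbol comparison
            if text[s + i] != pattern[i]:
                break                    # mismatch: slide the pattern by one
            i += 1
        if i == m:                       # all m symbols matched
            occurrences.append(s)
    return occurrences, comparisons


if __name__ == "__main__":
    rng = random.Random(1)
    n, m = 100_000, 32
    text = [rng.choice("ab") for _ in range(n)]
    pattern = [rng.choice("ab") for _ in range(m)]
    _, comps = naive_exact(text, pattern)
    # The expected value is just under 2 comparisons per text position.
    print(comps / n)
```

The simple control (one loop, one comparison, no table lookups) is what gives the naive algorithm its practical edge when the mean number of comparisons is this small.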

The last few decades have prompted the evolution of pattern matching from a combinatorial solution of the exact string matching problem [24, 32] to an area concerned with approximate matching of various relationships motivated by computational molecular biology, computer vision, and complex searches in digitized and distributed multimedia libraries [8, 19]. An important type of non-exact matching is the parameterized matching problem, which was introduced by Baker [11, 12]. Her main motivation lay in software maintenance, where program fragments are to be considered “identical” even if variable names are different. Therefore, strings under this model are composed of symbols from two disjoint sets \(\Sigma\) and \(\Pi\) containing fixed symbols and parameter symbols, respectively. In this paradigm, one seeks parameterized occurrences, i.e., exact occurrences up to renaming of the parameter symbols of the pattern string in the respective text location. This renaming is a bijection \(b: \Pi \rightarrow \Pi\). An optimal algorithm for exact parameterized matching appeared in [5]. It makes use of the KMP automaton for a linear-time solution over a fixed finite alphabet \(\Sigma\). Approximate parameterized matching was investigated in [9, 11, 27]. Idury and Schäffer [29] considered multiple matching of parameterized patterns.

Parameterized matching has proven useful in other contexts as well. An interesting problem is searching for images (e.g., [3, 10, 38]). Assume, for example, that we are seeking a given icon in any possible color map. If the colors were fixed, then this would be exact two-dimensional pattern matching [2]. However, if the color map is different, the exact matching algorithm would not find the pattern. Parameterized two-dimensional search is precisely what is needed. If, in addition, one is also willing to lose resolution, then a two-dimensional function matching search should be used, where the renaming function is not necessarily a bijection [1, 7]. Another degenerate parameterized condition appears in DNA matching. Because of base pair bonding, exchanging A with T and C with G, in both text and pattern, produces a match [28].

Parameterized matching can also be naively done in time O(nm). Based on our intuition for exact matching, it is expected that here, too, the naive algorithm is competitive with the KMP automaton-based algorithm of [5] in a randomly generated text.

In this paper, we investigate the practical efficiency of the automaton-based algorithm versus the naive algorithm both in exact and parameterized matching. We consider the following parameters: pattern length, alphabet size, and distribution of pattern occurrences in the text. Our findings are that, indeed, the naive algorithm is faster than the automaton algorithm in practically all settings of the exact matching problem. However, it was counter-intuitive to see that the automaton algorithm is always more effective than the naive algorithm for parameterized matching over randomly generated texts. We analyze the reason for this difference.

Having established that the randomness of the text is what made the naive algorithm so efficient for exact matching, we ran the comparison on a very structured artificial text, and the automaton algorithm was a clear winner.

Having understood the practical behavior of the naive versus automaton algorithm over randomly generated texts, we were curious if there were “real” texts with a similar phenomenon. We did two case studies. We ran the same experiments over DNA texts and observed a similar behavior as that of a randomly generated text. We also ran these experiments over image data and observed the same results.

Problem Definition

We begin with basic definitions and notation generally following [17].

Let \(S=s_1 s_2\ldots s_n\) be a string of length \(|S|=n\) over an ordered alphabet \(\Sigma\). By \(\varepsilon\) we denote an empty string. For two positions i and j on S, we denote by \(S[i..j]=s_i\ldots s_j\) the factor (sometimes called substring) of S that begins at position i and ends at position j (it equals \(\varepsilon\) if \(j<i\)). A prefix of S is a factor that begins at position 1 (S[1..j]) and a suffix is a factor that ends at position n (S[i..n]).

The exact string matching problem is defined as follows:

Definition 1

(Exact String Matching) Let \(\Sigma\) be an alphabet set, \(T=t_1\cdots t_n\) the text and \(P=p_1\cdots p_m\) the pattern, \(t_i,p_j \in \Sigma ,\ \ i=1,\ldots {},n; j=1,\ldots {},m\). The exact string matching problem is:

input: text T and pattern P.

output: All indices \(j,\ \ j\in \{1,\ldots ,n-m+1\}\) such that \(t_{j+i-1}=p_i,\ \ i=1,\ldots ,m\).

We simplify Baker’s definition of parameterized pattern matching.

Definition 2

(Parameterized-Matching) Let \(\Sigma\), T and P be as in Definition 1. We say that P parameterize-matches or simply p-matches T in location j if \(p_i\cong t_{j+i-1},\quad i=1,\ldots {},m\), where \(p_i\cong t_j\) if and only if the following condition holds:

for every \(k=1,\ldots {},i-1,\quad p_i=p_{i-k}\) if and only if \(t_{j}=t_{j-k}\).

The p-matching problem is to determine all p-matches of P in T.

If two strings \(S_1\) and \(S_2\) have the same length m, then they are said to parameterize-match or simply p-match if \(s_{1_i} \cong s_{2_i}\) for all \(i\in \{1,\ldots ,m\}\).

Intuitively, the matching relation \(\cong\) captures the notion of one-to-one mapping between the alphabet symbols. Specifically, the condition in the definition of \(\cong\) ensures that there exists a bijection between the symbols from \(\Sigma\) in the pattern and those in the overlapping text, when they p-match. The relation \(\cong\) has been defined by [5] in a manner suitable for computing the bijection.

Example: The string ABABCCBA parameterize-matches the string XYXYZZYX. The reason is that if we consider the bijection \(\beta :\{A,B,C\} \rightarrow \{X,Y,Z\}\) defined by \(A\xrightarrow {\beta } X,\ \ B\xrightarrow {\beta } Y,\ \ C\xrightarrow {\beta } Z\), then we get \(\beta (ABABCCBA) = XYXYZZYX\). This explains the requirement in Def. 2: two symbols match if, for every earlier position in the window, the pattern symbol equals the earlier pattern symbol exactly when the text symbol equals the corresponding earlier text symbol.

Of course, the alphabet bijection need not be as extreme as bijection \(\beta\) above. String ABABCCBA also parameterize-matches BABACCAB, because of the bijection \(\gamma :\{A,B,C\} \rightarrow \{A,B,C\}\) defined as: \(A\xrightarrow {\gamma } B,\ \ B\xrightarrow {\gamma } A,\ \ C\xrightarrow {\gamma } C\).
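Definition 2 can be checked directly, if inefficiently, by comparing equality patterns position by position. The Python sketch below does exactly that with 0-based indices and reproduces both examples above; the function names `p_equal` and `p_matches` are ours, chosen for illustration.

```python
def p_equal(p, t, i, j):
    """p[i] ≅ t[j] in the sense of Definition 2 (0-based indices):
    for every k = 1..i, p[i] == p[i-k] exactly when t[j] == t[j-k]."""
    return all((p[i] == p[i - k]) == (t[j] == t[j - k]) for k in range(1, i + 1))


def p_matches(p, t, j=0):
    """True if p parameterize-matches t starting at (0-based) location j."""
    if j + len(p) > len(t):
        return False
    return all(p_equal(p, t, i, j + i) for i in range(len(p)))


if __name__ == "__main__":
    print(p_matches("ABABCCBA", "XYXYZZYX"))  # True  (bijection beta)
    print(p_matches("ABABCCBA", "BABACCAB"))  # True  (bijection gamma)
    print(p_matches("ABABCCBA", "XYXYZZYY"))  # False (last symbol breaks the bijection)
```

This direct check costs O(m) per symbol; the table-based Compare subroutine described later reduces that cost.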

For completeness, we define the KMP automaton.

Definition 3

Let \(P=p_1 \ldots p_m\) be a string over alphabet \(\Sigma\). The KMP automaton of P is a 6-tuple \((Q,\Sigma ,\delta _s, \delta _f, q_0, q_a)\), where \(Q=\{0,\ldots ,m\}\) is the set of states, \(\Sigma\) is the alphabet, \(\delta _s:Q \rightarrow Q\) is the success function, \(\delta _f:Q \rightarrow Q\) is the failure function, \(q_0=0\) is the start state and \(q_a=m\) is the accepting state.

The success function is defined as follows:

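\(\delta _s(i)=i+1\), \(i=0,\ldots ,m-1\), taken when the next text symbol equals \(p_{i+1}\), and

\(\delta _s(0)=0\), taken at the start state when the next text symbol differs from \(p_1\).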

The failure function is defined as follows:

Denote by \(\ell (S)\) the length of the longest proper prefix of string S (i.e., excluding the entire string S) which is also a suffix of S.

\(\delta _f(i)=\ell (P[1..i]),\ \ \mathrm{for\ } i=1,\ldots ,m\).

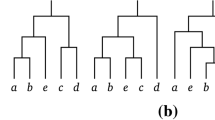

For an example of the KMP automaton see Fig. 1.

Theorem 1

[32] The KMP automaton can be constructed in time O(m).
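A standard O(m) construction of the failure function \(\delta_f\) of Definition 3 can be sketched in Python as follows, with 0-based strings. Here `fail[q]` holds \(\ell(P[1..q])\), the length of the longest proper prefix of the first q symbols that is also their suffix. The name `failure_function` is illustrative. Reusing previously computed values is what yields the linear bound.

```python
def failure_function(p):
    """fail[q] = length of the longest proper border of p[:q], q = 0..m (fail[0] = 0)."""
    m = len(p)
    fail = [0] * (m + 1)
    k = 0                        # length of the current border of p[:q]
    for q in range(1, m):        # extend the prefix p[:q] by the symbol p[q]
        while k > 0 and p[q] != p[k]:
            k = fail[k]          # fall back to the next shorter border
        if p[q] == p[k]:
            k += 1
        fail[q + 1] = k
    return fail


if __name__ == "__main__":
    print(failure_function("ABABAC"))  # [0, 0, 0, 1, 2, 3, 0]
```

Each iteration of the inner loop strictly shortens k, and k grows by at most one per outer iteration, so the total work is O(m).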

The Exact String Matching Problem

The Knuth–Morris–Pratt (KMP) search algorithm uses the KMP automaton in the following manner:

Theorem 2

[32] The time for the KMP search algorithm is O(n). In fact, it does not exceed 2n comparisons.
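The way the search uses the automaton can be sketched in Python as follows (0-based; the function names are illustrative, and the pattern is assumed non-empty). The state q is the number of pattern symbols currently matched, and a mismatch follows the failure link instead of moving back in the text. The comparison counter makes the 2n bound of Theorem 2 easy to check on any input.

```python
def failure_function(p):
    """fail[q] = length of the longest proper border of p[:q] (fail[0] = 0)."""
    fail, k = [0] * (len(p) + 1), 0
    for q in range(1, len(p)):
        while k > 0 and p[q] != p[k]:
            k = fail[k]
        if p[q] == p[k]:
            k += 1
        fail[q + 1] = k
    return fail


def kmp_search(text, pattern):
    """Return (0-based occurrences, comparisons) using the KMP automaton of pattern."""
    m, fail = len(pattern), failure_function(pattern)
    q, occurrences, comparisons = 0, [], 0
    for j, c in enumerate(text):
        while True:
            comparisons += 1
            if pattern[q] == c:          # success transition
                q += 1
                break
            if q == 0:                   # start state: stay, read the next symbol
                break
            q = fail[q]                  # failure transition, same text symbol
        if q == m:                       # accepting state
            occurrences.append(j - m + 1)
            q = fail[m]
    return occurrences, comparisons
```

Every comparison either consumes a text symbol or strictly decreases q, and q increases at most n times, which is the usual argument for at most 2n comparisons.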

The Parameterized Matching Problem

Amir, Farach, and Muthukrishnan [5] achieved an optimal time algorithm for parameterized string matching by a modification of the KMP algorithm. In fact, the algorithm is exactly the KMP algorithm, except that every equality comparison “\(x=y\)” is replaced by “\(x\cong y\)”, implemented as follows.

Implementation of “\(x\cong y\)”

Construct table \(A[1],\ldots {},A[m]\) where \(A[i]=\) the largest \(k,\quad 1\le k < i\), such that \(p_k=p_i\). If no such k exists then \(A[i]=i\).

The following subroutines compute “\(p_i\cong t_j\)” for \(j\ge i\), and “\(p_i\cong p_j\)” for \(j\le i\).

Compare(\(p_i\),\(t_j\))

if \(A[i]=i\) and \(t_j\ne t_{j-1},\ldots {},t_{j-i+1}\) then return equal

if \(A[i]\ne i\) and \(t_j=t_{j-i+A[i]}\) then return equal

return not equal

end

Compare(\(p_i\),\(p_j\))

if (\(A[i]=i\) or \(i-A[i]\ge j\)) and \(p_j\ne p_1,\ldots {},p_{j-1}\) then return equal

if \(A[i]\ne i\) and \(i-A[i]< j\) and \(p_j=p_{j-i+A[i]}\) then return equal

return not equal

end
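The table A and Compare(\(p_i\),\(t_j\)) translate almost line by line into Python. The sketch below uses 0-based indices, so `A[i] == i` still means that \(p_i\) has no earlier occurrence. It departs from the balanced tree used in the proof of Theorem 3 in one respect, which is our assumption: the test “\(t_j\) differs from the previous text symbols in the window” is answered in O(1) from a precomputed array `prev_t` of previous occurrences, built with a Python dict. Plugging the subroutine into the sliding loop gives a naive parameterized matcher. All function names are hypothetical.

```python
def build_A(p):
    """A[i] = largest k < i with p[k] == p[i], or i if p[i] has no earlier occurrence."""
    last, A = {}, []
    for i, c in enumerate(p):
        A.append(last.get(c, i))
        last[c] = i
    return A


def previous_occurrences(t):
    """prev_t[j] = largest k < j with t[k] == t[j], or -1 if there is none."""
    last, prev_t = {}, []
    for j, c in enumerate(t):
        prev_t.append(last.get(c, -1))
        last[c] = j
    return prev_t


def compare_pt(A, t, prev_t, i, j):
    """Compare(p_i, t_j); assumes t[j-i .. j-1] already p-matches p[0 .. i-1]."""
    if A[i] == i:                        # p_i is new, so t_j must be new in the window
        return prev_t[j] < j - i
    return t[j] == t[j - i + A[i]]       # t_j must repeat the symbol aligned with p_{A[i]}


def naive_pmatch(t, p):
    """Naive O(nm) parameterized matching: all 0-based start positions."""
    A, prev_t = build_A(p), previous_occurrences(t)
    n, m = len(t), len(p)
    occurrences = []
    for s in range(n - m + 1):
        i = 0
        while i < m and compare_pt(A, t, prev_t, i, s + i):
            i += 1
        if i == m:
            occurrences.append(s)
    return occurrences


if __name__ == "__main__":
    print(naive_pmatch("QXYXYZZYXQ", "ABABCCBA"))  # [1]
```

The O(1) check for \(A[i]\ne i\) relies on the invariant that the preceding symbols already p-match. In that case a closer repeat of \(t_j\) would force a closer repeat of \(p_i\), contradicting the choice of A[i] as the largest earlier occurrence.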

Theorem 3

[5] The p-matching problem can be solved in \(O(n\log \sigma )\) time, where \(\sigma = \text{min} (m,|\Sigma |)\).

Proof

The table A can be constructed in \(O(m\log \sigma )\) time as follows: scan the pattern left to right keeping track of the distinct symbols from \(\Sigma\) in the pattern in a balanced tree, along with the last occurrence of each such symbol in the portion of the pattern scanned thus far. When the symbol at location i is scanned, look up this symbol in the tree for the immediately preceding occurrence; that gives A[i].

Compare can clearly be implemented in time \(O(\log \sigma )\). For the case \(A[i]\ne i\), the comparison can be done in time O(1). When scanning the text from left to right, keep the last m symbols in a balanced tree. The check \(t_j\ne t_{j-1},\ldots {},t_{j-i+1}\) in Compare(\(p_i\),\(t_j\)) can be performed in \(O(\log \sigma )\) time using this information. Similarly, Compare(\(p_i\),\(p_j\)) can be performed using A[i]. Therefore, the automaton construction in KMP algorithm with every equality comparison “\(x=y\)” replaced by “\(x\cong y\)” takes time \(O(m\log \sigma )\) and the text scanning takes time \(O(n\log \sigma )\), giving a total of \(O(n\log \sigma )\) time.

As for the algorithm’s correctness, Amir, Farach and Muthukrishnan showed that the failure link in automaton node i produces the largest proper prefix of \(p_1\ldots p_i\) that p-matches the suffix of \(p_1\ldots p_i\) of the same length. \(\square\)
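Putting the pieces together gives an automaton-based parameterized matcher in the spirit of [5]: the KMP construction and search loops, with every equality test replaced by a Compare call. The Python sketch below is ours, uses 0-based indices, and assumes a non-empty pattern. `compare_pp(i, j)` treats \(p_i\) as the text-side symbol and \(p_j\), \(j\le i\), as the pattern-side symbol, as in Compare(\(p_i\),\(p_j\)). As an assumption, previous text occurrences come from a dict rather than a balanced tree.

```python
def build_A(p):
    """A[i] = largest k < i with p[k] == p[i], or i if there is none."""
    last, A = {}, []
    for i, c in enumerate(p):
        A.append(last.get(c, i))
        last[c] = i
    return A


def pmatch_kmp(t, p):
    """Automaton-based parameterized matching; returns 0-based start positions."""
    m, A = len(p), build_A(p)

    def compare_pp(i, j):
        # Compare(p_i, p_j) with j <= i: p_i plays the text role and the
        # window p[i-j .. i] is aligned with the pattern prefix p[0 .. j].
        d = i - A[i]                     # distance back to the previous copy of p_i
        if A[i] == i or d > j:           # p_i is new inside the window,
            return A[j] == j             # so p_j must be new in p[0 .. j]
        return p[j] == p[j - d]          # otherwise both repeat at distance d

    # Failure function under the relation ≅ (same loop as exact KMP).
    fail, k = [0] * (m + 1), 0
    for q in range(1, m):
        while k > 0 and not compare_pp(q, k):
            k = fail[k]
        if compare_pp(q, k):
            k += 1
        fail[q + 1] = k

    # Text scan: Compare(p_q, t_j) for the window t[j-q .. j].
    last, occurrences, q = {}, [], 0
    for j, c in enumerate(t):
        prev = last.get(c, -1)           # previous occurrence of t_j in the text
        last[c] = j
        while True:
            if A[q] == q:                # p_q is new: t_j must be new in the window
                ok = prev < j - q
            else:                        # p_q repeats: t_j must repeat likewise
                ok = c == t[j - q + A[q]]
            if ok:                       # always true for q == 0, so the loop ends
                break
            q = fail[q]
        q += 1
        if q == m:                       # accepting state
            occurrences.append(j - m + 1)
            q = fail[m]
    return occurrences


if __name__ == "__main__":
    print(pmatch_kmp("QXYXYZZYXQ", "ABABCCBA"))  # [1]
```

Because a single symbol always p-matches a single symbol, the start state never fails, and every failure link of a prefix of length at least 2 is at least 1.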

Our Experiments

Our implementation was written in C++. The platform was a Dell Latitude 7490 with an Intel Core i7-8650U, 32 GB RAM, and 8 MB cache. The running time was measured using the C++ chrono high-resolution clock, whose tick is one nanosecond. The random strings were generated using the Python random module.

We implemented the naive algorithm for exact string matching and for parameterized matching. The same code was used for both, except for the implementation of the equivalence relation for parameterized matching, as described above; this required implementing the A array. We also implemented the KMP algorithm for exact string matching, and used the same algorithm for parameterized matching. The only difference was the implementation of the parameterized matching equivalence relation.

The text length n was 1,000,000 symbols. Theoretically, since both the automaton and the naive algorithm are sequential and only consider a window of the pattern length, it would have been sufficient to run the experiment on a text of size twice the pattern length [4]. However, for the sake of measurement resolution we opted for a large text. Yet a text of 1,000,000 symbols fits comfortably in the cache, and thus we avoid caching issues. In general, any searching algorithm for patterns of length less than 4MB would fit in the cache if appropriately constructed in the manner of [4]. Therefore, our choice yields measurements that are as accurate as possible.

We ran patterns of lengths \(m=32,64,128,256,512,\) and 1024. The alphabet sizes tested were \(|\Sigma |=2,4,6,8,10,20,40,80,160,320\). For each combination of pattern length and alphabet size, 10 tests were run, for a total of 600 tests.

Methodology: We generated a uniformly random text of length 1,000,000. If the pattern were also randomly generated, it would be unlikely to appear in the text. However, when seeking a pattern in the text, one assumes that the pattern occurs in the text. An example would be searching for a sequence in DNA: when seeking a sequence, one expects to find it but does not know where. Additionally, we considered the common case where one does not expect many occurrences of the pattern in the text. Consequently, we planted 100 occurrences of the pattern in the text at uniformly random locations. The final text length was always 1,000,000. The reason for inserting 100 pattern occurrences is the following. We do not expect many occurrences, and 100 occurrences in a text of length one million means that fewer than \(0.1\%\) of the text locations are starting positions of pattern occurrences. On the other hand, 100 occurrences are enough to force both algorithms to follow all elements of the pattern 100 times, which affects the running time of both; they would both work faster if there were no occurrences at all. There are many possible ways of planting 100 copies of the pattern at random locations. Our method was to randomly generate the indices of the planted copies. In order to avoid overlap, we generated 100 indices in the range from 1 to 1,000,000 − L, where L is the total length of the planted patterns. We inserted a pattern copy at each of these indices, starting from the smallest. For each such insertion, we added the pattern length to each of the remaining indices.
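The planting procedure just described can be sketched as follows. This is our Python illustration, not the authors' generator. The function name `plant_occurrences` is hypothetical. We assume that the random base text has length 1,000,000 − L, so that inserting the copies brings the final length to exactly 1,000,000, and that the insertion points are distinct.

```python
import random


def plant_occurrences(alphabet, pattern, total_len, copies, rng):
    """Return (text, positions): a uniformly random text of length total_len
    with `copies` non-overlapping planted copies of `pattern` (0-based starts)."""
    m = len(pattern)
    planted = copies * m                      # L, the total length of the planted copies
    base_len = total_len - planted            # assumed length of the random base text
    base = [rng.choice(alphabet) for _ in range(base_len)]
    # Distinct insertion points in the base text, processed from the smallest.
    points = sorted(rng.sample(range(base_len + 1), copies))
    text, positions, prev = [], [], 0
    for shift, point in enumerate(points):
        text.extend(base[prev:point])         # random symbols before this copy
        positions.append(point + shift * m)   # point shifted by earlier insertions
        text.extend(pattern)
        prev = point
    text.extend(base[prev:])
    return text, positions


if __name__ == "__main__":
    rng = random.Random(42)
    pattern = [rng.choice("ACGT") for _ in range(32)]
    text, positions = plant_occurrences("ACGT", pattern, 1_000_000, 100, rng)
    print(len(text), positions[:3])
```

Shifting each later index by m for every earlier insertion is what keeps the copies disjoint without any rejection sampling.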

We also implemented a variation where half of the pattern occurrences were in the last quarter of the text. For each alphabet size and pattern length we generated 10 tests and considered the average result of all 10 tests. It should be noted that from a theoretical point of view, the location of the pattern should not make a difference. We tested the different options in order to verify that this is, indeed, the case.

The software code can be found at https://github.com/Ora70/Automaton.

Exact Matching

Results

Tables 3 and 4 in the Appendix show the alphabet size, the pattern length, the average running time of the naive algorithm over the 10 tests, the average running time of the KMP algorithm over the 10 tests, and the ratio of the naive algorithm running time to the KMP algorithm running time. Any ratio value below 1 means that the naive algorithm is faster; the smaller the value, the better the relative performance of the naive algorithm. Any value above 1 indicates that the KMP algorithm is faster than the naive algorithm; the larger the value, the better the relative performance of the KMP algorithm.

To enable a clearer understanding of the results, we present them below in graph form. The following graphs show the results of our tests for the different pattern lengths. In Figs. 2 and 3, the x-axis is the pattern length and the y-axis is the ratio of the naive algorithm running time to the KMP algorithm running time. The different colors depict alphabet sizes. In Fig. 2, the patterns were inserted at random, whereas in Fig. 3 the patterns appear in the last half of the text.

To better see the effect of the pattern distribution in the text, Fig. 4 maps, on the same graph, both cases. In this graph, the x-axis is the average running time of all pattern lengths per alphabet size, and the y-axis is the ratio of the naive algorithm running time to the KMP algorithm running time. The results of the uniformly random distribution are mapped in one color, and the results of all pattern occurrences in the last half of the text are mapped in another.

We note the following phenomena:

1. The naive algorithm performs better than the automaton algorithm. Of the 600 tests we ran, there were only 3 instances where the KMP algorithm performed better than the naive, and all were subsumed by the average. In the vast majority of cases the naive algorithm was superior by far.

2. The naive algorithm performs relatively better for larger alphabets.

3. For a fixed alphabet size, there is a slight increase in the naive/KMP ratio, as the pattern length increases.

4. The distribution of the pattern occurrences in the text does not seem to make a change in performance.

An analysis of these implementation behaviors appears in the next subsection.

Analysis

We analyze all four results noted above.

Better Performance of the Naive Algorithm

We have seen that the mean number of comparisons of the naive algorithm for binary alphabets is bounded by

\[(n-m+1)\sum _{i=0}^{m-1}\frac{1}{2^{i}} < 2n.\]

The number of comparisons of the KMP algorithm is also bounded by 2n. However, the control of the KMP algorithm is more complex than that of the naive algorithm, which suggests a constant-factor advantage for the naive algorithm. On the other hand, when the KMP algorithm encounters a mismatch it follows the failure link, which avoids re-checking a longer substring. Thus, for longer patterns, where failure links can be followed over longer distances, the advantage of the naive algorithm diminishes.

Better Performance of the Naive Algorithm for Larger Alphabets

This is fairly clear when we realize that the mean number of comparisons of the naive algorithm for an alphabet of size k is

\[(n-m+1)\sum _{i=0}^{m-1}\frac{1}{k^{i}} < \frac{k}{k-1}\,n.\]

This bound clearly decreases as the alphabet size grows. However, the repeated traversal of failure links, even in cases where the comparison finds no equality, still raises the relative running time of the KMP algorithm. Here too, the longer the pattern, the more failure-link traversals the KMP algorithm makes, and thus the fewer overall comparisons, which slightly decreases the advantage of the naive algorithm.

The Distribution of Pattern Occurrences in the Text

If the pattern is not periodic, and if its occurrences are not too frequent in the text, then there will be at most one pattern occurrence in any text substring of length 2m. In these circumstances, the distribution of the pattern occurrences in the text has essentially no effect. We would expect a difference if the pattern is long with a small period. Indeed, an extreme case of this kind is tested in Sect. 5.1.3.

A Very Structured Example

All previous analyses point to the conviction that the more times a prefix of the pattern appears in the text, and the more periodic the pattern, the better the performance of the KMP algorithm. The most extreme case would be the text \(A^n\) (A concatenated n times) and the pattern \(A^{m-1}B\). The results for this case appear in Fig. 5.

Theoretical analysis of the naive algorithm predicts about nm comparisons (precisely \((n-m+1)m\)), where n is the text length and m is the pattern length, since at every alignment all \(m-1\) symbols A match and the final B fails. The KMP algorithm makes about 2n comparisons, for any pattern length. Thus the ratio of naive to KMP comparisons is about \({m\over 2}\), and the running-time ratio q grows linearly in m. In fact, when we plot \({m\over q}\) we get twice the cost of the control of the KMP algorithm, relative to the naive algorithm. Fig. 5 shows this value to be 5.
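The structured example can be reproduced in terms of comparison counts with the following Python sketch. It is ours, and the function names are illustrative. It counts comparisons rather than measuring time, so here \(m/q\) tends to 2. The value 5 observed in Fig. 5 additionally reflects the per-step control cost of the two implementations.

```python
def naive_count(t, p):
    """Comparisons made by the naive algorithm."""
    comps = 0
    for s in range(len(t) - len(p) + 1):
        for i in range(len(p)):
            comps += 1
            if t[s + i] != p[i]:
                break
    return comps


def kmp_count(t, p):
    """Comparisons made by the KMP search (failure function built first)."""
    m, fail, k = len(p), [0] * (len(p) + 1), 0
    for q in range(1, m):
        while k > 0 and p[q] != p[k]:
            k = fail[k]
        if p[q] == p[k]:
            k += 1
        fail[q + 1] = k
    comps, q = 0, 0
    for c in t:
        while True:
            comps += 1
            if p[q] == c:
                q += 1
                break
            if q == 0:
                break
            q = fail[q]
        if q == m:
            q = fail[m]
    return comps


if __name__ == "__main__":
    n = 10_000
    for m in (32, 64, 128, 256):
        t, p = "A" * n, "A" * (m - 1) + "B"
        q = naive_count(t, p) / kmp_count(t, p)
        print(m, round(q, 1), round(m / q, 3))   # m / q stays close to 2
```

On \(A^n\) the KMP automaton settles in state \(m-1\). Each further A costs one failed comparison against B and one successful comparison after the failure link to state \(m-2\), giving \((m-1)+2(n-m+1)\) comparisons in total.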

Parameterized Matching

Results

The exact matching results behaved roughly in the manner we expected. The surprise came in the parameterized matching case. Below are the results of our tests. It should be remarked that, as in the classical exact matching case, we compared the naive algorithm with a straightforward automaton implementation. There exist sophisticated parameterized matching implementations that are sublinear on random texts [13, 26]. Yet for our tests we used the straightforward automaton-based algorithm, and it was sufficient for achieving a clear-cut superiority over the naive algorithm. As in the exact matching case, the tables show the alphabet size, the pattern length, the average running time of the naive algorithm over the 10 tests, the average running time of the automaton-based algorithm over the 10 tests, and the ratio q of the naive algorithm running time to the automaton-based algorithm running time. Any ratio value above 1 means that the automaton-based algorithm is faster. A large value indicates a better performance of the automaton-based algorithm.

The following graphs show the results of our tests for the different pattern lengths. The x-axis is the pattern size. The y-axis is the ratio of the naive algorithm running time to the automaton-based algorithm running time. The different colors depict alphabet sizes. To better see the effect of the pattern distribution in the text, we also map, on the same graph, both cases. In this graph, the x-axis is the average running time of all pattern lengths per alphabet size, and the y-axis is the ratio of the naive algorithm running time to the automaton-based algorithm running time. The results of the uniformly random distribution are mapped in one color, and the results of all pattern occurrences in the last half of the text are mapped in another.

The parameterized matching results appear in Tables 5 and 6 in the Appendix. Figures 6 and 7 show the results of the parameterized matching comparisons for the case where the patterns were inserted at random and for the case where the patterns appear in the last half of the text. In Fig. 8, we show on the same graph the average results of both cases: patterns placed in the text uniformly at random, and patterns placed in the last half of the text.

The results are very different from the exact matching case. We note the following phenomena:

1. The automaton-based algorithm always performs significantly better than the naive algorithm.

2. The automaton-based algorithm performs relatively better for larger alphabets.

3. For a fixed alphabet size, the pattern length does not seem to make much difference.

4. The distribution of the pattern occurrences in the text does not seem to make a change in performance.

An analysis of these implementation behaviors and an explanation of the seemingly opposite results from the exact matching case appear in the next subsection.

Analysis

We analyze all four results noted above.

Better Performance of the Automaton-based Algorithm

We have established that the mean number of comparisons for the naive algorithm over a size k alphabet is

\[(n-m+1)\sum _{i=0}^{m-1}\frac{1}{k^{i}} < \frac{k}{k-1}\,n.\]

However, in parameterized matching any consistent renaming of the alphabet symbols is a match. Thus, if one considers a pattern prefix of length i in which all k alphabet symbols occur, the mean number of comparisons must be multiplied by k!. We explain this at greater length:

When doing exact matching, the probability that the first pattern symbol equals the text symbol is \(1\over k\). However, in parameterized matching, the first element always matches the text element, so that probability needs to be multiplied by k. This indeed gives us probability 1 for matching the first pattern symbol. As long as the first symbol appears in a run, the probability of matching it in the text is \(1\over k\) per location. But once a new alphabet symbol appears in the pattern, the probability of a match (given that the preceding symbols matched) is \({(k-1)\over k}\). In general, when the jth alphabet symbol appears for the first time in the pattern, its probability of matching the text is \({(k+1-j)\over k}\). All other pattern symbols (i.e., those that have appeared previously) have matching probability \(1\over k\).

This explains why the probability of matching a prefix of length j where all k symbols of the alphabet occur is

\[\frac{k!}{k^{j}}.\]

By the discussion above, the probability of matching a prefix of length j where \(\ell _j\) different alphabet symbols occur is

\[\frac{k!}{(k-\ell _j)!\,k^{j}},\]

where

\[\ell _j=|\{p_1,\ldots ,p_j\}|.\]

This bounds the mean number of comparisons for a size k alphabet by:

\[n\sum _{j=0}^{m-1}\frac{k!}{(k-\ell _j)!\,k^{j}}.\]

Therefore, for a size 2 alphabet we get 3n comparisons, and the number rises exponentially with the alphabet size. In contrast, the automaton-based algorithm makes at most 2n comparisons. Even for a size 2 alphabet, the number of comparisons in the naive algorithm is 50% greater than in the automaton-based algorithm. Note also that, because of the need to find the last parameterized match, even the control mechanism of the naive algorithm is more complex. This results in a superior performance of the automaton-based algorithm even for small alphabets. Of course, the larger the alphabet, the better the performance of the automaton-based algorithm.
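The per-alignment expectation \(\sum_{j=0}^{m-1}k!/((k-\ell_j)!\,k^j)\) can be evaluated for a concrete pattern and checked against a simulation of the naive parameterized matcher. The Python sketch below is ours: the function names are hypothetical, symbols are integers in `range(k)`, and the pattern is assumed to use at most k distinct symbols. For k = 2 every term with \(j\ge 1\) equals \(2/2^j\), so the expectation is \(3-2^{-(m-2)}\), just under 3 per text position, whatever the pattern.

```python
import random
from math import factorial


def expected_naive_pmatch(p, k):
    """Sum over j = 0..m-1 of k! / ((k - l_j)! k^j), where l_j is the number
    of distinct symbols among the first j pattern symbols."""
    total, seen = 0.0, set()
    for j in range(len(p)):
        l_j = len(seen)                      # distinct symbols in p[0 .. j-1]
        total += factorial(k) / (factorial(k - l_j) * k ** j)
        seen.add(p[j])
    return total


def naive_pmatch_comparisons(t, p):
    """Number of Compare(p_i, t_j) calls made by the naive parameterized matcher."""
    A, last = [], {}
    for i, c in enumerate(p):                # A[i]: previous occurrence in p, else i
        A.append(last.get(c, i))
        last[c] = i
    prev_t, last = [], {}
    for j, c in enumerate(t):                # prev_t[j]: previous occurrence in t, else -1
        prev_t.append(last.get(c, -1))
        last[c] = j
    comps = 0
    for s in range(len(t) - len(p) + 1):
        for i in range(len(p)):
            j = s + i
            comps += 1
            ok = prev_t[j] < s if A[i] == i else t[j] == t[j - i + A[i]]
            if not ok:
                break
    return comps


if __name__ == "__main__":
    rng = random.Random(5)
    k, n, m = 2, 50_000, 32
    t = [rng.randrange(k) for _ in range(n)]
    p = [rng.randrange(k) for _ in range(m)]
    print(expected_naive_pmatch(p, k))                    # just under 3 for k = 2
    print(naive_pmatch_comparisons(t, p) / (n - m + 1))   # Monte Carlo estimate
```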

Pattern Length

The pattern length does not play a role in the automaton-based algorithm, where the number of comparisons is always bounded by 2n. In the naive case, the factorial factor in the alphabet size is so overwhelming that it dominates the effect of the pattern length. For example, for an extremely large alphabet, the pattern would begin with a leading prefix of distinct alphabet symbols. That prefix will always be traversed by the naive algorithm, and the larger the alphabet, the longer the mean length of that prefix.

Pattern Distribution

As in the exact matching case, for a non-periodic pattern that does not appear too many times, the distribution of occurrences will have no effect on the complexity.

DNA Data

Having understood the behavior of the naive algorithm and the automaton-based algorithm in randomly generated texts, the natural question is whether there are any “real” texts for which the naive algorithm performs better than the automaton-based algorithm.

We performed the same experiments on DNA data. The experimental setting was identical to that of the randomly generated texts with the following differences:

1. The DNA of the fruit fly, Drosophila melanogaster, is 143.7 MB long. We extracted 10 subsequences of length 1,000,000 each, as FASTA data from the NIH National Library of Medicine, National Center for Biotechnology Information. We ran a test for each of the six pattern lengths \(\ell =\) 32, 64, 128, 256, 512, 1024, on each of the 10 DNA subsequences, and noted the average. In order to be as close to “real life” as possible, we assume that only one copy of the pattern occurs in the text, thus the pattern was chosen to be the first \(\ell\) symbols in the sequence. This also has the advantage of having a pattern with a “similar” structure to the text.

2. The alphabet size is 4, due to the four bases in the DNA sequence.

Figures 9 and 10 show the ratio between the average running time of the naive algorithm and the automaton-based algorithm. As in the uniformly random text, we see that for the exact matching case the ratio is less than 1, i.e., the naive algorithm is faster, whereas in the parameterized matching case the ratio is more than 1, indicating that the automaton-based algorithm is faster.

Image Data

For our case study we used the famous “lena” image (see Fig. 11), which is a historic benchmark for many computer vision algorithms. We converted the lena image to a gray-level image, and did the search on the pixel level. The conversion was done via the Python code at https://colab.research.google.com/drive/1JLCezhduNtCqYO4nMTKLIwi0AaFejsZW?usp=sharing/.

The text size was 65,536 pixels. The pattern sizes are the same six: 32, 64, 128, 256, 512, and 1024. For each size, 10 different patterns, chosen from the text, were run, and the average running time was used.

The results can be seen in Tables 1 and 2 below. They are similar to the randomized data and DNA data cases: the automaton is significantly more efficient in parameterized matching, while the naive algorithm is faster in exact matching.

Conclusions

The folk wisdom has been that simple algorithms generally outperform sophisticated algorithms on uniformly random data. In particular, the naive string matching algorithm is believed to outperform the automaton-based algorithm on uniformly random texts. This indeed turns out to be the case for exact matching. However, this study shows that it is not the case for parameterized matching, where the automaton-based algorithm always outperforms the naive algorithm. The advantage is clear, and it grows markedly with the alphabet size. The study also shows that, for parameterized matching, the automaton-based algorithm is clearly superior on DNA data and image data as well.
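To make the contrast in this conclusion concrete, the following sketch implements both parameterized matchers. The naive matcher re-checks a consistent renaming (a bijection between pattern and text symbols) at every alignment. The automaton-based matcher uses Baker's prev encoding, in which each symbol is replaced by the distance to its previous occurrence (0 if none), and runs a KMP-style failure function over it; a distance that reaches outside the current window is treated as 0. This is a well-known construction shown for illustration, not necessarily the implementation the authors benchmarked, and all function names are hypothetical.

```python
from typing import Dict, Hashable, List, Sequence


def naive_pmatch(text: Sequence, pattern: Sequence) -> List[int]:
    """At every alignment, try to build a bijection between pattern and
    text symbols; abandon the alignment at the first conflict."""
    n, m = len(text), len(pattern)
    occ = []
    for i in range(n - m + 1):
        fwd: Dict[Hashable, Hashable] = {}  # pattern symbol -> text symbol
        bwd: Dict[Hashable, Hashable] = {}  # text symbol -> pattern symbol
        for j in range(m):
            a, b = pattern[j], text[i + j]
            if fwd.setdefault(a, b) != b or bwd.setdefault(b, a) != a:
                break
        else:
            occ.append(i)  # no conflict: a consistent renaming exists
    return occ


def prev_encode(s: Sequence) -> List[int]:
    """Distance to the previous occurrence of the same symbol, or 0."""
    last: Dict[Hashable, int] = {}
    out = []
    for i, c in enumerate(s):
        out.append(i - last[c] if c in last else 0)
        last[c] = i
    return out


def _clip(d: int, window: int) -> int:
    """A previous occurrence farther back than the window counts as new (0)."""
    return d if d <= window else 0


def pmatch_kmp(text: Sequence, pattern: Sequence) -> List[int]:
    """Automaton-based parameterized matching; assumes a nonempty pattern."""
    m = len(pattern)
    prev_p = prev_encode(pattern)
    # fail[j]: longest proper suffix of pattern[:j+1] that p-matches a prefix
    fail = [0] * m
    k = 0
    for j in range(1, m):
        while k > 0 and _clip(prev_p[j], k) != prev_p[k]:
            k = fail[k - 1]
        if _clip(prev_p[j], k) == prev_p[k]:
            k += 1
        fail[j] = k

    occ = []
    last: Dict[Hashable, int] = {}  # last text position of each symbol
    q = 0  # automaton state: length of the pattern prefix p-matched so far
    for i, c in enumerate(text):
        d = i - last[c] if c in last else 0
        while q > 0 and _clip(d, q) != prev_p[q]:
            q = fail[q - 1]
        if _clip(d, q) == prev_p[q]:
            q += 1
        last[c] = i
        if q == m:
            occ.append(i - m + 1)
            q = fail[q - 1]
    return occ
```

For example, both `naive_pmatch("xxyzzwccd", "aab")` and `pmatch_kmp("xxyzzwccd", "aab")` return `[0, 3, 6]`, since "xxy", "zzw" and "ccd" are each a consistent renaming of "aab". The automaton version reads each text symbol once, with amortized constant work per symbol, whereas the naive version may rebuild its renaming tables at every alignment.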

The conclusion to take away from this study is that one should not automatically assume that the naive algorithm is better. In string matching, the matching relation should be analyzed. Automaton-based algorithms exist for a variety of matching relations. We considered parameterized matching here, but other matchings, such as ordered matching [16, 18, 31] and Cartesian tree matching [34, 35, 37], can also be solved by automaton-based methods. In a practical application it is worthwhile to spend some time considering the type of matching being used. It may turn out that the automaton-based algorithm performs significantly better than the naive one, even for uniformly random texts. Conversely, even on data that are not uniformly random, the naive algorithm may perform better than the automaton-based algorithm for exact matching.
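The following sketch states three matching relations as predicates on two equal-length strings, which is the kind of analysis of the matching relation suggested above. The order-preserving predicate uses one common definition, comparing every pair of positions with `<=`, and that choice is an assumption of this sketch; it is quadratic and meant only to define the relation, not to search. Cartesian tree matching is omitted, and all names are hypothetical.

```python
from typing import Sequence


def exact_match(x: Sequence, y: Sequence) -> bool:
    """Symbol-by-symbol equality."""
    return list(x) == list(y)


def parameterized_match(x: Sequence, y: Sequence) -> bool:
    """True iff some bijection f on symbols maps x[i] to y[i] for every i."""
    if len(x) != len(y):
        return False
    fwd, bwd = {}, {}
    for a, b in zip(x, y):
        if fwd.setdefault(a, b) != b or bwd.setdefault(b, a) != a:
            return False
    return True


def order_preserving_match(x: Sequence, y: Sequence) -> bool:
    """True iff x[i] <= x[j] exactly when y[i] <= y[j], for all i, j (O(m^2))."""
    if len(x) != len(y):
        return False
    m = len(x)
    return all((x[i] <= x[j]) == (y[i] <= y[j])
               for i in range(m) for j in range(m))
```

Each relation is strictly weaker than exact equality, which is why the same pattern can have more occurrences, and a different best-performing search algorithm, under each of them.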

An open problem is to compare the search time on DNA data with that on uniformly random data. While DNA data are clearly not uniformly random, it would be interesting to devise an experimental setting that compares search efficiency on both types of strings. A similar study for image data would also be of interest.

Notes

The second author first heard this from Uzi Vishkin in 1985. Since then, this belief has been mentioned on many occasions by various researchers in the community.

References

Amir A, Aumann A, Lewenstein M, Porat E. Function matching. SIAM J Comput. 2006;35(5):1007–22.

Amir A, Benson G, Farach M. An alphabet independent approach to two dimensional pattern matching. SIAM J Comput. 1994;23(2):313–23.

Amir A, Church KW, Dar E. Separable attributes: a technique for solving the submatrices character count problem. In: Proceedings of 13th ACM-SIAM symposium on discrete algorithms (SODA); 2002, pp. 400–401.

Amir A, Farach M. Efficient 2-dimensional approximate matching of half-rectangular figures. Inf Comput. 1995;118(1):1–11.

Amir A, Farach M, Muthukrishnan S. Alphabet dependence in parameterized matching. Inf Process Lett. 1994;49:111–5.

Amir A, Levy A, Reuveni L. The practical efficiency of convolutions in pattern matching algorithms. Fundam Inform. 2008;84(1):1–15.

Amir A, Nor I. Generalized function matching. J Discrete Algorithms. 2007;5(3):514–23.

Apostolico A, Galil Z, editors. Pattern matching algorithms. Oxford University Press; 1997.

Apostolico A, Lewenstein M, Erdös P. Parameterized matching with mismatches. J Discrete Algorithms. 2007;5(1):135–40.

Babu GP, Mehtre BM, Kankanhalli MS. Color indexing for efficient image retrieval. Multimed Tools Appl. 1995;1(4):327–48.

Baker BS. Parameterized pattern matching: algorithms and applications. J Comput Syst Sci. 1996;52(1):28–42.

Baker BS. Parameterized duplication in strings: algorithms and an application to software maintenance. SIAM J Comput. 1997;26(5):1343–62.

Baker BS. Parameterized pattern matching by Boyer–Moore-type algorithms. In: Proceedings of 6th annual ACM-SIAM symposium on discrete algorithms (SODA), ACM/SIAM; 1995, pp. 541–550.

Barton C, Iliopoulos CS, Pissis SP. Fast algorithms for approximate circular string matching. Algorithms Mol Biol. 2014;9:9.

Baumstark N, Gog S, Heuer T, Labeit J. Practical range minimum queries revisited. In: 16th international symposium on experimental algorithms (SEA), vol. 75, LIPIcs, Schloss Dagstuhl - Leibniz-Zentrum für Informatik; 2017, pp. 12:1–12:16.

Cho S, Na JC, Park K, Sim JS. Fast order-preserving pattern matching. In: Proceedings of 7th conference on combinatorial optimization and applications (COCOA), vol. 8287, Lecture Notes in Computer Science, Springer; 2013, pp. 295–305.

Crochemore M, Hancart C, Lecroq T. Algorithms on strings. Cambridge University Press; 2007.

Crochemore M, Iliopoulos CS, Kociumaka T, Kubica M, Langiu A, Pissis SP, Radoszewski J, Rytter W, Walen T. Order-preserving incomplete suffix trees and order-preserving indexes. In: Proceedings of 20th international symposium on string processing and information retrieval (SPIRE), vol. 8214, LNCS, Springer; 2013, pp. 84–95.

Crochemore M, Rytter W. Text algorithms. Oxford University Press; 1994.

Dantzig GB. Linear programming. Oper Res. 2002;50(1):42–7.

Dinklage P, Fischer J, Herlez A. Engineering predecessor data structures for dynamic integer sets. In: 19th international symposium on experimental algorithms (SEA), vol. 190, LIPIcs, Schloss Dagstuhl - Leibniz-Zentrum für Informatik; 2021, pp. 7:1–7:19.

Dinklage P, Fischer J, Herlez A, Kociumaka T, Kurpicz F. Practical performance of space efficient data structures for longest common extensions. In: 28th annual European symposium on algorithms (ESA), vol. 173, LIPIcs, Schloss Dagstuhl - Leibniz-Zentrum für Informatik; 2020, pp. 39:1–39:20.

Ferrada H, Navarro G. Improved range minimum queries. J Discrete Algorithms. 2017;43:72–80.

Fischer MJ, Paterson MS. String matching and other products. In: Karp RM, editor. Complexity of computation, SIAM-AMS Proceedings, vol. 7; 1974, pp. 113–125.

Franek F, Islam ASMS, Rahman MS, Smyth WF. Algorithms to compute the Lyndon array. In: Holub J, Zdárek J, editors. Proceedings of the Prague Stringology Conference; 2016, pp. 172–184.

Fredriksson K, Mozgovoy M. Efficient parameterized string matching. Inf Process Lett. 2006;100(3):91–6.

Hazay C, Lewenstein M, Sokol D. Approximate parameterized matching. In: Proceedings of 12th annual European symposium on algorithms (ESA 2004); 2004, pp. 414–425.

Holub J, Smyth WF, Wang S. Fast pattern-matching on indeterminate strings. J Discrete Algorithms. 2008;6(1):37–50.

Idury RM, Schäffer AA. Multiple matching of parameterized patterns. In: Proceedings of 5th symposium on combinatorial pattern matching (CPM), vol. 807, LNCS, Springer-Verlag; 1994, pp. 226–239.

Ilie L, Navarro G, Tinta L. The longest common extension problem revisited and applications to approximate string searching. J Discrete Algorithms. 2010;8(4):418–28.

Kim J, Amir A, Na JC, Park K, Sim JS. On representations of ternary order relations in numeric strings. In: Proceedings of 2nd international conference on algorithms for big data (ICABD), vol. 1146, CEUR Workshop Proceedings; 2014, pp. 46–52.

Knuth DE, Morris JH, Pratt VR. Fast pattern matching in strings. SIAM J Comput. 1977;6:323–50.

Loukides G, Pissis SP. Bidirectional string anchors: a new string sampling mechanism. In: 29th annual European symposium on algorithms (ESA), vol. 204, LIPIcs, Schloss Dagstuhl - Leibniz-Zentrum für Informatik; 2021, pp. 64:1–64:21.

Park SG, Amir A, Landau GM, Park K. Cartesian tree matching and indexing. In: Pisanti N, Pissis SP, editors. Proceedings of 30th symposium on combinatorial pattern matching (CPM), vol. 128, Leibniz International Proceedings in Informatics (LIPIcs); 2019, pp. 16:1–16:14.

Park SG, Bataa M, Amir A, Landau GM, Park K. Finding patterns and periods in Cartesian tree matching. Theor Comput Sci. 2020;845:181–97.

Puglisi SJ, Smyth WF, Turpin A. Inverted files versus suffix arrays for locating patterns in primary memory. In: Proceedings of 13th international symposium on string processing and information retrieval (SPIRE), vol. 4209, LNCS, Springer; 2006, pp. 122–133.

Song S, Gu G, Ryu C, Faro S, Lecroq T, Park K. Fast algorithms for single and multiple pattern Cartesian tree matching. Theor Comput Sci. 2021;849:47–63.

Swain M, Ballard D. Color indexing. Int J Comput Vis. 1991;7(1):11–32.

Acknowledgements

The authors are grateful to Gonzalo Navarro and Solon Pissis for their helpful suggestions.

Funding

Open access funding provided by Bar-Ilan University. Amihood Amir: Partly supported by ISF Grant 1475/18 and BSF Grant 2018141.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

This article is part of the topical collection “String Processing and Combinatorial Algorithms” guest edited by Simone Faro.

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Amir, O., Amir, A., Fraenkel, A. et al. On the Practical Power of Automata in Pattern Matching. SN COMPUT. SCI. 5, 400 (2024). https://doi.org/10.1007/s42979-024-02679-7

DOI: https://doi.org/10.1007/s42979-024-02679-7